Advancing Mobile Neuroscience: A Novel Wearable Backpack for Multi-Sensor Research in Urban Environments

Abstract

1. Introduction

1.1. Wearable Sensors and Outdoor Studies

1.2. Scope and Objectives

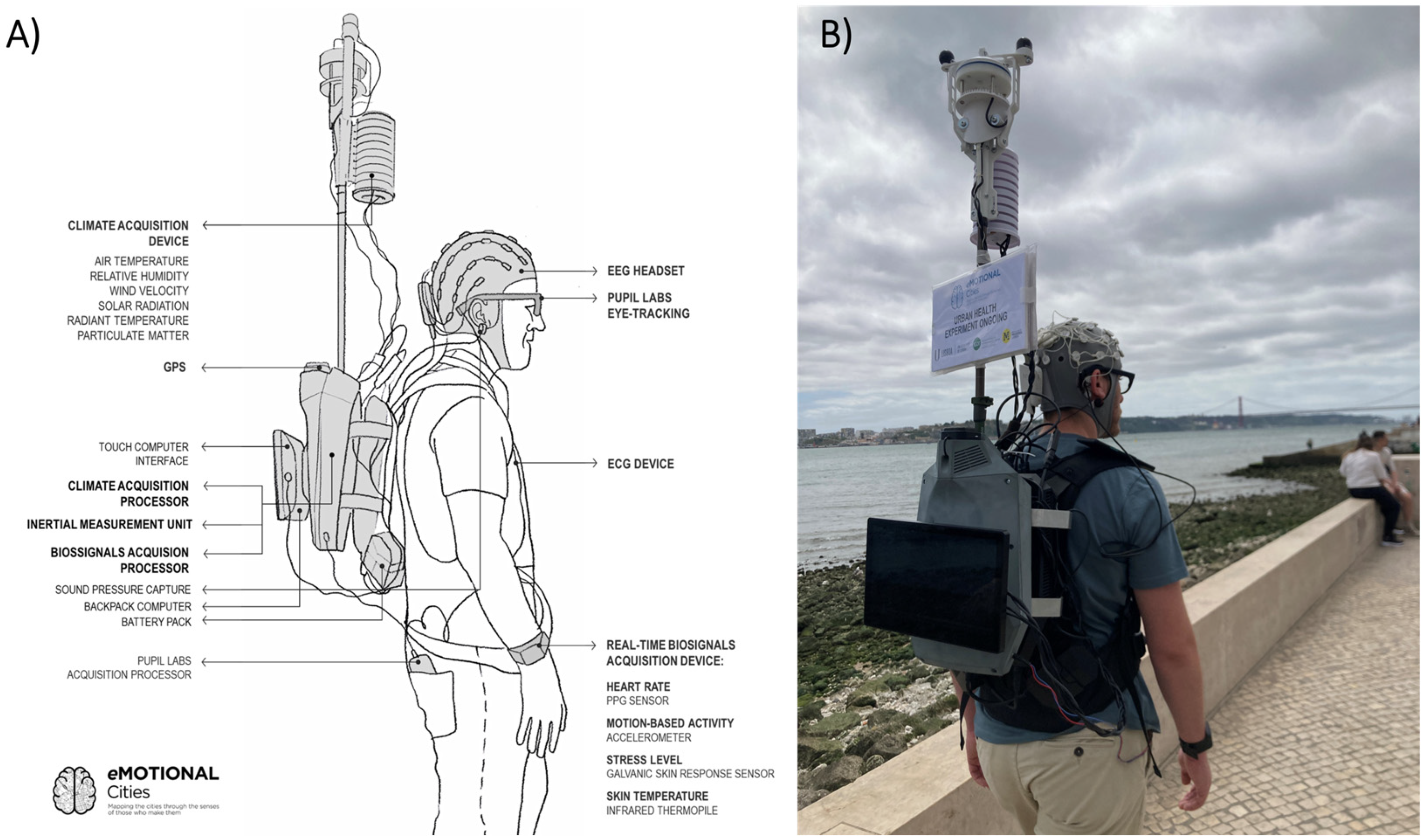

2. System Concept and Design

2.1. Sensors for Human Physiology and Behavioural Signals

2.1.1. Electroencephalogram (EEG)

2.1.2. Peripheral Physiological Signals

2.1.3. Electrocardiogram (ECG)

2.1.4. Eye-Tracker

2.1.5. Nine-Axis Inertial Measurement Unit (IMU)

2.1.6. Microphone

2.2. Environmental Sensors

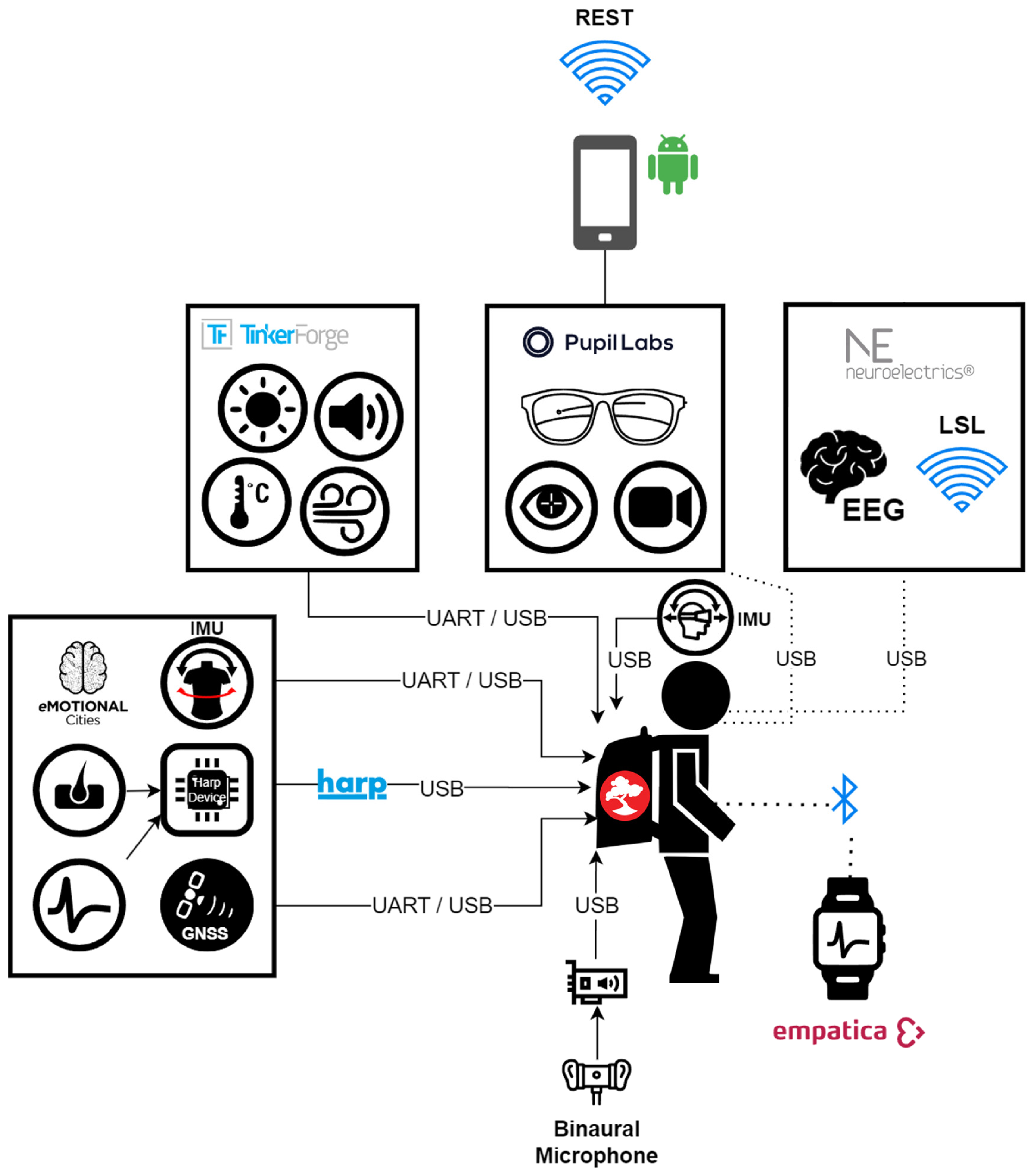

2.3. Spatiotemporal Sensor Synchronization

2.4. Integration Software

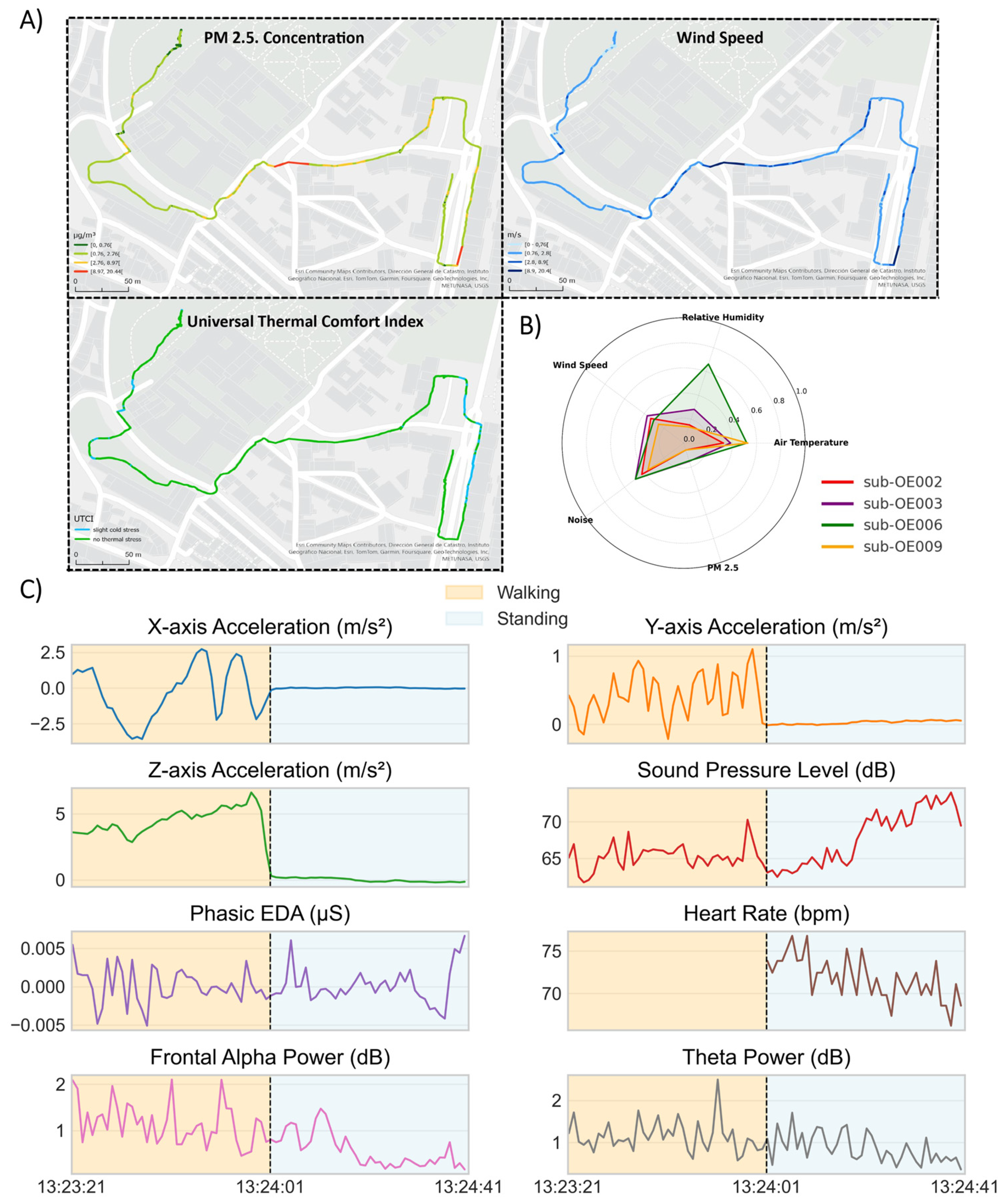

3. Experimental Setting for Testing and Results

3.1. Participants

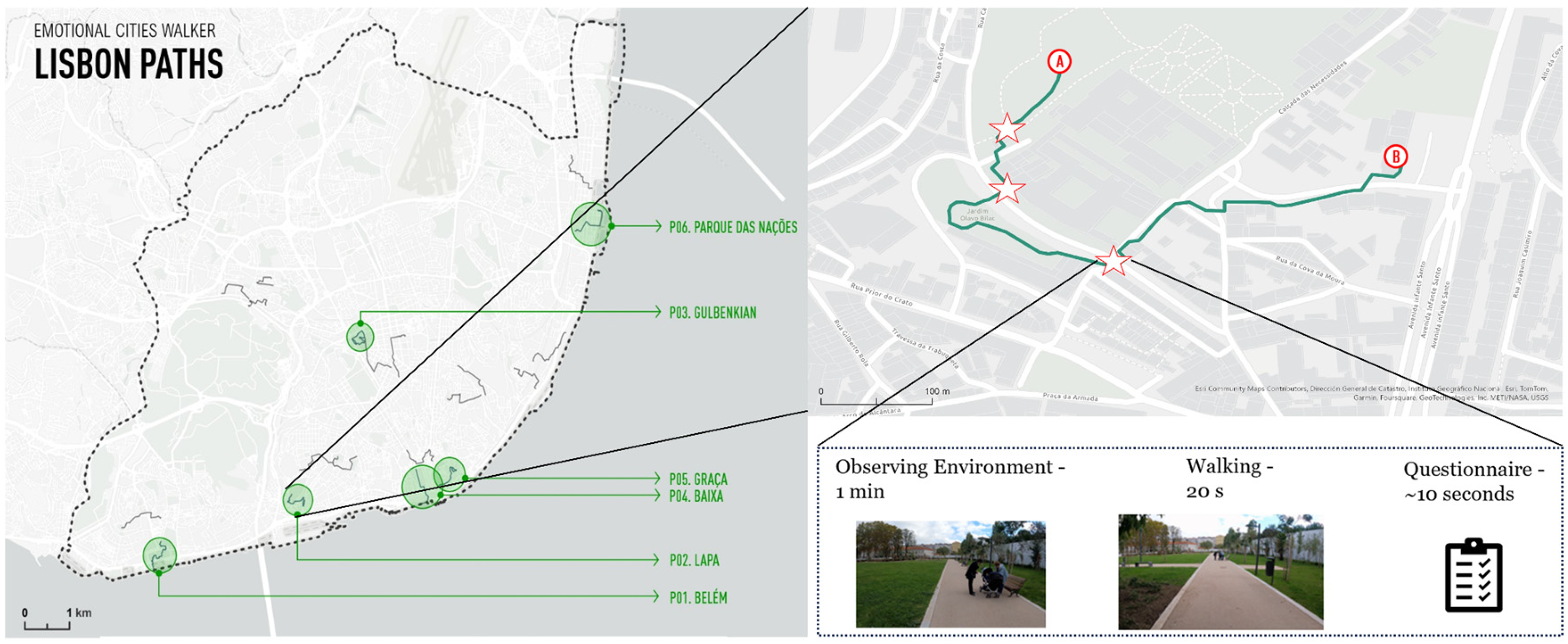

3.2. Experimental Procedures and Stimuli

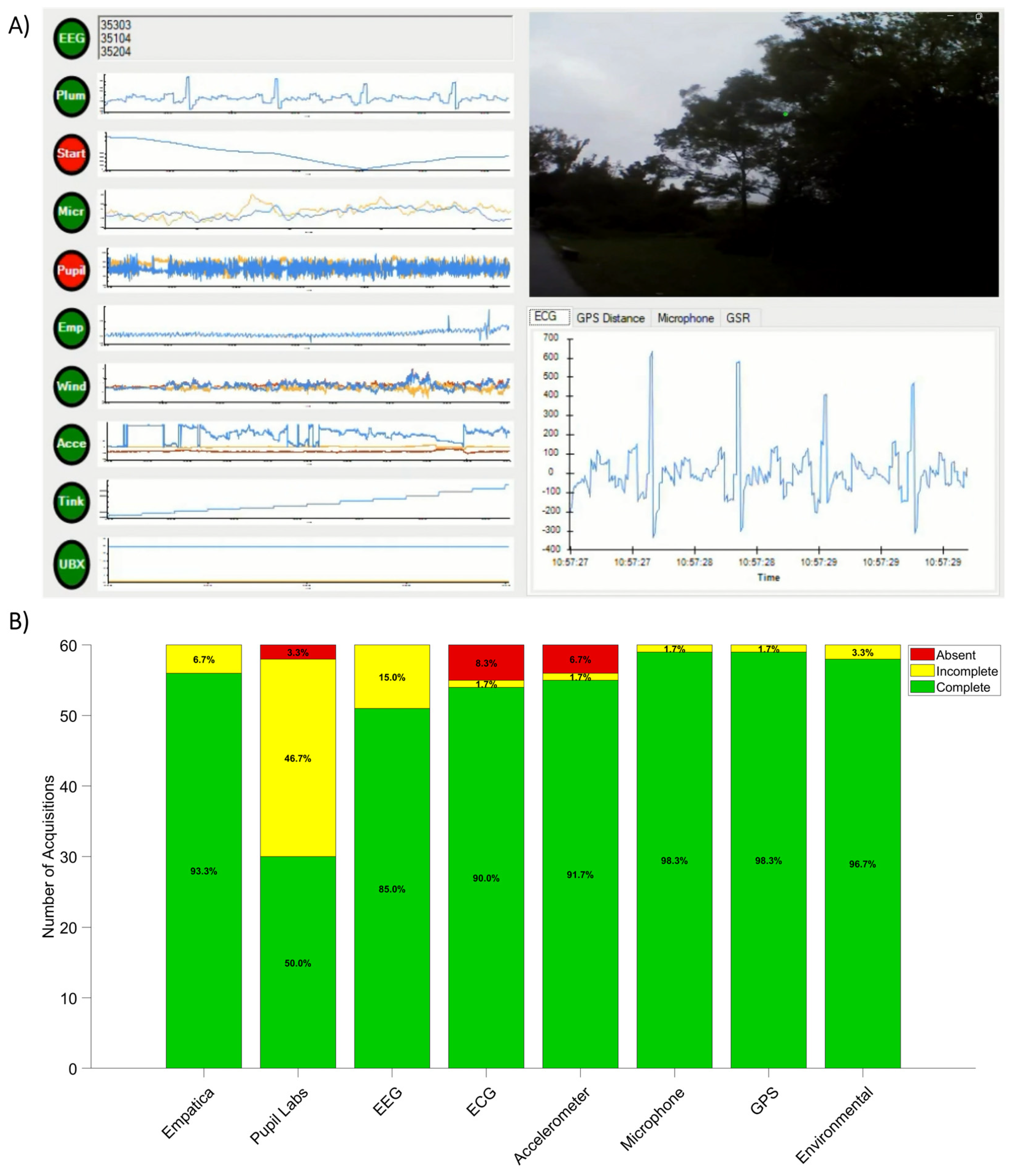

3.3. Sensor Reliability

3.4. Output Data Structure

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Corburn, J. Equitable and Healthy City Planning: Towards Healthy Urban Governance in the Century of the City. In Healthy Cities; Leeuw, E., Simos, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 31–41.

2. United Nations. Department of Economic and Social Affairs, Population Division. 2024. Available online: https://unhabitat.org/sites/default/files/2022/04/nua_tomorrow_today_together_digital_a.pdf (accessed on 17 November 2025).

3. Joint Research Centre (European Commission); Baranzelli, C.; Vandecasteele, I.; Aurambout, J.-P.; Siragusa, A. The Future of Cities: Opportunities, Challanges and the Way Forward; EUR (Luxembourg. Online); Publications Office of the European Union: Luxembourg, 2019; ISBN 978-92-76-03847-4.

4. Peen, J.; Schoevers, R.A.; Beekman, A.T.; Dekker, J. The Current Status of Urban-Rural Differences in Psychiatric Disorders. Acta Psychiatr. Scand. 2010, 121, 84–93.

5. Mitchell, R.J.; Richardson, E.A.; Shortt, N.K.; Pearce, J. Neighborhood Environments and Socioeconomic Inequalities in Mental Well-Being. Am. J. Prev. Med. 2015, 49, 80–84.

6. Alcock, I.; White, M.P.; Wheeler, B.W.; Fleming, L.E.; Depledge, M.H. Longitudinal Effects on Mental Health of Moving to Greener and Less Green Urban Areas. Environ. Sci. Technol. 2014, 48, 1247–1255.

7. White, M.P.; Elliott, L.R.; Grellier, J.; Economou, T.; Bell, S.; Bratman, G.N.; Cirach, M.; Gascon, M.; Lima, M.L.; Lõhmus, M.; et al. Associations between Green/Blue Spaces and Mental Health across 18 Countries. Sci. Rep. 2021, 11, 8903.

8. Bonifácio, A. The Role of Bluespaces for Well-Being and Mental Health. Rivers as Catalysts for the Quality of Urban Life. In Urban and Metropolitan Rivers: Geomorphology, Planning and Perception; Farguell Pérez, J., Santasusagna Riu, A., Eds.; Springer International Publishing: Cham, Switzerland, 2024; pp. 207–222. ISBN 978-3-031-62641-8.

9. Tost, H.; Champagne, F.A.; Meyer-Lindenberg, A. Environmental Influence in the Brain, Human Welfare and Mental Health. Nat. Neurosci. 2015, 18, 1421–1431.

10. Stangl, M.; Maoz, S.L.; Suthana, N. Mobile Cognition: Imaging the Human Brain in the ‘Real World’. Nat. Rev. Neurosci. 2023, 24, 347–362.

11. Kuhn, R.L. A Landscape of Consciousness: Toward a Taxonomy of Explanations and Implications. Prog. Biophys. Mol. Biol. 2024, 190, 28–169.

12. Adli, M.; Berger, M.; Brakemeier, E.-L.; Engel, L.; Fingerhut, J.; Gomez-Carrillo, A.; Hehl, R.; Heinz, A.; Mayer, H.J.; Mehran, N.; et al. Neurourbanism: Towards a new discipline. Lancet Psychiatry 2017, 4, 183–185.

13. Ancora, L.A.; Blanco-Mora, D.A.; Alves, I.; Bonifácio, A.; Morgado, P.; Miranda, B. Cities and Neuroscience Research: A Systematic Literature Review. Front. Psychiatry 2022, 13, 983352.

14. Buttazzoni, A.; Doherty, S.; Minaker, L. How Do Urban Environments Affect Young People’s Mental Health? A Novel Conceptual Framework to Bridge Public Health, Planning, and Neurourbanism. Public Health Rep. 2022, 137, 48–61.

15. Bonifácio, A.; Morgado, P.; Peponi, A.; Ancora, L.; Blanco-Mora, D.A.; Conceição, M.; Miranda, B. Musings on Neurourbanism, Public Space and Urban Health. Finisterra 2023, 58, 63–88.

16. Pykett, J.; Osborne, T.; Resch, B. From Urban Stress to Neurourbanism: How Should We Research City Well-Being? Ann. Am. Assoc. Geogr. 2020, 110, 1936–1951.

17. Vigliocco, G.; Convertino, L.; De Felice, S.; Gregorians, L.; Kewenig, V.; Mueller, M.A.E.; Veselic, S.; Musolesi, M.; Hudson-Smith, A.; Tyler, N.; et al. Ecological Brain: Reframing the Study of Human Behaviour and Cognition. R. Soc. Open Sci. 2024, 11, 240762.

18. Vallet, W.; Van Wassenhove, V. Can Cognitive Neuroscience Solve the Lab-Dilemma by Going Wild? Neurosci. Biobehav. Rev. 2023, 155, 105463.

19. Casson, A.J. Wearable EEG and Beyond. Biomed. Eng. Lett. 2019, 9, 53–71.

20. Kowalski, L.F.; Lopes, A.M.S.; Masiero, E. Integrated Effects of Pavement Simulation Models and Scale Differences on the Thermal Environment of Tropical Cities: Physical and Numerical Modeling Experiments. City Built Environ. 2024, 2, 9.

21. Melnikov, V.R.; Christopoulos, G.I.; Krzhizhanovskaya, V.V.; Lees, M.H.; Sloot, P.M.A. Behavioural Thermal Regulation Explains Pedestrian Path Choices in Hot Urban Environments. Sci. Rep. 2022, 12, 2441.

22. Silva, T.; Reis, C.; Braz, D.; Vasconcelos, J.; Lopes, A. Climate Walking and Linear Mixed Model Statistics for the Seasonal Outdoor Thermophysiological Comfort Assessment in Lisbon. Urban Clim. 2024, 55, 101933.

23. Reis, C.; Nouri, A.S.; Lopes, A. Human Thermo-Physiological Comfort Assessment in Lisbon by Local Climate Zones on Very Hot Summer Days. Front. Earth Sci. 2023, 11, 1099045.

24. Silva, T.; Lopes, A.; Vasconcelos, J. A Micro-Scale Look into Pedestrian Thermophysiological Comfort in an Urban Environment. Bull. Atmos. Sci. Technol. 2024, 5, 18.

25. Chokhachian, A.; Ka-Lun Lau, K.; Perini, K.; Auer, T. Sensing Transient Outdoor Comfort: A Georeferenced Method to Monitor and Map Microclimate. J. Build. Eng. 2018, 20, 94–104.

26. Santucci, D.; Chokhachian, A. Climatewalks: Human centered environmental sensing. In Conscious Cities Anthology 2019: Science-Informed Architecture and Urbanism; 2019; Available online: https://theccd.org/article/climatewalks-human-centered-environmental-sensing/ (accessed on 17 November 2025).

27. Helbig, C.; Ueberham, M.; Becker, A.M.; Marquart, H.; Schlink, U. Wearable Sensors for Human Environmental Exposure in Urban Settings. Curr. Pollut. Rep. 2021, 7, 417–433.

28. Salamone, F.; Masullo, M.; Sibilio, S. Wearable Devices for Environmental Monitoring in the Built Environment: A Systematic Review. Sensors 2021, 21, 4727.

29. Lin, X.; Luo, J.; Liao, M.; Su, Y.; Lv, M.; Li, Q.; Xiao, S.; Xiang, J. Wearable Sensor-Based Monitoring of Environmental Exposures and the Associated Health Effects: A Review. Biosensors 2022, 12, 1131.

30. Gramann, K.; Gwin, J.T.; Ferris, D.P.; Oie, K.; Jung, T.-P.; Lin, C.-T.; Liao, L.-D.; Makeig, S. Cognition in Action: Imaging Brain/Body Dynamics in Mobile Humans. Rev. Neurosci. 2011, 22, 593–608.

31. Mavros, P.; Austwick, M.Z.; Smith, A.H. Geo-EEG: Towards the Use of EEG in the Study of Urban Behaviour. Appl. Spat. Anal. 2016, 9, 191–212.

32. Zander, T.O.; Lehne, M.; Ihme, K.; Jatzev, S.; Correia, J.; Kothe, C.; Picht, B.; Nijboer, F. A Dry EEG-System for Scientific Research and Brain–Computer Interfaces. Front. Neurosci. 2011, 5, 53.

33. Gorjan, D.; Gramann, K.; Pauw, K.D.; Marusic, U. Removal of Movement-Induced EEG Artifacts: Current State of the Art and Guidelines. J. Neural Eng. 2022, 19, 011004.

34. Klug, M.; Jeung, S.; Wunderlich, A.; Gehrke, L.; Protzak, J.; Djebbara, Z.; Argubi-Wollesen, A.; Wollesen, B.; Gramann, K. The BeMoBIL Pipeline for Automated Analyses of Multimodal Mobile Brain and Body Imaging Data. bioRxiv 2022, preprint.

35. Klug, M.; Gramann, K. Identifying Key Factors for Improving ICA-Based Decomposition of EEG Data in Mobile and Stationary Experiments. Eur. J. Neurosci. 2021, 54, 8406–8420.

36. Mavros, P.; Wälti, M.J.; Nazemi, M.; Ong, C.H.; Hölscher, C. A Mobile EEG Study on the Psychophysiological Effects of Walking and Crowding in Indoor and Outdoor Urban Environments. Sci. Rep. 2022, 12, 18476.

37. Aspinall, P.; Mavros, P.; Coyne, R.; Roe, J. The Urban Brain: Analysing Outdoor Physical Activity with Mobile EEG. Br. J. Sports Med. 2015, 49, 272–276.

38. Debener, S.; Minow, F.; Emkes, R.; Gandras, K.; de Vos, M. How about Taking a Low-Cost, Small, and Wireless EEG for a Walk? Psychophysiology 2012, 49, 1617–1621.

39. Buttazzoni, A.; Parker, A.; Minaker, L. Investigating the Mental Health Implications of Urban Environments with Neuroscientific Methods and Mobile Technologies: A Systematic Literature Review. Health Place 2021, 70, 102597.

40. Kiefer, P.; Giannopoulos, I.; Raubal, M.; Duchowski, A. Eye Tracking for Spatial Research: Cognition, Computation, Challenges. Spat. Cogn. Comput. 2017, 17, 1–19.

41. Kiefer, P.; Giannopoulos, I.; Raubal, M. Where Am I? Investigating Map Matching During Self-Localization with Mobile Eye Tracking in an Urban Environment. Trans. GIS 2014, 18, 660–686.

42. Bolpagni, M.; Pardini, S.; Dianti, M.; Gabrielli, S. Personalized Stress Detection Using Biosignals from Wearables: A Scoping Review. Sensors 2024, 24, 3221.

43. Reeves, J.P.; Knight, A.T.; Strong, E.A.; Heng, V.; Neale, C.; Cromie, R.; Vercammen, A. The Application of Wearable Technology to Quantify Health and Wellbeing Co-Benefits from Urban Wetlands. Front. Psychol. 2019, 10, 1840.

44. Kim, J.; Bouchard, C.; Bianchi-Berthouze, N.; Aoussat, A. Measuring Semantic and Emotional Responses to Bio-Inspired Design. In Design Creativity 2010; Taura, T., Nagai, Y., Eds.; Springer: London, UK, 2011; pp. 131–138.

45. Kyriakou, K.; Resch, B.; Sagl, G.; Petutschnig, A.; Werner, C.; Niederseer, D.; Liedlgruber, M.; Wilhelm, F.; Osborne, T.; Pykett, J. Detecting Moments of Stress from Measurements of Wearable Physiological Sensors. Sensors 2019, 19, 3805.

46. Jendritzky, G.; De Dear, R.; Havenith, G. UTCI—Why Another Thermal Index? Int. J. Biometeorol. 2012, 56, 421–428.

47. Hojaiji, H.; Goldstein, O.; King, C.E.; Sarrafzadeh, M.; Jerrett, M. Design and Calibration of a Wearable and Wireless Research Grade Air Quality Monitoring System for Real-Time Data Collection. In Proceedings of the 2017 IEEE Global Humanitarian Technology Conference (GHTC), San Jose, CA, USA, 19–22 October 2017; pp. 1–10.

48. Osborne, T. Restorative and Afflicting Qualities of the Microspace Encounter: Psychophysiological Reactions to the Spaces of the City. Ann. Am. Assoc. Geogr. 2022, 112, 1461–1483.

49. Johnson, T.; Kanjo, E.; Woodward, K. DigitalExposome: Quantifying Impact of Urban Environment on Wellbeing Using Sensor Fusion and Deep Learning. Comput. Urban Sci. 2023, 3, 14.

50. Askari, F.; Habibi, A.; Fattahi, K. Advancing Neuro-Urbanism: Integrating Environmental Sensing and Human-Centered Design for Healthier Cities. Contrib. Sci. Technol. Eng. 2025, 2, 37–50.

51. Kothe, C.; Shirazi, S.Y.; Stenner, T.; Medine, D.; Boulay, C.; Grivich, M.I.; Artoni, F.; Mullen, T.; Delorme, A.; Makeig, S. The Lab Streaming Layer for Synchronized Multimodal Recording. Imaging Neurosci. 2025, 3, IMAG.a.136.

52. Silva, T.; Ramusga, R.; Matias, M.; Amaro, J.; Bonifácio, A.; Reis, C.; Chokhachian, A.; Lopes, G.; Almeida, A.; Frazão, J.; et al. Climate Walking: A Comparison Study of Mobile Weather Stations and Their Relevance for Urban Planning, Design, Human Health and Well-Being. City Environ. Interact. 2025, 27, 100212.

53. Cruz, B.F.; Carriço, P.; Teixeira, L.; Freitas, S.; Mendes, F.; Bento, D.; Silva, A. A Flexible Fluid Delivery System for Rodent Behavior Experiments. eNeuro 2025, 12, ENEURO.0024-25.2025.

54. Silva, A.; Carvalho, F.; Cruz, B.F. High-Performance Wide-Band Open-Source System for Acoustic Stimulation. HardwareX 2024, 19, e00555.

55. Carvalho, F.; Silva, A.; Cruz, B.; Frazao, J.; Lopes, G. Harp: A Standard for Reactive and Self-Synchronising Hardware for Behavioural Research. 2024. Available online: https://zenodo.org/records/15874648 (accessed on 17 November 2025).

56. Lopes, G.; Bonacchi, N.; Frazão, J.; Neto, J.P.; Atallah, B.V.; Soares, S.; Moreira, L.; Matias, S.; Itskov, P.M.; Correia, P.A.; et al. Bonsai: An Event-Based Framework for Processing and Controlling Data Streams. Front. Neuroinform. 2015, 9, 7.

57. Miranda, B.; Bonifácio, A.; Ancora, L.; Blanco Mora, D.A.; Conceição, M.A.; Amaro, J.; Meshi, D.; Kaur, A.; Hoogstraten, S.; Blanco Casares, Á.D.; et al. eMOTIONAL Cities Deliverable 5.3.—Report on the Results of the Indoor Lab Experiments. 2025. Available online: https://zenodo.org/records/15204462 (accessed on 17 November 2025).

58. Gramann, K. Mobile EEG for Neurourbanism Research—What Could Possibly Go Wrong? A Critical Review with Guidelines. J. Environ. Psychol. 2024, 96, 102308.

59. Zhang, Z.; Amegbor, P.M.; Sabel, C.E. Assessing the Current Integration of Multiple Personalised Wearable Sensors for Environment and Health Monitoring. Sensors 2021, 21, 7693.

60. Niso, G.; Romero, E.; Moreau, J.T.; Araujo, A.; Krol, L.R. Wireless EEG: A Survey of Systems and Studies. NeuroImage 2023, 269, 119774.

61. Lau-Zhu, A.; Lau, M.P.H.; McLoughlin, G. Mobile EEG in Research on Neurodevelopmental Disorders: Opportunities and Challenges. Dev. Cogn. Neurosci. 2019, 36, 100635.

62. Milstein, N.; Gordon, I. Validating Measures of Electrodermal Activity and Heart Rate Variability Derived from the Empatica E4 Utilized in Research Settings That Involve Interactive Dyadic States. Front. Behav. Neurosci. 2020, 14, 148.

63. Ruffini, G.; Dunne, S.; Farres, E.; Cester, I.; Watts, P.C.P.; Silva, S.R.P.; Grau, C.; Fuentemilla, L.; Marco-Pallares, J.; Vandecasteele, B. ENOBIO Dry Electrophysiology Electrode; First Human Trial plus Wireless Electrode System. In Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 6689–6693.

| Modality | Use Cases | Outcomes |
|---|---|---|
| EEG | Band power activity and time-domain neural responses associated with emotional states or cognitive tasks in urban environments [13,39]. | Increases in global alpha and theta power have been associated with relaxation states, particularly in naturalistic environmental settings [13]. |
| Eye-tracker | Eye-tracking metrics such as fixations, saccades, blink rate, and scanpath length provide valuable indices of attentional processes in real-world environments [40]. | In spatial navigation tasks, increased visual attention to salient landmarks, as reflected in gaze fixations, enhances self-localization performance in urban environments [41]. |
| Cardiovascular | Cardiovascular metrics such as heart rate or blood volume pulse are widely used as real-time indicators of autonomic stress responses in urban contexts [42]. | Wearable monitoring has revealed significant heart rate differences between wetland and urban settings, indicating measurable physiological benefits of exposure to natural environments [43]. |
| Electrodermal activity (EDA) | EDA provides a reliable index of emotional arousal, allowing researchers to capture how different environmental features elicit affective responses [44]. | Wearable sensors for EDA have identified acute moments of stress during city walks, providing fine-grained insights into how urban environments impact human well-being [45]. |
| Environmental | Identification of emotional “micro-spaces” within the built environment that contribute to better wellbeing [48,49]. | Air pollution, high temperature and inadequate light intensity have been shown to modulate physiological responses, acting as potential urban stressors [48,49,50]. |
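The EEG row above refers to band power in the alpha and theta ranges. As a rough illustration of what such a metric is, the following minimal sketch computes mean spectral power in a frequency band with a naive discrete Fourier transform. It is not the analysis pipeline used in the cited studies: the function name `band_power`, the band edges (theta 4–8 Hz, alpha 8–13 Hz, a common convention), and the synthetic test signal are all assumptions made for this example. The 500 Hz rate matches the EEG sampling rate listed in the sensor table.

```python
import math

def band_power(signal, fs, f_lo, f_hi):
    """Mean squared DFT magnitude over bins with f_lo <= f < f_hi.

    Uses a naive DFT evaluated only at the bins inside the band, which is
    adequate for a short illustrative signal (hypothetical helper).
    """
    n = len(signal)
    total, count = 0.0, 0
    for k in range(1, n // 2):
        f = k * fs / n  # centre frequency of bin k in Hz
        if f_lo <= f < f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            total += (re * re + im * im) / (n * n)
            count += 1
    return total / count if count else 0.0

fs = 500   # Hz, EEG sampling rate from the sensor table
n = 500    # one second of data, giving 1 Hz frequency resolution

# Synthetic signal (made up): strong 10 Hz component plus a weak 6 Hz component
signal = [
    math.sin(2 * math.pi * 10 * i / fs) + 0.2 * math.sin(2 * math.pi * 6 * i / fs)
    for i in range(n)
]

theta = band_power(signal, fs, 4, 8)    # assumed theta band
alpha = band_power(signal, fs, 8, 13)   # assumed alpha band
print(f"theta={theta:.4f} alpha={alpha:.4f}")
```

With real recordings, a windowed estimator such as Welch's method and prior artifact removal would normally replace the naive transform shown here.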

| | Name | Brand | Data Type | Units | Sampling Rate |
|---|---|---|---|---|---|
| Biosensors | Enobio® 32 headset | Neuroelectrics® | EEG | μV | 500 Hz |
| | Pupil Invisible® | Pupil Labs GmbH | World Camera | Image Frame | 32 Hz |
| | | | Gaze | Pixels | 250 Hz |
| | AD8232 Heart Rate Monitor | SparkFun® | ECG | mV | 1000 Hz |
| | Binaural OKM II Solo microphones | Soundman® | Audio | dBV/Pa | 44,100 Hz |
| | E4 wristband | Empatica Inc. | Heart rate | bpm | 1.56 Hz |
| | | | Blood Volume Pulse | mmHg | 64 Hz |
| | | | Skin Temperature | °C | 4 Hz |
| | | | Electrodermal Activity | μS | |
| | BNO055 9-axis IMU | Bosch Sensortec GmbH | Orientation | degrees | 50 Hz |
| | | | Gyroscope | | |
| | | | Magnetometer | | |
| | | | Accelerometer | m/s² | |
| | | | Gravity | | |
| Spatiotemporal Sensors * | Harp Clock | Harp | Harp Timestamp | μs | 31,250 Hz |
| | GNSS ZED-F9R | u-blox | Latitude | DD°MIN′SEC″ DIRECTION | 1 Hz |
| | | | Longitude | | |
| | | | Altitude | meters | |
| | | | Time | hh:mm:ss | |
| Environmental Sensors | PTC Bricklet | Tinkerforge | Temperature | °C | 100 Hz |
| | Industrial Dual 0–20 mA Bricklet | | Irradiance | mA | |
| | Thermocouple Bricklet | | Black Globe Temperature | °C | |
| | Particulate Matter Bricklet | | Particulate Matter | μg/m³ | |
| | Sound Pressure Level Bricklet | | Sound Pressure Level | dB(A) | |
| | Humidity Bricklet | | Humidity | %RH | 1 Hz |
| | Air Quality Bricklet | | Air Pressure | hPa | |
| | Atmos 22 | METER Group | North Wind Speed | m/s | 2 Hz |
| | | | East Wind Speed | | |
| | | | Gust Wind | | |
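The sensors in this table run at very different rates, from 1 Hz (GNSS) to 44,100 Hz (audio), so any joint analysis has to map samples onto a shared timeline. The following minimal sketch shows one simple way to do that, nearest-timestamp matching, using rates taken from the table. It is an illustration under stated assumptions, not the synchronization method of the system itself: the helper names (`sample_times`, `nearest_index`, `align_to`), the idealized jitter-free timestamps, and the temperature readings are all hypothetical.

```python
from bisect import bisect_left

def sample_times(rate_hz, duration_s, start_s=0.0):
    """Idealized timestamps (seconds) of a stream sampled at a fixed rate."""
    n = int(round(rate_hz * duration_s))
    return [start_s + i / rate_hz for i in range(n)]

def nearest_index(times, t):
    """Index of the entry in sorted `times` closest to t (ties go to the earlier sample)."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    return i if times[i] - t < t - times[i - 1] else i - 1

def align_to(reference_times, times, values):
    """For each reference timestamp, take the value of the nearest sample in another stream."""
    return [values[nearest_index(times, t)] for t in reference_times]

# Rates from the table: EEG 500 Hz, E4 skin temperature 4 Hz, GNSS 1 Hz
eeg_t = sample_times(500, 2.0)
temp_t = sample_times(4, 2.0)
temp_v = [30.0 + 0.1 * i for i in range(len(temp_t))]  # made-up readings in °C
gnss_t = sample_times(1, 2.0)

# Attach one skin-temperature value to every GNSS fix
temp_at_gnss = align_to(gnss_t, temp_t, temp_v)

# A 31,250 Hz clock rate corresponds to a 32 µs period
harp_tick_us = 1_000_000 / 31_250
print(len(eeg_t), temp_at_gnss, harp_tick_us)
```

Nearest-sample matching keeps the original values untouched, which suits slowly varying signals such as temperature. For fast signals, interpolation or resampling with an anti-aliasing filter would be the more usual choice.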

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Amaro, J.; Ramusga, R.; Bonifácio, A.; Almeida, A.; Frazão, J.; Cruz, B.F.; Erskine, A.; Carvalho, F.; Lopes, G.; Chokhachian, A.; Santucci, D.; Morgado, P.; Miranda, B. Advancing Mobile Neuroscience: A Novel Wearable Backpack for Multi-Sensor Research in Urban Environments. Sensors 2025, 25, 7163. https://doi.org/10.3390/s25237163