A Multi-Step Grasping Framework for Zero-Shot Object Detection in Everyday Environments Based on Lightweight Foundational General Models

Abstract

1. Introduction

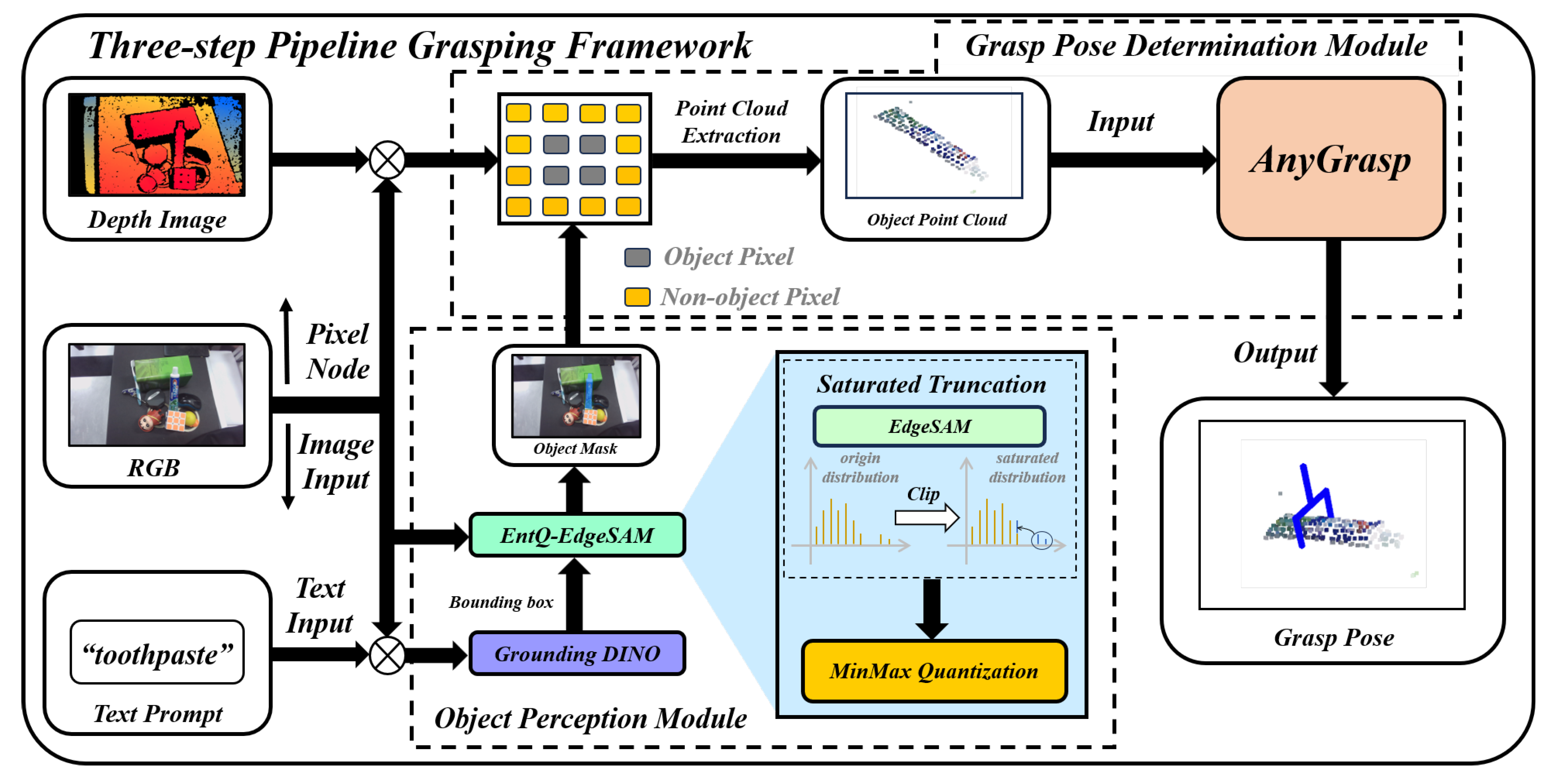

- A novel and efficient Three-step Pipeline Grasping Framework (TPGF) is proposed. This framework addresses the critical challenges of convenient deployment, zero-shot object perception in everyday environments, and low-latency performance in service robotics. By modularly integrating foundational general models (Grounding DINO and EdgeSAM) and a pre-trained grasp network (AnyGrasp), the TPGF achieves robust grasping of zero-shot objects in cluttered scenes without requiring additional training or fine-tuning.

- A Saturated Truncation strategy based on minimizing relative information entropy is proposed. The strategy targets the limited computational and storage resources of household service robots. It significantly improves the accuracy of INT8 quantization for EdgeSAM and reduces hardware overhead, enabling the resulting EntQ-EdgeSAM to run inference 95% faster than the SAM used in existing grasping frameworks.

- The practical value and robustness of the TPGF are validated through comprehensive experiments. The results demonstrate the superior generalization capability of our Object Perception Module (OPM) in zero-shot object perception tasks. Furthermore, the TPGF achieves high recognition accuracy and grasping success rates in replicated everyday environments, providing an efficient and reliable solution for practical service robot deployment.
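The three-step structure described in the contributions above can be sketched as follows. This is only an illustrative outline, not the authors' implementation: the callables `detect`, `segment`, and `propose_grasps` are hypothetical stand-ins for Grounding DINO, EntQ-EdgeSAM, and AnyGrasp, and the way the mask is used to restrict the point cloud is an assumption about how the modules might be chained.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

import numpy as np

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Grasp:
    """Hypothetical grasp candidate: a 3D position plus a quality score."""
    position: np.ndarray  # shape (3,)
    score: float


def run_pipeline(
    rgb: np.ndarray,        # (H, W, 3) color image
    points: np.ndarray,     # (H, W, 3) organized point cloud aligned with rgb
    prompt: str,            # text description of the target object
    detect: Callable[[np.ndarray, str], Sequence[Box]],
    segment: Callable[[np.ndarray, Box], np.ndarray],
    propose_grasps: Callable[[np.ndarray], List[Grasp]],
) -> Optional[Grasp]:
    """Step 1: text-prompted detection. Step 2: box-prompted segmentation.
    Step 3: grasp proposals restricted to the segmented object (assumed)."""
    boxes = detect(rgb, prompt)
    if not boxes:
        return None
    mask = segment(rgb, boxes[0])      # boolean (H, W) mask of the target
    object_points = points[mask]       # (N, 3) points belonging to the target
    if object_points.shape[0] == 0:
        return None
    candidates = propose_grasps(object_points)
    return max(candidates, key=lambda g: g.score, default=None)


# Stub modules so the sketch runs without any model weights (all hypothetical).
def stub_detect(rgb: np.ndarray, prompt: str) -> Sequence[Box]:
    return [(1.0, 1.0, 3.0, 3.0)]


def stub_segment(rgb: np.ndarray, box: Box) -> np.ndarray:
    mask = np.zeros(rgb.shape[:2], dtype=bool)
    x0, y0, x1, y1 = (int(v) for v in box)
    mask[y0:y1, x0:x1] = True
    return mask


def stub_grasps(pts: np.ndarray) -> List[Grasp]:
    return [Grasp(pts.mean(axis=0), 0.9), Grasp(pts[0], 0.4)]


if __name__ == "__main__":
    rgb = np.zeros((4, 4, 3), dtype=np.uint8)
    cloud = np.arange(48, dtype=float).reshape(4, 4, 3)
    best = run_pipeline(rgb, cloud, "cup", stub_detect, stub_segment, stub_grasps)
    print(best)
```

Keeping each stage behind a plain callable mirrors the modular integration the framework emphasizes: any stage can be swapped (for example, a quantized segmenter for a full-precision one) without retraining the others.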

2. Proposed Three-Step Pipeline Grasping Framework

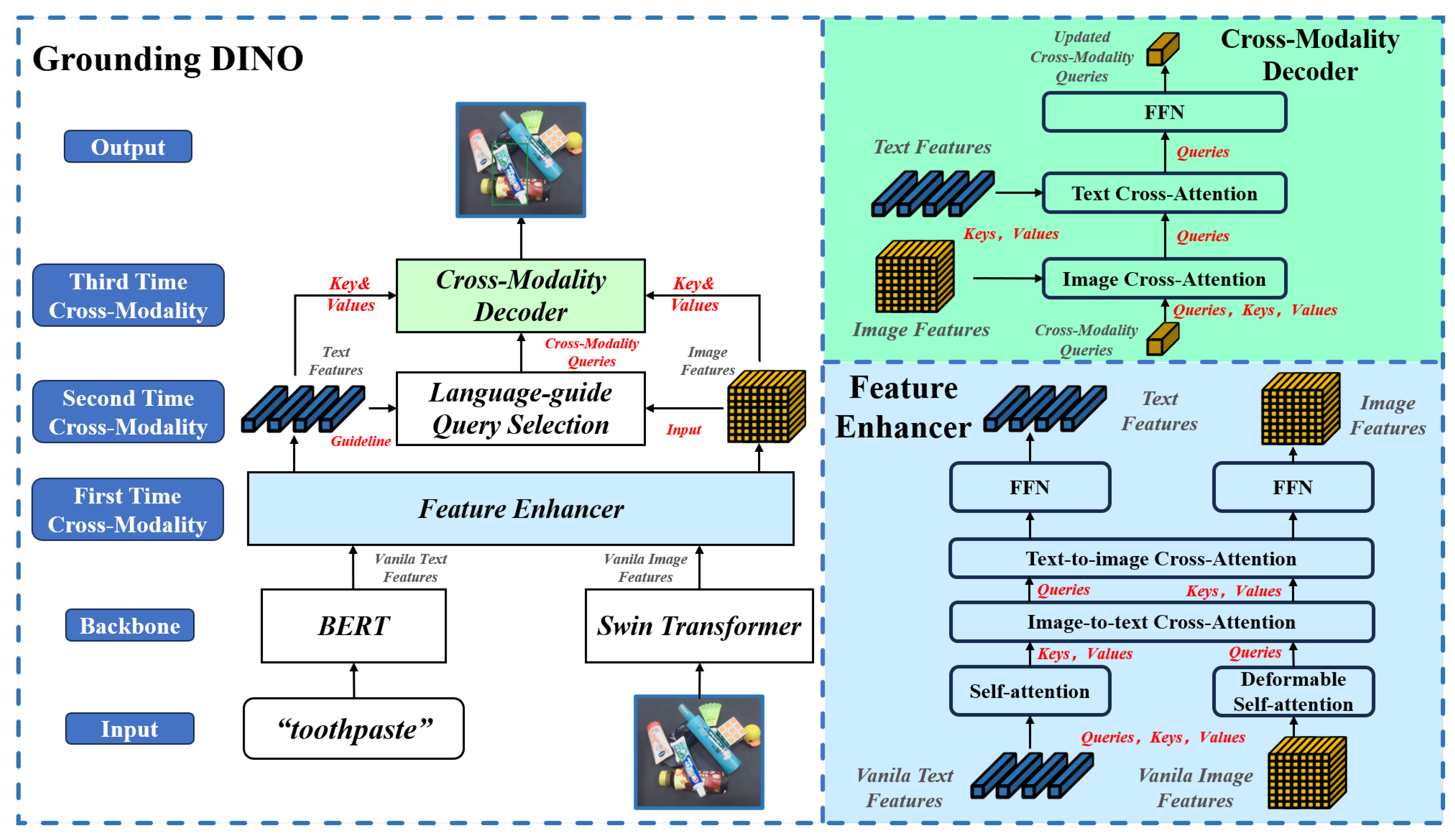

2.1. Object Perception Module Based on Foundational General Models

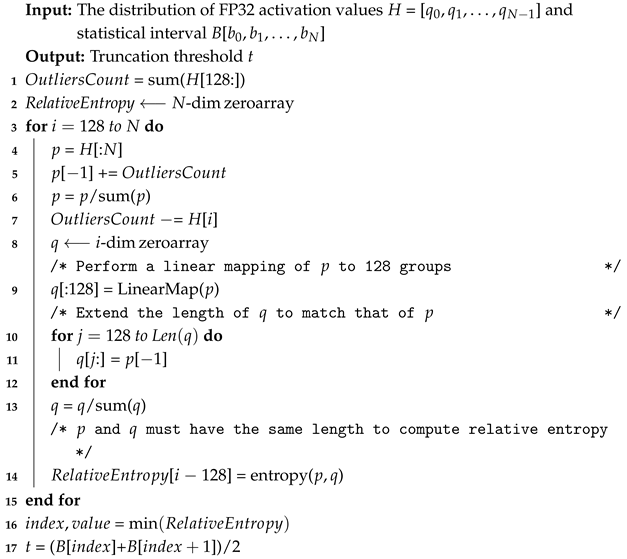

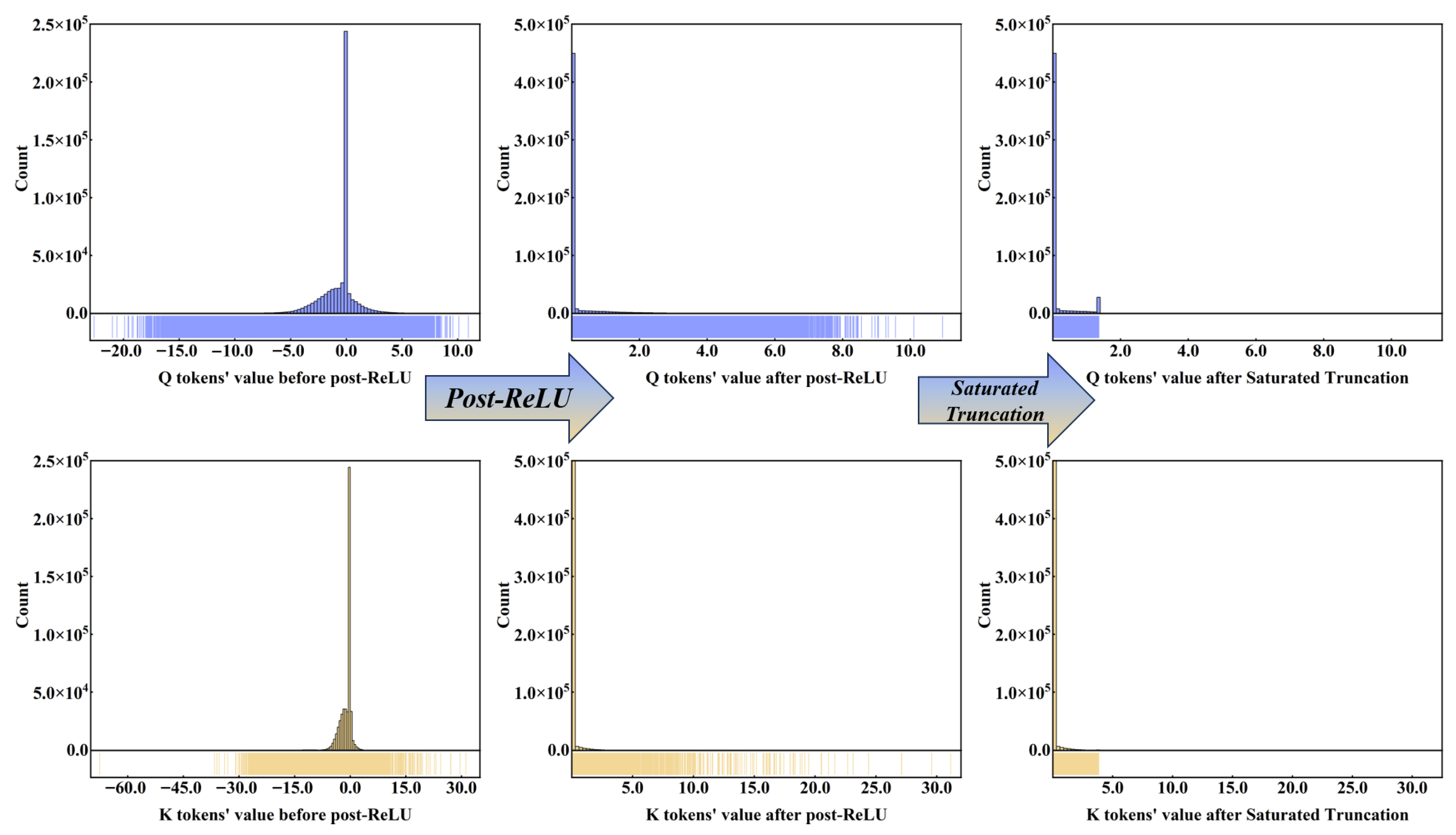

2.2. Saturated Truncation Strategy Based on Minimizing Relative Entropy

Algorithm 1: Saturated Truncation
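The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of the general idea named in the section title: choosing a saturation (clipping) threshold for INT8 quantization by minimizing the relative entropy (KL divergence) between the original activation histogram and its quantized approximation. It follows the widely used entropy-calibration recipe for post-training quantization and may differ from the authors' algorithm. The function names, the bin count, and the number of quantization levels are assumptions.

```python
import numpy as np


def kl_divergence(p: np.ndarray, q: np.ndarray) -> float:
    """Relative entropy KL(P || Q), summed over bins where P > 0."""
    p = p / p.sum()
    q = q / q.sum()
    keep = p > 0
    return float(np.sum(p[keep] * np.log(p[keep] / np.maximum(q[keep], 1e-12))))


def saturation_threshold(activations: np.ndarray,
                         num_bins: int = 2048,
                         num_levels: int = 128) -> float:
    """Return the clipping threshold whose quantized histogram is closest
    (in KL divergence) to the clipped reference histogram."""
    hist, edges = np.histogram(np.abs(activations), bins=num_bins)
    hist = hist.astype(np.float64)
    best_kl, best_i = np.inf, num_bins
    for i in range(num_levels, num_bins + 1):
        # Reference P: clip at bin i and fold the saturated tail into the last bin.
        p = hist[:i].copy()
        p[-1] += hist[i:].sum()
        # Candidate Q: merge the i bins into num_levels levels, then spread each
        # level's mass uniformly over its originally non-empty bins.
        q = np.zeros(i)
        for chunk in np.array_split(np.arange(i), num_levels):
            nonzero = hist[chunk] > 0
            if nonzero.any():
                q[chunk[nonzero]] = hist[chunk].sum() / nonzero.sum()
        if q.sum() == 0:
            continue
        kl = kl_divergence(p, q)
        if kl < best_kl:
            best_kl, best_i = kl, i
    return float(edges[best_i])


def quantize_int8(x: np.ndarray, threshold: float):
    """Symmetric INT8 quantization with saturation at +/- threshold."""
    scale = threshold / 127.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    acts = np.concatenate([rng.normal(0.0, 1.0, 10_000), [100.0]])  # one outlier
    t = saturation_threshold(acts, num_bins=256)
    q, scale = quantize_int8(acts, t)
    print(f"max |x| = {np.abs(acts).max():.1f}, threshold = {t:.2f}")
```

The design point is that a plain min-max range would spend most of the 255 integer levels on the single outlier, whereas a saturated threshold clips the outlier and keeps finer resolution for the bulk of the distribution.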

2.3. Grasp Pose Determination Module

3. Comparison Experiments and Discussion

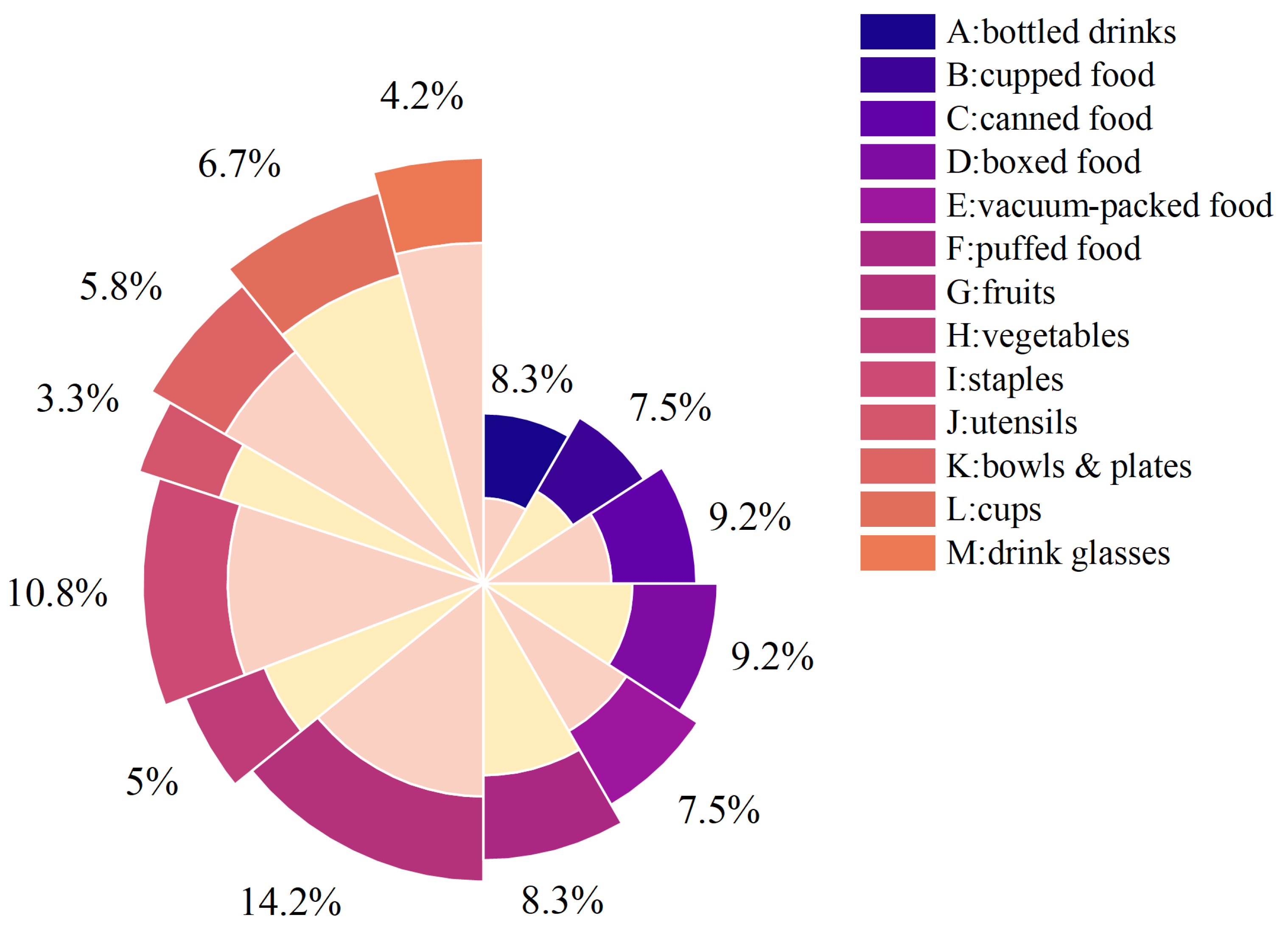

3.1. Dataset

3.2. Metrics

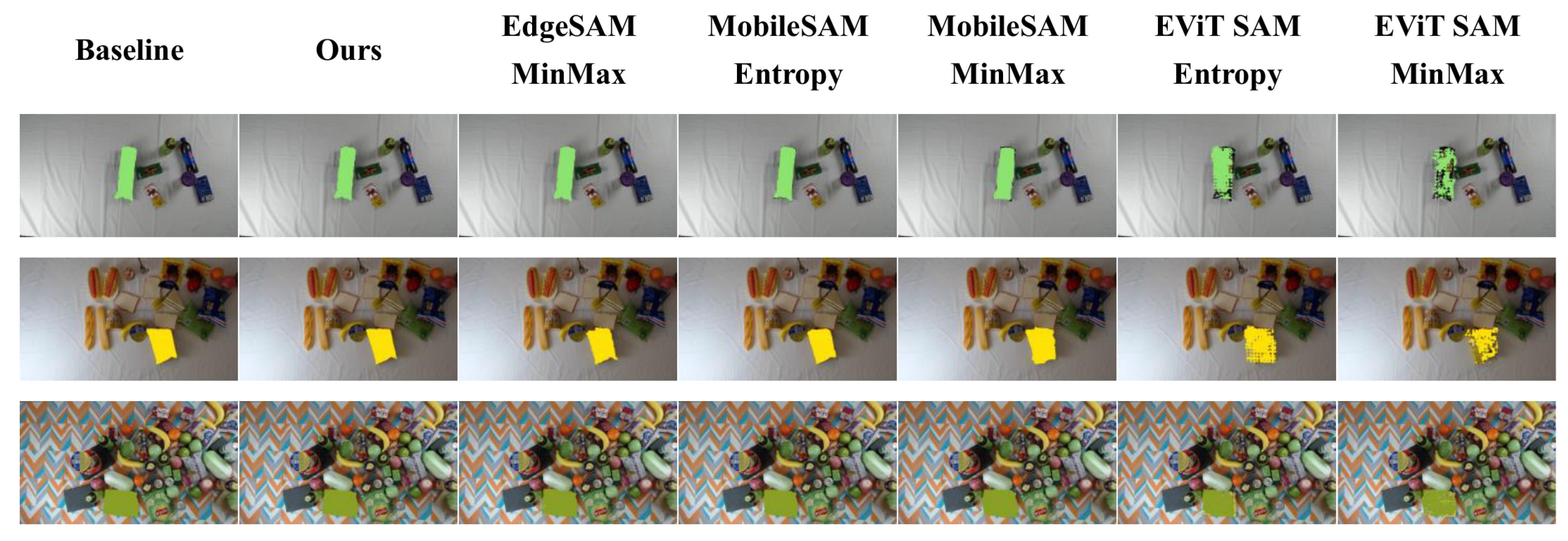

3.3. Generalization Performance Experiment

3.4. Quantization Performance Experiment

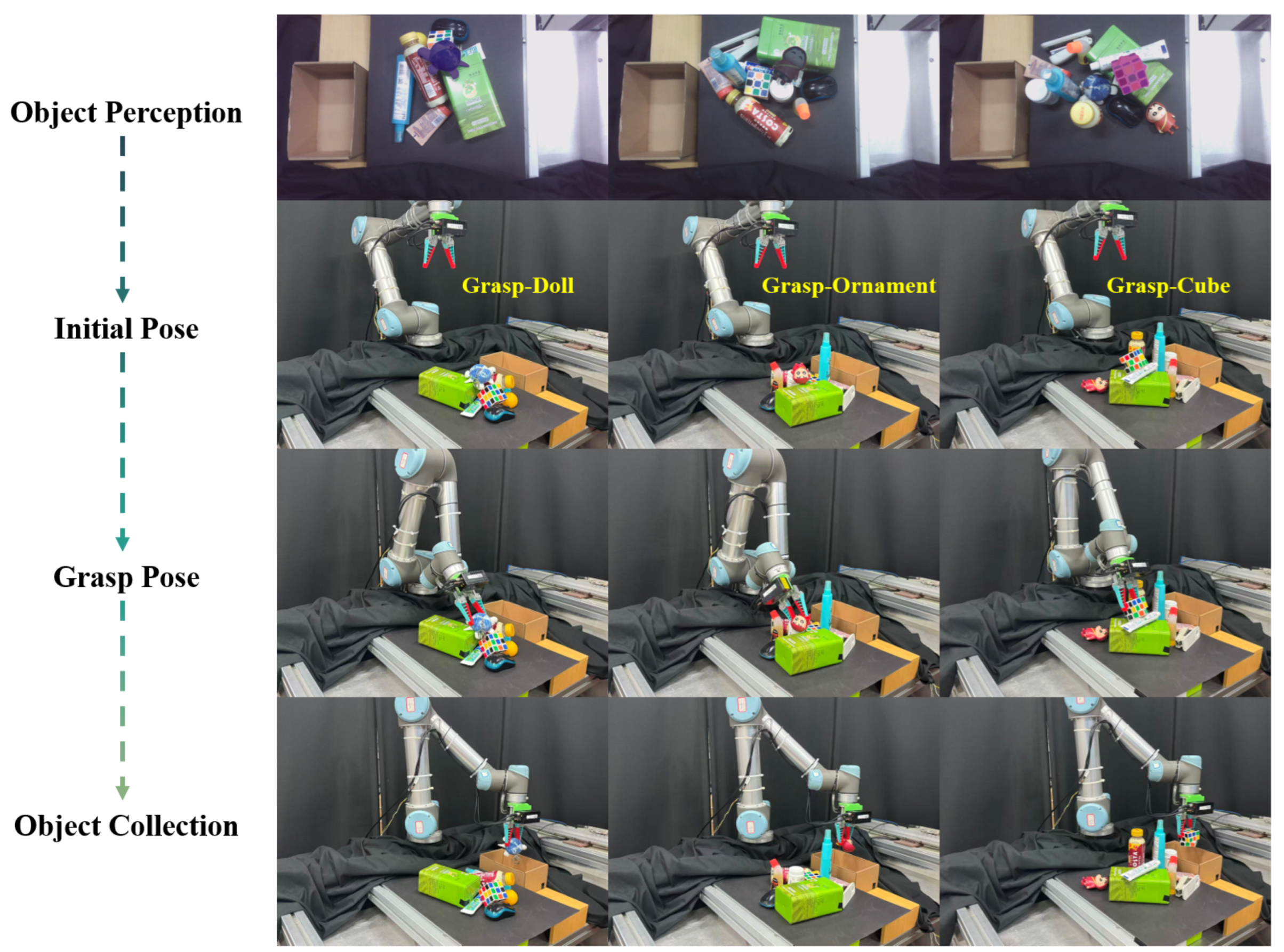

4. Grasping Experiment and Discussion

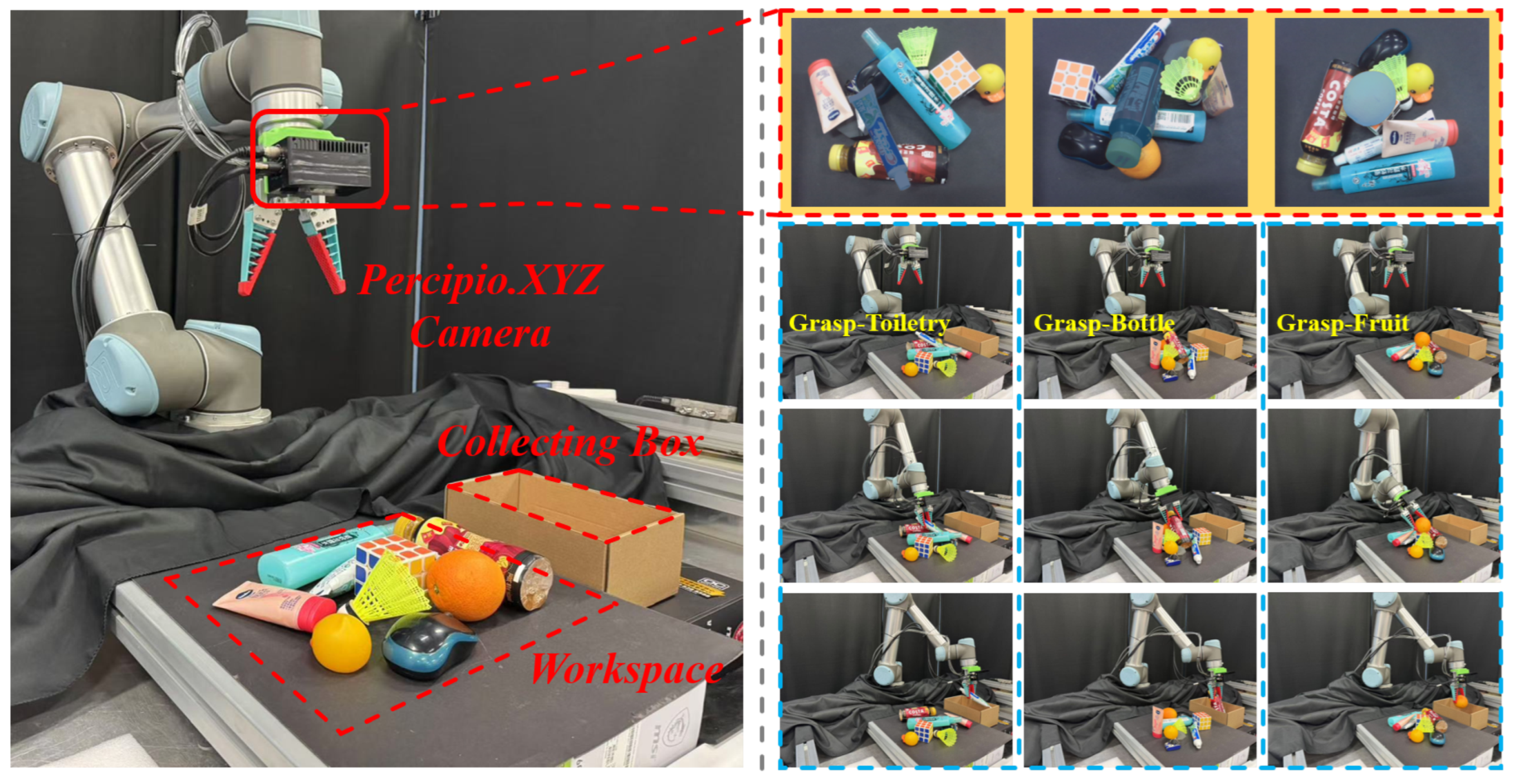

4.1. Experimental Platform

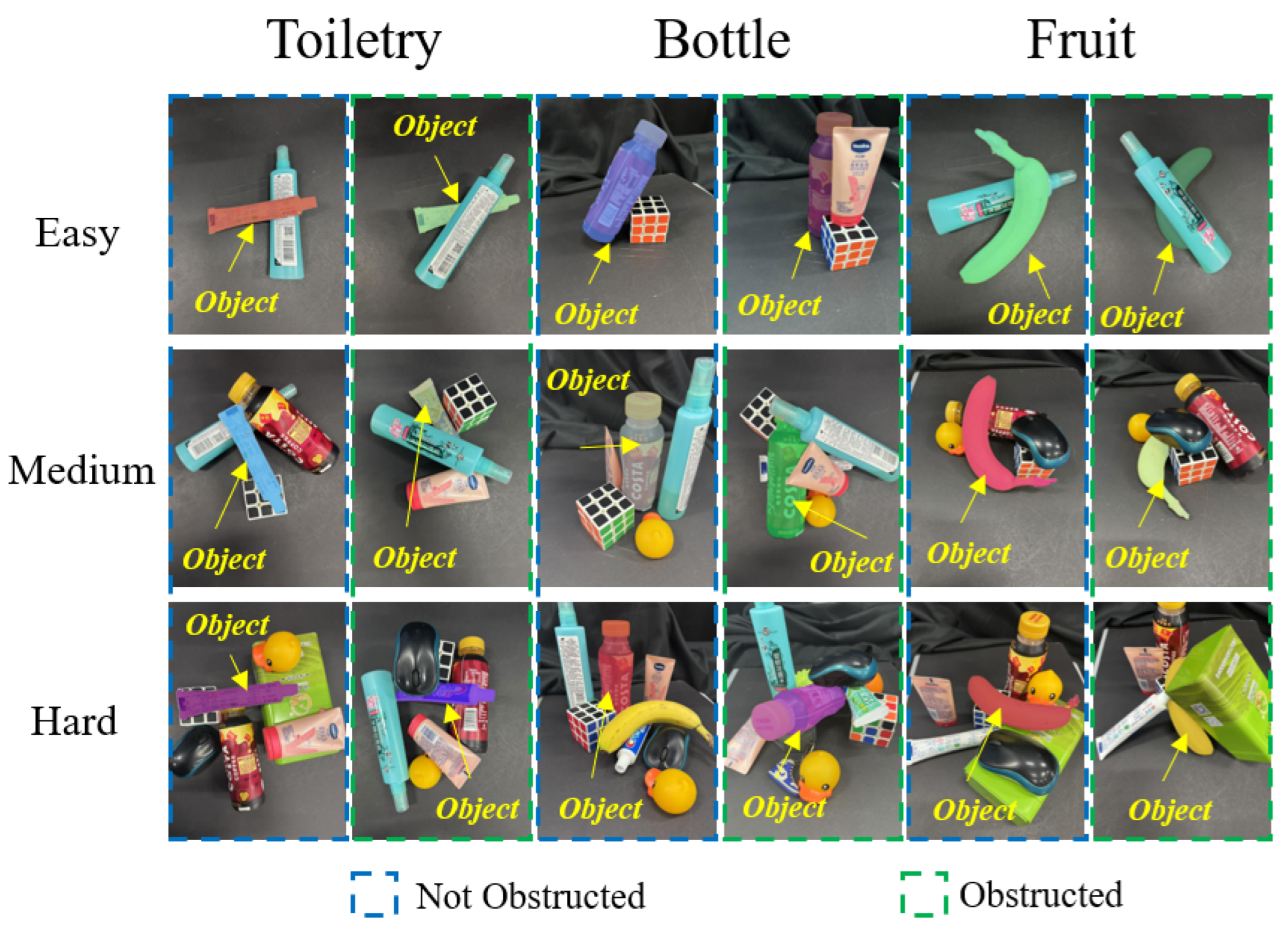

4.2. Grasping Experiments in Simulated Everyday Environments and Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fang, H.S.; Wang, C.; Gou, M.; Lu, C. GraspNet-1Billion: A Large-Scale Benchmark for General Object Grasping. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11441–11450.

- Wang, C.; Fang, H.S.; Gou, M.; Fang, H.; Gao, J.; Lu, C. Graspness Discovery in Clutters for Fast and Accurate Grasp Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 15944–15953.

- Fang, H.S.; Wang, C.; Fang, H.; Gou, M.; Liu, J.; Yan, H.; Liu, W.; Xie, Y.; Lu, C. AnyGrasp: Robust and Efficient Grasp Perception in Spatial and Temporal Domains. IEEE Trans. Robot. 2023, 39, 3929–3945.

- Ainetter, S.; Fraundorfer, F. End-to-end Trainable Deep Neural Network for Robotic Grasp Detection and Semantic Segmentation from RGB. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13452–13458.

- Duan, S.; Tian, G.; Wang, Z.; Liu, S.; Feng, C. A semantic robotic grasping framework based on multi-task learning in stacking scenes. Eng. Appl. Artif. Intell. 2023, 121, 106059.

- Liu, X.; Zhang, Y.; Shan, D. Unseen Object Few-Shot Semantic Segmentation for Robotic Grasping. IEEE Robot. Autom. Lett. 2023, 8, 320–327.

- Su, Y.; Wang, N.; Cui, Z.; Cai, Y.; He, C.; Li, A. Real Scene Single Image Dehazing Network With Multi-Prior Guidance and Domain Transfer. IEEE Trans. Multimed. 2025, 27, 5492–5506.

- Wang, N.; Cui, Z.; Su, Y.; Lan, Y.; Xue, Y.; Zhang, C.; Li, A. Weakly Supervised Image Dehazing via Physics-Based Decomposition. IEEE Trans. Circ. Syst. Video Technol. 2025.

- Brohan, A.; Brown, N.; Carbajal, J.; Chebotar, Y.; Dabis, J.; Finn, C.; Gopalakrishnan, K.; Hausman, K.; Herzog, A.; Hsu, J.; et al. RT-1: Robotics Transformer for Real-World Control at Scale. arXiv 2022, arXiv:2212.06817.

- Brohan, A.; Brown, N.; Carbajal, J.; Chebotar, Y.; Choromanski, K.; Ding, T.; Driess, D.; Dubey, K.A.; Finn, C.; Florence, P.R.; et al. RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control. arXiv 2023, arXiv:2307.15818.

- Black, K.; Brown, N.; Driess, D.; Esmail, A.; Equi, M.; Finn, C.; Fusai, N.; Groom, L.; Hausman, K.; Ichter, B.; et al. π0: A Vision-Language-Action Flow Model for General Robot Control. arXiv 2024, arXiv:2410.24164.

- Intelligence, P.; Black, K.; Brown, N.; Darpinian, J.; Dhabalia, K.; Driess, D.; Esmail, A.; Equi, M.; Finn, C.; Fusai, N.; et al. π0.5: A Vision-Language-Action Model with Open-World Generalization. arXiv 2025, arXiv:2504.16054.

- Wang, N.; Yang, A.; Cui, Z.; Ding, Y.; Xue, Y.; Su, Y. Capsule Attention Network for Hyperspectral Image Classification. Remote Sens. 2024, 16, 4001.

- Wang, N.; Cui, Z.; Lan, Y.; Zhang, C.; Xue, Y.; Su, Y.; Li, A. Large-Scale Hyperspectral Image-Projected Clustering via Doubly Stochastic Graph Learning. Remote Sens. 2025, 17, 1526.

- Wang, N.; Cui, Z.; Li, A.; Xue, Y.; Wang, R.; Nie, F. Multi-order graph based clustering via dynamical low rank tensor approximation. Neurocomputing 2025, 647, 130571.

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H.; et al. Grounding DINO: Marrying DINO with Grounded Pre-training for Open-Set Object Detection. In Proceedings of the Computer Vision—ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 38–55.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3992–4003.

- Ceschini, D.; Cesare, R.D.; Civitelli, E.; Indri, M. Segmentation-Based Approach for a Heuristic Grasping Procedure in Multi-Object Scenes. In Proceedings of the 2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA), Padova, Italy, 10–13 September 2024; pp. 1–4.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Li, J.; Cappelleri, D.J. Sim-Suction: Learning a Suction Grasp Policy for Cluttered Environments Using a Synthetic Benchmark. IEEE Trans. Robot. 2024, 40, 316–331.

- Noh, S.; Kim, J.; Nam, D.; Back, S.; Kang, R.; Lee, K. GraspSAM: When Segment Anything Model Meets Grasp Detection. arXiv 2024, arXiv:2409.12521.

- Zhao, X.; Ding, W.Y.; An, Y.; Du, Y.; Yu, T.; Li, M.; Tang, M.; Wang, J. Fast Segment Anything. arXiv 2023, arXiv:2306.12156.

- Xiong, Y.; Varadarajan, B.; Wu, L.; Xiang, X.; Xiao, F.; Zhu, C.; Dai, X.; Wang, D.; Sun, F.; Iandola, F.N.; et al. EfficientSAM: Leveraged Masked Image Pretraining for Efficient Segment Anything. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 16111–16121.

- Zhang, Z.; Cai, H.; Han, S. EfficientViT-SAM: Accelerated Segment Anything Model Without Performance Loss. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 16–22 June 2024; pp. 7859–7863.

- Cai, H.; Li, J.; Hu, M.; Gan, C.; Han, S. EfficientViT: Lightweight Multi-Scale Attention for High-Resolution Dense Prediction. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 17256–17267.

- Zhang, C.; Han, D.; Qiao, Y.; Kim, J.U.; Bae, S.H.; Lee, S.; Hong, C.S. Faster Segment Anything: Towards Lightweight SAM for Mobile Applications. arXiv 2023, arXiv:2306.14289.

- Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT-SAM: Towards Real-Time Segmenting Anything. arXiv 2023, arXiv:2312.05760.

- Zhou, C.; Li, X.; Loy, C.C.; Dai, B. EdgeSAM: Prompt-In-the-Loop Distillation for On-Device Deployment of SAM. arXiv 2023, arXiv:2312.06660.

- Sun, X.; Liu, J.; Shen, H.; Zhu, X.; Hu, P. On Efficient Variants of Segment Anything Model: A Survey. arXiv 2024, arXiv:2410.04960.

- Liu, Z.; Wang, Y.; Han, K.; Zhang, W.; Ma, S.; Gao, W. Post-Training Quantization for Vision Transformer. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Curran Associates, Inc.: San Francisco, CA, USA, 2021; Volume 34, pp. 28092–28103.

- Lin, Y.; Zhang, T.; Sun, P.; Li, Z.; Zhou, S. FQ-ViT: Post-Training Quantization for Fully Quantized Vision Transformer. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22, Vienna, Austria, 23–29 July 2022; Raedt, L.D., Ed.; IJCAI: Irvine, CA, USA, 2022; pp. 1173–1179.

- Yuan, Z.; Xue, C.; Chen, Y.; Wu, Q.; Sun, G. PTQ4ViT: Post-training Quantization for Vision Transformers with Twin Uniform Quantization. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 191–207.

- Lv, C.; Chen, H.; Guo, J.; Guo, J.; Guo, J.; Ding, Y.; Liu, X.; Liu, X.; Liu, X. PTQ4SAM: Post-Training Quantization for Segment Anything. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15941–15951.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 9992–10002.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Qi, C.R.; Chen, X.; Litany, O.; Guibas, L.J. ImVoteNet: Boosting 3D Object Detection in Point Clouds With Image Votes. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 4403–4412.

- Cai, Z.; Zhang, J.; Ren, D.; Yu, C.; Zhao, H.; Yi, S.; Yeo, C.; Loy, C.C. MessyTable: Instance Association in Multiple Camera Views. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Dave, A.; Tokmakov, P.; Ramanan, D. Towards Segmenting Anything That Moves. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 1493–1502.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the Computer Vision—ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO, GitHub Repository. 2023. Available online: https://github.com/ultralytics (accessed on 20 November 2024).

- Xie, C.; Xiang, Y.; Mousavian, A.; Fox, D. Unseen Object Instance Segmentation for Robotic Environments. IEEE Trans. Robot. 2021, 37, 1343–1359.

| Methods | Regular Tasks mAP50 | Regular Tasks mAP95 | Zero-Shot Tasks mAP50 | Zero-Shot Tasks mAP95 |
|---|---|---|---|---|
| YOLOv8-seg | 80.99% | 19.04% | 22.18% | 2.66% |
| YOLOv9-seg | 87.87% | 29.84% | 9.17% | 0.99% |
| YOLOv10 | 89.75% | 53.29% | 11.28% | 2.03% |
| YOLOv11-seg | 79.45% | 17.79% | 20.68% | 2.55% |
| Mask R-CNN | 85.01% | 21.90% | 19.46% | 2.63% |
| Grounding DINO | 60.05% | 35.89% | 58.27% | 34.20% |

| Methods | P/R/F Scores | Regular Tasks mAP50 | Regular Tasks mAP95 | Zero-Shot Tasks mAP50 | Zero-Shot Tasks mAP95 |
|---|---|---|---|---|---|
| YOLOv8-seg | P | 0.847 | 0.901 | 0.513 | 0.916 |
| YOLOv8-seg | R | 0.926 | 0.979 | 0.638 | 0.980 |
| YOLOv8-seg | F | 0.884 | 0.938 | 0.568 | 0.946 |
| YOLOv9-seg | P | 0.864 | 0.910 | 0.510 | 0.915 |
| YOLOv9-seg | R | 0.927 | 0.981 | 0.578 | 0.976 |
| YOLOv9-seg | F | 0.894 | 0.944 | 0.542 | 0.944 |
| YOLOv11-seg | P | 0.843 | 0.900 | 0.508 | 0.915 |
| YOLOv11-seg | R | 0.930 | 0.979 | 0.590 | 0.975 |
| YOLOv11-seg | F | 0.884 | 0.937 | 0.545 | 0.944 |
| Mask R-CNN | P | 0.904 | 0.951 | 0.526 | 0.940 |
| Mask R-CNN | R | 0.889 | 0.959 | 0.562 | 0.971 |
| Mask R-CNN | F | 0.896 | 0.954 | 0.543 | 0.955 |
| Ours | P | 0.875 | 0.952 | 0.706 | 0.944 |
| Ours | R | 0.933 | 0.981 | 0.845 | 0.984 |
| Ours | F | 0.903 | 0.966 | 0.769 | 0.963 |
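The F rows in the table above appear consistent with the balanced F-score, the harmonic mean of precision (P) and recall (R). That reading is an assumption, since the metric definition is not reproduced in this section. A short check under that assumption:

```python
def f_score(precision: float, recall: float) -> float:
    """Balanced F-score (F1): harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# "Ours", first score column: P = 0.875, R = 0.933
print(round(f_score(0.875, 0.933), 3))  # 0.903
# Mask R-CNN, first score column: P = 0.904, R = 0.889
print(round(f_score(0.904, 0.889), 3))  # 0.896
```

Because the tabulated P and R are already rounded to three decimals, recomputing F from them can differ from the reported value in the last digit for some rows.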

| Groups / Methods | Ours | Ed-SAM MinMax | Mo-SAM Entropy | Mo-SAM MinMax | EViT-SAM Entropy | EViT-SAM MinMax |
|---|---|---|---|---|---|---|
| A | 0.893 | 0.838 | 0.844 | 0.692 | 0.525 | 0.502 |
| B | 0.893 | 0.851 | 0.848 | 0.741 | 0.574 | 0.527 |
| C | 0.888 | 0.848 | 0.840 | 0.716 | 0.581 | 0.542 |
| D | 0.878 | 0.823 | 0.823 | 0.685 | 0.536 | 0.504 |
| E | 0.848 | 0.792 | 0.793 | 0.651 | 0.488 | 0.456 |
| F | 0.887 | 0.815 | 0.853 | 0.682 | 0.483 | 0.462 |
| G | 0.918 | 0.882 | 0.883 | 0.760 | 0.596 | 0.555 |
| H | 0.917 | 0.886 | 0.887 | 0.768 | 0.573 | 0.533 |
| I | 0.884 | 0.841 | 0.826 | 0.724 | 0.567 | 0.515 |
| J | 0.741 | 0.659 | 0.596 | 0.380 | 0.279 | 0.260 |
| K | 0.746 | 0.628 | 0.617 | 0.493 | 0.384 | 0.351 |
| L | 0.914 | 0.872 | 0.873 | 0.751 | 0.592 | 0.540 |
| M | 0.864 | 0.822 | 0.773 | 0.674 | 0.605 | 0.566 |
| CR | 0.437 | 0.389 | 0.403 | 0.441 | 0.234 | 0.234 |
| SAM-B-based CR | 0.035 | 0.031 | 0.043 | 0.047 | 0.329 | 0.329 |
| Inference time | 3.086 ms | 3.168 ms | 5.757 ms | 5.725 ms | 3.500 ms | 3.432 ms |

| Scene | Not Obstructed: Grasped/Total | Not Obstructed: Success Rate | Not Obstructed: Recognition Rate | Obstructed: Grasped/Total | Obstructed: Success Rate | Obstructed: Recognition Rate |
|---|---|---|---|---|---|---|
| Easy | 40/45 | 88.9% | 97.8% | 11/15 | 73.3% | 93.3% |
| Medium | 38/45 | 84.4% | 97.8% | 31/45 | 68.9% | 86.7% |
| Difficult | 39/45 | 86.7% | 97.8% | 30/45 | 66.7% | 86.7% |

| Scene | Not Obstructed: Grasped/Total | Not Obstructed: Success Rate | Not Obstructed: Recognition Rate | Obstructed: Grasped/Total | Obstructed: Success Rate | Obstructed: Recognition Rate |
|---|---|---|---|---|---|---|
| Easy | 12/15 | 80% | 86.7% | 8/15 | 53.3% | 66.7% |
| Medium | 13/15 | 86.7% | 86.7% | 11/15 | 73.3% | 80% |
| Difficult | 10/15 | 66.7% | 80% | 8/15 | 53.3% | 66.7% |

| Framework | Object Perception | Segmentation | Grasp Generation | Zero-Shot Capability | Computational Cost |
|---|---|---|---|---|---|
| Ceschini et al. [18] | Mask R-CNN (closed-set detector) | SAM-H | KNN + Theil-Sen algorithm (heuristic grasping method) | Limited (uses a closed-set detector) | High (uses SAM-H, 0.5 s on GPU) |
| Li et al. [20] | Grounding DINO | SAM-H | Sim-Suction-PointNet (trained on a synthetic dataset) | Moderate | High (also uses SAM-H) |
| TPGF (ours) | Grounding DINO (open-set object detector) | EntQ-EdgeSAM | AnyGrasp (robust 6D grasp pose estimator) | Strong | Low (uses EntQ-EdgeSAM, 3 ms on GPU) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, R.; Zhang, T.; Zou, Y. A Multi-Step Grasping Framework for Zero-Shot Object Detection in Everyday Environments Based on Lightweight Foundational General Models. Sensors 2025, 25, 7125. https://doi.org/10.3390/s25237125
