1. Introduction

Contemporary digital cameras are limited by the capabilities of their sensors, making it difficult to capture the full dynamic range of real-world scenes. In contrast, High-Dynamic-Range (HDR) imaging can encompass a much wider range of light intensities, providing a more accurate representation of real-world luminance distributions. HDR imaging has become a key technique in modern visual applications, capable of faithfully reproducing lighting in complex scenes that contain both extremely bright and dark regions. Typical applications include photography and cinematography, where HDR enhances tonal depth and detail representation; virtual reality and game rendering, where it improves lighting realism and immersive experience; and autonomous driving and intelligent surveillance, where it helps maintain clear visibility of critical targets under challenging illumination conditions.

There are various approaches to generating HDR images, among which one of the most common methods is to reconstruct an HDR image by fusing multiple Low-Dynamic-Range (LDR) images captured under different exposure settings. However, during multi-exposure HDR reconstruction, object motion or camera shake may cause temporal inconsistencies or information loss due to overexposure, resulting in ghosting artifacts. This phenomenon remains one of the major challenges in multi-exposure HDR imaging.

To tackle the challenges associated with ghosting in HDR imaging, various methodologies have been developed. Traditional techniques include alignment-based methods [1,2], rejection-based methods [3,4,5], and patch-based methods [6,7], which eliminate or align motion regions in images. However, the efficacy of these methods is largely contingent upon the performance of preprocessing techniques, such as optical flow and motion detection, and their results are typically unsatisfactory when the scene contains significant motion. With the advancement of Deep Neural Networks (DNNs), several CNN-based methods [8,9,10,11,12] have been applied to ghost-free HDR imaging. Among them, the “alignment-fusion” paradigm has shown remarkable success, especially in scenarios involving large-scale motion. Moreover, Transformer-based approaches [13,14], which can capture long-range dependencies, have been introduced as an alternative to CNNs. These methods further enhance HDR imaging performance and underlie the current mainstream state-of-the-art methods. However, Transformers still face two major challenges in ghost-free HDR imaging. On the one hand, both local details and global information are crucial for restoring multi-frame HDR content, yet the self-attention mechanism of pure Transformers often exhibits a low-pass filtering effect, reducing the variance of input features and overly smoothing patch tokens. This occurs because self-attention essentially averages features across different patches, suppressing the high-frequency information that is vital for distinguishing fine structural details and thereby limiting the Transformer’s ability to capture high-frequency local details [13]. On the other hand, HDR images are typically high-resolution, and the computational complexity of self-attention grows quadratically with the number of spatial positions (height × width) in the input feature map. This results in significant computational and memory overhead in high-resolution scenarios, restricting the practical application and scalability of Transformers in high-resolution HDR imaging tasks.

Considering that the high- and low-frequency components of an image correspond to local details and global structures, respectively, we propose a frequency-decomposition-based ghost-free HDR image reconstruction network. In both the cross-frame alignment and feature fusion stages, features are decomposed into high- and low-frequency components and processed according to their respective characteristics. To perform this decomposition, we leverage the low-pass filtering property of average pooling (AvgPool) to decouple features into high-resolution high-frequency components and low-resolution low-frequency components.

Specifically, global motion or long-range dependencies can be effectively represented by low-frequency features without requiring high-resolution feature maps, while high-frequency features focus on fine-grained local structures that need high-resolution maps and are better modeled by local operators. Based on this, we adopt a dual-branch architecture in both stages to balance global information and local details.

In the cross-frame alignment stage, we propose the Frequency Alignment Module (FAM). The low-frequency branch employs a lightweight UNet to learn optical flow and align non-reference frames to the reference frame, efficiently capturing large-scale motion while reducing computational cost. Meanwhile, the high-frequency branch combines convolution and attention to adaptively refine edges and textures, suppressing ghosting and preserving structural consistency.

In the feature fusion stage, we design the Frequency Decomposition Processing Block (FDPB). The high-frequency branch uses a Local Feature Extractor (LFE) to capture details and enhance cross-frame high-frequency information, while the low-frequency branch adopts a Global Feature Extractor (GFE) to model long-range dependencies. To alleviate information loss caused by downsampling, we further introduce a Cross-Scale Fusion Module (CSFM) for effective cross-resolution integration.

By integrating FAM and FDPB, we propose the High–Low-Frequency-Aware HDR Network (HL-HDR), which consists of two stages: cross-frame alignment and feature fusion. FAM enables accurate motion modeling and detail preservation, while FDPB hierarchically captures both global and local contexts, leading to high-quality, ghost-free HDR reconstruction.

The main contributions are summarized as follows:

We propose a novel alignment method, FAM, in which the low-frequency branch captures large-scale motion through optical flow alignment, while the high-frequency branch refines local edges and textures, effectively suppressing ghosting.

We introduce the FDPB module, which processes low-frequency components with multi-scale feature extraction combined with Transformer mechanisms to capture global information. For high-frequency components, it employs small convolutional kernels and densely connected residual links to extract local feature information. This design balances speed and accuracy.

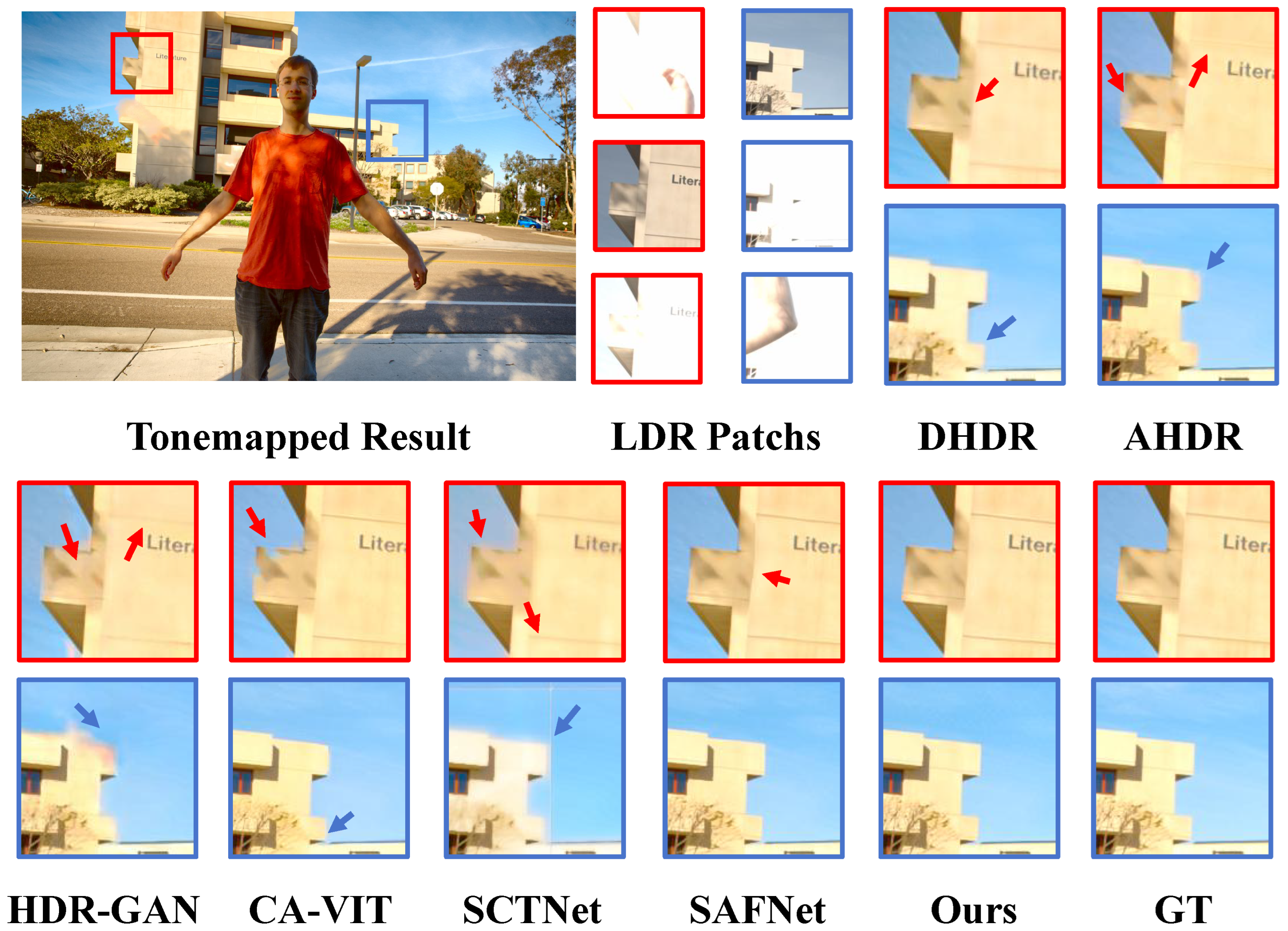

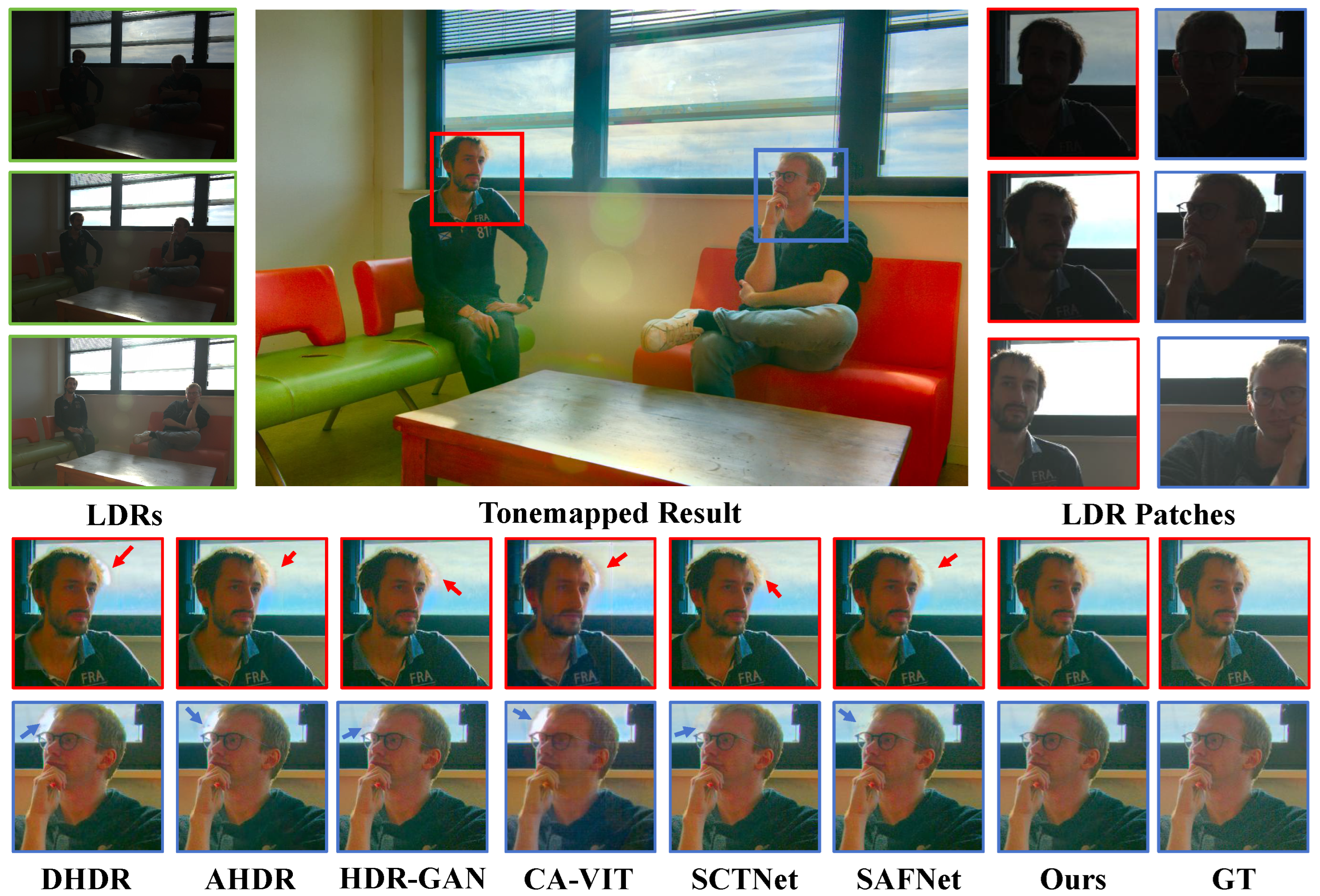

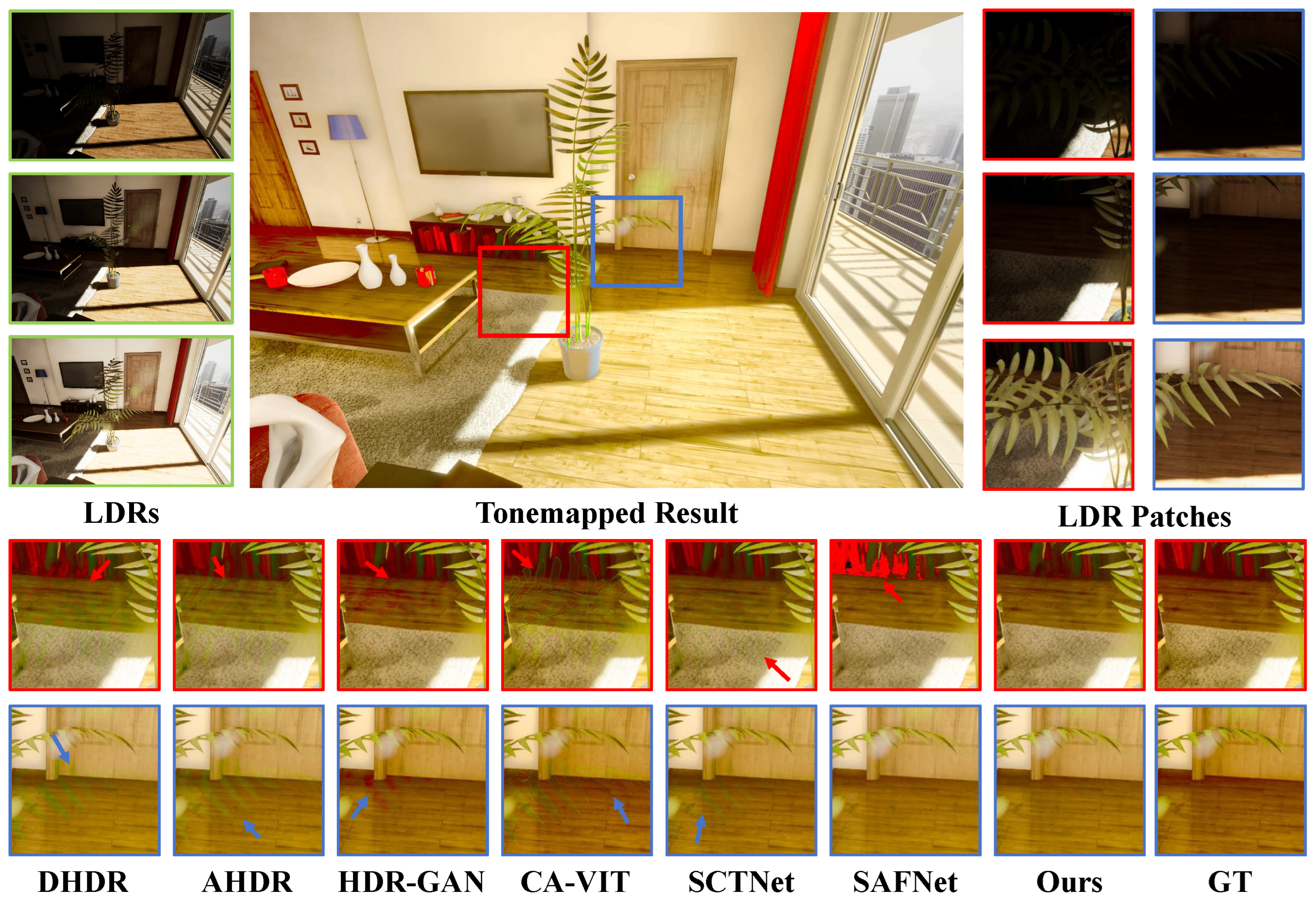

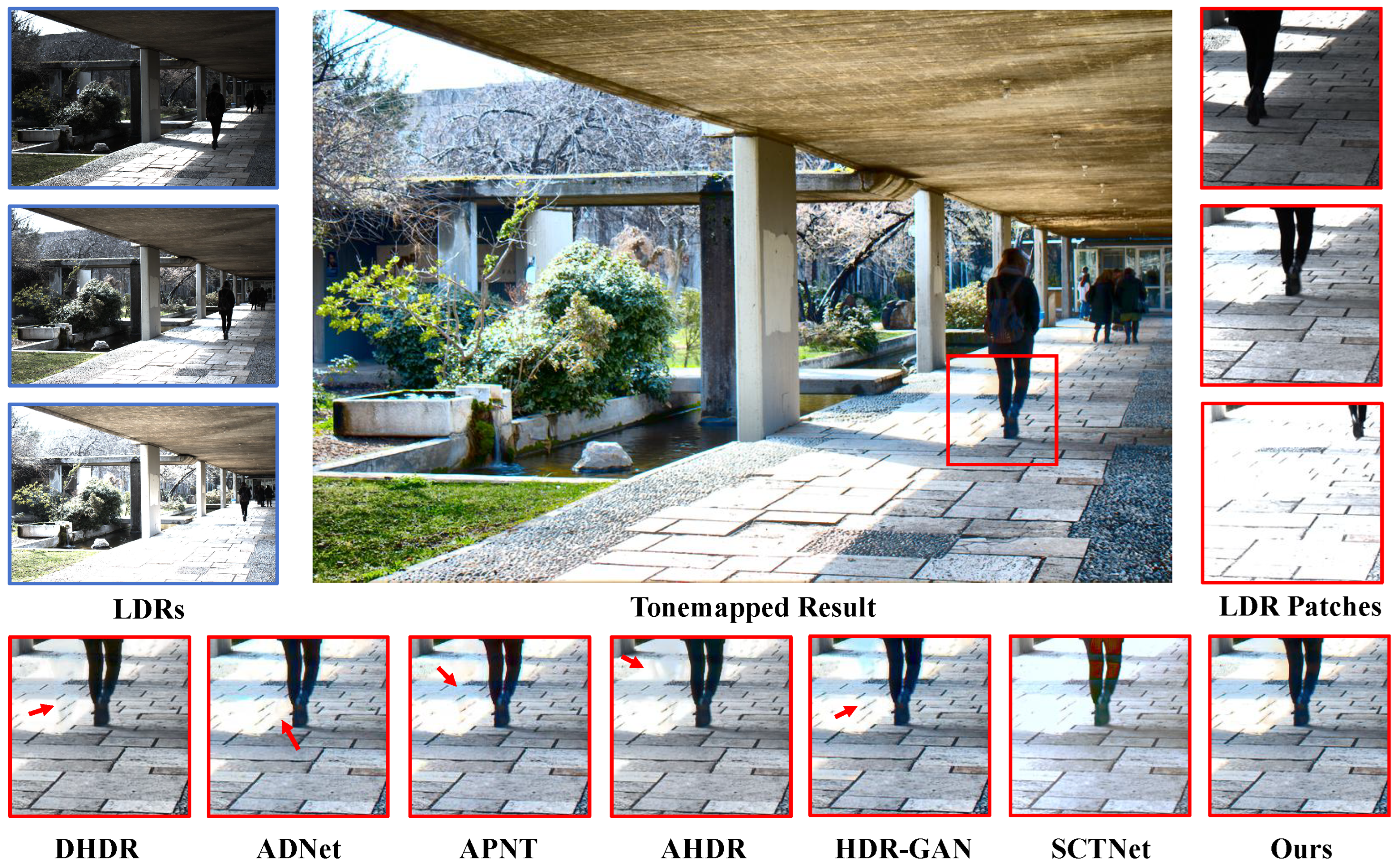

Extensive experiments demonstrate that the proposed method, HL-HDR, attains state-of-the-art (SOTA) performance in HDR imaging tasks. Furthermore, it yields visually pleasing results that align with human perception.

This work is an extended version of our conference paper [15] presented at the International Joint Conference on Neural Networks (IJCNN 2024). Compared with the conference version, it incorporates a substantial amount of new material. (1) To address the issue of ghosting in moving regions, we optimized the cross-frame alignment stage by designing the FAM module: the low-frequency branch aligns features using optical flow, while the high-frequency branch adaptively refines local edges and textures through convolution and attention mechanisms, effectively suppressing ghost artifacts and maintaining structural consistency. (2) We conducted comparative experiments on additional datasets and against the latest methods, demonstrating the advantages of our improved approach. (3) The ablation studies are more detailed and clearer, verifying and analyzing the contribution of each module.

3. Method

In a series of LDR images with varying exposure levels, images of the same scene are grouped together. Each group comprises three images: underexposed, normally exposed, and overexposed. Our goal is to fuse the information from these three LDR images to reconstruct an HDR image without ghosting artifacts. In previous research [10,24], the authors utilized a set of three LDR images as input, designating the normally exposed image as the reference frame. Using the input images $\{L_1, L_2, L_3\}$, our model derives the HDR image $\hat{H}$ as follows:

$$\hat{H} = f(L_1, L_2, L_3; \theta),$$

where $f(\cdot)$ represents the HDR imaging function, and $\theta$ refers to the network’s parameters.

3.1. Overview of the HL-HDR Architecture

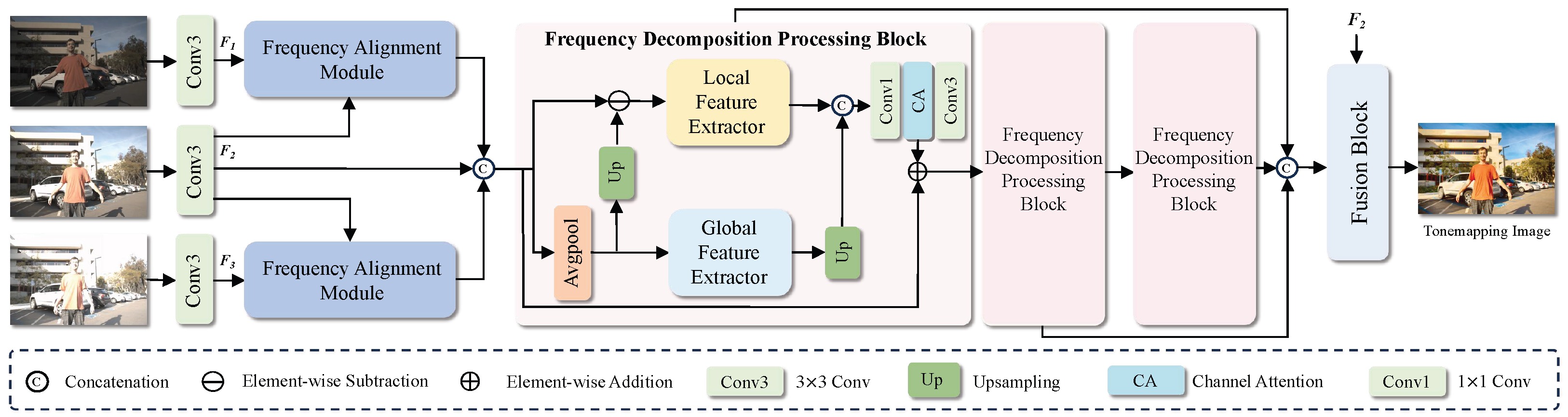

As shown in Figure 1, the proposed HL-HDR framework consists of two main stages:

The first stage is the cross-frame alignment stage, corresponding to the Frequency Alignment Module in the figure. This module takes three images with different exposures as input and extracts shallow features through a shared-weight convolution, producing 64-channel feature maps $F_1$, $F_2$, and $F_3$. The feature map of the normally exposed image, $F_2$, is used as the reference frame, while the underexposed feature map $F_1$ and the overexposed feature map $F_3$ are aligned to it.

The second stage is the feature fusion stage, in which multiple Frequency Decomposition Processing Blocks are stacked to extract and integrate the aligned features. Specifically, the features are decomposed into high-frequency and low-frequency components and processed according to their characteristics: the high-frequency branch employs a Local Feature Extractor to capture local details through stacked convolutional blocks and enhances cross-frame high-frequency information via dense connections; the low-frequency branch uses a Global Feature Extractor to model long-range dependencies through multi-layer channel attention. The extracted high- and low-frequency features are then fused.

In the final reconstruction stage, the network reduces the number of channels while introducing long-range residual connections, ultimately reconstructing the output as a 3-channel HDR image.

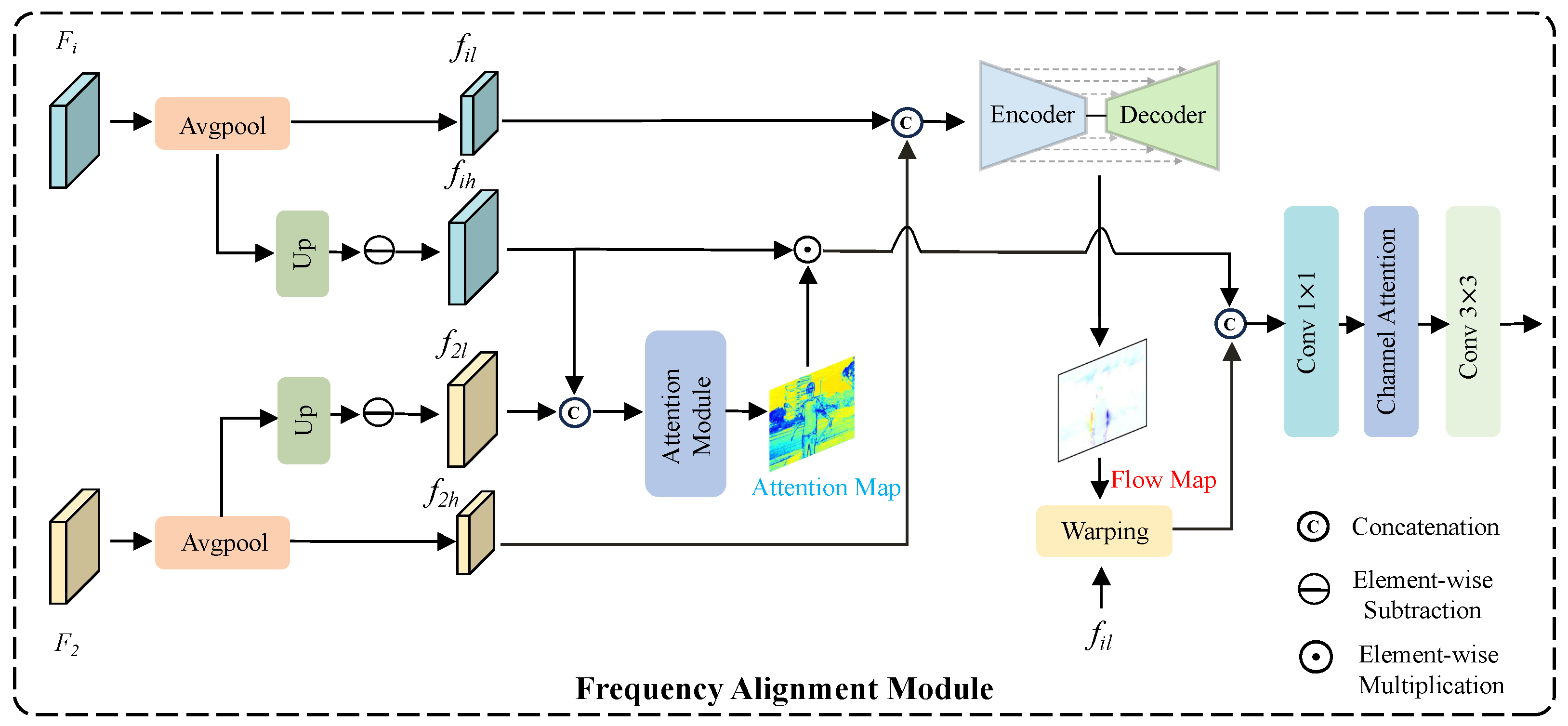

3.2. Frequency Alignment Module

To balance large-scale motion modeling and detail preservation during the alignment stage, we first decompose both the reference frame and the non-reference frames into high-frequency and low-frequency components, which are then independently aligned in separate branches. Specifically, the low-frequency components are obtained by applying average pooling to the original feature maps, while the high-frequency components are derived by subtracting the low-frequency part from the original features. This process can be formally expressed as follows:

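$$F_i^{L} = \mathrm{AP}(F_i), \qquad F_i^{H} = F_i - \mathrm{Up}\big(F_i^{L}\big),$$

$$F_2^{L} = \mathrm{AP}(F_2), \qquad F_2^{H} = F_2 - \mathrm{Up}\big(F_2^{L}\big),$$

where $\mathrm{AP}(\cdot)$ refers to average pooling and $\mathrm{Up}(\cdot)$ stands for bilinear interpolation upsampling. $F_i$ ($i \in \{1, 3\}$) refers to the non-reference frame, while $F_2$ refers to the reference frame. The superscripts $L$ and $H$ mark the low- and high-frequency components, respectively.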
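The "average pooling + subtraction" decomposition can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions: the pooling factor of 2 and the function names are hypothetical, and nearest-neighbour upsampling is swapped in for the bilinear interpolation described in the text to keep the example short.

```python
import numpy as np

def avg_pool2x(x):
    """2x2 average pooling on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def upsample2x_nearest(x):
    """Nearest-neighbour 2x upsampling (a stand-in for bilinear interpolation)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def decompose(x):
    """Split features into a low-resolution low-frequency part and a
    full-resolution high-frequency residual."""
    low = avg_pool2x(x)                  # (C, H/2, W/2)
    high = x - upsample2x_nearest(low)   # (C, H, W)
    return low, high

rng = np.random.default_rng(0)
feat = rng.standard_normal((64, 8, 8))   # hypothetical 64-channel feature map
low, high = decompose(feat)
print(low.shape, high.shape)
```

By construction, adding the upsampled low-frequency part back to the high-frequency residual recovers the input exactly, so no information is discarded by the split itself.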

For the alignment of high-frequency components, the high-frequency features of the reference frame, $F_2^{H}$, and of each non-reference frame, $F_i^{H}$, are concatenated and fed into a convolution-based attention module to generate an attention map, which is subsequently applied to the non-reference frame features. This module is similar to the implicit alignment mechanism in AHDR [10], guiding the network to focus on critical information across different exposures. Under the guidance of attention, the model can adaptively reweight the importance of different regions, thereby refining edges and textures, enhancing local detail restoration, and effectively suppressing ghosting while ensuring structural consistency. Formally, the process can be written as:

$$\tilde{F}_i^{H} = \mathcal{A}\big([F_i^{H}, F_2^{H}]\big) \odot F_i^{H},$$

where $\mathcal{A}(\cdot)$ refers to the attention module, $[\cdot,\cdot]$ denotes channel-wise concatenation, and $\odot$ denotes element-wise multiplication.
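The gating step can be illustrated with a small NumPy sketch. It assumes, purely for illustration, that the attention module is a single pointwise (1 × 1) convolution followed by a sigmoid; the module in the paper is convolution-based but its exact layers are not specified here, so `weight`, `bias`, and `attention_align` are hypothetical names.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def attention_align(high_nonref, high_ref, weight, bias):
    """Gate non-reference high-frequency features with an attention map.

    high_nonref, high_ref: (C, H, W) feature maps.
    weight: (C, 2C) pointwise-convolution kernel (assumed form of the module).
    bias: (C,) bias vector.
    """
    stacked = np.concatenate([high_nonref, high_ref], axis=0)   # (2C, H, W)
    logits = np.einsum("oc,chw->ohw", weight, stacked) + bias[:, None, None]
    attn = sigmoid(logits)                                      # values in (0, 1)
    return attn * high_nonref, attn                             # element-wise product

rng = np.random.default_rng(0)
c, h, w = 4, 6, 6
f_nonref = rng.standard_normal((c, h, w))
f_ref = rng.standard_normal((c, h, w))
weight = 0.1 * rng.standard_normal((c, 2 * c))
aligned, attn = attention_align(f_nonref, f_ref, weight, np.zeros(c))
print(aligned.shape, float(attn.min()), float(attn.max()))
```

Because the map lies in (0, 1), regions that disagree with the reference can be attenuated rather than geometrically moved, which is why this kind of alignment is described as implicit.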

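For the alignment of low-frequency components, we modify the Encoder-Decoder structure of SAFNet [11] to predict the optical flow field, which is then used to warp the low-frequency components of the non-reference frames, enabling more accurate modeling of large-scale motion. The above operations can be formulated as:

$$u_i = \mathcal{E}\big(F_i^{L}, F_2^{L}\big), \qquad \tilde{F}_i^{L} = \mathcal{W}\big(F_i^{L}, u_i\big),$$

where $F_i^{L}$ and $F_2^{L}$ are the low-frequency components of the non-reference and reference frames, $u_i$ is the predicted optical flow field, $\mathcal{E}(\cdot)$ refers to the Encoder-Decoder structure shown in Figure 2, and $\mathcal{W}(\cdot)$ denotes the warping operation.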
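The warping operation can be illustrated with a minimal NumPy sketch of backward warping with bilinear sampling. The flow layout `(dx, dy)` in pixels, the border clamping, and the function name `warp` are assumptions made for illustration; the flow itself would come from the Encoder-Decoder, which is not reproduced here.

```python
import numpy as np

def warp(feat, flow):
    """Backward-warp feat (C, H, W) with flow (2, H, W) holding (dx, dy).

    The output at (y, x) is sampled bilinearly from feat at (y + dy, x + dx);
    sampling positions outside the image are clamped to the border.
    """
    c, h, w = feat.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[0], 0, w - 1)
    y = np.clip(ys + flow[1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0                      # horizontal interpolation weights
    wy = y - y0                      # vertical interpolation weights
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

feat = np.arange(2 * 4 * 5, dtype=float).reshape(2, 4, 5)
zero_flow = np.zeros((2, 4, 5))
shift_flow = np.zeros((2, 4, 5))
shift_flow[0] = 1.0                  # sample one pixel to the right
same = warp(feat, zero_flow)
shifted = warp(feat, shift_flow)
print(np.allclose(same, feat), shifted[0, 0])
```

Running the warp on the low-resolution low-frequency component means the flow field and the sampling both operate on a quarter of the pixels (for a pooling factor of 2), which is where the computational saving of this branch comes from.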

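Finally, the aligned low-frequency and high-frequency components, $\tilde{F}_i^{L}$ and $\tilde{F}_i^{H}$, are fused, followed by convolution and a channel attention module to restore channel information and further extract features. This process can be formally expressed as follows:

$$\tilde{F}_i = \mathrm{Conv}_b\Big(\mathrm{Conv}_a\Big(\mathrm{CA}\big(\mathrm{Fuse}(\tilde{F}_i^{L}, \tilde{F}_i^{H})\big)\Big)\Big),$$

where $\mathrm{Fuse}(\cdot)$ denotes the operation for integrating features at different scales, $\mathrm{CA}(\cdot)$ represents channel attention, and $\mathrm{Conv}_a$ and $\mathrm{Conv}_b$ denote the two convolution layers.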

3.3. Frequency Decomposition Processing Block

To more clearly illustrate the high-frequency information, we present three representative examples in Figure 3. The visualizations demonstrate that structural edges and fine-grained details are effectively captured, confirming the reliability of the “average pooling + subtraction” operation for separating high- and low-frequency information. Motivated by this observation, we adopt the same frequency decomposition strategy during the feature fusion stage to further enhance the network’s representational capacity. As shown in Figure 1, the feature maps are again decomposed into high-frequency and low-frequency components, as in the alignment stage. By supplementing high-frequency details while extracting the global and background information of the image, this approach enhances local textures and edges, resulting in improved visual quality. This process can be formally expressed as follows:

$$F^{L} = \mathrm{AP}(F), \qquad F^{H} = F - \mathrm{Up}\big(F^{L}\big),$$

$$F_{out} = \mathrm{Conv}_{1\times1}\Big(\mathrm{Conv}_{3\times3}\Big(\mathrm{CA}\big(\big[\mathrm{LFE}(F^{H}), \mathrm{Up}(\mathrm{GFE}(F^{L}))\big]\big)\Big)\Big),$$

where $F$ is the input feature map of the block, $\mathrm{AP}(\cdot)$ refers to average pooling, $\mathrm{Up}(\cdot)$ stands for bilinear interpolation upsampling, $\mathrm{LFE}(\cdot)$ stands for the Local Feature Extractor, $\mathrm{GFE}(\cdot)$ stands for the Global Feature Extractor, $[\cdot,\cdot]$ denotes channel-wise concatenation, $\mathrm{CA}(\cdot)$ denotes channel attention, $\mathrm{Conv}_{3\times3}$ stands for a 3 × 3 convolution, and $\mathrm{Conv}_{1\times1}$ stands for a 1 × 1 convolution that restores the channel count from 128 to 64.

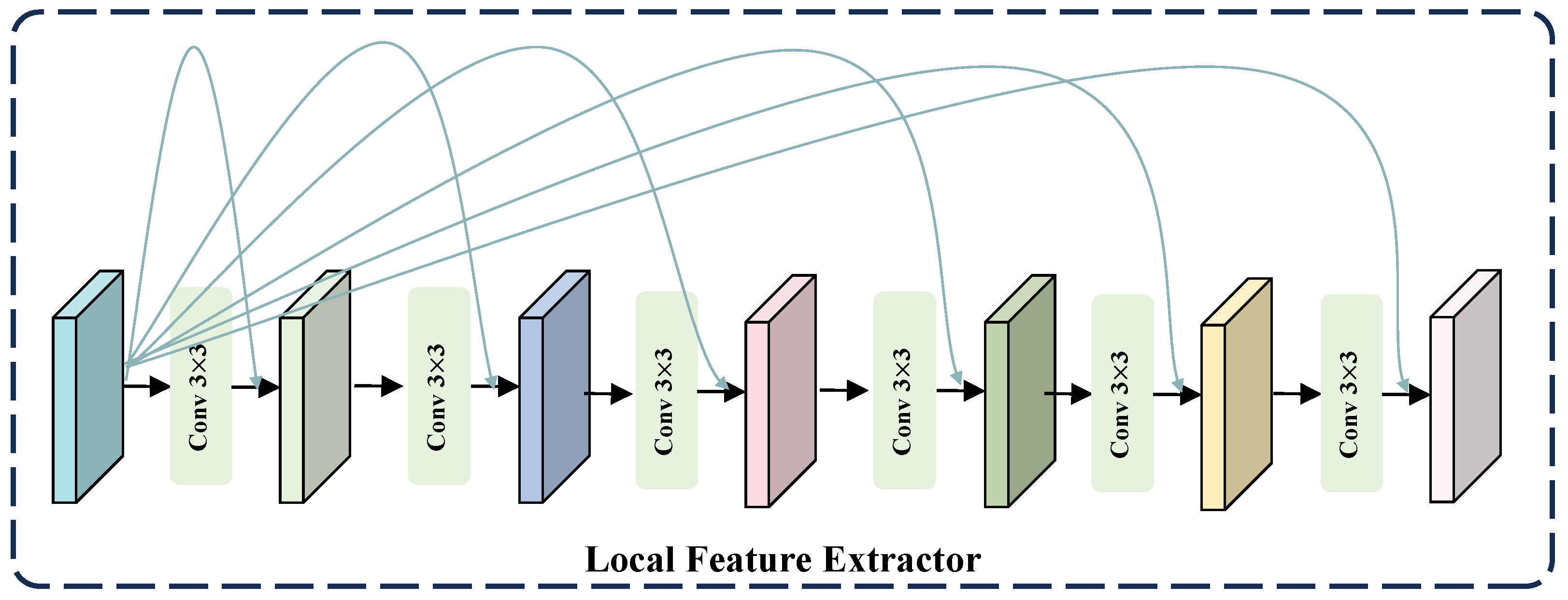

3.3.1. Local Feature Extractor

To better recover the detailed information in an image, we process the high-frequency information, which inherently contains a wealth of detail. Because high-frequency information is local in nature, convolutions with small kernels allow for a more focused extraction of these details. Moreover, given the superior capability of standard residual learning in maintaining stable feature propagation and enhancing high-frequency detail representation [25,26,27], we incorporate dense residual connections into the high-frequency information extraction process to fully leverage multi-level features and strengthen high-frequency detail modeling. Overall, we utilize six 3 × 3 convolutions. Figure 4 displays only a portion of the residual connections; in practice, the connections are dense. They are not merely connections between adjacent layers: the output of each layer is merged with the outputs of all preceding layers, enabling each layer to directly access the feature information of all previous layers.
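The dense connectivity pattern can be sketched as follows. This is a simplified illustration rather than the LFE itself: it assumes that "merging" means channel-wise concatenation, it replaces the 3 × 3 convolutions with pointwise channel mixing to stay short, and the channel counts (`c = 8`, growth of 4) are hypothetical.

```python
import numpy as np

def dense_block(x, weights):
    """Densely connected layers on a (C, H, W) feature map.

    weights[k] has shape (G, C + k * G): layer k sees the block input and
    the outputs of all k preceding layers (pointwise mixing stands in for
    the 3x3 convolutions of the real module).
    """
    feats = [x]
    for w in weights:
        inp = np.concatenate(feats, axis=0)            # all previous features
        out = np.maximum(np.einsum("oc,chw->ohw", w, inp), 0.0)  # ReLU
        feats.append(out)
    return np.concatenate(feats, axis=0)

rng = np.random.default_rng(0)
c, growth, num_layers = 8, 4, 6                        # six layers, as in the text
x = rng.standard_normal((c, 5, 5))
weights = [0.1 * rng.standard_normal((growth, c + k * growth))
           for k in range(num_layers)]
y = dense_block(x, weights)
print(y.shape)                                         # channels: c + 6 * growth
```

The input width of each layer grows with depth, which is what lets later layers reuse early, detail-rich features directly instead of relying on them surviving through every intermediate layer.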

3.3.2. Global Feature Extractor

For low-frequency information, it is necessary to leverage global context to restore the overall structure and background of the image. As shown in Figure 5, although multi-scale feature extraction enables long-range information interactions, some information may be lost during the downsampling process. To address this, we perform feature extraction at each scale to compensate for the information loss caused by downsampling. In each feature extraction layer, we introduce a Channel Transformer Block, which can establish global contextual information and possesses a global receptive field, making it highly suitable for extracting low-frequency features that depend on global information. Furthermore, to compensate for potential information loss when directly upsampling feature maps of different sizes and concatenating them, we employ the CSFM to effectively merge feature maps of varying resolutions.

Channel Transformer Block. Given that the channel-wise self-attention mechanism proposed in [23] can effectively model cross-channel dependencies while reducing computational complexity, our Transformer architecture abandons spatial self-attention and instead adopts channel-wise self-attention to achieve more efficient feature modeling. The input $X \in \mathbb{R}^{h \times w \times c}$ is first layer-normalized to obtain a tensor $Y \in \mathbb{R}^{h \times w \times c}$. Then, 1 × 1 convolutions are applied to aggregate pixel-wise cross-channel context, followed by 3 × 3 depth-wise convolutions to encode channel-wise spatial context. This process generates the query ($Q$), key ($K$), and value ($V$), which can be expressed mathematically as:

$$Q = W_d^{Q} W_p^{Q} Y, \qquad K = W_d^{K} W_p^{K} Y, \qquad V = W_d^{V} W_p^{V} Y,$$

where $W_p^{(\cdot)}$ represents the 1 × 1 point-wise convolution and $W_d^{(\cdot)}$ represents the 3 × 3 depth-wise convolution.

Then $Q$ is reshaped into $\hat{Q} \in \mathbb{R}^{hw \times c}$ and $K$ into $\hat{K} \in \mathbb{R}^{c \times hw}$. After this transformation, matrix multiplication can be performed, followed by a softmax operation to obtain an attention map $A \in \mathbb{R}^{c \times c}$. $V$ is reshaped into $\hat{V} \in \mathbb{R}^{hw \times c}$, allowing for matrix multiplication with $A$. The resulting output is reshaped into $\mathbb{R}^{h \times w \times c}$, and finally, a residual connection is added by summing the initial feature map with the obtained feature map. The specific process is illustrated as follows:

$$A = \mathrm{Softmax}\big(\hat{K}\hat{Q} / \alpha\big), \qquad X_{out} = \mathrm{Reshape}\big(\hat{V} A\big) + X,$$

where $\alpha$ is a learnable scaling parameter, $X$ refers to the initial input feature map, and $X_{out}$ refers to the final result.
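The reshaping and multiplication steps can be checked with a short NumPy sketch. It covers only the attention core: the layer normalization, the point-wise and depth-wise convolutions that produce Q, K, and V, and the residual connection are omitted, and `alpha` is passed as a plain number rather than learned. The function names are hypothetical.

```python
import numpy as np

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(q, k, v, alpha=1.0):
    """Channel-wise self-attention on (h, w, c) arrays.

    Returns the (h, w, c) output and the (c, c) attention map.
    """
    h, w, c = q.shape
    q_hat = q.reshape(h * w, c)                # (hw, c)
    k_hat = k.reshape(h * w, c).T              # (c, hw)
    v_hat = v.reshape(h * w, c)                # (hw, c)
    attn = softmax(k_hat @ q_hat / alpha, axis=-1)   # (c, c)
    out = v_hat @ attn                         # (hw, c)
    return out.reshape(h, w, c), attn

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((4, 6, 8)) for _ in range(3))
out, attn = channel_attention(q, k, v, alpha=2.0)
print(out.shape, attn.shape)
```

The attention map has size c × c regardless of the spatial resolution, so its memory cost does not grow with h × w; only the two matrix products scale linearly with the number of pixels. Spatial self-attention would instead build an (hw) × (hw) map.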

Next, a 1 × 1 convolution aggregates information from different channels, and a 3 × 3 depth-wise convolution aggregates information from spatially neighboring pixels. Additionally, we incorporate a gating mechanism to enhance information encoding. Finally, a long-range residual connection is added, summing the initial feature map with the feature map obtained at this stage.

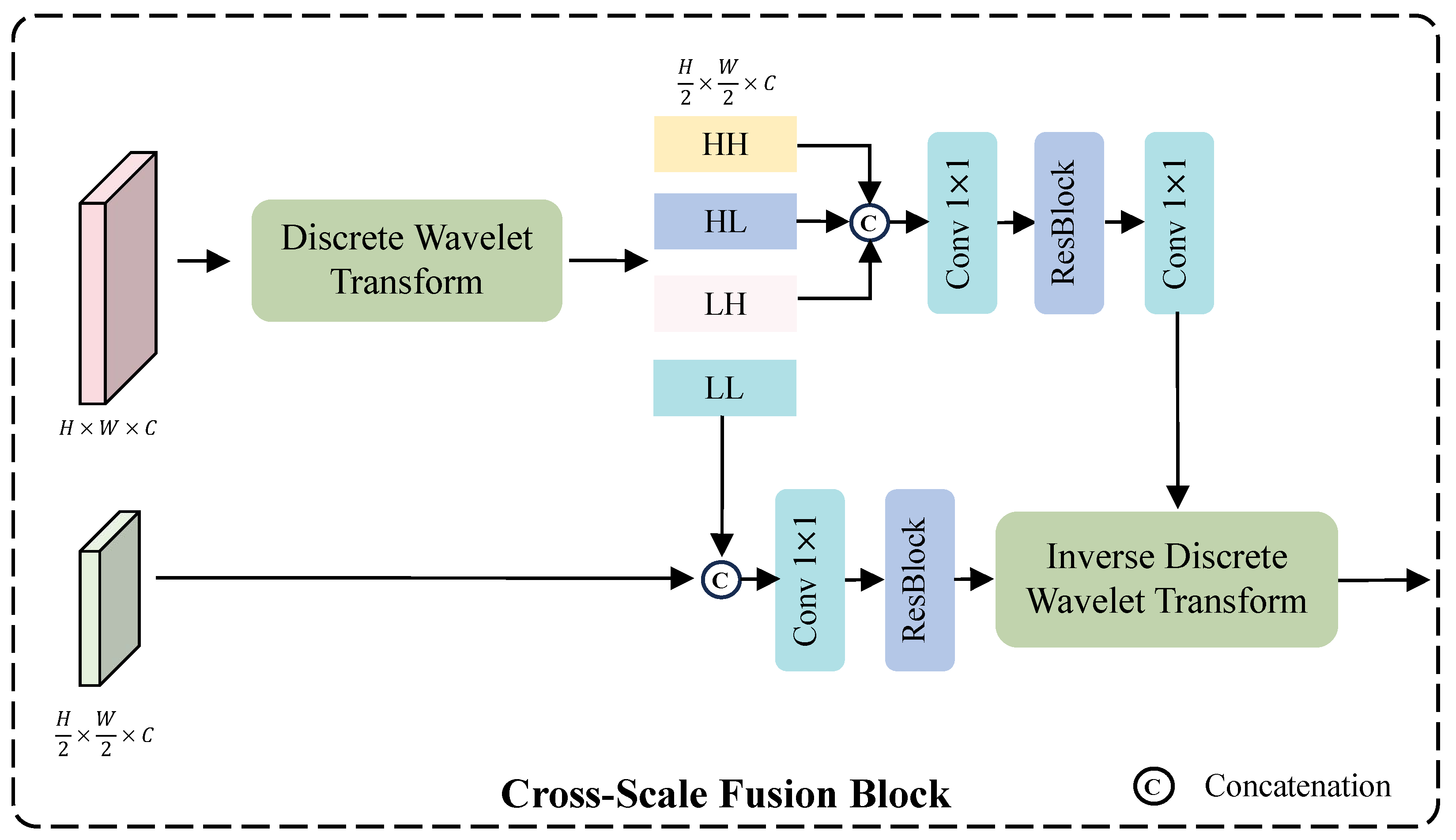

Cross-Scale Fusion Module. Because features at different scales have different spatial resolutions, they are typically adjusted to a unified resolution via downsampling or upsampling before fusion. However, such operations may lead to the loss of important structural details, thereby affecting the final image restoration. To alleviate this problem, we introduce a wavelet-based cross-scale feature fusion strategy that leverages the capability of wavelet transforms to represent multi-scale image structures [28]. As shown in Figure 6, we employ the Haar Discrete Wavelet Transform (DWT) to decompose the large-scale feature map $F_l$ into four sub-bands: LL (Low–Low), LH (Low–High), HL (High–Low), and HH (High–High). In each name, the two letters indicate low-pass (“L”) or high-pass (“H”) filtering along the horizontal and vertical dimensions, respectively. Each sub-band has half the spatial resolution of the original feature map, while the number of channels remains unchanged. Among these, the LL sub-band retains the low-frequency components, representing the global structure and smooth regions of the feature. It is concatenated with the small-scale feature map $F_s$, followed by a convolution for channel reduction (from 128 to 64) and a residual block for feature refinement. The HH, HL, and LH sub-bands preserve the high-frequency components, containing texture and edge details. After concatenation, a convolution reduces the channels to 64, followed by a residual block for detailed feature extraction, and another convolution restores the channel number to 192. Narrowing and then restoring the channel dimension with these two convolutions reduces the number of parameters and the computational complexity, since directly processing a 192-channel feature map would be computationally expensive. Finally, the refined high- and low-frequency features are recombined through the Inverse Discrete Wavelet Transform (IDWT) to produce the fused representation. This process can be formally expressed as follows:

$$\{F_{LL}, F_{LH}, F_{HL}, F_{HH}\} = \mathrm{DWT}(F_l),$$

$$\hat{F}_{LL} = \mathrm{RB}\big(\mathrm{Conv}([F_{LL}, F_s])\big), \qquad \hat{F}_{high} = \mathrm{Conv}\big(\mathrm{RB}\big(\mathrm{Conv}([F_{LH}, F_{HL}, F_{HH}])\big)\big),$$

$$F_{fused} = \mathrm{IDWT}\big(\hat{F}_{LL}, \hat{F}_{high}\big),$$

where $[\cdot]$ denotes channel-wise concatenation, $\mathrm{Conv}(\cdot)$ denotes a convolution that changes the channel count, and $\mathrm{RB}(\cdot)$ represents the residual block.
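The Haar decomposition and its inverse can be sketched directly in NumPy. This is an illustrative sketch with an orthonormal Haar filter bank; the assignment of the names LH and HL to the horizontal and vertical detail bands follows one common convention and is an assumption here, as are the function names. The learned convolutions and residual blocks of the CSFM are not included.

```python
import numpy as np

def haar_dwt(x):
    """One-level 2D Haar DWT of a (C, H, W) array with even H and W.

    Returns LL, LH, HL, HH, each of shape (C, H/2, W/2).
    """
    a = x[:, 0::2, 0::2]   # top-left pixel of each 2x2 block
    b = x[:, 0::2, 1::2]   # top-right
    c = x[:, 1::2, 0::2]   # bottom-left
    d = x[:, 1::2, 1::2]   # bottom-right
    ll = (a + b + c + d) / 2
    lh = (a - b + c - d) / 2
    hl = (a + b - c - d) / 2
    hh = (a - b - c + d) / 2
    return ll, lh, hl, hh

def haar_idwt(ll, lh, hl, hh):
    """Inverse of haar_dwt; returns a (C, 2H, 2W) array."""
    c, h, w = ll.shape
    x = np.empty((c, 2 * h, 2 * w), dtype=ll.dtype)
    x[:, 0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[:, 0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[:, 1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[:, 1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x

rng = np.random.default_rng(0)
feat = rng.standard_normal((64, 8, 8))
ll, lh, hl, hh = haar_dwt(feat)
high = np.concatenate([lh, hl, hh], axis=0)   # three bands stacked on channels
recon = haar_idwt(ll, lh, hl, hh)
print(ll.shape, high.shape, np.allclose(recon, feat))
```

With 64 input channels, the three stacked detail bands have 192 channels, which matches the channel count quoted for the high-frequency path. Because the transform is exactly invertible, the resolution change itself loses no information, unlike plain pooling or interpolation.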

3.4. Training Loss

Because HDR images are typically displayed after tonemapping, training the network on tonemapped images is more effective than training directly in the HDR domain. Given an HDR image $H$ in the HDR domain, we compress its range using the $\mu$-law transformation:

$$T(H) = \frac{\log(1 + \mu H)}{\log(1 + \mu)},$$

where $\mu$ represents a parameter that defines the degree of compression, and $T(H)$ represents the tonemapped image. Throughout this work, we maintain $H$ within the range [0, 1] and set $\mu$ to 5000. $\hat{H}$ is the predicted result obtained from our HL-HDR model, and $H$ is the Ground Truth. Here, we employ the $\mathcal{L}_1$ loss, $\mathcal{L}_1 = \lVert T(\hat{H}) - T(H) \rVert_1$. Additionally, we use an auxiliary perceptual loss $\mathcal{L}_p$ for supervision [13]. The perceptual loss measures the difference between the output image and the Ground Truth image in the feature representations of multiple layers in a pre-trained CNN, achieved by computing the mean squared error between the feature maps of each layer. We can express this as follows:

$$\mathcal{L}_p = \sum_j \mathrm{MSE}\big(\phi_j(T(\hat{H})), \phi_j(T(H))\big),$$

where $\phi_j(\cdot)$ represents the convolutional features extracted from the $j$-th layer of the pre-trained VGG-16 network.

Therefore, our final loss function is the weighted sum of $\mathcal{L}_1$ and $\mathcal{L}_p$, for which we introduce a weighting parameter $\lambda$. The final loss function can be expressed by the following formula:

$$\mathcal{L} = \mathcal{L}_1 + \lambda \mathcal{L}_p,$$

where $\lambda$ is set to 0.01.
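The loss computation can be sketched as follows. The μ-law mapping and the weighting follow the description above, while `feature_fns` is a hypothetical list of callables standing in for the pre-trained VGG-16 layers, which are not loaded here; the L1 term is averaged over pixels, which is an assumption about the normalization.

```python
import numpy as np

MU = 5000.0

def mu_law(h, mu=MU):
    """Mu-law range compression for HDR values in [0, 1]."""
    return np.log1p(mu * h) / np.log1p(mu)

def l1_loss(pred, target):
    """Mean absolute error between tonemapped prediction and ground truth."""
    return np.mean(np.abs(mu_law(pred) - mu_law(target)))

def perceptual_loss(pred, target, feature_fns):
    """Sum of per-layer mean squared errors between feature maps.

    feature_fns: hypothetical stand-ins for pre-trained VGG-16 layers.
    """
    return sum(np.mean((f(mu_law(pred)) - f(mu_law(target))) ** 2)
               for f in feature_fns)

def total_loss(pred, target, feature_fns, lam=0.01):
    return l1_loss(pred, target) + lam * perceptual_loss(pred, target, feature_fns)

rng = np.random.default_rng(0)
target = rng.uniform(0.0, 1.0, size=(3, 8, 8))
pred = np.clip(target + 0.05 * rng.standard_normal((3, 8, 8)), 0.0, 1.0)
feature_fns = [lambda x: x, lambda x: x[..., ::2, ::2]]   # toy "layers"
print(float(mu_law(0.01)), float(total_loss(pred, target, feature_fns)))
```

With μ = 5000, a linear value of 0.01 maps to roughly 0.46 after compression, which illustrates how strongly the loss emphasizes errors in dark regions compared with a loss computed on linear HDR values.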

5. Conclusions

This paper presents HL-HDR, a high–low-frequency-aware HDR reconstruction network for dynamic scenes. It explicitly decomposes features into high- and low-frequency components, effectively combining global context modeling with fine detail restoration. The Frequency Alignment Module (FAM) enables precise motion estimation and structure preservation, while the Frequency Decomposition Processing Block (FDPB) supports hierarchical cross-scale feature fusion. Compared with state-of-the-art models such as LFDiff and SAFNet, HL-HDR offers clear advantages. Unlike diffusion-based models like LFDiff, which rely on iterative sampling and are computationally expensive, HL-HDR has a compact architecture, stable training, and faster convergence. Unlike lightweight CNNs such as SAFNet, which often sacrifice detail for speed, HL-HDR jointly optimizes high- and low-frequency features to balance visual quality and efficiency. Experiments on multiple public HDR benchmarks show that HL-HDR consistently improves performance. On three datasets with ground-truth HDR images, PSNR-μ increases by 0.05 dB, 0.62 dB, and 0.28 dB, with visual results showing better ghost removal and detail preservation. For future work, we plan to explore lightweight designs and real-time deployment, leverage diffusion models to improve generalization and robustness in complex dynamic scenes, and build larger multi-scene HDR datasets to promote practical applications.