Perimeter Security Utilizing Thermal Object Detection

Highlights

- Thermal object detection increases surveillance capabilities.

- Deploying a thermal surveillance system is a practical and economical solution.

- Thermal detection can greatly complement other surveillance systems.

- More thermal detection systems can be utilized.

Abstract

1. Introduction

2. Related Work

3. IR-Thermal Radiation

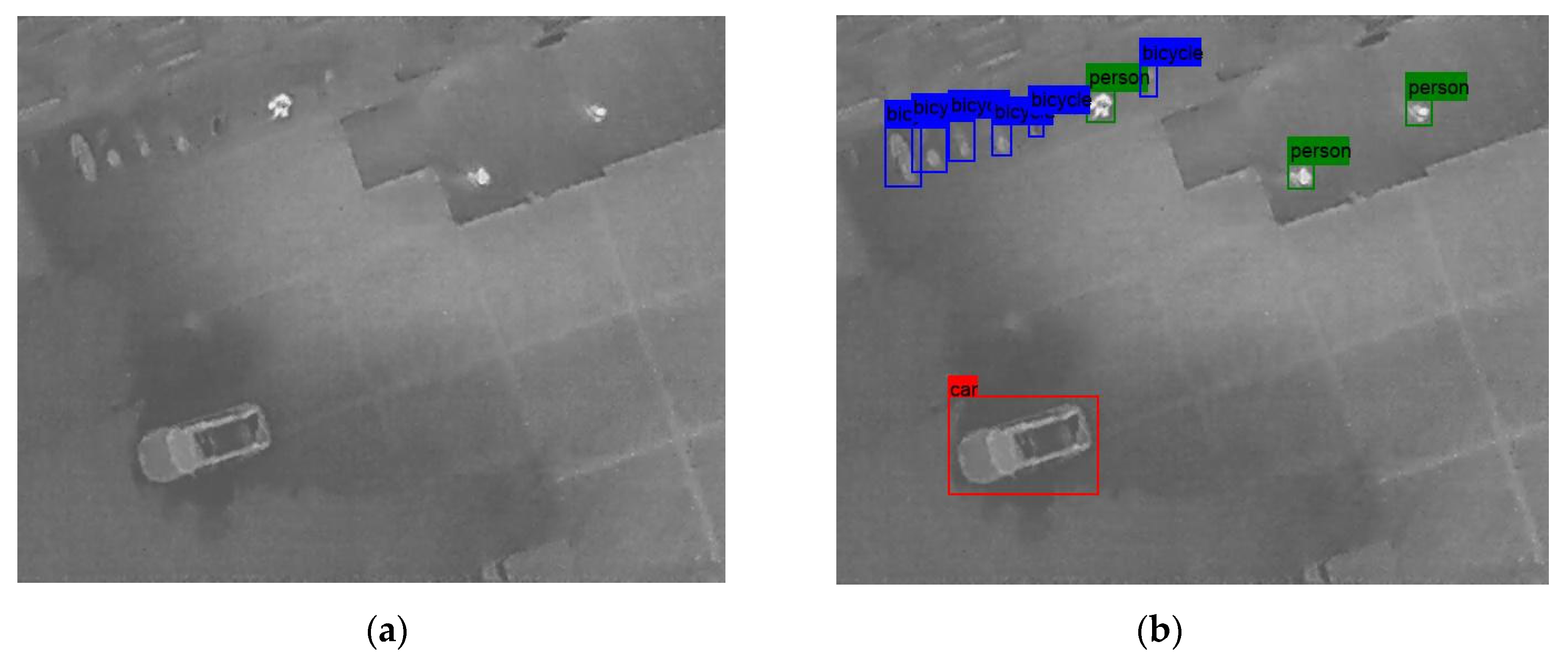

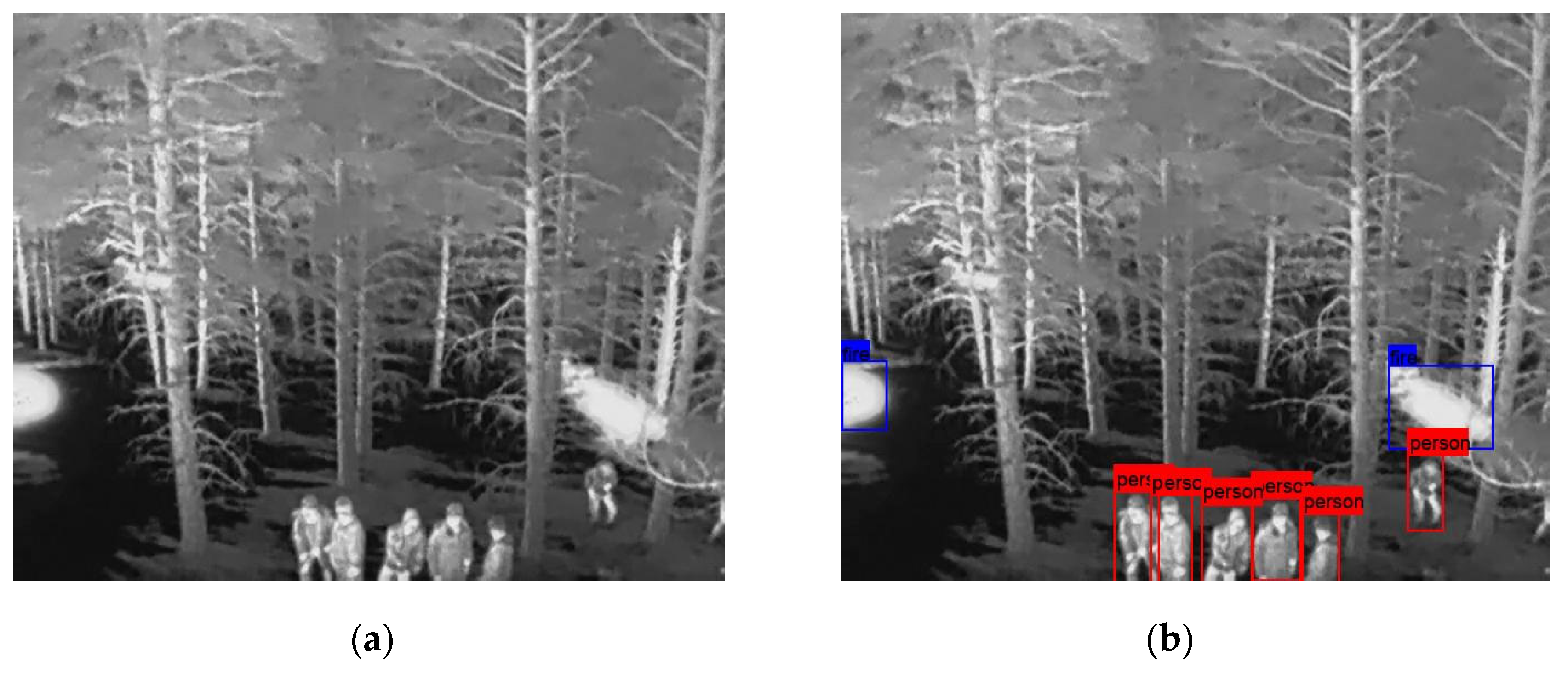

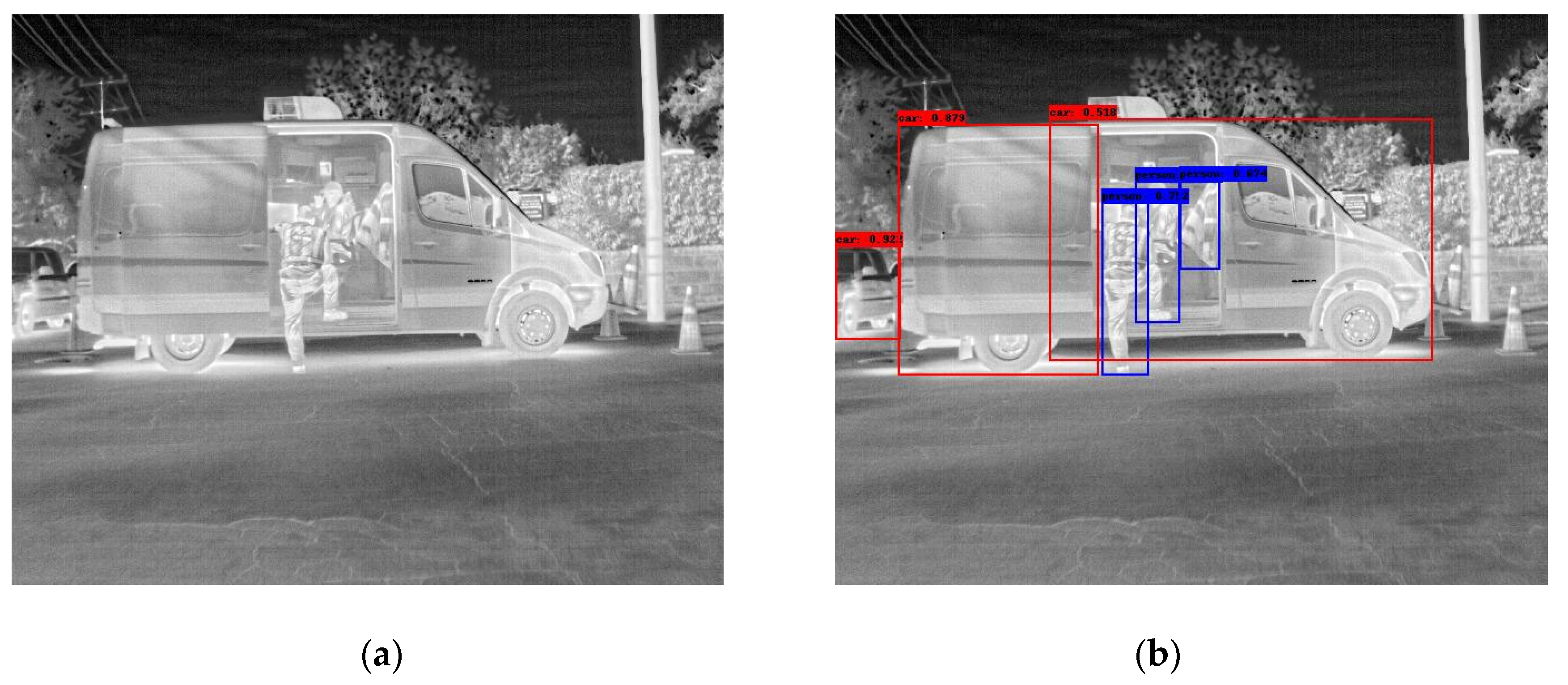

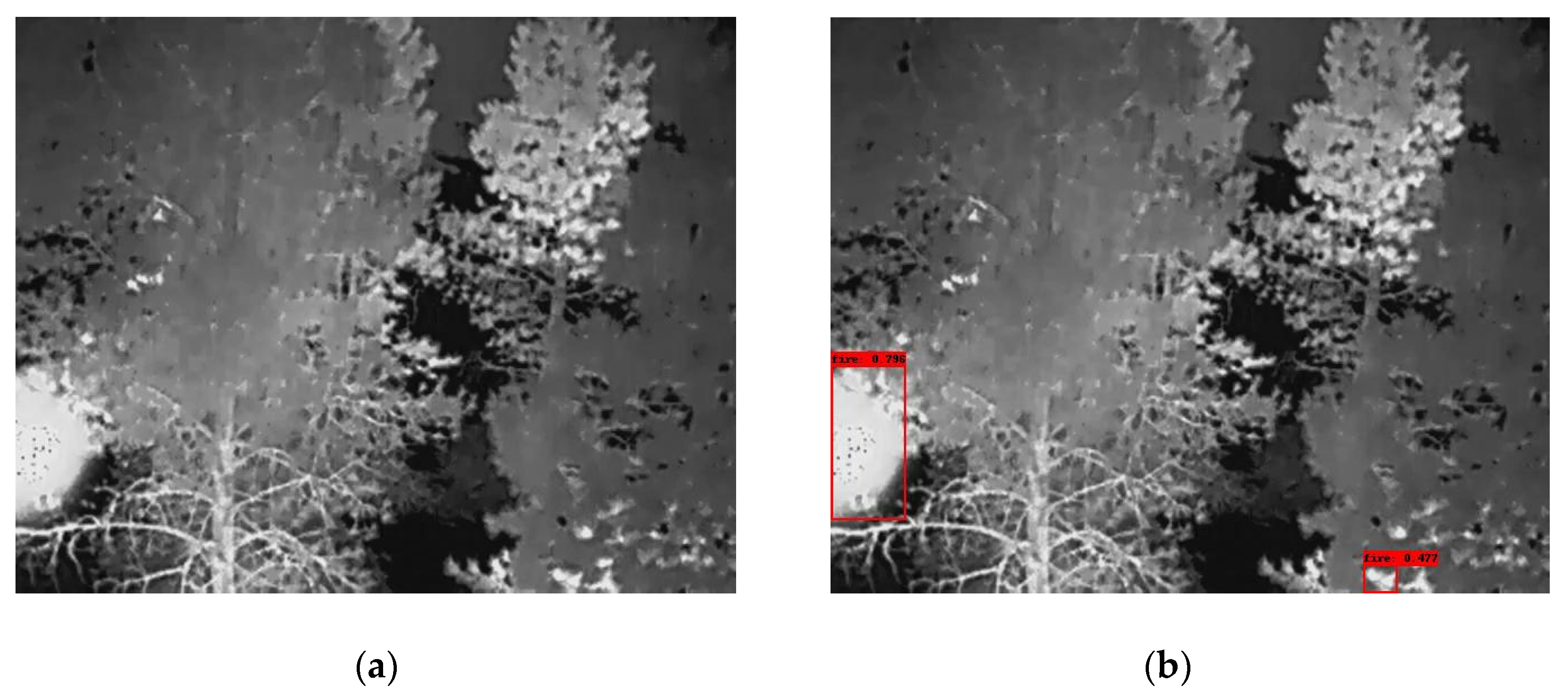

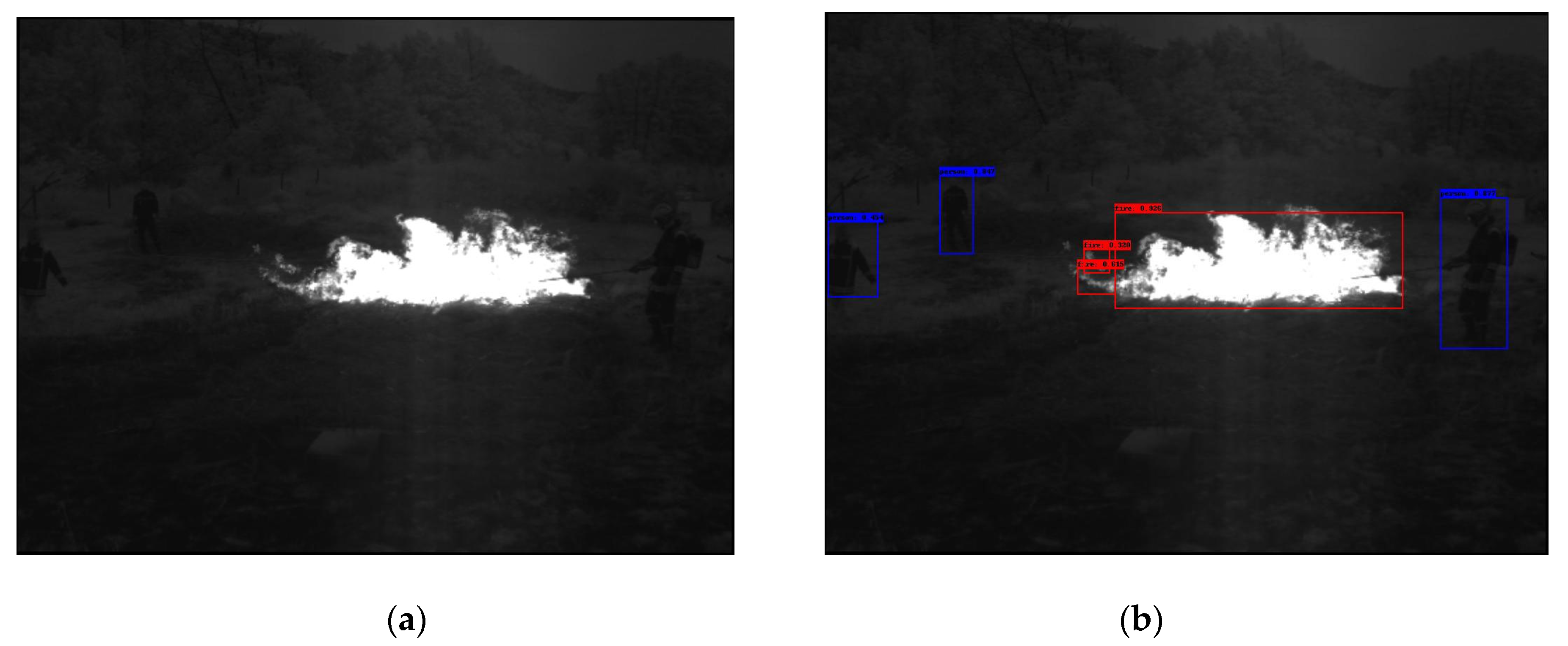

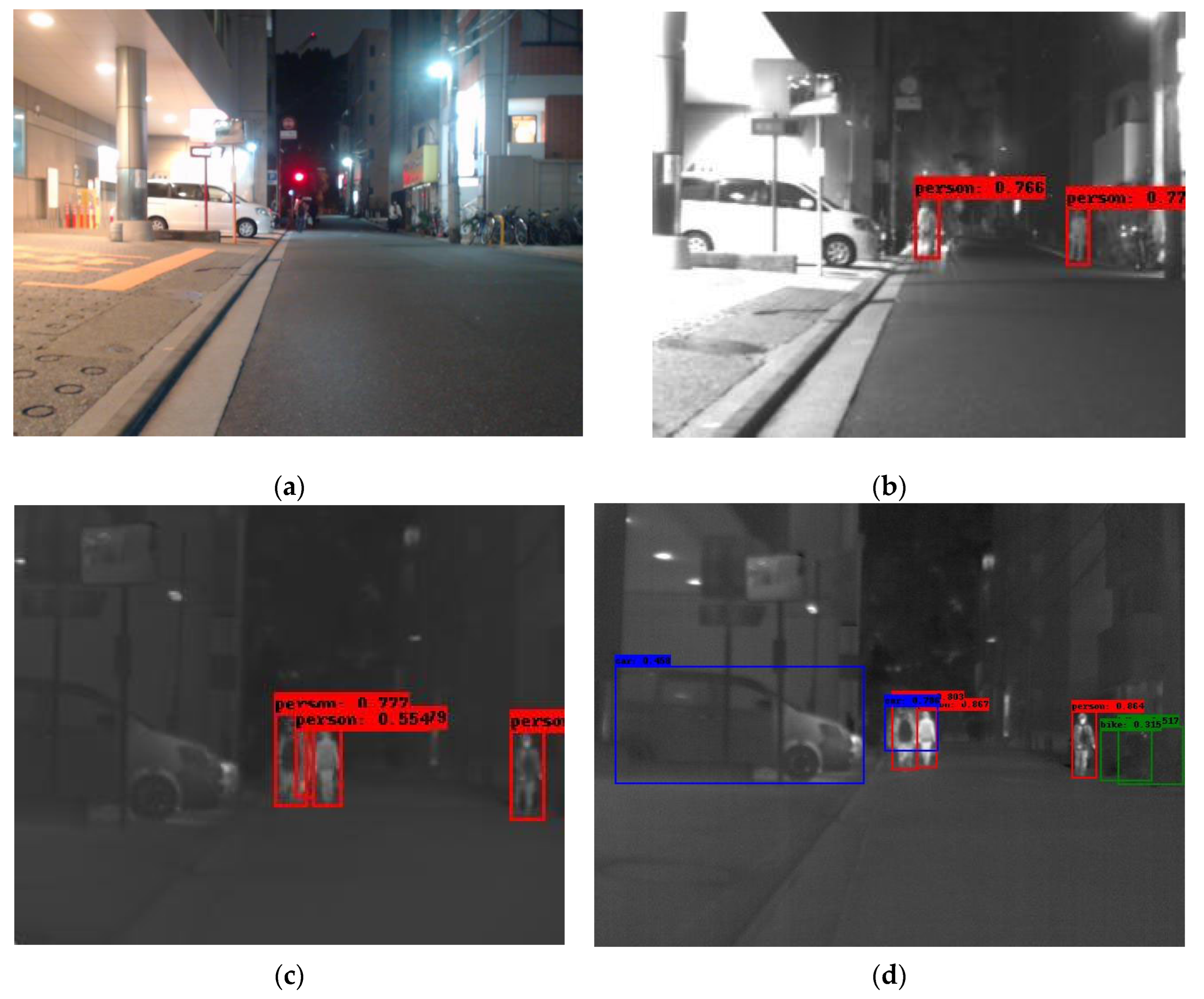

4. Datasets

5. Results

5.1. Training Parameters and Process

5.2. Models’ Efficiency

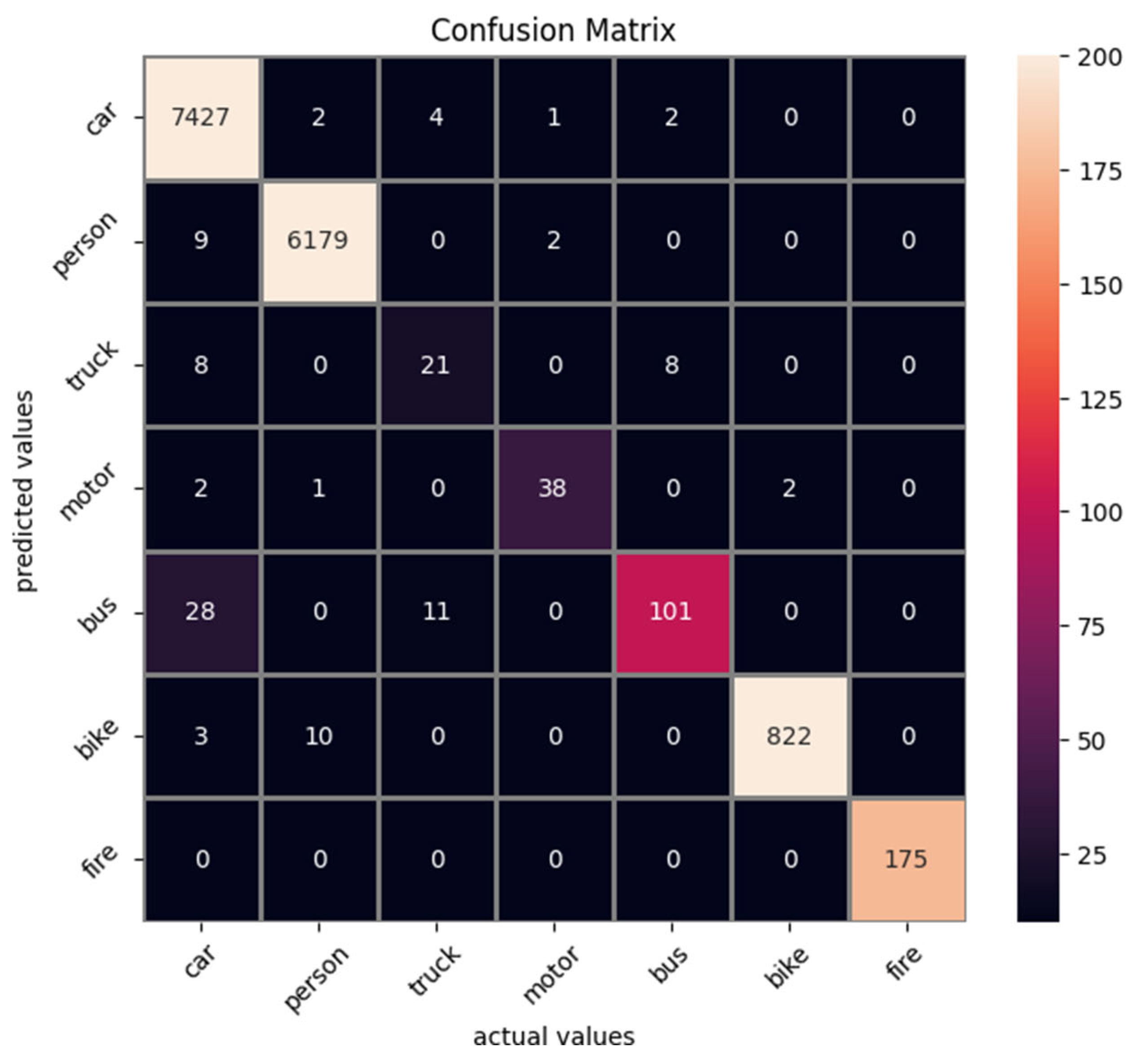

5.3. Models’ Effectiveness

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IR | Infrared |
| RGB | Red Green Blue |
| RCNN | Region-based Convolutional Neural Network |
| GB | Gigabytes |
| CNN | Convolutional Neural Network |
| GPU | Graphics Processing Unit |
| HSV | Hue Saturation Value |

References

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the 2016 European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the 2020 European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- Krišto, M.; Ivašić-Kos, M.; Pobar, M. Thermal object detection in difficult weather conditions using YOLO. IEEE Access 2020, 8, 125459–125476.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the 2014 European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

- Bustos, N.; Mashhadi, M.; Lai-Yuen, S.K.; Sarkar, S.; Das, T.K. A systematic literature review on object detection using near infrared and thermal images. Neurocomputing 2023, 560, 126804.

- Teledyne FLIR Homepage. Available online: https://www.flir.com/oem/adas/adas-dataset-form/ (accessed on 28 May 2025).

- Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A review on YOLOv8 and its advancements. In Proceedings of the 2024 International Conference on Data Intelligence and Cognitive Informatics, Tirunelveli, India, 18–20 November 2024; Springer: Singapore, 2024; pp. 529–545.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Lv, W.; Zhao, Y.; Chang, Q.; Huang, K.; Wang, G.; Liu, Y. RT-DETRv2: Improved baseline with bag-of-freebies for real-time detection transformer. arXiv 2024, arXiv:2407.17140.

- Nazir, A.; Wani, M.A. You only look once-object detection models: A review. In Proceedings of the 2023 10th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 15–17 March 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1088–1095.

- Jegham, N.; Koh, C.Y.; Abdelatti, M.; Hendawi, A. Evaluating the evolution of YOLO (You Only Look Once) models: A comprehensive benchmark study of YOLO11 and its predecessors. arXiv 2024, arXiv:2411.00201.

- Bai, Y.; Mei, J.; Yuille, A.L.; Xie, C. Are transformers more robust than CNNs? Adv. Neural Inf. Process. Syst. 2021, 34, 26831–26843.

- Zong, Z.; Song, G.; Liu, Y. DETRs with collaborative hybrid assignments training. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 6748–6758.

- Bhusal, S. Object Detection and Tracking in Wide Area Surveillance Using Thermal Imagery. Bachelor’s Thesis, University of Nevada, Las Vegas, NV, USA, 2015.

- Drahanský, M.; Charvát, M.; Macek, I.; Mohelníková, J. Thermal imaging detection system: A case study for indoor environments. Sensors 2023, 23, 7822.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Klir, S.; Lerch, J.; Benkner, S.; Khanh, T.Q. Multi-Person Localization Based on a Thermopile Array Sensor with Machine Learning and a Generative Data Model. Sensors 2025, 25, 419.

- Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; ChristopherSTAN; Liu, C.; Laughing; Hogan, A.; lorenzomammana; tkianai; et al. ultralytics/yolov5, version 3.0; Zenodo: Geneva, Switzerland, 2020.

- Ippalapally, R.; Mudumba, S.H.; Adkay, M.; Vardhan, H.R.N. Object detection using thermal imaging. In Proceedings of the 2020 IEEE 17th India Council International Conference (INDICON), New Delhi, India, 10–13 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

- Devaguptapu, C.; Akolekar, N.; Sharma, M.M.; Balasubramanian, V.N. Borrow from anywhere: Pseudo multi-modal object detection in thermal imagery. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019.

- Zhang, Z.; Huang, J.; Hei, G.; Wang, W. YOLO-IR-free: An improved algorithm for real-time detection of vehicles in infrared images. Sensors 2023, 23, 8723.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Dai, X.; Yuan, X.; Wei, X. TIRNet: Object detection in thermal infrared images for autonomous driving. Appl. Intell. 2021, 51, 1244–1261.

- Choi, Y.; Kim, N.; Hwang, S.; Park, K.; Yoon, J.S.; An, K.; Kweon, I.S. KAIST multi-spectral day/night data set for autonomous and assisted driving. IEEE Trans. Intell. Transp. Syst. 2018, 19, 934–948.

- Zunair, H.; Khan, S.; Hamza, A.B. RSUD20K: A Dataset for Road Scene Understanding in Autonomous Driving. In Proceedings of the 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024; pp. 708–714.

- Munir, F.; Azam, S.; Rafique, M.A.; Sheri, A.M.; Jeon, M.; Pedrycz, W. Exploring Thermal Images for Object Detection in Underexposure Regions for Autonomous Driving. arXiv 2020, arXiv:2006.00821.

- Knyva, M.; Gailius, D.; Kilius, Š.; Kukanauskaitė, A.; Kuzas, P.; Balčiūnas, G.; Meškuotienė, A.; Dobilienė, J. Image processing algorithms analysis for roadside wild animal detection. Sensors 2025, 25, 5876.

- Fang, N.; Li, L.; Zhou, X.; Zhang, W.; Chen, F. An FPGA-Based Hybrid Overlapping Acceleration Architecture for Small-Target Remote Sensing Detection. Remote Sens. 2025, 17, 494.

- Amara, A.; Amiel, F.; Ea, T. FPGA vs. ASIC for low power applications. Microelectron. J. 2006, 37, 669–677.

- Hu, Z.; Li, X.; Li, L.; Su, X.; Yang, L.; Zhang, Y.; Hu, X.; Lin, C.; Tang, Y.; Hao, J.; et al. Wide-swath and high-resolution whisk-broom imaging and on-orbit performance of SDGSAT-1 thermal infrared spectrometer. Remote Sens. Environ. 2024, 300, 113887.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

- Mebtouche, N.E.D.; Baha, N.; Kaddouri, N.; Zaghdar, A.; Redjil, A.B.E. Improving thermal object detection for optimized deep neural networks on embedded devices. In Proceedings of the 2022 Artificial Intelligence Doctoral Symposium, Algiers, Algeria, 18–19 September 2022; Springer Nature: Singapore, 2022; pp. 83–94.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Cheng, L.; He, Y.; Mao, Y.; Liu, Z.; Dang, X.; Dong, Y.; Wu, L. Personnel detection in dark aquatic environments based on infrared thermal imaging technology and an improved YOLOv5s model. Sensors 2024, 24, 3321.

- Kumar, M.; Ray, S.; Yadav, D.K. Moving human detection and tracking from thermal video through intelligent surveillance system for smart applications. Multimed. Tools Appl. 2023, 82, 39551–39570.

- Wang, Y.; Jodoin, P.M.; Porikli, F.; Konrad, J.; Benezeth, Y.; Ishwar, P. CDnet 2014: An expanded change detection benchmark dataset. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 387–394.

- Khatri, K.; Asha, C.S.; D’Souza, J.M. Detection of animals in thermal imagery for surveillance using GAN and object detection framework. In Proceedings of the 2022 International Conference for Advancement in Technology (ICONAT), Goa, India, 21–22 January 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Kniaz, V.V.; Knyaz, V.A.; Hladuvka, J.; Kropatsch, W.G.; Mizginov, V. ThermalGAN: Multimodal color-to-thermal image translation for person re-identification in multispectral dataset. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Zilkha, M.; Spanier, A.B. Real-time CNN-based object detection and classification for outdoor surveillance images: Daytime and thermal. In Proceedings of the 2019 Artificial Intelligence and Machine Learning in Defense Applications, Strasbourg, France, 10–12 September 2019; SPIE: Bellingham, WA, USA, 2019; Volume 11169, p. 1116902.

- Gutierrez, G.; Llerena, J.P.; Usero, L.; Patricio, M.A. A comparative study of convolutional neural network and transformer architectures for drone detection in thermal images. Appl. Sci. 2024, 15, 109.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the 2024 European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 1–21.

- Balakrishnan, T.; Sengar, S.S. RepVGG-GELAN: Enhanced GELAN with VGG-style ConvNets for brain tumour detection. In Proceedings of the International Conference on Computer Vision and Image Processing, Chennai, India, 20–22 December 2024; Springer Nature: Cham, Switzerland, 2024; pp. 417–430.

- Yang, X.; Wang, G.; Hu, W.; Gao, J.; Lin, S.; Li, L.; Gao, K.; Wang, Y. Video Tiny-Object Detection Guided by the Spatial-Temporal Motion Information. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Vancouver, BC, Canada, 17–24 June 2023; Volume 2023.

- CVPR 2023 Anti-UAV Challenge Dataset. In Proceedings of the 3rd Anti-UAV Workshop & Challenge, Vancouver, BC, Canada, 18–22 June 2023.

- Cao, Y.; Bin, J.; Hamari, J.; Blasch, E.; Liu, Z. Multimodal object detection by channel switching and spatial attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 403–411.

- Jia, X.; Zhu, C.; Li, M.; Tang, W.; Zhou, W. LLVIP: A visible-infrared paired dataset for low-light vision. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3496–3504.

- Chen, Y.T.; Shi, J.; Ye, Z.; Mertz, C.; Ramanan, D.; Kong, S. Multimodal object detection via probabilistic ensembling. In Proceedings of the 2022 European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 139–158.

- Tang, H.; Li, Z.; Zhang, D.; He, S.; Tang, J. Divide-and-conquer: Confluent triple-flow network for RGB-T salient object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 1958–1974.

- Bourlai, T.; Cukic, B. Multi-spectral face recognition: Identification of people in difficult environments. In Proceedings of the 2012 IEEE International Conference on Intelligence and Security Informatics, Washington, DC, USA, 11–14 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 196–201.

- Krišto, M.; Ivašić-Kos, M. Thermal imaging dataset for person detection. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1126–1131.

- Tran, K.B.; Carballo, A.; Takeda, K. T360Fusion: Temporal 360 Multimodal Fusion for 3D Object Detection via Transformers. Sensors 2025, 25, 4902.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Suo, J.; Wang, T.; Zhang, X.; Chen, H.; Zhou, W.; Shi, W. HIT-UAV: A high-altitude infrared thermal dataset for Unmanned Aerial Vehicle-based object detection. Sci. Data 2023, 10, 227.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

- Osei-Kyei, R.; Tam, V.; Ma, M.; Mashiri, F. Critical review of the threats affecting the building of critical infrastructure resilience. Int. J. Disaster Risk Reduct. 2021, 60, 102316.

- Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Stat. 1951, 22, 400–407.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. In Proceedings of the 2018 International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Steiner, A.; Kolesnikov, A.; Zhai, X.; Wightman, R.; Uszkoreit, J.; Beyer, L. How to train your ViT? Data, augmentation, and regularization in vision transformers. arXiv 2021, arXiv:2106.10270.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

- Takumi, K.; Watanabe, K.; Ha, Q.; Tejero-De-Pablos, A.; Ushiku, Y.; Harada, T. Multispectral Object Detection for Autonomous Vehicles. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 35–43.

| Class | FLIR-ADAS [8] | HIT-UAV [54] | Corsican Fire [55] | Flame [56] | Combined |
|---|---|---|---|---|---|
| Person | ✓ (50,478) | ✓ (12,312) | ∗ (1107) | ∗ (94) | 63,991 (39.0%) |
| Car | ✓ (73,623) | ✓ (7311) | ∗ (147) | n/a | 81,081 (49.4%) |
| Bike | ✓ (7237) | ✓ (4980) | n/a | n/a | 12,217 (7.4%) |
| Motorcycle | ✓ (1116) | n/a | n/a | n/a | 1116 (0.7%) |
| Bus | ✓ (2245) | n/a | n/a | n/a | 2245 (1.4%) |
| Train | ✗ (5) | n/a | n/a | n/a | n/a |
| Truck | ✓ (829) | n/a | n/a | n/a | 829 (0.5%) |
| Traffic light | ✗ (16,198) | n/a | n/a | n/a | n/a |
| Fire Hydrant | ✗ (1095) | n/a | n/a | n/a | n/a |
| Street Sign | ✗ (20,770) | n/a | n/a | n/a | n/a |
| Dog | ✗ (4) | n/a | n/a | n/a | n/a |
| Deer | ✗ (8) | n/a | n/a | n/a | n/a |
| Skateboard | ✗ (29) | n/a | n/a | n/a | n/a |
| Stroller | ✗ (15) | n/a | n/a | n/a | n/a |
| Scooter | ✗ (15) | n/a | n/a | n/a | n/a |
| Other Vehicle | ✗ (1373) | ✗ (148) | n/a | n/a | n/a |
| Don’t Care | n/a | ✗ (148) | n/a | n/a | n/a |
| Fire | n/a | n/a | ✓ (1021) | ✓ (1689) | 2710 (1.7%) |
| Sum | 135,528 | 24,603 | 2275 | 1783 | 164,189 |
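The Combined column follows directly from the per-dataset counts: only classes marked ✓ or ∗ enter the totals (the ✗ classes, such as FLIR-ADAS Traffic light, are left out of every Sum), and each percentage is that class's share of the 164,189 retained instances. The short Python check below recomputes the column from the counts in the table; the `COUNTS` layout and the `combined_distribution` helper are illustrative names, not part of the authors' pipeline. It also makes the imbalance explicit: Truck (0.5%) and Motorcycle (0.7%) are two orders of magnitude rarer than Car.

```python
# Instance counts of the retained classes (marked ✓ or ∗ in the table).
# Tuple order: FLIR-ADAS, HIT-UAV, Corsican Fire, Flame (0 where n/a).
COUNTS = {
    "Person": (50_478, 12_312, 1_107, 94),
    "Car": (73_623, 7_311, 147, 0),
    "Bike": (7_237, 4_980, 0, 0),
    "Motorcycle": (1_116, 0, 0, 0),
    "Bus": (2_245, 0, 0, 0),
    "Truck": (829, 0, 0, 0),
    "Fire": (0, 0, 1_021, 1_689),
}


def combined_distribution(counts):
    """Return ({class: (combined_count, percent_of_total)}, grand_total)."""
    combined = {name: sum(per_dataset) for name, per_dataset in counts.items()}
    total = sum(combined.values())
    shares = {name: (n, 100.0 * n / total) for name, n in combined.items()}
    return shares, total


if __name__ == "__main__":
    shares, total = combined_distribution(COUNTS)
    for name, (n, pct) in shares.items():
        print(f"{name:<11} {n:>7,} ({pct:.1f}%)")
    print(f"{'Sum':<11} {total:>7,}")
```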

| Model | Variation | Image Resolution | FPS | Parameters (M) | GFLOPs |
|---|---|---|---|---|---|
| YOLOv8 | small | 640 × 640 | 80 | 11.1 | 28.8 |
| | | 768 × 768 | 66 | 11.1 | 41.5 |
| | | 896 × 896 | 51 | 11.1 | 56.5 |
| | | 1024 × 1024 | 42 | 11.1 | 73.8 |
| | medium | 640 × 640 | 56 | 25.9 | 79.3 |
| | | 768 × 768 | 45 | 25.9 | 114.2 |
| | | 896 × 896 | 37 | 25.9 | 155.5 |
| | | 1024 × 1024 | 30 | 25.9 | 203.1 |
| YOLO11 | small | 640 × 640 | 56 | 9.4 | 21.7 |
| | | 768 × 768 | 48 | 9.4 | 31.3 |
| | | 896 × 896 | 43 | 9.4 | 42.6 |
| | | 1024 × 1024 | 35 | 9.4 | 55.6 |
| | medium | 640 × 640 | 48 | 20.1 | 68.5 |
| | | 768 × 768 | 38 | 20.1 | 98.7 |
| | | 896 × 896 | 31 | 20.1 | 134.3 |
| | | 1024 × 1024 | 25 | 20.1 | 175.4 |
| RT-DETRv2 | r18 | 640 × 640 | 73 | 20.0 | 61.1 |
| | | 768 × 768 | 63 | 20.0 | 87.1 |
| | | 896 × 896 | 58 | 20.0 | 117.8 |
| | | 1024 × 1024 | 54 | 20.0 | 153.4 |
| | r34 | 640 × 640 | 57 | 31.3 | 93.2 |
| | | 768 × 768 | 48 | 31.3 | 132.9 |
| | | 896 × 896 | 44 | 31.3 | 180.0 |
| | | 1024 × 1024 | 40 | 31.3 | 234.3 |
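Two regularities in this table can be checked by hand. Parameter counts do not depend on the input size, whereas the GFLOPs of the YOLO models grow almost exactly with the number of input pixels, i.e. with the square of the side length (RT-DETRv2 grows slightly more slowly). Frame rate converts to per-frame latency as 1000/FPS milliseconds. The Python sketch below is a back-of-the-envelope estimate under that pixel-proportional assumption, not the profiling procedure used for the table; `estimate_gflops` and `latency_ms` are hypothetical helper names.

```python
def estimate_gflops(base_gflops: float, base_side: int, new_side: int) -> float:
    """Scale a measured GFLOPs figure to another square input size.

    Assumes cost is proportional to the number of input pixels, which fits
    the YOLO rows of the table closely.
    """
    return base_gflops * (new_side / base_side) ** 2


def latency_ms(fps: float) -> float:
    """Convert a throughput in frames per second to milliseconds per frame."""
    return 1000.0 / fps


if __name__ == "__main__":
    # YOLOv8-small: 28.8 GFLOPs measured at 640 x 640.
    for side in (768, 896, 1024):
        print(side, round(estimate_gflops(28.8, 640, side), 1))
    # YOLOv8-medium at 896 x 896 runs at 37 FPS.
    print(round(latency_ms(37), 1), "ms per frame")
```

For YOLOv8-small the estimates (41.5, 56.4 and 73.7 GFLOPs) land within 0.1 of the measured 41.5, 56.5 and 73.8, so the quadratic rule is a reasonable way to anticipate the cost of resolutions that were not benchmarked.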

| Model | Variation | Image Resolution | FLIR-ADAS v2 | HIT-UAV | Corsican | Flame | Combined |
|---|---|---|---|---|---|---|---|
| YOLOv8 | small | 640 × 640 | 0.429/0.647 | 0.642/0.946 | 0.805/0.970 | 0.579/0.866 | 0.501/0.749 |
| | | 768 × 768 | 0.435/0.665 | 0.646/0.946 | 0.827/0.974 | 0.614/0.901 | 0.509/0.751 |
| | | 896 × 896 | 0.454/0.676 | 0.650/0.948 | 0.826/0.973 | 0.585/0.873 | 0.513/0.757 |
| | | 1024 × 1024 | 0.452/0.667 | 0.649/0.949 | 0.822/0.974 | 0.586/0.870 | 0.518/0.753 |
| | medium | 640 × 640 | 0.460/0.679 | 0.643/0.947 | 0.826/0.979 | 0.604/0.878 | 0.525/0.762 |
| | | 768 × 768 | 0.461/0.680 | 0.645/0.944 | 0.823/0.972 | 0.624/0.872 | 0.532/0.765 |
| | | 896 × 896 | 0.475/0.692 | 0.653/0.948 | 0.811/0.969 | 0.623/0.907 | 0.543/0.781 |
| | | 1024 × 1024 | 0.470/0.689 | 0.653/0.947 | 0.820/0.965 | 0.597/0.849 | 0.538/0.774 |
| YOLO11 | small | 640 × 640 | 0.425/0.664 | 0.642/0.948 | 0.819/0.969 | 0.606/0.926 | 0.529/0.769 |
| | | 768 × 768 | 0.437/0.673 | 0.651/0.946 | 0.808/0.974 | 0.600/0.891 | 0.520/0.757 |
| | | 896 × 896 | 0.447/0.668 | 0.652/0.950 | 0.820/0.974 | 0.598/0.901 | 0.514/0.754 |
| | | 1024 × 1024 | 0.448/0.670 | 0.653/0.949 | 0.813/0.965 | 0.591/0.886 | 0.499/0.740 |
| | medium | 640 × 640 | 0.451/0.681 | 0.649/0.947 | 0.817/0.970 | 0.630/0.903 | 0.525/0.770 |
| | | 768 × 768 | 0.463/0.680 | 0.653/0.953 | 0.821/0.974 | 0.628/0.902 | 0.539/0.772 |
| | | 896 × 896 | 0.470/0.689 | 0.657/0.950 | 0.819/0.969 | 0.622/0.885 | 0.538/0.773 |
| | | 1024 × 1024 | 0.468/0.684 | 0.653/0.953 | 0.818/0.972 | 0.608/0.885 | 0.541/0.773 |
| RT-DETRv2 | r18 | 640 × 640 | 0.425/0.648 | 0.622/0.948 | 0.808/0.947 | 0.587/0.915 | 0.494/0.739 |
| | | 768 × 768 | 0.437/0.653 | 0.627/0.945 | 0.803/0.953 | 0.605/0.916 | 0.509/0.750 |
| | | 896 × 896 | 0.454/0.676 | 0.629/0.947 | 0.799/0.961 | 0.595/0.882 | 0.516/0.758 |
| | | 1024 × 1024 | 0.446/0.661 | 0.632/0.947 | 0.803/0.965 | 0.604/0.911 | 0.503/0.735 |
| | r34 | 640 × 640 | 0.450/0.684 | 0.625/0.950 | 0.779/0.950 | 0.630/0.902 | 0.502/0.759 |
| | | 768 × 768 | 0.440/0.682 | 0.620/0.949 | 0.789/0.954 | 0.605/0.871 | 0.509/0.752 |
| | | 896 × 896 | 0.445/0.667 | 0.608/0.938 | 0.790/0.953 | 0.601/0.879 | 0.517/0.758 |
| | | 1024 × 1024 | 0.445/0.668 | 0.594/0.926 | 0.791/0.953 | 0.651/0.888 | 0.514/0.755 |
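Each cell in this table holds two scores separated by a slash. This excerpt does not say which metric each one is; a common convention in detection papers is mAP@0.5:0.95 followed by mAP@0.5, but that reading is an assumption here. The first Combined score of YOLOv8-medium at 896 × 896, 0.543, is the same value reported as "All" for YOLOv8 in the per-class table that follows. The Python sketch below parses such cells and selects the configuration with the highest Combined score; `parse_cell` and `best_config` are hypothetical helpers, and only one row per model variation (its highest first Combined score) is copied in.

```python
from typing import Dict, Tuple

Key = Tuple[str, str, int]  # (model, variation, input side in pixels)


def parse_cell(cell: str) -> Tuple[float, float]:
    """Split a 'first/second' score cell such as '0.543/0.781' into two floats."""
    first, second = cell.split("/")
    return float(first), float(second)


# Combined-split cells from the table; for each model variation only the
# resolution with the highest first Combined score is kept.
COMBINED: Dict[Key, str] = {
    ("YOLOv8", "small", 1024): "0.518/0.753",
    ("YOLOv8", "medium", 896): "0.543/0.781",
    ("YOLO11", "small", 640): "0.529/0.769",
    ("YOLO11", "medium", 1024): "0.541/0.773",
    ("RT-DETRv2", "r18", 896): "0.516/0.758",
    ("RT-DETRv2", "r34", 896): "0.517/0.758",
}


def best_config(cells: Dict[Key, str], index: int = 0) -> Key:
    """Return the key whose score at `index` (0 = first, 1 = second) is highest."""
    return max(cells, key=lambda k: parse_cell(cells[k])[index])


if __name__ == "__main__":
    print("best by first score: ", best_config(COMBINED))
    print("best by second score:", best_config(COMBINED, 1))
```

On these rows both scores pick the same configuration, YOLOv8-medium at 896 × 896, which is consistent with it being carried forward into the per-class comparison.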

| Model | All | Car | Person | Truck | Motorcycle | Bus | Bicycle | Fire |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | 0.543 | 0.693 | 0.557 | 0.283 | 0.443 | 0.531 | 0.584 | 0.707 |
| YOLO11 | 0.541 | 0.703 | 0.566 | 0.214 | 0.479 | 0.532 | 0.587 | 0.702 |
| RT-DETRv2 | 0.517 | 0.674 | 0.536 | 0.189 | 0.443 | 0.502 | 0.552 | 0.720 |
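The "All" column is consistent with an unweighted mean of the seven per-class scores, which is how COCO-style mAP is aggregated over classes: for YOLOv8, (0.693 + 0.557 + 0.283 + 0.443 + 0.531 + 0.584 + 0.707) / 7 ≈ 0.543. A consequence is that Truck, only about 0.5% of the combined instances, weighs as much as Car, so its low scores pull "All" down noticeably. The Python check below recomputes the mean for all three rows; `macro_average` is a hypothetical helper, and gaps of up to about 0.001 are expected because the per-class values are already rounded to three decimals.

```python
from statistics import mean

# Per-class scores from the table, in column order:
# Car, Person, Truck, Motorcycle, Bus, Bicycle, Fire.
PER_CLASS = {
    "YOLOv8": [0.693, 0.557, 0.283, 0.443, 0.531, 0.584, 0.707],
    "YOLO11": [0.703, 0.566, 0.214, 0.479, 0.532, 0.587, 0.702],
    "RT-DETRv2": [0.674, 0.536, 0.189, 0.443, 0.502, 0.552, 0.720],
}
REPORTED_ALL = {"YOLOv8": 0.543, "YOLO11": 0.541, "RT-DETRv2": 0.517}


def macro_average(scores):
    """Unweighted mean over classes: every class counts equally, however rare."""
    return mean(scores)


if __name__ == "__main__":
    for model, scores in PER_CLASS.items():
        print(f"{model:<10} recomputed {macro_average(scores):.4f}"
              f"  reported {REPORTED_ALL[model]:.3f}")
```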

| Model | All | Car | Person | Truck | Motorcycle | Bus | Bicycle | Fire |
|---|---|---|---|---|---|---|---|---|
| YOLOv8m, 896 × 896 | 0.543 | 0.693 | 0.557 | 0.283 | 0.443 | 0.531 | 0.584 | 0.707 |
| YOLOv8m, 1024 × 1024 | 0.538 | 0.701 | 0.564 | 0.229 | 0.460 | 0.521 | 0.583 | 0.711 |
| YOLOv8m, weighted dataloader, 896 × 896 | 0.526 | 0.677 | 0.544 | 0.193 | 0.472 | 0.521 | 0.573 | 0.703 |
| YOLOv8m, weighted dataloader, 1024 × 1024 | 0.519 | 0.689 | 0.552 | 0.218 | 0.407 | 0.507 | 0.564 | 0.700 |
| YOLOv8m, weighted classes (Truck ×20) | 0.543 | 0.697 | 0.558 | 0.233 | 0.465 | 0.534 | 0.583 | 0.732 |
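The table does not describe how the "weighted dataloader" and "weighted classes" variants were implemented, and in these results none of them beats the unweighted 896 × 896 Truck score of 0.283. One common way to build a weighted dataloader is to sample each training image with a probability derived from the inverse frequency of the classes it contains, so that images with rare classes such as Truck or Motorcycle are drawn more often; a per-class loss weight (for example 20 for Truck) applies the same idea to the loss instead of the sampler. The Python sketch below computes such image weights from the combined class counts; it is an illustrative assumption, not the authors' code, and `image_sampling_weights` is a hypothetical helper.

```python
import random
from typing import Dict, List

# Combined instance counts per class, taken from the dataset table.
CLASS_COUNTS = {"Person": 63_991, "Car": 81_081, "Bike": 12_217, "Motorcycle": 1_116,
                "Bus": 2_245, "Truck": 829, "Fire": 2_710}


def image_sampling_weights(image_labels: List[List[str]],
                           class_counts: Dict[str, int]) -> List[float]:
    """Weight each image by the mean inverse frequency of its labelled objects.

    Images without any labels get the smallest class weight so they are
    still sampled occasionally.
    """
    total = sum(class_counts.values())
    class_w = {c: total / n for c, n in class_counts.items()}
    floor = min(class_w.values())
    return [sum(class_w[c] for c in labels) / len(labels) if labels else floor
            for labels in image_labels]


if __name__ == "__main__":
    images = [["Car", "Car", "Person"], ["Truck"], [], ["Fire", "Person"]]
    weights = image_sampling_weights(images, CLASS_COUNTS)
    rng = random.Random(0)
    # Draw 1000 image indices with replacement according to the weights.
    picks = rng.choices(range(len(images)), weights=weights, k=1000)
    print([picks.count(i) for i in range(len(images))])
```

Oversampling repeats the same few rare-class images many times per epoch, which can encourage overfitting on them; that is one plausible reason, not a demonstrated one, why the weighted variants did not help Truck here.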

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Orfanidis, G.; Ioannidis, K.; Vrochidis, S.; Kompatsiaris, I. Perimeter Security Utilizing Thermal Object Detection. Sensors 2025, 25, 6680. https://doi.org/10.3390/s25216680

Orfanidis G, Ioannidis K, Vrochidis S, Kompatsiaris I. Perimeter Security Utilizing Thermal Object Detection. Sensors. 2025; 25(21):6680. https://doi.org/10.3390/s25216680

Chicago/Turabian Style: Orfanidis, Georgios, Konstantinos Ioannidis, Stefanos Vrochidis, and Ioannis Kompatsiaris. 2025. "Perimeter Security Utilizing Thermal Object Detection" Sensors 25, no. 21: 6680. https://doi.org/10.3390/s25216680

APA Style: Orfanidis, G., Ioannidis, K., Vrochidis, S., & Kompatsiaris, I. (2025). Perimeter Security Utilizing Thermal Object Detection. Sensors, 25(21), 6680. https://doi.org/10.3390/s25216680