A Survey of Data Augmentation Techniques for Traffic Visual Elements

Highlights

- We propose a structured taxonomy of data augmentation methods for traffic visual elements, covering image transformation, Generative Adversarial Networks (GANs), diffusion models, and composite methods.

- We construct a comprehensive cross-comparison benchmark spanning nearly 40 datasets and 10 evaluation metrics, which systematically compares augmentation strategies on key measures, including accuracy, mean average precision (mAP), and robustness.

- We demonstrate the capability of emerging generative paradigms, particularly diffusion models and multimodal composite models, to represent rare driving scenarios, and we analyze their trade-offs between computational cost and semantic consistency.

- This paper systematically consolidates diverse data augmentation strategies for traffic visual elements, charting a forward-looking roadmap for researchers developing perception models.

- This paper employs a multi-layered analytical framework to systematically link augmentation strategies to real-world performance outcomes, thereby providing methodological guidance for future evaluation and research.

- This paper identifies improving data reliability and cross-domain transferability in intelligent transportation systems as a critical future direction, one that is essential for the successful deployment of autonomous driving technologies.

Abstract

1. Introduction

2. Method Overview

3. Materials and Methods

3.1. Image Transformation Data Augmentation
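
As a minimal sketch of classic image-transformation augmentation, the Python below applies a random horizontal flip and a random brightness change to a grayscale image stored as nested lists of 0–255 intensities. The function names, the 0.5 flip probability, and the 0.6–1.4 brightness range are illustrative assumptions, not settings taken from the surveyed works.

```python
import random

def horizontal_flip(image):
    """Mirror an image (a list of rows) from left to right."""
    return [row[::-1] for row in image]

def adjust_brightness(image, factor, max_value=255):
    """Scale grayscale intensities by `factor` and clip to [0, max_value]."""
    return [[min(max_value, max(0, round(p * factor))) for p in row] for row in image]

def augment(image, rng, flip_prob=0.5, brightness_range=(0.6, 1.4), allow_flip=True):
    """Randomly flip (if allowed) and re-light one image; returns a new image."""
    if allow_flip and rng.random() < flip_prob:
        image = horizontal_flip(image)
    factor = rng.uniform(*brightness_range)
    return adjust_brightness(image, factor)

# Usage: two augmented copies of a tiny 2 x 3 "image".
rng = random.Random(0)
sample = [[10, 120, 250], [0, 60, 200]]
copies = [augment(sample, rng) for _ in range(2)]
```

The `allow_flip` switch reflects a traffic-specific caveat: mirroring can change the meaning of direction-dependent signs and road arrows (a left-turn arrow becomes a right-turn arrow), so such classes are usually excluded from flipping or relabeled.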

3.2. Data Augmentation Based on GAN

3.2.1. Related Work

3.2.2. Challenges and Innovation Strategies of GAN

3.3. Data Augmentation Based on Diffusion Model

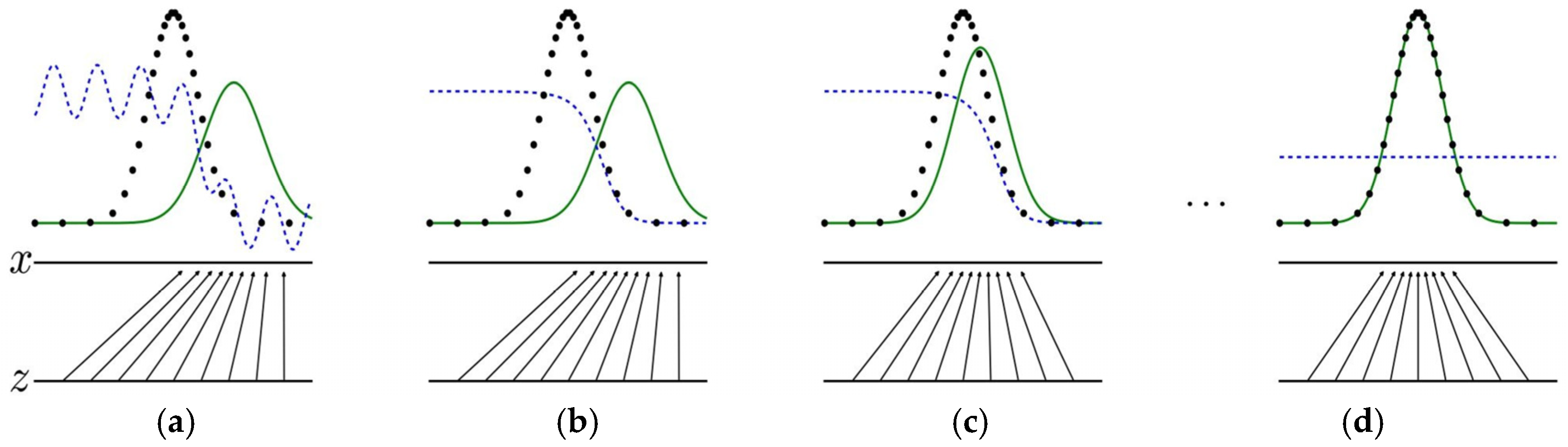

3.3.1. Related Work

3.3.2. Challenges and Innovation Strategies of Diffusion Model

3.4. Composite Data Augmentation

3.5. Selection Criteria for Four Data Augmentation Techniques

- (i) Enhancing visual authenticity in transportation scenarios: Existing traffic datasets often suffer from class imbalance and limited diversity. GANs address this by capturing fine-grained details from the available data, using adversarial training between a generator and a discriminator to synthesize realistic images. They can also generate temporally consistent data with dynamic characteristics, improving continuity and overall model performance. Diffusion models, by contrast, progressively add noise to data and train a neural network to reverse this process, iteratively restoring the image. This allows them to closely match real data distributions and to generate diverse, structurally accurate traffic scene images. Their modular design also supports multimodal inputs and offers strong structural flexibility, enabling applications such as fault detection and sample synthesis.

- (ii) Prevalence in recent literature: A Google search combining “Traffic Scene” with “GAN” and with “Diffusion Model” returned approximately 16,700 and 16,900 results since 2021, respectively. These figures reflect the wide adoption of both techniques in traffic visual scene research and underscore their practical value.

- (iii) Diversity in generation methods: GANs rely on adversarial synthesis with few architectural constraints, which has given rise to a wide variety of model variants. Diffusion models operate through an iterative process of “adding noise and then removing it” that can be guided by multiple conditioning mechanisms. This design offers high flexibility and controllability, making diffusion models particularly powerful for structured generation tasks.
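
The “add noise” half of the diffusion process described above has a closed form: with a noise schedule β_t and ᾱ_t = ∏_{s≤t}(1 − β_s), a noisy sample is x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε with ε ~ N(0, I), and the denoising network is trained to predict ε from (x_t, t). The minimal Python sketch below implements only this forward step, assuming the linear schedule from 10⁻⁴ to 0.02 over 1,000 steps used in the original DDPM work (Ho et al.); the function names are hypothetical and no network is included.

```python
import math
import random

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T spaced linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for all s up to t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def q_sample(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) in one step; returns (x_t, noise)."""
    ab = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, noise)]
    return xt, noise

# Usage: noise a 3-pixel signal (scaled to [-1, 1]) at an early and a late step.
alpha_bars = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]
x_early, _ = q_sample(x0, 0, alpha_bars, rng)
x_late, eps = q_sample(x0, 999, alpha_bars, rng)
```

At t = 0 the sample is almost unchanged, while near the last step it is close to pure Gaussian noise; generation runs the learned reverse process from such noise back to an image.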

3.6. Comparison of the Challenges Between GAN and Diffusion Models

3.7. Multimodal Enhancement in GAN and Diffusion Models

3.8. Summary

4. Datasets and Evaluation Index

4.1. Datasets Related to Traffic Visual Elements

4.1.1. Traffic Sign Datasets

4.1.2. Traffic Light Datasets

4.1.3. Traffic Pedestrian Datasets

4.1.4. Vehicle Datasets

4.1.5. Road Datasets

4.1.6. Traffic Scene Datasets

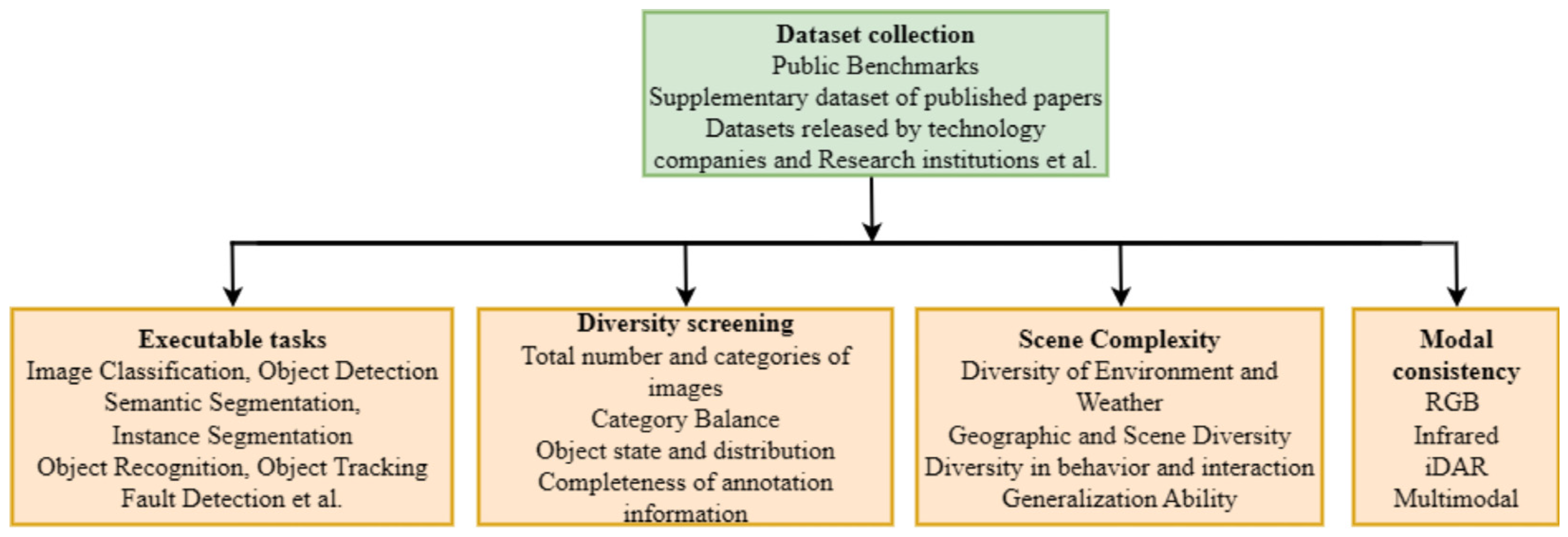

4.1.7. Process for Selecting and Filtering Datasets

4.1.8. The Gaps in Scarce Transportation Scenarios

- (i) Low-illumination environments: Many datasets, including TUD Brussels Pedestrian, Cityscapes, JAAD, Elektra, Udacity, NYC3DCars, GTSRB, and Detection Benchmark, focus on daytime conditions and contain few nighttime or adverse-weather samples. Oxford Road Boundaries incorporates seasonal and lighting variations but still lacks data for heavy rain, dense fog, and full nighttime conditions. Similar gaps exist in the TME Motorway Dataset and RDD 2020. In contrast, CTSDB, TT100K, and STSD cover a broader range of weather and lighting conditions. The Mapillary Traffic Sign Dataset adds seasonal, urban, and rural variability, while NightOwls specializes in nighttime pedestrian detection across multiple European cities.

- (ii) Harsh weather adaptation: Many datasets lack sufficient fog, rain, or snow samples, which limits model robustness in real traffic scenarios; CCTSDB is one example. By comparison, D2-City and ONCE contain extensive samples captured in challenging weather, supporting domain adaptation research. The Bosch Small Traffic Lights Dataset also addresses this issue, offering diverse weather conditions and interference for signal detection tasks.

- (iii) Small, occluded, and complex samples: Datasets such as LISA Traffic Sign, DriveU Traffic Light, CeyMo, and CrowdHuman attempt to address the scarcity of small targets, occlusion, and cluttered scenes. LISA provides detailed annotations, including occlusion. DriveU emphasizes small objects that occupy only a few pixels. CeyMo introduces nighttime, glare, rain, and shadow conditions as well as unique viewing perspectives. CrowdHuman enriches severe occlusion scenarios. Other datasets fill additional gaps, such as Chinese City Parking (parking lot environments) and PANDA (tiny distant objects). These contributions improve coverage, but it remains incomplete and unbalanced.

- (iv) Regional and special scenarios: Certain datasets focus on geographic diversity and context-specific challenges. KUL Belgium Traffic Sign emphasizes sign variation, while RTSD adds extreme lighting, seasonal diversity, and Eastern European symbols absent from mainstream datasets. LISA Traffic Light covers day and night variations and is complemented by LaRA’s traffic signal videos. PTL combines pedestrians and traffic lights. ApolloScape and BDD100K extend coverage to multiple cities, environments, and weather types. Street Scene captures complex backgrounds with vehicles, pedestrians, and natural elements, and Highway Workzones highlights construction zones with cones, signs, and special vehicles.

4.2. Evaluation Index

4.2.1. Inception Score (IS)
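
The Inception Score is IS = exp(E_x[KL(p(y|x) ‖ p(y))]), where p(y|x) is a classifier's predicted class distribution for a generated image and p(y) is its average over all generated images; it rewards predictions that are individually confident and collectively diverse. In practice p(y|x) typically comes from an ImageNet-pretrained Inception network and the score is often averaged over several splits. The minimal sketch below assumes the class-probability vectors are already available; the function name is illustrative.

```python
import math

def inception_score(probs):
    """IS = exp(mean KL(p(y|x) || p(y))) for a list of class-probability vectors."""
    n = len(probs)
    num_classes = len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(p[k] for p in probs) / n for k in range(num_classes)]
    mean_kl = 0.0
    for p in probs:
        # Terms with p_k = 0 contribute nothing (0 * log 0 = 0).
        kl = sum(pk * math.log(pk / marginal[k]) for k, pk in enumerate(p) if pk > 0)
        mean_kl += kl / n
    return math.exp(mean_kl)

# Two confident predictions on different classes.
score = inception_score([[1.0, 0.0], [0.0, 1.0]])
```

Identical predictions for every image give IS = 1, while confident predictions spread evenly over K classes give IS = K.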

4.2.2. Fréchet Inception Distance (FID)
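
For reference, FID as defined by Heusel et al. fits a Gaussian to the Inception-v3 features (commonly the 2048-dimensional pooling features) of real images, with mean μ_r and covariance Σ_r, and of generated images, with μ_g and Σ_g, and computes the Fréchet distance between the two Gaussians; lower values indicate closer distributions:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

In one dimension, with standard deviations σ_r and σ_g, the trace term reduces to σ_r² + σ_g² − 2σ_rσ_g = (σ_r − σ_g)², so FID becomes (μ_r − μ_g)² + (σ_r − σ_g)²: it penalizes both shifted means and mismatched spreads.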

4.2.3. Kernel Inception Distance (KID)
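
KID, proposed by Bińkowski et al., replaces the Gaussian assumption of FID with an unbiased estimate of the squared maximum mean discrepancy (MMD) between real and generated features, using the cubic polynomial kernel k(x, y) = (xᵀy/d + 1)³, where d is the feature dimension. The pure-Python sketch below assumes the feature vectors have already been extracted; implementations typically also average the estimate over several random subsets, which is omitted here, and the function names are illustrative.

```python
def polynomial_kernel(x, y):
    """Cubic polynomial kernel k(x, y) = (x.y / d + 1)^3."""
    d = len(x)
    return (sum(a * b for a, b in zip(x, y)) / d + 1.0) ** 3

def kernel_inception_distance(real_feats, fake_feats):
    """Unbiased MMD^2 estimate between two sets of feature vectors."""
    m, n = len(real_feats), len(fake_feats)
    # Within-set sums skip i == j so that the estimate stays unbiased.
    k_rr = sum(polynomial_kernel(real_feats[i], real_feats[j])
               for i in range(m) for j in range(m) if i != j)
    k_ff = sum(polynomial_kernel(fake_feats[i], fake_feats[j])
               for i in range(n) for j in range(n) if i != j)
    k_rf = sum(polynomial_kernel(x, y) for x in real_feats for y in fake_feats)
    return k_rr / (m * (m - 1)) + k_ff / (n * (n - 1)) - 2.0 * k_rf / (m * n)

# Toy one-dimensional "features" for two clearly separated sets.
real = [[0.0], [1.0]]
fake = [[10.0], [11.0]]
kid_value = kernel_inception_distance(real, fake)
```

Because the estimator is unbiased, it can come out slightly negative when both sets are drawn from the same distribution; unbiasedness at small sample sizes is one motivation given for KID over FID.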

4.2.4. Jensen–Shannon Divergence
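
Jensen–Shannon divergence compares two discrete distributions P and Q through their midpoint M = (P + Q)/2: JSD(P‖Q) = ½KL(P‖M) + ½KL(Q‖M). Unlike KL divergence it is symmetric and, with base-2 logarithms, bounded in [0, 1]. The sketch below assumes P and Q are already-normalized histograms, for example of pixel intensities for real versus generated images; that choice of input and the function names are illustrative.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence in bits, bounded in [0, 1]."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

real_hist = [0.1, 0.4, 0.5]
fake_hist = [0.2, 0.3, 0.5]
jsd_value = js_divergence(real_hist, fake_hist)
```

Whenever p_i > 0, the midpoint m_i is also positive, so the KL terms never divide by zero even when the two histograms have disjoint support.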

4.2.5. Peak Signal-to-Noise Ratio (PSNR)
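
PSNR is defined from the mean squared error (MSE) between a reference image and a test image as PSNR = 10 · log₁₀(MAX² / MSE), where MAX is the largest possible pixel value (255 for 8-bit images); higher is better, and identical images give an infinite PSNR. A minimal sketch for equally sized grayscale images stored as nested lists, with an illustrative function name:

```python
import math

def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB for two equally sized grayscale images."""
    ref = [p for row in reference for p in row]
    dist = [p for row in distorted for p in row]
    mse = sum((a - b) ** 2 for a, b in zip(ref, dist)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

clean = [[0, 0], [0, 0]]
noisy = [[0, 0], [0, 255]]
psnr_db = psnr(clean, noisy)
```

Here the MSE is 255²/4, so the PSNR is 10 · log₁₀(4) ≈ 6.02 dB. Because PSNR is purely pixel-wise, it checks reconstruction fidelity but says little about whether a generated traffic scene looks realistic.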

4.2.6. Structural SIMilarity (SSIM)
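
SSIM compares luminance, contrast, and structure: SSIM(x, y) = ((2μ_xμ_y + C₁)(2σ_xy + C₂)) / ((μ_x² + μ_y² + C₁)(σ_x² + σ_y² + C₂)), with C₁ = (K₁L)², C₂ = (K₂L)², K₁ = 0.01, K₂ = 0.03, and L the dynamic range of the pixel values. Wang et al. evaluate this over local Gaussian-weighted windows and average the resulting map; the sketch below is a deliberately simplified single-window (global) version that applies the formula once to the whole image, so its values will differ from standard SSIM implementations.

```python
def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """SSIM formula applied once over whole images (no sliding window)."""
    a = [p for row in x for p in row]
    b = [p for row in y for p in row]
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((p - mu_a) ** 2 for p in a) / (n - 1)
    var_b = sum((q - mu_b) ** 2 for q in b) / (n - 1)
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / (n - 1)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))

img = [[0, 64], [128, 255]]
inverted = [[255 - p for p in row] for row in img]
```

Comparing `img` with itself gives 1, while the intensity-inverted copy has negative covariance with the original and therefore a negative score.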

4.2.7. Feature Similarity Index (FSIM)

4.2.8. Learned Perceptual Image Patch Similarity (LPIPS)

4.2.9. Gradient Magnitude Similarity Deviation (GMSD)
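
GMSD computes gradient magnitudes of the reference and distorted images with 3 × 3 Prewitt filters, forms a per-pixel gradient magnitude similarity GMS = (2 · m_r · m_d + c) / (m_r² + m_d² + c), and reports the standard deviation of the GMS map; lower is better, and identical images score 0. The sketch below is simplified under stated assumptions: it uses interior pixels only, skips the downsampling step of the original method, and uses c = 170 for 0–255 images, which is the constant we understand the authors to recommend. Function names are illustrative.

```python
import math

def prewitt_magnitude(img):
    """Gradient magnitude from 3x3 Prewitt filters (scaled by 1/3) on interior pixels."""
    h, w = len(img), len(img[0])
    mags = []
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = sum(img[r + dr][c + 1] - img[r + dr][c - 1] for dr in (-1, 0, 1)) / 3.0
            gy = sum(img[r + 1][c + dc] - img[r - 1][c + dc] for dc in (-1, 0, 1)) / 3.0
            mags.append(math.sqrt(gx * gx + gy * gy))
    return mags

def gmsd(reference, distorted, c=170.0):
    """Standard deviation of the gradient magnitude similarity (GMS) map."""
    m_r = prewitt_magnitude(reference)
    m_d = prewitt_magnitude(distorted)
    gms = [(2 * a * b + c) / (a * a + b * b + c) for a, b in zip(m_r, m_d)]
    mean_gms = sum(gms) / len(gms)
    return math.sqrt(sum((g - mean_gms) ** 2 for g in gms) / len(gms))

# A vertical edge that the "distorted" image has lost entirely.
reference = [[0, 0, 0, 255] for _ in range(4)]
flat = [[0, 0, 0, 0] for _ in range(4)]
gmsd_value = gmsd(reference, flat)
```

Pooling with the standard deviation rather than the mean is the key design choice: distortions that damage some edges much more than others spread out the GMS map and therefore raise the score.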

4.2.10. Deep Image Structure and Texture Similarity (DISTS)

4.2.11. Evaluation Metrics Selection and Analysis of Perceptual and Functional Correlation

4.3. Summary

5. Discussion

5.1. Feature Extraction and Semantic Understanding Enhancement

5.2. Physical Barrier Information Dataset

5.3. Data Augmentation Strategy for Integrating Diversity and Authenticity of Traffic Visual Scenes

5.4. Generating Efficiency and Computational Cost

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AlexNet | Alex Krizhevsky Neural Network |
| CycleGAN | Cycle-Consistent Generative Adversarial Network |
| CTSD | Chinese Traffic Sign Dataset |
| GoogLeNet | Google Inception Network |
| Leaky ReLU | Leaky Rectified Linear Unit |
| MCGAN | Multi-Condition Generative Adversarial Network |
| R-CNN | Region-based Convolutional Neural Network |
| ResNet50 | Residual Network with 50 Layers |
| ReLU | Rectified Linear Unit |
| SGD | Stochastic Gradient Descent |
| VGG19 | Visual Geometry Group Network (19 layers) |
| WGAN | Wasserstein Generative Adversarial Network |

References

- Ji, B.; Xu, J.; Liu, Y.; Fan, P.; Wang, M. Improved YOLOv8 for Small Traffic Sign Detection under Complex Environmental Conditions. Frankl. Open 2024, 8, 100167.

- Sun, S.Y.; Hsu, T.H.; Huang, C.Y.; Hsieh, C.H.; Tsai, C.W. A Data Augmentation System for Traffic Violation Video Generation Based on Diffusion Model. Procedia Comput. Sci. 2024, 251, 83–90.

- Benfaress, I.; Bouhoute, A. Advancing Traffic Sign Recognition: Explainable Deep CNN for Enhanced Robustness in Adverse Environments. Computers 2025, 14, 88.

- Bayer, M.; Kaufhold, M.A.; Buchhold, B.; Keller, M.; Dallmeyer, J.; Reuter, C. Data Augmentation in Natural Language Processing: A Novel Text Generation Approach for Long and Short Text Classifiers. Int. J. Mach. Learn. Cybern. 2023, 14, 135–150.

- Azfar, T.; Li, J.; Yu, H.; Cheu, R.L.; Lv, Y.; Ke, R. Deep Learning-Based Computer Vision Methods for Complex Traffic Environments Perception: A Review. Data Sci. Transp. 2024, 6, 1.

- Zhang, J.; Zou, X.; Kuang, L.D.; Wang, J.; Sherratt, R.S.; Yu, X. CCTSDB 2021: A More Comprehensive Traffic Sign Detection Benchmark. Hum.-Centric Comput. Inf. Sci. 2022, 12, 23.

- Zhu, Y.; Yan, W.Q. Traffic Sign Recognition Based on Deep Learning. Multimed. Tools Appl. 2022, 81, 17779–17791.

- Zhang, J.; Lv, Y.; Tao, J.; Huang, F.; Zhang, J. A Robust Real-Time Anchor-Free Traffic Sign Detector with One-Level Feature. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 1437–1451.

- Yang, L.; He, Z.; Zhao, X.; Fang, S.; Yuan, J.; He, Y.; Li, S.; Liu, S. A Deep Learning Method for Traffic Light Status Recognition. J. Intell. Connect. Veh. 2023, 6, 173–182.

- Moumen, I.; Abouchabaka, J.; Rafalia, N. Adaptive Traffic Lights Based on Traffic Flow Prediction Using Machine Learning Models. Int. J. Power Electron. Drive Syst. 2023, 13, 5813–5823.

- Zhu, R.; Li, L.; Wu, S.; Lv, P.; Li, Y.; Xu, M. Multi-Agent Broad Reinforcement Learning for Intelligent Traffic Light Control. Inf. Sci. 2023, 619, 509–525.

- Yazdani, M.; Sarvi, M.; Asadi Bagloee, S.; Nassir, N.; Price, J.; Parineh, H. Intelligent Vehicle Pedestrian Light (IVPL): A Deep Reinforcement Learning Approach for Traffic Signal Control. Transp. Res. Part C Emerg. Technol. 2023, 149, 103991.

- Liu, X.; Lin, Y. YOLO-GW: Quickly and Accurately Detecting Pedestrians in a Foggy Traffic Environment. Sensors 2023, 23, 5539.

- Liu, W.; Qiao, X.; Zhao, C.; Deng, T.; Yan, F. VP-YOLO: A Human Visual Perception-Inspired Robust Vehicle-Pedestrian Detection Model for Complex Traffic Scenarios. Expert Syst. Appl. 2025, 274, 126837.

- Li, A.; Sun, S.; Zhang, Z.; Feng, M.; Wu, C.; Li, W. A Multi-Scale Traffic Object Detection Algorithm for Road Scenes Based on Improved YOLOv5. Electronics 2023, 12, 878.

- Lai, H.; Chen, L.; Liu, W.; Yan, Z.; Ye, S. STC-YOLO: Small Object Detection Network for Traffic Signs in Complex Environments. Sensors 2023, 23, 5307.

- Lin, H.-Y.; Chen, Y.-C. Traffic Light Detection Using Ensemble Learning by Boosting with Color-Based Data Augmentation. Int. J. Transp. Sci. Technol. 2024.

- Li, K.; Dai, Z.; Wang, X.; Song, Y.; Jeon, G. GAN-Based Controllable Image Data Augmentation in Low-Visibility Conditions for Improved Roadside Traffic Perception. IEEE Trans. Consum. Electron. 2024, 70, 6174–6188.

- Zhang, C.; Li, G.; Zhang, Z.; Shao, R.; Li, M.; Han, D.; Zhou, M. AAL-Net: A Lightweight Detection Method for Road Surface Defects Based on Attention and Data Augmentation. Appl. Sci. 2023, 13, 1435.

- Dineley, A.; Natalia, F.; Sudirman, S. Data Augmentation for Occlusion-Robust Traffic Sign Recognition Using Deep Learning. ICIC Express Lett. Part B Appl. 2024, 15, 381–388.

- Shi, J.; Rao, H.; Jing, Q.; Wen, Z.; Jia, G. FlexibleCP: A Data Augmentation Strategy for Traffic Sign Detection. IET Image Process. 2024, 18, 3667–3680.

- Li, N.; Song, F.; Zhang, Y.; Liang, P.; Cheng, E. Traffic Context Aware Data Augmentation for Rare Object Detection in Autonomous Driving. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 4548–4554.

- Alsiyeu, U.; Duisebekov, Z. Enhancing Traffic Sign Recognition with Tailored Data Augmentation: Addressing Class Imbalance and Instance Scarcity. arXiv 2024, arXiv:2406.03576.

- Jilani, U.; Asif, M.; Rashid, M.; Siddique, A.A.; Talha, S.M.U.; Aamir, M. Traffic Congestion Classification Using GAN-Based Synthetic Data Augmentation and a Novel 5-Layer Convolutional Neural Network Model. Electronics 2022, 11, 2290.

- Chen, N.; Xu, Z.; Liu, Z.; Chen, Y.; Miao, Y.; Li, Q.; Hou, Y.; Wang, L. Data Augmentation and Intelligent Recognition in Pavement Texture Using a Deep Learning. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25427–25436.

- Hassan, E.T.; Li, N.; Ren, L. Semantic Consistency: The Key to Improve Traffic Light Detection with Data Augmentation. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1734–1739.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. arXiv 2014, arXiv:1406.2661.

- Zhu, D.; Xia, S.; Zhao, J.; Zhou, Y.; Jian, M.; Niu, Q.; Yao, R.; Chen, Y. Diverse Sample Generation with Multi-Branch Conditional Generative Adversarial Network for Remote Sensing Objects Detection. Neurocomputing 2020, 381, 40–51.

- Dewi, C.; Chen, R.C.; Liu, Y.T.; Jiang, X.; Hartomo, K.D. Yolo V4 for Advanced Traffic Sign Recognition with Synthetic Training Data Generated by Various GAN. IEEE Access 2021, 9, 97228–97242.

- Wang, D.; Ma, X. TLGAN: Conditional Style-Based Traffic Light Generation with Generative Adversarial Networks. In Proceedings of the 2021 International Conference on High Performance Big Data and Intelligent Systems (HPBD&IS), Macau, China, 5–7 December 2021; pp. 192–195.

- Rajagopal, B.G.; Kumar, M.; Alshehri, A.H.; Alanazi, F.; Deifalla, A.F.; Yosri, A.M.; Azam, A. A Hybrid Cycle GAN-Based Lightweight Road Perception Pipeline for Road Dataset Generation for Urban Mobility. PLoS ONE 2023, 18, e0293978.

- Yang, H.; Zhang, S.; Huang, D.; Wu, X.; Zhu, H.; He, T.; Tang, S.; Zhao, H.; Qiu, Q.; Lin, B.; et al. UniPAD: A Universal Pre-Training Paradigm for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 16–22 June 2024; pp. 15238–15250.

- Gou, Y.; Li, M.; Song, Y.; He, Y.; Wang, L. Multi-Feature Contrastive Learning for Unpaired Image-to-Image Translation. Complex Intell. Syst. 2023, 9, 4111–4122.

- Eskandar, G.; Farag, Y.; Yenamandra, T.; Cremers, D.; Guirguis, K.; Yang, B. Urban-StyleGAN: Learning to Generate and Manipulate Images of Urban Scenes. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV 2023), Anchorage, AK, USA, 4–7 June 2023; pp. 1–8.

- Nichol, A.; Dhariwal, P. Improved Denoising Diffusion Probabilistic Models. arXiv 2021, arXiv:2102.09672.

- Lu, J.; Wong, K.; Zhang, C.; Suo, S.; Urtasun, R. SceneControl: Diffusion for Controllable Traffic Scene Generation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA 2024), Yokohama, Japan, 13–17 May 2024; pp. 16908–16914.

- Tan, J.; Yu, H.; Huang, J.; Yang, Z.; Zhao, F. DiffLoss: Unleashing Diffusion Model as Constraint for Training Image Restoration Network. In Proceedings of the 17th Asian Conference on Computer Vision (ACCV 2024), Hanoi, Vietnam, 8–12 December 2024; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2024; Volume 15475, pp. 105–123.

- Mishra, S.; Mishra, M.; Kim, T.; Har, D. Road Redesign Technique Achieving Enhanced Road Safety by Inpainting with a Diffusion Model. arXiv 2023, arXiv:2302.07440.

- Şah, M.; Direkoğlu, C. LightWeight Deep Convolutional Neural Networks for Vehicle Re-Identification Using Diffusion-Based Image Masking. In Proceedings of the HORA 2021—3rd International Congress on Human-Computer Interaction, Optimization and Robotic Applications, Ankara, Turkey, 11–13 June 2021; pp. 1–6.

- Lu, B.; Miao, Q.; Dai, X.; Lv, Y. VCrash: A Closed-Loop Traffic Crash Augmentation with Pose Controllable Pedestrian. In Proceedings of the IEEE Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; pp. 5682–5687.

- Nie, Y.; Chen, Y.; Miao, Q.; Lv, Y. Data Augmentation for Pedestrians in Corner Case: A Pose Distribution Control Approach. In Proceedings of the IEEE Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; pp. 5712–5717.

- Wang, H.; Guo, L.; Yang, D.; Zhang, X. Data Augmentation Method for Pedestrian Dress Recognition in Road Monitoring and Pedestrian Multiple Information Recognition Model. Information 2023, 14, 125.

- Torrens, P.M.; Gu, S. Inverse Augmentation: Transposing Real People into Pedestrian Models. Comput. Environ. Urban Syst. 2023, 100, 101923.

- Bosquet, B.; Cores, D.; Seidenari, L.; Brea, V.M.; Mucientes, M.; Del Bimbo, A. A Full Data Augmentation Pipeline for Small Object Detection Based on Generative Adversarial Networks. Pattern Recognit. 2023, 133, 108998.

- Konushin, A.S.; Faizov, B.V.; Shakhuro, V.I. Road Images Augmentation with Synthetic Traffic Signs Using Neural Networks. Comput. Opt. 2021, 45, 736–748.

- Lewy, D.; Mańdziuk, J. An Overview of Mixing Augmentation Methods and Augmentation Strategies. Artif. Intell. Rev. 2023, 56, 2111–2169.

- Nayak, A.A.; Venugopala, P.S.; Ashwini, B. A Systematic Review on Generative Adversarial Network (GAN): Challenges and Future Directions. Arch. Comput. Methods Eng. 2024, 31, 4739–4772.

- Al Maawali, R.; AL-Shidi, A. Optimization Algorithms in Generative AI for Enhanced GAN Stability and Performance. Appl. Comput. J. 2024, 359–371.

- Welfert, M.; Kurri, G.R.; Otstot, K.; Sankar, L. Addressing GAN Training Instabilities via Tunable Classification Losses. IEEE J. Sel. Areas Inf. Theory 2024, 5, 534–553.

- Zhou, B.; Zhou, Q.; Li, Z. Addressing Data Imbalance in Crash Data: Evaluating Generative Adversarial Network’s Efficacy Against Conventional Methods. IEEE Access 2025, 13, 2929–2944.

- Peng, M.; Chen, K.; Guo, X.; Zhang, Q.; Zhong, H.; Zhu, M.; Yang, H. Diffusion Models for Intelligent Transportation Systems: A Survey. arXiv 2025, arXiv:2409.15816.

- Ma, Z.; Zhang, Y.; Jia, G.; Zhao, L.; Ma, Y.; Ma, M.; Liu, G.; Zhang, K.; Ding, N.; Li, J.; et al. Efficient Diffusion Models: A Comprehensive Survey from Principles to Practices. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 7506–7525.

- Shen, W.; Wei, Z.; Ren, Q.; Zhang, B.; Huang, S.; Fan, J.; Zhang, Q. Interpretable Rotation-Equivariant Quaternion Neural Networks for 3D Point Cloud Processing. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 3290–3304.

- Fang, H.; Han, B.; Zhang, S.; Zhou, S.; Hu, C.; Ye, W.-M. Data Augmentation for Object Detection via Controllable Diffusion Models. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 1257–1266.

- China Chinese Traffic Sign Database (CTSDB). Available online: https://nlpr.ia.ac.cn/pal/trafficdata/recognition.html (accessed on 1 March 2025).

- Zhang, J.; Jin, X.; Sun, J.; Wang, J.; Sangaiah, A.K. Spatial and Semantic Convolutional Features for Robust Visual Object Tracking. Multimed. Tools Appl. 2020, 79, 15095–15115.

- Zhu, Z.; Liang, D.; Zhang, S.; Huang, X.; Li, B.; Hu, S. Traffic-Sign Detection and Classification in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2110–2118.

- Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. Man vs. Computer: Benchmarking Machine Learning Algorithms for Traffic Sign Recognition. Neural Netw. 2012, 32, 323–332.

- Møgelmose, A.; Trivedi, M.M.; Moeslund, T.B. Vision-Based Traffic Sign Detection and Analysis for Intelligent Driver Assistance Systems: Perspectives and Survey. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1484–1497.

- Ertler, C.; Mislej, J.; Ollmann, T.; Porzi, L.; Neuhold, G.; Kuang, Y. The Mapillary Traffic Sign Dataset for Detection and Classification on a Global Scale. In Proceedings of the 16th European Conference on Computer Vision (ECCV 2020), Glasgow, UK, 23–28 August 2020; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2020; Volume 12368, pp. 68–84.

- Timofte, R.; Zimmermann, K.; Van Gool, L. Multi-View Traffic Sign Detection, Recognition, and 3D Localisation. Mach. Vis. Appl. 2014, 25, 633–647.

- Shakhuro, V.I.; Konushin, A.S. Russian Traffic Sign Images Dataset. Comput. Opt. 2016, 40, 294–300.

- Zhang, Y.; Wang, Z.; Qi, Y.; Liu, J.; Yang, J. CTSD: A Dataset for Traffic Sign Recognition in Complex Real-World Images. In Proceedings of the VCIP 2018—IEEE International Conference on Visual Communications and Image Processing, Taichung, Taiwan, 9–12 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.

- Swedish Transport Research Institute Swedish Traffic Sign Dataset (STSD). Available online: https://www.selectdataset.com/dataset/2bf39636f1fbe5cd1ac034c6250c9ade (accessed on 7 March 2025).

- Jensen, M.B.; Philipsen, M.P.; Møgelmose, A.; Moeslund, T.B.; Trivedi, M.M. Vision for Looking at Traffic Lights: Issues, Survey, and Perspectives. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1800–1815.

- Behrendt, K.; Novak, L.; Botros, R. A Deep Learning Approach to Traffic Lights: Detection, Tracking, and Classification. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1370–1377.

- Fregin, A.; Müller, J.; Krebel, U.; Dietmayer, K. The DriveU Traffic Light Dataset: Introduction and Comparison with Existing Datasets. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 3376–3383.

- De Charette, R.; Nashashibi, F. Real Time Visual Traffic Lights Recognition Based on Spot Light Detection and Adaptive Traffic Lights Templates. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV 2009), Xi’an, China, 3–5 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 358–363.

- Yu, S.; Lee, H.; Kim, J. LYTNet: A Convolutional Neural Network for Real-Time Pedestrian Traffic Lights and Zebra Crossing Recognition for the Visually Impaired. In Proceedings of the 18th International Conference on Computer Analysis of Images and Patterns (CAIP 2019), Salerno, Italy, 3–5 September 2019; Volume 11678, pp. 259–270.

- Dollár, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian Detection: An Evaluation of the State of the Art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743–761.

- Wojek, C.; Walk, S.; Schiele, B. Multi-Cue Onboard Pedestrian Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 794–801.

- Che, Z.; Li, G.; Li, T.; Jiang, B.; Shi, X.; Zhang, X.; Lu, Y.; Wu, G.; Liu, Y.; Ye, J. D2-City: A Large-Scale Dashcam Video Dataset of Diverse Traffic Scenarios. arXiv 2019, arXiv:1904.01975.

- Zhang, S.; Benenson, R.; Schiele, B. CityPersons: A Diverse Dataset for Pedestrian Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3213–3221.

- Shao, S.; Zhao, Z.; Li, B.; Xiao, T.; Yu, G.; Zhang, X.; Sun, J. CrowdHuman: A Benchmark for Detecting Human in a Crowd. arXiv 2018, arXiv:1805.00123.

- Neumann, L.; Karg, M.; Zhang, S.; Scharfenberger, C.; Piegert, E.; Mistr, S.; Prokofyeva, O.; Thiel, R.; Vedaldi, A.; Zisserman, A.; et al. NightOwls: A Pedestrians at Night Dataset. In Proceedings of the 14th Asian Conference on Computer Vision (ACCV 2018), Perth, Australia, 2–6 December 2018; Volume 11361, pp. 691–705.

- Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Are They Going to Cross? A Benchmark Dataset and Baseline for Pedestrian Crosswalk Behavior. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 206–213.

- Socarrás, Y.; Serrat, J.; López, A.M.; Toledo, R. Adapting Pedestrian Detection from Synthetic to Far Infrared Images. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Sydney, Australia, 1–8 December 2013; pp. 1–7.

- Wang, X.; Zhang, X.; Zhu, Y.; Guo, Y.; Yuan, X.; Xiang, L.; Wang, Z.; Ding, G.; Brady, D.; Dai, Q.; et al. PANDA: A Gigapixel-Level Human-Centric Video Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3265–3275.

- Xu, Z.; Yang, W.; Meng, A.; Lu, N.; Huang, H.; Ying, C.; Huang, L. Towards End-to-End License Plate Detection and Recognition: A Large Dataset and Baseline. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 255–271.

- Udacity Udacity. Available online: https://github.com/udacity/self-driving-car/tree/master/annotations (accessed on 9 March 2025).

- Mao, J.; Niu, M.; Jiang, C.; Liang, H.; Chen, J.; Liang, X.; Li, Y.; Ye, C.; Zhang, W.; Li, Z.; et al. One Million Scenes for Autonomous Driving: ONCE Dataset. arXiv 2021, arXiv:2106.11037.

- Matzen, K.; Snavely, N. NYC3DCars: A Dataset of 3D Vehicles in Geographic Context. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013; pp. 761–768.

- Yang, L.; Luo, P.; Loy, C.C.; Tang, X. A Large-Scale Car Dataset for Fine-Grained Categorization and Verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3973–3981.

- Seo, Y.W.; Lee, J.; Zhang, W.; Wettergreen, D. Recognition of Highway Workzones for Reliable Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2015, 16, 708–718.

- Caraffi, C.; Vojíř, T.; Trefný, J.; Šochman, J.; Matas, J. A System for Real-Time Detection and Tracking of Vehicles from a Single Car-Mounted Camera. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Anchorage, AK, USA, 16–19 September 2012; pp. 975–982.

- Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Sekimoto, Y. RDD2022: A Multi-National Image Dataset for Automatic Road Damage Detection. Geosci. Data J. 2024, 11, 846–862.

- Jayasinghe, O.; Hemachandra, S.; Anhettigama, D.; Kariyawasam, S.; Rodrigo, R.; Jayasekara, P. CeyMo: See More on Roads—A Novel Benchmark Dataset for Road Marking Detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 3381–3390.

- Suleymanov, T.; Gadd, M.; De Martini, D.; Newman, P. The Oxford Road Boundaries Dataset. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 222–227.

- Huang, X.; Cheng, X.; Geng, Q.; Cao, B.; Zhou, D.; Wang, P.; Lin, Y.; Yang, R. The ApolloScape Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 954–960.

- Xue, J.; Fang, J.; Li, T.; Zhang, B.; Zhang, P.; Ye, Z.; Dou, J. BLVD: Building a Large-Scale 5D Semantics Benchmark for Autonomous Driving. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6685–6691.

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2633–2642.

- Han, J.; Liang, X.; Xu, H.; Chen, K.; Hong, L.; Mao, J.; Ye, C.; Zhang, W.; Li, Z.; Liang, X.; et al. SODA10M: A Large-Scale 2D Self/Semi-Supervised Object Detection Dataset for Autonomous Driving. arXiv 2021, arXiv:2106.11118.

- Ramachandra, B.; Jones, M.J. Street Scene: A New Dataset and Evaluation Protocol for Video Anomaly Detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 2558–2567.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O.; et al. nuScenes: A Multimodal Dataset for Autonomous Driving. arXiv 2020, arXiv:1903.11027.

- Chang, M.-F.; Lambert, J.; Sangkloy, P.; Singh, J.; Bak, S.; Hartnett, A.; Wang, D.; Carr, P.; Lucey, S.; Ramanan, D.; et al. Argoverse: 3D Tracking and Forecasting With Rich Maps. arXiv 2019, arXiv:1911.02620.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. arXiv 2016, arXiv:1606.03498.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017.

- Bińkowski, M.; Sutherland, D.J.; Arbel, M.; Gretton, A. Demystifying MMD GANs. arXiv 2018, arXiv:1801.01401.

- Lin, J. Divergence Measures Based on the Shannon Entropy. IEEE Trans. Inf. Theory 1991, 37, 145–151.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

- Xue, W.; Zhang, L.; Mou, X.; Bovik, A.C. Gradient Magnitude Similarity Deviation: A Highly Efficient Perceptual Image Quality Index. IEEE Trans. Image Process. 2014, 23, 684–695.

- Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Image Quality Assessment: Unifying Structure and Texture Similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2567–2581. [Google Scholar] [CrossRef] [PubMed]

- Assion, F.; Gressner, F.; Augustine, N.; Klemenc, J.; Hammam, A.; Krattinger, A.; Trittenbach, H.; Philippsen, A.; Riemer, S. A-BDD: Leveraging Data Augmentations for Safe Autonomous Driving in Adverse Weather and Lighting. arXiv 2024, arXiv:2408.06071. [Google Scholar] [CrossRef]

- Zhu, J.; Ortiz, J.; Sun, Y. Decoupled Deep Reinforcement Learning with Sensor Fusion and Imitation Learning for Autonomous Driving Optimization. In Proceedings of the 6th International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 November 2024; pp. 306–310. [Google Scholar] [CrossRef]

| Method Category | Specific Method | Effect | Deficiency |
|---|---|---|---|
| Geometric transformation | Rotation | Simulates objects viewed from different angles. | May lose edge information and requires filling the exposed background. |
| | Scaling | Improves recognition of objects of different sizes. | May lose detail and introduce interpolation noise, blurring the image. |
| | Translation | Simulates objects at different locations. | Offsets object positions, which can corrupt position information. |
| | Flipping | Helps the model learn object characteristics in different orientations. | Changes the object's contextual information. |
| | Random cropping | Simulates different views of objects. | Important information may be lost. |
| | Affine transformation | Generates diverse global samples. | High computational complexity. |
| Color transformation | Brightness | Simulates changes in illumination. | Can cause brightness imbalance and loss of detail. |
| | Contrast | Makes key features easier for the model to extract. | Excessive enhancement may amplify noise and lose information. |
| | Saturation | Simulates color appearance under different lighting conditions. | Prone to color distortion. |
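The geometric and color rows above differ in how they interact with annotations: geometric transforms move pixels, so detection labels must move with them (the position problem noted for translation), whereas color transforms leave labels untouched. The following minimal sketch illustrates this in plain Python under simplifying assumptions: a grayscale image is a list of rows of integers, and a box is `(x_min, y_min, x_max, y_max)` with exclusive maximum coordinates. The helpers `hflip`, `translate`, and `adjust_brightness` are hypothetical names for illustration, not an API from the surveyed works.

```python
# Assumed representations (illustrative only): a grayscale image is a list of
# rows of ints in [0, 255]; a box is (x_min, y_min, x_max, y_max) in pixels,
# with x_max and y_max exclusive.

def hflip(image, boxes):
    """Mirror the image left-right and mirror each box to match."""
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    new_boxes = [(width - x1, y0, width - x0, y1) for (x0, y0, x1, y1) in boxes]
    return flipped, new_boxes


def translate(image, boxes, dx, dy, fill=0):
    """Shift content by (dx, dy) pixels; vacated pixels get `fill`.

    Boxes are shifted and clipped to the frame; boxes pushed entirely out
    of the frame are dropped so that labels stay consistent with pixels.
    """
    height, width = len(image), len(image[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            sx, sy = x - dx, y - dy  # source pixel that lands at (x, y)
            if 0 <= sx < width and 0 <= sy < height:
                out[y][x] = image[sy][sx]
    new_boxes = []
    for (x0, y0, x1, y1) in boxes:
        nx0, ny0 = max(0, x0 + dx), max(0, y0 + dy)
        nx1, ny1 = min(width, x1 + dx), min(height, y1 + dy)
        if nx1 > nx0 and ny1 > ny0:
            new_boxes.append((nx0, ny0, nx1, ny1))
    return out, new_boxes


def adjust_brightness(image, factor):
    """Scale every intensity by `factor` and clip to [0, 255]; boxes are unaffected."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]
```

For example, flipping a 4-pixel-wide image moves a box covering the left-most column, `(0, 0, 1, 2)`, to `(3, 0, 4, 2)`. For traffic signs, a horizontal flip can also change meaning (a left-turn arrow becomes a right-turn arrow), which is one concrete case of the context problem listed for flipping; such classes may need to be excluded from flipping or relabeled.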

| Method | Effect | Deficiency |
|---|---|---|
| Shadow or reflection | Adds realistic light occlusion. | Overly large shadows or reflections make object features difficult to extract. |
| | Increases data volume. | Can introduce semantic errors. |
| Simulating different weather and lighting conditions | Improves the model's adaptability to complex environments. | Lacks realism. |
| Noise addition | Improves model robustness. | Distorts the image. |
| Blurring | Improves recognition of images with different levels of sharpness. | Can impair learning of key features. |
| Hybrid augmentation | Enriches the data. | High computational cost; mixed images carry blended, ambiguous labels. |
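The noise-addition and hybrid-augmentation rows can be made concrete with a short sketch. Below, `salt_and_pepper` sets a random fraction of pixels to 0 or 255 (the noise type used in CCTSDB), while `mixup` and `cutmix` follow the usual Mixup and CutMix formulations, in which labels are mixed in the same proportion as pixels; this blending is the label ambiguity noted for hybrid augmentation. Assumptions: images are equal-sized lists of rows, labels are one-hot lists, and all function names are illustrative. In the original methods the mixing ratio `lam` is drawn from a Beta distribution; here it is passed in explicitly, and the CutMix patch is kept fully inside the image for simplicity.

```python
import random

# Assumed representations (illustrative only): images are equal-sized lists of
# rows of numbers; labels are one-hot lists of floats.

def salt_and_pepper(image, amount, rng=random):
    """Set roughly `amount` of the pixels to 0 (pepper) or 255 (salt)."""
    out = [row[:] for row in image]
    for row in out:
        for x in range(len(row)):
            r = rng.random()
            if r < amount / 2:
                row[x] = 0
            elif r < amount:
                row[x] = 255
    return out


def mixup(img_a, img_b, label_a, label_b, lam):
    """Mixup: blend two images and their labels with the same weight `lam`."""
    mixed = [[lam * pa + (1 - lam) * pb for pa, pb in zip(ra, rb)]
             for ra, rb in zip(img_a, img_b)]
    label = [lam * la + (1 - lam) * lb for la, lb in zip(label_a, label_b)]
    return mixed, label


def cutmix(img_a, img_b, label_a, label_b, lam, rng=random):
    """CutMix (simplified): paste a patch of img_b into img_a.

    The patch covers about (1 - lam) of the image and stays fully inside
    the frame; labels are mixed by the area actually pasted.
    """
    h, w = len(img_a), len(img_a[0])
    cut_h = int(h * (1 - lam) ** 0.5)
    cut_w = int(w * (1 - lam) ** 0.5)
    y0 = rng.randint(0, h - cut_h)
    x0 = rng.randint(0, w - cut_w)
    out = [row[:] for row in img_a]
    for y in range(y0, y0 + cut_h):
        for x in range(x0, x0 + cut_w):
            out[y][x] = img_b[y][x]
    kept = 1 - (cut_h * cut_w) / (h * w)
    label = [kept * la + (1 - kept) * lb for la, lb in zip(label_a, label_b)]
    return out, label
```

Computing the CutMix label from the pasted area, rather than from `lam` directly, keeps the label consistent with the pixels when the patch size is rounded to whole pixels.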

| Issue | Description | Solutions |
|---|---|---|
| Mode collapse | Once the generator finds outputs that reliably "deceive" the discriminator, it stops exploring other possibilities, so most generated samples are highly similar. | Regularization: add terms such as L1 or L2 regularization to the loss function to encourage more diverse samples. |
| | | Noise injection: inject random noise into the generator's input or layers to promote diversity in the generated samples. |
| | | Gradient penalty: penalize large discriminator gradients to keep the discriminator from becoming too strong during training. |
| Vanishing gradients | During training, gradients shrink layer by layer until they nearly vanish, slowing or preventing convergence, so the generator cannot be trained effectively. | Adjust the network depth |
| | | Add residual connections |
| | | Batch normalization |
| | | Change the activation function |
| | | Adjust the learning rate |
| Image blur | Imbalanced training of the generator and discriminator yields images with unclear details and poor quality, and makes training unstable. | Optimize the network architecture |
| | | Improve the loss function |
| | | Balance generator and discriminator training |
| | | Adjust the training strategy |
| | | Adjust hyperparameters |
| Training instability | When either the generator or the discriminator becomes too powerful, the whole training process becomes unbalanced and generation quality fluctuates. | Optimize the network architecture |
| | | Adjust the training strategy |
| | | Data preprocessing and augmentation |
| | | Batch normalization |
| | | Adjust hyperparameters |
| Overfitting | The generator performs well on training data but poorly on new, unseen data. | Regularization |
| | | Data preprocessing and augmentation |
| | | Label smoothing |
| Underfitting | The generator cannot fully learn the training data distribution, so its outputs deviate from the real distribution. | Increase network complexity |
| | | Adjust training parameters |
| | | Increase the number of iterations |
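As one concrete instance of the gradient-penalty remedy listed under mode collapse, the WGAN-GP critic loss (Gulrajani et al., 2017) penalizes the norm of the critic's gradient at points interpolated between real and generated samples. It is shown here as a representative, well-known formulation, not necessarily the one used by every surveyed work:

$$
L_D = \mathbb{E}_{\tilde{x}\sim P_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x\sim P_r}\big[D(x)\big] + \lambda\,\mathbb{E}_{\hat{x}\sim P_{\hat{x}}}\Big[\big(\lVert\nabla_{\hat{x}} D(\hat{x})\rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1-\epsilon)\tilde{x},\quad \epsilon \sim U[0,1]
$$

Here $P_r$ and $P_g$ are the real and generated distributions and $\lambda$ is the penalty weight (10 in the original paper). This two-sided form pushes the gradient norm toward 1 rather than only penalizing large gradients; a one-sided variant replaces the squared term with $\max\big(0, \lVert\nabla_{\hat{x}} D(\hat{x})\rVert_2 - 1\big)^2$, which matches the "penalize excessive gradients" description more literally.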

| Comparative Dimension | Image Transformation | GAN | Diffusion Model |
|---|---|---|---|
| Computational cost | Low | Moderate | GPU memory and compute demands far exceed those of GANs. |
| Resolution | Unchanged | High (1024 × 1024) | High (1024 × 1024) |
| Training stability | Very stable | Low | Relatively stable |
| Semantic fidelity | Low | Outputs are often not fully aligned with the semantics of the conditioning text. | High-fidelity generation |
| Small-object applicability | Low | Moderate | Generated samples are rich in detail and far more diverse than those of GANs. |
| License restrictions | None | Data licensing must be considered. | Data licensing must be considered. |
| Inference speed | Extremely fast | Fast | Slow |

| Model | Challenges | Specific Manifestations |
|---|---|---|
| GAN | Training instability, which makes generation quality fluctuate and can cause training to fail entirely [47]. | The fundamental challenge for GANs is maintaining a dynamic balance between the generator and the discriminator during adversarial training. Although improved training strategies and loss functions have helped, GANs still lack conditional control for generating rare-scene data on demand, particularly for small-object augmentation. Addressing this requires combining GANs with supervised augmentation methods that use training-sample labels to guide generation, or developing effective super-resolution generation of training data to compensate for missing rare-scene data. |
| | Mode collapse: the generator produces samples of very limited diversity [48,49]. | |
| | Difficulty capturing rare traffic scenarios [50]. | |
| Diffusion models | High computational complexity. Reference [51] reviews the use of diffusion models for missing data and multimodality in transportation, highlighting challenges in efficiency, controllability, and generalization. | Diffusion models are known for slow training and inference, whereas a GAN needs only one forward pass to generate an image. More efficient architectures and sampling methods are therefore needed to produce high-quality images with less computational overhead. To improve interpretability and controllability, so that specific attributes, objects, or regions of a generated image can be manipulated accurately, techniques for interpreting and steering model outputs, such as spatial conditioning, need to be developed. Finally, noise-scheduling difficulties and control of the sampling process can be addressed with learned noise schedules and continuous-time diffusion models, which help preserve generalization while adapting to new tasks. |
| | Weak interpretability and controllability. | |
| | Difficulty in noise scheduling. Reference [52] identifies noise scheduling and optimization strategies, and the relationship between them, as key factors in the efficiency and performance of diffusion models. | |
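Two of the diffusion-model challenges above, noise scheduling and slow inference, can be illustrated numerically. The sketch computes the cumulative signal level $\bar{\alpha}_t = \prod_{s \le t}(1-\beta_s)$ for the linear schedule of DDPM (Ho et al., 2020; $\beta$ rising from $10^{-4}$ to $0.02$) and for the cosine schedule of Nichol and Dhariwal (2021). These two schedules are chosen as well-known examples, not as the specific methods of references [51,52], and the function names are illustrative. The list length also makes the cost gap concrete: ancestral sampling calls the denoising network once per step, that is, T times, versus a single forward pass for a GAN generator.

```python
import math


def linear_alpha_bar(T, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule (needs T > 1)."""
    alpha_bar, out = 1.0, []
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        alpha_bar *= 1.0 - beta
        out.append(alpha_bar)
    return out


def cosine_alpha_bar(T, s=0.008):
    """Cosine schedule alpha_bar_t = f(t) / f(0), for t = 1..T.

    Simplification: the original also clips the implied beta_t at 0.999,
    which is omitted here.
    """
    def f(t):
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2
    return [f(t) / f(0) for t in range(1, T + 1)]
```

With T = 1000, both schedules end near zero signal, but the cosine schedule retains far more signal around the midpoint than the linear one, which is the motivation usually given for it: the linear schedule spends many steps on almost pure noise.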

| Layer | Parameter Count | Computational Complexity | Activation | Expressive Capacity |
|---|---|---|---|---|
| Real-valued dense layer | n × m | O(n × m) | Applied independently to the numerical output of each neuron, so each element is processed individually. | More generic |
| Hypercomplex layer | (n × m)/4 | O(n × m) real multiplications for the same real dimensions: each of the (n/4)(m/4) quaternion weights costs 16 real multiplications in the Hamilton product. Equivalently, when n and m count quaternion units, the layer needs about 16·n·m multiplications and 4·n·m real parameters. In exchange, the layer is reported to gain rotational invariance. | Applied to the hypercomplex number as a whole rather than to its individual components. | Better able to model complex feature distributions and capture multidimensional relationships. |
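The parameter and cost figures in this table can be checked with a small sketch. It assumes the hypercomplex layer is a quaternion layer (the factor of 4 in the parameter count indicates four-component weights): each weight is a quaternion, and inputs are combined with the Hamilton product, which costs 16 real multiplications. `Quaternion`, `quaternion_dense`, and `layer_cost` are illustrative names, and the layer omits bias and activation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quaternion:
    r: float
    i: float
    j: float
    k: float

    def __add__(self, o):
        return Quaternion(self.r + o.r, self.i + o.i, self.j + o.j, self.k + o.k)

    def __mul__(self, o):
        # Hamilton product: 16 real multiplications and 12 additions/subtractions.
        return Quaternion(
            self.r * o.r - self.i * o.i - self.j * o.j - self.k * o.k,
            self.r * o.i + self.i * o.r + self.j * o.k - self.k * o.j,
            self.r * o.j - self.i * o.k + self.j * o.r + self.k * o.i,
            self.r * o.k + self.i * o.j - self.j * o.i + self.k * o.r,
        )


def quaternion_dense(weights, inputs):
    """y_o = sum_i W[o][i] * x_i using Hamilton products (no bias, no activation)."""
    outputs = []
    for row in weights:
        acc = Quaternion(0.0, 0.0, 0.0, 0.0)
        for w, x in zip(row, inputs):
            acc = acc + w * x
        outputs.append(acc)
    return outputs


def layer_cost(n, m):
    """Real parameters and real multiplications for an n -> m real-dimension layer.

    Assumes n and m are divisible by 4 so they map onto n/4 -> m/4 quaternions.
    """
    dense = {"params": n * m, "mults": n * m}
    nq, mq = n // 4, m // 4
    quaternion = {"params": 4 * nq * mq, "mults": 16 * nq * mq}
    return dense, quaternion
```

For a layer mapping 64 real inputs to 128 real outputs, `layer_cost(64, 128)` gives 8192 parameters and 8192 multiplications for the dense layer versus 2048 parameters and 8192 multiplications for the quaternion layer: a fourfold parameter saving at an equal multiplication count.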

| Enhancement Category | Method | Metric (Detector) | Dataset | Performance |
|---|---|---|---|---|
| Image transformation data augmentation [21] | Baseline | mAP (YOLOv3) | CTSD | mAP0.5: 83.4%; mAP0.5:0.95: 53.8% |
| | Mixup | | | mAP0.5: 84%; mAP0.5:0.95: 55.5% |
| | Cutout | | | mAP0.5: 83.9%; mAP0.5:0.95: 53.7% |
| | CutMix | | | mAP0.5: 85.2%; mAP0.5:0.95: 56.4% |
| | Mosaic | | | mAP0.5: 85.8%; mAP0.5:0.95: 57.6% |
| | FlexibleCP | | | mAP0.5: 86.4%; mAP0.5:0.95: 61.7% |
| GAN [29] | Baseline | mAP (YOLOv4) | Original images | mAP0.5: 99.55% |
| | DCGAN | | | mAP0.5: 99.07% |
| | LSGAN | | | mAP0.5: 99.98% |
| | WGAN | | | mAP0.5: 99.45% |
| Diffusion model [54] | Baseline | mAP (YOLOX) | PASCAL VOC | 52.5% |
| | Diffusion model | | | 53.7% |
| Composite data augmentation [7] | Alternative augmentation based on the standardized characteristics of traffic signs | mAP (YOLOv5) | TT100K (45 categories) | mAP0.5: 85.18%; mAP0.5:0.95: 65.46% |
| | | | TT100K (24 categories) | mAP0.5: 88.16%; mAP0.5:0.95: 66.79% |
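The table reports both mAP0.5 and mAP0.5:0.95, and the second is lower in every row because it averages over stricter overlap requirements. The sketch below shows the pieces under simplifying assumptions: `iou` for axis-aligned boxes, an all-point-interpolated `average_precision` for one class at one IoU threshold computed from already-matched detections, and `map_50_95`, which averages per-threshold mAP values over the COCO-style thresholds 0.50, 0.55, ..., 0.95. Detection-to-ground-truth matching and averaging over classes are left out, the names are hypothetical, and benchmark toolkits differ in interpolation details, so this sketch need not reproduce published numbers exactly.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, num_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    `detections` is a list of (confidence, is_true_positive) pairs whose
    matching to ground truth at that threshold has already been done.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, hit in ranked:
        tp += int(hit)
        fp += int(not hit)
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for idx in range(len(precisions) - 2, -1, -1):
        precisions[idx] = max(precisions[idx], precisions[idx + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(map_at):
    """Average per-threshold mAP values; `map_at` maps threshold -> mAP."""
    return sum(map_at[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```

A detection that overlaps its ground truth with IoU 0.6 counts as a true positive at the 0.50 and 0.55 thresholds but as a false positive at the eight stricter ones, which is why localization quality weighs much more heavily in mAP0.5:0.95.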

| Name | Developer | Total | Categories | Attributes | Description |
|---|---|---|---|---|---|
| CTSDB | China | 16,164 | 58 | Different times, weather and lighting conditions, motion blur; prohibition, indication, and speed-limit signs, etc. | All images in the Chinese Traffic Sign Database are labeled with four corresponding items of symbols and categories. It contains 10,000 detection images and 6164 recognition images [55]. |
| CCTSDB | Zhang Jianming's team | 10,000 | 3 | Salt-and-pepper noise; indication, prohibition, and warning signs | The CSUST Chinese Traffic Sign Detection Benchmark includes original images, resized images, images with added salt-and-pepper noise, and images with adjusted brightness [56]. |
| TT100K | Tsinghua University and Tencent | 100,000 | N/A | Different weather and lighting conditions; warning, prohibition, and mandatory signs; imbalanced categories | The Tsinghua-Tencent 100K dataset covers 30,000 traffic sign instances [57]. |
| GTSRB | Germany | 50,000 | 43 | Class labels | The images of the German Traffic Sign Recognition Benchmark are annotated with their class labels [58]. |
| LISA Traffic Sign Dataset | USA | N/A | 47 | Categories, size, location, occlusion | All images are labeled with category, size, location, occlusion, and auxiliary road information [59]. |
| Mapillary Traffic Sign Dataset | N/A | 100,000 | N/A | Diverse street-view images, bounding-box annotations for over 300 sign classes, different weather, seasons, and times of day, urban and rural scenes | Used for detecting and classifying traffic signs around the world [60]. |
| KUL Belgium Traffic Sign Dataset | N/A | 145,000 | N/A | Resolution of 1628 × 1236 pixels | Created in 2013; consists of training and testing sets (2D, 3D) [61]. |
| RTSD | Russia | 104,359 | 156 | Recognition task | The Russian Traffic Sign Dataset is mainly used for traffic sign recognition [62,63]. |
| STSD | N/A | 10,000 | N/A | Different roads, weather, and lighting conditions; high image quality; uniform resolution | The Swedish Traffic Sign Dataset was collected locally and is suitable for traffic sign recognition and classification [64]. |

| Name | Developer | Total | Categories | Attributes | Description |
|---|---|---|---|---|---|
| LISA Traffic Light Dataset | San Diego, CA, USA | N/A | N/A | Day/night, lighting, and weather variation | Images and videos captured under different lighting and weather conditions [65]. |
| Bosch Small Traffic Lights Dataset | Bosch | 13,427 | Training set: 15; test set: 4 | Rain, strong light, interference | The training set consists of 5093 images with 10,756 annotated traffic lights; the test set consists of 8334 consecutive images with 13,486 annotated traffic lights [66]. |
| DriveU Traffic Light Dataset | N/A | N/A | N/A | Rich scene attributes, low resolution, small objects | Provides accurate annotations even for objects only a few pixels in size, which makes it valuable for small-object research [67]. |
| LaRA | La Route Automatisée, France | 11,179 frames (8 min video) | 4 (red, green, yellow, blurry) | Resolution 640 × 480 | Traffic light video dataset with four types of annotated labels for traffic light detection [68]. |
| PTL | Shanghai American School Puxi Campus | 5000 | N/A | Pedestrian and traffic light labels | The Pedestrian-Traffic-Lights dataset includes both pedestrians and traffic lights [69]. |

| Name | Developer | Year | Total | Attributes | Description |
|---|---|---|---|---|---|
| Caltech Pedestrian Detection Benchmark | N/A | 2009 | 250,000 frames, 350,000 bounding boxes, 2300 pedestrians | Resolution 640 × 480, 2300 unique pedestrians | 10 h of annotated video for pedestrian detection [70]. |
| TUD-Brussels Pedestrian | Max Planck Institute for Informatics | 2010 | 1326 annotated images | Resolution 640 × 480, 1326 annotated pedestrian images, multiple viewing angles | Pedestrians are mostly captured at small scales and from various angles [71]. |
| D2-City | University of Southern California, Didi Laboratories | N/A | 10,000+ videos (1000 fully annotated, the rest partially) | Vehicle, pedestrian, and street-scene annotations | Large-scale dashcam dataset with diverse objects and scenarios [72]. |
| CityPersons | Max Planck Institute for Informatics | 2016 | 5000 images (2975 train, 500 val, 1525 test) | Subset of Cityscapes, person-only annotations | High-quality pedestrian dataset for fine-grained detection tasks [73]. |
| CrowdHuman | Megvii | N/A | 15,000 train, 4370 val, 5000 test; 470,000 human instances | Average of 23 people per image, diverse annotations | Rich pedestrian dataset with strong crowd diversity [74]. |
| NightOwls Dataset | N/A | N/A | N/A | Resolution 1024 × 640, multiple cities and conditions | Focused on nighttime pedestrian detection; includes pedestrians, cyclists, and others [75]. |
| JAAD | York University | 2017 | 346 video clips; 2793 pedestrians | Weather variations, everyday driving scenarios | Captures joint attention behavior in driving contexts [76]. |
| Elektra (CVC-14) | Universitat Autònoma de Barcelona | 2016 | 3110 train + 2880 test (day); 2198 train + 2883 test (night) | Day/night subsets, 2500 pedestrians | Dataset for day/night pedestrian detection [77]. |
| PANDA | Tsinghua University and Duke University | 2020 | 15,974.6 k bounding boxes, 111.8 k attributes, 12.7 k trajectories, 2.2 k groups | Large-scale fine-grained attributes and groups | Dense pedestrian analysis dataset for pose, attribute, and trajectory research [78]. |

| Name | Developer | Year | Total | Attributes | Description |
|---|---|---|---|---|---|
| CCPD | University of Science and Technology of China and Xingtai Financial Holding Group | 2018 | 250,000 car images | License plate position annotations | Chinese City Parking Dataset for license plate detection and recognition [79]. |
| Udacity | Udacity | 2016 | Dataset 1: 9423 frames (1920 × 1200); Dataset 2: 15,000 frames (1920 × 1200) | Cars, trucks, pedestrians (Dataset 1); cars, trucks, pedestrians, traffic lights (Dataset 2); daytime environment | Benchmark dataset for autonomous driving research [80]. |
| ONCE | Huawei | 2021 | 1 M LiDAR scenes + 7 M camera images | Cars, buses, trucks, pedestrians, cyclists; diverse weather conditions | One millioN sCenEs dataset for large-scale perception research [81]. |
| NYC3DCars | Cornell University | 2013 | 2000 annotated images; 3787 annotated vehicles | Vehicle location, type, geographic location, occlusion degree, time | Dataset of 3D vehicles in geographic context (New York) [82]. |
| CompCars | Chinese University of Hong Kong | 2015 | Web-nature: 136,726 full-car + 27,618 component images; surveillance-nature: 50,000 front-view images | 163 car brands, 1716 vehicle models, web-nature and surveillance-nature data | Comprehensive Cars dataset for fine-grained vehicle classification and analysis [83]. |

| Name | Developer | Year | Total | Attributes | Description |
|---|---|---|---|---|---|
| Highway Workzones | Carnegie Mellon University | 2015 | N/A | Highway driving; sunny, rainy, cloudy; 6 videos; spring, winter | Can be used to identify the boundaries of highway driving areas and to detect changes in the driving environment. The images are labeled with 9 different types of tags [84]. |
| TME Motorway Dataset | Czech Technical University in Prague and University of Parma | 2011 | 28 video clips, 30,000 frames | Highways in northern Italy; different traffic and lighting conditions; two subsets (day, night); resolution 1024 × 768 | Only vehicles are annotated [85]. |
| RDD-2020 | Indian Institute of Technology, University of Tokyo, and UrbanX Technologies | 2021 | 26,620 | Road damage | Road Damage Dataset 2020 [86]. |
| CeyMo | University of Moratuwa | 2021 | N/A | 1920 × 1080, various regions, 2887 images with 4706 road-marking instances across 11 categories | CeyMo: See More on Roads, a novel benchmark dataset for road marking detection. The test set covers six scenarios: normal, crowded, glare, night, rain, and shadow [87]. |
| Oxford Road Boundaries | University of Oxford | 2021 | 62,605 labeled samples | Straight roads, parked cars, intersections, different scenarios | Detection task [88]. |

| Name | Developer | Year | Total | Attributes | Description |
|---|---|---|---|---|---|
| ApolloScape | N/A | N/A | N/A | Different cities, different traffic conditions, high-resolution images, RGB video | Divided into training, validation, and testing subsets. No semantic annotation is provided for the test images; all pixels in their ground-truth annotation are marked as 255 [89]. |
| BLVD | Xi'an Jiaotong University and Chang'an University | 2019 | 214,900 tracking points, 6004 valid segments | 5D semantics benchmark for autonomous driving; low/high density of traffic participants; daytime/nighttime | Contains a total of 4900 objects for 5D intent prediction [90]. |
| BDD100K | Berkeley Artificial Intelligence Research (BAIR) | 2020 | 100,000 videos | Diverse driving video database covering different geographic, environmental, and weather conditions | Includes 10 tasks [91]. |
| SODA10M | Huawei Noah's Ark Laboratory, Sun Yat-sen University, and Chinese University of Hong Kong | 2021 | N/A | Large-scale 2D dataset | A large-scale 2D self/semi-supervised object detection dataset for autonomous driving, with 10 M unlabeled images and 20 K labeled images covering 6 representative object categories [92]. |
| Street Scene | Mitsubishi Electric Research Laboratories and North Carolina State University | 2020 | 46 training and 35 testing video sequences | Street view, car activity, complex backgrounds, pedestrians, trees, two consecutive summers | Sequences captured from a street view [93]. |
| KITTI | Karlsruhe Institute of Technology (KIT) and Toyota Technological Institute at Chicago (TTI-C) | 2012 | 14,999 images | Urban, rural, and highway scenes | Provides 14,999 images and corresponding point clouds for 3D object detection [94]. |
| Cityscapes | A consortium primarily led by Daimler AG | 2016 | N/A | 50 cities; different weather and lighting conditions; 30 categories including roads, pedestrians, vehicles, and traffic signs | Provides fine pixel-level annotations for the images [95]. |
| nuScenes | Motional (formerly nuTonomy) | 2019 | 1000 scenes, 1,400,000 camera images | 1200 h; Boston, Pittsburgh, Las Vegas, and Singapore | Provides detailed 3D annotations for 23 object classes and is a key benchmark for 3D detection and tracking [96]. |
| Argoverse | Argo AI, Carnegie Mellon University, and Georgia Institute of Technology | 2019 | Motion prediction dataset of 324,557 scenes | 3D tracking dataset | Contains 113 3D-annotated scenes and a motion prediction dataset [97]. |
| Waymo Open Dataset | Waymo | 2019 | 1150 scenes | High-resolution sensor data; multiple urban and suburban environments and driving scenarios; day and night; sunny and rainy days | Each scene lasts 20 s [98]. |

| Enhancement Method | Improvement Type | Evaluation Metrics |
|---|---|---|
| Image transformation | Improves model performance by addressing spatial and lighting invariance, local occlusion, and motion blur. | mAP / mIoU (mean Intersection over Union) / Accuracy |
| GAN | Enhances model generalization and robustness by improving cross-domain generalization, extracting detailed features of small objects, and mining rare scenes. | mAP / mIoU / Recall |
| Diffusion model | Data generation, multimodal fusion, and domain-adaptive optimization in complex scenarios. | mAP / mIoU / Recall |
| Composite data augmentation | Improves small-object detection, context understanding, and multimodal fusion. | mAP / mIoU / Recall |
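mIoU, listed above for every method family, is computed per class from a confusion matrix as TP / (TP + FP + FN) and then averaged over classes, while recall is TP / (TP + FN). The following minimal plain-Python sketch assumes integer class labels per pixel and skips classes that appear in neither the ground truth nor the prediction, a common convention although toolkits vary. The function names are illustrative.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """m[t][p] counts pixels whose true class is t and predicted class is p."""
    m = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m


def per_class_iou(m):
    """IoU_c = TP / (TP + FP + FN); None for classes absent from both sides."""
    n = len(m)
    ious = []
    for c in range(n):
        tp = m[c][c]
        fn = sum(m[c]) - tp                       # true c, predicted otherwise
        fp = sum(m[r][c] for r in range(n)) - tp  # predicted c, truly otherwise
        denom = tp + fp + fn
        ious.append(tp / denom if denom else None)
    return ious


def mean_iou(m):
    """Average IoU over classes that occur in the ground truth or prediction."""
    valid = [v for v in per_class_iou(m) if v is not None]
    return sum(valid) / len(valid)


def per_class_recall(m):
    """Recall_c = TP / (TP + FN); None for classes with no ground truth."""
    return [m[c][c] / sum(m[c]) if sum(m[c]) else None for c in range(len(m))]
```

For example, true labels `[0, 0, 1, 1]` against predictions `[0, 1, 1, 1]` give per-class IoUs of 1/2 and 2/3, so mIoU is 7/12, while per-class recall is 1/2 and 1.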

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, M.; Ewe, L.S.; Yew, W.K.; Deng, S.; Tiong, S.K. A Survey of Data Augmentation Techniques for Traffic Visual Elements. Sensors 2025, 25, 6672. https://doi.org/10.3390/s25216672

Yang M, Ewe LS, Yew WK, Deng S, Tiong SK. A Survey of Data Augmentation Techniques for Traffic Visual Elements. Sensors. 2025; 25(21):6672. https://doi.org/10.3390/s25216672

Chicago/Turabian Style: Yang, Mengmeng, Lay Sheng Ewe, Weng Kean Yew, Sanxing Deng, and Sieh Kiong Tiong. 2025. "A Survey of Data Augmentation Techniques for Traffic Visual Elements" Sensors 25, no. 21: 6672. https://doi.org/10.3390/s25216672

APA Style: Yang, M., Ewe, L. S., Yew, W. K., Deng, S., & Tiong, S. K. (2025). A Survey of Data Augmentation Techniques for Traffic Visual Elements. Sensors, 25(21), 6672. https://doi.org/10.3390/s25216672