Abstract

The reliable characterization of fabric anisotropy in concrete aggregates is critical for understanding the mechanical behavior and durability of concrete, and accurate aggregate segmentation is a prerequisite for such assessment. However, conventional threshold-based segmentation methods are highly sensitive to noise, while deep learning approaches are often constrained by the scarcity of annotated data. To address these challenges, this study introduces the Segment Anything Model (SAM) for automated aggregate segmentation, leveraging its zero-shot generalization capability, and applies domain-specific preprocessing with Contrast Limited Adaptive Histogram Equalization (CLAHE) to enhance the input quality for the SAM. In addition, a quantification technique integrating computational geometry with a second-order Fourier series is proposed to evaluate both the magnitude and the orientation of fabric anisotropy. Experiments on a self-constructed concrete aggregate dataset demonstrate the effectiveness and accuracy of the proposed method: the SAM achieves an F1-score of 0.842 and an intersection over union (IoU) of 0.739, and the mean absolute errors are 4.15° for the orientation and 0.025 for the fabric anisotropy. Optimal segmentation performance is observed when the SAM’s grid point parameter is set to 32. These results indicate that the proposed method is a robust, accurate, and automated solution for quantifying concrete aggregate anisotropy and a useful tool for microstructure analysis and performance prediction.

1. Introduction

Concrete dominates civil engineering infrastructure due to its exceptional compressive strength, durability, versatility, and cost efficiency, serving as a fundamental material for buildings, bridges, dams, roads, and tunnels [1]. The macroscopic mechanical behavior of concrete is critically governed by its structural characteristics, with aggregate properties such as spatial distribution, morphology, and orientation affecting its strength, deformation, fracture, and long-term durability [2,3]. Although extensive research has examined the effects of aggregate distribution and morphology, aggregate orientation remains largely unquantified [4]. Orientation refers to the alignment of aggregate particles within hardened concrete and is a fundamental factor governing fabric anisotropy. It is inherently random due to casting vibrations and interactions with mortar and surrounding aggregates [5] and serves as a key structural indicator for assessing the fabric anisotropy and predicting concrete behavior [6,7]. Therefore, quantification of aggregate orientation is essential for relating microstructural features to concrete performance [8].

Accurate evaluation of aggregate orientation has traditionally relied on destructive sampling, such as coring or cross-sectioning, followed by visual inspection to determine the aggregate direction and distribution [5]. These manual approaches are subjective, time-consuming, and error-prone, which limits the precise quantification of aggregate anisotropy. Image processing techniques have therefore emerged as effective tools for automated evaluation and are typically categorized into indirect and direct methods. Indirect approaches first segment individual particles from images [9] and then extract their orientations through geometric analysis to quantify the material anisotropy [10]. Conventional segmentation methods, including watershed segmentation, thresholding, and edge detection, partition images into distinct regions based on features such as grayscale, texture, and shape. For example, Wang et al. [6] proposed image-processing methods to identify aggregates and their boundaries, obtaining orientations by connecting the image center with each aggregate center. Zhang et al. [11] used the ImageJ software to enclose each aggregate with a bounding rectangle. Han et al. [8] combined grayscale-based identification with manual correction. These methods, however, often require manual parameter tuning and are sensitive to image noise, which limits their accuracy and efficiency, particularly for complex two-dimensional granular images. In contrast, direct analytical approaches extract particle anisotropy from image features without segmentation [12,13,14]. The rotational Haar wavelet transform (RHWT) has been widely applied to quantify fabric anisotropy and orientation in various materials, including sands [15], high explosives [16], and scanning electron microscope images of cemented sand [17]. The RHWT analyzes directional variations in boundary pixels to determine particle orientations, but its applicability and accuracy for concrete aggregates remain to be fully validated.

Recent advances in artificial intelligence, particularly deep learning, have opened new opportunities for the quantitative analysis of concrete. Classical segmentation algorithms based on deep convolutional neural networks, such as the fully convolutional network (FCN) [18], U-Net [19], and the mask region-based convolutional neural network (Mask R-CNN) [20], have achieved notable success in tasks such as crack segmentation [21], aggregate identification [22], and damage assessment [23,24]. For instance, Chow et al. [25] applied U-Net to segment kaolinite particles in clay and quantified their directional distribution using the fabric tensor, with orientations extracted from the major particle axes. Wang et al. [26] developed an enhanced DeepLabv3+ framework with a ResNeXt50 modified by squeeze-and-excitation (SE) blocks, achieving concrete aggregate segmentation for stability assessment. However, deep learning methods for concrete aggregate anisotropy are limited by high resource demands and reliance on domain expertise: annotated images require destructive sampling and labor-intensive labeling, and model development depends on specialized knowledge, which creates barriers to widespread use. These challenges highlight the need for automated methods that reduce manual effort while maintaining accuracy.

To address the limitations of existing methods for evaluating concrete aggregate anisotropy, this study develops a computational approach based on the Segment Anything Model (SAM) for automated quantification of aggregate orientation and fabric anisotropy. The main contributions are as follows: (1) We present a four-module computational pipeline integrating (i) domain-specific image preprocessing using Contrast Limited Adaptive Histogram Equalization (CLAHE), (ii) zero-shot segmentation of concrete aggregates using the SAM, (iii) extraction of individual aggregate orientations via computational geometry-based fitting, and (iv) computation of fabric anisotropy using the fabric tensor. (2) We incorporate second-order Fourier analysis to evaluate the dominant directional trend within each image, thereby refining the anisotropy characterization. (3) The proposed approach is validated on a self-constructed dataset of concrete aggregate images. The results show that it enables accurate, automated quantification of aggregate anisotropy and provides a potential tool for linking microstructural features to concrete performance.

2. Methodology

2.1. Image Preprocessing Using CLAHE

The primary objective of the CLAHE [27] algorithm is to improve local image contrast while limiting the amplification of noise. It divides the image into small tiles, equalizes the histogram of each tile after clipping it at a preset limit and redistributing the clipped counts, and then blends neighboring tiles by bilinear interpolation to avoid block artifacts. The clip limit controls the strength of the enhancement, so fine details are preserved without over-amplifying noise.

The necessity of CLAHE preprocessing stems from two domain-specific challenges in concrete imaging: (1) non-uniform illumination artifacts induced by wet-drilling residue create localized shadows that obscure aggregate boundaries, which cannot be fully compensated for by deep learning’s normalization layers; (2) low-contrast texture similarity between aggregate and mortar regions causes ambiguous edge transitions, requiring targeted enhancement to amplify discriminative features.

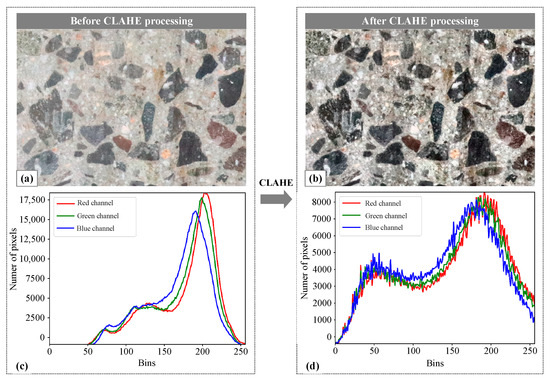

The images obtained from core drilling often have reduced contrast because of cutting and friction by the equipment, which leads to unclear details, information loss, and poor visual quality. The CLAHE algorithm addresses this by adjusting the brightness of different image regions according to their local features, which improves the visibility of both aggregates and mortar. This adaptive enhancement is important for accurately extracting the edge features of aggregate particles, and the contrast limit prevents excessive amplification of noise and local details during equalization. CLAHE was therefore used in this study to improve the contrast of the original concrete images while limiting the effect of noise. Figure 1 shows a cross-sectional image of hardened concrete. Applying CLAHE to the original image (Figure 1a) yields more pronounced contrast and emphasizes local details (Figure 1b). Quantitatively, the peak of the RGB histograms decreases from 17,500 pixels (Figure 1c) to approximately 8000 pixels (Figure 1d), indicating that the intensity values are distributed more evenly after processing.

Figure 1.

Concrete image processing based on the CLAHE algorithm: (a) original image; (b) image processed using the CLAHE algorithm; (c) quantitative analysis of RGB values in the original image; (d) quantitative analysis of RGB values in the processed image.
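A minimal NumPy sketch of the CLAHE step is given below for illustration. It is not the implementation used in this study, which is not specified, and it simplifies OpenCV's algorithm: each tile's 256-bin histogram is clipped at `clip_limit` times the mean bin count, the clipped excess is spread uniformly over all bins, and the per-tile mappings are blended bilinearly. The defaults `clip_limit=2.0` and `grid=(8, 8)` mirror the settings reported in Section 4; the function names `clahe_channel` and `histogram_peak` are hypothetical, and whether CLAHE was applied to each RGB channel or to a luminance channel is left open.

```python
import numpy as np


def clahe_channel(img, clip_limit=2.0, grid=(8, 8)):
    """Simplified CLAHE for one uint8 channel (illustrative, not OpenCV's exact algorithm)."""
    h, w = img.shape
    gy, gx = grid
    ty, tx = -(-h // gy), -(-w // gx)  # tile size via ceiling division
    # Pad by edge replication so the tile grid covers the whole image.
    padded = np.pad(img, ((0, ty * gy - h), (0, tx * gx - w)), mode="edge")
    clip = max(1.0, clip_limit * ty * tx / 256)  # clip level relative to a flat histogram
    luts = np.empty((gy, gx, 256))
    for i in range(gy):
        for j in range(gx):
            tile = padded[i * ty:(i + 1) * ty, j * tx:(j + 1) * tx]
            hist = np.bincount(tile.ravel(), minlength=256).astype(float)
            excess = np.clip(hist - clip, 0, None).sum()
            hist = np.minimum(hist, clip) + excess / 256  # redistribute clipped counts
            cdf = np.cumsum(hist)
            luts[i, j] = cdf / cdf[-1] * 255  # per-tile equalization mapping
    # Bilinear interpolation between the mappings of the four nearest tile centres.
    fy = (np.arange(h) + 0.5) / ty - 0.5
    fx = (np.arange(w) + 0.5) / tx - 0.5
    y0 = np.clip(np.floor(fy).astype(int), 0, gy - 1)
    x0 = np.clip(np.floor(fx).astype(int), 0, gx - 1)
    y1, x1 = np.minimum(y0 + 1, gy - 1), np.minimum(x0 + 1, gx - 1)
    wy = np.clip(fy - y0, 0, 1)[:, None]
    wx = np.clip(fx - x0, 0, 1)[None, :]
    v = img.astype(np.intp)
    r0, r1, c0, c1 = y0[:, None], y1[:, None], x0[None, :], x1[None, :]
    top = luts[r0, c0, v] * (1 - wx) + luts[r0, c1, v] * wx
    bottom = luts[r1, c0, v] * (1 - wx) + luts[r1, c1, v] * wx
    out = top * (1 - wy) + bottom * wy
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


def histogram_peak(channel):
    """Largest bin count of a uint8 channel's 256-bin histogram."""
    return int(np.bincount(channel.ravel(), minlength=256).max())
```

In practice, OpenCV's `cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))` followed by `.apply()` on an 8-bit single-channel image is the more common route; `histogram_peak` corresponds to the peak counts compared in Figure 1c,d.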

2.2. SAM-Based Concrete Aggregate Segmentation

The SAM [28] is a promptable foundation model for image segmentation. It was trained on the SA-1B dataset, which contains over one billion masks on eleven million images. Consequently, the SAM shows strong zero-shot generalization to unseen data, often without the need for additional training. Because of its performance across various computer vision benchmarks, the SAM has attracted significant attention for segmentation tasks [29], for example in medical imaging [30], civil engineering [31], remote sensing [32], and industrial engineering [33].

From a theoretical perspective, the suitability of the SAM for concrete aggregate segmentation lies in three aspects. First, its ViT-based encoder extracts robust global features, enabling accurate delineation of aggregates with heterogeneous textures and overlapping boundaries. Second, its flexible prompt encoder accepts sparse or dense guidance, which improves segmentation consistency under diverse imaging conditions. Third, its lightweight mask decoder enables efficient inference once the image embedding has been computed, which benefits large-scale analysis of aggregate images. Moreover, the SAM produces masks without semantic class labels; this matches the needs of concrete aggregate segmentation, where isolating the shape of each particle is sufficient for geometric analysis. This study therefore explores the SAM’s performance in identifying concrete aggregates.

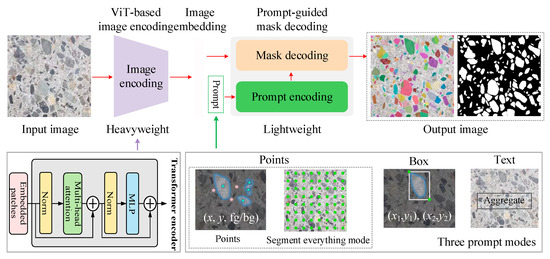

The SAM comprises three essential components: a Vision Transformer (ViT)-based image encoder (Fi-enc), a prompt encoder (Fp-enc), and a lightweight mask decoder (Fm-dec). A schematic diagram of the SAM is shown in Figure 2. The Fi-enc is a standard ViT pre-trained with the Masked Autoencoder (MAE) method and is used for image feature extraction; its output is a 16× downsampled embedding of the input image. The Fp-enc supplies positional information to the Fm-dec from interactive prompts such as points, boxes, and text. In this work, the “everything” mode was selected to automatically generate masks for nearly every aggregate. The Fm-dec is a lightweight decoder with a dynamic mask prediction head that combines the embedded prompts with the image embedding from Fi-enc. The main mathematical model can be expressed as Equation (1):

$$F_{img} = F_{i\text{-}enc}(I), \qquad T_{pro} = F_{p\text{-}enc}(\{p\}), \qquad O_{pre} = F_{m\text{-}dec}\left(F_{img} + F_{c\text{-}mask},\ [F_{out}, T_{pro}]\right) \tag{1}$$

where $I \in \mathbb{R}^{H \times W \times 3}$ represents the input image, and H and W are the image height and width, respectively. Fimg denotes the intermediate features extracted by the ViT module; {p} represents sparse prompts (e.g., points, boxes, text) or dense prompts (masks), and Tpro denotes the corresponding prompt tokens encoded by Fp-enc. Fc-mask denotes the optional coarse-mask input to the SAM, Fout denotes the learnable output tokens for the different masks and their corresponding intersection over union (IoU) predictions, and Opre denotes the predicted masks.

Figure 2.

Overview of the SAM for concrete aggregate segmentation.

Because this work does not need to distinguish between aggregate types, the SAM was run in the “everything” mode to generate candidate masks across the entire image, so that nearly all aggregates were captured. The resulting binary masks provided the input for downstream tasks, including orientation and anisotropy quantification. This pipeline design leverages the SAM’s broad generalization capacity while incorporating lightweight, domain-specific refinements (CLAHE preprocessing and tuned mask-filtering parameters), thereby supporting both accuracy and efficiency in aggregate segmentation.
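The snippet below sketches how the masks returned in the “everything” mode could be merged into the binary aggregate/mortar map used downstream. It assumes the output format of the official `segment-anything` package, in which each mask is a dictionary whose `"segmentation"` entry is an H × W boolean array. The union rule and the area-ordered instance labeling are assumptions, since the text does not state how overlapping masks were resolved, and `masks_to_binary` and `masks_to_label_map` are hypothetical helper names.

```python
import numpy as np


def masks_to_binary(masks, shape, min_area=0):
    """Union of 'everything'-mode masks: True = aggregate, False = mortar."""
    binary = np.zeros(shape, dtype=bool)
    for m in masks:
        seg = np.asarray(m["segmentation"], dtype=bool)
        if np.count_nonzero(seg) >= min_area:  # optionally drop tiny fragments
            binary |= seg
    return binary


def masks_to_label_map(masks, shape):
    """Instance map (0 = mortar, k >= 1 = aggregate k) for per-particle analysis.

    Masks are painted from largest to smallest, so a smaller mask nested inside
    a larger one keeps its own label (an assumed tie-breaking rule).
    """
    labels = np.zeros(shape, dtype=np.int32)
    segs = [np.asarray(m["segmentation"], dtype=bool) for m in masks]
    order = sorted(range(len(segs)), key=lambda k: -np.count_nonzero(segs[k]))
    for label, k in enumerate(order, start=1):
        labels[segs[k]] = label
    return labels
```

The binary map serves the segmentation metrics, while the label map gives one region per particle for the orientation fitting.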

2.3. Aggregate Orientation Extraction

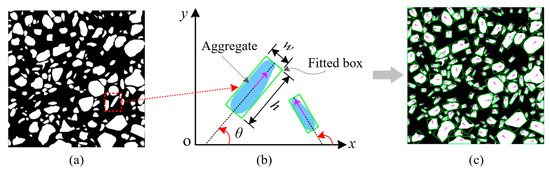

Based on the SAM segmentation results, which delineate individual concrete aggregates (Figure 3a), the aggregate orientation is extracted in three steps: (1) A contour extraction algorithm captures the continuous pixel coordinates along the boundary of each aggregate particle, which represent its shape. (2) For each contour, the minimum-area bounding rectangle that fully encloses the contour is determined; this is referred to as the smallest external rectangle. (3) For the set of the rectangle’s four corner points P = {pk (xk, yk), k = 1, 2, 3, 4}, the covariance matrix is computed and eigenvalue decomposition is performed. The eigenvector associated with the largest eigenvalue gives the major-axis direction of the smallest external rectangle, and this direction is taken as the orientation of the aggregate particle.

Figure 3.

Orientation fitting of each concrete aggregate: (a) aggregate segmentation; (b) aggregate orientation; (c) orientation fitting.

The aggregate direction is defined as depicted in Figure 3b. For each aggregate i, the minimum external rectangle is characterized by dimensions w × h, and the angle between its major axis direction and the horizontal x-axis is identified as the particle’s orientation θ. The aggregate direction θ varies between 0° and 180°. The minimum external rectangle and direction-fitting results of individual particles are illustrated in Figure 3c. Computer vision techniques effectively extract aggregate orientation information from input images, establishing essential groundwork for subsequent anisotropy analysis.
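The three steps above can be sketched in pure NumPy as follows, for illustration only. Instead of a library contour and rectangle routine (the study does not name the one it used), the sketch takes the convex hull of the mask pixels, which has the same minimum-area rectangle as the contour, and searches the hull edges, since one side of the minimum-area rectangle is always collinear with a hull edge. The orientation is then the major eigenvector of the covariance of the four corners, as in step (3). Image rows are flipped so that angles run counterclockwise from the +x axis; this sign convention and the helper names are assumptions.

```python
import numpy as np


def convex_hull(points):
    """Andrew's monotone-chain convex hull of (N, 2) points, counterclockwise."""
    pts = sorted(set(map(tuple, points.tolist())))
    if len(pts) <= 2:
        return np.array(pts, dtype=float)

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return np.array(lower[:-1] + upper[:-1], dtype=float)


def min_area_rect(hull):
    """Corners (4, 2) of the minimum-area enclosing rectangle of a convex hull."""
    best, best_area = None, np.inf
    for edge in np.roll(hull, -1, axis=0) - hull:
        length = np.hypot(edge[0], edge[1])
        if length == 0:
            continue
        u = edge / length                # candidate side direction
        v = np.array([-u[1], u[0]])      # perpendicular direction
        pu, pv = hull @ u, hull @ v
        area = (pu.max() - pu.min()) * (pv.max() - pv.min())
        if area < best_area:
            best_area = area
            best = np.array([a * u + b * v for a, b in (
                (pu.min(), pv.min()), (pu.max(), pv.min()),
                (pu.max(), pv.max()), (pu.min(), pv.max()))])
    return best


def aggregate_orientation(mask):
    """Orientation in degrees within [0, 180), counterclockwise from +x (y up)."""
    rows, cols = np.nonzero(mask)
    pts = np.column_stack([cols, -rows]).astype(float)  # flip rows so y points up
    hull = convex_hull(pts)
    if len(hull) < 2:
        raise ValueError("mask is too small to define an orientation")
    corners = min_area_rect(hull)        # step (2): smallest external rectangle
    cov = np.cov(corners.T)              # step (3): covariance of the four corners
    _, vecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    major = vecs[:, -1]                  # eigenvector of the largest eigenvalue
    return float(np.degrees(np.arctan2(major[1], major[0])) % 180.0)
```

For a rectangle, the major eigenvector of the corner covariance is simply the direction of its longer side; for nearly square particles the two eigenvalues are close and the result becomes sensitive to small shape changes.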

2.4. Fabric Anisotropy Analysis

The fabric anisotropy of granular materials can be quantified using a fabric tensor. Within this theoretical framework [34,35], we define an evaluation index for concrete fabric anisotropy that is derived from the orientations of aggregate particles in two-dimensional space and is expressed as Equation (2):

$$\Delta = \frac{1}{N}\sqrt{\left(\sum_{i=1}^{N}\cos 2\theta_i\right)^2 + \left(\sum_{i=1}^{N}\sin 2\theta_i\right)^2} \tag{2}$$

where Δ quantifies the degree of fabric anisotropy, θi is the orientation of the i-th aggregate (Figure 3b), and N is the total number of aggregate particles. The value of Δ ranges from 0, when the aggregate orientations are completely random, to 1, when the long axes of all aggregate particles point in the same direction.
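The index can be computed as sketched below, assuming the standard two-dimensional form Δ = (1/N)·√((Σ cos 2θi)² + (Σ sin 2θi)²). This form satisfies the limits stated above and equals the difference between the two eigenvalues of the fabric tensor (1/N)·Σ ni niᵀ with ni = (cos θi, sin θi), which the test checks numerically. The function names are hypothetical.

```python
import numpy as np


def fabric_anisotropy(theta_deg):
    """Delta in [0, 1] from axial orientations given in degrees."""
    t = np.radians(np.asarray(theta_deg, dtype=float))
    # Doubling the angles makes theta and theta + 180 deg contribute identically.
    return float(np.hypot(np.cos(2 * t).sum(), np.sin(2 * t).sum()) / t.size)


def fabric_tensor(theta_deg):
    """2 x 2 fabric tensor: (1/N) * sum of n_i n_i^T for unit vectors n_i."""
    t = np.radians(np.asarray(theta_deg, dtype=float))
    n = np.column_stack([np.cos(t), np.sin(t)])
    return n.T @ n / t.size
```

Identical orientations give Δ = 1, and orientations evenly spread over 180° give Δ = 0.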

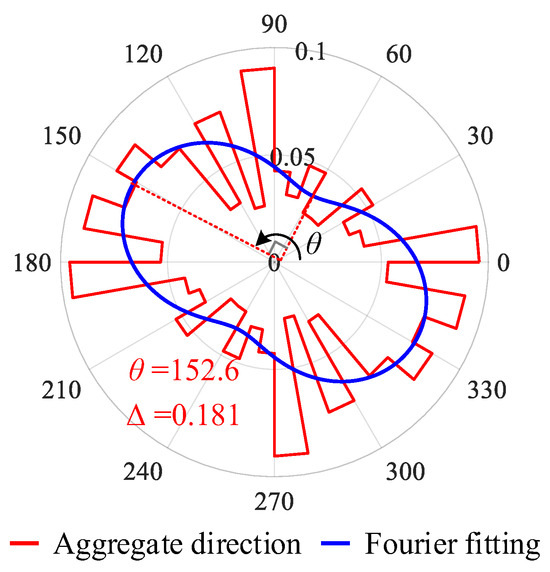

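Because of segmentation errors, image noise, and partially visible aggregates at the image edges, a second-order Fourier series [17] is used to fit the distribution of aggregate orientations. The fitting function F(θ) can be expressed as Equation (3):

$$F(\theta) = a_0 + a_1\cos\theta + b_1\sin\theta + a_2\cos 2\theta + b_2\sin 2\theta \tag{3}$$

Because orientations are axial, the distribution is symmetric, i.e., F(θ + 180°) = F(θ). Since cos(θ + 180°) = −cos θ and sin(θ + 180°) = −sin θ, the first-order terms change sign under this shift, so a1 and b1 must be zero. Thus, Equation (3) reduces to:

$$F(\theta) = a_0 + a_2\cos 2\theta + b_2\sin 2\theta = a_0 + A\cos\left[2(\theta - \theta_F)\right] \tag{4}$$

where $A = \sqrt{a_2^2 + b_2^2}$ and $\theta_F = \tfrac{1}{2}\operatorname{atan2}(b_2, a_2)$, taken modulo 180°, is the direction in which F(θ) is maximal. The coefficients a0, a2, and b2 can be determined by fitting Equation (4) to the measured orientation distribution (Figure 4). Fmax and Fmin are computed using Equation (5):

$$F_{max} = a_0 + A, \qquad F_{min} = a_0 - A \tag{5}$$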
Figure 4.

Polar plot of the concrete aggregate orientation distribution and its Fourier fit.

Taking Figure 3a as an example, the orientation statistics of the aggregates and the Fourier fitting results are shown in Figure 4, where the red curve shows the orientation distribution of the concrete aggregates in 10° bins. The fitted coefficients a0, a2, and b2 are 0.05556, 0.01203, and −0.01711, respectively. Equation (5) then gives Fmax = 0.076 and Fmin = 0.035, the fabric direction (the direction of Fmax) is 152.6°, and Equation (2) gives Δ = 0.181.
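The fitting and post-processing steps can be sketched as follows. Orientations are binned into 10° intervals and normalized to relative frequencies (an assumption that is consistent with the reported a0 = 0.05556 ≈ 1/18 for 18 bins), the model F(θ) = a0 + a2 cos 2θ + b2 sin 2θ is fitted by linear least squares, and Fmax, Fmin, and the fabric direction follow from A = √(a2² + b2²) and ½·atan2(b2, a2). With the coefficients reported above, the sketch recovers Fmax ≈ 0.076, Fmin ≈ 0.035, and a fabric direction of about 152.6°. Least squares is our choice of fitting method, since the text does not name one, and the function names are hypothetical.

```python
import numpy as np


def orientation_histogram(theta_deg, bin_width=10.0):
    """Bin centres (deg) and relative frequencies of axial orientations in [0, 180)."""
    edges = np.arange(0.0, 180.0 + bin_width, bin_width)
    counts, _ = np.histogram(np.asarray(theta_deg, dtype=float) % 180.0, bins=edges)
    return edges[:-1] + bin_width / 2, counts / counts.sum()


def fit_second_order_fourier(centres_deg, freq):
    """Least-squares (a0, a2, b2) for F(theta) = a0 + a2 cos 2theta + b2 sin 2theta."""
    t = np.radians(np.asarray(centres_deg, dtype=float))
    design = np.column_stack([np.ones_like(t), np.cos(2 * t), np.sin(2 * t)])
    coef, *_ = np.linalg.lstsq(design, np.asarray(freq, dtype=float), rcond=None)
    return tuple(float(c) for c in coef)


def fourier_summary(a0, a2, b2):
    """(F_max, F_min, fabric direction in degrees within [0, 180))."""
    amp = float(np.hypot(a2, b2))
    direction = (0.5 * np.degrees(np.arctan2(b2, a2))) % 180.0
    return a0 + amp, a0 - amp, float(direction)
```

Because cos 2θ and sin 2θ are orthogonal over equally spaced bins spanning 180°, the least-squares a0 is simply the mean bin frequency.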

3. Dataset

The experiments were conducted on a self-constructed dataset. The concrete images were captured from structural components with the mix proportions listed in Table 1. This mix proportion conforms to standard C30-grade structural concrete commonly used in hydropower engineering and thus reflects established engineering practice and typical field conditions. The concrete aggregates comprised fine and coarse aggregates, with proportions of 35.6% and 11.9%, respectively. The coarse aggregates predominantly fell within the 10–20 mm size range.

Table 1.

The mix proportion of concrete specimens.

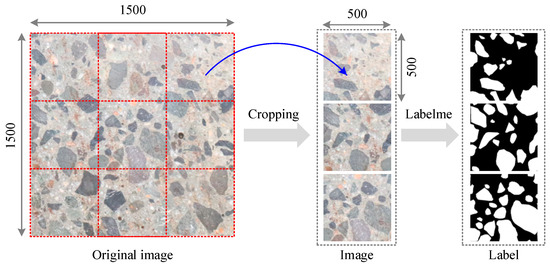

To establish the image analysis database, the following procedure was adopted: (1) Specimen preparation: After the concrete had hardened and reached its design strength, structural sections were extracted using a cutting machine to expose representative cross-sections. (2) Surface treatment: Each cross-section was carefully rinsed with water to remove debris and surface artifacts from the cutting process. (3) Image acquisition: High-resolution photographs were captured using a SONY A6000 camera (Sony Corporation, Wuxi, China) positioned perpendicular to the surface at a distance of 200–400 mm under controlled lighting. The original images had a resolution of 4000 × 6000 pixels. (4) Pre-processing: The raw images were cropped into standardized 500 × 500-pixel patches to ensure consistency across the dataset. (5) Annotation: Manual labeling was conducted using the Labelme software (version 5.7.0, https://github.com/wkentaro/labelme, accessed on 15 March 2025) to generate ground truth masks, in which aggregates are represented by white pixels and mortar by black pixels. The dataset includes aggregates of varying sizes, ranging from fine aggregates (5–10 mm) to coarse aggregates (10–20 mm). This size range is representative of practical engineering scenarios and provides a foundation for developing and applying the anisotropy index in real-world concrete design.

Following this procedure, a total of 108 image–label pairs were obtained to evaluate the SAM’s segmentation performance. Figure 5 presents representative examples of the raw images and their corresponding ground truth labels.

Figure 5.

Concrete aggregate images and their ground truth labels.

4. Experiment Results and Discussion

All experiments were conducted on a single NVIDIA RTX 3060 graphics processing unit (NVIDIA Corporation, Shenzhen, China) with 12 GB of memory. The SAM (https://github.com/facebookresearch/segment-anything, accessed on 20 March 2025) was run using Python 3.9 and the PyTorch 1.10.0 framework. The official ViT-H SAM checkpoint was used to evaluate the best achievable zero-shot performance of the SAM on concrete aggregate segmentation. The SAM’s “everything” mode, which prompts the model with a grid of points distributed across the image to automatically segment nearly every aggregate, was selected.

The key experimental parameters were as follows. CLAHE preprocessing was applied with a clip limit of 2.0 and a tile grid size of 8 × 8. The SAM was configured with a predicted IoU threshold of 0.96, a stability score threshold of 0.95 (offset 0.95), a confidence threshold of 0.9, a minimum mask region area of 100 pixels, and one crop layer. In addition, the grid point parameter was set to 32 during inference.
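For reproducibility, the configuration above might map onto the official `segment-anything` package roughly as follows. This mapping is our assumption, not the authors’ code: the grid point parameter is read as `points_per_side` (a 32 × 32 point grid), and the reported confidence threshold of 0.9 is left out because it has no obvious counterpart among `SamAutomaticMaskGenerator`’s arguments. The helper name `build_mask_generator` is hypothetical. A nonzero `min_mask_region_area` triggers OpenCV-based post-processing in that package, so `opencv-python` must be installed.

```python
# Settings reported in Section 4, expressed as SamAutomaticMaskGenerator keyword arguments.
SAM_KWARGS = {
    "points_per_side": 32,           # grid point parameter (32 x 32 prompts per crop)
    "pred_iou_thresh": 0.96,         # predicted IoU threshold
    "stability_score_thresh": 0.95,  # stability score threshold
    "stability_score_offset": 0.95,  # stability score offset
    "crop_n_layers": 1,              # one additional layer of image crops
    "min_mask_region_area": 100,     # remove small disconnected regions and holes (pixels)
}


def build_mask_generator(checkpoint_path, device="cuda"):
    """Create a ViT-H SAM automatic mask generator ('everything' mode)."""
    # Imported lazily so this module can be loaded without the package installed.
    from segment_anything import SamAutomaticMaskGenerator, sam_model_registry

    sam = sam_model_registry["vit_h"](checkpoint=checkpoint_path)
    sam.to(device=device)
    return SamAutomaticMaskGenerator(sam, **SAM_KWARGS)
```

A call such as `build_mask_generator("sam_vit_h_4b8939.pth").generate(image)` would then return a list of mask dictionaries for an H × W × 3 uint8 RGB array `image`, for example the CLAHE output; the local checkpoint path is illustrative.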

4.1. Evaluation Metrics

Four quantitative metrics, namely Precision, Recall, F1-score, and IoU, were used to evaluate the segmentation methods. Segmentation accuracy was assessed by comparison with the annotated masks (Figure 5), where white aggregate pixels are treated as positive instances and black mortar pixels as negative instances. Precision is the proportion of pixels predicted as aggregate that are truly aggregate, whereas Recall is the proportion of ground-truth aggregate pixels that are correctly detected. A high precision indicates that the detected aggregate pixels are reliable, while a high recall indicates that few aggregate pixels are missed. The F1-score is the harmonic mean of precision and recall, and IoU is the ratio of the intersection to the union of the predicted and ground-truth aggregate regions. The four evaluation indicators are defined as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

where true positive (TP) denotes aggregate pixels that are correctly identified, false positive (FP) denotes mortar pixels incorrectly detected as aggregate, and false negative (FN) denotes aggregate pixels that remain undetected.

For segmentation evaluation, Precision, Recall, and IoU were calculated per image from the binary aggregate masks, using the ground truth labels as reference: each predicted segmentation was compared against the manually annotated binary mask to count the correctly and incorrectly classified aggregate pixels. The resulting metrics were then averaged uniformly across the test set, so that each image contributed equally to the overall performance. This averaging strategy reflects the robustness of the method across diverse images and avoids bias toward images containing more aggregates.
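A minimal sketch of this per-image evaluation follows. Precision, Recall, and IoU are computed from pixel counts for each image and then averaged with equal weight per image, as described above. The text does not state how the averaged F1-score was formed; computing it as the harmonic mean of the averaged Precision and Recall reproduces the reported values (0.894 and 0.796 give 0.842; 0.919 and 0.839 give 0.877), so the sketch assumes that convention. The function names are hypothetical.

```python
import numpy as np


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    s = precision + recall
    return 2 * precision * recall / s if s else 0.0


def pixel_metrics(pred, gt):
    """Precision, Recall and IoU of one binary prediction (True = aggregate)."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.count_nonzero(pred & gt)    # aggregate pixels correctly found
    fp = np.count_nonzero(pred & ~gt)   # mortar pixels labelled aggregate
    fn = np.count_nonzero(~pred & gt)   # aggregate pixels missed
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
    }


def mean_metrics(pairs):
    """Average per-image metrics uniformly, then derive F1 from the means (assumed)."""
    per_image = [pixel_metrics(p, g) for p, g in pairs]
    mean = {k: float(np.mean([m[k] for m in per_image])) for k in per_image[0]}
    mean["f1"] = f1_from(mean["precision"], mean["recall"])
    return mean
```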

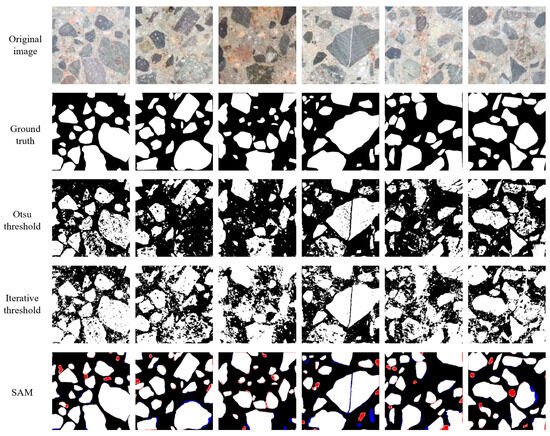

4.2. Comparison of SAM with Other Segmentation Methods

To evaluate the SAM’s segmentation performance comprehensively, classical Otsu thresholding and iterative thresholding were selected for comparison. These methods operate directly on shallow image features without any training and separate aggregate from mortar using a single optimal global threshold. The performance of the different segmentation methods is presented in Table 2, and representative segmentation samples are shown in Figure 6. The traditional approaches struggle with complex aggregate images, yielding noisy results and blurred aggregate contours, and their lower IoU values confirm their limited performance on such images. The Precision, Recall, F1-score, and IoU of the SAM are 0.894, 0.796, 0.842, and 0.739, respectively. These results show that the SAM substantially improves segmentation performance and generalizes well in a zero-shot setting, suggesting its potential for robust performance across diverse scenarios. Notably, precision exceeds recall, indicating that pixels predicted as aggregate are usually correct (relatively few false positives), whereas a larger share of aggregate pixels is missed (more false negatives). Some of the remaining false positives may arise because small fine-aggregate particles were not always labeled as aggregates during manual annotation, so SAM detections of these particles are counted as misclassified mortar. Nevertheless, the SAM effectively captures subtle boundary changes between aggregates and mortar, as shown by its segmentation results in Figure 6.

Table 2.

Segmentation performance of different methods for concrete aggregate.

Figure 6.

Comparison of segmentation results obtained using different methods. Note: White, red, and blue denote true positive, false positive, and false negative pixels, respectively.
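For reference, the two baselines can be sketched in NumPy as below. `otsu_threshold` selects the gray level that maximizes the between-class variance, and `iterative_threshold` implements the classic isodata-style iteration, repeatedly setting the threshold to the mean of the two class means until it stabilizes. These are textbook forms, not necessarily the exact variants used for Table 2, and treating the brighter class as aggregate is an assumption that may need to be inverted for a given image set.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu's threshold for a uint8 image; pixels > t form the brighter class."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)                   # probability of class {<= t}
    mu = np.cumsum(p * np.arange(256))     # first moment of class {<= t}
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    return int(np.argmax(between))


def iterative_threshold(gray, tol=0.5, max_iter=100):
    """Isodata-style threshold: t <- mean of the two class means until stable."""
    g = gray.astype(float).ravel()
    t = g.mean()
    for _ in range(max_iter):
        low, high = g[g <= t], g[g > t]
        if low.size == 0 or high.size == 0:
            break
        new_t = 0.5 * (low.mean() + high.mean())
        converged = abs(new_t - t) < tol
        t = new_t
        if converged:
            break
    return t
```

Both methods apply one global threshold to the whole image, which is why they struggle where aggregate and mortar intensities overlap.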

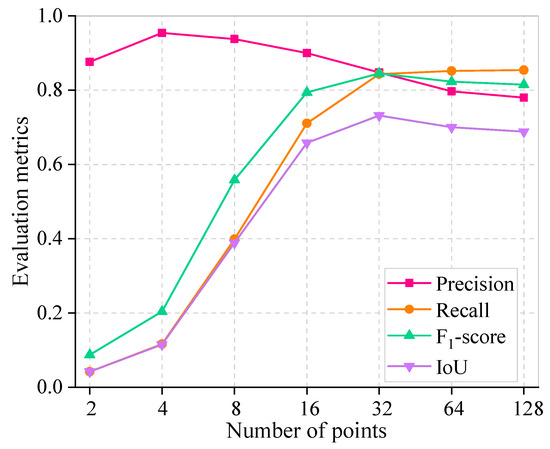

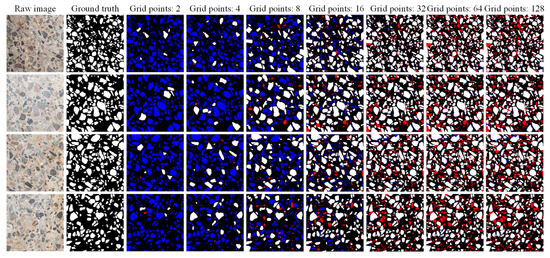

4.3. Effect of Grid Points on SAM Performance

Table 3 and Figure 7 show the SAM’s segmentation performance and computation time for different numbers of grid points. Images of 1500 × 1500 pixels were used in this experiment to better reveal the effect of the grid points on segmentation. Recall, F1-score, and IoU increase markedly with the number of grid points, peak at a setting of 32, and then decrease slightly, indicating that denser grid points do not necessarily improve performance. This is mainly because too few grid points leave some aggregates unsegmented, whereas too many grid points lead to over-segmentation. Segmentation examples for different grid point settings are shown in Figure 8, which reveals a clear progression from under-segmentation (blue pixels) to over-segmentation (red pixels) as the number of grid points increases. Precision is generally higher than recall, for the reasons discussed in Section 4.2. In addition, the computation time for a 1500 × 1500-pixel image grows with the number of grid points: beyond 16, doubling the number of grid points roughly doubles the computation time. Setting the grid point parameter to 32 is therefore a reasonable trade-off between accuracy and cost in this work.
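To make the grid point setting concrete: if it corresponds to the `points_per_side` argument of the official package (our assumption), the prompts form a regular n × n lattice, so a setting of 32 issues 1024 point prompts per crop, spaced 1500/32 ≈ 47 pixels apart on a 1500 × 1500 image. The function below reproduces, to our understanding, the lattice built by `build_point_grid` in `segment_anything/utils/amg.py`, with normalized coordinates in [0, 1]; the names `point_grid` and `prompt_spacing_px` are hypothetical.

```python
import numpy as np


def point_grid(n_per_side):
    """(n*n, 2) normalized (x, y) prompt locations, offset half a cell from the borders."""
    offset = 1.0 / (2 * n_per_side)
    one_side = np.linspace(offset, 1.0 - offset, n_per_side)
    xs = np.tile(one_side[None, :], (n_per_side, 1))
    ys = np.tile(one_side[:, None], (1, n_per_side))
    return np.stack([xs, ys], axis=-1).reshape(-1, 2)


def prompt_spacing_px(n_per_side, image_size):
    """Distance in pixels between neighbouring prompts along one image side."""
    return image_size / n_per_side
```

Under this reading, doubling the setting quadruples the number of point prompts.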

Table 3.

Segmentation performance of different grid points.

Figure 7.

Sensitivity analysis of different numbers of grid points.

Figure 8.

Segmentation examples of different numbers of grid points. Note: True positive pixels are shown in white, false positive pixels are shown in red, and false negative pixels are shown in blue.

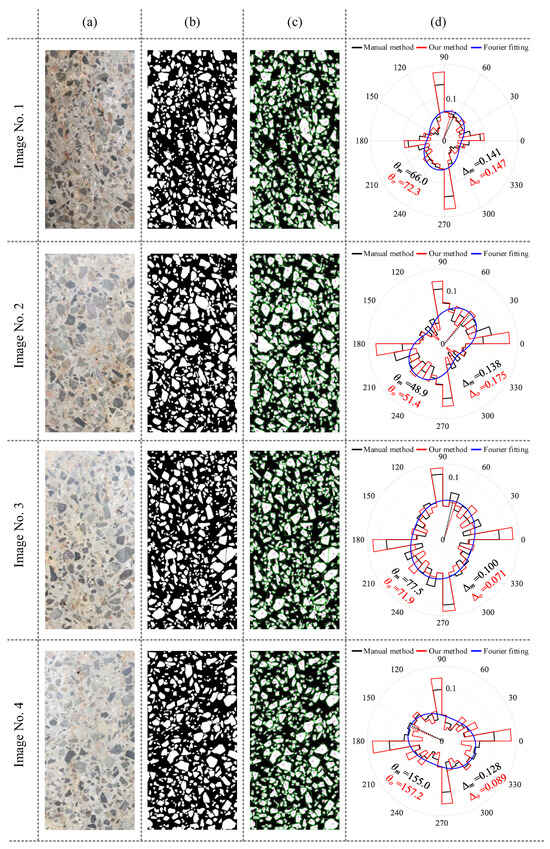

4.4. Evaluation of Concrete Aggregate Anisotropy

The proposed methodology is illustrated using four images with a resolution of 3000 × 1500 pixels. CLAHE processing was first applied to enhance image quality, followed by SAM-based segmentation. Because the “everything” mode generates grid points uniformly over the input, each 3000 × 1500-pixel image was first divided into 1500 × 1500-pixel sub-regions, so that the grid point density matches that of the images used in Section 4.3; the sub-regions were segmented separately and then stitched back together. The segmentation performance of the SAM on these images is summarized in Table 4. The average Precision, Recall, F1-score, and IoU are 0.919, 0.839, 0.877, and 0.781, respectively, which confirms the accuracy and generalization capability of the SAM for concrete aggregate segmentation.
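The split-and-stitch procedure can be sketched as follows. `split_tiles`, `stitch_masks`, and `segment_by_tiles` are hypothetical names, the tile size of 1500 pixels follows the text, and `segment` stands in for any per-tile segmentation function, for example SAM followed by mask merging. One caveat of non-overlapping tiles is that an aggregate crossing a seam is split into two partial masks.

```python
import numpy as np


def split_tiles(image, tile=1500):
    """Yield ((row, col) offset, sub-image) pairs of non-overlapping tiles."""
    h, w = image.shape[:2]
    for r in range(0, h, tile):
        for c in range(0, w, tile):
            yield (r, c), image[r:r + tile, c:c + tile]


def stitch_masks(tiles, shape):
    """Place per-tile binary masks back at their offsets in a full-size mask."""
    out = np.zeros(shape, dtype=bool)
    for (r, c), mask in tiles:
        out[r:r + mask.shape[0], c:c + mask.shape[1]] = mask
    return out


def segment_by_tiles(image, segment, tile=1500):
    """Segment each tile independently and reassemble the full-resolution mask."""
    results = [(offset, segment(sub)) for offset, sub in split_tiles(image, tile)]
    return stitch_masks(results, image.shape[:2])
```

A 3000 × 1500-pixel image yields two 1500 × 1500-pixel tiles with this routine.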

Table 4.

SAM-based segmentation results for concrete aggregates.

After segmentation, the aggregate orientations were determined by fitting minimum bounding rectangles. Figure 9 shows, for the four sample images, the segmentation results, the fitted aggregate orientations, and the rose plots of aggregate orientation. Orientations obtained by manual assessment are included as a reference to validate the proposed method. Table 5 summarizes the orientation and anisotropy results for the four images. The absolute errors of the orientation range from 2.2° to 6.3°, with a mean of 4.15°, and the absolute errors of the fabric anisotropy index range from 0.006 to 0.039, with a mean of 0.025. Figure 9 also shows an abundance of aggregates oriented near 0° and 90°, which can be attributed to two factors. First, nearly circular particles have similar lengths and widths, so the fitted rectangles tend to yield orientations near 0° or 90°. Second, aggregates cut by the image boundary exhibit straight edges, which favor orientations near 0° or 90° during fitting.
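Because orientations are axial (θ and θ + 180° describe the same direction), angular errors such as those in Table 5 are best computed modulo 180°; otherwise 178° versus 2° would count as a 176° error rather than 4°. The text does not state how the errors were computed, so the helpers below (`axial_error` and `error_summary`, hypothetical names) sketch one consistent choice.

```python
import numpy as np


def axial_error(a_deg, b_deg):
    """Smallest angle between two axial orientations, in [0, 90] degrees."""
    d = abs(a_deg - b_deg) % 180.0
    return min(d, 180.0 - d)


def error_summary(errors):
    """(max, min, mean) of absolute errors."""
    e = np.abs(np.asarray(errors, dtype=float))
    return float(e.max()), float(e.min()), float(e.mean())
```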

Figure 9.

Computational results for different concrete images: (a) original image; (b) aggregate segmentation; (c) orientation fitting; (d) anisotropy evaluation.

Table 5.

Anisotropy evaluation results of concrete aggregate.

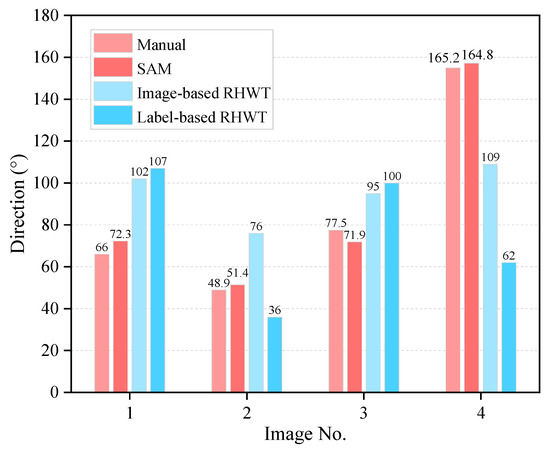

Figure 10 compares the concrete aggregate orientations obtained using different methods. The RHWT method was applied to both the original images and the segmented label images, and its results differ noticeably from those of the proposed method. A possible cause is image noise affecting the RHWT evaluation; the applicability of the RHWT to concrete aggregate orientation requires further validation.

Figure 10.

Direction evaluation results of different methods for concrete aggregate.

4.5. Limitations and Future Work

This study demonstrates the feasibility of combining a zero-shot SAM-based segmentation framework with second-order Fourier fitting to quantify fabric anisotropy in concrete aggregates. While the approach shows promising accuracy, several limitations should be acknowledged. First, the present work does not yet establish a direct quantitative relationship between the proposed anisotropy index and macroscopic mechanical properties such as compressive strength or tensile strength. Second, although the SAM-based segmentation achieved encouraging performance (F1-score of 0.842 and IoU of 0.739), further improvements remain necessary, particularly through domain-specific fine-tuning to capture smaller or more irregular aggregates. Third, the dataset employed for validation consists of 108 images, which, although diverse in aggregate size, is relatively limited in scope; broader validation on larger and more varied datasets will be essential to ensure robustness and generalizability. Finally, the present analysis is restricted to two-dimensional cross-sections, whereas three-dimensional CT-based reconstruction would provide a more comprehensive view of aggregate orientation.

Future work will address these limitations in several ways. Controlled laboratory experiments and numerical simulations could be conducted to establish explicit correlations between the anisotropy index and the mechanical performance of concrete. A larger and more diverse dataset could be constructed, and domain-adapted fine-tuning of the SAM could be explored to further improve segmentation precision. In parallel, systematic benchmarking against supervised deep learning algorithms, such as U-Net, Mask R-CNN, and YOLO, could be performed to evaluate trade-offs in accuracy, training cost, and deployment practicality. Finally, extending the framework to three-dimensional imaging modalities would enable volumetric characterization of anisotropy and open the way for anisotropy-aware mixture design and intelligent process control strategies for enhanced structural performance.

The proposed research also has practical engineering value. By quantifying the orientation and anisotropic distribution of concrete aggregates, the findings could provide a scientific basis for optimizing mix design in high-performance concrete, particularly for critical structures such as dams, bridges, and high-rise buildings, where load-bearing reliability is paramount. The analysis of aggregate directionality could also help predict crack propagation paths and durability risks, thereby supporting the evaluation of long-term service performance. From a construction perspective, understanding how compaction or vibration processes induce aggregate alignment offers practical guidance for optimizing field techniques and mitigating potential weak planes. Furthermore, the proposed approach could be integrated with non-destructive testing methods such as CT scanning, ultrasound, or image-based inspection for quality assessment and hidden defect diagnosis. It may also inform emerging construction practices, including 3D-printed concrete, where controlling aggregate alignment could improve structural homogeneity and mechanical consistency.

5. Conclusions

Quantifying the fabric anisotropy of concrete aggregates is critical for understanding concrete microstructure and predicting mechanical behavior, and accurate segmentation of the aggregates is a prerequisite for this quantification. This study introduces the Segment Anything Model (SAM) for automated concrete aggregate segmentation and proposes a computational geometry-based method combined with a second-order Fourier series to quantify the magnitude of fabric anisotropy and the dominant orientation. The following main conclusions were drawn:

- (1) The SAM achieves effective zero-shot segmentation of concrete aggregates without additional training. On the self-constructed dataset, it achieved a precision of 0.894, a recall of 0.796, an F1-score of 0.842, and an IoU of 0.739, demonstrating high accuracy and robustness.

- (2) The computational geometry approach combined with a second-order Fourier series provides an accurate assessment of aggregate orientation and fabric anisotropy. The mean absolute errors of the dominant orientation and the fabric anisotropy are 4.15° and 0.025, respectively, validating the reliability of the proposed method.

- (3) The segmentation performance is sensitive to the SAM grid point parameter. Increasing the number of grid points shifts the results from under-segmentation to over-segmentation, with optimal performance observed when the parameter is set to 32.
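Conclusion (2) rests on a second-order Fourier approximation of the aggregate orientation distribution. The Python sketch below illustrates one common way to evaluate it, under two assumptions that may differ from the authors' exact procedure: (i) each aggregate's orientation is taken as the long axis given by the second-order moments of its mask, standing in for the paper's computational geometry step, and (ii) the distribution is written in the form widely used in granular mechanics, E(θ) = (1/π)[1 + a cos 2(θ − θa)] on [0°, 180°), whose coefficients follow from the sample means as a cos 2θa = 2⟨cos 2θ⟩ and a sin 2θa = 2⟨sin 2θ⟩. Under this normalization a ranges from 0 (isotropic) to 2 (all particles parallel); the paper's own scaling of the anisotropy index may differ. The function names are hypothetical.

```python
import numpy as np


def principal_axis_angle(mask: np.ndarray) -> float:
    """Long-axis orientation of one particle mask, in degrees within [0, 180).

    Hypothetical helper: takes the eigenvector of the pixel-coordinate
    covariance matrix (second-order image moments) with the largest
    eigenvalue. Image rows grow downward, so the row index is negated to
    measure angles counter-clockwise from the +x axis. Needs >= 2 pixels.
    """
    rows, cols = np.nonzero(mask)
    coords = np.stack([cols, -rows]).astype(float)  # shape (2, n_pixels)
    cov = np.cov(coords)                            # 2 x 2 covariance matrix
    _, eigvecs = np.linalg.eigh(cov)                # eigenvalues ascending
    vx, vy = eigvecs[:, -1]                         # long-axis direction
    return float(np.degrees(np.arctan2(vy, vx)) % 180.0)


def fourier_fabric(angles_deg, weights=None):
    """Second-order Fourier fit of an axial orientation distribution.

    Assumed form: E(theta) = (1/pi) * [1 + a * cos(2 * (theta - theta_a))]
    on [0, 180) degrees. Returns (a, theta_a): the anisotropy magnitude and
    the dominant orientation in degrees within [0, 180).
    """
    theta = np.radians(np.asarray(angles_deg, dtype=float))
    if weights is None:
        w = np.ones_like(theta)
    else:
        w = np.asarray(weights, dtype=float)
    w = w / w.sum()                                 # normalized weights
    c = np.sum(w * np.cos(2.0 * theta))             # = (a / 2) cos(2 theta_a)
    s = np.sum(w * np.sin(2.0 * theta))             # = (a / 2) sin(2 theta_a)
    a = 2.0 * np.hypot(c, s)
    theta_a = (0.5 * np.degrees(np.arctan2(s, c))) % 180.0
    return float(a), float(theta_a)


if __name__ == "__main__":
    # Toy example: one horizontal particle and one running down-right (135 deg).
    horizontal = np.zeros((20, 20), dtype=bool)
    horizontal[9:11, 2:18] = True
    diagonal = np.eye(20, dtype=bool)
    angles = [principal_axis_angle(m) for m in (horizontal, diagonal)]
    print(angles, fourier_fabric(angles))
```

Doubling the angles before averaging handles the axial nature of orientation data: particles at 175° and 5° are nearly parallel, and the sketch returns a dominant orientation near 0°, whereas a naive arithmetic mean would give 90°. Passing particle areas as `weights` yields an area-weighted variant; which weighting the study used is not stated in this section.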
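Conclusion (3) concerns the grid of point prompts used for automatic segmentation. In the official `segment_anything` package, `SamAutomaticMaskGenerator` accepts a `points_per_side` argument (default 32) and prompts the model with a uniform grid of that many points per side; assuming this is the grid point parameter referred to here, a setting of 32 corresponds to 32 × 32 = 1024 prompt points. The NumPy-only sketch below rebuilds a cell-centred grid of this kind to show how prompt spacing scales with the parameter; `uniform_point_grid`, `to_pixels`, and the 1024-pixel image size are illustrative assumptions, not the package's internals.

```python
import numpy as np


def uniform_point_grid(n_per_side: int) -> np.ndarray:
    """n x n prompt points in normalized (x, y) coordinates within (0, 1).

    Each point sits at the centre of one cell of an n x n tiling, so no
    point lies on the image border. Returns an array of shape (n * n, 2).
    """
    offset = 1.0 / (2 * n_per_side)
    one_side = np.linspace(offset, 1.0 - offset, n_per_side)
    xs, ys = np.meshgrid(one_side, one_side)  # 'xy' indexing: rows vary in y
    return np.stack([xs.ravel(), ys.ravel()], axis=-1)


def to_pixels(points: np.ndarray, width: int, height: int) -> np.ndarray:
    """Scale normalized (x, y) points to pixel coordinates."""
    return points * np.array([width, height], dtype=float)


if __name__ == "__main__":
    for n in (16, 32, 64):
        grid = to_pixels(uniform_point_grid(n), width=1024, height=1024)
        spacing = grid[1, 0] - grid[0, 0]
        print(f"points_per_side={n}: {len(grid)} prompts, spacing {spacing:.0f} px")
```

On a hypothetical 1024-pixel-wide image, 32 points per side places prompts 32 px apart. One plausible reading of the trend in (3) is that aggregates much smaller than the spacing may receive no prompt (under-segmentation), while denser grids place several prompts on a large particle and can split it into fragments (over-segmentation); this interpretation is offered for illustration only.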

Future work could focus on enhancing the ability of the SAM to distinguish multiple target types and on validating the geometry- and Fourier-based quantification across diverse concrete compositions and loading conditions.

Author Contributions

Z.L.: conceptualization, methodology, validation, investigation, and writing—original draft; C.C.: supervision, writing—reviewing and editing, and funding acquisition; H.H.: investigation and writing—reviewing and editing; J.C.: supervision, writing—reviewing and editing, and funding acquisition; P.Z.: conceptualization and writing—reviewing and editing; J.X.: supervision and writing—reviewing and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was substantially supported by the General Program of the National Natural Science Foundation of China (Grant No. 42277181), the Young Elite Scientists Sponsorship Program by CAST (Grant No. 2023QNRC009), and the State Key Program of the National Natural Science Foundation of China (Grant No. 52130904).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author.

Conflicts of Interest

Author Zongxian Liu was employed by the company Yalong River Valley Hydropower Development Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Lu, X.; Pei, L.; Peng, S.; Li, X.; Chen, J.; Chen, C. Hydration, Hardening Mechanism, and Performance of Tuff Normal Concrete. J. Mater. Civ. Eng. 2021, 33, 04021063.

- Taheri-Shakib, J.; Al-Mayah, A. A Review of Microstructure Characterization of Asphalt Mixtures Using Computed Tomography Imaging: Prospects for Properties and Phase Determination. Constr. Build. Mater. 2023, 385, 131419.

- Ghosh, S.; Deb, A. Role of Material Fabric in Flexural Failure in Concrete. J. Eng. Mech. 2021, 147, 04021112.

- Lee, S.J.; Lee, C.H.; Shin, M.; Bhattacharya, S.; Su, Y.F. Influence of Coarse Aggregate Angularity on the Mechanical Performance of Cement-Based Materials. Constr. Build. Mater. 2019, 204, 184–192.

- Wang, D.; Ren, B.; Cui, B.; Wang, J.; Wang, X.; Guan, T. Real-Time Monitoring for Vibration Quality of Fresh Concrete Using Convolutional Neural Networks and IoT Technology. Autom. Constr. 2021, 123, 103510.

- Wang, F.; Xiao, Y.; Cui, P.; Ma, T.; Kuang, D. Effect of Aggregate Morphologies and Compaction Methods on the Skeleton Structures in Asphalt Mixtures. Constr. Build. Mater. 2020, 263, 120220.

- Eyad, M.; Laith, T.; Niranjanan, S.; Dallas, L. Micromechanics-Based Analysis of Stiffness Anisotropy in Asphalt Mixtures. J. Mater. Civ. Eng. 2002, 14, 374–383.

- Han, J.; Wang, K.; Wang, X.; Monteiro, P.J.M. 2D Image Analysis Method for Evaluating Coarse Aggregate Characteristic and Distribution in Concrete. Constr. Build. Mater. 2016, 127, 30–42.

- Ambika, K.; Animesh, D. Experimental Study on Effect of Geometrical Arrangement of Aggregates on Strength of Asphalt Mix. In Proceedings of the Airfield and Highway Pavements 2023: Design, Construction, Condition Evaluation, and Management of Pavements—Selected Papers from the International Airfield and Highway Pavements Conference 2023, Austin, TX, USA, 14–17 June 2023.

- Peng, Y.; Sun, L.J. Micromechanics-Based Analysis of the Effect of Aggregate Homogeneity on the Uniaxial Penetration Test of Asphalt Mixtures. J. Mater. Civ. Eng. 2016, 28, 04016119.

- Zhang, Y.; Zhang, Y.; Yang, L.; Liu, G.; Du, H. Evaluation of Aggregates, Fibers and Voids Distribution in 3D Printed Concrete. J. Sustain. Cem. Mater. 2023, 12, 775–788.

- Roux, S.G.; Clausel, M.; Vedel, B.; Jaffard, S.; Abry, P. Self-Similar Anisotropic Texture Analysis: The Hyperbolic Wavelet Transform Contribution. IEEE Trans. Image Process. 2013, 22, 4353–4363.

- Oulhaj, H.; Rziza, M.; Amine, A.; Toumi, H.; Lespessailles, E.; El Hassouni, M.; Jennane, R. Anisotropic Discrete Dual-Tree Wavelet Transform for Improved Classification of Trabecular Bone. IEEE Trans. Med. Imaging 2017, 36, 2077–2086.

- Lefebvre, A.; Corpetti, T.; Moy, L.H. Estimation of the Orientation of Textured Patterns via Wavelet Analysis. Pattern Recognit. Lett. 2011, 32, 190–196.

- Zheng, J.; Hryciw, R.D. Cross-Anisotropic Fabric of Sands by Wavelet-Based Simulation of Human Cognition. Soils Found. 2018, 58, 1028–1041.

- Jia, X.; Cao, P.; Han, Y.; Zheng, H.; Wu, Y.; Zheng, J. Microstructure Characterization of High Explosives by Wavelet Transform. Math. Probl. Eng. 2022, 2022, 3833205.

- Wang, W.; Wei, D.; Gan, Y. An Experimental Investigation on Cemented Sand Particles Using Different Loading Paths: Failure Modes and Fabric Quantifications. Constr. Build. Mater. 2020, 258, 119487.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Benedetti, P.; Femminella, M.; Reali, G. Mixed-Sized Biomedical Image Segmentation Based on U-Net Architectures. Appl. Sci. 2022, 13, 329.

- Chang, C.C.; Wang, Y.P.; Cheng, S.C. Fish Segmentation in Sonar Images by Mask R-CNN on Feature Maps of Conditional Random Fields. Sensors 2021, 21, 7625.

- Zhang, S.; Xu, H.; Zhu, X.; Xie, L. Automatic Crack Detection Using Weakly Supervised Semantic Segmentation Network and Mixed-Label Training Strategy. Found. Comput. Decis. Sci. 2024, 49, 95–118.

- Coenen, M.; Schack, T.; Beyer, D.; Heipke, C.; Haist, M. Semi-Supervised Segmentation of Concrete Aggregate Using Consensus Regularisation and Prior Guidance. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 2, 83–91.

- Wan, H.; Gao, L.; Yuan, Z.; Qu, H.; Sun, Q.; Cheng, H.; Wang, R. A Novel Transformer Model for Surface Damage Detection and Cognition of Concrete Bridges. Expert Syst. Appl. 2023, 213, 119019.

- Zhao, S.; Kang, F.; Li, J. Concrete Dam Damage Detection and Localisation Based on YOLOv5s-HSC and Photogrammetric 3D Reconstruction. Autom. Constr. 2022, 213, 104555.

- Chow, J.K.; Li, Z.; Su, Z.; Wang, Y.H. Characterization of Particle Orientation of Kaolinite Samples Using the Deep Learning-Based Technique. Acta Geotech. 2021, 17, 1097–1110.

- Wang, W.; Su, C.; Zhang, H. Automatic Segmentation of Concrete Aggregate Using Convolutional Neural Network. Autom. Constr. 2022, 134, 104106.

- Reza, A.M. Realization of the Contrast Limited Adaptive Histogram Equalization (CLAHE) for Real-Time Image Enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the IEEE International Conference on Computer Vision, Paris, France, 2–6 October 2023.

- Kang, J.; Chen, N.; Li, M.; Mao, S.; Zhang, H.; Fan, Y.; Liu, H. A Point Cloud Segmentation Method for Dim and Cluttered Underground Tunnel Scenes Based on the Segment Anything Model. Remote Sens. 2024, 16, 97.

- Shi, P.; Qiu, J.; Abaxi, S.M.D.; Wei, H.; Lo, F.P.W.; Yuan, W. Generalist Vision Foundation Models for Medical Imaging: A Case Study of Segment Anything Model on Zero-Shot Medical Segmentation. Diagnostics 2023, 13, 1947.

- Kim, J.; Kim, Y. Integrated Framework for Unsupervised Building Segmentation with Segment Anything Model-Based Pseudo-Labeling and Weakly Supervised Learning. Remote Sens. 2024, 16, 526.

- Chen, K.; Liu, C.; Chen, H.; Zhang, H.; Li, W.; Zou, Z.; Shi, Z. RSPrompter: Learning to Prompt for Remote Sensing Instance Segmentation Based on Visual Foundation Model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4701117.

- Jiang, G. Industrial-SAM with Interactive Adapter. In Pattern Recognition and Computer Vision; Springer: Berlin/Heidelberg, Germany, 2024.

- Chen, J.; Kong, Y.; Wang, H.; Chen, Y.; Liu, J. Investigation on Inherent Anisotropy of Asphalt Concrete Due to Internal Aggregate Particles. In Transportation Research Congress 2016; American Society of Civil Engineers: Reston, VA, USA, 2016; pp. 39–48.

- Le, N.B.; Toyota, H.; Takada, S. Evaluation of Mechanical Properties of Mica-Mixed Sand Considering Inherent Anisotropy. Soils Found. 2020, 60, 533–550.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).