Integrating 1D-CNN and Bi-GRU for ENF-Based Video Tampering Detection

Abstract

1. Introduction

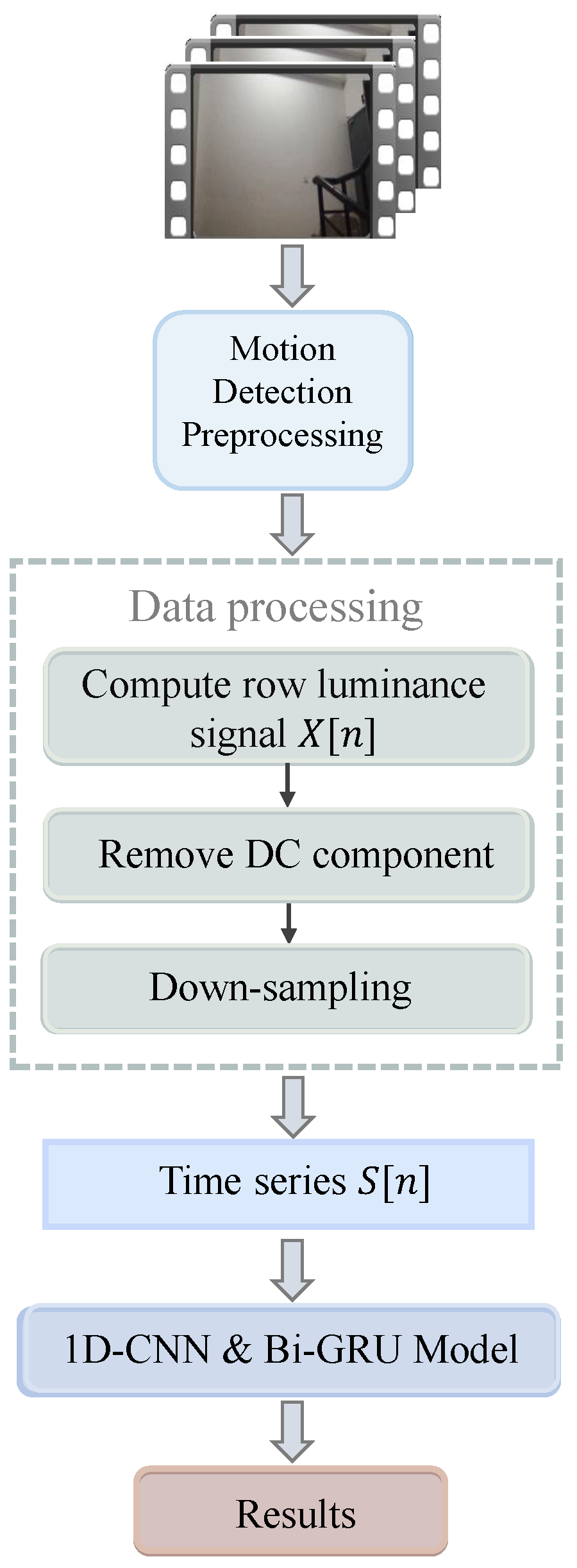

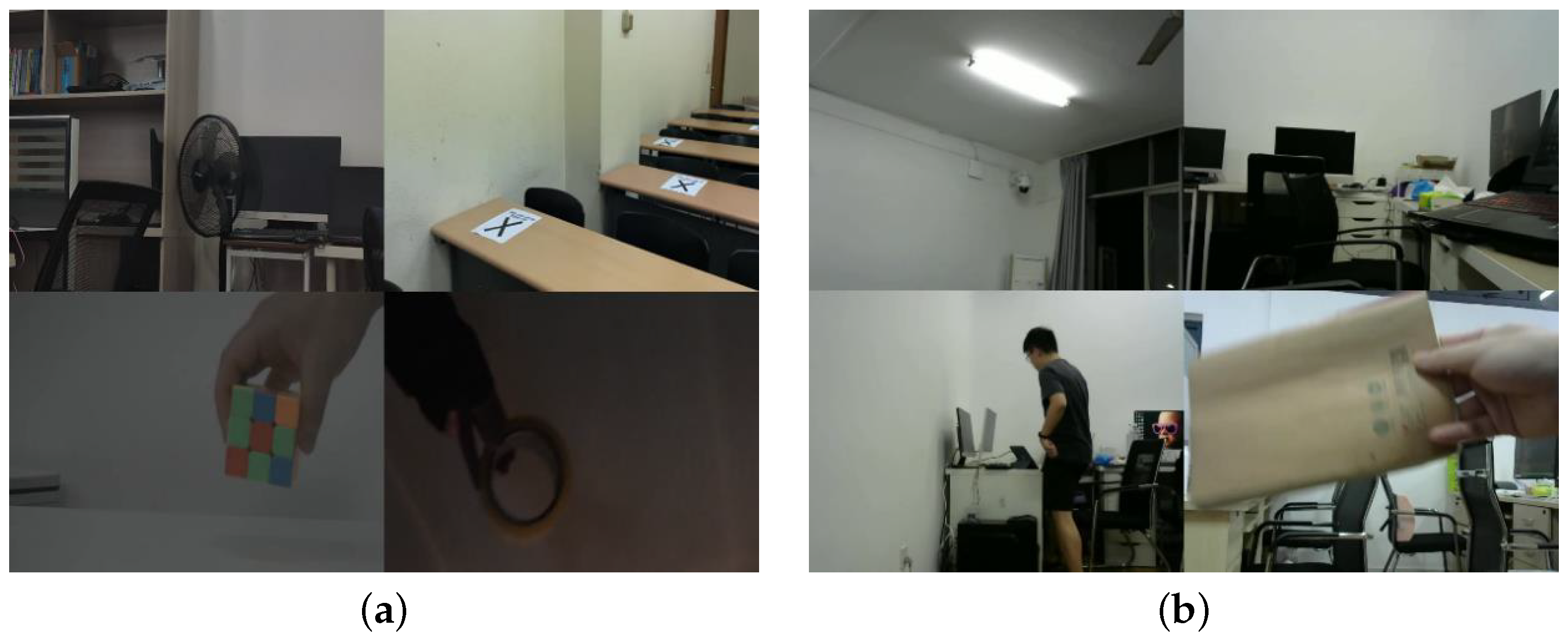

- To address the shortage of suitable video data, we collected static-scene and dynamic-scene videos from studies [22,26,27]. These videos were captured in different regions and cover both nominal ENF frequencies (50 Hz and 60 Hz). We then used FFmpeg to generate several tampered-video datasets for training and testing, covering three types of inter-frame tampering: frame deletion, frame duplication, and frame insertion.

- The proposed method uses luminance information directly for tamper detection, without first extracting the ENF signal. In particular, we employ a Gaussian mixture model (GMM) for background subtraction to identify and mask the moving objects in each frame, which reduces the interference of scene motion with the illumination signal.

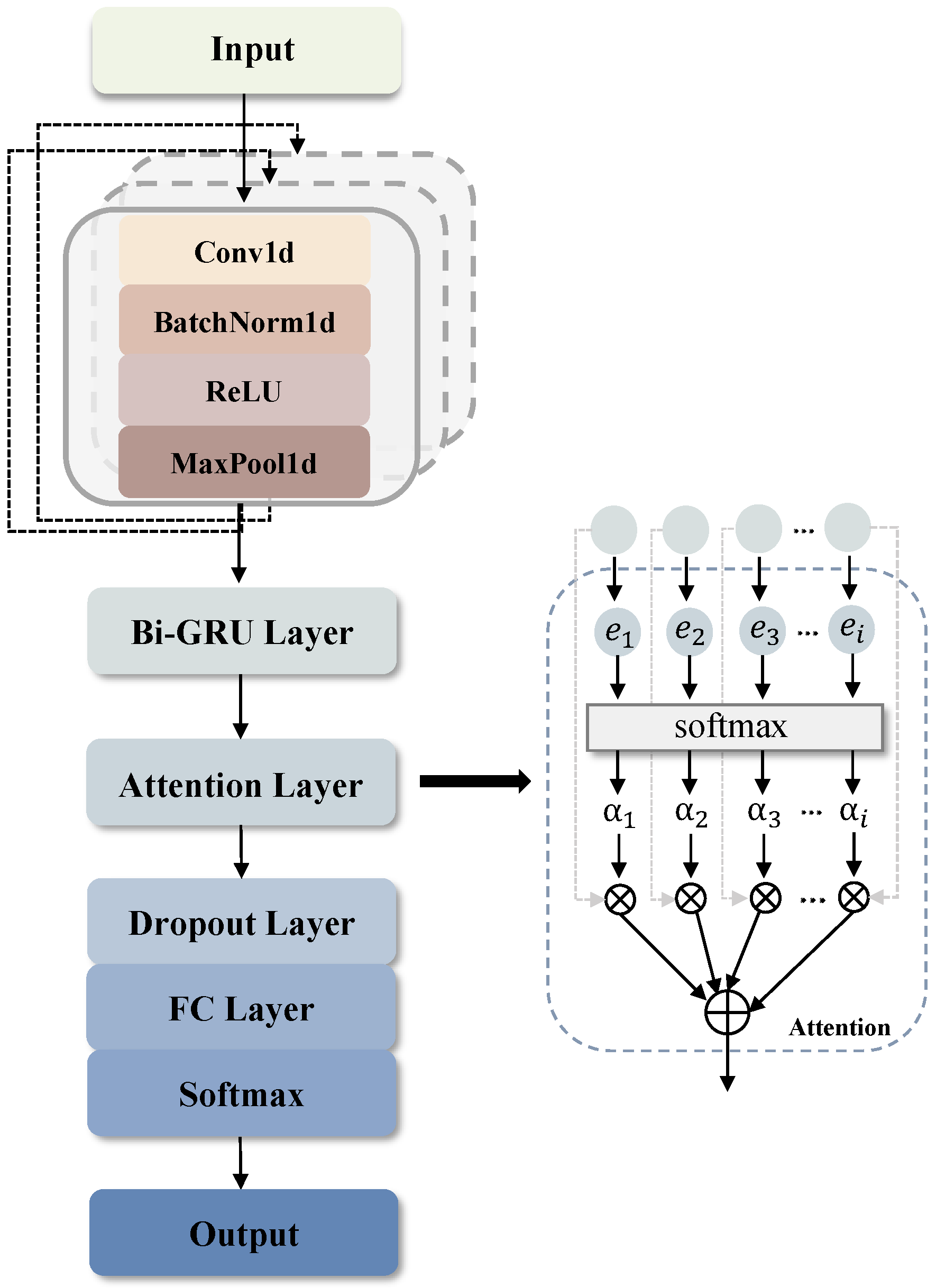

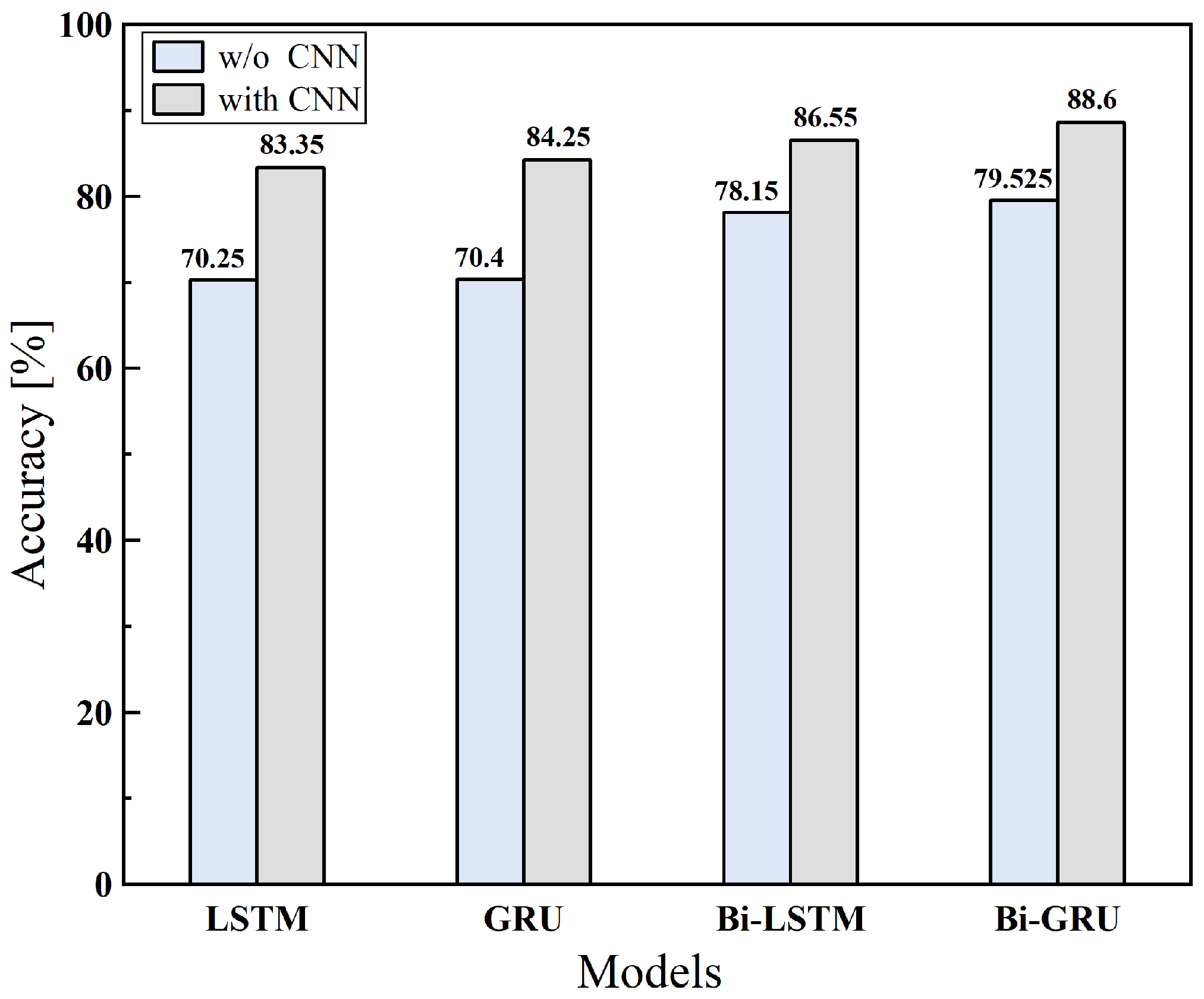

- We designed a lightweight yet efficient model that integrates a one-dimensional convolutional neural network (1D-CNN) with a bidirectional gated recurrent unit (Bi-GRU) to detect anomalies in the luminance signals of videos. The model learns both the local features and the temporal dependencies of the input sequence and determines whether a video has been tampered with.
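
To make the "forward plus backward" structure of the Bi-GRU concrete, the following is a minimal NumPy sketch with random, untrained weights; it is not the authors' implementation. It assumes the standard GRU formulation (update gate, reset gate, candidate state), and the helper names `init_gru`, `gru_pass` and `bi_gru` are hypothetical. With 64 hidden units per direction, concatenating the two directions gives 128 features per time step, which matches the GRU row of the architecture table.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(input_dim, hidden_dim, rng):
    """Random (untrained) parameters for one GRU direction (illustrative only)."""
    scale = 1.0 / np.sqrt(hidden_dim)
    return {
        # rows are stacked as [update gate; reset gate; candidate state]
        "W": rng.uniform(-scale, scale, size=(3 * hidden_dim, input_dim)),
        "U": rng.uniform(-scale, scale, size=(3 * hidden_dim, hidden_dim)),
        "b": np.zeros(3 * hidden_dim),
    }

def gru_pass(x, p):
    """Run one GRU direction over x of shape (T, input_dim); returns (T, hidden_dim)."""
    H = p["U"].shape[1]
    U_z, U_r, U_n = p["U"][:H], p["U"][H:2 * H], p["U"][2 * H:]
    h = np.zeros(H)
    outputs = []
    for x_t in x:
        gx = p["W"] @ x_t + p["b"]
        z = sigmoid(gx[:H] + U_z @ h)              # update gate
        r = sigmoid(gx[H:2 * H] + U_r @ h)         # reset gate
        n = np.tanh(gx[2 * H:] + U_n @ (r * h))    # candidate state
        h = (1.0 - z) * n + z * h                  # blend previous and candidate state
        outputs.append(h)
    return np.stack(outputs)

def bi_gru(x, p_fwd, p_bwd):
    """Concatenate a forward pass and a time-reversed backward pass: (T, 2 * hidden_dim)."""
    forward = gru_pass(x, p_fwd)
    backward = gru_pass(x[::-1], p_bwd)[::-1]  # flip back to the original time order
    return np.concatenate([forward, backward], axis=1)

rng = np.random.default_rng(0)
x = rng.standard_normal((50, 128))   # e.g. 50 time steps of 128 CNN feature channels
out = bi_gru(x, init_gru(128, 64, rng), init_gru(128, 64, rng))
print(out.shape)  # (50, 128)
```

In a trained model these weights would be learned jointly with the convolutional front end; a framework layer such as PyTorch's `torch.nn.GRU` with `bidirectional=True` provides the same structure.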

2. Related Work

3. The Proposed Method

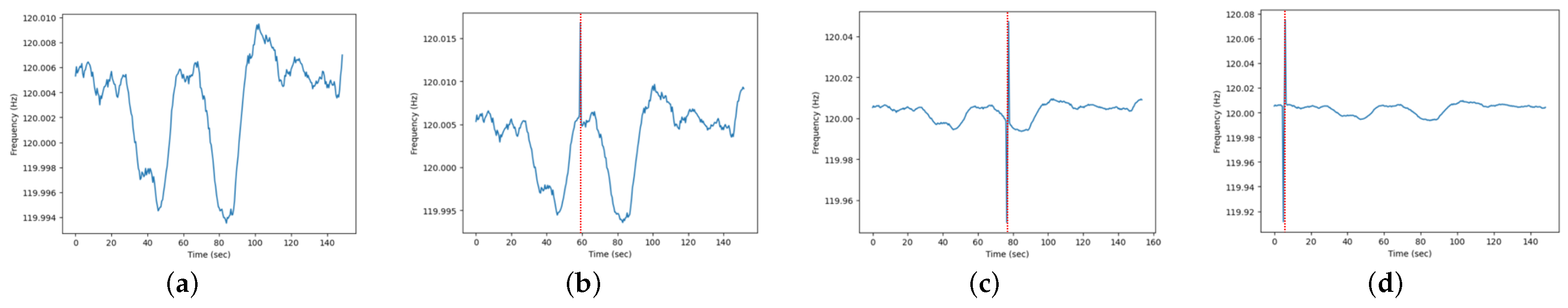

3.1. The Effect of Video Tampering on ENF Signals

3.2. Dynamic Video Preprocessing

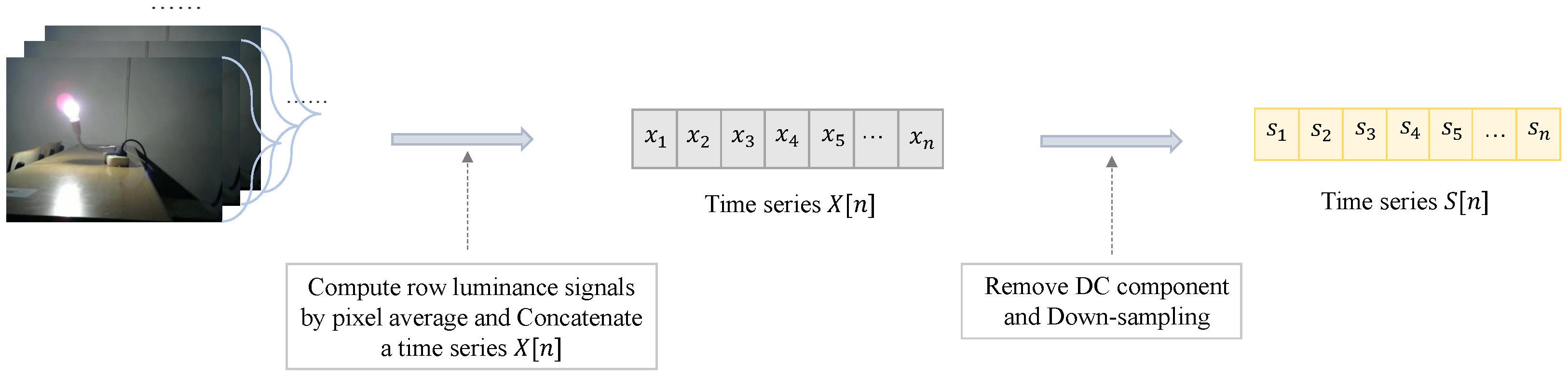
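
The GMM-based masking of moving objects described in the introduction can be sketched as follows, assuming the foreground masks have already been produced by a GMM background subtractor (for example OpenCV's `cv2.createBackgroundSubtractorMOG2`, which implements Zivkovic's improved adaptive GMM [29]). The function name `masked_row_luminance`, the choice of one mean luminance value per image row (the rolling-shutter row signal), and the rule used to fill rows that are entirely covered by moving objects are illustrative assumptions rather than details given in this section.

```python
import numpy as np

def masked_row_luminance(frames, fg_masks):
    """
    frames:   (T, H, W) luminance values (e.g. the Y channel) for T frames.
    fg_masks: (T, H, W) booleans, True where a pixel belongs to a moving object.
    Returns a 1-D row signal of length T * H: the mean background luminance of
    each row, in scan order (frame by frame, top row to bottom row).
    """
    frames = np.asarray(frames, dtype=np.float64)
    background = ~np.asarray(fg_masks, dtype=bool)
    counts = background.sum(axis=2)                        # background pixels per row, (T, H)
    sums = np.where(background, frames, 0.0).sum(axis=2)   # background luminance per row, (T, H)
    rows = np.full(counts.shape, np.nan)
    np.divide(sums, counts, out=rows, where=counts > 0)    # stays NaN where a row is fully masked
    # Assumed fill rule: a fully masked row takes the mean of its frame's other rows (0 if none).
    for t in range(rows.shape[0]):
        valid = ~np.isnan(rows[t])
        rows[t, ~valid] = rows[t, valid].mean() if valid.any() else 0.0
    return rows.reshape(-1)
```

Averaging only over background pixels keeps the per-row illumination estimate from jumping when a moving object passes through that row, which is the interference the masking step is meant to suppress.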

3.3. Processing of Illumination Signal

- Remove the DC component: subtract the mean luminance from the time series to remove its DC component.

- Down-sampling: because the sampling rate of the row luminance signal is much higher than the frequency of the underlying ENF, the zero-mean time series is downsampled to 1 kHz, and the resulting sequence is used as the input to the model.
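
The two preprocessing steps above can be sketched in NumPy as below. The input rate `fs_in` is a placeholder (the row sampling rate depends on the camera's frame rate and number of rows), and the Fourier-domain resampling used here is one swapped-in choice of anti-aliased downsampling; this section does not state which filter the authors used. `scipy.signal.resample_poly` would be a common alternative when the ratio of the two rates is rational.

```python
import numpy as np

def preprocess_luminance(signal, fs_in, fs_out=1000.0):
    """Remove the DC component, then resample from fs_in to fs_out (default 1 kHz)."""
    x = np.asarray(signal, dtype=np.float64)
    x = x - x.mean()                                  # step 1: subtract the mean luminance
    n_out = int(round(len(x) * fs_out / fs_in))
    # Step 2: keep only the spectrum below fs_out / 2 (an ideal low-pass filter),
    # then synthesize n_out samples; the factor n_out / len(x) preserves amplitude.
    spectrum = np.fft.rfft(x)[: n_out // 2 + 1]
    return np.fft.irfft(spectrum, n=n_out) * (n_out / len(x))

# Example: 1 s of a synthetic row signal at a hypothetical 8 kHz row rate.
fs_in = 8000
t = np.arange(fs_in) / fs_in
raw = 3.0 + np.sin(2 * np.pi * 100 * t) + 0.5 * np.sin(2 * np.pi * 2000 * t)
y = preprocess_luminance(raw, fs_in)
print(y.shape)  # (1000,)
```

In this example the constant offset is removed, the 100 Hz component (twice a 50 Hz nominal ENF) survives, and the 2 kHz component, which lies above the new 500 Hz Nyquist limit, is filtered out rather than aliased.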

3.4. Network Model Based on 1D-CNN & Bi-GRU

4. Experiments

4.1. Datasets and Experimental Settings

4.2. Experimental Results and Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Grigoras, C. Digital audio recording analysis: The Electric Network Frequency (ENF) Criterion. Int. J. Speech Lang. Law 2005, 12, 63–76.

- Brixen, E.B. Techniques for the Authentication of Digital Audio Recordings. In Audio Engineering Society Convention; Audio Engineering Society: New York, NY, USA, 2007.

- El Gemayel, T. Feasibility of Using Electrical Network Frequency Fluctuations to Perform Forensic Digital Audio Authentication. Master’s Thesis, University of Ottawa, Ottawa, ON, Canada, 2013.

- Zheng, L.; Zhang, Y.; Lee, C.E.; Thing, V.L.L. Time-of-recording estimation for audio recordings. Digit. Investig. 2017, 22, S115–S126.

- Vatansever, S.; Dirik, A.E.; Memon, N. ENF based robust media time-stamping. IEEE Signal Process. Lett. 2022, 29, 1963–1967.

- Bang, W.; Yoon, J.W. Power Grid Estimation Using Electric Network Frequency Signals. Secur. Commun. Netw. 2019, 2019, 1982168.

- Hajj-Ahmad, A.; Garg, R.; Wu, M. ENF-based region-of-recording identification for media signals. IEEE Trans. Inf. Forensics Secur. 2015, 10, 1125–1136.

- Suresha, P.B.; Nagesh, S.; Roshan, P.S.; Gaonkar, P.A.; Meenakshi, G.N.; Ghosh, P.K. A high resolution ENF based multi-stage classifier for location forensics of media recordings. In Proceedings of the 2017 Twenty-third National Conference on Communications (NCC), Chennai, India, 2–4 March 2017; IEEE: New York, NY, USA, 2017.

- Rodríguez, D.P.A.A.; Apolinário, J.A.; Biscainho, L.W.P. Audio authenticity: Detecting ENF discontinuity with high precision phase analysis. IEEE Trans. Inf. Forensics Secur. 2010, 5, 534–543.

- Esquef, P.A.A.; Apolinário, J.A.; Biscainho, L.W.P. Edit detection in speech recordings via instantaneous electric network frequency variations. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2314–2326.

- Lin, X.; Kang, X. Supervised audio tampering detection using an autoregressive model. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: New York, NY, USA, 2017.

- Wang, Z.-F.; Wang, J.; Zeng, C.-Y.; Min, Q.-S.; Tian, Y.; Zuo, M.-Z. Digital audio tampering detection based on ENF consistency. In Proceedings of the International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), Chengdu, China, 15–18 July 2018; IEEE: New York, NY, USA, 2018.

- Zeng, C.; Kong, S.; Wang, Z.; Li, K.; Zhao, Y.; Wan, X.; Chen, Y. Digital audio tampering detection based on spatio-temporal representation learning of electrical network frequency. Multimed. Tools Appl. 2024, 83, 83917–83939.

- Hatami, M.; Dorje, L.; Li, X.; Chen, Y. Electric Network Frequency as Environmental Fingerprint for Metaverse Security: A Comprehensive Survey. Computers 2025, 14, 321.

- Hatami, M.; Qu, Q.; Chen, Y.; Mohammadi, J.; Blasch, E.; Ardiles-Cruz, E. ANCHOR-Grid: Authenticating Smart Grid Digital Twins Using Real-World Anchors. Sensors 2025, 25, 2969.

- Garg, R.; Varna, A.L.; Wu, M. “Seeing” ENF: Natural time stamp for digital video via optical sensing and signal processing. In Proceedings of the 19th ACM International Conference on Multimedia, Scottsdale, AZ, USA, 28 November–December 2011.

- Garg, R.; Varna, A.L.; Hajj-Ahmad, A.; Wu, M. “Seeing” ENF: Power-signature-based timestamp for digital multimedia via optical sensing and signal processing. IEEE Trans. Inf. Forensics Secur. 2013, 8, 1417–1432.

- Wong, C.-W.; Hajj-Ahmad, A.; Wu, M. Invisible geo-location signature in a single image. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: New York, NY, USA, 2018.

- Choi, J.; Wong, C.-W. ENF signal extraction for rolling-shutter videos using periodic zero-padding. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; IEEE: New York, NY, USA, 2019.

- Choi, J.; Wong, C.-W.; Su, H.; Wu, M. Analysis of ENF signal extraction from videos acquired by rolling shutters. IEEE Trans. Inf. Forensics Secur. 2023, 18, 4229–4242.

- Ferrara, P.; Sanchez, I.; Draper-Gil, G.; Junklewitz, H.; Beslay, L. A MUSIC spectrum combining approach for ENF-based video timestamping. In Proceedings of the International Workshop on Biometrics and Forensics (IWBF), Rome, Italy, 6–7 May 2021; IEEE: New York, NY, USA, 2021.

- Han, H.; Jeon, Y.; Song, B.-k.; Yoon, J.W. A phase-based approach for ENF signal extraction from rolling shutter videos. IEEE Signal Process. Lett. 2022, 29, 1724–1728.

- Ngharamike, E.; Ang, L.M.; Seng, K.P.; Wang, M. ENF based digital multimedia forensics: Survey, application, challenges and future work. IEEE Access 2023, 11, 101241–101272.

- Nagothu, D.; Chen, Y.; Aved, A.J.; Blasch, E. Authenticating Video Feeds using Electric Network Frequency Estimation at the Edge. EAI Endorsed Trans. Secur. Saf. 2021, 7, e4.

- Wang, Y.; Hu, Y.; Liew, A.W.C.; Li, C.T. ENF based video forgery detection algorithm. Int. J. Digit. Crime Forensics (IJDCF) 2020, 12, 131–156.

- Xu, L.; Hua, G.; Zhang, H.; Yu, L.; Qiao, N. “Seeing” Electric Network Frequency From Events. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Xu, L.; Hua, G.; Zhang, H.; Yu, L. “Seeing” ENF From Neuromorphic Events: Modeling and Robust Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 6809–6825.

- Nagothu, D.; Xu, R.; Chen, Y.; Blasch, E.; Aved, A. DeFakePro: Decentralized deepfake attacks detection using ENF authentication. IT Prof. 2022, 24, 46–52.

- Zivkovic, Z. Improved adaptive Gaussian mixture model for background subtraction. In Proceedings of the 17th International Conference on Pattern Recognition, ICPR, Cambridge, UK, 26 August 2004.

- Mikolov, T.; Karafiát, M.; Burget, L.; Černocký, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the 11th Annual Conference of the International Speech Communication Association (INTERSPEECH 2010), Makuhari, Chiba, Japan, 26–30 September 2010; pp. 1045–1048.

- Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

- FFmpeg Developers, FFmpeg. March 2019. Available online: https://ffmpeg.org (accessed on 26 September 2025).

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

| Layer | Output Shape | Implementation Details |
|---|---|---|
| Conv1 | [32, 60000] | kernel size 3, stride 1 |
| Conv2 | [64, 30000] | kernel size 3, stride 1 |
| Conv3 | [128, 15000] | kernel size 3, stride 1 |
| GRU | [7500, 128] | bidirectional, 64 hidden units per direction |
| Linear | [7500, 1] | |
| Attention | [128] | |
| Dropout | [128] | rate = 0.5 |
| Output | [2] | |
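
Read on its own, the architecture table lists stride-1 convolutions yet shows the sequence length halving after each convolutional block. The plain-Python sketch below reproduces the listed shapes under two stated assumptions that the table does not spell out: each convolution uses padding 1 (so kernel size 3 with stride 1 preserves the length), and each convolution is followed by a max-pooling layer of size 2. The 60000-sample input is inferred from the Conv1 row (for instance, 60 s at the 1 kHz rate of Section 3.3), and the helper names are hypothetical.

```python
def conv1d_len(length, kernel=3, stride=1, padding=1):
    """Output length of a 1-D convolution (assumed padding of 1)."""
    return (length + 2 * padding - kernel) // stride + 1

def pool_len(length, size=2):
    """Output length of max pooling with kernel = stride = size and no padding."""
    return length // size

def trace_shapes(input_len=60000, channels=(32, 64, 128), hidden=64):
    shapes = []
    length = input_len
    for c in channels:
        length = conv1d_len(length)                 # kernel 3, stride 1: length preserved
        shapes.append((f"conv ({c} ch)", (c, length)))
        length = pool_len(length)                   # assumed 2x pooling after every conv
    shapes.append(("bi-gru", (length, 2 * hidden)))  # forward and backward states concatenated
    shapes.append(("attention scores", (length, 1)))
    shapes.append(("attention context", (2 * hidden,)))
    return shapes

for name, shape in trace_shapes():
    print(name, shape)
```

Under these assumptions every row of the table is recovered, from [32, 60000] after Conv1 down to the 128-dimensional attention output.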

| Dataset | Positive Samples (Label = 1) | Negative Samples (Label = 0) | Spatial Resolution |
|---|---|---|---|
| Dataset-1 | 3000 | 3000 | |
| Dataset-2 | 2000 | 2000 | |
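
The three inter-frame tampering types used to build these datasets can be illustrated at the level of frame indices. The sketch below uses plain Python lists and hypothetical helper names; the authors generated the tampered videos with FFmpeg [33], and the segment positions, lengths and commands they used are not given here. Each operation breaks the continuity of the capture timeline, which is the kind of discontinuity ENF-based detection looks for.

```python
def delete_frames(frames, start, count):
    """Frame deletion: drop frames[start:start + count]."""
    return frames[:start] + frames[start + count:]

def duplicate_frames(frames, start, count, dest):
    """Frame duplication: copy frames[start:start + count] and insert the copy before index dest."""
    segment = frames[start:start + count]
    return frames[:dest] + segment + frames[dest:]

def insert_frames(frames, foreign, dest):
    """Frame insertion: splice frames from another recording in before index dest."""
    return frames[:dest] + list(foreign) + frames[dest:]

original = list(range(10))   # stand-in frame indices 0..9
print(delete_frames(original, 3, 2))             # [0, 1, 2, 5, 6, 7, 8, 9]
print(duplicate_frames(original, 2, 2, 6))       # [0, 1, 2, 3, 4, 5, 2, 3, 6, 7, 8, 9]
print(insert_frames(original, ["x1", "x2"], 4))  # [0, 1, 2, 3, 'x1', 'x2', 4, 5, 6, 7, 8, 9]
```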

| Model | Accuracy [%] |
|---|---|
| CNN-Bi-GRU | 88.6 |
| CNN-Bi-GRU-Attention | 93.75 |
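
In the architecture table, the Attention row reduces the [7500, 128] Bi-GRU output to a single 128-dimensional vector, with the Linear [7500, 1] row producing one score per time step. A common way to realize this, assumed here rather than taken from the paper, is softmax attention pooling: normalize the scores over time and take the weighted sum of the Bi-GRU outputs. The NumPy sketch below (hypothetical name `attention_pool`) only illustrates that pooling operation.

```python
import numpy as np

def attention_pool(h, w, b=0.0):
    """
    h: (T, D) Bi-GRU outputs; w: (D,) weights and b: bias of a Linear D -> 1 layer.
    Returns the attention weights (T,) and the context vector (D,).
    """
    scores = h @ w + b                 # (T,) one score per time step
    scores = scores - scores.max()     # shift for numerical stability before exp
    e = np.exp(scores)
    alpha = e / e.sum()                # softmax over the time axis
    context = alpha @ h                # (D,) weighted sum of the time steps
    return alpha, context

rng = np.random.default_rng(1)
h = rng.standard_normal((7500, 128))   # stand-in for the Bi-GRU output
alpha, context = attention_pool(h, 0.1 * rng.standard_normal(128))
print(alpha.shape, context.shape)      # (7500,) (128,)
```

With all-zero scores the weights become uniform and the context vector reduces to the temporal mean of the Bi-GRU outputs.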

| Input | Accuracy [%] |
|---|---|
| ENF signal | 77.62 |
| Row luminance signal | 85.56 |

| Motion Detection Preprocessing | Accuracy [%] |
|---|---|
| Without preprocessing | 85.56 |
| With preprocessing | 93.75 |

| Method | Accuracy [%] |
|---|---|
| Proposed | 93.75 |
| Phase Continuity (non-DL) [25] | 78.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, X.; Zang, X. Integrating 1D-CNN and Bi-GRU for ENF-Based Video Tampering Detection. Sensors 2025, 25, 6612. https://doi.org/10.3390/s25216612


Chicago/Turabian Style: Lin, Xiaodan, and Xinhuan Zang. 2025. "Integrating 1D-CNN and Bi-GRU for ENF-Based Video Tampering Detection" Sensors 25, no. 21: 6612. https://doi.org/10.3390/s25216612
