MemRoadNet: Human-like Memory Integration for Free Road Space Detection

Abstract

1. Introduction

- We present a framework that integrates a human-inspired memory architecture into road segmentation. It implements episodic, semantic, and working memory subsystems, together with biologically inspired consolidation and forgetting mechanisms, to improve free road space detection.

- Our experiments show that the method outperforms state-of-the-art single-modality methods and approaches the performance of multimodal systems on challenging road segmentation benchmarks. We also present a detailed analysis of memory dynamics, retrieval mechanisms, and their impact on performance.

2. Related Work

2.1. Multimodal Approaches

2.2. Methods Based on Single-Modality

2.3. Research Gap

3. Methodology

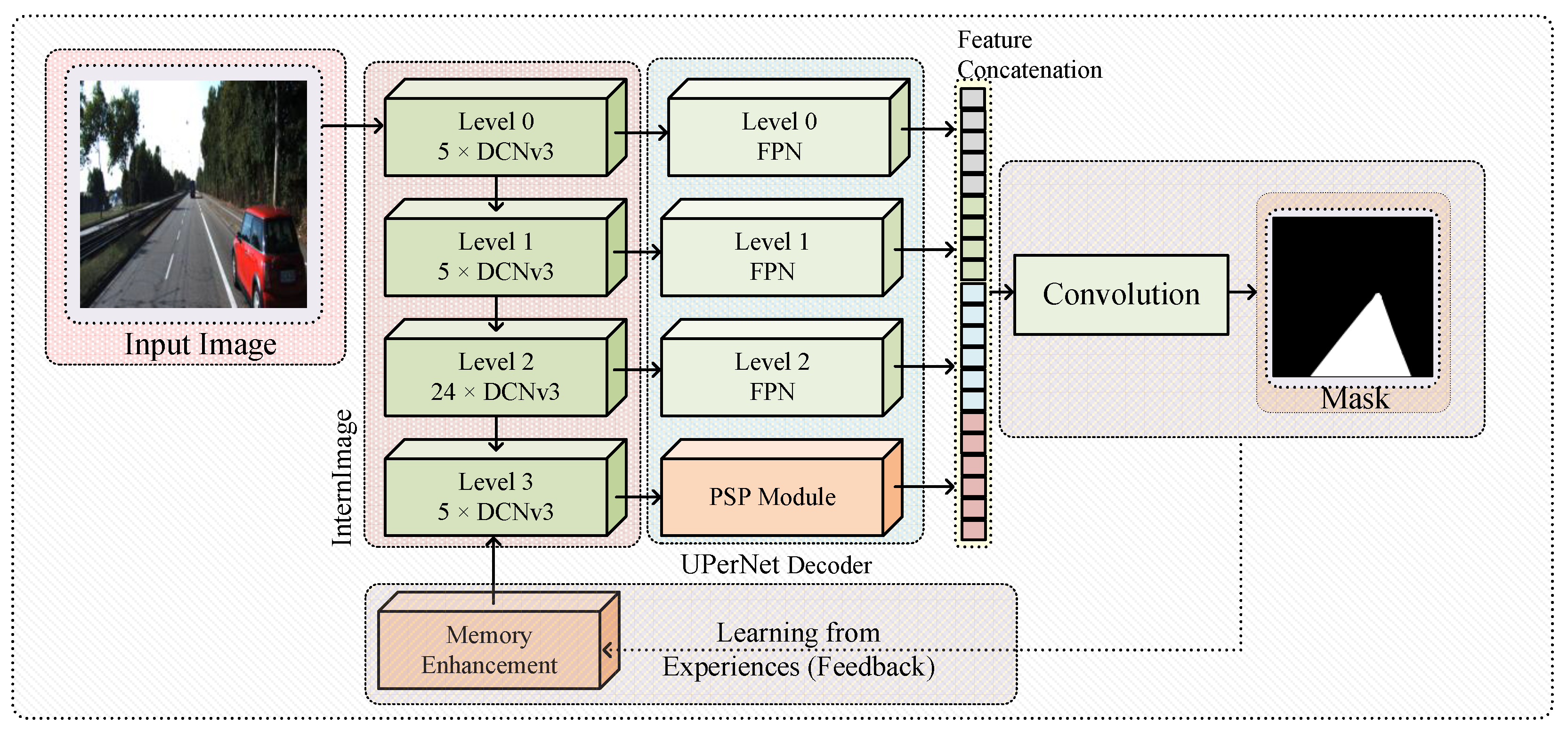

3.1. Overall Architecture

3.2. InternImage-XL Backbone with DCNv3

3.3. UPerNet Decoder Head

3.4. Human-like Memory Bank System

3.4.1. Memory Architecture Design

3.4.2. Experience Encoding and Memory Formation

3.4.3. Memory Recall and Integration

3.4.4. Memory-Guided Feature Enhancement

3.5. Training Strategy and Memory Dynamics

4. Experiments and Results

4.1. Datasets

4.2. Training and Testing Protocol

4.3. Experimental Setup

4.4. Evaluation Metrics

4.5. Comparison with State-of-the-Art Multimodal Methods

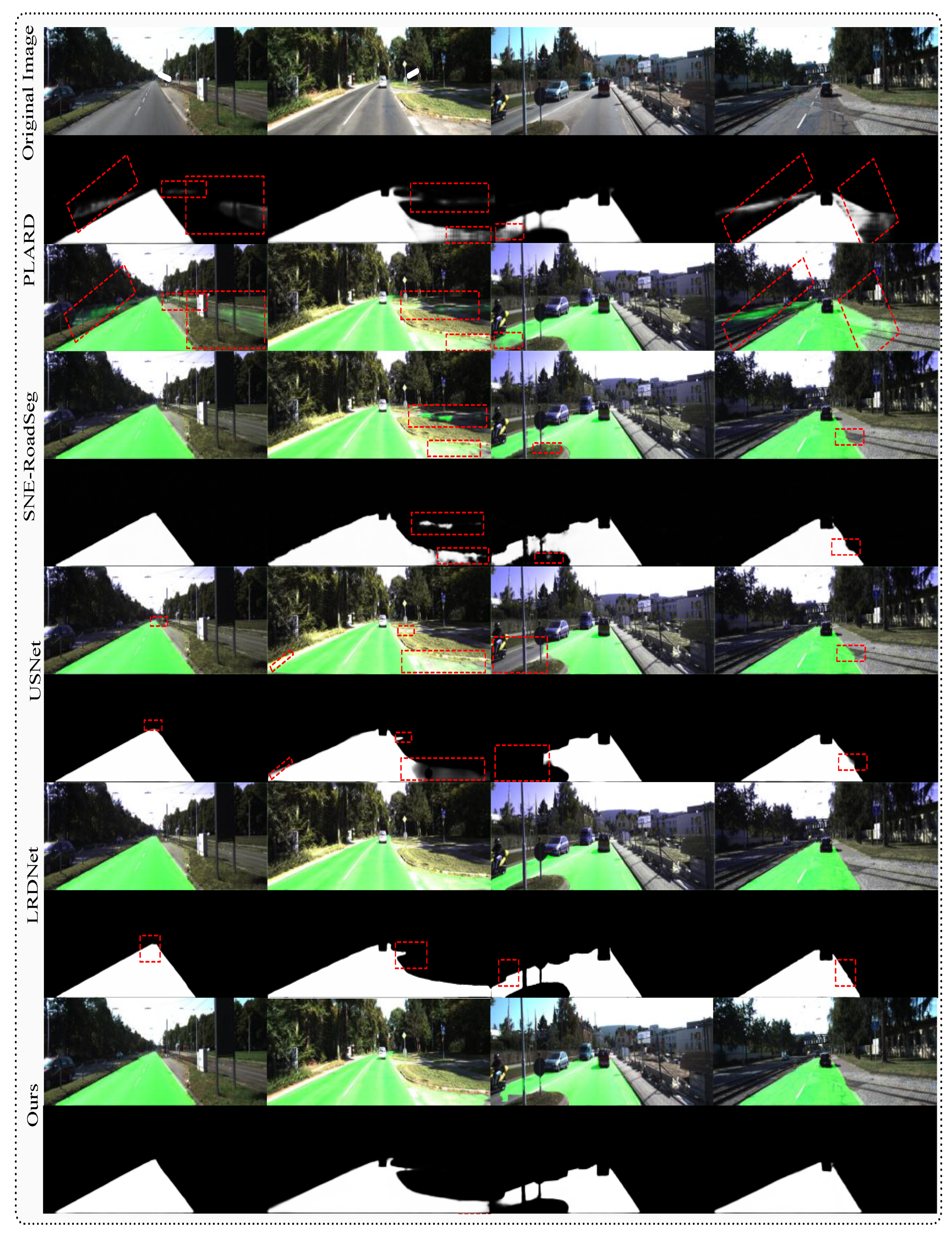

4.6. Comparison with State-of-the-Art Single-Modality Methods

4.7. Comprehensive Ablation Studies

4.7.1. Impact of Memory System Integration

4.7.2. Memory Bank Capacity Analysis

4.7.3. Memory Influence Weight Optimization

4.7.4. Loss Function Component Analysis

4.7.5. Pretrained Weight Initialization Impact

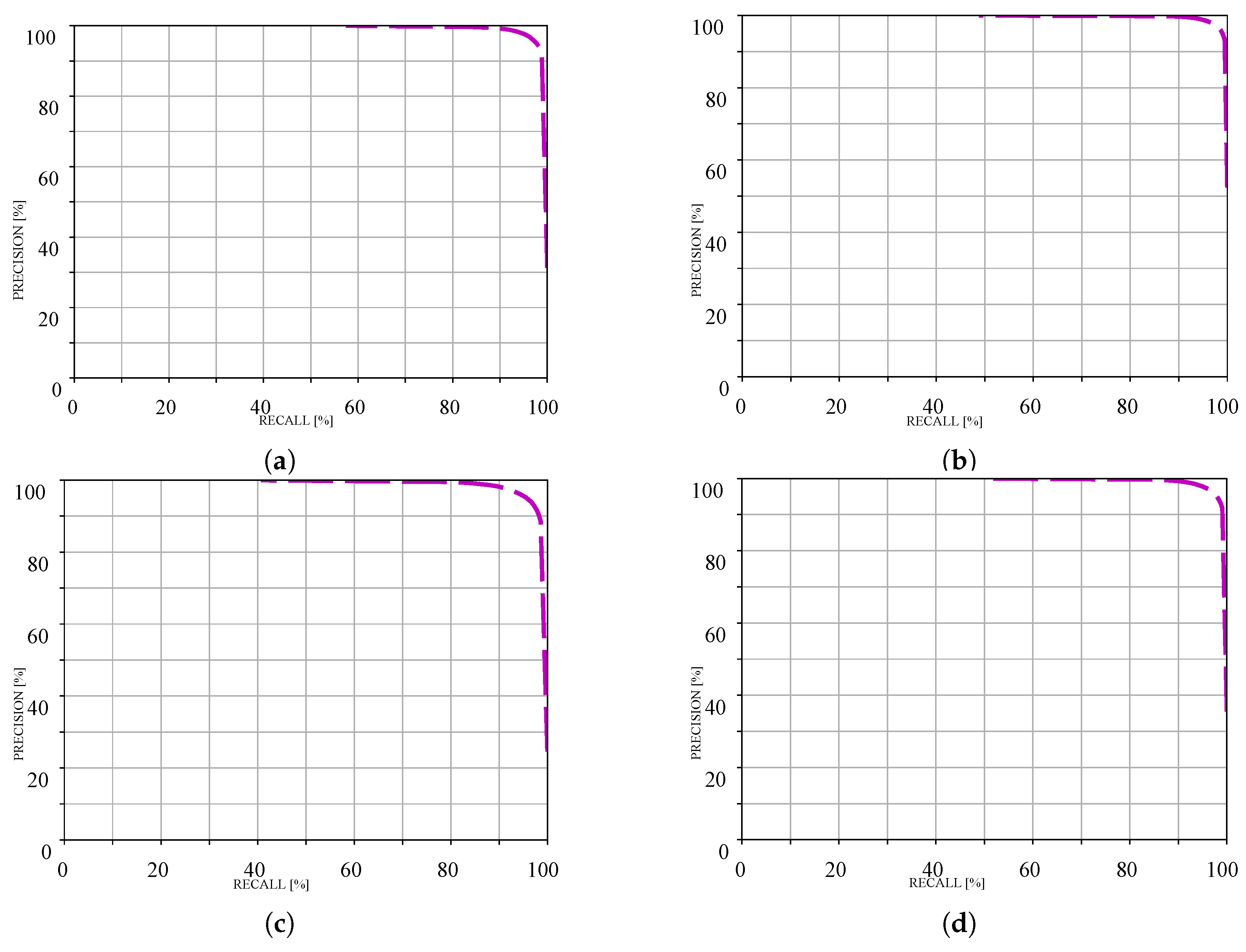

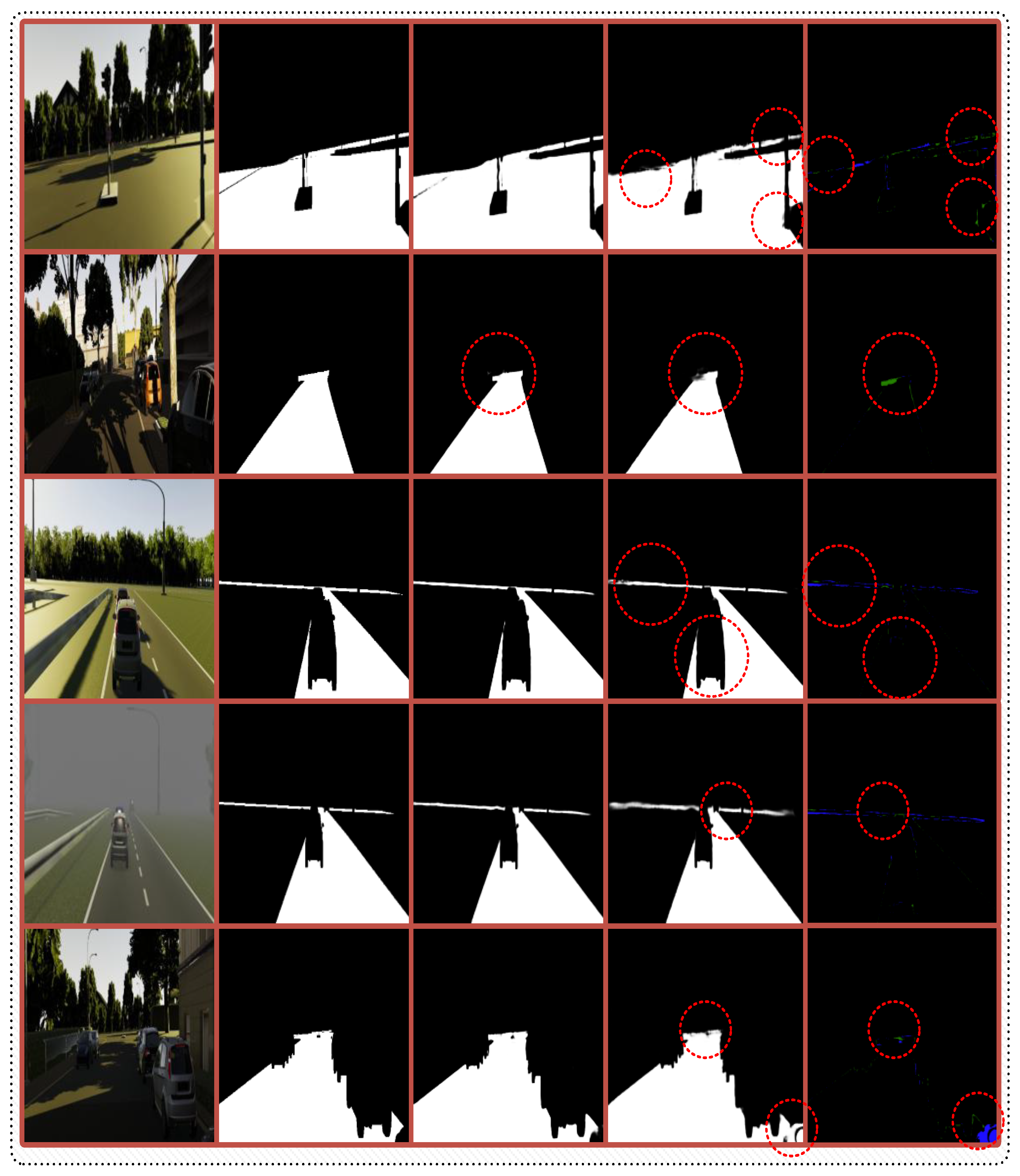

4.8. Performance on R2D

4.9. Performance on Cityscapes

4.10. Computational Efficiency Analysis

4.11. Qualitative Analysis

5. Limitations, Environmental Impact, and Future Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chen, X.; Dong, Y.; Li, X.; Zheng, X.; Liu, H.; Li, T. Drivable area recognition on unstructured roads for autonomous vehicles using an optimized bilateral neural network. Sci. Rep. 2025, 15, 13533.

- Zhao, J.; Wu, Y.; Deng, R.; Xu, S.; Gao, J.; Burke, A.F. A Survey of Autonomous Driving from a Deep Learning Perspective. ACM Comput. Surv. 2025, 57, 263.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Fritsch, J.; Kühnl, T.; Geiger, A. A new performance measure and evaluation benchmark for road detection algorithms. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems, ITSC 2013, The Hague, The Netherlands, 6–9 October 2013; pp. 1693–1700.

- Lin, H.Y.; Chang, C.K.; Tran, V.L. Lane detection networks based on deep neural networks and temporal information. Alex. Eng. J. 2024, 98, 10–18.

- Cabon, Y.; Murray, N.; Humenberger, M. Virtual KITTI 2. arXiv 2020, arXiv:2001.10773.

- Amponis, G.; Lagkas, T.; Argyriou, V.; Radoglou-Grammatikis, P.I.; Kyranou, K.; Makris, I.; Sarigiannidis, P.G. Channel-Aware QUIC Control for Enhanced CAM Communications in C-V2X Deployments Over Aerial Base Stations. IEEE Trans. Veh. Technol. 2024, 73, 9320–9333.

- Rae, J.W.; Hunt, J.J.; Danihelka, I.; Harley, T.; Senior, A.W.; Wayne, G.; Graves, A.; Lillicrap, T. Scaling Memory-Augmented Neural Networks with Sparse Reads and Writes. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016; pp. 3621–3629.

- Santoro, A.; Bartunov, S.; Botvinick, M.M.; Wierstra, D.; Lillicrap, T.P. Meta-Learning with Memory-Augmented Neural Networks. In Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York, NY, USA, 19–24 June 2016; JMLR: Norfolk, MA, USA, 2016; pp. 1842–1850.

- Tulving, E. Episodic and semantic memory. Organ. Mem. 1972, 1, 381–403.

- Baddeley, A. The episodic buffer: A new component of working memory? Trends Cogn. Sci. 2000, 4, 417–423.

- Wang, W.; Dai, J.; Chen, Z.; Huang, Z.; Li, Z.; Zhu, X.; Hu, X.; Lu, T.; Lu, L.; Li, H.; et al. InternImage: Exploring Large-Scale Vision Foundation Models with Deformable Convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 14408–14419.

- Wang, Z. Combining UPerNet and ConvNeXt for Contrails Identification to reduce Global Warming. arXiv 2023, arXiv:2310.04808.

- Khan, A.A.; Shao, J.; Rao, Y.; She, L.; Shen, H.T. LRDNet: Lightweight LiDAR Aided Cascaded Feature Pools for Free Road Space Detection. IEEE Trans. Multim. 2025, 27, 652–664.

- Xue, F.; Chang, Y.; Xu, W.; Liang, W.; Sheng, F.; Ming, A. Evidence-Based Real-Time Road Segmentation With RGB-D Data Augmentation. IEEE Trans. Intell. Transp. Syst. 2025, 26, 1482–1493.

- Zhou, H.; Xue, F.; Li, Y.; Gong, S.; Li, Y.; Zhou, Y. Exploiting Low-Level Representations for Ultra-Fast Road Segmentation. IEEE Trans. Intell. Transp. Syst. 2024, 25, 9909–9919.

- Gu, S.; Yang, J.; Kong, H. A Cascaded LiDAR-Camera Fusion Network for Road Detection. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA 2021, Xi’an, China, 30 May–5 June 2021; pp. 13308–13314.

- Chen, Z.; Zhang, J.; Tao, D. Progressive LiDAR adaptation for road detection. IEEE/CAA J. Autom. Sin. 2019, 6, 693–702.

- Chang, Y.; Xue, F.; Sheng, F.; Liang, W.; Ming, A. Fast Road Segmentation via Uncertainty-aware Symmetric Network. In Proceedings of the 2022 International Conference on Robotics and Automation, ICRA 2022, Philadelphia, PA, USA, 23–27 May 2022; pp. 11124–11130.

- Wang, H.; Fan, R.; Cai, P.; Liu, M. SNE-RoadSeg+: Rethinking Depth-Normal Translation and Deep Supervision for Freespace Detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2021, Prague, Czech Republic, 27 September–1 October 2021; pp. 1140–1145.

- Feng, Y.; Ma, Y.; Andreev, S.; Chen, Q.; Dvorkovich, A.V.; Pitas, I.; Fan, R. SNE-RoadSegV2: Advancing Heterogeneous Feature Fusion and Fallibility Awareness for Freespace Detection. IEEE Trans. Instrum. Meas. 2025, 74, 2512109.

- Chen, S.; Han, T.; Zhang, C.; Liu, W.; Su, J.; Wang, Z.; Cai, G. Depth Matters: Exploring Deep Interactions of RGB-D for Semantic Segmentation in Traffic Scenes. arXiv 2024, arXiv:2409.07995.

- Li, J.; Zhang, Y.; Yun, P.; Zhou, G.; Chen, Q.; Fan, R. RoadFormer: Duplex Transformer for RGB-Normal Semantic Road Scene Parsing. IEEE Trans. Intell. Veh. 2024, 9, 5163–5172.

- Huang, J.; Li, J.; Jia, N.; Sun, Y.; Liu, C.; Chen, Q.; Fan, R. RoadFormer+: Delivering RGB-X Scene Parsing through Scale-Aware Information Decoupling and Advanced Heterogeneous Feature Fusion. IEEE Trans. Intell. Veh. 2024, 10, 3156–3165.

- Wang, H.; Fan, R.; Sun, Y.; Liu, M. Dynamic Fusion Module Evolves Drivable Area and Road Anomaly Detection: A Benchmark and Algorithms. IEEE Trans. Cybern. 2022, 52, 10750–10760.

- Milli, E.; Erkent, Ö.; Yilmaz, A.E. Multi-Modal Multi-Task (3MT) Road Segmentation. IEEE Robot. Autom. Lett. 2023, 8, 5408–5415.

- Sun, L.; Zhang, H.; Yin, W. Pseudo-LiDAR-Based Road Detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5386–5398.

- Bayón-Gutiérrez, M.; García-Ordás, M.T.; Alaiz-Moretón, H.; Aveleira-Mata, J.; Rubio-Martín, S.; Benítez-Andrades, J.A. TEDNet: Twin Encoder Decoder Neural Network for 2D Camera and LiDAR Road Detection. Log. J. IGPL 2024, 33, jzae048.

- Bayón-Gutiérrez, M.; Benítez-Andrades, J.A.; Rubio-Martín, S.; Aveleira-Mata, J.; Alaiz-Moretón, H.; García-Ordás, M.T. Roadway Detection Using Convolutional Neural Network Through Camera and LiDAR Data. In Proceedings of the Hybrid Artificial Intelligent Systems—17th International Conference, HAIS 2022, Salamanca, Spain, 5–7 September 2022; pp. 419–430.

- Ni, T.; Zhan, X.; Luo, T.; Liu, W.; Shi, Z.; Chen, J. UdeerLID+: Integrating LiDAR, Image, and Relative Depth with Semi-Supervised. arXiv 2024, arXiv:2409.06197.

- Gu, S.; Zhang, Y.; Tang, J.; Yang, J.; Kong, H. Road Detection through CRF based LiDAR-Camera Fusion. In Proceedings of the International Conference on Robotics and Automation, ICRA 2019, Montreal, QC, Canada, 20–24 May 2019; pp. 3832–3838.

- Lyu, Y.; Bai, L.; Huang, X. Road Segmentation using CNN and Distributed LSTM. In Proceedings of the IEEE International Symposium on Circuits and Systems, ISCAS 2019, Sapporo, Japan, 26–29 May 2019; pp. 1–5.

- Lyu, Y.; Bai, L.; Huang, X. ChipNet: Real-Time LiDAR Processing for Drivable Region Segmentation on an FPGA. IEEE Trans. Circuits Syst. I Regul. Pap. 2019, 66-I, 1769–1779.

- Muñoz-Bulnes, J.; Fernández, C.; Parra, I.; Llorca, D.F.; Sotelo, M.Á. Deep fully convolutional networks with random data augmentation for enhanced generalization in road detection. In Proceedings of the 20th IEEE International Conference on Intelligent Transportation Systems, ITSC 2017, Yokohama, Japan, 16–19 October 2017; pp. 366–371.

- Fan, R.; Wang, H.; Cai, P.; Wu, J.; Bocus, M.J.; Qiao, L.; Liu, M. Learning Collision-Free Space Detection from Stereo Images: Homography Matrix Brings Better Data Augmentation. IEEE/ASME Trans. Mechatron. 2022, 27, 225–233.

- Sun, J.; Kim, S.; Lee, S.; Kim, Y.; Ko, S. Reverse and Boundary Attention Network for Road Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshops, ICCV Workshops 2019, Seoul, Republic of Korea, 27–28 October 2019; pp. 876–885.

- Oeljeklaus, M. An integrated approach for traffic scene understanding from monocular cameras: Towards resource-constrained perception of environment representations with multi-task convolutional neural networks. PhD Thesis, Technical University of Dortmund, Dortmund, Germany, 2021.

- Li, H.; Zhang, Y.; Zhang, Y.; Li, H.; Sang, L. DCNv3: Towards Next Generation Deep Cross Network for CTR Prediction. arXiv 2024, arXiv:2407.13349.

- Lin, T.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Fan, R.; Wang, H.; Cai, P.; Liu, M. SNE-RoadSeg: Incorporating Surface Normal Information into Semantic Segmentation for Accurate Freespace Detection. In Proceedings of the Computer Vision—ECCV 2020—16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 340–356.

- Lacoste, A.; Luccioni, A.; Schmidt, V.; Dandres, T. Quantifying the Carbon Emissions of Machine Learning. arXiv 2019, arXiv:1910.09700.

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Learning rate | |
| LR scheduler | ReduceLROnPlateau |
| Scheduler patience | 2 |
| Scheduler factor | 0.1 |
| Memory weight | 0.2 |
| Loss function | Combined (Dice + BCE) |
| Epochs | 100 |
| Batch size | 2 |
| Image size | 640 × 640 |
| Memory size | 200 |
| Top-k memories | 9 |
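The scheduler settings in the table above (patience 2, factor 0.1) can be illustrated with a minimal, dependency-free sketch of a reduce-on-plateau rule. It follows the commonly used convention that the rate is cut once the number of consecutive non-improving epochs exceeds the patience. The class name `PlateauScheduler`, the initial rate `1e-4`, and the loss sequence are assumptions made for illustration only; the table does not state the learning rate.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PlateauScheduler:
    """Hypothetical helper: cut the learning rate when validation loss stalls."""

    lr: float                      # starting rate; not given in the table
    patience: int = 2              # "Scheduler patience" from the table
    factor: float = 0.1            # "Scheduler factor" from the table
    best: Optional[float] = None   # lowest validation loss seen so far
    bad_epochs: int = 0            # consecutive epochs without improvement

    def step(self, val_loss: float) -> float:
        """Record one epoch's validation loss and return the current rate."""
        if self.best is None or val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        # Reduce only once the stalled epochs exceed the patience.
        if self.bad_epochs > self.patience:
            self.lr *= self.factor
            self.bad_epochs = 0
        return self.lr


scheduler = PlateauScheduler(lr=1e-4)  # 1e-4 is an illustrative placeholder
losses = [0.50, 0.40, 0.41, 0.42, 0.43, 0.39]
history = [scheduler.step(loss) for loss in losses]
```

With this loss sequence the rate stays at its initial value for the first four epochs and drops by a factor of ten at the fifth, after three consecutive epochs without improvement.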

| Method | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| DiPFormer [22] | 0.9757 | 0.9294 | 0.9734 | 0.9779 | 0.0147 | 0.0221 |
| RoadFormer+ [24] | 0.9756 | 0.9374 | 0.9743 | 0.9769 | 0.0142 | 0.0231 |
| SNE-RoadSegV2 [21] | 0.9755 | 0.9398 | 0.9757 | 0.9753 | 0.0134 | 0.0247 |
| UdeerLID+ [30] | 0.9755 | 0.9398 | 0.9746 | 0.9765 | 0.0140 | 0.0235 |
| RoadFormer [23] | 0.9750 | 0.9385 | 0.9716 | 0.9784 | 0.0157 | 0.0216 |
| SNE-RoadSeg+ [20] | 0.9750 | 0.9398 | 0.9741 | 0.9758 | 0.0143 | 0.0242 |
| Pseudo-LiDAR [27] | 0.9742 | 0.9409 | 0.9730 | 0.9754 | 0.0149 | 0.0246 |
| Evi-RoadSeg [15] | 0.9708 | 0.9354 | 0.9657 | 0.9759 | 0.0191 | 0.0241 |
| PLARD [18] | 0.9703 | 0.9403 | 0.9719 | 0.9688 | 0.0154 | 0.0312 |
| LRDNet+ [14] | 0.9695 | 0.9222 | 0.9688 | 0.9702 | 0.0172 | 0.0298 |
| USNet [19] | 0.9689 | 0.9325 | 0.9651 | 0.9727 | 0.0194 | 0.0273 |
| LRDNet (L) [14] | 0.9687 | 0.9191 | 0.9673 | 0.9701 | 0.0181 | 0.0299 |
| DFM-RTFNet [25] | 0.9678 | 0.9405 | 0.9662 | 0.9693 | 0.0187 | 0.0307 |
| SNE-RoadSeg [41] | 0.9675 | 0.9407 | 0.9690 | 0.9661 | 0.0170 | 0.0339 |
| LRDNet (S) [14] | 0.9674 | 0.9254 | 0.9679 | 0.9669 | 0.0176 | 0.0331 |
| 3MT-RoadSeg [26] | 0.9660 | 0.9390 | 0.9646 | 0.9673 | 0.0195 | 0.0327 |
| TEDNet [28] | 0.9462 | 0.9305 | 0.9428 | 0.9496 | 0.0317 | 0.0504 |
| CLRD [29] | 0.9420 | 0.9266 | 0.9425 | 0.9414 | 0.0316 | 0.0586 |
| CLCFNet [17] | 0.9638 | 0.9085 | 0.9638 | 0.9639 | 0.0199 | 0.0361 |
| LFD-RoadSeg [16] | 0.9521 | 0.9371 | 0.9535 | 0.9508 | 0.0256 | 0.0492 |
| Ours | 0.9666 | 0.9395 | 0.9646 | 0.9687 | 0.0196 | 0.0313 |
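The columns in the table above are related to one another, which a short sketch can make explicit. It assumes the usual pixel-wise definitions: PRE, REC, FPR, and FNR come from the confusion counts, the F-measure is the harmonic mean of PRE and REC, and MaxF is the largest F-measure over a sweep of confidence thresholds. The function names, the toy scores, and the threshold grid are illustrative assumptions; AP is not covered.

```python
from typing import Dict, Sequence


def f_measure(pre: float, rec: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    return 2 * pre * rec / (pre + rec) if pre + rec else 0.0


def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Pixel-wise rates from true/false positive and negative counts."""
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    fnr = fn / (fn + tp) if fn + tp else 0.0
    return {"PRE": pre, "REC": rec, "FPR": fpr, "FNR": fnr,
            "MaxF": f_measure(pre, rec)}


def max_f(probs: Sequence[float], labels: Sequence[int],
          thresholds: Sequence[float]) -> Dict[str, float]:
    """Sweep thresholds and keep the metrics at the best F-measure."""
    best: Dict[str, float] = {}
    for t in thresholds:
        tp = fp = tn = fn = 0
        for p, y in zip(probs, labels):
            predicted_road = p >= t
            if predicted_road and y == 1:
                tp += 1
            elif predicted_road:
                fp += 1
            elif y == 1:
                fn += 1
            else:
                tn += 1
        metrics = confusion_metrics(tp, fp, tn, fn)
        if not best or metrics["MaxF"] > best["MaxF"]:
            best = {**metrics, "threshold": t}
    return best


# Toy example: six pixels with predicted road probabilities and labels.
best = max_f([0.9, 0.8, 0.6, 0.4, 0.3, 0.1], [1, 1, 0, 1, 0, 0],
             thresholds=[0.25, 0.5, 0.75])

# Consistency check against the "Ours" row: PRE 0.9646 and REC 0.9687.
check = round(f_measure(0.9646, 0.9687), 4)  # 0.9666, the reported MaxF
```

The same definitions explain why FNR equals 1 − REC in every row, for example 1 − 0.9687 = 0.0313 for the proposed method.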

| Method | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| RBANet [36] | 0.9630 | 0.8972 | 0.9514 | 0.9750 | 0.0275 | 0.0250 |
| CLCFNet (LiDAR) [17] | 0.9597 | 0.9061 | 0.9612 | 0.9582 | 0.0213 | 0.0418 |
| LC-CRF [31] | 0.9568 | 0.8834 | 0.9362 | 0.9783 | 0.0367 | 0.0217 |
| Hadamard-FCN [37] | 0.9485 | 0.9148 | 0.9481 | 0.9489 | 0.0286 | 0.0511 |
| HA-DeepLabv3+ [35] | 0.9483 | 0.9324 | 0.9477 | 0.9489 | 0.0288 | 0.0511 |
| DEEP-DIG [34] | 0.9398 | 0.9365 | 0.9426 | 0.9369 | 0.0314 | 0.0631 |
| LFD-RoadSeg [16] | 0.9349 | 0.9219 | 0.9346 | 0.9352 | 0.0213 | 0.0648 |
| RoadNet3 [32] | 0.9295 | 0.9193 | 0.9332 | 0.9258 | 0.0216 | 0.0742 |
| ChipNet [33] | 0.9291 | 0.8495 | 0.9098 | 0.9491 | 0.0306 | 0.0509 |
| Ours | 0.9666 | 0.9395 | 0.9646 | 0.9687 | 0.0196 | 0.0313 |

| Benchmark | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| UM road | 0.9655 | 0.9358 | 0.9646 | 0.9664 | 0.0161 | 0.0336 |
| UMM road | 0.9746 | 0.9557 | 0.9707 | 0.9786 | 0.0325 | 0.0214 |
| UU road | 0.9537 | 0.9276 | 0.9508 | 0.9566 | 0.0161 | 0.0434 |
| Urban road | 0.9666 | 0.9395 | 0.9646 | 0.9687 | 0.0196 | 0.0313 |

| Loss Function | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| BCE loss | 0.9905 | 0.9995 | 0.9920 | 0.9891 | 0.0024 | 0.0109 |
| Dice loss | 0.9873 | 0.9941 | 0.9880 | 0.9866 | 0.0036 | 0.0134 |
| Combined (BCE + Dice) | 0.9905 | 0.9995 | 0.9912 | 0.9898 | 0.0026 | 0.0102 |
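The three loss variants compared above can be sketched for a flattened binary road mask as follows. This is a minimal illustration rather than the training code: the unweighted sum of the two terms, the clamping constant `eps`, and the Dice smoothing term `smooth` are all assumptions, since the table only names the components.

```python
import math
from typing import Sequence


def bce_loss(probs: Sequence[float], targets: Sequence[int],
             eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over pixels; probabilities are clamped."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


def dice_loss(probs: Sequence[float], targets: Sequence[int],
              smooth: float = 1.0) -> float:
    """One minus the soft Dice coefficient between prediction and mask."""
    intersection = sum(p * y for p, y in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def combined_loss(probs: Sequence[float], targets: Sequence[int]) -> float:
    """Assumed combination: plain sum of the BCE and Dice terms."""
    return bce_loss(probs, targets) + dice_loss(probs, targets)


mask = [1, 0, 1]
perfect = combined_loss([1.0, 0.0, 1.0], mask)   # close to zero
inverted = combined_loss([0.0, 1.0, 0.0], mask)  # heavily penalized
```

BCE scores each pixel independently, whereas Dice measures region overlap, which is one common motivation for combining the two.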

| Initialization | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| Training from scratch | 0.9896 | 0.9991 | 0.9905 | 0.9887 | 0.0028 | 0.0113 |
| Pretrained InternImage | 0.9893 | 0.9992 | 0.9908 | 0.9878 | 0.0027 | 0.0122 |

| Method | MaxF | PRE | REC |
|---|---|---|---|
| SNE-RoadSeg [41] | 0.9505 | 0.9450 | 0.9561 |
| LRDNet+ [14] | 0.9459 | 0.9382 | 0.9538 |
| LRDNet (L) [14] | 0.9406 | 0.9462 | 0.9350 |
| LRDNet (S) [14] | 0.9373 | 0.9325 | 0.9421 |
| USNet [19] | 0.9366 | 0.9310 | 0.9423 |
| RBANet [36] | 0.9329 | 0.9354 | 0.9305 |
| DFM-RTFNet [25] | 0.9298 | 0.9275 | 0.9321 |
| 3MT-RoadSeg [26] | 0.9287 | 0.9312 | 0.9263 |
| TEDNet [28] | 0.9156 | 0.9089 | 0.9225 |
| CLCFNet [17] | 0.9145 | 0.9201 | 0.9090 |
| Ours | 0.9490 | 0.9545 | 0.9436 |

| Method | MaxF | PRE | REC |
|---|---|---|---|
| SNE-RoadSeg [41] | 0.9275 | 0.9290 | 0.9261 |
| USNet [19] | 0.9269 | 0.9201 | 0.9337 |
| LRDNet+ [14] | 0.9265 | 0.9228 | 0.9302 |
| LRDNet (L) [14] | 0.9247 | 0.9098 | 0.9401 |
| HA-DeepLabv3+ [35] | 0.9233 | 0.9277 | 0.9189 |
| LRDNet (S) [14] | 0.9176 | 0.8805 | 0.9580 |
| DFM-RTFNet [25] | 0.9134 | 0.9156 | 0.9112 |
| 3MT-RoadSeg [26] | 0.9089 | 0.9123 | 0.9055 |
| RBANet [36] | 0.8982 | 0.9014 | 0.8950 |
| TEDNet [28] | 0.8945 | 0.8976 | 0.8914 |
| CLCFNet [17] | 0.8923 | 0.8845 | 0.9003 |
| CLRD [29] | 0.8867 | 0.8901 | 0.8834 |
| Ours | 0.9189 | 0.9259 | 0.9120 |

| Model | Params. (M) | FLOPs (G) |
|---|---|---|
| LRDNet+ | 28.5 | 336 |
| LRDNet (L) | 19.5 | 173 |
| SNE-RoadSeg | 201.3 | 1950.2 |
| USNet | 30.7 | 78.2 |
| PLARD | 76.9 | 1147.6 |
| RBANet | 42.1 | 156.8 |
| Ours | 358 | 476 |

| Memory Weight | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| | 0.9905 | 0.9992 | 0.9914 | 0.9896 | 0.0026 | 0.0104 |
| | 0.9900 | 0.9991 | 0.9908 | 0.9892 | 0.0027 | 0.0108 |
| | 0.9907 | 0.9995 | 0.9918 | 0.9895 | 0.0024 | 0.0105 |

| Memory Size | MaxF | AP | PRE | REC | FPR | FNR |
|---|---|---|---|---|---|---|
| 50 memories | 0.9896 | 0.9994 | 0.9910 | 0.9882 | 0.0027 | 0.0118 |
| 100 memories | 0.9893 | 0.9994 | 0.9904 | 0.9882 | 0.0029 | 0.0118 |
| 200 memories | 0.9899 | 0.9993 | 0.9911 | 0.9886 | 0.0026 | 0.0114 |
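To make the roles of memory size, top-k recall, and memory weight concrete, the following sketch shows one way a capacity-bounded memory bank could use these three parameters. It is a simplified illustration under stated assumptions, not the architecture evaluated here: it uses a single store instead of separate episodic, semantic, and working subsystems, cosine similarity for recall, oldest-first eviction as a stand-in for forgetting, and a linear blend for feature enhancement. The class `MemoryBank` and its methods are hypothetical names.

```python
import math
from collections import deque
from typing import Deque, List, Sequence

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryBank:
    """Hypothetical bounded store with top-k recall and weighted blending."""

    def __init__(self, capacity: int = 200, top_k: int = 9,
                 weight: float = 0.2) -> None:
        # deque(maxlen=...) drops the oldest entry once capacity is reached.
        self.items: Deque[Vector] = deque(maxlen=capacity)
        self.top_k = top_k
        self.weight = weight

    def store(self, feature: Sequence[float]) -> None:
        self.items.append(list(feature))

    def recall(self, query: Sequence[float]) -> List[Vector]:
        """Return up to top_k stored features most similar to the query."""
        ranked = sorted(self.items, key=lambda m: cosine(query, m),
                        reverse=True)
        return ranked[: self.top_k]

    def enhance(self, query: Sequence[float]) -> Vector:
        """Blend the query with the mean of its recalled memories."""
        recalled = self.recall(query)
        if not recalled:
            return list(query)
        mean = [sum(col) / len(recalled) for col in zip(*recalled)]
        return [(1.0 - self.weight) * q + self.weight * m
                for q, m in zip(query, mean)]


# Small settings so the behavior is easy to follow by hand.
bank = MemoryBank(capacity=3, top_k=2, weight=0.2)
for feature in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]):
    bank.store(feature)

recalled = bank.recall([1.0, 0.0])
enhanced = bank.enhance([1.0, 0.0])
```

In this toy run the first stored feature is evicted when the fourth arrives, the two most similar remaining memories are recalled, and their mean contributes 20% of the enhanced feature. The defaults mirror the reported configuration (200 memories, top-9 recall, memory weight 0.2).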

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shafiq, S.; Khan, A.A.; Shao, J. MemRoadNet: Human-like Memory Integration for Free Road Space Detection. Sensors 2025, 25, 6600. https://doi.org/10.3390/s25216600
