3.3.1. Overall

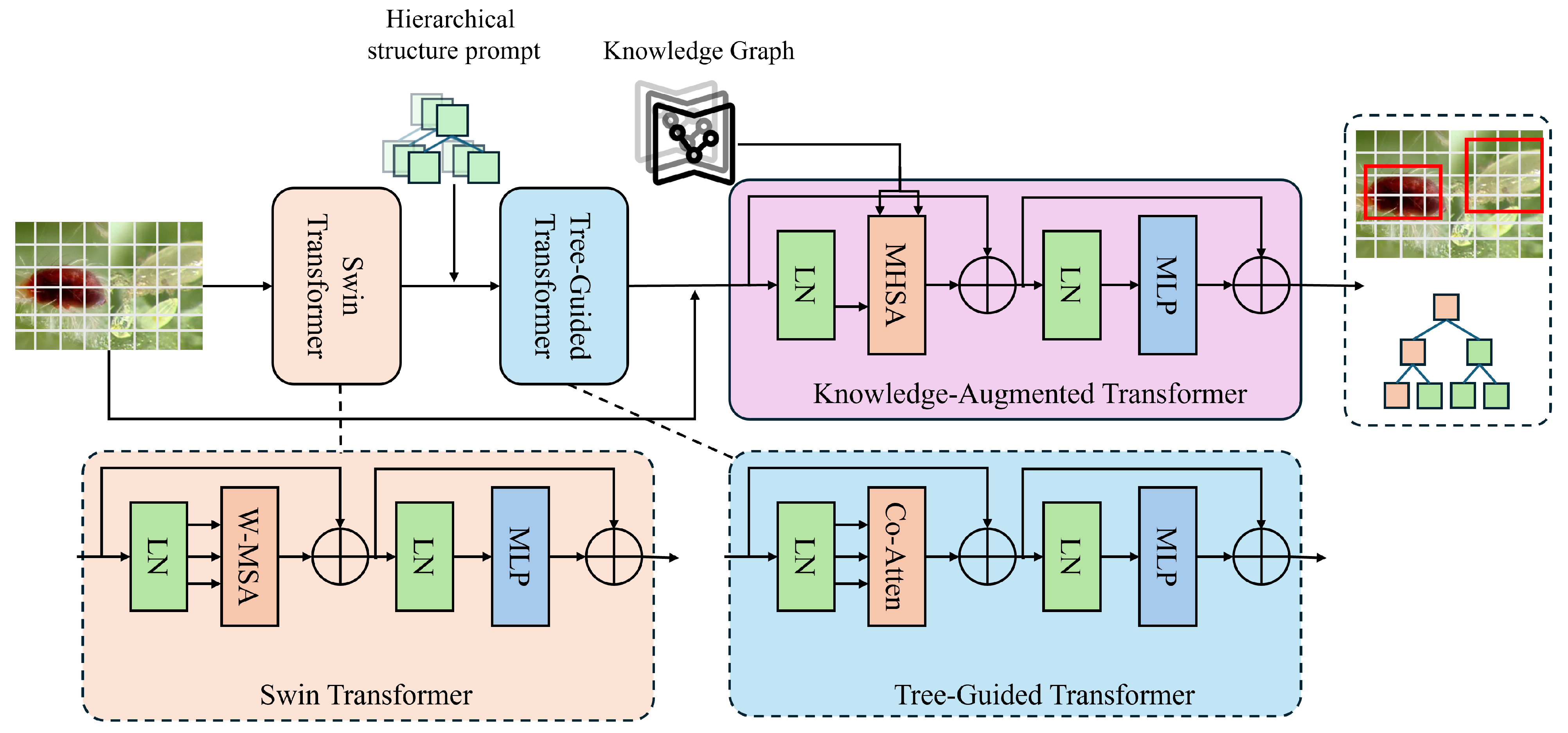

The proposed pest–predator hierarchical recognition and co-occurrence modeling framework, as shown in Figure 2, has been constructed as a multi-module collaborative deep learning system, designed to address ecological multi-object recognition and semantic reasoning tasks in agricultural field images. The input to the model consists of preprocessed images and corresponding structured label information. A Swin Transformer-based visual backbone is employed to extract multi-scale image features by modeling both spatial and semantic relationships through a hierarchical windowed self-attention mechanism, producing high-dimensional regional feature representations. Subsequently, detected object regions are processed via RoI alignment to extract local feature vectors, which are then embedded and forwarded to the Tree-guided Transformer module. This module incorporates a pre-defined three-level ecological taxonomy of pests and predators to construct hierarchical prompts, guiding the large language model to generate class predictions step by step, enabling structured recognition from order to species with enhanced generalizability and interpretability.

To further improve the model’s capacity to perceive ecological relationships among targets, a knowledge-augmented transformer module is introduced. This component integrates semantic embeddings of entity nodes from an agricultural KG into the attention layers of the visual backbone. These embeddings are fused with the transformer’s multi-head attention computations, enabling the model to capture ecological semantic patterns such as predation, cohabitation, and competition under multi-object coexistence conditions. A co-occurrence attention mechanism is designed within the attention layers, incorporating structural relational priors between entities from the KG into the attention weighting process, thereby enhancing the model’s ability to represent semantic dependencies among objects.

The model produces two main outputs: the hierarchical classification path for each detected target and the predicted ecological co-occurrence relationships between object pairs. These are jointly optimized through a multi-task loss function that integrates classification accuracy, structural path consistency, and ecological relationship reasoning. This design ensures that the model not only achieves high recognition performance but also exhibits structural and relational interpretability, facilitating a transition from visual recognition to ecological understanding [26].

3.3.2. Tree-Guided Transformer

The Tree-guided Transformer module is designed to enable the Transformer architecture to perform structured and hierarchy-aware semantic reasoning for object recognition. In pest–predator co-occurrence imagery, conventional classification models often adopt a flat label structure, disregarding the intrinsic semantic hierarchy among biological entities. To address this limitation, a three-level ecological taxonomy tree (spanning order, family, and species) has been integrated into the Tree-Guided Prompt design. This structure supports step-wise semantic generation, combined with multi-step decoding, shareable cache optimization, and hierarchical consistency constraints, forming a complete hierarchical reasoning pipeline.

As shown in Figure 3, the Tree-guided Transformer accepts region-level visual features extracted via RoI alignment as guidance inputs to a multi-round prompting sequence. Each round corresponds to one level in the taxonomy tree, where the output from the previous level is concatenated as context input to the subsequent prompt, forming a progressive reasoning path. This process can be formalized as a generation tree $\mathcal{T} = \{g_1, g_2, g_3\}$, where $g_i$ denotes the generation node at level $i$, and the final output path is $\hat{P} = (\hat{y}_1, \hat{y}_2, \hat{y}_3)$. At each generation step $i$, the optimal predicted class is computed as

$$\hat{y}_i = \arg\max_{y \in \mathcal{C}_i} P\left(y \mid x, \hat{y}_{<i}\right),$$

where $\mathcal{C}_i$ denotes the candidate label set at level $i$, $x$ represents the visual guidance feature, and $\hat{y}_{<i}$ is the prefix of the previously generated path. To improve decoding reliability, a self-consistency decoding strategy is incorporated, where the most probable output paths are selected and cross-validated. Additionally, a small number of annotated examples are employed to facilitate few-shot prompting, thereby enhancing the semantic accuracy and robustness of the generated label paths.
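
The level-by-level decoding and the self-consistency vote described above can be illustrated with a minimal sketch. The taxonomy fragment, the `toy_scorer` stand-in for $P(y \mid x, \hat{y}_{<i})$, and all function names are assumptions made for illustration; in the actual framework the scores would come from the LLM conditioned on the RoI feature.

```python
from collections import Counter

# Hypothetical fragment of a three-level taxonomy: parent -> candidate children.
TAXONOMY = {
    "ROOT": ["Coleoptera", "Hemiptera"],
    "Coleoptera": ["Coccinellidae"],
    "Hemiptera": ["Aphididae"],
    "Coccinellidae": ["Harmonia axyridis", "Coccinella septempunctata"],
    "Aphididae": ["Aphis gossypii", "Myzus persicae"],
}


def decode_path(score_fn, x, depth=3):
    """Greedy decoding: at each level pick the candidate with the highest
    score given the visual feature x and the already generated prefix."""
    prefix = []
    parent = "ROOT"
    for _ in range(depth):
        candidates = TAXONOMY[parent]
        best = max(candidates, key=lambda y: score_fn(y, x, tuple(prefix)))
        prefix.append(best)
        parent = best  # the chosen label restricts the next candidate set
    return tuple(prefix)


def self_consistent_path(score_fns, x):
    """Majority vote over the paths decoded by several scorers or samples."""
    votes = Counter(decode_path(fn, x) for fn in score_fns)
    return votes.most_common(1)[0][0]


def toy_scorer(preferred):
    """Stand-in for the model score: 1.0 for preferred labels, else 0.0."""
    return lambda y, x, prefix: 1.0 if y in preferred else 0.0


ladybird = toy_scorer({"Coleoptera", "Coccinellidae", "Harmonia axyridis"})
aphid = toy_scorer({"Hemiptera", "Aphididae", "Aphis gossypii"})

# Two of three decoding runs agree on the ladybird path, so it wins the vote.
path = self_consistent_path([ladybird, ladybird, aphid], x=None)
```

Restricting each candidate set to the children of the previously chosen node is what keeps every decoded path valid in the taxonomy by construction.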

The LLaVA model is adopted as the backbone LLM, configured with an embedding dimension of 768 and 12 transformer layers. A shareable key–value cache mechanism is employed to store intermediate results of different prompts, reducing computational overhead across multi-round generation. Prompts are encoded using a structured template: “A target has been detected. Please identify its category at the {taxonomy level} from the candidates: $\mathcal{C}_i$,” where $\mathcal{C}_i$ is the candidate class set at that level. To further reinforce structural supervision, a hierarchical loss function, TreePathLoss, is introduced. The consistency term is defined as

$$\mathcal{L}_{\text{path}} = -\sum_{i=1}^{3} w_i \log P\left(y_i \mid x, y_{<i}\right),$$

where $y_i$ is the ground-truth label at level $i$ and $w_i$ assigns higher weights to lower levels of the tree to emphasize the importance of fine-grained classification. This loss formulation penalizes inconsistent hierarchical paths and mitigates error propagation caused by incorrect upper-level predictions.
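
As a rough illustration of the prompt template and of a level-weighted path loss, the following sketch assumes that the consistency term is a weighted negative log-likelihood of the ground-truth path. The weight values, the function names `build_prompt` and `tree_path_loss`, and the toy probabilities are hypothetical and are not taken from the experimental configuration.

```python
import math


def build_prompt(taxonomy_level, candidates):
    """Fill the structured template with a level name and its candidate set."""
    return (
        "A target has been detected. Please identify its category at the "
        f"{taxonomy_level} from the candidates: {', '.join(candidates)}"
    )


def tree_path_loss(level_probs, true_path, weights=(0.2, 0.3, 0.5)):
    """Level-weighted negative log-likelihood of the ground-truth path.

    level_probs[i] maps each candidate label at level i to its predicted
    probability. The weights grow toward the species level (assumed values).
    """
    loss = 0.0
    for probs, label, w in zip(level_probs, true_path, weights):
        p = max(probs.get(label, 0.0), 1e-12)  # clamp to avoid log(0)
        loss += -w * math.log(p)
    return loss


prompt = build_prompt("order level", ["Coleoptera", "Hemiptera"])

true_path = ("Coleoptera", "Coccinellidae", "Harmonia axyridis")
level_probs = [
    {"Coleoptera": 0.9, "Hemiptera": 0.1},
    {"Coccinellidae": 1.0},
    {"Harmonia axyridis": 0.5, "Coccinella septempunctata": 0.5},
]
loss = tree_path_loss(level_probs, true_path)
```

With weights that increase toward the leaves, the same amount of uncertainty costs more at the species level than at the order level, which matches the stated emphasis on fine-grained classification.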

This module offers significant advantages for multi-object ecological recognition in agricultural settings. The hierarchical constraint improves the model’s discriminative power for visually similar classes, particularly low-frequency predator categories. The step-wise prompt structure aligns with human ecological cognition, enhancing interpretability. Moreover, the fusion of regional visual features and structured taxonomy-based prompts facilitates cross-modal reasoning, supporting accurate and explainable recognition of complex ecological imagery. The Tree-guided Transformer thus integrates seamlessly with the visual backbone and co-occurrence modeling modules to form a comprehensive solution for hierarchical recognition and ecological relationship inference.

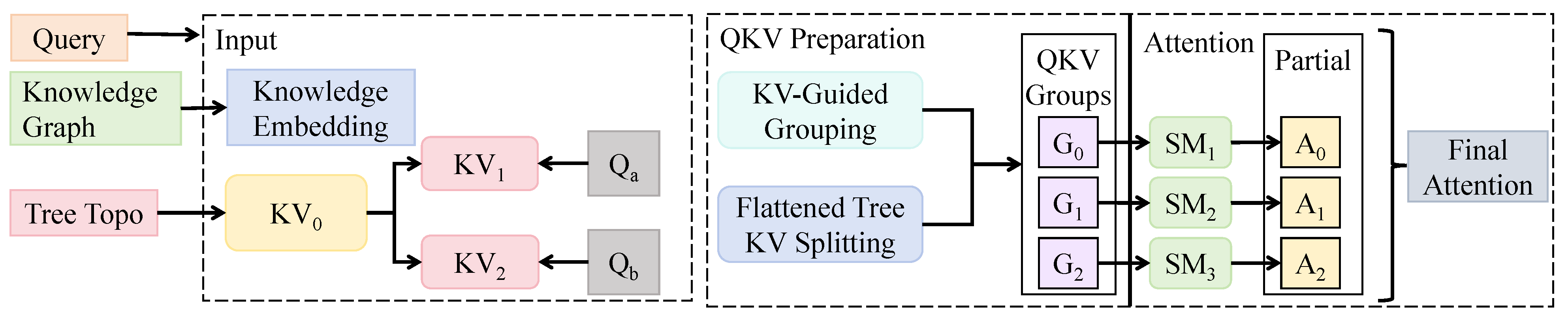

3.3.3. Knowledge-Augmented Transformer

The proposed Knowledge-Augmented Transformer was designed to embed agricultural ecological KGs into the visual backbone network, enabling the perception and modeling of semantic relationships among multiple objects in images. This module was constructed based on the Swin Transformer architecture, consisting of four stages. Each stage employed a hierarchical sliding window mechanism and cross-window connections to progressively extract semantic information from local to global scales. The feature map resolutions for Stages 1 to 4 were , , , and , respectively, with corresponding channel dimensions . Each stage contained two Swin Block modules, with the number of attention heads set to and a fixed window size of .

To provide reliable prior knowledge for the cross-modal embedding module, we first constructed agricultural knowledge graphs (KGs) from multiple domain-specific resources. Specifically, we collected structured information from authoritative agricultural ontologies (e.g., plant taxonomy databases, pest–disease knowledge bases, and agronomic practice guidelines), together with curated triples extracted from agricultural literature and expert-annotated datasets. Entities were defined to represent objects of interest in agricultural systems, including crop species, pests, diseases, soil types, and ecological factors, while relations described their interactions, such as “causes,” “affects,” “co-occurs with,” or “belongs to.” To ensure coverage of long-tail cases, we applied relation extraction and named-entity recognition (NER) methods to supplement missing triples from unstructured text sources, followed by expert verification to guarantee correctness. The resulting KG was represented as a set of triples $(h, r, t)$, where $h$ and $t$ denote head and tail entities and $r$ denotes the relation type. Each triple was validated against agricultural guidelines and merged into a unified schema to maintain consistency across heterogeneous sources. To enrich the connectivity, we further performed KG completion using embedding-based link prediction models (e.g., TransE/RotatE), which allowed the inference of plausible but unobserved relations. After completion, the final KG was pruned to remove noisy or low-confidence edges, and the resulting structured context graph was used as input for subsequent graph convolutional encoding.
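
The completion and pruning step can be sketched with the TransE scoring rule, under which a triple $(h, r, t)$ is plausible when $\mathbf{h} + \mathbf{r} \approx \mathbf{t}$. The two-dimensional embeddings, the entity and relation names, and the threshold below are toy assumptions chosen only to make the example self-contained.

```python
import math


def transe_score(h, r, t):
    """TransE plausibility: negative L2 norm of (h + r - t); higher is better."""
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def prune(triples, entity_emb, relation_emb, threshold):
    """Keep only triples whose TransE score reaches the confidence threshold."""
    kept = []
    for h, r, t in triples:
        score = transe_score(entity_emb[h], relation_emb[r], entity_emb[t])
        if score >= threshold:
            kept.append((h, r, t))
    return kept


# Toy embeddings (hypothetical values, not learned from data).
entity_emb = {
    "ladybird": [0.0, 0.0],
    "aphid": [1.0, 0.0],
    "cotton": [5.0, 5.0],
}
relation_emb = {"preys_on": [1.0, 0.0]}

candidates = [
    ("ladybird", "preys_on", "aphid"),   # h + r lands exactly on t
    ("ladybird", "preys_on", "cotton"),  # far from t, so low confidence
]
kept = prune(candidates, entity_emb, relation_emb, threshold=-1.0)
```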

To incorporate information from agricultural KGs, as shown in Figure 4, a cross-modal embedding module was designed. Structured context graphs $G = (\mathcal{V}, \mathcal{E})$ were constructed from entity nodes $\mathcal{V}$ and relational edges $\mathcal{E}$. Each node was encoded as a vector $\mathbf{e}_v$ and processed through a GCN model to produce knowledge embeddings $Z$, which were then fused with visual features. A key-value guided attention mechanism was adopted for fusion: in each Swin Block attention module, the image feature $X$ was projected into a query vector $Q = X W_Q$, while the knowledge embedding was mapped to key and value vectors as $K = Z W_K$ and $V = Z W_V$. The attention weights were computed to update image features:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V$$

This formulation introduced structured prior knowledge into the attention mechanism, allowing the model to consider not only local textures but also semantic inter-object relationships such as “predator–pest” and “co-occurrence” during relevance computation.
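
A minimal sketch of this fusion is given below, assuming scaled dot-product attention with queries taken from image features and keys and values taken from GCN-encoded knowledge embeddings. The GCN layer uses simple row normalization rather than the symmetric normalization common in practice, and all matrices are toy values; none of this is claimed to match the trained model.

```python
import math


def matmul(a, b):
    """Plain matrix product for lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def gcn_layer(adj, feats, weight):
    """One GCN layer with self-loops, row normalization, and ReLU."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    norm = [[v / sum(row) for v in row] for row in a_hat]
    out = matmul(matmul(norm, feats), weight)
    return [[max(0.0, v) for v in row] for row in out]


def knowledge_attention(x, z, w_q, w_k, w_v):
    """Queries from image features x; keys and values from knowledge embeddings z."""
    q, k, v = matmul(x, w_q), matmul(z, w_k), matmul(z, w_v)
    d_k = len(k[0])
    scores = matmul(q, [list(col) for col in zip(*k)])  # Q K^T
    attn = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(attn, v), attn


identity = [[1.0, 0.0], [0.0, 1.0]]

# Two connected entities: each node averages itself and its neighbour.
z = gcn_layer([[0, 1], [1, 0]], [[1.0, 0.0], [0.0, 1.0]], identity)

# One image region attends over two entity embeddings.
out, attn = knowledge_attention(
    [[10.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], identity, identity, identity
)
```

Because the keys come from the graph rather than from other image patches, each attention row is a distribution over KG entities, and the updated region feature is a mixture of their value vectors.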

A hierarchical knowledge-guided fusion mechanism was further incorporated. Based on the graph structure, entities were grouped into subgraphs (e.g., insect orders or predator families), each generating level-specific key-value mappings. In each attention module of the Swin layers, the corresponding level’s knowledge embeddings were selected for attention calculation, forming a hierarchical supervision mechanism expressed as

$$X^{(l+1)} = X^{(l)} + \text{Attention}\left(X^{(l)} W_Q,\; Z^{(l)} W_K,\; Z^{(l)} W_V\right)$$

Here, $l$ denotes the layer index, $Z^{(l)}$ refers to the knowledge embeddings selected for that level, and $X^{(l)}$ is the input feature at the current layer. Residual connections were used to enhance model stability. To improve semantic consistency, a knowledge-guided query offset was introduced as follows:

$$Q' = Q + \gamma \cdot \Delta(Z)$$

In this formulation, $\gamma$ is a learnable parameter and $\Delta(\cdot)$ is the knowledge offset function. This design enables adaptive adjustment of the query vector based on the semantic graph, aligning the semantic and visual feature spaces.
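
The query offset and the residual update can be sketched as follows. The form of the knowledge offset function is not specified in the text, so mean pooling over the selected entity embeddings is assumed here purely for illustration; the layer-to-level mapping and all numeric values are likewise hypothetical.

```python
def column_means(z):
    """Mean of each embedding dimension over all entities."""
    n = len(z)
    return [sum(col) / n for col in zip(*z)]


def offset_queries(q, z, gamma):
    """Shift every query by gamma times the offset (assumed: mean pooling of z)."""
    delta = column_means(z)
    return [[qi + gamma * di for qi, di in zip(row, delta)] for row in q]


def residual_update(x, attended):
    """Residual connection: add the attention output to the layer input."""
    return [[a + b for a, b in zip(rx, ra)] for rx, ra in zip(x, attended)]


def select_level(level_embeddings, layer_index):
    """Pick the knowledge embeddings assigned to a layer (hypothetical mapping)."""
    return level_embeddings[layer_index]


# Layer 1 uses order-level embeddings, layer 2 family-level ones (toy values).
level_embeddings = {1: [[2.0, 0.0], [0.0, 2.0]], 2: [[4.0, 4.0]]}

q = [[1.0, 1.0]]
q_shifted = offset_queries(q, select_level(level_embeddings, 1), gamma=0.5)
x_next = residual_update([[1.0, 2.0]], [[0.5, -0.5]])
```

Setting `gamma` to zero recovers the unmodified queries, so the offset can be learned as a small correction on top of the visual projection.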

This module and the Tree-guided Transformer module were jointly modeled through object detection results and graph entity anchors. Specifically, the class path predicted by the Tree-guided Transformer was mapped to a graph node $v_c$ and used as a conditioning signal for the Knowledge-Augmented Transformer. This reverse guidance path formed a conditionally enhanced cross-modal attention mechanism, mathematically expressed as

$$X' = \text{Attention}\left(Q, K, V \mid \text{PromptTree}(\hat{P})\right)$$

Here, $\hat{P}$ is the class path output by the LLM, and the PromptTree function maps it to a graph-based condition. Through dual-path semantic and structural injection, the proposed framework significantly enhanced generalization, recognition accuracy, and semantic interpretability in ecological scenarios. It was especially effective in improving stability and semantic coherence in tasks involving misidentified predators, occluded multi-object detection, and co-occurrence recognition in complex farmland ecosystems.

3.3.4. Ecological Semantic Alignment Loss

In multi-object recognition and ecological modeling tasks, conventional loss functions such as cross-entropy loss typically focus solely on classification accuracy while ignoring the underlying semantic hierarchy and ecological relations among targets. Such “flat” label assumptions are often inadequate for pest–predator co-occurrence recognition, where entities exhibit hierarchical taxonomic dependencies (e.g., “Hymenoptera–Trichogrammatidae–Trichogramma”) and ecological interactions (e.g., predation, cohabitation, competition). To address this, an Ecological Semantic Alignment Loss (ESA, denoted as $\mathcal{L}_{\text{ESA}}$) was developed to enforce consistency between model outputs and ecological semantic structures during training.

This loss consists of three components: category classification loss, hierarchical path preservation loss, and semantic relation preservation loss. Let the ground-truth class path for target $i$ be $P_i$ and the predicted path be $\hat{P}_i$, with corresponding graph embeddings $\mathbf{e}_i^{(l)}$ and $\hat{\mathbf{e}}_i^{(l)}$ at taxonomy level $l$. The semantic alignment loss is defined as

$$\mathcal{L}_{\text{align}}^{(i)} = \alpha \, \text{CE}\left(P_i, \hat{P}_i\right) + \beta \sum_{l=1}^{3} \left\lVert \mathbf{e}_i^{(l)} - \hat{\mathbf{e}}_i^{(l)} \right\rVert_2^2$$

The first term represents standard cross-entropy loss, while the second enforces semantic embedding proximity in the graph space. Here, $\alpha$ and $\beta$ are weighting coefficients. For co-occurring object pairs $(i, j)$, ecological relations defined in the graph as $r_{ij}$ were encoded as vectors $\phi(r_{ij})$, with predicted relation $\hat{r}_{ij}$. A relation consistency loss was introduced as follows:

$$\mathcal{L}_{\text{rel}}^{(i,j)} = 1 - \cos\left(\phi(r_{ij}), \phi(\hat{r}_{ij})\right)$$

Here, $\phi$ is the relation embedding function and $\cos$ denotes cosine similarity. This term encourages the predicted inter-object relation to align semantically with the ecological KG. The overall loss is given by

$$\mathcal{L}_{\text{ESA}} = \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_{\text{align}}^{(i)} + \frac{1}{M} \sum_{(i,j) \in \mathcal{R}} \mathcal{L}_{\text{rel}}^{(i,j)}$$

Here, $N$ is the number of targets, and $M$ is the number of co-occurring target pairs in set $\mathcal{R}$. Unlike traditional classification losses that focus solely on label accuracy, the proposed ESA loss enforces triple-level consistency among class, path, and inter-object relations in both semantic and structural spaces. This enhances model understanding of ecological multi-object scenes, as summarized in Algorithm 3.

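| Algorithm 3 Computation of Ecological Semantic Alignment Loss |
|---|
| 1: Initialize loss terms: $\mathcal{L}_{\text{cls}} \leftarrow 0$, $\mathcal{L}_{\text{path}} \leftarrow 0$, $\mathcal{L}_{\text{rel}} \leftarrow 0$ |
| 2: for $i = 1$ to $N$ do |
| 3: $\mathcal{L}_{\text{cls}} \leftarrow \mathcal{L}_{\text{cls}} + \text{CE}(P_i, \hat{P}_i)$ |
| 4: for $l = 1$ to 3 do ▹ Each level in the hierarchical structure |
| 5: $\mathcal{L}_{\text{path}} \leftarrow \mathcal{L}_{\text{path}} + \lVert \mathbf{e}_i^{(l)} - \hat{\mathbf{e}}_i^{(l)} \rVert_2^2$ |
| 6: end for |
| 7: end for |
| 8: for all $(i, j) \in \mathcal{R}$ do ▹ Each co-occurring object pair |
| 9: $\mathcal{L}_{\text{rel}} \leftarrow \mathcal{L}_{\text{rel}} + 1 - \cos(\phi(r_{ij}), \phi(\hat{r}_{ij}))$ |
| 10: end for |
| 11: $\mathcal{L}_{\text{ESA}} \leftarrow (\alpha \mathcal{L}_{\text{cls}} + \beta \mathcal{L}_{\text{path}}) / N + \mathcal{L}_{\text{rel}} / M$ |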
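
The accumulation in Algorithm 3 can be sketched in a few lines. The sketch assumes squared Euclidean distance for the path term, `1 - cos` for the relation term, and normalization by the number of targets and pairs; the data layout, the default values of `alpha` and `beta`, and the toy inputs are hypothetical.

```python
import math


def cross_entropy(probs, label):
    """Negative log-probability assigned to the ground-truth label."""
    return -math.log(max(probs.get(label, 0.0), 1e-12))


def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def esa_loss(targets, pairs, alpha=1.0, beta=0.5):
    """targets: dicts with 'probs', 'label', and per-level 'true_emb'/'pred_emb'.
    pairs: (true_relation_vector, predicted_relation_vector) tuples."""
    l_cls = l_path = l_rel = 0.0
    for t in targets:
        l_cls += cross_entropy(t["probs"], t["label"])
        for e_true, e_pred in zip(t["true_emb"], t["pred_emb"]):  # three levels
            l_path += squared_distance(e_true, e_pred)
    for r_true, r_pred in pairs:
        l_rel += 1.0 - cosine(r_true, r_pred)
    n, m = max(len(targets), 1), max(len(pairs), 1)
    return (alpha * l_cls + beta * l_path) / n + l_rel / m


levels = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
target = {
    "probs": {"Harmonia axyridis": 1.0},
    "label": "Harmonia axyridis",
    "true_emb": levels,
    "pred_emb": levels,
}

perfect = esa_loss([target], [([1.0, 0.0], [1.0, 0.0])])
wrong_relation = esa_loss([target], [([1.0, 0.0], [0.0, 1.0])])
```

A prediction that matches the label, the path embeddings, and the relation vector incurs zero loss, while an orthogonal relation vector alone contributes the maximum single-pair penalty among non-negative similarities.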

From a mathematical perspective, this loss minimizes KL divergence via cross-entropy, Euclidean distance in the embedding space, and angular disparity via cosine similarity, forming a unified optimization framework for object recognition and relation modeling. This method is particularly suited for dense, co-occurrence-rich scenarios with high semantic redundancy, improving both detection performance and interpretability for rare targets such as predators.