1. Introduction

Autonomous robots are increasingly deployed in confined channel applications, such as pipelines, sewer systems, and industrial ducts, performing inspection, maintenance, or monitoring [1,2,3,4,5]. These environments present unique navigation challenges: narrow passages that limit maneuverability, dynamic obstacles, and reduced visual perception due to dust, darkness, or occlusion [6,7,8,9]. Reliable navigation requires accurate perception, robust localization, and real-time decision-making [10].

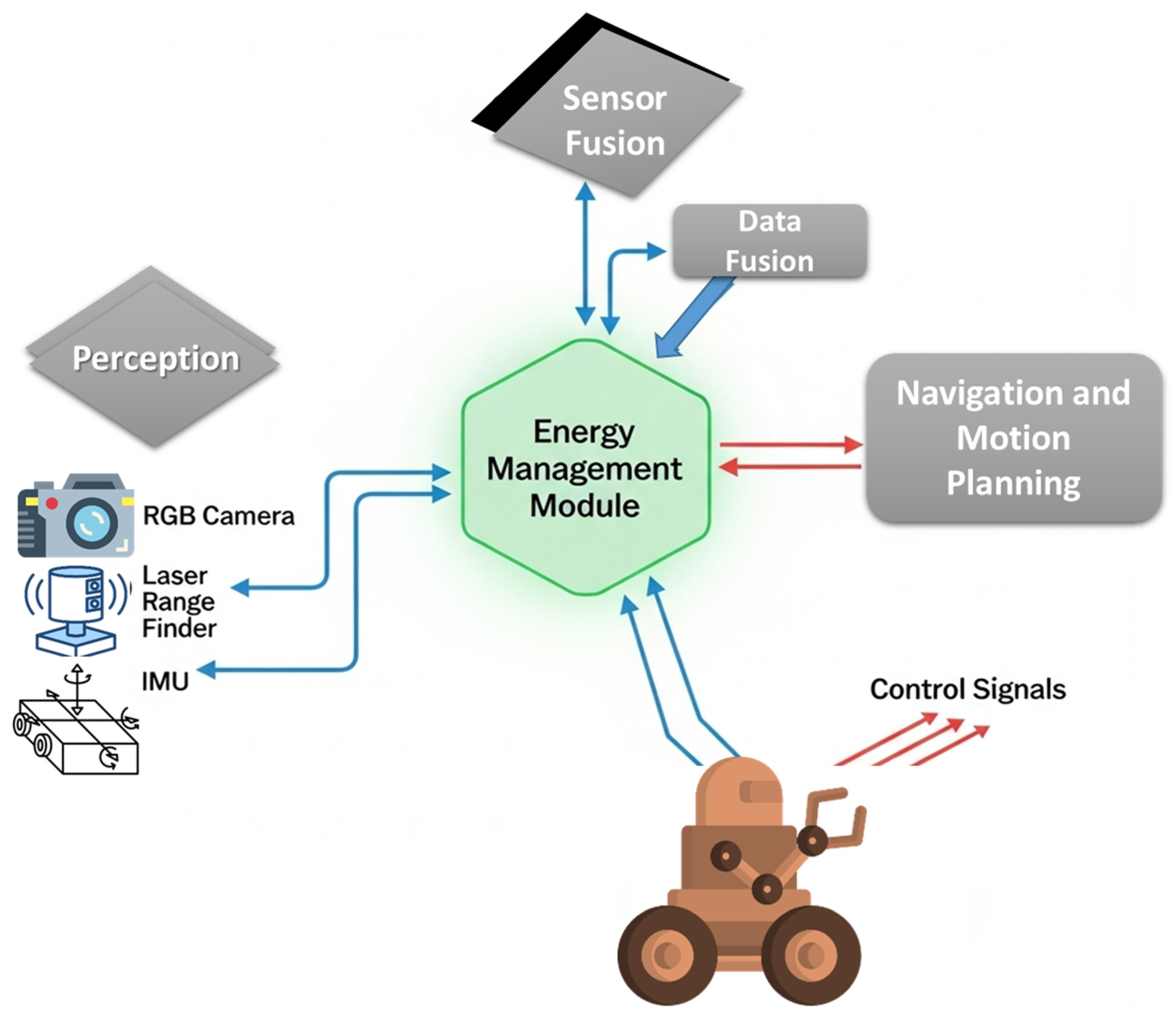

As shown in Figure 1, the system enables safe and efficient autonomous navigation in confined spaces while reducing energy use. The robot collects data from multiple sensors to detect obstacles, estimate orientation, and monitor the environment. It selectively fuses sensor data and dynamically prioritizes sensor use and computational resources, allowing real-time adjustments for accurate navigation and energy-efficient operation.

Sensor fusion is a critical technique for autonomous navigation, combining data from heterogeneous sensors such as RGB cameras, LiDAR, and inertial measurement units (IMUs) [11,12,13,14,15]. Fusion mitigates the limitations of individual sensors (for example, cameras underperform in low-light conditions, and LiDAR may fail with complex obstacles), thus improving situational awareness and navigation reliability [16,17,18,19,20].

Even with sensor fusion, energy efficiency is often compromised in battery-powered robots. Running all sensors continuously leads to high computational load and rapid battery depletion, which shortens achievable mission duration [21,22,23,24,25]. Moreover, most existing systems lack dynamic sensor management, wasting energy processing low-value or obsolete data [26,27,28,29,30]. Robustness under sensor degradation or failure is another concern, as environmental factors like dust, moisture, or vibration can reduce sensor reliability [31,32,33,34,35].

In this work, we employ an adaptive Extended Kalman Filter (EKF)-based sensor fusion algorithm that dynamically integrates RGB, LiDAR, and IMU data depending on environmental complexity and sensor availability. The EKF selectively fuses sensor inputs to maintain high navigation accuracy while optimizing energy consumption, which is critical for long-duration autonomous operations in confined channels. This adaptive EKF framework allows the robot to maintain reliable localization, robust obstacle detection, and safe navigation, even when certain sensors are inactive or degraded due to environmental conditions.

To address these limitations, a comprehensive approach is needed that simultaneously ensures energy-aware operation, adaptive sensor management, and fault-tolerant fusion. The proposed adaptive EKF-based architecture enables autonomous robots operating in constrained channels to maintain robust navigation performance while reducing energy consumption, even under dynamic environmental conditions and uncertain sensor reliability.

The key contributions of the proposed approach are as follows:

- Energy-Aware Adaptive Sensor Fusion Framework: This study introduces an adaptive energy-aware sensor fusion framework that dynamically activates or deactivates sensors according to environmental complexity and mission phase. Unlike conventional fusion approaches that keep all sensors active, the proposed method significantly reduces power consumption while maintaining navigation accuracy.

- Dynamic Sensor Management Strategy: A novel Energy Management Unit (EMU) is developed to monitor, in real time, the battery status, computational load, and sensor utilization. The EMU applies a duty-cycling and on-demand scheduling strategy to balance energy efficiency with reliable perception performance.

- Mid-Level Fusion for Efficiency and Robustness: The framework employs a mid-level Extended Kalman Filter (EKF) fusion process that integrates data from RGB cameras, LiDAR, and IMU sensors. This level of fusion enhances localization robustness while lowering computational cost compared to traditional high-level fusion methods.

- Energy Performance Trade-Off Analysis: Extensive quantitative evaluations demonstrate that the proposed system achieves up to 35% energy reduction while maintaining 98% navigation accuracy and zero collisions. This confirms that the system achieves an optimal balance between energy savings and operational safety in constrained channels.

- Validation through Simulation and Real Prototype: The proposed approach is validated both through simulation experiments and on a microcontroller-based prototype in confined environments. The results confirm its feasibility and scalability for real-world low-power robotic applications.

- Scalable and Modular Architecture: The proposed architecture is modular and easily adaptable to various robotic platforms and channel geometries. It is suitable for multiple industrial and inspection tasks, including pipeline monitoring, sewer inspection, and search-and-rescue operations.

The remainder of the paper is organized as follows: Section 2 summarizes the related literature on sensor fusion, energy-aware robotics, and adaptive navigation in constrained environments. Section 3 presents the proposed system architecture, the sensor fusion algorithm, and energy management. Section 4 details the experiments, assessment metrics, and results. Section 5 discusses the research findings and limitations. Finally, Section 6 presents the conclusion and future work.

3. Materials and Methods

This section discusses the methodology used in the design and assessment of the presented energy-aware sensor fusion architecture for autonomous robot navigation in constrained channel contexts. The methodology has three major components: system architecture, system flow, and experimental setup.

3.1. System Architecture

In this section, we present the layered architecture of the proposed system. The architecture has five functional layers: application, perception, sensor fusion, energy management, and control. Together, these layers improve energy efficiency and navigational reliability. The perception layer relies on RGB cameras, LiDAR, and IMU sensors to provide environmental data. The adaptive EKF fusion module fuses the incoming sensor data into a 2D occupancy grid. The energy management layer monitors battery level and computational load and adjusts sensor duty cycling in real time as necessary. The control layer converts the fused perception data into motion commands for safe, reliable, and energy-efficient motion.

The Energy Management Unit (EMU) implements sensor duty cycling through software-controlled low-power modes and adaptive sampling rates. Each sensor can be temporarily put into sleep mode or sampled less frequently depending on its priority, the current battery level, and navigation complexity. The EMU evaluates sensor reliability and environmental requirements using a lightweight priority-based algorithm, ensuring that critical sensors remain active for safe navigation while lower-priority sensors are temporarily deactivated to save energy. This process incurs minimal computational overhead, as the EMU’s decisions are integrated into the main control loop without affecting real-time performance.
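The priority-based duty cycling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the EMU's actual algorithm: the `Sensor` class, the `plan_duty_cycles` function, the priority ordering of LiDAR over the RGB camera, the `step` offset, and the low-battery threshold are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    priority: int  # 0 = safety-critical; larger values = less critical (assumed scale)


def plan_duty_cycles(sensors, battery_frac, complexity, step=0.2, low_battery=0.2):
    """Return {sensor name: duty cycle in [0, 1]}.

    battery_frac and complexity are both normalized to [0, 1].
    Critical sensors stay fully active; the others follow the navigation
    complexity, offset by priority, and are halved when the battery is low.
    """
    scale = 0.5 if battery_frac < low_battery else 1.0
    plan = {}
    for s in sensors:
        if s.priority == 0:
            plan[s.name] = 1.0  # never put a critical sensor to sleep
        else:
            demand = complexity - step * (s.priority - 1)
            plan[s.name] = scale * min(1.0, max(0.0, demand))
    return plan


# Hypothetical priority assignment: IMU first, then LiDAR, then RGB camera.
SENSORS = [Sensor("imu", 0), Sensor("lidar", 1), Sensor("rgb", 2)]

print(plan_duty_cycles(SENSORS, battery_frac=0.8, complexity=0.9))  # cluttered segment
print(plan_duty_cycles(SENSORS, battery_frac=0.8, complexity=0.1))  # straight run
```

Because the plan is a single pass over a short sensor list, a rule of this kind can run inside the main control loop at negligible cost, which is consistent with the low overhead reported for the EMU.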

The system continuously monitors the quality and reliability of all sensor data to ensure robust navigation in confined environments. When a sensor exhibits excessive noise, produces inconsistent measurements, or experiences temporary failure, the adaptive Extended Kalman Filter (EKF) dynamically adjusts the sensor’s contribution by reducing its weighting or temporarily excluding it from the fusion process. Simultaneously, the Energy Management Unit (EMU) modifies the duty cycling of all sensors, prioritizing those that remain reliable and informative for the current navigation task. This coordinated adaptation ensures that obstacle detection and localization accuracy are maintained without significantly increasing energy consumption. By actively managing sensor reliability and energy allocation, the system provides a fault-tolerant mechanism that allows the robot to continue safe and stable operation even under partial sensor degradation, transient failures, or adverse environmental conditions such as lighting changes, sensor noise, or dynamic obstacles.
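One common way to realize this kind of reliability-dependent weighting is to test each measurement's normalized innovation and either inflate its noise variance or reject it. The sketch below shows the idea for a scalar state with a direct measurement, where the EKF update reduces to the linear Kalman update. The function name `adaptive_update` and both thresholds are assumptions chosen for illustration; the source does not specify the adaptation rule.

```python
def adaptive_update(x, p, z, r_nominal, inflate_above=4.0, gate=9.0):
    """One scalar Kalman measurement update with reliability adaptation.

    x, p       : prior state estimate and its variance
    z          : measurement of the state (measurement model h(x) = x)
    r_nominal  : nominal measurement noise variance of the sensor
    Returns (x_new, p_new, status).
    """
    innovation = z - x
    s = p + r_nominal                # innovation variance
    nis = innovation ** 2 / s        # normalized innovation squared

    if nis > gate:
        # Measurement is inconsistent with the prior: exclude it entirely.
        return x, p, "excluded"

    r, status = r_nominal, "nominal"
    if nis > inflate_above:
        # Suspicious but plausible: down-weight by inflating the noise variance.
        r = r_nominal * nis / inflate_above
        status = "down-weighted"

    k = p / (p + r)                  # Kalman gain
    return x + k * innovation, (1.0 - k) * p, status


print(adaptive_update(0.0, 1.0, 1.0, 1.0))   # consistent measurement
print(adaptive_update(0.0, 1.0, 3.0, 1.0))   # noisy measurement, reduced weight
print(adaptive_update(0.0, 1.0, 10.0, 1.0))  # outlier, excluded from fusion
```

The returned status could also be passed to the EMU, so that a sensor that is repeatedly excluded is given a lower duty cycle.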

In Figure 2, the five-layer architecture of the proposed system is illustrated. Data from RGB cameras, LiDAR, and IMU are collected at the perception layer and processed in the sensor fusion module using an adaptive EKF. The energy management layer monitors battery levels and computational load, dynamically managing the sensors’ power states. The control layer converts fused data into motion commands, while the application layer handles mission-level decision-making. Arrows indicate the flow of information and control signals between layers. The energy-aware strategies implemented in the system enable efficient navigation with reduced power consumption.

Table 2 summarizes the proposed architecture for autonomous robots navigating confined channels. Each layer is described along with its main components, primary functions, and contribution to energy efficiency. The perception layer and sensor fusion module employ adaptive strategies to activate only the necessary sensors, while the energy management layer monitors and optimizes overall power consumption. The control layer ensures safe navigation while supporting energy savings.

3.2. System Flowchart

The system flow describes how data and decisions move through the proposed autonomous navigation architecture. The process begins with mission initialization and continuous monitoring of energy levels, which determines whether adaptive sensor activation is required according to the complexity and dynamics of the surrounding environment. When necessary, the system’s sensors, including RGB cameras, laser range finders, LiDAR, and IMUs, are activated to collect raw observational data. These data then undergo a preprocessing stage that reduces noise, normalizes signals, and corrects inconsistencies to ensure reliable input for subsequent processing modules.

Following preprocessing, the cleaned sensor data are fused within an adaptive Extended Kalman Filter (EKF) framework, producing a two-dimensional occupancy grid that represents both the robot’s navigable areas and the obstacles within its environment. This occupancy grid serves as an essential input for the control layer, which generates motion commands for the robot’s actuators to achieve efficient path planning while maintaining collision avoidance and safe navigation.
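To make the occupancy-grid output concrete, the sketch below rasterizes range-bearing detections into a 2D grid given a pose estimate such as the one the filter would provide. It is an illustrative simplification: the function `mark_obstacles`, the 0.1 m cell size, and the binary cell values are assumptions, and the source does not describe the grid update at this level of detail.

```python
import math


def mark_obstacles(grid, pose, hits, cell_m=0.1):
    """Mark detected obstacles in a 2D occupancy grid.

    grid : list of rows, grid[row][col]; 0 = free or unknown, 1 = occupied
    pose : (x_m, y_m, heading_rad) of the robot, origin at the corner of grid[0][0]
    hits : iterable of (range_m, bearing_rad), bearing relative to the heading
    """
    x, y, heading = pose
    for rng, bearing in hits:
        ox = x + rng * math.cos(heading + bearing)
        oy = y + rng * math.sin(heading + bearing)
        col = math.floor(ox / cell_m)
        row = math.floor(oy / cell_m)
        # Detections that fall outside the mapped area are ignored.
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
            grid[row][col] = 1
    return grid


grid = [[0] * 20 for _ in range(20)]  # 2 m x 2 m area at 0.1 m resolution
mark_obstacles(grid, pose=(0.55, 0.55, 0.0),
               hits=[(1.0, 0.0), (0.5, math.pi / 2)])
print(sum(map(sum, grid)), "occupied cells")
```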

Figure 3 provides a detailed flowchart of this operational process, offering a clear and comprehensive visualization of data movement, decision-making, and control actions within the system. The flowchart depicts the continuous and cyclical nature of the system through the sequence of sensing, preprocessing, energy-aware decision-making, sensor fusion, path planning, actuation, and feedback, which repeats until the mission is complete. The high-resolution and color-coded design enhances legibility and distinguishes the decision paths for high-complexity and low-complexity environments. Annotated arrows further indicate the conditional flow between modules, including the energy-aware decision stage.

3.3. Energy-Aware Strategies

In this section, we describe the key mechanisms through which the proposed architecture saves energy while maintaining navigation performance. The system leverages adaptive duty cycling, allowing sensors to be selectively turned on or off depending on environmental complexity and mission context. In addition, the system implements on-demand activation, enabling only the relevant sensors in cluttered or complex sections of the channel rather than keeping all sensors active redundantly, thereby reducing unnecessary battery consumption in real time. This approach also allows for sensor prioritization, using low-power sensors, such as the IMU, before activating higher-consumption sensors like LiDAR and RGB cameras as needed. Overall, this strategy achieves up to 35% energy savings while maintaining high obstacle avoidance accuracy. The energy management function is designed to enable efficient operation in narrow and confined spaces without compromising performance, as illustrated in Figure 4.

4. Experimental Results

The proposed energy-aware adaptive sensor fusion system has been assessed using simulation and prototype evaluation in confined channel situations. The evaluation was focused on navigation, obstacle detection, sensor reliability, and energy usage.

4.1. Navigation Performance

This section evaluates the navigation performance of the proposed energy-aware adaptive sensor fusion framework in confined environments. The objective was to assess how effectively the system could maintain reliable motion, obstacle detection, and localization accuracy while minimizing energy consumption.

The robot was tested under various constrained scenarios, including narrow, curved, and obstacle-filled channels. Navigation was achieved using an occupancy-grid sensor fusion approach combined with a modified vector field histogram (VFH) algorithm. The robot’s movement was observed to be smooth and collision-free, successfully following channel walls and avoiding obstacles even in complex conditions.

The performance of the navigation system was quantitatively assessed based on localization precision, trajectory stability, and obstacle avoidance. Across 30 experimental trials, the proposed framework demonstrated high accuracy and consistency. The root mean square error (RMSE) of position estimation was measured at 3.8 cm, while the maximum drift over a 5 m trajectory did not exceed 5.2 cm. The 95% confidence interval for position accuracy was within ±4 cm, indicating stable and repeatable localization performance.
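For readers who wish to reproduce metrics of this kind from their own trial logs, the following sketch computes an RMSE, a worst-case absolute error, and a normal-approximation 95% confidence half-width. The error values are made up for illustration and are not the paper's data; the source does not state how its confidence interval was computed, so the 1.96-sigma formula is an assumption.

```python
import math
import statistics


def rmse(errors_cm):
    """Root mean square of position errors (signed or unsigned)."""
    return math.sqrt(sum(e * e for e in errors_cm) / len(errors_cm))


def max_abs_error(errors_cm):
    """Largest absolute error, used here as a simple stand-in for maximum drift."""
    return max(abs(e) for e in errors_cm)


def ci95_half_width(values):
    """Half-width of a normal-approximation 95% confidence interval for the mean."""
    return 1.96 * statistics.stdev(values) / math.sqrt(len(values))


# Hypothetical per-trial position errors in cm (illustration only).
errors = [3.1, -4.2, 2.8, 4.5, -3.6, 3.9]
print(f"RMSE: {rmse(errors):.2f} cm")
print(f"Max |error|: {max_abs_error(errors):.2f} cm")
print(f"95% CI half-width of the mean: {ci95_half_width(errors):.2f} cm")
```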

The robot achieved a 100% navigation success rate with zero collisions and a 0% failure rate, meaning no deviations greater than 10 cm or mission interruptions were recorded. Compared with the baseline configuration (all sensors active continuously), the proposed energy-aware system showed less than 2% performance degradation, confirming that dynamic sensor scheduling does not compromise navigation reliability.

Table 3 shows that the proposed energy-aware sensor fusion system achieves high navigation performance, with an RMSE of 3.8 cm, a maximum drift of 5.2 cm, and a 95% confidence interval of ±4 cm. All trials were completed without collisions (100% success rate, 0% failure), and navigation accuracy remained 98% compared to the full-sensor baseline, demonstrating reliable performance with reduced energy use.

4.1.1. Navigation in Confined Channels

This section examines the robot’s navigation capabilities in constricted channels under various conditions, such as narrow or curved hallways and unexpected obstacles. The system employs occupancy-grid sensor fusion combined with a modified vector field histogram (VFH) algorithm, using data from RGB cameras, laser pointers, LiDAR, and Inertial Measurement Unit (IMU) sensors to generate a 2D occupancy grid of obstacles and free space surrounding the robot. Fusing multiple sensor types provides a seamless representation of both free space and obstacle information.

Figure 5 illustrates obstacle detection using an RGB camera and a linear laser pointer. The laser projects a visible line onto the obstacle, which the RGB camera observes. The system measures changes in the laser projection using a modified triangulation approach, accurately determining the distance to each obstacle. This technique is lightweight, inexpensive, and suitable for short-range detection in tight channel configurations, as it does not rely on power-hungry 3D sensors.
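The principle behind laser triangulation can be illustrated with the textbook single-point form. The sketch assumes a pinhole camera and a laser mounted at a known baseline from the optical center, with its beam parallel to the optical axis, so that the laser's image offset from the principal point shrinks in proportion to distance. This is the standard relation, not the authors' modified method, and the function name and numeric values are hypothetical.

```python
def laser_range_m(pixel_offset, focal_px, baseline_m):
    """Distance to the surface hit by the laser, from similar triangles.

    pixel_offset : offset of the laser line from the principal point (pixels)
    focal_px     : camera focal length expressed in pixels
    baseline_m   : distance between the laser emitter and the optical center (m)
    """
    if pixel_offset <= 0:
        raise ValueError("laser line not detected or at infinite range")
    return focal_px * baseline_m / pixel_offset


# Hypothetical calibration: 600 px focal length, 5 cm baseline.
for offset in (30, 60, 120):
    print(offset, "px ->", laser_range_m(offset, 600.0, 0.05), "m")
```

Because range varies inversely with the pixel offset, resolution is best at short distances, which matches the short-range use case described above.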

4.1.2. Obstacle Detection and Sensor Configuration

In this subsection, we describe the obstacle detection capabilities and sensor suite of the channel robot. The robot is equipped with an RGB camera, ground- and ceiling-mounted laser pointers, a pseudo-2D LiDAR, and an IMU. The two laser sources detect objects at different heights, identifying both low-lying obstacles and overhead items (e.g., light fixtures). The RGB camera captures the 2D projected lines from both lasers, and distances to obstacles are calculated using a modified triangulation method. Experimental tests in constrained physical channel environments showed that the detection system achieves (1) an accuracy within 5 cm for all obstacles, and (2) over 94% confidence in detection quality. The resulting occupancy grid is used with a modified VFH algorithm that supports wall-following as well as redundant, energy-aware detection.

Table 4 summarizes results from the independently simulated obstacle detection experiment, using the RGB camera with support from the laser and LiDAR. Three obstacle locations are shown—the left wall, right wall, and an obstacle in front of the robot—with the simulated environment defining the true distance for each. The measured distances, obtained via the modified triangulation algorithm, differed from the true distances by ≤5 cm, with confidence levels exceeding 94% in all cases. These results confirm that the proposed method reliably detects proximal obstacles in channel-like environments, consistent with simulation outcomes.

Figure 6 presents the dual-laser setup, showing the ground-level and ceiling-level laser projections. This configuration provides full vertical coverage of the robot’s path, reducing blind spots and enhancing safety in constrained spaces.

4.2. Energy Efficiency

Energy efficiency was evaluated by comparing the proposed energy-aware adaptive sensor activation strategy against a baseline system where all sensors remain continuously active. The energy-aware module evaluates channel complexity and mission phase to dynamically activate RGB, LiDAR, and IMU sensors, while deactivating or reducing the sampling rate of sensors in low-complexity segments, such as straight runs or paths without obstacles. High-resolution sensors, like LiDAR, are activated only when navigating cluttered or dynamic sections to maintain robust perception.

Per-sensor power measurements were included in the evaluation. The RGB camera consumes approximately 500 mW; the LiDAR, 1200 mW; and the IMU, 150 mW. The Energy Management Unit (EMU) adds a minimal 50 mW, representing negligible computational overhead while providing real-time sensor management. The proposed scheduling strategy reduces average system power consumption by 35% and computational load by 30% while maintaining a high navigation accuracy of 98%.
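The relationship between duty cycles and average power can be checked with simple arithmetic. The sketch below uses the per-sensor power figures quoted above; the duty cycles are hypothetical values chosen only to show how a saving in the reported range can arise, and the calculation assumes that a sleeping sensor draws no power.

```python
# Active power draw per sensor, as reported in the text (mW).
ACTIVE_POWER_MW = {"rgb": 500.0, "lidar": 1200.0, "imu": 150.0}
EMU_OVERHEAD_MW = 50.0


def average_power_mw(duty, include_emu=True):
    """Average power for given duty cycles (fraction of time each sensor is active)."""
    sensors = sum(ACTIVE_POWER_MW[name] * duty[name] for name in ACTIVE_POWER_MW)
    return sensors + (EMU_OVERHEAD_MW if include_emu else 0.0)


# Baseline: every sensor always on, no EMU.
baseline = average_power_mw({"rgb": 1.0, "lidar": 1.0, "imu": 1.0}, include_emu=False)

# Hypothetical adaptive schedule (assumed duty cycles, not measured values).
adaptive = average_power_mw({"rgb": 0.7, "lidar": 0.55, "imu": 1.0})

saving = 1.0 - adaptive / baseline
print(f"baseline {baseline:.0f} mW, adaptive {adaptive:.0f} mW, saving {saving:.1%}")
```

With these assumed duty cycles, the 50 mW EMU overhead is small relative to the power recovered by duty cycling the LiDAR, which dominates the budget.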

Furthermore, by reducing the time high-power sensors remain active, the adaptive strategy lowers stress on the battery, which mitigates long-term degradation and extends operational lifetime. This ensures that the robot can perform extended missions in confined channels without frequent battery replacement or recharging.

Figure 7 shows the energy consumption profiles for the baseline and energy-aware systems. The baseline system shows constant energy usage, as all sensors remain active continuously. In contrast, the energy-aware system exhibits variability according to sensor duty-cycling and demand-based activation. The shaded area in Figure 7 highlights the net energy savings achieved by the adaptive approach, demonstrating that substantial energy reductions are possible without compromising navigation reliability.

Table 5 summarizes the energy consumption metrics per sensor, the EMU cost, and the overall system performance. This quantitative analysis confirms that the proposed energy-aware approach achieves substantial energy savings while preserving navigation reliability and reducing long-term battery wear.

4.3. Sensor Feedback Accuracy

Sensor feedback is crucial for achieving precise navigation and localization in tight or confined channels. The proposed system includes IMU sensors (accelerometers and gyroscopes), LiDAR, RGB cameras, and linear laser pointers to enhance situational awareness. IMU sensors measure the robot’s relative and absolute rotation, while LiDAR provides accurate distances to walls and obstacles.

Using mid-level sensor data fusion, raw sensor readings are integrated into a unified representation. This fusion improves obstacle detection accuracy across multiple planes (ground, ceiling, and walls) and ensures reliable wall-following navigation. An additional advantage of mid-level fusion is the reduced computational load, enabling faster processing and energy-efficient operation without compromising detection accuracy.

The effectiveness of mid-level fusion is illustrated in Figure 8, which depicts a single occupancy map generated by fusing data from RGB cameras, linear laser pointers, LiDAR, and IMU sensors. In the map, obstacles detected by the RGB + laser sensors are shown as red dots, walls measured by LiDAR as blue lines, and orientation/rotation information from the IMU as green arrows. The purple shaded area represents the final occupancy grid constructed via mid-level fusion, which the robot uses for navigation and path planning.

Table 6 summarizes the accuracy of each individual sensor and of the fused system. The IMU provides reliable rotation estimates, LiDAR provides highly accurate distance information, and the RGB + laser combination enables the robot to detect obstacles at different heights. Fusing all sensor inputs reduces errors and enhances overall situational awareness.

4.4. Quantitative Metrics

In this section, key performance metrics are reported for the energy-aware sensor fusion system used for the autonomous navigation of the channel robot. The metrics cover obstacle detection accuracy, energy performance, navigation reliability, and processing efficiency. The quantitative assessment illustrates the merits of mid-level fusion and the selective activation of sensors in constrained environments.

The experimental results are provided in Table 7. Each metric is succinctly defined, and the results indicate high accuracy, good energy performance, reliable navigation, and improved processing efficiency. This comparison demonstrates the merits of the proposed energy-aware sensor fusion framework compared to standard approaches.

Figure 9 shows the performance metrics of the energy-aware channel robot using voltage-style signals. Each metric is represented as a continuous curve, resembling a sensor voltage waveform, which makes it easy to compare the relative magnitudes of obstacle detection accuracy, energy savings, navigational reliability, and processing time improvements. The plots are color-coded, with markers indicating peak values, similar to actual sensor measurements. This representation provides an intuitive overview of system performance and energy efficiency, in a format familiar to embedded systems engineers.

4.5. System Implementation for Channel Navigation

The proposed system enables robotic autonomy for navigating narrow channels while maintaining energy awareness. It integrates a suite of sensors, including RGB cameras, linear laser pointers, pseudo-2D LiDAR, and IMU sensors, providing accurate environmental perception, obstacle detection, and motion control. The energy-aware sensor fusion selectively activates sensors based on environmental complexity, ensuring low power consumption while maintaining reliable navigation.

4.5.1. Sensor Configuration and Obstacle Detection

The core of the obstacle detection subsystem consists of RGB cameras and linear laser pointers. The RGB camera captures images of the surrounding environment and detects projections from the laser pointers on obstacles. Two linear laser pointers are mounted on the ground and ceiling to detect obstacles at different heights, ensuring complete path coverage. Using a laser triangulation technique, the distance to each obstacle is calculated based on the displacement of the laser projection in the RGB camera image. This approach provides high-accuracy, short-range obstacle detection without the need for expensive depth cameras or 3D sensors.

Figure 10 illustrates the complete sensor fusion workflow. Raw images and distance measurements from the RGB camera, laser pointers, and LiDAR point clouds are preprocessed and fused at an intermediate level. The resulting 2D occupancy grid assigns a distance to each cell based on obstacle locations. This occupancy grid combines information from all sensors, creating a unified representation of the environment for navigation. The method reduces computational load by processing only necessary sensor data, consistent with the energy-aware design.

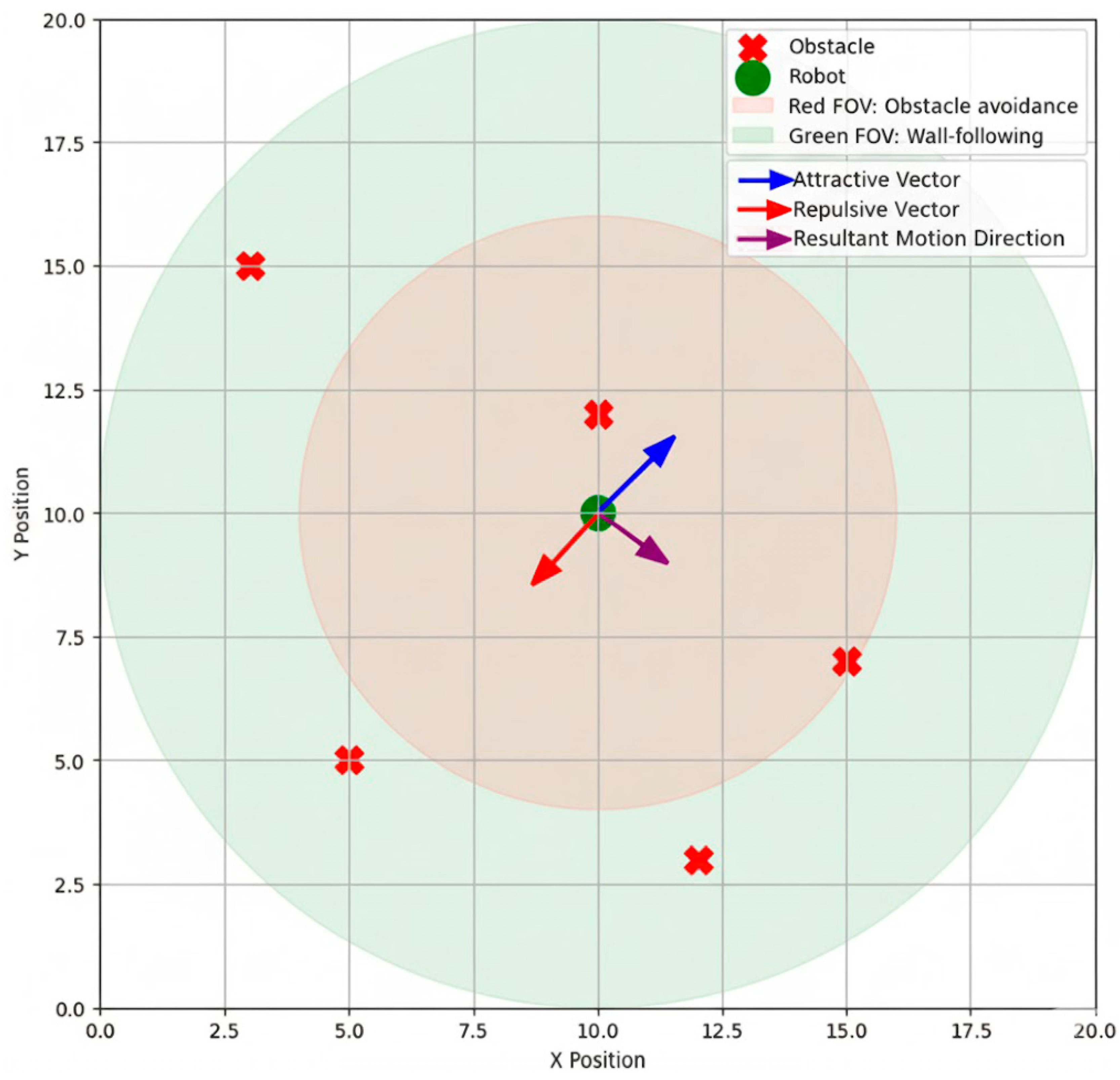

4.5.2. VFH Path-Planning

To ensure safe navigation of the channel, the proposed system uses a modified vector field histogram (VFH) algorithm. The occupancy grid created from the sensor fusion data is used to derive repulsive vectors representing obstacles. In wall-following behaviors, attractive vectors allow the robot to follow the channel walls. The motion direction is derived from the repulsive and attractive vectors, allowing for obstacle avoidance while wall-following. The system also segments the robot’s field of view into two regions:

Red Region: Fuses LiDAR and laser pointer data for higher priority obstacle avoidance.

Green Region: Utilizes LiDAR only to allow for continuous wall-following observation, even when no obstacle is present directly in front of the robot in the red region.

Figure 11 illustrates the robot’s traversal through a channel with multiple obstacles. The red areas indicate regions where fused sensor data detect obstacles and generate (or anticipate) repulsive vectors. The green areas show that the robot maintains proper wall-following behavior by continuously monitoring walls with LiDAR. The figure also demonstrates how repulsive vectors from obstacles are combined with the robot’s desired movement vector to produce safe and energy-efficient motion paths. Segmenting the field of view in this way allows the robot to prioritize collision avoidance while navigating smoothly and efficiently along the channel walls.
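The combination of attractive and repulsive vectors described in this subsection can be sketched as a simple vector sum. Note that this is a potential-field-style simplification of the idea, without the polar histogram of a full VFH implementation; the function name, the influence radius, and the gain are hypothetical parameters.

```python
import math


def steering_direction(obstacles, attract_heading, influence_m=1.0, gain=0.5):
    """Combine a wall-following heading with repulsion from nearby obstacles.

    obstacles       : iterable of (range_m, bearing_rad) in the robot frame,
                      with positive bearings to the robot's left
    attract_heading : desired heading (rad) from the wall-following behavior
    Returns the commanded heading in radians.
    """
    vx, vy = math.cos(attract_heading), math.sin(attract_heading)
    for rng, bearing in obstacles:
        if 0.0 < rng < influence_m:
            # Repulsion grows as the obstacle gets closer and vanishes
            # at the edge of the influence radius.
            weight = gain * (1.0 / rng - 1.0 / influence_m)
            vx -= weight * math.cos(bearing)
            vy -= weight * math.sin(bearing)
    return math.atan2(vy, vx)


print(steering_direction([], 0.0))            # no obstacles: keep heading
print(steering_direction([(0.5, 0.3)], 0.0))  # obstacle ahead-left: steer right
print(steering_direction([(2.0, 0.3)], 0.0))  # obstacle out of range: ignored
```

In the two-region scheme, obstacles from the red region would populate the `obstacles` list, while LiDAR wall tracking in the green region would supply `attract_heading`.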

4.6. Prototype Implementation

To assess the proposed energy-aware sensor fusion architecture at an implementation level, a prototype of the channel robot was developed and evaluated in an experimental constrained environment. The prototype was developed to use RGB cameras, linear laser pointers, pseudo-2D LiDAR, and IMU sensors, with the energy management module operated by a low-power microcontroller.

4.6.1. Localization Methods

In the proposed energy-aware sensor fusion architecture, localization is one of the key elements necessary for autonomous navigation in confined channels. To achieve this, a complementary approach was used with two key strategies:

Active Beacon/Landmark-Based Localization: In this scheme, the robot uses its RGB cameras or LiDAR sensors to identify beacons or visual landmarks that have already been placed in the environment. It offers high-resolution localization in structured channels and produces confident position estimates when traversing narrow or curved passages. Its main disadvantage is that it requires prior mapping and placement of external reference markers, which may not be feasible in changing or unknown environments.

2D LiDAR Model-Matching Localization: The robot performs continuous 2D LiDAR scanning and matches each scan against a previously built model of the environment or occupancy grid. The matching runs in real time and provides an estimate of position and orientation as the robot moves, even in partially unknown and dynamic environments. No externally placed markers are needed, which makes this method feasible in unstructured environments.

The energy-aware sensor fusion module actively selects the most suitable localization method based on environmental complexity and available sensor resources, while also identifying potential navigation scenarios. For less complicated segments, the module can prioritize the least energy-demanding option, while in more complicated or cluttered areas, it can use both methods concurrently for accuracy. This adaptive management minimizes energy use while affording the robust and reliable localization needed to navigate safely in confined channels.
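A selection rule of this kind can be sketched in a few lines. The function `select_localization`, the complexity threshold, and the per-method power costs are hypothetical and serve only to illustrate the policy of using the cheapest available method in simple segments and all available methods in complex ones.

```python
def select_localization(complexity, available, cost_mw, threshold=0.6):
    """Choose localization methods for the current channel segment.

    complexity : normalized environmental complexity in [0, 1]
    available  : method names currently usable (e.g. beacons in view)
    cost_mw    : {method name: estimated power cost in mW} (assumed values)
    """
    if not available:
        return []
    if complexity >= threshold:
        # Complex or cluttered segment: run every available method concurrently.
        return sorted(available)
    # Simple segment: run only the least energy-demanding method.
    return [min(available, key=lambda m: cost_mw[m])]


COST_MW = {"beacon": 500.0, "lidar_matching": 1200.0}  # illustrative numbers
both = ["beacon", "lidar_matching"]
print(select_localization(0.2, both, COST_MW))
print(select_localization(0.8, both, COST_MW))
print(select_localization(0.2, ["lidar_matching"], COST_MW))
```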

Figure 12 depicts both localization strategies: active beacon/landmark detection and 2D LiDAR model matching. The figure also shows how the sensor fusion module selects between these adaptive, energy-aware localization options.

4.6.2. Simulation Results

The simulated environment replicates narrow corridors with both static and dynamic obstacles. An energy management module controls which sensors are activated based on environmental complexity, while sensor data are processed in real time by an adaptive Extended Kalman Filter (AEKF). Navigation is performed using a modified vector field histogram (VFH) algorithm, incorporating wall-following and obstacle avoidance.

Figure 13 shows the simulated robot navigating a constrained channel. The robot uses RGB cameras and laser pointers for obstacle detection, LiDAR for wall-following, and sensor selection to maximize energy efficiency. The figure displays the occupancy grid, detected obstacles, and the robot’s trajectory, illustrating smooth, collision-free navigation guided by the sensor selection process. This approach achieves approximately 35% energy savings compared to continuously active conventional sensors. The simulation confirms the feasibility and efficiency of the proposed method, providing a foundation for operational deployment in confined environments.

4.7. Experimental Setup and Validation

The proposed energy-aware sensor fusion architecture was evaluated in a simulated channel environment to ensure controlled and repeatable testing. The simulation replicates narrow pathways with both dynamic and static obstacles, enabling assessment of navigation stability, obstacle avoidance, and energy efficiency under varying complexity levels. Each scenario was executed twenty times with identical parameters to evaluate consistency and repeatability. Random sensor noise and lighting variations were introduced to emulate real-world uncertainties, while the Energy Management Unit (EMU) dynamically adjusted the duty cycling of RGB, LiDAR, and IMU sensors according to mission phase and channel complexity. This simulation approach provides a statistically meaningful validation of the proposed system.

The repeated simulation trials demonstrated that the proposed energy-aware sensor fusion system achieved stable and reliable performance. Across all 20 runs, the system maintained a mean navigation accuracy of 98% with a standard deviation of ±2%, while average energy consumption was reduced by 35% with a variability of ±3% compared to the baseline configuration. These low deviations indicate that the system performs consistently under repeated test conditions, remaining robust against environmental variations such as sensor noise and lighting fluctuations. The results confirm the effectiveness and repeatability of the energy-aware architecture for autonomous navigation in confined channel environments.

Table 8 summarizes the simulated experimental conditions. It defines the environment, number of repeated runs, variations in lighting and sensor noise, battery configuration, adaptive sampling rates, and the performance metrics used to evaluate navigation accuracy and energy efficiency.

Figure 14 shows the mean navigation accuracy and energy consumption over 20 simulation runs. The blue bars represent navigation accuracy (%) while the orange bars indicate average energy consumption (% relative to baseline). Error bars denote standard deviation, highlighting the consistency of the results. The visualization demonstrates that the proposed energy-aware system maintains high navigation performance while significantly reducing energy use, even under varying sensor noise and lighting conditions.

5. Discussion and Limitations

5.1. Discussion

In the adaptive, energy-aware sensor fusion framework, simulations demonstrate that selectively deactivating a subset of sensors can achieve substantial energy savings while maintaining reliable navigation. This contrasts with conventional navigation protocols, which require all sensors (LiDAR, cameras, IMU, ultrasonic, etc.) to operate continuously. In our framework, sensor activity is dynamically adjusted according to environmental complexity and navigation demands.

The preliminary simulation results provide evidence of feasibility and effectiveness, though they do not capture all real-world complexities and unpredictability. Energy is conserved during low-complexity states (e.g., straight channel navigation), while sensor redundancy can be activated in cluttered or complex environments. The analysis further shows that sensor scheduling reduces average power consumption with minimal impact on obstacle avoidance or localization accuracy. This trade-off between reliability and energy efficiency is especially important for battery-powered mobile robots in constrained channels, where opportunities to recharge are limited.

Additional advantages of the proposed design include scalability and modularity. It can incorporate additional sensing modalities, such as infrared or electromagnetic sensors, depending on mission requirements. Learning algorithms could also be integrated to adjust sensor activation based on environmental complexity, enabling more proactive and efficient energy management. Careful calibration is required: overly conservative activation limits energy gains, while overly aggressive strategies may temporarily compromise situational awareness. Future research should focus on defining thresholds and decision rules to optimize sensor use and data integration.
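One common way to balance conservative and aggressive activation is to use two thresholds with hysteresis, so that a sensor does not toggle rapidly when the complexity estimate hovers near a single cut-off. The sketch below illustrates that general technique; it is not the switching policy used in this study, and the `HysteresisSwitch` class, the normalized complexity score, and the threshold values are all hypothetical.

```python
class HysteresisSwitch:
    """Two-threshold activation rule for a single redundant sensor."""

    def __init__(self, on_threshold, off_threshold):
        if off_threshold >= on_threshold:
            raise ValueError("off_threshold must be below on_threshold")
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.active = False

    def update(self, complexity):
        """Update the state from a complexity score and return it."""
        if not self.active and complexity >= self.on_threshold:
            self.active = True   # environment became cluttered: wake sensor
        elif self.active and complexity <= self.off_threshold:
            self.active = False  # environment is clearly simple: suspend it
        return self.active


# Hypothetical thresholds on a complexity score normalized to [0, 1].
switch = HysteresisSwitch(on_threshold=0.6, off_threshold=0.4)
states = [switch.update(c) for c in [0.3, 0.5, 0.7, 0.5, 0.45, 0.35]]
```

Widening the gap between the two thresholds makes the rule more conservative (fewer transitions, sensors stay on longer), while narrowing it makes the rule more aggressive.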

5.2. Limitations of the Study

Despite promising outcomes, several limitations of this study should be acknowledged:

Simulation-based validation: The validation relied on simulation and limited lab experiments. Real environments introduce factors such as water turbulence, electromagnetic interference, and sensor noise, which this study did not fully address.

Predefined switching policies: The architecture relies on manually defined activation rules. These are effective in structured settings but may not generalize to dynamic or unstructured conditions. Integrating adaptive, learning-based policies could improve long-term performance.

Computational overhead: The effect of adaptive sensor fusion on onboard CPUs has received limited study. While energy savings were achieved at the sensor level, some of these benefits may be offset by the added computation required to evaluate decision rules and transition between activation states, especially on resource-constrained microcontrollers.

Communication latency: The architecture did not explicitly consider delays introduced by wireless communication or data transfer between sensors. In some use cases, such latency can delay navigation-related decision-making, especially for rapid obstacle avoidance.

Battery degradation not studied: Long-term operating effects, such as the impact of frequent sensor switching on battery health and capacity degradation, were not considered in the analysis. These factors could influence the long-term performance and reliability of the system during extended operations.
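The computational-overhead limitation can be framed as a simple energy budget: the energy saved at the sensors must exceed the energy spent on switching and on running the scheduler. The sketch below expresses that balance under stated assumptions. The function name, the split of overhead into a per-transition cost and a continuous scheduler draw, and all numeric values are hypothetical; none were measured in this study.

```python
def net_energy_saving_j(sensor_saving_w, duration_s, n_transitions,
                        energy_per_transition_j, scheduler_power_w):
    """Net energy saved (J) over a mission segment.

    A negative result means the overhead exceeds the sensor-level saving.
    """
    saved = sensor_saving_w * duration_s
    overhead = (n_transitions * energy_per_transition_j
                + scheduler_power_w * duration_s)
    return saved - overhead


# Hypothetical segment: 100 s, 10 sensor transitions.
net = net_energy_saving_j(sensor_saving_w=2.0, duration_s=100.0,
                          n_transitions=10, energy_per_transition_j=5.0,
                          scheduler_power_w=0.5)
```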

6. Conclusions and Future Work

This paper presented an energy-aware adaptive sensor fusion architecture for autonomous robots navigating confined channels. By integrating RGB cameras, linear laser pointers, pseudo-2D LiDAR, and IMU sensors through a mid-level Extended Kalman Filter (EKF), and dynamically managing sensor activation via an Energy Management Unit (EMU), the system enables reliable navigation while reducing energy consumption.

The experimental and simulation results demonstrate the effectiveness of the proposed approach. The robot successfully navigated narrow and curved channels with a 100% navigation success rate, and obstacle detection error remained below 5 cm with detection confidence exceeding 94%. The energy-aware strategy reduced average power consumption from 12.5 W to 8.1 W (a reduction of approximately 35%), decreased sensor activation time from 100% to 65%, and lowered computational load by 30%, while maintaining a navigation accuracy of 98%. Sensor fusion improved overall situational awareness with an accuracy of ±1.5 cm, supporting reliable localization and path planning. Furthermore, the system demonstrated robustness under partial sensor activation or temporary sensor degradation, indicating its adaptability in dynamic or complex environments.
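The reported power figures can be checked with a short calculation. The sketch below recomputes the relative reduction from 12.5 W to 8.1 W and, as an additional illustration, the corresponding runtime ratio. The runtime estimate assumes that these figures represent total system draw and that usable battery energy is fixed; neither assumption is stated in the text, and the function names are illustrative.

```python
def percent_reduction(baseline_w, reduced_w):
    """Relative power reduction, in percent of the baseline."""
    return (baseline_w - reduced_w) / baseline_w * 100.0


def runtime_ratio(baseline_w, reduced_w):
    """Runtime multiplier for a fixed energy budget (runtime = energy / power)."""
    return baseline_w / reduced_w


reduction = percent_reduction(12.5, 8.1)  # about 35.2 %
gain = runtime_ratio(12.5, 8.1)           # about 1.54x
```

Under those assumptions, a 35% reduction in average power corresponds to roughly 54% more operating time on the same battery, since runtime scales with the inverse of power.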

These findings indicate that the proposed architecture successfully balances energy efficiency with reliable navigation, offering a practical solution for battery-powered autonomous robots in constrained environments such as pipelines, sewers, and industrial ducts. For future work, we plan to integrate additional sensing modalities, including ultrasonic and electromagnetic sensors, implement edge AI modules for real-time adaptive decision-making, and explore hardware-level optimization and energy harvesting strategies to extend operational duration. These developments will further enhance autonomy, robustness, and energy efficiency, making the system suitable for extended inspection, monitoring, and maintenance tasks in confined channels.