1. Introduction

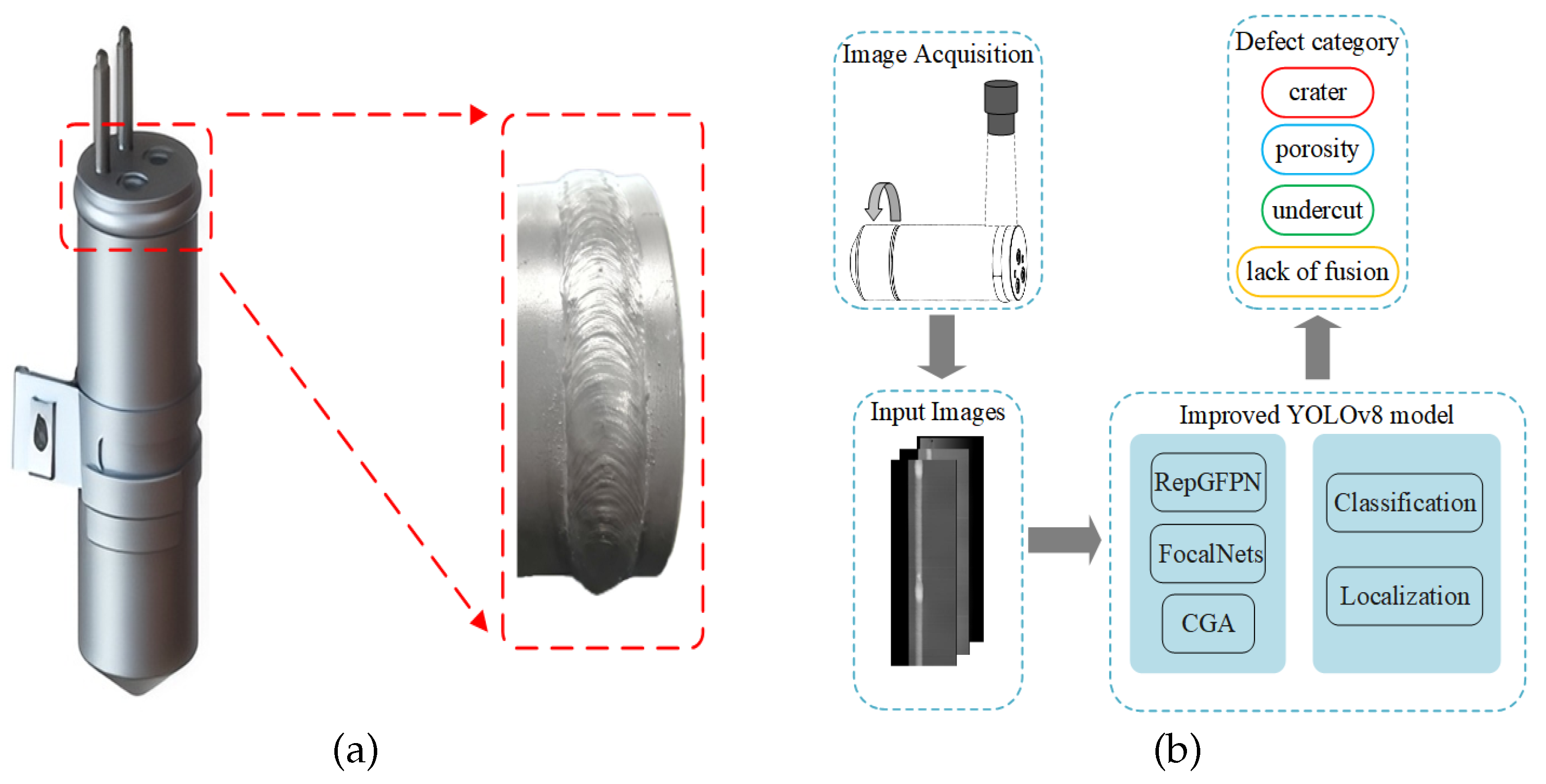

The liquid reservoir plays an important role in the automotive air conditioning system. Its primary function is to ensure that the refrigerant circulates in the appropriate state within the system. As shown in

Figure 1, the liquid reservoir is typically assembled through welding to ensure airtightness. In recent years, automatic welding has become an important part of intelligent manufacturing for improving efficiency [

1,

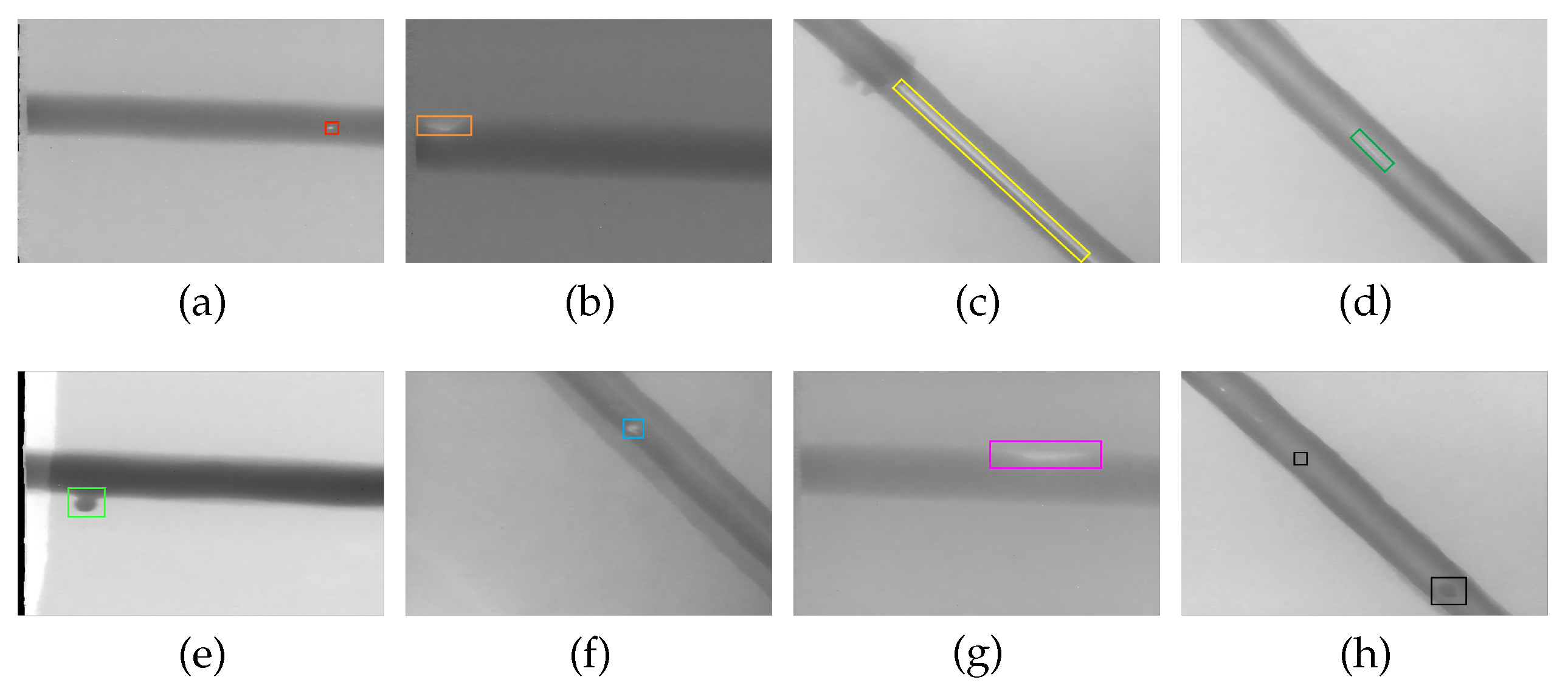

2]. External variables in the welding process, such as inaccurate temperature regulation and inadequate surface cleaning of liquid reservoir, can lead to weld defects. The most commonly occurring types of defects are craters, porosity, undercuts, and lack of fusion, with representative samples shown in

Figure 2. These defects directly undermine the sealing integrity of liquid reservoirs [

3]. If a liquid reservoir with weld defects is installed, it can adversely affect the air conditioning system and potentially compromise vehicle safety [

4,

5,

6]. Therefore, real-time monitoring and effective detection of weld defects after the liquid reservoir welding process is crucial [

7].

In practical liquid reservoir welding, defects occur relatively infrequently, yet multiple types of defects may coexist. There is significant variation between different categories of defects, and even within the same defect type, the size and shape can be highly complex and variable. For example, crater defects are characterized by intricate shapes and considerable variations in area. Porosity defects tend to have smaller areas, making them susceptible to missed detection. In contrast, undercut defects and lack of fusion defects are narrow in shape, exhibit large length variations, and may even span the entire weld seam. These characteristics significantly complicate the task of defect detection. Manual inspection, though widely practiced, is not only time-consuming and labor-intensive but also dependent on the subjective judgment and work experience of the workers, resulting in unreliable results. Consequently, researchers have explored advanced techniques such as eddy current testing [8] and X-ray inspection [9] for detecting weld defects. However, these methods rely on physical equipment that is associated with high operational costs and is vulnerable to environmental factors. In the context of intelligent manufacturing and automated production, these challenges can be addressed by integrating advanced technologies such as deep learning and automated visual inspection systems [10,11,12].

Machine vision technology exhibits substantial potential for weld defect detection compared to the aforementioned non-destructive detection methods. Machine vision facilitates real-time image inspection while also offering comprehensive visual information regarding defects. Traditionally, weld defect detection is performed using image processing techniques combined with feature classification algorithms. Commonly employed feature classification algorithms include Support Vector Machines (SVMs) [13,14], Random Forest [15], and Decision Trees [16]. Malarvel et al. [9] introduced a weld defect detection and classification approach that employs the OTSU algorithm for image preprocessing, followed by an SVM for defect classification. Likewise, Hu et al. [17] proposed a weld defect detection methodology leveraging the BT-SVM classifier, which extracts defect attributes as classification features to identify weld defects. However, such methods encounter challenges in detecting complex shapes and small-sized defects. Furthermore, their performance is significantly influenced by environmental and lighting variations, leading to diminished adaptability and robustness.
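The thresholding step used in traditional pipelines of this kind can be illustrated with a minimal pure-Python sketch of Otsu's method, which picks the gray level that maximizes the between-class variance of the two resulting pixel groups. This is not the implementation used in the cited works; the function name `otsu_threshold` and the toy pixel values are hypothetical and chosen only for illustration.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t form one class, the remaining pixels the other.
    `pixels` is a flat iterable of integer gray levels in [0, levels).
    """
    pixels = list(pixels)
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below the candidate threshold
    sum0 = 0  # sum of gray levels of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break     # upper class empty, no further split possible
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor 1 / total**2
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Toy example: dark weld background with a few bright candidate-defect pixels
toy_pixels = [20, 22, 25, 30, 180, 190, 200, 210]
t = otsu_threshold(toy_pixels)
mask = [p > t for p in toy_pixels]
print(t, mask)
```

A single global threshold like this depends directly on the intensity histogram, which is one way to see why such pipelines are sensitive to the lighting variations mentioned above.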

In recent years, deep learning detection methods based on convolutional neural networks (CNNs) [18,19,20] have been widely applied and developed. Representative networks such as VGGNet [21] and ResNet [22] are highly effective in automatic feature extraction and overcome the poor robustness inherent in traditional machine learning algorithms. Currently, object detection algorithms are generally categorized into two main types: two-stage and one-stage detection algorithms. Two-stage algorithms include R-CNN [23,24] and Faster R-CNN [25], while one-stage algorithms include YOLO [26,27,28] and SSD [29]. Numerous researchers have applied these algorithms to the field of weld defect detection. For instance, Liu et al. [30] proposed the AF-RCNN algorithm, which integrates ResNet and FPN as its backbone while employing an efficient convolutional attention module (ECAM). Similarly, Chen et al. [31] enhanced the Faster-RCNN algorithm by introducing the deep residual network Res2Net, which significantly improves feature extraction capabilities for weld defect detection.

However, these models enhance detection accuracy by increasing the number of parameters and model complexity, leading to excessive model size and limited inference speed. To address the challenge of low detection speed, Liu et al. [32] introduced an enhanced LF-YOLO algorithm. By incorporating an Efficient Feature Extraction (EFE) module, the model attained a detection speed of 61.5 FPS and an average precision of 92.9% on X-ray images of weld seam defects. To address overfitting due to the limited size of metal welding defect datasets, Li et al. [33] implemented Mosaic and Mixup augmentation techniques to augment the dataset. Additionally, they incorporated the lightweight GhostNet network to substitute the residual module in the CSP1 architecture, thereby reducing parameter count. The improved YOLOv5 algorithm adopted the Complete Intersection over Union (CIoU) loss function to improve bounding box regression precision. Wang et al. [34] introduced the YOLO-AFK model to handle complex welding defect scenarios. By integrating the Fusion Attention Network (FANet) and Alterable Kernel Convolution (AKConv) and designing a Cross-Stage Partial Network Fusion (C2f) module, they increased the model’s parameter count by just 12.4M while boosting accuracy, mAP, and FPS. In early 2023, Ultralytics launched the YOLOv8 model [35,36], delivering faster inference speeds, enhanced precision, and greater ease of training and adjustment.
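To make the CIoU term mentioned above concrete, the following is a minimal sketch of the standard CIoU definition: IoU minus a normalized center-distance penalty and an aspect-ratio consistency penalty. It is an illustration only, not the code of any cited work; the function name `ciou`, the `(x1, y1, x2, y2)` box format, and the small `eps` stabilizer are assumptions made here.

```python
import math


def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Plain IoU from intersection and union areas
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1
    union = wa * ha + wb * hb - inter
    iou = inter / (union + eps)

    # Squared distance between box centers
    rho2 = ((ax1 + ax2) - (bx1 + bx2)) ** 2 / 4 + ((ay1 + ay2) - (by1 + by2)) ** 2 / 4
    # Squared diagonal of the smallest box enclosing both
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return iou - rho2 / c2 - alpha * v


def ciou_loss(pred_box, target_box):
    """Regression loss: zero for a perfect match, larger for worse boxes."""
    return 1.0 - ciou(pred_box, target_box)


print(ciou_loss((0, 0, 10, 10), (5, 0, 15, 10)))
```

Unlike plain IoU, the value stays informative for non-overlapping boxes because the center-distance term still changes as the predicted box moves toward the target.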

Despite these advancements, deep learning models still face significant challenges in detecting liquid reservoir weld defects. The high variability in the shape and size of the same type of defect limits the generalization ability of these models, resulting in poor detection performance. To overcome these limitations, we propose an improved model based on YOLOv8s. In summary, this work makes the following contributions:

(1) The model’s neck is enhanced through the integration of the improved Reparameterized Generalized Feature Pyramid Network (RepGFPN) and an additional detection head with a size of 160 × 160. This significantly improves the model’s feature fusion capability while increasing its sensitivity to detecting small-area defects, such as porosity, as well as narrow defects like undercut defects and lack of fusion defects.

(2) The Focal Modulation Network (FocalNets) structure is incorporated into the YOLOv8s framework, replacing the Spatial Pyramid Pooling Fast (SPPF) module. This replacement mitigates the loss of detailed feature information, improving the model’s effectiveness in detecting complex defects within liquid reservoir weld seams.

(3) The Cascaded Group Attention (CGA) is incorporated within the C2f structure, effectively suppressing the propagation of redundant feature information in the neck and enabling more precise defect detection.
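The link between the additional 160 × 160 detection head in contribution (1) and small or narrow defects can be made concrete with a little arithmetic. The sketch below assumes a 640 × 640 input image, which this section does not state, together with the default YOLOv8 detection strides of 8, 16, and 32 plus an extra stride-4 level; the function names and the 8-pixel defect size are purely illustrative.

```python
INPUT_SIZE = 640          # assumed input resolution, not stated in this section
STRIDES = [4, 8, 16, 32]  # stride 4 corresponds to the additional head


def grid_size(input_size, stride):
    """Side length of the feature map seen by a head with the given stride."""
    return input_size // stride


def extent_in_cells(defect_px, stride):
    """How many grid cells a defect of defect_px pixels spans along one axis."""
    return defect_px / stride


for stride in STRIDES:
    side = grid_size(INPUT_SIZE, stride)
    print(f"stride {stride:2d}: {side} x {side} grid, "
          f"8 px defect spans {extent_in_cells(8, stride)} cells")
```

Under these assumptions, an 8-pixel pore spans two cells on the 160 × 160 grid but only a quarter of a cell on the 20 × 20 grid, which is consistent with the motivation for adding a higher-resolution head.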

The remainder of this article is structured as follows: Section 2 presents the baseline, followed by a detailed discussion of the proposed improvement strategies and research methodologies. In Section 3, we describe the comparative experiments and ablation studies we conducted and present the results. Finally, Section 4 summarizes the conclusions and proposes potential directions for future research.

4. Conclusions

This paper proposes an improved YOLOv8s-based deep learning method for detecting liquid reservoir weld defects. To enhance the detection performance of YOLOv8s, a series of improvement strategies are implemented: first, the original neck network is optimized by introducing an improved RepGFPN feature fusion structure and adding a small-target detection head with a scale of 160 × 160; second, the SPPF module is replaced with the FocalNets module; finally, a C2f structure integrated with the CGA module is connected before the detection heads. Systematic ablation and comparative experiments demonstrate that the enhanced model achieves increases of 6.3% in mAP@0.5 and 4.3% in mAP@0.5:0.95 over the baseline. For specific defects, AP values improve by 3.9% for craters, 13.5% for porosity, 5.0% for undercuts, and 2.5% for lack of fusion, confirming the effectiveness of our modifications.
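The two reported metrics differ only in the IoU threshold used to decide whether a predicted box matches a ground-truth box. As a hedged illustration, assuming the common COCO-style convention (the evaluation protocol is not detailed in this section), mAP@0.5 uses a single threshold of 0.5, whereas mAP@0.5:0.95 averages over ten thresholds from 0.50 to 0.95 in steps of 0.05. The helper names and the IoU value of 0.62 below are hypothetical.

```python
def coco_iou_thresholds():
    """IoU thresholds 0.50, 0.55, ..., 0.95 used by the mAP@0.5:0.95 convention."""
    return [t / 100 for t in range(50, 100, 5)]


def thresholds_matched(iou, thresholds):
    """Thresholds at which a prediction with this IoU counts as a true positive."""
    return [t for t in thresholds if iou >= t]


thresholds = coco_iou_thresholds()
matched = thresholds_matched(0.62, thresholds)
print(len(thresholds), matched)
```

A prediction with an IoU of 0.62 counts as correct for mAP@0.5 but only at three of the ten thresholds of mAP@0.5:0.95, so the stricter metric rewards tighter localization.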

Future work will focus on extending the applicability of the proposed model by systematically validating its defect detection capabilities for industrial components made of diverse materials, such as metal alloys, engineering plastics, and composite materials. We will construct a cross-material sample dataset to investigate the model’s feature generalization performance on heterogeneous material surfaces. Furthermore, the multi-scale feature fusion mechanism will be optimized to account for differences in texture characteristics and defect manifestations across materials, thereby enhancing the model’s adaptability and practical utility in complex industrial environments.