FedECPA: An Efficient Countermeasure Against Scaling-Based Model Poisoning Attacks in Blockchain-Based Federated Learning

Abstract

1. Introduction

- A practical scaling-based model poisoning attack on a BFL system is introduced. Specifically, we simulate an FL task with non-malicious and malicious clients for different attack strategies and settings, such that malicious clients use model weights on the blockchain to perform the attack.

- A novel defense, named FedECPA, is proposed. It extends the FedAvg aggregation algorithm with an Efficient Countermeasure against scaling-based model Poisoning Attacks. The main idea of this defense is to employ the interquartile range (IQR) rule as a statistical method to filter out outlier model weights submitted by malicious clients. Specifically, model weights that fall outside of a lower and upper limit (based on the 25th and 75th percentiles of the participating clients’ model weights) are identified and excluded from aggregation.

- A comprehensive security analysis of FedECPA is provided, demonstrating its Byzantine tolerance and its ability to mitigate scaling attacks effectively.

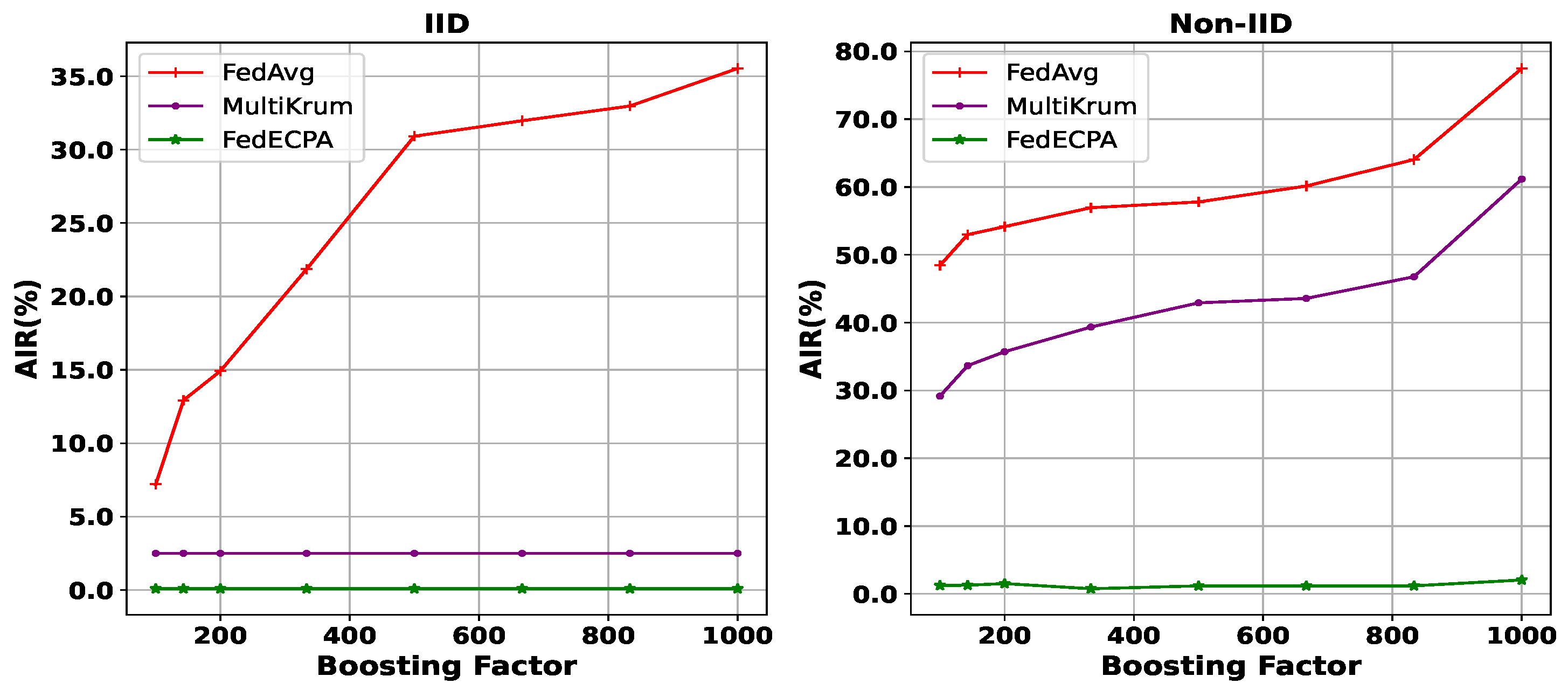

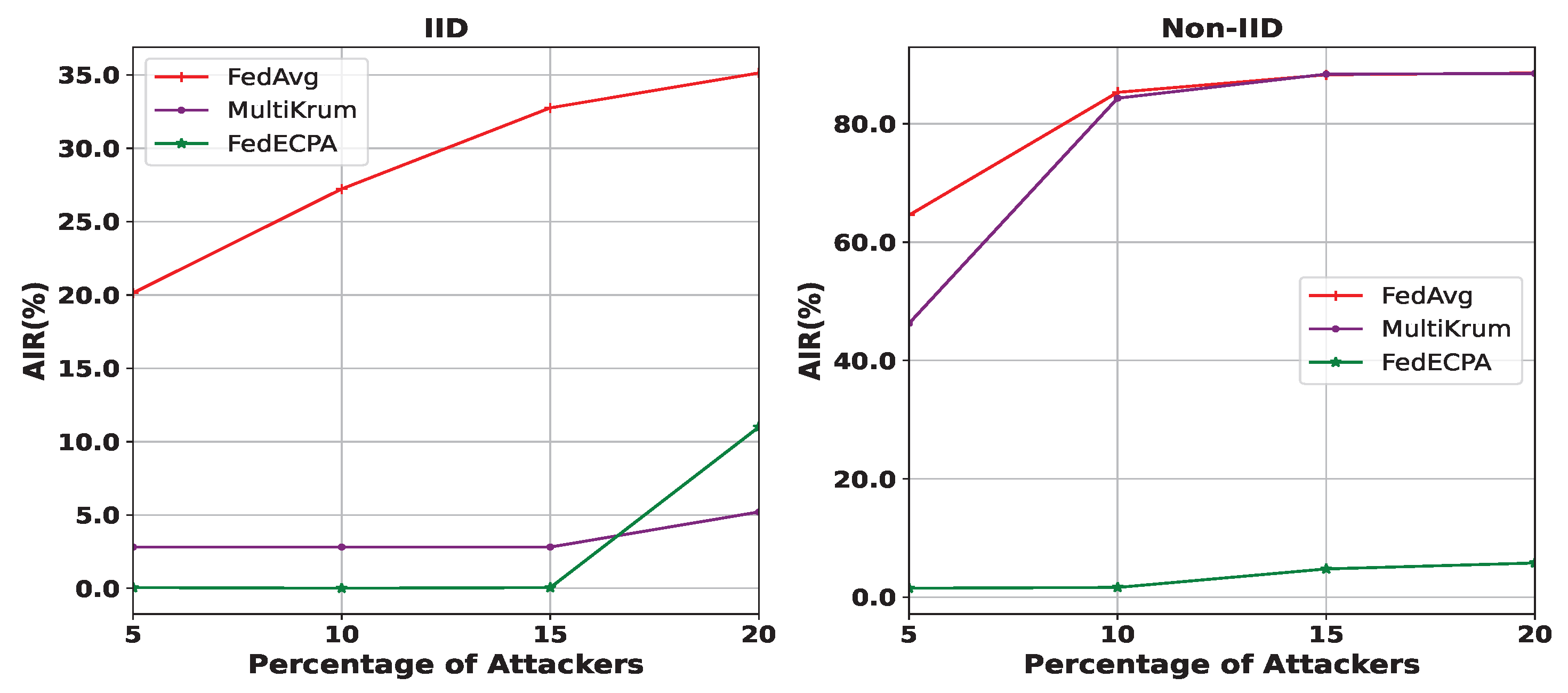

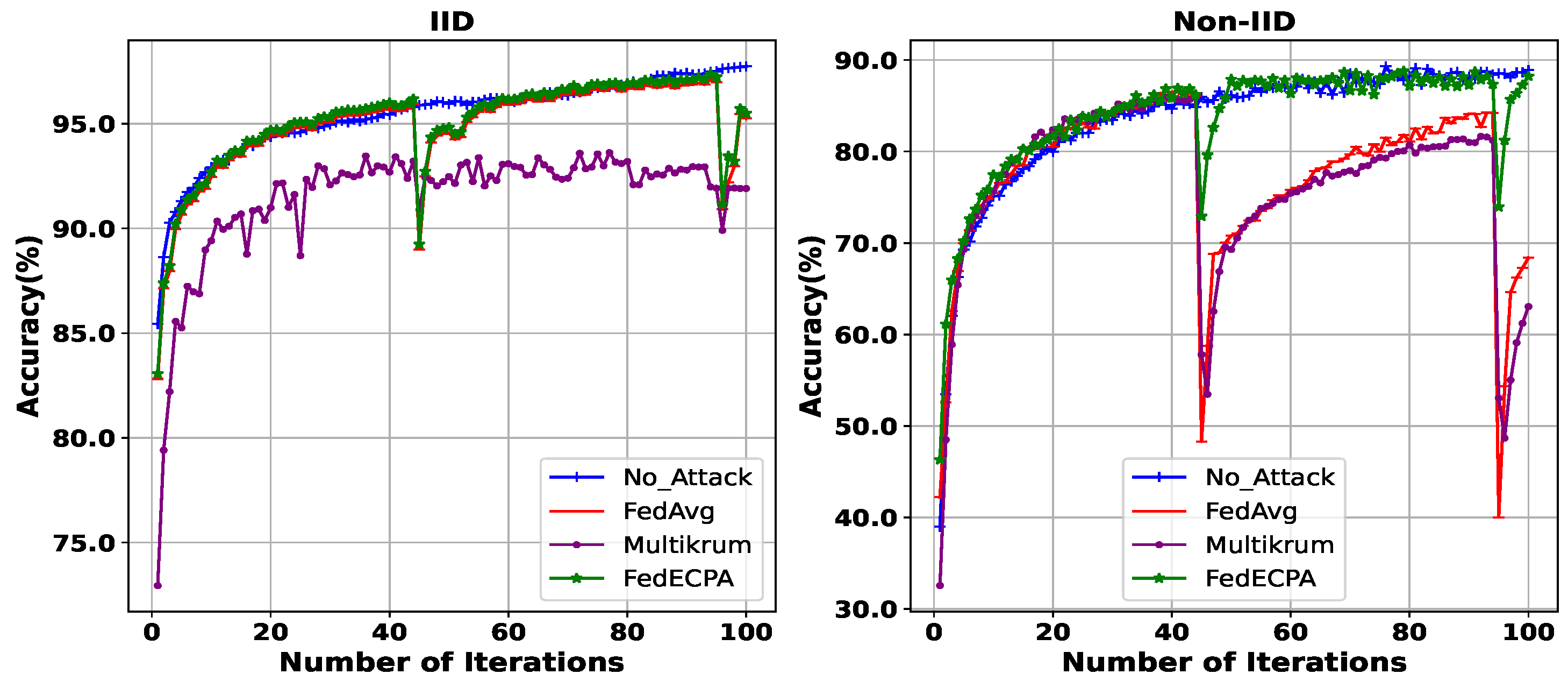

- Extensive experimental and comparative analyses are conducted in both IID and non-IID settings to demonstrate the impact of the model poisoning attack on BFL and the efficacy of the proposed defense mechanism. Results show that FedECPA, in most cases, outperforms Multikrum [27], a traditionally robust FL aggregation algorithm that mitigates model poisoning attacks in most FL settings.

2. Related Work

3. Background

3.1. Blockchain and Smart Contract

3.2. Federated Learning (FL)

- Client selection: As a first step, the server selects the clients that participate in training at a given learning iteration. The selection can happen randomly or based on predefined protocols, or all clients can participate [40]. Several predefined selection protocols exist, including those based on effective participation and fairness [41] and greedy selection based on confidence bounds [42].

- Local model training: Next, selected clients download the global model parameters and train local models based on their data and the downloaded global model. In each iteration, client $i$ computes

  $$w_i^{t+1} = w_i^{t} - \eta \nabla L_i\left(w_G^{t}; x_i, y_i\right),$$

  where $w_i^{t}$ and $w_i^{t+1}$ are the local model weights of client $i$ at iterations $t$ and $t+1$, respectively [43]. $w_G^{t}$ is the global model at the learning iteration $t$, $\eta$ is a learning rate (an ML hyper-parameter that controls how quickly an algorithm learns or updates the estimates of a parameter), $L_i$ is the local loss function for client $i$, and $x_i$ and $y_i$ are local data with data size $D_i$ at client $i$.

- Local model upload: After the local training, clients upload local model updates to the server for aggregation.

- Global model aggregation: A new global model is calculated on the server by executing an aggregation algorithm that merges clients’ updates. These aggregation algorithms can calculate the mean, such as FedAvg [44], or add momentum to the calculated mean as in FedAvgM [45]. In this work, we adopt FedAvg, which is the conventional and most popular aggregation algorithm. In FedAvg, the global model is computed as

  $$w_G^{t} = \sum_{i=1}^{n} \frac{D_i}{D}\, w_i^{t},$$

  where $w_i^{t}$ is the model update of each client at the learning iteration $t$, $D_i$ is the number of data samples for each client, and $D$ is the total number of data samples from all $n$ participating clients.

- Global model download: After aggregation, the participating clients download the newly computed global model for the next training iteration.
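The training and aggregation steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in this work: the helper names `local_update` and `fedavg`, the toy quadratic loss, and all numeric values are hypothetical, and each client simply starts its local training from the downloaded global weights.

```python
import numpy as np

def local_update(global_w, grad_fn, lr, steps=1):
    """One client's local training: start from the global weights, take SGD steps."""
    w = global_w.copy()
    for _ in range(steps):
        w = w - lr * grad_fn(w)
    return w

def fedavg(local_weights, num_samples):
    """Weighted mean of client weights; each client is weighted by its data share."""
    sizes = np.asarray(num_samples, dtype=float)
    stacked = np.stack(local_weights)              # shape: (n_clients, n_params)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()

# Toy loss per client (assumption): L_i(w) = 0.5 * ||w - target_i||^2,
# whose gradient is simply w - target_i.
targets = [np.array([1.0, 1.0]), np.array([3.0, -1.0])]
sizes = [100, 300]                                 # hypothetical data sizes
global_w = np.zeros(2)

local_ws = [local_update(global_w, lambda w, t=t: w - t, lr=0.5) for t in targets]
new_global = fedavg(local_ws, sizes)
print(new_global)
```

The client with 300 samples pulls the aggregate three times as strongly as the client with 100 samples, which is the data-size weighting FedAvg applies.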

3.3. Blockchain-Based Federated Learning (BFL)

4. System and Threat Model

4.1. System Model

- Step 1: A requester requests a global model and publishes the task to the blockchain network.

- Step 2: The request is broadcast through the nodes in the network to FL clients.

- Step 3: Upon obtaining this request, FL clients train their local models based on their data and the current global model weights. This results in new local model weights.

- Step 4: After generating the local model weights, they are uploaded to the blockchain network for aggregation. This is done by submitting a transaction or calling the upload weights smart contract function.

- Step 5: Blockchain nodes collectively verify the local model weights from clients and record them on the blockchain ledger (in terms of transactions). They also aggregate these local model weights and generate the new global model.

- Step 6: Finally, the new global model is broadcast back to the participating clients for the next training iteration.

4.2. Threat Model

- Private knowledge: The malicious client(s) need(s) to know the number of participating FL clients (even if their model updates are encrypted). Although this is tightly restricted server-side knowledge in traditional FL, it becomes non-private in BFL due to the open nature of the blockchain: malicious clients can infer it from the number of blocks and transactions in the blockchain and leverage it to launch the model poisoning attack on the BFL system. Since clients’ models are broadcast to all participating nodes, adversaries can also exploit the visibility of these models to craft poisoning attacks. Moreover, once malicious clients’ models are recorded on-chain, they cannot be reversed, which amplifies their negative effect on the global model.

- Public knowledge: This includes the knowledge of the learning rate, the number of learning iterations, and the global model computed in each iteration. They are public parameters our malicious client(s) require. We assume that all client(s) participating in learning iterations have access to these parameters, and malicious clients can use them to launch model poisoning attacks.
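To make the role of this knowledge concrete, the following sketch shows how a scaling attack can use the number of clients and the current global model. It is only an illustration in the spirit of model replacement attacks such as [48], not the attack construction of this work: the function name `scale_update`, the choice of boosting factor equal to the number of clients, the unweighted mean, and all numeric values are assumptions.

```python
import numpy as np

def scale_update(global_w, malicious_w, boost):
    """Boost the attacker's deviation from the current global model by `boost`."""
    return global_w + boost * (malicious_w - global_w)

n_clients = 10                        # assumed to be inferred from on-chain transactions
global_w = np.array([0.2, -0.1])      # public: current global model
target_w = np.array([1.0, 1.0])       # model the attacker wants the aggregate to become

# Assumption: benign clients are near convergence and submit weights close to global_w.
benign = [global_w.copy() for _ in range(n_clients - 1)]

poisoned = scale_update(global_w, target_w, boost=n_clients)
aggregate = np.mean(benign + [poisoned], axis=0)   # plain (unweighted) average
print(aggregate)
```

Under these assumptions, a single malicious client out of ten is enough to move the unweighted average onto its target model, which is why the number of participants is sensitive information.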

4.3. Security Goals

- Unpoisoned global model: This goal ensures that the global model is not poisoned, given our earlier assumption of less than malicious clients. This means that we should only have non-malicious client(s) updates and eliminate poisoned updates. In other words, the malicious (poisoned) updates should not affect the global model.

- Non-Fake model updates: We aim to ensure that the local and global models are correctly calculated and guaranteed to be non-fake and authentic. In other words, we want to ensure that all generated global and local models are verified for correctness.

- No single point of failure and no trusted third party: Another goal is to ensure that the BFL is centralization-free by ensuring no single point (node or a party) of failure and no trusted third party is involved.

5. Proposed FedECPA Methodology

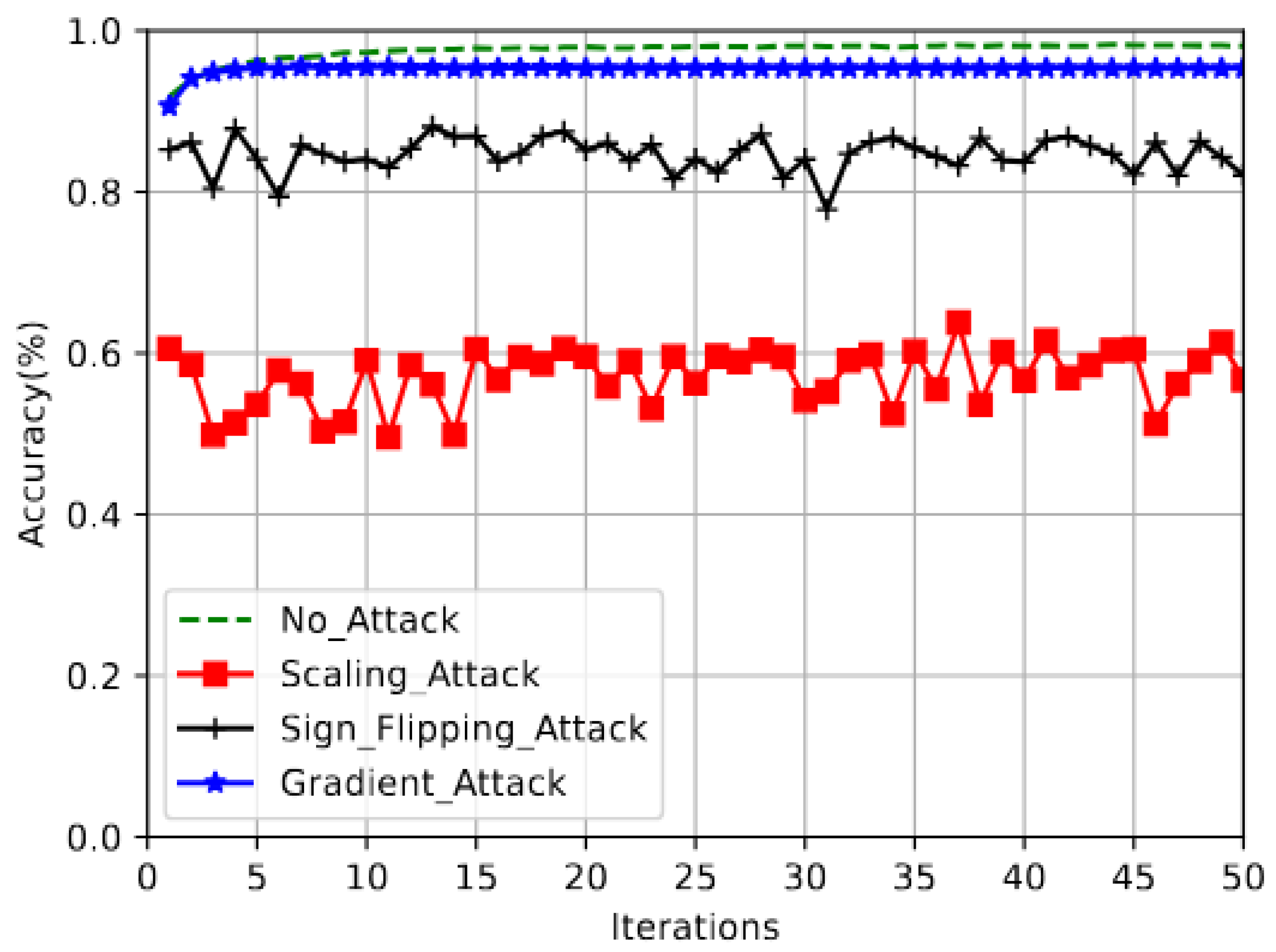

5.1. Model Poisoning Attacks

5.2. Attack Construction

5.3. FedECPA Defense Algorithm

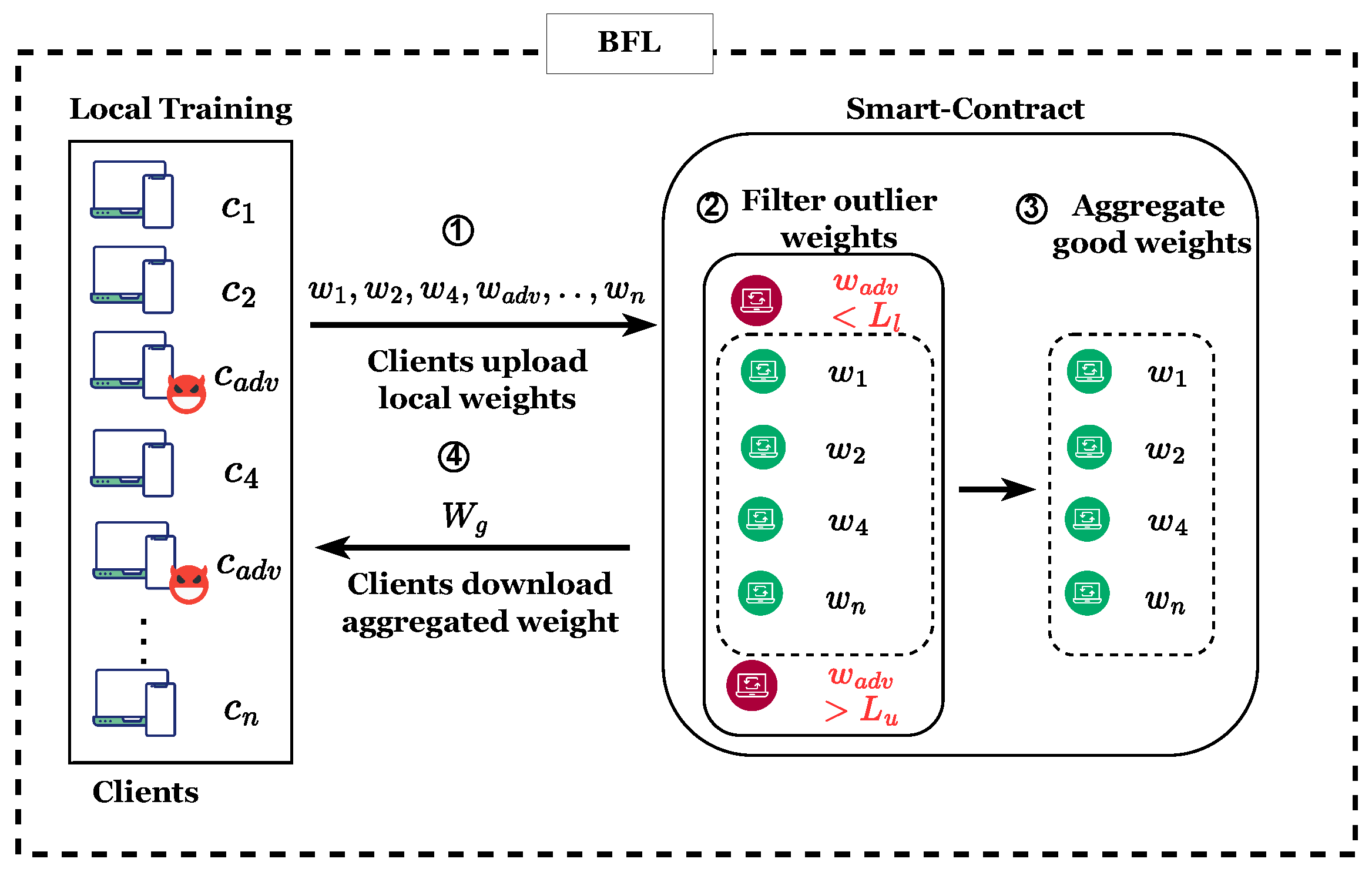

- Step 1: Each participating client performs its training and uploads its local model weights to the blockchain for aggregation (see lines 5–10 in Algorithm 1). It should be noted that the uploaded local weights come from both benign and malicious clients. They are added to the system as transactions.

Algorithm 1: Pseudocode of FedECPA

- Step 2: After the clients upload local weights, the outlier-filtering function in the smart contract is invoked by blockchain nodes before performing the aggregation (see line 16 in Algorithm 1). This function employs the IQR rule to identify malicious clients whose weights fall below a lower limit or exceed an upper limit. Note that, following the common practice in some FL-related papers (e.g., [43,52]) that use neural networks for building local models, the weights of each local model are treated as a two-dimensional vector in this section. These local weights are sorted across the vector dimension of each client. Then, the 25th percentile $Q_1$ and the 75th percentile $Q_3$ are computed. Following that, the interquartile range is computed as

  $$IQR = Q_3 - Q_1.$$

  Then, the Tukey fence lower and upper limits are computed as

  $$\text{lower} = Q_1 - 1.5 \cdot IQR, \qquad \text{upper} = Q_3 + 1.5 \cdot IQR,$$

  where 1.5 is the conventional Tukey constant. If any client’s weights fall below the lower limit or above the upper limit, the client is treated as a malicious client, and its local model weights are excluded from the weights aggregation for that particular learning iteration, as depicted in Figure 3.

- Step 3: Next, the weights returned from the filtering function are aggregated to compute the new global model (see lines 28–30 in Algorithm 1).

- Step 4: Lastly, the new global model is added to the blockchain and downloaded by clients for the next training iteration (see line 32 in Algorithm 1).
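The filtering and aggregation steps above can be sketched as follows. This is a hedged illustration rather than Algorithm 1 itself: the names `iqr_filter` and `fedecpa_aggregate` are hypothetical, the quartiles are taken per coordinate across clients with NumPy's default linear interpolation, the fence constant `k=1.5` is the conventional Tukey value, and a client is dropped as soon as any of its coordinates leaves the fences. The aggregation is a plain mean for brevity.

```python
import numpy as np

def iqr_filter(client_weights, k=1.5):
    """Return indices of clients whose weights all lie within the Tukey fences."""
    W = np.stack(client_weights)                   # shape: (n_clients, n_params)
    q1 = np.percentile(W, 25, axis=0)              # per-coordinate 25th percentile
    q3 = np.percentile(W, 75, axis=0)              # per-coordinate 75th percentile
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    inside = (W >= lower) & (W <= upper)
    return [i for i in range(len(W)) if inside[i].all()]

def fedecpa_aggregate(client_weights, k=1.5):
    """Average only the weights that pass the IQR filter (unweighted, for brevity)."""
    kept = iqr_filter(client_weights, k)
    return np.mean([client_weights[i] for i in kept], axis=0), kept

# Hypothetical round: four benign clients and one client submitting scaled weights.
clients = [np.array([1.0, 2.0]), np.array([1.1, 2.1]), np.array([0.9, 1.9]),
           np.array([1.05, 2.05]), np.array([10.0, 20.0])]
new_global, kept = fedecpa_aggregate(clients)
print(kept, new_global)
```

Because quartiles are insensitive to a minority of extreme values, the scaled weights of the fifth client do not move the fences and are excluded. The sketch does not handle the case where every client is filtered out.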

5.4. BFL Implementation

- Global model initialization function: At the beginning of the task, an initial global model is randomly generated on the blockchain. This happens after a requester submits a BFL task request to the blockchain.

- Global weight retrieval function: Each client invokes this function to obtain the current global weight, which may be the initial global weight or one computed in a subsequent iteration.

- Local weight upload function: This function is called by clients when submitting their local model weights to the blockchain network. Once verified, each weight is recorded as a transaction in a block on the blockchain.

- Aggregation function: After the required minimum number of weights from the participating clients has been uploaded to the blockchain network, this function is invoked automatically to aggregate all the weights. The aggregated weight becomes the current global weight for the next training iteration.

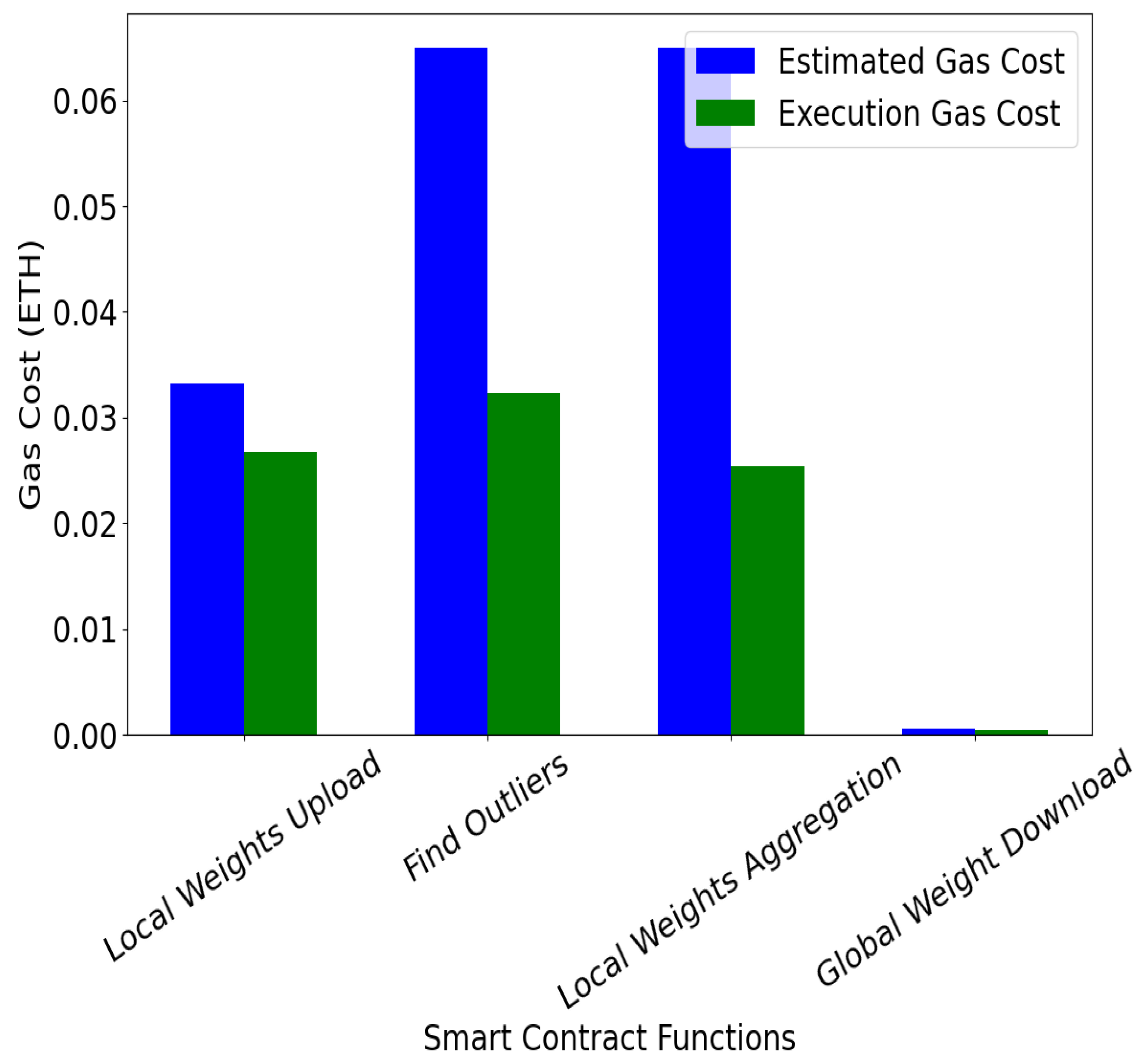

- Outlier filtering function: This function filters out the outlier weights so that they are not included in the aggregation process. To filter the outlier weights, the local model weights must be sorted. In our experiment, the weights were sorted before being uploaded to the blockchain because sorting on the blockchain consumes a large amount of gas. With this function, only weights from non-malicious clients are considered for aggregation in each communication iteration.
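The interplay of these functions can be mimicked off-chain with a small in-memory stand-in. This sketch is not the smart contract used in this work: the class `MockBFLContract`, its method names, and the pluggable `filter_fn` (which keeps every upload by default) are hypothetical, and no blockchain, gas accounting, or transaction verification is modeled.

```python
import numpy as np

class MockBFLContract:
    """In-memory stand-in for the smart contract; all names here are hypothetical."""

    def __init__(self, n_params, min_uploads, filter_fn=None, seed=0):
        rng = np.random.default_rng(seed)
        self.global_w = rng.normal(size=n_params)  # random initial global model
        self.min_uploads = min_uploads
        # filter_fn maps a list of weight vectors to the indices to keep.
        self.filter_fn = filter_fn or (lambda ws: list(range(len(ws))))
        self.pending = []                          # uploads of the current iteration
        self.ledger = []                           # append-only record of "transactions"

    def get_global(self):
        return self.global_w.copy()

    def upload_weights(self, client_id, weights):
        self.pending.append(np.asarray(weights, dtype=float))
        self.ledger.append(("upload", client_id))
        if len(self.pending) >= self.min_uploads:  # threshold reached: aggregate
            self._aggregate()

    def _aggregate(self):
        kept = self.filter_fn(self.pending)        # drop outliers before averaging
        self.global_w = np.mean([self.pending[i] for i in kept], axis=0)
        self.ledger.append(("aggregate", tuple(kept)))
        self.pending = []

contract = MockBFLContract(n_params=2, min_uploads=3)
for cid, w in enumerate([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]):
    contract.upload_weights(cid, w)
print(contract.get_global(), contract.ledger[-1])
```

Passing an IQR-based function as `filter_fn` would place the outlier check between upload and aggregation, matching the order described above.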

6. Experimental Setup and Performance Evaluation

6.1. Experimental Setup

- FedAvg with attack: The traditional FedAvg technique under a scaling attack.

- Multikrum [43]: An FL aggregation algorithm that selects the clients whose local model weights have the best scores. Specifically, it selects the local weights with the best scores and aggregates them by averaging. This algorithm is widely adopted as a defense against poisoning attacks.
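For reference, the Multikrum baseline can be sketched as below, following the general description in [27]: each update is scored by the sum of squared distances to its n − f − 2 nearest neighbours, and the m best-scored updates are averaged. The function name `multi_krum`, the parameter names `f` (assumed number of Byzantine clients) and `m` (number of selected updates), and the toy data are assumptions, not the configuration used in the experiments.

```python
import numpy as np

def multi_krum(weights, f, m):
    """Average the m updates with the lowest Krum scores, assuming f Byzantine clients."""
    W = np.stack(weights)
    n = len(W)
    if n < 2 * f + 3:
        raise ValueError("Krum requires n >= 2f + 3")
    # Pairwise squared Euclidean distances between all updates.
    d2 = ((W[:, None, :] - W[None, :, :]) ** 2).sum(axis=-1)
    scores = []
    for i in range(n):
        others = np.delete(d2[i], i)               # distances to every other update
        scores.append(np.sort(others)[: n - f - 2].sum())
    selected = np.argsort(scores)[:m]              # lowest score = best
    return W[selected].mean(axis=0), sorted(selected.tolist())

# Hypothetical round: four benign updates and one scaled update.
updates = [np.array([0.0, 0.0]), np.array([0.1, 0.0]), np.array([0.0, 0.1]),
           np.array([0.1, 0.1]), np.array([10.0, 10.0])]
agg, chosen = multi_krum(updates, f=1, m=4)
print(chosen, agg)
```

Unlike the IQR rule, this selection depends on pairwise distances between whole weight vectors, so its cost grows quadratically with the number of clients.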

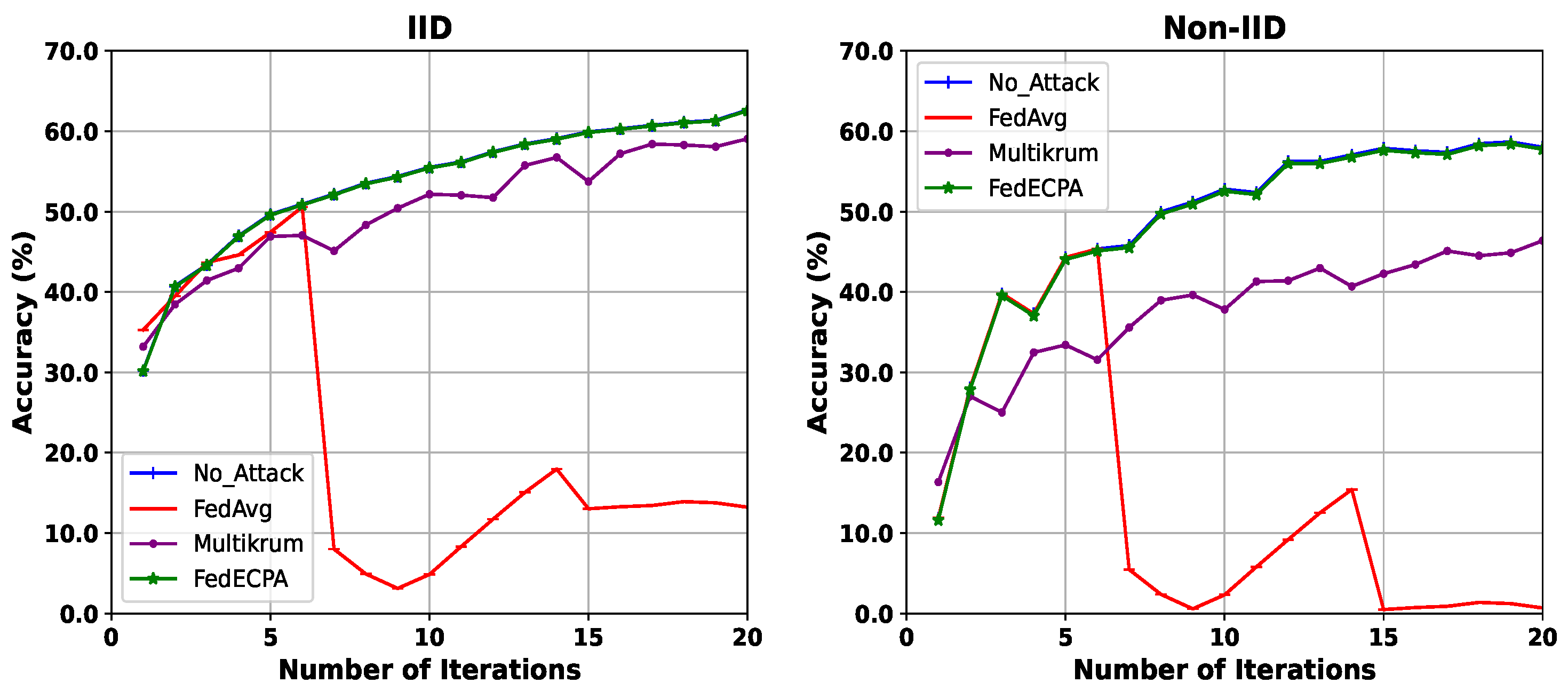

- Accuracy: It measures the efficacy of an ML model as the ratio of correct predictions to the total number of predictions. In this work, we measure the BFL model accuracy without the attack, the BFL model accuracy with the attack, and the BFL model accuracy with the attack and the proposed defense. Note that accuracy here refers to test accuracy, i.e., accuracy measured on the 10,000 testing samples that are held aside for validation.

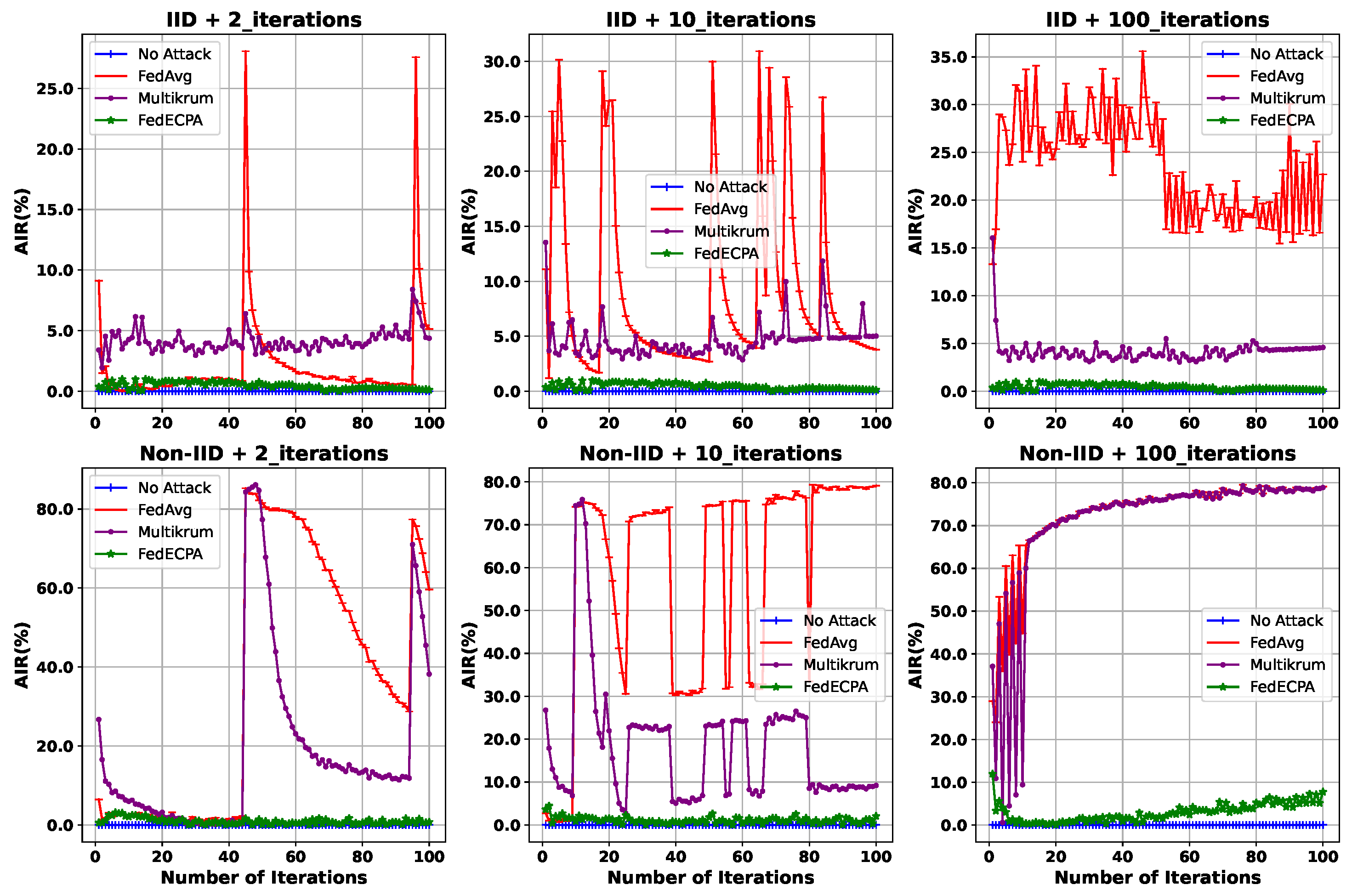

- Attack Impact Rate (AIR): This measures the effect of the model poisoning attack on the performance of the generated model. It is measured as the difference between the accuracy in a general setting without an attack and the accuracy under attack.

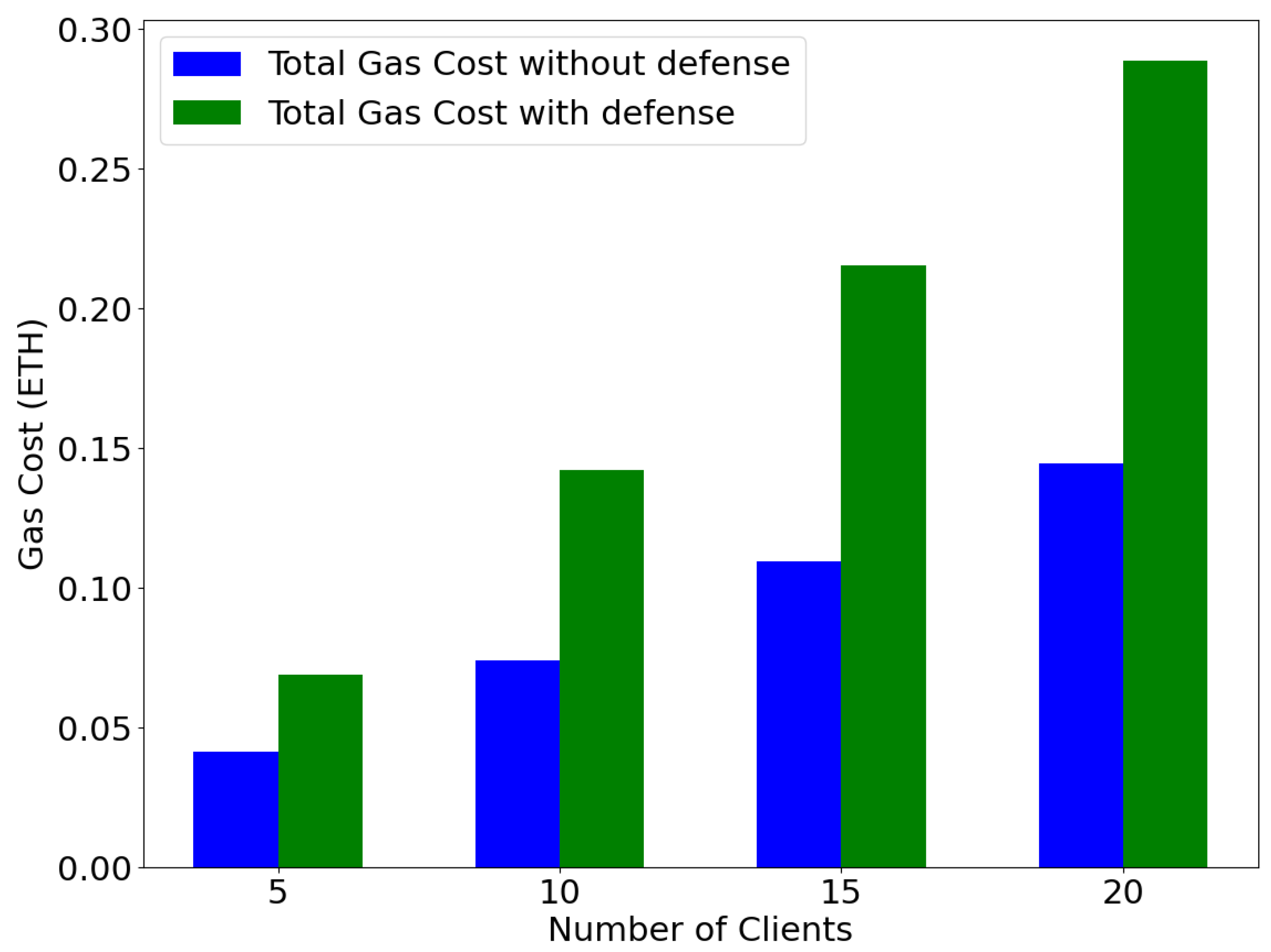

- Gas cost: This is the total cost of gas used for all the operations carried out in the blockchain network. It is calculated as the product of the gas used and the unit gas price specified in the transaction: gas cost = gas used × gas price. The unit gas price used for this experiment is 20 GWEI, and the cost is paid in Ether (ETH), a cryptocurrency.
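The two derived metrics above reduce to simple arithmetic, sketched here. The function names, the reading of AIR as a plain accuracy difference, and the input values are illustrative assumptions and not results from the experiments; the conversion uses the fact that 1 ETH equals 10^9 GWEI.

```python
GWEI_PER_ETH = 10**9

def attack_impact_rate(acc_no_attack, acc_under_attack):
    """Accuracy lost to the attack, as a difference of the two accuracies."""
    return acc_no_attack - acc_under_attack

def gas_cost_eth(gas_used, gas_price_gwei=20):
    """Gas cost in ETH: gas used times the unit gas price (given in GWEI)."""
    return gas_used * gas_price_gwei / GWEI_PER_ETH

air = attack_impact_rate(0.98, 0.70)   # hypothetical accuracies
cost = gas_cost_eth(1_500_000)         # hypothetical gas usage at 20 GWEI
print(round(air, 2), cost)
```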

6.2. Performance Evaluation of BFL

6.3. Blockchain Performance Evaluation

6.4. Complexity Analysis of FedECPA

6.5. Security Analysis of FedECPA

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Glossary

| Notation | Description |
|---|---|
| | Local model weights of client i |
| | Local model weight of a malicious client/attacker |
| | Global model weight |
| | Learning rate |
| n | Number of participating clients |
| v | Number of malicious participating clients/attackers |
| m | Percentage of attackers |
| | Boosting factor |
| t | Iteration/Round |
| | 25th percentile of the local model weights |
| | 75th percentile of the local model weights |
| | Model accuracy |
| IQR | Interquartile range of local model weights |
| | Lower limit of the IQR |
| | Upper limit of the IQR |
| AIR | Attack impact rate |

References

1. Wang, Z.; Hu, Q. Blockchain-based federated learning: A comprehensive survey. arXiv 2021, arXiv:2110.02182.

2. Qi, J.; Lin, F.; Chen, Z.; Tang, C.; Jia, R.; Li, M. High-quality model aggregation for blockchain-based federated learning via reputation-motivated task participation. IEEE Internet Things J. 2022, 9, 18378–18391.

3. Rodríguez-Barroso, N.; Jiménez-López, D.; Luzón, M.V.; Herrera, F.; Martínez-Cámara, E. Survey on federated learning threats: Concepts, taxonomy on attacks and defences, experimental study and challenges. Inf. Fusion 2023, 90, 148–173.

4. Guo, Y.; Sun, Y.; Hu, R.; Gong, Y. Hybrid Local SGD for Federated Learning with Heterogeneous Communications. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2021.

5. Zhu, J.; Cao, J.; Saxena, D.; Jiang, S.; Ferradi, H. Blockchain-empowered federated learning: Challenges, solutions, and future directions. ACM Comput. Surv. 2023, 55, 1–31.

6. Xu, G.; Li, H.; Liu, S.; Yang, K.; Lin, X. Verifynet: Secure and verifiable federated learning. IEEE Trans. Inf. Forensics Secur. 2019, 15, 911–926.

7. Mäenpää, D. Towards Peer-to-Peer Federated Learning: Algorithms and Comparisons to Centralized Federated Learning. Master’s Thesis, Linköping University, Linköping, Sweden, 2021.

8. Sattler, F.; Müller, K.R.; Wiegand, T.; Samek, W. On the Byzantine Robustness of Clustered Federated Learning. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 8861–8865.

9. Issa, W.; Moustafa, N.; Turnbull, B.; Sohrabi, N.; Tari, Z. Blockchain-based federated learning for securing internet of things: A comprehensive survey. ACM Comput. Surv. 2023, 55, 1–43.

10. Park, J.; Han, D.J.; Choi, M.; Moon, J. Sageflow: Robust federated learning against both stragglers and adversaries. In Proceedings of the 35th International Conference on Neural Information Processing Systems, Virtual, 6–14 December 2021; Volume 34, pp. 840–851.

11. Hua, G.; Zhu, L.; Wu, J.; Shen, C.; Zhou, L.; Lin, Q. Blockchain-based federated learning for intelligent control in heavy haul railway. IEEE Access 2020, 8, 176830–176839.

12. Korkmaz, C.; Kocas, H.E.; Uysal, A.; Masry, A.; Ozkasap, O.; Akgun, B. Chain FL: Decentralized federated machine learning via blockchain. In Proceedings of the 2020 Second International Conference on Blockchain Computing and Applications (BCCA), Antalya, Turkey, 2–5 November 2020; pp. 140–146.

13. Mondal, A.; Virk, H.; Gupta, D. Beas: Blockchain enabled asynchronous & secure federated machine learning. arXiv 2022, arXiv:2202.02817.

14. Bandara, E.; Shetty, S.; Rahman, A.; Mukkamala, R.; Zhao, J.; Liang, X. Bassa-ML—A Blockchain and Model Card Integrated Federated Learning Provenance Platform. In Proceedings of the 2022 IEEE 19th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2022; pp. 753–759.

15. Li, J.; Shao, Y.; Wei, K.; Ding, M.; Ma, C.; Shi, L.; Han, Z.; Poor, H.V. Blockchain Assisted Decentralized Federated Learning (BLADE-FL): Performance Analysis and Resource Allocation. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 2401–2415.

16. Cui, L.; Su, X.; Zhou, Y. A Fast Blockchain-based Federated Learning Framework with Compressed Communications. IEEE J. Sel. Areas Commun. 2022, 40, 3358–3372.

17. Wang, Q.; Liao, W.; Guo, Y.; McGuire, M.; Yu, W. Blockchain-Empowered Federated Learning Through Model and Feature Calibration. IEEE Internet Things J. 2023, 11, 5770–5780.

18. Jiang, Y.; Ma, B.; Wang, X.; Yu, G.; Yu, P.; Wang, Z.; Ni, W.; Liu, R.P. Blockchained Federated Learning for Internet of Things. ACM Comput. Surv. 2024, 56, 1–37.

19. Ababio, I.B.; Bieniek, J.; Rahouti, M.; Hayajneh, T.; Aledhari, M.; Verma, D.C.; Chehri, A. A Blockchain-Assisted Federated Learning Framework for Secure Data Sharing in Industrial IoT. Future Internet 2025, 17, 13.

20. Moudoud, H.; Cherkaoui, S. Toward Secure and Private Federated Learning for IoT using Blockchain. In Proceedings of the GLOBECOM 2022—2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022.

21. Yang, Q.; Xu, W.; Wang, T.; Wang, H.; Wu, X.; Cao, B.; Zhang, S. Blockchain-Based Decentralized Federated Learning With On-Chain Model Aggregation and Incentive Mechanism for Industrial IoT. IEEE Trans. Ind. Inform. 2024, 5, 6420–6429.

22. García-Márquez, M.; Rodríguez-Barroso, N.; Luzón, M.; Herrera, F. Krum Federated Chain (KFC): Using blockchain to defend against adversarial attacks in Federated Learning. arXiv 2025, arXiv:2502.06917.

23. Ma, Z.; Ma, J.; Miao, Y.; Li, Y.; Deng, R.H. ShieldFL: Mitigating Model Poisoning Attacks in Privacy-Preserving Federated Learning. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1639–1654.

24. Guo, J.; Li, H.; Huang, F.; Liu, Z.; Peng, Y.; Li, X.; Ma, J.; Menon, V.G.; Igorevich, K.K. ADFL: A Poisoning Attack Defense Framework for Horizontal Federated Learning. IEEE Trans. Ind. Inform. 2022, 18, 6526–6536.

25. Zhang, Z.; Cao, X.; Jia, J.; Gong, N.Z. FLDetector: Defending federated learning against model poisoning attacks via detecting malicious clients. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 14–18 August 2022; pp. 2545–2555.

26. Olapojoye, R.; Baza, M.; Salman, T. On the Analysis of Model Poisoning Attacks Against Blockchain-Based Federated Learning. In Proceedings of the 2024 IEEE 21st Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 6–9 January 2024; pp. 943–949.

27. Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine learning with adversaries: Byzantine tolerant gradient descent. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

28. Rückel, T.; Sedlmeir, J.; Hofmann, P. Fairness, integrity, and privacy in a scalable blockchain-based federated learning system. Comput. Netw. 2022, 202, 108621.

29. Hu, Q.; Wang, Z.; Xu, M.; Cheng, X. Blockchain and federated edge learning for privacy-preserving mobile crowdsensing. IEEE Internet Things J. 2021, 10, 12000–12011.

30. Lyu, L.; Yu, H.; Yang, Q. Threats to federated learning: A survey. arXiv 2020, arXiv:2003.02133.

31. Wang, N.; Yang, W.; Wang, X.; Wu, L.; Guan, Z.; Du, X.; Guizani, M. A blockchain based privacy-preserving federated learning scheme for Internet of Vehicles. Digit. Commun. Netw. 2024, 10, 126–134.

32. Salman, T.; Zolanvari, M.; Erbad, A.; Jain, R.; Samaka, M. Security services using blockchains: A state of the art survey. IEEE Commun. Surv. Tutor. 2018, 21, 858–880.

33. Hildebrand, B.; Baza, M.; Salman, T.; Tabassum, S.; Konatham, B.; Amsaad, F.; Razaque, A. A comprehensive review on blockchains for Internet of Vehicles: Challenges and directions. Comput. Sci. Rev. 2023, 48, 100547.

34. Rajasekaran, A.S.; Azees, M.; Al-Turjman, F. A comprehensive survey on blockchain technology. Sustain. Energy Technol. Assess. 2022, 52, 102039.

35. Hewa, T.M.; Hu, Y.; Liyanage, M.; Kanhare, S.S.; Ylianttila, M. Survey on blockchain-based smart contracts: Technical aspects and future research. IEEE Access 2021, 9, 87643–87662.

36. Nguyen, D.C.; Ding, M.; Pham, Q.V.; Pathirana, P.N.; Le, L.B.; Seneviratne, A.; Li, J.; Niyato, D.; Poor, H.V. Federated learning meets blockchain in edge computing: Opportunities and challenges. IEEE Internet Things J. 2021, 8, 12806–12825.

37. Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

38. Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 12:1–12:19.

39. Liang, X.; Liu, Y.; Chen, T.; Liu, M.; Yang, Q. Federated Transfer Reinforcement Learning for Autonomous Driving. In Adaptation, Learning, and Optimization; Springer: Cham, Switzerland, 2022; Volume 27, pp. 357–371.

40. Xu, J.; Wang, H. Client Selection and Bandwidth Allocation in Wireless Federated Learning Networks: A Long-Term Perspective. IEEE Trans. Wirel. Commun. 2021, 20, 1188–1200.

41. Huang, T.; Lin, W.; Shen, L.; Li, K.; Zomaya, A.Y. Stochastic Client Selection for Federated Learning with Volatile Clients. IEEE Internet Things J. 2022, 9, 20055–20070.

42. Shi, F.; Hu, C.; Lin, W.; Fan, L.; Huang, T.; Wu, W. VFedCS: Optimizing Client Selection for Volatile Federated Learning. IEEE Internet Things J. 2022, 9, 24995–25010.

43. Fung, C.; Yoon, C.J.; Beschastnikh, I. Mitigating sybils in federated learning poisoning. arXiv 2018, arXiv:1808.04866.

44. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

45. Hsu, T.M.H.; Qi, H.; Brown, M. Measuring the effects of non-identical data distribution for federated visual classification. arXiv 2019, arXiv:1909.06335.

46. Qu, Y.; Uddin, M.P.; Gan, C.; Xiang, Y.; Gao, L.; Yearwood, J. Blockchain-Enabled Federated Learning: A Survey. ACM Comput. Surv. 2022, 55, 1–35.

47. Nicolazzo, S.; Arazzi, M.; Nocera, A.; Conti, M. Privacy-Preserving in Blockchain-based Federated Learning Systems. arXiv 2024, arXiv:2401.03552.

48. Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Online, 26–28 August 2020; pp. 2938–2948.

49. Xie, C.; Koyejo, S.; Gupta, I. Zeno++: Robust fully asynchronous SGD. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 10495–10503.

50. Wan, C.; Huang, F.; Zhao, X. Average gradient-based adversarial attack. IEEE Trans. Multimed. 2023, 25, 9572–9585.

51. Dash, C.S.K.; Behera, A.K.; Dehuri, S.; Ghosh, A. An outliers detection and elimination framework in classification task of data mining. Decis. Anal. J. 2023, 6, 100164.

52. Nguyen, T.D.; Rieger, P.; De Viti, R.; Chen, H.; Brandenburg, B.B.; Yalame, H.; Möllering, H.; Fereidooni, H.; Marchal, S.; Miettinen, M.; et al. FLAME: Taming backdoors in federated learning. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 1415–1432.

53. Wood, G. Ethereum: A secure decentralized generalized transaction ledger. Ethereum Proj. Yellow Pap. 2014, 151, 1–32.

54. Ganache. 2021. Available online: https://trufflesuite.com/docs/ganache/ (accessed on 22 September 2025).

55. LeCun, Y. The MNIST Database of Handwritten Digits. 1998. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 22 September 2025).

56. Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. Master’s Thesis, University of Toronto, Toronto, ON, Canada, 2009.

57. Li, Q.; Diao, Y.; Chen, Q.; He, B. Federated Learning on Non-IID Data Silos: An Experimental Study. arXiv 2021, arXiv:2102.02079.

58. Guo, J.; Liu, C. Practical poisoning attacks on neural networks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 142–158.

59. Flower Documentation. 2021. Available online: https://flower.dev/docs/ (accessed on 22 September 2025).

60. Chollet, F. Keras. 2015. Available online: https://github.com/fchollet/keras (accessed on 22 September 2025).

| Property | FedECPA (Ours) | Blade-FL [15] | Krum [27] | Shield-FL [23] | ADFL [24] | FLDetector [25] |
|---|---|---|---|---|---|---|
| Technique/Approach | IQR-based detection of outlier model weights; exclude outliers before aggregation | Clients are trainers + miners; PoW secures updates | Selects local model most similar to others | Cosine similarity between local and global weights | Clients generate proofs via garbled aggregation | Predicts client updates, assigns consistency score |
| Strengths | Detects malicious clients every round; robust to a range of attack strategies | Reduces privacy leakage; strong blockchain security | Limits impact of single malicious client | Detects distant outliers | Verifiability; privacy-preserving | Detects sudden deviations |
| Limitations | Fails if majority are malicious | No malicious client detection | Fails if majority are malicious | Fails if poisoned model is near aggregated weights | High overhead; weak against adaptive adversaries | Misses consistent but poisoned updates |

| Learning Iteration | FedAvg (Accuracy) | MultiKrum (Accuracy) | FedECPA (Accuracy) |
|---|---|---|---|
| 45th | 0.69 | 0.92 | 0.97 |
| 50th | 0.92 | 0.93 | 0.98 |
| 95th | 0.70 | 0.93 | 0.98 |
| 100th | 0.93 | 0.94 | 0.98 |

| Learning Iteration | FedAvg (Accuracy) | MultiKrum (Accuracy) | FedECPA (Accuracy) |
|---|---|---|---|
| 45th | 0.01 | 0.16 | 0.87 |
| 50th | 0.04 | 0.01 | 0.87 |
| 95th | 0.11 | 0.18 | 0.89 |
| 100th | 0.29 | 0.51 | 0.89 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Olapojoye, R.; Salman, T.; Baza, M.; Alshehri, A. FedECPA: An Efficient Countermeasure Against Scaling-Based Model Poisoning Attacks in Blockchain-Based Federated Learning. Sensors 2025, 25, 6343. https://doi.org/10.3390/s25206343
