Robust Underwater Docking Visual Guidance and Positioning Method Based on a Cage-Type Dual-Layer Guiding Light Array

Abstract

1. Introduction

- (1) A visual guidance scheme based on a dual-layer light array is proposed to mitigate the limitations of onboard visual systems with restricted fields of view. By optimizing the spatial configuration of the light sources, the scheme ensures continuous target visibility within the docking station and significantly enhances the reliability of the autonomous docking process.

- (2) Based on this guidance scheme, a corresponding visual localization method for the dual-layer guiding light array is presented. This method dynamically distinguishes between the front-layer and rear-layer light sources at each docking stage, ensuring stable optical guidance with at least one layer at any given time. When the number of detected light sources in a single layer reaches four or more, the method performs robust tag matching and achieves pose estimation in world coordinates. The localization of each layer is performed independently to guarantee the robustness of the overall system.

- (3) To verify the effectiveness and accuracy of the proposed method, a series of simulation and pool experiments were conducted. The experimental results demonstrate that the proposed method not only adapts effectively to changes in the field of view but also robustly addresses issues such as missing and spurious light beacons, thus significantly improving the robustness of the AUV autonomous docking process.
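The layer-selection rule described in contribution (2) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `LayerDetections`, `usable_layers`, and `MIN_LIGHTS_FOR_POSE` are hypothetical, and only the four-light threshold and the independence of the two layers come from the text.

```python
from dataclasses import dataclass
from typing import List

# From the text: a layer supports tag matching and pose estimation
# once four or more of its lights are detected.
MIN_LIGHTS_FOR_POSE = 4


@dataclass
class LayerDetections:
    """Hypothetical container for per-frame detection counts."""
    front: int  # number of detected front-layer lights
    rear: int   # number of detected rear-layer lights


def usable_layers(det: LayerDetections) -> List[str]:
    """Return the layers that can each be localized independently."""
    layers = []
    if det.front >= MIN_LIGHTS_FOR_POSE:
        layers.append("front")
    if det.rear >= MIN_LIGHTS_FOR_POSE:
        layers.append("rear")
    return layers


if __name__ == "__main__":
    # Approach stage: front layer fully visible, rear layer just appearing.
    print(usable_layers(LayerDetections(front=5, rear=2)))
    # Transition stage: both layers provide enough lights.
    print(usable_layers(LayerDetections(front=4, rear=4)))
```

Because each layer is evaluated on its own, losing one layer (for example, when the front lights leave the field of view) does not affect the other layer's eligibility.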

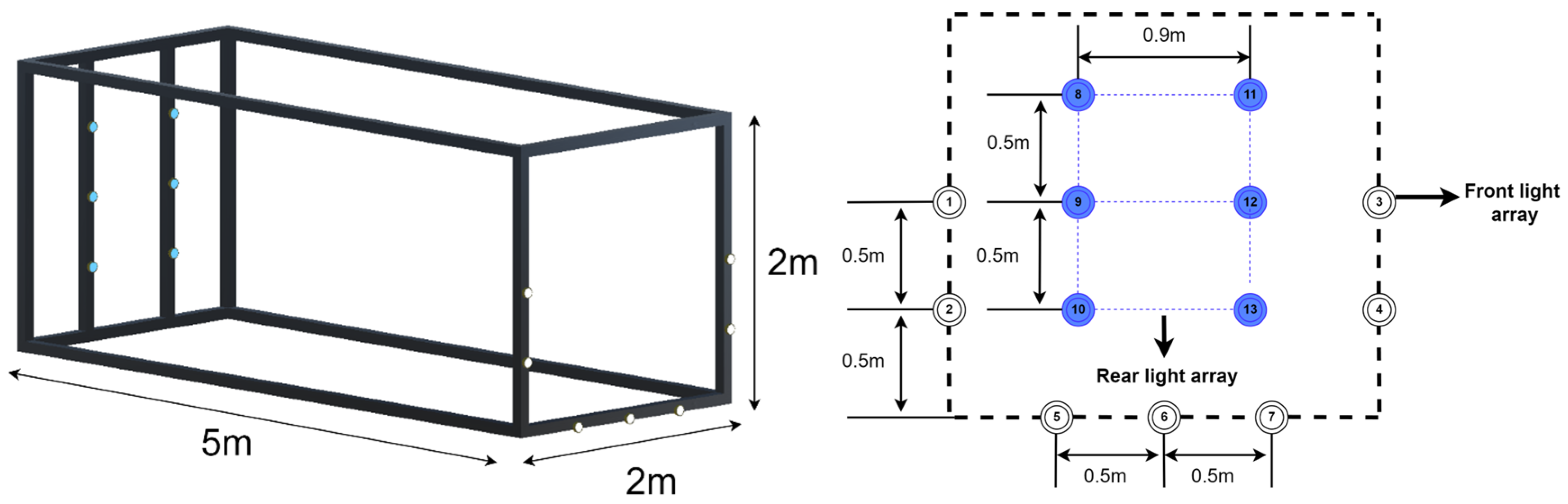

2. Dual-Layer Cage-Type Guide Light Array Position Scheme

2.1. Design of Dual-Layer Light Array for Cage-Type Docking System

- (1) Front-layer light array: The front layer should support long-distance detection and adopt a dispersed, asymmetrical configuration to mitigate light overlap or merging caused by optical diffusion.

- (2) Rear-layer light array: The rear layer should adopt a compact layout with lights featuring narrow beam angles, making it suitable for operation in restricted field-of-view scenarios and ensuring reliable detection at short distances.

- (3) Structural features: The overall arrangement should present distinct spatial patterns, allowing the AUV to accurately differentiate and match individual light beacons as they progressively enter the camera’s field of view.

- (4) Deployment location: Since AUVs typically approach the docking station while ascending from deeper to shallower depths, the front-layer light array should be primarily deployed near the lower section of the docking station to facilitate early detection and localization.

2.2. Phase-Wise Analysis of Docking Process

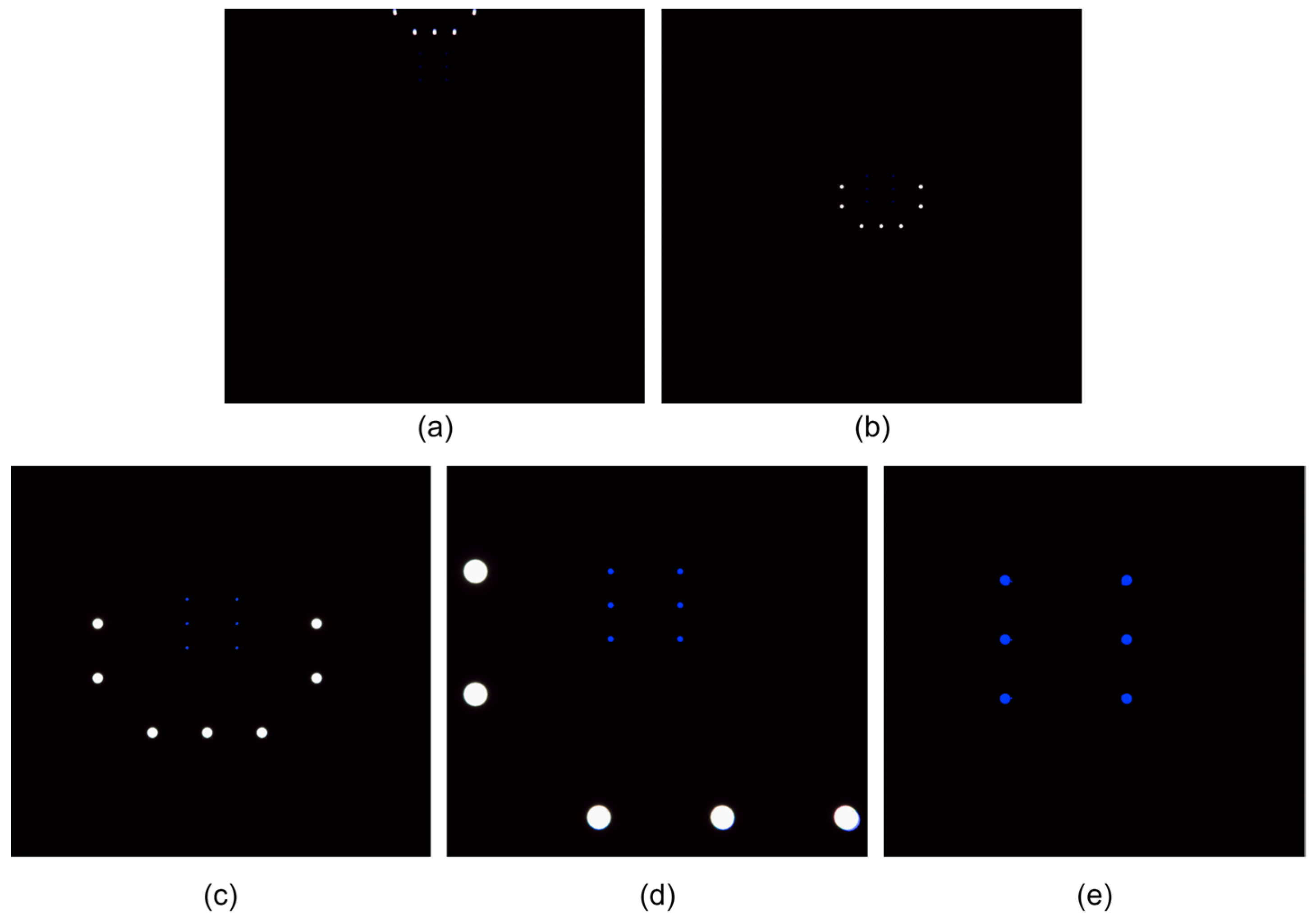

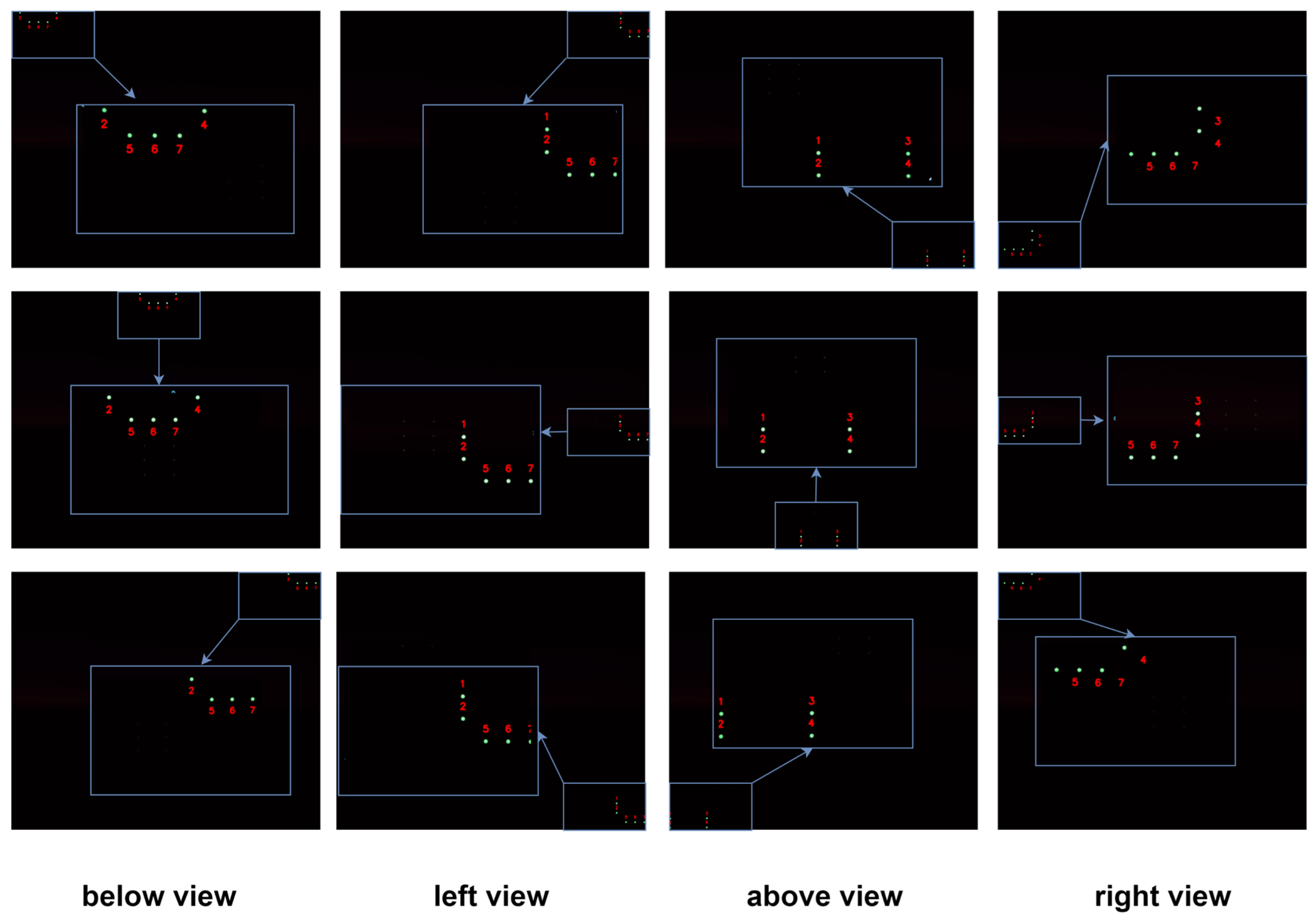

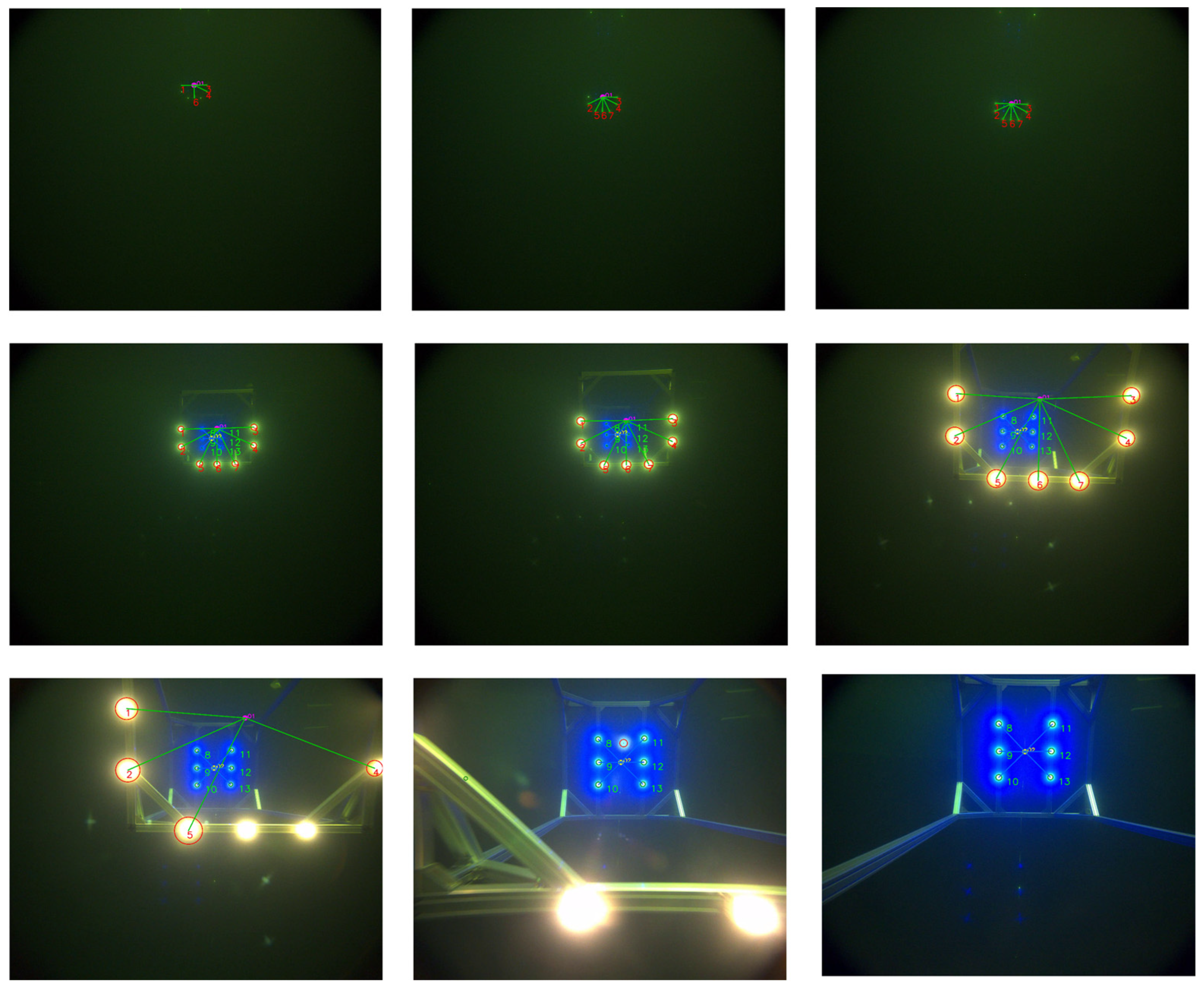

- Search stage: The AUV approaches the docking station from a distance and performs small-scale vertical and lateral maneuvers to search for the front-layer light array. During this phase, the system estimates the position of the docking station based on partially detected front-layer lights. Due to factors such as light attenuation and relative positioning, typically only a subset of the front-layer lights is visible, while the rear-layer lights are out of view (Figure 3a).

- Front-layer light array approach stage: As the AUV moves closer, the front-layer lights fully enter the camera’s field of view and can be reliably detected. The system utilizes these lights for precise localization and navigation. At this point, the rear-layer lights begin to gradually appear (Figure 3b).

- Transition stage between front and rear arrays: As the AUV continues to advance, the rear-layer lights progressively enter the field of view. The system must dynamically distinguish between front-layer and rear-layer light sources so that guidance hands over smoothly from the front array to the rear array without tracking errors or interruptions (Figure 3c,d).

- Rear-layer light array docking stage: Once the AUV enters the interior of the docking station, only the rear light array remains visible. At this stage, the system relies entirely on the rear light array for fine-grained localization and attitude adjustment, ensuring accurate and stable final docking (Figure 3e).
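The four stages above can be reproduced qualitatively with a simple geometric visibility check. The sketch below is illustrative only: the light coordinates, the detection ranges, and the function names are hypothetical, the field of view is treated as a cone around the optical axis, and the layout is symmetric for brevity even though the paper calls for an asymmetric front layer. Only the 60° field of view is taken from the equipment table.

```python
import math

HALF_FOV_DEG = 30.0  # half of the 60° field of view listed for the underwater camera

# Hypothetical layout in a dock frame (metres); z points into the dock.
FRONT = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
REAR = [(0.2, 0.0, 2.0), (0.0, 0.2, 2.0), (-0.2, 0.0, 2.0), (0.0, -0.2, 2.0)]
FRONT_RANGE_M = 15.0  # assumed detection range of the dispersed front lights
REAR_RANGE_M = 5.0    # assumed detection range of the narrow-beam rear lights


def is_visible(light, cam_z, max_range):
    """Camera sits on the z-axis at cam_z and looks along +z."""
    x, y, z = light
    depth = z - cam_z
    if depth <= 0.0:
        return False  # light is behind the camera
    lateral = math.hypot(x, y)
    off_axis_deg = math.degrees(math.atan2(lateral, depth))
    in_fov = off_axis_deg <= HALF_FOV_DEG
    in_range = math.hypot(lateral, depth) <= max_range
    return in_fov and in_range


def visible_counts(cam_z):
    """Return (front, rear) counts of visible lights for a camera at cam_z."""
    front = sum(is_visible(p, cam_z, FRONT_RANGE_M) for p in FRONT)
    rear = sum(is_visible(p, cam_z, REAR_RANGE_M) for p in REAR)
    return front, rear


if __name__ == "__main__":
    for cam_z in (-12.0, -2.0, -1.0, 0.5):
        print(cam_z, visible_counts(cam_z))
```

With these assumed numbers, the camera sees only the front layer at long range, both layers during the transition, and only the rear layer once the front lights fall outside the cone or behind the camera. The partial visibility of the search stage depends on attenuation and lateral offset, which this sketch does not model.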

3. Visual Positioning Method Based on Dual-Layer Light Array

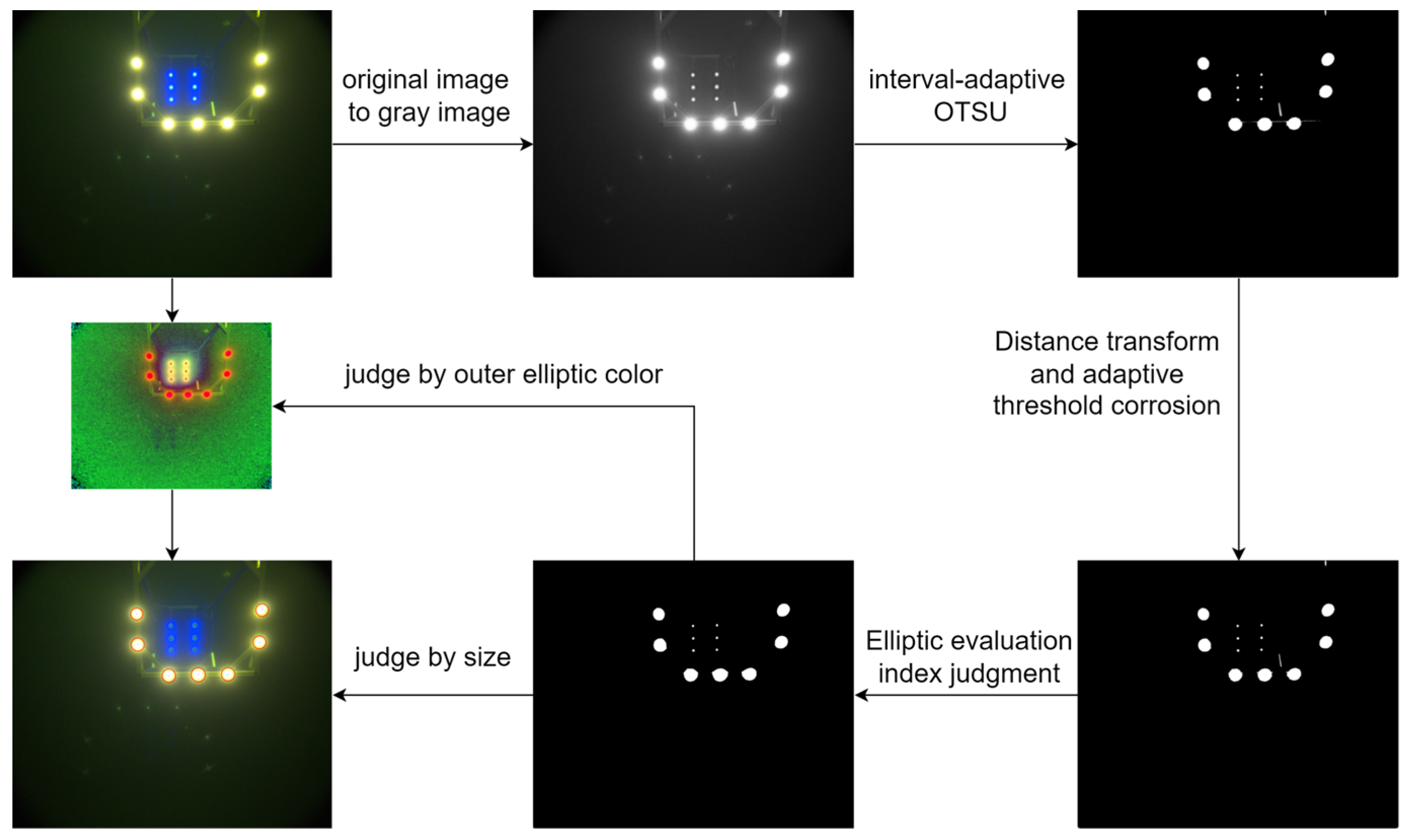

3.1. Extraction and Discrimination of Front and Rear Layer Light Arrays

3.1.1. Light Source Feature Extraction

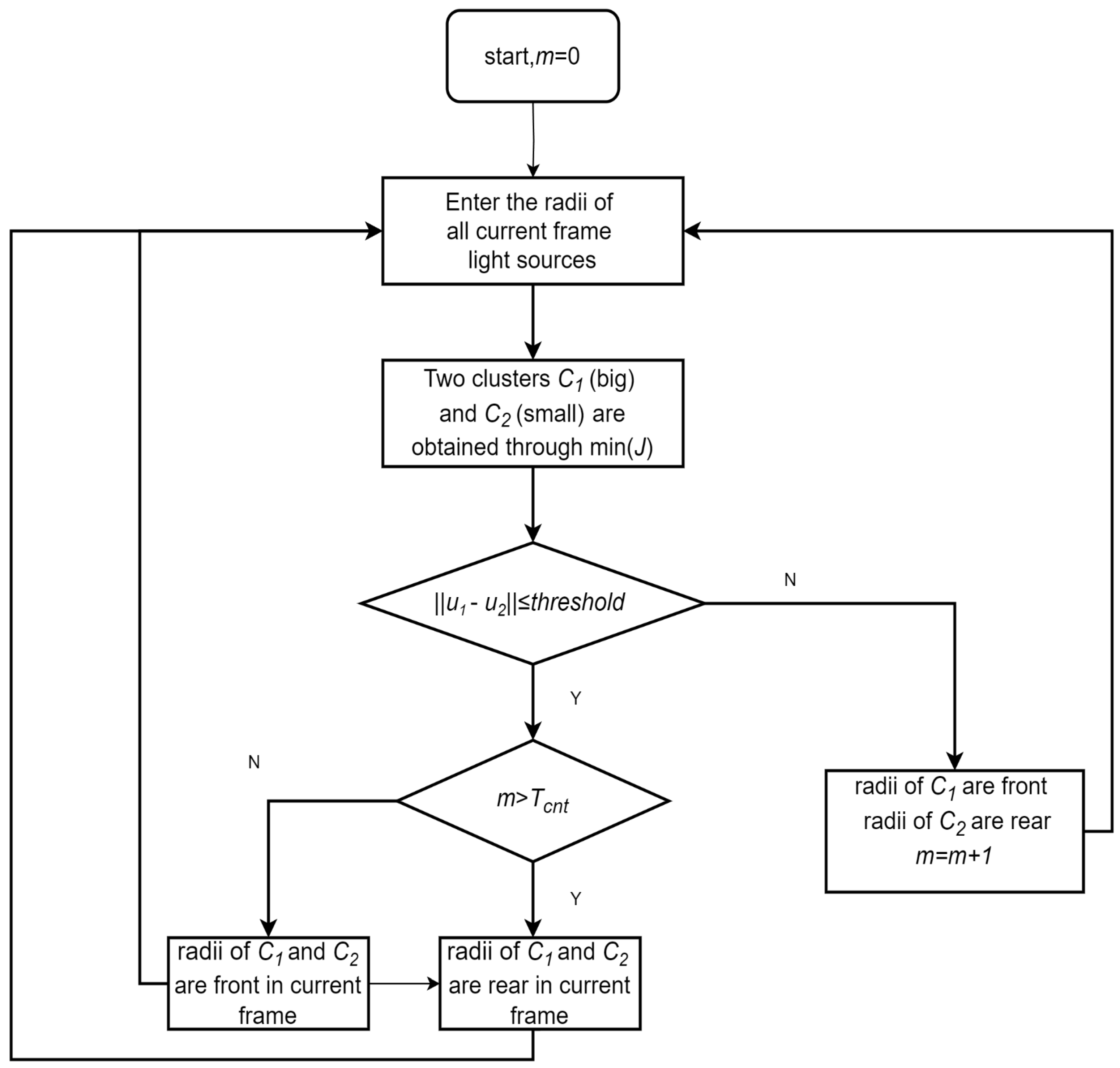

3.1.2. Discrimination of Front and Rear Layer Light Arrays

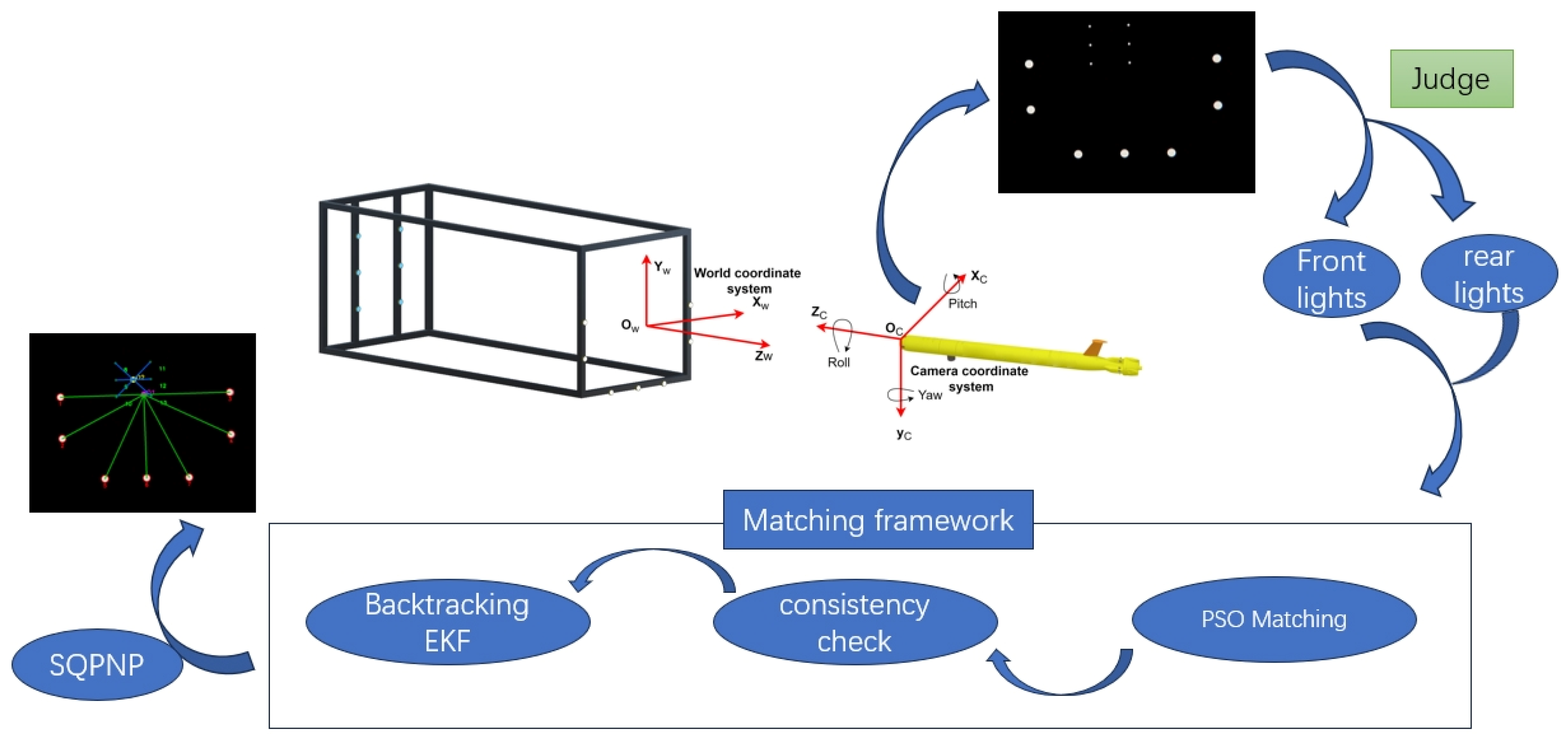

3.2. Single-Layer Light Array Tag Matching Framework

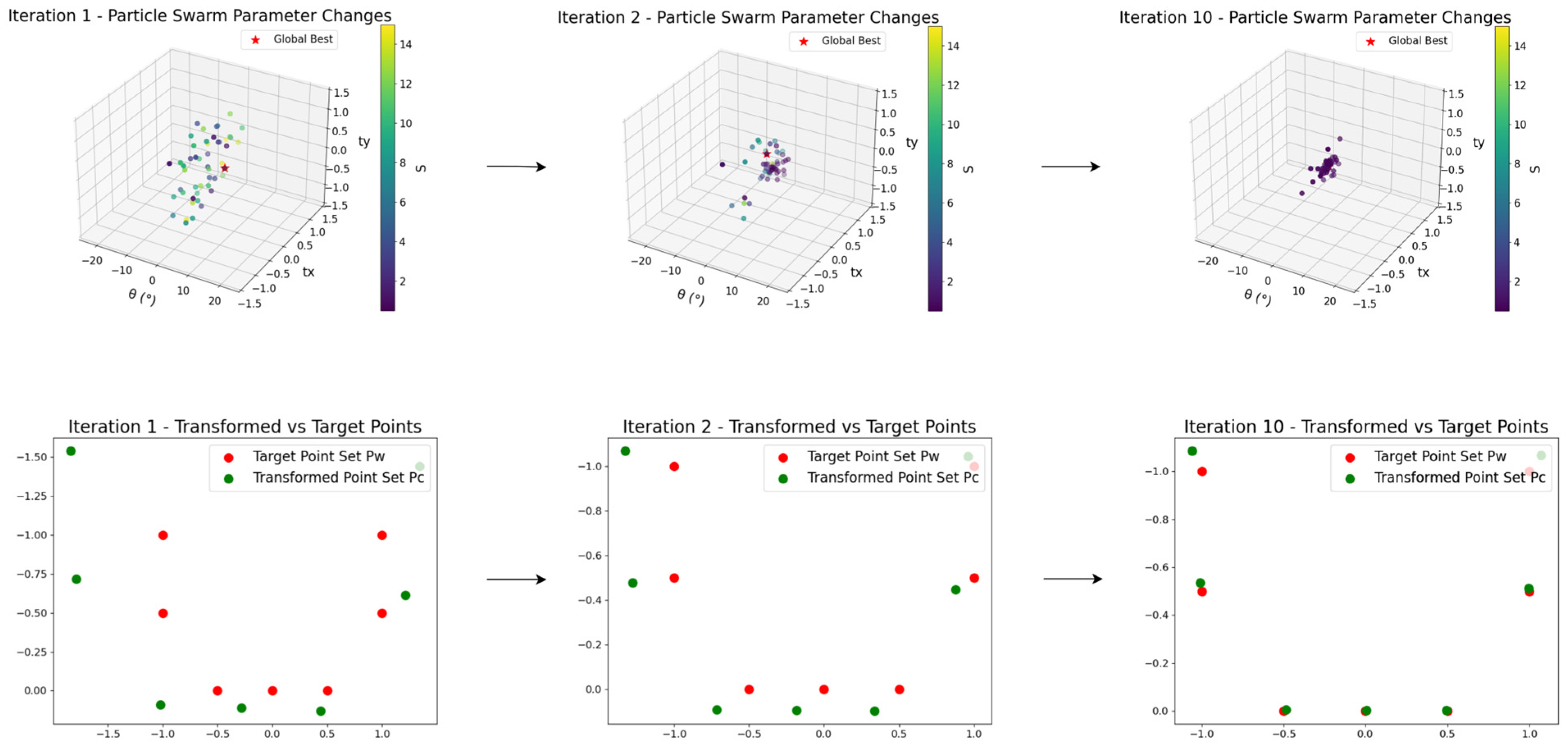

3.2.1. Enhanced PSO for AUV Active Beacon Matching

3.2.2. Consistency of Distance and Angular Ratios

- a. Distance-Ratio Consistency

- b. Angular Consistency
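One common way to realize checks of this kind is to compare quantities that are invariant to translation, rotation, and uniform scaling in the image plane. The sketch below is an assumption about how such a test could look, not the formulation used in the paper: the functions `distance_ratios`, `interior_angles`, and `consistent`, the tolerances, and the example template are all hypothetical, and the invariance is exact only for similarity transforms (a near-frontal view).

```python
import math
from itertools import combinations


def distance_ratios(pts):
    """Pairwise distances divided by the longest one (scale-invariant)."""
    d = [math.dist(p, q) for p, q in combinations(pts, 2)]
    longest = max(d)
    return [v / longest for v in d]


def interior_angles(pts):
    """Angle at each point between its predecessor and successor in list order."""
    n = len(pts)
    angles = []
    for k in range(n):
        px, py = pts[k]
        ax, ay = pts[k - 1][0] - px, pts[k - 1][1] - py
        bx, by = pts[(k + 1) % n][0] - px, pts[(k + 1) % n][1] - py
        cos_t = (ax * bx + ay * by) / (math.hypot(ax, ay) * math.hypot(bx, by))
        angles.append(math.acos(max(-1.0, min(1.0, cos_t))))
    return angles


def consistent(template, observed, ratio_tol=0.05, angle_tol=math.radians(5.0)):
    """True if the candidate labelling preserves distance ratios and angles."""
    ratio_err = max(abs(a - b) for a, b in
                    zip(distance_ratios(template), distance_ratios(observed)))
    angle_err = max(abs(a - b) for a, b in
                    zip(interior_angles(template), interior_angles(observed)))
    return ratio_err <= ratio_tol and angle_err <= angle_tol


def similarity(pts, scale, theta, tx, ty):
    """Apply a rotation, uniform scale, and translation (for the demo only)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(scale * (c * x - s * y) + tx, scale * (s * x + c * y) + ty)
            for x, y in pts]


if __name__ == "__main__":
    template = [(0.0, 0.0), (2.0, 0.0), (2.5, 1.5), (0.0, 1.0)]  # asymmetric
    observed = similarity(template, 3.0, math.radians(30.0), 100.0, 50.0)
    swapped = [observed[0], observed[2], observed[1], observed[3]]
    print(consistent(template, observed), consistent(template, swapped))
```

An asymmetric template matters here: with a symmetric layout, several labellings would produce identical ratios and angles, so the check could not tell them apart.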

3.2.3. Backtracking EKF Matching Correction

- a. Predictive Covariance Projection

- b. Candidate Gating via Mahalanobis Distance

- c. EKF Update

- d. Backtracking Logic

- e. Convergence and Pose Acceptance
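Steps a and b follow a standard EKF pattern: the predicted state covariance is projected into measurement space as S = H P Hᵀ + R, and a candidate detection is kept only if its squared Mahalanobis distance to the predicted measurement falls below a chi-square threshold. The sketch below shows that pattern for a 2-D image measurement. The state layout, the matrices, and the function names are hypothetical and are not taken from the paper; the 9.21 threshold is the 99% chi-square quantile for two degrees of freedom.

```python
CHI2_GATE_2DOF_99 = 9.21  # chi-square 99% quantile, 2 degrees of freedom


def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def innovation_cov(H, P, R):
    """S = H P H^T + R: state covariance projected into the image plane."""
    hpht = matmul(matmul(H, P), transpose(H))
    return [[hpht[i][j] + R[i][j] for j in range(2)] for i in range(2)]


def mahalanobis_sq(z, z_pred, S):
    """Squared Mahalanobis distance for a 2-D innovation and 2x2 covariance."""
    dx, dy = z[0] - z_pred[0], z[1] - z_pred[1]
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    inv = [[S[1][1] / det, -S[0][1] / det],
           [-S[1][0] / det, S[0][0] / det]]
    return (dx * (inv[0][0] * dx + inv[0][1] * dy)
            + dy * (inv[1][0] * dx + inv[1][1] * dy))


def gate(candidates, z_pred, S, threshold=CHI2_GATE_2DOF_99):
    """Keep only the candidates that fall inside the validation gate."""
    return [z for z in candidates if mahalanobis_sq(z, z_pred, S) <= threshold]


if __name__ == "__main__":
    # Hypothetical per-beacon state [u, v, du, dv]; only (u, v) is measured.
    H = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
    P = [[4.0, 0, 0, 0], [0, 4.0, 0, 0], [0, 0, 1.0, 0], [0, 0, 0, 1.0]]
    R = [[1.0, 0.0], [0.0, 1.0]]
    S = innovation_cov(H, P, R)
    print(gate([(102.0, 101.0), (110.0, 100.0)], (100.0, 100.0), S))
```

A candidate that fails the gate is a natural trigger for the backtracking step: the label assignment that produced it can be revisited rather than fed into the EKF update.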

3.3. Pose Estimation

4. Experiment

4.1. Single-Layer Light Array Tag Matching Framework

- (1)

- Simulation Environment and Parameter Settings

- (2)

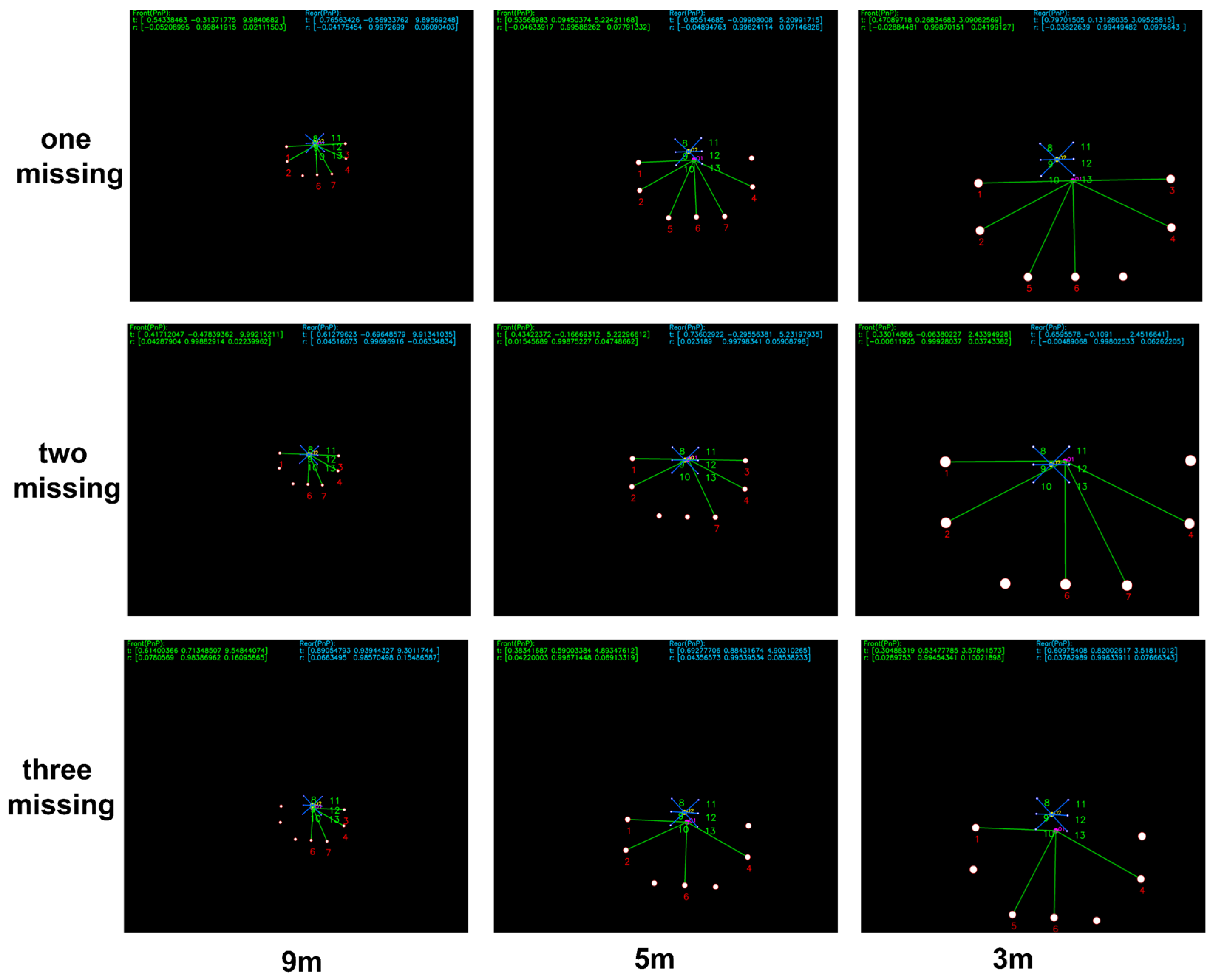

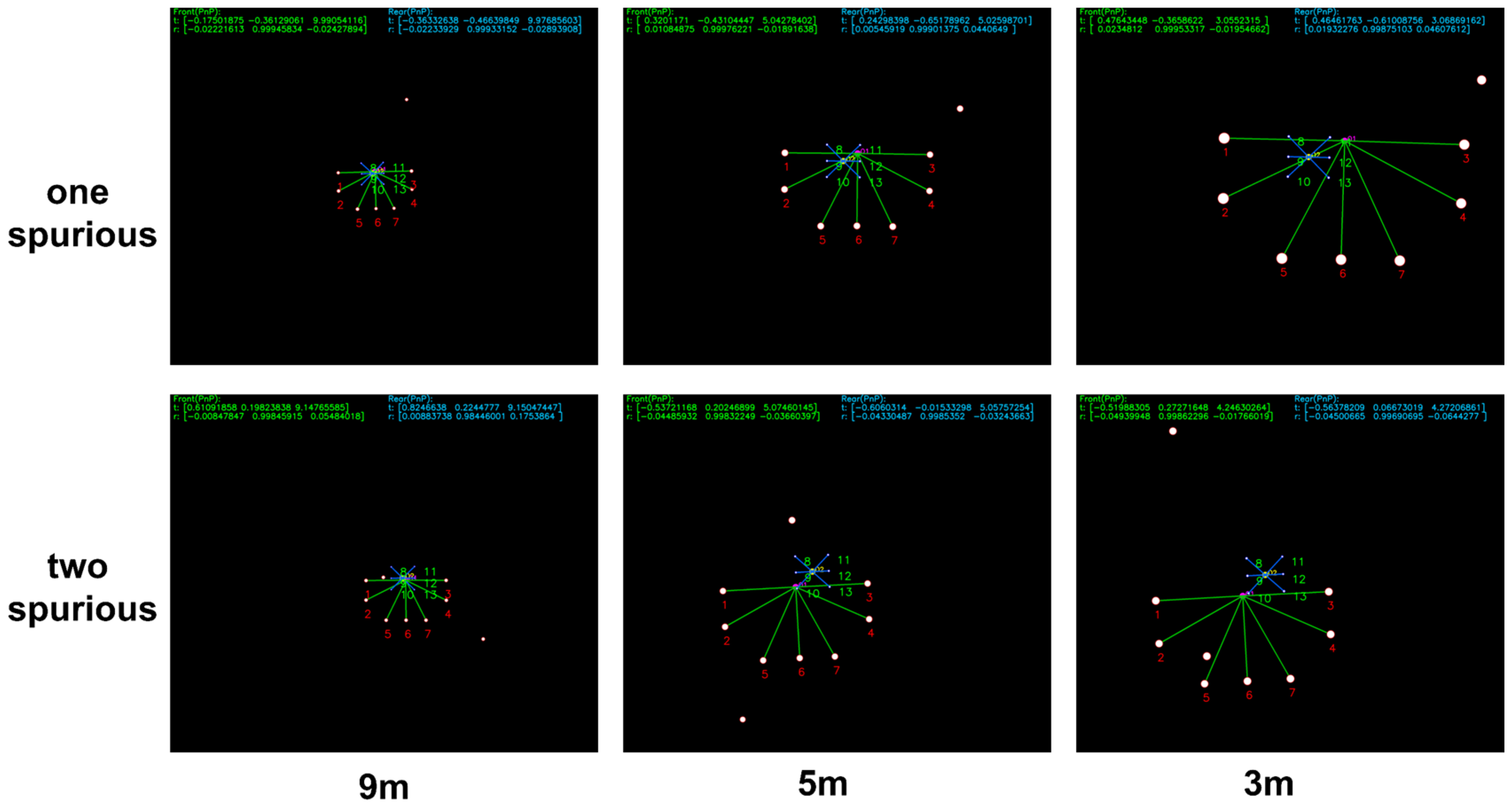

- Robustness to Missing and Spurious Beacons

- (3)

- Search-Phase Simulation Verification

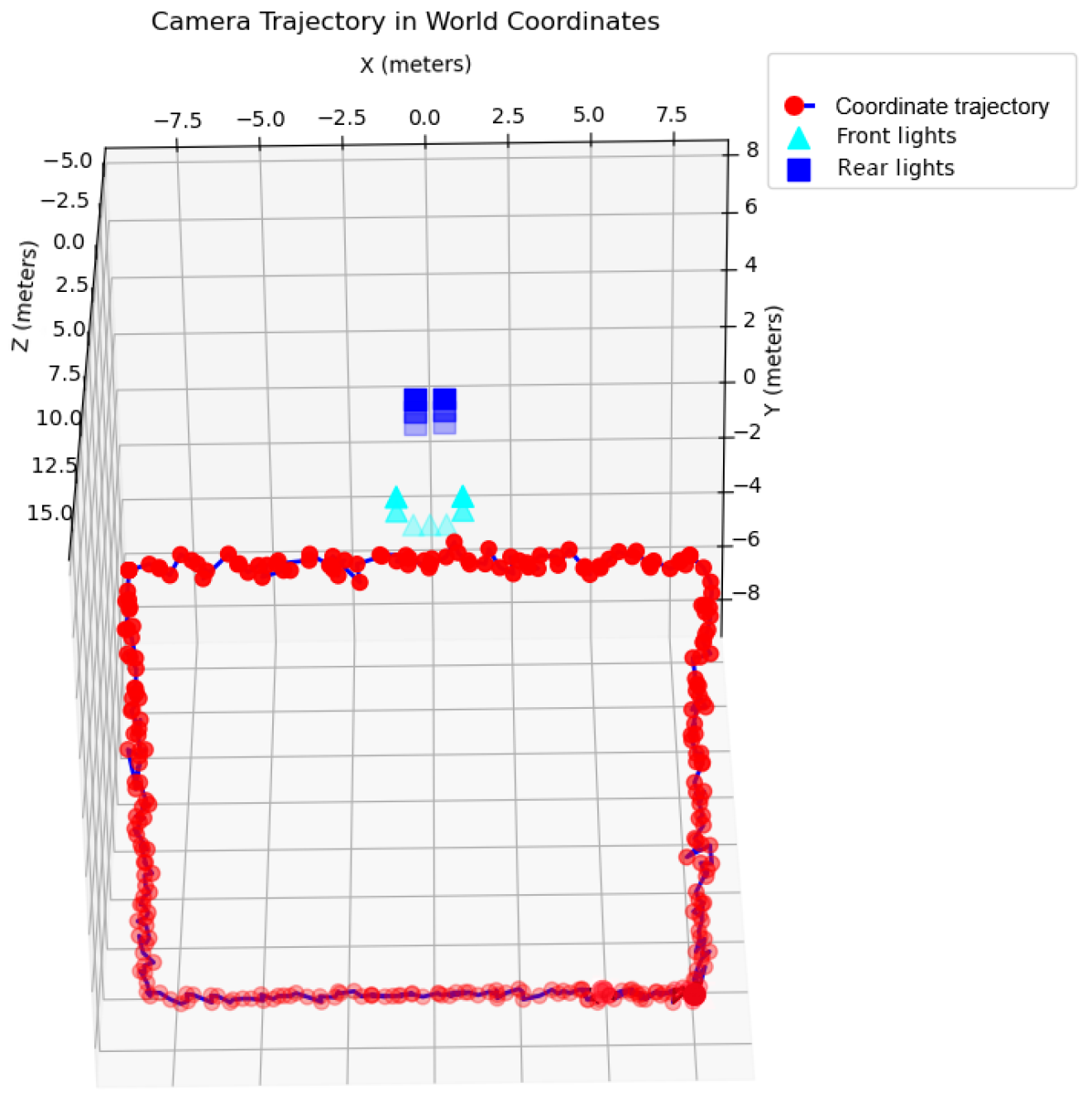

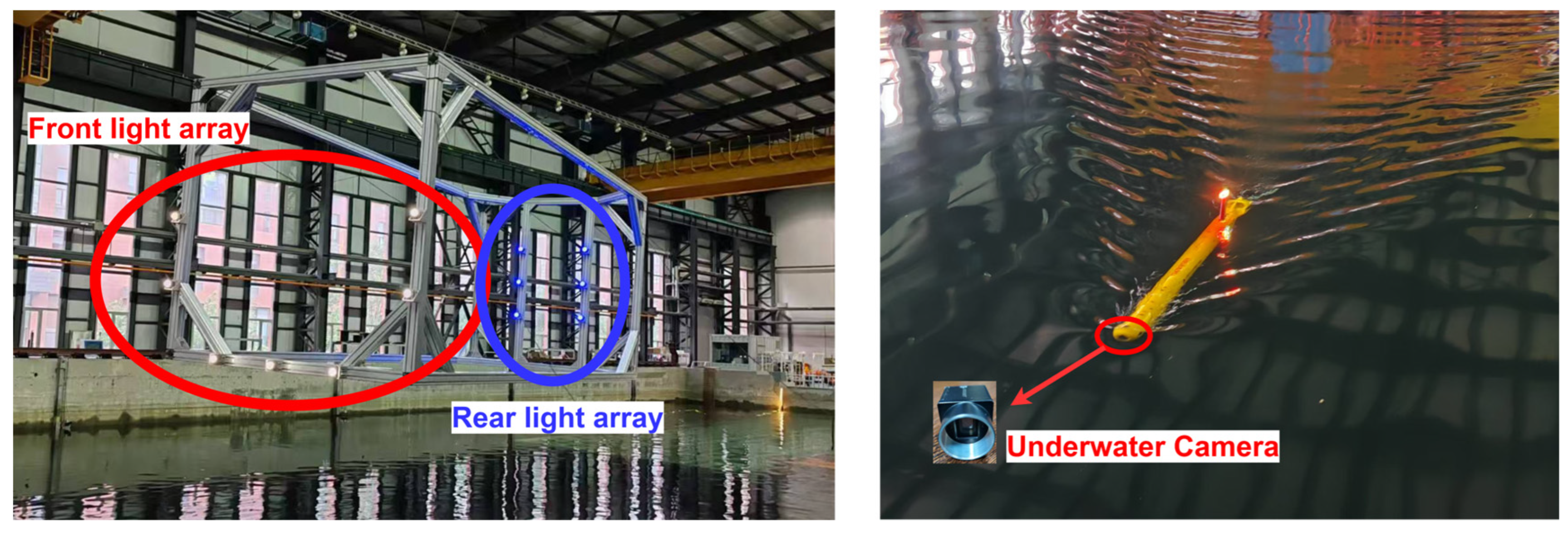

4.2. Pool-Based Feasibility Experiment for Continuous Guidance

- (1) Test Platform and Equipment

- (2) AUV Recovery and Docking Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Condition | Missing 3 | Missing 2 | Missing 1 | 1 Spurious | 2 Spurious |
|---|---|---|---|---|---|
| PSO | 90.3% | 98.6% | 100% | 99.6% | 99.6% |
| PSO + BT EKF | 100% | 100% | 100% | 100% | 99.9% |

| Component | Specification | Quantity |
|---|---|---|
| White LED Beacons | Spectrum range: 400–700 nm; power consumption: 5.4 W; luminous intensity: 637 cd; beam angle: 120° | 7 |
| Blue LED Beacons | Wavelength: 455–460 nm; power consumption: 2 W; luminous intensity: 127 cd; beam angle: 90° | 6 |
| TS-MINI AUV | Physical dimensions: 160 cm × 10 cm × 10 cm | |
| Underwater Camera | Sensor model: Sony IMX264; effective pixels: 2448 × 2048; field of view: 60°; voltage: 9–24 VDC; pixel size: 3.45 µm × 3.45 µm; frame rate: 15 FPS; focal length: 7.2 mm | 1 |
| Onboard Computer | NVIDIA Jetson AGX Xavier; CPU: 6-core NVIDIA Carmel ARM; GPU: NVIDIA Volta | 1 |
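The camera entries in the table above can be cross-checked with an ideal pinhole model. The short calculation below derives the focal length in pixels and the angular field of view from the listed focal length, pixel size, and resolution. It is a sanity check under assumptions: it models the camera in air, ignores lens distortion and refraction at the housing window, and the variable and function names are hypothetical.

```python
import math

# Values taken from the equipment table.
FOCAL_LENGTH_MM = 7.2
PIXEL_SIZE_UM = 3.45
WIDTH_PX, HEIGHT_PX = 2448, 2048

PIXEL_SIZE_MM = PIXEL_SIZE_UM / 1000.0

# Focal length expressed in pixels (square pixels, so fx == fy).
FOCAL_PX = FOCAL_LENGTH_MM / PIXEL_SIZE_MM


def fov_deg(n_pixels):
    """Full angular field of view across n_pixels for an ideal pinhole camera."""
    half_extent_mm = n_pixels * PIXEL_SIZE_MM / 2.0
    return 2.0 * math.degrees(math.atan(half_extent_mm / FOCAL_LENGTH_MM))


if __name__ == "__main__":
    print(f"focal length: {FOCAL_PX:.1f} px")
    print(f"horizontal FOV: {fov_deg(WIDTH_PX):.1f} deg")
    print(f"vertical FOV: {fov_deg(HEIGHT_PX):.1f} deg")
```

Under these assumptions the horizontal field of view comes out slightly above 60°, which agrees with the 60° figure in the table if that figure refers to the horizontal axis.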


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Zhou, X.; Yang, Y.; Hu, Z.; Wei, Q.; Fan, C.; Zheng, Q.; Wang, Z.; Liao, Z. Robust Underwater Docking Visual Guidance and Positioning Method Based on a Cage-Type Dual-Layer Guiding Light Array. Sensors 2025, 25, 6333. https://doi.org/10.3390/s25206333
