1. Introduction

Localization and navigation in the workspace environment are natural processes for people and other species. Robots, however, must use a sample of sensors to be able to locate the workspace automatically, build an image of the workspace, and place themselves within this workspace. Simultaneous localization and mapping (SLAM) is a process of detecting either the unknown or known workspace and placing themself into it. Many sensors exist for conducting SLAM, mainly with cameras (monocular, stereo, and RGB-D) and time-of-flight sensors (light detection and ranging (LIDAR), radar, ultrasound, laser, etc.). The localization methods of today’s mobile robots can be divided into those that utilize internal or mounted sensors like LIDARs, inertial measurement units (IMUs), encoders, and cameras and those that rely on external radio frequency sensors such as Wi-Fi, Bluetooth, Ultra-Wideband, etc. [

1,

2]. For many localization cases, the limitation to traditional methods is predetermined by hardware, using on-board sensors that face difficulties in a real-time environment. Recent advances in hardware and computing methods have enabled many artificial intelligence methods that can be used for a robot’s localization. A review paper by Wang and Ahmad [

3] divided these into Deep Learning (GAN, CNN, and RNN), Machine Learning (Reinforcement Learning and SVM), and Evolutionary and Swarm Intelligence methods (such as PSO, GA, DE, etc.). Most papers that have presented AI-based localization solutions operated with visual and odometrical information for mapping and/or localization, whereas our work relies on LIDARs measurements and evolutionary/swarm algorithms to find the robot’s position and orientation. In such a case a map of the mobile robot environment (workspace) must be known or has to be obtained by other mapping methods.

Once the mapping is performed and a so-called a priori map is built, the localization remains. Localization in a low-signal-to-noise ratio workspace is a misleading task, as the robot may not recognize several similar potential locations on the map but, instead, select one of them sporadically, not knowing that the location is incorrect. A simple remediation to this is to follow the path continuously and check the long-term integrity of a solution constantly so that, eventually, only a single solution exists.

The challenge we are addressing is a reliable and real-time localization of a semi-humanoid robot that is equipped with three independent LIDAR sensors, given the underlying a priori map. We have realized that the classical odometry integrates severe offset with respect to the distance traveled, which demands frequent recalibrations.

Moreno et al. [

4] mentioned that, for evolutionary optimization algorithms, a fitness function based on the mean square error function does not perform well for mobile robot localization due to the following:

Sensor accuracy and the number of sensors limit the possibility of discriminating between several fitness function local minima and a global minimum.

The geometrical similarities of the robot workspace produce high numbers of possible mobile robot pose solutions.

We will show in our paper that one more reason exists that an optimization algorithm (OA) cannot discriminate between local minima and a global minimum if the mapping of the robot workspace is not measured accurately enough.

A lot of papers, in spite of their authors recognizing the problems mentioned by Moreno et al. [

4], have not found solutions to discriminating between the local minima and global minimum [

5,

6,

7], so their solutions were limited in practice. Zhang et al. [

5] tried to address this problem by introducing a hybrid algorithm based on two evolutionary algorithms (the firefly algorithm and a genetic algorithm) but only presented the simulation results. Zhang et al. [

8] found a solution for the discrimination of local and global minima, but his fitness function was complicated, so the average time to find the global minimum was 2.41 s in the Matlab environment, while our algorithm was executed a few times faster (0.5 s in the Python 2.7.18 environment), while the position and orientation errors were almost the same. When the geometrical similarities of the robot workspace produced a higher number of possible mobile robot pose solutions, Zhang et al.’s [

8] algorithm needed to investigate from several nearby positions to find the global minimum, while our algorithm needed only two positions and, therefore, decreased the overall elapsed time to a maximum of 1 s in the Python environment.

The following literature review involved research on mobile robot localization with a known a priori map range. Havangi [

6] implemented a practical case study of the mobile robot localization between the particle filter (PF) and extended Kalman filter (EKF) as benchmarks and PSO-driven robot localization. The PSO-based localization proved superior in terms of accuracy and consistency. Zhang et al. [

8] presented an improved PF based on the PSO for mobile robot localization for an indoor Intel Research Lab public dataset and Fort AP Hill [

9]. Chung et al. [

10] implemented an improved Adaptive Monte Carlo Localization (AMCL) for tracking and localizing the robot’s position in two dimensions that is equipped with laser sensors and range finders. Ji et al. [

11] employed pose tracking and localization based on a camera plane extraction in the camera coordinates and comparing these to a real-world map. De Sá et al. [

12] showed an example of range-based mobile robot localization within a wireless network of stationary anchors with known positions. Using basic triangulation, the mobile robot position can be determined by using the min–max method and the PSO. Worley [

13] implemented autonomous localization for water pipe inspection mobile robots. These robots need to define the absolute location based on several properties, such as pipe length, radius, interconnections, and others, and compare these to a known a priori map. A pose graph optimization method with fusion of acoustic information was used by the authors for efficient localization.

The work by Vahdat et al. [

14] stipulated the importance of global (absolute) robot localization. The authors used three algorithms, Monte Carlo localization (MCL), differential evolution (DE), and particle swarm optimization (PSO). In a given case study, the DE proved to be most successful between the three. The authors reported that the DE underwent faster convergence than the PSO, as well as scored a higher convergence rate. Begum et al. [

15] introduced the fuzzy evolutionary SLAM algorithm. A genetic algorithm was utilized as an OA for searching for the optimal robot pose, while fuzzy logic was utilized to handle the uncertainties in the robot’s pose. The robot was equipped with laser range finders, similar to a LIDAR, of up to 10 m of effective distance. The authors proposed that new position updates should be conducted for each 0.5–1 m of travel or a 20 to 40 degree change in rotation. Again, the authors emphasized the importance of solving the robot’s pose problem as a global optimization problem. Bian et al. [

16] showed an example of a multi-objective PSO for FastSLAM, called FastSLAM-MO-PSO, especially useful for high noise and large-scale maps. Carvalho et al. [

17] introduced the perfect match global localization (PMGL) metric for evaluation of the fitness of several hypothetical robot poses displaced over the map. The authors argued that the efficacy of the PMGL may vary across several scenarios. For a sample of maps, the PSO performed similarly to the GA. However, for one of the maps, the PSO performed significantly worse than the GA. The authors therefore highlighted the GA as the most intuitive solution for global localization. In the last five years, novel state-of-the-art swarm intelligence algorithms, such as golden eagle optimizer (GEO) [

18], were proposed, with their performance validated in the robotics field [

19].

The novelties of this paper are as follow:

We upgraded the PSO algorithm successfully with an innovative search strategy that can discriminate between the local minima and the global minimum.

A localization algorithm based on the PSO algorithm with an algorithm for avoiding the local minima was tested in the real laboratory workspace, and the results show that it outperformed the odometrical algorithm and OA’s benchmarks.

The average overall elapsed time to calculate a new position was between and s in the Python environment.

This paper is structured as follows: the

Section 2 presents the fundamentals of the Pepper robot, its LIDAR sensors and cameras, and the OAs, i.e., the PSO and two benchmark OAs. The

Section 3 present the organization of the practical laboratory tests. Then, a rigorous discussion follows in

Section 4. Finally,

Section 5 concludes this paper.

2. Materials and Methods

The following section is divided into three subsections.

Section 2.1 deals with Pepper’s hardware v1.8 (manufactured by Aldebaran Robotics, Paris, France, formerly SoftBank Robotics, Tokyo, Japan, now part of United Robotics, Bremmen, Germany), cameras (OV5640, OmniVision, CA, USA), and LIDAR sensors (Pepperl Fuchs, Mannheim, Germany).

Section 2.2 deals with the OAs employed, such as PSO, the genetic algorithm, and GEO [

18].

Section 2.3 introduces the iterative search strategy called the avoidance of local minima (ALM).

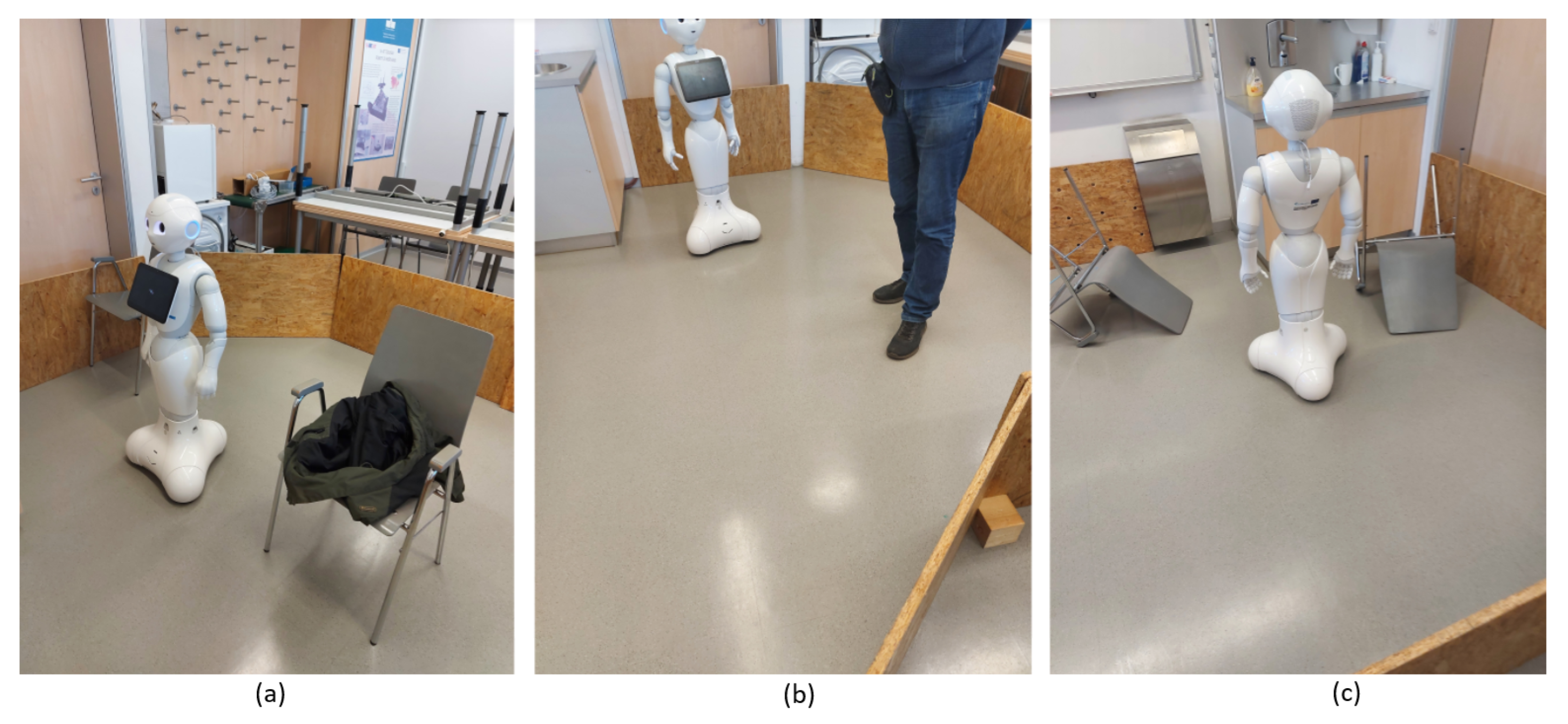

2.1. Pepper Robot and Cameras

The Pepper robot is a semi-humanoid mobile robot that is equipped with numerous built-in functions and operates on its own operating system (OS). NAOqi-OS is based on a Linux distribution and is installed on Pepper’s integrated computer (Intel ATOM, Santa Clara, CA, USA); however, users are restricted from installing third-party applications directly on the OS and must rely on Pepper’s proprietary Software Development Toolkit (SDK). Therefore, users can employ different tools to program and control the robot, e.g., GUI Choreographe, Python SDK, Linux SDK with a ROS environment, etc. Pepper comes with many integrated sensors, such as LIDARs, IRs (Pepperl Fuchs, Mannheim, Germany), sonars, RGB cameras, and a 3D camera (Asus, Taipei, Taiwan), which allow safe navigation and movement in a workspace. In this paper, the particular focus is on two different sensor families: the robot’s RGB cameras that provide color 3D images with depth information (RGB-D) and three separate LIDARs (front, left, and right). The visual data from the 3D camera can be used for feature extraction and mapping of the workspace—for instance, for detecting walls and corners—while the LIDARs can be used for autonomous and absolute localization of the robot within the workspace. The following subsection describes the cameras.

2.1.1. Cameras

Pepper’s cameras are preinstalled in the chin and in the forehead. They provide several adjustable resolutions for image processing tasks such as V-SLAM, although it was figured out that resolutions set too high become a problem due to the stalling WiFi connection. Experimentally, the image resolution at 320 × 240 pixels with 15–20 fps was realized as the maximum allowed, although the mapping results were very poor with higher signal-to-noise ratios. Selection of such a low resolution was recognized as insufficient; hence, we tested different external cameras to evaluate the usability, performance, and speed of camera image processing in ROS. A classic USB mono cam (Logitech HD C270 webcam, Lausanne, Switzerland) and RGB-D cameras (Kinect, RealSense D435, Cupertino, CA, USA and Luxonis DepthAI OAK-D Pro, Denver, CO, USA) were used in the visual SLAM tests while changing settings (resolution, fps, and Oriented FAST and Rotated BRIEF (ORB) features) and calibration parameters (camera distortion, low contrast, etc.). We were aiming for a high-accuracy point cloud for the later workspace map definition needed for localization based on known area dimensions (walls, corners, etc.). Due to the good SDK library support in ROS and Pepper, we decided on a RealSense D435 RGB-D camera that allowed us to operate at an enhanced rate of 640 × 480 pixels at 20 fps. As it was an external camera, we mounted it on the top of the robot’s head for improved vision, as seen in

Figure 1. Again, the RGB-D video stream was streamed using the Raspberry Pi 3 node (Cambridge, UK).

2.1.2. LIDARs

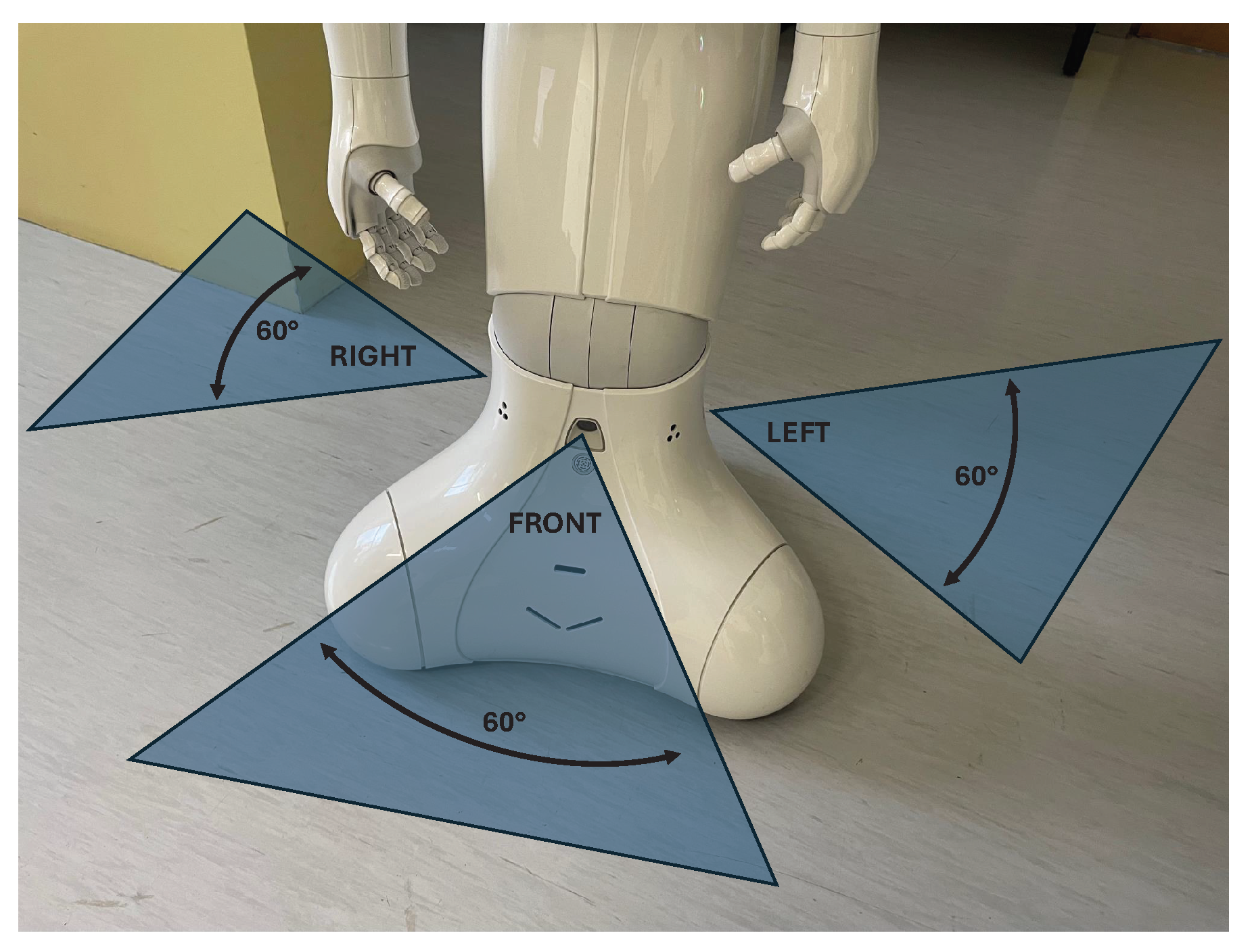

Three different LIDAR sensors are installed within Pepper. LIDAR sensors comprise a transmitting laser actuator and a separated receiving sensor. The sensors are offset by 90°, each with a horizontal field of view (HFOV, hereinafter field of view (FOV)) of 60°. To sense the whole workspace in 360°, Pepper needs either to be rotated or its LIDARs combined with other sensors. The layout of the LIDARs and their visible range is shown in

Figure 2. The frame rate of each laser was 6.25 Hz, or 0.16 s. Each measured LIDAR section (front, left, and right) returns 15 segments divided into

with X and Y of the robot’s coordinate system. The accuracy of the measurements depends on the FOV angle, where segments more to the far left (segment 1) and far right (segment 15) are generally less accurate than the central segments. For example, central segment 8 was the most accurate. The built-in function returns the X and Y values of detected obstacles for each segment, which are then processed to calculate the angle and distance to the obstacle. Here, special cases exist regarding the uncertainty of the measurement accuracy, as the robot may return “Uncertain_Obstacle” or “Annoying_Reflection”. The analysis of these measurements showed no improvements in the measured distances but merely prolonged the data acquisition and processing times. A more detailed specification of the robot’s LIDARs can be found in Pepper’s documentation (

http://doc.aldebaran.com/2-5/home_pepper.html, accessed on 12 June 2025).

The measurements were recorded with all three LIDARs of the robot. The workspace was realistic but artificially constructed with the help of wooden boards and the concrete walls of the laboratory. The workspace was measured precisely with a tape measure. The workspace had a starting point of the base coordinate system, upon which all other distances were derived to all the corners of the workspace in the picture. We placed the robot inside the defined workspace and performed real LIDAR measurements and compared them to the ground-truth measurements.

Additionally, we tested the accuracies of all three LIDARs by placing the robot in front of a flat wide wall, so that the center beam of the LIDAR (sensor segment 8, out of the total 15 segments) was perpendicular to the wall. We moved the robot away from the wall gradually and took measurements with the same LIDAR. The distance of the robot from the wall was additionally measured with a tape measure and a handheld laser meter. It was realized that the measurements deviated from the expected values. In addition, it was realized that the measurements were distorted in a curved way, even if measuring from a flat wall, and the distortion grew as the distance increased. The measurement of distances beyond 3.5 m was not useful practically.

2.1.3. Robot’s Leg

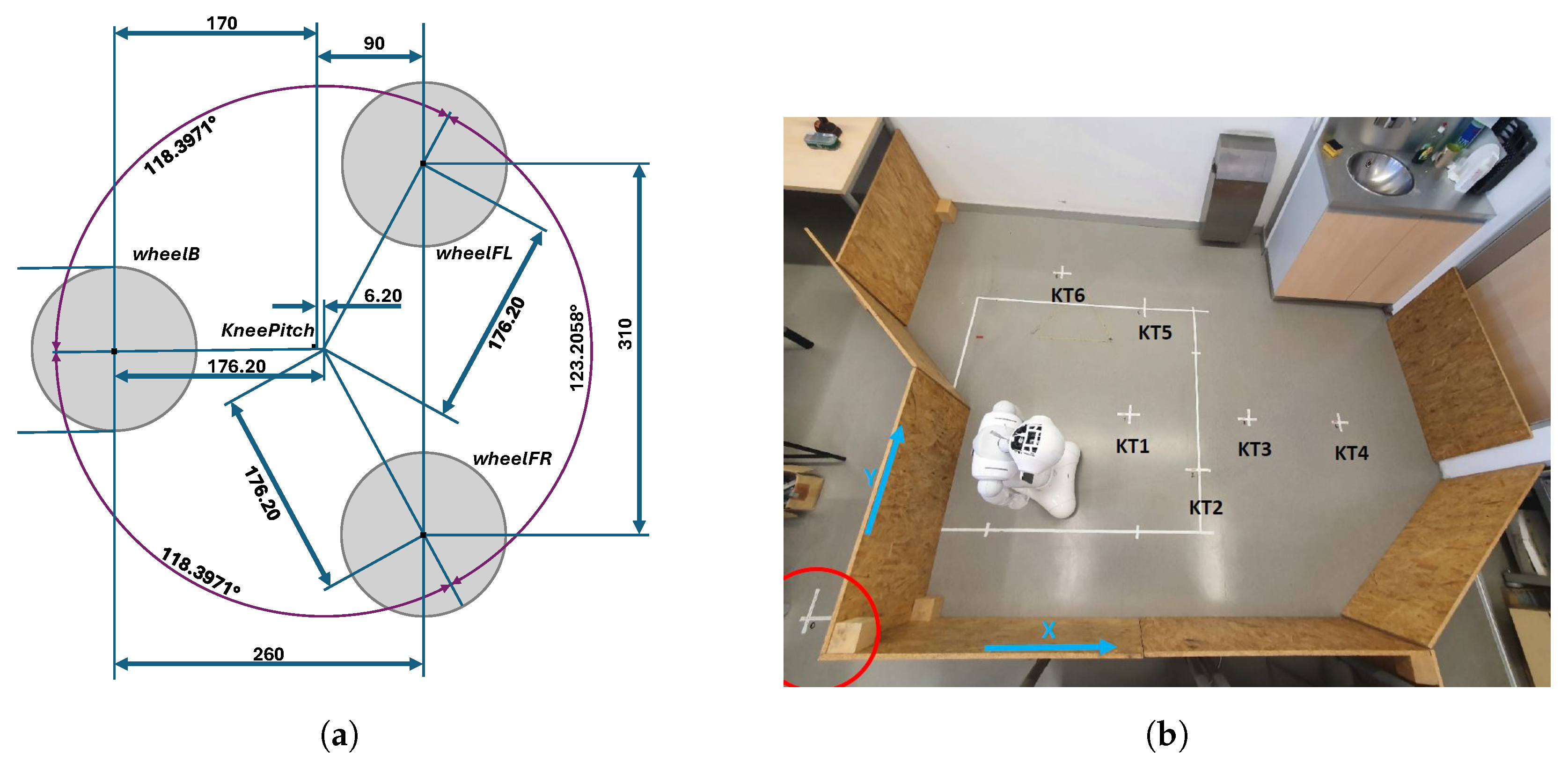

The robot coordinate system has the X axis positive toward the robot’s front, the Y axis from right to left, and the Z axis vertical. Pepper is a semi-humanoid robot that moves using three sets of omnidirectional wheels (omni-wheels), i.e., a front left (FL), front right (FR), and back (B) wheel. These allow free movement in both X and Y directions as well as rotation around Z. The robot’s center of rotation is displaced by 6.2 mm from the KneePitch position, as seen from

Figure 3 on the bottom view of the robot’s base.

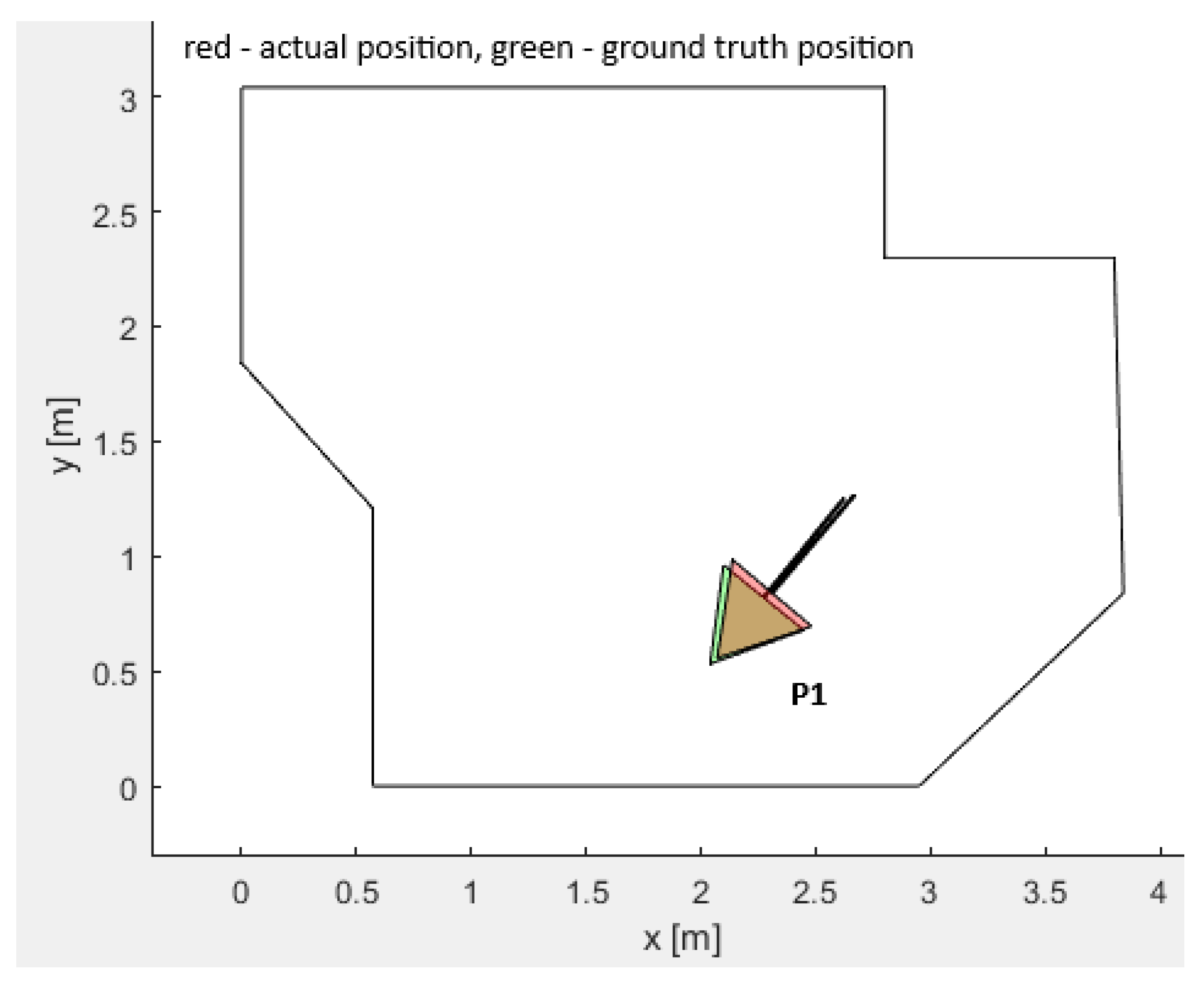

2.1.4. Test Workspace

Mobile robots move and navigate in a workspace according to the given map and a path plan. For evaluation of the proposed localization algorithm, a laboratory testing workspace with dimensions of 4 by 3.2 m was built with classic OSB wooden boards (the right plot of

Figure 3). The surface of the workspace walls (boards) was selected as non-monothonic, so that the RGB-D camera was able to obtain proper results for the visual SLAM mapping and LIDAR measurements.

2.2. Algorithms and Methods Used

This section represents the PSO algorithm, the benchmarks, and the description of the implemented fitness function . Evolutionary and swarm intelligence OAs were selected instead of simple minimum gradient search algorithms due to future work considerations. Although gradient search would be sufficient for present tasks with lower dimensionalities , we selected the more sophisticated OAs to ensure compatibility with anticipated future extensions, i.e., environments with larger dimensionalities , such as localization of airborne unmanned aerial vehicles.

2.2.1. Particle Swarm Optimization

PSO is a population-based OA that was designed in 1995 by Kennedy and Eberhart [

20]. PSO rests on the intelligent movement of a swarm that searches for prey by calculating consecutive velocity and position updates. It is an iterative optimization method, and its pseudocode is denoted in Algorithm 1. The canonical PSO was taken as a baseline, to which we added neighborhood best particle [

21,

22].

| Algorithm 1 Particle swarm optimization (PSO). |

- 1:

Initialize swarm with population size - 2:

Initialize positions and velocities - 3:

Evaluate fitness function values - 4:

Set personal best - 5:

Select global best - 6:

while termination condition not met do - 7:

for each particle i in swarm do - 8:

for each element j in dimension D do - 9:

// Update velocity and position - 10:

- 11:

- 12:

end for - 13:

Evaluate fitness - 14:

if then - 15:

Update personal best - 16:

end if - 17:

if then - 18:

Update global best - 19:

end if - 20:

if then - 21:

{Update neighborhood best} - 22:

end if - 23:

end for - 24:

- 25:

end while - 26:

return global best position

|

The control parameters of PSO are inertia weight

w, cognitive constant

, social constant

, and neighborhood constant

. The parameters

and

are random numbers drawn from the uniform distribution

. The personal best

is updated when the particle itself obtains a better position. The global best

is updated when a particle, among all particles, obtains a better position. The

represents the neighborhood. The neighborhood best

is updated when a particle, among its neighborhood, obtains a better position. PSO was initialized with the parameter settings as outlined in

Table 1 for both the simulation and laboratory experiments.

2.2.2. Adaptive Elitist-Enhanced Genetic Algorithm (GA)

The adaptive elitist-enhanced GA was used as a benchmark. The genetic operators included roulette wheel selection, single-point crossover, bit-flip mutation, and elitism. Binary representation was implemented. An adaptive mutation factor

was implemented to increase the mutation rate

up to a certain value that equaled

in the case of indicating no progress and reduce it down to a certain value that equaled

in case of indicating progress, as follows in Equation (

1). Here,

represents the average value of the fitness function evaluations that were monitored for two different periods separately: First, for the last

generations (

g represents the current generation) to

generations, i.e.,

. Second, for the last

to

generations, i.e.,

. Here, all the calculated fitness function evaluations were taken into account. The mutation rate

was increased when the value

did not exhibit any progress. When significant progress was indicated, the mutation rate was decreased. This mechanism decreased the probability of trapping into the local optima further. Algorithm 2 exhibits the GA pseudocode and

Table 1 exhibits the GA setup.

| Algorithm 2 Adaptive elitist-enhanced genetic algorithm. |

- 1:

Initialize population with random solutions - 2:

Evaluate the fitness of each individual in - 3:

while stopping criterion not met do - 4:

Convert population into binary form (genes) - 5:

Select parents for reproduction - 6:

Perform crossover and mutation to create offspring - 7:

Convert binary genes of population back into decimal representation - 8:

Evaluate the fitness of the offspring - 9:

Apply elitism: select the best individuals for the next generation - 10:

Replace the worst individuals in the population with offspring - 11:

Apply probability of mutation adaptation - 12:

end while

|

2.2.3. Golden Eagle Optimization (GEO)

GEO is among the latest and most novel representatives of nature-inspired OAs. GEO resembles the spiral motion of golden eagles when cruising overhead the prey and, once finding the prey, attacking it. Cruise and attack effectively mirror the trade-off between the exploration and exploitation phases of swarm intelligence algorithms. The control parameters include the initial and final propensities to cruise and propensities to attack. The pseudocode is visualized in Algorithm 3, and the control parameter setup is shown in

Table 1.

| Algorithm 3 Pseudocode of golden eagle optimizer (GEO). |

- 1:

Initialize the population of golden eagles - 2:

Evaluate fitness function - 3:

Initialize population memory - 4:

Initialize and - 5:

for each iteration t do - 6:

Update and - 7:

for each golden eagle i do - 8:

Randomly select a prey from the population’s memory - 9:

Calculate attack vector - 10:

if length of attack vector then - 11:

Calculate cruise vector - 12:

Calculate step vector - 13:

Update position: - 14:

Evaluate fitness function for the new position - 15:

if fitness is better than the position in eagle i’s memory then - 16:

Replace the position in eagle i’s memory with the new position - 17:

end if - 18:

end if - 19:

end for - 20:

end for - 21:

Return the best solution

|

2.2.4. Description of the Fitness Function

The fitness function value to evaluate the quality of each particle or individual during the execution of the OA is denoted as

. Calculation of the fitness function consists of two steps. First, the fitness function

for each measured position

j is calculated as the distance between

and the closest equidistant position on the wall

, as described in Equation (

2). Next, an average of these fitness functions is calculated as a final fitness function

, as described in Equation (

3)

where

and

,

n represents the number of equidistant positions on the walls between the corners of the mobile robot workspace in the base coordinate system, and

m represents the number of positions measured by LIDAR in the base coordinate system.

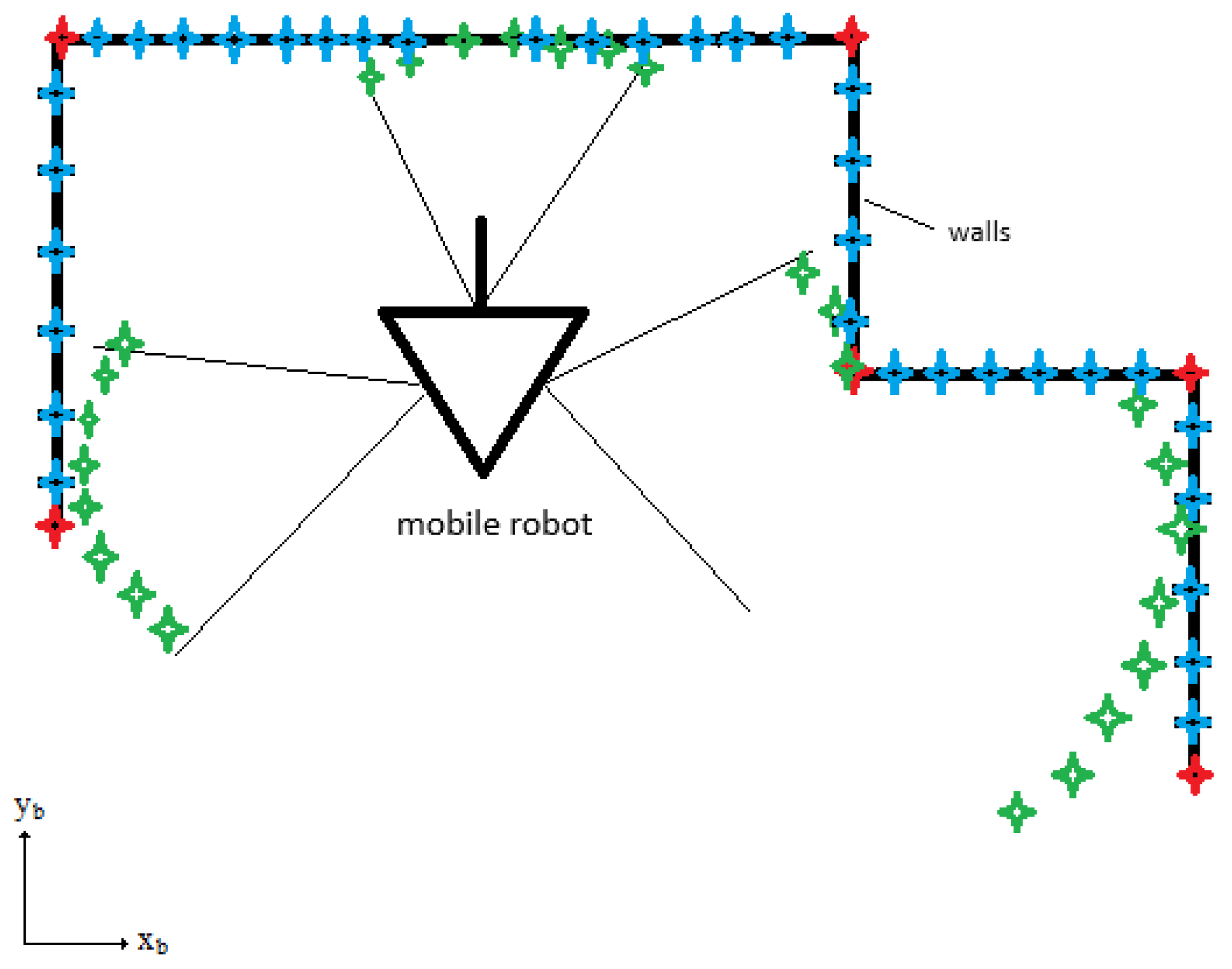

and

represent positions measured by the LIDAR in the base coordinate system, as denoted in

Figure 4.

represent equidistant positions on the walls between the corners of the mobile robot workspace in the base coordinate system, as denoted in

Figure 4.

Figure 4 shows the organization of the workspace. The coordinate axes (

,

) define the base coordinate system. The red diamonds denote the corners of the workspace. The blue diamonds denote equidistant positions on the wall

. The green diamonds denote measured distances between the mobile robot and the walls by the LIDAR

. A triangle and a forward path line define the location and orientation of the mobile robot. The black lines define the walls of the workspace.

2.3. Algorithm for Avoiding the Local Minima (ALM)

The scientific literature has often criticized PSO for not having any global optima avoidance mechanism. Especially if relying on the

action that may cause premature convergence and not having proper momentum settings, PSO can be trapped in the local minima easily [

23]. This problem is usually solved with repeated dispersion of particles from the randomly chosen initial positions, even several times if needed. Knowing the global minimum helps significantly in achieving it, although, in the majority of the cases, the global optimum is not known in practice. In our case, the fitness function value of the global minimum was not known directly. Furthermore, due to the noise of the LIDAR used, a solution in the “spurious” local minima may have the same or, in rare cases, even better fitness function value than in the “truly” global minimum. This means that we cannot estimate directly if the OA, in general, is trapped or not. However, the integrity of the solution can be checked indirectly by moving the robot for a known distance and rotation (the absolute value of the difference betweem the reference position and reference rotation value and the previous, already calculated, actual position and rotation) and making another measurement, hence comparing two consecutive measurements effectively. If the integrity check returns nonsense with regard to the Markov chain, this is then a reliable indicator that the OA is trapped in the local minima.

To cope with this, the classical OAs were tailored to address the challenge of local optima effectively. The idea of avoiding the local minima in the PSO, GA, and GEO algorithms is not a general solution, but rather an application-oriented solution. It is valid only for the localization of the mobile robot application or very similar applications with low signal-to-noise ratios. The odometrical method measures the distance traveled and orientation shifted between two relatively close positions of the mobile robot accurately. For longer distances traveled, the odometrical method is not a reliable indicator due to the omni-wheel slip and constantly accumulating error (in particular the rotation in the Z axis causes odometrical challenges). However, for shorter distances of up to two meters of linear travel and a rotation of less than 90°, the odometrical method works just fine, imposing just a few centimeters of error between two consecutive movements.

Practically, it was realized that the distance error margin of cm and the rotation error margin of , due to omni-wheel slip and accumulating error, resemble the sufficient error margins based on practical observations.

Meeting these two error margins simultaneously means passing the integrity check, meaning that the global minimum was found. When the OA does not find the global optimum within a given maximum number of iterations or generations, the algorithm is reset and run again. The so-called avoiding local minima search strategy (ALM) pseudocode is exhibited in Algorithm 4. If both the error margins are met, the integrity check is passed and the global best solution is returned. When the error margins are not met, the OA is repeated by dispersing the particles or individuals again in a while loop. The process is repeated until the testing solution meets the imposed error margins, which, unfortunately, imposes potential time delays.

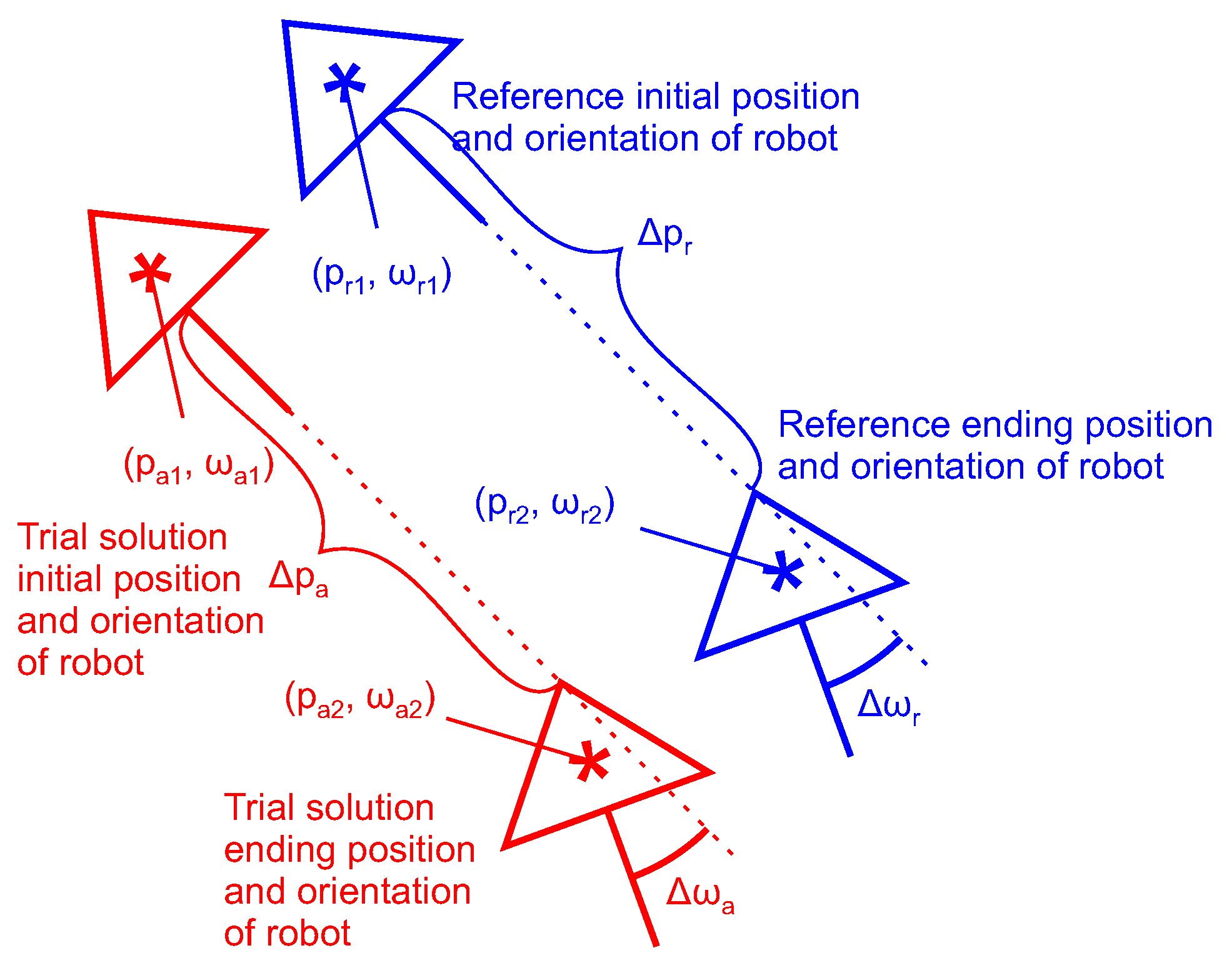

The ALM search strategy commences by initializing the particles or individuals randomly. The variable monitors the number of OA resets after not finding the global optima. It is set to 0. The variables and measure the unknown initial reference position and orientation. The variables and measure the unknown ending reference position and orientation. The differences and measure the reference move.

The variables

and

indicate an OA trial solution for initial position and orientation, where the trial solution means each particle or individual in every epoch or generation. The variables

and

indicate an OA trial solution for the ending position and orientation. The differences

and

measure the reference move. The differences

and

indicate a move performed for the given trial solution (see

Figure 5).

The procedure for the ALM search strategy is as follows: First, the robot is placed at an unknown position within the predetermined search space. Here, the LIDAR measurements are performed. Next, the robot is moved to position . Again, the LIDAR measurements are performed and recorded. The reference distance and rotation change are calculated as follows: and .

Then, the OA is executed to calculate and evaluate the trial solutions. The best solutions and are output for the initial position and and for the ending position. These are then compared to calculate the distance and orientation errors and . As long as these two fall outside the allowed error margins, the OA is rerun randomly again. The variable is incremented with each rerun. Once the errors meet the error margins, the global best solutions and are output.

The ALM search strategy pseudocode is exhibited in Algorithm 4.

| Algorithm 4 The ALM initial search strategy pseudocode. |

- 1:

Move the robot to - 2:

Measure the LIDAR data - 3:

Move the robot to - 4:

Measure the LIDAR data - 5:

Input: reference distance and rotation - 6:

- 7:

Calculate: optimization algorithm result - 8:

Calculate: optimization algorithm result - 9:

- 10:

- 11:

- 12:

- 13:

while cm and do - 14:

Calculate: optimization algorithm result - 15:

Calculate: optimization algorithm result - 16:

- 17:

- 18:

- 19:

- 20:

- 21:

end while - 22:

global best solutions for both poses - 23:

return

|

If both the error margins are met, the integrity check is passed. Then, the two poses of

are output from the ALM as a global minimum solution. If the error margins are not met, the search is repeated by dispersing the particles or individuals again until the testing solution meets the imposed error margins. In addition, the OA needs to be run twice for both unknown first

and second robot positions

. However, for each subsequent position, e.g., a third position or

, in general, just a single OA run is necessary, as the

i-th position is known. Therefore, Algorithm 4 was modified slightly, as follows in Algorithm 5. Accordingly, the average overall elapsed time

has been reduced by more than twice.

| Algorithm 5 The ALM search strategy pseudocode for each subsequent robot position. |

- 1:

Move the robot to - 2:

Measure the LIDAR data - 3:

Input: reference distance and rotation - 4:

- 5:

Calculate: optimization algorithm result - 6:

- 7:

- 8:

- 9:

- 10:

while cm and do - 11:

Calculate: optimization algorithm result - 12:

- 13:

- 14:

- 15:

- 16:

- 17:

end while - 18:

global best solution for the pose - 19:

return

|

2.4. Mapping of the Workspace by the ORB-SLAM2 Method and RANSAC Algorithm

The Pepper robot has 2D and 3D cameras, which means that applying V-SLAM for initial mapping of the workspace was natural. Although robot LIDARs are adequate for mapping surfaces near the ground, Pepper must also be careful of higher obstacles while navigating to prevent falling. Pepper’s native 3D camera assures the security perimeter, so the robot stops before hitting the obstacle, but for SLAM tasks the results can be poor. Therefore a RealSense RGB-D camera was used with ORB-SLAM2, mounted on Pepper’s head, for enhanced point cloud retrieval and a more detailed workspace map.

ORB-SLAM2 [

24] rests on detecting distinct features that are input into the mapping algorithm. It is designed for real-time applications in robotics and computer vision. Developed as an extension of ORB-SLAM, it offers improvements in robustness, efficiency, and adaptability across monocular, stereo, and RGB-D camera inputs. The algorithm utilizes ORB features for keypoint detection and descriptor matching. ORB features are computationally efficient and invariant to rotation and scale, making them well-suited for real-time SLAM applications. The algorithms can be used with monocular cameras, which require a motion-based initialization, while stereo and RGB-D cameras can compute a scaled map directly from depth information. The algorithm estimates the camera pose continuously by matching the extracted ORB features with keypoints in previous frames, and it employs a motion model to predict the next pose and refines estimates using bundle adjustment. It maintains a local map of the workspace by adding new keyframes and refining 3D poses using local bundle adjustment. This process ensures accurate tracking and prevents drift in the estimated trajectory. Finally, to prevent long-term drift, ORB-SLAM2 detects loop closures by matching features from the current frame with previously visited locations. Once a loop closure is detected, the system performs pose graph optimization to correct any accumulated errors.

The ORB-SLAM2 algorithm was not performed on the robot due to its CPU overload in the case of such high processing demand. Rather, the algorithm was executed on an Intel i7 laptop using 16 GB of RAM, running Ubuntu 16.04 LTS with ROS Kinetic. The ORB-SLAM2 used a RealSense D435 camera in RGB-D mode with a properly set up configuration to obtain optimal features for mapping. A RANSAC algorithm was used to be able to recognize and model a workspace with walls and corners from a previous gathered map (point cloud and features).

RANSAC is the short name for the Random Sample Consensus Algorithm. It is used for finding the best fit function for an existing dataset with outliers. Using RANSAC, datasets could be fitted to a single variable, as well as multivariable functions. In comparison to the least squares method (LSM) for finding the best fit function, it is more robust to outliers. RANSAC removes outliers from datasets iteratively before fitting the function to the data by the LSM or some other M-estimator algorithm. To remove outliers, RANSAC samples a part of the points from the existing dataset randomly and fits the function to this sampled dataset. Next, the absolute error between the function and randomly sampled points is calculated, and the points with the greatest error are considered as outliers and are removed from the dataset. Random sampling, error calculation, and elimination of outliers are iterated until random sampling of points from the dataset does not produce any change in the parameters of the function fitted to the dataset. Many improvements of the RANSAC algorithms have been proposed, and among them is MLESAC [

25].

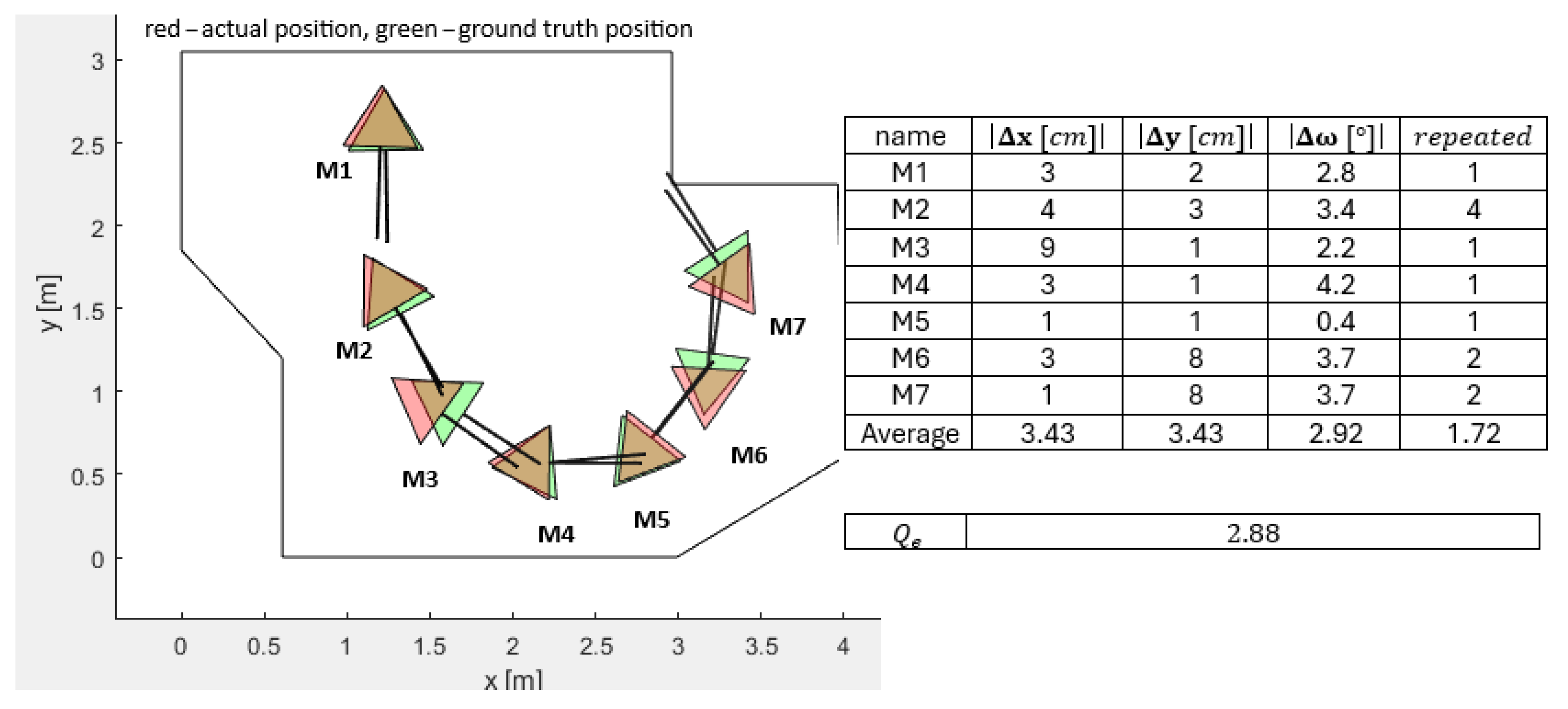

4. Discussion

We have proposed a novel PSO-ALM to cope with the absolute localization of a mobile robot with low-quality LIDAR measurements. The experimental work was divided into simulations and real lab experiments. The simulation tests modeled both random perturbations of the LIDAR as well as camera mapping workspace corner definitions. The former was to model the random disturbances caused by the LIDAR inaccuracies. The latter was used for workspace mapping—localization itself was not capable of simultaneous mapping; therefore separate mapping of the workspace (also called corner identification) was conducted before. The real laboratory tests included manual identification of the workspace corners, as well as automated identification using 3D camera mapping.

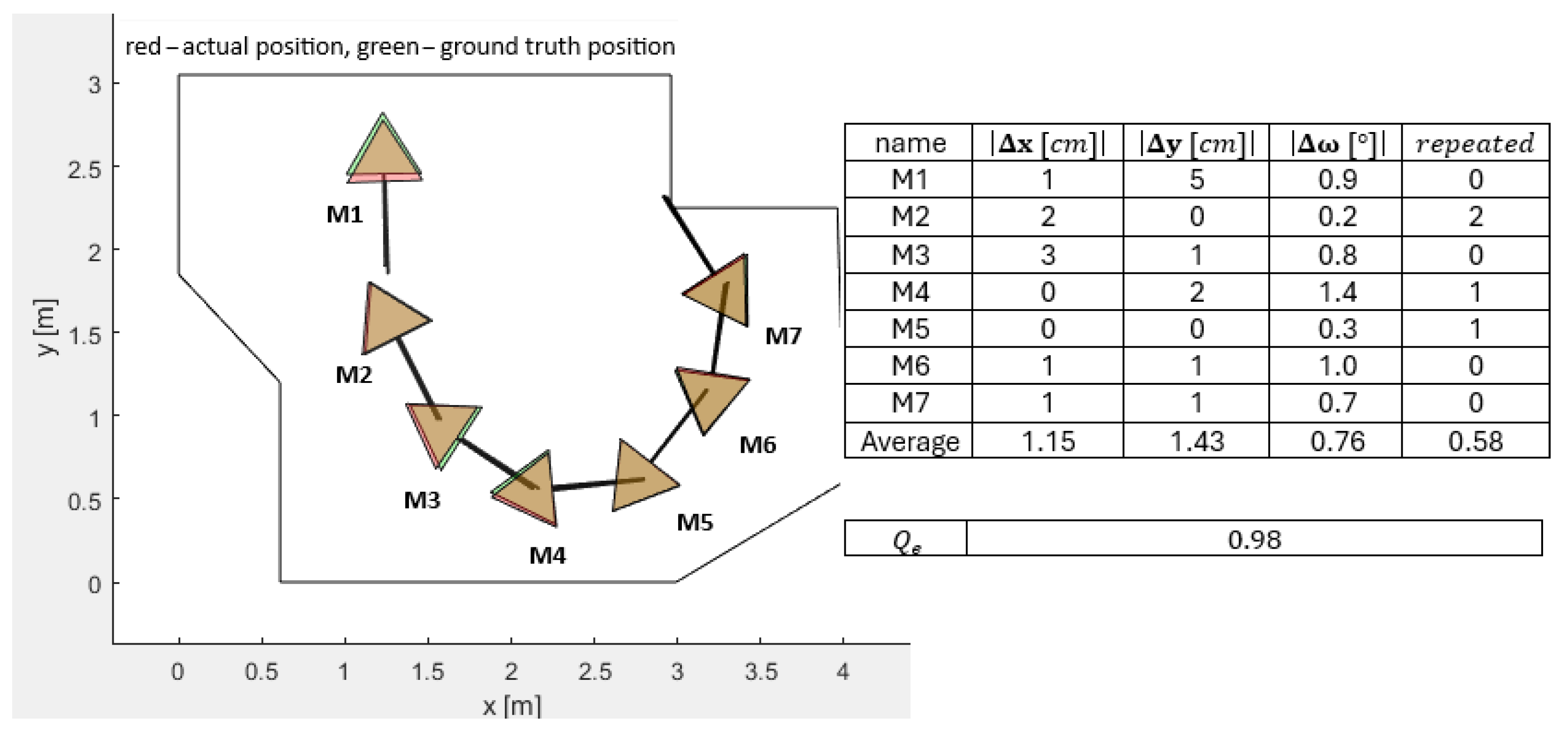

The simulation tests have shown that random perturbations of of simulated LIDAR measurements have quite a small effect on the deterioration in accuracy of the calculated OA positions and orientations. Increasing the perturbations lowered the quality of the results slightly but did not prevent the adequate localization skills of the robot. Even in the case of the highest perturbation, , the deviations were less than or equal to 8 cm; i.e., only a single case had cm and . Definition of the workspace corners had a dominant effect on the localization accuracy.

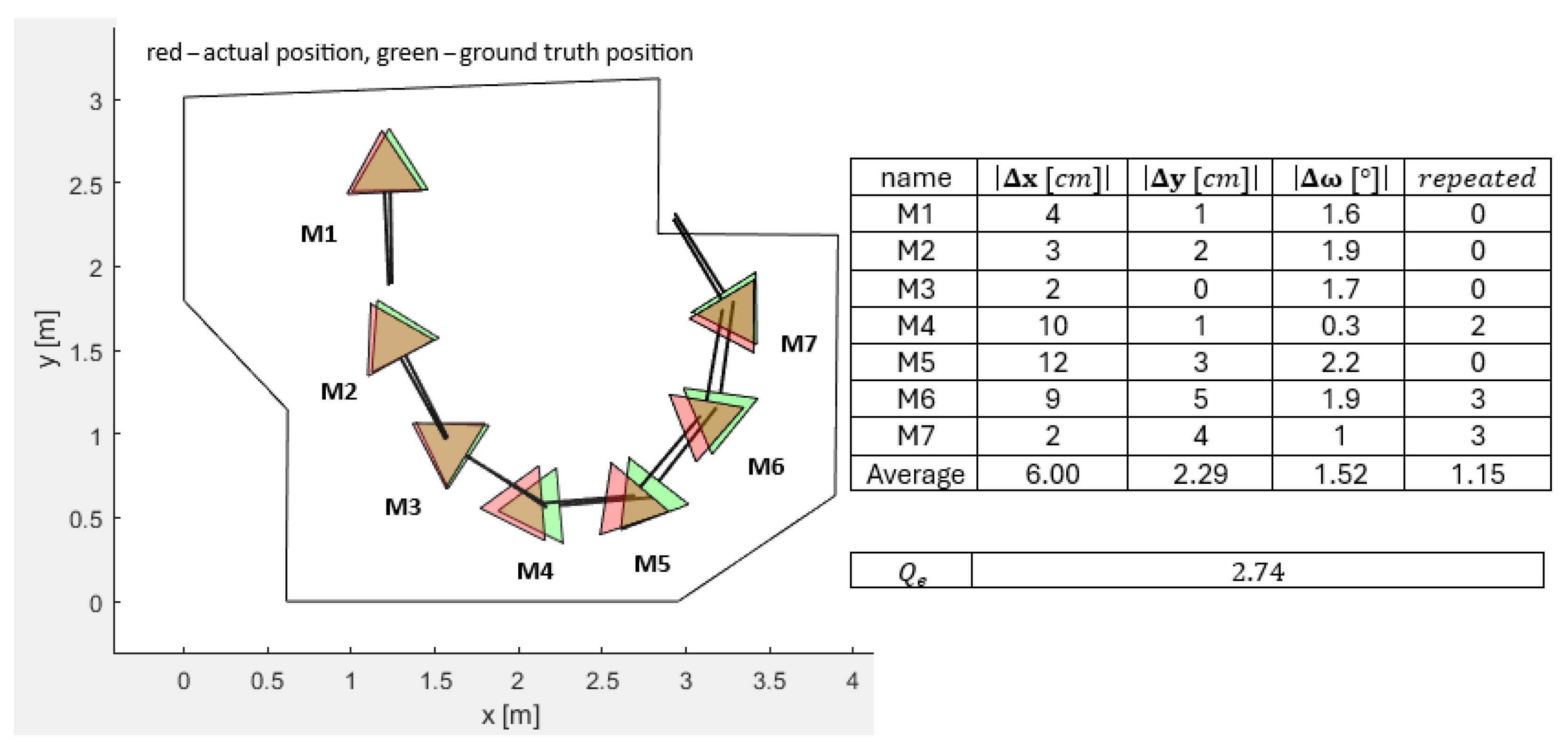

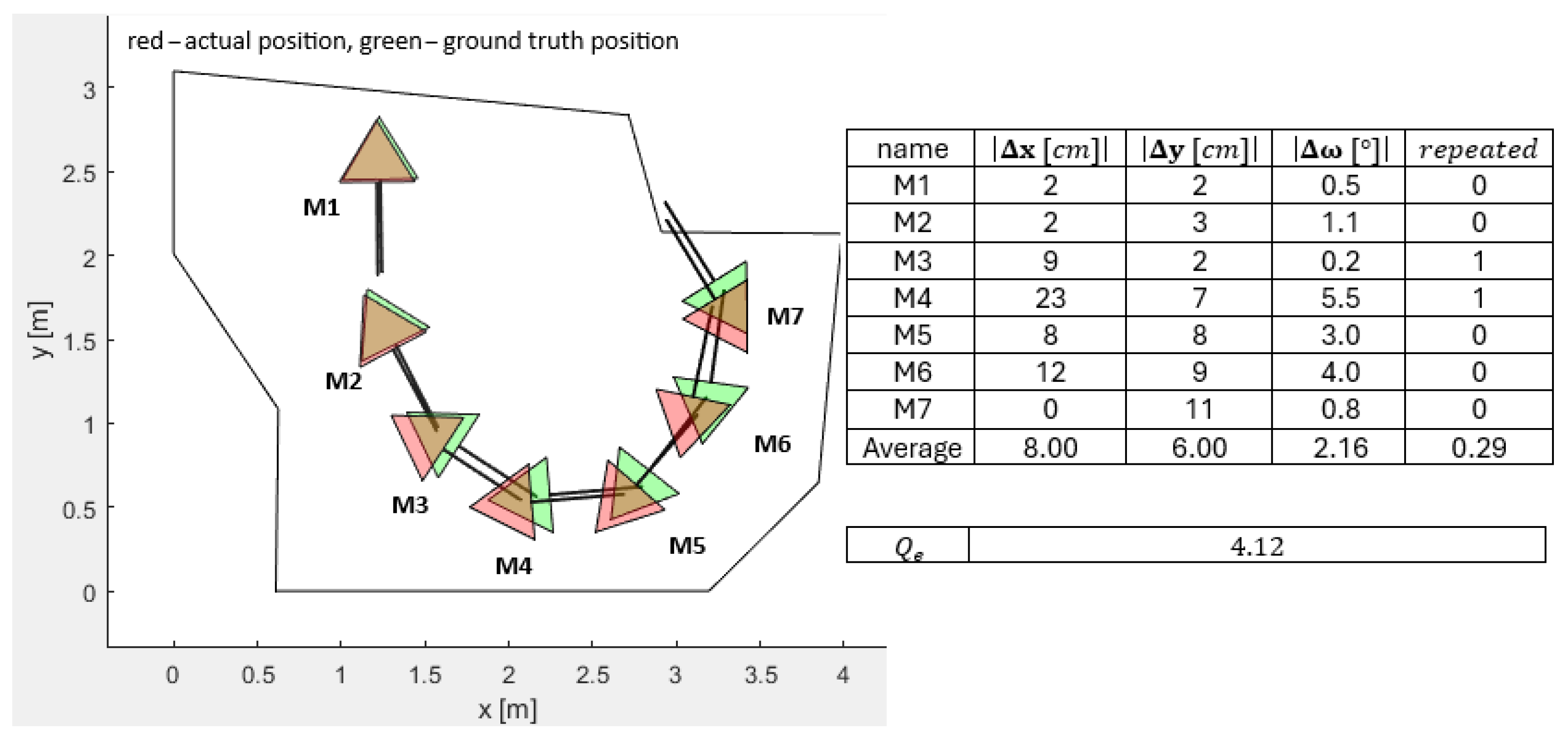

Increasing the perturbation of the corners of the workspace in simulations from to or caused a significant deterioration in the accuracy of the calculated PSO-ALM. Simulated deviations of up to cm and were spotted in the worst-case scenario. For the practical lab experiments, these accuracies of identifying the corner positions using the ORB-SLAM2 were estimated to be , even larger than the simulated ones. The estimated errors in the positions and orientations reached cm, , cm and for orientation in the worst case. Still, the general shape of the workspace was recognizable, and the OA was functioning, but the quality of the solutions was worse than expected. An adequate definition of the robot’s workspace area must be of higher priority than the LIDAR measurements; hence, significant care must be taken in the proper definition of the robot’s workspace area.

The real lab tests were much affected by the heavily deteriorated LIDAR measurements, especially for distances greater than 2 m and angles of measurement greater than 44°. An experiment was performed to realize the adequate FOV, where the disturbances were lowest. Here, the quality function value

was implemented to measure the disturbances. A separate experiment to realize the adequate PSO-ALM parameter settings was performed too. The influence of the obstacles imposed in front of the LIDAR were also studied carefully. The closer the obstacle, the greater the disturbance and the worse the localization inaccuracy. Despite the inaccurate LIDAR measurements, the PSO calculations of the mobile robot positions and orientations were still close to the ground-truth measurements of the real mobile robot positions and orientations (see

Figure 14). The average absolute errors in the base coordinate system were (

) cm for all seven measurements, and the average absolute error was (

).

The value of elapsed time was decreased by lowering the FOV settings, as expected. The most adequate FOV setting seemed to be for lab measurements as a compromise between time complexity and sensitivity to obstacles. The FOV setting could be decreased further to , decreasing the time elapsed for measurements significantly and increasing the quality function value of the measurements due to less dispersed beams but increasing the sensitivity to obstacles. By using more accurate LIDAR sensors, the proposed algorithm would have produced fewer local minima solutions and converged toward the global minimum faster. Therefore, we can say that the proposed method could be generalized, as it should work even better with more accurate LIDARs.

The experiments performed have shown that the best quality function value for pose P1 was obtained by a population size or and number of iterations . Increasing the population size further did not improve the result; actually it worsened the time complexity. Although it was not mentioned in this paper, the real lab experiments were solved using the traditional genetic algorithm (GA) for a fair comparison. The GA performed similarly in terms of the quality function value , but with significantly higher overall elapsed time , app. 3 to 4 times larger than the PSO.

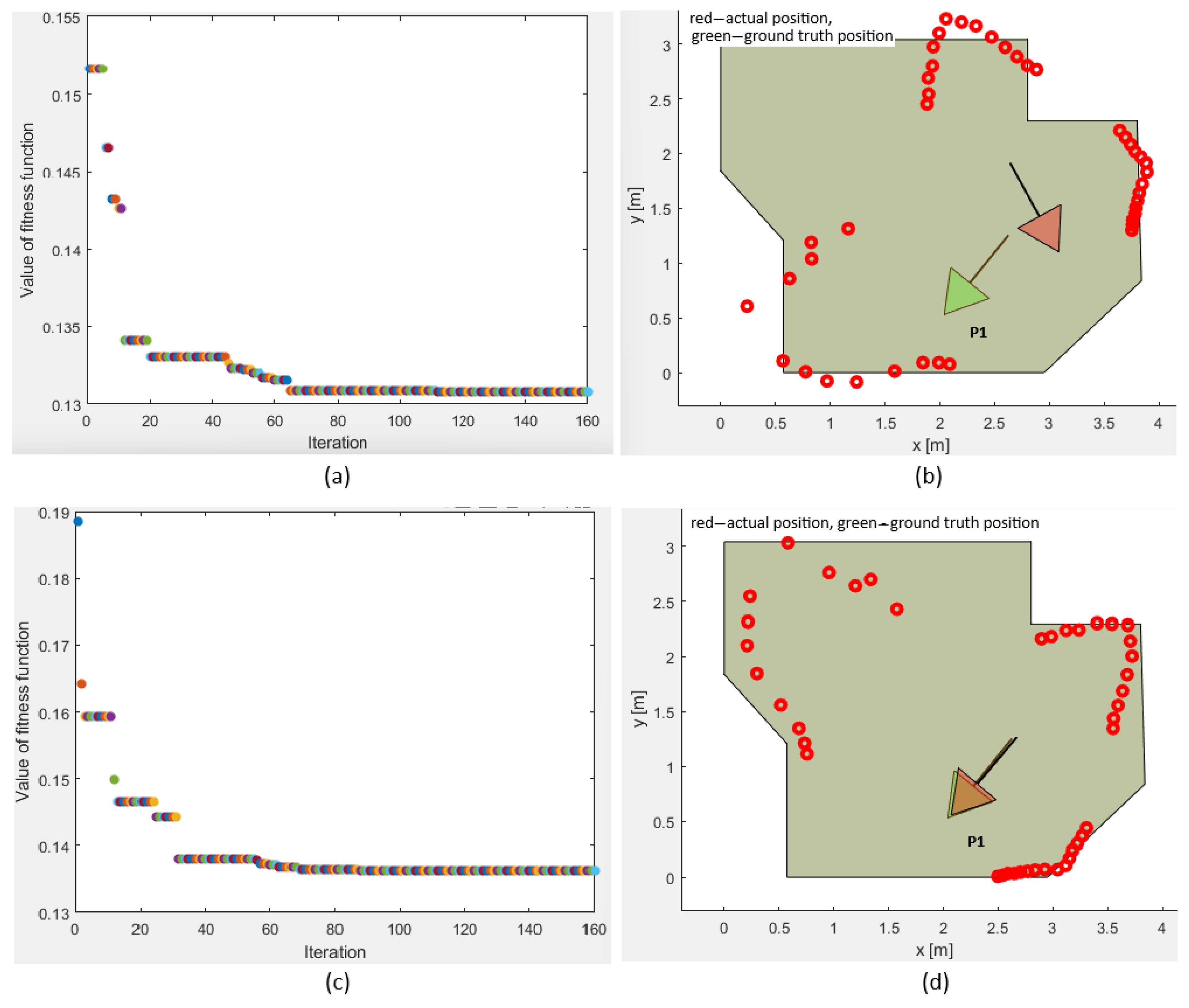

Extensive testing was performed for pose P1 with multiple local optima within the workspace. Additionally, convergence plots were generated for the fitness function value for position P1.

Figure 12 represents the two possible outputs, with one of them being the local optimum trapped solution and the other the global optimum. The experiments performed for position P1 show the fitness function value for both experiments and the positions and orientations calculated by the plain vanilla PSO for each value of the fitness function. Executing the PSO twice, and monitoring the performance after 160 iterations, these fitness function values were between

and

for both experiments, with the difference that one of these was the local optimum trapped solution and the other was the global optimum solution. The wrong positions and orientations were calculated four times more often than the correct one due to the local optimum solution having a fitness function value lower than the global optimum solution. This was a significant drawback of the plain vanilla PSO algorithm. Hence, the integrity check and PSO-ALM were introduced to verify the correctness of the calculated results, as by referencing the fitness function value only, it was impossible to deduce whether the solution was trapped or not. The PSO-ALM demonstrated superior performance compared to the odometric method and overperformed all the benchmarks, the GA, GEO, GA-ALM, and GEO-ALM.

5. Conclusions

The absolute localization of the semi-humanoid mobile robot Pepper in the reference Cartesian coordinate system was presented in this paper. Three sets of light detection and ranging (LIDAR) distance measurements were used, i.e., a single measurement ahead of the robot and two measurements to the sides of the robot. Underlying simulations were performed, as well as real laboratory experiments. Based on the knowledge obtained from the simulations, the modified particle swarm optimization algorithm with a local optima avoidance mechanism (PSO-ALM) and integrity check for verifying the correctness of the solution was proposed and tested in a real-world robot workspace. The proposed solution was compared to the plain vanilla PSO, which underwent local optima trapping. Experimentally, we realized that the solution trapped into the local optima was more than four times more frequent than the global optimum. The built-in odometrical algorithm, on the other hand, depended much more on the distance traveled, since it underwent the classic disturbance integration that increased with respect to the distance. The PSO-ALM worked well when knowing the workspace with high accuracy. When a low-accuracy workspace plan, obtained from the automated ORB simultaneous localization and mapping, was given to the PSO-ALM, its performance decreased heavily. This is one of the drawbacks of the PSO-ALM that should be addressed in future, i.e., to allow for both simultaneous localization and mapping, not only the localization itself. Although some cues were written in this paper, a rigorous statistical analysis with time complexity of the proposed algorithm still needs to be performed. Another improvement that could be implemented is the fusion of localization data coming from different sources for improved reliability, e.g., using an extended Kalman filter or similar. Data fusion of the odometry with the LIDAR seems a natural choice for a future work. The odometry is accurate enough for short distances but prone to drift—such drift could be reset occasionally using the LIDAR localizations. If successful, the proposed method should be validated in a three-dimensional workspace in the future, including the z axis for localization of airborne unmanned aerial vehicles.