1. Introduction

A gravity gradiometer is a high-precision instrument that measures gravity gradients and is widely used in geophysical exploration, military reconnaissance, space measurement, and underground resource exploration. Since the early 20th century, gravity gradiometers have evolved from mechanical to electronic and eventually to quantum technology. The concept of the gravity gradient traces back to Newton’s law of universal gravitation, which described the gravitational field, although no instruments were available at the time to measure the gravity gradient accurately. By the end of the 19th century, scientists recognized the importance of spatial variation in the gravitational field for more precise detection of underground materials. Following this realization, advances in mechanics, mathematics, and materials science enabled the design of precise gravity-measuring instruments [1,2]. Torsion balance-based gravity gradiometers first appeared in the early 20th century. These instruments calculated the gravity gradient by measuring force differences on suspended masses within a gravitational field. A representative example is the Eötvös torsion balance, developed by the Hungarian physicist Eötvös Loránd around 1900, which was used to measure variations in the Earth’s gravitational field. This design became a classic instrument in early gravity gradient measurement, laying the foundation for subsequent developments [3,4].

With advances in airborne and marine technologies, mechanical gravity gradiometers were gradually applied in these fields by the mid-20th century. Because of the high speed of aircraft and ships, these instruments had to meet stricter interference resistance requirements [5]. Early mechanical instruments were bulky and had low sensitivity, with measurement accuracy limited by platform vibrations and environmental factors, allowing only low-speed, localized measurements [6]. Gravity gradiometers were revolutionized in the 1950s by rapid technological advancements. Instruments built with capacitive sensors, piezoelectric materials, and other electronic components gradually replaced traditional mechanical structures. These electronic instruments measured tiny displacements or torques caused by gravitational fields and used electronic amplifiers to improve signal sensitivity [7,8]. In 1979, the U.S. Air Force introduced the Mark II electronic gravity gradiometer, marking a significant leap in gravity measurement technology. This instrument used high-precision sensors to capture gravity gradient signals aboard aircraft, increasing sensitivity while also allowing measurements on dynamic platforms [9]. During this period, electronic gravity gradiometers were widely used in airborne and marine gravity surveys, and they eventually found use in underground resource exploration. During the 1980s, gravity gradiometers were widely used in marine exploration, particularly for detecting oil, gas, and mineral resources. Because gravity gradients are more sensitive to underground structural changes than gravity values, marine gravity gradient measurements can determine resource distributions more accurately. These instruments were typically mounted on deep-sea detectors or ships, capturing small changes in the seafloor’s gravitational field to conduct high-resolution surveys [10,11].

By the late 20th century, superconducting technology had significantly improved the accuracy of gravity gradiometers. Superconducting gravity gradiometers exploit the zero-resistance property of superconductors to significantly reduce environmental noise interference, resulting in extreme sensitivity. These instruments were mainly used for scientific research and military detection, which required precise measurements. Superconducting gravity gradiometers operate at low temperatures, making them well suited to precise gravity measurements in polar or space environments [12,13]. In the early 21st century, the emergence of cold atom technology revolutionized gravity gradient measurement. Cold atom technology, which is based on quantum mechanics, cools atoms to near absolute zero so that the gravity gradient can be measured through atom interferometry. In theory, this technology provides extremely high measurement sensitivity while avoiding the mechanical and thermal noise issues found in traditional instruments. In 2007, Paris-Sud University in France proposed using atom interferometers to measure gravity gradients. Subsequently, cold atom gravity gradiometers evolved from experimental prototypes to practical devices. Cold atom gravity gradiometers provide more stable and precise measurements under dynamic conditions than traditional electronic gravity gradiometers, making them well suited to applications in aviation, space, and ground exploration [14,15].

As airborne gravity gradiometers improved, researchers gradually realized that the aircraft’s movement and attitude changes could have a significant impact on measurement results. The errors in dynamic airborne gravity gradient measurements can be classified into two major categories. First, there are system errors arising within the gravity gradiometer itself, such as scale factor inconsistencies among the accelerometers of rotating accelerometer-based gradiometers, installation errors, circuit gain mismatches, and other system error components related to the manufacturing process and system performance. Second, disturbances from the dynamic measurement environment, such as translational motion, angular motion, attitude, and temperature, couple with the various error channels in the gradiometer system and transfer noise from the dynamic environment to the gravity gradiometer’s output, resulting in dynamic measurement errors in gravity gradient measurements [16]. To reduce the impact of the aircraft’s dynamic environment on the accuracy of gravity gradient measurements, compensation algorithms and technologies have emerged as a key research focus. In the 1970s, researchers proposed using aircraft attitude and velocity information for preliminary compensation. By utilizing motion information provided by the aircraft’s inertial navigation system, initial corrections could be made to compensate for errors caused by acceleration and angular velocity [17]. Gravity gradient compensation technology became more refined in the 21st century as computer technology, navigation systems, and high-precision sensors advanced rapidly. The use of advanced inertial measurement units (IMUs) and the global positioning system (GPS) enabled the development of precise dynamic compensation techniques. Multisensor data fusion technology was integrated into airborne gravity gradient compensation systems. By integrating data from sources such as IMUs and GPS, errors caused by aircraft motion and air turbulence can be effectively suppressed, significantly improving the accuracy of gravity gradient measurements [18]. In recent years, the advancement of artificial intelligence and machine learning technologies has provided new approaches to airborne gravity gradient compensation.

Deep learning is a branch of machine learning that has made significant advances in a variety of fields in recent years, owing to increased computational power and the availability of large amounts of data. Deep learning has outperformed traditional methods in several areas, including image recognition, speech processing, and natural language processing. The concept of deep learning originated from neuroscience research in the 1940s, when McCulloch and Pitts proposed a neuron model that simulated the basic functions of biological neurons [19]. Rosenblatt introduced the perceptron model in 1957, which is widely regarded as the first artificial neural network [20]. The perceptron was a simple linear classifier that performed well on basic problems. However, in 1969, Minsky and Papert showed that the perceptron could not solve nonlinear problems, such as the XOR (exclusive OR) problem, causing a temporary halt in neural network research [21]. In the 1980s, neural networks regained attention with the introduction of the backpropagation algorithm. Hinton and others popularized backpropagation in 1986, allowing neural networks to effectively adjust weights across multiple layers of neurons, thereby addressing complex nonlinear problems [22]. Though the neural networks of that era were shallow, this progress laid the groundwork for deeper neural networks. Deep learning emerged in the 21st century as a result of advances in hardware, particularly GPU technology. In 2006, Hinton and colleagues introduced deep belief networks (DBNs), marking the formal rise of deep learning. To address the challenge of training deep neural networks, DBNs employed unsupervised layer-wise pretraining [23]. In 2012, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton won the ImageNet competition with AlexNet, an eight-layer convolutional neural network. AlexNet’s use of GPUs for training significantly improved computational efficiency [24]. Later networks, such as VGGNet (2014) and ResNet (2015), increased network depth and improved performance. ResNet addressed the problem of vanishing gradients in deep networks by introducing residual connections, which enabled networks to reach hundreds of layers [25].

Recurrent neural networks (RNNs) are well suited to sequential data, such as time series and natural language. However, standard RNNs have difficulty with long-term dependencies and capturing distant information. Hochreiter and Schmidhuber introduced long short-term memory (LSTM) networks in 1997, which combined memory cells and gating mechanisms to mitigate the vanishing and exploding gradient problems. In recent years, LSTM networks and their simplified variant, the gated recurrent unit (GRU), have been widely used in speech recognition, natural language processing, and other fields [26]. In 2017, Vaswani and colleagues introduced the Transformer model, which replaced the RNN structure with a self-attention mechanism for sequential data processing. The Transformer’s multi-head self-attention mechanism establishes dependencies between any two positions in a sequence and significantly improves parallel computation efficiency. Transformer-based architectures such as BERT (bidirectional encoder representations from transformers) and GPT (generative pre-trained transformer) have demonstrated great success in natural language processing (NLP) tasks, confirming deep learning’s dominance in the NLP domain [27]. In 2023, Krichen introduced the fundamentals of convolutional neural networks (CNNs), then discussed several popular CNN architectures and compared their performance. He also explored when to use CNNs, their advantages and limitations, and provided recommendations for developers and data scientists [28]. In the same year, Alahmari et al. introduced three CNN models to measure students’ engagement in e-learning (EL) assignments and demonstrated their efficiency under different conditions. The application of these models is primarily based on analyzing learners’ facial expressions in online environments [29].

During flight, an aircraft’s acceleration changes gradually and smoothly over time, rather than abruptly or instantaneously. This behavior follows from fundamental mechanical and aerodynamic principles. Acceleration is the rate at which velocity changes, and an aircraft’s speed is influenced by the interactions of various forces, including thrust, drag, gravity, and lift. These forces usually change continuously during flight, so the acceleration also varies continuously and is correlated in time [30,31,32]. Moreover, as observed from the error transmission mechanism in rotating gravity gradiometers, there is significant coupling between the measured dynamic parameters and the system errors.

Therefore, considering the temporal correlation of the measured dynamic parameters and their coupling with system errors, this paper employs a post-error compensation method based on a CNN-LSTM neural network to compensate for dynamic measurement errors in the rotating gravity gradiometer. First, multi-channel convolution is used to enrich the feature space and improve the model’s feature extraction capability. This process prepares the data so that the subsequent LSTM model can better capture dependencies within input sequences. The features are then passed to the LSTM network, which uses its cell state and gating mechanism to selectively retain, update, or forget information as required. Trained by backpropagation, the LSTM network can effectively capture temporal dependency information, improving the accuracy of airborne gravity gradient compensation. This post-error compensation method effectively suppresses dynamic measurement noise, and its effectiveness is demonstrated using both simulated and measured data.

3. Gravity Gradient Compensation Model

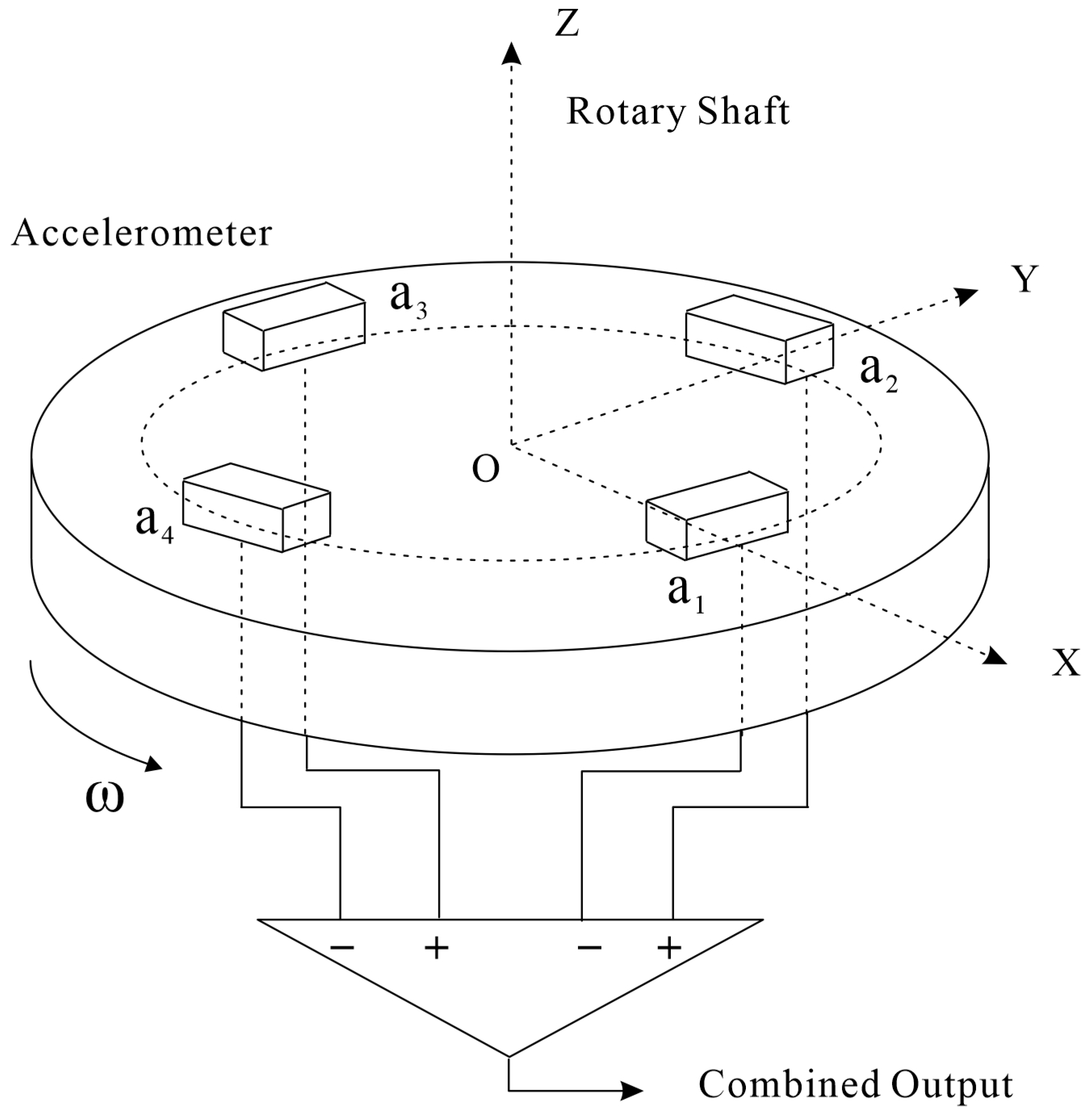

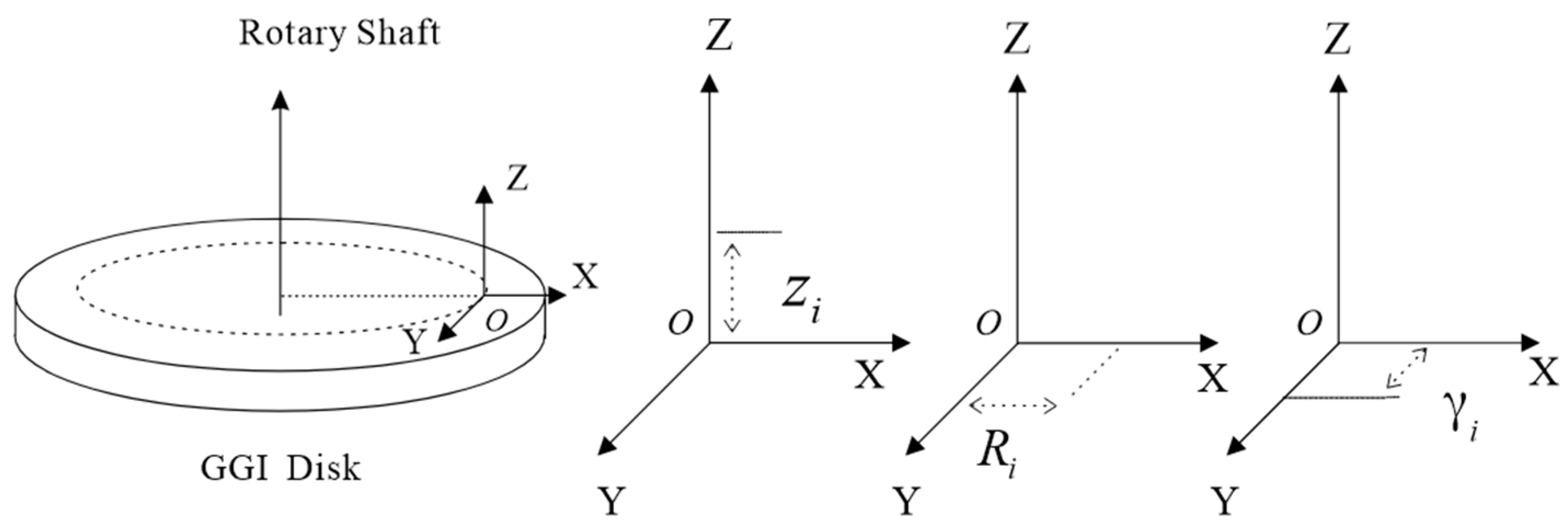

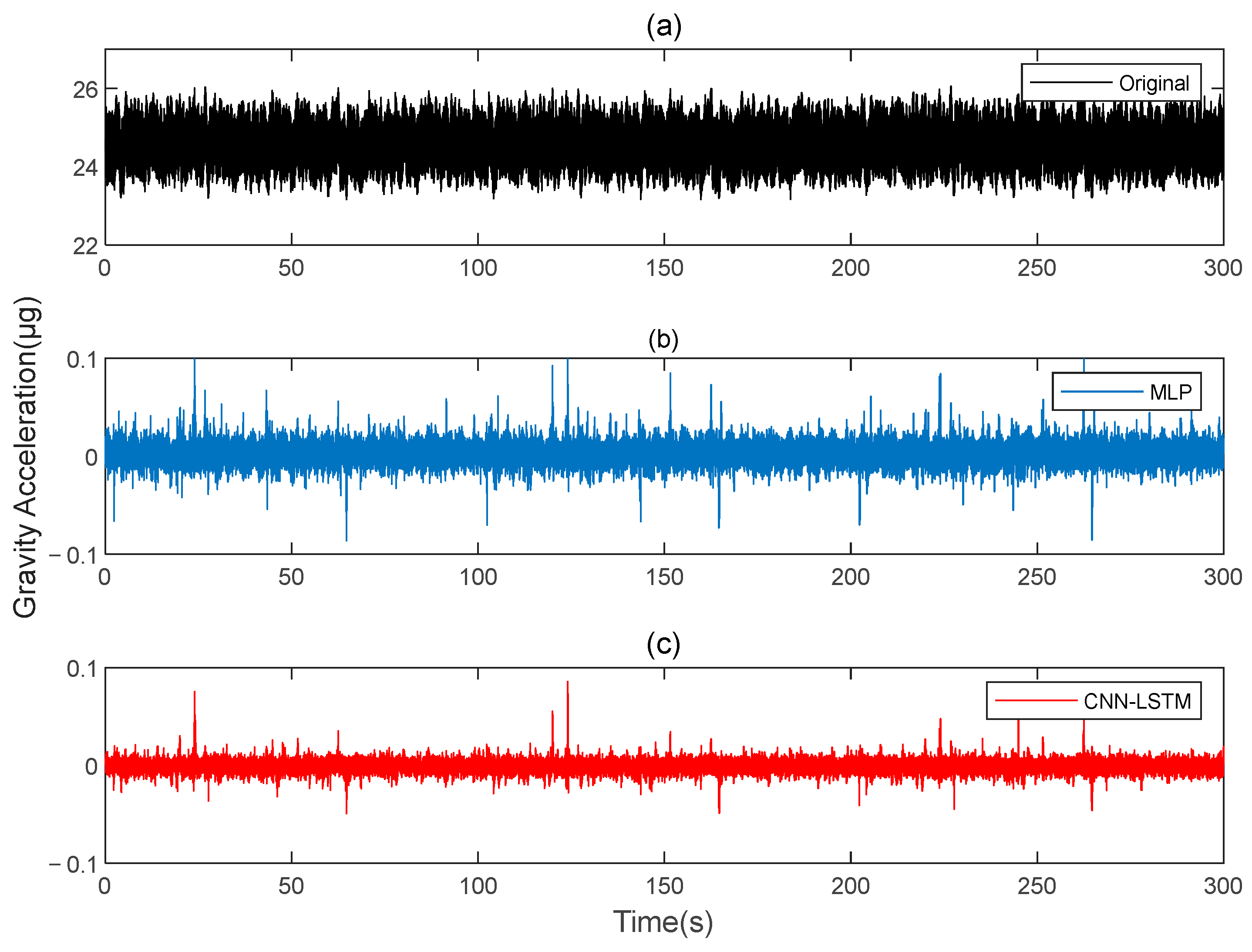

In this paper, a sample set is created by collecting three-directional acceleration, angular velocity, angular acceleration, and gravity gradiometer output noise data obtained during the airborne gravity gradient dynamic measurement procedure. Deep learning neural networks are used to construct a relationship between dynamic measurement errors and motion parameters in order to achieve post-error compensation for gravity gradiometer dynamic measurement data. Given the coupling of noise in airborne gravity gradient data, the proposed neural network model (shown in Figure 3) improves feature extraction capabilities via convolution; the extracted features are then passed on to the LSTM neural network. This allows the model to accurately capture temporal dependencies in the data.
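The sample-set construction and the post-error compensation step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the sliding-window layout, the window length, the function names `build_samples` and `compensate`, and the choice to compensate by subtracting the predicted noise from the raw output are all assumptions made here for clarity.

```python
def build_samples(motion, noise, window):
    """Pair each window of motion parameters with the gradiometer noise
    at the last time step of that window.

    motion: list of per-time-step feature lists (for example 9 values:
            three-axis acceleration, angular velocity, angular acceleration)
    noise:  list of gradiometer output noise values, same length as motion
    window: number of consecutive time steps per sample (assumed value)
    """
    if len(motion) != len(noise):
        raise ValueError("motion and noise must have the same length")
    inputs, targets = [], []
    for end in range(window, len(motion) + 1):
        inputs.append(motion[end - window:end])  # window of shape (window, features)
        targets.append(noise[end - 1])           # noise aligned with the window end
    return inputs, targets


def compensate(raw_output, predicted_noise):
    """Assumed post-error compensation: subtract the predicted noise
    from the raw gradiometer output, sample by sample."""
    return [r - p for r, p in zip(raw_output, predicted_noise)]
```

In this layout, a trained network would map each window in `inputs` to an estimate of the corresponding entry in `targets`, and `compensate` would then be applied to the recorded output.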

In an LSTM neural network, there is a forget gate that can selectively retain or discard previous state information as needed. This allows the LSTM to selectively keep historical information that is useful for the model when handling time series, instead of either discarding or retaining everything indiscriminately. The LSTM controls the inflow of new information through the input gate and manages the output at each time step through the output gate. This gating mechanism allows the model to flexibly adjust the amount of information to update at each moment. In this way, at different points in the sequence, the LSTM can dynamically choose which information to retain and which to output, thereby effectively handling complex temporal dependencies. The cell state in an LSTM serves as a highway running through the entire sequence, making it less susceptible to vanishing gradient issues. This design enables the LSTM to maintain effective state transmission even across long time steps, allowing long-term information to be carried forward to later points in the sequence. This addresses the vanishing gradient problem that traditional RNNs face when learning from long sequences.

The LSTM’s unique structure includes a gate mechanism that controls the flow of information. The hidden layer consists of three gates: the forget gate, the input gate, and the output gate, as well as a cell state, which stores important information. The forget gate uses a sigmoid activation function to generate a value between 0 and 1 based on the current input and the previous hidden state, which determines how much information is forgotten. The closer the value is to 0, the greater the degree of forgetting, and the closer it is to 1, the more information is retained.

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

where $f_t$ represents the output of the forget gate, $\sigma$ is the sigmoid activation function, $W_f$ represents the weight matrix of the forget gate, $b_f$ denotes the bias, and $[h_{t-1}, x_t]$ denotes the combination of the current input and the hidden state from the previous time step.

Input gate. The primary function of the input gate is to determine what information needs to be retained in the neuron based on the current input $x_t$. First, a candidate cell state at time $t$ is generated using a tanh (hyperbolic tangent activation function) layer, denoted as $\tilde{C}_t$. This candidate state is then combined with the forget gate’s output to update the cell state, resulting in a new cell state $C_t$. The specific computation process for the input gate is as follows:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right)$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

where $i_t$ represents the output state of the input gate at time $t$, determining the impact of the input $x_t$ on updating the cell state $C_t$; $W_i$ and $b_i$ denote the weight and bias terms of the input gate, respectively; $W_C$ and $b_C$ represent the weight matrix and bias for generating the candidate memory cell $\tilde{C}_t$; and $\odot$ denotes element-wise multiplication.

Output gate. The output gate extracts and outputs key information from the current cell state. First, a σ layer decides which neuron states should be output. These neuron states are then processed by a tanh layer and multiplied by the output of σ to produce the final output value $h_t$, which also serves as the hidden layer input for the subsequent time step. The calculation process for the output gate is as follows:

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

$$h_t = o_t \odot \tanh\left(C_t\right)$$

where $o_t$ represents the output state of the output gate at time $t$; $W_o$ and $b_o$ are the weight matrix and bias terms for the output gate, respectively; and $C_t$ is the current cell state.
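The three gates and the cell-state update described above can be combined into a single time step. The sketch below uses the standard LSTM formulation in plain Python; the names `lstm_step`, `affine`, and the `params` dictionary layout are illustrative assumptions, not taken from the paper.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W, v, b):
    """Return W @ v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step.

    params maps 'f', 'i', 'c', 'o' to (W, b) pairs, where each W has
    hidden_size rows and hidden_size + input_size columns.
    """
    z = h_prev + x_t  # list concatenation, i.e. [h_{t-1}, x_t]
    W_f, b_f = params["f"]
    W_i, b_i = params["i"]
    W_c, b_c = params["c"]
    W_o, b_o = params["o"]

    f_t = [sigmoid(a) for a in affine(W_f, z, b_f)]        # forget gate
    i_t = [sigmoid(a) for a in affine(W_i, z, b_i)]        # input gate
    c_hat = [math.tanh(a) for a in affine(W_c, z, b_c)]    # candidate cell state
    # New cell state: keep part of the old state, add part of the candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_hat)]
    o_t = [sigmoid(a) for a in affine(W_o, z, b_o)]        # output gate
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]     # hidden state
    return h_t, c_t
```

Calling `lstm_step` repeatedly over a sequence, feeding each returned `h_t` and `c_t` into the next call, carries the cell state along the sequence as described in the text.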

Due to these characteristics, LSTM is better suited for capturing dependencies in time series data, which is especially important for data with strong temporal associations. In this paper, combining LSTM with one-dimensional convolution allows for fully leveraging the convolution’s ability to extract local features, while LSTM captures the temporal dependencies in these severely coupled data.
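To illustrate how a multi-channel one-dimensional convolution can feed an LSTM, the sketch below applies several kernels across all input channels and then rearranges the feature maps into per-time-step vectors. It is a simplified illustration under stated assumptions: the ReLU activation, the absence of padding, and the names `conv1d` and `to_time_major` are choices made here, not details given in the paper.

```python
def conv1d(x, kernels, biases):
    """Valid 1-D convolution (cross-correlation, as in most deep learning
    libraries) followed by ReLU (assumed activation).

    x:       list of in_channels sequences, each of length T
    kernels: list of out_channels kernels; each kernel is a list of
             in_channels weight lists of length k
    biases:  list of out_channels floats
    returns: list of out_channels sequences of length T - k + 1
    """
    T = len(x[0])
    out = []
    for kernel, bias in zip(kernels, biases):
        k = len(kernel[0])
        row = []
        for t in range(T - k + 1):
            s = bias
            # Sum the local window over every input channel.
            for channel, w in zip(x, kernel):
                s += sum(w[j] * channel[t + j] for j in range(k))
            row.append(max(0.0, s))
        out.append(row)
    return out


def to_time_major(feature_maps):
    """Transpose channels x time into time x channels, so that each time
    step yields one feature vector for the LSTM."""
    return [list(step) for step in zip(*feature_maps)]
```

Each output channel mixes all motion-parameter channels within a short local window, which is one way to expose the coupling between parameters before the LSTM models the longer temporal dependencies.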

4. Gravity Gradient Noise Signal Simulation and Compensation

This paper comprehensively considers various non-ideal factors in the error transmission mechanism in order to simulate the gravity gradiometer output signal. The parameters associated with these non-ideal factors (model parameters) are determined by selecting values at random from a specified range. Table 1 lists the specific values and units of the parameters, with ppm representing parts per million.

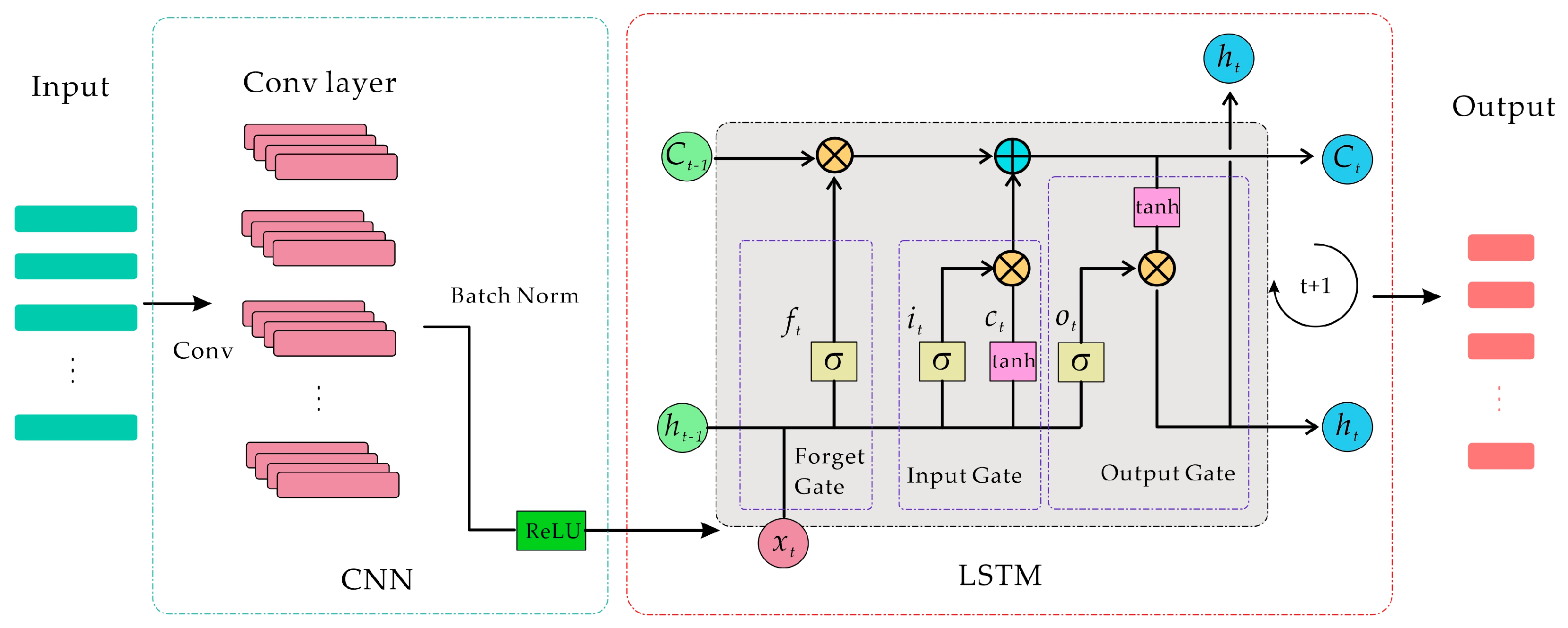

Based on the defined model parameters, the following input parameters are established. The distance R between the center of mass of the accelerometer and the center of the disk is set to 0.2 m. The sampling frequency of the rotating gravity gradiometer is set to 800 Hz, while the rotation frequency f of the GGI disk is set to 0.25 Hz. At f = 0.25 Hz, the measurement of the rotating gravity gradiometer is influenced primarily by the components of each motion parameter below 1 Hz. The accelerations along the three axes of the rotating gravity gradiometer are each composed of a constant acceleration randomly determined within the range of −0.3 to 0.3 m/s² and 100 components randomly distributed between 0.01 and 1 Hz. The amplitudes of these components are randomly determined between 0.006 and 0.010 m/s², and the phases are randomly determined. For the vertical axis, the acceleration of 9.8 m/s² caused by gravity must be subtracted.

The angular velocities about the three axes of the rotating gravity gradiometer are each set to consist of a constant angular velocity randomly determined within the range of −3 to 3 and 100 components evenly distributed from 0.01 to 1 Hz. The amplitudes of these components are randomly determined between 0.2 and 0.5, and the phases are randomly determined. The above random numbers follow a continuous uniform distribution, and the quantities remain constant over time once determined. The angular accelerations about the three axes of the rotating gravity gradiometer are obtained as the first-order time difference of the corresponding angular velocities. Demodulation employs a low-pass filter with a rectangular window and a cutoff frequency of 0.075 Hz.
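The construction of one simulated motion parameter can be sketched as follows: a constant offset plus 100 sinusoidal components with uniformly drawn amplitude, frequency, and phase, sampled at 800 Hz, with the angular acceleration obtained by a first-order difference. The function names and default arguments are illustrative assumptions, and the sketch draws the component frequencies at random (for evenly spaced frequencies, the draw would be replaced by a fixed grid).

```python
import math
import random


def simulate_motion(duration_s, fs=800.0, const_range=0.3,
                    amp_range=(0.006, 0.010), n_components=100,
                    f_range=(0.01, 1.0), rng=None):
    """Return one simulated motion channel: constant offset plus a sum
    of sinusoids whose parameters are drawn once and then held fixed."""
    rng = rng or random.Random()
    const = rng.uniform(-const_range, const_range)
    components = [
        (rng.uniform(*amp_range),            # amplitude
         rng.uniform(*f_range),              # frequency in Hz
         rng.uniform(0.0, 2.0 * math.pi))    # phase in rad
        for _ in range(n_components)
    ]
    n = int(duration_s * fs)
    signal = []
    for k in range(n):
        t = k / fs
        value = const + sum(a * math.sin(2.0 * math.pi * f * t + p)
                            for a, f, p in components)
        signal.append(value)
    return signal


def first_difference(x, fs):
    """Approximate the time derivative with a first-order difference."""
    return [(x[k + 1] - x[k]) * fs for k in range(len(x) - 1)]
```

For example, `first_difference(simulate_motion(10.0, const_range=3.0, amp_range=(0.2, 0.5)), 800.0)` would give an angular acceleration series from an angular velocity series built with the ranges quoted in the text.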

This paper simulates two sets of rotating gravity gradiometer output signals, denoted as signal A and signal B. Initially, signal A is used as the model’s training set, with signal B serving as the verification set. The compensation results are compared to the traditional deep learning method, MLP.

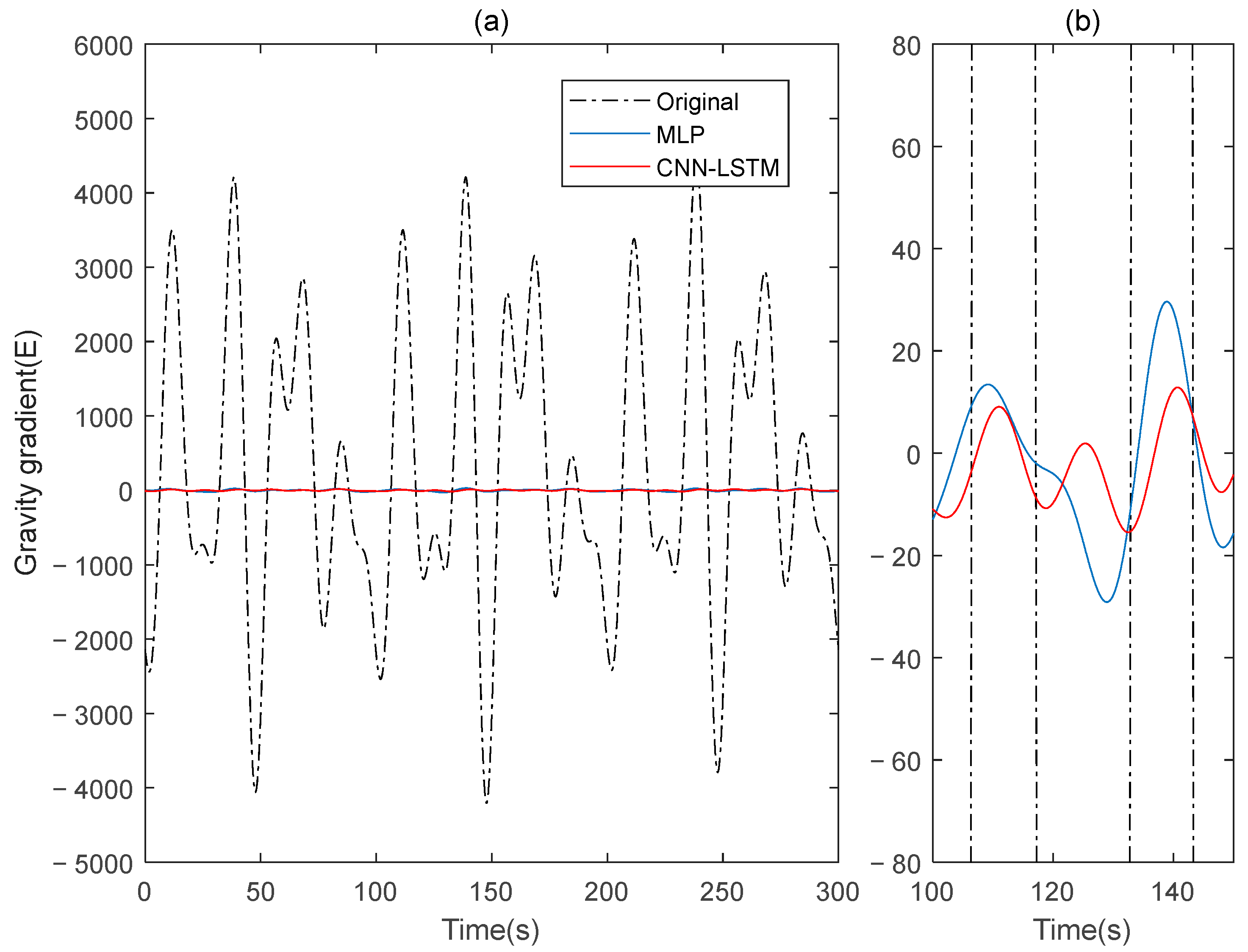

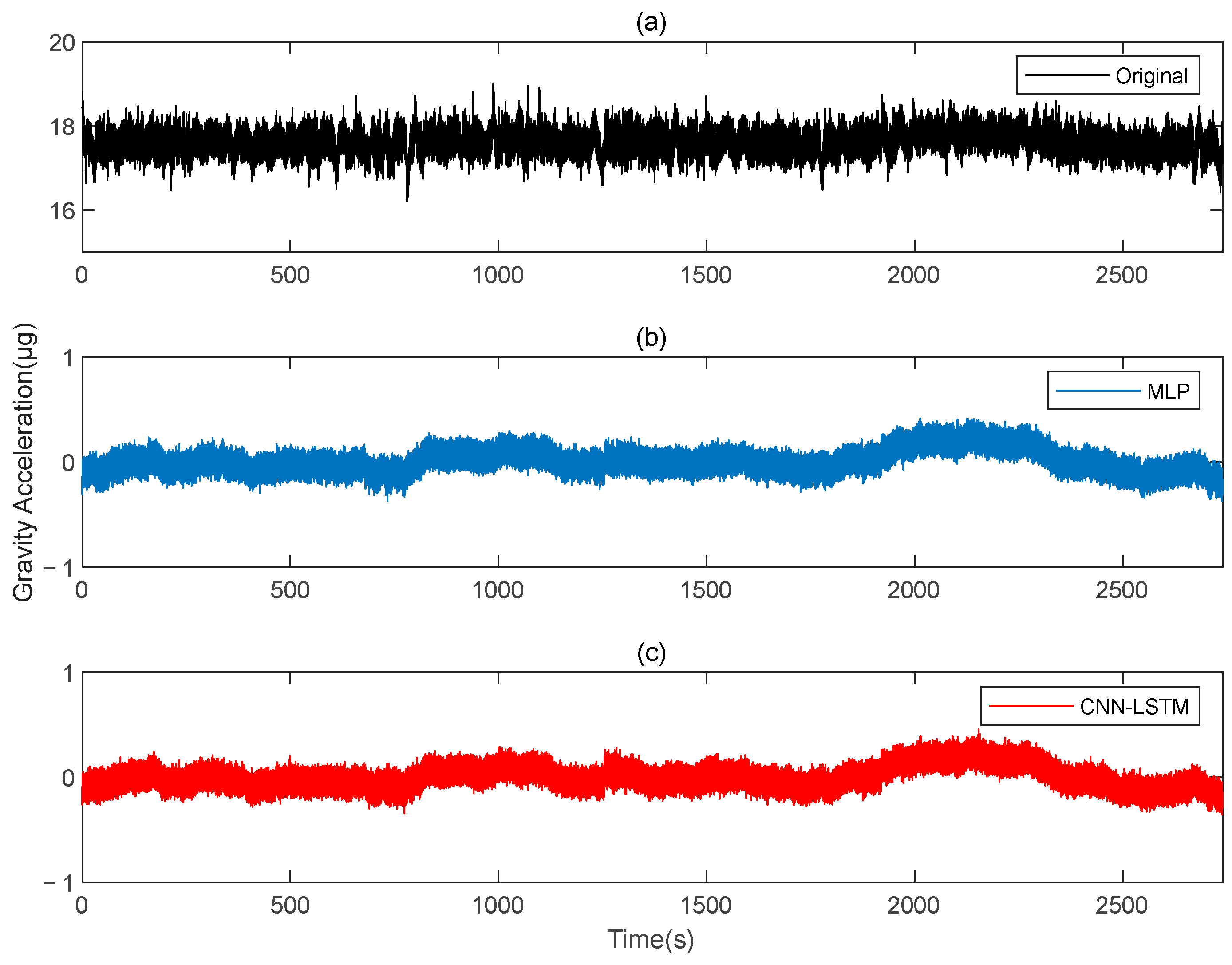

Figure 4 shows the rotating gravity gradiometer’s outputs before and after compensation. The rotating gravity gradiometer output data, both before and after compensation, are demodulated [

34]. The

gradient component is demodulated with a sinusoidal doubling frequency, while the

gradient component is demodulated with a cosine doubling frequency. The

and

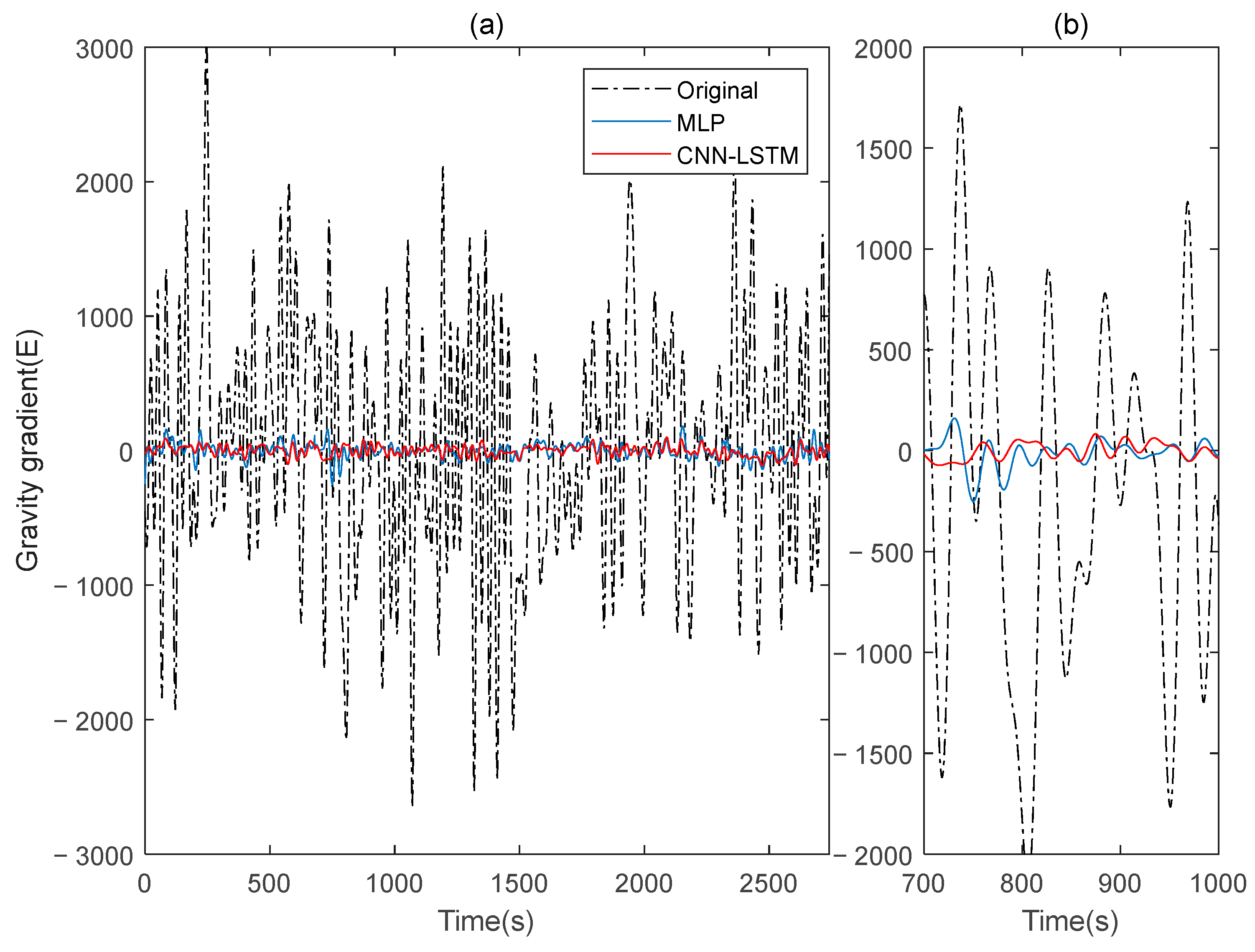

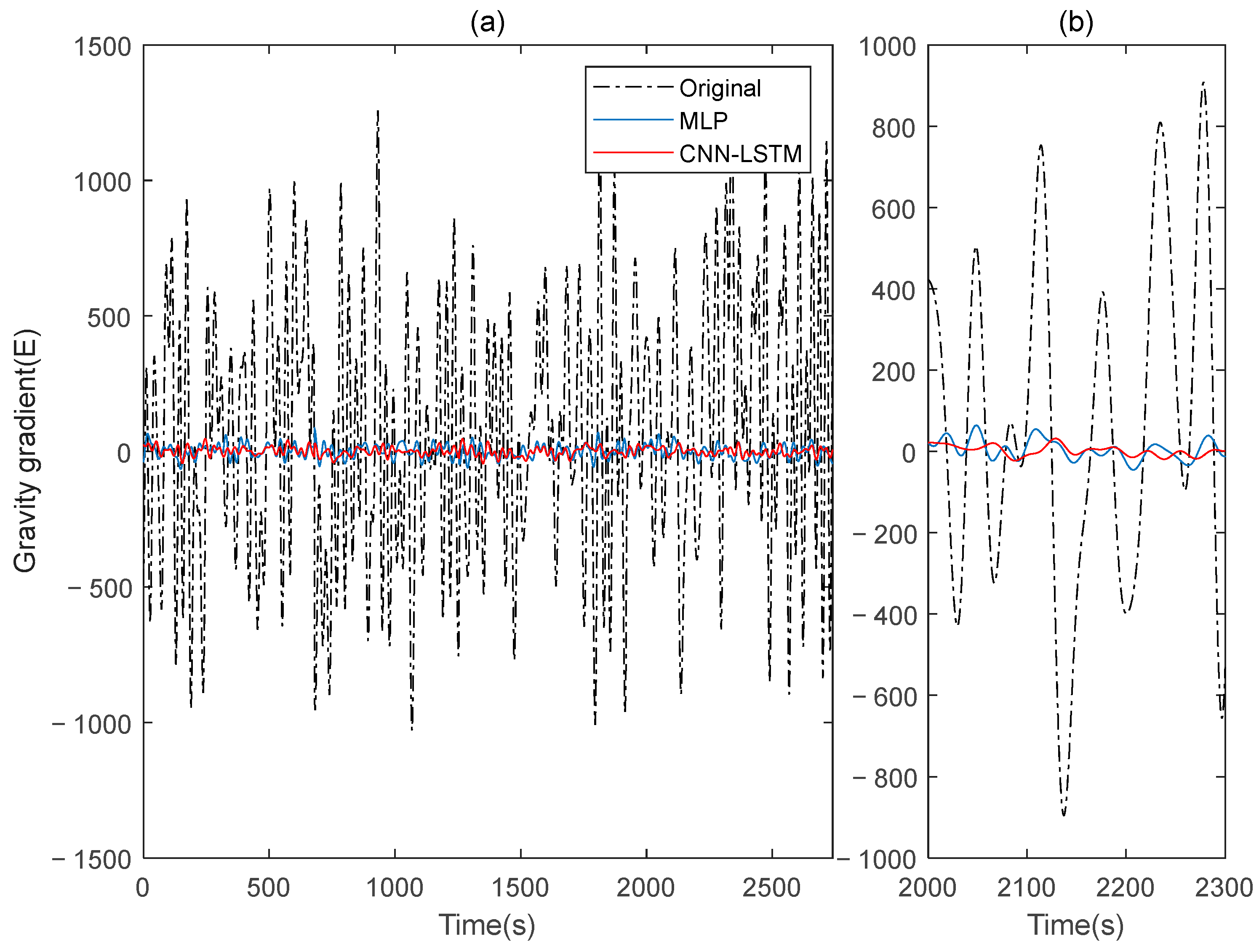

gravity gradient components before and after compensation are obtained as shown in

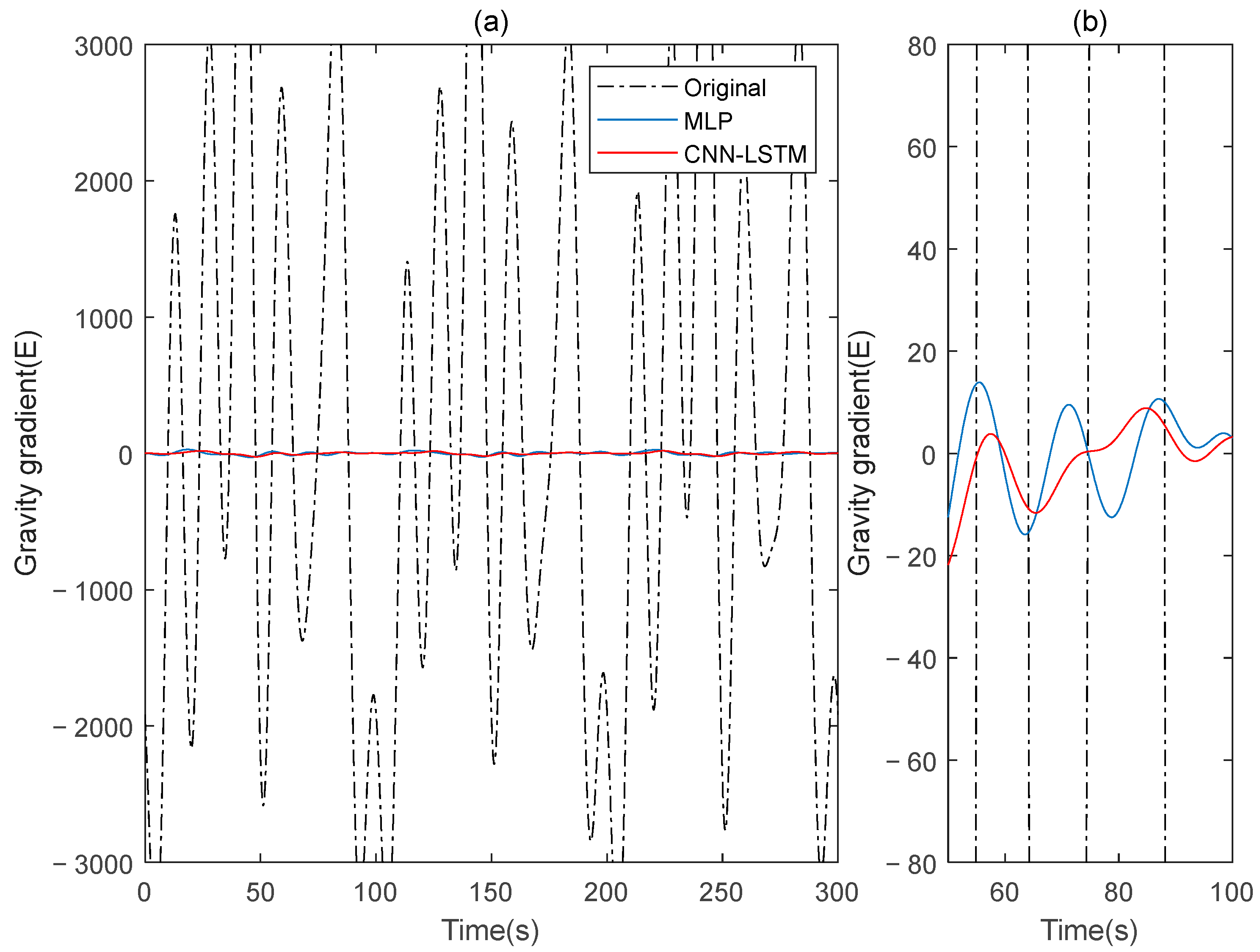

Figure 5 and

Figure 6, respectively.
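The double-frequency demodulation described above can be sketched as a multiplication by sine and cosine references at twice the rotation frequency, followed by averaging over a rectangular window. This is a minimal sketch: the function name `demodulate`, the single-window averaging, and the window length are assumptions, and the relation between the window length and the 0.075 Hz cutoff used in the paper is not reproduced here.

```python
import math


def demodulate(signal, fs, f_rot, window_s):
    """Estimate the sine and cosine amplitudes of `signal` at 2 * f_rot.

    signal:   list of gradiometer output samples
    fs:       sampling frequency in Hz
    f_rot:    disk rotation frequency in Hz
    window_s: length of the rectangular averaging window in seconds
              (ideally a whole number of periods of the 2 * f_rot signal)
    """
    w = 2.0 * math.pi * 2.0 * f_rot  # angular frequency of the reference
    n = int(round(window_s * fs))
    if n > len(signal):
        raise ValueError("signal is shorter than the averaging window")
    sin_sum = 0.0
    cos_sum = 0.0
    for k in range(n):
        t = k / fs
        sin_sum += signal[k] * math.sin(w * t)
        cos_sum += signal[k] * math.cos(w * t)
    # The factor 2 restores the amplitude after mixing and averaging.
    return 2.0 * sin_sum / n, 2.0 * cos_sum / n
```

With f_rot = 0.25 Hz the reference frequency is 0.5 Hz, so a 4 s window covers exactly two reference periods and a constant offset in the signal averages out.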

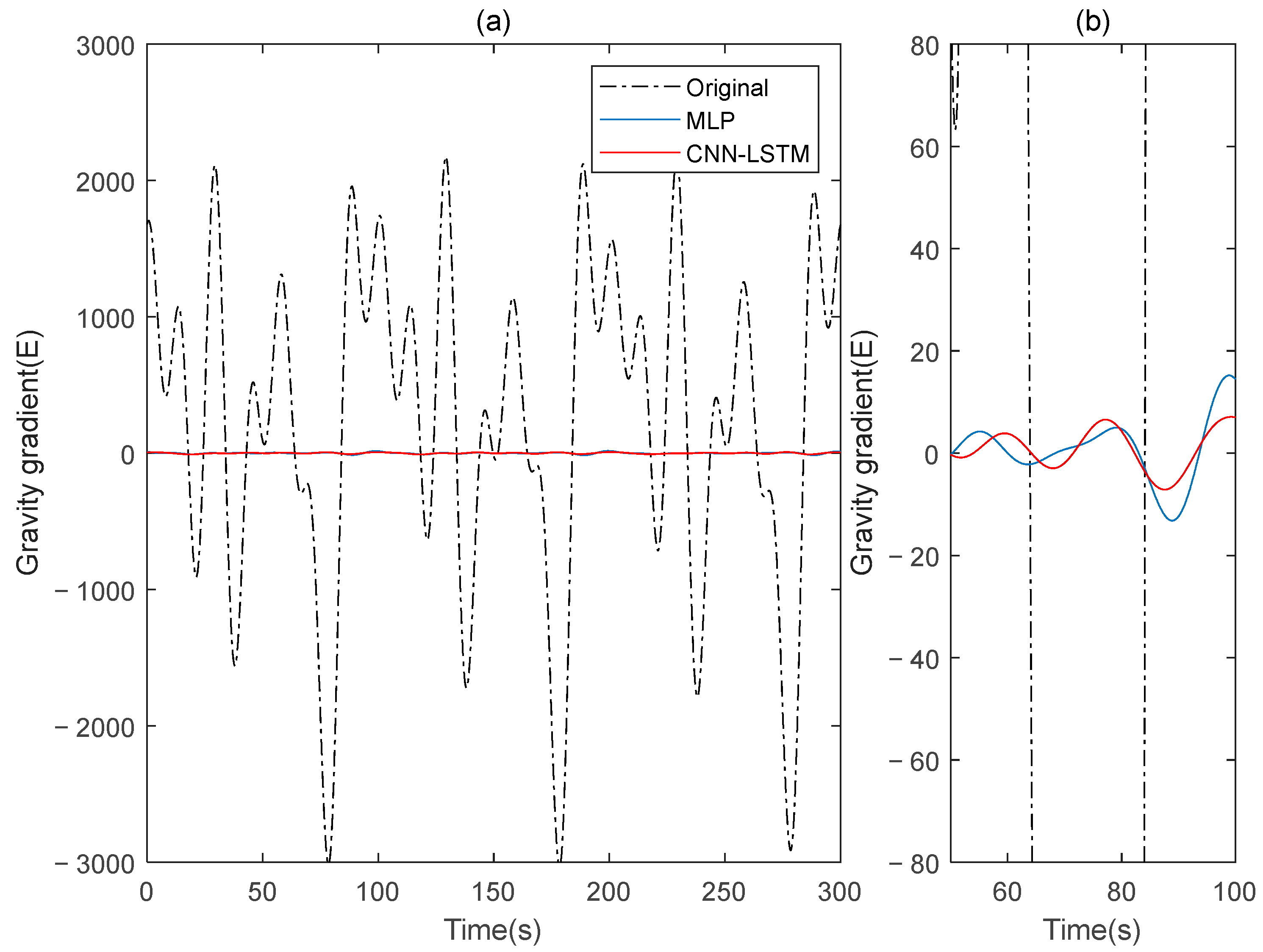

Subsequently, signal B is used as the training set for the model in this paper, while signal A serves as the verification set. The procedures are repeated, and the rotating gravity gradiometer outputs before and after compensation are shown in Figure 7. The two gravity gradient components before and after compensation are shown in Figure 8 and Figure 9, respectively.

The outcomes of the gravity gradient compensation experiments are evaluated using the standard deviation (STD) and the improvement rate (IR).

The STD is defined as follows:

$$STD = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \bar{x}\right)^2}$$

where $N$ represents the sample size, $x_i$ is the signal value, and $\bar{x}$ is the mean.

The IR is defined as follows:

$$IR = \frac{STD\left(y\right)}{STD\left(\hat{y}\right)}$$

where $y$ denotes the uncompensated signal values and $\hat{y}$ denotes the compensated signal values.
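As a worked check of the two metrics, the sketch below computes a standard deviation and an improvement rate taken as the ratio of the STD before compensation to the STD after compensation. The ratio form is consistent with the values reported for the measured data later in the paper (for example, 867.2312 / 53.8435 ≈ 16.1065); the 1/N normalization in `std` is an assumption, since the source does not state whether N or N − 1 is used.

```python
import math


def std(values):
    """Standard deviation with 1/N normalization (assumed)."""
    n = len(values)
    mean = sum(values) / n
    return math.sqrt(sum((v - mean) ** 2 for v in values) / n)


def improvement_rate(std_before, std_after):
    """Ratio of the STD before compensation to the STD after compensation."""
    return std_before / std_after


# STD values reported for the measured data of one gradient component.
ir_mlp = improvement_rate(867.2312, 53.8435)       # about 16.1065
ir_cnn_lstm = improvement_rate(867.2312, 37.9429)  # about 22.8562
```

A larger IR therefore means a larger reduction of the residual noise after compensation.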

The compensation result figures and Table 2 for the gravity gradient components of the simulated data indicate that the CNN-LSTM deep learning method used in this paper outperforms the traditional MLP method, with a lower STD value and a higher IR value, resulting in a significant compensation effect on gravity gradient signal noise.

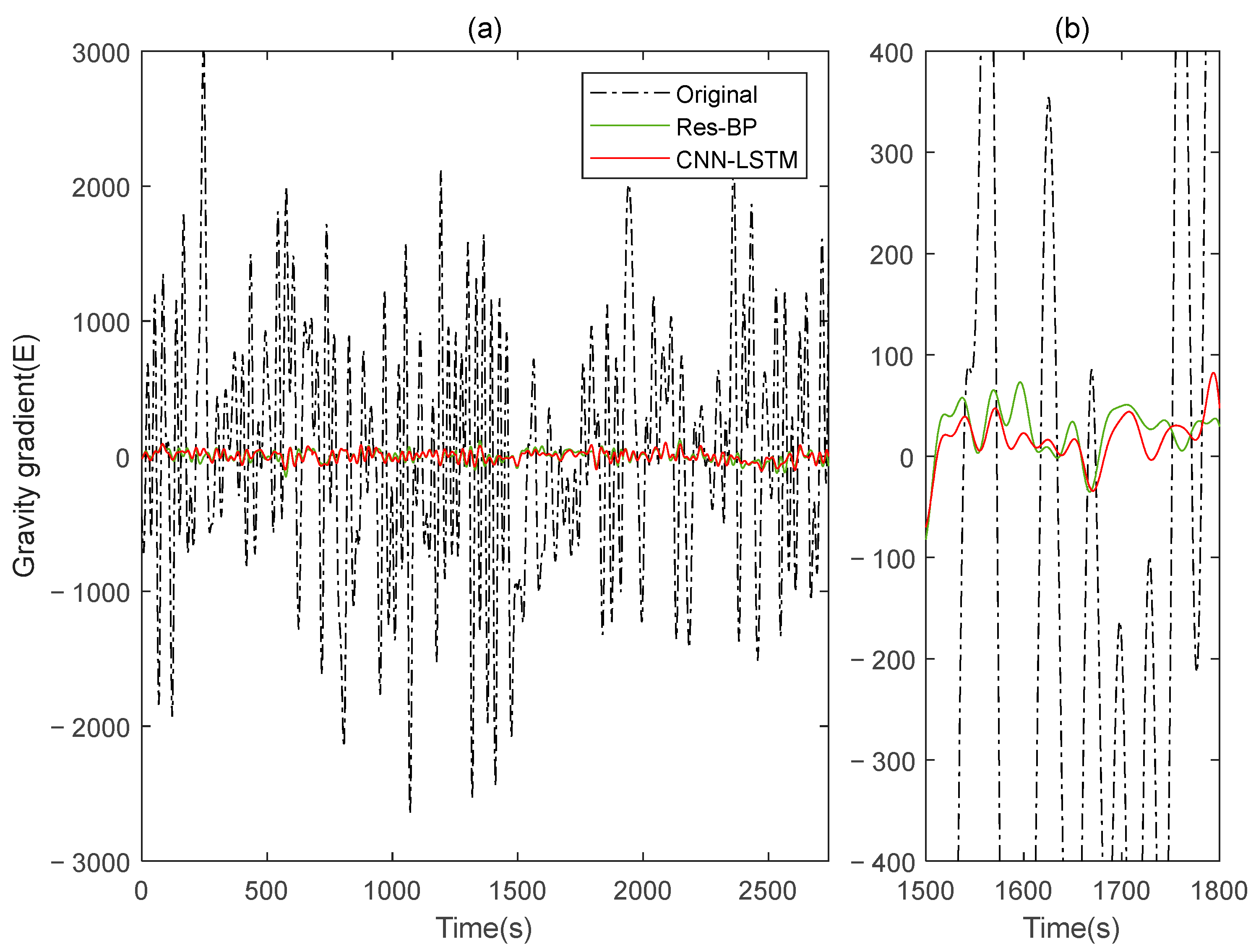

Residual neural networks have shown excellent applications in various fields such as denoising [

35], geophysical inversion [

36], and error compensation [

37]. To further demonstrate the effectiveness of the method in this paper, we conducted a comparative analysis with a residual neural network, Res-BP. The comparison results are presented in

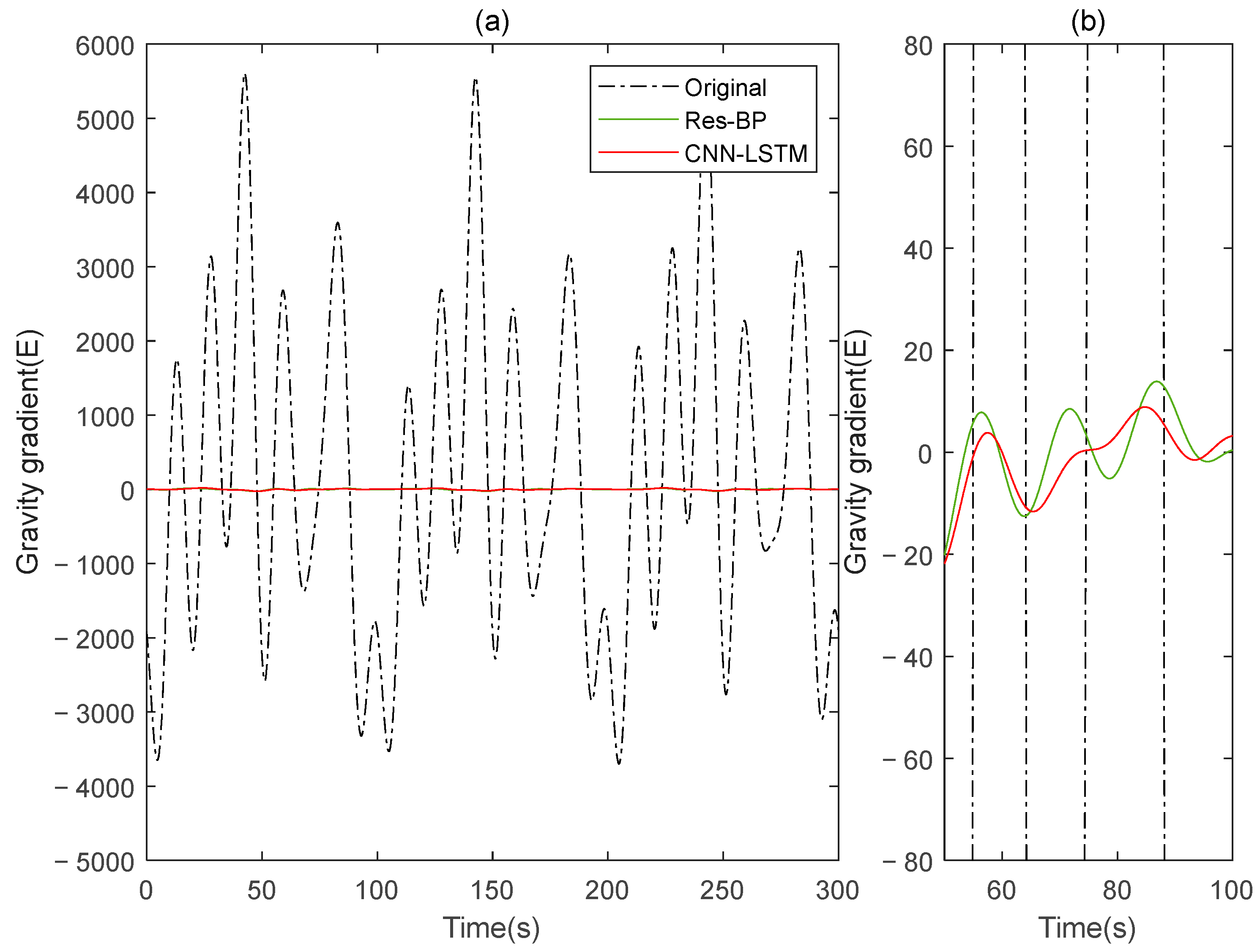

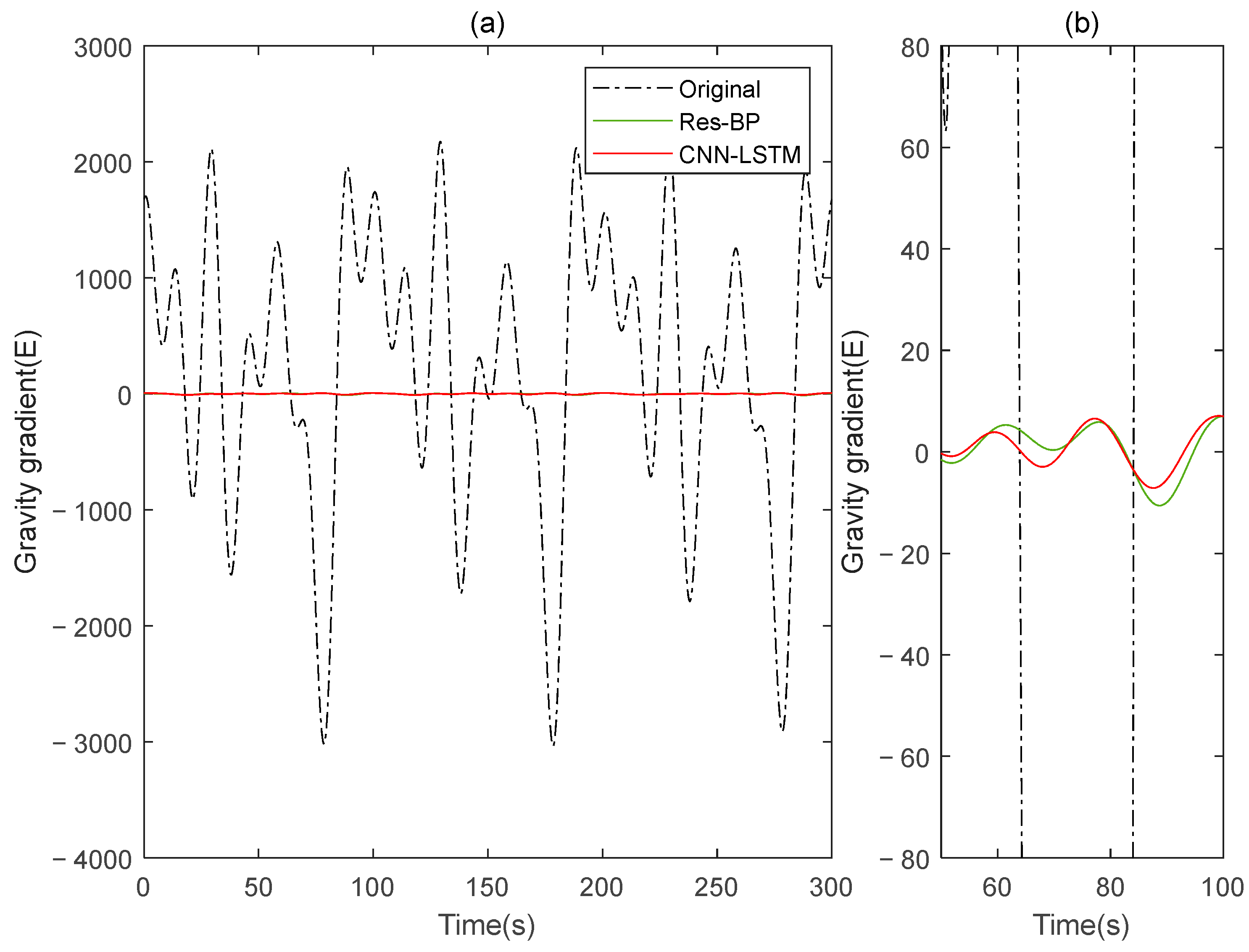

Figure 10,

Figure 11,

Figure 12,

Figure 13,

Figure 14 and

Figure 15.

The comparison results of Signal B:

The comparison results of Signal A:

5. Measured Gravity Gradient Data Compensation

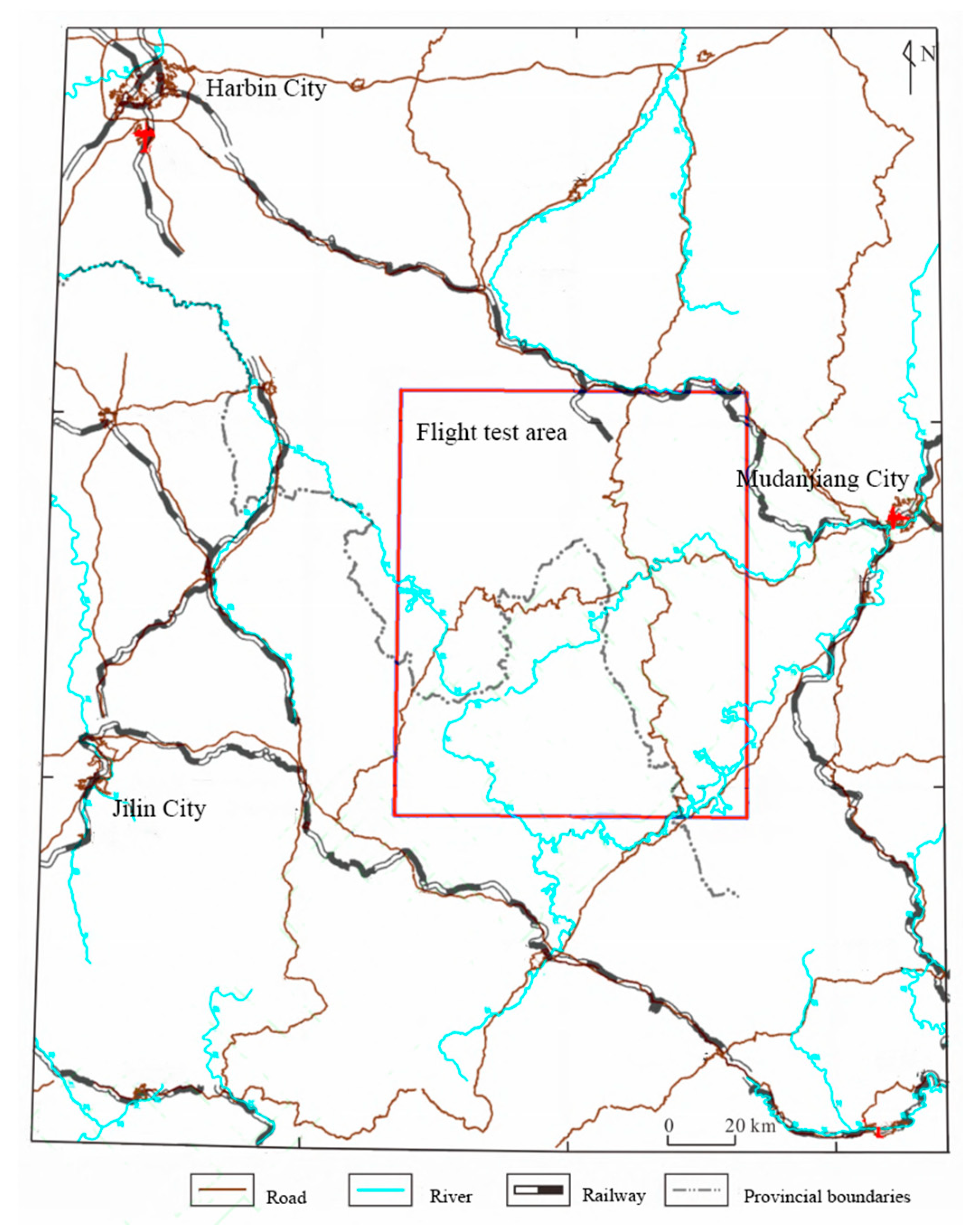

A flight experiment was conducted in the airborne gravity gradient test area (Figure 16), which is located in the southern section of the Zhangguangcai Ridge, west of Mudanjiang City in Heilongjiang Province.

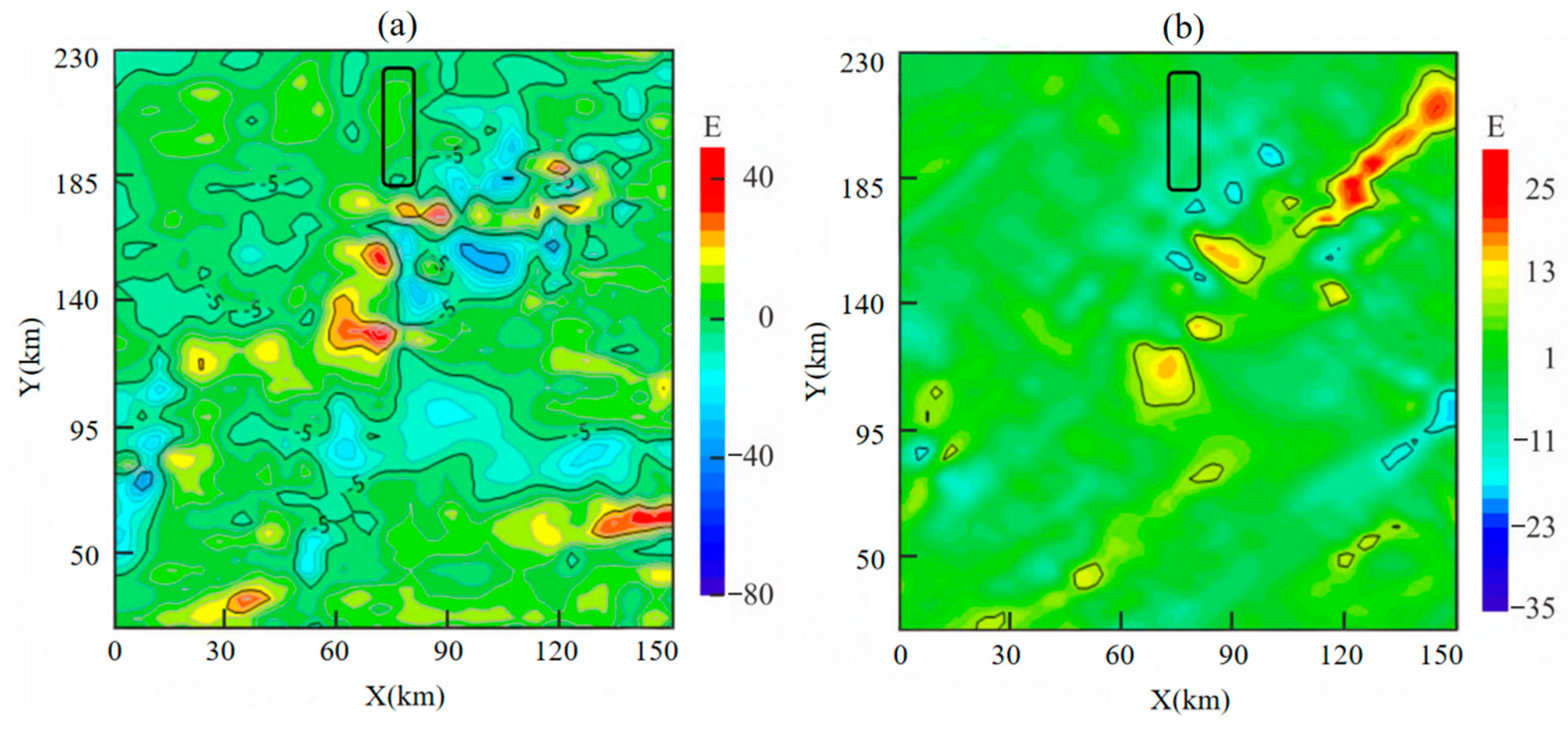

To ensure that the raw output of the gravity gradiometer only reflects dynamic measurement noise, a suitable flight altitude must be selected. At high altitudes, shallow surface or subsurface local targets have a negligible effect on the gravity gradient field. Over small areas, the gradient field appears constant, and it is reasonable to assume that gravity gradient data obtained at this altitude exclude gravity gradient signals caused by geological bodies. To achieve this, airborne gravity data for the area were collected. To establish a gravity gradient reference field, an upward continuation was performed using ground-measured data. Gravity anomalies on an observation plane at an altitude of 3600 m were calculated using arbitrary surface continuation theory, beginning with observed free-air gravity anomalies on the surface near the flight test area. Frequency domain component transformation was then used to obtain the two gravity gradient component anomalies at this altitude. Figure 17 shows that both gravity gradient component anomalies within the black-framed area vary within 10 E, making this region suitable for high-altitude measurements at 3600 m. The actual flight trajectory is shown in Figure 18.

In the actual measurements, this paper uses a Y12 aircraft from China Flying Dragon General Aviation Co., Ltd. (Harbin City, Heilongjiang Province, China), which is equipped with a gravity gradient acquisition system (see Figure 19). The system used in this study is a rotational gravity gradient measurement system, primarily composed of high-precision quartz accelerometers, weak signal processing circuits, a rotational mechanism, synchronization circuits, a power supply system, data acquisition and storage circuits, and electronic circuits. Among these components, the high-precision quartz accelerometer serves as the core sensor of the gravity gradient measurement system, primarily measuring the acceleration sensed by its test mass [38]. The aircraft repeatedly flew along a line at 3600 m altitude, collecting motion parameters such as accelerations and angular velocities together with the gravity gradiometer output signals. The motion parameters are combined with the gravity gradiometer output data to create a deep learning sample set and carry out the compensation process.

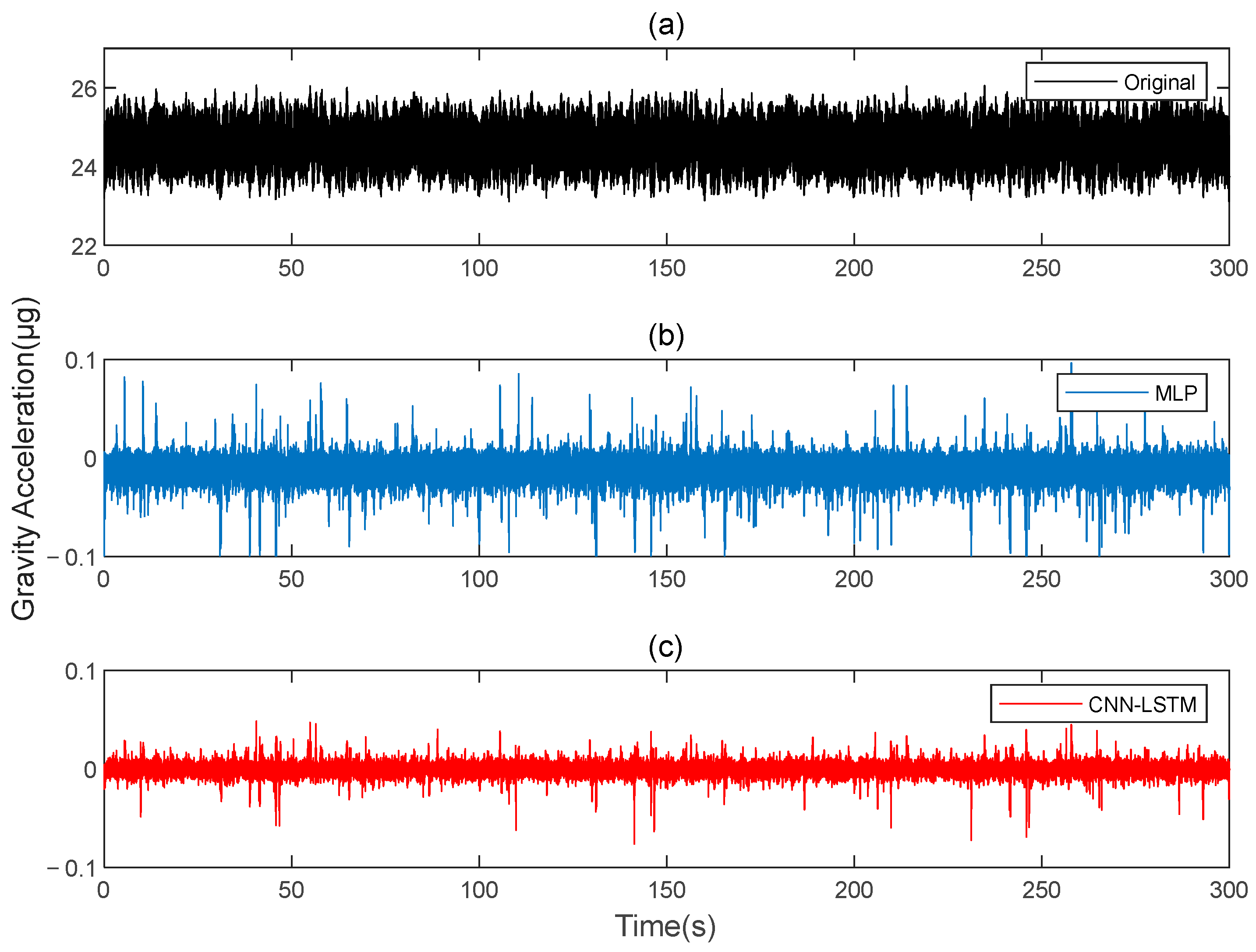

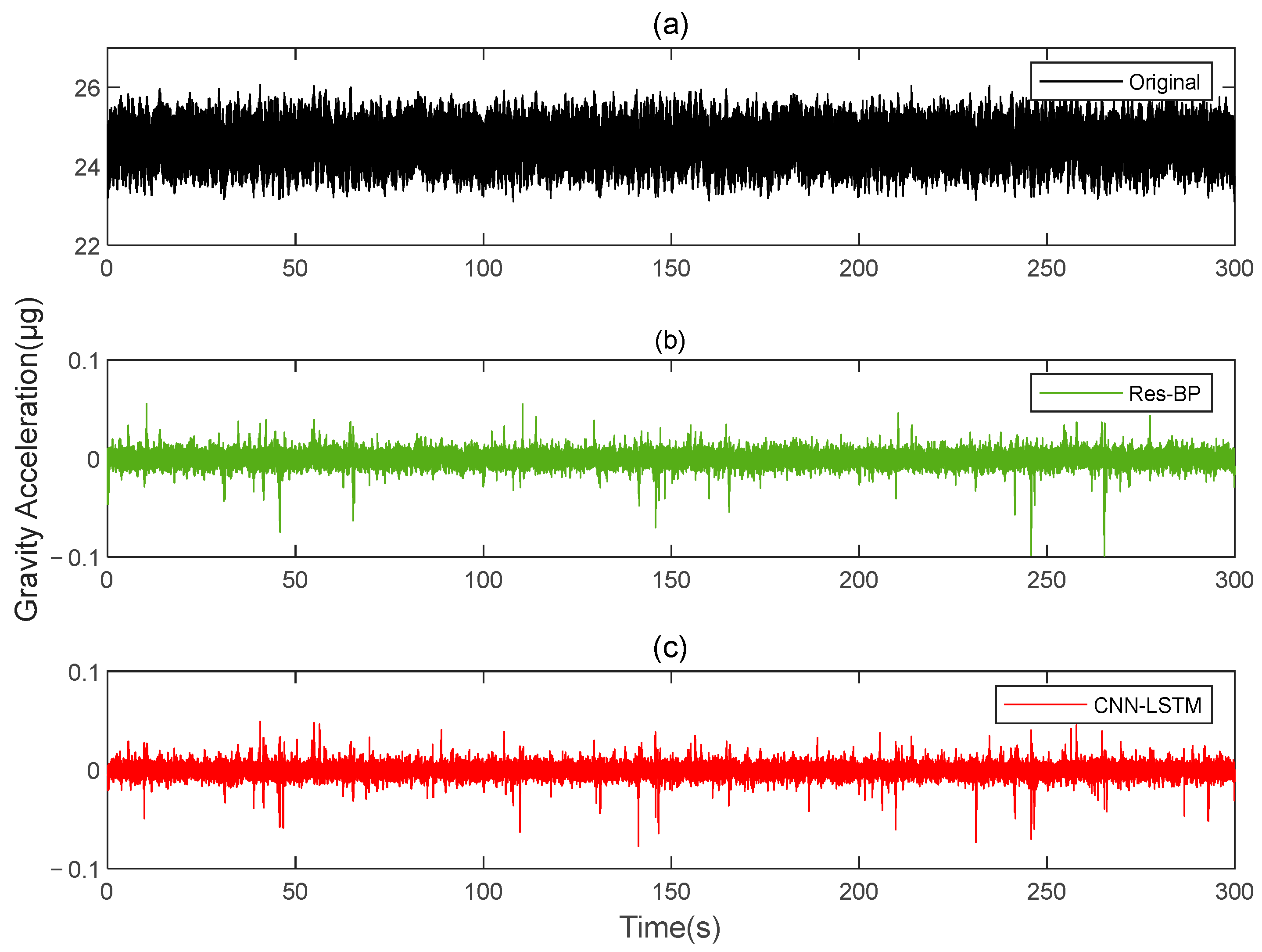

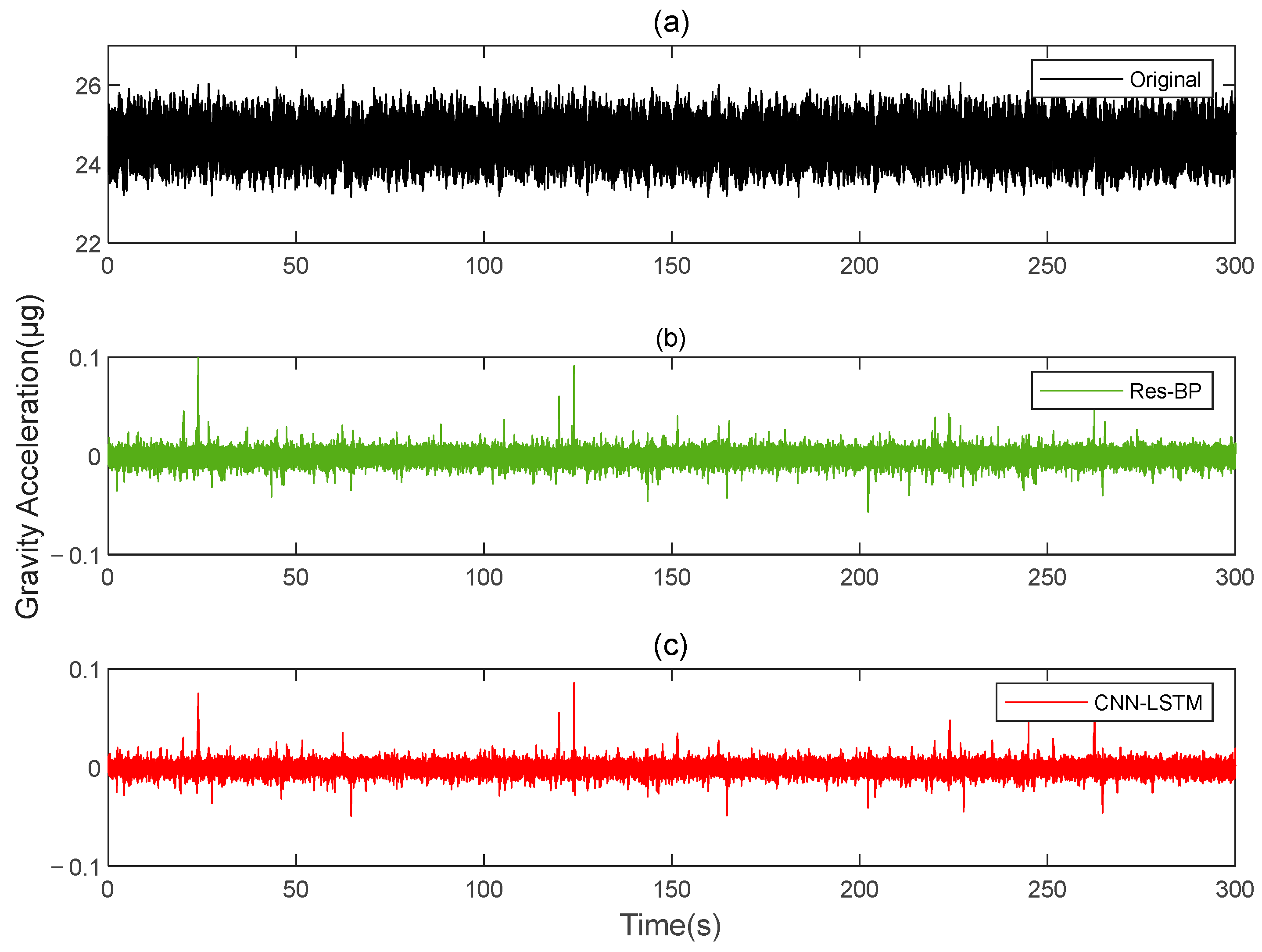

Figure 20 shows the results before and after the compensation of the original data. The output data of the gravity gradiometer, both before and after compensation, are demodulated.

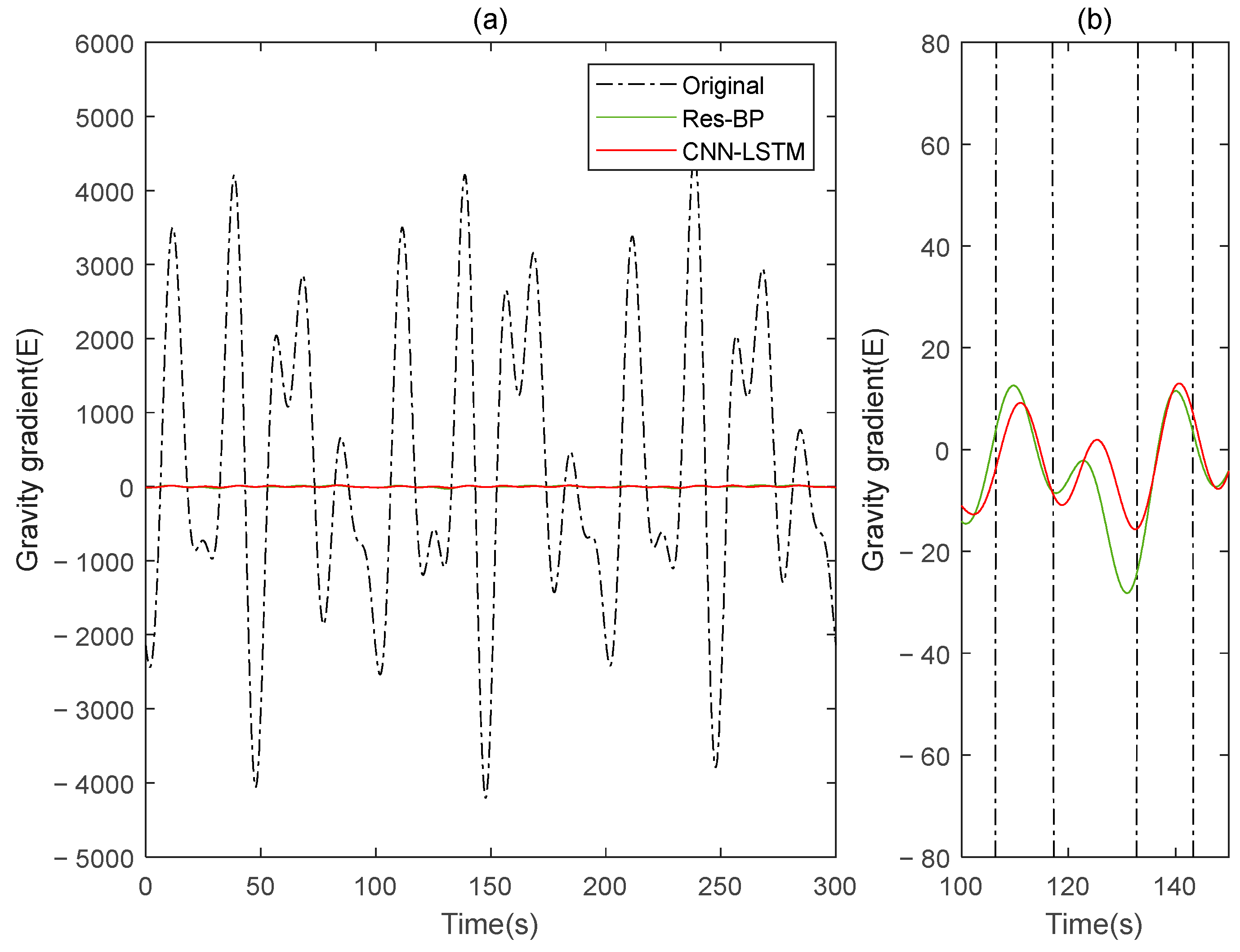

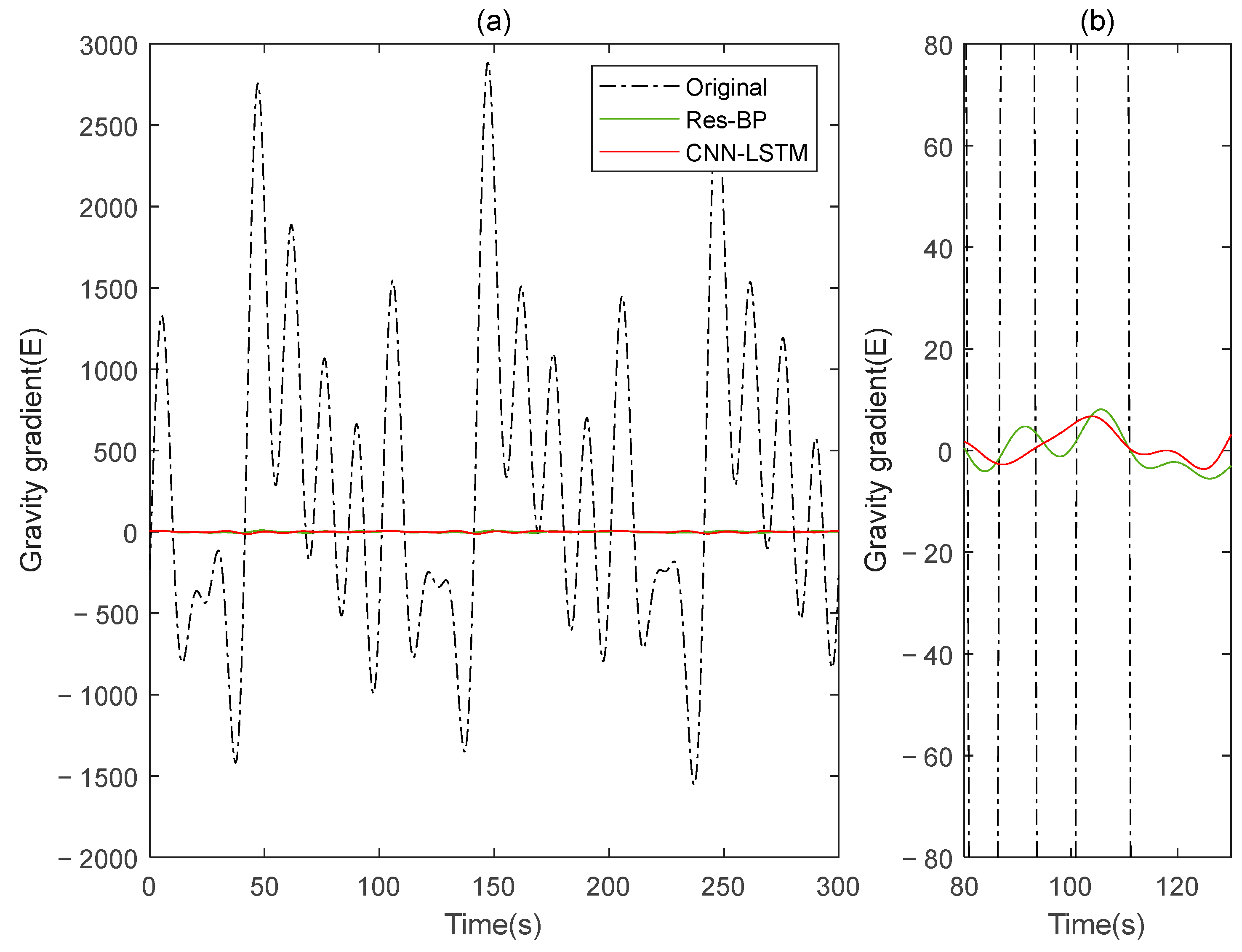

Figure 21 and Figure 22 show the two gravity gradient components before and after compensation, respectively. They show that the deep learning approach using the CNN-LSTM effectively suppresses the dynamic noise of the gravity gradient. The compensation effect of this method outperforms that of the MLP neural network.

Table 4 provides a summary of the final experimental results. The results demonstrate that the post-compensation method described in this paper effectively reduces noise in the measured data. Compared with traditional deep learning methods such as MLP, the improvement rate is significantly higher, indicating more accurate gravity gradient compensation. For one gradient component, the STD value before compensation was 867.2312; after MLP compensation, it was 53.8435, and after CNN-LSTM compensation, it was 37.9429. The corresponding IR values are 16.1065 and 22.8562, respectively. For the other gradient component, the STD value before compensation was 457.4597; after MLP compensation, it was 24.4546, and after CNN-LSTM compensation, it was 15.7630. The corresponding IR values are 18.7065 and 29.0211, respectively.

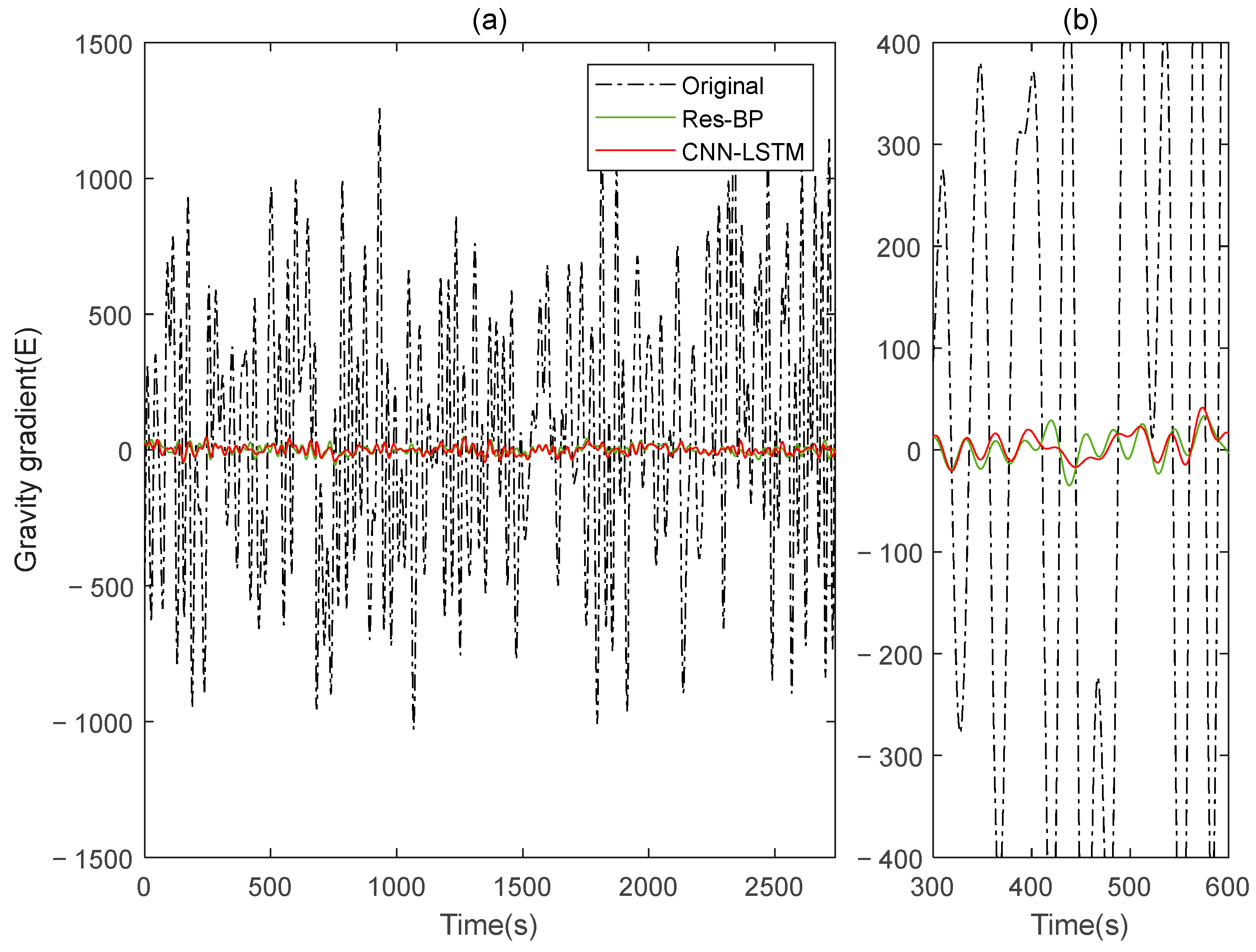

Similarly, in the measured data, we also added a comparison with the Res-BP, and the results are shown below.

In the measured data, the CNN-LSTM still demonstrates better performance than Res-BP, as shown by the results in Figure 23, Figure 24, Figure 25, and Table 5. This highlights the effectiveness of the method proposed in this paper.