1. Introduction

Schlieren imaging, a non-invasive optical sensing technique, has become an essential diagnostic tool for visualizing optical inhomogeneities in transparent media such as fluid flows, acoustic fields, and combustion processes. Schlieren images play a central role in modern image-based analysis pipelines, enabling researchers to extract spatial features and track flow structures [1]. Compared with visualization techniques based on particle injection or fluorescence tracking, schlieren imaging requires no additional substances in the fluid, so it allows direct observation of refractive index gradients within the flow and captures the flow field more comprehensively. However, the raw data from schlieren sensors are often affected by noise, limited contrast, and overlapping structures, which makes accurate interpretation difficult. Consequently, the development of robust image processing and analysis methods has become a key research focus in this field [2].

Before the adoption of modern machine learning-based techniques, researchers explored a range of traditional image processing strategies to extract meaningful features from schlieren images. For example, Arnaud et al. [3] introduced divergence- and curl-based regularization in optical flow estimation for schlieren velocimetry, integrating physical constraints into motion analysis from image data. Goldhahn et al. [4] utilized background schlieren imaging to reconstruct the density field downstream of a straight blade in a wind tunnel by inferring the refractive index and density distribution from gray levels, achieving an error within 4%. Cammi et al. [5] proposed an automatic recognition method for oblique shock waves and simple wave structures based on image processing. By preprocessing and applying the Hough transform to extract linear features in the flow field, and combining physical constraints to eliminate anomalies, they successfully detected local shock wave structures in low-contrast schlieren images. Zheng et al. [6] focused on the problem of edge extraction in schlieren images, systematically reviewed and optimized the active contour model, and combined Fast Fourier Transform (FFT) with multi-scale convolution strategies to improve the stability of boundary recognition in noisy environments. These early methods marked key milestones where image processing served as the critical bridge between visualization and quantitative interpretation.

Beyond conventional feature detection and edge enhancement, mode decomposition techniques have also been widely used for analyzing schlieren image sequences. The concept of the Reduced-Order Model (ROM), first introduced by Dowell et al. [7], aims to extract dominant modal structures from high-dimensional data. Among ROM techniques, Proper Orthogonal Decomposition (POD) and Dynamic Mode Decomposition (DMD) are commonly used tools for dimensionality reduction and spatiotemporal pattern analysis. POD projects data onto optimal orthogonal bases, while DMD captures dynamic behaviors from time-resolved image sequences [8,9]. These techniques have been adopted in schlieren flow diagnostics; for example, Datta et al. [10] applied POD to study shock-boundary layer interactions, and Berry et al. [11] used POD and DMD to analyze nozzle flow dynamics. However, these traditional methods inherently assume linearity and often fail to capture sharp edges and local nonlinearities in raw schlieren images. Even when dozens of POD modes are used in high Reynolds number cases, the proportion of captured energy remains limited [12].

In recent years, with the rise of deep learning, Autoencoders (AEs) have come to be regarded as a more advanced nonlinear method for low-dimensional feature representation [13,14]. AEs automatically extract low-dimensional features through nonlinear mappings and can effectively capture nonlinear patterns in complex flow fields. Typically, a neural network encoder performs feature reduction and a decoder reconstructs the flow field. Compared with traditional POD or DMD, autoencoders are better suited to capturing the nonlinear dynamics of complex flow fields, providing new opportunities for flow field mode decomposition and feature extraction. In 2019, Lee et al. [15] first introduced the Convolutional Autoencoder (CAE) into the field of fluid mechanics. Compared with linear ROM methods such as POD, the combination of an autoencoder and Long Short-Term Memory (LSTM) improved the performance of flow field reconstruction and prediction, with only a marginal increase in computational cost. This basic framework has been widely used in subsequent studies. For instance, in 2021, Ahmed et al. [16] proposed a nonlinear POD method, which utilized a CAE to learn the nonlinear projection of the manifold space and successfully analyzed the dynamic characteristics of periodic and chaotic flows. In 2023, Zhang [17] combined a CAE with a multi-head attention-based LSTM network to predict the unsteady flow field on an airfoil. In 2024, Xu et al. [18] developed a CAE-RNN hybrid architecture, achieving 99% dimensionality reduction in porous media turbulence research through nonlinear feature compression and using a Recurrent Neural Network (RNN) to predict temporal evolution.

With the increasing complexity of application scenarios, multi-scale architectural design has become one direction for improvement [19,20,21]. In 2025, Lu et al. [22] proposed a CAE architecture integrating multi-scale Convolutional Block Attention Modules (CBAM) and added a term based on the Navier–Stokes equations to the loss function, so that the predicted flow respects physical conservation laws. In 2025, Teutsch et al. [23] developed SMS-CAE, which enhanced feature visualization capabilities through a sparse multi-scale design, achieving a 95% modal matching rate in identifying turbulent coherent structures while maintaining 89% reconstruction accuracy, with a 26% improvement in modal identification rate compared to traditional DMD. In 2025, Beiki et al. [24] developed an attention-enhanced autoencoder combining adaptive attention mechanisms and involution layers, achieving 98.7% accuracy in reconstructing the transient flow around a cylinder, a 12% improvement over the standard CAE. Recent efforts have also explored hybrid architectures, such as fully connected–convolutional–temporal networks [25,26], and parametric modeling with ResNet-based latent space mapping [27,28], to enhance flow prediction under varying conditions.

As a generative model, the Variational Autoencoder (VAE) builds latent space representations of flow field data within a probabilistic framework, demonstrating unique advantages in nonlinear reduced-order modeling [29,30,31]. Unlike traditional autoencoders, the VAE achieves nonlinear compression of flow field features by imposing distribution constraints on the latent variables, which also makes it possible to quantify uncertainties in flow structures. In 2022, Eivazi et al. [32] applied the β-Variational Autoencoder (β-VAE) to urban environment flow field data from large-eddy simulations (LES), capturing 87.36% of the energy with five modes, far exceeding the 32.41% captured by POD. In 2024, Solera-Rico et al. [33] combined the β-VAE with a Transformer, achieving nearly orthogonal latent space representations in two-dimensional viscous flow prediction, with feature modes similar to those of POD but with significantly improved computational efficiency. In the same year, Wang et al. [34] optimized latent space decoupling by adjusting the β coefficient, reducing the turbulence energy spectrum reconstruction error to one-third of that of traditional POD. This result verified the adaptability of the β-VAE to chemically reactive flow fields in the prediction of hydrogen fuel combustion field parameters. To meet the requirements of parametric modeling, Lee et al. [35] developed LSH-VAE, which uses a hierarchical structure and a hybrid loss function to achieve parameter interpolation prediction in fluid-solid coupling problems, reducing prediction error by 26% compared to traditional methods. Its spherical linear interpolation strategy provides a novel approach for cross-geometric topology prediction.

By leveraging probabilistic generative mechanisms, VAEs can effectively capture quasi-ordered structures and non-stationary characteristics in flows, demonstrating significant potential in flow field data reduction and feature extraction. However, when directly applied to the data from schlieren experiments, traditional VAEs struggle to focus on the key physical features dominated by refractive index gradients in schlieren images. Additionally, in dealing with complex flow features, the latent variable space representations are often ambiguous, making it difficult to effectively decouple physical modes and affecting the precise reconstruction of flow structures and temporal prediction performance. This issue is particularly pronounced in small datasets with significant noise interference in schlieren images. In contrast, linear decomposition methods like POD, although capable of providing a low-dimensional, interpretable representation of flow fields in terms of energy ranking, are limited in capturing nonlinearities, local details, and high-gradient regions. This deficiency leads to insufficient accuracy in reconstructing non-stationary and detail-rich flow structures, and the noise inherent in schlieren images further exacerbates this problem. In fact, when processing the dataset in this study, the energy captured by the first 10 modes of POD was less than 30% of the total energy.

To address challenges in processing schlieren sensor data, we propose a novel Spatial-Frequency-Scale VAE (SFS-VAE) framework based on the β-VAE architecture, which integrates frequency domain enhancement with spatial multi-scale mechanisms. The core contribution of this framework involves two specialized modules:

(1) The Progressive Frequency-enhanced Spatial Multi-scale Module (PFSM) combines discrete Fourier transform, adaptive Gaussian frequency masking, and channel-grouped spatial attention to dynamically partition the frequency spectrum and enhance the response of key frequency bands. This design improves sensitivity to fine-scale flow structures and mitigates feature loss commonly observed in traditional convolutional autoencoders during image reconstruction.

(2) The Feature-Spatial Enhancement Module (FSEM) integrates gradient-driven edge enhancement, local mean-variance adaptive feature modulation, and physical priors (utilizing gradient-averaged schlieren images for guidance), enabling fine feature optimization in key regions such as jets and edge structures.

By effectively decoupling and enhancing features, FSEM, together with PFSM, forms a robust VAE framework, SFS-VAE. The experimental results demonstrate substantial improvements in low-dimensional flow reconstruction, temporal prediction accuracy, and the interpretability of the physical phenomena captured by the sensor.

2. Methods

Schlieren images, as grayscale representations of flow-induced refractive index gradients, carry unique characteristics that pose distinct challenges for image processing and reduced-order modeling. These images primarily capture the spatial distribution of optical inhomogeneities in transparent media, where subtle grayscale variations correspond to gradients in density or temperature. Key features include:

(1) Structural duality: This involves a relatively stable mainstream region with smooth grayscale transitions and high-gradient jet regions with sharp edges and complex vortical structures, which are critical for understanding flow dynamics.

(2) Noise and contrast limitations: Raw images often suffer from low signal-to-noise ratios and uneven contrast, making it difficult to distinguish overlapping flow structures.

(3) Multi-scale and multi-frequency components: Flow features span a wide range of spatial scales (from large-scale mainstream to small-scale turbulent eddies) and frequency bands (from low-frequency smooth regions to high-frequency edge perturbations).

These characteristics demand specialized algorithms that can simultaneously enhance frequency-domain details, capture multi-scale spatial features, and focus on high-gradient key regions, which motivates the development of the SFS-VAE proposed in this study.

2.1. CNN-Based β-Variational Autoencoders

To achieve an efficient reduced-order model for instantaneous schlieren flow field images, we design a neural network architecture based on the β-VAE. The VAE is a deep generative model that introduces a probabilistic modeling framework with latent variables, enabling it to effectively learn the low-dimensional latent structure of image data. Compared with traditional deterministic autoencoders, the VAE exhibits superior generalization ability and feature representation capability, and it performs well in capturing the distribution characteristics of complex data. The β-VAE builds on the traditional VAE by introducing a weight factor β that strengthens the constraint on the latent space distribution, thereby offering greater flexibility and better performance in balancing data compression and reconstruction fidelity [36].

The base network architecture in this study follows the structure proposed by Eivazi et al. [32]. Their work added multiple convolutional layers to construct deeper encoder and decoder structures, significantly enhancing the model’s capacity for nonlinear feature extraction and structural representation. Additionally, they proposed a complete framework for latent space modeling and flow field prediction. In our implementation, the encoder network contains six convolutional blocks, each using a 3 × 3 kernel and a stride of 2 for the convolution operation, followed by the ELU activation function to improve the nonlinear fitting ability. Through successive downsampling, the spatial resolution is gradually reduced while the number of channels increases. The resulting feature maps are flattened into a one-dimensional vector through the Flatten layer and mapped to the latent space by a fully connected layer. Therefore, the input image x is compressed into a latent feature vector z after passing through the encoder. The encoder outputs two vectors, μ and log σ², representing the mean and logarithmic variance of the latent distribution, respectively. To allow random sampling of the latent variables while keeping the model trainable, the reparameterization trick is used to generate stochastic latent variables:
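The display equation for this step is not reproduced in the present text. As a reference point, the reparameterization trick in its standard form is written below; the symbols ε (auxiliary Gaussian noise) and ⊙ (element-wise product) are notation assumed here and may differ from the authors' own.

```latex
z = \mu + \sigma \odot \varepsilon,
\qquad
\sigma = \exp\!\left(\tfrac{1}{2}\log\sigma^{2}\right),
\qquad
\varepsilon \sim \mathcal{N}(0, I)
```

Because the randomness is isolated in ε, gradients can flow through μ and log σ² during backpropagation.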

The latent variable z is fed into the decoder for layer-by-layer upsampling and image reconstruction. First, it is transformed from a low-dimensional latent vector into a high-dimensional feature map using a fully connected layer followed by an unflatten operation. A six-layer deconvolutional architecture, symmetric to the encoder and employing ELU activation functions, is then used to incrementally reconstruct the image to its original size:

We adopt the widely used β-VAE loss function, which is expressed as follows:
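The loss equation is missing from this text. The commonly used β-VAE objective is sketched below under stated assumptions: a generic reconstruction term (for example, a mean squared error between input and reconstruction), a standard normal prior, and a latent dimension d. The exact reconstruction term and normalization used by the authors are not given here.

```latex
\mathcal{L} = \mathcal{L}_{\mathrm{rec}}
  + \beta \, D_{\mathrm{KL}}\!\left(q(z \mid x) \,\|\, \mathcal{N}(0, I)\right),
\qquad
D_{\mathrm{KL}} = -\frac{1}{2} \sum_{i=1}^{d}
  \left(1 + \log\sigma_i^{2} - \mu_i^{2} - \sigma_i^{2}\right)
```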

Here, β is a tunable weight term used to balance the trade-off between reconstruction and regularization.

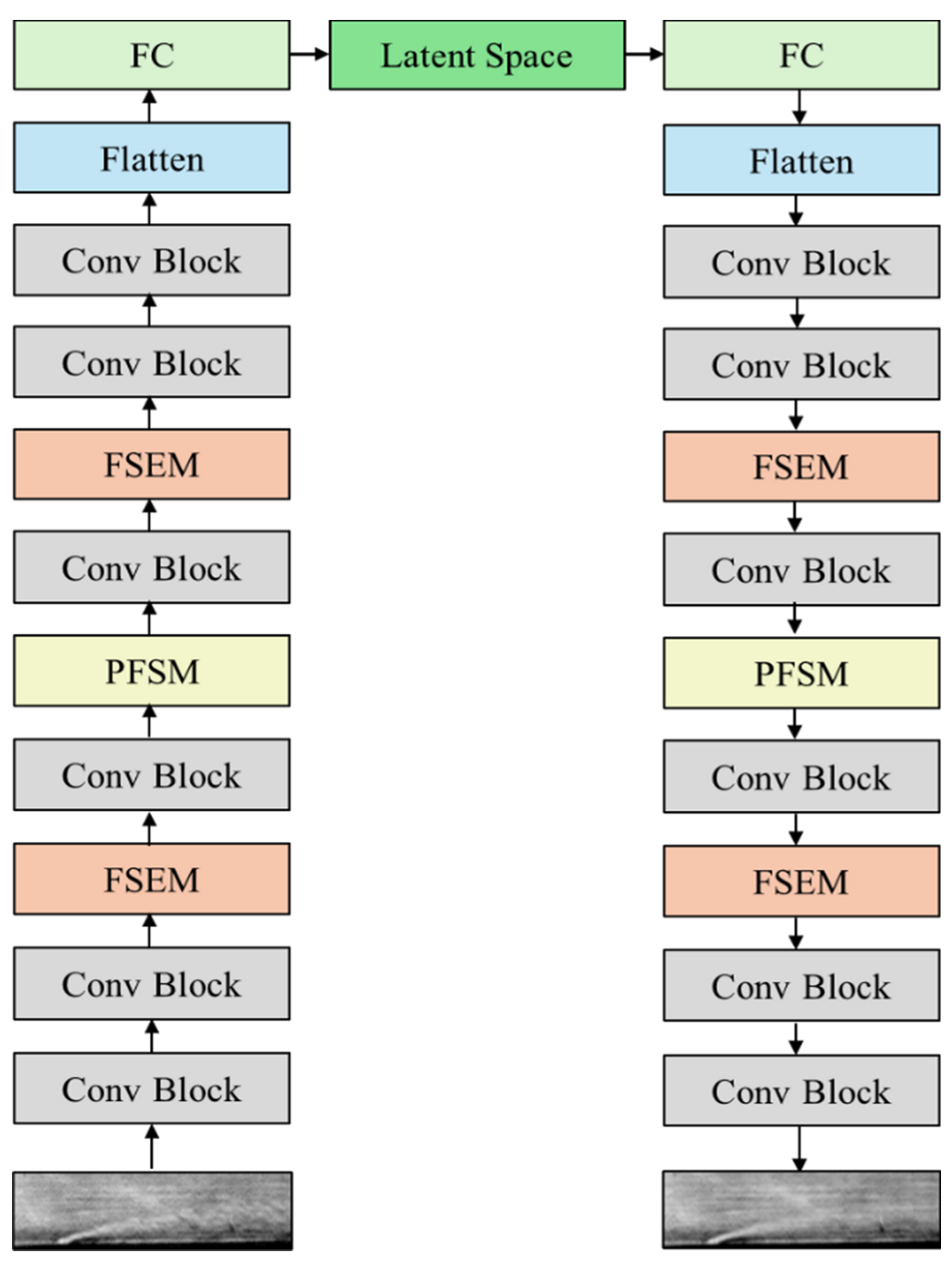

To further enhance the model’s ability to represent complex instantaneous flow field structures captured by schlieren imaging, we extend and optimize the base architecture. Specifically, we introduce a Feature-Spatial Enhancement Module (FSEM) after the second and fourth convolutional layers of the encoder and a Progressive Frequency-enhanced Spatial Multi-scale Module (PFSM) after the third convolutional layer, thereby simultaneously improving the network’s feature capture capabilities in both the spatial and frequency domains. The decoder is constructed symmetrically, and the specific network architecture is illustrated in Figure 1.
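To make the downsampling path concrete, the following sketch traces the feature-map size through six stride-2, 3 × 3 convolutions. It assumes a padding of 1 (not stated in the text) and reads the 320 × 128 crop from Section 3 as height × width; the helper names are hypothetical.

```python
def conv_out(size, kernel=3, stride=2, padding=1):
    """Output length of one convolution along a single axis."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_shapes(height, width, n_blocks=6):
    """Feature-map sizes, starting with the input and then after each block."""
    shapes = [(height, width)]
    for _ in range(n_blocks):
        h, w = shapes[-1]
        shapes.append((conv_out(h), conv_out(w)))
    return shapes


# Assumed input: the cropped 320 x 128 frame described in Section 3.
shapes = encoder_shapes(320, 128)
for block, (h, w) in enumerate(shapes):
    print(f"after block {block}: {h} x {w}")
```

Under these assumptions the FSEM would operate on 80 × 32 and 20 × 8 maps, the PFSM on a 40 × 16 map, and the final 5 × 2 map would be flattened before the fully connected layer.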

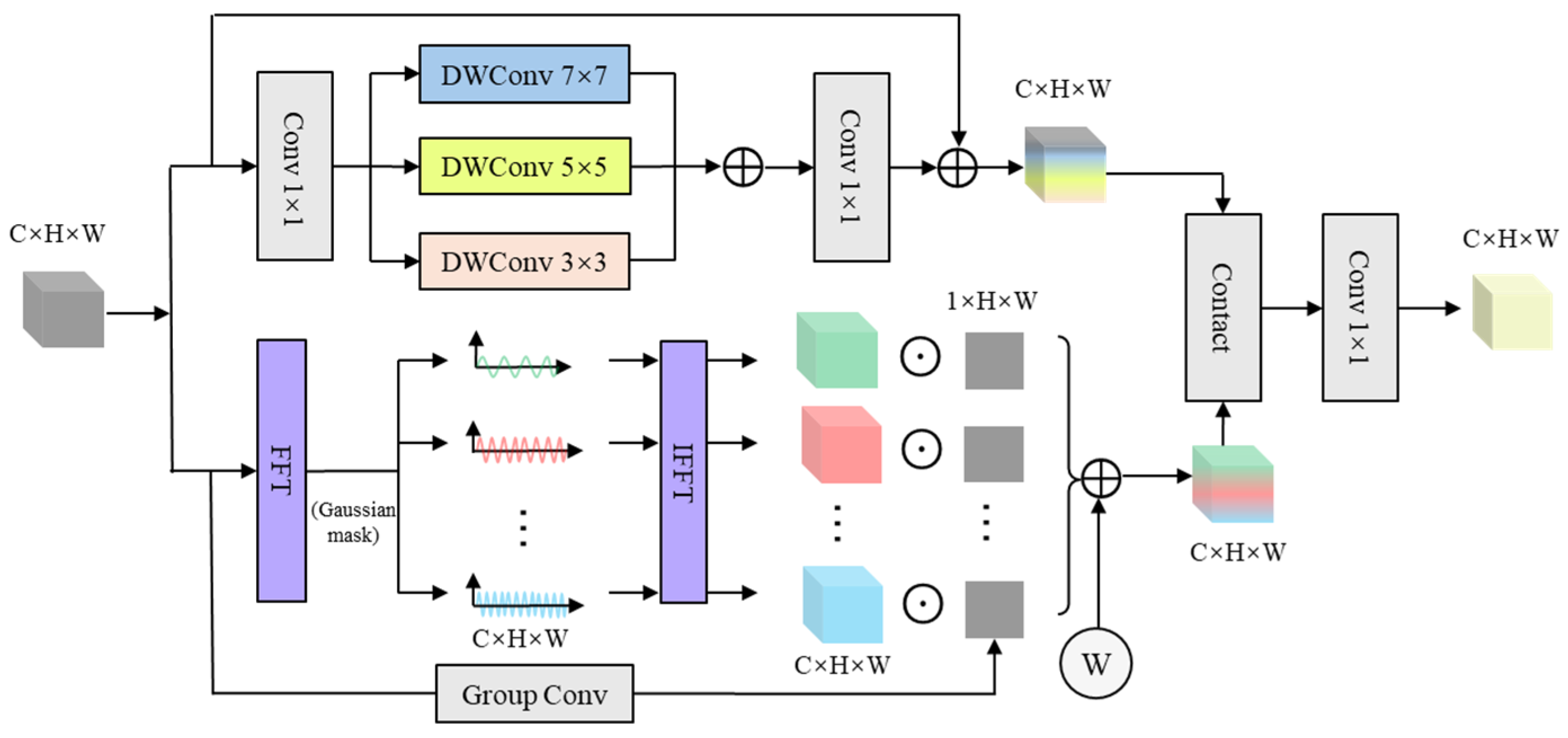

2.2. Progressive Frequency-Enhanced Spatial Multi-Scale Module

To fully exploit the diversity of flow structures in schlieren images across frequency and spatial scales, we design a Progressive Frequency-enhanced Spatial Multi-scale Module (PFSM). This module consists of two parallel branches: (1) The frequency enhancement branch, which uses the Fourier transform combined with an approximate separable Gaussian frequency mask to achieve adaptive continuous division of the spectrum and enhance the representation of flow features in the frequency domain. (2) The spatial multi-scale convolution aggregation branch, which leverages the multi-scale combination of depthwise separable convolutions to extract rich spatial scale information. Finally, the features from the two branches are concatenated and fused to comprehensively capture the spectral and spatial features of the flow structure. This improves the recognition and generalization performance for complex flow fields. The architecture of the PFSM is shown in Figure 2.

In the frequency domain enhancement branch, let the output feature map of the intermediate layer of the encoder be X. A two-dimensional Fast Fourier Transform is used to calculate its complex frequency spectrum. To construct a trainable frequency band division mechanism, instead of using the traditional discrete hard-cut segmentation mask [37], an approximate separable Gaussian frequency response function is adopted, which takes the form of:

Here, the Gaussian centers give the frequency center position of each band, and the Gaussian widths give the frequency band width. All these parameters are trainable, enabling the model to adaptively select the most informative frequency regions during training without relying on manually defined annular frequency bands. Compared with using a complete Gaussian mask, this module significantly reduces computational costs while still maintaining the smoothness and continuity of the spectral division. The spectral mask is applied to the frequency spectrum through element-wise multiplication, and then an Inverse Fast Fourier Transform (IFFT) is performed to recover the spatial domain features corresponding to each frequency band:
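The mask formula itself is not reproduced in this text. One plausible reading of a "separable Gaussian frequency response" is the product of two one-dimensional Gaussians, one per frequency axis. The sketch below illustrates that reading with fixed (not trained) parameters on a centered, normalized frequency grid; the function names, the parameter names `mu_u`, `mu_v`, `sigma_u`, `sigma_v`, and the grid convention are all assumptions.

```python
import math


def gaussian_1d(n, center, width):
    """1-D Gaussian response over n normalized frequencies in [-0.5, 0.5)."""
    freqs = [(k - n // 2) / n for k in range(n)]
    return [math.exp(-((f - center) ** 2) / (2.0 * width ** 2)) for f in freqs]


def separable_mask(h, w, mu_u, mu_v, sigma_u, sigma_v):
    """2-D mask formed as the outer product of two 1-D Gaussians."""
    g_u = gaussian_1d(h, mu_u, sigma_u)   # h exponentials
    g_v = gaussian_1d(w, mu_v, sigma_v)   # w exponentials
    return [[gu * gv for gv in g_v] for gu in g_u]


# Low-frequency band centered on the zero frequency (illustrative values).
mask = separable_mask(8, 8, mu_u=0.0, mu_v=0.0, sigma_u=0.1, sigma_v=0.2)
```

The separable form needs only h + w exponentials instead of h × w, which is one way to understand the cost reduction mentioned above. In the module, the centers and widths would be learnable tensors and the mask would multiply the FFT of X before the inverse transform.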

To enhance the separation ability of local disturbance structures, a shared channel group spatial attention mechanism is introduced in each frequency band branch. Specifically, the original feature map X is processed using group convolution to generate a spatial attention weight map:

Then, a channel-wise broadcast element-wise multiplication is performed between the spatial weight map and the original frequency band feature map to enhance the regions that are sensitive to flow structures. This results in the modulated response:

Subsequently, through the weighted fusion of the frequency bands, the spatial feature expressions of all frequency band responses are integrated to form the final frequency domain enhanced feature output:

Here, the fusion weight assigned to each frequency band is a learnable coefficient, allowing the model to adaptively determine the contribution of each frequency band to the final feature representation.

To reflect the diverse spatial-scale characteristics of flow structures in schlieren images, a multi-scale convolutional aggregation branch in the spatial domain is introduced. This branch also takes the output feature map X from the intermediate layer of the encoder as input. It first performs a 1 × 1 convolution for dimensionality transformation to obtain an intermediate feature map. Then, depthwise separable convolutions (DWConv) with three kernel sizes (3 × 3, 5 × 5, 7 × 7) [38] are used to extract spatial features at different scales:

The feature maps at each scale are then summed and merged through a pointwise convolution (1 × 1). Finally, a residual connection is introduced to stabilize training and enhance the feature representation capability, resulting in the output of multi-scale aggregated features in the spatial domain:

After the feature extraction of the two branches is completed, the frequency domain and spatial domain features are concatenated along the channel dimension, then fused and dimensionally reduced through pointwise convolution (1 × 1 convolution) to obtain the output feature after fusion.

This two-branch design captures and combines features from schlieren images in both frequency and spatial domains, making the reconstruction more accurate.
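The choice of depthwise separable convolutions for the 3 × 3, 5 × 5, and 7 × 7 kernels can be motivated by a simple parameter count. The sketch below compares a standard convolution with a depthwise convolution followed by a 1 × 1 pointwise convolution, ignoring biases; the channel count of 64 is a hypothetical value, not taken from the paper.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a dense k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a k x k depthwise conv plus a 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out


channels = 64  # hypothetical channel count for illustration
for k in (3, 5, 7):
    dense = standard_conv_params(channels, channels, k)
    separable = depthwise_separable_params(channels, channels, k)
    print(f"{k} x {k}: dense={dense}, separable={separable}")
```

The gap widens with kernel size, which is why large kernels are affordable in the depthwise form.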

2.3. Feature-Spatial Enhancement Module

To more accurately capture the local details and physical structure contours of the jet region, we design a Feature-Spatial Enhancement Module (FSEM), which improves local details by using edge-based weights, applying attention to important areas, and spatially fusing external physical priors. The specific architecture is shown in Figure 3.

The module takes an intermediate-layer feature map of the encoder as input. To highlight the details and edge structures of key flow regions such as vortices, shear layers, and boundary layers in the schlieren image, we introduce an edge enhancement mechanism based on residual gradient features. The input features are subjected to a 3 × 3 average pooling operation to obtain a locally smoothed mean feature map, and then a residual structure is used to extract fine-grained edge feature responses [39]:

This residual operation can effectively capture subtle boundary variations in the schlieren image and suppress the interference of large-scale smooth regions. The edge response is processed through a 1 × 1 convolution, Batch Normalization, and Sigmoid activation to generate a spatial edge weight map:
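The residual step described above, input minus its 3 × 3 local average, can be illustrated on a toy image. The sketch below is a plain-Python illustration and not the authors' layer: it averages over in-bounds neighbors only (a border-handling assumption) and omits the subsequent 1 × 1 convolution, Batch Normalization, and Sigmoid.

```python
def avg_pool_3x3(img):
    """3 x 3 average pooling with stride 1, averaging in-bounds neighbors only."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = [img[a][b]
                    for a in range(max(0, i - 1), min(h, i + 2))
                    for b in range(max(0, j - 1), min(w, j + 2))]
            out[i][j] = sum(vals) / len(vals)
    return out


def edge_residual(img):
    """Edge response: the input minus its locally smoothed version."""
    smooth = avg_pool_3x3(img)
    return [[img[i][j] - smooth[i][j] for j in range(len(img[0]))]
            for i in range(len(img))]


# Toy image with a vertical step edge between columns 2 and 3.
step = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edge = edge_residual(step)
```

On this toy input the response vanishes in the flat regions and is nonzero only in the two columns adjacent to the step, which matches the stated goal of suppressing large smooth areas while keeping boundaries.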

We further propose a local mean-variance feature enhancement method, which utilizes the spatial mean and variance of feature maps and employs a small number of training parameters to achieve adaptive spatial feature modulation:

Here, the statistics used are the spatial mean and standard deviation of the feature map, and the two remaining coefficients are learnable parameters. Subsequently, the enhanced spatial features are combined with the edge weights:

To enhance the spatial representation ability of local structures in images, the previously enhanced features are further processed by the Localized Soft Pooling Attention (LIA) mechanism, which was originally proposed by Wang et al. in the field of image super-resolution [40]. First, a 1 × 1 convolution is applied to the feature map to reduce the channel dimension. Then, a 3 × 3 SoftPooling with exponentially weighted aggregation is used to obtain the neighborhood information:

Here, the aggregation runs over the local neighborhood window centered at each spatial position.
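The SoftPooling equation is missing from this text. In its usual definition, each value in the window is weighted by its own exponential, so the output lies between the window mean and the window maximum. The sketch below applies that definition to a single flattened window; it is a minimal illustration with a hypothetical function name, not the LIA implementation.

```python
import math


def soft_pool(window):
    """Exponentially weighted average of the values in one pooling window."""
    weights = [math.exp(v) for v in window]
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, window)) / total


# A flattened window with one strong activation among weak ones.
window = [0.0, 0.0, 0.0, 2.0]
pooled = soft_pool(window)
mean_value = sum(window) / len(window)
max_value = max(window)
print(mean_value, pooled, max_value)
```

Compared with average pooling, strong activations dominate the result; compared with max pooling, weaker neighbors still contribute, which suits the faint disturbances typical of schlieren images.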

Subsequently, a 3 × 3 convolution with a stride of 2, followed by a Sigmoid function, is utilized to obtain the local spatial weight map. To further differentiate between significant regions and weak disturbances, a simple gating mechanism is introduced. The final spatial enhanced feature representation is expressed as:

To integrate physical prior information more effectively, the module also introduces spatial attention guidance derived from external physical fields. Because the gray value of the schlieren image correlates with the density gradient, we utilize the average schlieren gradient map, which is obtained through external preprocessing. This map is bilinearly interpolated to match the spatial dimensions of the early-stage features and is fused via an additional spatial attention mechanism:

This fusion strategy explicitly injects flow-specific priors into the feature extraction process, thereby enhancing the network’s ability to capture fine flow details and edge structures. As a result, the model’s reconstruction accuracy and generalization performance in jet regions are significantly improved.

3. Construction of Datasets

In the design of modern high-bypass gas turbines, increasing demands on blade material temperature resistance have been imposed to improve overall efficiency. Consequently, exploring efficient cooling technologies to reduce the base temperature of turbine blades is essential. Film cooling, as an efficient cooling method in aeroengines, has been widely applied in turbine design. Studying the flow and cooling mechanism of film cooling jets under different jet parameters helps to elucidate their influence on gas-thermal coupling and cooling effect.

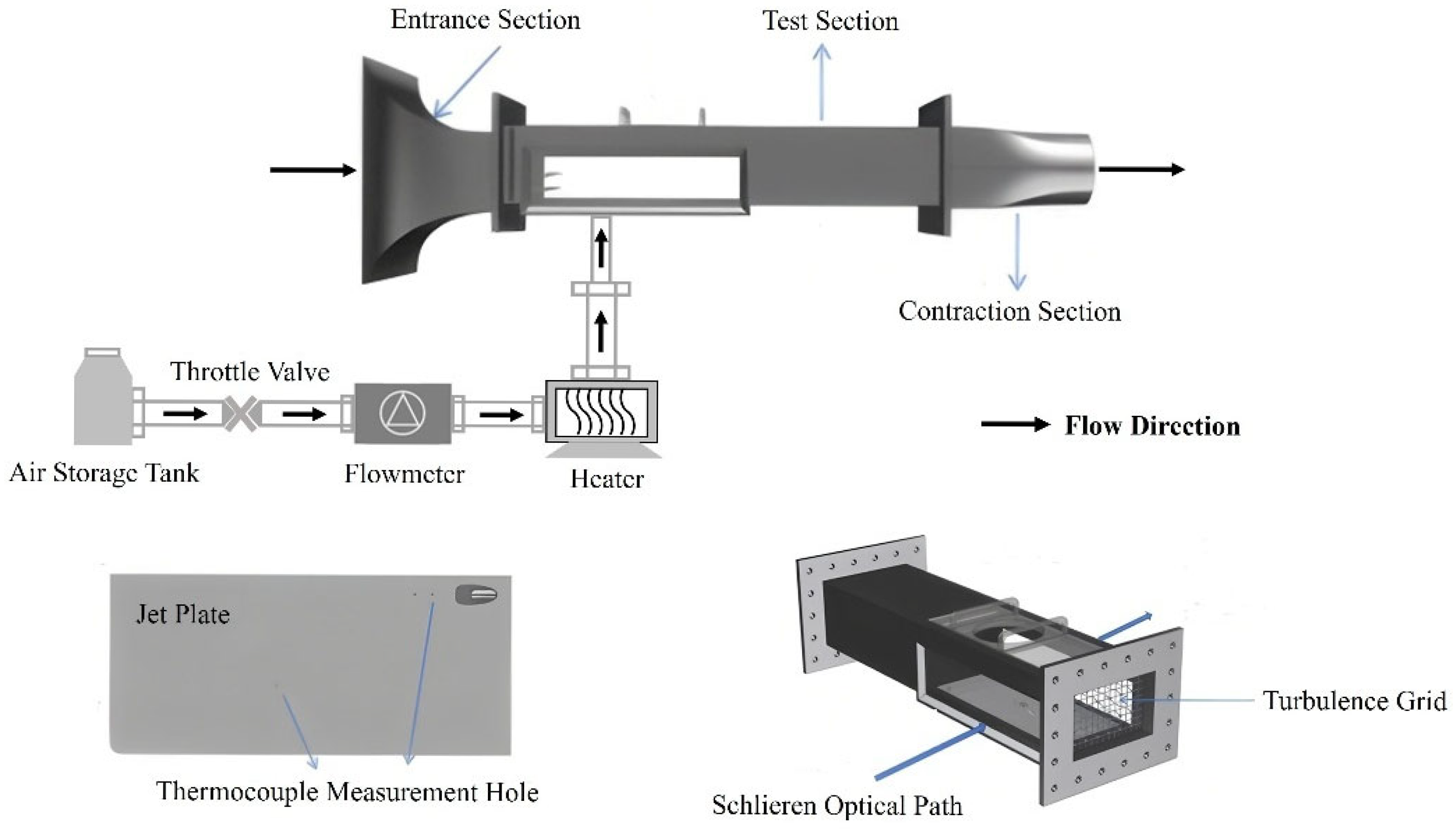

The training data employed in this study were obtained from the film cooling jet experiments conducted in a suction-type flat plate wind tunnel at Nanjing University of Aeronautics and Astronautics. The flat plate jet experiment system mainly consists of the main flow system and the secondary flow system. In actual turbines, film cooling jets are generally in the form of a combination of hot gas in the main flow and cold gas in the jet. However, due to the limited experimental conditions in university settings, the current experiment adopts cold gas as the main flow and hot gas in the jet. Although this setup may cause a decrease in jet density and an increase in jet velocity under the same blowing ratio, affecting the wall-attachment phenomenon, it still effectively captures the essential characteristics of gas-thermal coupling in film cooling scenarios.

The structure of the flat plate jet experiment system is shown in Figure 4, and the main modules of the suction-type flat plate wind tunnel include:

Square inlet contraction section: The inlet is equipped with fine grids, which can effectively intercept dust, reduce the probability of equipment failure, and minimize the interference from the external environment. The mainstream turbulence intensity is controlled within 0.8% to 1%.

Test section: The overall size of the wind tunnel test section is moderate. A flat plate with holes is installed at the bottom as the test piece, with the holes connected to a series of devices for generating jet flows. Sensors are arranged on the plate to monitor turbulence intensity and surface temperature in real time. For schlieren imaging, optical glass panels are installed on both sides of the test section, and a sealed plate is installed on the top, with reserved holes for installing hot-wire probes and observation windows.

Square-to-round transition and suction pipe: These components ensure stable fluid inflow and outflow, forming a closed circulation loop with the compressor.

In this experiment, the main adjustable jet parameters are the jet blowing ratio (M) and the jet temperature ratio (Tr). Their definitions involve the density, velocity, temperature, and mass flow rate of the jet, the cross-sectional area of the jet orifice, and the density, velocity, and total temperature of the mainstream in the test section. The mass flow rate of the jet is controlled and adjusted by a fixed orifice and a flowmeter, while the jet temperature is adjusted by a heater. To facilitate precise control and real-time monitoring, a compressor with a maximum working pressure of 0.8 MPa is used as the gas source, in combination with a throttle valve and a flowmeter to achieve continuous regulation.
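The defining equations are not reproduced in this text. In the usual film-cooling convention, and consistent with the quantities listed above, the two ratios can be written as follows. The subscripts j (jet) and ∞ (mainstream) and the symbols for mass flow rate and orifice area are notation assumed here, and may differ from the authors' own.

```latex
M = \frac{\rho_j U_j}{\rho_\infty U_\infty}
  = \frac{\dot{m}_j}{A_j \, \rho_\infty U_\infty},
\qquad
Tr = \frac{T_j}{T_\infty}
```

With a heated jet and a cold mainstream, as in this experiment, this convention gives Tr greater than 1.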

Data acquisition was carried out using a conventional “Z”-shaped schlieren system configuration [41], as shown in Figure 5. The system utilized a Phantom VEO 710 L high-speed camera (CMOS sensor, pixel size 20 μm, maximum resolution 800 × 1280) to capture transient flow field images. Under two mainstream Mach numbers (0.1 and 0.35), a total of 20 operating conditions were tested with jet blowing ratios M = 0.5–2.5 and jet temperature ratios Tr = 1.2 or 1.3. During acquisition, the wind tunnel flow was stabilized by controlling stagnation pressure, temperature, and humidity, and optical path vibration was minimized through mechanical isolation. The “Z” optical setup was meticulously aligned, with the knife-edge position optimized to enhance density-gradient contrast. The high-speed camera was set at 10,204 Hz and 512 × 512 resolution, providing sufficient temporal and spatial fidelity for the proposed SFS-VAE method. Raw schlieren frames were processed via Gaussian filtering to suppress high-frequency noise and contrast enhancement to highlight shear layers and vortex structures, followed by cropping to 320 × 128 pixels to remove non-flowfield elements and match model input requirements. These measures ensured high signal-to-noise ratio, sharpness, and contrast in the processed data, supporting accurate feature decomposition, reconstruction, and prediction in later analyses.

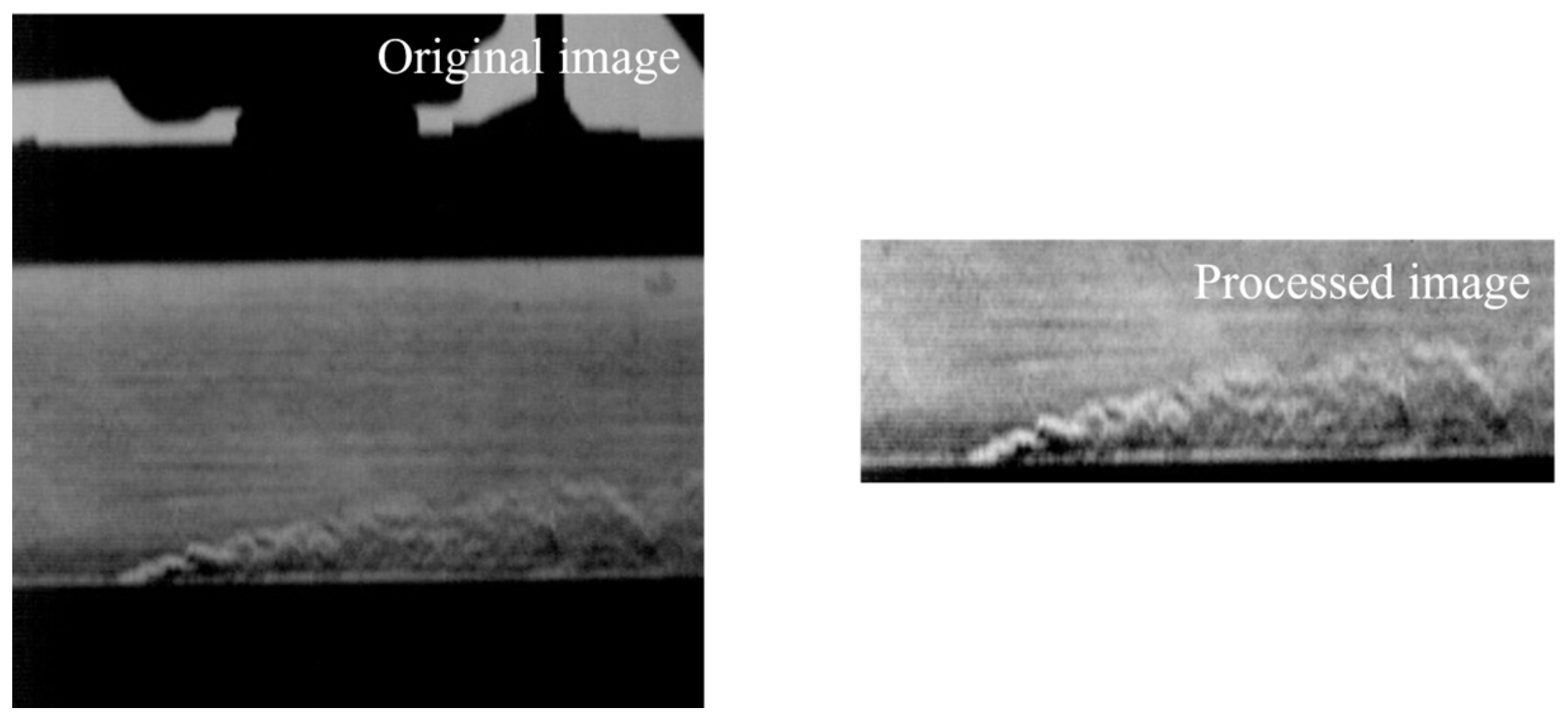

Figure 6 presents both the raw schlieren image and the corresponding processed image from one experiment. In this case, the original image quality was sufficiently high that Gaussian filtering was applied with a 1 × 1 kernel (effectively no smoothing), and the contrast was enhanced by a linear scaling factor of 1.6 to improve the visibility of shear layers and vortex boundaries. In the lower part of the flow field, the jet structure is clearly visible, while weaker transverse mainstream features appear in the background.

4. Results and Discussion

This section evaluates the performance of the proposed reduced-order model in the reconstruction and prediction of the flow field from schlieren image sequences. The study used the dataset from the film cooling jet schlieren experiment with a Mach number of 0.1, a temperature ratio of 1.3, and a blowing ratio of 2.5, comprising a total of 3120 images. The latent space dimension was fixed at 10 to balance reconstruction accuracy and computational efficiency. The dataset was divided chronologically into a training set (80%) and a test set (20%). Test-set results were obtained by inference with the trained model only, to ensure an objective assessment of the model’s generalization ability.
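A time-ordered split keeps every test frame later than every training frame, so the test set probes extrapolation in time rather than interpolation between shuffled neighbors. The sketch below shows the index arithmetic for the 3120-frame sequence; the function name is hypothetical.

```python
def chronological_split(n_frames, train_fraction=0.8):
    """Split frame indices in time order, without shuffling."""
    n_train = int(round(n_frames * train_fraction))
    return range(0, n_train), range(n_train, n_frames)


train_idx, test_idx = chronological_split(3120)
print(len(train_idx), len(test_idx))
```

For this dataset the split yields 2496 training frames followed by 624 test frames.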

The model was implemented and trained in PyTorch 2.3.0 to ensure code consistency and experimental reproducibility. Training was performed on an NVIDIA GeForce RTX 4070 (12 GB) with the Adam optimizer and a one-cycle learning rate schedule. The learning rate started at 1 × 10⁻⁴, rose to a peak of 5 × 10⁻⁴ within the first 20% of training, and then decreased progressively to 1 × 10⁻⁵ by the end of training. Training ran for up to 400 epochs with a batch size of 256. Early stopping was applied: training was terminated if the validation loss did not decrease for 50 consecutive epochs.
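The learning rate schedule can be sketched as a function of training progress. The text specifies the three anchor values and the 20% warm-up fraction but not the shape of the ramps; the cosine interpolation below is an assumption, and the function name is hypothetical.

```python
import math


def one_cycle_lr(progress, lr_start=1e-4, lr_peak=5e-4, lr_end=1e-5, warmup=0.2):
    """Learning rate at a given training progress in [0, 1]."""
    def cosine(a, b, t):
        # Smoothly move from a (t = 0) to b (t = 1).
        return b + (a - b) * 0.5 * (1.0 + math.cos(math.pi * t))

    if progress < warmup:
        return cosine(lr_start, lr_peak, progress / warmup)
    return cosine(lr_peak, lr_end, (progress - warmup) / (1.0 - warmup))


for p in (0.0, 0.1, 0.2, 0.6, 1.0):
    print(f"progress {p:.1f}: lr = {one_cycle_lr(p):.2e}")
```

In PyTorch, `torch.optim.lr_scheduler.OneCycleLR` provides a schedule of this kind; its `pct_start`, `div_factor`, and `final_div_factor` arguments control the warm-up fraction and the start and end values relative to `max_lr`.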

Table 1 summarizes the model architecture, including layer types and output shapes. The hyperparameter β was set to 0.01, and a discussion on β will be provided in Section 5.

4.1. Evaluation of Reconstruction Performance

We aim to evaluate the performance of the PFSM, the FSEM, and the integrated SFS-VAE model. In the reduced-order reconstruction of schlieren image flow fields, we conduct a systematic analysis of the original model, ablation models, and the final model. This evaluation includes both visual comparisons of image reconstruction quality and quantitative metrics. The objective is to verify the contribution of each module to the model’s reconstruction capability, especially in accurate modeling of high-density gradient regions such as jets. To quantify the model’s performance in schlieren image reconstruction process, three standard image similarity metrics are employed: Root Mean Square Error (RMSE), Peak Signal-to-Noise Ratio (PSNR), and Pearson Correlation Coefficient (PCC). Their definitions are as follows:

In the PSNR definition, the peak term is the maximum possible pixel value, and MSE is the mean square error. The remaining quantities are the pixel values of the reference and reconstructed images, the mean values of the corresponding images, and the total number of pixels.
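Since the metric equations are not reproduced in this text, the sketch below implements the three metrics in their standard forms on flattened pixel lists. It assumes pixel values normalized to [0, 1] (so the maximum pixel value is 1.0); the function names are hypothetical.

```python
import math


def rmse(ref, rec):
    """Root mean square error between reference and reconstruction."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(ref, rec)) / len(ref))


def psnr(ref, rec, max_value=1.0):
    """Peak signal-to-noise ratio in dB; infinite for a perfect match."""
    mse = sum((a - b) ** 2 for a, b in zip(ref, rec)) / len(ref)
    return float("inf") if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


def pcc(ref, rec):
    """Pearson correlation coefficient between the two pixel sets."""
    n = len(ref)
    m_ref, m_rec = sum(ref) / n, sum(rec) / n
    cov = sum((a - m_ref) * (b - m_rec) for a, b in zip(ref, rec))
    var_ref = sum((a - m_ref) ** 2 for a in ref)
    var_rec = sum((b - m_rec) ** 2 for b in rec)
    return cov / math.sqrt(var_ref * var_rec)


# Reconstruction with a constant brightness offset of 0.1.
ref = [0.0, 0.25, 0.5, 0.75, 1.0]
rec = [0.1, 0.35, 0.6, 0.85, 1.1]
print(rmse(ref, rec), psnr(ref, rec), pcc(ref, rec))
```

The toy case shows why the metrics complement each other: a uniform offset leaves the correlation at essentially 1 while RMSE and PSNR still register the error.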

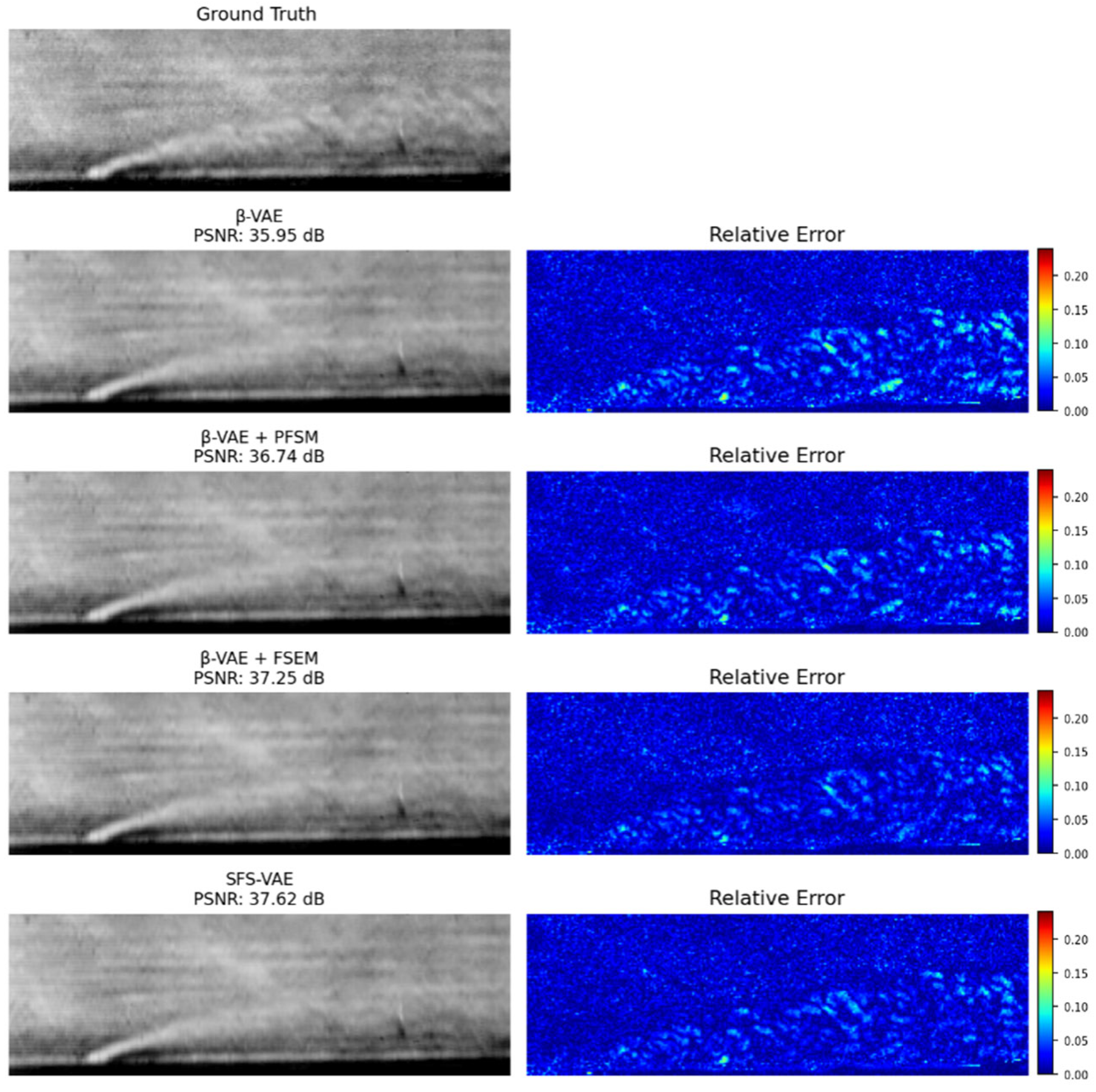

Figure 7 visually compares the reconstructed images of each model at one time instant in the test set with the original image. All models reconstruct the mainstream area, which occupies the majority of the image, satisfactorily and therefore achieve high PSNR values. However, the relative error map reveals that the standard $\beta$-VAE model produces noticeable edge blurring in high-disturbance areas, particularly along the upper and lower boundaries of the jet and within the wake region, where it struggles to capture sharp structural variations and grayscale transitions. In contrast, the model incorporating the PFSM better preserves flow structure boundaries, as evidenced by the clarity of vortex structures and the structural details captured in the jet and mainstream. This indicates that the PFSM enhances the model’s perception of local frequency features and improves the clarity of structure restoration. After adding the FSEM, the model focuses markedly more on the jet area, as shown by the stronger response in high-density-gradient regions of the schlieren image. In particular, the reconstruction of the lower edge and wake is improved, which is highly consistent with the high-gradient regions in the gradient prior map. This suggests that the gradient prior mechanism embedded in the FSEM effectively guides the network’s attention to physically meaningful regions, enhancing the reconstruction of high-density disturbance structures. However, because of the prior mechanism, the model’s reconstruction error in the mainstream, an area of relatively low significance, is slightly higher than that of the PFSM ablation model and contains more noise-like artifacts. The SFS-VAE model combines the advantages of both modules, maintaining the global structure while capturing richer local details, and achieves the highest PSNR of 37.62 dB.

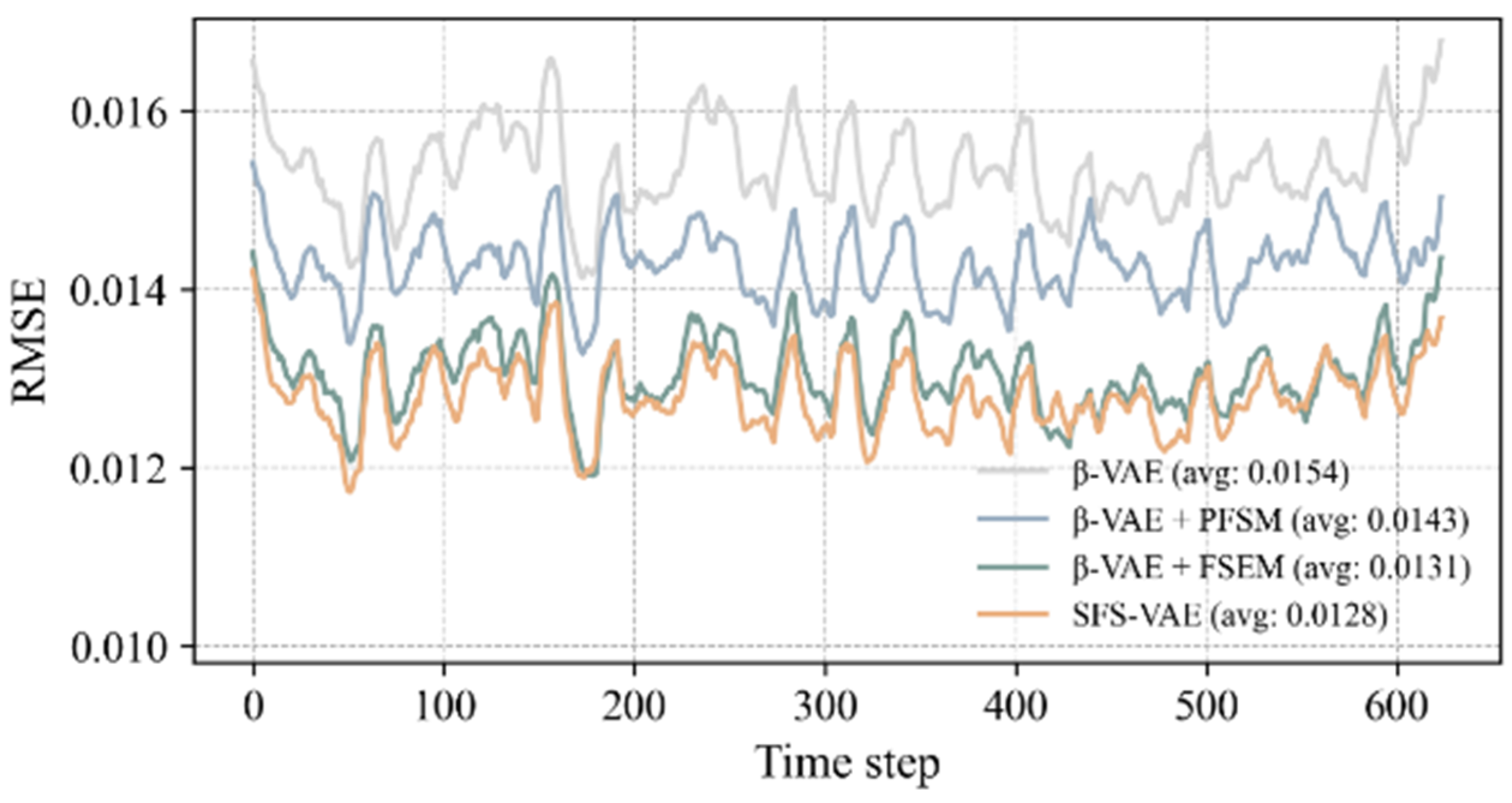

To further quantify the reconstruction capabilities of each model, we computed the reconstruction error and image similarity metrics over the time series of the entire test set, as shown in Figure 8 and Figure 9. First, the RMSE was calculated to measure the overall reconstruction deviation. The average RMSE of the complete model over all time steps was 0.0128, the lowest among all models. Second, the average PSNR of the complete model was substantially higher than that of the ablation models and the standard model. Compared with the original $\beta$-VAE, the RMSE was reduced by approximately 16.9%, and the PSNR was increased by 1.6 dB.
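As a rough consistency check between the two reported improvements, note that with MSE = RMSE² the PSNR difference between two models depends only on their RMSE ratio. Treating the reported averages as if they described a single frame (an assumption, since the mean of per-frame PSNR values is not the PSNR of the mean RMSE), a 16.9% reduction in RMSE gives:

```latex
\Delta\mathrm{PSNR}
  = 20\log_{10}\frac{\mathrm{RMSE}_{\beta\text{-VAE}}}{\mathrm{RMSE}_{\text{SFS-VAE}}}
  = 20\log_{10}\frac{1}{1 - 0.169}
  \approx 1.61~\mathrm{dB}
```

This agrees with the reported gain of about 1.6 dB. Under the same assumption, the implied baseline RMSE is 0.0128 / 0.831 ≈ 0.0154.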

Overall, the reconstruction performance is strong at earlier time steps, but as time progresses, the metrics exhibit a fluctuating trend rather than a continuous decline. We attribute this behavior to two primary reasons: (1) the reconstruction quality of the mainstream region significantly influences the overall metrics, and since this region has maintained high-quality reconstruction fidelity, fluctuations in the metrics are mainly caused by variations in the jet region; (2) snapshot data suggest that the jet in this experiment exhibits quasi-periodic behavior, allowing the model to apply flow features learned from the training set to unseen patterns in the test set.

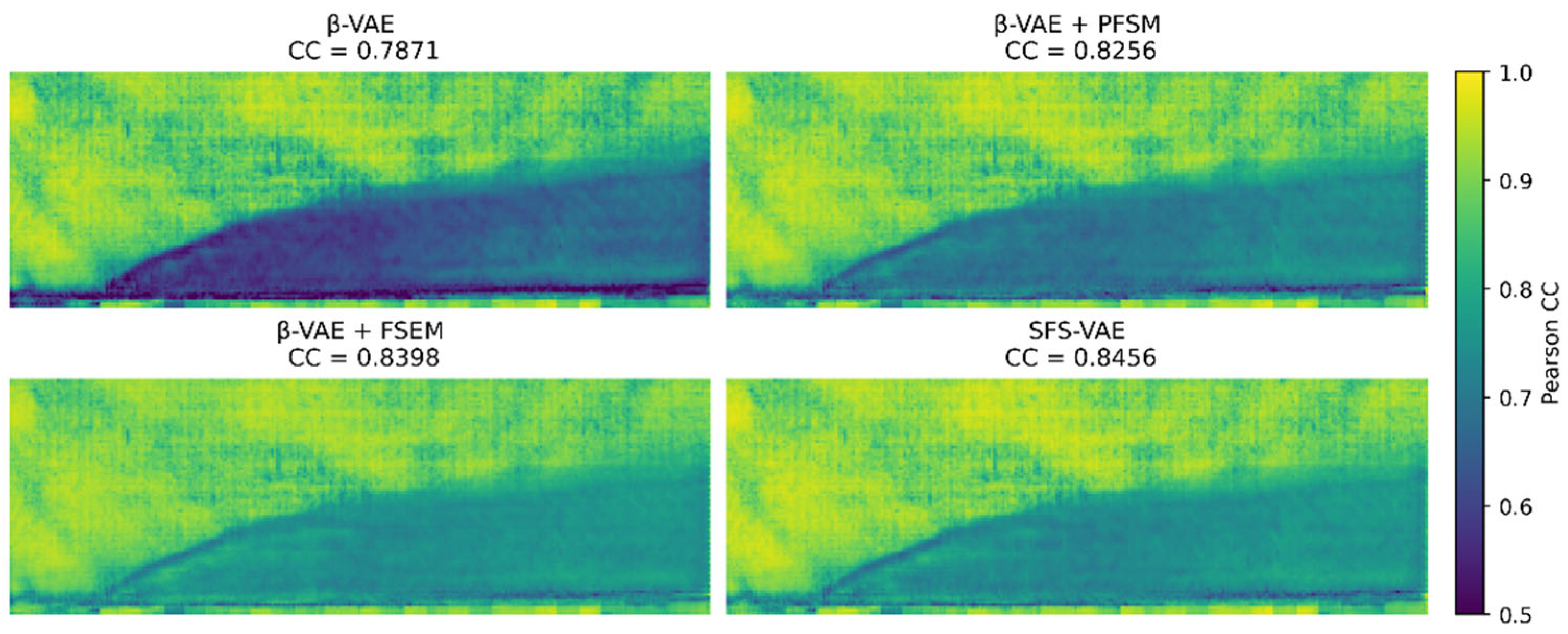

In the comparative analysis shown in Figure 10, all models perform well in the mainstream region. However, the standard model shows relatively low correlation in the initial zone of the jet structure, the lower boundary, and the wake region, indicating that it does not accurately capture the spatiotemporal evolution in these key physical areas, with pronounced correlation discontinuities at the initial zone and edge structures. The PFSM model improves the reconstruction performance in the mainstream area and the initial zone of the jet, significantly mitigating the correlation breaks in the latter. The FSEM model performs well overall in reconstructing the jet region, with noticeable improvements in edge continuity. The complete model exhibits consistently higher correlation values that are more spatially concentrated and temporally continuous, suggesting superior robustness and expressive capacity in physical structure extraction and temporal dynamics modeling. This outcome further confirms the contribution of the PFSM to the consistency of local edge structures in the frequency domain, as well as the improved modeling accuracy in highly perturbed regions brought about by the gradient-driven spatial attention mechanism in the FSEM.

Based on the statistical analysis of these quantitative metrics, the complete model consistently outperforms both the original and ablation models in terms of RMSE, PSNR, and PCC. This demonstrates the effectiveness of the proposed modules in reconstructing schlieren flow fields, particularly in enhancing the fidelity of jet structure boundaries and regions characterized by high-gradient disturbances.

4.2. Analysis of Latent Space Modes

To further investigate the modal decomposition ability of the models for schlieren flow field data, this study compares modal extraction and energy distribution across the models. Previous works have consistently employed the analysis method proposed by Eivazi [29,30,31]. The core idea is to use the decoder to convert each individually activated latent variable into a spatial mode. Specifically, for a trained model, the $i$-th component of the latent vector $\mathbf{z}$ obtained through the encoder is retained while the other components are set to 0, giving a modal vector $\mathbf{z}_i$, which is then passed to the decoder to obtain the spatial representation $\tilde{\mathbf{x}}_i$ of the $i$-th mode. By comparing the decoded mode with the actual data and calculating the percentage of the total energy $E_i$ captured by the reconstruction from this mode, the importance of the $i$-th mode is quantified:

$$E_i = \left(1 - \frac{\left\langle \sum_{j=1}^{n}\left(x_j - \tilde{x}_{i,j}\right)^2 \right\rangle}{\left\langle \sum_{j=1}^{n} x_j^2 \right\rangle}\right) \times 100\%.$$

Here, $\langle \cdot \rangle$ represents the ensemble average over time, $x_j$ and $\tilde{x}_{i,j}$ denote the reference gray value and the reconstructed gray value at pixel $j$, respectively, and $n$ represents the total number of pixels in the image. By evaluating the contribution of each mode to the reconstruction, the first mode, which carries the highest energy, can be identified. The second mode is defined as the one that, when added to the first mode, contributes the most additional energy to the reconstruction, and subsequent modes are ranked in the same way.
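The ranking procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions: `decode` is a hypothetical stand-in for the trained decoder that maps latent vectors of shape (time, d) to flattened images of shape (time, pixels), and the function names are not taken from the cited works.

```python
import numpy as np


def energy_percent(ref, rec):
    """Percentage of energy captured; ref and rec have shape (time, pixels)."""
    num = np.mean(np.sum((ref - rec) ** 2, axis=1))  # time-averaged residual
    den = np.mean(np.sum(ref ** 2, axis=1))          # time-averaged total
    return float(100.0 * (1.0 - num / den))


def rank_modes(z, ref, decode):
    """Greedy ranking of latent components by cumulative energy.

    z: latent vectors from the encoder, shape (time, d).
    Returns the mode order and the cumulative energy after adding each mode.
    """
    d = z.shape[1]
    selected, energies = [], []
    remaining = list(range(d))
    while remaining:
        best_idx, best_e = None, -np.inf
        for i in remaining:
            # Keep the already selected components plus candidate i, zero the rest.
            mask = np.zeros(d)
            mask[selected + [i]] = 1.0
            e = energy_percent(ref, decode(z * mask))
            if e > best_e:
                best_idx, best_e = i, e
        selected.append(best_idx)
        energies.append(best_e)
        remaining.remove(best_idx)
    return selected, energies
```

With a latent dimension of 10, this requires 55 decoder passes over the data set, which is inexpensive compared with training.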

Meanwhile, to evaluate the orthogonality between the latent vectors, the correlation matrix $\mathbf{R} = (R_{ij})_{d \times d}$ is calculated as:

$$R_{ij} = \frac{C_{ij}}{\sqrt{C_{ii}\,C_{jj}}}.$$

Here, $C_{ij}$ represents the element in the $i$-th row and $j$-th column of the covariance matrix $\mathbf{C}$ of the latent variables, and $d$ is the dimension of the latent space. The determinant of this correlation matrix, $\det_{\mathbf{R}}$, equals 1 when the variables are completely uncorrelated and 0 when they are completely correlated. It is scaled by a factor of 100 to serve as a metric for evaluating the degree of independence among the latent variables.
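A minimal sketch of this independence metric is given below, assuming the latent vectors of the data set are stacked in a NumPy array of shape (samples, d); the function name is hypothetical.

```python
import numpy as np


def latent_independence(z):
    """Correlation matrix of latent variables and its determinant scaled by 100.

    z: array of shape (samples, d), one latent vector per row.
    """
    c = np.cov(z, rowvar=False)      # covariance matrix C, shape (d, d)
    std = np.sqrt(np.diag(c))        # sqrt(C_ii)
    r = c / np.outer(std, std)       # R_ij = C_ij / sqrt(C_ii * C_jj)
    return r, float(100.0 * np.linalg.det(r))
```

A score of 100 corresponds to fully uncorrelated latent variables, and a score near 0 indicates that at least one latent variable is almost a linear combination of the others.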

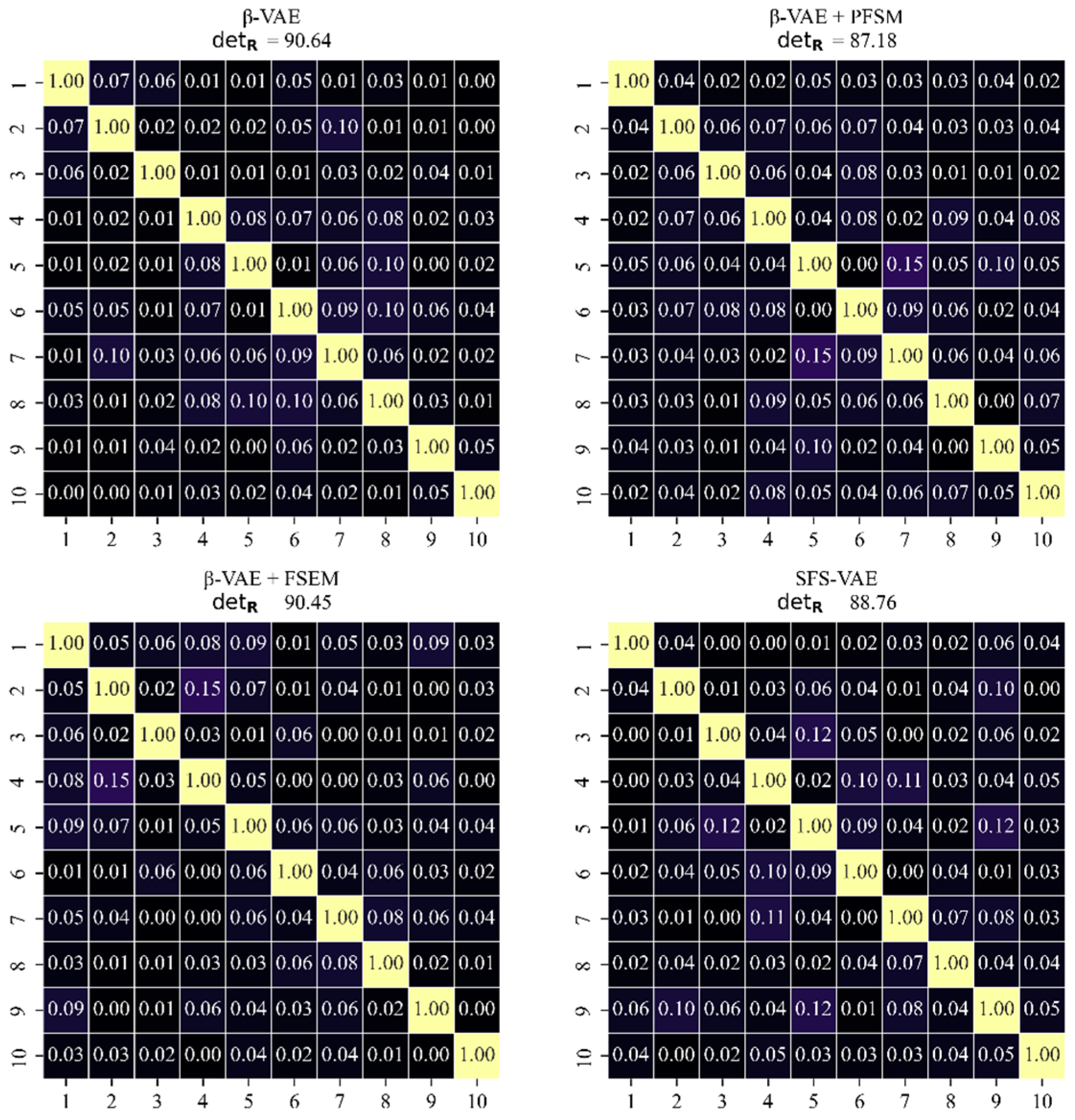

Based on the above principles, this study compares the performance of $\beta$-VAE, the final model SFS-VAE, and two ablation models.

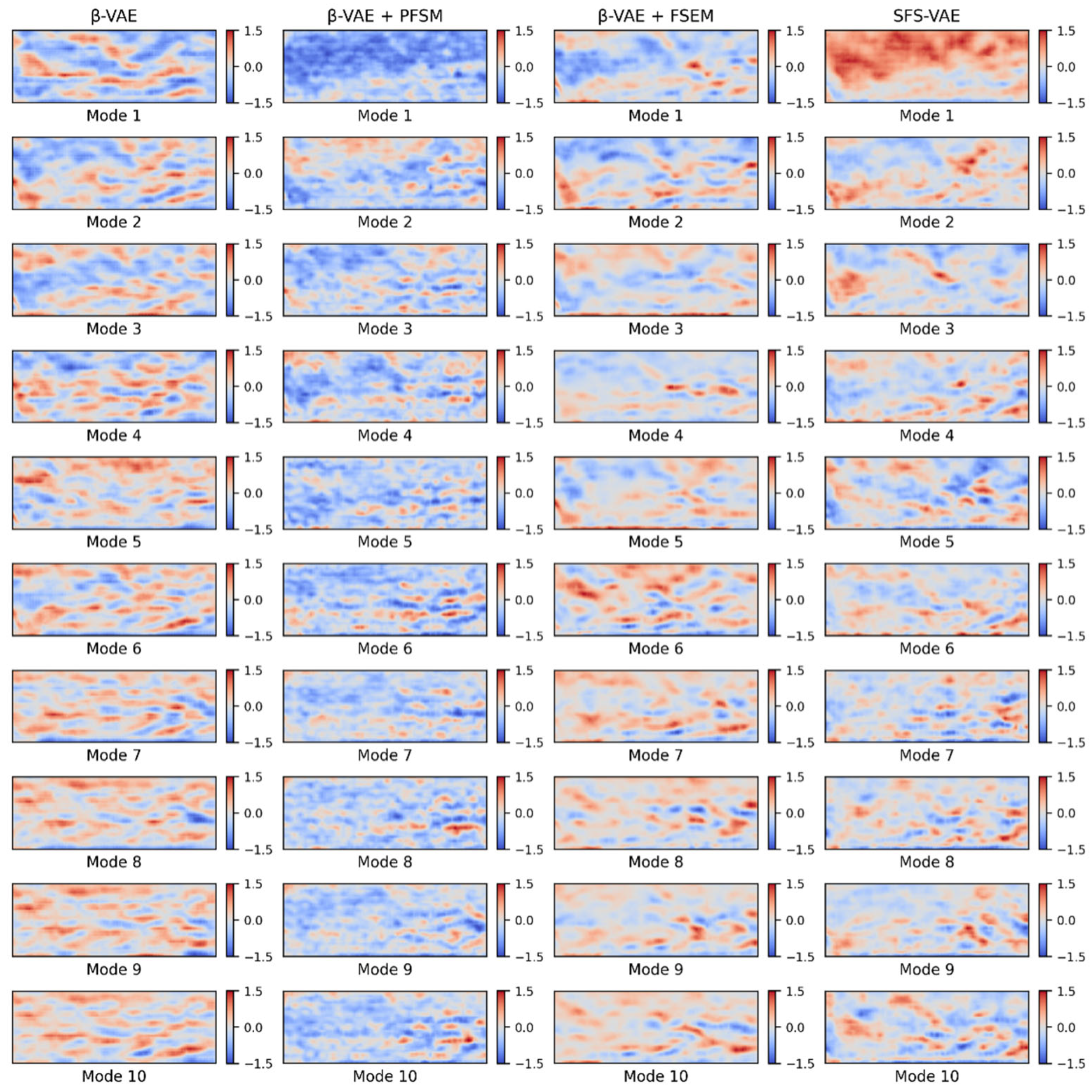

Figure 11 shows the top 10 modes calculated by the four models, and the images of each mode visually reflect the corresponding physical feature distribution. The modes generated by

-VAE typically contain relatively broad global information but exhibit weak differentiation between modes and low physical interpretability. Modes 1–5 appear to aim at capturing quasi-ordered flow structures in the jet region, typically manifested as red-blue alternating patterns within the jet shear layer. However, these modes also contain many features originating from the mainstream flow, introducing predominantly transverse orientations that are inconsistent with the jet’s direction. This mixing reduces the clarity and physical interpretability of the jet-related features. Crucially, the model fails to capture the expected downstream evolution: instead of presenting the desired breakdown into smaller, densely spaced structures in higher-order modes, the influence of mainstream components becomes even more pronounced, further obscuring physically meaningful jet information and preventing the extraction of valuable flow features. These observations suggest that the original model is strongly influenced by noise and background disturbances during feature separation. From the perspective of energy ranking, the mainstream area occupies a large portion of the image and has a substantial impact on the image reconstruction index. Hence, the modes corresponding to the mainstream region should appear earlier. However, in the

-VAE results, a distinct mode representing the mainstream region is not clearly observed; instead, it coexists with the quasi-ordered jet structures in higher modes. In contrast, for the ablation model

-VAE + PFSM, the energy of the first mode is well concentrated in the mainstream area, despite being classified into the blue region. And most of the subsequent modes are related to the quasi-ordered structures of the jet, with relatively small quasi-ordered structure sizes. This indicates that PFSM enhances the model’s ability to capture high-frequency and small-scale flow structures through frequency enhancement and multi-scale convolution. However, the problem that remains is that the mode distinction is still low, and the correlation between some modes is slightly high, as shown in the related matrix graph in the following text. Physically, high inter-mode correlation suggests redundancy in representing similar flow phenomena, reducing the decomposition’s effectiveness in isolating distinct coherent structures. For the other ablation model

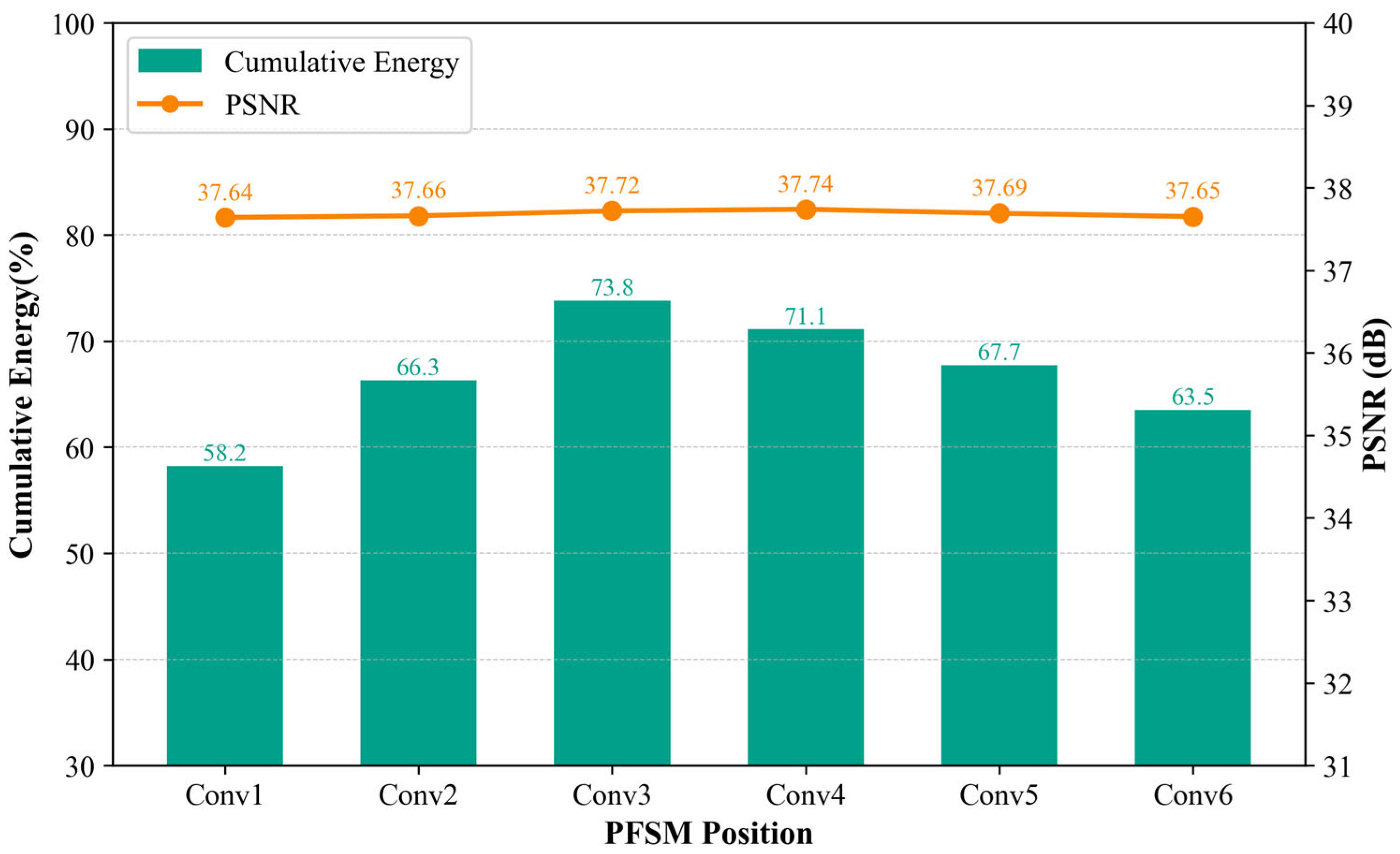

-VAE + FSEM, its ability to decouple the jet region is substantially improved, with all 10 modes showing varying degrees of jet-region decomposition. Higher-order modes also capture finer quasi-ordered structures, appearing as more densely spaced red-blue alternating patterns in the jet region. The final model, SFS-VAE, inherits the advantages of FSEM and PFSM. The first mode primarily captures the mainstream region, while the subsequent modes more precisely identify the high-gradient regions within the jet structure. These modes exhibit clearer and more physically interpretable flow structures. Specifically, several modes preserve the wave-like patterns along the shear layer seen in the original modal decomposition. Additional modes capture the downstream breakdown of vortices into smaller, more densely spaced structures, reflecting the transition to higher-frequency modes, this progression is consistent with the energy cascade in turbulent flows, where large-scale vortices transfer kinetic energy to progressively smaller structures downstream.

Figure 12 illustrates the cumulative energy curves of the top 10 modes for all four models. The SFS-VAE model achieves the highest cumulative energy within the first 10 modes, reaching approximately 73.8%. In contrast, $\beta$-VAE captures only 67.2%, indicating lower efficiency in data compression and feature extraction. The cumulative energies of the two ablation models fall in between, and both energy concentration and mode ranking stability are reduced in the ablation models relative to SFS-VAE. Furthermore, since both the ablation model $\beta$-VAE + PFSM and SFS-VAE capture the mainstream flow structure in their first mode, the energy content of their first mode is significantly higher than in the other two models.

Figure 13 presents the correlation matrices of the four models. The model with the highest scaled determinant $\det_{\mathbf{R}}$ is the original $\beta$-VAE, reaching 90.64, while the lowest is $\beta$-VAE + PFSM at 87.18. The $\det_{\mathbf{R}}$ of the $\beta$-VAE + FSEM model shows minimal change, and the complete model SFS-VAE has a $\det_{\mathbf{R}}$ of 88.76. The introduction of the PFSM therefore increases the inter-mode correlations. Through experimental analysis, we attribute this effect to the spatial-domain multi-scale convolution aggregation branch, which integrates three convolution kernels of varying sizes to perform multi-scale fusion. While this architecture allows the model to better capture the flow field’s multi-scale structures, it also intensifies inter-feature interactions. Experimental results further reveal that increasing the number of convolution kernel types leads to a further reduction in $\det_{\mathbf{R}}$ due to greater overlap among the fused multi-scale features. This overlap introduces redundancy into otherwise independent feature spaces, thereby compromising the orthogonality among modes. Consequently, we retain three convolution kernels in the spatial-domain multi-scale convolution aggregation branch. Although this slightly reduces orthogonality, the trade-off remains within an acceptable range.

4.3. Evaluation of Time-Series Prediction Performance

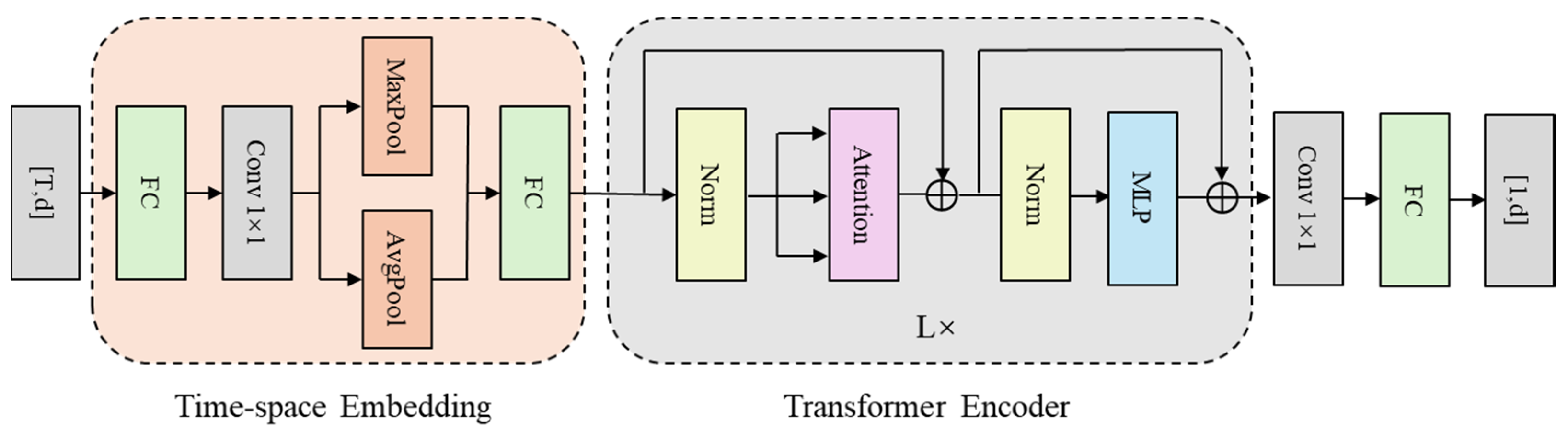

To achieve accurate temporal prediction of latent vector evolution in the flow field, this study adopts a modeling approach based on the Transformer architecture. Specifically, the latent vector sequence extracted by the VAE serves as the temporal input. After processing by the Transformer-based temporal prediction model, the predicted schlieren flow field images are reconstructed via the decoder of SFS-VAE. In the experiment, Long Short-Term Memory (LSTM) is employed as a baseline method for temporal prediction [42]. As a Recurrent Neural Network (RNN) variant with three gates (input, forget, output) and a cell state, it alleviates the long-term dependency problem of traditional RNNs and models sequential data effectively. Three variants of the Transformer model are constructed for comparative evaluation. The core architecture follows the seq2one Transformer design proposed by Wang [34]. The embedding module is implemented as a Time-Space Embedding layer, designed to encode positional information in the temporal sequence; it also enriches spatiotemporal representations via diverse pooling operations. This Time-Space Embedding is adopted in our model, while the Transformer backbone follows the current mainstream Pre-Norm architecture, which has been widely adopted in models such as the Vision Transformer (ViT). Compared with the original Post-Norm, Pre-Norm provides better training stability and convergence in deep networks [43,44].
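For reference, the two normalization placements can be written for a sublayer $F$ (self-attention or feed-forward) acting on the residual stream $x_l$ of block $l$. The symbols are illustrative notation introduced here, with LN denoting layer normalization:

```latex
\text{Post-Norm:}\quad x_{l+1} = \mathrm{LN}\bigl(x_l + F(x_l)\bigr)
\qquad
\text{Pre-Norm:}\quad x_{l+1} = x_l + F\bigl(\mathrm{LN}(x_l)\bigr)
```

In the Pre-Norm form the residual path is an identity mapping, which is the usual explanation given for its more stable gradients in deep stacks.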

The latent vector sequence, with latent dimension d and a fixed number of time steps, passes through the Time-Space Embedding and Transformer modules and is then processed by a one-dimensional convolutional layer and a fully connected layer to output the future latent vector of dimension d. The overall architecture is depicted in Figure 14.

The traditional Transformer module employs the classic multi-head attention mechanism to model global dependencies through standard Q-K-V computations. To explore the impact of different attention mechanisms on time-series prediction performance, we replaced the multi-head attention module in the Transformer with De-stationary Attention (DSAttention) and ProbSparse Self-Attention (ProbAttention), as proposed in recent time-series prediction models [45,46]. DSAttention enhances computational efficiency and sensitivity to critical temporal features via dynamic sparsification strategies, while ProbAttention mitigates the influence of redundant information through a probabilistic sampling mechanism, demonstrating superior stability in long-term sequence modeling.
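For reference, the standard Q-K-V computation mentioned above is the scaled dot-product attention, where $d_k$ denotes the key dimension per head (notation introduced here for illustration). The two variants modify this computation and are not reproduced here:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Multi-head attention applies this operation in parallel on several learned projections of the input and concatenates the results.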

Regarding training settings, all models follow a unified time-series prediction protocol: 80% of the data is used for training and 20% for testing, consistent with the data split used in the preceding VAE model training. Specifically, the latent variables generated from the training set are used for model training, while the latent vectors obtained from the test set are used for evaluation. This ensures that all models are trained and validated under identical data conditions, thereby maintaining the comparability of results. During training, a time-delay window of 64 steps is used to predict the latent variable for the subsequent time step. The training objective is to minimize the Mean Squared Error (MSE) between the predicted and actual sequences. Appropriate regularization strategies are introduced to prevent overfitting. In the experiments, the Transformer model comprises four Transformer blocks, each equipped with four attention heads, and the MLP layer has a dimensionality of 128. The LSTM model adopts a stacked two-layer architecture with a hidden state size of 256.
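The windowing described above, in which 64 past latent vectors are used to predict the next one, can be sketched as follows. The function name is hypothetical and the latent sequence is assumed to be an ordered Python sequence of vectors; this is an illustration, not the data pipeline of this work.

```python
def make_windows(latents, window=64):
    """Build (history, target) pairs for one-step-ahead prediction.

    latents: time-ordered sequence of latent vectors.
    Each history holds `window` consecutive vectors; the target is the
    vector at the time step immediately after the history.
    """
    pairs = []
    for start in range(len(latents) - window):
        history = latents[start:start + window]
        target = latents[start + window]
        pairs.append((history, target))
    return pairs
```

A sequence of T latent vectors yields T − 64 training pairs, so the first 64 time steps of each split serve only as input history.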

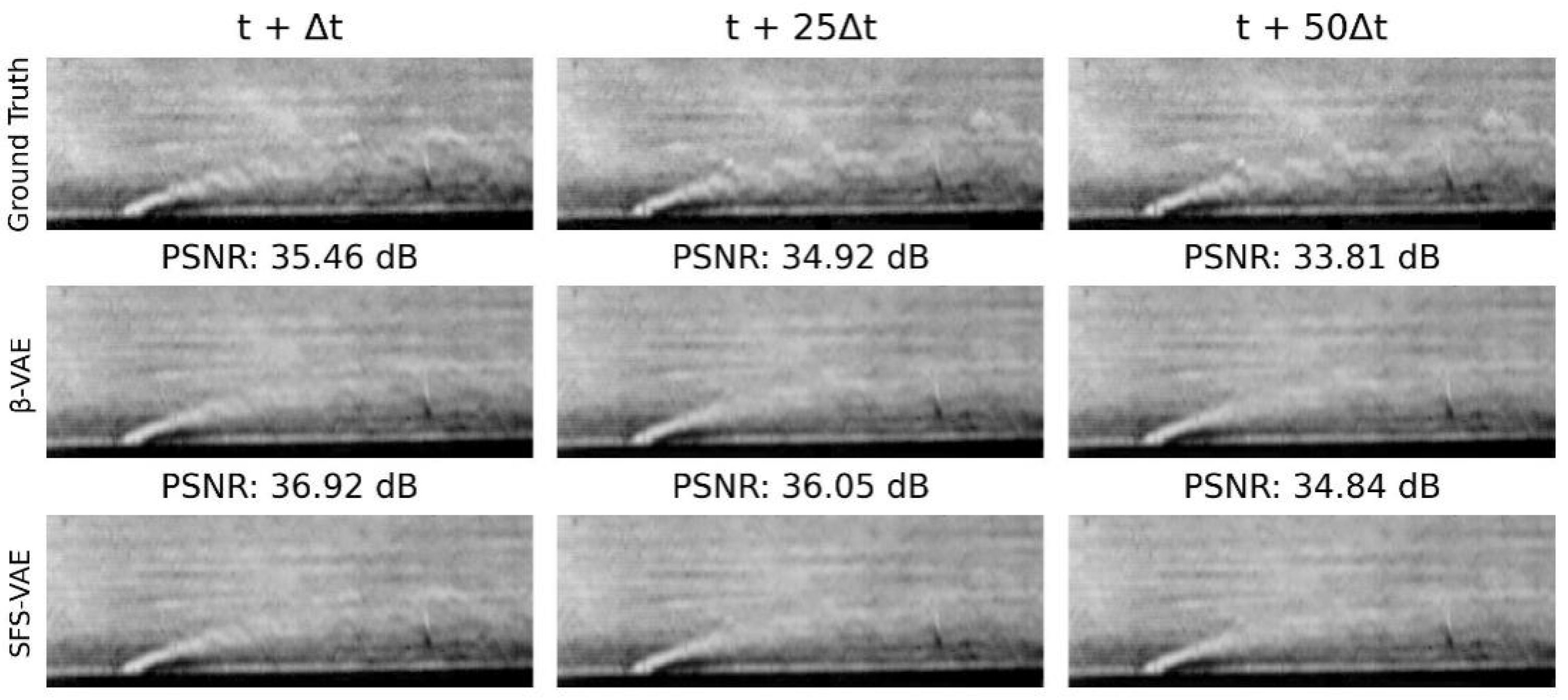

Figure 15 compares the predicted and true time series for the 10 components of the latent vector, corresponding to the 10 spatial modes, for the different time-series prediction models; each subplot shows the temporal evolution of one latent component. The LSTM-based prediction captures the frequency changes in the latent space well before 50 time steps, but it shows significant smoothing in long-term predictions, resulting in a large prediction deviation. The Transformer with standard multi-head attention performs best overall. Its advantage lies in the global computation mechanism, which fully captures global context information, especially for time-series features in non-mutating regions; the predicted curves are highly consistent with the true values, and its RMSE of 0.992 is the lowest among the four models. However, it shows errors in more dynamic latent components such as 5 and 10, and at mutation points in multi-mutating latent components such as 1, 2, and 6.

The Transformer with De-stationary Attention (DSAttention) shows a trend similar to that of multi-head attention, but lags slightly behind in some modes such as 7 and 9, so its final result is weaker. We believe this might be because dynamic sparsification requires setting a threshold or judging key positions based on local activation values. If the threshold is not sensitive enough, it may ignore some edge information or respond insufficiently to continuous mutation information, leading to a decrease in prediction accuracy.

The Transformer with ProbSparse Attention (ProbAttention), with its probabilistic sampling mechanism, performs well in later-stage predictions, with relatively small errors in the curves of modes 3, 7, and 9. However, probabilistic sampling may miss some low-frequency but crucial details or introduce a certain degree of randomness in some periods, making its overall performance slightly weaker than that of standard multi-head attention, as shown in modes 2 and 8. Overall, all three types of Transformers outperform LSTM, and standard multi-head attention performs slightly better in terms of stability and global dependency modeling.

Figure 16 shows the decoding results after using the standard multi-head attention Transformer for latent vector prediction, comparing the flow field image reconstruction performance of the $\beta$-VAE and SFS-VAE models at 1 step, 25 steps, and 50 steps. At 1-step prediction, the flow field predicted from the $\beta$-VAE latent vector through the Transformer reproduces the global features; however, the specific details and jet boundaries are slightly blurred. In contrast, the prediction based on SFS-VAE exhibits a clearer overall structure and better local details, with a significant increase in PSNR. When the prediction horizon extends to 25 steps, the prediction of $\beta$-VAE begins to deteriorate, with many details lost and the jet tail becoming difficult to observe, while in the SFS-VAE result, although local noise gradually increases, the overall contour and salient features are still relatively well preserved. At 50-step prediction, both models exhibit prediction errors. Referring to Figure 15, the errors in SFS-VAE are primarily caused by prediction inaccuracies in latent vectors 1, 2, and 7. Nevertheless, SFS-VAE consistently maintains a higher PSNR than $\beta$-VAE. These results indicate that SFS-VAE, by producing more accurate latent representations, improves the stability and fidelity of temporal prediction.

6. Conclusions and Discussion

This paper addresses the challenge of efficiently reducing dimensionality and predicting flow field images captured by schlieren photography. It proposes and validates the SFS-VAE model, highlighting its performance and design advantages in the following aspects:

For the reduced-order modeling and reconstruction task, SFS-VAE effectively captures multi-scale features in both frequency and spatial domains by introducing two key modules. The PFSM dynamically enhances flow structures across different frequency bands through discrete Fourier transforms and multi-scale convolution, while the FSEM improves the effective representation of the jet area using a gradient-driven spatial attention mechanism. Experimental results show that, compared with the traditional $\beta$-VAE, SFS-VAE reduces RMSE by approximately 16.9%, increases PSNR by about 1.6 dB, and achieves a cumulative modal energy of 73.8% in the first 10 modes. The modal decomposition also demonstrates stronger interpretability, underscoring the advantages of SFS-VAE in feature decoupling and energy extraction.

In the time-series prediction task, this study evaluates multiple Transformer models with different attention mechanisms to model latent vectors, enhancing the stability and accuracy of future flow field evolution predictions. Experimental results reveal that, based on the high-quality reduced-order representations from SFS-VAE, predicted curves closely match real data in short-term prediction, and the decoded flow fields retain their overall contour and salient features at longer horizons, with a consistently higher PSNR than $\beta$-VAE. Additionally, the study discusses model parameters and module placement, clarifying key optimization factors. Experiments show that with a latent variable dimension of 10 and with $\beta$ set to 0.01, the model achieves improved feature orthogonality while maintaining reconstruction accuracy. Regarding PFSM placement, optimal energy accumulation and image reconstruction performance are achieved only when the module is positioned in the middle layer of the encoder. Placement at the bottom or top layers results in a significant decrease in energy despite minimal change in PSNR, indicating a strong correlation between module position and the model’s information extraction ability. Cross-condition experiments verify the strong generalization capability of SFS-VAE across different Mach numbers, temperature ratios, and blowing ratios. The fluctuations in various performance indicators are limited to about 1 to 2 units, demonstrating the model’s broad applicability in engineering flow control and real-time monitoring.

The integration of PFSM and FSEM enhances reconstruction fidelity in sharp-gradient, jet-boundary, and fine-scale regions, improving local detail while preserving the physical interpretability of latent modes. However, several limitations must be acknowledged. First, enhancement performance is sensitive to hyperparameter tuning, including convolution kernel scales, attention weights, and the balance between frequency and spatial contributions. Optimal values can vary with flow regimes and image acquisition settings, necessitating re-tuning or adaptive strategies for deployment in diverse scenarios. Second, while the current model introduces minimal additional training overhead compared with the baseline VAE at moderate resolutions, its inference performance under higher-resolution datasets and longer time-series inputs still requires careful evaluation, which is critical for practical applicability. Due to equipment limitations, experiments involving higher-resolution schlieren datasets, extended temporal sequences, and performance benchmarks across different GPU devices have not yet been conducted, constraining generalization and scalability analysis. Lastly, while the enhancement improves modal interpretability for velocity- and density-related structures, its extension to other scalar fields remains unverified and may require tailored preprocessing or feature fusion schemes. These limitations highlight the need for adaptive parameter control, automated module configuration, more robust denoising, and the development of scalable training and inference strategies.

Ultimately, this work not only provides a powerful tool for fluid dynamics diagnostics but also presents a robust framework for interpreting complex, high-dimensional data across scientific imaging domains. Future research will pursue several directions: (1) developing adaptive parameter selection strategies that automatically adjust based on latent space energy distribution and reconstruction-error patterns; (2) implementing self-tuning enhancement modules, where PFSM scales and FSEM attention weighting are optimized via meta-learning to minimize manual tuning; (3) introducing enhanced data-augmentation strategies, such as diffusion-model-based frame interpolation, to enrich schlieren image datasets; (4) extending the framework to cases where different physical effects interact, such as flows with chemical reactions or coupled aerodynamic and thermal effects; (5) conducting systematic performance benchmarking across different GPU architectures to evaluate inference efficiency, optimize resource utilization, and support scalability to higher-resolution datasets and extended temporal sequences. These improvements will not only address the current limitations but also broaden the applicability of SFS-VAE to more diverse, complex, and operational environments.