Utilization of Eye-Tracking Metrics to Evaluate User Experiences—Technology Description and Preliminary Study

Abstract

1. Introduction

2. Background

2.1. Eye-Movement Recording

- Sampling frequency (25–2000 Hz), indicating how many gaze data points are recorded per second,

- Accuracy and precision of measurement,

- Head-box size, or the range in which participants can move without losing calibration,

- Monocular vs. binocular tracking,

- Pupil-detection method (bright or dark pupil effect).
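As a rough illustration of what the sampling frequency means in practice, the sketch below converts a frequency into the interval between gaze samples and into the number of samples that fall within a time window. The function names and the 200 ms window are assumptions chosen for illustration; the 60 Hz and 300 Hz values match the two eye trackers listed in the study-stage table.

```python
import math


def sample_interval_ms(sampling_hz: float) -> float:
    """Time between two consecutive gaze samples, in milliseconds."""
    if sampling_hz <= 0:
        raise ValueError("sampling frequency must be positive")
    return 1000.0 / sampling_hz


def samples_in_window(sampling_hz: float, window_ms: float) -> int:
    """Number of whole gaze samples recorded within a window of window_ms."""
    return math.floor(window_ms * sampling_hz / 1000.0)


if __name__ == "__main__":
    # 200 ms is a hypothetical window, used only to compare the two devices.
    for hz in (60, 300):
        print(hz, "Hz:", round(sample_interval_ms(hz), 2), "ms per sample,",
              samples_in_window(hz, 200), "samples in 200 ms")
```

A higher sampling frequency therefore gives more data points per fixation, which matters most for short events such as saccades.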

2.2. Eye-Tracking in UX

2.3. Visual Attention and Metrics

2.4. UX-Evaluation Methods

2.5. Design Guidelines and UX

2.6. Research Gap

3. Materials and Methods

3.1. Research Goals and Stages

“When users search for a tool, menu item, or icon in a typical human-computer interface, they often do not have a clear mental representation of the target. Most literature on visual search assumes participants know the exact target. More basic research is needed on visual search tasks where the target is not fully known. A more realistic search task involves looking for the tool that will help accomplish a specific task, even if the user has not yet seen the tool.”

3.2. Study-Group Selection

- Define demographic and behavioral characteristics that accurately represent the internet-user population, including age, gender, education level, place of residence, frequency of internet use, device types (e.g., smartphones, desktops), and level of internet proficiency.

- Employ stratified sampling techniques to ensure proportional representation of these characteristics within the study group. For instance, if 30% of internet users are aged 18–25, the sample should include a similar proportion. Likewise, if women constitute 50% of internet users, this proportion should be mirrored in the sample.
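The proportional allocation described above can be sketched as follows. This is a minimal example and not the procedure used in the study: the function name, the age bands other than 18–25, and the sample sizes are hypothetical. Integer weights and the largest-remainder rule are assumptions made so that stratum sizes always sum to the requested sample size.

```python
def proportional_allocation(weights: dict[str, int], sample_size: int) -> dict[str, int]:
    """Split sample_size across strata in proportion to integer weights.

    Uses the largest-remainder rule so the allocation sums to sample_size.
    """
    total = sum(weights.values())
    if total <= 0 or sample_size < 0:
        raise ValueError("weights must sum to a positive value and sample_size must be >= 0")
    # Integer arithmetic avoids floating-point rounding surprises.
    allocation = {k: sample_size * w // total for k, w in weights.items()}
    remainders = {k: sample_size * w % total for k, w in weights.items()}
    leftover = sample_size - sum(allocation.values())
    for k in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        allocation[k] += 1
    return allocation


if __name__ == "__main__":
    # Hypothetical population shares in percent; only the 30% figure for
    # ages 18-25 echoes the example in the text.
    age_shares = {"18-25": 30, "26-40": 45, "41+": 25}
    print(proportional_allocation(age_shares, 100))
    print(proportional_allocation(age_shares, 102))
```

In a real design the same allocation would be repeated, or crossed, for each stratifying characteristic such as gender or device type.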

3.3. Sample Characteristics

3.4. Metrics Classification

3.5. Research Questions and Hypotheses

- H1a: Time to first fixation (TTFF) on the Target AOI will be longer for poorly designed variants.

- H1b: Fixation count before first fixation on the Target AOI will be higher for poorly designed variants.

- H1c: Total fixation duration within differential AOIs will be greater in poorly designed variants, reflecting increased cognitive effort or confusion.

- H2a: Time to first click on the Target AOI will be longer for poorly designed variants.

- H2b: Task completion time will be longer for poorly designed variants.

- H2c: Click errors (wrong AOIs) will occur more frequently in poorly designed variants.

- H3a: Reduced contrast will produce the strongest negative effects on search and performance.

- H3b: Unclear links will increase fixation counts and time to task completion.

- H3c: Icon removal or alteration will show context-dependent effects that are smaller than those associated with contrast or link deviations.

3.6. Statistical Methodology

3.7. Research Material

3.8. Research Course

- Welcoming the participant and obtaining signed informed consent. Explaining the study’s procedure and confirming participant understanding.

- Seating the participant comfortably in front of the eye tracker, ensuring easy access to the mouse and keyboard. Participants were instructed not to ask questions during task performance and to avoid turning their head away from the monitor.

- Calibrating the eye-tracking device by having the participant remain still and follow a moving dot on the screen with their eyes.

- Starting data recording using the eye-tracking software.

- Providing an initial instruction detailing the study procedure (see below).

- Administering a test task.

- Conducting the main task while displaying the relevant webpage.

- Asking a survey question regarding the ease of locating information on the page.

- Repeating the main task and the survey question for each webpage included in the study.

- Ending the recording session in the software.

- Thanking the participant for their involvement in the study.

3.8.1. Initial Instruction

3.8.2. Survey Question

3.9. Description of Statistical Data Analysis

- An initial Poisson GLMM was fitted for each count metric.

- We then conducted two complementary tests for overdispersion:

  - A likelihood ratio test (LRT) comparing the fit of the Poisson GLMM with that of a negative binomial GLMM. A significant result favors the more complex negative binomial model.

  - Calculation of the dispersion statistic (the sum of squared Pearson residuals divided by the residual degrees of freedom). A value substantially greater than 1 indicates overdispersion, necessitating the use of the negative binomial distribution.

- If either test provided evidence of overdispersion (a significant LRT or a dispersion statistic > 1.5), the negative binomial distribution was selected for its ability to model the extra-Poisson variation via a dispersion parameter. Otherwise, the more parsimonious Poisson distribution was retained. The logarithmic link function was used for both distributions so that predicted counts remained positive.
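The decision rule above can be illustrated with a deliberately simplified sketch. It is not the authors' analysis: it fits intercept-only Poisson and negative binomial models with no random effects, whereas the study used GLMMs. The function names are hypothetical, and the significance level `alpha` is an assumption supplied by the caller because the source does not preserve the threshold. Only the 1.5 dispersion cutoff comes from the text.

```python
import math


def poisson_loglik(y, mu):
    return sum(k * math.log(mu) - mu - math.lgamma(k + 1) for k in y)


def negbin_loglik(y, mu, size):
    # NB2 parameterisation: variance = mu + mu**2 / size
    return sum(
        math.lgamma(k + size) - math.lgamma(size) - math.lgamma(k + 1)
        + size * math.log(size / (size + mu)) + k * math.log(mu / (size + mu))
        for k in y
    )


def pearson_dispersion(y):
    """Sum of squared Pearson residuals divided by residual degrees of freedom."""
    mu = sum(y) / len(y)
    return sum((k - mu) ** 2 / mu for k in y) / (len(y) - 1)


def fit_negbin_size(y, mu, lo=-5.0, hi=12.0, iters=100):
    """Golden-section search for the size parameter on the log scale."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    for _ in range(iters):
        c, d = b - g * (b - a), a + g * (b - a)
        if negbin_loglik(y, mu, math.exp(c)) > negbin_loglik(y, mu, math.exp(d)):
            b = d
        else:
            a = c
    return math.exp((a + b) / 2)


def choose_count_family(y, alpha, dispersion_cutoff=1.5):
    """Return (family, dispersion, lrt_p_value) for positive-mean count data."""
    mu = sum(y) / len(y)  # MLE of the mean under both intercept-only models
    dispersion = pearson_dispersion(y)
    size = fit_negbin_size(y, mu)
    lrt = max(0.0, 2 * (negbin_loglik(y, mu, size) - poisson_loglik(y, mu)))
    p_value = math.erfc(math.sqrt(lrt / 2))  # chi-square survival, 1 df
    overdispersed = p_value < alpha or dispersion > dispersion_cutoff
    return ("negative binomial" if overdispersed else "poisson"), dispersion, p_value


if __name__ == "__main__":
    # alpha=0.05 is an assumed, conventional level, not taken from the source.
    print(choose_count_family([0, 0, 1, 0, 12, 3, 0, 25, 1, 0, 7, 0], alpha=0.05))
    print(choose_count_family([2, 3, 2, 3, 2, 3, 2, 3], alpha=0.05))
```

The plain chi-square p-value is conservative here because the Poisson model sits on the boundary of the negative binomial parameter space; a full analysis would fit both mixed models in dedicated software and compare them there.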

4. Results

Hypothesis Verification

5. Discussion

5.1. Interpreting Findings and Practical Use

- If Design B yields a significantly longer time to first fixation on the button, it indicates the element is harder to find, negatively impacting discoverability.

- If users make more fixations on the page before clicking Design B’s button, it suggests higher cognitive effort and a less efficient search path.

- A longer time from first fixation to click for Design B implies user hesitation or uncertainty about the element’s purpose or interactivity.

5.2. The Divergent Role of Icons

5.3. Order Effects and Task Framing

5.4. Limitations and External Validity

5.5. Theoretical and Applied Implications

5.6. Future Work

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. ISO 9241-210:2010; Ergonomics of Human-System Interaction—Part 210: Human-centred Design for Interactive Systems. International Organization for Standardization: Geneva, Switzerland, 2010.
2. Harte, R.; Glynn, L.; Rodríguez-Molinero, A.; Baker, P.M.; Scharf, T.; Quinlan, L.R.; ÓLaighin, G. A Human-Centered Design Methodology to Enhance the Usability, Human Factors, and User Experience of Connected Health Systems: A Three-Phase Methodology. JMIR Hum. Factors 2017, 4, e8.
3. Lamé, G.; Komashie, A.; Sinnott, C.; Bashford, T. Design as a Quality Improvement Strategy: The Case for Design Expertise. Future Healthc. J. 2024, 11, 100008.
4. Bojko, A. Eye Tracking the User Experience: A Practical Guide to Research; Rosenfeld Media: New York, NY, USA, 2013.
5. Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H. Eye Tracking: A Comprehensive Guide to Methods and Measures; Oxford University Press: Oxford, UK, 2011.
6. Yarbus, A.L. Eye Movements and Vision; Plenum Press: New York, NY, USA, 1967.
7. Zhang, X.; Brahim, W.; Fan, M.; Ma, J.; Ma, M.; Qi, A. Radar-Based Eyeblink Detection Under Various Conditions. In Proceedings of the 2023 12th International Conference on Software and Computer Applications, Kuantan, Malaysia, 23–25 February 2023; pp. 177–183.
8. Cardillo, E.; Ferro, L.; Sapienza, G.; Li, C. Reliable Eye-Blinking Detection With Millimeter-Wave Radar Glasses. IEEE Trans. Microw. Theory Tech. 2023, 72, 771–779.
9. Smith, J.T.; Johnson, L.M.; García-Ramírez, C. Cognitive Load and Trust: An Eye-Tracking Study of Privacy Policy Interfaces. ACM Trans. Comput.-Hum. Interact. 2024, 31, 20.
10. Crane, H.D.; Kelly, D.H. The Purkinje Image Eyetracker, Image Stabilization, and Related Forms of Stimulus Manipulation. In Visual Science and Engineering; CRC Press: Boca Raton, FL, USA, 2018.
11. Duchowski, A.T. Eye Tracking Methodology: Theory and Practice; Springer: New York, NY, USA, 2007.
12. Ball, L.J.; Richardson, B.H. Eye Movement in User Experience and Human-Computer Interaction Research. In Eye Tracking: Background, Methods, and Applications; Stuart, S., Ed.; Humana Press: Totowa, NJ, USA, 2021; Volume 183, pp. 165–183.
13. Jacob, R.J.; Karn, K.S. Eye Tracking in Human-Computer Interaction and Usability Research: Ready to Deliver the Promises. In The Mind’s Eye: Cognitive and Applied Aspects of Eye Movement Research; Elsevier: Oxford, UK, 2003; pp. 573–605.
14. Law, E.; Kort, J.; Roto, V.; Hassenzahl, M.; Vermeeren, A. Towards a Shared Definition of User Experience. In Proceedings of the CHI ’08 Extended Abstracts on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008.
15. Štěpán Novák, J.; Masner, J.; Benda, P.; Šimek, P.; Merunka, V. Eye Tracking, Usability, and User Experience: A Systematic Review. Int. J. Hum.–Comput. Interact. 2024, 40, 4484–4500.
16. Krejtz, K.; Wisiecka, K.; Krejtz, I.; Kopacz, A.; Olszewski, A.; Gonzalez-Franco, M. Eye-Tracking in Metaverse: A Systematic Review of Applications and Challenges. Comput. Hum. Behav. 2023, 148, 107899.
17. Bednarik, R.; Tukiainen, M. Validating the Restricted Focus Viewer: A Study Using Eye-Movement Tracking. Behav. Res. Methods 2007, 39, 274–282.
18. George, A. Image Based Eye Gaze Tracking and Its Applications. Ph.D. Thesis, Indian Institute Of Technology Kharagpur, Electrical Engineering Department, Kharagpur, India, 2019.
19. Grigg, L.; Griffin, A.L. A Role for Eye-Tracking Research in Accounting and Financial Reporting. In Current Trends in Eye Tracking Research; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 225–230.
20. Ho, H.F. The Effects of Controlling Visual Attention to Handbags for Women in Online Shops: Evidence from Eye Movements. Comput. Hum. Behav. 2014, 30, 146–152.
21. Navarro, O.; Molina, A.I.; Lacruz, M.; Ortega, M. Evaluation of Multimedia Educational Materials Using Eye Tracking. Procedia-Soc. Behav. Sci. 2015, 197, 2236–2243.
22. Yang, S.K. An Eye-Tracking Study of the Elaboration Likelihood Model in Online Shopping. Electron. Commer. Res. Appl. 2015, 14, 233–240.
23. Tseng, H.-Y.; Chuang, H.-C. An Eye-Tracking-Based Investigation on the Principle of Closure in Logo Design. J. Eye Mov. Res. 2024, 17, 1–22.
24. Lee, M.; Kim, S.; Park, J. The Role of Icon Concreteness and Familiarity in Mobile UI: An Eye-Tracking Study. Int. J. Hum.-Comput. Stud. 2023, 170, 102976.
25. Ehmke, C.; Wilson, S. Identifying Web Usability Issues from Eye-Tracking Data. In Proceedings of the University of Lancaster Conference Proceedings, Lancaster, UK, 3–7 September 2007; pp. 119–128.
26. Kotval, X.P.; Goldberg, J.H. Eye Movements and Interface Component Grouping: An Evaluation Method. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Santa Monica, CA, USA, 5–9 October 1998; Volume 42, pp. 486–490.
27. Fisher, R. Statistical Methods for Research Workers; Oliver and Boyd: Edinburgh, UK, 1925.
28. Kurz, S. Just Use Multilevel Models for Your Pre/Post RCT Data. 2022. Available online: https://solomonkurz.netlify.app/blog/2022-06-13-just-use-multilevel-models-for-your-pre-post-rct-data/ (accessed on 10 September 2025).
29. Powell, M.J. The BOBYQA Algorithm for Bound Constrained Optimization without Derivatives. Technical Report NA2009/06; Department of Applied Mathematics and Theoretical Physics: Cambridge, UK; New York, NY, USA, 2009.
30. Bates, D. Overdispersion Estimation in a Binomial GLMM. 2011. Available online: https://stat.ethz.ch/pipermail/r-sig-mixed-models/2011q1/015392.html (accessed on 10 September 2025).
31. McElreath, R. Statistical Rethinking: A Bayesian Course with Examples in R and STAN, 2nd ed.; Chapman and Hall/CRC: New York, NY, USA, 2020.
32. Kurz, A.S. Statistical Rethinking with Brms, Ggplot2, and the Tidyverse (Second edition). 2023. Available online: https://bookdown.org/content/4857/ (accessed on 10 September 2025).
33. R Core Team. R: A Language and Environment for Statistical Computing; R Core Team: Vienna, Austria, 2021.
34. Bates, D.; Mächler, M.; Bolker, B.; Walker, S. Fitting Linear Mixed-Effects Models Using lme4. J. Stat. Softw. 2015, 67, 1–48.
35. Ben-Shachar, M.S.; Lüdecke, D.; Makowski, D. effectsize: Estimation of Effect Size Indices and Standardized Parameters. J. Open Source Softw. 2020, 5, 1–7.
36. Lenth, R.V. emmeans: Estimated Marginal Means, aka Least-Squares Means (R Package). 2022. Available online: https://cran.r-project.org/web/packages/emmeans/ (accessed on 10 September 2025).
37. Lüdecke, D. sjPlot: Data Visualization for Statistics in Social Science (R Package). 2022. Available online: https://strengejacke.github.io/sjPlot/ (accessed on 10 September 2025).
38. Makowski, D.; Ben-Shachar, M.; Patil, I.; Lüdecke, D. Automated Results Reporting as a Practical Tool to Improve Reproducibility and Methodological Best Practices Adoption. 2022. CRAN. Available online: https://easystats.github.io/report/reference/report-package.html (accessed on 10 September 2025).
39. Revelle, W. psych: Procedures for Psychological, Psychometric, and Personality Research (R Package). 2022. Available online: https://cran.r-project.org/web/packages/psych/index.html (accessed on 10 September 2025).
40. Wickham, H.; Bryan, J. readxl: Read Excel Files (R Package). 2022. Available online: https://readxl.tidyverse.org/ (accessed on 10 September 2025).
41. Korner-Nievergelt, F.; Roth, T.; von Felten, S.; Guelat, J.; Almasi, B.; Korner-Nievergelt, P. Bayesian Data Analysis in Ecology Using Linear Models with R, BUGS and Stan; Elsevier: New York, NY, USA, 2015.
42. Update to Material Design 3. Available online: https://m2.material.io (accessed on 23 October 2023).
43. Cards. Available online: https://m2.material.io/components/cards#specs (accessed on 11 February 2023).
44. Laubheimer, P. Cards: UI-Component Definition. Available online: https://www.nngroup.com/articles/cards-component/ (accessed on 8 February 2023).
45. Inhoff, A.W.; Radach, R. Definition and Computation of Oculomotor Measures in the Study of Cognitive Processes. In Eye Guidance in Reading and Scene Perception; Underwood, G., Ed.; Elsevier Science: New York, NY, USA, 1998; pp. 29–53.

| Metric Type | Examples |
|---|---|
| Eye-movement metrics | Direction (e.g., saccadic direction) |
| | Amplitude (e.g., scanpath length) |
| | Duration (e.g., saccade duration) |
| | Speed (e.g., scanpath speed) |
| | Acceleration (e.g., saccadic speed skewness) |
| | Shape (e.g., curvature of giro-saccades) |
| | Transitions between AOIs (e.g., order of first entries) |
| | Scanpath comparisons (e.g., sequence correlations) |
| Gaze-position metrics | Basic position (e.g., fixation coordinates) |
| | Dispersion (e.g., range, standard deviation) |
| | Similarity (e.g., Euclidean distance) |
| | Duration (e.g., fixation duration) |
| | Pupil diameter |
| Countable metrics | Saccades: number, proportion, frequency |
| | Proportion of giro-saccades |
| | Microsaccade frequency |
| | Square wave jerk frequency |
| | Smooth pursuit frequency |
| | Blink frequency |
| | Fixation count and rate |
| | AOI visits: number, proportion, frequency |
| | Regression and forward/backward gaze frequency |
| Delay and distance metrics | Onset time (e.g., saccade onset) |
| | Distance measurements (e.g., between AOIs) |

| Stage | Number of Participants | Number of Tested Websites | Equipment | Comments |

|---|---|---|---|---|

| 1—Pre-test | 22 participants: 9 PhD students and 13 university staff | 9 | Tobii X2-60 eye tracker (60 Hz) and 15” screen | Conducted at University of Technology Sydney (UTS) from 27.11.2019 to 6.12.2019. Methodology approved by local ethics committee (UTS HREC ETH19-3452) |

| 2—Pre-test | 20 participants (students) | 7 | TX-300 eye tracker (300 Hz) and 23” screen | Conducted at Wrocław University of Science and Technology from 28.06.2021 to 6.07.2021 |

| 3—Main study | 102 participants (computer science students) | 11 | TX-300 eye tracker (300 Hz) and 23” screen with chin rest | Conducted at Wrocław University of Science and Technology from 11.11.2022 to 20.12.2022 |

| No. | Website | Project Group | AOI |

|---|---|---|---|

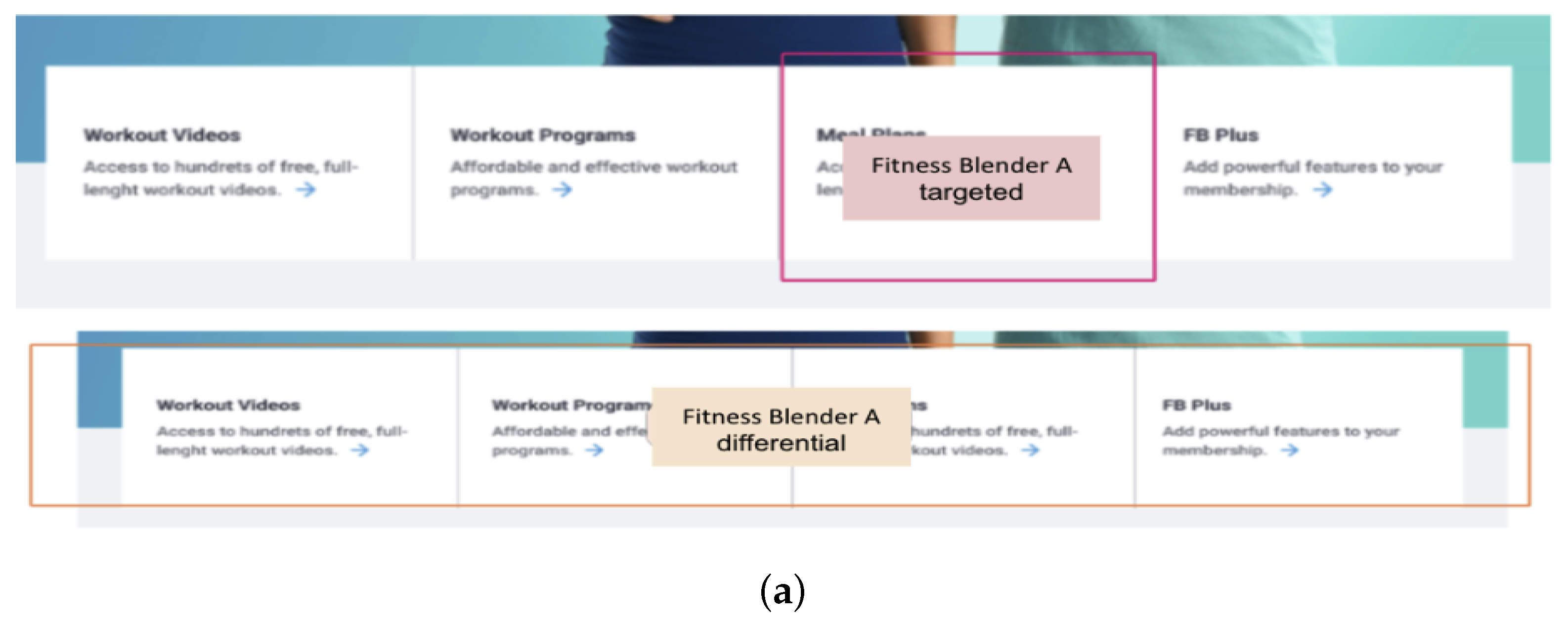

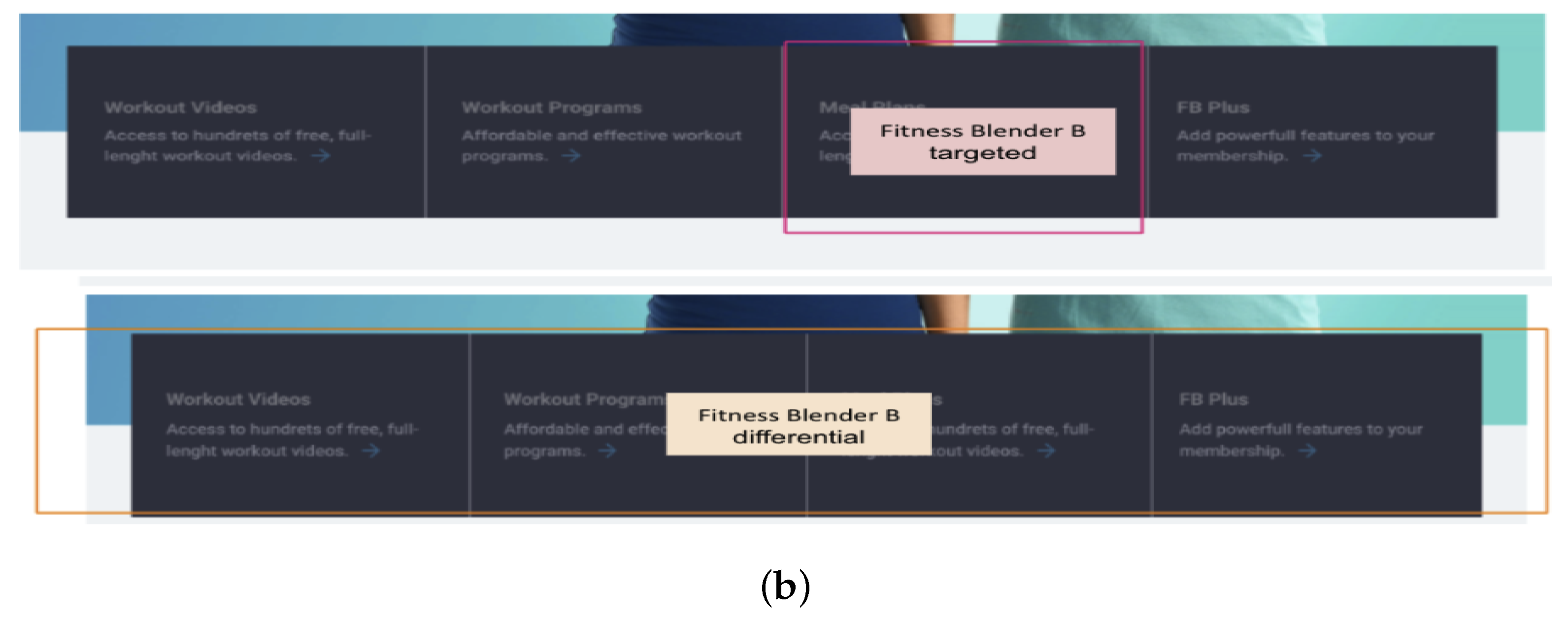

| 1 | Fitness Blender | Contrast | targeted, differential, search |

| 2 | Movies | Icon | targeted, differential, search |

| 3 | Green Energy | Icon | targeted, differential, search |

| 4 | InPost | Contrast | targeted, differential, search |

| 5 | Flower Delivery | Contrast | targeted, differential |

| 6 | OLX | Icon | search, targeted, differential |

| 7 | Poo-Pourri | Link | search, targeted, differential |

| 8 | Salads | Link | search, targeted, differential |

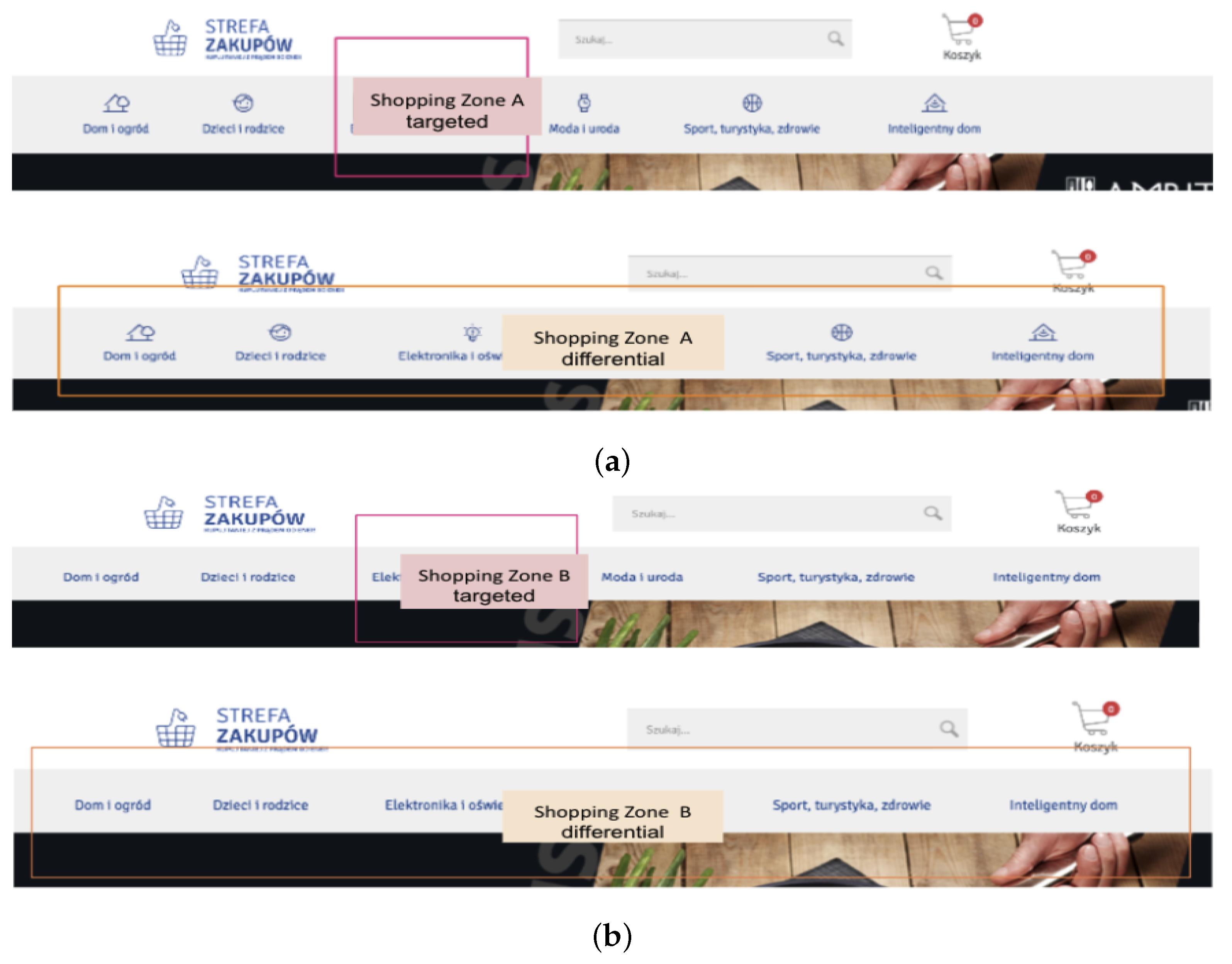

| 9 | Sunny Spain | Contrast | search, targeted, differential |

| 10 | Movie Database | Icon | targeted, differential, search |

| 11 | Recipe Blog | Link | targeted, differential, search |

| Metric Type | Metric Name in Tobii Studio | Definition (Tobii Studio Manual v3.4.8) | Interpretation |

|---|---|---|---|

| Time | Time to first fixation | Time from stimulus onset to the participant’s first fixation on the area of interest (AOI) (seconds). | Noticeability: indicates how easily and quickly an interface element is noticed. Also reflects the ease of finding the target or the visual accessibility of the element users seek. |

| Time | Duration of first fixation | Duration of the participant’s first fixation on the AOI (seconds). | Cognitive processing: measures the effort involved in extracting and understanding information. |

| Time | Fixation duration | Duration of each individual fixation within the AOI (seconds). | Cognitive processing: measures the effort involved in extracting and understanding information. |

| Time | Total fixation duration | Cumulative duration of all fixations within the AOI (seconds). | Cognitive processing: measures the effort involved in extracting and understanding information. |

| Time | Visit duration | Duration of each visit within the AOI (seconds). A visit spans from the first fixation on the AOI to the next fixation outside the AOI. | Interest: reflects how long visual attention is maintained after the user initially notices a specific area. |

| Time | Total visit duration | Total duration of all visits within the AOI (seconds). | Interest: reflects sustained visual attention on a specific area. |

| Time | Time to first mouse click | Time from stimulus onset to the first left mouse click on the AOI (seconds). | Task completion time. |

| Time | Time from first fixation to mouse click | Interval between the participant’s first fixation on the AOI and the subsequent mouse click on the same AOI. | Ease of recognizing the target: indicates the effectiveness of communication and clarity of the element’s purpose. |

| Time | Total saccade time during visits | Calculated as total visit duration minus total fixation duration. | *Proposed metric (not in Bojko’s classification). Based on Kotval and Goldberg [26], a greater number of saccades may indicate a less efficient search for information. |

| Count | Number of fixations before | Number of fixations before the participant first fixates on the AOI (count). | Noticeability: indicates how quickly and easily an element is noticed, reflecting ease of finding the target. |

| Count | Number of fixations | Number of fixations within the AOI (count). | Interest: indicates the duration of visual attention on the area after initial notice. |

| Count | Number of visits | Number of visits to the AOI. Each visit spans from the first fixation on the AOI to the next fixation outside it. | Ease of recognizing the target: reflects how effectively the element communicates its purpose. |
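To make the definitions in the table concrete, the following sketch derives several of the metrics from a list of fixations. It is an illustration and not Tobii Studio's implementation: the `Fixation` record and the function name are hypothetical, fixations are assumed to be already detected and labeled with at most one AOI, and a visit is assumed to run from the start of the first fixation in an unbroken run on the AOI to the end of the last fixation in that run.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fixation:
    start: float           # seconds from stimulus onset
    end: float             # seconds from stimulus onset
    aoi: Optional[str]     # AOI label, or None if outside every AOI


def aoi_metrics(fixations: list[Fixation], aoi: str) -> Optional[dict]:
    """Compute a few AOI metrics; returns None if the AOI was never fixated."""
    hits = [i for i, f in enumerate(fixations) if f.aoi == aoi]
    if not hits:
        return None
    # Group consecutive fixation indices on the AOI into visits.
    visits: list[list[int]] = []
    for i in hits:
        if visits and i == visits[-1][1] + 1:
            visits[-1][1] = i
        else:
            visits.append([i, i])
    total_fixation = sum(fixations[i].end - fixations[i].start for i in hits)
    total_visit = sum(fixations[b].end - fixations[a].start for a, b in visits)
    return {
        "time_to_first_fixation": fixations[hits[0]].start,
        "fixations_before": hits[0],
        "number_of_fixations": len(hits),
        "number_of_visits": len(visits),
        "total_fixation_duration": total_fixation,
        "total_visit_duration": total_visit,
        # Proposed metric from the table: visit time not spent fixating.
        "total_saccade_time_during_visits": total_visit - total_fixation,
    }


if __name__ == "__main__":
    # Hypothetical recording for one participant and one page.
    recording = [
        Fixation(0.00, 0.20, None),
        Fixation(0.25, 0.50, "search"),
        Fixation(0.55, 0.80, "targeted"),
        Fixation(0.85, 1.10, "targeted"),
        Fixation(1.20, 1.40, None),
        Fixation(1.45, 1.75, "targeted"),
    ]
    print(aoi_metrics(recording, "targeted"))
```

Under the visit definition assumed here, the total saccade time during visits equals the sum of the gaps between consecutive fixations inside each visit.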

| | | Contrast | | | | | Link | | |
|---|---|---|---|---|---|---|---|---|---|
| Eye-Tracking Metrics | AOI | Fitness Blender | InPost | Flower Delivery | Sunny Spain | Contrast Group | Poo-Pourri | Salads | Link Group |
| Time to first fixation | targeted | A<B | | | | | | | |
| | differential | | | | | | | | |
| Time to first fixation | targeted | | | | | | | | |
| Fixation time | targeted | A<B | A>B | A>B | | | | | |
| Total fixation time | search | A<B | A<B | A<B | A<B | | | | |
| Visit time | targeted | A<B | | | | | | | |
| | search | A<B | | | | | | | |
| Total visit time | search | A<B | A<B | A<B | A<B | | | | |
| Time from first fixation to mouse click | targeted | A<B | A<B | A<B | | | | | |
| | differential | A<B | A<B | A<B | A<B | | | | |
| Total saccade time during a visit | differential | A<B | | | | | | | |
| | search | A<B | A<B | A<B | | | | | |
| Number of visits | differential | A<B | A<B | A<B | | | | | |
| | search | A<B | A<B | | | | | | |
| Number of fixations | targeted | A>B | A<B | | | | | | |
| | differential | A<B | | | | | | | |
| | search | A<B | A>B | A<B | A>B | A<B | | | |
| Number of fixations before | targeted | A<B | A<B | A<B | A>B | | | | |
| | differential | A>B | | | | | | | |
| Time to complete the task | n/a | A<B | A<B | | | | | | |
| Respondents’ score of the ease of task completion | n/a | A>B | A>B | | | | | | |

| | | Icon | | | | | |
|---|---|---|---|---|---|---|---|
| Eye-Tracking Metrics | AOI | Filmy | Green Energy | OLX | Shopping Zone | Tchibo | Icon |
| Time to first fixation | targeted | A>B | | | | | |
| | differential | A>B | | | | | |
| Time to first fixation | targeted | A<B | | | | | |
| Fixation time | targeted | A>B | A<B | A<B | | | |
| Total fixation time | search | | | | | | |
| Visit time | targeted | | | | | | |
| | search | A>B | | | | | |
| Total visit time | search | | | | | | |
| Time from first fixation to mouse click | targeted | A<B | | | | | |
| | differential | A<B | | | | | |
| Total saccade time during a visit | differential | A<B | | | | | |
| | search | A<B | | | | | |
| Number of visits | differential | | | | | | |
| | search | | | | | | |
| Number of fixations | targeted | | | | | | |
| | differential | A<B | | | | | |
| | search | A>B | | | | | |
| Number of fixations before | targeted | A>B | A<B | A<B | A>B | | |
| | differential | A>B | A<B | | | | |
| Time to complete the task | n/a | | | | | | |
| Respondents’ score of the ease of task completion | n/a | | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Falkowska, J.; Sobecki, J.; Falkowski, M. Utilization of Eye-Tracking Metrics to Evaluate User Experiences—Technology Description and Preliminary Study. Sensors 2025, 25, 6101. https://doi.org/10.3390/s25196101
