A Hybrid Framework Integrating Past Decomposable Mixing and Inverted Transformer for GNSS-Based Landslide Displacement Prediction

Abstract

1. Introduction

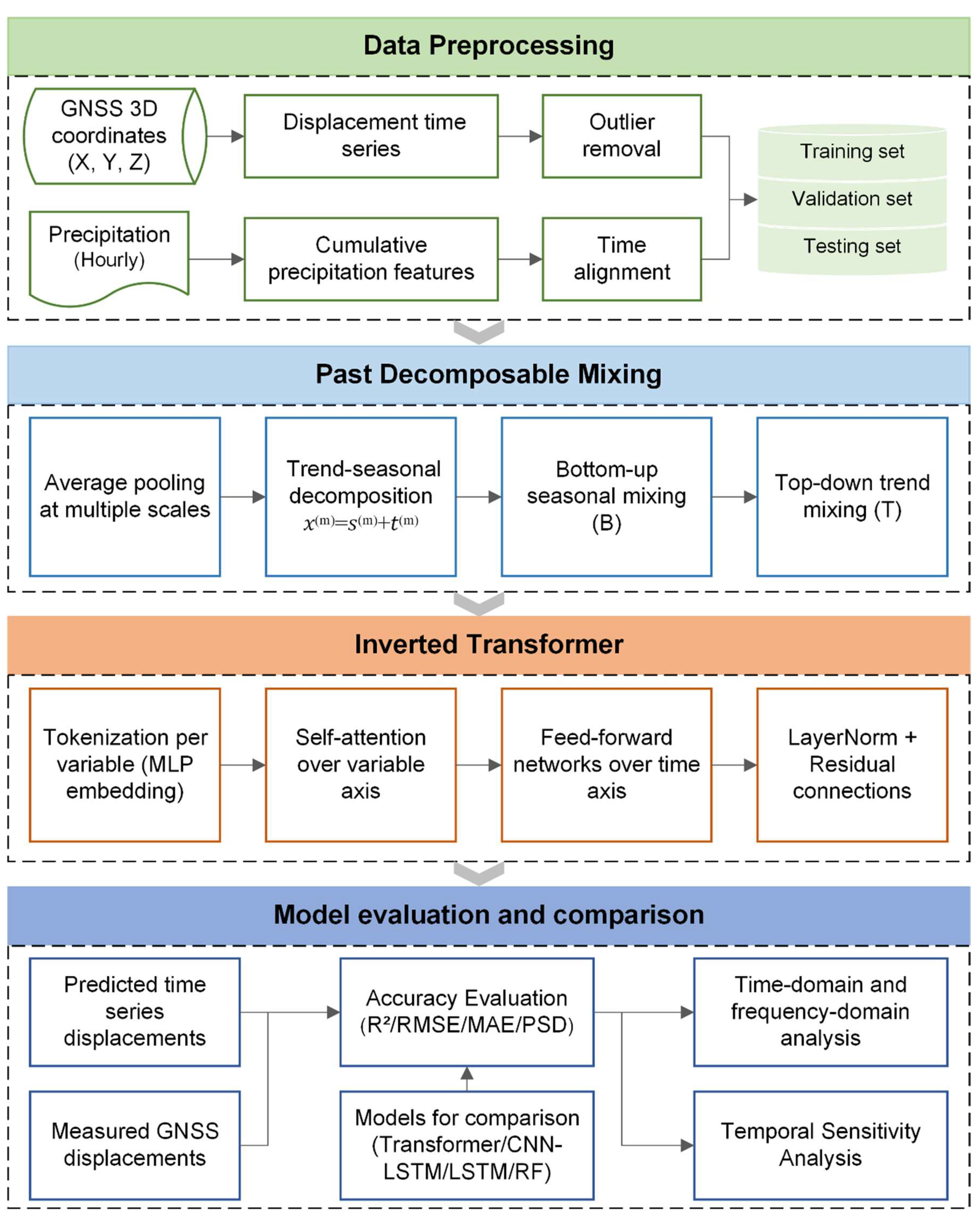

- (1) A novel Past Decomposable Mixing (PDM) module is integrated into the framework to perform structured decomposition and information mixing across temporal scales, improving the interpretability and robustness of time series feature extraction.

- (2) An inverted Transformer (iTransformer) architecture is introduced to decouple the temporal and variable dimensions in multivariate modeling, enhancing the ability to capture long-range dependencies and cross-variable dynamics.

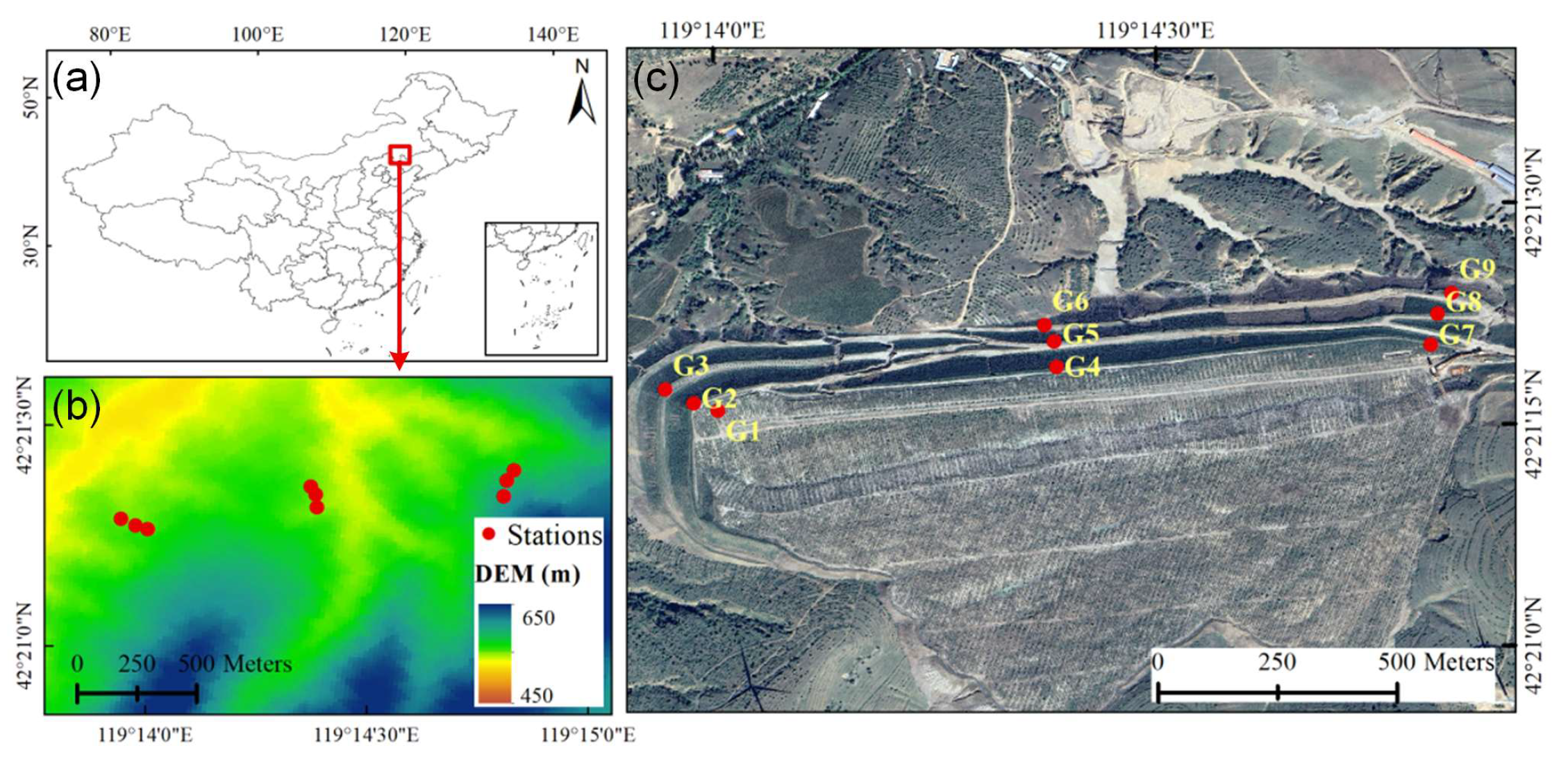

2. Study Area and Data

3. Methodology

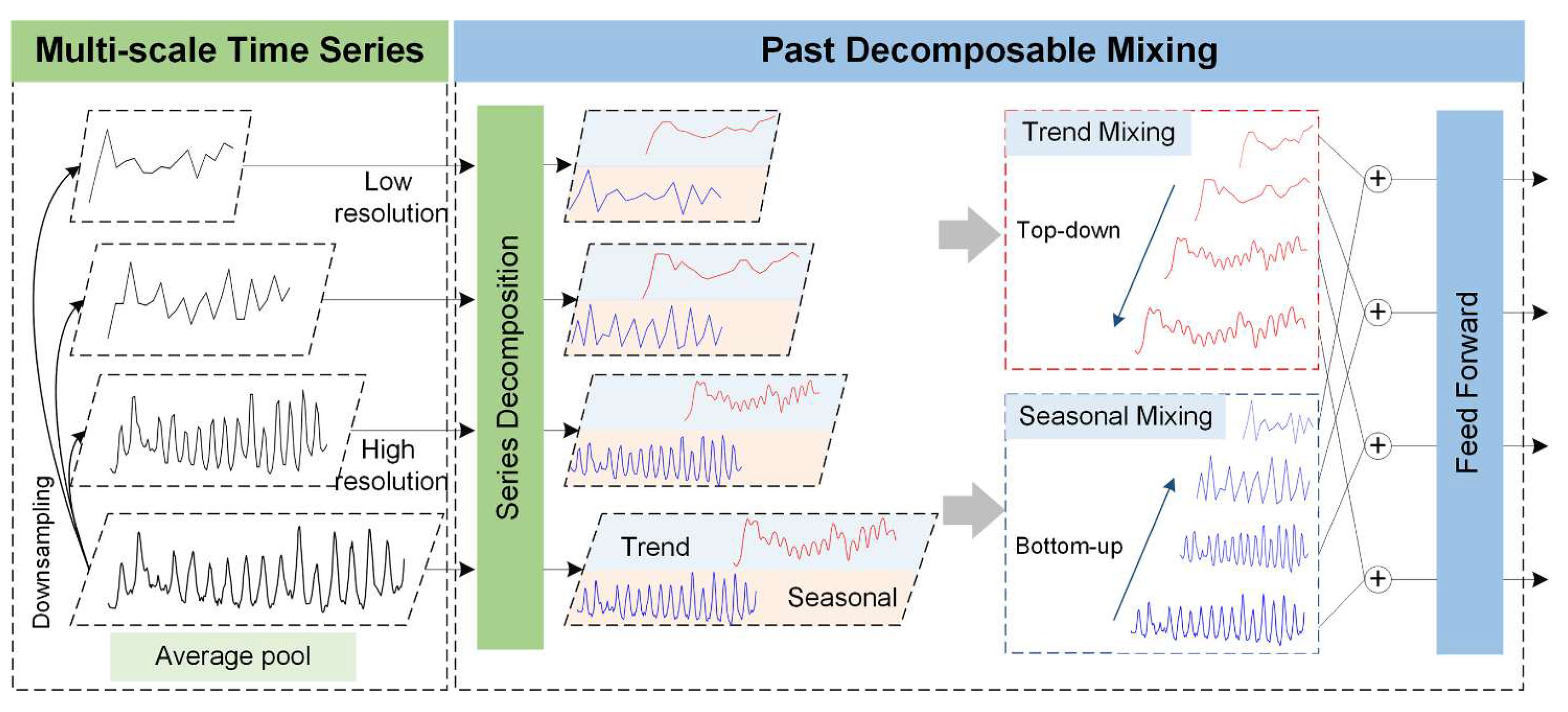

3.1. Past Decomposable Mixing (PDM) Module

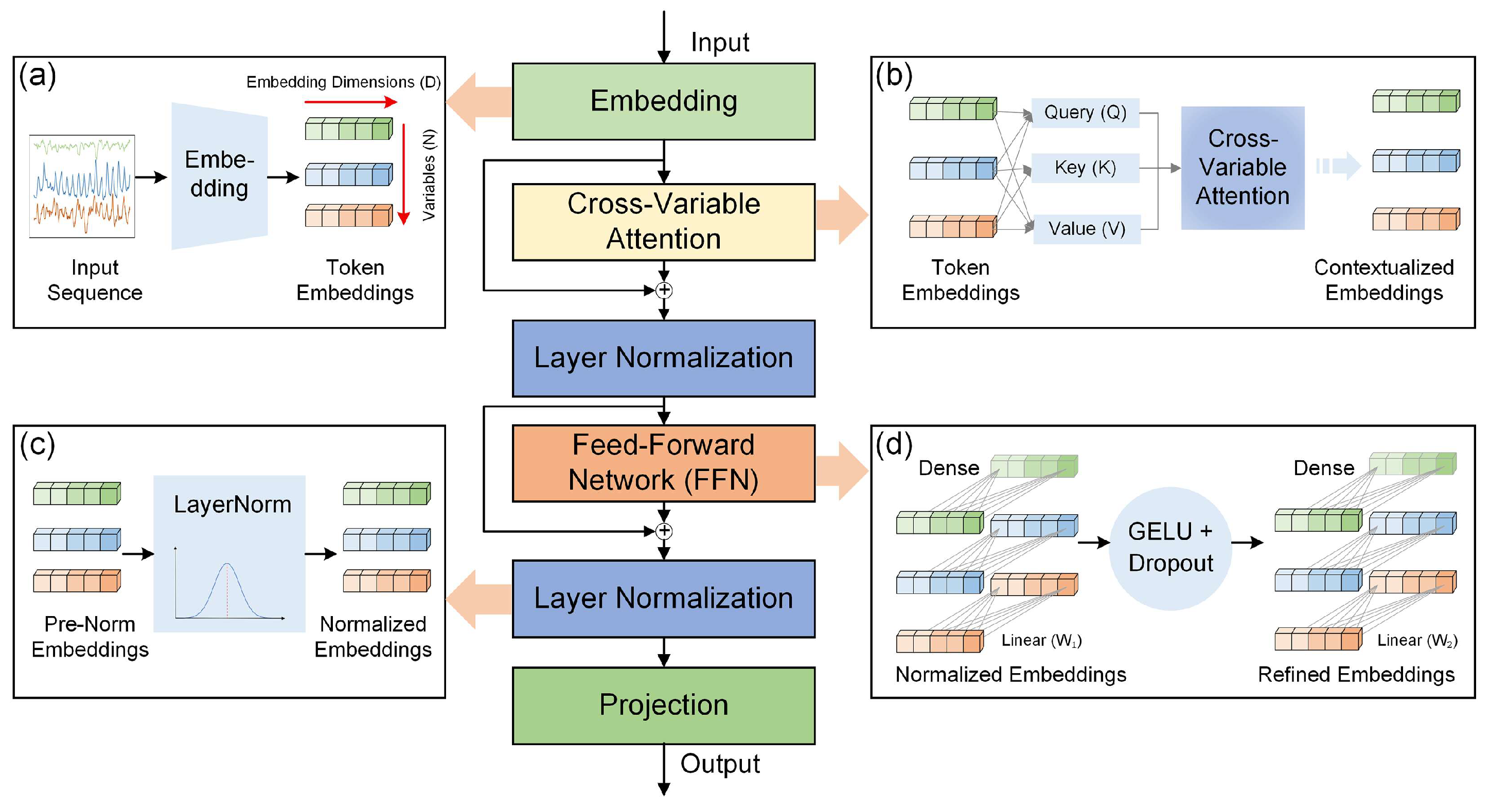
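The decomposition-and-mixing idea behind the PDM module can be illustrated with a short NumPy sketch. It follows the general TimeMixer-style recipe: a moving-average split into trend and seasonal parts, a multiscale pyramid built by average pooling, bottom-up mixing of the seasonal parts and top-down mixing of the trend parts. The moving-average kernel, the pooling factor of 2, and the fixed mixing weight `alpha` are illustrative assumptions; a trained PDM block would use learned layers where this sketch adds fixed, weighted copies, so this is not the authors' implementation.

```python
import numpy as np

def moving_average(x, kernel=5):
    """Trend estimate: centered moving average with edge padding (odd kernel)."""
    pad = kernel // 2
    padded = np.pad(x, (pad, pad), mode="edge")
    return np.convolve(padded, np.ones(kernel) / kernel, mode="valid")

def decompose(x, kernel=5):
    """Split a series into a trend (moving average) and a seasonal residual."""
    trend = moving_average(x, kernel)
    return trend, x - trend

def downsample(x, factor=2):
    """Average-pool a 1-D series by `factor` (length must be divisible by it)."""
    return x.reshape(-1, factor).mean(axis=1)

def upsample(x, factor=2):
    """Nearest-neighbour upsampling: repeat each value `factor` times."""
    return np.repeat(x, factor)

def pdm_block(x, n_scales=3, kernel=5, alpha=0.5):
    """Hypothetical PDM-style block; alpha is a fixed stand-in for learned mixing."""
    # Multiscale pyramid: scale 0 is the original (finest) resolution.
    scales = [x]
    for _ in range(n_scales - 1):
        scales.append(downsample(scales[-1]))
    parts = [decompose(s, kernel) for s in scales]
    trends = [p[0] for p in parts]
    seasons = [p[1] for p in parts]

    # Seasonal mixing, bottom-up: fine-scale detail is pooled into coarser scales.
    for i in range(1, n_scales):
        seasons[i] = seasons[i] + alpha * downsample(seasons[i - 1])
    # Trend mixing, top-down: the coarse-scale trend guides finer scales.
    for i in range(n_scales - 2, -1, -1):
        trends[i] = trends[i] + alpha * upsample(trends[i + 1])

    # Recombine trend and seasonal parts at every scale.
    return [t + s for t, s in zip(trends, seasons)]
```

With a 96-step input (the sequence length used in the hyperparameter table), `pdm_block` returns mixed series of length 96, 48, and 24; setting `alpha=0.0` disables mixing, so each scale is simply reconstructed from its own trend and seasonal parts.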

3.2. iTransformer
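The inversion that gives iTransformer its name can be shown with a minimal NumPy sketch. Each variable's entire look-back window becomes one token, and self-attention is computed between variables rather than between time steps. `d_model=128` and `n_heads=8` mirror the hyperparameter table. The four input variables (for example, three displacement axes plus precipitation), the forecast `horizon`, and the random, untrained weights are assumptions made only to show tensor shapes. Layer normalization, the feed-forward network, and the stacking of four encoder layers are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z, axis=-1):
    """Numerically stable softmax along `axis`."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def inverted_attention_forecast(x, d_model=128, n_heads=8, horizon=1, rng=rng):
    """x has shape (L, N): L past time steps of N variables.
    Returns a (horizon, N) forecast and the (n_heads, N, N) attention maps."""
    L, N = x.shape
    d_head = d_model // n_heads
    # Random, untrained weights: for shape illustration only.
    W_embed = rng.normal(scale=L ** -0.5, size=(L, d_model))
    W_q, W_k, W_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model))
                     for _ in range(3))
    W_out = rng.normal(scale=d_model ** -0.5, size=(d_model, horizon))

    # Inversion: each variable's whole series is embedded as one token -> (N, d_model).
    tokens = x.T @ W_embed

    def split(h):
        # (N, d_model) -> (n_heads, N, d_head)
        return h.reshape(N, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(tokens @ W_q), split(tokens @ W_k), split(tokens @ W_v)
    # Attention weights relate variables to variables, not time step to time step.
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))  # (n_heads, N, N)
    mixed = (attn @ v).transpose(1, 0, 2).reshape(N, d_model)
    tokens = tokens + mixed  # residual connection
    forecast = (tokens @ W_out).T  # linear projection per variable -> (horizon, N)
    return forecast, attn
```

Because the attention matrix is N × N, its cost depends on the number of variables rather than on the 96-step window, and each row can be read as how strongly one variable attends to the others.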

3.3. Model Validation
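The metric formulas are not reproduced in this section. The sketch below assumes the standard definitions used in the result tables: R² = 1 − SS_res/SS_tot, RMSE as the square root of the mean squared error, and MAE as the mean absolute error. RMSE and MAE are expressed in the unit of the displacement series (mm).

```python
import numpy as np

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def rmse(y_true, y_pred):
    """Root mean squared error, in the unit of the inputs (e.g., mm)."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error, in the unit of the inputs (e.g., mm)."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(diff)))

# Toy example (made-up values, not GNSS data).
obs = [1.0, 2.0, 3.0, 4.0]
pred = [1.5, 2.0, 2.5, 4.0]
print(r2_score(obs, pred), rmse(obs, pred), mae(obs, pred))
```

For the toy values, SS_res = 0.5 and SS_tot = 5, so R² = 0.9, RMSE = √0.125 ≈ 0.354, and MAE = 0.25.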

4. Results and Discussion

4.1. Model Implementation and Prediction Accuracy

4.1.1. Hyperparameter Settings

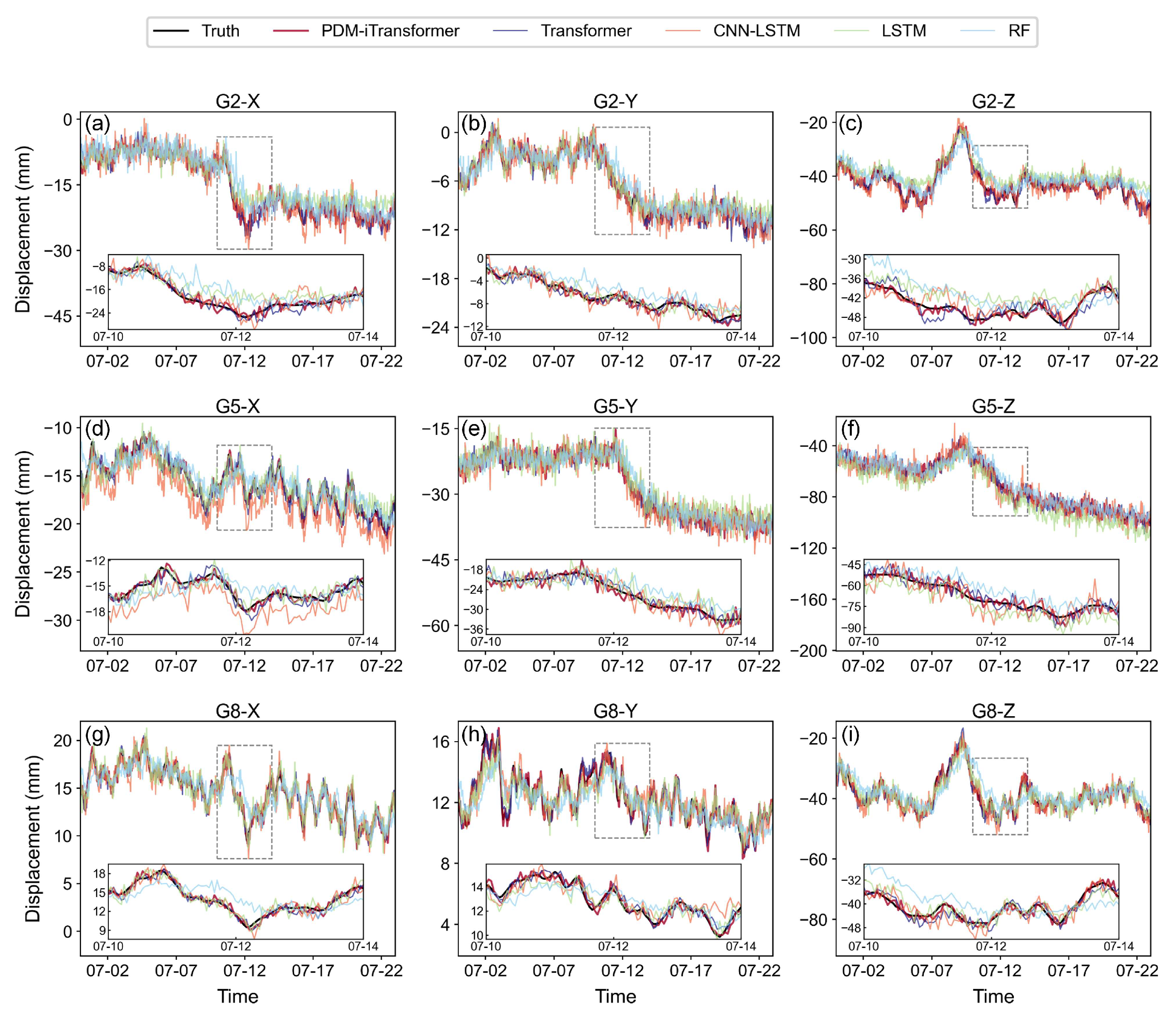

4.1.2. Time Series Prediction and Accuracy Verification

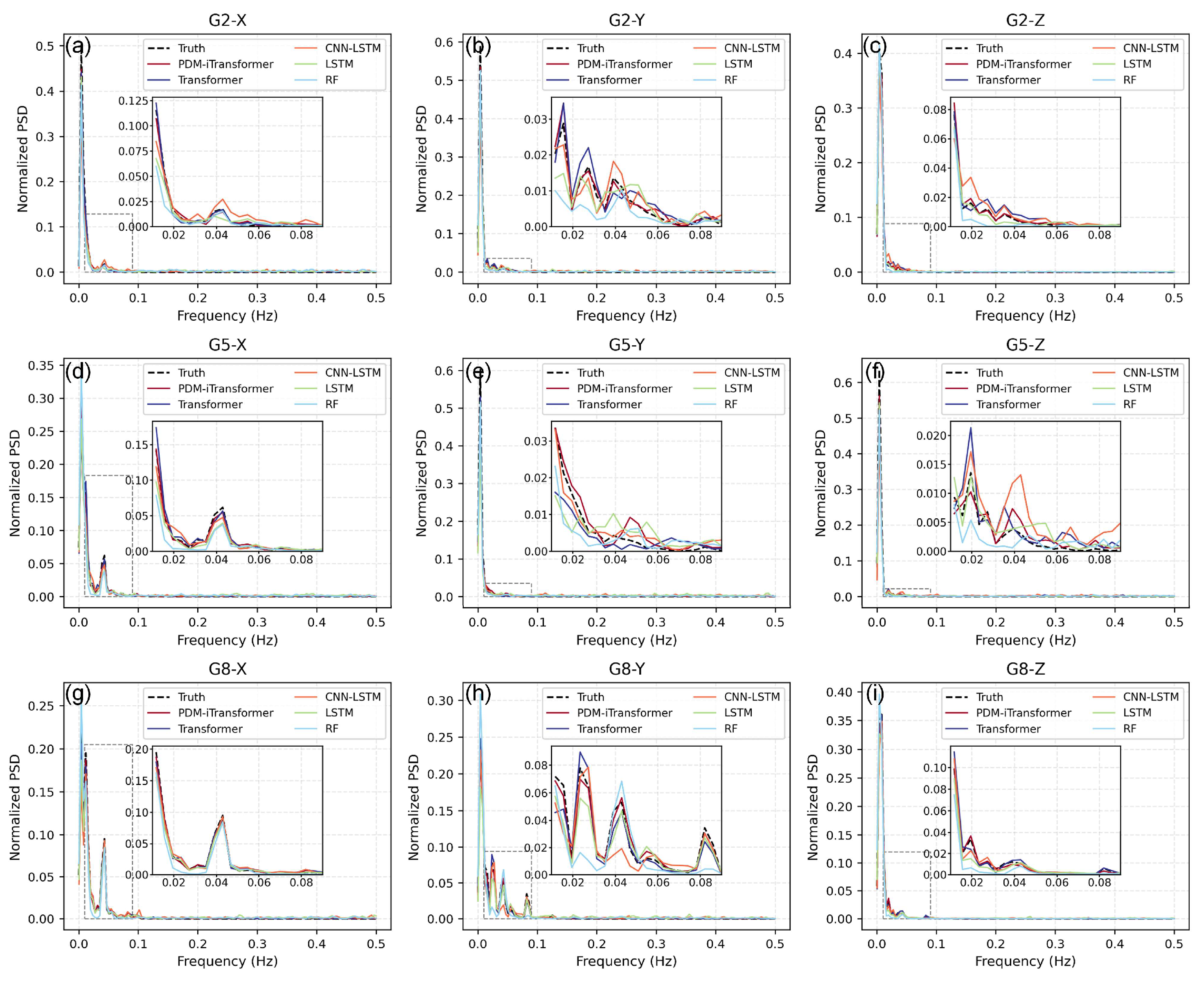

4.2. Frequency-Domain Characteristics Analysis

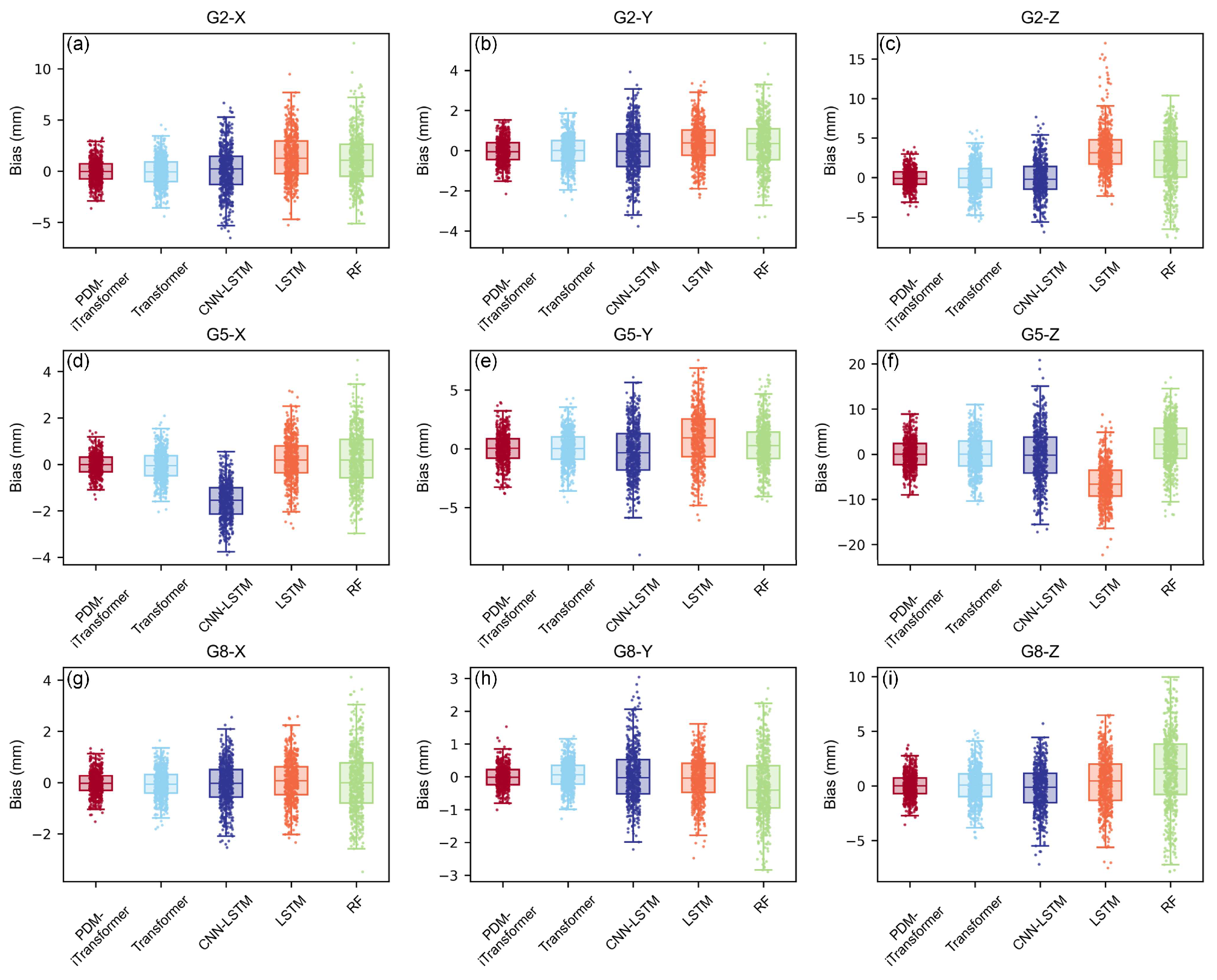

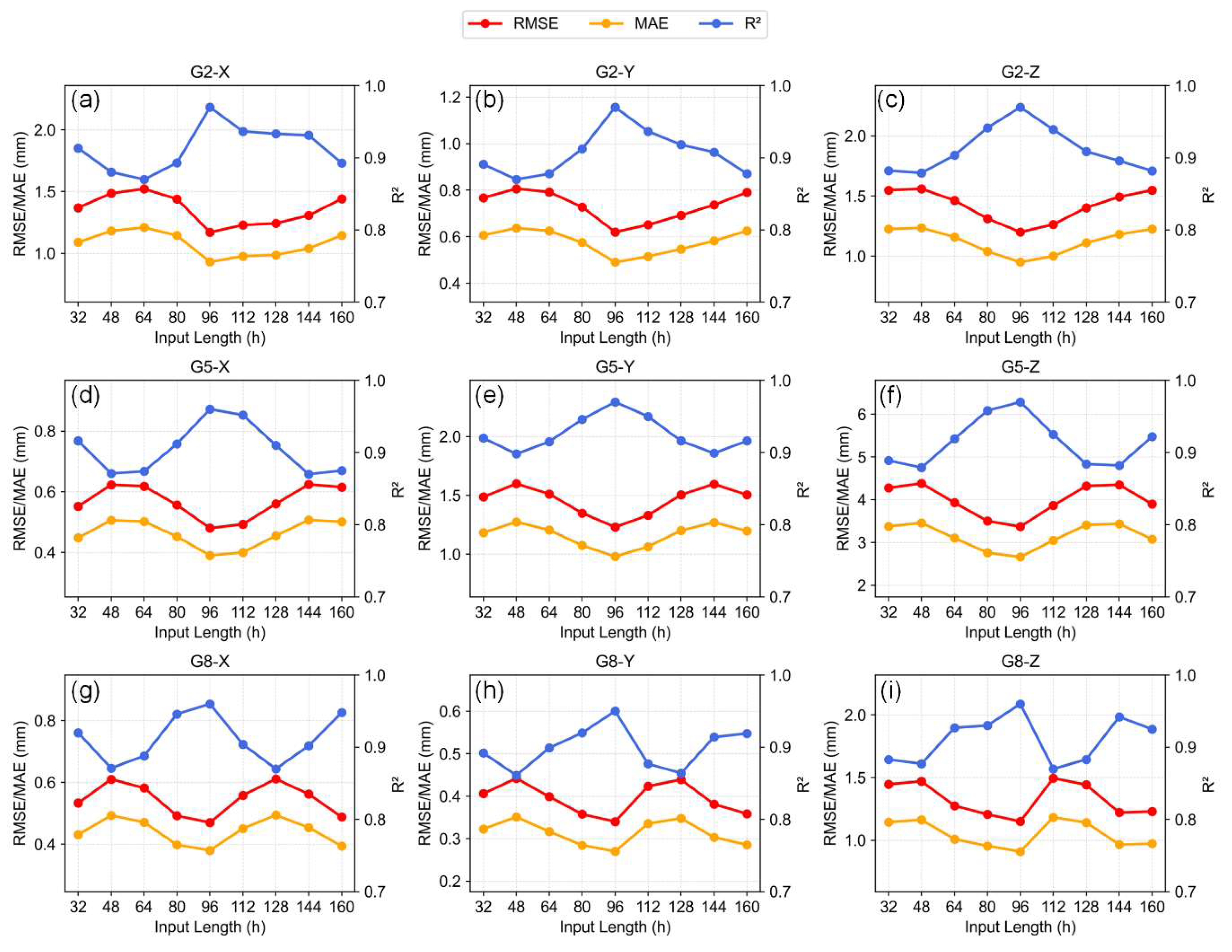
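Assuming the frequency-domain analysis compares the power spectral densities (PSDs) of observed and predicted displacement series, a one-sided, Hann-windowed periodogram can be computed with NumPy alone. The sampling rate `fs` is a placeholder because the GNSS sampling interval is not stated in this section. `scipy.signal.welch` is a common alternative that averages periodograms over overlapping segments to reduce variance.

```python
import numpy as np

def periodogram_psd(x, fs=1.0):
    """One-sided Hann-windowed periodogram of an evenly sampled series.
    Returns (frequencies, psd); fs is an assumed sampling rate."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                  # remove the DC offset
    n = x.size
    w = np.hanning(n)
    spec = np.fft.rfft(x * w)
    psd = np.abs(spec) ** 2 / (fs * np.sum(w ** 2))
    # Fold negative frequencies in: double every bin except DC (and Nyquist if n is even).
    if n % 2 == 0:
        psd[1:-1] *= 2
    else:
        psd[1:] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, psd

# Synthetic check: a sinusoid at 0.1 cycles per sample peaks at 0.1.
t = np.arange(200)
freqs, psd = periodogram_psd(np.sin(2 * np.pi * 0.1 * t))
print(freqs[np.argmax(psd)])
```

Applying the same function to an observed series and to each model's prediction allows their spectra to be overlaid, showing which frequency bands (long-term trend versus short-term fluctuation) a model reproduces or smooths out.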

4.3. Prediction Uncertainty and Temporal Sensitivity Analysis
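Appendix A reports each metric as mean ± standard deviation for several values of t. The following sketch assumes that t denotes the input sequence length, which is consistent with the hyperparameter table's sequence length = 96 (the value with the highest R² for every station and axis in Appendix A), and that the spread comes from repeated training runs. The run scores are made-up placeholders. The paper does not state whether it uses the sample or the population standard deviation; `statistics.stdev` computes the sample version.

```python
import statistics

def summarize_runs(scores_by_t):
    """scores_by_t maps input length t -> list of scores from repeated runs.
    Returns ({t: (mean, sample std)}, t with the highest mean score)."""
    summary = {t: (statistics.mean(v), statistics.stdev(v))
               for t, v in scores_by_t.items()}
    best_t = max(summary, key=lambda t: summary[t][0])
    return summary, best_t

# Hypothetical R² values from three runs per input length (not the paper's data).
runs = {32: [0.90, 0.92, 0.91], 96: [0.96, 0.97, 0.98], 160: [0.88, 0.89, 0.87]}
summary, best = summarize_runs(runs)
for t, (m, s) in sorted(summary.items()):
    print(f"t = {t}: R2 = {m:.3f} ± {s:.3f}")
print("best t:", best)
```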

5. Discussion

6. Conclusions

- (1) PDM enables multiscale feature extraction and interpretable decomposition, effectively isolating the trend and seasonal components of GNSS displacement sequences. This enhances the model’s ability to represent complex temporal variations and contributes to improved prediction robustness.

- (2) The iTransformer architecture captures cross-variable interactions between displacement and precipitation features, using variable-wise attention and temporal abstraction to model nonlinear dependencies. This enables joint modeling of kinematic deformation and precipitation-induced triggers.

- (3) The combined framework achieves consistent performance gains over the compared baseline models, with R² improved by 16.2–48.3% and RMSE and MAE reduced by up to 1.33 mm and 1.08 mm, respectively. These improvements reflect the synergy between multiscale decomposition and attention-based modeling in capturing both long-term trends and short-term fluctuations.
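The conclusions quote relative R² gains and absolute RMSE/MAE reductions. This section does not state how the 16.2–48.3% range was aggregated (which models, stations, and axes were averaged), so the sketch shows only the per-comparison arithmetic. It assumes relative gain = (R²_new − R²_baseline) / R²_baseline × 100 and reduction = error_baseline − error_new, applied to the G1 X-axis row of the model comparison table (PDM-iTransformer vs. RF).

```python
def relative_gain_pct(new, baseline):
    """Relative improvement of a higher-is-better score, in percent."""
    return (new - baseline) / baseline * 100.0

def absolute_reduction(new, baseline):
    """Absolute reduction of a lower-is-better error, in the inputs' unit (mm)."""
    return baseline - new

# G1, X axis: R² 0.96 vs 0.5 and RMSE 0.39 mm vs 1.32 mm (PDM-iTransformer vs RF).
print(round(relative_gain_pct(0.96, 0.5), 1))   # 92.0
print(round(absolute_reduction(0.39, 1.32), 2))  # 0.93
```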

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Station | Axis | t | R² | RMSE (mm) | MAE (mm) |
|---|---|---|---|---|---|
| G2 | X | 32 | 0.913 ± 0.010 | 1.369 ± 0.010 | 1.089 ± 0.009 |
| | | 48 | 0.880 ± 0.014 | 1.485 ± 0.009 | 1.181 ± 0.008 |
| | | 64 | 0.870 ± 0.012 | 1.521 ± 0.007 | 1.209 ± 0.007 |
| | | 80 | 0.893 ± 0.012 | 1.440 ± 0.009 | 1.144 ± 0.008 |
| | | 96 | 0.970 ± 0.009 | 1.170 ± 0.009 | 0.930 ± 0.008 |
| | | 112 | 0.937 ± 0.009 | 1.228 ± 0.011 | 0.977 ± 0.010 |
| | | 128 | 0.933 ± 0.008 | 1.243 ± 0.010 | 0.986 ± 0.009 |
| | | 144 | 0.931 ± 0.013 | 1.306 ± 0.011 | 1.039 ± 0.010 |
| | | 160 | 0.893 ± 0.012 | 1.441 ± 0.009 | 1.145 ± 0.008 |
| | Y | 32 | 0.891 ± 0.012 | 0.767 ± 0.008 | 0.607 ± 0.007 |
| | | 48 | 0.870 ± 0.008 | 0.806 ± 0.011 | 0.637 ± 0.010 |
| | | 64 | 0.878 ± 0.014 | 0.792 ± 0.011 | 0.625 ± 0.010 |
| | | 80 | 0.912 ± 0.013 | 0.727 ± 0.010 | 0.575 ± 0.009 |
| | | 96 | 0.970 ± 0.009 | 0.620 ± 0.011 | 0.490 ± 0.010 |
| | | 112 | 0.937 ± 0.009 | 0.651 ± 0.009 | 0.515 ± 0.008 |
| | | 128 | 0.918 ± 0.009 | 0.692 ± 0.010 | 0.547 ± 0.009 |
| | | 144 | 0.908 ± 0.010 | 0.736 ± 0.009 | 0.581 ± 0.008 |
| | | 160 | 0.878 ± 0.011 | 0.790 ± 0.007 | 0.625 ± 0.007 |
| | Z | 32 | 0.882 ± 0.011 | 1.548 ± 0.008 | 1.226 ± 0.007 |
| | | 48 | 0.879 ± 0.010 | 1.560 ± 0.007 | 1.235 ± 0.007 |
| | | 64 | 0.903 ± 0.012 | 1.464 ± 0.010 | 1.159 ± 0.009 |
| | | 80 | 0.942 ± 0.009 | 1.312 ± 0.009 | 1.039 ± 0.008 |
| | | 96 | 0.970 ± 0.010 | 1.200 ± 0.010 | 0.950 ± 0.009 |
| | | 112 | 0.939 ± 0.010 | 1.263 ± 0.012 | 1.000 ± 0.011 |
| | | 128 | 0.909 ± 0.011 | 1.403 ± 0.008 | 1.111 ± 0.007 |
| | | 144 | 0.896 ± 0.013 | 1.493 ± 0.009 | 1.182 ± 0.008 |
| | | 160 | 0.882 ± 0.009 | 1.549 ± 0.011 | 1.226 ± 0.010 |
| G5 | X | 32 | 0.916 ± 0.011 | 0.552 ± 0.008 | 0.448 ± 0.007 |
| | | 48 | 0.871 ± 0.012 | 0.623 ± 0.007 | 0.506 ± 0.007 |
| | | 64 | 0.874 ± 0.008 | 0.618 ± 0.008 | 0.502 ± 0.007 |
| | | 80 | 0.912 ± 0.012 | 0.556 ± 0.008 | 0.452 ± 0.007 |
| | | 96 | 0.960 ± 0.009 | 0.480 ± 0.012 | 0.390 ± 0.011 |
| | | 112 | 0.952 ± 0.008 | 0.493 ± 0.011 | 0.400 ± 0.010 |
| | | 128 | 0.910 ± 0.014 | 0.560 ± 0.010 | 0.455 ± 0.009 |
| | | 144 | 0.870 ± 0.014 | 0.624 ± 0.011 | 0.507 ± 0.010 |
| | | 160 | 0.875 ± 0.013 | 0.615 ± 0.011 | 0.501 ± 0.010 |
| | Y | 32 | 0.920 ± 0.010 | 1.486 ± 0.008 | 1.183 ± 0.007 |
| | | 48 | 0.898 ± 0.009 | 1.599 ± 0.011 | 1.274 ± 0.010 |
| | | 64 | 0.915 ± 0.012 | 1.512 ± 0.010 | 1.205 ± 0.009 |
| | | 80 | 0.946 ± 0.011 | 1.350 ± 0.011 | 1.075 ± 0.010 |
| | | 96 | 0.970 ± 0.009 | 1.230 ± 0.011 | 0.980 ± 0.010 |
| | | 112 | 0.950 ± 0.011 | 1.331 ± 0.009 | 1.064 ± 0.008 |
| | | 128 | 0.916 ± 0.008 | 1.505 ± 0.008 | 1.202 ± 0.007 |
| | | 144 | 0.899 ± 0.013 | 1.596 ± 0.008 | 1.270 ± 0.007 |
| | | 160 | 0.916 ± 0.010 | 1.505 ± 0.009 | 1.198 ± 0.008 |
| | Z | 32 | 0.889 ± 0.012 | 4.276 ± 0.011 | 3.376 ± 0.010 |
| | | 48 | 0.879 ± 0.010 | 4.381 ± 0.011 | 3.458 ± 0.010 |
| | | 64 | 0.919 ± 0.011 | 3.931 ± 0.007 | 3.102 ± 0.007 |
| | | 80 | 0.958 ± 0.011 | 3.502 ± 0.010 | 2.763 ± 0.009 |
| | | 96 | 0.970 ± 0.009 | 3.370 ± 0.009 | 2.660 ± 0.008 |
| | | 112 | 0.925 ± 0.014 | 3.864 ± 0.008 | 3.049 ± 0.007 |
| | | 128 | 0.884 ± 0.013 | 4.320 ± 0.008 | 3.410 ± 0.007 |
| | | 144 | 0.882 ± 0.014 | 4.349 ± 0.009 | 3.434 ± 0.008 |
| | | 160 | 0.922 ± 0.013 | 3.899 ± 0.012 | 3.078 ± 0.011 |
| G8 | X | 32 | 0.920 ± 0.012 | 0.533 ± 0.009 | 0.431 ± 0.008 |
| | | 48 | 0.871 ± 0.014 | 0.610 ± 0.010 | 0.493 ± 0.009 |
| | | 64 | 0.888 ± 0.009 | 0.582 ± 0.011 | 0.471 ± 0.010 |
| | | 80 | 0.946 ± 0.009 | 0.492 ± 0.009 | 0.398 ± 0.008 |
| | | 96 | 0.960 ± 0.008 | 0.470 ± 0.012 | 0.380 ± 0.011 |
| | | 112 | 0.904 ± 0.010 | 0.558 ± 0.012 | 0.451 ± 0.011 |
| | | 128 | 0.870 ± 0.010 | 0.611 ± 0.008 | 0.494 ± 0.007 |
| | | 144 | 0.902 ± 0.010 | 0.562 ± 0.009 | 0.454 ± 0.008 |
| | | 160 | 0.948 ± 0.013 | 0.488 ± 0.009 | 0.394 ± 0.008 |
| | Y | 32 | 0.892 ± 0.010 | 0.406 ± 0.008 | 0.323 ± 0.007 |
| | | 48 | 0.861 ± 0.010 | 0.442 ± 0.007 | 0.351 ± 0.007 |
| | | 64 | 0.899 ± 0.011 | 0.399 ± 0.010 | 0.317 ± 0.009 |
| | | 80 | 0.920 ± 0.009 | 0.358 ± 0.010 | 0.285 ± 0.009 |
| | | 96 | 0.950 ± 0.013 | 0.340 ± 0.007 | 0.270 ± 0.007 |
| | | 112 | 0.877 ± 0.008 | 0.423 ± 0.008 | 0.335 ± 0.007 |
| | | 128 | 0.864 ± 0.014 | 0.439 ± 0.012 | 0.348 ± 0.011 |
| | | 144 | 0.914 ± 0.013 | 0.381 ± 0.008 | 0.303 ± 0.007 |
| | | 160 | 0.919 ± 0.009 | 0.359 ± 0.008 | 0.286 ± 0.007 |
| | Z | 32 | 0.883 ± 0.008 | 1.447 ± 0.009 | 1.145 ± 0.008 |
| | | 48 | 0.877 ± 0.013 | 1.470 ± 0.012 | 1.163 ± 0.011 |
| | | 64 | 0.927 ± 0.012 | 1.275 ± 0.008 | 1.009 ± 0.007 |
| | | 80 | 0.930 ± 0.012 | 1.207 ± 0.010 | 0.955 ± 0.009 |
| | | 96 | 0.960 ± 0.013 | 1.150 ± 0.011 | 0.910 ± 0.010 |
| | | 112 | 0.870 ± 0.008 | 1.495 ± 0.008 | 1.183 ± 0.007 |
| | | 128 | 0.883 ± 0.010 | 1.443 ± 0.011 | 1.142 ± 0.010 |
| | | 144 | 0.942 ± 0.009 | 1.221 ± 0.009 | 0.966 ± 0.008 |
| | | 160 | 0.925 ± 0.013 | 1.230 ± 0.010 | 0.973 ± 0.009 |


| Method | Hyperparameters | Explanation |
|---|---|---|
| PDM-iTransformer | sequence length = 96, batch size = 24, attention heads = 8, learning rate = 1 × 10⁻⁴, epoch = 150, patience = 15, layers = 4, D = 128, FFN_hidden = 256, activation = GELU, dropout = 0.2, weight_decay = 1 × 10⁻⁴, optimizer = AdamW, lr_scheduler = Cosine decay | sequence length: Number of past time steps used as input. batch size: Number of samples per training batch. attention heads: Number of attention heads in multi-head attention. learning rate: Step size for optimizer updates. epoch: Maximum number of training epochs. patience: Number of epochs without improvement before early stopping. cnn_filters: Number of filters in the CNN layers. lstm_units: Number of hidden units in the LSTM layers. dropout: Dropout rate used for regularization to prevent overfitting. layers: Number of encoder layers in the Transformer or iTransformer. D: Embedding dimension of input features for each attention head. FFN_hidden: Number of hidden units in the feed-forward network. activation: Activation function used in the network. weight_decay: L2 regularization parameter controlling model complexity. optimizer: Optimization algorithm used for gradient updates. lr_scheduler: Learning rate adjustment strategy during training. |
| Transformer | sequence length = 96, batch size = 24, attention heads = 8, learning rate = 1 × 10⁻⁴, epoch = 150, patience = 15, layers = 4, D = 128, FFN_hidden = 256, activation = GELU, dropout = 0.2, weight_decay = 1 × 10⁻⁴, optimizer = AdamW, lr_scheduler = Cosine decay | |
| CNN-LSTM | sequence length = 96, batch size = 24, cnn_filters = 64, lstm_units = 128, dropout = 0.2, learning rate = 1 × 10⁻⁴, epoch = 150, patience = 15 | |
| LSTM | sequence length = 96, batch size = 24, lstm_units = 128, dropout = 0.2, learning rate = 1 × 10⁻⁴, epoch = 150, patience = 15 | |
| RF | n_estimators = 500, max_depth = 20, min_samples_split = 4, min_samples_leaf = 2 | n_estimators: Number of decision trees. max_depth: Maximum depth of each tree. min_samples_split: Minimum number of samples required to split an internal node. min_samples_leaf: Minimum number of samples required to be at a leaf node. |
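The PDM-iTransformer training settings in the table above (learning rate 1 × 10⁻⁴, 150 epochs, cosine decay, early stopping with patience 15) can be written as two small plain-Python helpers. The dictionary key names and the final learning rate of 0 for the cosine schedule are assumptions. In PyTorch, the same behavior is typically obtained with `torch.optim.AdamW(params, lr=1e-4, weight_decay=1e-4)` and `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=150)`, whose default `eta_min` is 0.

```python
import math

# Values from the hyperparameter table; key names are hypothetical.
CONFIG = {
    "seq_len": 96, "batch_size": 24, "n_heads": 8, "lr": 1e-4,
    "epochs": 150, "patience": 15, "layers": 4, "d_model": 128,
    "ffn_hidden": 256, "activation": "gelu", "dropout": 0.2,
    "weight_decay": 1e-4, "optimizer": "AdamW", "lr_scheduler": "cosine",
}

def cosine_lr(epoch, total_epochs=CONFIG["epochs"], base_lr=CONFIG["lr"], min_lr=0.0):
    """Cosine-decay learning rate for a 0-based epoch index (min_lr is assumed)."""
    progress = min(epoch, total_epochs) / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=CONFIG["patience"], min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop, `cosine_lr(epoch)` would set the optimizer's learning rate at the start of each epoch, and the loop would break as soon as `EarlyStopping.step` returns True, so training can end well before the 150-epoch cap.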

| Station | Axis | R² PDM-iTransformer | R² Transformer | R² CNN-LSTM | R² LSTM | R² RF | RMSE (mm) PDM-iTransformer | RMSE (mm) Transformer | RMSE (mm) CNN-LSTM | RMSE (mm) LSTM | RMSE (mm) RF | MAE (mm) PDM-iTransformer | MAE (mm) Transformer | MAE (mm) CNN-LSTM | MAE (mm) LSTM | MAE (mm) RF |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| G1 | X | 0.96 | 0.93 | 0.82 | 0.81 | 0.5 | 0.39 | 0.5 | 0.8 | 0.82 | 1.32 | 0.31 | 0.4 | 0.64 | 0.65 | 1.07 |
| | Y | 0.95 | 0.9 | 0.78 | 0.84 | 0.44 | 0.28 | 0.41 | 0.6 | 0.52 | 0.96 | 0.23 | 0.32 | 0.47 | 0.4 | 0.75 |
| | Z | 0.97 | 0.92 | 0.86 | 0.86 | 0.66 | 1.27 | 1.98 | 2.67 | 2.67 | 4.16 | 1.01 | 1.56 | 2.11 | 2.12 | 3.34 |
| G2 | X | 0.97 | 0.96 | 0.89 | 0.85 | 0.84 | 1.14 | 1.38 | 2.19 | 2.59 | 2.71 | 0.9 | 1.11 | 1.73 | 2.04 | 2.11 |
| | Y | 0.97 | 0.96 | 0.89 | 0.92 | 0.89 | 0.61 | 0.77 | 1.22 | 1.06 | 1.25 | 0.49 | 0.61 | 0.98 | 0.84 | 1 |
| | Z | 0.97 | 0.91 | 0.88 | 0.53 | 0.62 | 1.2 | 1.91 | 2.26 | 4.49 | 4 | 0.94 | 1.51 | 1.78 | 3.62 | 3.33 |
| G3 | X | 0.96 | 0.94 | 0.85 | 0.88 | 0.61 | 0.39 | 0.5 | 0.8 | 0.71 | 1.29 | 0.31 | 0.39 | 0.63 | 0.58 | 1.04 |
| | Y | 0.95 | 0.88 | 0.8 | 0.81 | 0.4 | 0.25 | 0.39 | 0.5 | 0.49 | 0.87 | 0.2 | 0.31 | 0.4 | 0.39 | 0.68 |
| | Z | 0.97 | 0.93 | 0.89 | 0.67 | 0.69 | 1.2 | 1.68 | 2.21 | 3.78 | 3.65 | 0.97 | 1.33 | 1.75 | 3.02 | 2.97 |
| G4 | X | 0.96 | 0.93 | 0.87 | 0.88 | 0.66 | 0.39 | 0.5 | 0.7 | 0.66 | 1.11 | 0.31 | 0.4 | 0.56 | 0.54 | 0.92 |
| | Y | 0.94 | 0.79 | 0.69 | 0.64 | 0.51 | 0.31 | 0.61 | 0.75 | 0.8 | 0.94 | 0.24 | 0.5 | 0.57 | 0.62 | 0.76 |
| | Z | 0.96 | 0.92 | 0.89 | 0.83 | 0.67 | 1.21 | 1.78 | 2.11 | 2.63 | 3.66 | 0.97 | 1.41 | 1.69 | 2.11 | 3.02 |
| G5 | X | 0.96 | 0.93 | 0.45 | 0.84 | 0.73 | 0.46 | 0.64 | 1.76 | 0.95 | 1.23 | 0.37 | 0.51 | 1.58 | 0.75 | 0.99 |
| | Y | 0.97 | 0.96 | 0.9 | 0.87 | 0.93 | 1.29 | 1.39 | 2.23 | 2.5 | 1.9 | 1.01 | 1.1 | 1.81 | 2.02 | 1.46 |
| | Z | 0.97 | 0.95 | 0.9 | 0.83 | 0.92 | 3.29 | 4.06 | 5.9 | 7.84 | 5.47 | 2.65 | 3.26 | 4.7 | 6.81 | 4.41 |
| G6 | X | 0.96 | 0.94 | 0.88 | 0.84 | 0.67 | 0.36 | 0.45 | 0.66 | 0.76 | 1.09 | 0.29 | 0.36 | 0.53 | 0.6 | 0.87 |
| | Y | 0.96 | 0.91 | 0.7 | 0.67 | 0.64 | 0.3 | 0.46 | 0.82 | 0.86 | 0.9 | 0.24 | 0.36 | 0.62 | 0.66 | 0.72 |
| | Z | 0.96 | 0.92 | 0.89 | 0.49 | 0.69 | 1.09 | 1.52 | 1.85 | 3.94 | 3.08 | 0.85 | 1.22 | 1.47 | 3.04 | 2.53 |
| G7 | X | 0.96 | 0.94 | 0.86 | 0.83 | 0.55 | 0.34 | 0.39 | 0.62 | 0.66 | 1.09 | 0.27 | 0.31 | 0.49 | 0.53 | 0.89 |
| | Y | 0.95 | 0.81 | 0.72 | 0.63 | 0.43 | 0.26 | 0.51 | 0.62 | 0.71 | 0.88 | 0.21 | 0.4 | 0.49 | 0.54 | 0.69 |
| | Z | 0.96 | 0.93 | 0.88 | 0.85 | 0.67 | 1.19 | 1.63 | 2.18 | 2.49 | 3.63 | 0.96 | 1.3 | 1.74 | 1.99 | 2.89 |
| G8 | X | 0.96 | 0.95 | 0.88 | 0.88 | 0.75 | 0.46 | 0.56 | 0.83 | 0.84 | 1.21 | 0.36 | 0.45 | 0.65 | 0.67 | 0.96 |
| | Y | 0.95 | 0.92 | 0.72 | 0.81 | 0.54 | 0.33 | 0.43 | 0.8 | 0.67 | 1.03 | 0.26 | 0.34 | 0.62 | 0.54 | 0.85 |
| | Z | 0.96 | 0.92 | 0.88 | 0.83 | 0.57 | 1.11 | 1.62 | 2.03 | 2.43 | 3.81 | 0.88 | 1.28 | 1.62 | 1.97 | 3.08 |
| G9 | X | 0.96 | 0.93 | 0.87 | 0.84 | 0.64 | 0.35 | 0.49 | 0.65 | 0.73 | 1.09 | 0.28 | 0.39 | 0.5 | 0.58 | 0.88 |
| | Y | 0.96 | 0.87 | 0.82 | 0.81 | 0.55 | 0.26 | 0.47 | 0.55 | 0.57 | 0.88 | 0.21 | 0.37 | 0.44 | 0.46 | 0.7 |
| | Z | 0.97 | 0.93 | 0.88 | 0.84 | 0.64 | 1.07 | 1.47 | 2.02 | 2.28 | 3.43 | 0.86 | 1.17 | 1.62 | 1.82 | 2.78 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, J.; Cao, C.; Fei, L.; Han, X.; Wang, Y.; Chan, T.O. A Hybrid Framework Integrating Past Decomposable Mixing and Inverted Transformer for GNSS-Based Landslide Displacement Prediction. Sensors 2025, 25, 6041. https://doi.org/10.3390/s25196041

Wu J, Cao C, Fei L, Han X, Wang Y, Chan TO. A Hybrid Framework Integrating Past Decomposable Mixing and Inverted Transformer for GNSS-Based Landslide Displacement Prediction. Sensors. 2025; 25(19):6041. https://doi.org/10.3390/s25196041

Chicago/Turabian Style: Wu, Jinhua, Chengdu Cao, Liang Fei, Xiangyang Han, Yuli Wang, and Ting On Chan. 2025. "A Hybrid Framework Integrating Past Decomposable Mixing and Inverted Transformer for GNSS-Based Landslide Displacement Prediction" Sensors 25, no. 19: 6041. https://doi.org/10.3390/s25196041

APA Style: Wu, J., Cao, C., Fei, L., Han, X., Wang, Y., & Chan, T. O. (2025). A Hybrid Framework Integrating Past Decomposable Mixing and Inverted Transformer for GNSS-Based Landslide Displacement Prediction. Sensors, 25(19), 6041. https://doi.org/10.3390/s25196041