1. Introduction

As industrial automation advances, bearings, which are key components in a wide range of mechanical equipment, directly influence the overall performance, safety, and reliability of that equipment through their operating state [1]. During long-term operation in mechanical systems, faults may develop in various bearing components, and changing operating conditions often obscure the associated fault features. A fault can cause substantial economic losses and may even lead to major accidents [2,3,4]. Therefore, to ensure the proper functioning of machinery and to reduce or even prevent faults, real-time monitoring of the equipment's health condition and diagnosis of the fault location from changes in the monitored signals are crucial. Regular maintenance can reduce potential failures, save maintenance costs, avoid major accidents, and promote sustainable development, all of which are of significant practical importance [5].

Traditional data-driven methods typically involve feature extraction, feature selection, and model training. In the feature extraction phase, raw vibration signals are processed to obtain time-domain, frequency-domain, and time–frequency domain features [6,7]. The most representative features are then selected from the original feature set for model training, using dimensionality reduction techniques such as principal component analysis (PCA) or correlation analysis [8,9,10]. Finally, traditional machine learning techniques, including random forest, support vector machine (SVM), and logistic regression, are used to train classifiers that identify different fault modes [11,12]. Zhu et al. [13] developed an SVM-based fault diagnosis method and optimized the SVM with a quantum genetic algorithm (QGA) to improve the precision and effectiveness of fault diagnosis in rotating machinery. Hong et al. [14] introduced a probabilistic classification model based on an SVM classifier for real-time fault identification in power transformers. Deng et al. [15] proposed the EWTFSFD diagnosis approach, which combines the empirical wavelet transform (EWT), fuzzy entropy, and SVM, and performs fault recognition by decomposing signals, extracting features, and constructing classifiers. Their experimental results confirmed its accuracy and effectiveness, with EWT providing better signal decomposition. Xiao et al. [16] presented a rotating machinery fault diagnosis method that combines improved variational mode decomposition (IVMD) with convolutional neural networks (CNNs). They first addressed time-domain feature extraction with an improved VMD that automatically optimizes the number of modes. They then selected highly correlated components through correlation analysis to reconstruct the signal and extracted 2D time–frequency feature maps using the continuous wavelet transform (CWT). This method is suitable for complex environments and achieves high recognition rates.
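To make the time-domain feature extraction step described above concrete, the following minimal sketch computes a handful of statistics commonly used as handcrafted bearing features. The function name `time_domain_features` and the chosen feature set are illustrative assumptions, not the features used by any of the cited works; frequency-domain and time–frequency features, feature selection, and the classifier are omitted.

```python
import math
from typing import Dict, Sequence


def time_domain_features(x: Sequence[float]) -> Dict[str, float]:
    """Compute common time-domain statistics of one signal segment (illustrative set)."""
    n = len(x)
    if n == 0:
        raise ValueError("signal must be non-empty")
    mean = sum(x) / n
    # Root mean square: overall energy level of the segment
    rms = math.sqrt(sum(v * v for v in x) / n)
    peak = max(abs(v) for v in x)
    # Second and fourth central moments, used for std and kurtosis
    var = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    std = math.sqrt(var)
    # Kurtosis (non-excess): grows when the segment contains sharp impulses
    kurtosis = m4 / var ** 2 if var > 0 else 0.0
    # Crest factor: ratio of peak amplitude to RMS level
    crest_factor = peak / rms if rms > 0 else 0.0
    return {
        "mean": mean,
        "std": std,
        "rms": rms,
        "peak": peak,
        "kurtosis": kurtosis,
        "crest_factor": crest_factor,
    }
```

Kurtosis is often included because impulsive content, such as the periodic shocks produced by a localized defect, pushes it well above the value of 3 expected for Gaussian noise, whereas a pure sinusoid gives 1.5.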

Compared with traditional machine learning methods, deep learning techniques have clear advantages in automatically extracting complex features and uncovering hidden information in data, and their rapid development has led to their increasing application in prognostics and health management (PHM) [17,18,19,20]. Wang et al. [21] proposed a fault diagnosis scheme based on multi-scale diversity entropy (MDE) and an extreme learning machine (ELM), showing that its classification accuracy in rotating machinery pattern recognition surpasses that of existing measures such as sample entropy, fuzzy entropy, and permutation entropy. He et al. [22] introduced a few-shot learning method based on a fine-tuned GPT-2 model for fault diagnosis of all-ceramic bearings, achieving high diagnostic accuracy with very few labeled samples. Tian et al. [23] studied an iron-core fluxgate sensor for motor condition monitoring and showed that, compared with coreless sensors, the iron-core sensor measures electromotive force more strongly and more sensitively in noisy environments. Bai et al. [24] addressed the challenges of applying deep learning to mechanical fault diagnosis, such as dependence on large amounts of data, long training times, and performance degradation on inconsistent data, by introducing a data representation method for bearing fault diagnosis that combines the fractional Fourier transform (FRFT) with recurrence plot transformation. Liu et al. [25] developed a rolling bearing fault diagnosis method that uses 1D and 2D CNNs to learn information from two domains. Tests on the CWRU and DUT datasets confirmed that the approach attains high recognition accuracy without requiring manual expertise, and further tests on vibration data with strong noise demonstrated its superiority and robustness. However, the fault diagnosis approaches cited above rely exclusively on data from a single sensor. In actual industrial production environments, various uncontrollable factors introduce noise into the signals, so the information obtainable from a single sensor is limited.
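Noise robustness of the kind discussed above is commonly probed by corrupting clean signals with additive white Gaussian noise at a chosen signal-to-noise ratio (SNR). The following is a minimal sketch of that procedure, using SNR_dB = 10 log10(P_signal / P_noise); the function names `add_awgn` and `measured_snr_db`, and the choice to rescale each noise draw so the target SNR is met exactly, are illustrative assumptions rather than the protocol used in [25] or in this paper.

```python
import math
import random
from typing import List, Sequence


def add_awgn(x: Sequence[float], snr_db: float, seed: int = 0) -> List[float]:
    """Return x plus white Gaussian noise rescaled to an exact target SNR in dB."""
    rng = random.Random(seed)  # seeded for reproducible corruption
    n = len(x)
    signal_power = sum(v * v for v in x) / n
    noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
    raw_power = sum(e * e for e in noise) / n
    # SNR_dB = 10*log10(Ps/Pn)  =>  Pn = Ps / 10**(SNR_dB/10)
    target_power = signal_power / (10 ** (snr_db / 10))
    scale = math.sqrt(target_power / raw_power)
    return [v + scale * e for v, e in zip(x, noise)]


def measured_snr_db(clean: Sequence[float], noisy: Sequence[float]) -> float:
    """SNR in dB, treating (noisy - clean) as the noise component."""
    p_signal = sum(v * v for v in clean) / len(clean)
    p_noise = sum((b - a) ** 2 for a, b in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(p_signal / p_noise)
```

For example, `add_awgn(x, -4.0)` produces a version of `x` whose noise power is about 2.5 times the signal power, a common "strong noise" setting in bearing benchmarks.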

To overcome the information limitations of single sensors, using multi-sensor data fusion to improve the accuracy of fault diagnosis models has become a key research focus. Fusion is generally performed at one of three levels: data-level fusion, feature-level fusion, and decision-level fusion [26]. Shao et al. [27] proposed a cross-domain fault diagnosis method for ceramic bearings based on meta-learning and multi-source heterogeneous data fusion. This method effectively addresses differences in data dimensions and the problem of feature consistency, achieving an average diagnostic accuracy of 98.91% across six cross-domain scenarios and demonstrating excellent noise resistance. Dong et al. [28] introduced a lightweight convolutional dual-regularized contrastive transformer based on multi-sensor data fusion for small-sample fault diagnosis of aerospace bearings. By integrating cliff entropy weighting and the Diwaveformer architecture, this method achieved efficient and accurate fault identification. Saucedo-Dorantes et al. [29] proposed a data-driven diagnostic method based on deep feature learning. Using a deep autoencoder model and feature fusion techniques, this method effectively diagnosed faults in metal, hybrid, and ceramic bearings within motor systems, and its adaptability and performance were validated across different motor systems. Chao et al. [30] introduced a multi-sensor fusion method in which a convolutional neural network receives three-channel vibration data and applies decision-level fusion; its effectiveness was validated in an axial piston pump experiment. Although these deep learning-based multi-sensor fusion methods have achieved good results, several limitations remain. First, diagnostic results are unsatisfactory when signals monitored in real industrial environments suffer significant noise interference, so the noise robustness of these fusion methods needs further improvement. Second, most diagnostic methods use one or more vibration sensors, which are contact-type sensors requiring precise installation, while less attention is given to non-contact sensors such as acoustic and temperature sensors, even though combining multiple signal types has been shown to improve the effectiveness of fault diagnosis. Third, multiple sensors often provide heterogeneous data types, such as images, time series, and acoustic signals, which may differ in temporal and spatial scales. How to efficiently fuse such data across scales and extract useful fault information from them remains a challenging problem.
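The three fusion levels mentioned above differ mainly in where the sensor streams are combined. The minimal sketch below contrasts them for two sensors: data-level fusion stacks synchronized raw signals as channels, feature-level fusion concatenates per-sensor feature vectors, and decision-level fusion averages per-sensor class probabilities. All function names and the optional fixed weighting are illustrative assumptions; they are not taken from [26] or [30], and in practice each stage would feed a trained model.

```python
import math
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def data_level_fusion(*signals: Sequence[float]) -> List[List[float]]:
    """Stack raw, synchronized, equal-length signals as channels of one input."""
    length = len(signals[0])
    if any(len(s) != length for s in signals):
        raise ValueError("this sketch assumes synchronized, equal-length signals")
    return [list(s) for s in signals]


def feature_level_fusion(*feature_vectors: Sequence[float]) -> List[float]:
    """Concatenate per-sensor feature vectors for a single downstream classifier."""
    fused: List[float] = []
    for f in feature_vectors:
        fused.extend(f)
    return fused


def decision_level_fusion(per_sensor_logits: Sequence[Sequence[float]],
                          weights: Optional[Sequence[float]] = None) -> int:
    """Weighted average of per-sensor class probabilities; returns the fused class index."""
    k = len(per_sensor_logits)
    w = list(weights) if weights is not None else [1.0 / k] * k
    probs = [softmax(logits) for logits in per_sensor_logits]
    n_classes = len(probs[0])
    fused = [sum(w[s] * probs[s][c] for s in range(k)) for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: fused[c])
```

For instance, if the vibration branch outputs logits `[2.0, 0.0, 0.0]` and the acoustic branch `[0.0, 3.0, 0.0]`, equal weighting favors class 1 because the acoustic branch is more confident, while weights `[0.9, 0.1]` return class 0.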

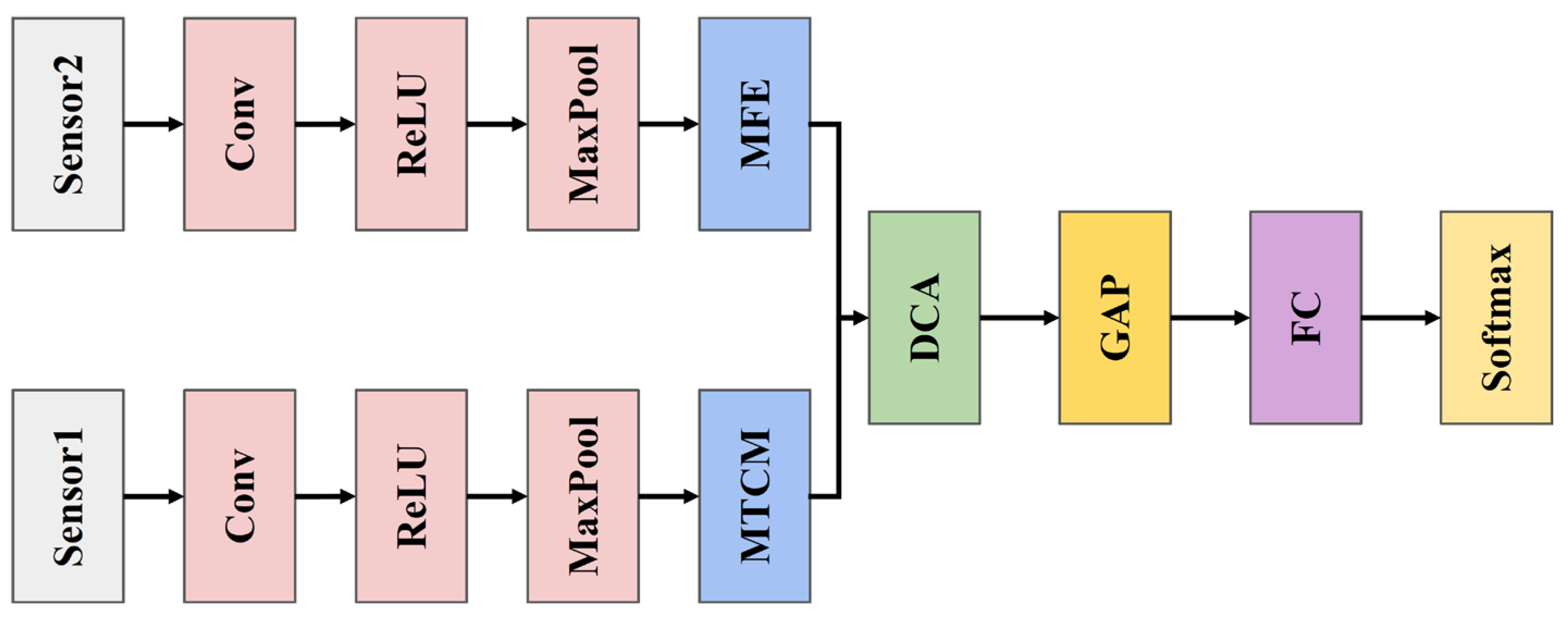

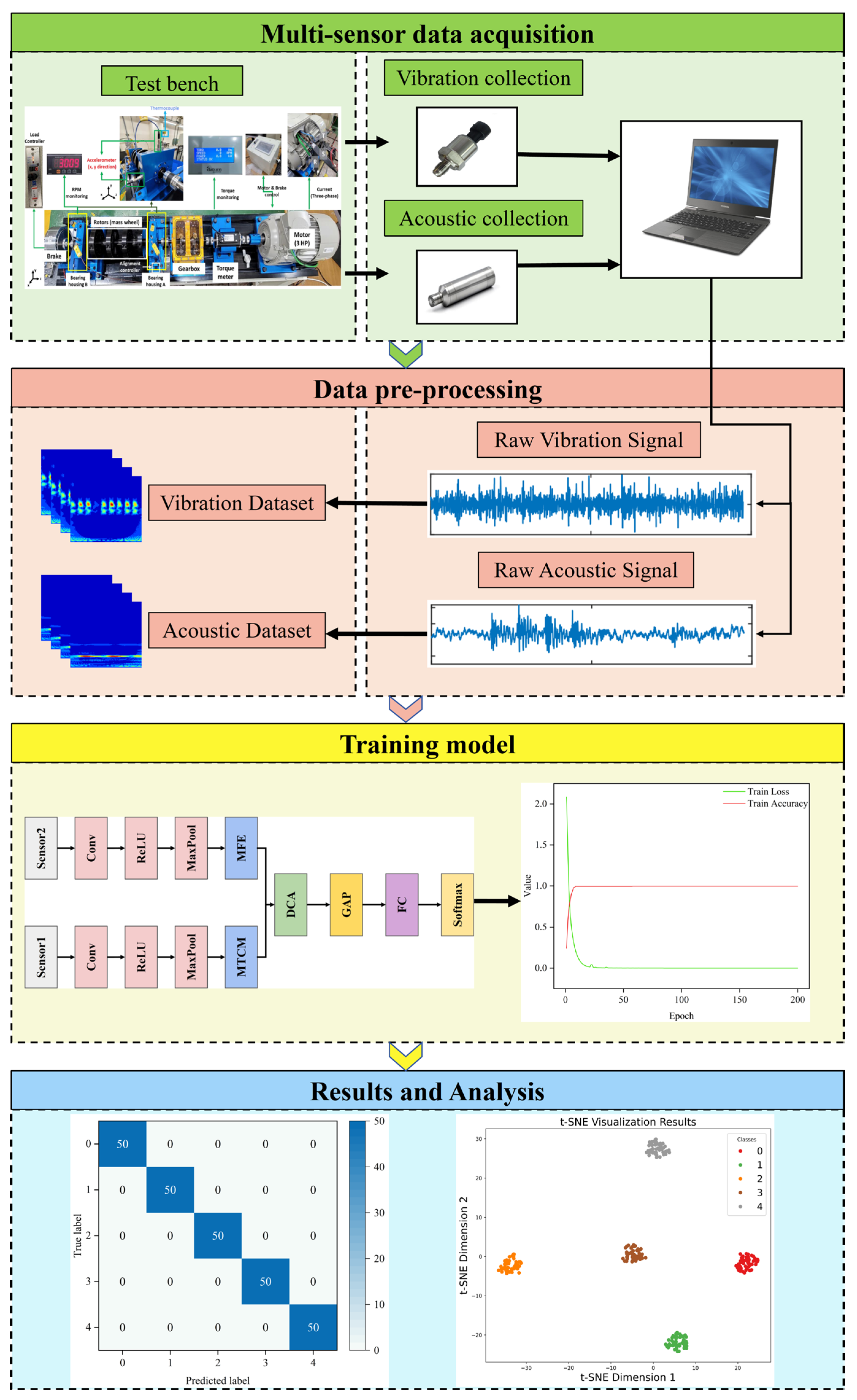

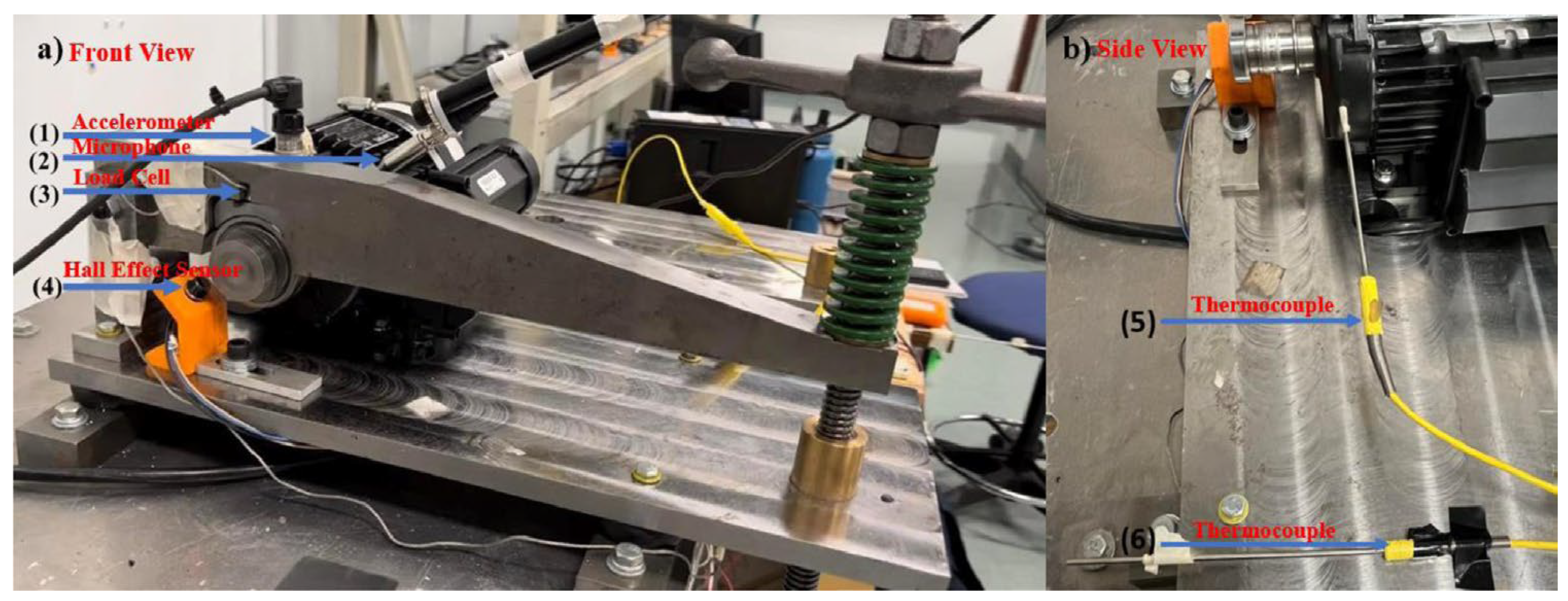

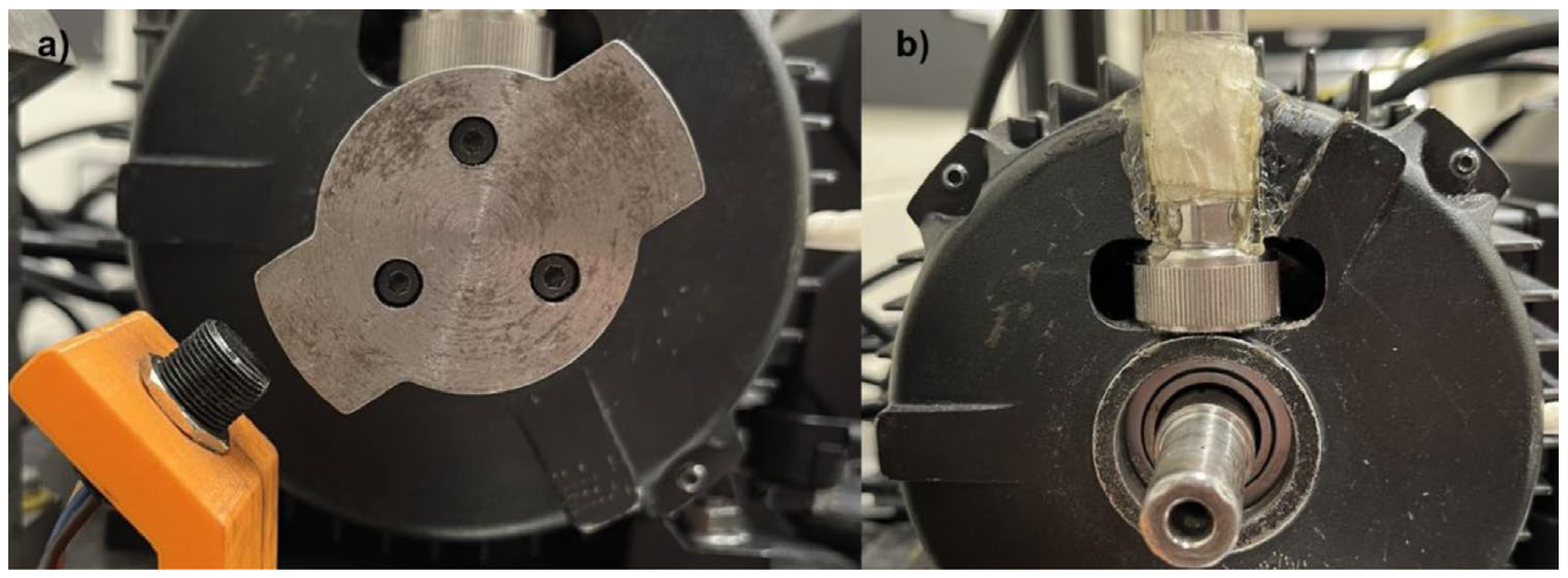

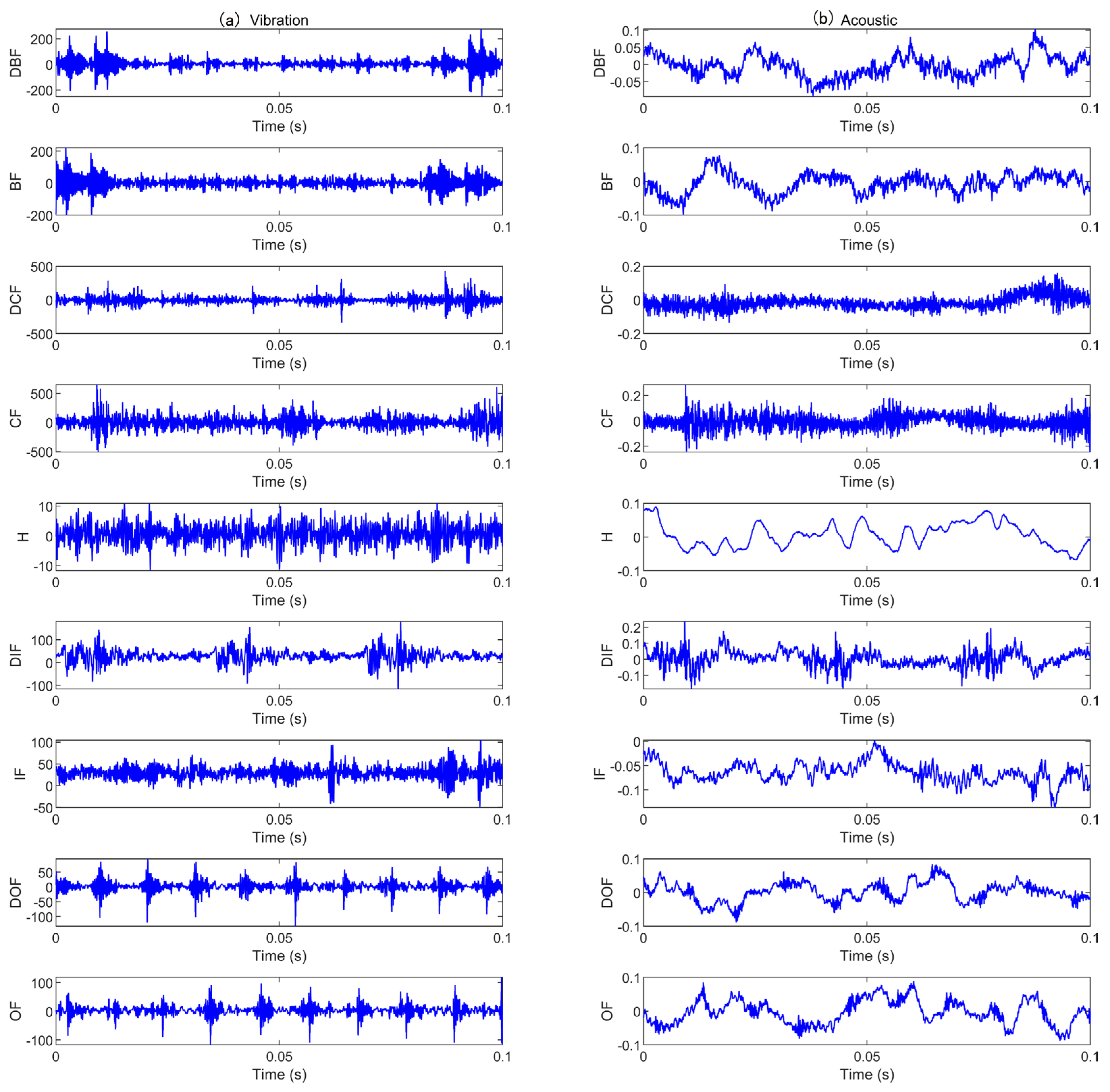

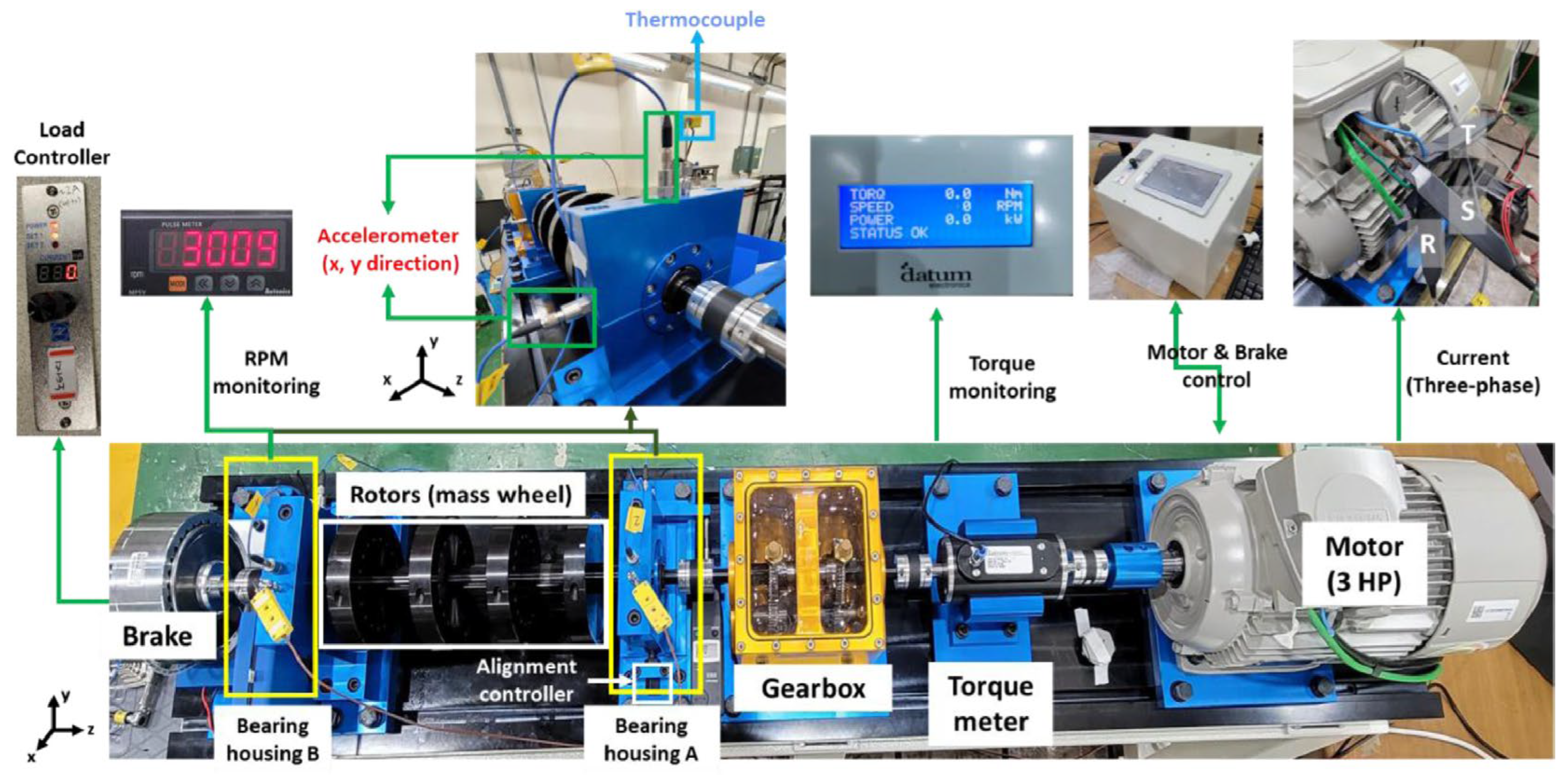

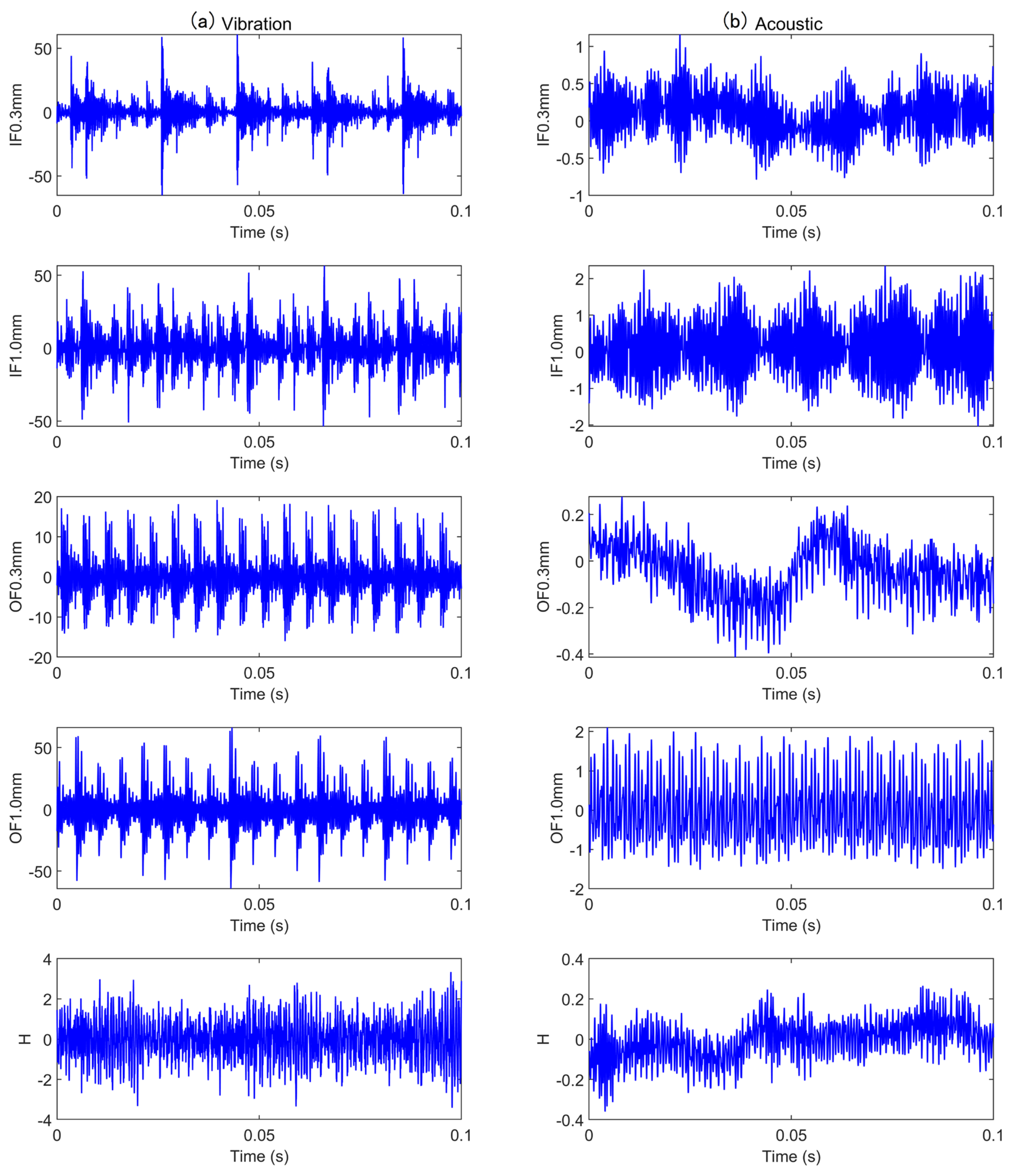

To address these issues and improve the performance of multi-sensor acoustic–vibration fusion fault diagnosis, while comprehensively exploiting features at different levels and scales to capture multi-level detailed information, this paper introduces a bearing fault diagnosis approach based on a multi-channel multi-scale spatiotemporal convolutional cross-attention fusion network. Specifically, CWT is used to convert the 1D acoustic and vibration signals into 2D time–frequency images. After coarse feature extraction with ResNet, deep features are extracted by the multi-scale temporal convolution module (MTCM) and the multi-feature extraction block (MFE) to obtain temporal and spatial features, respectively. These features are then processed by the dual cross-attention (DCA) mechanism, which performs multi-scale channel and spatial cross-fusion to adaptively fuse global and local features. Finally, the fused features are reduced to a vector by global average pooling (GAP) and classified by a fully connected (FC) layer followed by softmax. The primary contributions of this work are as follows:
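The first step of the pipeline described above, turning a 1D signal into a 2D time–frequency image with the CWT, can be sketched as follows. This is a minimal NumPy illustration assuming a complex Morlet mother wavelet with center parameter `w0 = 6` and a direct time-domain correlation; the wavelet, frequency grid, and image size actually used in this paper are not specified here, and in practice a library such as PyWavelets would typically be used. The resulting magnitude matrix (rows are analysis frequencies, columns are time) is the scalogram that would then be resized and fed to ResNet.

```python
import numpy as np


def morlet(t: np.ndarray, w0: float = 6.0) -> np.ndarray:
    """Complex Morlet mother wavelet (without the small admissibility correction term)."""
    return np.pi ** -0.25 * np.exp(1j * w0 * t) * np.exp(-0.5 * t ** 2)


def cwt_scalogram(x: np.ndarray, fs: float, freqs: np.ndarray,
                  w0: float = 6.0) -> np.ndarray:
    """Return |CWT| with shape (len(freqs), len(x)); rows = frequencies, cols = time."""
    n = len(x)
    out = np.empty((len(freqs), n))
    for i, f in enumerate(freqs):
        # For the Morlet wavelet, scale s (seconds) maps to frequency f = w0 / (2*pi*s)
        s = w0 / (2 * np.pi * f)
        # Truncate the wavelet at +/- 4 standard deviations, but never longer than x
        half = min(int(np.ceil(4 * s * fs)), (n - 1) // 2)
        t = np.arange(-half, half + 1) / fs
        psi = morlet(t / s, w0) / np.sqrt(s)
        # CWT(s, b) = integral of x(t) * conj(psi((t - b) / s)) / sqrt(s) dt,
        # i.e. a correlation, written here as a convolution with the reversed conjugate
        coef = np.convolve(x, np.conj(psi[::-1]), mode="same") / fs
        out[i] = np.abs(coef)
    return out
```

For a pure 50 Hz tone sampled at 1 kHz, the 50 Hz row of the scalogram carries most of the energy, while rows tuned to 10 Hz or 200 Hz stay close to zero away from the edges.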

(1) A bearing fault diagnosis framework based on a multi-channel multi-scale spatiotemporal convolutional cross-attention fusion network (MMSTCCAFN) is proposed. This framework not only eliminates the requirement for manual feature extraction in conventional diagnostic approaches but also addresses the limitations of using a single sensor. At the same time, it effectively realizes the complementary fusion of spatiotemporal feature information from multi-sensor acoustic and vibration signals.

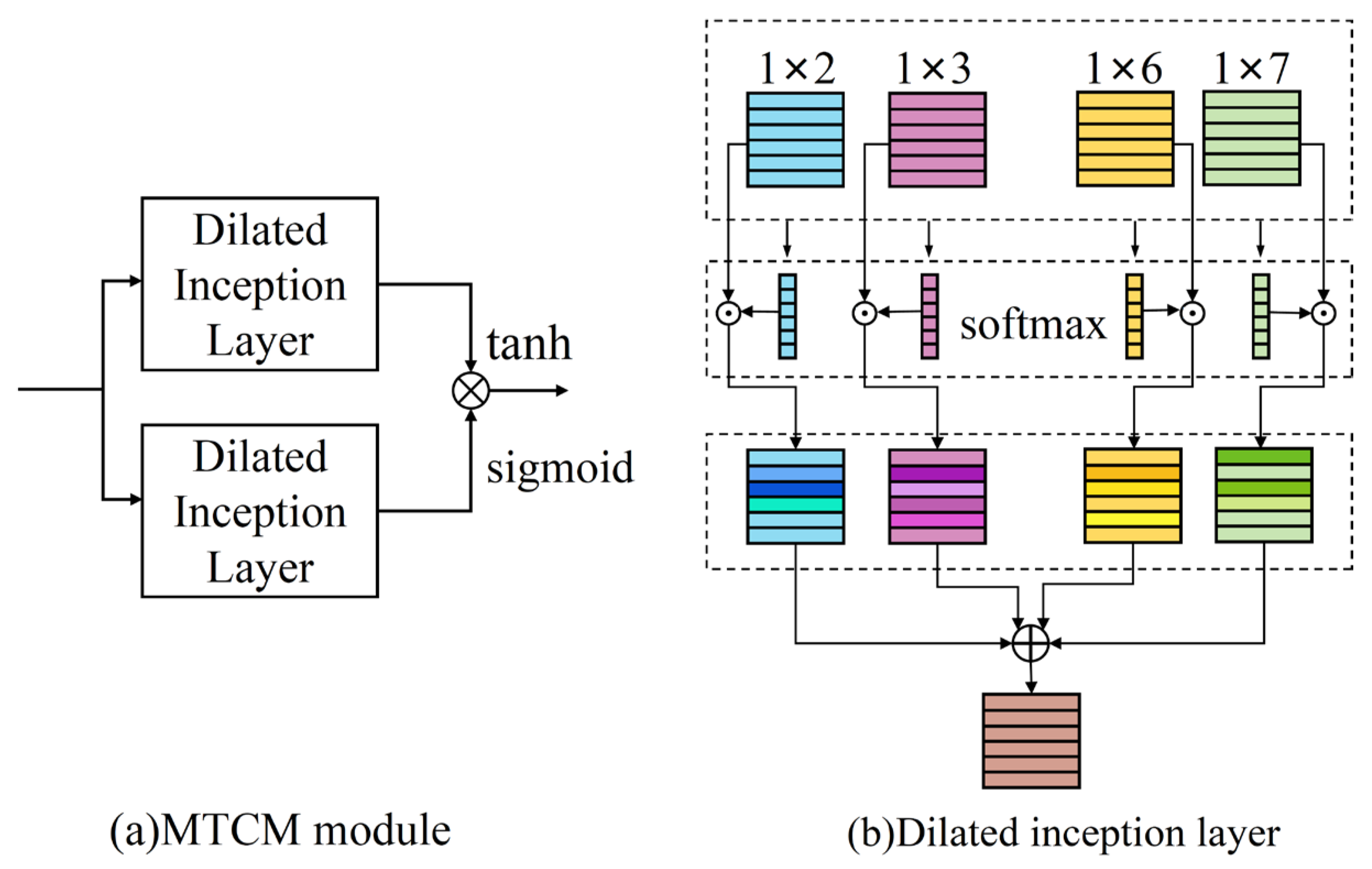

(2) An improved temporal feature extraction module, MTCM, is proposed. Building on the original TCM, its expanded inception layer is enhanced by using the softmax function to assign weights to the branch outputs, and the resulting weighted sum emphasizes important features while suppressing less significant information.

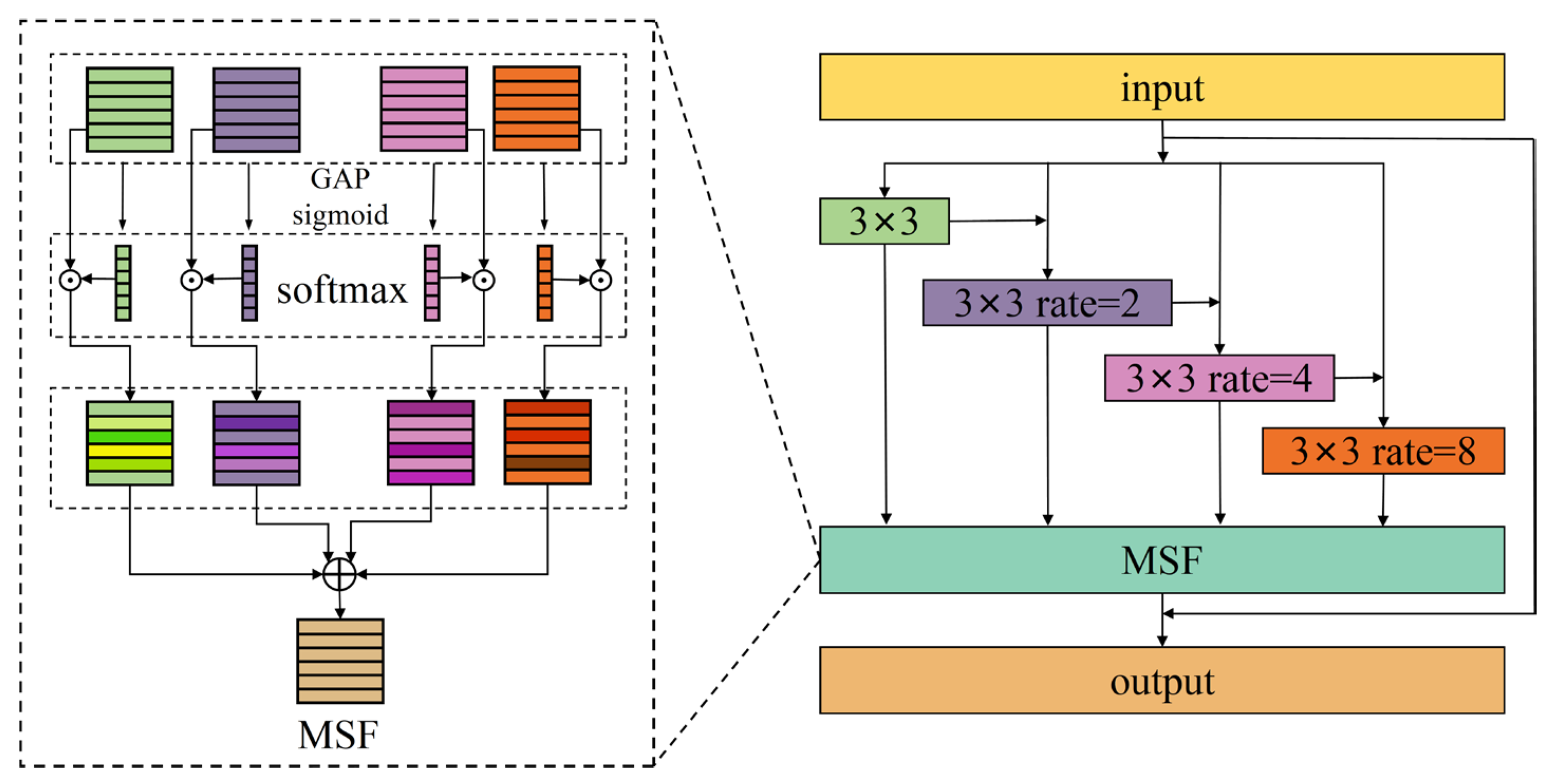

(3) A multi-scale spatial feature extraction module (MFE) is introduced. This module combines convolution and attention operations, which makes it suitable for processing images at different scales, and it effectively captures and integrates both local and global contextual information from the images.

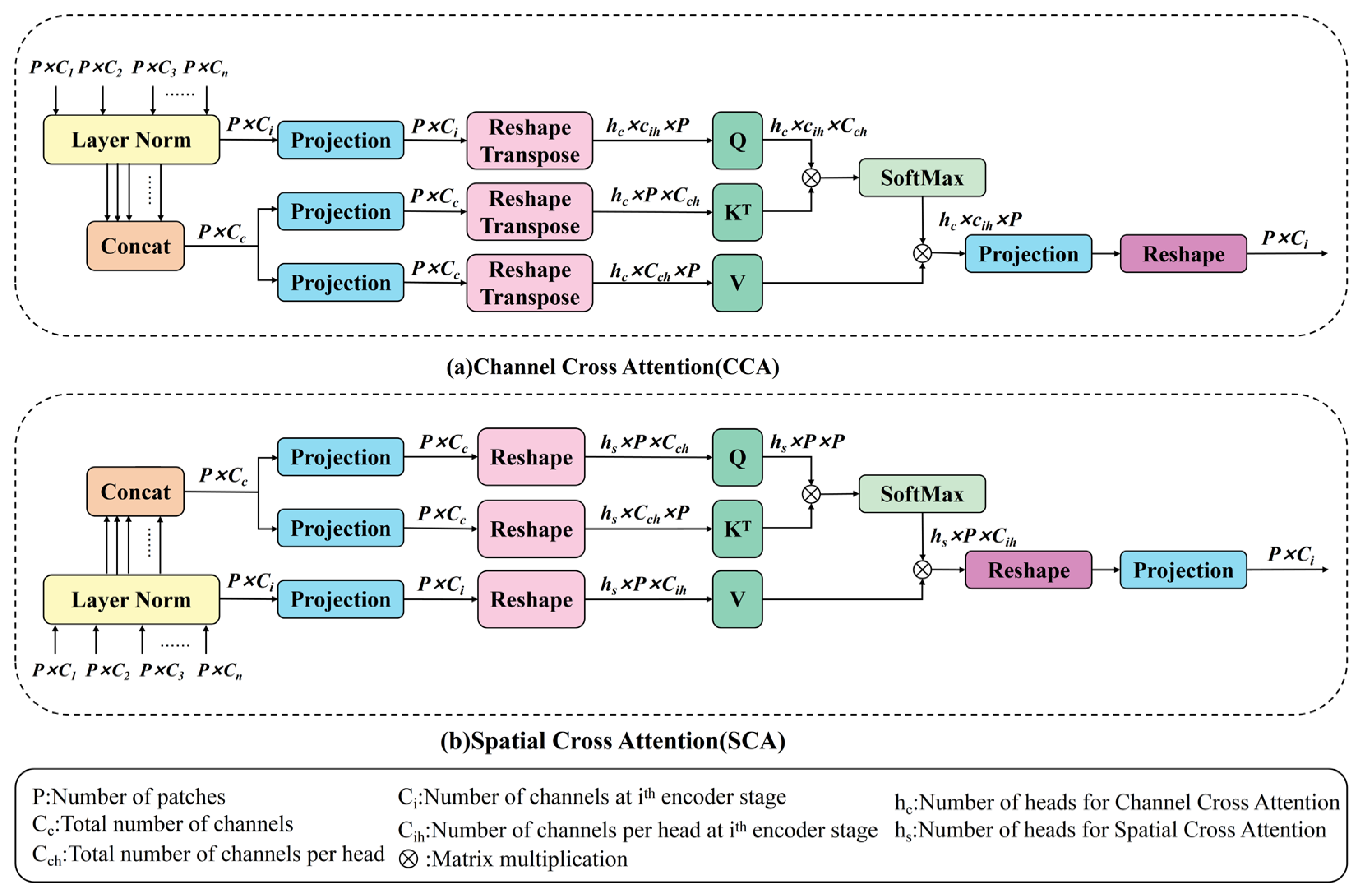

(4) A dual cross-attention module (DCA) is introduced, which includes channel cross-attention (CCA) and spatial cross-attention (SCA) mechanisms. This module adaptively captures multi-scale channel and spatial dependencies, enabling the learning of information at various spatial scales.
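Two operations named in the contributions above can be illustrated generically: the softmax-weighted sum of parallel branch outputs described for MTCM, and cross-attention in which features from one sensor query features from another, as in DCA. The sketch below is a simplified NumPy illustration under stated assumptions: the branch logits are passed in explicitly (in a network they would be learned parameters), and `cross_attention` is plain scaled dot-product attention over token rows. It is not the exact MTCM, CCA, or SCA formulation of this paper, which operate on multi-scale channel and spatial tokens; all function names are hypothetical.

```python
from typing import Sequence

import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def weighted_branch_sum(branches: Sequence[np.ndarray],
                        branch_logits: np.ndarray) -> np.ndarray:
    """Fuse same-shape branch outputs with softmax-normalized weights (hypothetical helper)."""
    if len(branches) != len(branch_logits):
        raise ValueError("need one logit per branch")
    w = softmax(np.asarray(branch_logits, dtype=float))  # non-negative, sums to 1
    return sum(wi * b for wi, b in zip(w, branches))


def cross_attention(query_tokens: np.ndarray, context_tokens: np.ndarray,
                    wq: np.ndarray, wk: np.ndarray, wv: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention where one modality queries another.

    query_tokens: (n_q, d_in), context_tokens: (n_k, d_in); wq, wk, wv: (d_in, d).
    Returns an array of shape (n_q, d).
    """
    q = query_tokens @ wq
    k = context_tokens @ wk
    v = context_tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)  # each query row sums to 1 over context tokens
    return attn @ v
```

Softmax weighting keeps the branch weights positive and summing to one, so one branch can dominate when its scale is informative without the fused output growing in magnitude. In the cross-attention sketch, vibration tokens could serve as queries and acoustic tokens as keys and values, so that each vibration feature is updated with the acoustic context most similar to it.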

The remainder of this article is organized as follows. Section 2 briefly introduces the continuous wavelet transform and the attention mechanism. Section 3 details the proposed method, including MTCM, MFE, DCA, and MMSTCCAFN, together with the MMSTCCAFN-based bearing fault diagnosis framework. Section 4 validates the effectiveness of the proposed method in two experimental cases, and Section 5 presents the conclusions.

5. Conclusions

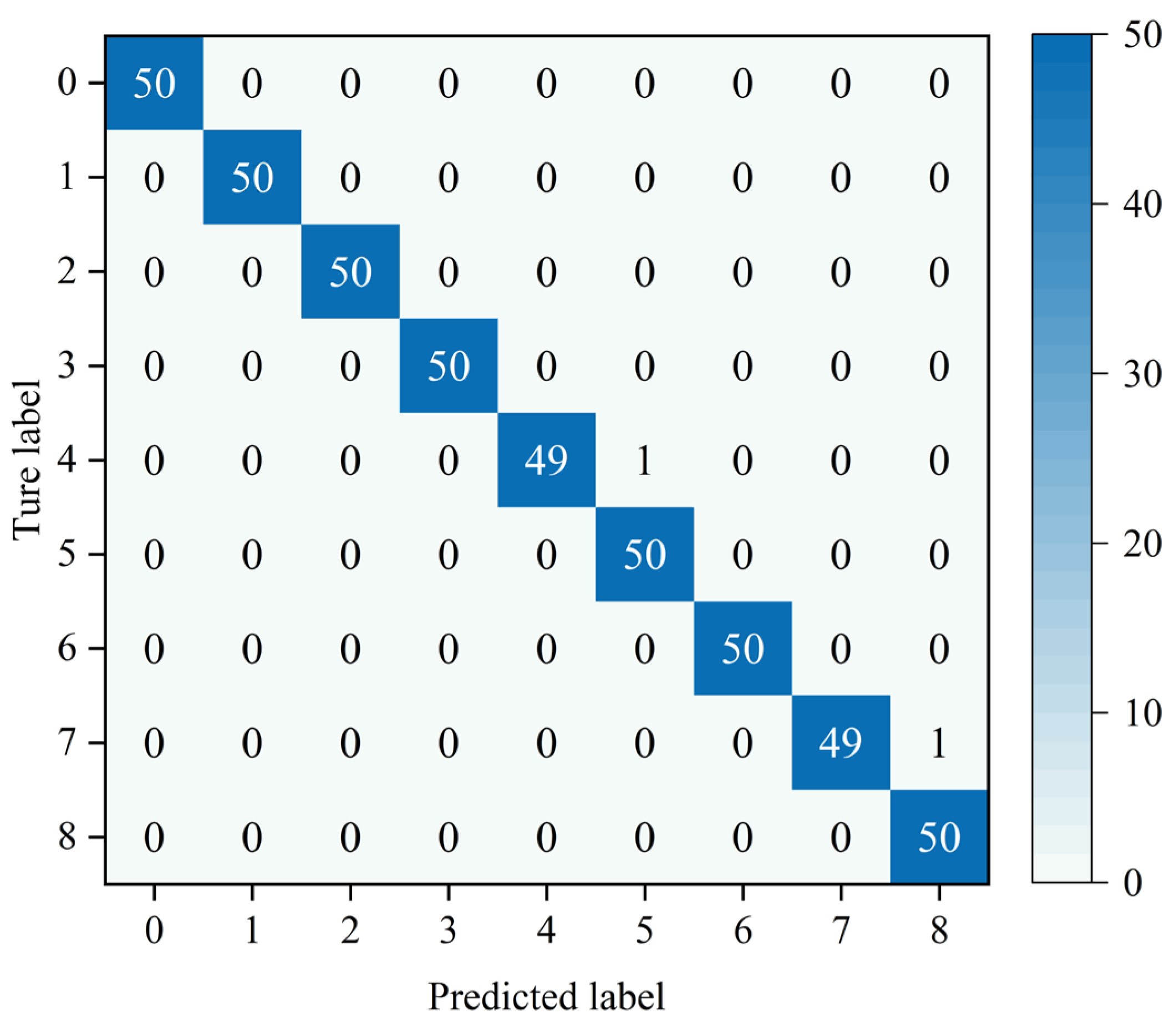

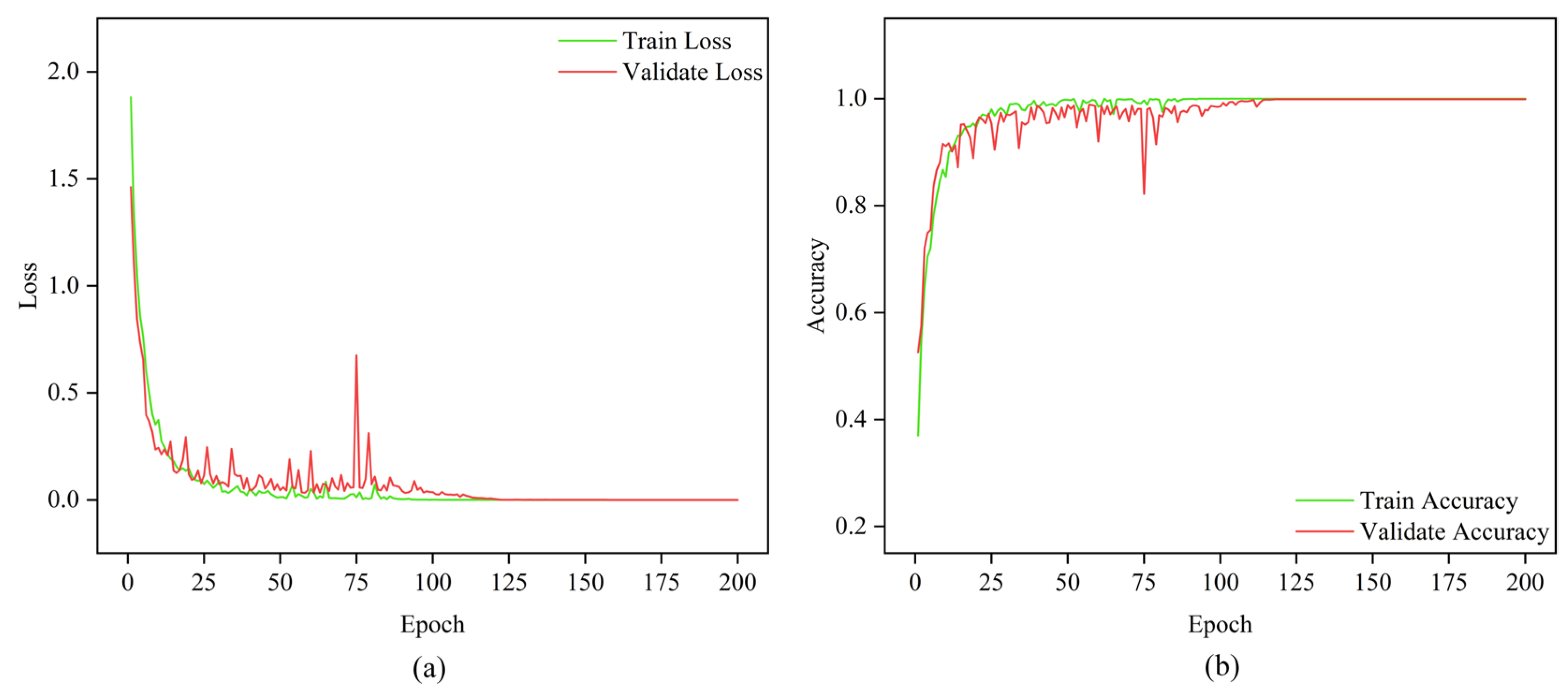

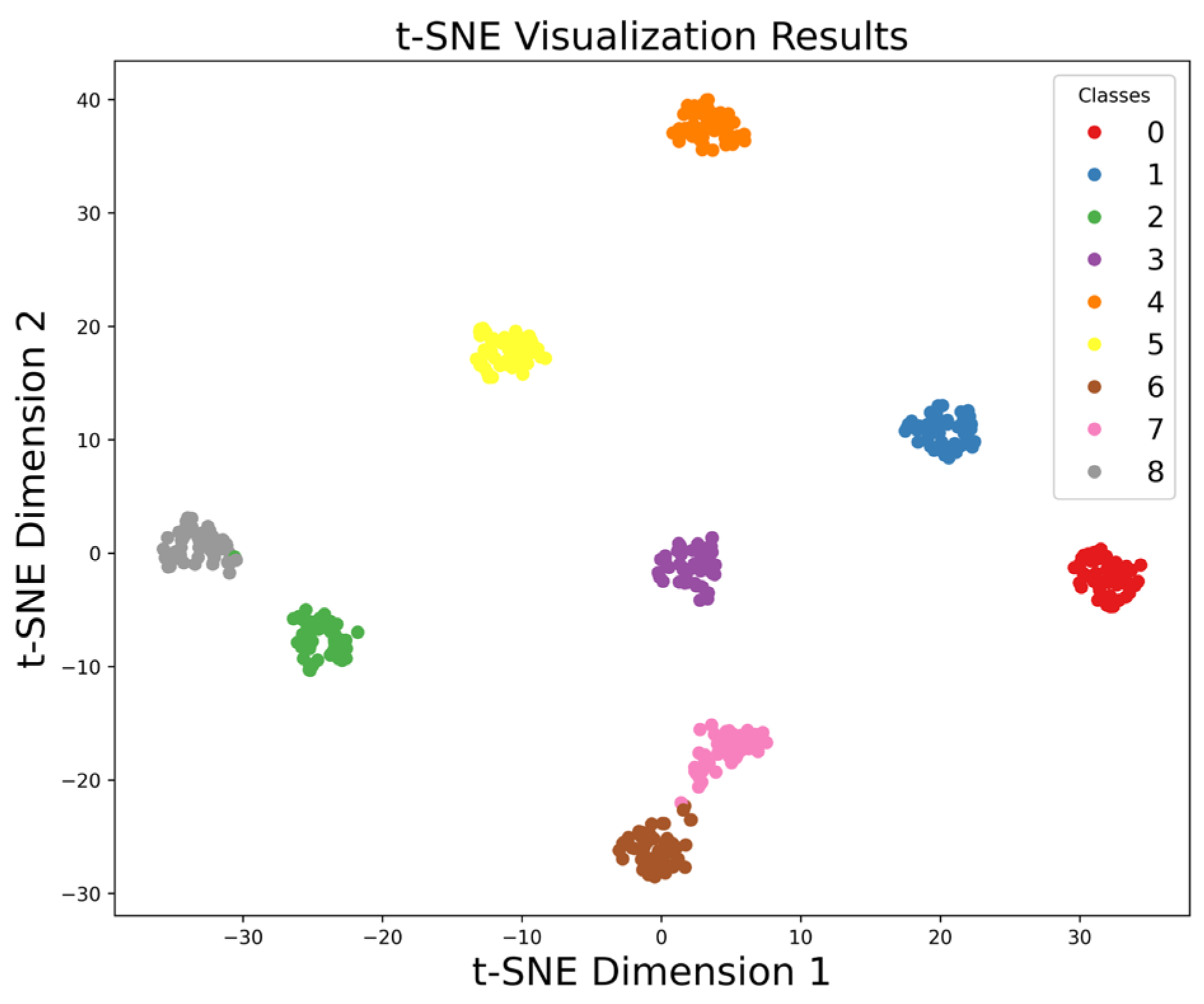

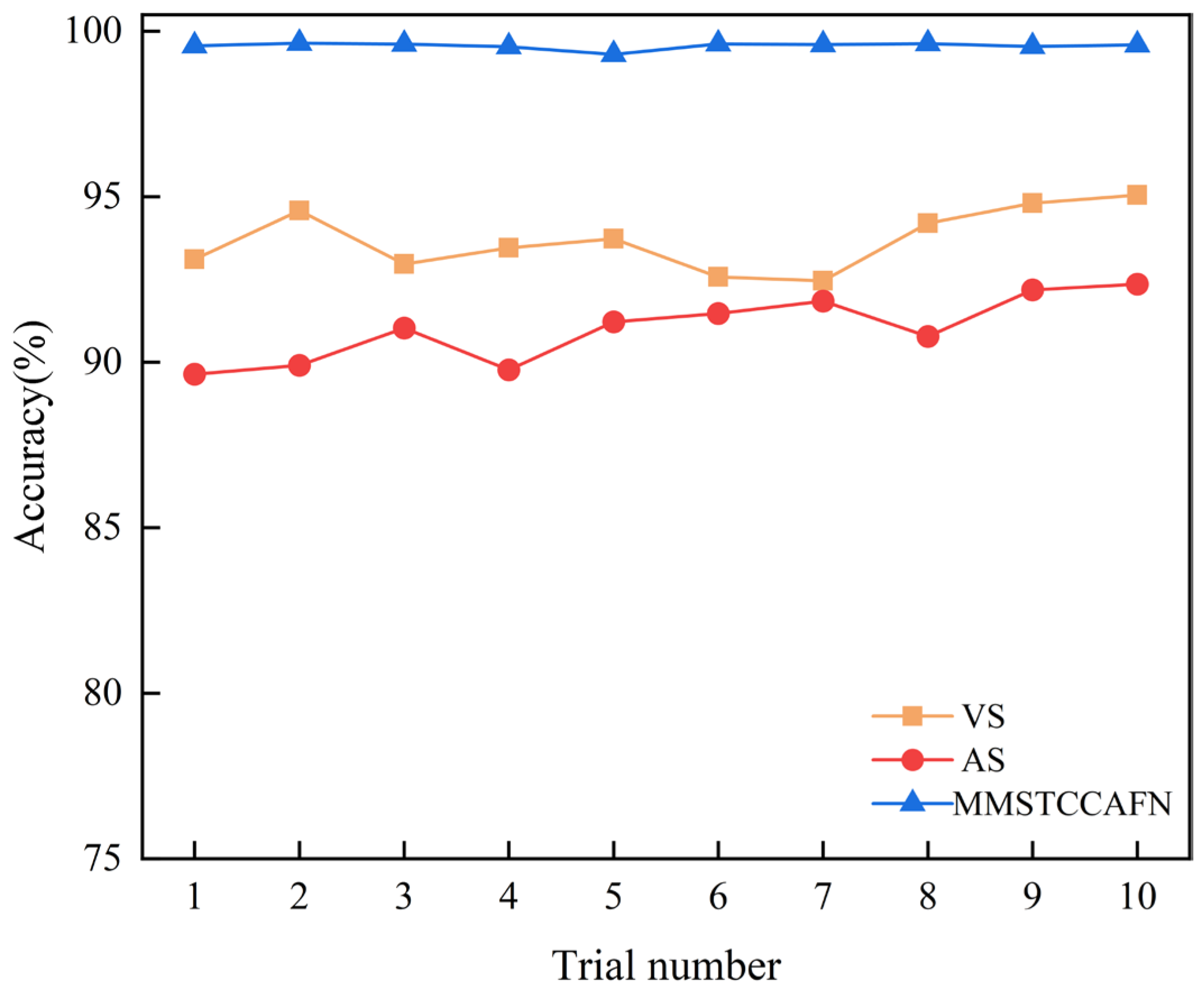

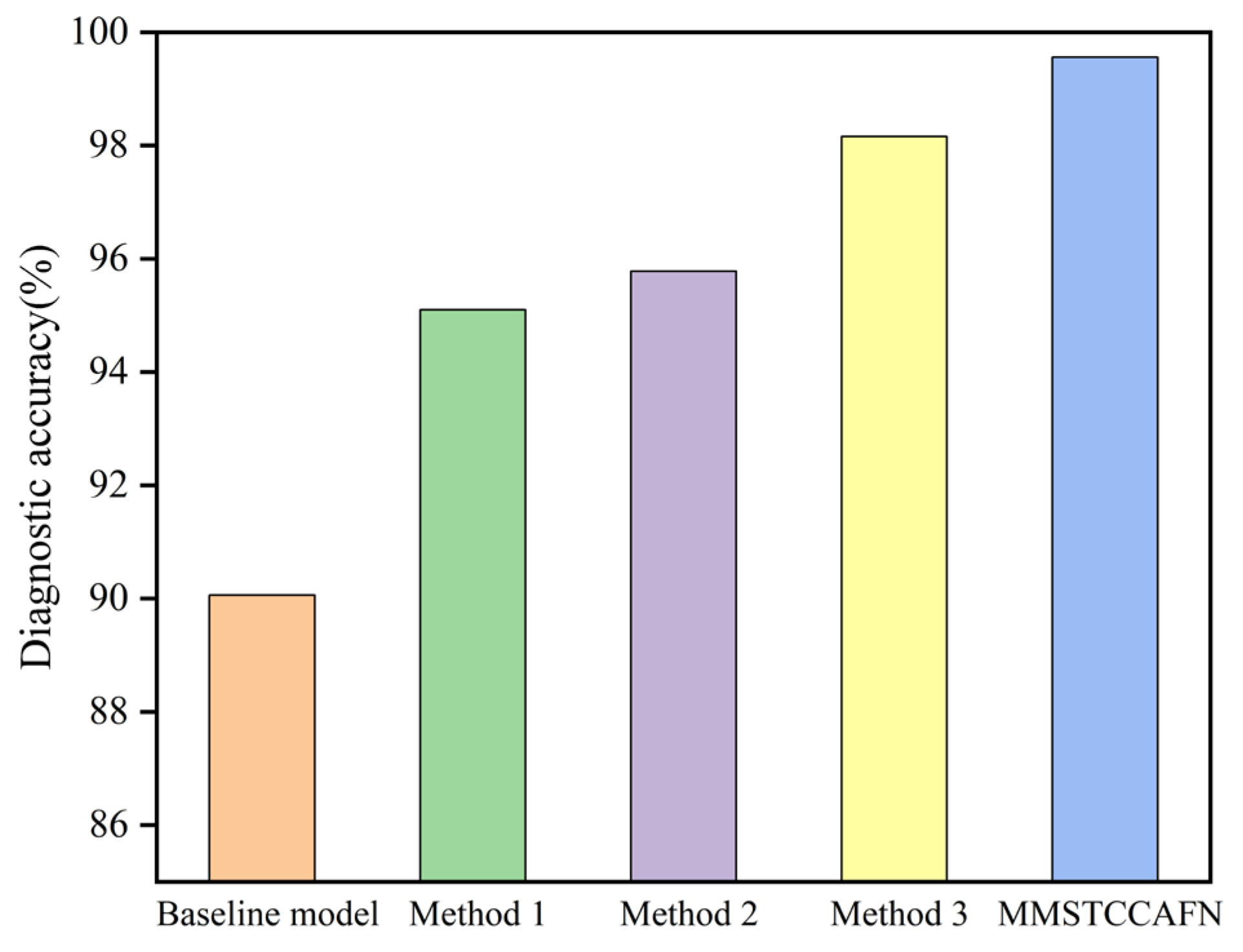

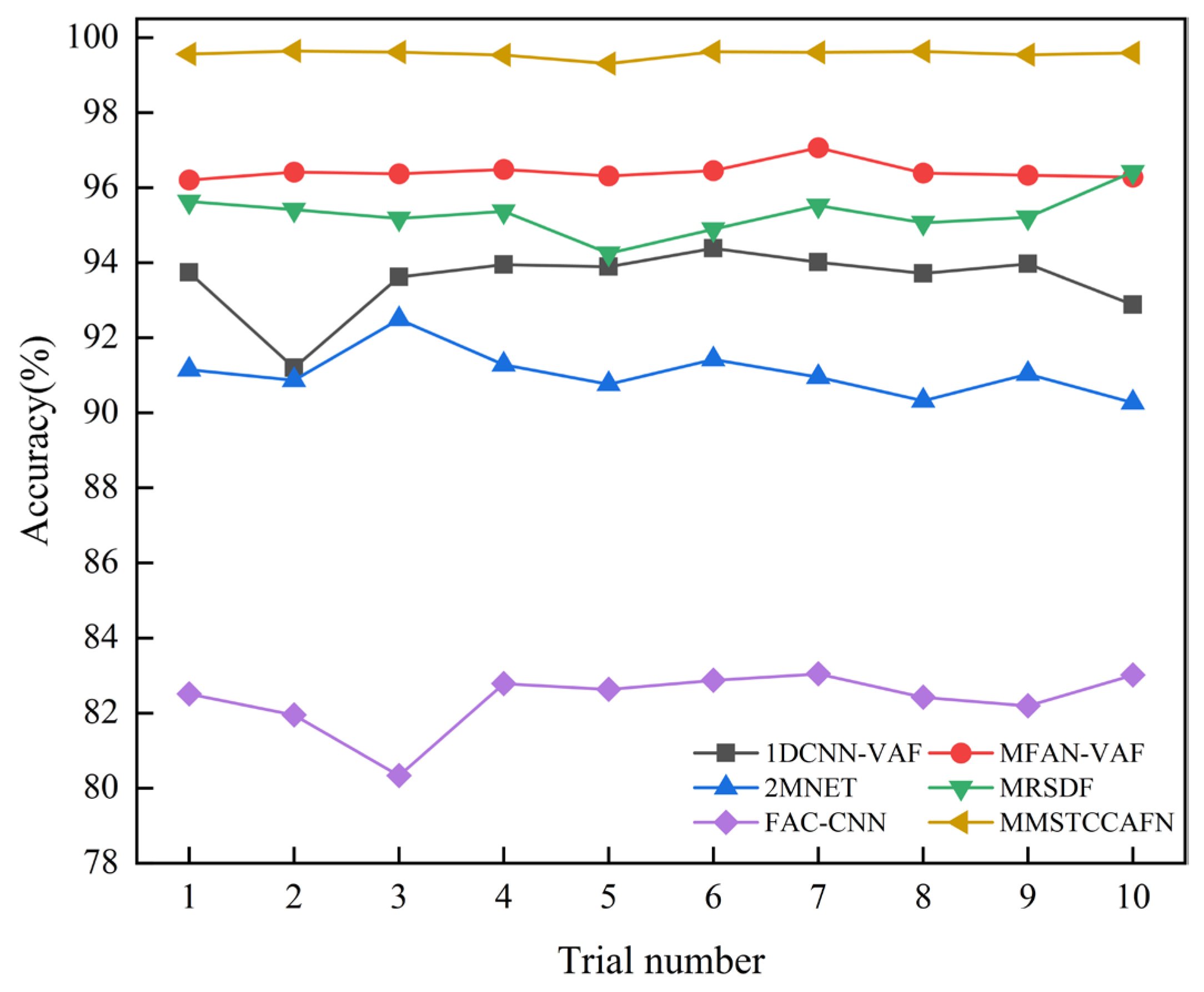

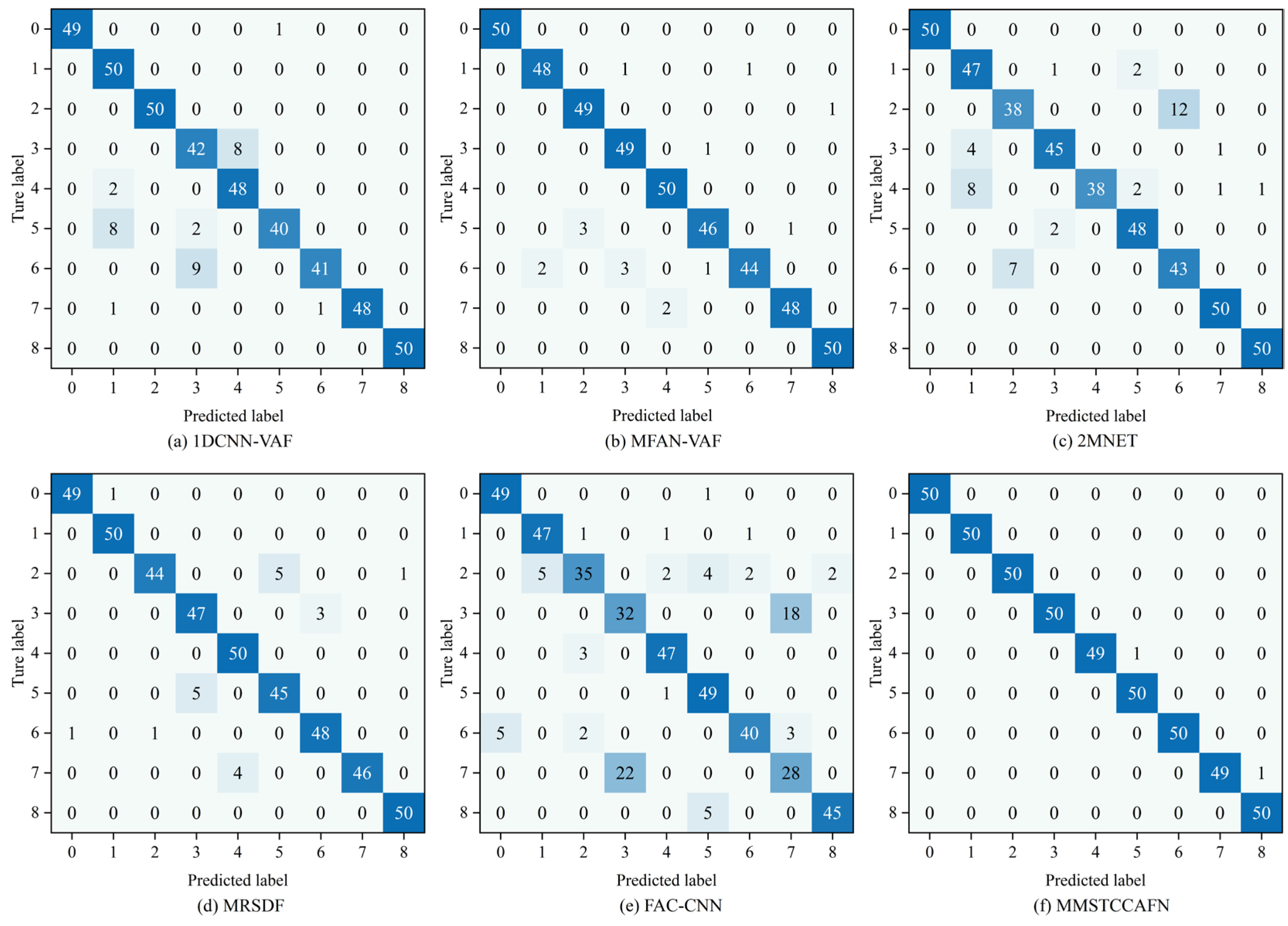

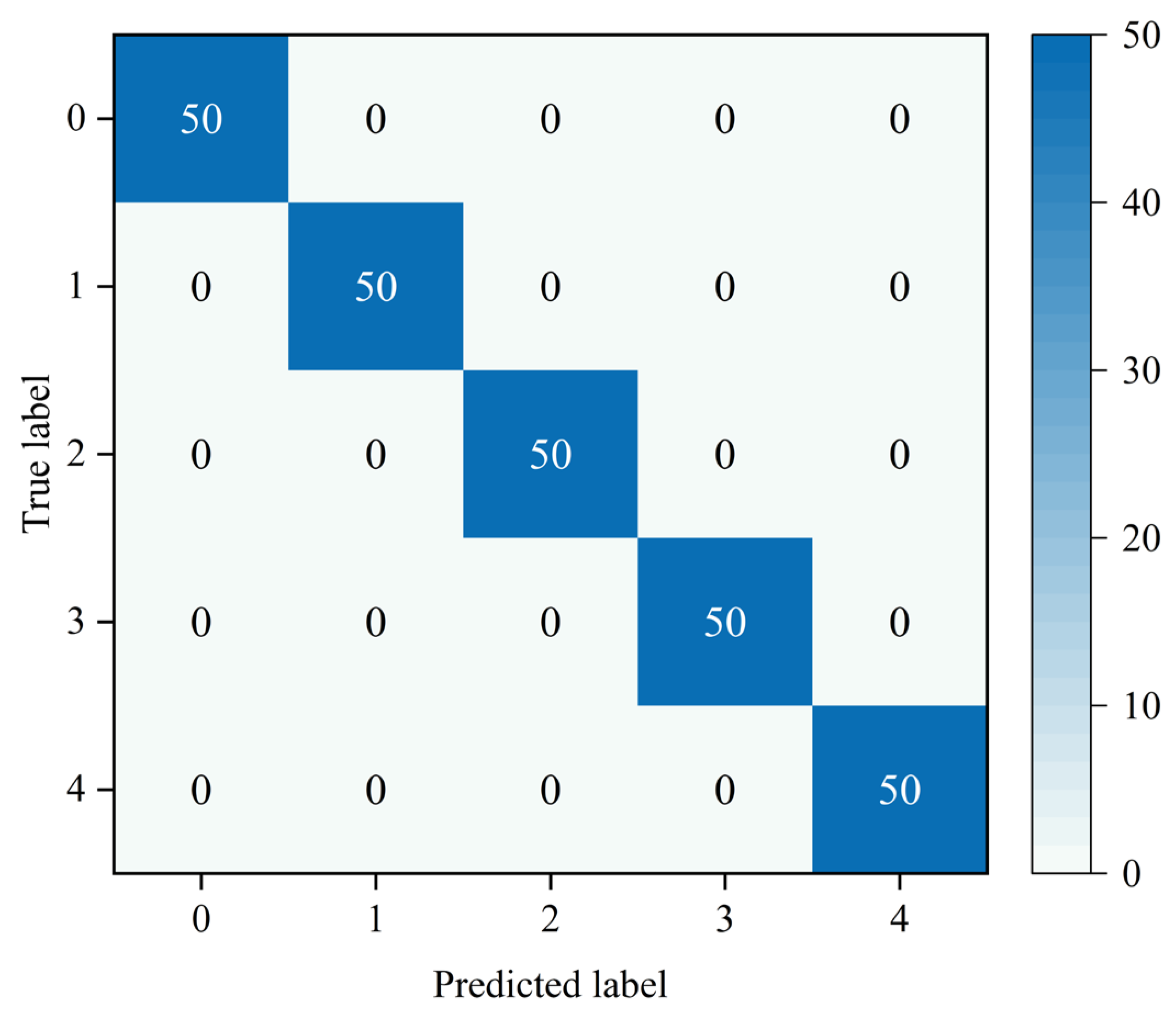

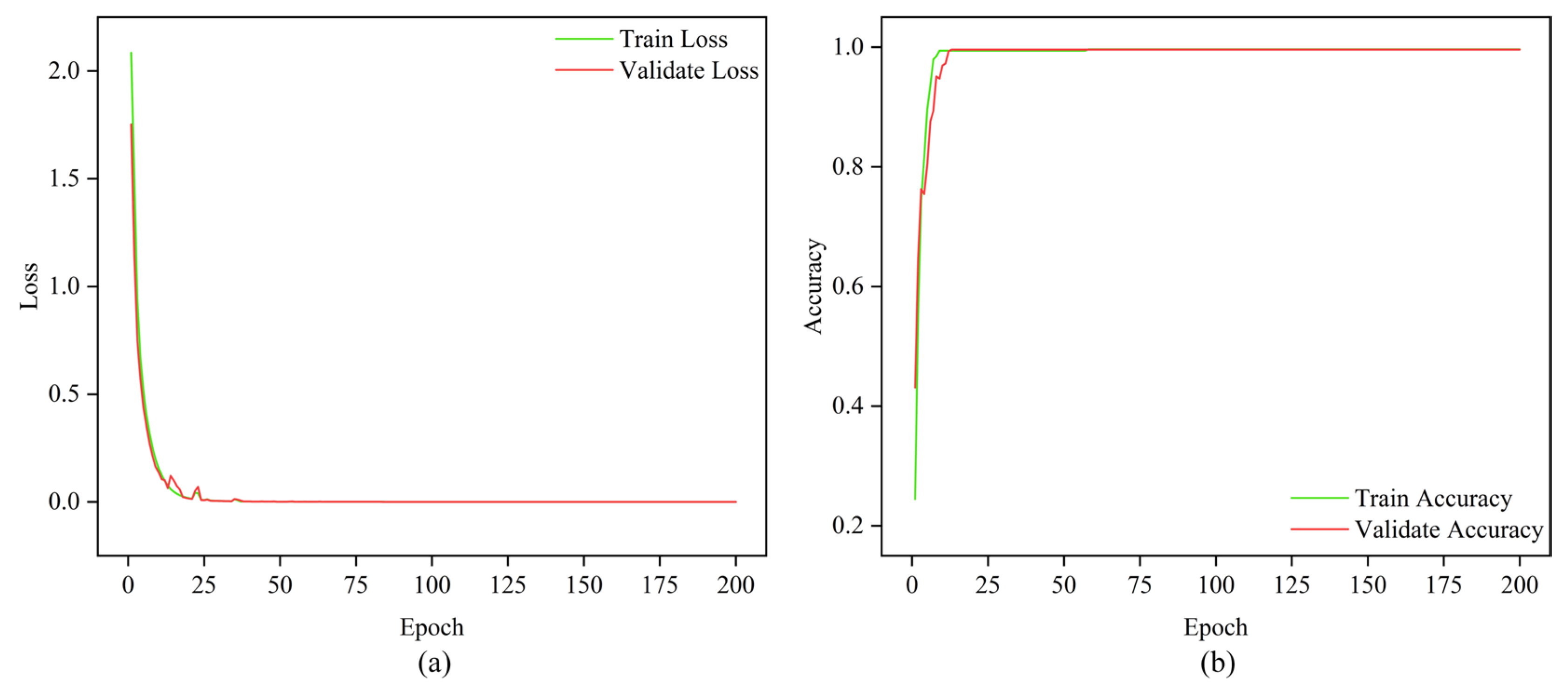

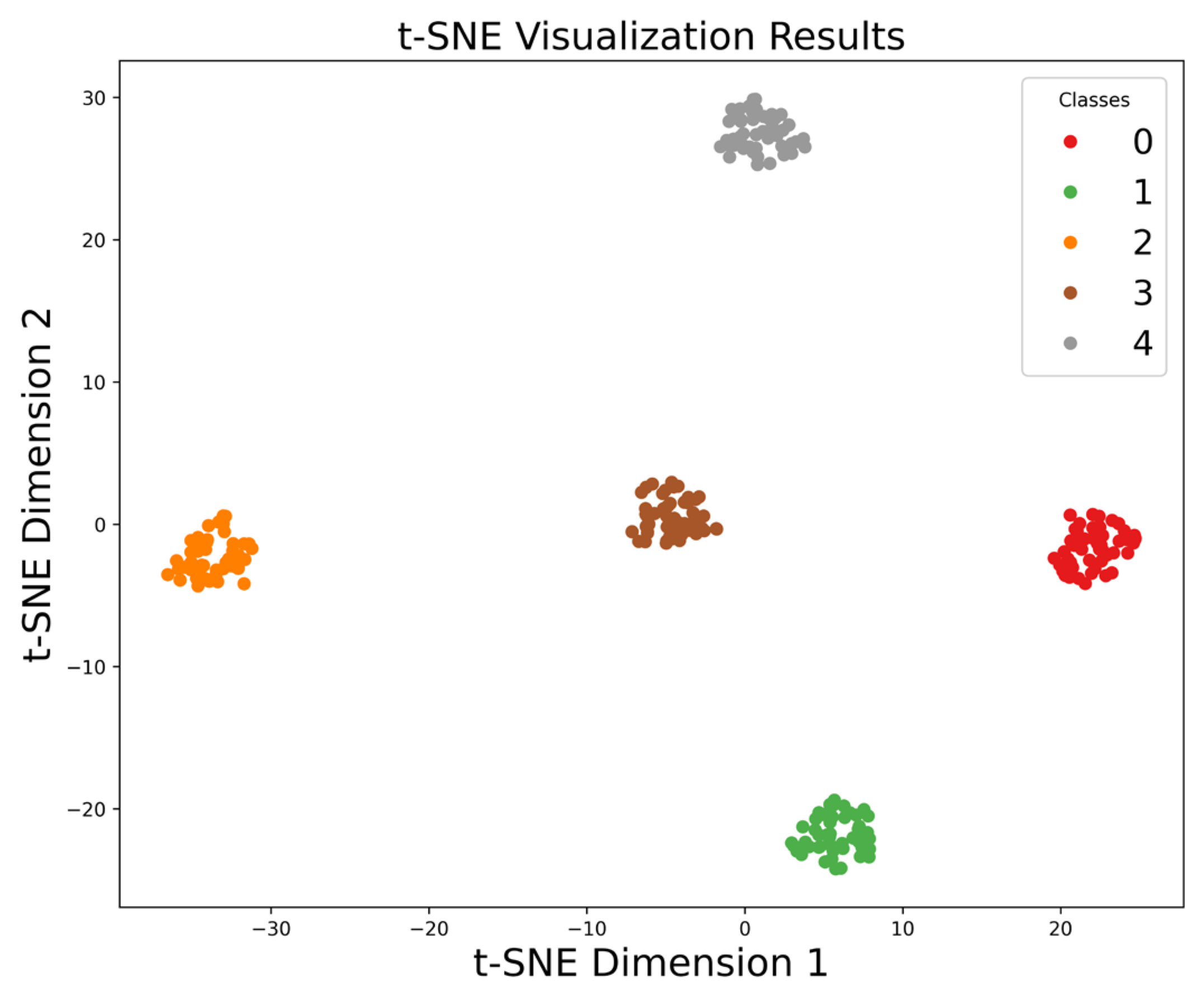

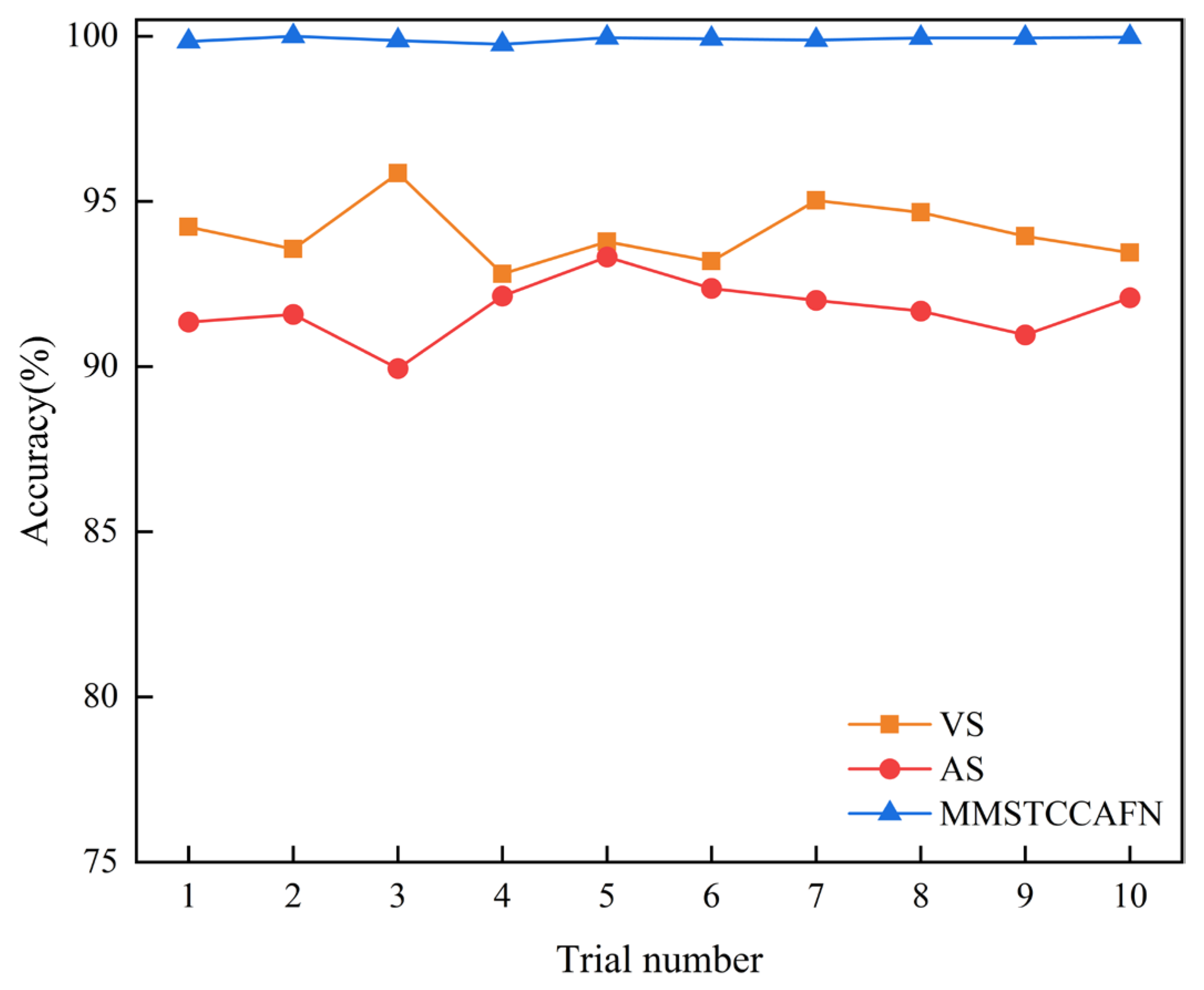

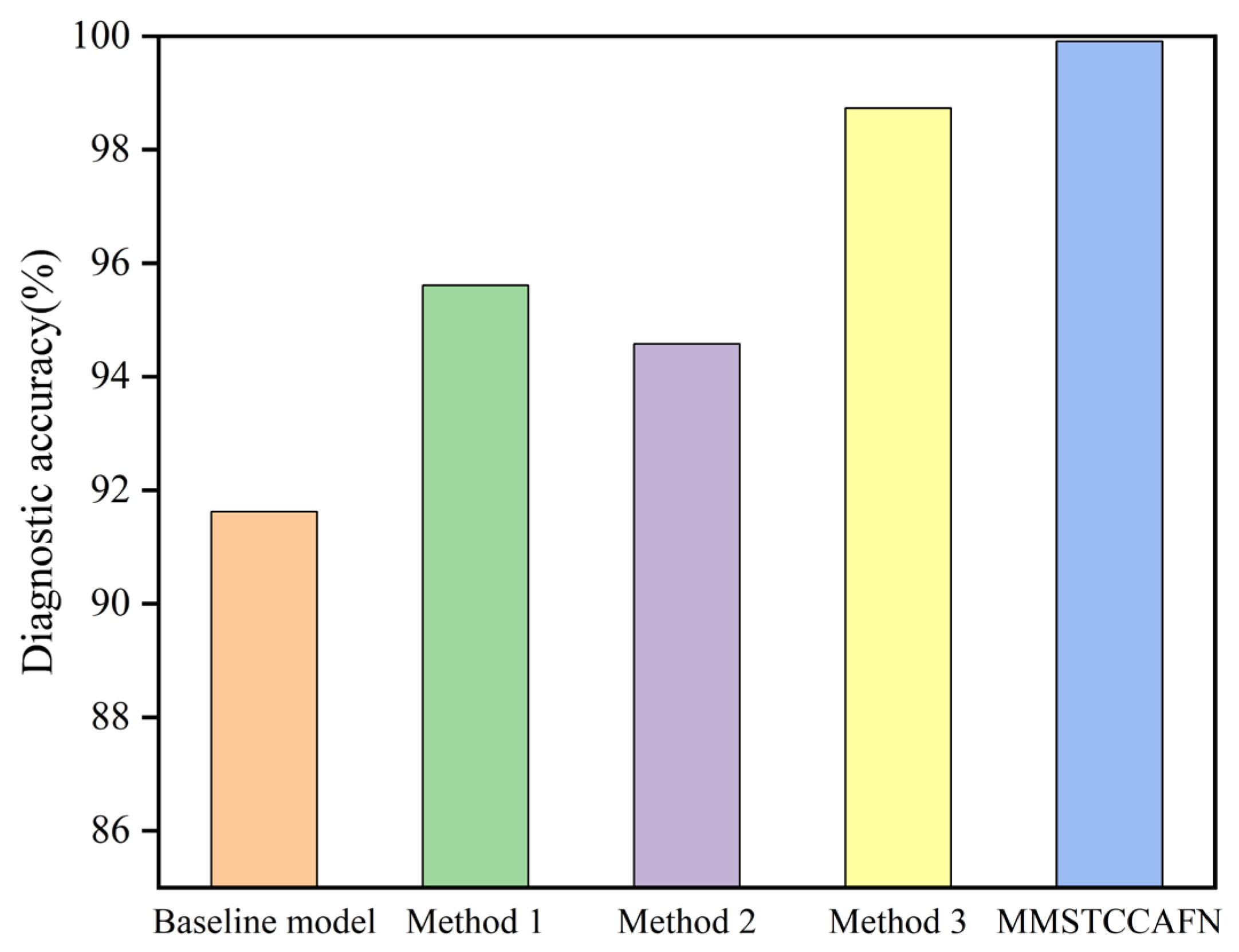

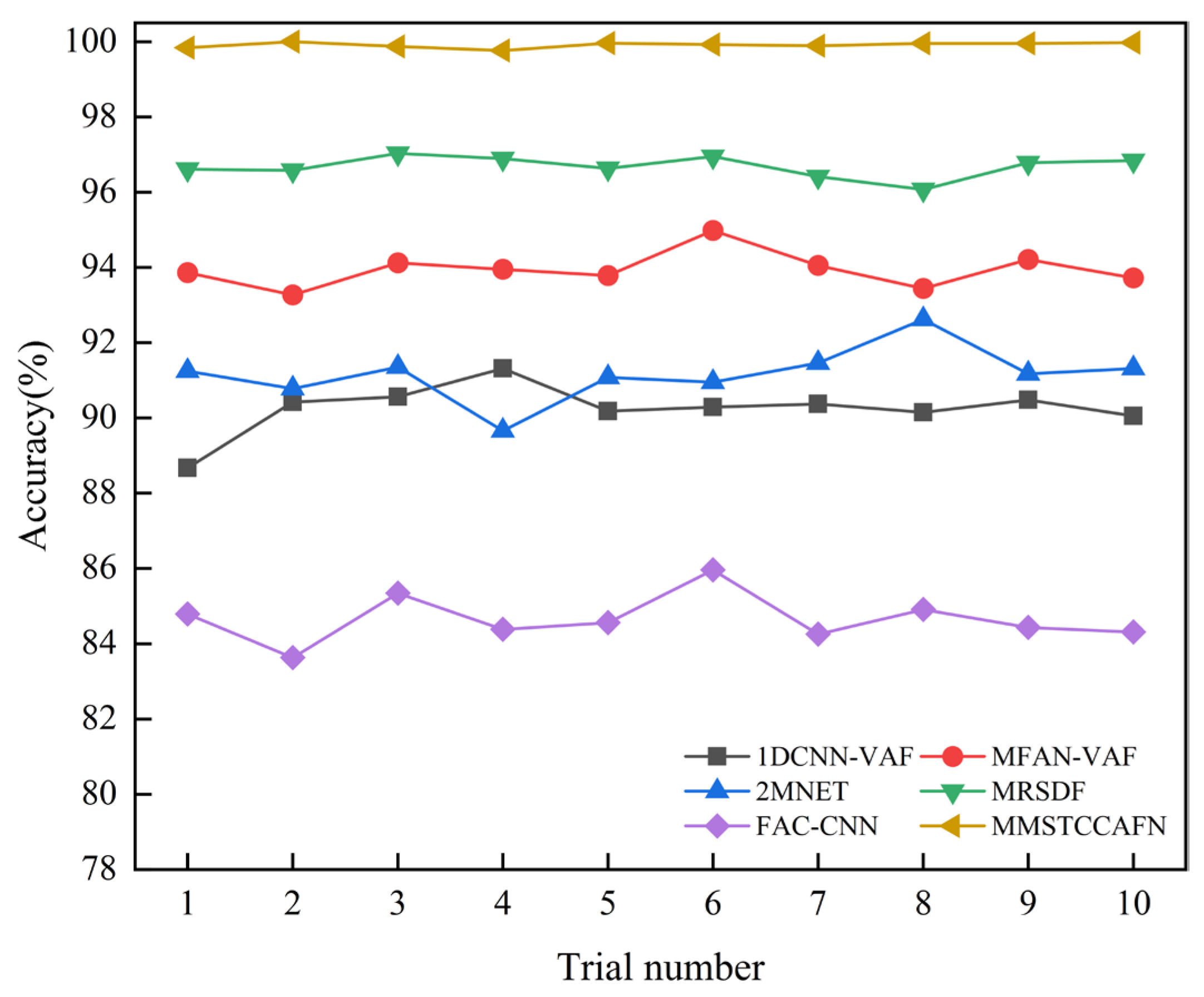

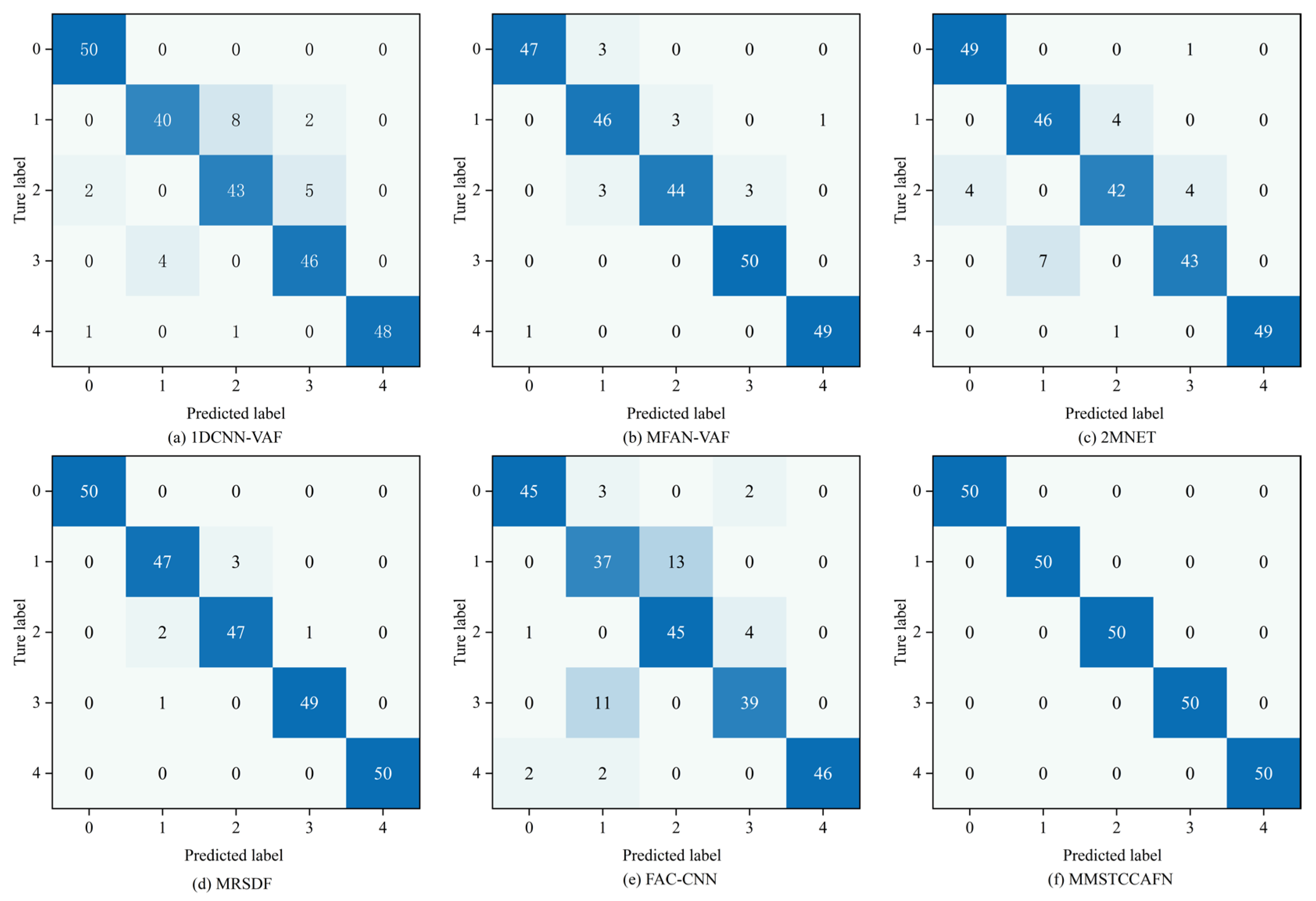

This paper proposes a multi-channel multi-scale spatiotemporal convolutional cross-attention fusion network (MMSTCCAFN) for bearing fault diagnosis. The network exploits the complementary characteristics of, and correlations between, acoustic and vibration signals, performing adaptive fusion from multi-scale feature perspectives. As a result, it captures multi-level detailed information, improving diagnostic performance in high-noise environments while overcoming the potential limitations of relying on a single sensor. The effectiveness of the proposed method was validated on two bearing datasets by comparing its diagnostic results with those obtained from single sensors. Furthermore, compared with other methods, MMSTCCAFN achieved the highest average accuracy and the lowest standard deviation on both datasets, demonstrating excellent performance and robustness. The ablation experiments further show that the three modules complement one another, underscoring the accuracy and stability of MMSTCCAFN.

Despite its high diagnostic accuracy, the proposed method still has certain limitations. First, practical industrial environments provide additional data types such as speed, load, and temperature, whereas this study fused only vibration and acoustic data. In the future, we plan to incorporate additional data types to obtain more useful information. Second, the internal learning process of the method is complex, making it difficult to understand why the model arrives at a specific result. Future work will focus on improving the transparency and interpretability of the model by evaluating the contribution of each input feature to the final result. Third, although the proposed method has potential advantages for real-time monitoring, practical implementation requires addressing issues such as sensor data synchronization and real-time data processing to ensure system stability and reliability. In future research, we will prioritize problems related to computational complexity and system integration to enhance the practical value of the method.