1. Introduction

Motion is a fundamental quality of sensory input. In vision, despite diverse evolutionary trajectories across different species, from insects and cephalopods to vertebrates, visual systems have converged on fundamentally similar mechanisms of motion processing [

1,

2]. However, motion is not exclusive to vision; it is also a hallmark of the tactile sensory system, with considerable behavioural relevance for both animals and humans. Everyday interactions such as object manipulation and haptic exploration involve relative motion between the skin and surfaces [

3]. For example, discerning roughness and smoothness, identifying material properties (e.g. metal vs. wood) or recognising object shapes requires dynamic contact through palpation and movement. Reading Braille depends on lateral movement of the fingertips to interpret sequences of raised dots. Tactile motion processing underpins fine motor control and precise object manipulation [

4,

5]. Yet, the perceptual and computational mechanisms underlying tactile motion remain incompletely understood.

Two principal sources of information for tactile motion have been identified in the literature [

5,

6]. The first relies on the sequential activation of mechanoreceptors at different skin locations as an object or stimulus moves across the skin—such as when an insect crawls along the arm. This includes apparent tactile motion, typically studied experimentally using discrete, pulsed stimuli delivered in succession to separate skin sites [

7,

8,

9,

10,

11,

12,

13,

14]. The second source involves skin deformation cues, particularly shear and stretch, which arise during sliding contact or friction. These deformations can convey directional information [

15], potentially through recruitment of distinct afferent populations, such as slowly adapting type II (SA2) units, which are sensitive to stretch and contribute to motion direction perception [

16].

At the level of periphery, slowly adapting type I (SA1) afferents convey high-resolution spatial information about contact location [

4,

17,

18]. The spatiotemporal pattern of their population activity is thought to encode motion direction and speed with acuity comparable to human perceptual performance [

18]. Rapidly adapting type I (RA1) afferents may also contribute to motion encoding via spatiotemporal patterns, though with lower spatial precision due to their larger receptive fields [

18]. SA2 afferents, previously mentioned, respond to skin stretch and support motion direction perception through their tuning to deformation patterns [

15,

16].

Here, I investigate a third and less explored mechanism for tactile motion: the perception of motion across fingers induced by phase-shifted, continuous streams of vibrotactile input delivered to two fingertips simultaneously. Unlike prior studies that typically rely on either (a) sequential, pulsed stimulations delivered to multiple discrete skin sites [

7,

9,

11,

13,

14,

19] or (b) physical sliding stimuli that engage friction-induced skin deformation [

5,

6], this paradigm involves two spatially fixed but temporally dynamic inputs. The stimulation does not involve skin movement or high spatial acuity, but rather evokes motion percepts through temporal phase differences between inputs to two fingerpads.

In previous tactile motion paradigms, the perceived continuity and smoothness of motion heavily relied on spatial continuity, either through densely packed actuator arrays or by delivering real mechanical motion through skin deformation. Apparent motion displays such as the OPTACON device and related arrays use a dense grid of vibratory pins to create a sense of continuous motion across the fingertip but are limited to a spatial extent of only a few millimetres on the skin surface [

9,

10,

11,

14,

20,

21,

22,

23]. Other approaches have relied on mechanical motion cues, where probes or surfaces are physically moved across the fingerpad, generating tangential shear and frictional forces that produce a percept of continuous sliding motion [

24,

25,

26,

27,

28]. However, these strategies are limited either by spatial coverage or by employing actual mechanical movement, which is not ideal for compact or wearable devices. The approach introduced here departs from these conventions by using only two spatially coarse stimulators, with smooth, continuous motion percepts instead emerging from the temporal continuity of phase-shifted vibrotactile stimulation across the fingers. This demonstrates a novel, temporally driven mechanism for tactile motion perception that does not depend on dense actuator arrays or elaborate skin-deforming hardware. Previous studies using similar phase-shifted vibrotacitle stimuli failed to elicit tactile motion perception, because they tested only relatively high modulation frequencies (e.g., 5 Hz) [

29], which lie above the range supporting directional motion perception. In the present study, I demonstrate that directional motion emerges only at lower envelope frequencies (≤1.5 Hz), thereby establishing the critical range that earlier studies overlooked.

Fingertips are among the most densely innervated tactile regions in human body [

30] and are primary organs for active exploration [

3]. This work uses continuous amplitude-modulated vibrations known to predominantly recruit rapidly adapting type II (RA2) afferents—i.e., Pacinian corpuscles—which are sensitive to high-frequency vibratory energy and exhibit large receptive fields [

31,

32,

33]. Unlike SA1 and RA1 afferents, RA2 units are less sensitive to fine spatial features but can detect remote vibratory events across skin and even bone. Notably, the stimuli here are delivered over the entire fingertip pad, eliminating reliance on fine spatial localisation, instead leveraging temporal synchrony or asynchrony across digits. This approach is analogous in principle to mechanisms found in certain arthropods, such as chelicerates, which detect and localise remote vibratory sources using their paired appendages [

34]. Similarly, humans may infer motion direction or location of a remote source by comparing asynchronous vibratory input across fingerpads [

35,

36]—effectively extending tactile spatial perception beyond the point of contact.

In this study, I first formalise the physical basis for detecting the location and direction of a remotely moving vibration source, and how such stimuli can be simulated through asynchronous amplitude-modulated input to two fingertips. I then present a series of psychophysical experiments characterising human perceptual performance in detecting the direction of such inferred motion, revealing a tactile motion perception mechanism that operates independently of spatial acuity or physical surface movement. This paradigm has implications for wearable and compact haptic interfaces such as those developed by Seim et al. [

37], de Vlam et al. [

38] and Huang et al. [

39], where efficient solutions for conveying directional motion are increasingly needed [

40,

41,

42]. Such applications include tactile displays, virtual and augmented reality (VR/AR) environments, and neuroprosthetic feedback systems [

41,

42,

43].

2. Materials and Methods

Vibrotactile stimulation is a versatile method for conveying spatiotemporal information through the skin and has been widely employed in both fundamental research and haptic technology applications [

44]. Arrays of tactors delivering temporally staggered pulses have been used to generate apparent motion across body surfaces such as between hands, along the arm, or across the back, simulating a moving tactile stimulus without physical displacement [

8,

12,

19,

44,

45]. While such paradigms rely on discrete bursts or pulses with differences in stimulus onset timing across spatially distinct sites to evoke motion percepts, the current study employs continuous amplitude-modulated waveforms with controlled phase offsets, enabling investigation of motion perception from continuous, distributed, phase-coded signals in the absence of distinct onsets or spatial displacement.

To model the stimuli generated by a remotely vibrating source, such as a mobile phone on a table, we consider a point source emitting a sinusoidal carrier wave

, where

and

denote the amplitude and carrier frequency of vibration, respectively. As this vibration propagates through the substrate—e.g., the table—approximately as a plane wave, it undergoes attenuation due to dissipation, scattering, and absorption. This attenuation typically follows an exponential decay with distance:

where

is the attenuation coefficient (dependent on medium properties and frequency), and

d is the distance from the source.

When the source moves relative to a fixed point, the amplitude at that point changes with time due to the variation in distance. These changes are proportional to the radial component of the source’s motion. In the special case of periodic movement at a frequency

, the received signal envelope at a fixed remote point with time-varying distance

is itself periodic, and the received signal can be written as follows:

where

is the delay due to propagation over distance

, and

is the Doppler shift caused by the source’s motion. Both parameters

and

depend on the wave propagation speed in the medium, which is determined by its stiffness and density (e.g., ∼5790 ms

−1 in stainless steel, and ∼3960 ms

−1 in hard wood).

For distances on the order of a meter or less, corresponds to sub-millisecond or nanosecond delays, well below biologically plausible detection thresholds. Moreover, assuming slow motion of source relative to wave propagation speed, and , the Doppler shift is negligible. Henceforth, I assume and .

2.1. Motion Direction Estimation via Two Touch Points

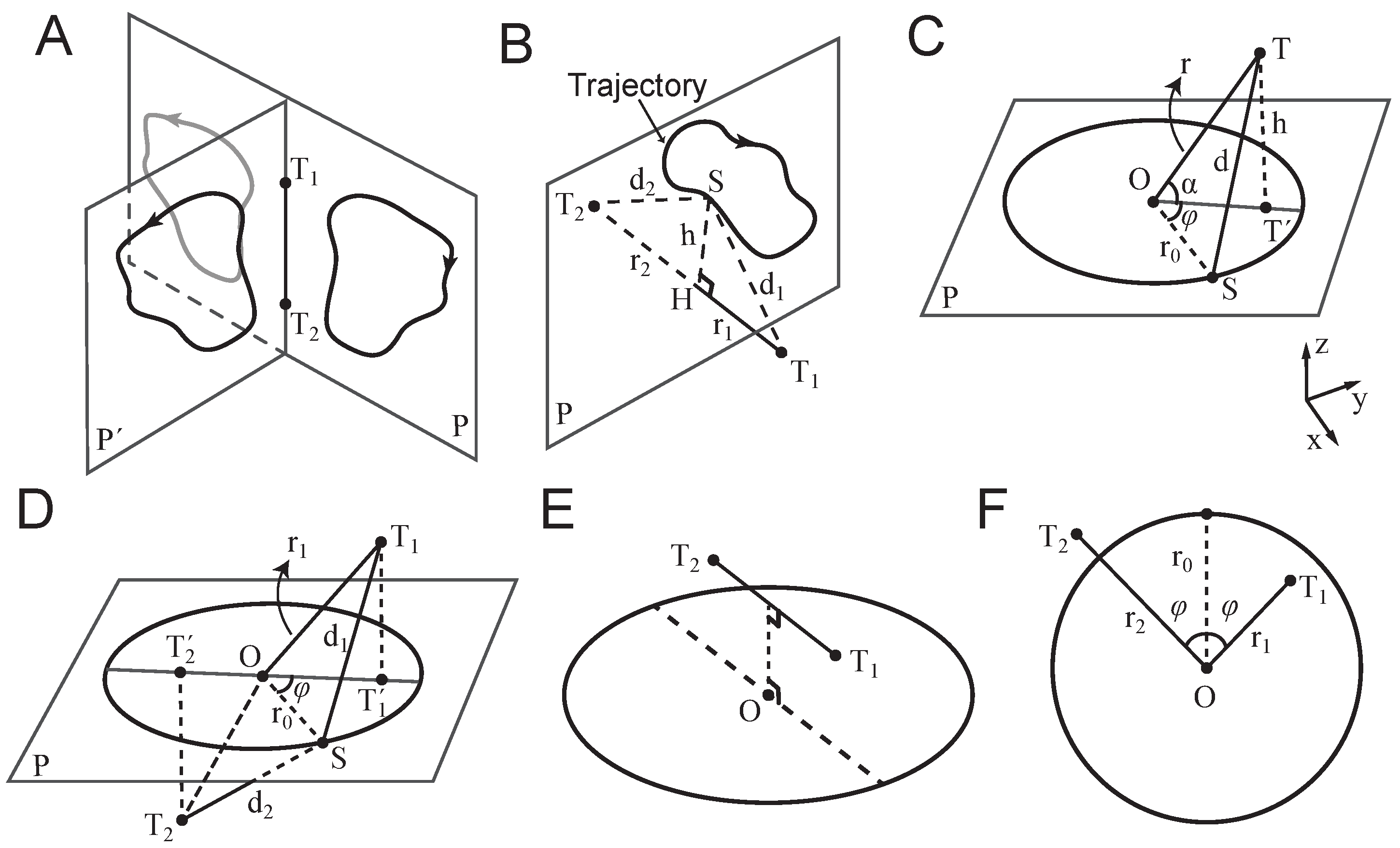

When a sensor (e.g., a fingertip) is placed at a fixed point (hereafter a `touch point’), the direction of source movement along the radial axis (toward or away from the touch point) can be inferred from temporal changes in the vibration envelope. However, a single touch point provides no information about the tangential component of the motion. To recover trajectory information, at least two touch points positioned at distinct spatial locations are required (

Figure 1).

Let

and

denote two such points. The envelopes of vibration received at these two locations can, in principle, be used to infer the moment-by-moment position of a moving source in two-dimensional (2D) space. However, the reconstruction is ambiguous: any trajectory and its mirror reflection across the line connecting the two touch points (the touch-point axis) yields identical vibration patterns. This is illustrated in

Figure 1A.

In three-dimensional (3D) space, ambiguity increases: all trajectories that are rotationally symmetric around the touch-point axis produce indistinguishable vibration profiles at the two points. That is, any trajectory that can be rotated about this axis into another remains perceptually equivalent at the touch points, leading to infinite number of trajectories that create an identical vibration pattern at touch points and .

2.1.1. In-Phase Vibrations

As discussed, a periodic source movement with frequency

f results in a periodic amplitude modulation at each touch point. If the envelopes at

and

vary together over time—i.e., they are monotonic transformations of one another under a strictly increasing odd function—they are said to be `in phase’. For example, consider a trajectory confined to a plane perpendicular to the touch-point axis (

Figure 1B). The closest and farthest positions on the trajectory to

are identical to those to

. Let

denote the instantaneous orthogonal distance from the touch-point axis to the source trajectory at any moment

t, and let

be the perpendicular distance from touch point

to the plane of the trajectory. The amplitude at each touch point is given by the following equation:

and the derivative:

This shows that the envelope at each touch point changes in the same direction (increasing or decreasing together), confirming that they are in phase. Additionally, one can show the following:

which is a strictly increasing function of

, again confirming phase alignment.

2.1.2. Anti-Phase Vibrations

Two waveforms are anti-phase if their envelopes exhibit a phase difference of

, such that when one increases, the other decreases. This occurs when the envelopes are related through a negatively proportional transformation under a strictly increasing odd function. In this case, the perceived direction of motion alternates across each half-cycle, creating a bouncing or bidirectional trajectory. Perceptually, such anti-phase vibration patterns may give rise to “bistable” motion perception, wherein the ambiguous temporal dynamics support two competing interpretations, with each corresponding to motion in opposite directions, that may alternate spontaneously over time. Examples of anti-phase configurations are shown in

Figure 1D,E.

2.2. Circular Motion of a Vibrating Source

Consider a point source moving along a circular trajectory with radius

and constant tangential velocity

v (

Figure 1C). The frequency of motion is

Let

T be a touch point located at polar coordinates

relative to the centre of the circular path

O. The received vibration at time

t is given by the following equation:

where

is the source amplitude,

is the carrier frequency, and

is the attenuation coefficient of the medium. The envelope of the received vibration is the following time-varying function:

which oscillates at frequency

f, between a minimum of

and a maximum of

.

2.2.1. Extension to 3D

In three dimensions, the equation for the received signal becomes the following:

where

is the elevation of the touch point

T relative to the trajectory plane denoted by

P, and

is the inclination angle in spherical coordinates (

Figure 1C).

2.2.2. Phase Differences from Geometry

Let

P denote the plane of circular trajectory, centred at

O. Consider two touch points,

and

, with projections

and

onto plane

P. Without loss of generality, let the coordinates of the two touch points be

and

, respectively. According to Equation (

9), the received vibrations at the two touch points are as follows:

Thus, the envelope phase difference between the two points is

.

Assume that the projected points and the centre of the circular path

O lie on a straight line (

Figure 1D). Then, if

O lies between

and

(i.e.,

) the envelopes of the vibrations are anti-phase (

Figure 1D,E). Conversely, if

O lies outside the segment connecting

—i.e.,

—the envelopes are in phase.

For simplicity, hereafter, I focus on the 2D symmetric case where the centre of the circular trajectory lies on the perpendicular bisector of the segment connecting the two touch points and , such that . In this configuration, the two touch points are equidistant from the centre, resulting in vibration envelopes with equal amplitude range. This condition facilitates visualisation and analysis of in-phase and anti-phase conditions in a two-dimensional geometry.

2.3. Experimental Procedure

Three psychophysical experiments were conducted to investigate vibrotactile motion direction discrimination using a common two-alternative forced-choice (2-AFC) discrete trial paradigm. In this experiment, participants reported the perceived direction of vibrotactile motion (left vs. right) generated by two amplitude-modulated (AM) vibrations delivered simultaneously to the index and middle fingertips of the right hand (see details below). All experimental procedures were approved by the Monash University Human Research Ethics Committee (MUHREC) and conducted in accordance with approved guidelines.

2.3.1. Participants

A total of 25 participants (12 female; age range: 19–34; one left-handed) took part across the three experiments. All were undergraduate or graduate students at Monash University. Each experiment involved distinct participant groups. All participants provided written informed consent prior to the experiment.

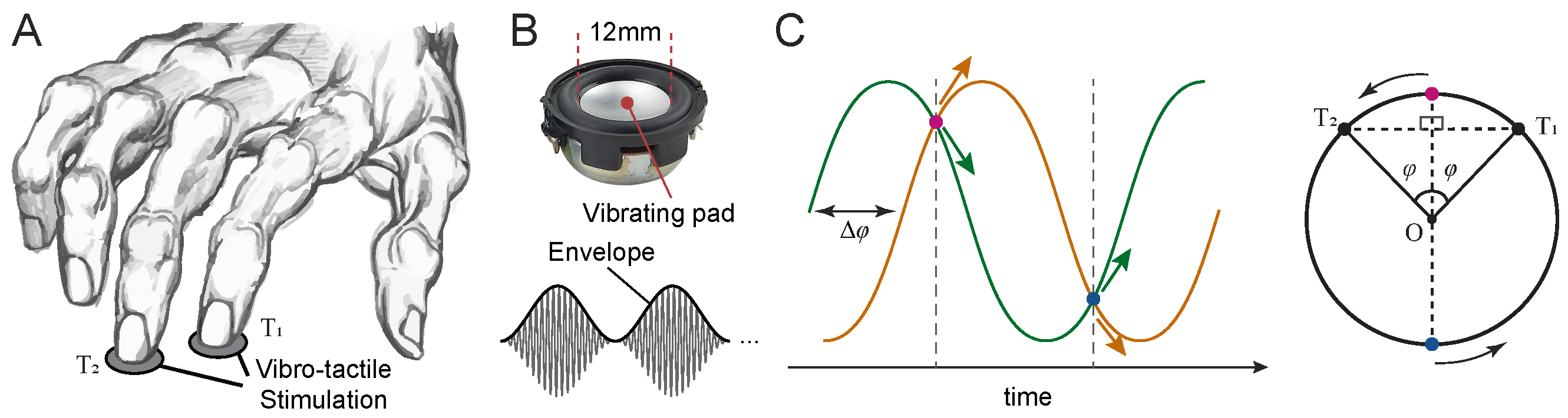

2.3.2. Vibrotactile Stimulation

In all experiments, vibrotactile stimuli were delivered simultaneously to the index and middle fingertips of the right hand using two miniature solenoid transducers (PMT-20N12AL04-04, Tymphany HK Ltd; 4 Ω, 1 W, 20 mm diameter) mounted 5 cm apart on a vibration-isolated pad. Stimuli were generated in MATLAB (R2022a; MathWorks Inc.) at a sampling rate of either 48 kHz or 192 kHz and were output through a Creative Sound Blaster Audigy Fx 5.1 sound card (model SB1570). The peak-to-peak amplitude of the output waveform was set to 1.98 V. The shape and curvature of the transducer matched the size and contour of adult fingertips [

46]. The base (carrier) frequency of the vibrations was

Hz. Although this frequency is within the audible range, we verified during pilot testing that the stimuli were imperceptible to hearing and could only be perceived through tactile sensation. Amplitude modulation was applied to generate low-frequency envelopes, with each trial containing 3 modulation cycles. The modulation amplitude was set well above the detection threshold, and pilot testing confirmed that even halving the amplitude had negligible effects on performance in the motion discrimination task.

In all experiments, sinusoidal envelopes were used due to their mathematical and physical properties. Sinusoids are fundamental in Fourier decomposition and are the only waveforms that preserve their shape under summation with others of the same frequency. Sinusoidal modulation mimics natural oscillatory signals (e.g., wind, light, and sound waves) and implies motion with varying velocity, similar to pendular or spring–mass dynamics.

For a given phase difference

, the two sinusoidally modulated vibrations were defined as follows:

To avoid any response bias or cues about motion direction arising from differences in initial envelope amplitude, vibration onset was set to one of the two isoamplitude points where the envelopes were identical. For non-zero

, these occur at

and

, with the corresponding envelope amplitudes of

and

, respectively (

Figure 2C). Since cosine is an even function, the envelope magnitudes are identical for

and

. At each of these onset points, the envelopes have opposite slopes—one rising and the other falling—corresponding to opposite directions of motion along the circular trajectory. On each trial, one of these two onset points was selected at random with equal probability, ensuring that initial envelope phase provided no reliable cue about motion direction.

In Experiment 1, we additionally included stimuli with exponentially decaying envelopes to simulate more realistic, physically plausible patterns of vibration propagation. The envelopes were derived from Equation (

10), using fixed parameters

and

:

These stimuli were also initiated at one of the two isoamplitude points selected randomly on each trial, consistent with the sinusoidal condition.

2.4. Motion Direction Discrimination Task

Participants performed a discrete-trial two alternative forced-choice (2-AFC) task to judge the perceived direction of vibrotactile motion. On each trial, two amplitude-modulated vibrations were delivered simultaneously to the index and middle fingertips of the right hand. Participants were instructed to gently rest their fingertips on the transducers without applying force (

Figure 2). The two transducers were spaced 5 cm apart on a vibration-isolated pad, arranged such that vibrations from one transducer were not perceptible at the other. Participants rested their arm on the chair armrest with their wrist comfortably supported on a padded surface aligned with the stimulation platform. They were instructed to maintain a stable hand posture throughout each session. All participants reported clear perception of the envelope modulation, and pilot testing confirmed that the stimulus amplitude was well above detection threshold.

The task was self-paced, with all responses made via keyboard. On each trial, a pair of vibrations with a specific envelope phase difference was presented for three cycles (e.g., 6 s at an envelope frequency of 0.5 Hz). Participants reported the perceived motion direction (leftward or rightward) by pressing the corresponding arrow key with their left hand. There was no time limit for responses, and participants could respond at any moment during or after stimulation.

The specific phase differences and envelope modulation frequencies varied across the three experiments. In Experiment 1, I compared sinusoidal and exponential envelopes with phase differences of ±30° to ±150° (30° increments) at a fixed envelope frequency of 0.5 Hz. In Experiment 2, sinusoidal envelopes were used with phase differences ranging from −180° to 180° in 30° increments, tested at envelope frequencies of 0.5, 1, and 1.5 Hz. In Experiment 3, sinusoidal envelopes were tested at phase differences of 0°, ±30°, ±60°, ±90°, and 180° at a fixed frequency of 0.5 Hz, with participants additionally providing confidence ratings after each response by pressing a number key from “1” (no confidence) to “5” (absolute certainty). These ratings were linearly scaled to a 0–100% confidence range. Experimental conditions were presented in pseudo-random order across trials, with approximately 30 repetitions per condition.

2.5. Psychometric Modelling

To quantify sensitivity to phase differences at each modulation frequency, we modelled perceptual discrimination performance as a function of phase difference

using a nonlinear periodic-sigmoid psychometric function:

where

denotes the predicted proportion of correct responses at a given phase difference

, and

is a sensitivity parameter reflecting the steepness of the psychometric function. Higher

values indicate greater sensitivity to phase differences. The model is based on the well-known logistic function [

47] applied to the sine of the phase difference

, ensuring that predicted performance is at chance (0.5) at 0° and 180°, where the vibrations provide no directional cue. The sinusoidal form, combined with its single-parameter structure, avoids overfitting and reflects the hypothesised mechanism of motion perception based on phase-difference readout across spatially separated tactile sensors. The model was fit to group-averaged accuracy data using nonlinear least-squares regression, and the model performance (goodness-of-fit) was assessed using the coefficient of determination (

).

2.6. Probabilistic Model of Temporal Reference Detection and Cycle Disambiguation

Consider two AM vibrations with phase difference

, which corresponds to a temporal lag

d between their envelopes:

where

f is the envelope modulation frequency. Let

and

denote the perceived moments of two consecutive salient reference points (e.g., peaks) of vibration 1, and let

denote the corresponding reference point of vibration 2 that occurs between

and

. These reference points are extracted from the envelopes of the amplitude-modulated vibrations. Hereafter, we focus on peak features, but the same logic applies to other amplitude landmarks (e.g., troughs or zero-crossings). Due to sensory noise and perceptual limits, each detected peak is assumed to lie within a temporal uncertainty window around the true peak time. For each reference point, I model the perceived time as being uniformly distributed within a window of width

w centred at the true peak. This uncertainty window depends on the perceptual threshold with which the envelope is extracted. For instance, assuming a sinusoidal envelope in Equation (

11), the time intervals where the envelope deviates from the peak amplitude by less than a threshold value

correspond to durations satisfying

, which implies the following:

so that the total uncertainty window is as follows:

Since the vibrations are periodic and have identical envelope shapes (with vibration 2 being a phase-shifted version of vibration 1), the reference point of vibration 2 is shifted by lag

d. Similarly,

is one cycle after

, i.e., with an offset of

T, where

is the envelope modulation period. Without loss of generality, we assume

and align the reference points relative to zero and define their distributions as

,

, and

. Correct perception of motion direction depends on both (1) the reliability of judging the temporal order of salient reference points (e.g., peaks) and (2) disambiguation of within-cycle versus across-cycle intervals. As mentioned, while the focus here is on peak features, the same logic applies to other amplitude landmarks (e.g., troughs or zero-crossings).

Based on these distributions, two forms of perceptual inference are required to judge motion direction: first, the temporal order judgement, i.e., determining whether the peak of vibration 2 occurs after the peak of vibration 1 (). Second, the inter-peak interval discrimination, i.e., comparing whether the interval between and is shorter than the interval between and , i.e., testing whether .

The sections that follow formalise these probabilities and derive an analytical expression for the overall probability of a correct motion direction judgement.

2.6.1. Temporal Order Judgement

The first source of error arises from uncertainty in judging the temporal order of peaks. If the temporal lag

d is smaller than the uncertainty window

w, the perceived ordering of

and

may be incorrect. The probability that the peak of envelope 1 is perceived before that of envelope 2 (i.e., a correct temporal order judgement) is given by the integral of the joint distribution of

and

over the area

:

As

and

are mutually independent with uniform distributions,

is distributed triangularly over the interval

, yielding the following:

The case

guarantees correct order due to non-overlapping supports.

2.6.2. Across-Cycle Ambiguity and Inter-Peak Interval Discrimination

As

d increases and the uncertainty window extends into the next modulation cycle, another form of error emerges. This is when the uncertainty around

extends beyond the halfway point of the modulation period

T; the perceived peak of envelope 2 may fall closer in time to the next peak of envelope 1 (denoted

) rather than the original one at

. This may result in an incorrect interval comparison, i.e.,

, thus misjudging the motion direction. Based on the mutually independent uniform distributions of

,

, and

, the probability density function of

is a piece-wise quadratic function of the following form:

The probability of avoiding this across-cycle confusion is as follows:

The probability is 1 when

, and drops below 1 as

d increases beyond this point.

2.6.3. Joint Probability of Correct Motion Perception

Correct motion perception requires both correct temporal order identification of peaks and correct across-cycle inter-peak interval discrimination. These two conditions are not independent, and their joint probability must be calculated conditionally. Let .

The joint probability of a correct response is as follows:

Using the law of total probability over the distribution of

, the second term can be rewritten as follows:

where

is the conditional probability density function of

given

, defined as follows:

with

denoting the triangular probability density function of

and the normalisation constant

as derived in Equation (

15). Thus, the joint probability becomes as follows:

The conditioned probability

can be expressed as follows:

where

is the distribution of the difference between

and

, given

. The conditional distribution

, where

so that the support length is

, at most

w. This leads to a trapezoidal distribution for

, from which we derive the cumulative probability:

The total probability of a correct decision is obtained by substituting this into Equation (

19). The full expression combines a triangular distribution for

, a trapezoidal distribution for

, and a conditional integration over all valid

. Though based on simple assumptions, this model predicts a non-linear psychometric curve that captures key features observed in the data, including asymmetries in performance (e.g., better performance at 30° than at 150° phase lags in Experiment 2).

3. Results and Discussion

3.1. Experiment 1: Direction Discrimination Using Sinusoidal vs. Exponential Envelopes

To examine whether tactile motion perception can arise from simple envelope phase differences alone, I first tested whether participants could discriminate the direction of motion from two simultaneous vibrations with either sinusoidal or naturalistic—i.e., exponential—amplitude-modulated (AM) envelopes. Both envelope types simulated a virtual motion trajectory via systematic phase differences across two fingertips. Participants performed a 2-AFC motion direction discrimination task at an envelope frequency of 0.5 Hz. On average across subjects (n = 8), direction discrimination accuracy was 85.5% ± 4.2% SEM for exponential envelopes, and 80.2% ± 5.1% SEM for sinusoidal envelopes (

Figure 3). While exponential envelopes yielded slightly higher accuracy by 5.3% ± 1.5% SEM—possibly due to their closer resemblance to naturalistic wave propagation—participants still showed robust performance with sinusoidal envelopes. This demonstrates that the tactile system extracts directional information purely from sinusoidal phase offsets, despite their more abstract physical basis.

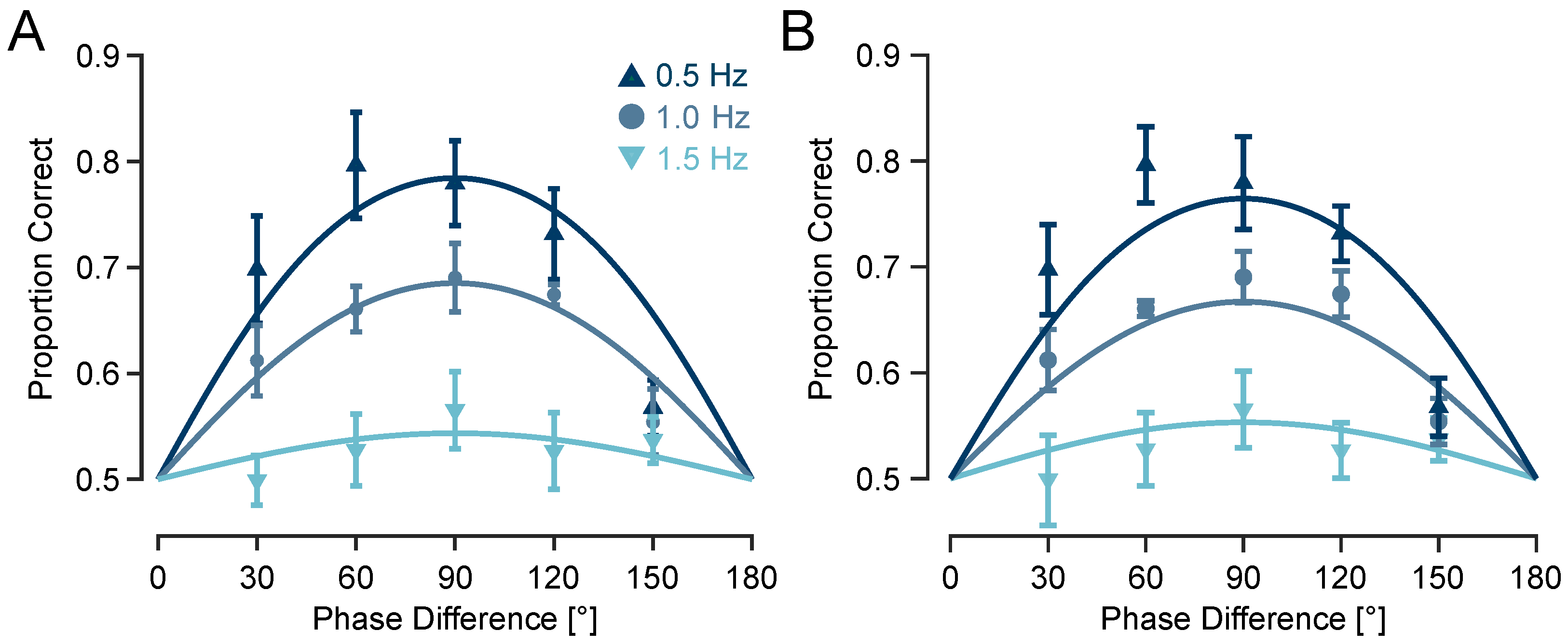

3.2. Experiment 2: Upper Frequency Limit for Tactile Motion Discrimination Lies Below or at 1.5 Hz

To quantify discrimination performance at each envelope frequency, I fit a sigmoid psychometric model with a sensitivity parameter

to the proportion of correct responses as a function of phase differences (see Methods). At 0.5 Hz, the model fit was robust (coefficient of determination

) with a relatively high sensitivity parameter

, predicting a maximum accuracy of 78.4%. At 1 Hz, performance declined moderately (

;

), with a predicted maximum accuracy of 68.5% correct responses. At 1.5 Hz, performance approached chance level (

;

) with a predicted maximum of just 54.4% correct (

Figure 4A), indicating that the upper temporal limit for perceiving direction of tactile motion lies below 1.5 Hz.

To further assess the effects of phase difference and modulation frequency on a trial-by-trial basis, while accounting for subject-level variability, I fit a generalised linear mixed-effects model (GLMM) using a logit link function and binomial distribution. The logit transformation yields a logistic sigmoidal response function, consistent with the psychometric curve fitted at the group level. Fixed effects included

and its interaction with modulation frequency (

f), with random slopes for

across participants. Specification enforces chance-level performance at 0° and 180°. The fixed sine term was significantly positive (

SE; 95% CI

;

), indicating enhanced accuracy with increasing sine of phase difference. The interaction term was significantly negative (

SE; 95% CI

;

), demonstrating that phase sensitivity declined at higher modulation frequencies. The model provided an adequate fit to the data (log-likelihood = −10,094; deviance = 20,187;

trials). The estimated standard deviation of the subject-specific random slopes was 0.195, reflecting modest between-participant variability, and the dispersion parameter was 0.998, indicating no evidence of overdispersion beyond the binomial assumption. As illustrated in

Figure 4B, model predictions captured the systematic decline in phase sensitivity with increasing frequency and matched the empirical data across frequencies.

To verify the unreliability of performance at 1.5 Hz, the population-average (marginal) GLMM accuracy at 90° was computed for each frequency and statistically compared with chance performance (50%). These were obtained by Monte-Carlo integration over the estimated random-slope distribution, with 95% confidence intervals from 5000 parametric bootstrap samples of the the fixed effects (from the estimated covariance matrix). At 1.5 Hz, the model predicted 55.3% accuracy (95% CI ), which was marginal and not reliably different from chance (). In contrast, predictions at 1.0 and 0.5 Hz were 66.7% (95% CI ) and 76.4% (95% CI ), respectively, both highly significant relative to chance ().

To directly test performance at 1.5 Hz, a separate GLMM restricted to this frequency revealed that the effect of across the full range of tested phase differences was not statistically significant (, 95% CI , ). This aligns with the sharp drop in sensitivity observed in the group-level psychometric fits (), further supporting the interpretation that 1.5 Hz marks the upper perceptual limit for robust phase-based motion discrimination in this paradigm. The analysis included 1478 trials across participants, providing sufficient statistical power to detect meaningful effects. Moreover, in the GLMM analysis, the interaction between phase and frequency was highly significant (), confirming that discrimination performance varies systematically with modulation frequency. The absence of a statistically reliable effect at 1.5 Hz reflects a genuine loss of directional information, not a lack of statistical power.

These findings contrast with those of Kuroki et al. [

35], who examined human sensitivity to AM vibrotactile stimuli up to 20 Hz in a synchronisation–asynchronisation detection task [

35]. They reported that participants could reliably detect synchrony or asynchrony in AM signals at modulation frequencies nearly ten times higher than those supporting motion direction discrimination in the present study. Moreover, they reported an inverse relationship between modulation frequency and detection threshold, with higher frequencies yielding better synchrony detection. A subsequent follow-up study using similar AM stimuli, however, examined frequencies above the present motion discrimination threshold (e.g., 2.5 and 5 Hz) and found no evidence of motion perception [

29], which is consistent with our present finding that directional motion perception is absent above 1.5 Hz. This discrepancy between the frequency ranges supporting asynchrony detection and directional motion perception underscores a critical distinction: while humans are capable of detecting synchrony in high-frequency AM signals, perceiving directional motion from inter-finger phase differences relies on much lower envelope frequencies. These differences point to potentially distinct neural mechanisms supporting temporal coincidence detection versus motion perception in the tactile domain.

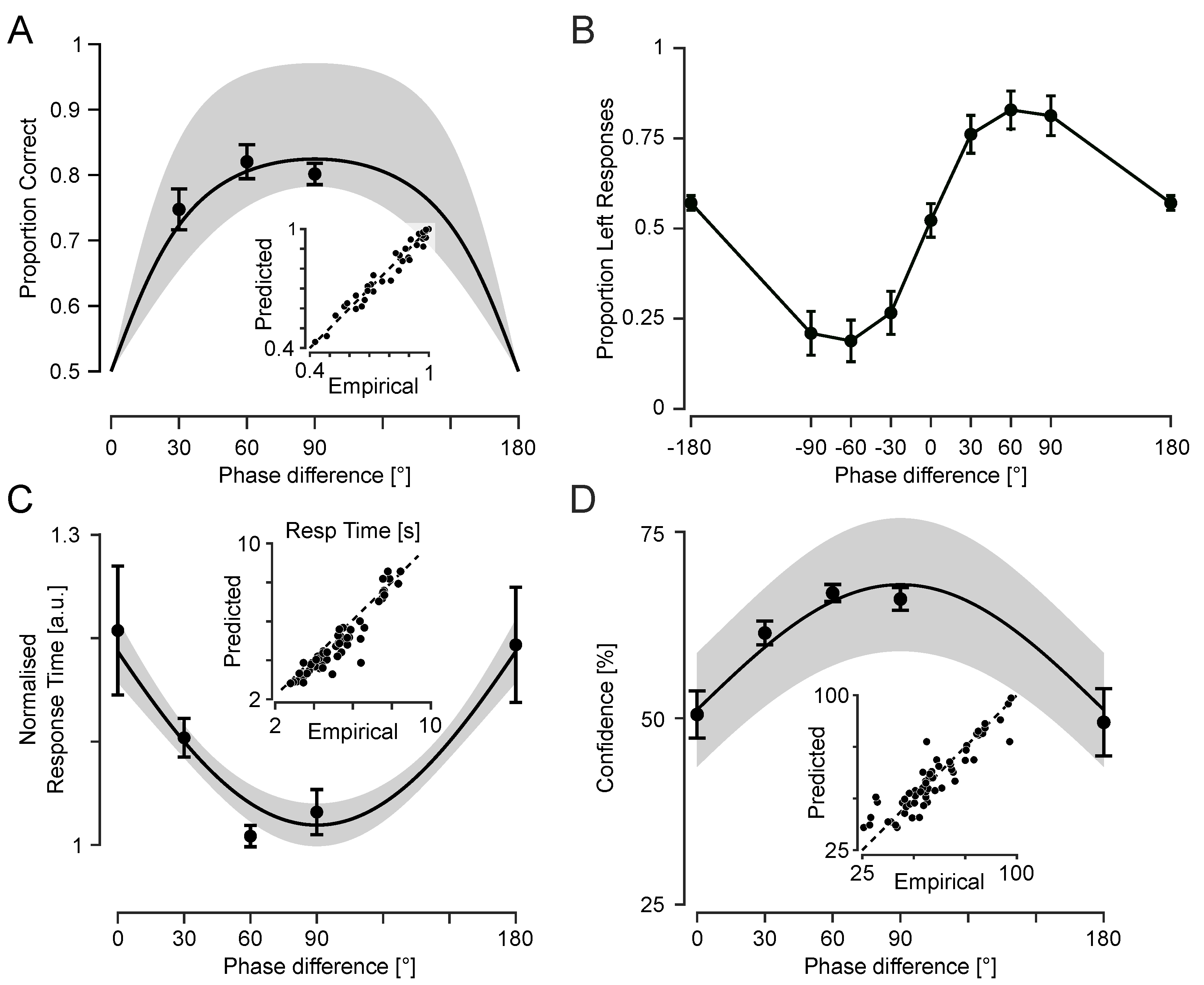

3.3. Experiment 3: Cognitive and Metacognitive Signatures of Tactile Motion Perception

Building on Experiments 1 and 2, which established that tactile motion perception depends on the phase difference between fingertip vibrations, Experiment 3 introduced confidence ratings and examined behavioural signatures of perceptual ambiguity. By focusing on phase conditions with minimal directional information (e.g., 0° and 180°), Experiment 3 aimed to characterise how motion uncertainty is reflected in decision confidence, reaction times, and potential choice biases.

3.3.1. Ambiguity at 0° and 180° Revealed by Choice Distribution

To assess whether phase differences between fingertip vibrations generate a reliable perception of motion direction, I examined participants’ choices across the range of phase offsets. For directional phase differences (e.g., ±30°, ±60°, and ±90°) performance accuracy captures the extent to which participants reported motion direction consistent with the sign of the phase difference (see

Figure 5A). However, at 0° and 180°, the vibrations were either perfectly in-phase or anti-phase across the two fingertips, resulting in symmetric temporal envelopes with no consistent directional cue. As such, for these two conditions, “correct” or “incorrect” responses are undefined. Thus, I instead analysed these conditions in terms of choice likelihood—specifically, the proportion of “leftward” responses (

Figure 5B).

At 0°, participants selected “left” on 52.2% of trials (SEM = 4.7%), not significantly different from chance (t(11) = 0.48; p = 0.64), consistent with perceptual ambiguity. At 180°, however, participants showed a subtle but reliable leftward bias (mean = 56.8%; SEM = 2.1%), which was significantly above chance (t(11) = 3.24; p = 0.008). This bias suggests that at 180°, even in the absence of reliable directional cues, early envelope asymmetries or internal decision biases may influence motion judgements.

3.3.2. Slower Responses Reflect Ambiguity in Motion Signal

Response time (RT) provides a behavioural index of the strength of sensory evidence. Here, I analysed how RT changed as a function of phase difference to assess how tactile motion signals support directional judgements under varying degrees of ambiguity. As shown in

Figure 5C, RTs followed a U-shaped pattern: responses were slower for the ambiguous conditions (0° and 180°) and faster for intermediate phase differences. To statistically assess this pattern, two linear mixed-effects (LME) models (with random intercepts per subject) were fitted to trial-level RTs. Trials with excessively long RTs (>15 s) were excluded, and the remaining RTs were log-transformed to reduce skewness. To account for individual baseline differences, subject-wise mean log-transformed RTs were subtracted, yielding a normalised measure. This approach is equivalent to divisive normalisation of RTs by each participant’s geometric mean.

The first model included as a fixed-effects predictor with random intercepts across participants, capturing the expected U-shaped pattern. The model revealed a robust negative effect ( SE; 95% CI ; ), consistent with slower responses at 0° and 180° and faster responses at 30°–90°. The second model included phase difference (in radians) and its square as fixed effects, allowing potential asymmetries. This quadratic model showed a significant positive quadratic term ( SE; 95% CI ; ) and a significant negative linear term ( SE; 95% CI ; ), implying a vertex (minimum) at 95.0°. These estimates align with a near-symmetric U-shaped RT pattern, as captured by the sine model. Indeed, the average RTs at 0° and 180° were nearly identical (5.32 ± 0.44 s and 5.32 ± 0.48 s, respectively), and both were higher than for other phase differences. However, a detailed characterisation of symmetry requires denser sampling across the full range of phase differences, particularly between 90° and 180°, as in Experiment 2.

Bayesian Information Criterion (BIC) provided preference for the simpler model (

), while Akaike Information Criterion (AIC) showed partial preference (

). A likelihood-ratio comparison treated heuristically (as models are not strictly nested) found no significant improvement in the fit over the simpler sine model (

,

), and residual dispersion was comparable for both models (∼

in log units). Thus, both approaches converge on the same conclusion that RTs were systematically longer under ambiguous conditions (0° and 180°) and shorter when phase differences provided stronger motion cues. The sine model, previously used to characterise performance in Experiments 2 and 3, captures this pattern well. Thus, the sine model specification was used as the primary summary of the RT effect in

Figure 5C, with model predictions retransformed from log-space using the smearing estimator [

51].

3.3.3. Confidence Ratings Track Motion Signal Strength

Confidence ratings reflect participants’ subjective certainty about each decision, providing a metacognitive index of how strongly they perceived the motion signals on each trial. Confidence was lowest at the extreme phase differences (0° and 180°) and highest at intermediate phase differences (30°–90°) as shown in

Figure 5D, mirroring the pattern observed in reaction times and consistent with weaker or more ambiguous motion signals at the extremes. As in the RT analysis, two linear mixed-effects models were used to model trial-level confidence. The first model used the phase difference (in radians) and its square for fixed effects with a random intercept per participant. The model revealed a robust quadratic relationship between confidence and phase difference reflected in a significant negative quadratic term (

SE; 95% CI

;

) and a significant positive linear term (

SE; 95% CI

;

), consistent with an inverted-U pattern.

The second model used as the fixed-effect, with random intercepts and participant-specific sine slopes. The fixed sine effect was positive and reliable ( SE; 95% CI ; ). This model fit the data better than the quadratic model ( and ), and this was also supported by a likelihood-ratio comparison treated heuristically because the models are not strictly nested (; ). To justify its random-effects structure, this model was further compared with two nested variants that included either a random intercept or a random slope. The intercept-only model was worse than the full sine model ( and ; , ), indicating that allowing participant-specific sine slopes improves fit. The slope-only model without a random intercept performed much worse than the full model ( and ; , ), supporting the inclusion of both random components.

These results indicate that participants were more confident when phase differences provided stronger directional cues and less confident when the motion signal was more ambiguous. Average confidence at 180° was 49.4% ± 6.8% (mean ± SEM across participants), lower than all other conditions, including 0° (52.8% ± 3.0%), indicating that anti-phase stimulation elicits especially uncertain percepts.

3.4. Phase Differences, Not Amplitude Differences, Drive Tactile Motion Perception

The behavioural ambiguity observed at 180° phase difference—reflected in a subtle directional bias, low confidence, and slow responses—raises a critical question: What stimulus features underlie tactile motion perception? Two plausible mechanisms are as follows: (i) motion perception based on the “phase difference” between two AM signals (i.e., relative temporal shifts in their envelopes), and (ii) perception based on moment-by-moment “amplitude” differences between the signals.

The present stimulus design enables these alternatives to be dissociated. While ±180° phase differences produce the largest instantaneous amplitude differences between fingertips, they contain no consistent directional information, as the +180° and −180° stimuli are physically identical and indistinguishable. If motion perception were driven by amplitude differences alone, one would expect robust and consistent directional judgements under these conditions—contrary to the observed choice likelihood patterns.

Moreover, an amplitude-based account might predict high confidence on individual trial bases (despite random direction across trials), assuming a salient motion signal. Yet, confidence ratings at 180° were the lowest across all phase differences, mirroring the slower responses typically associated with perceptual uncertainty. Together, these findings support a mechanism in which “phase differences” between signals, not momentary amplitude (or “energy”) differences, drive tactile motion perception.

3.5. Potential Underlying Neural Computations

As in vision, tactile motion perception may rely on multiple neural computations [

5,

52]. Here, I outline two candidate mechanisms that could support the perception of motion based on phase differences in vibrotactile signals. These mechanisms differ in whether they rely on the measures of similarity of temporal patterns or on the relative timing of specific features (e.g., peaks or troughs) in the tactile signals. Below, I briefly discuss each and assess their neural plausibility.

3.5.1. Temporal Cross-Correlation Mechanisms

A plausible computational mechanism underlying tactile motion perception is based on temporal cross-correlation of the continuous tactile sensory inputs received at the two fingers. In this scenario, the brain compares the envelopes of each vibration over a certain temporal window to estimate their relative lag (phase difference) similar to Reichardt detectors [

1,

53]. The inferred phase neural mechanisms for such temporal cross-correlation have been widely studied in other sensory systems. For example, in the auditory system, interaural time differences are computed via temporally sensitive circuits in the medial superior olive, involving coincidence detection mechanisms [

54]. In the electrosensory system of weakly electric fish, neurons perform delay-sensitive comparisons between signals from different electroreceptors to extract motion or phase differences of preys [

55]. While mammalian tactile system may not contain dedicated delay lines, some neurons in somatosensory cortex (particularly S1 and S2) exhibit phase-locked responses to frequency modulations [

56,

57], carrying information about the temporal patterns of sensory inputs. Additionally, cross-digit integration occurs at multiple levels, including primary and secondary somatosensory cortices, where receptive fields often span multiple fingers [

58,

59]. Such distributed, temporally sensitive representations could support correlation-based decoding of phase relationships. The observed sensitivity to small phase differences (e.g., 30°) in the present study is consistent with this type of integration. Thus, a biologically plausible hypothesis is that populations of neurons in somatosensory cortex, or possibly parietal areas, integrate envelope information and compare their temporal alignment. Population-level decoding of such temporal relationships could underlie the perceptual sensitivity to direction based on phase difference, as observed in the present experiments. Whether these computations occur via explicit cross-correlation at the neural level, or are approximated by population-level pooling across temporal patterns, remains to be clarified.

Importantly, these computations are not limited to biological intuition but are also grounded in formal estimation theory. Under assumptions of linearity and Gaussian noise, cross-correlation, least-squares, and maximum likelihood methods yield equivalent estimates for time delay between signals [

60]. These mechanisms are sensitive to the overall similarity and alignment of time-varying signals, rather than to discrete features such as peaks or zero-crossings. As such, they can operate continuously and flexibly and do not depend on precise extraction of singular time points, potentially making them robust to noise.

3.5.2. A Probabilistic Model from Envelope Landmarks

A second mechanism is that the tactile system detects specific temporal landmarks in the envelope of each vibration—such as peaks, troughs, or other salient features—and infers motion direction based on the temporal order or timing of these events relative to each other. This process is inherently susceptible to sensory noise and perceptual uncertainty, especially when the modulated envelope changes gradually or when features are close in time.

To formalise this temporal uncertainty, I propose a simple threshold-based model in which a temporal reference point (e.g., a peak) is detected when the change in the envelope exceeds a certain slope or amplitude threshold. Changes below this threshold are not perceived as distinct events. For instance, under this assumption, any portion of the envelope around the true peak whose amplitude lies within the threshold margin is perceptually indistinguishable from the true peak. This introduces variability in the perceived timing of features or leads to missed detections, particularly when the modulation depth is shallow or the envelope varies slowly.

Such detection uncertainty can lead to errors in temporal order judgements. For instance, two peaks occurring closely in time might be perceived in the wrong order, or the tactile system might match a peak from one vibration to the wrong cycle of the other, especially under large phase differences. These errors impair the brain’s ability to infer motion direction reliably. Importantly, this minimal model—based on a fixed amplitude detection threshold without any complex decoding and uniform temporal variability—produces non-trivial psychometric predictions. As illustrated in

Figure 6A, the model correctly generates an asymmetric curve of predicted proportion as a function of phase difference: for phase differences below 90° (e.g., 30°), errors primarily result from uncertainty in the temporal order of closely spaced landmarks, described by a quadratic function of phase difference (Equation (

15)), whereas for phase differences above 90° (e.g., for 150°), cross-cycle misalignments dominate due to increased ambiguity in aligning peaks across cycles, as captured by a piecewise cubic function of phase difference (Equation (

17)). This asymmetry is also evident in the present experimental data, particularly in Experiment 2 (

Figure 4), where performance at a 30° phase difference (69.7% ± 5.1%) is higher than at 150° (56.8% ± 2.6%), despite the physical symmetry of the stimuli.

To evaluate the model’s predictive validity, I fit the probabilistic model to behavioural accuracy data across participants. The best-fitting threshold—expressed as an amplitude proportion relative to the envelope peak—was 0.57 ± 0.07, with a root mean squared error (RMSE) of 0.068 ± 0.009 across subjects (

, from Experiment 1 and 2). An RMSE of 0.068 corresponds to an average prediction error of 6.8% in accuracy, indicating that the model achieves reasonably close fits to the observed performances (

Figure 6B).

While this model was implemented based on peak detection, the same logic applies to other types of envelope features, including troughs or points of inflection. The key principle is that temporal reference points are perceived only if they exceed a salience threshold, and perceptual errors emerge from variability in the timing or detectability of these points. This model captures the dual sources of perceptual error: (1) local ambiguity in temporal order when the temporal reference points are too close and (2) misattribution across cycles when phase differences approach 180°.

Critically, this model explains why perceptual performance deteriorates at large phase differences despite increased amplitude contrast: the temporal lag between reference points (e.g. peaks) is closer to , increasing the probability that reference points from one vibration are misattributed to a different cycle of the other. These findings suggest that under threshold-limited temporal resolution, tactile motion perception involves a delicate balance between fine temporal discrimination and the global temporal structure of the stimulus.

Together, these results suggest that tactile motion perception across fingers could be shaped by both global (e.g., cross-correlation) and local (event-based) temporal processing mechanisms, each with distinct neural constraints and noise profiles.

4. Conclusions

This study investigated how the tactile system extracts spatial information about object motion from temporally structured vibrations delivered to two fingertips. Across three experiments, I delivered pairs of amplitude-modulated vibrations—each comprising a 100 Hz carrier modulated by a low-frequency sinusoidal envelope—to simulate continuous tactile motion. By systematically varying the phase difference between the two envelopes, I quantified how inter-fingertip phase offsets influence perceived motion direction, response latency, and confidence.

The present findings confirmed that the direction of perceived motion is determined by the phase difference between the two vibrations and not by their absolute frequency or amplitude. Experiment 1 showed that sinusoidal envelope vibrations reliably elicited robust directional motion percepts, comparable to those evoked by natural patterns (e.g., exponential). Notably, Zhao et al. [

12] found that gradually ramped vibrotactile stimuli produced stronger and smoother motion percepts than abrupt onsets, consistent with the use of continuous amplitude modulated vibrations in the present study to simulate naturalistic motion cues. Experiment 2 established that the upper frequency limit for reliable tactile motion discrimination lies below 1.5 Hz, a nearly ten-fold lower upper limit than those reported in earlier studies using similar stimuli but for asynchrony detection [

35]. Experiment 3 revealed systematic changes in confidence and reaction time with phase difference, with ambiguous conditions (0° and 180°) producing slower responses and lower confidence ratings. Importantly, the 180° condition, despite producing the largest moment-by-moment amplitude differences between fingers, did not yield a consistent percept of direction, suggesting that motion perception depends on phase differences, not amplitude disparity.

Together, these results provide new insight into the computational basis of tactile motion perception. They support a mechanism in which tactile motion perception arises from the relative phase differences between temporally structured signals across skin locations, rather than from instantaneous amplitude differences or energy shifts. Unlike prior studies of tactile synchrony detection, the present paradigm required spatial trajectory inference across inputs, revealing that “phase-based” temporal integration, rather than amplitude contrast, underpins tactile motion perception. While Kuroki et al. [

35] demonstrated that humans can detect temporal asynchrony in AM tactile stimuli at higher modulation frequencies (up to 20 Hz) indicative of sensitivity to temporal structure, their task probed asynchrony detection, not motion inference. Drawing parallels to the visual system, they proposed that tactile perception may rely on both “phase-shift” and “energy-shift” mechanisms, analogous to first- and second-order motion processing in vision.

Notably, Kuroki et al. [

35] reported peak detection at 180° phase difference. Yet in the current study, the same phase difference produced ambiguous motion percepts, reflected in lower confidence, slower responses, and inconsistent choices. This discrepancy likely reflects task-specific neural computations for synchrony detection and motion perception. Synchrony detection may rely on local temporal contrast or energy cues at single skin locations, whereas tactile motion perception requires spatial comparison and temporal integration across fingertips. The present results suggest that phase-based readout, rather than local amplitude difference, is central to tactile motion perception.

This dissociation highlights that motion perception depends on the integration of temporal phase relationships across space and time. As in the visual system, where distinct pathways support multiple forms of motion processing, the tactile system may also engage parallel mechanisms for temporal analysis. Phase-based computations appear specifically tuned for inferring motion trajectories, distinguishing them from those supporting synchrony detection. These findings reveal how the tactile system transforms temporally structured input into spatial motion percepts and how the brain selectively engages distinct temporal codes based on perceptual goals.

Here, I proposed two complementary models of tactile motion perception; one based on global cross-correlation of vibration envelopes and another relying on local temporal comparisons between salient features such as envelope peaks. While the cross-correlation model captures overall waveform similarity, the feature-based model formalises direction perception as a probabilistic judgement derived from uncertain detection of temporal landmarks within amplitude-defined windows. Notably, both models are applicable to conventional apparent motion paradigms, where discrete or pulsed stimuli with staggered onsets simulate movement. Although the inter-peak intervals in the present study (e.g., 167 ms for 30° and 500 ms for 90° at 0.5 Hz) exceed classical tactile temporal order judgement thresholds [

61], participants nonetheless exhibited robust directional performance and systematic confidence patterns. Notably, performance at a 30° phase lag aligns with previously reported temporal order judgement thresholds (∼100 ms; [

61]), despite differences in stimulus type and parameters, suggesting that reliable direction perception can emerge without discrete onsets or overt spatial displacement. While supramodal attentional tracking could, in principle, support such judgements—e.g., by tracking salient events across time and space irrespective of sensory modality—the proposed model provides a tactile-specific alternative. It attributes direction perception to probabilistic comparisons between uncertain temporal landmarks (e.g., envelope peaks), detected within amplitude-defined integration windows. This framework captures the non-linearity in psychometric curves, including both the reliable direction perception at shorter phase lags and the ambiguity at 180°, without invoking higher-level amodal mechanisms or cross-modal attentional strategies. Instead, it reflects constraints intrinsic to tactile processing, where perceptual uncertainty in temporal feature extraction shapes directional judgements.

Central to the perception of the vibration-induced motion studied here is the brain’s ability to track dynamic changes in the envelopes of tactile signals and extract directional information from their relative timing. This sensory strategy has analogues across species: arachnids, for example, detect prey using complex vibration patterns transmitted through webs or substrates, relying on finely tuned mechanosensory systems that evolved independently from vertebrate touch [

34]. In mammalian glabrous skin, Meissner’s and Pacinian corpuscles are specialised for detecting vibration [

62,

63,

64,

65,

66], with Pacinian corpuscles implicated in encoding vibrotactile pitch in both mice and humans [

67,

68,

69,

70]. My previous work demonstrated that rodents can discriminate vibrations based on both amplitude and frequency using their whiskers [

57,

71,

72]. Neurons in primary somatosensory cortex integrate these features in a way that supports vibrotactile perception. The present study builds on these principles, showing that temporal features—specifically phase relationships—can be exploited to generate robust perceptions of tactile motion across fingertips. This supports the idea that tactile systems, across species and sensor types, flexibly encode both spectral and temporal properties of mechanical stimuli to extract high-level perceptual content.

In natural touch, a variety of cues, such as localised skin stretch in the direction of motion, texture changes, and temporal shear patterns by engaging multiple mechanoreceptor types (e.g., SA1, SA2, RA1, and RA2) [

3,

73], jointly contribute to tactile motion perception. In contrast, the present study demonstrates that even a single channel of information—vibrotactile input delivered to just two skin sites—is sufficient to elicit robust and directionally specific motion percepts. While contact force, finger posture, and transducer–skin coupling were not directly measured, participants maintained passive, stable contact with the actuators, in line with established psychophysical methods [

74]. These controlled conditions yielded consistent directional reports, indicating that the observed effects are robust to minor naturalistic variability in contact conditions. Future studies may incorporate force sensing or posture tracking to further refine the paradigm; however, such additions are not necessary to support the current findings.

A major challenge in somatosensory research is delivering tactile stimuli with high precision and consistency, particularly in freely moving animal preparations or in humans, where skin mechanics, posture, and contact variability can alter mechanoreceptor engagement. To mitigate these issues, the present paradigm derives motion perception from the phase relationships between temporally structured vibrations delivered at fixed spatial locations without requiring physical displacement. This approach decouples directional cues from low-level variables such as pressure, tension, amplitude, or frequency fluctuations, offering a robust and reproducible method. Moreover, it provides a powerful framework for probing tactile decision-making and perceptual inference under structured temporal stimulation, analogous to the random-dot motion in vision science. By dissociating low-level vibration features from high-level motion percepts, the paradigm links somatosensory encoding with computational decision models across both human and animal studies. Future extensions, particularly when combined with physiological or imaging techniques, may help test and refine the proposed computational mechanisms and further illuminate the neural basis of tactile motion perception, laying the scientific groundwork for applications in touch-based interfaces, neuroprosthetics, and tactile cognition.

Finally, given the increasing demand for motion-capable haptic feedback in wearable systems, neuroprosthetic interfaces, and immersive VR/AR environments [

40,

41,

42], this approach can be integrated with current wearable vibrotactile devices such as [

37,

38,

39] providing an efficient, scalable, lightweight, and cost-effective method for conveying directional tactile motion information without physical displacement—even in settings with limited actuator count or power budget such as mobile platforms. Further research is required to evaluate the paradigm with larger and more diverse participant groups, under a wider range of contact conditions and stimulation parameters, and across other body regions to better understand its generalisability and limitations.