Real-Time Auto-Monitoring of Livestock: Quantitative Framework and Challenges

Abstract

1. Introduction

2. Sensors and Data Streams for Livestock Monitoring

3. Framing the Prediction/Decision and Validation Problem

4. Methods for Predictions/Decisions

4.1. Distribution-Free Statistical Approaches

4.2. Anomaly/Change-Point Detection

4.3. Classical Statistical Modelling

4.3.1. Modelling Usual Monitoring Data

4.3.2. Modelling Based on the Outcome of Interest

4.4. Latent Class or Variable Modelling

4.5. Machine Learning Methods

4.5.1. Basic Machine Learning Methods

4.5.2. Neural Networks

4.5.3. Application of Machine Learning in Prediction/Decision Context

4.6. Discussion of Alternative Prediction/Decision Methods

5. Validation of Predictions/Decisions

5.1. Challenges

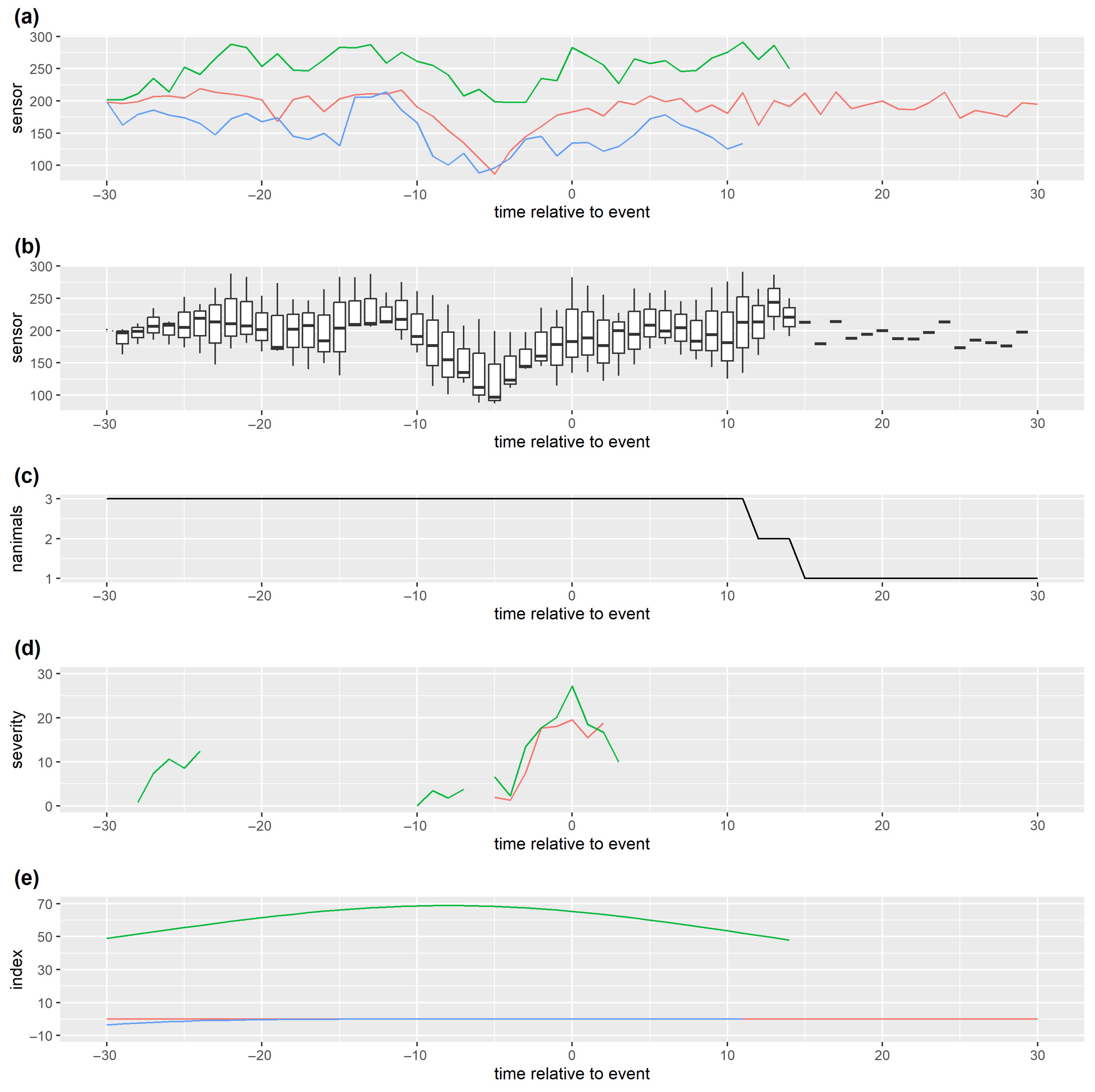

5.2. Data Visualisation

5.3. Quantitative Assessment

5.3.1. Classification

5.3.2. Severity

5.3.3. Time Lags and Other Temporal Considerations

5.4. Cross-Validation

5.5. On-Farm Validation in Practice

5.6. Other Considerations

6. Detailed Examples and Types of Studies

6.1. Small-Scale Clinical Studies for Specific Health Issues

6.2. On-Farm and In-Field Studies

6.2.1. Dairy Farms

6.2.2. Extensively Managed Cattle and Sheep

6.2.3. Pigs and Poultry

6.2.4. Summary

6.3. High-Level Validation Studies

7. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

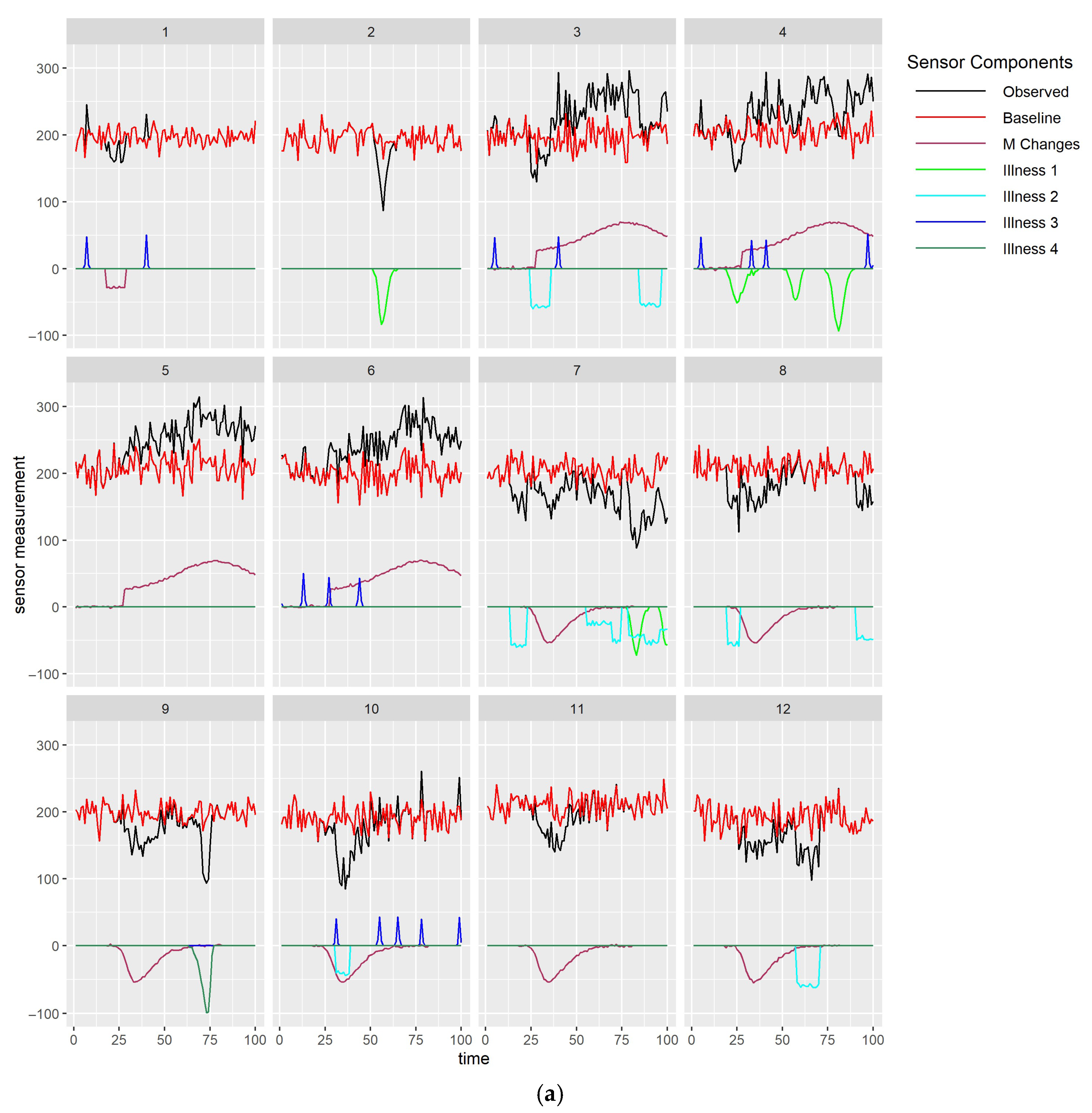

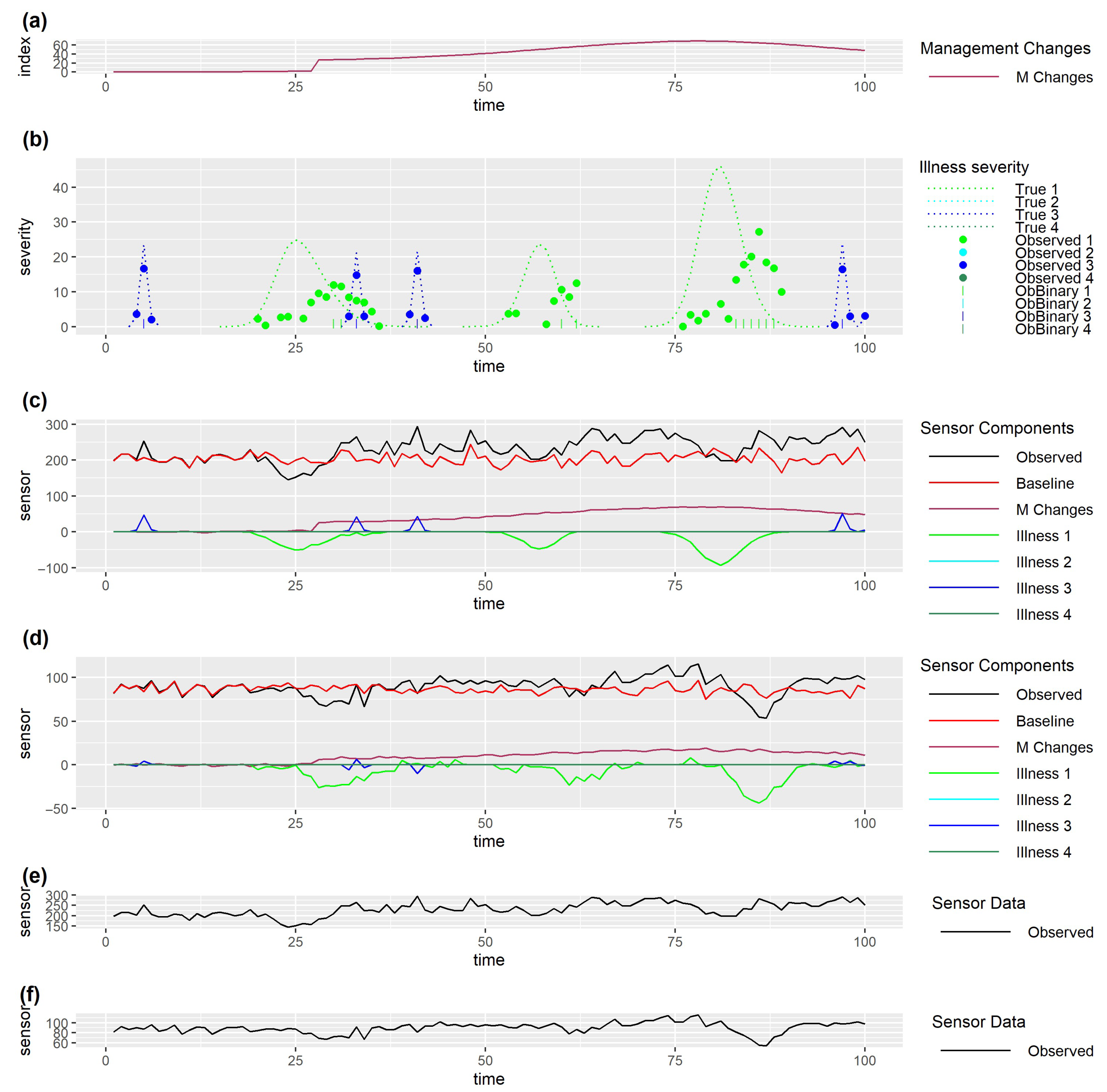

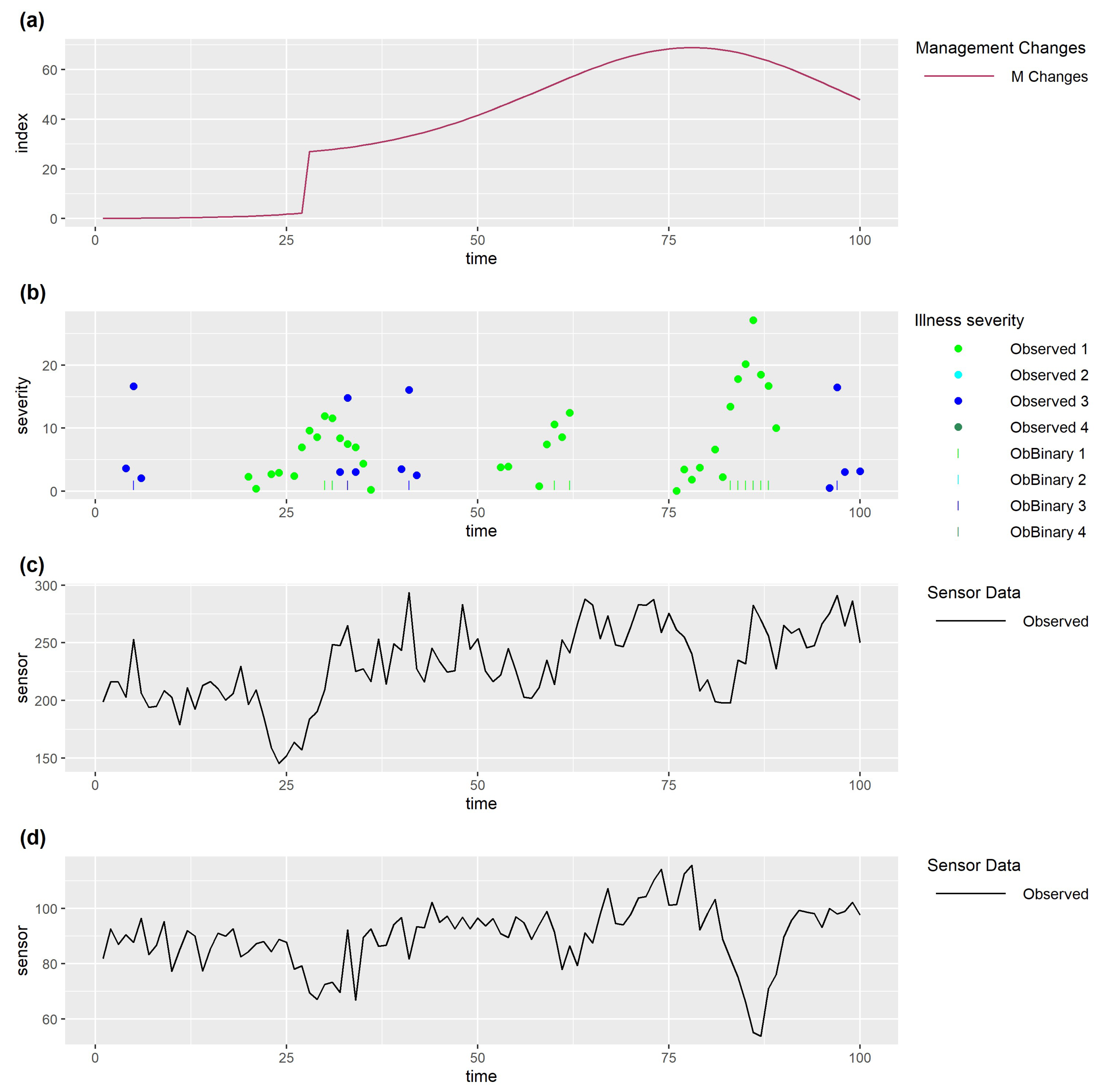

Appendix A. Mathematical Details for Simulation Program

Appendix A.1. Generating Health Data

Appendix A.2. Generating Management Data

Appendix A.3. Generating Sensor Data

Appendix A.4. Parameters Used in Simulation

| Parameter Name | Equation | Parameters 1 |
|---|---|---|
| | (A1) | (0.4, 0.3, 0.5, 0.2) |
| | (A2) | (0.01, 0.02, 0.03, 0.01) |
| | (A3) | (2.0, 1.0, 0.5, 2.0) |
| | (A3) | (4.0, 2.0, 0.5, 4.0) |
| | (A3) | (−0.5, −0.1, 0.1, −0.9) |
| | (A3) | (0.5, 0.1, 0.2, −0.4) |
| | (A3) | (0, 10, 0, 0) |
| | (A3) | (0, 30, 0, 0) |
| | (A3) | (20, 10, 20, 30) |
| | (A3) | (50, 40, 25, 70) |
| | (A3) | (1, 0, 1, 1) |
| | (A3) | (0.001, 0.001, 0.001, 0.001) |
| | (A5) | (0, 0, 0, 0) |

| Parameter Name | Equation | Parameters 1 |
|---|---|---|
| | (A6) | (0, 0, 0, 0) |
| | (A6) | (0.5, 0.7, 0.7, 0.9) |
| | (A6) | (10, 5, 1, 4) |
| | (A6) | (5, 0, 0, 0) |
| | (A6) | (1, 1, 1, 1) |
| | (A6) | ((1, 1, 7), (1, 1, 7), (1, 1, 1), (1, 1, 1)) 2 |
| | (A7) | (10, 0, 5, 10) |

| Parameter Name | Equation | Parameters 1 |
|---|---|---|
| | (A8) | (1, 1, 1) |
| | (A9) | (0.01, 0.01, 0.01) |
| | (A10) | (1, 20, 2) |
| | (A10) | (2, 20, 8) |
| | (A10) | (0.8, −0.1, 0.8) |
| | (A10) | (0.9, 0.1, 0.9) |
| | (A10) | (20, 20, 0) |
| | (A10) | (30, 30, 0) |
| | (A10) | (30, 30, 50) |
| | (A10) | (50, 50, 60) |
| | (A10) | (0, 1, 1) |
| | (A10) | (−1, 1, −1) |
| | (A10) | (0.001, 0.001, 0.001) |
| | (A11) | (0.5, 1.0, 1.0) |

| Parameter Name | Equation | Parameters 1 |
|---|---|---|
| | (A14) | (200, 100) |
| | (A14) | (10, 20) |
| | (A14) | (200, 40) |
| | (A14) | (10, 5) |
| | (A14) | (200, 30) |
| | (A14) | (10, 5) |
| | (A14) | (200, 10) |

| Parameter Name | Equation | Parameters 1 |
|---|---|---|
| | (A15) | ((0, 0, 0, 0), (0, 0, 0, 0)) |
| | (A15) | ((−2, −2, 2, −2), (−1, −1, 0, −1)) |
| | (A15) | ((4, 4, 4, 4), (16, 16, 16, 16)) |
| | (A15) | ((0, 0, 0, 0), (5, 0, 0, 0)) |
| | (A15) | ((1, 1, 1, 1), (0, 1, 1, 1)) |

| Parameter Name | Equation | Parameters 1 |
|---|---|---|
| | (A16) | ((0, 0, 0), (0, 0, 0)) |
| | (A16) | ((1, 1, 1), (0.25, 0.25, 0.25)) |
| | (A16) | ((1, 1, 1), (1, 1, 1)) |
| | (A16) | ((0, 0, 0), (0, 0, 10)) |
| | (A16) | ((1, 1, 1), (1, 1, 0)) |

Appendix A.5. Code Used


- da Silva Santos, A.; de Medeiros, V.W.C.; Gonçalves, G.E. Monitoring and Classification of Cattle Behavior: A Survey. Smart Agric. Technol. 2023, 3, 100091. [Google Scholar] [CrossRef]

- Riaboff, L.; Shalloo, L.; Smeaton, A.F.; Couvreur, S.; Madouasse, A.; Keane, M.T. Predicting Livestock Behaviour Using Accelerometers: A Systematic Review of Processing Techniques for Ruminant Behaviour Prediction from Raw Accelerometer Data. Comput. Electron. Agric. 2022, 192, 106610. [Google Scholar] [CrossRef]

- Diggle, P.; Giorgi, E. Time Series: A Biostatistical Introduction, 2nd ed.; Oxford University Press: Oxford, UK, 2025; ISBN 9780198714835. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2009; ISBN 978-0-387-84857-0. [Google Scholar]

- Mood, A.M.; Graybill, F.A.; Boes, D.C. Introduction to the Theory of Statistics, 3rd ed.; McGraw-Hill Kogakusha: New York, NY, USA, 1974; ISBN 0-07-085465-3. [Google Scholar]

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly Detection: A Survey. ACM Comput. Surv. 2009, 41, 15. [Google Scholar] [CrossRef]

- Hyndman, R.; Koehler, A.; Ord, K.; Snyder, R. Forecasting with Exponential Smoothing: The State Space Approach; Springer: Berlin/Heidelberg, Germany, 2008; ISBN 978-3-540-71916-8. [Google Scholar]

- Basseville, M.; Nikiforov, I. V Detection of Abrupt Changes: Theory and Application; Prentice Hall: Englewood Cliffs, NJ, USA, 1993; ISBN 978-0-13-126780-0. [Google Scholar]

- Montgomery, D.C. Introduction to Statistical Quality Control, 8th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2020; ISBN 1119723094. [Google Scholar]

- Gupta, M.; Gao, J.; Aggarwal, C.C.; Han, J. Outlier Detection for Temporal Data: A Survey. IEEE Trans. Knowl. Data Eng. 2013, 26, 2250–2267. [Google Scholar] [CrossRef]

- Blázquez-García, A.; Conde, A.; Mori, U.; Lozano, J.A. A Review on Outlier/Anomaly Detection in Time Series Data. ACM Comput. Surv. 2021, 54, 56. [Google Scholar] [CrossRef]

- Kolambe, M.; Arora, S. Forecasting the Future: A Comprehensive Review of Time Series Prediction Techniques. J. Electrical Systems 2024, 20, 575–586. [Google Scholar] [CrossRef]

- Brown, H.; Prescott, R. Applied Mixed Models in Medicine, 3rd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2015; ISBN 1118778251. [Google Scholar]

- McCullagh, P.; Nelder, J.A. Generalized Linear Models, 2nd ed.; Chapman & Hall: London, UK, 1989; ISBN 0-412-31760-5. [Google Scholar]

- Skrondal, A.; Rabe-Hesketh, S. Latent Variable Modelling: A Survey. Scand. J. Stat. 2007, 34, 712–745. [Google Scholar] [CrossRef]

- Mor, B.; Garhwal, S.; Kumar, A. A Systematic Review of Hidden Markov Models and Their Applications. Arch. Comput. Methods Eng. 2021, 28, 1429–1448. [Google Scholar] [CrossRef]

- Everitt, B.S.; Landau, S.; Leese, M.; Stahl, D. Cluster Analysis, 5th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2011; ISBN 978-0-470-74991-3. [Google Scholar]

- Gilks, W.R.; Richardson, S.; Spiegelhalter, D.J. Markov Chain Monte Carlo in Practice, 1st ed.; Chapman & Hall/CRC: London, UK, 1996; ISBN 0-412-05551-1. [Google Scholar]

- Gelman, A.; Carlin, J.B.; Stern, H.S.; Rubin, D.B. Bayesian Data Analysis, 2nd ed.; Chapman & Hall/CRC: London, UK, 2004; ISBN 1-58488-388-X. [Google Scholar]

- Doucet, A.; Freitas, N.; Gordon, N. An Introduction to Sequential Monte Carlo Methods. In Sequential Monte Carlo Methods in Practice; Springer: New York, NY, USA, 2001; pp. 3–14. ISBN 978-1-4419-2887-0. [Google Scholar]

- Shine, P.; Murphy, M.D. Over 20 Years of Machine Learning Applications on Dairy Farms: A Comprehensive Mapping Study. Sensors 2022, 22, 52. [Google Scholar] [CrossRef]

- Greener, J.G.; Kandathil, S.M.; Moffat, L.; Jones, D.T. A Guide to Machine Learning for Biologists. Nat. Rev. Mol. Cell Biol. 2022, 23, 40–55. [Google Scholar] [CrossRef]

- Choi, R.Y.; Coyner, A.S.; Kalpathy-Cramer, J.; Chiang, M.F.; Peter Campbell, J. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 14. [Google Scholar] [PubMed]

- Simchoni, G.; Rosset, S. Integrating Random Effects in Deep Neural Networks. arXiv 2022. [Google Scholar] [CrossRef]

- Domingos, P. A Few Useful Things to Know about Machine Learning. Commun. ACM 2012, 55, 78–87. [Google Scholar] [CrossRef]

- Haveman, M.E.; van Rossum, M.C.; Vaseur, R.M.E.; van der Riet, C.; Schuurmann, R.C.L.; Hermens, H.J.; de Vries, J.P.P.M.; Tabak, M. Continuous Monitoring of Vital Signs With Wearable Sensors During Daily Life Activities: Validation Study. JMIR Form. Res. 2022, 6, e30863. [Google Scholar] [CrossRef]

- Armitage, P.; Berry, G.; Mathews, J.N.S. Statistical Methods in Medical Research, 4th ed.; Blackwell Science: Oxford, UK, 2002; ISBN 0-632-05257-0. [Google Scholar]

- Borchers, M.R.; Chang, Y.M.; Tsai, I.C.; Wadsworth, B.A.; Bewley, J.M. A Validation of Technologies Monitoring Dairy Cow Feeding, Ruminating, and Lying Behaviors. J. Dairy. Sci. 2016, 99, 7458–7466. [Google Scholar] [CrossRef]

- Lasser, J.; Matzhold, C.; Egger-Danner, C.; Fuerst-Waltl, B.; Steininger, F.; Wittek, T.; Klimek, P. Integrating Diverse Data Sources to Predict Disease Risk in Dairy Cattle-A Machine Learning Approach. J. Anim. Sci. 2021, 99, skab294. [Google Scholar] [CrossRef]

- Fleiss, J.; Levin, B.; Paik, M.C. Statistical Methods for Rates and Proportions, 3rd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2003; ISBN 9780471445425. [Google Scholar]

- Everitt, B.S. The Analysis of Contingency Tables, 2nd ed.; Chapman & Hall/CRC: London, UK, 1992; ISBN 0-412-39850-8. [Google Scholar]

- Collet, D. Modelling Binary Data; Chapman & Hall/CRC: London, UK, 1999; ISBN 0-412-38800-6. [Google Scholar]

- Tassi, R.; Schiavo, M.; Filipe, J.; Todd, H.; Ewing, D.; Ballingall, K.T. Intramammary Immunisation Provides Short Term Protection Against Mannheimia Haemolytica Mastitis in Sheep. Front. Vet. Sci. 2021, 8, 659803. [Google Scholar] [CrossRef]

- Williams-Macdonald, S.E.; Mitchell, M.; Frew, D.; Palarea-Albaladejo, J.; Ewing, D.; Golde, W.T.; Longbottom, D.; Nisbet, A.J.; Livingstone, M.; Hamilton, C.M.; et al. Efficacy of Phase I and Phase II Coxiella Burnetii Bacterin Vaccines in a Pregnant Ewe Challenge Model. Vaccines 2023, 11, 511. [Google Scholar] [CrossRef]

- McNee, A.; Smith, T.R.F.; Holzer, B.; Clark, B.; Bessell, E.; Guibinga, G.; Brown, H.; Schultheis, K.; Fisher, P.; Ramos, S.; et al. Establishment of a Pig Influenza Challenge Model for Evaluation of Monoclonal Antibody Delivery Platforms. J. Immunol. 2020, 205, 648–660. [Google Scholar] [CrossRef]

- O’Donoghue, S.; Earley, B.; Johnston, D.; McCabe, M.S.; Kim, J.W.; Taylor, J.F.; Duffy, C.; Lemon, K.; McMenamy, M.; Cosby, S.L.; et al. Whole Blood Transcriptome Analysis in Dairy Calves Experimentally Challenged with Bovine Herpesvirus 1 (BoHV-1) and Comparison to a Bovine Respiratory Syncytial Virus (BRSV) Challenge. Front. Genet. 2023, 14, 1092877. [Google Scholar] [CrossRef] [PubMed]

- Kang, H.; Brocklehurst, S.; Haskell, M.; Jarvis, S.; Sandilands, V. Do Activity Sensors Identify Physiological, Clinical and Behavioural Changes in Laying Hens Exposed to a Vaccine Challenge? Animals 2025, 15, 205. [Google Scholar] [CrossRef] [PubMed]

- Ahmed, S.T.; Mun, H.S.; Islam, M.M.; Yoe, H.; Yang, C.J. Monitoring Activity for Recognition of Illness in Experimentally Infected Weaned Piglets Using Received Signal Strength Indication ZigBee-Based Wireless Acceleration Sensor. Asian Australas. J. Anim. Sci. 2016, 29, 149–156. [Google Scholar] [CrossRef]

- Kayser, W.C.; Carstens, G.E.; Jackson, K.S.; Pinchak, W.E.; Banerjee, A.; Fu, Y. Evaluation of Statistical Process Control Procedures to Monitor Feeding Behavior Patterns and Detect Onset of Bovine Respiratory Disease in Growing Bulls. J. Anim. Sci. 2019, 97, 1158–1170. [Google Scholar] [CrossRef]

- March, M.D.; Hargreaves, P.R.; Sykes, A.J.; Rees, R.M. Effect of Nutritional Variation and LCA Methodology on the Carbon Footprint of Milk Production From Holstein Friesian Dairy Cows. Front. Sustain. Food Syst. 2021, 5, 588158. [Google Scholar] [CrossRef]

- Pollott, G.E.; Coffey, M.P. The Effect of Genetic Merit and Production System on Dairy Cow Fertility, Measured Using Progesterone Profiles and on-Farm Recording. J. Dairy. Sci. 2008, 91, 3649–3660. [Google Scholar] [CrossRef] [PubMed]

- SRUC Dairy Research and Innovation Centre. Available online: https://www.sruc.ac.uk/research/research-facilities/dairy-research-facility/ (accessed on 2 April 2024).

- Stepmetrix from Boumatic. Available online: https://kruegersboumatic.com/automation/stepmetrix/ (accessed on 10 March 2023).

- Hokofarm Group. Available online: https://hokofarmgroup.com/ (accessed on 7 March 2023).

- Peacock Technology (Was IceRobotics). Available online: https://www.peacocktechnology.com/ids-i-qube (accessed on 10 March 2023).

- Stygar, A.H.; Frondelius, L.; Berteselli, G.V.; Gómez, Y.; Canali, E.; Niemi, J.K.; Llonch, P.; Pastell, M. Measuring Dairy Cow Welfare with Real-Time Sensor-Based Data and Farm Records: A Concept Study. Animal 2023, 17, 101023. [Google Scholar] [CrossRef] [PubMed]

- EU TechCare Project. Available online: https://techcare-project.eu/ (accessed on 15 March 2023).

- McLaren, A.; Waterhouse, A.; Kenyon, F.; MacDougall, H.; Beechener, E.S.; Walker, A.; Reeves, M.; Lambe, N.R.; Holland, J.P.; Thomson, A.T.; et al. TechCare UK Pilots-Integrated Sheep System Studies Using Technologies for Welfare Monitoring. In Proceedings of the 74th Annual Meeting of the European Federation of Animal Science, Lyon, France, 28 August 2023; Wageningen Academic Publishers: Wageningen, The Netherlands, 2023. [Google Scholar]

- Moredun Research Institute. Available online: https://moredun.org.uk (accessed on 2 April 2024).

- AX3 Accelerometer. Available online: https://axivity.com/userguides/ax3/ (accessed on 15 March 2023).

- i-gotU GT-120B Mobile Action Technology, Inc., Taiwan. Available online: https://www.mobileaction.com (accessed on 7 September 2025).

- RealTimeID Animal Trackers. Available online: https://realtimeid.no/collections/all (accessed on 29 January 2024).

- Lora, I.; Gottardo, F.; Contiero, B.; Zidi, A.; Magrin, L.; Cassandro, M.; Cozzi, G. A Survey on Sensor Systems Used in Italian Dairy Farms and Comparison between Performances of Similar Herds Equipped or Not Equipped with Sensors. J. Dairy. Sci. 2020, 103, 10264–10272. [Google Scholar] [CrossRef] [PubMed]

- Morgan-Davies, C.; Lambe, N.; Wishart, H.; Waterhouse, T.; Kenyon, F.; McBean, D.; McCracken, D. Impacts of Using a Precision Livestock System Targeted Approach in Mountain Sheep Flocks. Livest. Sci. 2018, 208, 67–76. [Google Scholar] [CrossRef]

- SRUC Hill & Mountain Research. Available online: https://www.sruc.ac.uk/research/research-facilities/hill-mountain-research/ (accessed on 2 April 2024).

- R Core Team. R: A Language and Environment for Statistical Computing, version 4.4.2; R Foundation for Statistical Computing: Vienna, Austria, 2024. [Google Scholar]

- Posit team. RStudio: Integrated Development Environment for R, version 2024.12.0 build 467; Posit Software, PBC: Boston, MA, USA, 2024. [Google Scholar]

- Barrett, T.; Dowle, M.; Srinivasan, A.; Gorecki, J.; Chirico, M.; Hocking, T.; Schwendinger, B. data.table: Extension of ‘data.frame’_, R package version 1.16.4. 2024.

- Wickham, H.; François, R.; Henry, L.; Müller, K.; Vaughan, D. Dplyr: A Grammar of Data Manipulation_, R package version 1.1.4. 2023.

- Wickham, H. ggplot2: Elegant Graphics for Data Analysis, 2nd ed.; Springer: New York, NY, USA, 2016; ISBN 978-3319242750. [Google Scholar]

- Kassambara, A. ggpubr: “ggplot2” Based Publication Ready Plots, R package version 0.6.0. 2023.

- Azzalini, A. The R Package “sn”: The Skew-Normal and Related Distributions Such as the Skew-t and the SUN, R package version 2.1.1. 2023.

| Name | Species Being Monitored 1 | What Is Being Measured | Sensor Technology | Purpose of Monitoring | Comments |

|---|---|---|---|---|---|

| 1 Individual intakes | Dairy Cows, Beef Cattle, Calves, Sheep | Individual feeding and drinking behaviour, amounts if technology allows | Sensors at feeders/drinkers that record individual RFID tags. More advanced systems that also record feed or drink taken at each bout. | Managing Nutrition and Production; Detecting Health and Welfare Problems of individuals | |

| 2 Group intakes | Dairy Cows, Beef Cattle, Calves, Pigs, Poultry, Goats, Sheep | Group feeding and drinking including amounts | Automatic livestock feeders and drinkers | Managing group Nutrition and Production; Detecting Health and Welfare Problems in groups | |

| 3 Individual weights—identified individuals | Dairy Cows, Beef Cattle, Pigs, Sheep | Individual Live weights | Walk-over weighers that record individual RFID tags. | Managing Nutrition and Production; Detecting Health and Welfare Problems of individuals | Walk-over weighers placed to maximise the number of readings (e.g., on way in/out of milking parlour) |

| 4 Individual weights—unidentified individuals | Pigs, Sheep | Live weights measured per individual but individuals not identified | Walk-over weighers (e.g., at races, or in pens) | Managing Nutrition and Production; Detecting Health and Welfare Problems in groups/of individuals | Can be used to sort into different feeding areas using marking and/or gate system. For pigs in pens, they can be placed between loafing and feeding areas or separated off if unwell. |

| 5 Estimated weights—groups/unidentified individuals | Poultry—Broilers, Turkeys | Live weight plus number of birds on plate, hence average live weight; individuals not identified | Weighing Plates/Platforms for individuals/groups | Managing group Nutrition and Production; Detecting Health and Welfare Problems in groups | This gives a sample of weights in the flock and could yield thousands of weight measurements per day. Some platforms measure only one bird at a time, whilst others measure multiple birds. |

| 6 Milk parlour data | Dairy Cows | Milk yield, milking duration, peak flow; milk quality, Somatic Cell Count (SCC); position in parlour/milker | Automatic milking systems plus manual sampling | Managing Nutrition and Production; Detecting Health and Welfare Problems of individuals | Milk quality and SCC measures may not be available in real time but could be sampled regularly (e.g., once per day or week). |

| 7 Milk bulk lab data | Dairy Cows | Somatic Cell Count (SCC), Milk quality | Milk bulk sampling—manual sampling | Managing group Nutrition and Production; Detecting Health and Welfare Problems in groups | Milk quality and SCC measures may not be available in real time but could be sampled regularly (e.g., once per day or week). |

| 8 Milk bulk other data | Dairy Cows | Temperature, Volume, Stirring | Milk bulk sampling—various sensors | Managing group Milk Production and Processing | Real-time monitoring of physical attributes of milk in bulk tanks is available |

| 9 Movement—acceleration | Dairy Cows, Beef Cattle, Pigs, Sheep, Goats | Behaviour (e.g., activity or time budgets in different classes: lying/standing, grazing/not, rumination, … or raw acceleration in x, y, and z directions) | Accelerometer | Detecting Heat, Calving/Lambing/Farrowing, Health and Welfare Problems | Not usually used on pigs on real farms. For sheep, cheaper options are needed. On grazing animals, sensors are often removed at intervals for data download and recharging. Some devices give raw accelerometer data, but others provide only derived measures (e.g., behaviour). |

| 10 Movement—gait | Dairy Cows, Beef Cattle, Pigs, Sheep, Goats | Behaviour (step count), other gait measurements | Pedometer | Detecting Heat, Calving/Lambing/Farrowing, Health and Welfare Problems | Less advanced than accelerometers; some measure only step count, but others take measurements that can be used to detect lameness |

| 11 Location | Dairy Cows, Beef Cattle, Pigs, Sheep, Goats | Location and behaviour | GNSS (global navigation satellite system), GPS (global positioning system) | Managing Grazing and Production; Detecting Health and Welfare Problems of individuals | |

| 12 Relative location | Dairy Cows, Beef Cattle, Pigs, Sheep, Goats | Location relative to static receivers and behaviour | Proximity loggers plus static receivers | Managing Grazing and Production; Detecting Health and Welfare Problems of individuals | Locations can be estimated, as well as mother-offspring distances |

| 13 Images—unidentified individuals | Pigs | Body condition score, live weight | 2D Imaging from above | Managing Nutrition and Production; Detecting Health and Welfare Problems of individuals | Can be placed between loafing and feeding areas and used to sort into different feeding areas using gate system |

| 14 Images—identified individuals | Cows, Pigs | Body condition score, live weight, behaviour | 2D/3D Imaging from above | Managing Nutrition and Production; Detecting Health and Welfare Problems of individuals | Identifying individuals is difficult, so it is used in combination with reading RFID tags at intervals and then tracking. |

| 15 Images—unidentified birds | Poultry—Broilers, Turkeys | Location and behaviour; dead birds; weight estimation | 2D Imaging from above | Managing Nutrition and Production; Detecting Health and Welfare Problems of groups | Imaging systems for poultry tend to occur at a larger scale (per individual) than those for cows and pigs. |

| 16 Temperature—unidentified individuals | Dairy Cows, Beef Cattle, Calves, Pigs, Poultry, Goats, Sheep | Body temperature | Thermal Imaging | Detecting Health and Welfare Problems of individuals | Can be used for detecting heat stress, and potentially fever, pain, … |

| 17 Temperature—identified individuals | Dairy Cows | Body temperature | Thermometer | Detecting Health and Welfare Problems of individuals | |

| 18 Sound—vocalisations | Dairy Cows, Beef Cattle, Calves, Pigs, Poultry, Goats, Sheep | Specific Species-Dependent Vocalisations | Acoustic Sensors | Detecting Health and Welfare Problems of Groups | These sensors are mounted in, e.g., house, but could be used outside in confined areas |

| 19 Sound—feeding | Cows, Sheep | Feed intake, behaviour (grazing, ruminating) | Acoustic Sensors | Managing Grazing and Production | These sensors are mounted on animals |

| 20 Aerial images—extensive | Available Grazing for Cows, Sheep | Quality of grazing | Remote Sensing (Satellite imaging) | Managing Grazing | |

| 21 Aerial images—targeted | Cows, Sheep and Available Grazing | Quality of grazing; location of groups | Camera on Drone/UAV (Unmanned Aerial Vehicle) | Managing Grazing; Detecting Health and Welfare Problems | |

| 22 Local environmental conditions | Livestock | Temperature, humidity, emissions (e.g., Ammonia, Methane, CO2) | Environmental sensors | Managing Health and Welfare Problems of groups; Managing emissions | Usually for housed livestock |

| 23 Weather outside | Livestock | Temperature, humidity, Rainfall, Windspeed, … | Weather station | Managing Health and Welfare Problems of groups | Could affect housed livestock as well as livestock kept outside |

| Name | Measurement On: Individuals ID Known | Measurement On: Individuals ID Not Known | Measurement On: Some Individuals ID Not Known | Measurement On: Groups or Impact on Groups | Timing of Sensor Measurements: Continuous or Near-Continuous | Timing of Sensor Measurements: Intermittent | Timing of Sensor Measurements: Regular | Animals Are: Housed (Usually) | Animals Are: Outside/Grazing | Sensor Is: On Animal | Sensor Is: Not on Animal | Sensor Is: At Fixed Location 1 | Sensor Is: Mobile |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 Individual intakes | ● | ● | ● | ● | ● | ● | |||||||

| 2 Group intakes | ● | ● | ● | ● | ● | ● | ● | ||||||

| 3 Individual weights—identified individuals | ● | ● | ● | ● | ● | ● | ● | ||||||

| 4 Individual weights—unidentified individuals | ● | ● | ● | ● | ● | ||||||||

| 5 Estimated weights—groups/unidentified individuals | ● | ● | ● | ● | ● | ||||||||

| 6 Milk parlour data | ● | ● | ● | ● | ● | ● | ● | ||||||

| 7 Milk bulk lab data | ● | ● | ● | ● | ● | ● | ● | ||||||

| 8 Milk bulk other data | ● | ● | ● | ● | ● | ● | |||||||

| 9 Movement—acceleration | ● | ● | ● | ● | ● | ● | ● | ||||||

| 10 Movement—gait | ● | ● | ● | ● | ● | ● | ● | ||||||

| 11 Location | ● | ● | ● | ● | ● | ● | |||||||

| 12 Relative location | ● | ● | ● | ● | ● | ● | ● | ||||||

| 13 Images—unidentified individuals | ● | ● | ● | ● | ● | ● | |||||||

| 14 Images—identified individuals | ● | ● | ● | ● | ● | ● | |||||||

| 15 Images—unidentified birds | ● | ● | ● | ● | ● | ● | ● | ● | |||||

| 16 Temperature—unidentified individuals | ● | ● | ● | ● | ● | ● | ● | ● | |||||

| 17 Temperature—identified individuals | ● | ● | ● | ● | ● | ● | ● | ||||||

| 18 Sound—vocalisations | ● | ● | ● | ● | ● | ● | |||||||

| 19 Sound—feeding | ● | ● | ● | ● | ● | ● | ● | ||||||

| 20 Aerial images—extensive | ● | ● | ● | ● | |||||||||

| 21 Aerial images—targeted | ● | ● | ● | ● | ● | ● | ● | ||||||

| 22 Local environmental conditions | ● | ● | ● | ● | ● | ||||||||

| 23 Weather outside | ● | ● | ● | ● | ● | ||||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Brocklehurst, S.; Fang, Z.; Butler, A. Real-Time Auto-Monitoring of Livestock: Quantitative Framework and Challenges. Sensors 2025, 25, 5871. https://doi.org/10.3390/s25185871
