1. Introduction

Urban transportation networks must be efficient, safe, and sustainable, and intelligent transportation systems (ITS) are critical for achieving these goals. At the heart of any effective ITS is accurate and reliable travel time prediction (TTP), a cornerstone capability that supports many vital applications. These include dynamic route guidance for drivers and fleet operators [1], optimized traffic signal control, and better incident management.

For travelers, precise TTP enables smarter decisions, which reduces travel delays and supports more effective trip planning. For traffic authorities, TTP provides essential tools for proactively managing congestion, which also helps lower fuel consumption and emissions. Ultimately, a reliable TTP system improves the resilience of the entire network [2].

Despite decades of research and deployment, achieving consistently high-fidelity TTP, particularly across complex urban and freeway networks and under dynamic conditions, remains a formidable scientific and engineering challenge [1]. This challenge stems fundamentally from the inherent limitations and complementary nature of the diverse sensor modalities currently employed for traffic data acquisition [3]. Traditionally, ITS architectures have relied heavily on three primary data streams: point sensors, interval sensors, and probe vehicles.

Point sensors, such as inductive loop sensors, radar, and microwave sensors, provide high-frequency measurements of traffic variables (e.g., volume, occupancy, spot speed) at fixed locations. While they offer valuable temporal granularity, their spatial resolution is inherently limited [4]. Estimating segment or path travel times from these point measurements requires spatial extrapolation, a process prone to inaccuracies, particularly under the non-homogeneous traffic flow conditions that often prevail during congestion onset or dissipation. Furthermore, single-loop sensors, the most widely deployed variant, do not directly measure speed; it must be inferred from occupancy and an assumed vehicle length, which introduces further uncertainty. Sensor malfunctions, calibration drift, and counting errors also contribute to data quality issues [5].
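The single-loop speed inference mentioned above is commonly written as speed = count × effective vehicle length / occupied time. The following is a minimal sketch of that relation; the function name and the default effective length of 6.5 m (vehicle plus detection zone) are illustrative assumptions, not values from this paper.

```python
def single_loop_speed_kmh(count, occupancy, interval_s, eff_length_m=6.5):
    """Estimate mean speed from single-loop count and occupancy.

    count:        vehicles counted during the interval
    occupancy:    fraction of the interval the loop was occupied (0..1)
    interval_s:   length of the reporting interval in seconds
    eff_length_m: ASSUMED effective vehicle length (vehicle + loop), metres
    """
    if count == 0 or occupancy <= 0.0:
        return None  # no vehicles observed, so speed is undefined
    occupied_s = occupancy * interval_s
    # Total length that passed over the loop divided by the time it was occupied.
    speed_ms = count * eff_length_m / occupied_s
    return speed_ms * 3.6
```

Changing the assumed length from 6.5 m to 5.5 m shifts the estimate proportionally, which is the source of the uncertainty noted in the text.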

Interval sensors, such as Automatic Vehicle Identification (AVI), License Plate Recognition (LPR), Bluetooth/Wi-Fi MAC address matching, and Dedicated Short-Range Communications (DSRC), offer direct measurements of segment travel times by re-identifying vehicles at two or more points along a corridor [6]. These measurements are generally considered highly accurate representations of the experienced travel time for the sampled vehicles. However, the deployment infrastructure for such systems is often sparse, both spatially (covering limited network segments) and temporally (yielding infrequent updates, especially during low-flow periods). A critical limitation is the inherent latency: these systems typically report Arrival-based Travel Time (ATT), reflecting conditions experienced by vehicles that have already completed their journey through the segment. This ATT can diverge significantly from the real-time Predicted Travel Time (PTT), especially when traffic conditions are evolving rapidly [7].

Probe vehicles rely on Global Positioning System (GPS) or other localization technologies embedded in smartphones, fleet vehicles (taxis, trucks, delivery vans), or dedicated probe vehicles to provide trajectory data, offering the potential for broader network coverage than fixed infrastructure. Floating Car Data derived from these probes can yield direct or inferred travel time estimates. However, practical limitations persist. Market penetration rates are often insufficient to guarantee dense spatiotemporal coverage, leading to data sparsity [8]. GPS measurements themselves are subject to noise, signal obstructions, and drift. Furthermore, accurately associating GPS traces with specific road segments requires sophisticated map-matching algorithms, which can introduce additional errors and computational overhead [9].

The individual shortcomings of these sensor modalities underscore the compelling need for advanced data fusion techniques [10]. Data fusion aims to produce traffic state estimates that are more accurate, reliable, comprehensive, and robust than could be obtained from any single source alone. The fundamental premise is to leverage complementary strengths: the high accuracy of sparse AVI data, the high temporal frequency of potentially noisy point sensor data, and the broader spatial sampling (albeit potentially sparse and noisy) of GPS probes can be combined to construct a superior representation of the underlying traffic dynamics. The core challenge lies not merely in aggregating data, but in intelligently integrating streams with vastly different accuracy, precision, spatial and temporal resolution, latency, and noise profiles [11].

Existing data fusion methodologies for TTP span a spectrum of approaches. Classical statistical and filtering techniques have been widely explored. Weighted averaging methods offer simplicity but struggle to optimally combine sources with disparate quality or conflicting information [12]. Bayesian inference provides a probabilistic framework for evidence combination but often requires strong prior assumptions [13]. Dempster-Shafer (D-S) evidence theory offers a mechanism to handle uncertainty and conflict without priors. However, it can be computationally complex and sensitive to the definition of belief structures, particularly when dealing with continuous variables like travel time [10]. Kalman Filtering (KF) and its non-linear extensions, the Extended KF (EKF) [14] and the Unscented KF (UKF) [15], represent a powerful paradigm for state estimation in dynamic systems, explicitly modeling process and measurement noise. However, the performance of KF methods hinges critically on the accuracy of the underlying system dynamic model and the appropriateness of Gaussian noise assumptions.

In parallel, the advent of deep learning (DL) has ushered in powerful data-driven paradigms for TTP and fusion. Models such as Artificial Neural Networks, and particularly sequence models like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), have demonstrated remarkable capabilities in capturing complex, non-linear temporal dependencies in traffic data [16]. More recently, Graph Neural Networks (GNNs) have emerged as the state of the art for modeling the explicit network topology and spatial dependencies inherent in traffic systems [17]. Transformer architectures, leveraging self-attention mechanisms, excel at capturing long-range dependencies and are increasingly applied to TTP [18]. While DL models offer great flexibility and strong performance, they often function as black boxes and lack interpretability. They typically require vast amounts of labeled training data, and their generalization performance beyond the training distribution can be uncertain.

Despite these advancements, a critical gap remains in the principled fusion of highly heterogeneous data streams characterized by significant disparities in precision and sparsity, particularly within a continuous-time modeling framework. Existing methods often treat all data sources somewhat uniformly within their respective frameworks [10], for example by assigning different noise variances in KF or relying on the model to implicitly learn weights in DL. They lack an explicit mechanism that uses sparse, high-precision measurements (like AVI) for strong, corrective updates to the system state, while simultaneously using denser, albeit potentially less precise or indirect, measurements (like GPS trajectories or loop sensor readings) to continuously guide the state evolution between these corrections. Simple averaging or standard filtering/learning approaches risk either diluting the high-value information from sparse sensors or becoming overly reliant on potentially biased, high-frequency data. Effectively harnessing the unique value of each data type demands a more nuanced fusion strategy.

Furthermore, traffic dynamics are inherently continuous processes evolving over time. While discrete-time models like Recurrent Neural Networks (RNNs) approximate these dynamics, they can struggle with irregularly sampled data (common with GPS and AVI) and may not fully capture the underlying continuous evolution [8,19]. Neural Ordinary Differential Equations (NODEs) and their extensions, such as Neural Controlled Differential Equations (NCDEs), have recently emerged as a powerful class of deep learning models. They learn system dynamics directly in continuous time by parameterizing the derivative of the hidden state with a neural network. This continuous-time formulation makes them naturally suited for handling irregular time series data and modeling underlying physical processes. However, challenges remain regarding their computational cost (requiring ODE solvers), training stability, and inherent difficulty in modeling abrupt, discontinuous events (like traffic incidents or sudden congestion shifts), which are not well described by standard ODEs.

To address the aforementioned limitations, this paper introduces FusionODE-TT, a novel model for accurate TTP based on the principled fusion of heterogeneous traffic sensor data within a continuous-time modeling paradigm. The core innovation of FusionODE-TT lies in its guided fusion mechanism, implemented within a NODE architecture. This mechanism explicitly differentiates between data sources based on their characteristics.

First, sparse but high-precision AVI-derived travel time measurements, when available, are used to apply a strong corrective update to the latent system state, effectively anchoring the model estimate to ground-truth observations. This concept draws inspiration from the update step in Kalman filtering and data assimilation techniques used in fields like weather forecasting, where sparse, high-impact observations are integrated. Second, denser but potentially lower-precision or indirect data streams from GPS trajectories and point sensors are utilized to provide continuous guidance to the state evolution process modeled by the NODE’s derivative function. This guidance helps the model infer state dynamics more accurately, particularly during periods lacking high-precision AVI updates.

Therefore, the contributions of this work are threefold:

First, we propose a novel guided fusion mechanism embedded within a NODE framework, specifically designed to differentially leverage heterogeneous traffic data for path travel time prediction.

Second, we detail this mechanism for integrating AVI, GPS, and point sensor data, and leverage sparse AVI data for strong correction of the latent state of path travel time.

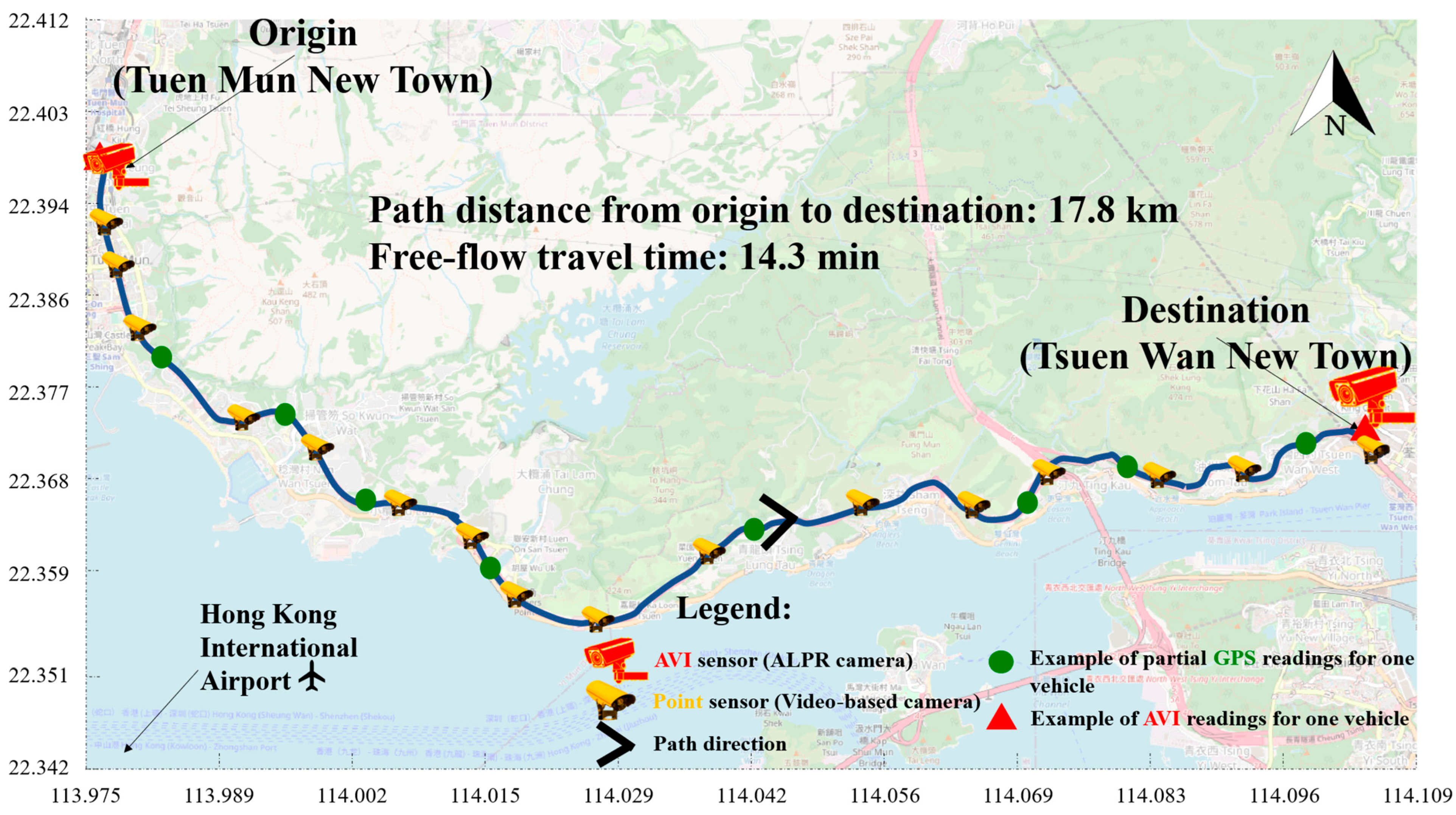

Third, we validate the proposed FusionODE-TT model on real-world datasets from Hong Kong, where it achieves satisfactory prediction performance.

This paper is organized as follows: Section 2 reviews related work in data fusion and continuous-time traffic modeling in detail. Section 3 presents the proposed FusionODE-TT model, elaborating on the NODE backbone and the guided fusion mechanism. Section 4 describes the experimental setup and performance evaluation of the proposed FusionODE-TT model. Section 5 provides the discussion on the efficacy of continuous-time guided fusion, model interpretability, and limitations. Finally, Section 6 concludes the paper and outlines directions for future research.

3. Methodology

This section delineates the methodological foundation of the FusionODE-TT model, conceived for high-fidelity TTP through the principled fusion of heterogeneous traffic sensor data. Our approach leverages the expressive power of continuous-time dynamics modeling, instantiated via NODEs, coupled with a novel guided fusion mechanism. This mechanism is specifically architected to synergistically integrate high-frequency, potentially noisy guidance data derived from point sensors and probe vehicles, with sparse, yet high-accuracy, corrective data obtained from interval (AVI-type) sensors. We first establish a formal problem definition and introduce the notation employed throughout this section. Subsequently, we detail the NODE-based core architecture, followed by an in-depth exposition of the guided fusion components—continuous guidance and strong correction. Finally, we discuss the model training procedure and pertinent implementation considerations.

3.1. Problem Statement

The overarching goal of this work is to develop a robust predictive model capable of accurately forecasting the travel time along a specified route within a complex transportation network, utilizing a diverse array of sensor inputs available in contemporary ITS.

Network Representation: We model the transportation infrastructure as a directed graph $G = (V, E)$, where $V$ represents the set of nodes (e.g., intersections, significant junctions, sensor locations) and $E$ denotes the set of directed edges, corresponding to unidirectional road segments connecting pairs of nodes. A path or route $P$ through the network is defined as a temporally ordered sequence of contiguous segments, $P = (e_1, e_2, \ldots, e_n)$, where each segment $e_i \in E$.

Heterogeneous Data Sources: The FusionODE-TT model is designed to ingest and process data from three principal sensor modalities, each possessing distinct characteristics.

Point Sensors: These sensors (e.g., inductive loops, radar, microwave sensors) are deployed at fixed locations $l_s$, each typically associated with a specific road segment $e_s \in E$. They provide time-stamped measurements of spot speed. The point sensor dataset ($D_{PS}$) comprises tuples of the form $(l_s, t, v_s(t))$, where $l_s$ gives the location of sensor $s$, $t$ is the measurement timestamp, and $v_s(t)$ denotes the measured spot speed. Data from $D_{PS}$ is characterized by high temporal resolution but provides only point-specific information, necessitating spatial inference to estimate segment-level conditions. Furthermore, this data is susceptible to noise and sensor malfunctions.

Interval Sensors: Systems such as AVI using toll tags, License Plate Recognition (LPR), or Bluetooth/Wi-Fi re-identification provide direct measurements of the time taken by individual vehicles to traverse a specific path $P$. The dataset, $D_{AVI}$, contains records of the form $(c, P, t_{\mathrm{entry}}, t_{\mathrm{exit}})$, where $c$ identifies the vehicle traversing path $P$, $t_{\mathrm{entry}}$ is the timestamp recorded upon entering path $P$, and $t_{\mathrm{exit}}$ is the timestamp upon exiting. The directly observed travel time for this traversal, often referred to as Arrival-based Travel Time (ATT), is calculated as $T^{\mathrm{ATT}} = t_{\mathrm{exit}} - t_{\mathrm{entry}}$. Data from $D_{AVI}$ is generally considered the most accurate ground-truth measurement of experienced travel time for the sampled vehicles. However, its primary limitations are significant spatiotemporal sparsity (due to limited deployment or low detection rates) and inherent latency, as the travel time only becomes known at $t_{\mathrm{exit}}$ and therefore reflects conditions experienced in past time intervals.
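As an illustration of how ATT records arise from re-identification, the sketch below pairs entry and exit detections by vehicle identifier. The function name, the record layout, and the one-hour plausibility cap are assumptions made for this example only.

```python
def match_travel_times(entries, exits, max_tt_s=3600.0):
    """Pair entry/exit detections by vehicle id.

    entries, exits: iterables of (vehicle_id, timestamp_s)
    max_tt_s:       ASSUMED cap used to discard stops or detours
    Returns a list of (exit_time, att) sorted by exit time.
    """
    entry_time = {}
    for vid, t_in in entries:
        entry_time[vid] = t_in  # a later detection overwrites an earlier one
    records = []
    for vid, t_out in exits:
        t_in = entry_time.get(vid)
        if t_in is None:
            continue  # vehicle was never seen at the upstream reader
        att = t_out - t_in
        if 0.0 < att <= max_tt_s:
            records.append((t_out, att))
    return sorted(records)
```

Each record is indexed by its exit time because the travel time is only known once the vehicle leaves the path, which is the latency discussed above.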

Probe Vehicles: This data stream originates from vehicles equipped with localization devices, primarily GPS. The dataset, $D_{GPS}$, consists of time-stamped trajectory points for individual vehicles: $(c, t, \mathrm{lat}_c(t), \mathrm{lon}_c(t), v_c(t))$, where $c$ is a unique vehicle identifier, $t$ is the timestamp, $\mathrm{lat}_c(t)$ and $\mathrm{lon}_c(t)$ are the latitude and longitude of vehicle $c$, and $v_c(t)$ is the instantaneous speed of vehicle $c$. Probe vehicle data offers the potential for extensive network coverage, overcoming the fixed-location limitation of point sensors. However, its practical utility is often constrained by variable and sometimes low market penetration rates (leading to spatiotemporal sparsity), inherent GPS positional noise and inaccuracies (especially in challenging environments like urban canyons), and the critical dependence on accurate map-matching algorithms to associate trajectory points with the network graph $G$.

Prediction Objective: The central aim is to predict the PTT for a given path $P$, denoted $\hat{T}_P(t)$. This represents the anticipated duration required for a hypothetical vehicle, commencing its journey along path $P$ at time $t$, to reach the end of the path. The prediction horizon $\Delta$ specifies the future time window for which the prediction is relevant (e.g., predicting the travel time for the next 30 min). The PTT inherently requires forecasting the evolution of traffic conditions along the path during the travel period itself. It therefore differs fundamentally from the lagged ATT measurements provided by interval sensors.
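The difference between a travel time that follows a vehicle through evolving conditions and a snapshot taken at departure can be made concrete with a small sketch. The two functions and the per-segment forecast callables below are hypothetical helpers for illustration, not part of the proposed model.

```python
def experienced_travel_time(t_depart, segment_tt_fns):
    """Walk a virtual vehicle through the path, segment by segment.

    segment_tt_fns: list of callables tt(t) giving the seconds needed to
    traverse a segment when it is entered at time t (hypothetical forecasts).
    """
    t = t_depart
    for tt in segment_tt_fns:
        t += tt(t)  # the next segment is entered only after this one is left
    return t - t_depart


def snapshot_travel_time(t_depart, segment_tt_fns):
    """Sum segment times as if conditions were frozen at departure."""
    return sum(tt(t_depart) for tt in segment_tt_fns)
```

If congestion builds on a downstream segment while the vehicle is still upstream, the first function reports a longer time than the second, which is why the PTT requires forecasting conditions during the trip.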

3.2. The Proposed Model

To address the challenges of path travel time prediction using sparse and heterogeneous data stated in Section 3.1, we propose a novel model, FusionODE-TT, which is designed to effectively model the underlying traffic dynamics in continuous time. The architecture of this framework, as illustrated in Figure 1, is systematically composed of three primary modules: an encoder for latent state initialization, a core dynamic modeling block based on NODEs, and a decoder for the final prediction task.

First, time-aligned feature sequences derived from heterogeneous sensor data and historical travel times are fed into an RNN encoder (Section 3.3). This module processes the input sequence to generate a fixed-dimensional latent state vector, $\mathbf{h}(t_0)$, which encapsulates the comprehensive traffic state of the path at the beginning of the prediction horizon. This vector serves as the initial condition for the core dynamic modeling block.

The core of our framework is the guided continuous-time dynamics module (Section 3.3 and Section 3.4), which models the evolution of the traffic state $\mathbf{h}(t)$ using a NODE. This approach represents the traffic flow as a continuous process, offering a more physically plausible model than traditional discrete-time methods. A key innovation within this module is the guided fusion mechanism. This mechanism leverages sparse but highly accurate travel time observations from AVI sensors to correct the ODE’s latent state trajectory. When an AVI event is observed, the model simulates the travel time, calculates the error against the observation, and uses a gain network to compute a corrective term that recalibrates the latent state. This process anchors the model’s internal state to real-world observations, significantly enhancing long-term prediction accuracy.

The final component of our proposed framework is the decoder, which is responsible for mapping the terminal latent state vector into the final path travel time prediction. The latent state is the output of the core module, representing the culmination of the system’s continuous-time evolution, guided by the fusion mechanism. For this decoding task, we employ a standard Multi-Layer Perceptron (MLP). The MLP is a powerful yet efficient function approximator, well-suited for this regression task. It takes the high-dimensional latent state as input and processes it through a series of non-linear transformations (i.e., hidden layers with ReLU activation functions) to produce a single, scalar output value. This output represents the predicted travel time in minutes. The entire FusionODE-TT model, including the encoder, the Neural ODE, and the MLP decoder, is trained end-to-end. This is achieved by minimizing a composite loss function.

3.3. Continuous-Time Dynamics with NODEs

The initial step in our framework is to process the time-aligned, multivariate input sequence derived from the various sensors and historical records. This input, representing the traffic state over a historical lookback window, is fed into an RNN encoder. The primary function of this encoder is to learn and compress the complex temporal dependencies within the input sequence into a fixed-dimensional hidden state vector. This vector is then transformed through a linear projection layer to produce the initial latent state, denoted as $\mathbf{h}(t_0)$. This latent state serves as a comprehensive summary of the path’s traffic condition at the beginning of the prediction horizon and provides the initial value for the subsequent continuous-time dynamic modeling block.

The FusionODE-TT model adopts a continuous-time perspective on traffic dynamics, diverging from traditional discrete-time modeling approaches. We hypothesize that the complex, evolving state of the traffic network can be effectively captured by a lower-dimensional latent state vector $\mathbf{h}(t) \in \mathbb{R}^{d}$, where $d$ is the dimensionality of this latent representation. $\mathbf{h}(t)$ is intended to implicitly encode all pertinent information about traffic conditions (e.g., speeds, densities, queue lengths) across the network segments relevant to the prediction task at time $t$.

The temporal evolution of this latent state is modeled using a NODE, where the core dynamics function, $f_\theta$, is parameterized by a neural network with learnable parameters $\theta$. A foundational challenge with any purely self-evolving dynamic system is its potential to drift from the true state over time if it is not continuously anchored to real-world observations. The continuous guidance signals, $\mathbf{u}_{PS}(t)$ and $\mathbf{u}_{GPS}(t)$, are introduced to address this challenge directly. These signals, which are derived from our heterogeneous sensor data, provide the ODE with a continuous stream of external, real-time information about the traffic environment. By conditioning the latent state’s evolution on these signals, in addition to the road network’s spatial structure represented by the graph $G$, we ensure the model’s dynamics are constantly informed by and responsive to real-time traffic conditions, rather than evolving in isolation. This leads to the governing equation of our framework, which defines the temporal evolution of the latent state:

$$\frac{d\mathbf{h}(t)}{dt} = f_\theta\big(\mathbf{h}(t), \mathbf{u}_{PS}(t), \mathbf{u}_{GPS}(t), G\big) \tag{1}$$

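To effectively model the spatiotemporal dynamics, the function $f_\theta$ internally leverages a Graph Neural Network (GNN). At each evaluation step within the ODE solver, $f_\theta$ first uses a GNN layer to update the state based on neighbors in $G$ (with adjacency matrix $\mathbf{A}$), capturing spatial interactions:

$$\mathbf{h}_{s}(t) = \mathrm{GNN}\big(\mathbf{h}(t), \mathbf{A}\big) \tag{2}$$

Then, it combines this spatially aware state $\mathbf{h}_{s}(t)$ with the original state $\mathbf{h}(t)$ and the continuous guidance inputs $\mathbf{u}_{PS}(t)$ and $\mathbf{u}_{GPS}(t)$, using a temporal processing block (e.g., an MLP) to compute the final derivative, which represents the output of $f_\theta$:

$$\frac{d\mathbf{h}(t)}{dt} = \mathrm{MLP}\big(\mathbf{h}_{s}(t), \mathbf{h}(t), \mathbf{u}_{PS}(t), \mathbf{u}_{GPS}(t)\big) \tag{3}$$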

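The state $\mathbf{h}(t)$ at any time $t$, given an initial state $\mathbf{h}(t_0)$, is determined by the solution to the initial value problem defined by Equation (1), obtained via integration:

$$\mathbf{h}(t) = \mathbf{h}(t_0) + \int_{t_0}^{t} f_\theta\big(\mathbf{h}(\tau), \mathbf{u}_{PS}(\tau), \mathbf{u}_{GPS}(\tau), G\big)\, d\tau \tag{4}$$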

The continuous nature of Equation (4) provides inherent advantages over discrete-time models. It naturally handles irregularly sampled input data without requiring explicit imputation or awkward padding schemes, and it can, in principle, model the underlying continuous physical processes more faithfully.
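To make the integration step concrete, the sketch below solves an initial value problem with a fixed-step classical Runge-Kutta (RK4) scheme. It is a stand-in under stated assumptions: `toy_dynamics` is a hypothetical hand-written function that merely relaxes the state toward the guidance signal, whereas the model uses a learned network, and the paper does not specify which solver is used.

```python
import math


def rk4_solve(f, h0, t0, t1, u, n_steps=100):
    """Integrate dh/dt = f(h, u(t)) from t0 to t1 with classical RK4.

    h0 is a list of floats; u is a callable returning the guidance at time t.
    """
    def shifted(h, k, a):
        return [hi + a * ki for hi, ki in zip(h, k)]

    h, t = list(h0), t0
    dt = (t1 - t0) / n_steps
    for _ in range(n_steps):
        k1 = f(h, u(t))
        k2 = f(shifted(h, k1, dt / 2), u(t + dt / 2))
        k3 = f(shifted(h, k2, dt / 2), u(t + dt / 2))
        k4 = f(shifted(h, k3, dt), u(t + dt))
        h = [hi + dt / 6 * (a + 2 * b + 2 * c + d)
             for hi, a, b, c, d in zip(h, k1, k2, k3, k4)]
        t += dt
    return h


def toy_dynamics(h, u):
    """ASSUMED stand-in for the learned dynamics: relax toward the guidance."""
    return [u - hi for hi in h]


h_end = rk4_solve(toy_dynamics, [0.0], 0.0, 1.0, lambda t: 2.0)
expected = 2.0 * (1.0 - math.exp(-1.0))  # closed-form solution at t = 1
```

Because the solver can be evaluated at any time `t1`, observations do not need to fall on a regular grid.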

To translate the learned latent state $\mathbf{h}(t)$ into the desired output, which is the predicted travel time, a decoder function $g_\phi$ is implemented as a neural network with learnable parameters $\phi$:

$$\hat{T}_P(t) = g_\phi\big(\mathbf{h}(t)\big) \tag{5}$$

where the decoder maps the latent state at the prediction initiation time $t$ to the PTT estimate $\hat{T}_P(t)$ for the specified path $P$. The prediction horizon $\Delta$ might influence the training target or could be an additional input to $g_\phi$.

3.4. The Guided Fusion Mechanism

A central innovation of FusionODE-TT is the guided fusion mechanism, designed to intelligently integrate the heterogeneous data streams ($D_{PS}$, $D_{AVI}$, $D_{GPS}$) by explicitly recognizing and leveraging their distinct characteristics within the NODE framework. It comprises two complementary components, continuous guidance and strong correction, which are described in Section 3.4.1 and Section 3.4.2, respectively.

3.4.1. Continuous Guidance

Data from point sensors ($D_{PS}$) and probe vehicles ($D_{GPS}$) typically arrive more frequently than AVI data, providing a continuous, albeit potentially noisy and incomplete, stream of information about traffic conditions. This stream is used to continuously “guide” the trajectory of the latent state $\mathbf{h}(t)$ as it evolves according to the learned dynamics.

Raw data from $D_{PS}$ and $D_{GPS}$ must first be processed into feature vectors. For point sensors, the measurements ($v_s(t)$) from each point sensor $s$ relevant to the path $P$ are aggregated over short, regular time intervals (e.g., 2 min). This aggregation might involve averaging, median filtering, or more sophisticated methods. The resulting aggregated features for all relevant sensors at time interval $k$ form a feature vector $\mathbf{x}_{PS}[k]$. GPS trajectory points are first subjected to map-matching to associate them with network segments $e \in E$. Once matched, segment-level statistics (e.g., average speed, vehicle density estimates based on probe counts and segment length) are computed by aggregating matched points within short time intervals, yielding feature vectors $\mathbf{x}_{GPS}[k]$.
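The interval aggregation described above can be sketched as follows, using simple averaging and the 2 min interval given as an example in the text. The function name and the record layout are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean


def aggregate_speeds(records, interval_s=120.0):
    """Average spot speeds per (interval, sensor).

    records: iterable of (sensor_id, timestamp_s, speed)
    Returns {interval_index: {sensor_id: mean_speed}} ordered by interval.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for sensor_id, t, speed in records:
        buckets[int(t // interval_s)][sensor_id].append(speed)
    return {k: {s: mean(v) for s, v in per_sensor.items()}
            for k, per_sensor in sorted(buckets.items())}
```

Intervals in which a sensor reports nothing are simply absent from the result, so a downstream step has to decide how to carry values forward.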

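To integrate these discretely observed features into the continuous-time dynamics, we must define their continuous-time counterparts, $\mathbf{u}_{PS}(t)$ and $\mathbf{u}_{GPS}(t)$. We employ piecewise-constant interpolation. Under this strategy, feature values remain constant between updates:

$$\mathbf{u}_{PS}(t) = \mathbf{x}_{PS}[k], \quad \mathbf{u}_{GPS}(t) = \mathbf{x}_{GPS}[k], \quad t_k \le t < t_{k+1} \tag{6}$$

where $t_k$ denotes the time of the $k$-th feature update.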

This method, while simple, ensures the ODE solver has defined inputs. More advanced approaches, like NCDEs, could learn the continuous path from discrete observations, offering a promising avenue for future refinement.
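Piecewise-constant interpolation amounts to a zero-order hold: at any query time, return the most recent feature vector. A minimal sketch, with a hypothetical helper name:

```python
from bisect import bisect_right


def zero_order_hold(times, values):
    """Build u(t) that returns the latest value observed at or before t.

    times:  sorted list of update times
    values: feature vectors, one per update time
    """
    def u(t):
        k = bisect_right(times, t) - 1  # index of the last update <= t
        if k < 0:
            raise ValueError("t precedes the first observation")
        return values[k]
    return u
```

The returned callable can be passed directly to an ODE solver, which may query it at arbitrary intermediate times.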

These continuous-time signals, $\mathbf{u}_{PS}(t)$ and $\mathbf{u}_{GPS}(t)$, are then incorporated into the NODE dynamics function $f_\theta$ (as defined in Equation (1)), ensuring the rate of change in the latent state is continuously influenced by the latest available guidance. The neural network learns to interpret these signals and modulate the state evolution accordingly, allowing the model to track conditions between sparser, high-accuracy corrections.

3.4.2. Strong Correction

While continuous guidance helps, interval sensor (AVI) data provides sparse but highly accurate measurements, $T^{\mathrm{ATT}}$. The strong correction mechanism explicitly leverages this accuracy to counteract potential drift in $\mathbf{h}(t)$. It functions as a discrete, event-triggered update.

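We treat each valid AVI measurement $T^{\mathrm{ATT}}_{j}$ for segment $e_j$ arriving at $t_k$ as an event. The continuous integration (Equation (1)) pauses, and $\mathbf{h}(t_k)$ is corrected. The challenge is that $T^{\mathrm{ATT}}_{j}$ is an Arrival-based Travel Time (ATT) over a past interval $[t_k - T^{\mathrm{ATT}}_{j},\, t_k]$. To handle this, we calculate a model-simulated ATT ($\hat{T}^{\mathrm{ATT}}_{j}$) by integrating model-derived instantaneous speeds $\hat{v}_j(\tau)$ over the past interval:

$$\hat{T}^{\mathrm{ATT}}_{j}(t_k) = \frac{L_j}{\dfrac{1}{T^{\mathrm{ATT}}_{j}} \displaystyle\int_{t_k - T^{\mathrm{ATT}}_{j}}^{t_k} \hat{v}_j(\tau)\, d\tau} \tag{7}$$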

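Here, $L_j$ is the segment length, and $\hat{v}_j(\tau)$ is derived from $\mathbf{h}(\tau)$ via a learned function $o_\psi$ (parameterized by $\psi$). This requires accessing historical $\mathbf{h}(\tau)$. The innovation ($\epsilon_k$) is the difference:

$$\epsilon_k = T^{\mathrm{ATT}}_{j} - \hat{T}^{\mathrm{ATT}}_{j}(t_k) \tag{8}$$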

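We denote $\mathbf{h}^{-}(t_k)$ as the state before correction. The state is updated using:

$$\mathbf{h}^{+}(t_k) = \mathbf{h}^{-}(t_k) + \mathbf{K}_k\, \epsilon_k \tag{9}$$

where $\mathbf{h}^{+}(t_k)$ is the corrected state, and $\mathbf{K}_k \in \mathbb{R}^{d \times 1}$ is the adaptive gain vector, calculated as:

$$\mathbf{K}_k = \mathbf{P}^{-}_k \mathbf{H}_k^{\top} \big(\mathbf{H}_k \mathbf{P}^{-}_k \mathbf{H}_k^{\top} + R_k\big)^{-1} \tag{10}$$

Here, $\mathbf{P}^{-}_k \in \mathbb{R}^{d \times d}$ is the predicted state error covariance (model uncertainty), $R_k \in \mathbb{R}$ is the measurement noise covariance (observation uncertainty), and $\mathbf{H}_k \in \mathbb{R}^{1 \times d}$ is the linearized observation operator (Jacobian matrix):

$$\mathbf{H}_k = \left.\frac{\partial \hat{T}^{\mathrm{ATT}}_{j}}{\partial \mathbf{h}}\right|_{\mathbf{h} = \mathbf{h}^{-}(t_k)} \tag{11}$$

This ensures $\mathbf{K}_k$ adapts based on relative uncertainties. The corrected state $\mathbf{h}^{+}(t_k)$ then serves as the new initial condition for the ODE solver, resuming integration from $t_k$ using Equation (1). This mechanism stabilizes the model by anchoring it to high-confidence AVI data.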
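The event-triggered update can be illustrated with a Kalman-style correction for a single scalar observation. This is a sketch under stated assumptions: the covariance, Jacobian, and noise variance are passed in as plain numbers, whereas in the model they would come from the learned components, and the function name is hypothetical.

```python
def strong_correction(h_minus, P_minus, H, R, att_obs, att_sim):
    """Correct the latent state with one scalar travel time observation.

    h_minus: state before correction (list of d floats)
    P_minus: d x d predicted error covariance (list of lists)
    H:       observation Jacobian, length d
    R:       scalar measurement noise variance
    Returns (h_plus, K), the corrected state and the gain vector.
    """
    d = len(h_minus)
    # P H^T: how state uncertainty projects onto the observation.
    PHt = [sum(P_minus[i][j] * H[j] for j in range(d)) for i in range(d)]
    # Innovation variance H P H^T + R (a scalar for one observation).
    S = sum(H[i] * PHt[i] for i in range(d)) + R
    K = [p / S for p in PHt]
    eps = att_obs - att_sim  # innovation: observed minus simulated ATT
    h_plus = [h + k * eps for h, k in zip(h_minus, K)]
    return h_plus, K
```

When `R` is small relative to the projected model uncertainty, the gain approaches its maximum and the observation dominates; when `R` is large, the state is barely moved.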

3.5. Model Training

The training process for FusionODE-TT involves optimizing the parameters of the neural networks involved: $\theta$ (dynamics function $f_\theta$), $\phi$ (decoder $g_\phi$), $\psi$ (observation functions $o_\psi$), and potentially any learnable parameters within the gain $\mathbf{K}$. This optimization is achieved by minimizing a composite loss function using gradient-based methods and backpropagation through the entire computational graph, including the ODE solver and the discrete correction steps.

3.5.1. Loss Function Design

The first type of loss is the primary prediction loss ($\mathcal{L}_{pred}$). This term directly measures the discrepancy between the model’s final predicted travel time $\hat{T}_P^{(i)}$ for path $P$ and the corresponding ground truth travel time $T_P^{(i)}$. Suitable ground truth might come from held-out high-quality AVI data for the entire path or other reliable sources. Common choices include mean absolute error (MAE, $q = 1$) or mean squared error (MSE, $q = 2$):

$$\mathcal{L}_{pred} = \frac{1}{N} \sum_{i=1}^{N} \left| \hat{T}_P^{(i)} - T_P^{(i)} \right|^{q} \tag{12}$$

where $N$ is the number of samples in a batch, and $i$ indexes the samples.

The second type is the auxiliary AVI consistency loss ($\mathcal{L}_{AVI}$). To ensure that the latent state $\mathbf{h}(t)$ evolves meaningfully and that the observation functions $o_\psi$ provide accurate segment estimates even before the strong correction is applied, we introduce an auxiliary loss term. This term penalizes the difference between the model’s segment travel time estimate immediately prior to correction, $\hat{T}^{\mathrm{ATT}}_{j_k}(t_k)$, and the actual observed AVI travel time $T^{\mathrm{ATT}}_{j_k}$:

$$\mathcal{L}_{AVI} = \frac{1}{M} \sum_{k=1}^{M} \left| \hat{T}^{\mathrm{ATT}}_{j_k}(t_k) - T^{\mathrm{ATT}}_{j_k} \right|^{q} \tag{13}$$

where $M$ is the number of AVI correction events occurring within the training batch/sequence, and $j_k$ denotes the segment associated with the $k$-th event. This loss encourages the continuous dynamics and the observation functions to remain consistent with the high-accuracy AVI data throughout the evolution.

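The third type is the guidance reconstruction loss ($\mathcal{L}_{recon}$). In some cases, it might be beneficial to ensure that the latent state $\mathbf{h}(t)$ retains sufficient information about the guidance inputs $\mathbf{u}_{PS}(t)$ and $\mathbf{u}_{GPS}(t)$. If a reconstruction mapping $r_\omega$ back to the space of guidance features can be defined (e.g., another neural network), a loss term can be added:

$$\mathcal{L}_{recon} = \sum_{m} \left\| r_\omega\big(\mathbf{h}(t_m)\big) - \big[\mathbf{u}_{PS}(t_m),\, \mathbf{u}_{GPS}(t_m)\big] \right\|^{2} \tag{14}$$

where the sum is over relevant time steps $t_m$ where guidance data $\mathbf{u}_{PS}(t_m)$ and $\mathbf{u}_{GPS}(t_m)$ are available. However, defining and training $r_\omega$ adds complexity, and this term might not always be necessary if $\mathcal{L}_{pred}$ and $\mathcal{L}_{AVI}$ provide sufficient supervision.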

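Therefore, the total loss can be expressed as:

$$\mathcal{L} = \mathcal{L}_{pred} + \lambda_1 \mathcal{L}_{AVI} + \lambda_2 \mathcal{L}_{recon} \tag{15}$$

where $\lambda_1$ and $\lambda_2$ are hyperparameters that control the relative importance of the auxiliary loss terms.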
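A weighted sum of a prediction term and auxiliary terms can be sketched in a few lines. The function names, the default weights, and the choice to skip the AVI term when a batch has no correction events are assumptions for illustration; the reconstruction term is passed in as a precomputed number.

```python
def mean_abs_pow(pred, target, q=1):
    """Mean of |pred - target|^q (q=1 gives MAE, q=2 gives MSE)."""
    return sum(abs(p - t) ** q for p, t in zip(pred, target)) / len(pred)


def total_loss(pred, target, att_sim, att_obs,
               lam_avi=0.1, lam_recon=0.0, recon=0.0, q=1):
    """Combine prediction, AVI consistency, and reconstruction terms."""
    l_pred = mean_abs_pow(pred, target, q)
    # With no AVI events in the batch the consistency term is skipped.
    l_avi = mean_abs_pow(att_sim, att_obs, q) if att_obs else 0.0
    return l_pred + lam_avi * l_avi + lam_recon * recon
```

Setting both weights to zero recovers plain supervised training on the prediction term alone.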

3.5.2. Optimization and Gradient Computation

The model parameters ($\theta$, $\phi$, $\psi$, and the learnable parts of $\mathbf{K}$) are optimized using stochastic gradient descent variants. The core challenge lies in computing the gradients of the loss with respect to these parameters, as this requires backpropagation through the dynamics defined by the ODE solver and the discrete correction steps.

The standard technique for computing gradients through the ODE integration (Equation (4)) is the adjoint sensitivity method. This method avoids storing intermediate activations by solving a second, augmented ODE backward in time, offering constant memory complexity with respect to the number of integration steps. However, it can be computationally intensive and may suffer from numerical instability.
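For reference, the adjoint method in its standard textbook form is sketched below, written with column-vector gradients. The symbols ($\mathbf{a}$ for the adjoint state, $\mathbf{h}$ for the latent state, $f_\theta$ for the dynamics, and $[t_0, t_1]$ for the integration window) are this sketch's notation, and guidance inputs are omitted for brevity.

```latex
\begin{aligned}
\mathbf{a}(t) &= \frac{\partial \mathcal{L}}{\partial \mathbf{h}(t)}, \\
\frac{d\mathbf{a}(t)}{dt} &= -\left(\frac{\partial f_\theta}{\partial \mathbf{h}}\right)^{\top} \mathbf{a}(t), \\
\frac{d\mathcal{L}}{d\theta} &= \int_{t_0}^{t_1} \left(\frac{\partial f_\theta}{\partial \theta}\right)^{\top} \mathbf{a}(t)\, dt .
\end{aligned}
```

The adjoint state is initialized at $t_1$ from the loss gradient and integrated backward to $t_0$, so only the current state and adjoint need to be held in memory.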

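The strong correction step (Equation (9)) introduces discontinuities in the state trajectory $\mathbf{h}(t)$. Backpropagating gradients through these discrete jumps requires careful handling. The gradient of the loss $\mathcal{L}$ with respect to the state after the correction, $\mathbf{h}^{+}(t_k)$, needs to be correctly propagated to the state before the correction, $\mathbf{h}^{-}(t_k)$, and to any learnable parameters in $\mathbf{K}_k$ and those involved in calculating $\epsilon_k$ (i.e., $\psi$). If $\mathbf{K}_k$ is learnable or depends on $\mathbf{h}^{-}(t_k)$, its derivative path must also be considered. The relationship for propagating gradients across the update step (Equation (9)), assuming $\mathbf{K}_k$ might depend on $\mathbf{h}^{-}(t_k)$, can be derived using the chain rule. For instance, the gradient with respect to $\mathbf{h}^{-}(t_k)$ would be:

$$\frac{\partial \mathcal{L}}{\partial \mathbf{h}^{-}} = \left( \mathbf{I} + \frac{\partial \mathbf{K}_k}{\partial \mathbf{h}^{-}}\, \epsilon_k + \mathbf{K}_k \frac{\partial \epsilon_k}{\partial \mathbf{h}^{-}} \right)^{\top} \frac{\partial \mathcal{L}}{\partial \mathbf{h}^{+}} \tag{16}$$

where $\mathbf{I}$ is the identity matrix. Computing $\partial \epsilon_k / \partial \mathbf{h}^{-}$ involves differentiating Equation (8) with respect to $\mathbf{h}^{-}(t_k)$, which in turn requires differentiating Equation (7) (the simulated ATT). The gradients with respect to parameters of $\mathbf{K}_k$ (if any) and $\psi$ (via $\epsilon_k$) also need to be carefully derived and included in the backpropagation pass. The adjoint state must be appropriately updated or reset at these event times during the backward pass.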

3.5.3. Robustness of the Guided Fusion Mechanism

A critical prerequisite for the effective implementation of the guided fusion mechanism is ensuring the quality and validity of the AVI data that underpins it. To mitigate the risk of performance degradation from erroneous sensor readings, we employ a robust filtering methodology for all incoming AVI travel time observations, as proposed in [4]. This advanced filter leverages both within-day and day-to-day variations in traffic conditions to effectively identify and remove outliers caused by systematic errors or transient sensor failures, even in low-sample-size conditions.
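The filter of [4] is not reproduced here. As a generic stand-in that conveys the idea of outlier screening on travel time observations, the sketch below applies a median absolute deviation (MAD) rule within a single window; the function name and the threshold of 3 are assumptions, and this simple rule does not use the within-day and day-to-day structure described above.

```python
from statistics import median


def mad_filter(travel_times, threshold=3.0):
    """Keep observations within `threshold` scaled MADs of the median.

    This is a generic robust rule, NOT the method of the cited reference.
    """
    med = median(travel_times)
    mad = median(abs(x - med) for x in travel_times)
    if mad == 0:
        return list(travel_times)  # no spread to judge outliers against
    scale = 1.4826 * mad  # makes the MAD comparable to a standard deviation
    return [x for x in travel_times if abs(x - med) <= threshold * scale]
```

A traversal that includes a stop or detour shows up as a travel time far above the median and is dropped before it can trigger a correction.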

Furthermore, the strong correction mechanism is designed to be resilient to data outages. If a sensor failure results in a complete lack of validated AVI events within a given prediction horizon, no correction is applied and the AVI-based loss term ($\mathcal{L}_{AVI}$) is not computed. In such cases, the model relies on the uncorrected ODE trajectory derived from the historical data, which keeps the framework operational during the outage.

5. Discussion

The experimental results presented in the previous section confirm the effectiveness of the proposed FusionODE-TT model. This section provides a broader discussion of the implications of these findings, the interpretability of the model, and the limitations of the current study, which in turn suggest directions for future research.

5.1. The Efficacy of Continuous-Time Guided Fusion

The superior performance of the full FusionODE-TT model, as demonstrated in the ablation study (Table 4), highlights the value of our two primary methodological contributions. First, the outperformance of the ODE-based models over the standard GRU baseline suggests that modeling traffic dynamics as a continuous-time process is a more effective approach. This allows the model to capture the underlying physical flow of traffic more faithfully than discrete-time models.

Second, and more critically, the significant performance gap between the “Guided” and “No-Guided” versions of the FusionODE-TT model validates the efficacy of the proposed guided fusion mechanism. This finding indicates that using sparse, high-fidelity AVI data to actively correct the model’s latent state trajectory is a powerful strategy. It effectively mitigates the problem of error accumulation and trajectory drift in long-term forecasting, demonstrating that the quality and strategic use of data can be more impactful than quantity alone.

5.2. Model Interpretability

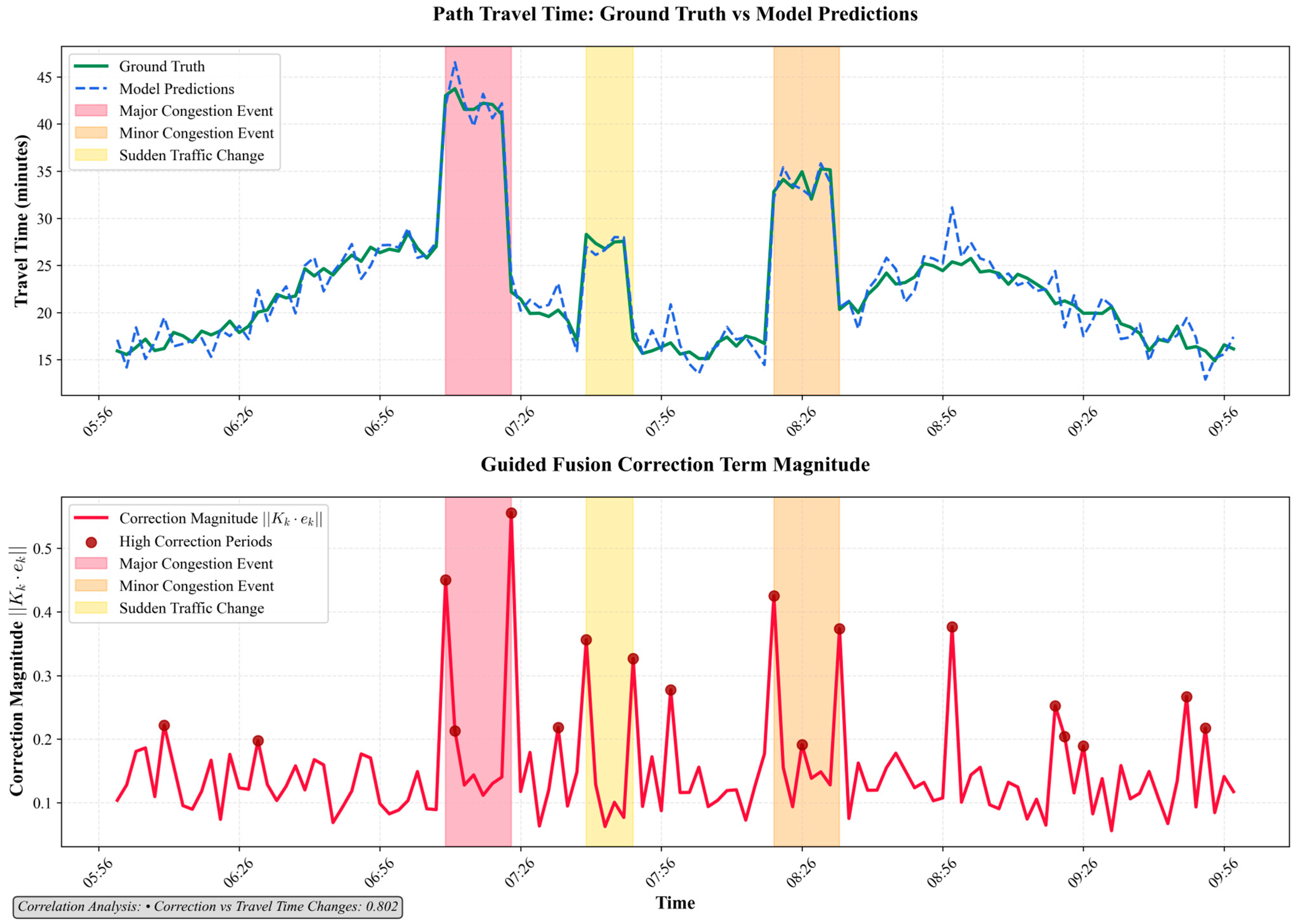

While deep learning models offer powerful predictive capabilities, their “black box” nature can be a barrier to adoption in critical systems. Therefore, it is important to provide an interpretation of the internal state and mechanisms of the proposed FusionODE-TT model. We conducted a case study on a representative period from the test dataset that includes the morning peak transition (06:00–10:00).

Figure 6 shows the relationship between the magnitude of the model’s latent state and the ground truth travel time. The latent-state magnitude (blue line) closely tracks the ground truth travel time (green line); its value spikes at the onset of congestion (e.g., around 07:00 and 07:30). The model also flags periods of high volatility as detected traffic events (blue dots), indicating that the latent state has learned to represent the intensity of the traffic state in a meaningful way. The correlation coefficient between the latent-state magnitude and the travel time is 0.983, which supports the use of the internal latent state for capturing variations in path travel times.

We also analyzed the magnitude of the correction term to verify that the guided fusion mechanism behaves in a logical and interpretable manner. As shown in Figure 7, the applied corrections follow the travel time change (defined as the difference in ground truth travel time between consecutive 2 min intervals). During periods of stable traffic, such as before 06:30, the change in ground truth travel time between adjacent intervals is minimal, and consequently, the correction term magnitude (red spikes) remains close to zero.

However, as the morning congestion begins to build between 07:00 and 08:30, the traffic dynamics become more volatile. It is during these periods that the guided fusion mechanism applies the strongest corrections, as indicated by the prominent red spikes. To quantify this relationship, we calculated the correlation between the correction term magnitude and the travel time change. The analysis yields a strong positive correlation coefficient of 0.802, indicating that the model applies its largest corrections when the real-world traffic state is changing most rapidly. This suggests that the guided fusion mechanism does not apply corrections arbitrarily but responds to changes in the traffic state, a property that is important for real-world deployment.
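The two correlation statistics reported in this subsection can be computed along the following lines. This is a minimal sketch under assumptions: the latent-state magnitude is taken to be the L2 norm of the latent vector, the travel time change is taken as an absolute difference so that it is comparable with a correction magnitude, and the helper names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def l2_norm(vector):
    """Magnitude of a latent-state or correction vector (assumed definition)."""
    return math.sqrt(sum(c * c for c in vector))


def travel_time_change(travel_times):
    """Absolute change between consecutive intervals (one value fewer than input)."""
    return [abs(b - a) for a, b in zip(travel_times, travel_times[1:])]
```

For the latent-state analysis, `pearson` would be applied to the per-interval `l2_norm` of the latent state and the ground truth travel time. For the correction analysis, the correction magnitudes would be aligned with the output of `travel_time_change`, which has one fewer element than the travel time series.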

5.3. Limitations and Future Work

While this study provides strong evidence for the potential of the FusionODE-TT framework, we acknowledge several limitations that open avenues for future research. First, regarding real-time applicability, our computational analysis shows that the model’s inference time is well within the budget for typical traffic management cycles. However, the complexity of the Neural ODE presents a trade-off between accuracy and speed. Future work could explore model optimization techniques, such as quantization or pruning, to further enhance its efficiency for edge computing applications. Second, in terms of generalizability, the model was validated on a single, albeit complex, urban corridor. Future studies should test the framework’s transferability to cities with different road network topologies (e.g., grid-like vs. radial) to fully assess its robustness. Finally, the current model relies exclusively on traffic-related data. A significant opportunity for enhancement lies in the integration of external factors. Incorporating contextual data such as adverse weather conditions, traffic incidents, and special events as features could provide the model with a more comprehensive understanding of the factors influencing travel time, likely leading to further improvements in prediction accuracy.

6. Conclusions

This paper introduced FusionODE-TT, a novel model designed to address the persistent challenges of path travel time prediction from sparse and heterogeneous traffic data. By conceptualizing traffic dynamics as a continuous-time process, our model utilizes a NODE to capture the complex, non-linear evolution of the traffic state. The central contribution of our work is a guided fusion mechanism, which integrates high-fidelity but sparse AVI data to actively correct and anchor the model’s latent state trajectory.

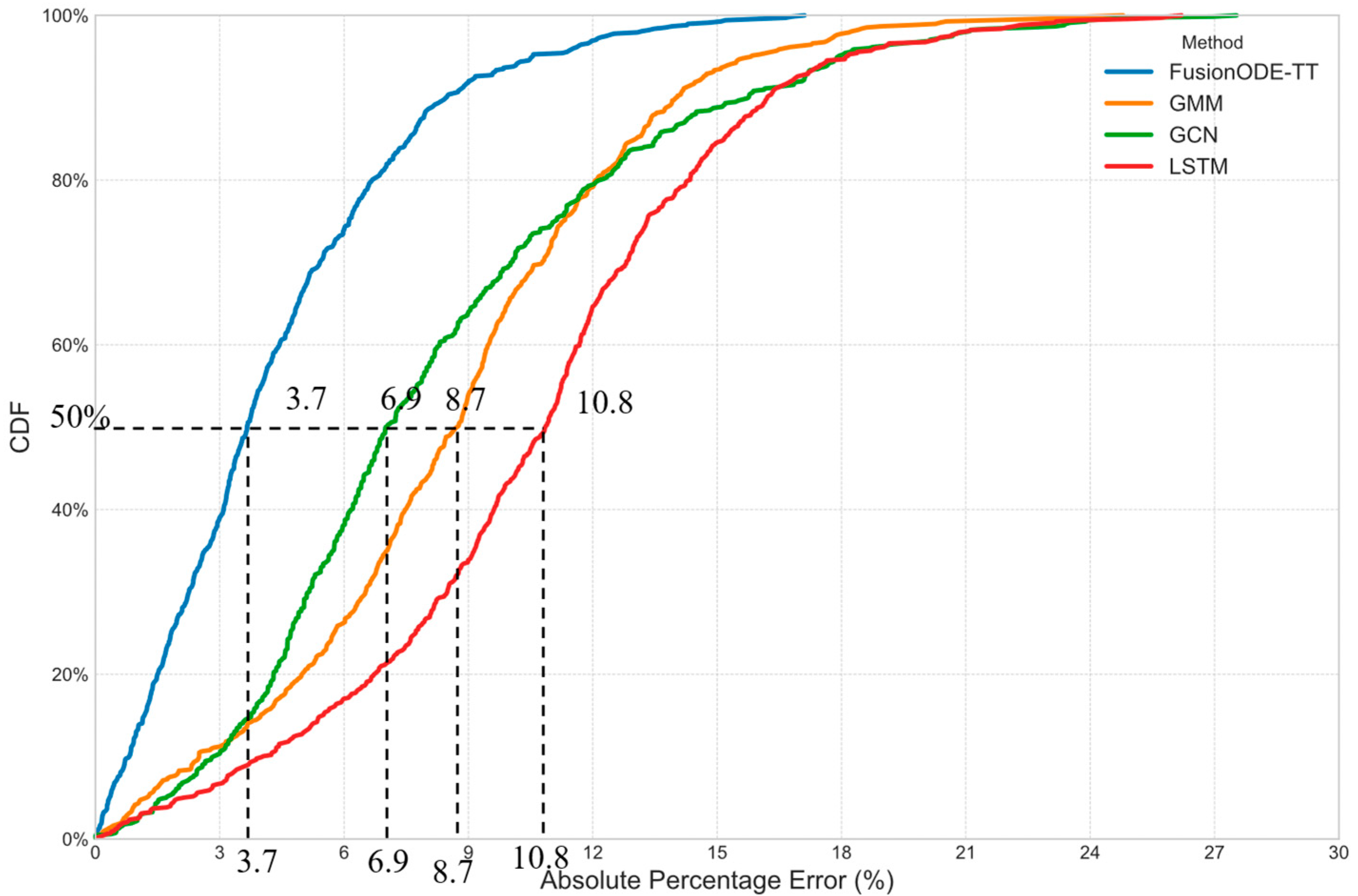

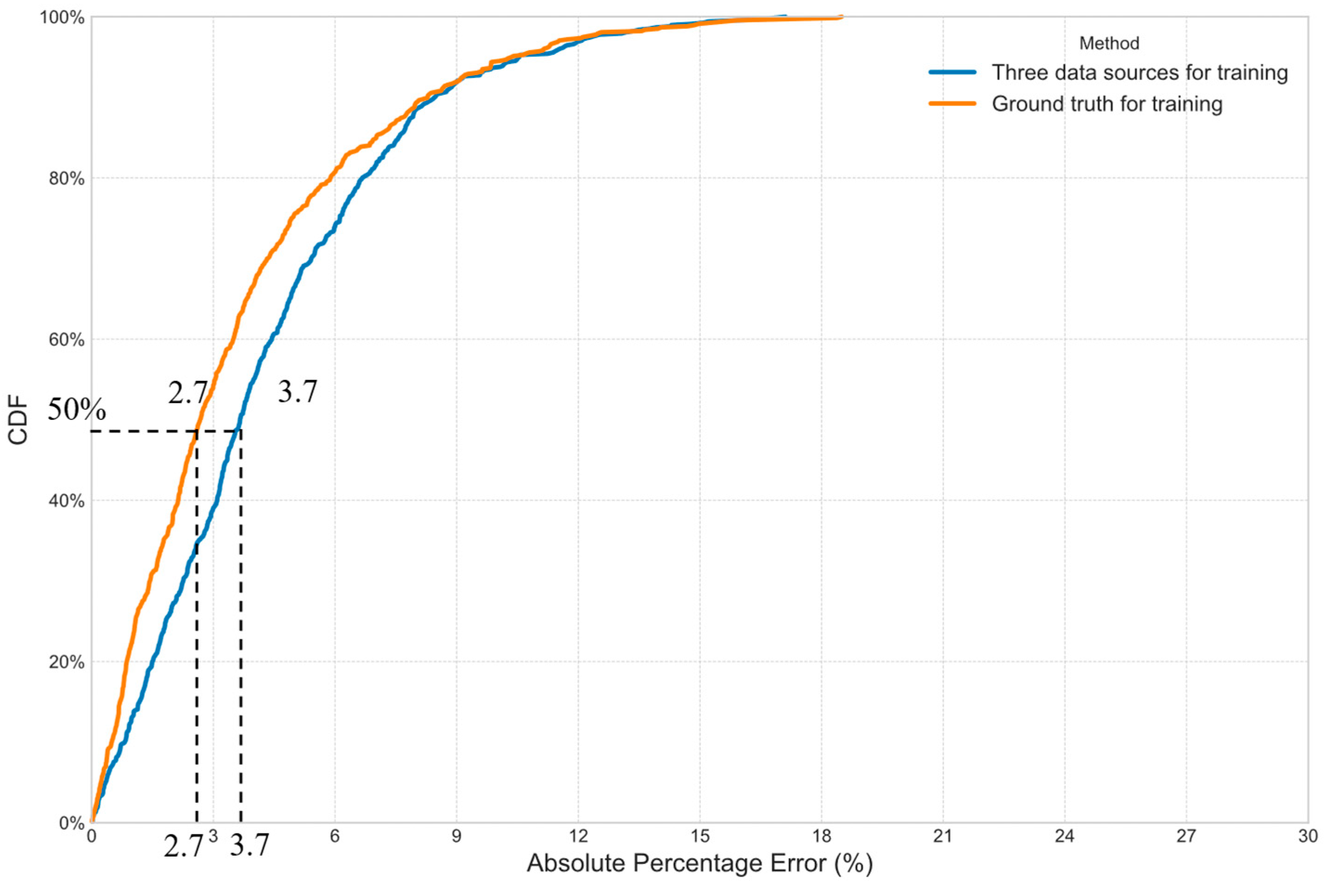

The comprehensive experiments yield several key outcomes that validate the proposed approach. The quantitative results demonstrate that: (1) fusing data from all available sensors (AVI, GPS, point sensors) using the proposed model yields a significantly more accurate prediction (MAPE of 3.1%) than using any single data source, confirming the value of a holistic, heterogeneous fusion strategy (from Table 2); (2) the proposed model is significantly more robust to data scarcity than traditional baselines, with its error still less than 10% when training data is reduced to 60%, compared to nearly 20% for other methods (from Table 4); and (3) an ablation study proves that both the continuous-time dynamics (NODE) and the guided fusion mechanism are essential components, each providing a significant and independent contribution to the model’s superior performance (Table 5). Critically, our qualitative analysis further reveals that the model is not a “black box.” The model’s internal latent state is shown to be highly correlated with real-world traffic conditions (coefficient of correlation of 0.983), and the guided fusion mechanism behaves logically by applying the strongest corrections during periods of high traffic volatility (from Figure 6 and Figure 7).

Future research should focus on several promising directions. First, the guided fusion mechanism, while proven effective, could be enhanced by incorporating more sophisticated attention mechanisms to dynamically weight the influence of different AVI correction events based on their reliability or the current traffic state. Second, the current model architecture could be extended to a full spatiotemporal graph neural network, allowing it to model network-wide traffic propagation more effectively. Furthermore, the current framework relies exclusively on traffic-related sensor data. To create a more comprehensive prediction system, a significant avenue for future work is the integration of external contextual factors. Variables such as adverse weather conditions (e.g., rainfall intensity), traffic incidents, and special events could be incorporated as additional features into the input sequence. This would allow the model to learn the complex, non-linear impacts of these external events on traffic dynamics, further improving its prediction accuracy and practical utility for advanced traffic management.