Feasibility of an AI-Enabled Smart Mirror Integrating MA-rPPG, Facial Affect, and Conversational Guidance in Realtime

Abstract

1. Introduction

- Technical Integration: We design and implement a modular smart mirror system incorporating validated tools for real-time physiological and emotional sensing.

- Real-Time Interaction: Our platform supports multimodal AI interaction with low-latency, bidirectional communication in both English and Korean, enabling an interactive smart mirror.

- Online MA-rPPG Innovation: Whereas prior MA-rPPG implementations operate offline on pre-recorded videos, we adapt the method for real-time inference through continuous webcam streaming and GPU acceleration.

2. Related Works

2.1. Remote Photoplethysmography (rPPG) for Ambient Health Monitoring

2.2. Deep Learning-Based Facial Emotion Recognition for Mental Health Monitoring

2.3. Conversational AI for Mental Health Interventions

2.4. Integration in Smart Mirror Healthcare Systems

3. System Development Overview

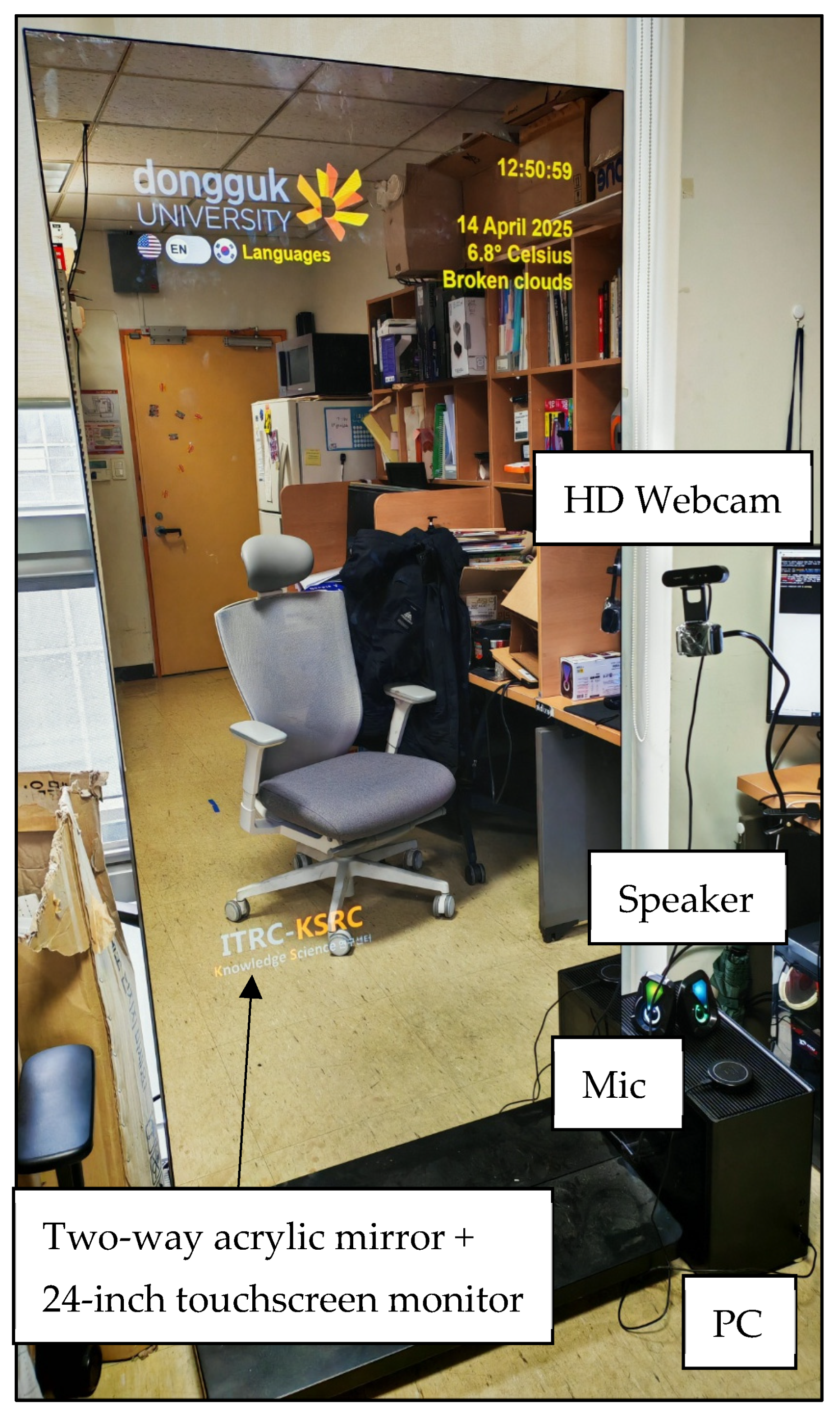

3.1. Hardware Setup

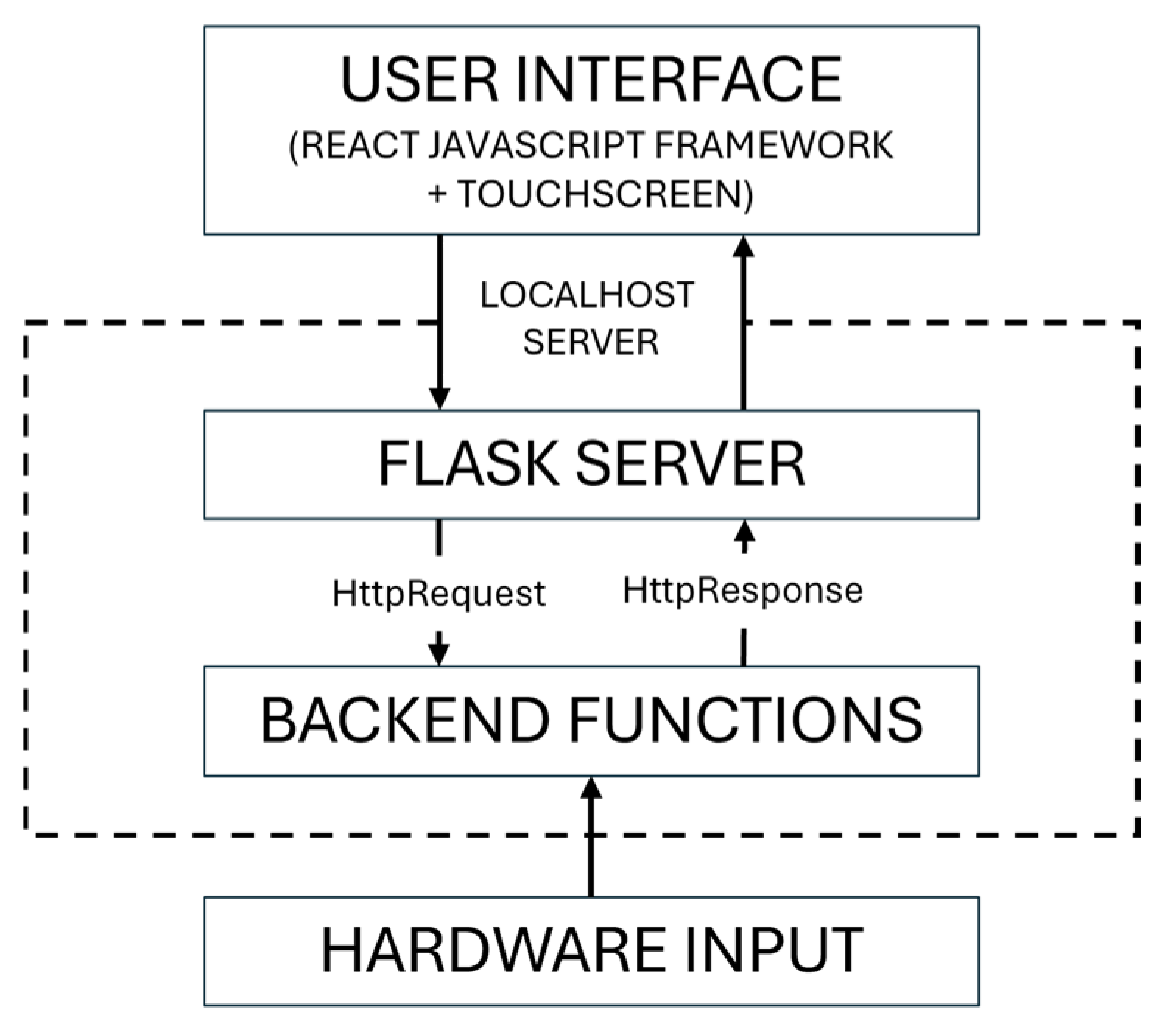

3.2. Integrated Hardware-to-GUI Pipeline Architecture

3.3. Smart Mirror Software Architecture

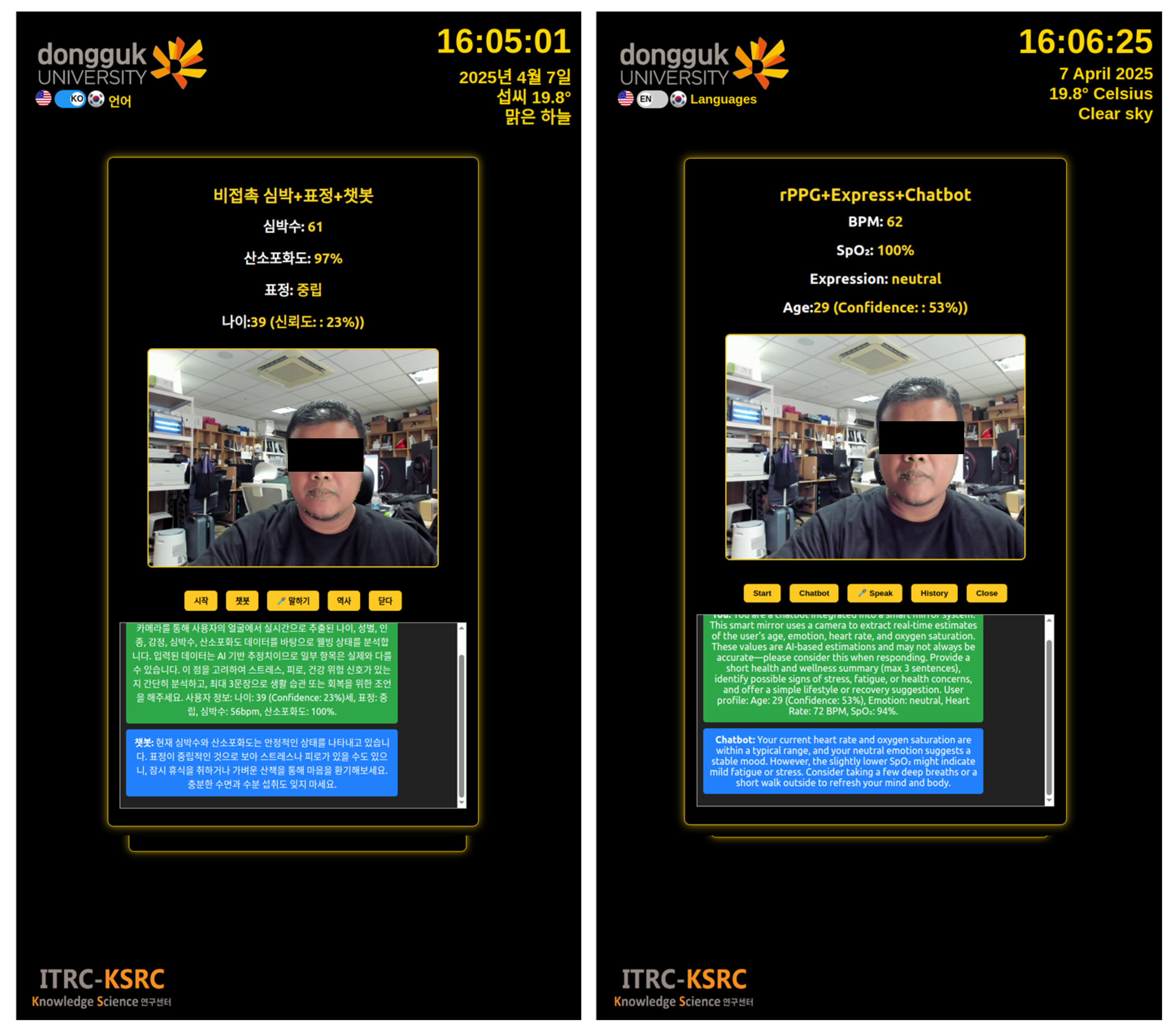

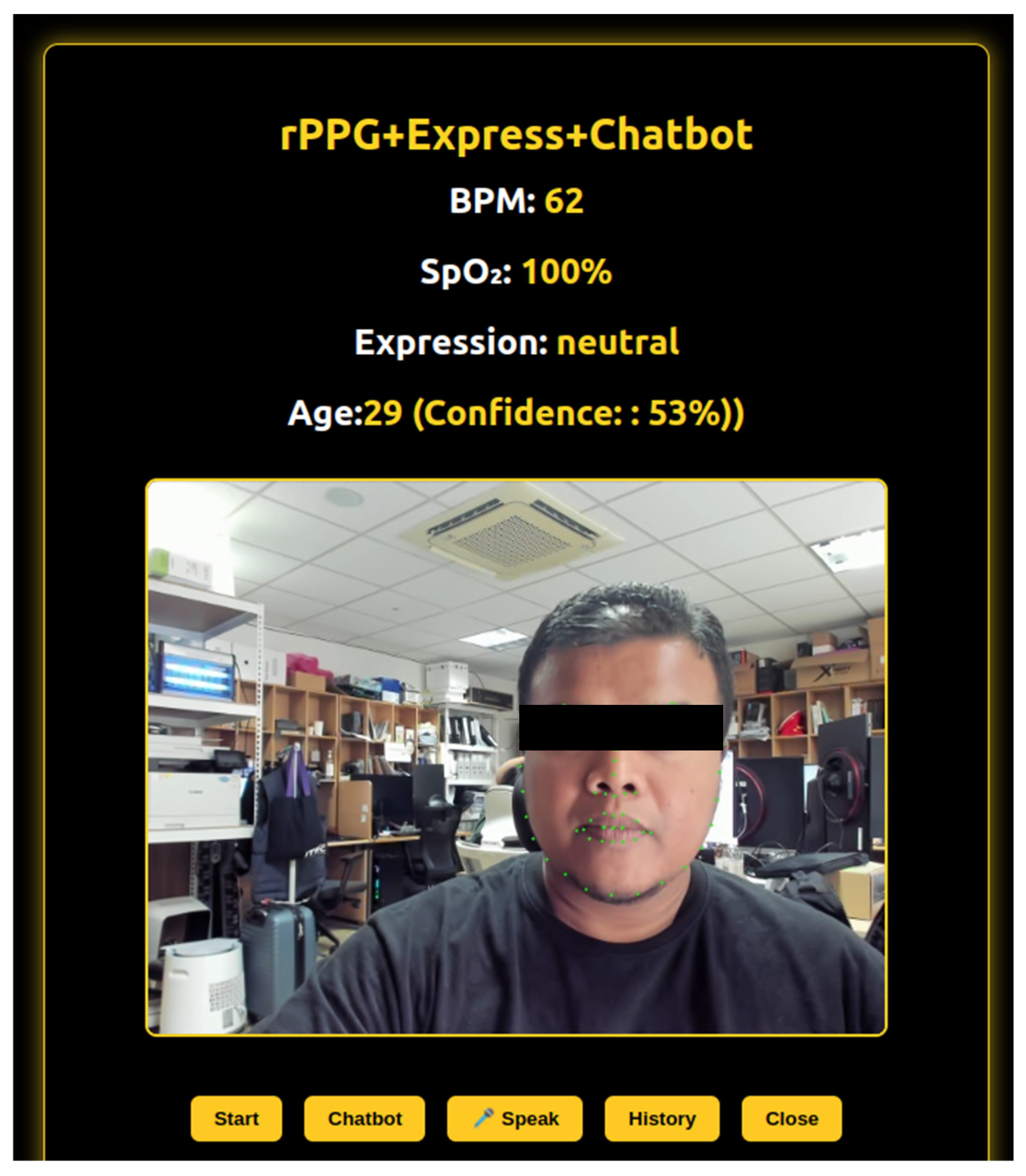

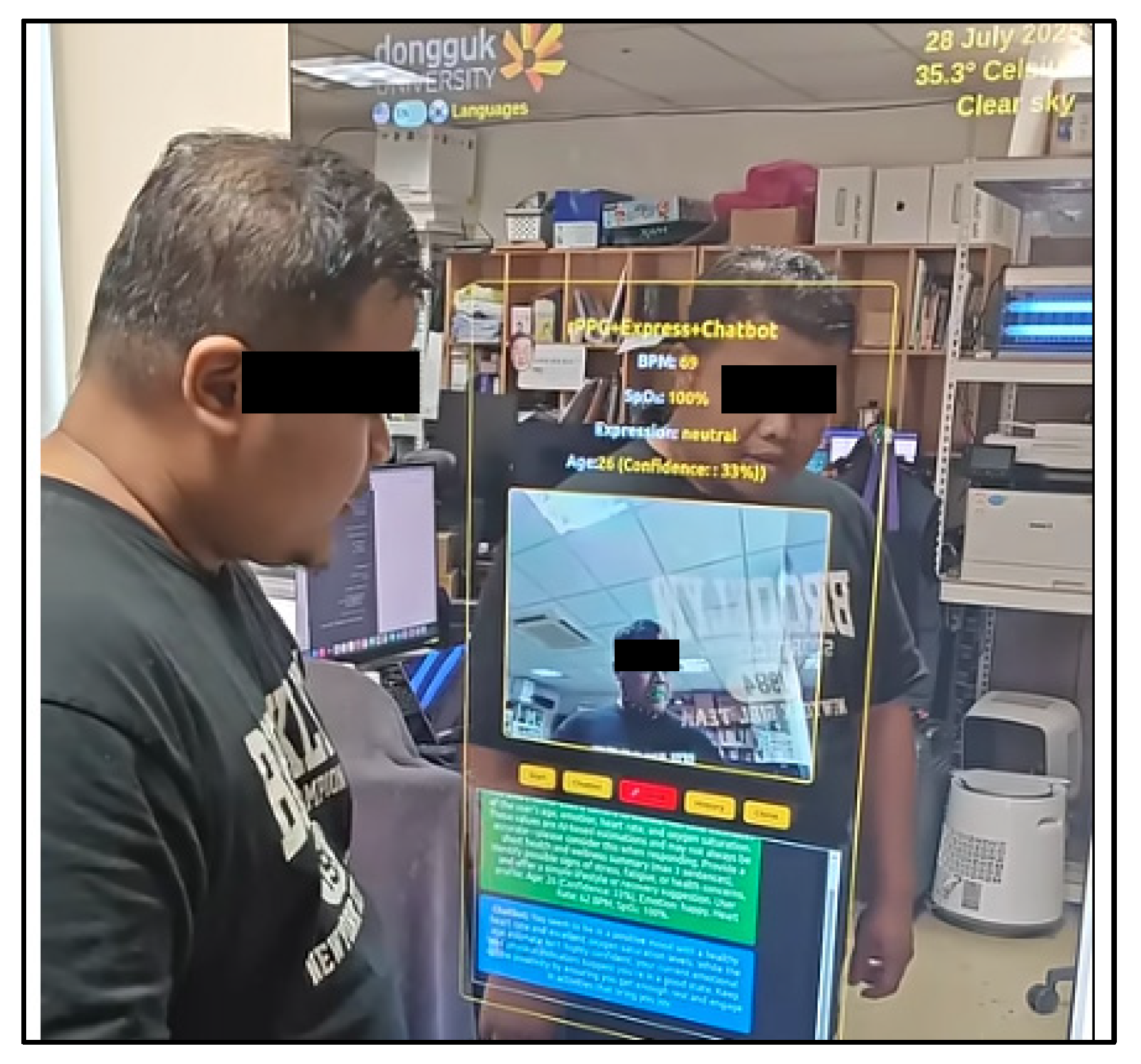

3.3.1. Smart Mirror Main GUI

3.3.2. Integrated Multimodal Feedback Submodule

3.3.3. Blood Oxygen Saturation (SpO2) Estimation

3.3.4. MA-rPPG Estimation Module

3.3.5. Emotion Detection Module

Face Detection and Alignment

- a = distance between the two eyes,

- b and c = distances from each eye to a reference point (e.g., nose tip or a midpoint below the eyes).
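The equation that combines a, b and c did not survive extraction. One common way to turn three such distances into an in-plane rotation angle is the law of cosines, θ = arccos((b² + c² − a²) / (2bc)) for the interior angle opposite side a. The sketch below assumes that reading and is not presented as the authors' formula. The helper `eye_roll_degrees` and its choice of reference point are hypothetical. It places the reference point level with one eye and directly below or above the other, so the triangle is right-angled there and the angle at the eye equals the tilt of the eye line. The sign follows the image's y axis.

```python
import math


def angle_opposite(opposite: float, side1: float, side2: float) -> float:
    """Law of cosines: interior angle (degrees) facing `opposite`,
    enclosed by the two sides `side1` and `side2`."""
    cos_theta = (side1 ** 2 + side2 ** 2 - opposite ** 2) / (2.0 * side1 * side2)
    # Clamp to [-1, 1] so floating-point rounding never breaks acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def eye_roll_degrees(left_eye, right_eye) -> float:
    """Hypothetical helper: tilt of the eye line via a right-angled triangle.
    Assumes the eyes differ in x; the reference point shares the left eye's y
    and the right eye's x."""
    (x1, y1), (x2, y2) = left_eye, right_eye
    ref = (x2, y1)
    a = math.dist(left_eye, right_eye)  # distance between the two eyes
    b = math.dist(left_eye, ref)        # left eye to reference point
    c = math.dist(right_eye, ref)       # right eye to reference point
    # The angle at the left eye faces side c and is enclosed by sides a and b.
    angle = angle_opposite(c, a, b)
    return angle if y2 >= y1 else -angle
```

For eyes at (0, 0) and (40, 30), the sides are a = 50, b = 40 and c = 30. This gives arccos(0.8) ≈ 36.87°, the same value as atan2(30, 40). The image is then rotated by that angle to level the eyes.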

Feature Representation via Deep CNN

Emotion Classification

3.3.6. Chatbot Interaction Module

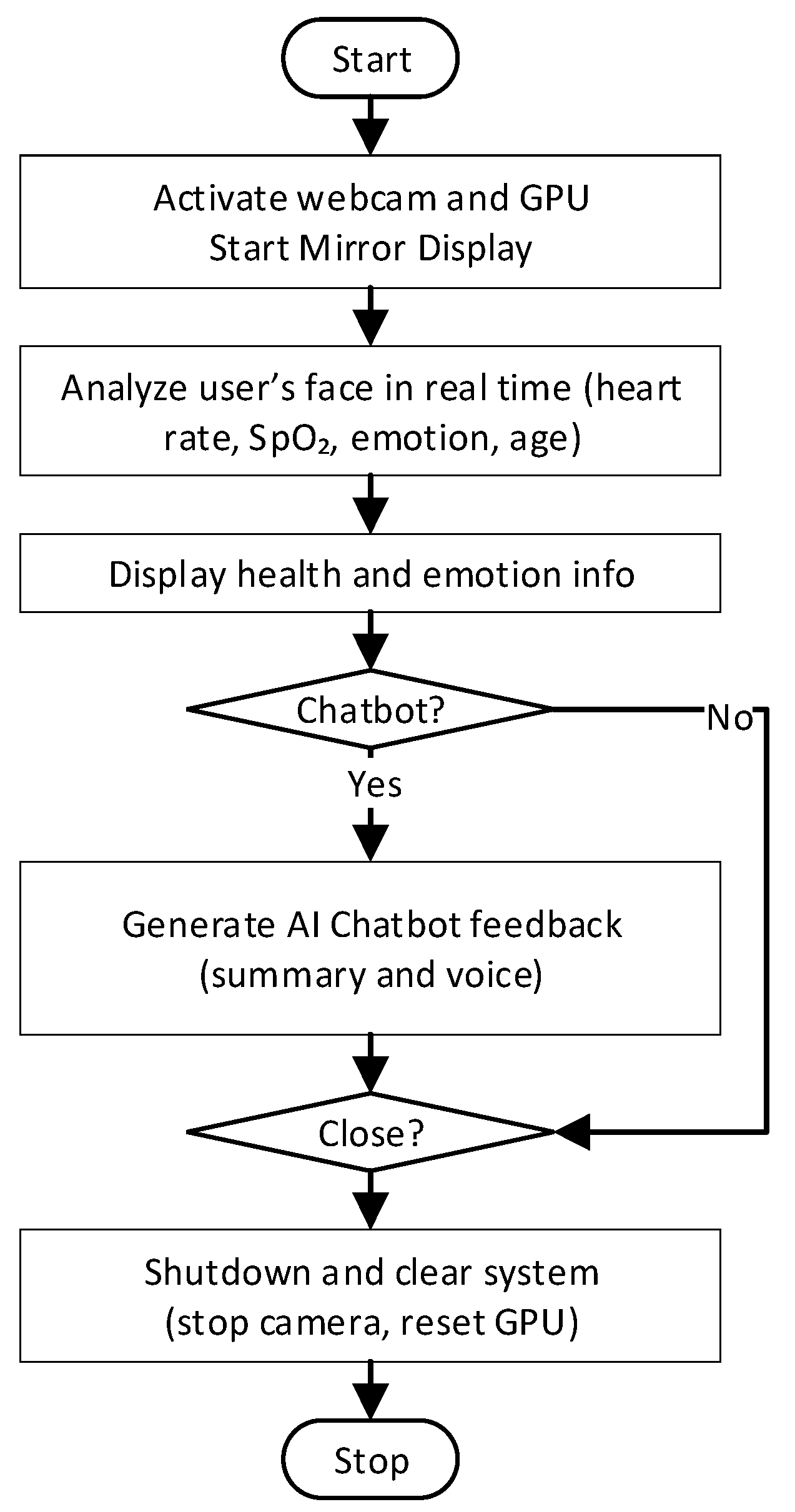

3.3.7. GUI and Programming Flow

4. Experimental Evaluation

4.1. Evaluation Protocols

- Hardware and Camera Specifications: All tests were performed on a desktop workstation (Ubuntu 20.04 LTS; NVIDIA RTX 4060 Ti GPU, NVIDIA Corporation, Santa Clara, CA, USA). A Logitech HD webcam was used at 640 × 480 resolution and 30 frames per second, positioned at eye level.

- Subject Distance and Pose: Participants were seated 0.8–1.0 m from the mirror and maintained a frontal pose without significant head rotation.

- Lighting Conditions: Experiments were conducted under typical office ambient lighting (300–350 lux), with no direct sunlight or additional studio lighting.

- Stabilization Period: A 30 s stabilization window was applied before each measurement for rPPG/SpO2 signal normalization, consistent with prior rPPG practice.

- Window Lengths: rPPG and SpO2 estimation used 2 s rolling windows for frame-level computation.

- Number of Trials and Subjects: Each subject completed three consecutive trials, and the results were averaged.

- Exclusion Criteria: Trials were discarded if the subject moved abruptly, left the camera frame, or if face-detection confidence dropped below 70%. Three trigger thresholds determined whether data were passed to the chatbot module for response generation:
  - a minimum face-detection confidence of 70%;
  - an rPPG window of at least 8 s with acceptable SNR;
  - at least one affective label extracted with >50% confidence.
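The trigger rule above is a conjunction of independent checks. The sketch below expresses it as a single gate. The threshold values come from the protocol. The `Snapshot` fields and the function name are hypothetical. The text does not define an "acceptable SNR", so that check is left to the caller as a boolean rather than invented here.

```python
from dataclasses import dataclass

# Thresholds as stated in the evaluation protocol.
MIN_FACE_CONFIDENCE = 0.70     # "minimum 70% face-detection confidence"
MIN_RPPG_WINDOW_S = 8.0        # "minimum 8 s rPPG window"
MIN_EMOTION_CONFIDENCE = 0.50  # "> 50% confidence" (strict inequality)


@dataclass
class Snapshot:
    face_confidence: float     # face-detector confidence in [0, 1]
    rppg_window_s: float       # seconds of rPPG signal accumulated so far
    rppg_snr_ok: bool          # caller's own SNR test; the criterion is not given in the text
    emotion_confidence: float  # confidence of the top affective label in [0, 1]


def ready_for_chatbot(s: Snapshot) -> bool:
    """Return True only when every trigger threshold is satisfied."""
    return (
        s.face_confidence >= MIN_FACE_CONFIDENCE
        and s.rppg_window_s >= MIN_RPPG_WINDOW_S
        and s.rppg_snr_ok
        and s.emotion_confidence > MIN_EMOTION_CONFIDENCE
    )
```

A snapshot with 0.9 face confidence, 10 s of signal, an acceptable SNR and a 0.6 emotion confidence passes. Dropping face confidence to 0.69 blocks the chatbot call.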

4.2. System Performance: Latency and GPU Utilization

4.3. Physiological Accuracy: Real-Time MA-rPPG vs. Contact PPG Sensor

4.4. Affective Detection Accuracy

4.5. Adaptive Chatbot Response Evaluation

| “You are a chatbot integrated into a smart mirror system. This smart mirror uses a camera to extract real-time estimates of the user’s age, emotion, heart rate, and oxygen saturation. These values are AI-based estimations and may not always be accurate—please consider this when responding. Provide a short health and wellness summary (max 3 sentences), identify possible signs of stress, fatigue, or health concerns, and offer a simple lifestyle or recovery suggestion.” |

| “User profile: Age: ${age}, Emotion: ${expression}, Heart Rate: ${bpm} BPM, SpO2: ${spo2}%.” |
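The user-profile line uses `${name}` placeholders. Python's `string.Template` reads that same syntax directly. The sketch below assumes the first quoted string is sent as the system message and the profile line as the user message, in the role/content list shape used by chat-completion APIs. The function names are hypothetical. Rounding the vitals to whole numbers is an illustrative choice, not something the text specifies.

```python
from string import Template

# Same wording and placeholders as the user-profile template above.
USER_PROFILE = Template(
    "User profile: Age: ${age}, Emotion: ${expression}, "
    "Heart Rate: ${bpm} BPM, SpO2: ${spo2}%."
)


def build_user_message(age: int, expression: str, bpm: float, spo2: float) -> str:
    """Fill the profile template; vitals are rounded (hypothetical choice)."""
    return USER_PROFILE.substitute(
        age=age, expression=expression, bpm=round(bpm), spo2=round(spo2)
    )


def build_messages(system_prompt: str, user_message: str) -> list[dict]:
    """Role/content message list for a chat-completion request."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]
```

For example, `build_user_message(25, "happy", 78.4, 97.6)` yields `User profile: Age: 25, Emotion: happy, Heart Rate: 78 BPM, SpO2: 98%.`, which matches the input profile of Case 1 in the response table.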

4.6. Functional Evaluation Across Emotion-Specific User Interactions

5. Discussion

5.1. Innovations in Real-Time Deployment

- Persistent GPU Threading for MA-rPPG: The backend initializes all core MA-rPPG model components (generator, keypoint detector, head pose estimator) once, inside a continuously running GPU worker thread (gpu_worker). This avoids repeated model instantiation and reduces latency.

- Live Video Capture and Processing: A dedicated frame acquisition thread (webcam_worker) captures facial input, performs live face detection, and feeds data directly into the inference pipeline, enabling responsive, frame-wise analysis.

- Dynamic Signal Estimation: The pipeline uses band-pass filtering (0.7–3.5 Hz, corresponding to 42–210 BPM) and peak detection to estimate heart rate and SpO2 from live video. This reduces the influence of motion artifacts and signal noise.

- Multimodal AI Fusion: Real-time facial expressions, demographic attributes, and physiological metrics are fused into a contextual summary. This triggers an interactive feedback loop via a GPT-4o-based mental health chatbot, with responses rendered both textually and audibly through text-to-speech synthesis.
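The signal-estimation bullet can be illustrated without third-party dependencies. The sketch below rests on several stated assumptions; none of them is the authors' implementation, and all function names are hypothetical:

- A single second-order RBJ-cookbook biquad stands in for the 0.7–3.5 Hz band-pass filter. The actual filter order and design are not specified.
- Heart rate is taken from the median interval between detected peaks.
- SpO2 uses the ratio of ratios of the red and blue channel means, a common choice for RGB cameras. Here AC is the standard deviation and DC is the mean of each channel.
- `a` and `b` are placeholder calibration constants. They must be fitted against a reference oximeter before the output means anything.

```python
import math
import statistics


def bandpass(signal, fs, low=0.7, high=3.5):
    """Second-order IIR band-pass (RBJ cookbook biquad); passband roughly low..high Hz."""
    f0 = math.sqrt(low * high)          # centre frequency (geometric mean of edges)
    q = f0 / (high - low)               # quality factor from the bandwidth
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0, b2 = alpha / a0, -alpha / a0    # b1 is zero for this band-pass form
    a1, a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
    out, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for x in signal:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x                  # shift input history
        y2, y1 = y1, y                  # shift output history
    return out


def detect_peaks(x, min_distance):
    """Local maxima at least `min_distance` samples apart (greedy, left to right)."""
    peaks = []
    for i in range(1, len(x) - 1):
        if x[i] > x[i - 1] and x[i] >= x[i + 1]:
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return peaks


def estimate_bpm(signal, fs, low=0.7, high=3.5):
    """Band-pass, find peaks, convert the median peak interval to beats per minute."""
    filtered = bandpass(signal, fs, low, high)
    # Peaks closer than one period of the fastest allowed rate are ignored.
    peaks = detect_peaks(filtered, min_distance=int(fs / high))
    if len(peaks) < 2:
        return None
    intervals = [b - a for a, b in zip(peaks, peaks[1:])]
    return 60.0 * fs / statistics.median(intervals)


def estimate_spo2(red_means, blue_means, a=100.0, b=5.0):
    """Ratio of ratios from per-frame ROI channel means; a and b are placeholders."""
    ratio = (statistics.pstdev(red_means) / statistics.mean(red_means)) / (
        statistics.pstdev(blue_means) / statistics.mean(blue_means)
    )
    return a - b * ratio
```

On a synthetic 1.2 Hz pulse sampled at 30 fps for 10 s, `estimate_bpm` recovers about 72 BPM. The median interval keeps the filter's start-up transient from skewing the result.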

5.2. Comparative Analysis of Existing Smart Mirror Systems

5.3. Design Considerations for Ambient and Human-Centered Use

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Video Demonstration

Appendix B. Pseudo-Code of Interfacing Implementation

| Algorithm A1 Backend Processing Pipeline for Integrated rPPG, Facial Analysis, and Chatbot Interaction |

    Input: webcam video stream
    Output: real-time BPM, SpO2, and facial attributes (emotion, age)
    Initialize:
        Load AI models: MA-rPPG, DeepFace
        Initialize queues and shared variables for inter-thread communication
        Start Flask API server

    Procedure MAIN():
        Start threads:
            THREAD WebcamWorker()
            THREAD GPUWorker()

    Procedure WebcamWorker():
        while not StopSignal:
            Capture frame from webcam
            Detect face and landmarks using dlib
            Annotate landmarks on frame
            Enqueue annotated frame into FrameQueue
            Sleep for one frame interval (≈33 ms at 30 fps)

    Procedure GPUWorker():
        Initialize buffers: bpm_buffer, spo2_buffer
        Initialize counter: frame_counter = 0
        while not StopSignal:
            Dequeue frame from FrameQueue
            Preprocess frame for model inference
            frame_counter += 1
            if frame_counter mod 5 == 0:
                Estimate BPM using MA-rPPG model:
                    Generate synthetic animation
                    Calculate rPPG signals
                    Apply bandpass filter
                    Detect peaks
                    Compute BPM
                Update BPM shared variable
            if frame_counter mod 15 == 0:
                Estimate SpO2 from recent ROI frames:
                    Extract RGB channel means
                    Calculate AC/DC ratios
                    Compute SpO2
                Update SpO2 shared variable
            Perform facial analysis with DeepFace every frame until thresholds are met:
                if age estimation incomplete:
                    Analyze age
                    Accumulate results (30 samples)
                    Update shared age result with the most frequent value
                if frame_counter mod 30 == 0:
                    Analyze emotion using DeepFace
                    Update shared emotion result

    Procedure FlaskAPI():
        Route /start:
            Initialize webcam capture
            Clear stop signal
            Start MAIN()
        Route /stop:
            Set stop signal
            Release webcam
            Clear GPU memory
            Reset all shared variables and buffers
        Route /result:
            Return JSON containing:
                - BPM
                - SpO2
                - Emotion
                - Age (with confidence)
        Route /video_feed:
            Stream JPEG frames from processed output queue
    Run FlaskAPI at host address (e.g., http://localhost:5004)
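The threading and cadence logic of Algorithm A1 can be exercised without a camera, GPU or model. The minimal sketch below uses Python's standard `threading`, `queue` and `collections.Counter`. It keeps the producer/consumer split and the per-frame schedule:

- BPM every 5 frames
- SpO2 every 15 frames
- emotion every 30 frames
- age accumulated over 30 samples and resolved by majority vote

Every model call is replaced by a counter. The sentinel-based shutdown, the `run` helper and the `"age"` field on each stand-in frame are hypothetical simplifications of the stop signal and the DeepFace age estimate.

```python
import queue
import threading
from collections import Counter

STOP = object()  # sentinel standing in for the stop signal


def webcam_worker(frames, frame_queue):
    """Producer: enqueue frames (dicts standing in for annotated images), then STOP."""
    for frame in frames:
        frame_queue.put(frame)
    frame_queue.put(STOP)


def gpu_worker(frame_queue, calls, age_samples):
    """Consumer: one persistent loop, so models would be loaded once, not per frame."""
    frame_counter = 0
    while True:
        frame = frame_queue.get()
        if frame is STOP:
            break
        frame_counter += 1
        if frame_counter % 5 == 0:
            calls["bpm"] += 1                 # placeholder for the MA-rPPG heart-rate step
        if frame_counter % 15 == 0:
            calls["spo2"] += 1                # placeholder for the AC/DC SpO2 step
        if len(age_samples) < 30:
            age_samples.append(frame["age"])  # placeholder for a per-frame age estimate
        if frame_counter % 30 == 0:
            calls["emotion"] += 1             # placeholder for the emotion step


def run(frames):
    """Run both workers to completion; return call counts and the voted age."""
    frame_queue = queue.Queue(maxsize=64)
    calls, age_samples = Counter(), []
    workers = [
        threading.Thread(target=webcam_worker, args=(frames, frame_queue)),
        threading.Thread(target=gpu_worker, args=(frame_queue, calls, age_samples)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    # Only gpu_worker writes calls/age_samples and they are read after join(),
    # so no lock is needed here; a live /result route reading them would need one.
    age = Counter(age_samples).most_common(1)[0][0] if age_samples else None
    return calls, age
```

Feeding 60 frames produces 12 BPM updates, 4 SpO2 updates and 2 emotion updates. That is the 5/15/30-frame schedule in A1, which at 30 fps means roughly 6, 2 and 1 updates per second.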

| Algorithm A2 Frontend Interaction Pipeline for Smart Mirror Interface |

    Input: real-time data from backend API
    Output: GUI display with real-time vital signs, facial attributes, and chatbot interaction
    Initialize:
        Set state variables: BPM, SpO2, Emotion, Age
        Initialize video feed URL
        Initialize message queue for chatbot
        Initialize status indicators

    Procedure MAIN():
        Display GUI:
            - Vital signs (BPM, SpO2)
            - Facial attributes (Emotion, Age)
            - Live webcam feed
            - Chat history
            - Interactive buttons (Start, Chatbot, Speak, Stop)

    Procedure handleStart():
        Send POST request to backend API to initiate processing
        Set video feed URL to backend webcam stream
        Start polling backend API for results at regular intervals (every 1 s)

    Procedure pollResult():
        Fetch results from backend API:
            - BPM
            - SpO2
            - Emotion
            - Age with confidence
        Update state variables accordingly
        if all data (BPM, SpO2, Emotion, Age) are valid:
            Set dataReady = true
        else:
            Set dataReady = false

    Procedure handleChatbot():
        if not dataReady:
            return
        Construct chatbot prompt with current state values: Age, Emotion, BPM, SpO2
        Send chatbot prompt to backend TTS API
        Receive chatbot response text and audio URL
        Display chatbot response in GUI chat history
        Play audio response from chatbot

    Procedure handleSpeak():
        Activate browser-based speech recognition:
            Set language based on user preference (English/Korean)
            Capture speech input and convert to text
        Send captured text to chatbot backend API
        Receive and display chatbot textual and audio response

    Procedure handleStop():
        Send request to backend to stop data acquisition
        Stop polling for results
        Clear video feed
        Reset all state variables
        Close application interface

    Display status messages:
        if any errors are encountered during interactions:
            Display user-friendly error message in GUI

    Run MAIN to render and manage real-time GUI interactions
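Algorithm A2's pollResult sets dataReady only when all four values are "valid" but does not define validity. The sketch below shows one hypothetical reading: each key must be present, non-empty and non-zero. It is written in Python for consistency with the backend sketches, although the real frontend runs in the browser. The JSON key names are assumptions. A1 only says that /result returns BPM, SpO2, emotion and age with confidence.

```python
import json

# Hypothetical key names for the JSON returned by the backend's /result route.
REQUIRED_KEYS = ("bpm", "spo2", "emotion", "age")


def parse_result(payload: str) -> tuple[dict, bool]:
    """Decode one polled /result response and compute dataReady.

    "Valid" is assumed to mean present, non-empty and non-zero;
    the pseudo-code does not define it further."""
    data = json.loads(payload)
    data_ready = all(data.get(key) not in (None, "", 0) for key in REQUIRED_KEYS)
    return data, data_ready
```

Under this reading, a response reporting 0 BPM keeps the Chatbot button inert until a non-zero estimate arrives on a later 1 s poll.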

Appendix C. List of Acronyms

| Acronym | Full Form | Description |
|---|---|---|
| AI | Artificial Intelligence | Computer systems designed to perform tasks that normally require human intelligence, such as learning and reasoning. |
| rPPG | Remote Photoplethysmography | A non-contact technique to estimate heart rate and other vital signs using video-based analysis of subtle skin color changes. |
| MA-rPPG | Motion-Augmented Remote Photoplethysmography | An enhanced rPPG method that uses motion-augmented training for more robust vital sign estimation. |
| SpO2 | Peripheral Oxygen Saturation | The fraction of oxygen-saturated hemoglobin relative to total hemoglobin in the blood, measured peripherally (e.g., by pulse oximetry). |
| PPG | Photoplethysmography | A contact-based optical technique to measure blood volume changes in the microvascular bed of tissue. |
| FER | Facial Emotion Recognition | AI-based analysis of human emotions from facial expressions. |
| CNN | Convolutional Neural Network | A deep learning model commonly used for analyzing visual data such as images and videos. |
| ROI | Region of Interest | A selected area in an image or video frame used for focused analysis or processing. |
| GUI | Graphical User Interface | The visual part of a software application that allows users to interact with the system through graphical elements. |
| API | Application Programming Interface | A set of rules and endpoints that enable software applications to communicate with each other. |
| BPM | Beats Per Minute | A unit used to measure heart rate or pulse. |
| MAE | Mean Absolute Error | A metric used to measure the average magnitude of errors between predicted and true values. |
| LoA | Limits of Agreement | A statistical range (typically the mean difference ± 1.96 SD) within which most differences between two measurement methods lie. |
| GPT-4o | Generative Pre-trained Transformer 4o | An advanced large language model developed by OpenAI, used for generating text and conversational AI responses. |
| TTS | Text-to-Speech | Technology that converts written text into spoken voice output. |
| STT | Speech-to-Text | Technology that converts spoken language into written text. |
| CBT | Cognitive Behavioral Therapy | A psychological treatment method that helps patients manage problems by changing patterns of thinking or behavior. |

References

1. Ghazal, T.M.; Hasan, M.K.; Alshurideh, M.T.; Alzoubi, H.M.; Ahmad, M.; Akbar, S.S.; Al Kurdi, B.; Akour, I.A. IoT for Smart Cities: Machine Learning Approaches in Smart Healthcare—A Review. Future Internet 2021, 13, 218.

2. Alboaneen, D.A.; Alsaffar, D.; Alateeq, A.; Alqahtani, A.; Alfahhad, A.; Alqahtani, B.; Alamri, R.; Alamri, L. Internet of Things Based Smart Mirrors: A Literature Review. In Proceedings of the 2020 3rd International Conference on Computer Applications & Information Security (ICCAIS), Riyadh, Saudi Arabia, 19–21 March 2020; pp. 1–6.

3. Bianco, S.; Celona, L.; Ciocca, G.; Marelli, D.; Napoletano, P.; Yu, S.; Schettini, R. A Smart Mirror for Emotion Monitoring in Home Environments. Sensors 2021, 21, 7453.

4. Yu, H.; Bae, J.; Choi, J.; Kim, H. LUX: Smart Mirror with Sentiment Analysis for Mental Comfort. Sensors 2021, 21, 3092.

5. Shaik, T.; Tao, X.; Higgins, N.; Li, L.; Gururajan, R.; Zhou, X.; Acharya, U.R. Remote patient monitoring using artificial intelligence: Current state, applications, and challenges. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1485.

6. Baker, S.; Xiang, W. Artificial Intelligence of Things for smarter healthcare: A survey of advancements, challenges, and opportunities. IEEE Commun. Surv. Tutor. 2023, 25, 1261–1293.

7. Dowthwaite, L.; Cruz, G.R.; Pena, A.R.; Pepper, C.; Jäger, N.; Barnard, P.; Hughes, A.-M.; das Nair, R.; Crepaz-Keay, D.; Cobb, S.; et al. Examining the Use of Autonomous Systems for Home Health Support Using a Smart Mirror. Healthcare 2023, 11, 2608.

8. Chaparro, J.D.; Ruiz, J.F.-B.; Romero, M.J.S.; Peño, C.B.; Irurtia, L.U.; Perea, M.G.; del Toro Garcia, X.; Molina, F.J.V.; Grigoleit, S.; Lopez, J.C. The SHAPES Smart Mirror Approach for Independent Living, Healthy and Active Ageing. Sensors 2021, 21, 7938.

9. Casalino, G.; Castellano, G.; Pasquadibisceglie, V.; Zaza, G. Improving a mirror-based healthcare system for real-time estimation of vital parameters. Inf. Syst. Front. 2025, 1–17, online first.

10. Fatima, H.; Imran, M.A.; Taha, A.; Mohjazi, L. Internet-of-Mirrors (IoM) for Connected Healthcare and Beauty: A Prospective Vision. Internet Things 2024, 28, 101415.

11. Paruchuri, A.; Liu, X.; Pan, Y.; Patel, S.; McDuff, D.; Sengupta, S. Motion Matters: Neural Motion Transfer for Better Camera Physiological Measurement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 4–8 January 2024; pp. 5933–5942.

12. Serengil, S.; Ozpinar, A. A Benchmark of Facial Recognition Pipelines and Co-Usability Performances of Modules. J. Inf. Technol. 2024, 17, 95–107.

13. Serengil, S.I.; Ozpinar, A. LightFace: A Hybrid Deep Face Recognition Framework. In Proceedings of the 2020 Innovations in Intelligent Systems and Applications Conference (ASYU), Istanbul, Turkey, 15–17 October 2020; pp. 23–27.

14. Serengil, S.I.; Ozpinar, A. HyperExtended LightFace: A Facial Attribute Analysis Framework. In Proceedings of the 2021 International Conference on Engineering and Emerging Technologies (ICEET), Istanbul, Turkey, 27–28 October 2021; pp. 1–4.

15. Henriquez, P.; Matuszewski, B.J.; Andreu-Cabedo, Y.; Bastiani, L.; Colantonio, S.; Coppini, G.; D’Acunto, M.; Favilla, R.; Germanese, D.; Giorgi, D.; et al. Mirror Mirror on the Wall… An Unobtrusive Intelligent Multisensory Mirror for Well-Being Status Self-Assessment and Visualization. IEEE Trans. Multimed. 2017, 19, 1467–1481.

16. Song, S.; Luo, Y.; Ronca, V.; Borghini, G.; Sagha, H.; Rick, V.; Mertens, A.; Gunes, H. Deep Learning-Based Assessment of Facial Periodic Affect in Work-Like Settings. In Computer Vision—ECCV 2022 Workshops; Lecture Notes in Computer Science; Karlinsky, L., Michaeli, T., Nishino, K., Eds.; Springer: Cham, Switzerland, 2023; Volume 13805.

17. Manicka Prabha, M.; Jegadeesan, S.; Jayavathi, S.D.; Vinoth Rajkumar, G.; Nirmal Jothi, J.; Santhana Krishnan, R. Revolutionizing Home Connectivity with IoT-Enabled Smart Mirrors for Internet Browsing and Smart Home Integration. In Proceedings of the 2024 5th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Madurai, India, 7–8 March 2024; pp. 683–689.

18. Öncü, S.; Torun, F.; Ülkü, H.H. AI-Powered Standardized Patients: Evaluating ChatGPT-4o’s Impact on Clinical Case Management in Intern Physicians. BMC Med. Educ. 2025, 25, 278.

19. Luo, D.; Liu, M.; Yu, R.; Liu, Y.; Jiang, W.; Fan, Q.; Kuang, N.; Gao, Q.; Yin, T.; Zheng, Z. Evaluating the Performance of GPT-3.5, GPT-4, and GPT-4o in the Chinese National Medical Licensing Examination. Sci. Rep. 2025, 15, 14119.

20. Bazzari, A.H.; Bazzari, F.H. Assessing the Ability of GPT-4o to Visually Recognize Medications and Provide Patient Education. Sci. Rep. 2024, 14, 26749.

21. Leng, Y.; He, Y.; Madgamo, C.; Vranceanu, A.-M.; Ritchie, C.S.; Mukerji, S.S.; Moura, L.M.V.R.; Dickson, J.R.; Blacker, D.; Das, S. Evaluating GPT’s Capability in Identifying Stages of Cognitive Impairment from Electronic Health Data. In Proceedings of the Machine Learning for Health (ML4H) Symposium 2024, Vancouver, BC, Canada, 15–16 December 2024.

22. Zhu, Q.; Wong, C.-W.; Lazri, Z.M.; Chen, M.; Fu, C.-H.; Wu, M. A Comparative Study of Principled rPPG-Based Pulse Rate Tracking Algorithms for Fitness Activities. IEEE Trans. Biomed. Eng. 2025, 72, 152–164.

23. Antink, C.H.; Lyra, S.; Paul, M.; Yu, X.; Leonhardt, S. A Broader Look: Camera-Based Vital Sign Estimation across the Spectrum. Yearb. Med. Inform. 2019, 28, 102–114.

24. Haugg, F.; Elgendi, M.; Menon, C. Effectiveness of Remote PPG Construction Methods: A Preliminary Analysis. Bioengineering 2022, 9, 485.

25. Gudi, A.; Bittner, M.; Lochmans, R.; van Gemert, J. Efficient Real-Time Camera Based Estimation of Heart Rate and Its Variability. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1570–1579.

26. Ontiveros, R.C.; Elgendi, M.; Menon, C. A Machine Learning-Based Approach for Constructing Remote Photoplethysmogram Signals from Video Cameras. Commun. Med. 2024, 4, 109.

27. Boccignone, G.; Conte, D.; Cuculo, V.; D’Amelio, A.; Grossi, G.; Lanzarotti, R. An Open Framework for Remote-PPG Methods and Their Assessment. IEEE Access 2020, 8, 216083–216101.

28. Liu, I.; Ni, S.; Peng, K. Enhancing the Robustness of Smartphone Photoplethysmography: A Signal Quality Index Approach. Sensors 2020, 20, 1923.

29. Canal, F.Z.; Müller, T.R.; Matias, J.C.; Scotton, G.G.; de Sa Junior, A.R.; Pozzebon, E.; Sobieranski, A.C. A survey on facial emotion recognition techniques: A state-of-the-art literature review. Inf. Sci. 2022, 582, 593–617.

30. Hans, A.S.A.; Rao, S. A CNN-LSTM Based Deep Neural Networks for Facial Emotion Detection in Videos. Int. J. Adv. Signal Image Sci. 2021, 7, 11–20.

31. Lyons, M.J.; Akamatsu, S.; Kamachi, M.; Gyoba, J. Coding Facial Expressions with Gabor Wavelets. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 200–205.

32. Lundqvist, D.; Flykt, A.; Öhman, A. The Karolinska Directed Emotional Faces (KDEF); Department of Clinical Neuroscience, Psychology Section; Karolinska Institutet: Stockholm, Sweden, 1998; Available online: https://www.kdef.se (accessed on 27 July 2025).

33. Huang, Z.-Y.; Chiang, C.-C.; Chen, J.-H.; Chen, Y.-C.; Chung, H.-L.; Cai, Y.-P.; Hsu, H.-C. A Study on Computer Vision for Facial Emotion Recognition. Sci. Rep. 2023, 13, 8425.

34. Akhand, M.A.H.; Roy, S.; Siddique, N.; Kamal, M.A.S.; Shimamura, T. Facial Emotion Recognition Using Transfer Learning in the Deep CNN. Electronics 2021, 10, 1036.

35. Elsheikh, R.A.; Mohamed, M.A.; Abou-Taleb, A.M.; Ata, M.M. Improved facial emotion recognition model based on a novel deep convolutional structure. Sci. Rep. 2024, 14, 29050.

36. Vanhée, L.; Andersson, G.; Garcia, D.; Sikström, S. The Rise of Artificial Intelligence for Cognitive Behavioral Therapy: A Bibliometric Overview. Appl. Psychol. Health Well Being 2025, 17, e70033.

37. Bangari, A.; Rani, V. AI-Powered Mental Health Support Chatbot. Int. J. Res. Publ. Rev. 2025, 6, 2385–2394.

38. Danieli, M.; Ciulli, T.; Mousavi, S.M.; Riccardi, G. A Participatory Design of Conversational Artificial Intelligence Agents for Mental Healthcare Application. JMIR Form. Res. 2025, in press.

39. Yeh, P.L.; Kuo, W.C.; Tseng, B.L.; Chou, W.J.; Yen, C.F. Does the AI-Driven Chatbot Work? Effectiveness of the Woebot App in Reducing Anxiety and Depression in Group Counseling Courses and Student Acceptance of Technological Aids. Curr. Psychol. 2025, in press.

40. Na, H. CBT-LLM: A Chinese Large Language Model for Cognitive Behavioral Therapy-Based Mental Health Question Answering. arXiv 2024, arXiv:2403.16008.

41. Rahsepar Meadi, M.; Sillekens, T.; Metselaar, S.; van Balkom, A.J.; Bernstein, J.S.; Batelaan, N. Exploring the Ethical Challenges of Conversational AI in Mental Health Care: Scoping Review. JMIR Ment. Health 2025, 12, e60432.

42. Baracoda. Baracoda Unveils BMind, the World’s First Smart Mirror for Mental Wellness. Available online: https://baracoda.com/resources/press/baracoda-unveils-bmind-smart-mirror-for-mental-wellness (accessed on 7 January 2024).

43. Kong, L.; Zhao, Y.; Dong, L.; Jian, Y.; Jin, X.; Li, B.; Feng, Y.; Liu, M.; Liu, X.; Wu, H. Non-Contact Detection of Oxygen Saturation Based on Visible Light Imaging Device Using Ambient Light. Opt. Express 2013, 21, 17464–17471.

44. PPG Sensor. Available online: https://www.seeedstudio.com/Grove-Ear-clip-Heart-Rate-Sensor.html (accessed on 16 September 2025).

| Module | GPU Usage (%) | Latency or Response Time (ms) |
|---|---|---|
| Real-time rPPG | 18–22 | ~160–210 |
| Real-time SpO2 | 14–18 | ~180–240 |
| DeepFace (Emotion) | 25–30 | ~350–450 |
| DeepFace (Age) | 28–32 | ~500–600 |
| Chatbot API Response | Idle | ~1200–1800 |

| Case | Input Profile | GPT-4o Response Summary |
|---|---|---|
| 1 | Age: 25, Emotion: happy, HR: 78 BPM, SpO2: 98% | “You appear to be in good physical and emotional health. No immediate signs of stress detected. Keep up your current routine and stay hydrated.” |
| 2 | Age: 42, Emotion: angry, HR: 105 BPM, SpO2: 94% | “Elevated heart rate and emotional tension suggest possible stress. Consider taking deep breaths or a short walk. Try to relax and reduce any triggers.” |
| 3 | Age: 67, Emotion: neutral, HR: 85 BPM, SpO2: 89% | “Your oxygen levels are slightly low. You may be feeling mild fatigue. Ensure proper ventilation and consider medical advice if symptoms persist.” |
| 4 | Age: 30, Emotion: sad, HR: 72 BPM, SpO2: 97% | “You may be feeling emotionally low. It’s okay to take a break and talk to someone you trust. A short walk or connecting with a friend could help.” |
| 5 | Age: 52, Emotion: fearful, HR: 112 BPM, SpO2: 92% | “High heart rate and fear may indicate anxiety or acute stress. Practice calming techniques like controlled breathing. You’re not alone, consider reaching out.” |

| User | Relevance (1–5), Happy/Sad/Neutral/Fear/Angry | Empathy (1–5), Happy/Sad/Neutral/Fear/Angry | Suggestion (1–5), Happy/Sad/Neutral/Fear/Angry |
|---|---|---|---|
| User 1 | 5/5/5/5/4 | 5/5/5/5/4 | 5/5/5/5/3 |
| User 2 | 5/5/5/5/5 | 5/5/5/5/5 | 5/5/5/5/5 |
| User 3 | 4/5/5/5/5 | 3/5/5/5/5 | 4/5/5/5/5 |
| User 4 | 4/5/5/5/5 | 3/5/5/5/5 | 4/5/5/5/5 |
| User 5 | 5/4/5/5/5 | 5/3/5/5/5 | 5/4/5/5/5 |
| User 6 | 4/5/5/5/5 | 3/5/5/5/5 | 3/5/5/5/5 |
| User 7 | 4/4/4/5/3 | 3/3/4/5/4 | 4/3/3/5/4 |
| User 8 | 5/5/5/5/5 | 5/5/5/5/5 | 5/5/5/5/5 |
| User 9 | 5/5/5/5/5 | 5/5/5/5/5 | 5/5/5/5/5 |
| User 10 | 5/5/5/5/5 | 5/5/5/5/5 | 5/5/5/5/5 |

| Suggestion Phrase | Frequency |
|---|---|
| Short walk | 19 |
| Mindfulness | 17 |
| Deep breathing | 15 |
| Practice breathing | 6 |
| Meditation | 4 |
| Short break | 3 |
| Relaxing activity | 3 |
| Step outside | 3 |

| Features | Original MA-rPPG | Our Approach |
|---|---|---|
| Processing Mode | Batch (offline) | Real-time continuous (streaming) |
| Input Source | Pre-recorded video | Live webcam feed |
| Latency | High (post-processing) | Low (immediate output) |
| Inference Engine | Single-run inference | Persistent, threaded GPU inference |
| Physiological Metrics | Heart rate (static) | Heart rate (dynamic, real-time) |
| Deployment | Laboratory | User-interactive smart mirror |
| User Experience | Non-interactive | Interactive, continuous engagement |

| References | Modalities | Real-Time | Emotion Detection | AI Chatbot | Strengths and Limitations |
|---|---|---|---|---|---|
| Bianco et al. (2021) [3] | Emotion monitoring | Yes | Yes | No | Facial and vocal emotion sensing, but no physiological sensing or AI feedback |
| Yu et al. (2021) [4] | Sentiment analysis | Yes | Yes | No | Korean-language sentiment model, but no physiological or multimodal fusion |
| Chaparro et al. (2021) [8] | Ambient Assisted Living (AAL), rehab support | Partial | No | No | Elder-focused rehab tools, but no real-time emotion or chatbot integration |
| Casalino et al. (2025) [9] | rPPG (HR, SpO2) | Yes | No | No | Low-cost real-time rPPG mirror, but no mental health or affective feedback |
| Proposed System | rPPG + emotion + chatbot | Yes | Yes | Yes | Full-stack integration of real-time physiological and affective sensing with AI chatbot feedback |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kasno, M.A.; Jung, J.-W. Feasibility of an AI-Enabled Smart Mirror Integrating MA-rPPG, Facial Affect, and Conversational Guidance in Realtime. Sensors 2025, 25, 5831. https://doi.org/10.3390/s25185831

Kasno MA, Jung J-W. Feasibility of an AI-Enabled Smart Mirror Integrating MA-rPPG, Facial Affect, and Conversational Guidance in Realtime. Sensors. 2025; 25(18):5831. https://doi.org/10.3390/s25185831

Chicago/Turabian Style: Kasno, Mohammad Afif, and Jin-Woo Jung. 2025. "Feasibility of an AI-Enabled Smart Mirror Integrating MA-rPPG, Facial Affect, and Conversational Guidance in Realtime" Sensors 25, no. 18: 5831. https://doi.org/10.3390/s25185831

APA Style: Kasno, M. A., & Jung, J.-W. (2025). Feasibility of an AI-Enabled Smart Mirror Integrating MA-rPPG, Facial Affect, and Conversational Guidance in Realtime. Sensors, 25(18), 5831. https://doi.org/10.3390/s25185831