A Dual-Segmentation Framework for the Automatic Detection and Size Estimation of Shrimp

Abstract

1. Introduction

- This study introduces a novel framework that integrates instance and semantic segmentation to efficiently extract the centerline of individual shrimp without requiring any post-processing step. Additionally, our proposed framework is robust to variations in the pose or orientation of the shrimp.

- We propose an enhancement to the baseline semantic segmentation model that improves the accuracy of shrimp centerline prediction. The proposed model outperforms the baseline models (UNet, UNet++, LinkNet, and DeepLabV3) in precision, recall, F1-score, and mIoU, and it prevents burrs (spurious side branches) from forming along the centerline.

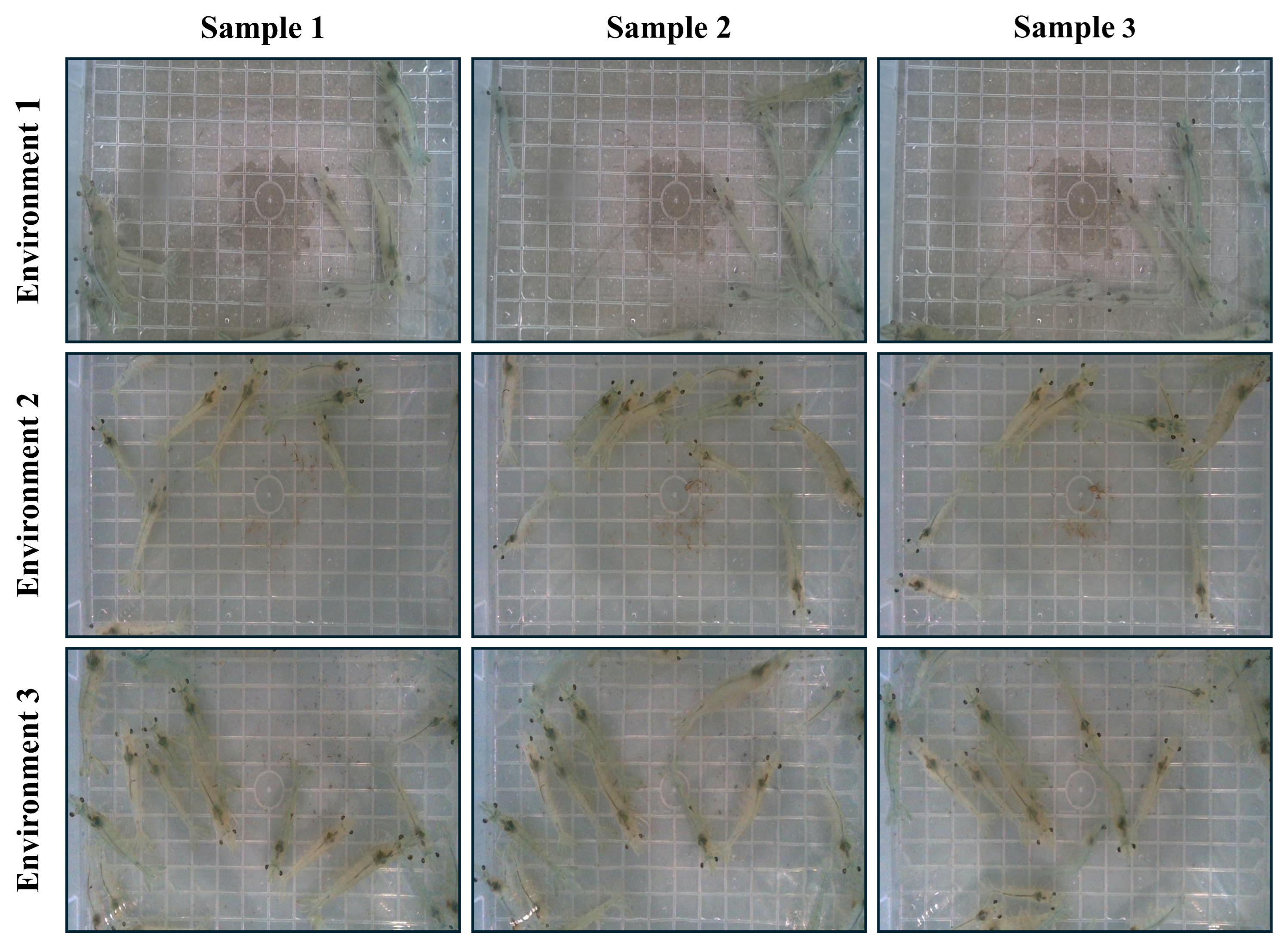

- Furthermore, our study presents two comprehensive datasets designed to advance research in shrimp instance segmentation and size estimation. The datasets comprise high-quality images collected from three diverse environments, each containing varying numbers of shrimp.
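The sketch below illustrates the dual-segmentation idea. It assumes the instance module returns a label map (0 for background, 1..N for individual shrimp) and the semantic module returns a binary, one-pixel-wide centerline mask. Intersecting the two assigns centerline pixels to each shrimp, so each animal is measured separately whatever its pose. Several choices here are illustrative assumptions rather than the authors' implementation:

- the length measure, a geodesic end-to-end distance over 8-connected pixels found with two Dijkstra sweeps;
- the helper names `lengths_per_instance`, `centerline_length_px` and `farthest_pixel`.

```python
import heapq
import math

import numpy as np

# 8-connected neighbour offsets with their step lengths: 1 (orthogonal) or sqrt(2) (diagonal).
NEIGHBOURS = [(dy, dx, math.hypot(dy, dx))
              for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]


def farthest_pixel(pixels: set, start: tuple) -> tuple:
    """Dijkstra from `start` over the pixel set; return (farthest pixel, geodesic distance)."""
    dist = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        d, (y, x) = heapq.heappop(heap)
        if d > dist[(y, x)]:
            continue  # stale entry: a shorter path to this pixel was already found
        for dy, dx, step in NEIGHBOURS:
            nxt = (y + dy, x + dx)
            if nxt in pixels and d + step < dist.get(nxt, math.inf):
                dist[nxt] = d + step
                heapq.heappush(heap, (d + step, nxt))
    far = max(dist, key=dist.get)
    return far, dist[far]


def centerline_length_px(line_mask: np.ndarray) -> float:
    """End-to-end geodesic length (pixels) of a thin centerline via two Dijkstra sweeps.

    Only the connected component containing the top-most, then left-most pixel is measured.
    """
    pixels = {(int(y), int(x)) for y, x in zip(*np.nonzero(line_mask))}
    if not pixels:
        return 0.0
    end_a, _ = farthest_pixel(pixels, min(pixels))  # sweep 1: reach one end of the line
    _, length = farthest_pixel(pixels, end_a)        # sweep 2: walk to the opposite end
    return length


def lengths_per_instance(instance_labels: np.ndarray, centerline: np.ndarray) -> dict:
    """Clip the semantic centerline mask to each instance mask and measure each shrimp."""
    lengths = {}
    for label in np.unique(instance_labels):
        if label == 0:  # assumption: 0 marks background
            continue
        own_line = (instance_labels == label) & centerline.astype(bool)
        lengths[int(label)] = centerline_length_px(own_line)
    return lengths
```

The lengths are in pixels. Converting them to millimetres requires a calibrated image scale.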

2. Materials and Methods

2.1. Experimental Setup

2.2. Dataset

2.3. Proposed Framework Overview

2.4. Instance Segmentation of Shrimp

Model Selection for Instance Segmentation of Shrimp

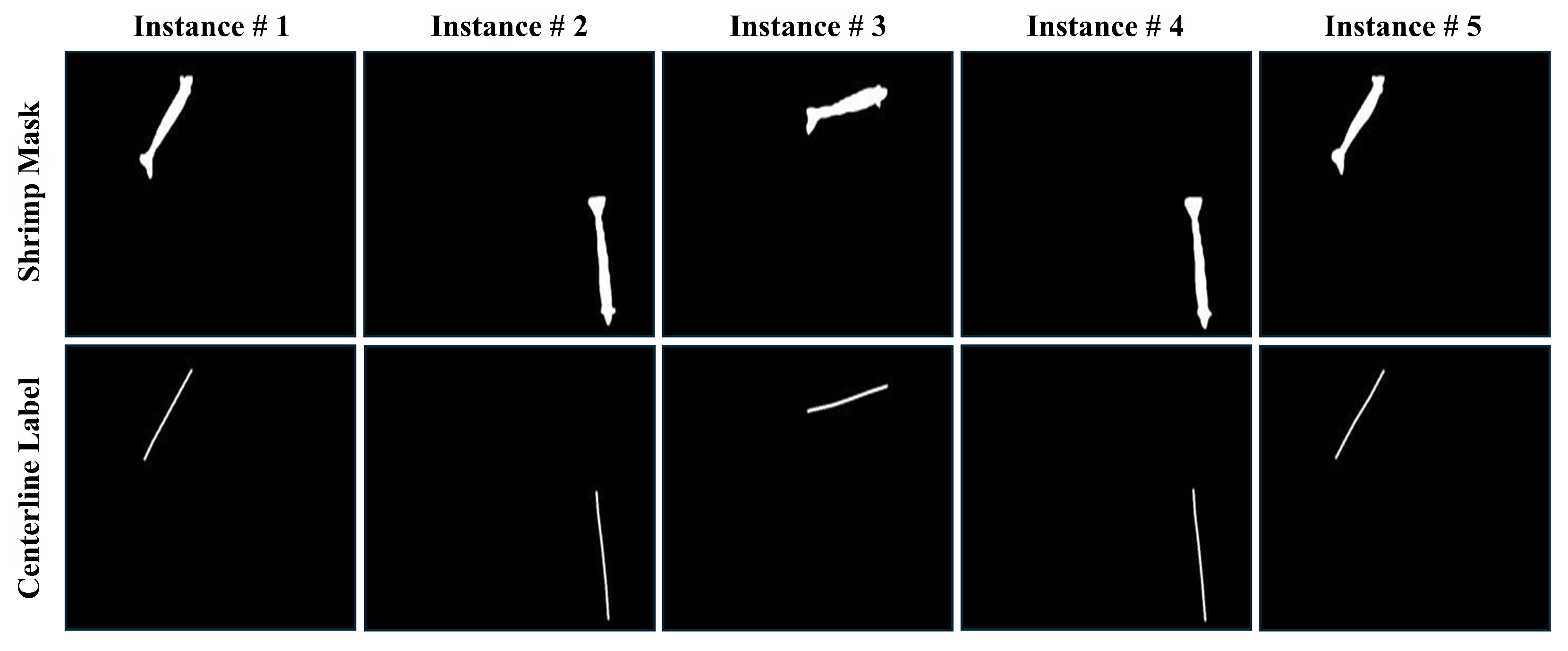

2.5. Shrimp Centerline Prediction via Semantic Segmentation

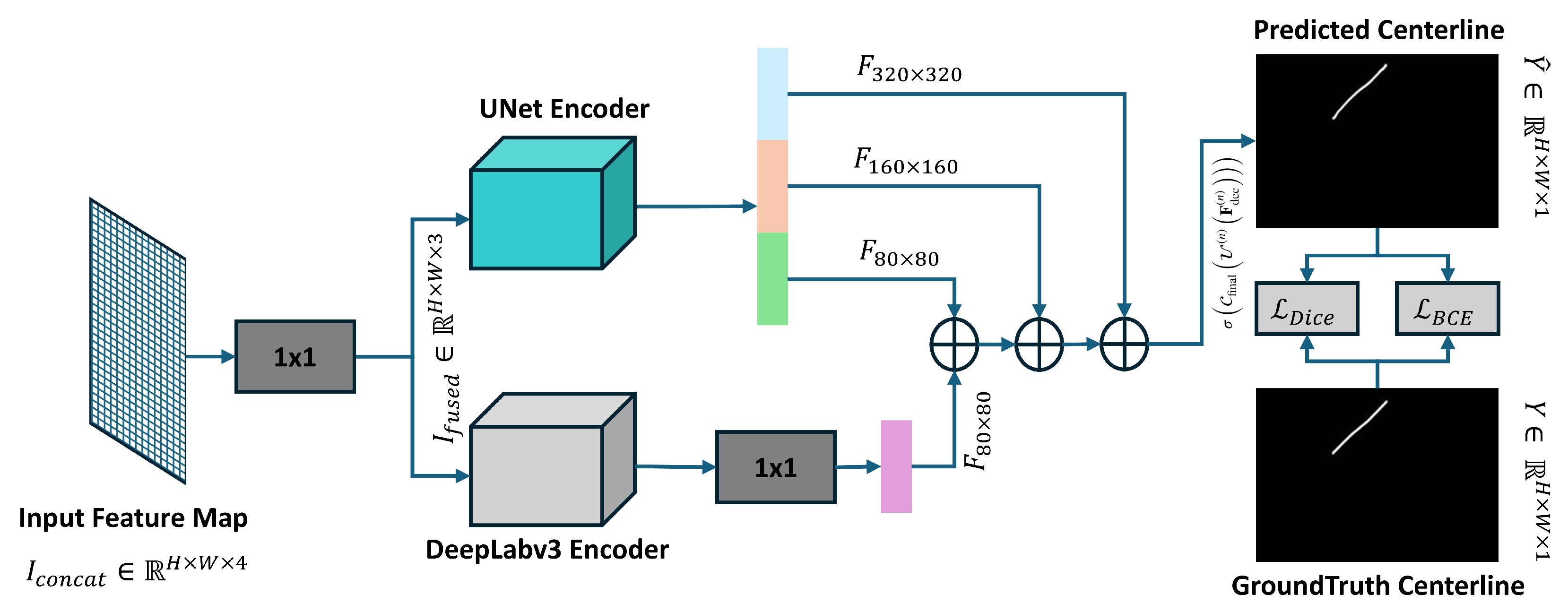

2.5.1. Proposed Model for Shrimp Centerline Predictive Module

2.5.2. Centerline Extraction for Size Estimation

3. Results

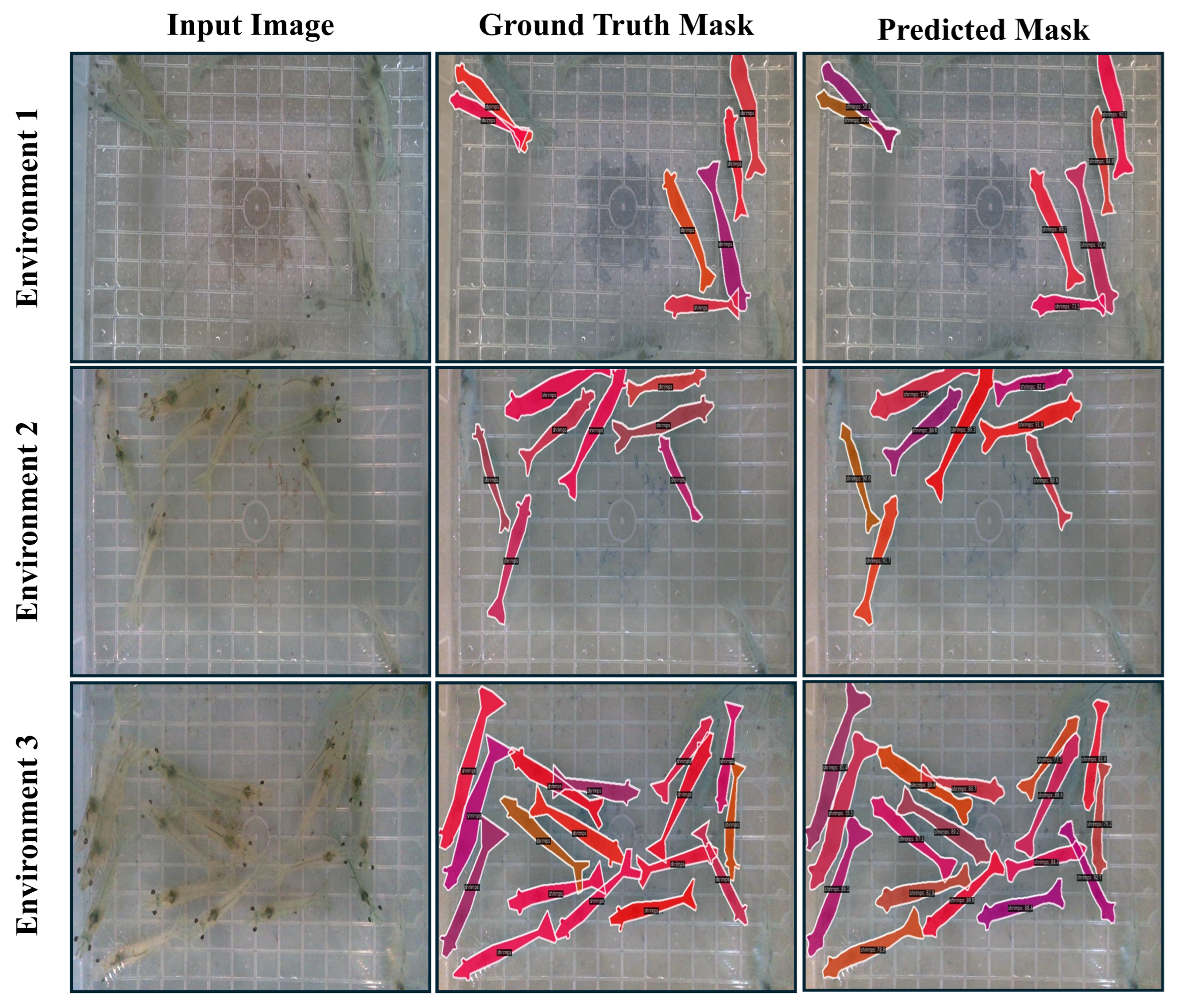

3.1. Instance Segmentation Module

3.1.1. Implementation Details

3.1.2. Experimental Results

3.2. Centerline Predictive Module

3.2.1. Implementation Details

3.2.2. Experimental Results

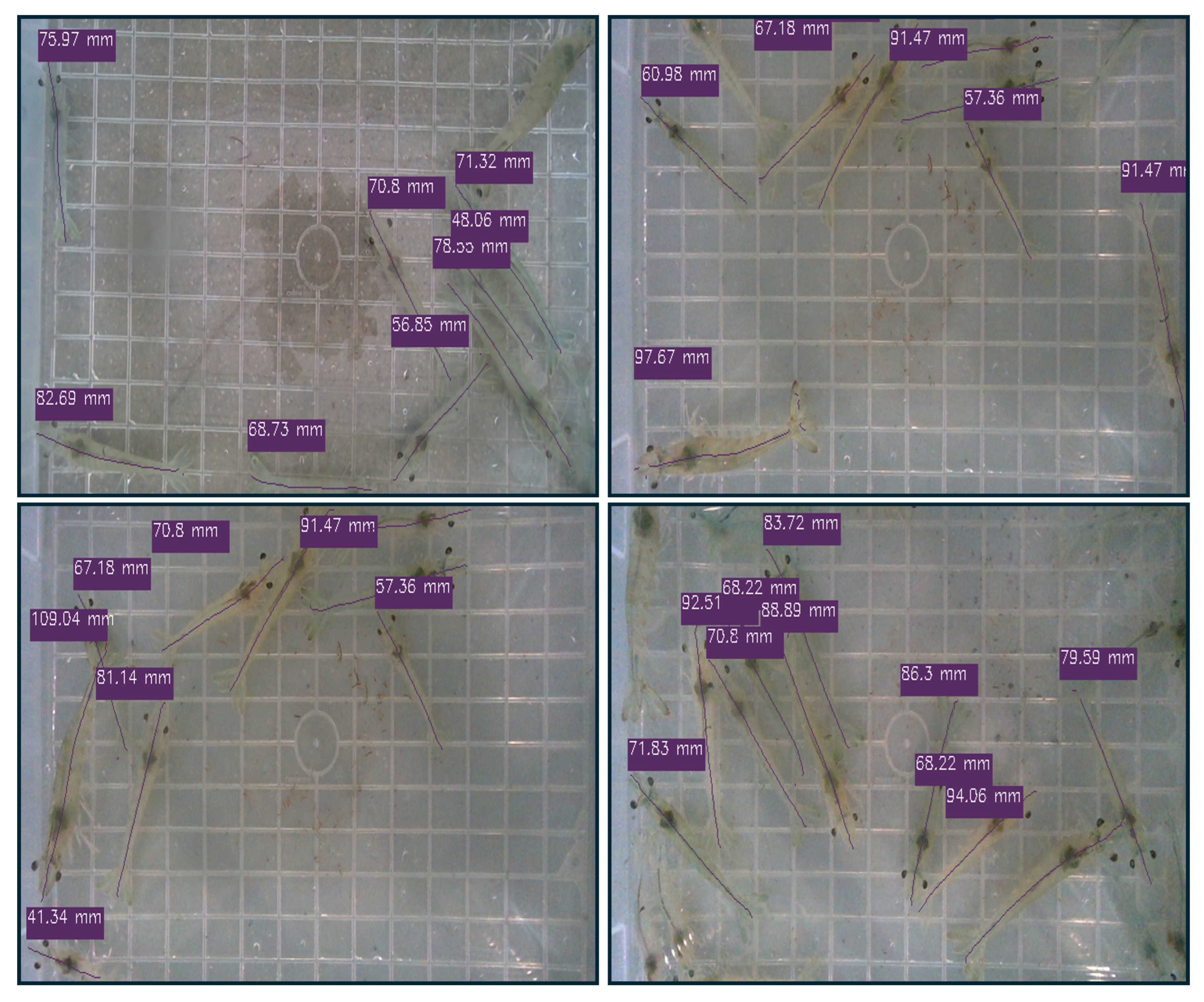

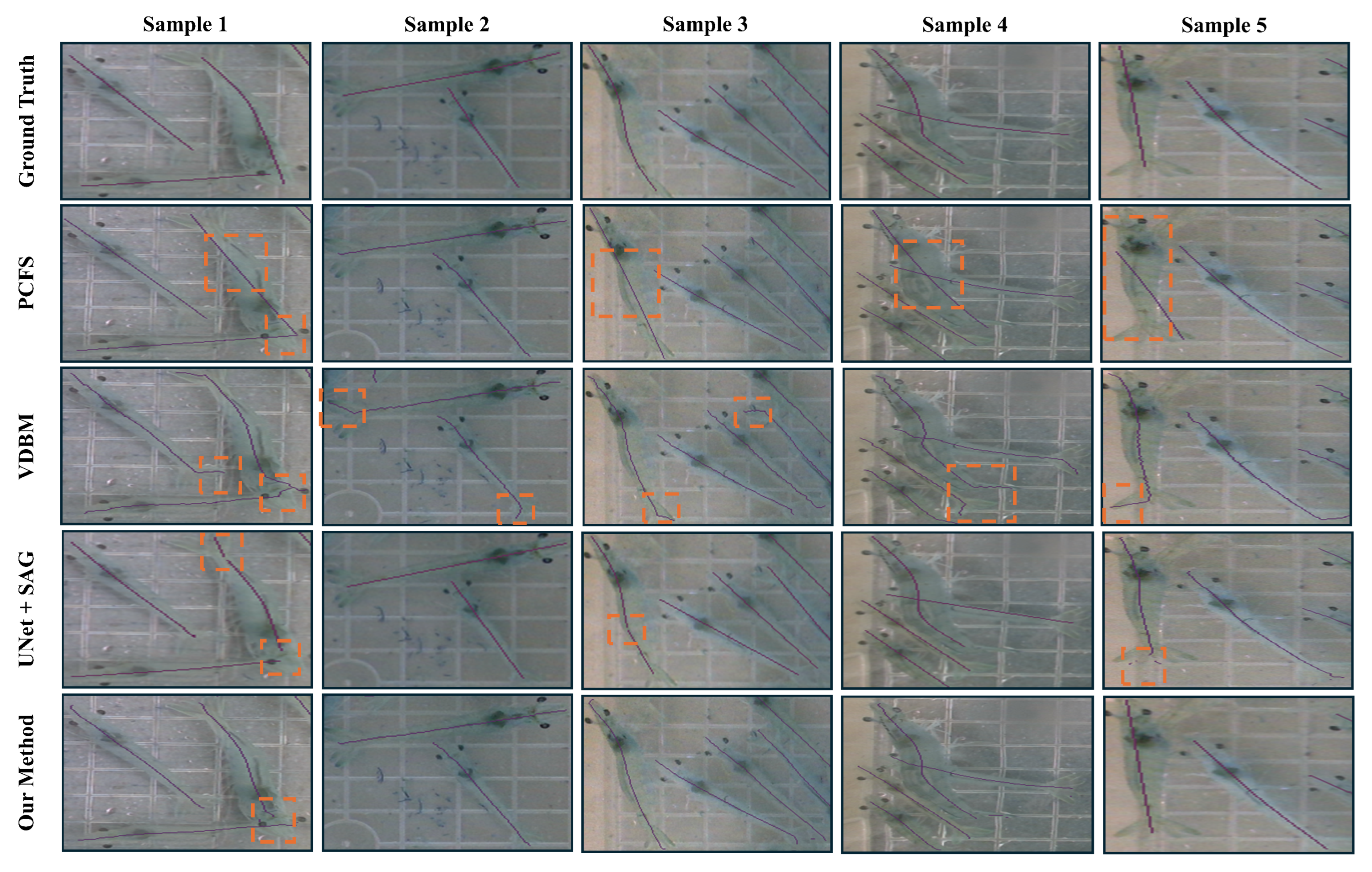

3.3. Extraction of Centerline Skeleton for Precise Shrimp Size Estimation

3.4. Comparative Study of Methods for Measuring Shrimp Size

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mandal, A.; Singh, P. Global Scenario of Shrimp Industry: Present Status and Future Prospects. In Shrimp Culture Technology: Farming, Health Management and Quality Assurance; Springer: Singapore, 2025; pp. 1–23.

- Ran, X.; Liu, Y.; Pan, H.; Wang, J.; Duan, Q. Shrimp phenotypic data extraction and growth abnormality identification method based on instance segmentation. Comput. Electron. Agric. 2025, 229, 109701.

- Wang, G.; Van Stappen, G.; De Baets, B. Automated Artemia length measurement using U-shaped fully convolutional networks and second-order anisotropic Gaussian kernels. Comput. Electron. Agric. 2020, 168, 105102.

- Puig-Pons, V.; Muñoz-Benavent, P.; Espinosa, V.; Andreu-García, G.; Valiente-González, J.M.; Estruch, V.D.; Ordóñez, P.; Pérez-Arjona, I.; Atienza, V.; Mèlich, B.; et al. Automatic Bluefin Tuna (Thunnus thynnus) biomass estimation during transfers using acoustic and computer vision techniques. Aquac. Eng. 2019, 85, 22–31.

- Saleh, A.; Hasan, M.M.; Raadsma, H.W.; Khatkar, M.S.; Jerry, D.R.; Azghadi, M.R. Prawn morphometrics and weight estimation from images using deep learning for landmark localization. Aquac. Eng. 2024, 106, 102391.

- Shi, C.; Wang, Q.; He, X.; Zhang, X.; Li, D. An automatic method of fish length estimation using underwater stereo system based on LabVIEW. Comput. Electron. Agric. 2020, 173, 105419.

- Hao, M.; Yu, H.; Li, D. The measurement of fish size by machine vision-a review. In Proceedings of the International Conference on Computer and Computing Technologies in Agriculture, Dongying, China, 19–21 October 2016; pp. 15–32.

- Heng, Z.; Hoon, K.S.; Cheol, K.S.; Won, K.C.; Won, K.S.; Hyongsuk, K. Instance segmentation of shrimp based on contrastive learning. Appl. Sci. 2023, 13, 6979.

- Zhou, H.; Kim, S.; Kim, S.C.; Kim, C.W.; Kang, S.W. Size estimation for shrimp using deep learning method. Smart Media J. 2023, 12, 112.

- Ramírez-Coronel, F.J.; Rodríguez-Elías, O.M.; Esquer-Miranda, E.; Pérez-Patricio, M.; Pérez-Báez, A.J.; Hinojosa-Palafox, E.A. Non-Invasive Fish Biometrics for Enhancing Precision and Understanding of Aquaculture Farming through Statistical Morphology Analysis and Machine Learning. Animals 2024, 14, 1850.

- Cong, X.; Tian, Y.; Quan, J.; Qin, H.; Li, Q.; Li, R. Machine vision-based estimation of body size and weight of pearl gentian grouper. Aquac. Int. 2024, 32, 5325–5351.

- Hsieh, C.L.; Chang, H.Y.; Chen, F.H.; Liou, J.H.; Chang, S.K.; Lin, T.T. A simple and effective digital imaging approach for tuna fish length measurement compatible with fishing operations. Comput. Electron. Agric. 2011, 75, 44–51.

- Kim, D.; Lee, K.; Kim, J. A prototype to measure rainbow trout’s length using image processing. J. Korean Soc. Fish. Aquat. Sci. 2020, 53, 123–130.

- Tseng, C.H.; Hsieh, C.L.; Kuo, Y.F. Automatic measurement of the body length of harvested fish using convolutional neural networks. Biosyst. Eng. 2020, 189, 36–47.

- Zhao, Y.p.; Sun, Z.Y.; Du, H.; Bi, C.W.; Meng, J.; Cheng, Y. A novel centerline extraction method for overlapping fish body length measurement in aquaculture images. Aquac. Eng. 2022, 99, 102302.

- Zhou, M.; Shen, P.; Zhu, H.; Shen, Y. In-water fish body-length measurement system based on stereo vision. Sensors 2023, 23, 6325.

- Dalvit Carvalho da Silva, R.; Soltanzadeh, R.; Figley, C.R. Automated Coronary Artery Tracking with a Voronoi-Based 3D Centerline Extraction Algorithm. J. Imaging 2023, 9, 268.

- Zhang, T.Y.; Suen, C.Y. A fast parallel algorithm for thinning digital patterns. Commun. ACM 1984, 27, 236–239.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. Rtmdet: An empirical study of designing real-time object detectors. arXiv 2022, arXiv:2212.07784.

- Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. Solov2: Dynamic and fast instance segmentation. Adv. Neural Inf. Process. Syst. 2020, 33, 17721–17732.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. Yolact: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9157–9166.

- Tian, Z.; Shen, C.; Chen, H. Conditional convolutions for instance segmentation. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 282–298.

- Cheng, T.; Wang, X.; Chen, S.; Zhang, W.; Zhang, Q.; Huang, C.; Zhang, Z.; Liu, W. Sparse instance activation for real-time instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 4433–4442.

- Chen, X.; Yang, C.; Mo, J.; Sun, Y.; Karmouni, H.; Jiang, Y.; Zheng, Z. CSPNeXt: A new efficient token hybrid backbone. Eng. Appl. Artif. Intell. 2024, 132, 107886.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2024, arXiv:2305.09972.

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Granada, Spain, 20 September 2018; pp. 3–11.

- Chaurasia, A.; Culurciello, E. Linknet: Exploiting encoder representations for efficient semantic segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Zhao, G.; Li, Y.; Wang, L. A Voronoi-diagram-based method for centerline extraction in 3D industrial line-laser reconstruction using a graph-centrality-based pruning algorithm. Opt. Lasers Eng. 2018, 107, 129–139.

| Model | | | | Params (M) | FPS |
|---|---|---|---|---|---|
| SOLOv2 | 0.899 | 0.724 | 0.593 | 46.2 | 44 |
| YOLACT | 0.783 | 0.461 | 0.437 | 34.7 | 43 |
| CondInst | 0.934 | 0.663 | 0.574 | 37.5 | 42 |
| SparseInst | 0.836 | 0.428 | 0.432 | 42.6 | 43 |
| YOLOv8-m | 0.931 | 0.735 | 0.630 | 27.2 | 55 |
| RTMDet-m | 0.960 | 0.795 | 0.631 | 34.2 | 58 |

| Model | Precision | Recall | F1-Score | mIoU | Param (M) | FPS |
|---|---|---|---|---|---|---|
| UNet | 0.839 | 0.847 | 0.843 | 0.729 | 4.4 | 164 |
| UNet++ | 0.864 | 0.893 | 0.868 | 0.784 | 4.6 | 127 |
| LinkNet | 0.826 | 0.863 | 0.844 | 0.731 | 2.1 | 160 |
| DeepLabV3 | 0.842 | 0.882 | 0.860 | 0.754 | 2.1 | 169 |
| Our Proposed Model | 0.866 | 0.900 | 0.883 | 0.791 | 2.1 | 150 |
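The accuracy metrics in the table above can be computed per pixel from a predicted and a ground-truth centerline mask. The sketch below is only an illustration. It assumes binary masks. It also assumes that mIoU averages the IoU of the background and centerline classes, which this section does not state explicitly. `binary_mask_metrics` and `f1_from_pr` are hypothetical helper names.

```python
import numpy as np


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def binary_mask_metrics(pred: np.ndarray, target: np.ndarray) -> dict:
    """Pixel-level precision, recall, F1 and mIoU for one binary centerline mask pair."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    tp = int(np.sum(pred & target))    # centerline pixels found
    fp = int(np.sum(pred & ~target))   # background pixels labelled as centerline
    fn = int(np.sum(~pred & target))   # centerline pixels missed
    tn = int(np.sum(~pred & ~target))  # background pixels kept as background
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou_line = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    iou_background = tn / (tn + fp + fn) if tn + fp + fn else 1.0
    # Assumption: mIoU = mean IoU over the two classes (background, centerline).
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1_from_pr(precision, recall),
        "miou": (iou_line + iou_background) / 2,
    }
```

As a consistency check, `f1_from_pr(0.866, 0.900)` evaluates to about 0.8827. This rounds to the 0.883 F1-score reported for the proposed model.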

| Model | MAE (px) | MAE (mm) | RMSE (px) | RMSE (mm) | FPS |
|---|---|---|---|---|---|
| PCFM | 84.76 | 44.61 | 105.95 | 55.76 | 10 |
| VDBM | 96.27 | 50.67 | 120.34 | 63.34 | 0.05 |
| UNet+SAG | 57.40 | 30.21 | 71.75 | 37.21 | 3.2 |
| Our Framework | 19.44 | 10.23 | 24.30 | 12.79 | 5.14 |
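In most entries of this comparison, the pixel and millimetre columns differ by a factor of about 1.9 (for instance, 84.76 px / 44.61 mm ≈ 1.90). This suggests a fixed scale of roughly 1.9 pixels per millimetre. That scale is an inference from the table, not a calibration value given in this section. The sketch below computes MAE and RMSE between predicted and reference shrimp lengths and converts pixel errors to millimetres under that assumed scale. `PX_PER_MM`, `mae_rmse`, and `px_to_mm` are hypothetical names.

```python
import math

# Assumption: ~1.9 px per mm, inferred from the ratio of the px and mm columns above.
PX_PER_MM = 1.9


def mae_rmse(predicted, reference):
    """Return (MAE, RMSE) between predicted and reference lengths in the same unit."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("expected two non-empty sequences of equal length")
    errors = [p - r for p, r in zip(predicted, reference)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse


def px_to_mm(value_px: float, px_per_mm: float = PX_PER_MM) -> float:
    """Convert a pixel length (or a pixel-valued MAE/RMSE) to millimetres."""
    return value_px / px_per_mm
```

MAE and RMSE both scale linearly with the unit. Converting the final errors therefore gives the same result as converting each length first. For example, `px_to_mm(19.44)` ≈ 10.23 mm, which matches the framework's reported MAE.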

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Waqar, M.M.; Ali, H.; Zhou, H.; Mohamed, H.G.; Kim, S.C.; Strzelecki, M. A Dual-Segmentation Framework for the Automatic Detection and Size Estimation of Shrimp. Sensors 2025, 25, 5830. https://doi.org/10.3390/s25185830
