Dual-Path CSDETR: Cascade Stochastic Attention with Object-Centric Priors for High-Accuracy Fire Detection

Abstract

1. Introduction

2. Related Works

3. Preliminaries

3.1. Bayesian Attention Modules

3.2. Cascade-DETR

4. Dual-Path Cascade Stochastic DETR

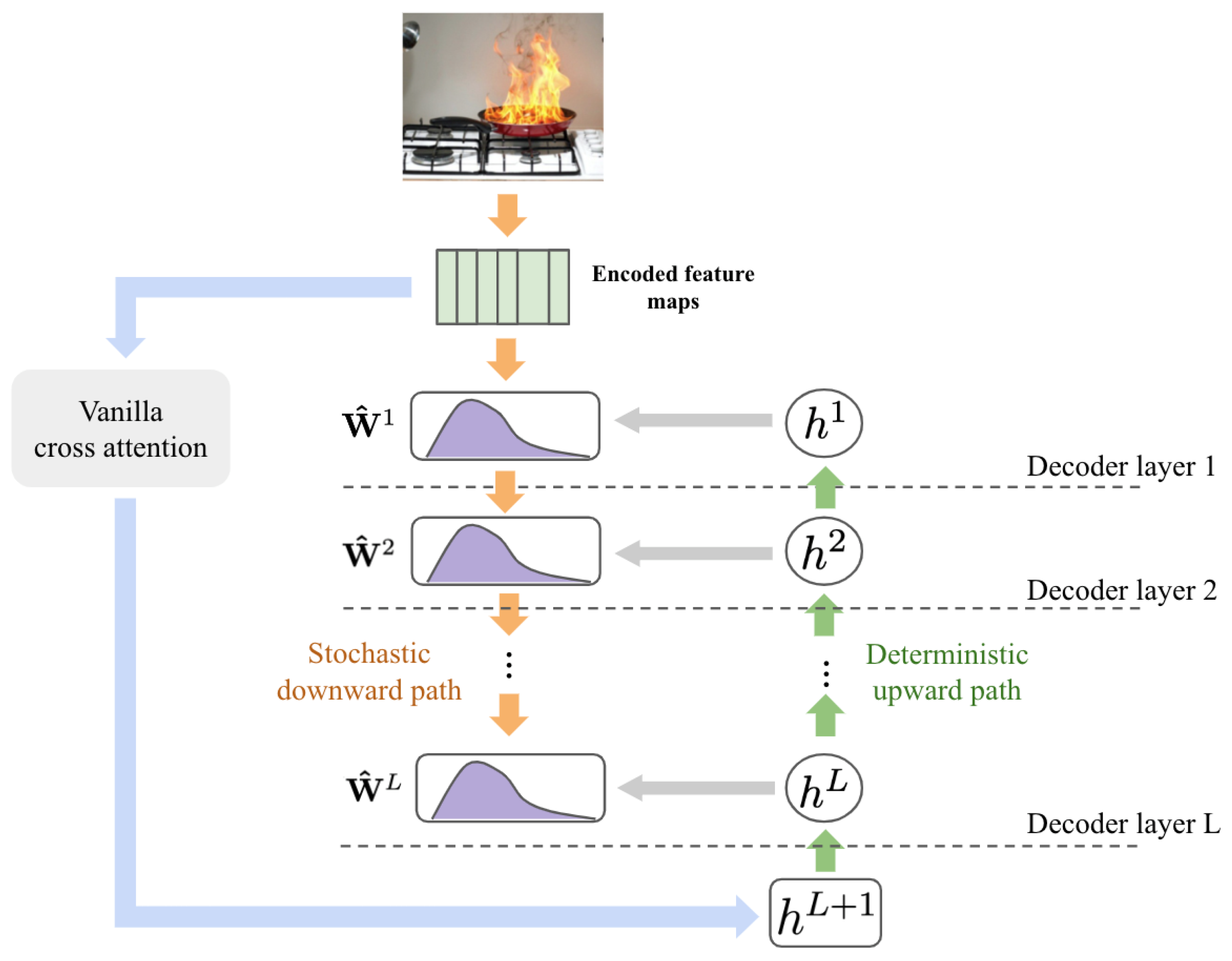

4.1. Cascade Stochastic Attention Layers

4.2. Dual-Path CSDETR Architecture

- Dual-Path Architecture

- Object-Centric Prior-Based Attention Refinement

4.3. Training and Inference

5. Experiments

5.1. Datasets and Evaluation Metrics

5.2. Comparison with State-of-the-Art

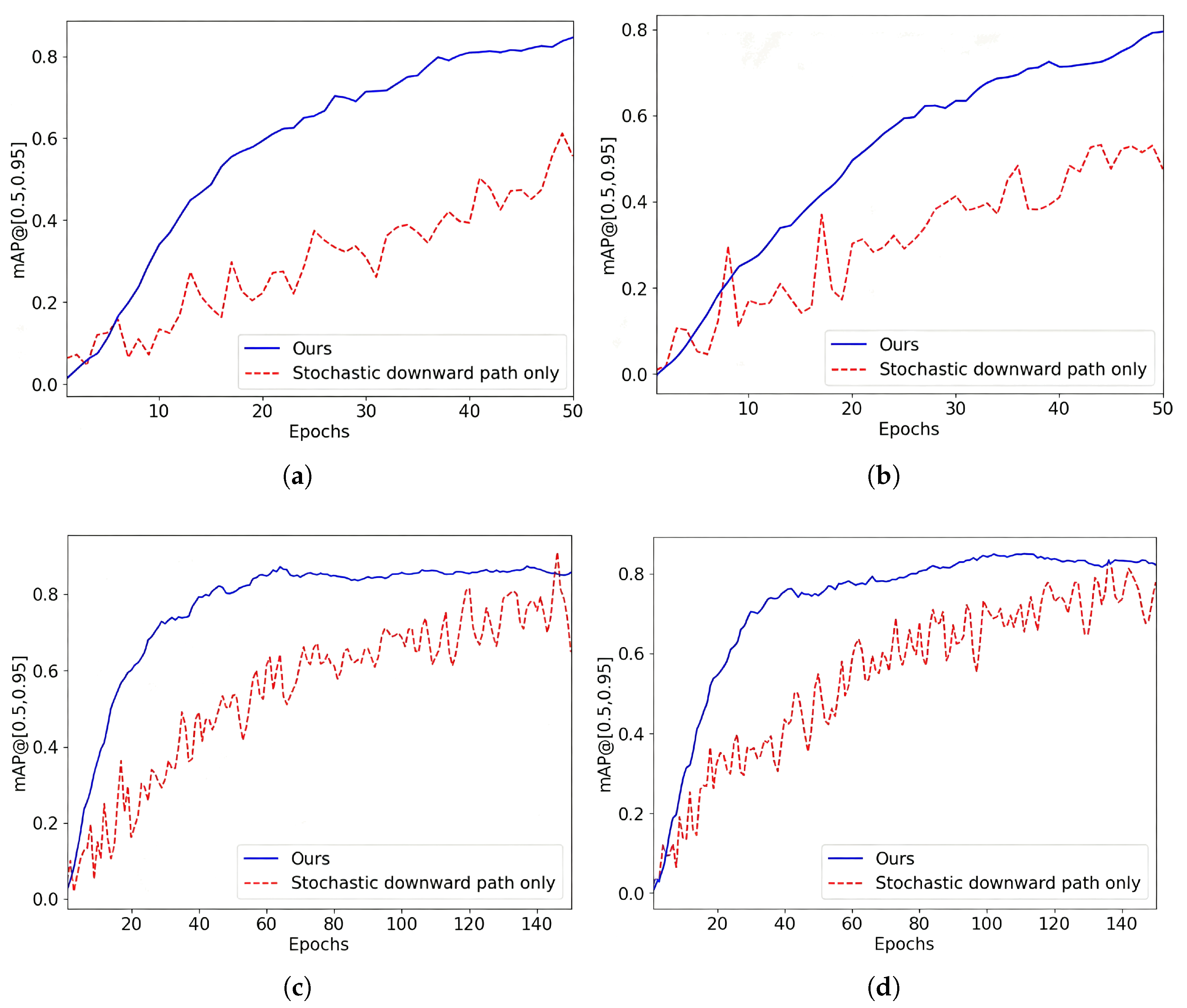

5.3. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, X.; Wang, B.; Luo, P.; Wang, L.; Wu, Y. A Metric Learning-Based Improved Oriented R-CNN for Wildfire Detection in Power Transmission Corridors. Sensors 2025, 25, 3882.
2. Desikan, J.; Singh, S.K.; Jayanthiladevi, A.; Bhushan, S.; Rishiwal, V.; Kumar, M. Hybrid Machine Learning-Based Fault-Tolerant Sensor Data Fusion and Anomaly Detection for Fire Risk Mitigation in IIoT Environment. Sensors 2025, 25, 2146.
3. Zhou, K.; Jiang, S. Forest fire detection algorithm based on improved YOLOv11n. Sensors 2025, 25, 2989.
4. Buriboev, A.S.; Abduvaitov, A.; Jeon, H.S. Integrating Color and Contour Analysis with Deep Learning for Robust Fire and Smoke Detection. Sensors 2025, 25, 2044.
5. Zhang, Z.; Tan, L.; Robert, T.L.K. An improved fire and smoke detection method based on YOLOv8n for smart factories. Sensors 2024, 24, 4786.
6. Abdessemed, F.; Bouam, S.; Arar, C. A Review on Forest Fire Detection and Monitoring Systems. In Proceedings of the 2023 International Conference on Electrical Engineering and Advanced Technology (ICEEAT), Batna, Algeria, 5–7 November 2023; Volume 1, pp. 1–7.
7. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.
8. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.
9. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.
10. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2021, arXiv:2010.04159.
11. Ye, M.; Ke, L.; Li, S.; Tai, Y.W.; Tang, C.K.; Danelljan, M.; Yu, F. Cascade-DETR: Delving into High-Quality Universal Object Detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 6681–6691.
12. Wang, X.; Li, M.; Gao, M.; Liu, Q.; Li, Z.; Kou, L. Early smoke and flame detection based on transformer. J. Saf. Sci. Resil. 2023, 4, 294–304.
13. Liang, T.; Zeng, G. FSH-DETR: An Efficient End-to-End Fire Smoke and Human Detection Based on a Deformable DEtection TRansformer (DETR). Sensors 2024, 24, 4077.
14. Geng, X.; Su, Y.; Cao, X.; Li, H.; Liu, L. YOLOFM: An improved fire and smoke object detection algorithm based on YOLOv5n. Sci. Rep. 2024, 14, 4543.
15. Liang, D.; Bui, T.; Wang, G. Fire and Smoke Detection Method Based on Improved YOLOv5s. In Proceedings of the 2023 8th International Conference on Communication, Image and Signal Processing (CCISP), Chengdu, China, 17–19 November 2023; pp. 293–300.
16. Yar, H.; Khan, Z.A.; Ullah, F.U.M.; Ullah, W.; Baik, S.W. A modified YOLOv5 architecture for efficient fire detection in smart cities. Expert Syst. Appl. 2023, 231, 120465.
17. Koshy, R.; Elango, S. Applying social media in emergency response: An attention-based bidirectional deep learning system for location reference recognition in disaster tweets. Appl. Intell. 2024, 54, 5768–5793.
18. Lv, G.; Dong, L.; Xu, W. Hierarchical interactive multi-granularity co-attention embedding to improve the small infrared target detection. Appl. Intell. 2023, 53, 27998–28020.
19. Wang, J.; Yu, J.; He, Z. DECA: A novel multi-scale efficient channel attention module for object detection in real-life fire images. Appl. Intell. 2022, 52, 1362–1375.
20. Zeng, K.; Sun, X.; He, H.; Tang, H.; Shen, T.; Zhang, L. Fuzzy preference matroids rough sets for approximate guided representation in transformer. Expert Syst. Appl. 2024, 255, 124592.
21. Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.C.; Salakhutdinov, R.; Zemel, R.S.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. arXiv 2015, arXiv:1502.03044.
22. Fan, X.; Zhang, S.; Chen, B.; Zhou, M. Bayesian Attention Modules. arXiv 2020, arXiv:2010.10604.
23. Zhang, S.; Fan, X.; Chen, B.; Zhou, M. Bayesian Attention Belief Networks. arXiv 2021, arXiv:2106.05251.
24. Blei, D.M.; Kucukelbir, A.; McAuliffe, J.D. Variational inference: A review for statisticians. J. Am. Stat. Assoc. 2017, 112, 859–877.
25. Ren, W.; Jin, Z. Phase space visibility graph. Chaos Solitons Fractals 2023, 176, 114170.
26. Olafenwa, M. FireNET Dataset [Dataset]; GitHub: San Francisco, CA, USA, 2023.
27. Pacini, M. Smoke Dataset [Dataset]; Roboflow Universe: Des Moines, IA, USA, 2022.
28. Gaiasd. DFireDataset. 2023. Available online: https://github.com/gaiasd/DFireDataset (accessed on 12 July 2024).
29. Wu, S.; Zhang, X.; Liu, R.; Li, B. A dataset for fire and smoke object detection. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–20.
30. Ren, W.; Jin, N.; Wang, T. An Interdigital Conductance Sensor for Measuring Liquid Film Thickness in Inclined Gas–Liquid Two-Phase Flow. IEEE Trans. Instrum. Meas. 2024, 73, 9505809.
31. Li, N.; Martin, A.; Estival, R. Heterogeneous information fusion: Combination of multiple supervised and unsupervised classification methods based on belief functions. Inf. Sci. 2021, 544, 238–265.

| Dataset | Classes | Positive/Total Ratio |
|---|---|---|
| FireNet [26] | Fire | 210/502 |
| Smoke [27] | Smoke | 741/746 |
| D-Fire [28] | Fire & Smoke | 4658/21,000 |
| DFS-Fire-Smoke [29] | Fire & Smoke & Other | 6308/9462 |
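
The Positive/Total column above is given as raw counts, which makes the class balance of the four datasets hard to compare at a glance. The following minimal sketch converts those counts to fractions. It assumes the column means "positive samples over total samples"; the names `DATASETS` and `positive_fraction` are illustrative and do not come from the paper.

```python
# (positive, total) counts copied from the dataset table above.
# Assumption: "Positive/Total" means positive samples over all samples.
DATASETS = {
    "FireNet": (210, 502),
    "Smoke": (741, 746),
    "D-Fire": (4658, 21000),
    "DFS-Fire-Smoke": (6308, 9462),
}


def positive_fraction(positive: int, total: int) -> float:
    """Return the share of positive samples as a value in [0, 1]."""
    if total <= 0:
        raise ValueError("total must be a positive count")
    if not 0 <= positive <= total:
        raise ValueError("positive must lie between 0 and total")
    return positive / total


if __name__ == "__main__":
    # Print one line per dataset, e.g. the name followed by its fraction.
    for name, (positive, total) in DATASETS.items():
        print(f"{name:15s} {positive_fraction(positive, total):.3f}")
```

Under that reading, the Smoke dataset is almost entirely positive, while D-Fire is roughly one-fifth positive, so the four benchmarks differ considerably in class balance.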

| Dataset | Metric | Fast R-CNN | YOLOFM | Deformable DETR | Cascade-DETR | Ours |
|---|---|---|---|---|---|---|
| FireNet | AP50 | 0.85 | 0.89 | 0.82 | 0.92 | 0.94 |
| | AP75 | 0.79 | 0.85 | 0.81 | 0.88 | 0.91 |
| | mAP@[0.5, 0.95] | 0.75 | 0.73 | 0.79 | 0.81 | 0.88 |
| Smoke | AP50 | 0.92 | 0.84 | 0.89 | 0.90 | 0.91 |
| | AP75 | 0.81 | 0.79 | 0.85 | 0.87 | 0.89 |
| | mAP@[0.5, 0.95] | 0.83 | 0.82 | 0.79 | 0.84 | 0.86 |
| D-Fire | AP50 | 0.88 | 0.78 | 0.91 | 0.89 | 0.94 |
| | AP75 | 0.76 | 0.73 | 0.79 | 0.90 | 0.88 |
| | mAP@[0.5, 0.95] | 0.74 | 0.71 | 0.81 | 0.82 | 0.85 |
| DFS-Fire-Smoke | AP50 | 0.82 | 0.84 | 0.87 | 0.86 | 0.92 |
| | AP75 | 0.73 | 0.79 | 0.75 | 0.81 | 0.84 |
| | mAP@[0.5, 0.95] | 0.71 | 0.73 | 0.75 | 0.80 | 0.84 |
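
The AP50, AP75, and mAP@[0.5, 0.95] rows above differ only in the intersection-over-union (IoU) threshold at which a predicted box counts as a match. The sketch below shows that thresholding step. It assumes the COCO convention, in which mAP@[0.5, 0.95] averages AP over ten thresholds from 0.50 to 0.95 in steps of 0.05; the paper's exact evaluation code is not shown here, and the names `iou`, `coco_iou_thresholds`, and `is_match` are illustrative.

```python
from typing import List, Tuple

# Axis-aligned box as (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def coco_iou_thresholds() -> List[float]:
    """Assumed COCO-style thresholds: 0.50, 0.55, ..., 0.95."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def is_match(pred: Box, gt: Box, threshold: float) -> bool:
    """A prediction matches a ground-truth box if IoU reaches the threshold."""
    return iou(pred, gt) >= threshold


if __name__ == "__main__":
    pred, gt = (0.0, 0.0, 10.0, 10.0), (2.0, 0.0, 12.0, 10.0)
    # Overlap is 8 x 10 = 80, union is 100 + 100 - 80 = 120, so IoU = 2/3.
    print(is_match(pred, gt, 0.50))  # counted under AP50
    print(is_match(pred, gt, 0.75))  # not counted under AP75
```

A box pair with IoU of about 0.67 therefore contributes to AP50 but not to AP75, which is why AP75 is the stricter localization measure in the table.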

Number of decoder layers with Bayesian attention:

| Dataset | Metric | 1 Layer | 2 Layers | 3 Layers | 4 Layers | 5 Layers | 6 Layers |
|---|---|---|---|---|---|---|---|
| FireNet | AP50 | 0.38 | 0.39 | 0.42 | 0.44 | 0.47 | 0.50 |
| | AP75 | 0.31 | 0.33 | 0.36 | 0.38 | 0.41 | 0.44 |
| | mAP@[0.5, 0.95] | 0.29 | 0.35 | 0.41 | 0.42 | 0.45 | 0.46 |
| Smoke | AP50 | 0.36 | 0.38 | 0.40 | 0.43 | 0.46 | 0.49 |
| | AP75 | 0.30 | 0.32 | 0.35 | 0.37 | 0.40 | 0.43 |
| | mAP@[0.5, 0.95] | 0.29 | 0.31 | 0.33 | 0.39 | 0.38 | 0.42 |
| D-Fire | AP50 | 0.30 | 0.32 | 0.35 | 0.38 | 0.41 | 0.44 |
| | AP75 | 0.24 | 0.26 | 0.29 | 0.32 | 0.35 | 0.38 |
| | mAP@[0.5, 0.95] | 0.26 | 0.25 | 0.28 | 0.30 | 0.33 | 0.36 |
| DFS-Fire-Smoke | AP50 | 0.25 | 0.27 | 0.30 | 0.33 | 0.36 | 0.39 |
| | AP75 | 0.19 | 0.21 | 0.24 | 0.27 | 0.30 | 0.33 |
| | mAP@[0.5, 0.95] | 0.21 | 0.23 | 0.22 | 0.31 | 0.33 | 0.35 |

Number of decoder layers with the SSA mechanism:

| Dataset | Metric | 1 Layer | 2 Layers | 3 Layers | 4 Layers | 5 Layers | 6 Layers |
|---|---|---|---|---|---|---|---|
| FireNet | AP50 | 0.60 | 0.59 | 0.68 | 0.74 | 0.85 | 0.92 |
| | AP75 | 0.53 | 0.53 | 0.51 | 0.55 | 0.67 | 0.88 |
| | mAP@[0.5, 0.95] | 0.57 | 0.55 | 0.62 | 0.61 | 0.77 | 0.91 |
| Smoke | AP50 | 0.63 | 0.59 | 0.68 | 0.69 | 0.72 | 0.89 |
| | AP75 | 0.59 | 0.43 | 0.55 | 0.54 | 0.61 | 0.84 |
| | mAP@[0.5, 0.95] | 0.57 | 0.56 | 0.57 | 0.65 | 0.63 | 0.86 |
| D-Fire | AP50 | 0.56 | 0.55 | 0.57 | 0.65 | 0.82 | 0.92 |
| | AP75 | 0.48 | 0.44 | 0.43 | 0.48 | 0.66 | 0.82 |
| | mAP@[0.5, 0.95] | 0.50 | 0.52 | 0.49 | 0.50 | 0.77 | 0.89 |
| DFS-Fire-Smoke | AP50 | 0.51 | 0.59 | 0.65 | 0.66 | 0.86 | 0.89 |
| | AP75 | 0.41 | 0.45 | 0.44 | 0.49 | 0.62 | 0.73 |
| | mAP@[0.5, 0.95] | 0.45 | 0.52 | 0.60 | 0.52 | 0.80 | 0.84 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, D.; Han, B.; Zhao, X.; Ren, W. Dual-Path CSDETR: Cascade Stochastic Attention with Object-Centric Priors for High-Accuracy Fire Detection. Sensors 2025, 25, 5788. https://doi.org/10.3390/s25185788
