Full Coverage Testing Method for Automated Driving System in Logical Scenario Parameters Space

Abstract

1. Introduction

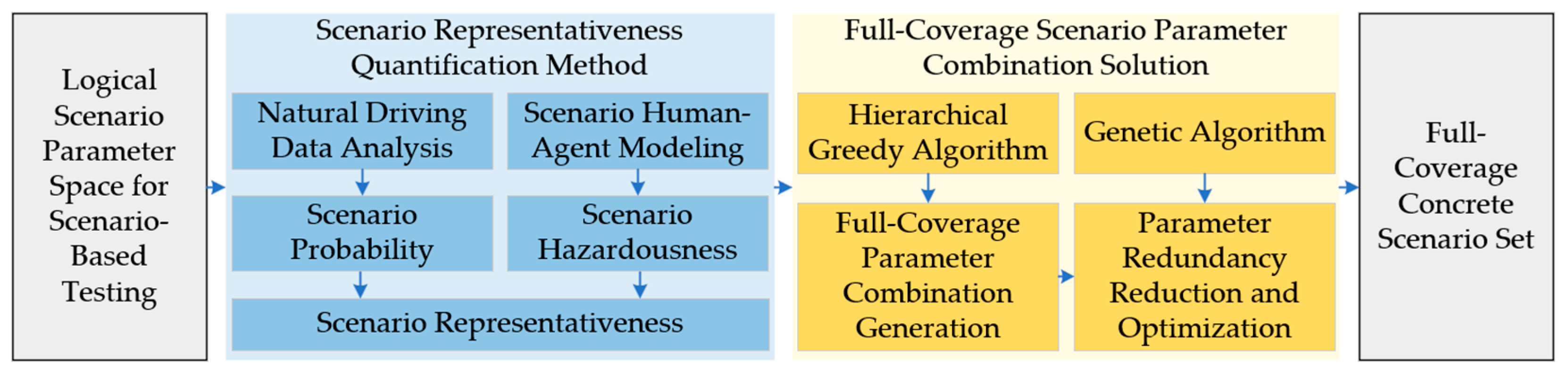

2. Full-Coverage Testing Framework

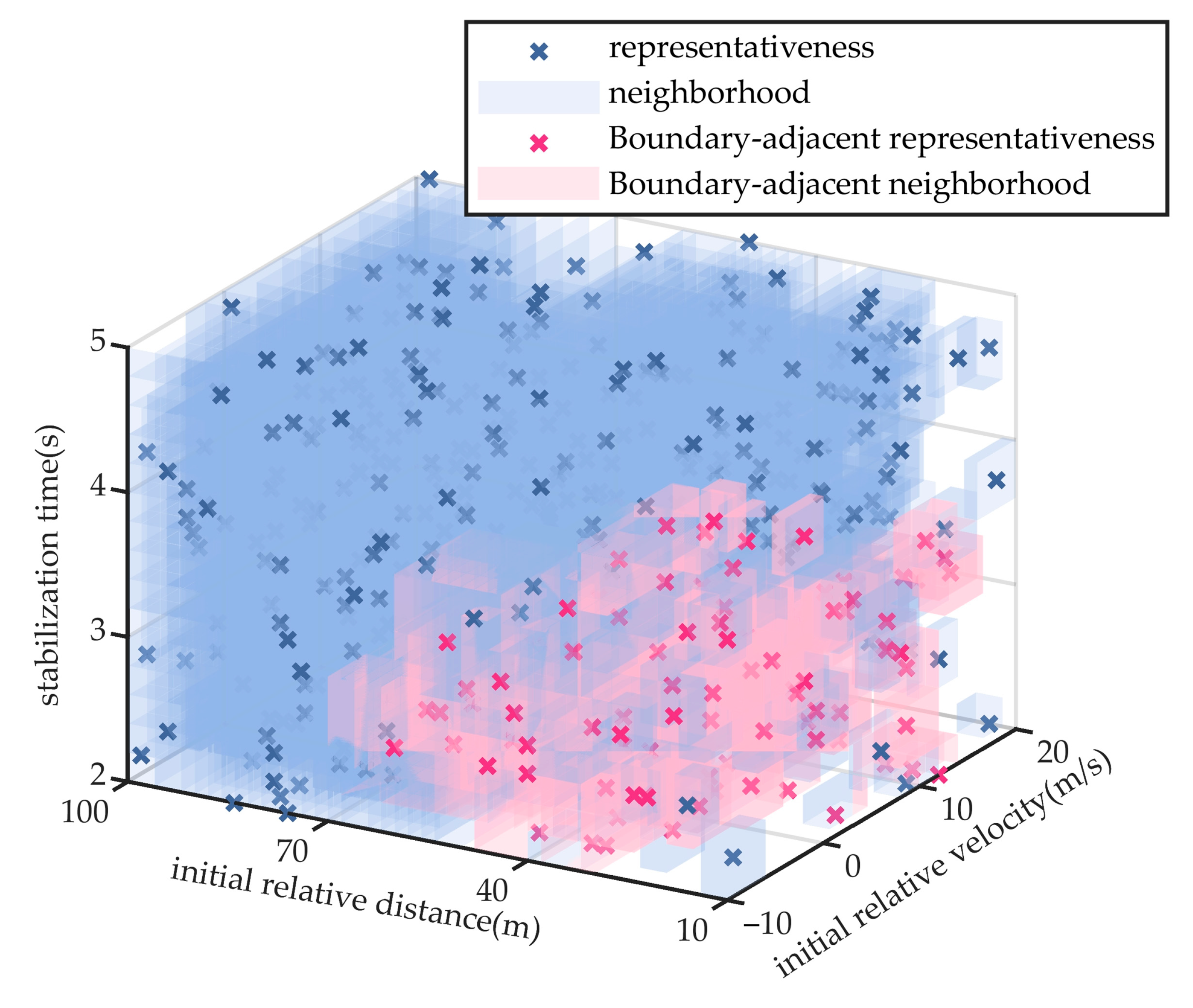

3. Quantitative Method for Scenario Representativeness

3.1. Scenario Representativeness Model

3.2. Scenario Probability Index

3.3. Scenario Hazardousness Index

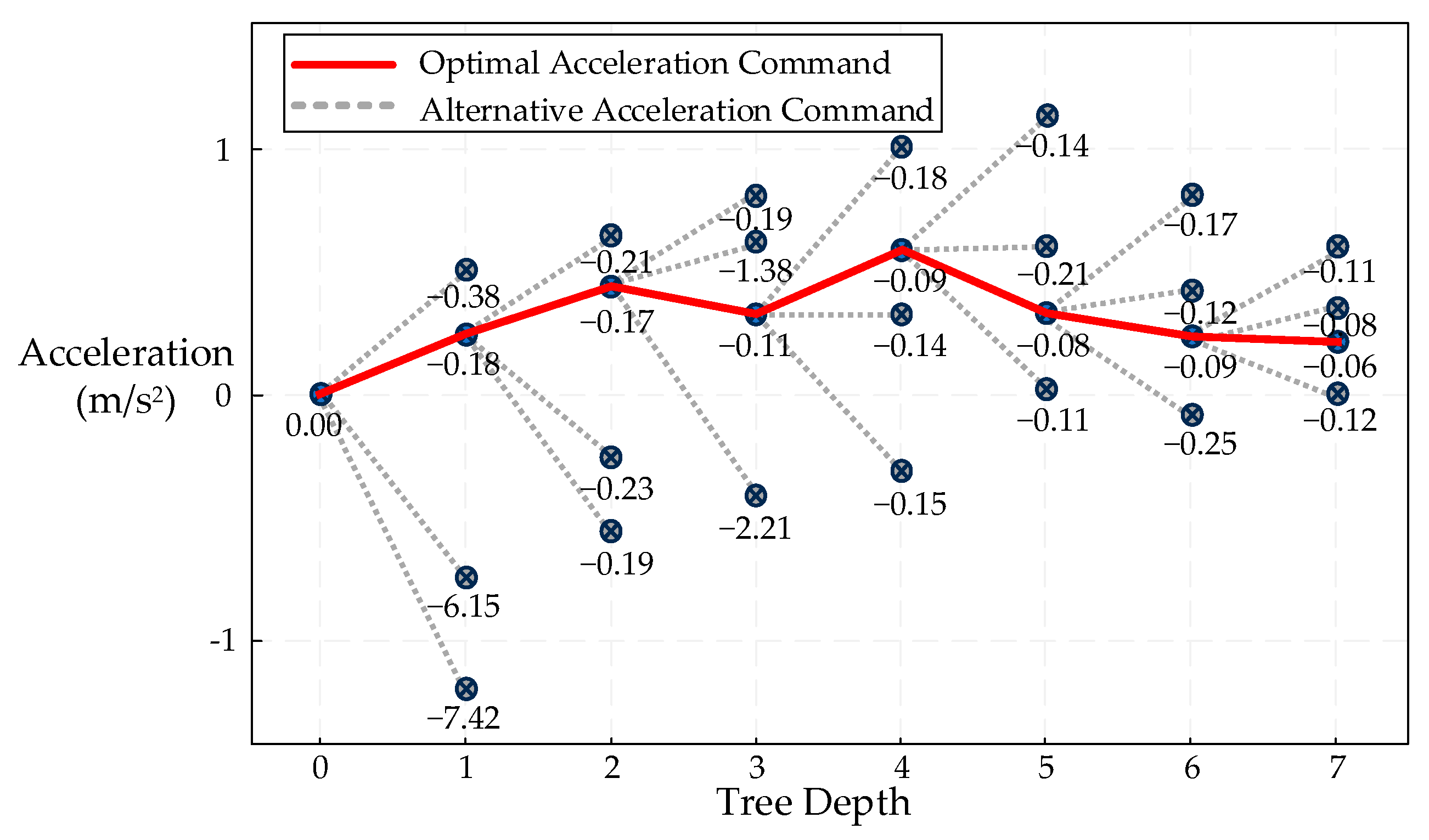

1. Selection: Starting from the root node, the most promising child node is selected by traversing the Monte Carlo tree. The selection aims to maximize the estimated value of the decision strategy.

2. Expansion: If the selected node is not fully expanded (i.e., it does not yet contain all possible child nodes), it is expanded by generating one or more new child nodes.

3. Simulation: From the newly expanded node, a rollout is conducted by simulating future lane-change paths in a stochastic manner to estimate their expected cumulative return.

4. Backpropagation: The simulation result is propagated back from the leaf node to the root node, updating the reward statistics along the traversed path.
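The four steps above can be illustrated with a minimal, self-contained sketch. It is not the paper's lateral surrogate: the three-lane road, the two-step horizon, the terminal reward for reaching a target lane, and the UCB1 selection rule with exploration constant 1.4 are all assumptions chosen only to keep the example small.

```python
import math
import random

ACTIONS = (-1, 0, 1)                  # hypothetical lateral actions: left, keep, right
N_LANES, HORIZON, TARGET = 3, 2, 2    # assumed toy problem: 3 lanes, 2 decisions

def legal_actions(lane):
    return [a for a in ACTIONS if 0 <= lane + a < N_LANES]

class Node:
    def __init__(self, lane, depth, parent=None, action=None):
        self.lane, self.depth = lane, depth
        self.parent, self.action = parent, action
        self.children = []
        self.visits, self.total = 0, 0.0
        # Actions not yet expanded; terminal nodes have none.
        self.untried = legal_actions(lane) if depth < HORIZON else []

def ucb1(child, c=1.4):
    # Mean return plus an exploration bonus (assumed selection rule).
    bonus = c * math.sqrt(math.log(child.parent.visits) / child.visits)
    return child.total / child.visits + bonus

def select(node):
    # Step 1: descend while the node is fully expanded and not terminal.
    while not node.untried and node.children:
        node = max(node.children, key=ucb1)
    return node

def expand(node):
    # Step 2: add one new child if any action is still untried.
    if not node.untried:
        return node
    action = node.untried.pop()
    child = Node(node.lane + action, node.depth + 1, node, action)
    node.children.append(child)
    return child

def rollout(node, rng):
    # Step 3: random lane changes until the horizon, then score the end lane.
    lane = node.lane
    for _ in range(node.depth, HORIZON):
        lane += rng.choice(legal_actions(lane))
    return 1.0 if lane == TARGET else 0.0

def backpropagate(node, value):
    # Step 4: update statistics from the leaf back to the root.
    while node is not None:
        node.visits += 1
        node.total += value
        node = node.parent

def mcts(start_lane=0, iterations=500, seed=0):
    rng = random.Random(seed)
    root = Node(start_lane, 0)
    for _ in range(iterations):
        leaf = expand(select(root))
        backpropagate(leaf, rollout(leaf, rng))
    best = max(root.children, key=lambda ch: ch.visits)
    return best.action, root

action, root = mcts()
print("first action:", action, "root visits:", root.visits)
```

In this toy setting the target lane can only be reached by moving right twice, so the search concentrates its visits on the right-moving branch. Returning the most visited root child, rather than the child with the highest mean, is a common choice but also an assumption here.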

4. Computation of Fully Covering Scenario Parameter Sets

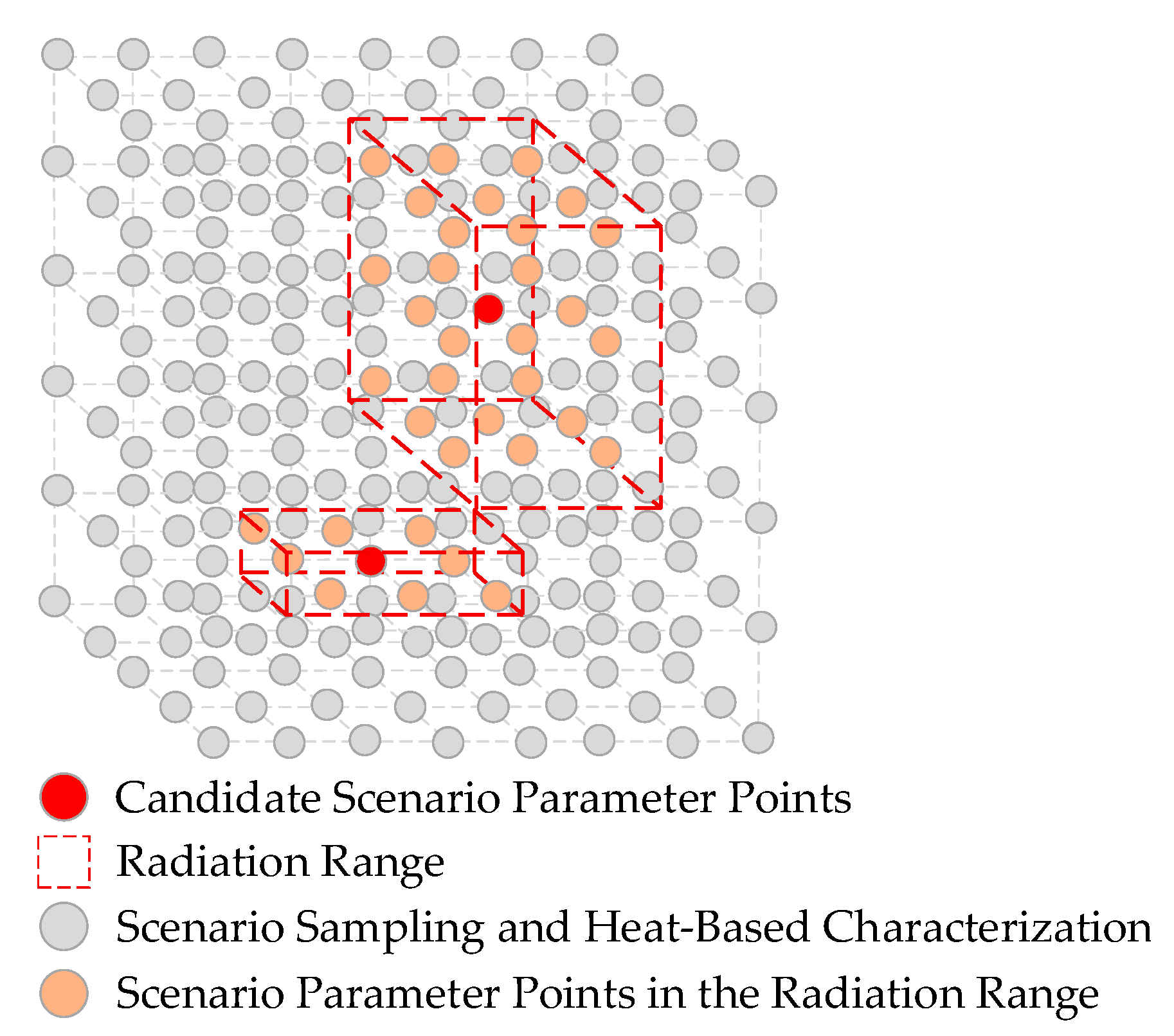

4.1. Generation of Scenario Parameter Sets for Full Coverage

4.2. Compressed Optimization of Scenario Parameter Combinations

| Algorithm 1: Process of method |

| Input: logical scenario space G; discretization steps; naturalistic statistics (μ, Σ); surrogates {LSTM (longitudinal), lane-change potential U, MCTS (lateral)}; parameters ω, σ, λ, τ, ψ, rmin, rmax, rest, ϑ, η, α, δ, γ, ε, ϕ. |

| Procedure |

| 1. Initialization |

| (1) Discretize each parameter dimension according to discretization steps to form the grid G. |

| (2) Initialize the coverage indicator c(x) = 0; create an empty candidate set Q0. |

| (3) Set the prior boundary and the associated structural function W(x). |

| (4) Compute the initial heat map over G by h(x). |

| 2. Heat-guided hierarchical greedy coverage optimization |

| Repeat until c(x) = 1 for all x∈G: |

| (1) Compute the heat score ŝ(x) and select the point x* that maximizes it. |

| (2) Compute the probability index A(x*) and the scalar risk ξ(x*) from the surrogates. |

| (3) Compute the per-dimension representativeness radius r(x*). |

| (4) Set c(y) = 1 for every grid point y within the radius r(x*) of x*; append x* to Q. |

| (5) Update the structural function and re-extract the boundary W(x) = 0.5. |

| (6) Recompute h(x) for all uncovered x∈G. |

| 3. Genetic algorithm under the full-coverage constraint |

| Iterate until convergence or a maximum number of generations: |

| (1) Use the full coverage set Q obtained from the H-GCO stage as the initial solution. |

| (2) Minimize the number of retained points, subject to the constraint that for each x∈G at least one retained qi satisfies the coverage condition. |

| (3) Apply roulette-wheel selection, single-point crossover, bit-flip mutation and elite preservation. |

| Output: Q*—a minimal set of concrete scenarios fully covering the discretized G. |
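Stages 2 and 3 of Algorithm 1 can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the `heat` and `radius` functions are hypothetical stand-ins for the heat map h(x) and the representativeness radius r(x*), the heat map is kept static instead of being recomputed after each boundary update, and the GA handles the full-coverage constraint by giving infeasible masks a near-zero fitness.

```python
import itertools
import random

def heat(x):
    # Hypothetical heat score: hottest near an assumed boundary x0 + x1 = 10.
    return 1.0 / (1.0 + abs(x[0] + x[1] - 10))

def radius(x):
    # Hypothetical per-dimension radius: hot points represent fewer neighbours.
    return (1, 1) if heat(x) > 0.3 else (2, 2)

def covers(q, x):
    # q represents x when x lies inside q's per-dimension radius box.
    return all(abs(a - b) <= r for a, b, r in zip(q, x, radius(q)))

def greedy_cover(grid):
    uncovered, chosen = set(grid), []
    while uncovered:                              # until c(x) = 1 for all x
        best = max(sorted(uncovered), key=heat)   # hottest uncovered point x*
        chosen.append(best)
        uncovered -= {x for x in uncovered if covers(best, x)}
    return chosen

def is_full_cover(points, grid):
    return all(any(covers(q, x) for q in points) for x in grid)

def ga_compress(chosen, grid, generations=40, pop_size=20, p_mut=0.02, seed=0):
    # Assumes len(chosen) >= 2 so that a crossover point exists.
    rng = random.Random(seed)
    n, full = len(chosen), set(grid)
    cover_sets = [{x for x in grid if covers(q, x)} for q in chosen]

    def fitness(mask):
        kept = [s for s, bit in zip(cover_sets, mask) if bit]
        if set().union(*kept) != full:
            return 1e-6                           # infeasible: almost never selected
        return 1.0 + n - len(kept)                # feasible: fewer points is better

    # Start from the full greedy set (always feasible) plus random thinnings.
    pop = [[1] * n] + [[int(rng.random() < 0.9) for _ in range(n)]
                       for _ in range(pop_size - 1)]
    for _ in range(generations):
        scores = [fitness(m) for m in pop]
        nxt = [pop[scores.index(max(scores))][:]]             # elite preservation
        while len(nxt) < pop_size:
            p1, p2 = rng.choices(pop, weights=scores, k=2)    # roulette wheel
            cut = rng.randrange(1, n)                         # single-point crossover
            child = [1 - b if rng.random() < p_mut else b     # bit-flip mutation
                     for b in p1[:cut] + p2[cut:]]
            nxt.append(child)
        pop = nxt
    best = max(pop, key=fitness)
    return [q for q, bit in zip(chosen, best) if bit]

grid = list(itertools.product(range(11), range(11)))
q_greedy = greedy_cover(grid)
q_star = ga_compress(q_greedy, grid)
print(len(q_greedy), len(q_star), is_full_cover(q_star, grid))
```

Because the all-ones mask is feasible and the elite is carried over each generation, the returned set always covers the whole grid and is never larger than the greedy set. The sketch does not guarantee a minimum-size set; a GA of this kind only searches for a smaller feasible subset.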

5. Experiment

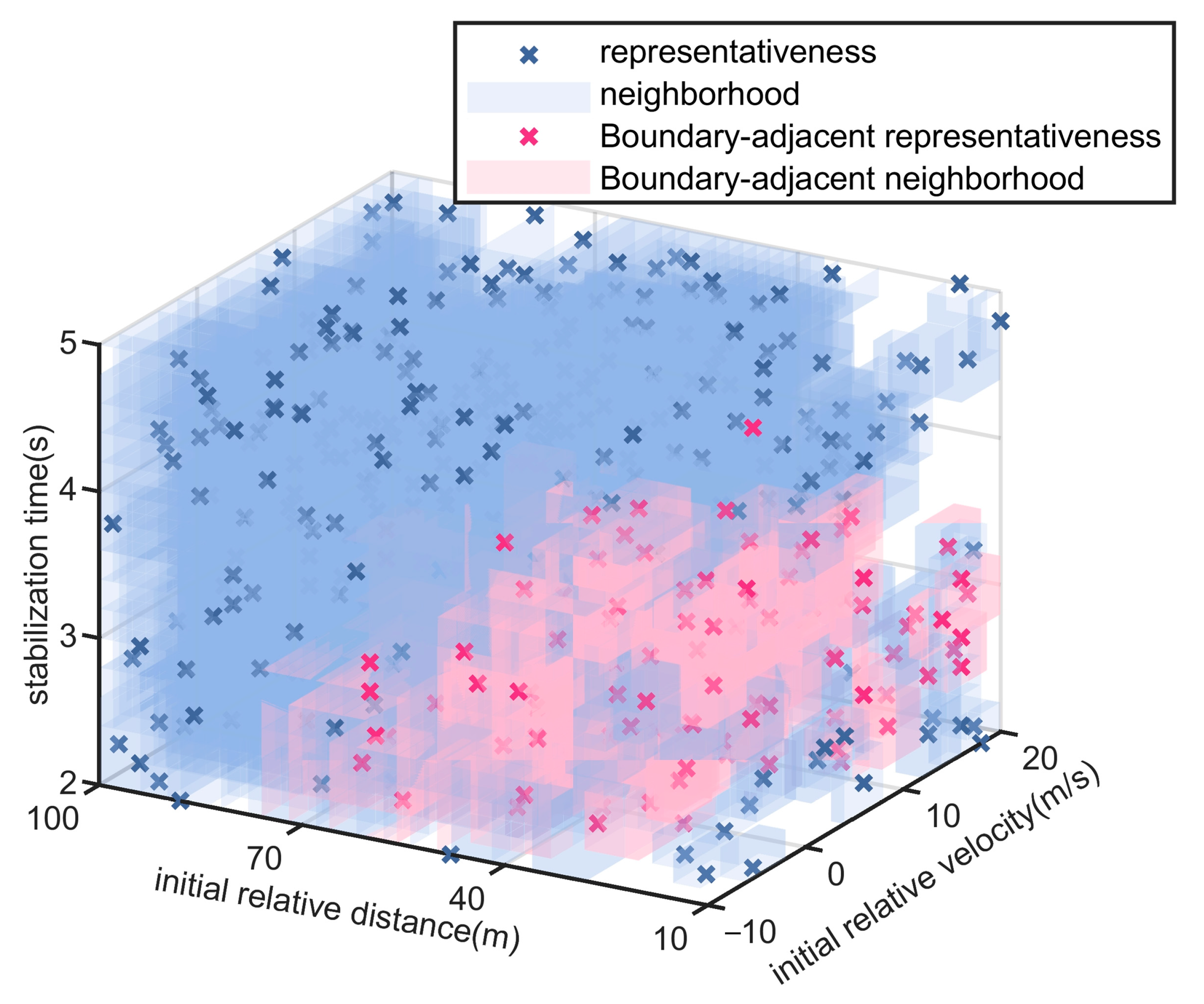

5.1. Experiment of Test Scenario Coverage Ratio

5.2. Experiment of Performance Boundary Fitting Accuracy

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADS | Automated driving systems |
| TTC | Time to collision |
| LSTM | Long short-term memory |
| MCTS | Monte Carlo tree search |
| RMSE | Root mean square error |
| H-GCO | Hierarchical greedy coverage optimization |


| Parameter | Value |
|---|---|
| Input Feature Dimension | 5 |
| Output Feature Dimension | 1 |
| LSTM Hidden Units | 64 |
| Number of LSTM Layers | 3 |
| Learning Rate | 0.001 |
| Dropout | 0.1 |
| Number of Epochs | 20 |
| Batch Size | 8 |
| Attention Type | / |

| Parameter Type | Parameter Value |
|---|---|
| I | 0.001 |
| Mi | 5000 kg |
| l1 | 1 |
| l2 | 0.05 |

| Scenario Generation Method | Number of Representative Scenarios | Coverage Rate |
|---|---|---|
| Proposed method | 482 | 100% |
| Monte Carlo method | 482 | 84.3% |
| Combinatorial testing method | 482 | 86.5% |
| Importance sampling method | 482 | 72.0% |
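As a rough illustration of how a coverage rate like those in the table above might be computed, the sketch below counts the fraction of grid points that fall inside the representativeness box of at least one scenario. The function name `coverage_rate`, the single fixed radius shared by all scenarios, and the toy one-dimensional grid are assumptions made for brevity; in the method itself the radius is computed per scenario.

```python
def coverage_rate(grid, scenarios, radius):
    """Fraction of grid points within `radius` (per dimension) of any scenario."""
    def is_covered(x):
        return any(all(abs(a - b) <= r for a, b, r in zip(q, x, radius))
                   for q in scenarios)
    return sum(is_covered(x) for x in grid) / len(grid)

grid = [(v,) for v in range(10)]          # toy 1-D grid: 0, 1, ..., 9
rate = coverage_rate(grid, [(1,), (5,)], radius=(1,))
print(f"{rate:.1%}")                      # 6 of 10 points covered -> 60.0%
```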

| Scenario Generation Method | RMSE |
|---|---|
| Proposed method | 0.08 |
| Monte Carlo method | 0.19 |
| Combinatorial testing method | 0.14 |
| Importance sampling method | 0.07 |
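For reference, RMSE in its standard form is the square root of the mean squared difference between fitted and reference values. The sketch below assumes the table above uses this standard definition for the performance boundary; the sample values are made up for illustration and are not taken from the experiments.

```python
import math

def rmse(predicted, reference):
    """Root mean square error between two equal-length sequences."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = ((p - r) ** 2 for p, r in zip(predicted, reference))
    return math.sqrt(sum(squared) / len(predicted))

# Illustrative boundary samples (hypothetical values).
fitted = [1.0, 2.0, 3.0]
reference = [1.0, 2.0, 5.0]
print(round(rmse(fitted, reference), 4))   # sqrt(4 / 3) -> 1.1547
```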

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Min, H.; Zhang, Z.; Fan, T.; Zhang, P.; Zhang, C.; Qu, G. Full Coverage Testing Method for Automated Driving System in Logical Scenario Parameters Space. Sensors 2025, 25, 5764. https://doi.org/10.3390/s25185764
