4.1. Dataset Description

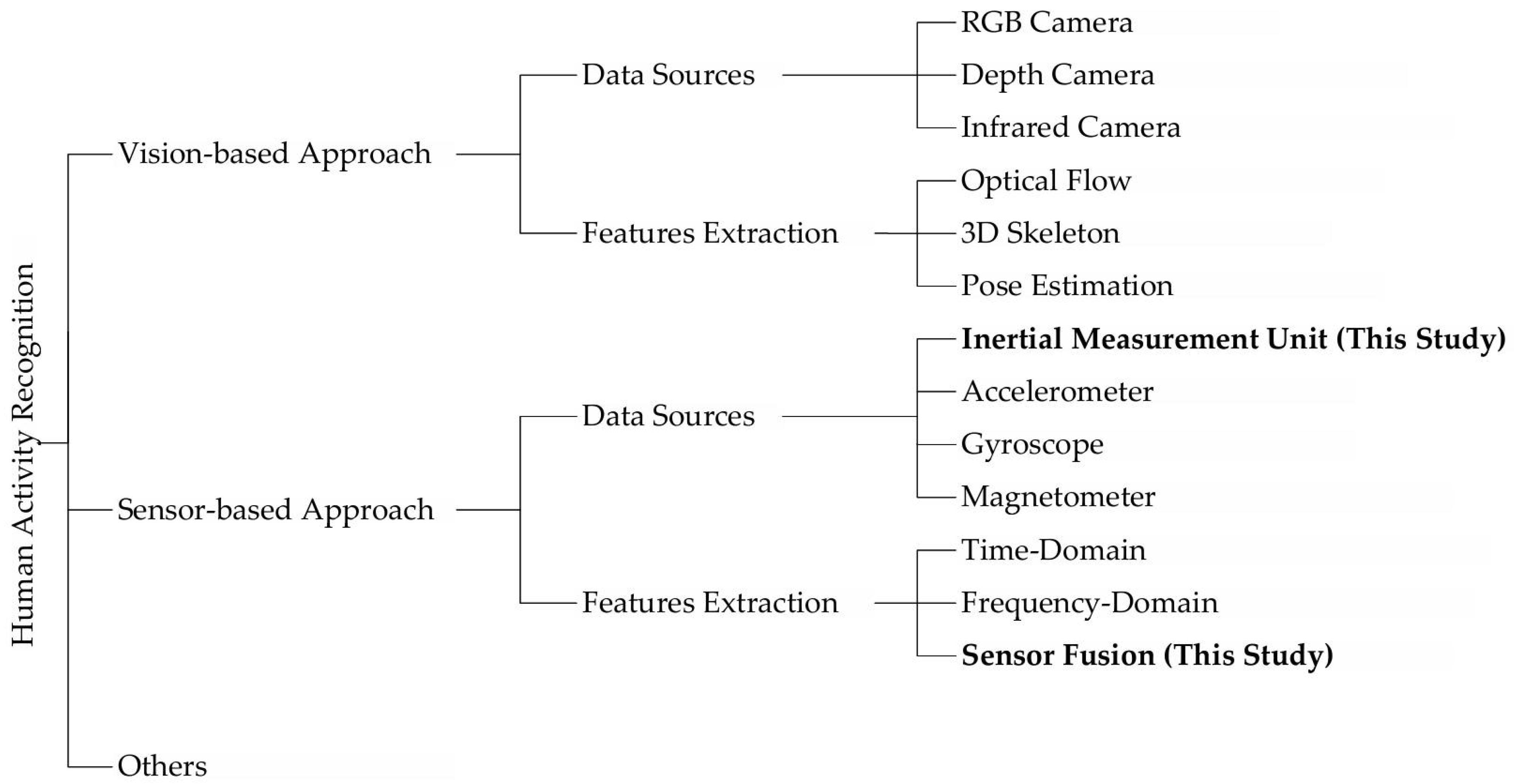

The selection of public datasets for Human Activity Recognition (HAR) research requires balancing activity diversity, sensor modalities, and experimental reproducibility. This study employs three public datasets—WISDM, USC-HAD, and PAMAP2—representing low-cost single-sensor scenarios, laboratory-grade multimodal scenarios, and complex multimodal activity scenarios, respectively. Their complementary characteristics cover recognition requirements from basic actions to high-complexity activities, providing benchmark support for research on algorithm robustness and multimodal fusion.

The WISDM dataset was collected in a laboratory-controlled environment to ensure data quality. Acceleration data for six basic daily activities (walking, jogging, ascending stairs, descending stairs, sitting, standing) were gathered via smartphone accelerometers placed in the front pants leg pockets of 36 participants. With a sampling frequency of 20 Hz, the dataset comprises 1,098,209 samples (approximately 15.25 h of acceleration data). Data collection utilized only triaxial accelerometers, reflecting the typical configuration of early mobile devices [44,45]. Dataset specifications are summarized in Table 3.

USC-HAD (University of Southern California Human Activity Dataset) is a multimodal sensor dataset specifically designed for HAR, providing high-precision, diverse behavioral benchmarks for smart device interaction, healthcare monitoring, and robotic motion analysis. The dataset comprises data from 14 participants performing daily activities in a laboratory setting. Sensor data were captured at 100 Hz by a 6-axis Inertial Measurement Unit (IMU), consisting of a triaxial accelerometer and a triaxial gyroscope, fixed on the right front hip. Each activity was repeated five times to enhance data diversity [46,47]. The average data collection duration per participant was approximately 6 h. Dataset specifications are detailed in Table 4, and the executed activities are listed in Table 5.

The PAMAP2 dataset targets complex activity recognition. Nine participants performed 18 activities in daily-life environments while wearing three Inertial Measurement Units (IMUs; sampling frequency: 100 Hz) positioned on the wrist, chest, and ankle, together with a heart rate sensor (sampling frequency: 9 Hz). The data collection duration per participant exceeded 10 h. Its multimodal signals, including acceleration, gyroscope, magnetometer, and heart rate data, support sensor fusion research but require handling high-frequency data and missing values in parts of the recordings. Consequently, it is well suited to complex-scenario activity recognition and cross-modal analysis [48,49]. The dataset comprises 3,850,491 raw samples, of which 2,724,817 are valid activity data points after preprocessing removes invalid segments. Specifications are detailed in Table 6, and the executed activities are listed in Table 7.

4.2. Data Preprocessing

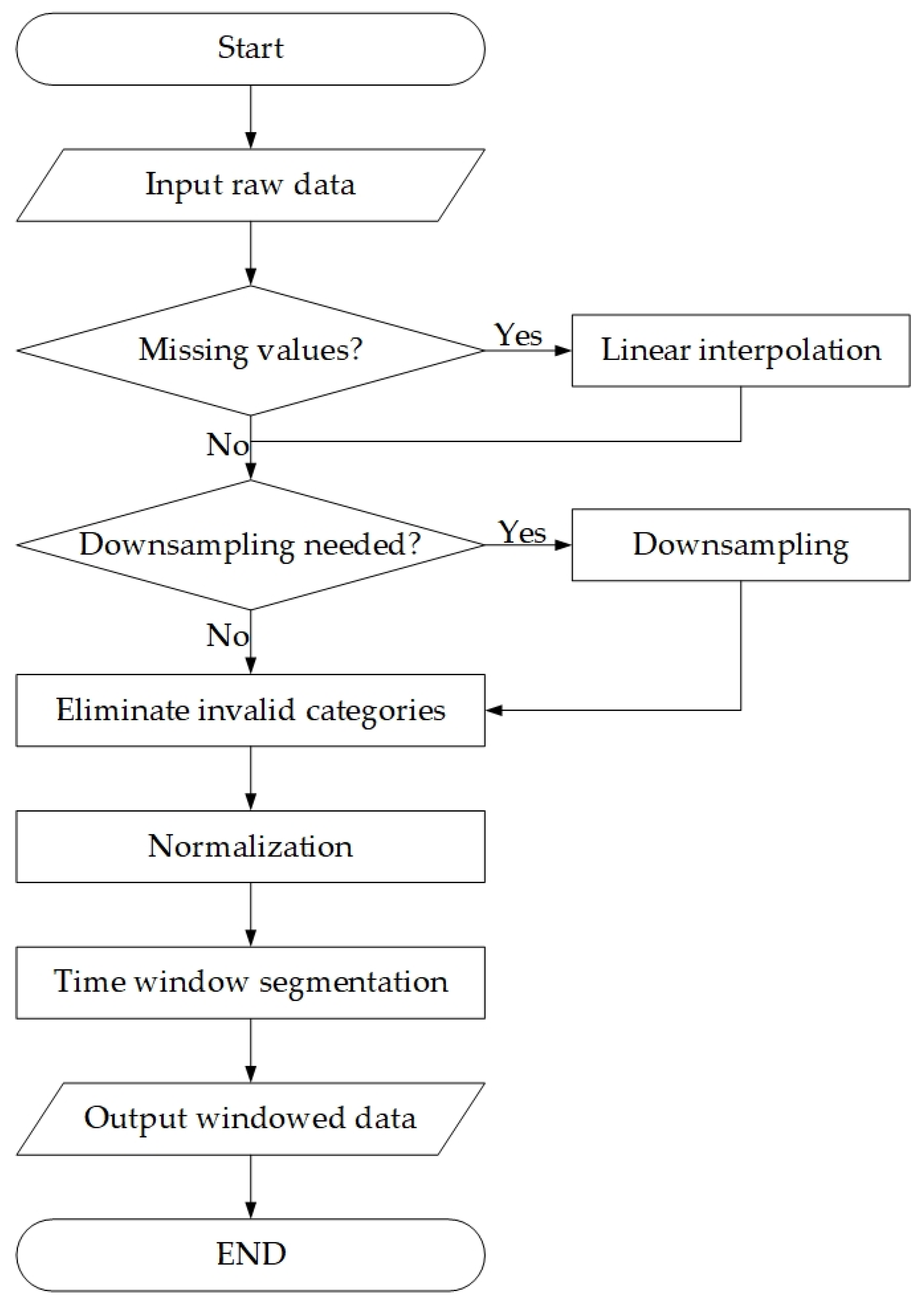

To achieve human activity recognition, time-series signals collected from diverse sensor modalities are segmented into continuous samples via the sliding window technique. These samples are then normalized to [−1, 1]. The dataset preprocessing workflow is illustrated in Figure 7.
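The two generic steps above, scaling each channel to [−1, 1] and cutting the signal into overlapping fixed-length windows, can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' code: the function names are hypothetical, the min/max statistics are assumed to come from the training data, and labelling each window with the majority label of its samples is an assumption, since the text does not state the labelling rule.

```python
import numpy as np

def minmax_scale(x: np.ndarray, lo: np.ndarray, hi: np.ndarray) -> np.ndarray:
    """Map each channel of a (T, C) array linearly to [-1, 1] using per-channel min (lo) and max (hi)."""
    span = np.where(hi - lo == 0, 1.0, hi - lo)  # guard against constant channels
    return 2.0 * (x - lo) / span - 1.0

def sliding_windows(x: np.ndarray, labels: np.ndarray, win: int, step: int):
    """Cut a (T, C) signal into (N, win, C) windows advancing by `step` samples.

    Each window receives the majority label of its samples (assumed rule).
    Requires len(x) >= win.
    """
    starts = range(0, len(x) - win + 1, step)
    xs = np.stack([x[s:s + win] for s in starts])
    ys = np.array([np.bincount(labels[s:s + win]).argmax() for s in starts])
    return xs, ys
```

For WISDM, for example, `win=80` and `step=40` give the 4 s windows with 50% overlap described below.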

For the WISDM dataset, given its low sampling frequency, a longer 4 s window (80 samples) with a 50% overlap is used for segmentation. The activities in this dataset are close to everyday behavior, but they are limited to fundamental motion patterns. The training and test sets are split approximately 8:2 by user: users {1, 9, 11, 16, 19, 23, 28, 31} form the test set, and the data of the remaining users form the training set.
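A user-based split of this kind keeps every user's windows entirely on one side of the split. The sketch below assumes a per-window array of user IDs is available alongside the windows; the function and constant names are hypothetical.

```python
import numpy as np

# Test users for WISDM as listed in the text; all other users go to training.
WISDM_TEST_USERS = {1, 9, 11, 16, 19, 23, 28, 31}

def split_by_user(xs, ys, users, test_users):
    """Subject-wise split: no user contributes windows to both sets.

    `users` is assumed to hold the user ID of each window in `xs`.
    """
    test_mask = np.isin(users, list(test_users))
    return (xs[~test_mask], ys[~test_mask]), (xs[test_mask], ys[test_mask])
```

The same helper applies to USC-HAD with the test set {1, 10, 12}.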

For the USC-HAD dataset, linear interpolation is first applied to repair missing values in the sensor data, followed by downsampling to 50 Hz to balance computational efficiency against the preservation of motion detail. Segmentation uses a sliding window of 128 samples (2.56 s) with a 50% overlap (a stride of 64 samples), producing three-dimensional time-series data (samples × 128 × 6). The training and test sets are split approximately 8:2 by user: users {1, 10, 12} form the test set, and the data of the remaining users form the training set.
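The gap filling and rate reduction described above can be sketched with NumPy. Two assumptions apply: gaps are filled column by column with `np.interp` (which holds the nearest valid value at the edges), and 100 Hz to 50 Hz is done by plain decimation (keeping every second sample) without an anti-aliasing filter, because the text does not name the resampling method. The function names are hypothetical.

```python
import numpy as np

def fill_nan_linear(x: np.ndarray) -> np.ndarray:
    """Linearly interpolate NaNs in each column of a (T, C) array (returns a copy)."""
    out = x.astype(float)  # astype copies, so the input is left untouched
    t = np.arange(len(out))
    for c in range(out.shape[1]):
        col = out[:, c]  # a view: assignments below write into `out`
        nan = np.isnan(col)
        if nan.any() and (~nan).any():
            col[nan] = np.interp(t[nan], t[~nan], col[~nan])
    return out

def downsample(x: np.ndarray, factor: int) -> np.ndarray:
    """Keep every `factor`-th sample (factor=2 turns 100 Hz into 50 Hz)."""
    return x[::factor]
```

A filtered alternative such as `scipy.signal.decimate` would reduce aliasing at the cost of an extra dependency.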

For the PAMAP2 dataset, we select 12 activities for classification; their indices, which correspond to those in Table 7, are {1, 2, 3, 4, 5, 6, 7, 12, 13, 16, 17, 24}. From the three IMUs we extract raw triaxial acceleration (±16 g range) and angular velocity. Missing values are filled via linear interpolation, and valid activity segments are selected. This yields 18-dimensional time-series data (3 IMUs × 6 channels) from the hand-, chest-, and ankle-mounted IMUs, with the activity labels used as supervision signals. To reduce computational complexity, the data are downsampled to 33 Hz and then normalized to [−1, 1]. Segmentation uses a window length of 169 samples (5.12 s) with a stride of 84 samples (a 50% overlap). The data of Subject 105 form the test set, and the data of the remaining subjects form the training set.

This preprocessing preserves the temporal dynamics of sensor time-series while enhancing data regularity through downsampling, normalization, and windowing. The preprocessed data provides high-quality input for subsequent end-to-end model learning. The sample counts of training and test sets for each preprocessed dataset are summarized in Table 8.

This study further addresses issues commonly encountered with sensor time-series data in HAR tasks, such as insufficient sample diversity and limited generalization. To this end, data augmentation is applied only to the preprocessed training sets of the three datasets (WISDM, USC-HAD, and PAMAP2): random Gaussian noise with a mean of 0 and a standard deviation of 0.01 is added. Combined with the downsampling, normalization, and sliding window preprocessing described above, this augmentation increases the variability of the training data, helping the model accommodate the variations encountered in real-world scenarios and supporting subsequent end-to-end model learning.
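The noise augmentation can be sketched as below. Whether the noisy windows are appended as extra samples or replace the originals on the fly is not stated; this sketch assumes one noisy copy of every training window is appended, and the function name and `seed` parameter are hypothetical.

```python
import numpy as np

def augment_with_noise(xs: np.ndarray, ys: np.ndarray, sigma: float = 0.01, seed: int = 0):
    """Append one copy of each training window perturbed by N(0, sigma^2) noise.

    Only the training set should be passed in; test windows stay untouched.
    """
    rng = np.random.default_rng(seed)
    noisy = xs + rng.normal(0.0, sigma, size=xs.shape)
    return np.concatenate([xs, noisy]), np.concatenate([ys, ys])
```

With inputs already scaled to [−1, 1], a standard deviation of 0.01 is a small perturbation relative to the signal range.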

4.3. Experimental Environment

The experiments in this study were conducted on a platform equipped with an NVIDIA GeForce RTX 4050 Laptop GPU and an Intel Core i7-13700H CPU, with GPU computation accelerated through CUDA 11.8. At the software level, the model was implemented in the PyTorch 2.2.0 deep learning framework, which supports dynamic computation graphs and mixed-precision training, running on Python 3.11. The experimental environment configuration is detailed in Table 9.

The hyperparameter configuration for this experiment is detailed in Table 10. Parameters are updated with the Adam optimizer for 400 training epochs with a fixed batch size of 300 and a learning rate of 0.001. The cross-entropy loss is used to minimize the discrepancy between the predicted class probability distribution and the true labels. This configuration was chosen empirically to balance training efficiency and convergence stability at a reasonable computational cost.
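A minimal PyTorch training loop with these hyperparameters (Adam, learning rate 0.001, batch size 300, 400 epochs, cross-entropy loss) might look like the following. It is a sketch under stated assumptions: the `train` helper is hypothetical, the data are assumed to fit in memory as tensors, and device placement, mixed precision, and validation are omitted.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

def train(model: nn.Module, xs: torch.Tensor, ys: torch.Tensor,
          epochs: int = 400, batch_size: int = 300, lr: float = 1e-3) -> nn.Module:
    """Adam + cross-entropy training loop using the hyperparameters listed above."""
    loader = DataLoader(TensorDataset(xs, ys), batch_size=batch_size, shuffle=True)
    opt = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()  # expects raw logits and integer class labels
    model.train()
    for _ in range(epochs):
        for xb, yb in loader:
            opt.zero_grad()
            loss = loss_fn(model(xb), yb)
            loss.backward()
            opt.step()
    return model
```

Because `nn.CrossEntropyLoss` applies log-softmax internally, the model's final layer should output unnormalized logits.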

Table 11 details the overall architectural configuration of the model. The backbone is a hierarchical feature processor integrating a TCN, a Res-BiGRU, and an attention mechanism.

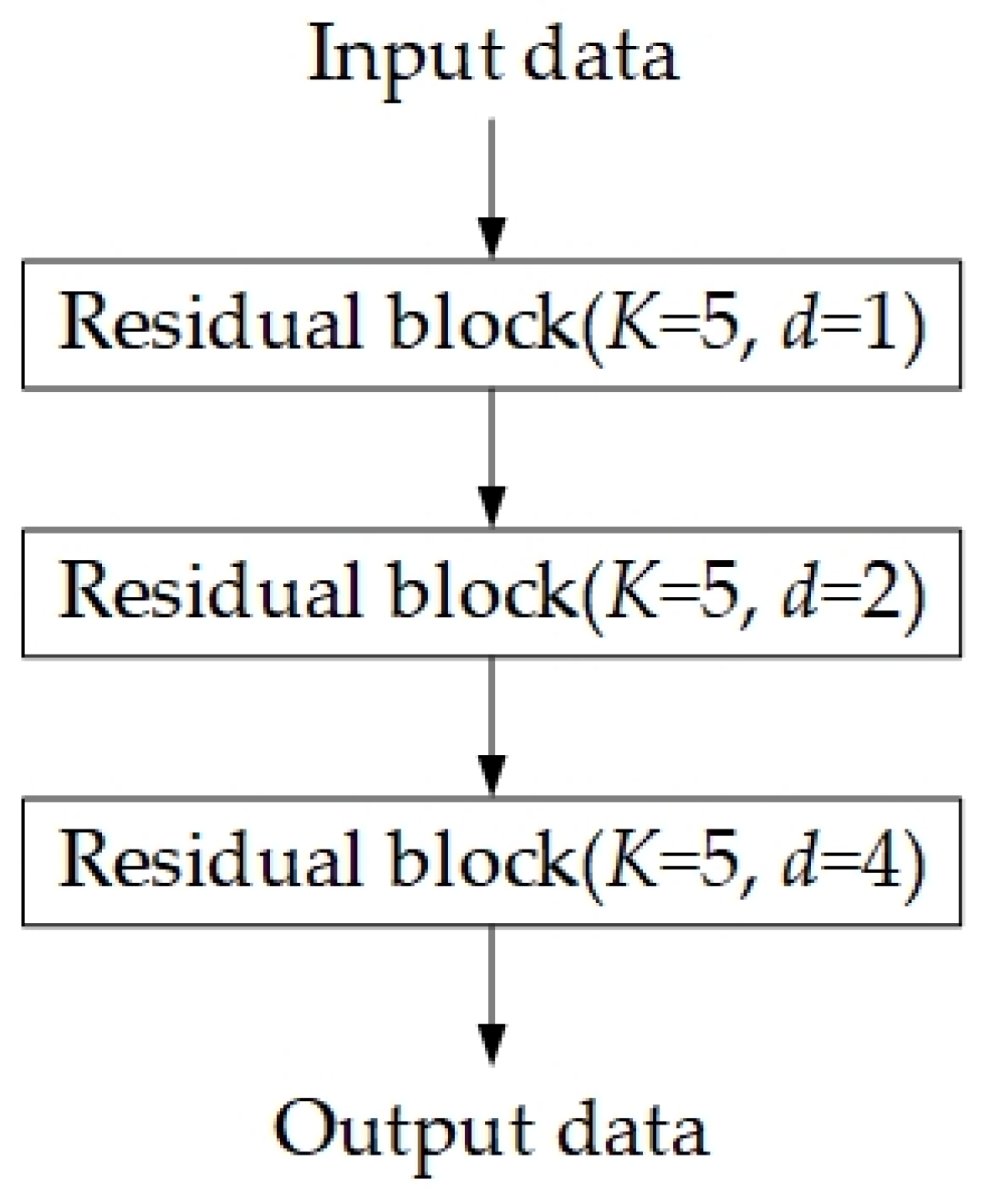

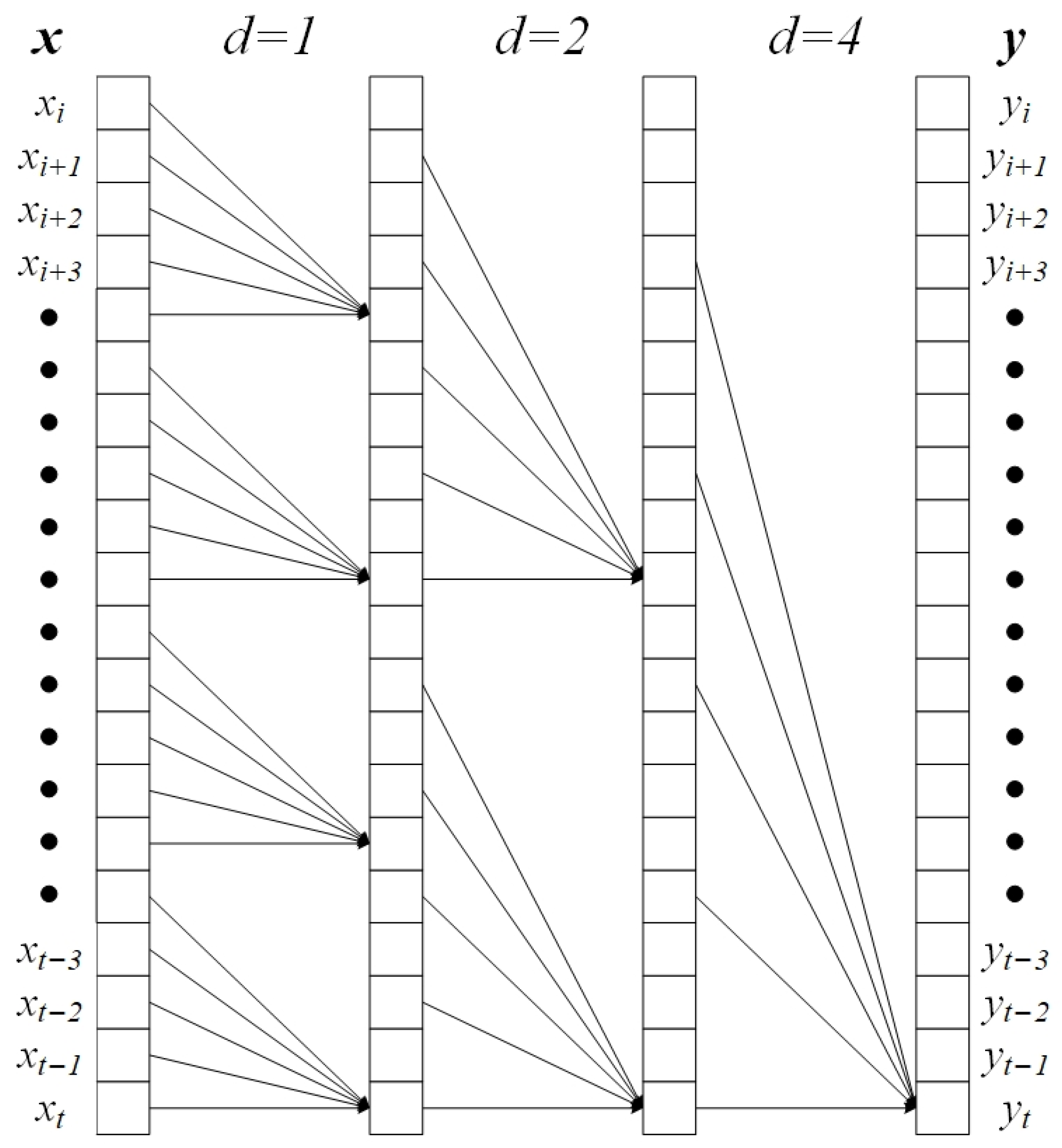

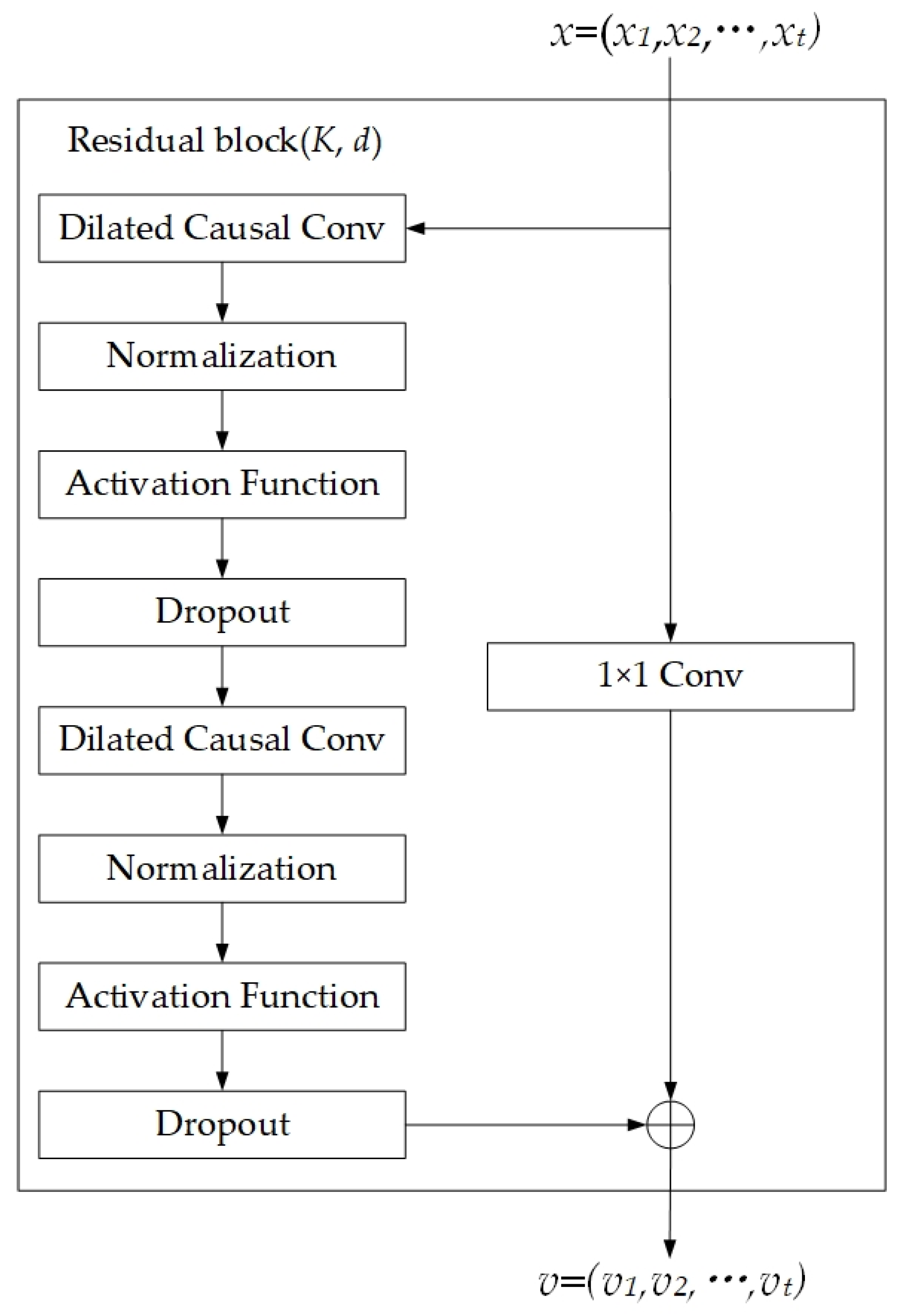

Input data first traverses a dilated convolutional stack with three residual TCN blocks. Each block employs 1D causal convolutions with 64 channels, kernel size = 5, and dilation factors sequentially set to 1, 2, and 4. The modified Swish activation function replaces conventional ReLU to enhance nonlinear representational capacity, supplemented with inter-layer batch normalization and regularization strategies.
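The TCN stack described above can be sketched in PyTorch as three residual blocks of causal, dilated 1D convolutions (64 channels, kernel size 5, dilations 1, 2, 4). Several details are assumptions not fixed by the text: each block holds two convolutions, standard Swish (`x * sigmoid(x)`, available as `F.silu`) stands in for the paper's "modified Swish", dropout (rate 0.1) is the regularizer, and a 1×1 convolution aligns channels on the residual path. Class and function names are hypothetical.

```python
import torch
from torch import nn
import torch.nn.functional as F

class CausalTCNBlock(nn.Module):
    """Residual block: two dilated causal Conv1d layers, each with BN, Swish and dropout."""

    def __init__(self, in_ch: int, out_ch: int = 64, k: int = 5, dilation: int = 1, p: float = 0.1):
        super().__init__()
        self.pad = (k - 1) * dilation  # pad on the left only, so no future samples leak in
        self.conv1 = nn.Conv1d(in_ch, out_ch, k, dilation=dilation)
        self.conv2 = nn.Conv1d(out_ch, out_ch, k, dilation=dilation)
        self.bn1, self.bn2 = nn.BatchNorm1d(out_ch), nn.BatchNorm1d(out_ch)
        self.drop = nn.Dropout(p)
        # 1x1 convolution matches channel counts on the residual path when needed
        self.skip = nn.Conv1d(in_ch, out_ch, 1) if in_ch != out_ch else nn.Identity()

    def forward(self, x):  # x: (batch, channels, time)
        h = self.drop(F.silu(self.bn1(self.conv1(F.pad(x, (self.pad, 0))))))
        h = self.drop(F.silu(self.bn2(self.conv2(F.pad(h, (self.pad, 0))))))
        return F.silu(h + self.skip(x))

def make_tcn(in_ch: int) -> nn.Sequential:
    """Three residual blocks with dilation factors 1, 2 and 4."""
    return nn.Sequential(CausalTCNBlock(in_ch, dilation=1),
                         CausalTCNBlock(64, dilation=2),
                         CausalTCNBlock(64, dilation=4))
```

Under these assumptions the stack sees 1 + 2 · (5 − 1) · (1 + 2 + 4) = 57 past samples per output step, and the sequence length is preserved, so the output can be passed directly to the recurrent stage.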

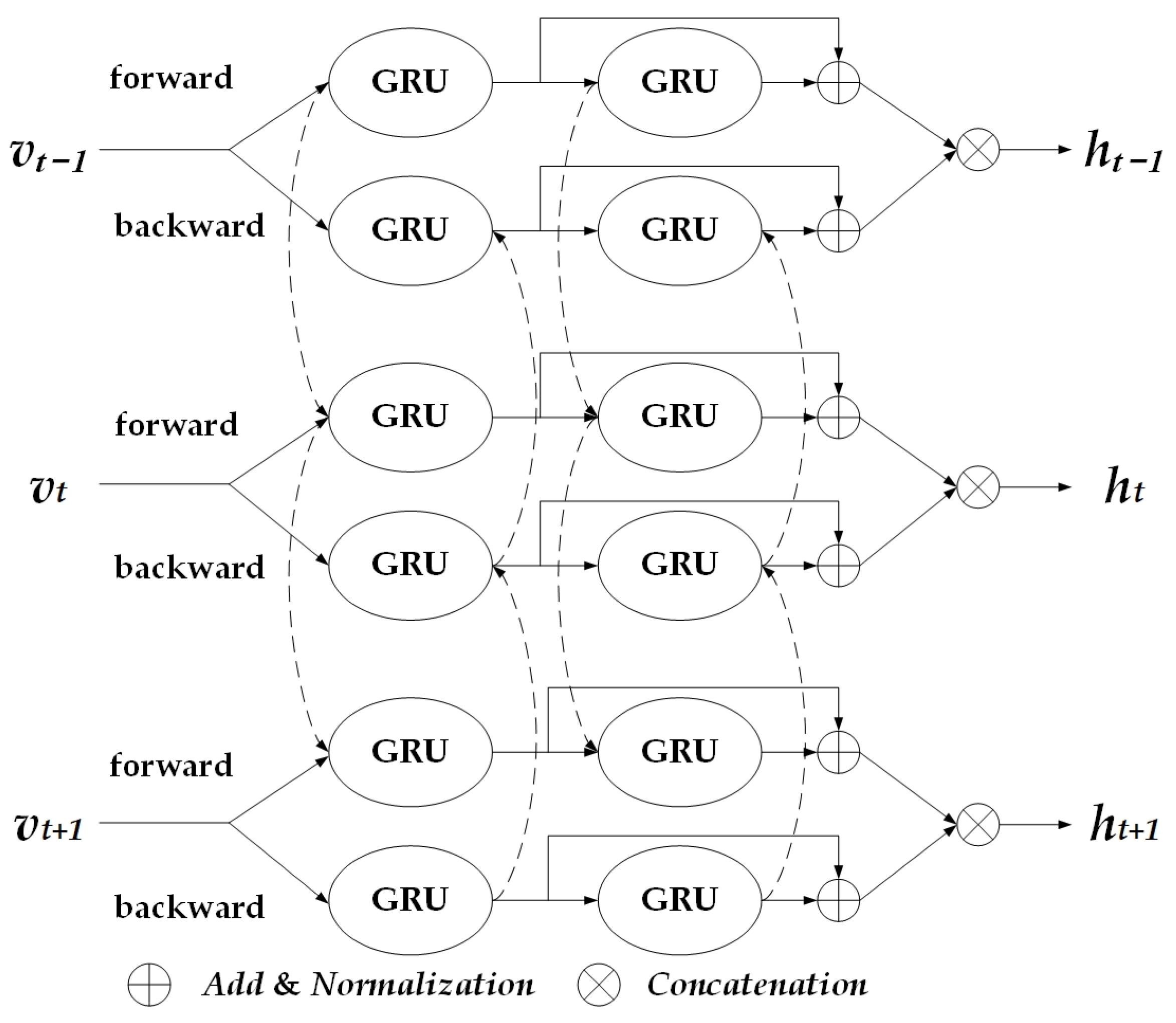

TCN output features are fed into a skip-connected bidirectional GRU network. Feature data passes through the first BiGRU layer, whose outputs serve as inputs to the second BiGRU layer. The outputs of both layers undergo residual summation, forming a gradient-stable context-aware temporal feature extraction module.
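The skip-connected recurrent stage can be sketched as two bidirectional `nn.GRU` layers whose outputs are summed. The hidden size of 64 per direction is a hypothetical choice (the actual value is in Table 11), and the TCN output of shape (batch, channels, time) is assumed to be transposed to (batch, time, channels) before entering this module.

```python
import torch
from torch import nn

class ResBiGRU(nn.Module):
    """Two stacked bidirectional GRU layers with residual summation of their outputs."""

    def __init__(self, in_dim: int = 64, hidden: int = 64):
        super().__init__()
        self.gru1 = nn.GRU(in_dim, hidden, batch_first=True, bidirectional=True)
        self.gru2 = nn.GRU(2 * hidden, hidden, batch_first=True, bidirectional=True)

    def forward(self, x):  # x: (batch, time, features), e.g. tcn_out.transpose(1, 2)
        h1, _ = self.gru1(x)   # (batch, time, 2 * hidden)
        h2, _ = self.gru2(h1)  # (batch, time, 2 * hidden)
        return h1 + h2         # residual summation of both layers' outputs
```

Summing works because both layers emit 2 × hidden features per timestep; the shortcut gives gradients a direct path around the second layer.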

In the attention layer, an adaptive attention mechanism computes a normalized importance weight for each timestep and uses these weights to fuse the features dynamically. The classifier adopts a three-stage dimensionality reduction framework, with each fully connected layer followed by a LeakyReLU activation (negative slope: 0.01), which keeps a small gradient flowing for negative inputs.
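One common way to realize per-timestep attention pooling and a reduction classifier is sketched below. The scoring function (a single linear layer followed by a softmax over time) is an assumed stand-in for the paper's "adaptively weighted attention", and the layer widths (dim → 64 → 32 → classes, with the last layer emitting logits) are hypothetical; the text fixes only the three stages and the 0.01 negative slope.

```python
import torch
from torch import nn

class AttentionPool(nn.Module):
    """Score each timestep, normalize with softmax over time, return the weighted sum."""

    def __init__(self, dim: int):
        super().__init__()
        self.score = nn.Linear(dim, 1)

    def forward(self, h):  # h: (batch, time, dim)
        w = torch.softmax(self.score(h).squeeze(-1), dim=1)  # (batch, time), each row sums to 1
        return (w.unsqueeze(-1) * h).sum(dim=1), w           # pooled (batch, dim) and weights

def make_classifier(dim: int, n_classes: int) -> nn.Sequential:
    """Three fully connected stages that shrink the feature size; the last emits logits."""
    return nn.Sequential(
        nn.Linear(dim, 64), nn.LeakyReLU(0.01),
        nn.Linear(64, 32), nn.LeakyReLU(0.01),
        nn.Linear(32, n_classes),
    )
```

Returning the weights alongside the pooled vector makes it easy to inspect which timesteps the model relies on.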

4.4. Evaluation Metrics

For classification tasks, the metrics used to evaluate model performance include accuracy, precision, recall, and F1 score.

Accuracy measures the proportion of correctly classified instances among all instances, with higher values indicating better overall performance. Precision is the ratio of true positives to all predicted positives for a given class, reflecting how exact its predictions are. Recall is the proportion of actual positives in that class that are correctly identified, indicating coverage completeness. The F1 score, defined as the harmonic mean of precision and recall, evaluates the balance between the two for each class.

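The mathematical definitions of these metrics are given in Equations (13)–(16) [50].

$$\mathrm{Accuracy}=\frac{\sum_{i=1}^{C} TP_i}{N} \tag{13}$$

$$\mathrm{Precision}=\sum_{i=1}^{C}\frac{N_i}{N}\,P_i,\qquad P_i=\frac{TP_i}{TP_i+FP_i} \tag{14}$$

$$\mathrm{Recall}=\sum_{i=1}^{C}\frac{N_i}{N}\,R_i,\qquad R_i=\frac{TP_i}{TP_i+FN_i} \tag{15}$$

$$\mathrm{F1}=\sum_{i=1}^{C}\frac{N_i}{N}\cdot\frac{2P_iR_i}{P_i+R_i} \tag{16}$$

Here, $C$ denotes the total number of classes, $TP_i$ denotes the number of samples correctly predicted as class $i$, $N$ is the total sample count, $N_i$ denotes the true sample count of class $i$, $FP_i$ denotes samples misclassified as class $i$ but belonging to other classes, and $FN_i$ denotes samples truly belonging to class $i$ but mispredicted as other classes. $P_i$ and $R_i$ denote the precision and recall for class $i$, respectively.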
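These quantities can all be read off a confusion matrix. The sketch below assumes support-weighted averaging over classes (each class weighted by its true sample count $N_i/N$), which is an interpretation: it is consistent with the reported recall equalling accuracy on every dataset, because $\sum_i (N_i/N)\,TP_i/N_i = \sum_i TP_i/N$. The function name is hypothetical.

```python
import numpy as np

def weighted_metrics(cm: np.ndarray):
    """Accuracy and support-weighted precision, recall, F1 from a confusion matrix.

    cm[i, j] counts samples whose true class is i and predicted class is j.
    """
    tp = np.diag(cm).astype(float)
    support = cm.sum(axis=1)    # N_i: true samples per class (TP_i + FN_i)
    predicted = cm.sum(axis=0)  # TP_i + FP_i
    n = cm.sum()
    p = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    r = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    f1 = np.divide(2 * p * r, p + r, out=np.zeros_like(tp), where=(p + r) > 0)
    w = support / n             # class weights N_i / N
    return tp.sum() / n, (w * p).sum(), (w * r).sum(), (w * f1).sum()
```

scikit-learn's `precision_recall_fscore_support(..., average="weighted")` computes the same weighted quantities from label arrays.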

4.5. Experimental Result

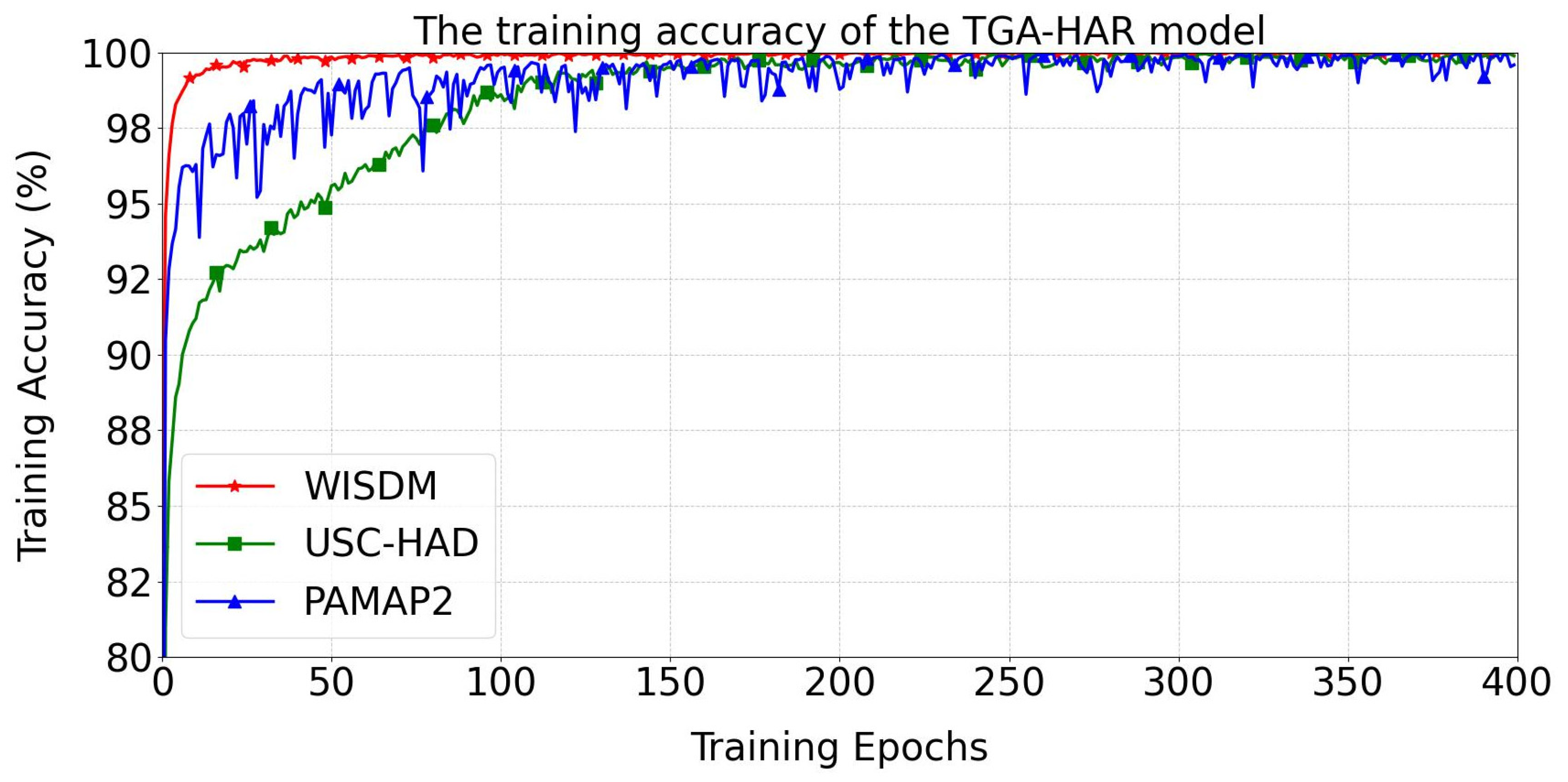

The training accuracy curves of the TGA-HAR model on the three human activity datasets (WISDM, USC-HAD, and PAMAP2) are presented in Figure 8. In the initial training phase, accuracy improves rapidly on all datasets. Training accuracy reaches 99% at Epoch 9 on WISDM, at Epoch 56 on PAMAP2, and at Epoch 109 on USC-HAD. After Epoch 150, training accuracy on both WISDM and USC-HAD stabilizes above 99%, whereas the curve for PAMAP2 fluctuates more.

4.5.1. Overall Performance Evaluation of TGA-HAR on Three Datasets

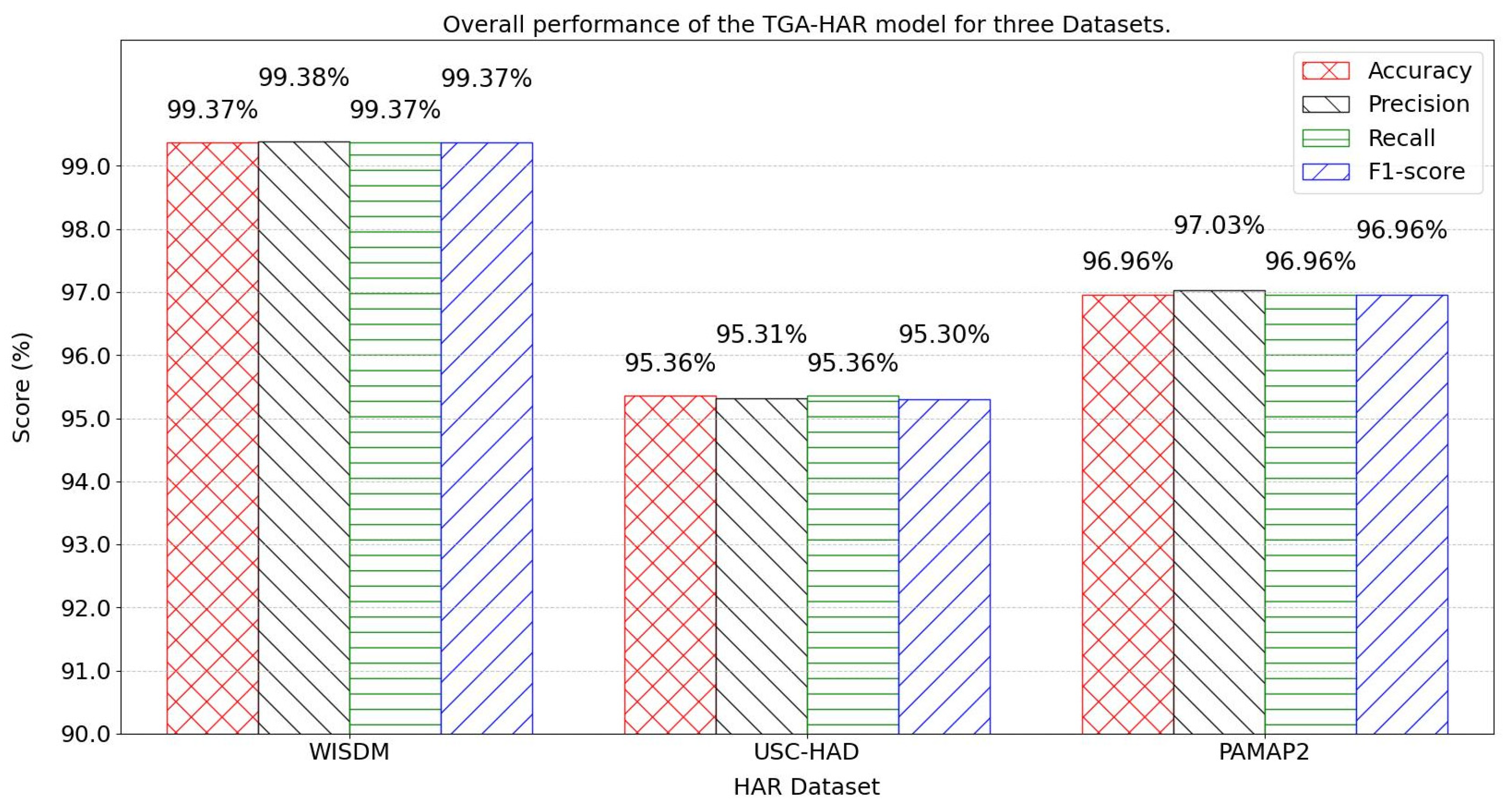

Figure 9 illustrates the model’s overall performance on the three datasets.

On the WISDM dataset, the model demonstrates exceptional generalization capability, achieving 99.37% accuracy, 99.38% precision, 99.37% recall, and 99.37% F1 score. This performance may be attributed to standardized activity types and simplified accelerometer-based data collection for basic motions.

For the USC-HAD dataset, experimental evaluation reveals robust recognition performance with 95.36% accuracy, 95.31% precision, 95.36% recall, and a corresponding 95.30% F1-score.

On the PAMAP2 dataset, which involves multi-sensor fusion in complex scenarios, the model attains 96.96% accuracy, 97.03% precision, 96.96% recall, and a 96.96% F1 score. These results indicate that the TGA-HAR model effectively handles the fusion of heterogeneous sensors (chest-, ankle-, and wrist-mounted monitors) in intricate activity recognition tasks.

4.5.2. Per-Activity Performance Evaluation of TGA-HAR on Three Datasets

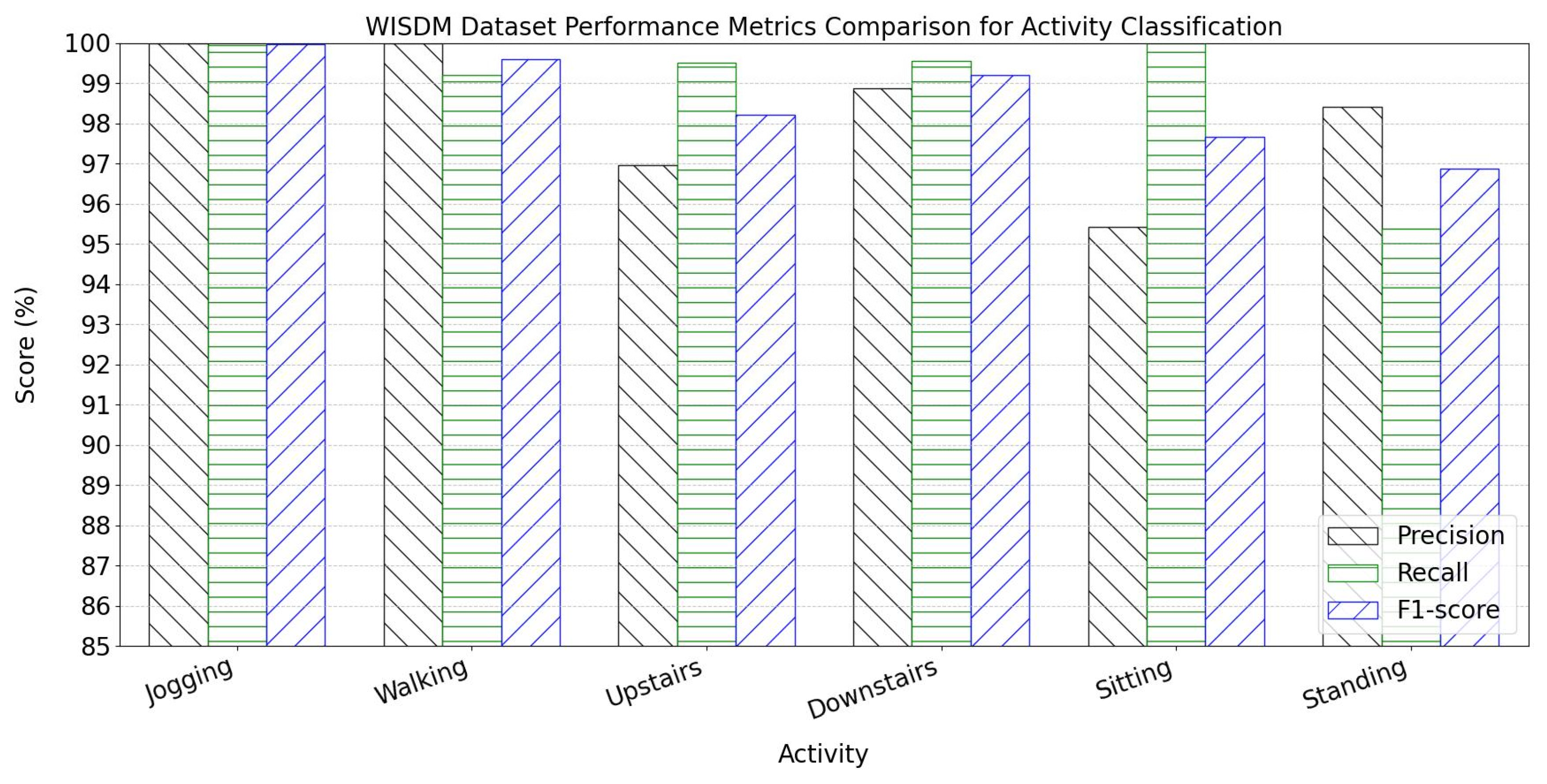

The per-activity classification metrics for the WISDM dataset are presented in Figure 10. Experiments on six human activities demonstrate outstanding performance across all three core evaluation metrics.

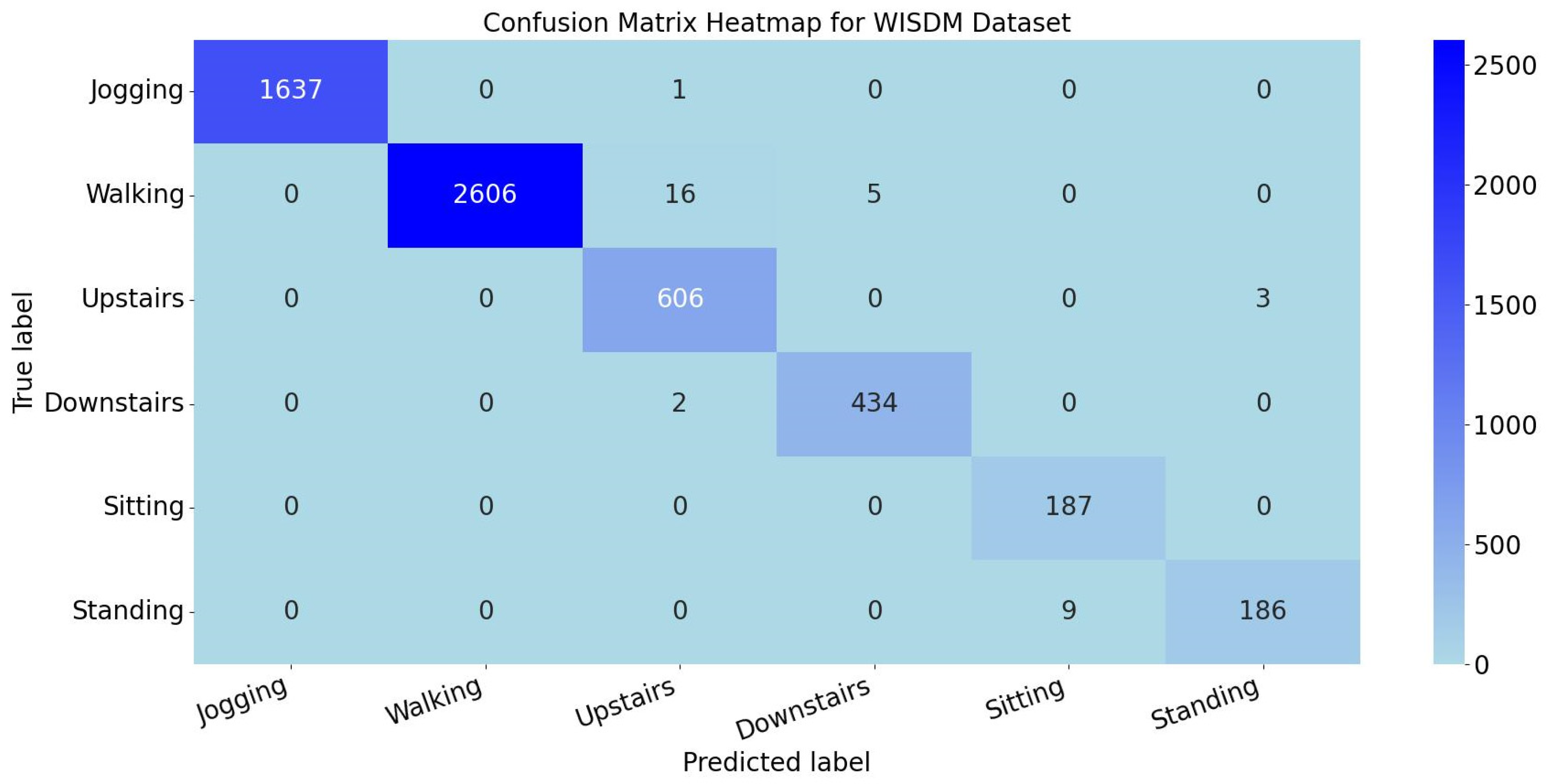

For all six activity classes in WISDM, precision, recall, and F1 score exceed 95%, confirming the model’s stable generalization capability for basic daily activities using triaxial acceleration data. The confusion matrix heatmap is shown in Figure 11.

The heatmap reveals that misclassified cases are minimal across all categories, with an average of 6 misclassifications per activity class. Both the per-activity metrics and heatmap demonstrate the model’s strong discriminative capability in recognizing fundamental motions.

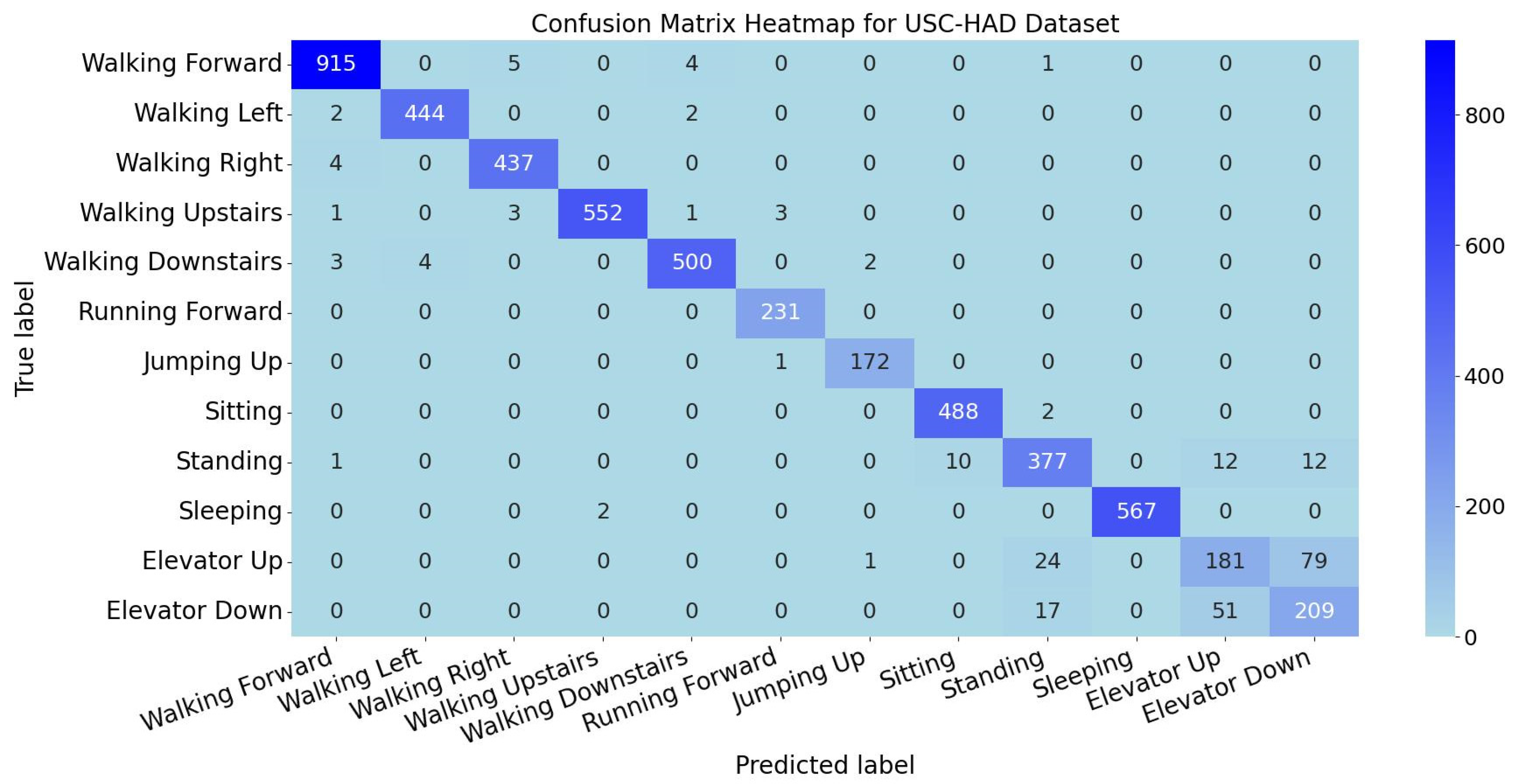

The per-activity classification metrics for the USC-HAD dataset are presented in Figure 12. The TGA-HAR model classifies most USC-HAD activities accurately and robustly. However, the metrics for the elevator activities (ascending and descending) are markedly lower, and those for standing are slightly below the other activities. This suggests that these near-static activities share similar features.

The confusion matrix heatmap for activity classification is shown in Figure 13. The diagonal values are high, but some localized inter-class confusion remains. Minor misclassifications among walking-related activities may stem from similar temporal features caused by subtle gait variations. Notably, elevator ascending, elevator descending, and standing are frequently confused with one another: many elevator instances are misclassified as standing, and vice versa. This occurs because, apart from the initial acceleration and final deceleration phases, elevator motion produces minimal variation in accelerometer and gyroscope signals, so the sensor readings resemble those of static standing. For the other activities, low off-diagonal values confirm effective class discrimination. Feature extraction for the elevator and standing scenarios therefore needs further optimization to improve precision.

The per-activity classification metrics for the PAMAP2 dataset are shown in Figure 14, while the confusion matrix heatmap for activity classification is displayed in Figure 15. Comprehensive analysis of precision, recall, F1 score, and confusion matrix demonstrates that the TGA-HAR model achieves exceptional classification performance for most activity categories.

Specifically, precision, recall, and F1 score remain above 92% for all 12 activity classes, reflecting high classification reliability. The confusion matrix further supports the model’s robustness: the diagonal elements (correctly classified samples) clearly dominate, and the off-diagonal misclassification counts remain low. This indicates strong discriminative power and generalization capability in multi-class recognition.

4.6. Comparative Experiment

To compare the performance of different models on the three datasets, this study designed comparative experiments. The baseline CNN model consists of six one-dimensional convolutional layers, each with a kernel size of 5 and 64 channels. Each convolution is followed, in order, by batch normalization, an activation function, and Dropout regularization. The Res-CNN model modifies this CNN architecture by adding a residual connection after every two convolutional layers to alleviate the vanishing gradient problem in deep networks. The CNN-LSTM model adopts a hybrid architecture that cascades two unidirectional LSTM layers, each with 64 hidden units, after the CNN feature extraction module to further extract sequential features. The results of the comparative experiments are presented in Table 12.
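The baseline CNN described above can be sketched in PyTorch as six Conv1d layers (kernel size 5, 64 channels), each followed by batch normalization, an activation, and Dropout. ReLU as the activation, a dropout rate of 0.2, "same" padding, and a global-average-pooling plus linear head are assumptions not specified in the text; the function name is hypothetical.

```python
import torch
from torch import nn

def make_cnn_baseline(in_ch: int, n_classes: int, p: float = 0.2) -> nn.Sequential:
    """Six Conv1d(k=5, 64 ch) layers, each followed by BatchNorm, ReLU and Dropout."""
    layers, ch = [], in_ch
    for _ in range(6):
        layers += [nn.Conv1d(ch, 64, 5, padding=2), nn.BatchNorm1d(64), nn.ReLU(), nn.Dropout(p)]
        ch = 64
    # Assumed head: average over time, then a linear layer producing class logits
    layers += [nn.AdaptiveAvgPool1d(1), nn.Flatten(), nn.Linear(64, n_classes)]
    return nn.Sequential(*layers)
```

Res-CNN would add a shortcut around every pair of convolutions, and CNN-LSTM would replace the pooling head with two 64-unit LSTM layers over the convolutional features.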

On the WISDM dataset, the CNN model achieves an accuracy of 96.80% and an F1 score of 96.97%. The Res-CNN model improves these metrics to 96.98% (accuracy) and 97.04% (F1 score). The CNN-LSTM model reaches 98.66% for accuracy and 98.67% for F1 score. The TGA-HAR model (This Study) performs the best, with both accuracy and F1 score at 99.37%. On the USC-HAD dataset, the CNN model yields an accuracy of 88.95% and an F1 score of 89.02%. The Res-CNN model enhances these to 90.73% (accuracy) and 90.66% (F1 score), and the CNN-LSTM model achieves an accuracy of 92.31% with an F1 score of 92.36%. The TGA-HAR model (This Study) leads with an accuracy of 95.36% and an F1 score of 95.30%. For the PAMAP2 dataset, the CNN model obtains an accuracy of 92.21% and an F1 score of 92.24%. The Res-CNN model improves these values to 92.78% (accuracy) and 92.81% (F1 score), respectively, and the CNN-LSTM model further increases them to 94.11% (accuracy) and 94.08% (F1 score). The TGA-HAR model (This Study) again demonstrates the best performance, with both accuracy and F1 score at 96.96%.

In this paper, TGA-HAR adopts a LOSO-like partitioning strategy (i.e., test users are excluded from the training set), whereas TCN-Attention-HAR uses a random 8:2 partition of the entire sample set. Across the datasets, TGA-HAR slightly outperforms TCN-Attention-HAR on WISDM, while on USC-HAD and PAMAP2 it is slightly inferior, with the gap remaining within 1.5 percentage points. This discrepancy stems from the different evaluation scenarios, and TGA-HAR’s architectural design further shapes how it adapts to each of them.

Specifically, compared with TCN-Attention-HAR, TGA-HAR incorporates Res-BiGRU, which endows it with advantages in global temporal modeling. The integration of Res-BiGRU enhances TGA-HAR’s capability to capture long-range and bidirectional temporal dependencies, a feature that helps extract common activity patterns across users (rather than relying on individual-specific traits).

Under random partitioning, the training and test sets share users, so a model can exploit known users’ individual characteristics for recognition, an advantage that TCN-Attention-HAR may benefit from more in this scenario. In contrast, LOSO-like partitioning enforces strict user isolation: TGA-HAR must learn shared activity features from 80% of users and apply them to unseen users, which directly exposes it to the feature shifts caused by individual differences in human activity. This setup evaluates inter-user generalization, which better reflects real-world applications but is a harder task. Although TGA-HAR’s Res-BiGRU supports generalization, the stricter LOSO-like constraint still produces marginal performance differences on some datasets; this reflects the inherent difficulty of the scenario rather than a flaw in TGA-HAR’s design.

Overall, the proposed TGA-HAR model outperforms the CNN, Res-CNN, and CNN-LSTM models in both accuracy and F1 score on all three datasets. In addition, CNN-LSTM performs better than Res-CNN and CNN, while Res-CNN achieves only a marginal improvement over CNN.

4.7. Ablation Experiment

4.7.1. Module Verification

Ablation studies constitute a core methodology for validating the effectiveness of deep learning models. This research employs a hierarchical decoupling strategy to investigate the functional contributions of individual components in temporal pattern recognition.

The TGA-HAR model adopts a multi-stage feature processing architecture:

Dilated convolutions extract multi-scale local features

Bidirectional recurrent structures capture temporal dependencies

Adaptive weighting mechanisms refine the feature space

To verify inter-module synergies, three controlled models are designed:

GRU-Only: This model retains solely the bidirectional GRU module.

TCN-Only: This model preserves only the Temporal Convolutional Network (TCN) module.

TCN-GRU-No-Attention: This model combines TCN and GRU without attention-based feature enhancement.

These controlled models quantify component contributions across feature extraction, temporal propagation, and feature refinement stages by progressively reducing modules. Experiments are conducted on WISDM, USC-HAD, and PAMAP2 datasets under identical hyperparameters and environmental conditions as the full TGA-HAR model, with results detailed in Table 13.

In the relatively simple WISDM scenario, the single-module models (TCN-Only and GRU-Only) already capture temporal patterns reasonably well. TCN-Only achieves an accuracy of 96.70% and an F1 score of 96.77%, and GRU-Only attains an accuracy of 96.43% and an F1 score of 96.54%. TCN-GRU-No-Attention, which combines the local feature extraction of the TCN with the global temporal modeling of the GRU, reaches 99.02% for both accuracy and F1 score. Adding the attention mechanism improves performance further: the full TGA-HAR model (This Study) achieves 99.37% for both metrics, a gain of 0.35 percentage points over the non-attention variant.

In the more complex USC-HAD scenario with intricate activities, standalone models show clear limitations. TCN-Only, which extracts local features, achieves an accuracy of 91.22% and an F1 score of 91.26%. GRU-Only, which focuses on global temporal features, attains an accuracy of 91.47% and an F1 score of 91.50%. TCN-GRU-No-Attention partially alleviates these limitations through cross-scale feature fusion, improving accuracy by 1.67 percentage points and the F1 score by 1.62 percentage points relative to GRU-Only. Importantly, the attention mechanism in TGA-HAR substantially enhances fine-grained activity recognition through weighted feature allocation, increasing accuracy by a further 2.22 percentage points and the F1 score by 2.18 percentage points relative to TCN-GRU-No-Attention, for a final accuracy of 95.36% and F1 score of 95.30%.

For the multimodal, complex PAMAP2 scenario, the causal convolutions of TCN-Only effectively extract short-term temporal correlations from multi-sensor data, achieving an accuracy of 93.45% and an F1 score of 93.41%. GRU-Only captures long-term temporal dependencies from multi-sensor data, attaining an accuracy of 92.88% and an F1 score of 92.89%. By extracting features jointly with the TCN and GRU, TCN-GRU-No-Attention captures both short-term correlations and long-term dependencies, improving accuracy by 1.23 percentage points and the F1 score by 1.28 percentage points relative to TCN-Only. The attention layer in TGA-HAR dynamically weights the contribution of each timestep, enhancing the classification of complex activities: TGA-HAR achieves an accuracy of 96.96% and an F1 score of 96.96%, a 2.28 percentage point improvement in accuracy over TCN-GRU-No-Attention.

Performance disparities reveal intrinsic links between component functions and data characteristics. TCN-GRU synergy addresses local-global feature integration challenges, while the attention mechanism enables adaptive optimization for complex scenarios. These elements collectively construct a hierarchical feature processing pipeline for robust activity classification.

4.7.2. The Impact of the Number of GRU Layers on Performance

To investigate the impact of the number of GRU layers on the performance of the TGA-HAR model, this experiment uses the PAMAP2 dataset. The convolutional structure of the TCN module, the computation of the attention mechanism, and the training hyperparameters are kept identical, while the number of GRU layers in the Res-BiGRU module is set to 2, 4, and 6 in turn to isolate the effect of layer count. After training, accuracy and F1 score are evaluated on the test set. The experimental results are shown in Table 14.

When the number of GRU layers is 2, both the model’s accuracy and F1 score reach 96.96%. When the number of layers increases to 4, both metrics rise synchronously to 97.34%, which is an increase of 0.38 percentage points compared to the 2-layer structure. When the number of layers is further increased to 6, the accuracy reaches 97.53%, and this represents an increase of 0.19 percentage points compared to the 4-layer structure. The F1 score in this case is 97.52%, which corresponds to an increase of 0.18 percentage points compared to the 4-layer structure.

From the overall trend, increasing the number of GRU layers gradually enhances the model’s ability to model temporal features. Deeper networks can capture more complex dependency relationships, so the model performance shows a continuous upward trend. However, the magnitude of performance improvement exhibits the characteristic of “diminishing marginal returns”: the performance gain is more significant when increasing from 2 layers to 4 layers, while the magnitude of improvement has narrowed when increasing from 4 layers to 6 layers. Although deeper networks can explore more complex patterns, they are accompanied by a series of issues, such as a sharp increase in the number of parameters, extended computational latency, and greater difficulty in training convergence.
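The growth in parameter count mentioned above can be quantified with PyTorch's documented `nn.GRU` weight layout: per layer and direction, `weight_ih` is 3H × I, `weight_hh` is 3H × H, and there are two bias vectors of length 3H, where layers after the first receive 2H inputs in the bidirectional case. The sketch assumes the layers are stacked like a single multi-layer bidirectional GRU, with hypothetical sizes of 64 input features and 64 hidden units per direction; the real sizes are given in Table 11.

```python
def bigru_params(in_dim: int, hidden: int, layers: int) -> int:
    """Parameter count of a stacked bidirectional GRU with PyTorch's weight layout."""
    total = 0
    for layer in range(layers):
        i = in_dim if layer == 0 else 2 * hidden  # later layers see both directions' outputs
        per_direction = 3 * hidden * i + 3 * hidden * hidden + 2 * 3 * hidden
        total += 2 * per_direction  # forward and backward directions
    return total

for n in (2, 4, 6):
    print(n, bigru_params(64, 64, n))
```

Under these assumptions each extra layer adds about 74.5 k parameters (124,416, 273,408, and 422,400 parameters for 2, 4, and 6 layers), and because each GRU layer processes the sequence step by step, latency also grows with depth.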

The experimental hardware platform in this study is an NVIDIA GeForce RTX 4050 Laptop GPU with 6 GB of VRAM (Video Random Access Memory). Experiments show that although this platform can train and run the 4-layer and 6-layer GRU (Gated Recurrent Unit) models, their recognition speed degrades significantly. Human activity recognition tasks typically require real-time response (e.g., instant judgments in wearable-device or surveillance scenarios), and excessively slow recognition would undermine the practical value of the model. Weighing performance against real-time requirements, this study provisionally adopts the 2-layer GRU structure for the human activity recognition task. With higher-performance hardware (such as high-VRAM GPUs like the RTX 3090/4090, or multi-GPU parallel setups), future work could verify the actual performance of deeper GRU structures and further exploit the model’s potential for temporal feature modeling.

4.8. Evaluation of the TGA-HAR Model in Real-World Environments

Datasets collected in the aforementioned laboratory-controlled environments fail to reproduce issues such as sensor noise, natural interruptions of activities, and variations in device wearing that occur in real-world scenarios. This results in a potential disconnect between the evaluation of model performance and its practical applications.

To address this issue, this subsection adopts the Real-World IDLab Dataset constructed by Stojchevska [51] as the benchmark for evaluation in real-world environments. Because its longitudinal data were collected under uncontrolled conditions, this dataset closely reflects the natural state of human daily activities and thus provides a sound basis for evaluating model generalization.

The Real-World IDLab Dataset employs the Empatica E4 wristband as its data collection device, primarily using data from the device’s triaxial accelerometer (sampling rate: 32 Hz). Formal data collection involved 18 participants (aged 22–45 years; 5 females and 13 males), and no researchers were present to observe or monitor the collection process. Participants were free to choose their activity settings (e.g., home, office, outdoor environments) and how they performed activities according to their daily routines. This setup naturally captures realistic characteristics such as activity interruptions (e.g., waiting for a red light while walking) and speed variations (e.g., slowing down to avoid pedestrians while cycling), which contrast sharply with standardized activity scenarios.

The Real-World IDLab Dataset focuses on 5 categories of core daily activities (Computer Table, Cycling, Running, Standing Still, and Walking). To enhance label quality, it adopts two strategies: model-assisted annotation (pushing activity predictions every 5 min for participants to confirm or correct) and location backtracking (allowing verification of the start and end positions of activity periods). Additionally, targeted cleaning is performed on overlapping labels—for instance, when two mutually exclusive activities are labeled for the same time period, the time boundaries are adjusted or redundant labels are removed. In the data preprocessing stage, the accelerometer signals are scaled to the range of −2 g to +2 g.

The version of the dataset we downloaded [52] contains data from 15 participants. Following [51], we segmented the data using a 12 s sliding window with a 50% overlap, yielding 3 × 384 matrix samples (3 axes × 384 time points at 32 Hz). After window segmentation, the number of samples for each of the 5 activities per participant is shown in Table 15.
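The window sizes used in this section follow from window length = sampling rate × duration and stride = length × (1 − overlap). The hypothetical helpers below reproduce that arithmetic and count how many complete windows fit in a recording; the PAMAP2 case treats the downsampled rate as exactly 33 Hz.

```python
def window_geometry(rate_hz: float, seconds: float, overlap: float):
    """Window length and stride in samples for a sampling rate, duration and overlap."""
    win = round(rate_hz * seconds)
    stride = int(win * (1 - overlap))  # floor, e.g. 169 * 0.5 -> 84
    return win, stride

def n_windows(n_samples: int, win: int, stride: int) -> int:
    """Number of complete windows in a recording of n_samples."""
    return 0 if n_samples < win else (n_samples - win) // stride + 1
```

For example, a one-minute IDLab recording (1920 samples at 32 Hz) yields nine 384-sample windows with a stride of 192.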

Data from three participants (participant_7, participant_9, and participant_16) were selected as the test set, and data from the remaining participants were used as the training set. This choice ensures that all 5 activities have a sufficient number of samples in both the training and test sets. The resulting training and test sample counts are shown in Table 16.

We adopt the same evaluation metrics as [51]: F1-micro, F1-macro, and Balanced Accuracy. F1-micro measures overall classification performance by computing precision and recall globally, pooling the counts of all classes. F1-macro first computes precision and recall independently for each class and then averages the per-class F1 scores. Balanced Accuracy gauges how evenly a model recalls each class and is defined as the average recall over all classes. Their calculation formulas are presented in Equations (17)–(19).

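$$F1_{\text{micro}}=\frac{2P_{\text{micro}}R_{\text{micro}}}{P_{\text{micro}}+R_{\text{micro}}} \tag{17}$$

$$F1_{\text{macro}}=\frac{1}{C}\sum_{i=1}^{C}\frac{2P_iR_i}{P_i+R_i} \tag{18}$$

$$\text{Balanced Accuracy}=\frac{1}{C}\sum_{i=1}^{C}R_i \tag{19}$$

Here, $C$ denotes the total number of classes. $P_{\text{micro}}$ and $R_{\text{micro}}$ denote precision and recall at the global level, respectively. $P_i$ and $R_i$ denote precision and recall for the $i$-th class, respectively.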
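The three IDLab metrics can be computed from a confusion matrix as sketched below (the function name is hypothetical). Global precision and recall pool the per-class counts, as in Equation (17), whereas F1-macro and Balanced Accuracy average per-class scores with equal weight. In single-label multi-class classification every false positive for one class is a false negative for another, so F1-micro equals plain accuracy, while the two class-averaged metrics are far more sensitive to a weak minority class such as Standing Still.

```python
import numpy as np

def idlab_metrics(cm: np.ndarray):
    """F1-micro, F1-macro and balanced accuracy (cm[i, j]: true class i, predicted j)."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    p_micro = tp.sum() / (tp.sum() + fp.sum())  # global precision
    r_micro = tp.sum() / (tp.sum() + fn.sum())  # global recall
    f1_micro = 2 * p_micro * r_micro / (p_micro + r_micro)
    p = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    r = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    f1 = np.divide(2 * p * r, p + r, out=np.zeros_like(tp), where=(p + r) > 0)
    return f1_micro, f1.mean(), r.mean()
```

The same values are available in scikit-learn via `f1_score(..., average="micro")`, `f1_score(..., average="macro")`, and `balanced_accuracy_score`.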

Using the same experimental environment and hyperparameter settings as above, we trained the model and then tested it on the data of the 3 test participants. The results are presented in Table 17.

The heatmap of the confusion matrix for the TGA-HAR model on the test set of the Real-World IDLab Dataset is shown in Figure 16. The heatmap shows that the model’s classification performance varies markedly across activity categories. For Computer Table, Cycling, Running, and Walking, the model correctly identifies most samples (66,202 for Computer Table, 1080 for Cycling, 4095 for Running, and 7140 for Walking). However, only 19 Standing Still samples are correctly classified, indicating poor recognition of this category.

This performance discrepancy can be attributed to the interplay between the TGA-HAR architecture (TCN-ResBiGRU-Attention) and the inherent characteristics of each activity. Categories like Computer Table, Cycling, Running, and Walking possess distinct and rich temporal features (e.g., stable static patterns for Computer Table, periodic motion rhythms for Cycling and Running). The TCN with dilated convolutions effectively extracts multi-scale temporal features, the Res-BiGRU captures bidirectional temporal dependencies, and the attention mechanism enhances the weights of these discriminative features—thus enabling accurate classification. In contrast, Standing Still has extremely weak temporal dynamics, making its feature distinction from other static categories (e.g., Computer Table) subtle. TCN struggles to extract meaningful patterns from near-static data, Res-BiGRU’s temporal modeling capability is limited in the absence of sequential variation, and the attention mechanism fails to identify prominent feature weights due to insufficient discriminative signals. Additionally, Standing Still has a smaller sample size compared to other categories, leading to inadequate generalization of the model for this class.

Despite its limited performance on Standing Still, the TGA-HAR model shows clear strengths that follow from its TCN-ResBiGRU-Attention architecture. This design lets the model achieve high accuracy both on dynamic activities with rich temporal patterns (e.g., Cycling, Running, Walking) and on static activities with distinct motionless characteristics, such as Computer Table, which supports its robustness and adaptability in complex real-world HAR tasks.

However, the model’s weakness on Standing Still calls for targeted improvements. One option is data augmentation for the Standing Still class (e.g., adding noise-perturbed copies of existing samples, or resampling) to enlarge its training data and improve generalization. Another is to adjust the model architecture, for example by adding a lightweight branch dedicated to static features or by modifying the attention mechanism to give more weight to static feature patterns. These measures could improve the recognition of Standing Still while preserving the model’s strengths on the other categories.
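The augmentation option above (noise-perturbed samples plus resampling) can be sketched as oversampling the minority class with Gaussian jitter. This is a minimal illustration under stated assumptions: each window is a T x C list of sensor values, the noise level `sigma` and the replication `factor` are hypothetical choices that would need tuning, and `oversample_with_jitter` is a hypothetical helper, not part of the TGA-HAR code. Augmentation should be applied to the training split only, so that no perturbed copy of a test window leaks into training.

```python
import random
from typing import List, Sequence, Tuple

Window = List[List[float]]  # T time steps x C sensor channels


def jitter(window: Sequence[Sequence[float]], sigma: float,
           rng: random.Random) -> Window:
    """Return a copy of the window with zero-mean Gaussian noise on every value."""
    return [[x + rng.gauss(0.0, sigma) for x in step] for step in window]


def oversample_with_jitter(windows: Sequence[Sequence[Sequence[float]]],
                           labels: Sequence[str], target_label: str,
                           factor: int, sigma: float = 0.01,
                           seed: int = 0) -> Tuple[List[Window], List[str]]:
    """Keep all originals and append (factor - 1) jittered copies of
    every window whose label equals target_label."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    rng = random.Random(seed)  # fixed seed for reproducible augmentation
    out_x = [[list(step) for step in w] for w in windows]
    out_y = list(labels)
    minority = [w for w, y in zip(windows, labels) if y == target_label]
    for _ in range(factor - 1):
        for w in minority:
            out_x.append(jitter(w, sigma, rng))
            out_y.append(target_label)
    return out_x, out_y
```

For example, `oversample_with_jitter(train_x, train_y, "Standing Still", factor=4)` would quadruple that class in a hypothetical training set while leaving the other classes unchanged.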

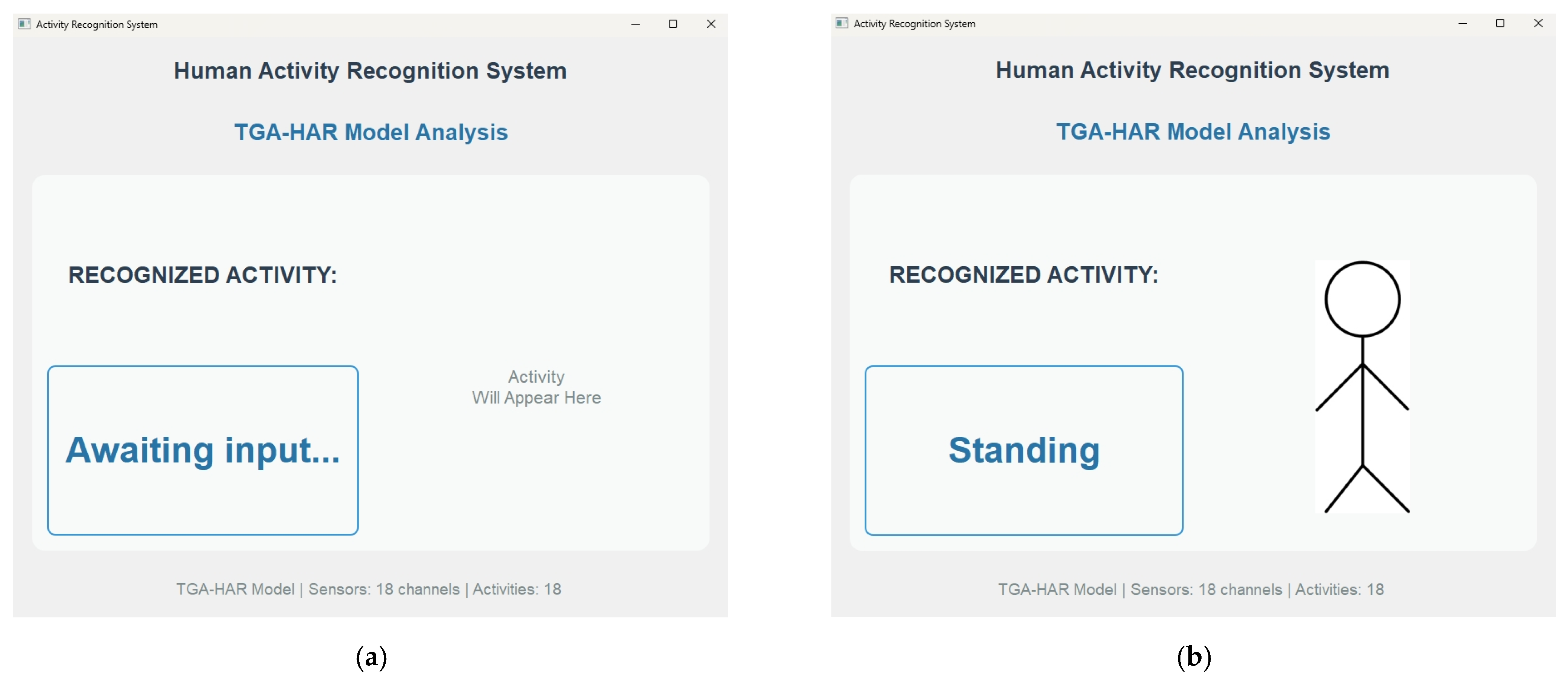

4.9. Activity Recognition System

This study aims to recognize complex activities using three wireless IMUs worn by the test subjects. The activities correspond to those defined in the PAMAP2 dataset (detailed in Table 7). The sensors are positioned on the dominant wrist, the ankle, and the chest, with a sampling frequency of 100 Hz.

The recognition system processes 12-dimensional raw data comprising triaxial acceleration and angular velocity from the three IMUs. To demonstrate the experimental outcomes, we implemented a recognition system built on the TGA-HAR model, in which the raw data are preprocessed as described in Section 4.2 before being fed into the model.
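The exact preprocessing of Section 4.2 is not reproduced here. As a rough illustration of how a continuous multichannel stream is commonly turned into fixed-length model inputs in HAR pipelines, the sketch below cuts a T x C stream into overlapping windows. The window length (200 samples, i.e. 2 s at 100 Hz) and step (100 samples, i.e. 50 % overlap) are assumed values for illustration, not the settings used in this study, and `sliding_windows` is a hypothetical helper.

```python
from typing import List, Sequence


def sliding_windows(signal: Sequence[Sequence[float]], window: int,
                    step: int) -> List[List[List[float]]]:
    """Cut a (T x C) multichannel stream into overlapping (window x C) segments.

    window and step are in samples. Trailing samples that do not fill a
    complete window are dropped.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [
        [list(row) for row in signal[start:start + window]]
        for start in range(0, len(signal) - window + 1, step)
    ]


# 10 s of 12-channel data at 100 Hz -> 1000 samples (synthetic values).
stream = [[float(t)] * 12 for t in range(1000)]
segments = sliding_windows(stream, window=200, step=100)
print(len(segments), len(segments[0]), len(segments[0][0]))  # 9 200 12
```

The number of windows follows floor((T - window) / step) + 1, here floor(800 / 100) + 1 = 9.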

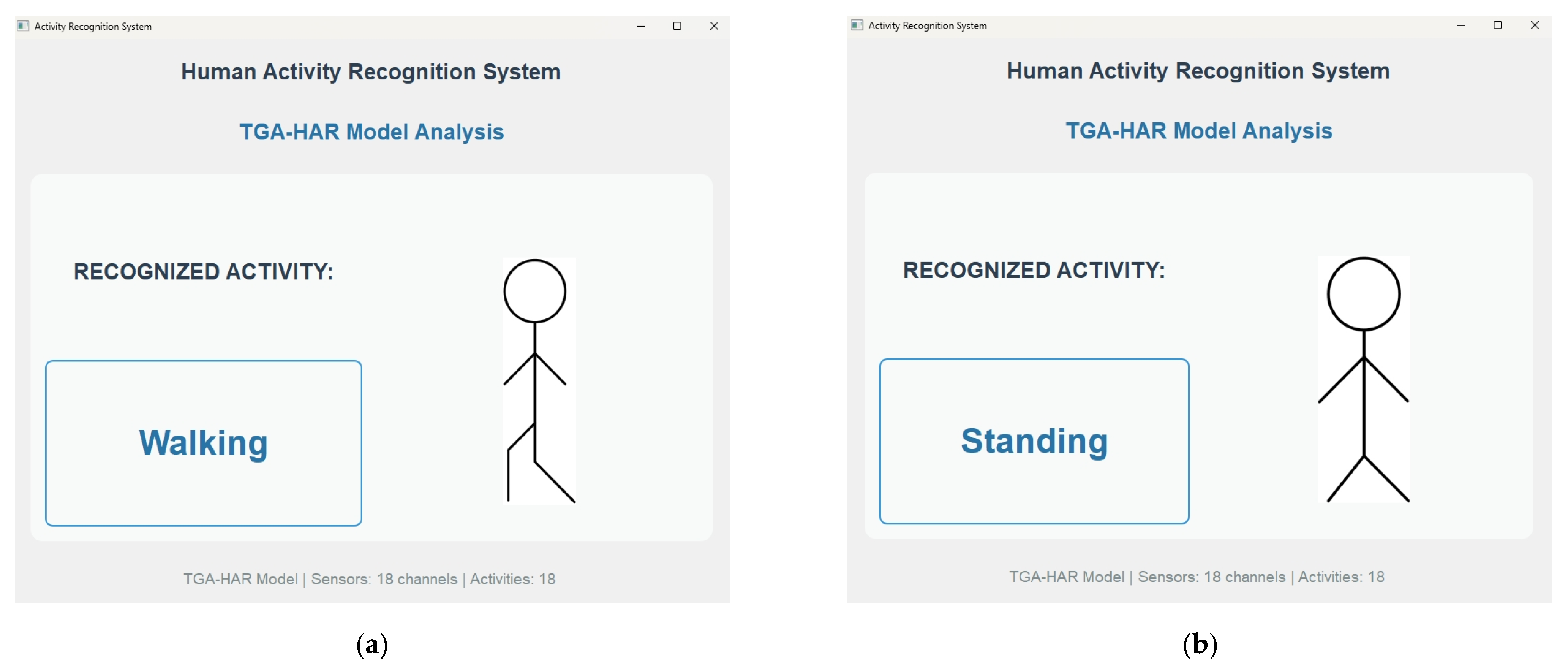

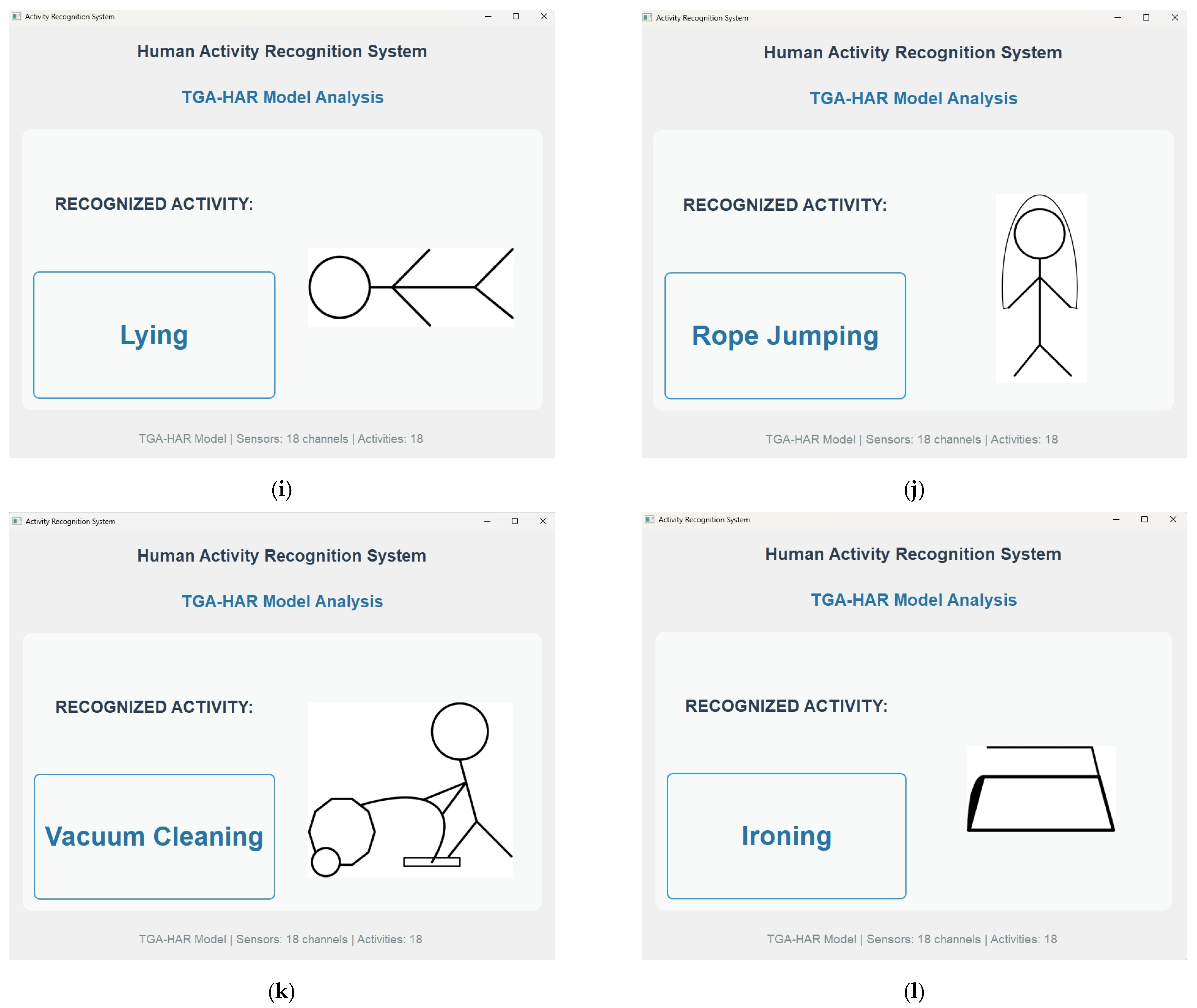

After activity recognition is complete, the system outputs the results shown in Figure 17. Figure 17a shows the standby state awaiting input, and Figure 17b shows recognized activities during operation. Recognition results for all 18 activities are presented in Figure A1.

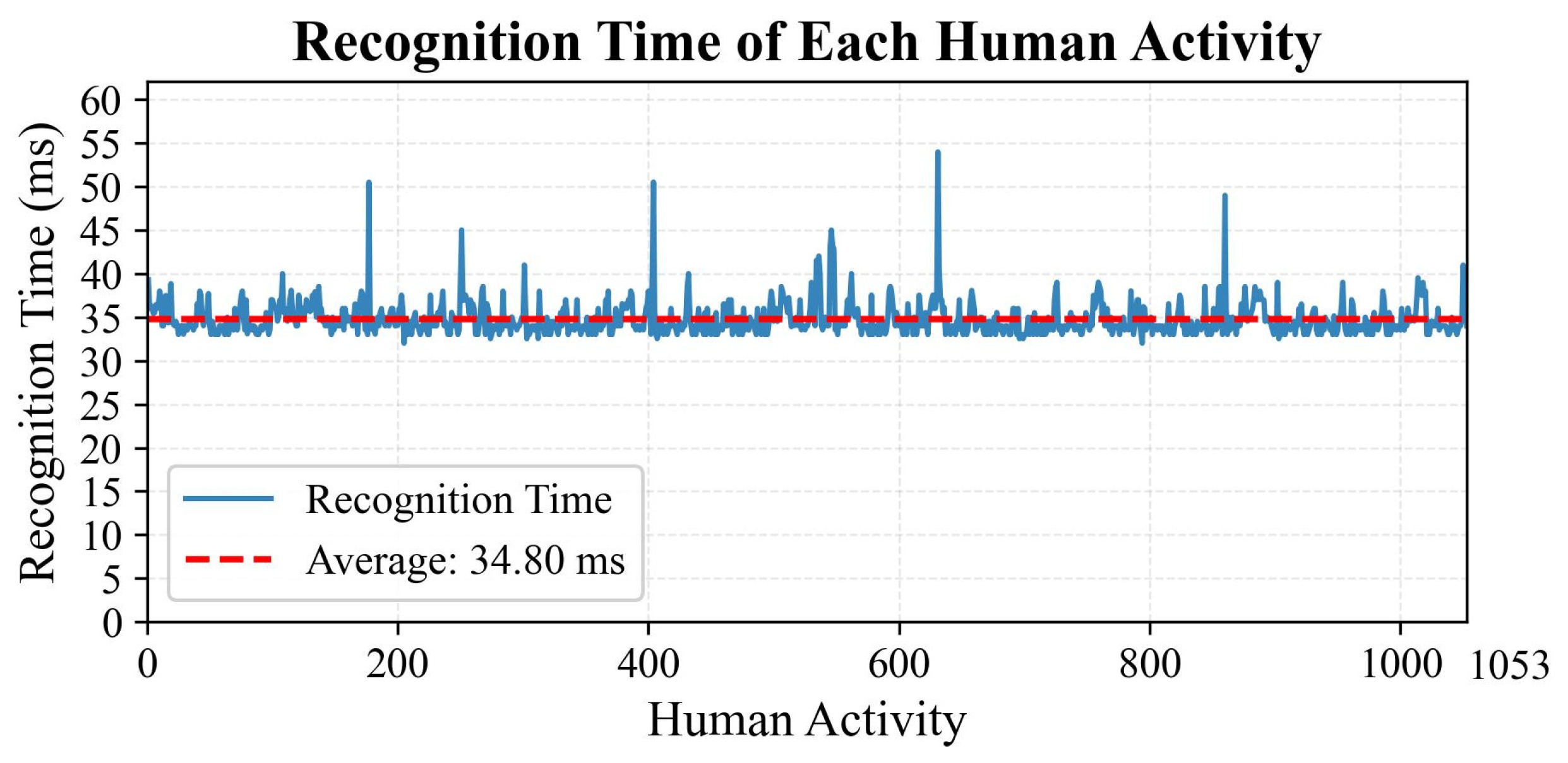

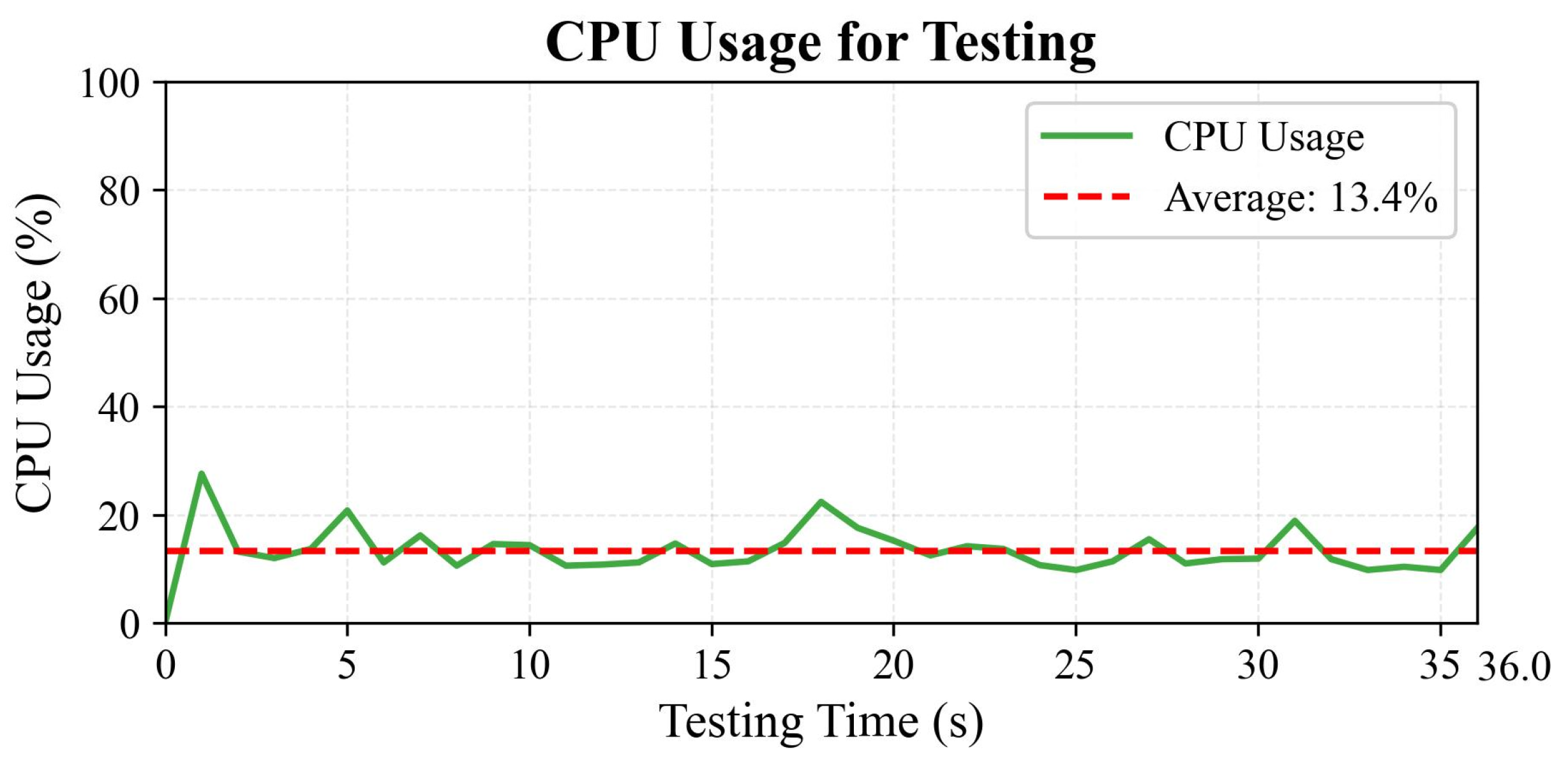

To quantify the computational efficiency of the TGA-HAR model, this study uses the test set of the PAMAP2 dataset as experimental samples. During the experiments, a millisecond-resolution timer records the recognition time of each sample to evaluate the model’s real-time inference performance, and CPU utilization is monitored at 1 s intervals throughout the recognition process.
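Per-sample latency of the kind described above can be measured with a monotonic high-resolution clock around each inference call. The sketch below is a minimal, framework-agnostic illustration: `predict` is a hypothetical stand-in for the model’s single-sample inference (for a PyTorch model it could wrap a forward pass under `torch.no_grad()`), and the optional `warmup` argument is an assumed design choice that lets one-off initialization cost be excluded from the timed runs.

```python
import time
from statistics import mean
from typing import Any, Callable, List, Sequence


def measure_latency_ms(predict: Callable[[Any], Any], samples: Sequence[Any],
                       warmup: int = 0) -> List[float]:
    """Return the wall-clock latency in milliseconds of predict(sample) per sample.

    time.perf_counter is monotonic and has sub-millisecond resolution.
    The first `warmup` samples are run once, untimed, before measuring.
    """
    for s in samples[:warmup]:
        predict(s)
    latencies = []
    for s in samples:
        t0 = time.perf_counter()
        predict(s)
        latencies.append((time.perf_counter() - t0) * 1000.0)
    return latencies


# Hypothetical dummy workload in place of a real model.
lat = measure_latency_ms(lambda x: sum(range(10_000)), list(range(20)), warmup=2)
print(f"{len(lat)} samples, mean {mean(lat):.3f} ms")
```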

Figure 18 shows the recognition time of the TGA-HAR model for each human activity sample in the PAMAP2 test set. The x-axis represents the 1053 test samples, and the y-axis denotes the inference latency per sample in milliseconds. A dashed horizontal line marks the average inference latency of 34.8 ms, and the solid line traces the per-sample latency during continuous activity recognition. Most samples fluctuate stably around the mean, indicating consistent computational efficiency. However, six samples (Nos. 177, 251, 404, 546, 631, and 860) show higher latencies (50.51, 45.02, 50.52, 45.00, 53.99, and 48.99 ms, respectively), which are likely attributable to transient resource contention during inference.
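Latency spikes like those listed above can be flagged automatically by comparing each sample against a multiple of the mean. The sketch below uses an arbitrary cutoff of 1.25 times the mean and reports 1-based sample indices; both the cutoff and the indexing convention are assumptions, and `flag_spikes` is a hypothetical helper. As a simple consistency check on the reported figures, 1053 samples × 34.8 ms ≈ 36.6 s, which is in line with the roughly 36 s span of the CPU recording in Figure 19.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def flag_spikes(latencies_ms: Sequence[float],
                ratio: float = 1.25) -> Tuple[float, List[Tuple[int, float]]]:
    """Return the mean latency and the (1-based index, latency) of every
    sample whose latency exceeds ratio * mean (illustrative cutoff)."""
    avg = mean(latencies_ms)
    spikes = [(i + 1, v) for i, v in enumerate(latencies_ms) if v > ratio * avg]
    return avg, spikes


# Synthetic series: flat at 34 ms with two injected spikes (not measured data).
lat = [34.0] * 100
lat[9], lat[49] = 50.0, 54.0
avg, spikes = flag_spikes(lat)
print(round(avg, 2), spikes)  # 34.36 [(10, 50.0), (50, 54.0)]

# Total inference time implied by the reported mean latency, in seconds.
print(round(1053 * 34.8 / 1000, 1))  # 36.6
```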

Figure 19 shows the CPU utilization per second during TGA-HAR inference over the 1053 human activity samples, recorded from 0 to 36.0 s. The x-axis represents elapsed time in seconds, and the y-axis indicates CPU usage as a percentage. A dashed horizontal line marks the average CPU utilization of 13.4% over the test, and the solid line traces the actual second-by-second CPU usage while the PAMAP2 test samples are inferred one at a time. During the first 0–2 s, CPU usage peaks at 27.6% owing to model initialization and first-frame processing; thereafter, utilization exhibits sawtooth-like fluctuations. As documented in [53], PyTorch uses a decoupled architecture that separates control flow (Python) from data flow (C++ operators). Real-time CPU monitoring therefore likely captures the switching overhead between these layers, producing the observed fluctuations, a phenomenon consistent with the PyTorch training observations in [54].
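Monitoring CPU utilization at a fixed interval, as described above, can be sketched with the standard library alone. The class below is a minimal illustration under an explicit assumption: it measures the CPU share of the current process only, computed as CPU time consumed (`time.process_time`, summed over all threads) divided by elapsed wall-clock time, so values can exceed 100 % on multicore machines. System-wide figures, which may be what Figure 19 reports, would instead come from a tool such as `psutil.cpu_percent(interval=1.0)`. `CpuSampler` is a hypothetical name.

```python
import threading
import time
from typing import List


class CpuSampler:
    """Background sampler of this process's CPU utilization (%) at a fixed interval."""

    def __init__(self, interval_s: float = 1.0) -> None:
        self.interval_s = interval_s
        self.samples: List[float] = []
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self) -> None:
        last_cpu, last_wall = time.process_time(), time.perf_counter()
        # Event.wait returns False on timeout, True once stop has been set.
        while not self._stop.wait(self.interval_s):
            cpu, wall = time.process_time(), time.perf_counter()
            self.samples.append(100.0 * (cpu - last_cpu) / (wall - last_wall))
            last_cpu, last_wall = cpu, wall

    def __enter__(self) -> "CpuSampler":
        self._thread.start()
        return self

    def __exit__(self, *exc) -> bool:
        self._stop.set()
        self._thread.join()
        return False  # do not suppress exceptions
```

In use, the inference loop would run inside `with CpuSampler(interval_s=1.0) as sampler:`, after which `sampler.samples` holds one utilization value per elapsed interval; the mean of that list corresponds to the dashed average line in a plot like Figure 19.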