mmPhysio: Millimetre-Wave Radar for Precise Hop Assessment

Abstract

1. Introduction

2. Related Work

2.1. Physical Activity Monitoring

2.2. Physical Activity Monitoring Through mmWave Radar

3. Methodology

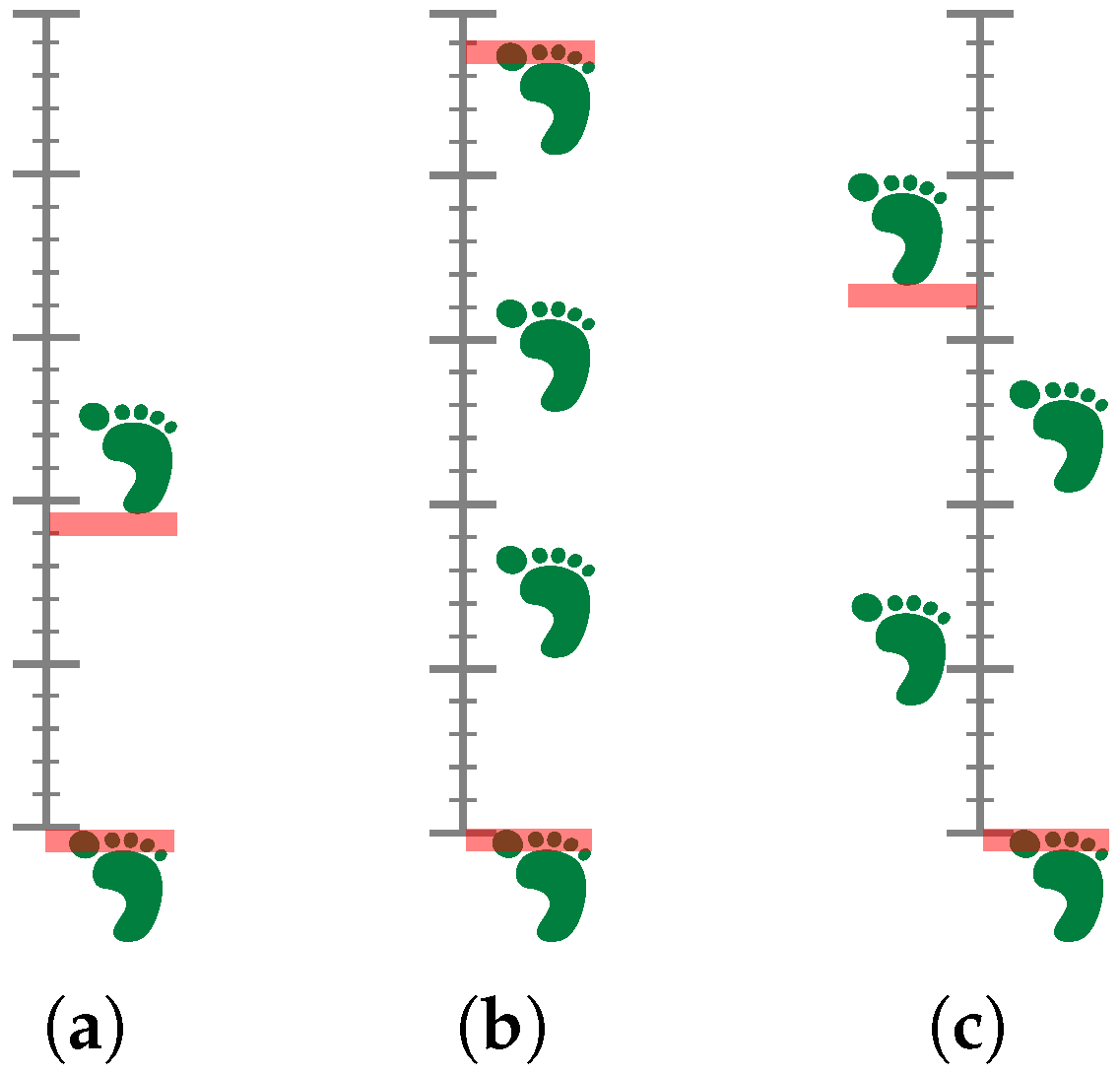

- Single Hop Test: The objective is to cover the maximum possible distance with a single-leg jump while maintaining balance and ensuring a firm landing. The distance is measured from the starting point to the heel of the landing leg.

- Triple Hop Test: The objective is to execute three consecutive hops on a single leg while maintaining balance and ensuring a firm landing. The distance travelled is measured from the starting point to the great toe of the landing leg.

- Crossover Hop Test: The objective is to execute three consecutive hops on a single leg, each covering the maximum possible distance, while maintaining balance and ensuring a firm landing. Each hop crosses a midline laterally, thereby incorporating side-to-side movement. The distance is measured from the starting line to the heel of the landing leg.

3.1. Experimental Setup

- An RGB camera is used to capture the positions of visual markers placed on the subjects’ legs. The camera is positioned at a height of m and a distance of 2 m from the subjects, ensuring a clear view of the markers during the tests.

- A mmWave radar is used to capture the motion of the subjects during the hop tests. The radar is positioned at a height of m and a distance of m from the subjects, similar to the camera setup.

3.1.1. Visual Marker System

3.1.2. Radar System

- Data Cube Formation: The radar captures raw ADC samples for each chirp and each TX-RX pair, forming a 3D data cube with dimensions corresponding to fast time (ADC samples per chirp), slow time (chirps per frame), and the spatial dimension (number of virtual antennas).

- Range Calculation: For each TX-RX pair, windowing and a fast Fourier transform (FFT) are applied over the ADC samples (fast time) to create the range spectrum of the scene.

- Static Clutter Removal: Next, an FFT is applied over the slow-time dimension (chirps) to extract the velocity information. Then, after averaging across chirps, a static clutter removal step is applied per range bin and antenna to eliminate static objects, removing zero-velocity points from the point cloud and from further processing.

- Range-Azimuth Estimation: The range-azimuth heatmap is generated by applying the Capon beamformer [37] with steering vectors generated from the azimuth-only transceiver pairs.

- Object Detection: Detection is performed with a two-pass constant false alarm rate (CFAR) algorithm applied to the range-azimuth heatmap. The first-pass CFAR returns a series of detections in the range domain for each angle bin, which are then confirmed by a second-pass CFAR-CASO (cell averaging smallest of) or a local peak search in the angle domain.

- Elevation Estimation: For each detected point, a new Capon beamforming step is applied. The strongest peak in the elevation spectrum is selected as the elevation angle of the detected point.

- Doppler Estimation: The velocity of each detected point is extracted by again applying Capon beamforming, this time over consecutive chirps in the radar cube.
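
The radar processing steps listed above, up to the range-azimuth heatmap, can be sketched on synthetic data as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the cube layout (chirps by virtual antennas by ADC samples), the half-wavelength uniform linear array, the diagonal loading, the simulated reflectors, and all function names are hypothetical, and picking the strongest heatmap cell stands in for the two-pass CFAR detector.

```python
import numpy as np

def range_fft(cube):
    """Windowed FFT over ADC samples (last axis) of a (chirps, antennas, samples) cube."""
    window = np.hanning(cube.shape[-1])
    return np.fft.fft(cube * window, axis=-1)

def remove_static_clutter(range_cube):
    """Subtract the mean across chirps for every antenna and range bin (zero-Doppler part)."""
    return range_cube - range_cube.mean(axis=0, keepdims=True)

def steering_vectors(n_antennas, angles_rad, spacing=0.5):
    """Steering vectors of a uniform linear array; spacing in wavelengths (assumed 0.5)."""
    k = np.arange(n_antennas)
    return np.exp(2j * np.pi * spacing * np.outer(np.sin(angles_rad), k))

def capon_heatmap(range_cube, angles_rad, loading=1e-3):
    """Capon spectrum P(theta) = 1 / (a^H R^-1 a), evaluated independently per range bin."""
    n_chirps, n_ant, n_bins = range_cube.shape
    A = steering_vectors(n_ant, angles_rad)            # (n_angles, n_ant)
    heatmap = np.empty((n_bins, len(angles_rad)))
    for r in range(n_bins):
        X = range_cube[:, :, r].T                      # snapshots: (n_ant, n_chirps)
        R = X @ X.conj().T / n_chirps                  # spatial covariance estimate
        # Diagonal loading keeps R invertible (an assumption, not from the source).
        R += (loading * np.trace(R).real / n_ant + 1e-12) * np.eye(n_ant)
        R_inv = np.linalg.inv(R)
        denom = np.einsum("ka,ab,kb->k", A.conj(), R_inv, A).real
        heatmap[r] = 1.0 / denom
    return heatmap

def simulate_frame(n_chirps=32, n_ant=8, n_samples=64, seed=0):
    """Synthetic frame: a moving reflector (bin 20, +20 deg) and a static one (bin 40, -30 deg)."""
    rng = np.random.default_rng(seed)
    c = np.arange(n_chirps)[:, None, None]
    k = np.arange(n_ant)[None, :, None]
    n = np.arange(n_samples)[None, None, :]

    def reflector(range_bin, angle_deg, doppler_cycles_per_chirp):
        phase = (range_bin * n / n_samples
                 + doppler_cycles_per_chirp * c
                 + 0.5 * k * np.sin(np.deg2rad(angle_deg)))
        return np.exp(2j * np.pi * phase)

    shape = (n_chirps, n_ant, n_samples)
    noise = 0.01 * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))
    return reflector(20, 20.0, 0.25) + reflector(40, -30.0, 0.0) + noise

if __name__ == "__main__":
    angles_deg = np.arange(-60, 61)
    cube = simulate_frame()
    heatmap = capon_heatmap(remove_static_clutter(range_fft(cube)), np.deg2rad(angles_deg))
    r_idx, a_idx = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    print(f"strongest cell: range bin {r_idx}, azimuth {angles_deg[a_idx]} deg")
```

In this toy scene the mean subtraction across chirps suppresses the static reflector, so the heatmap peak falls on the moving one. A real pipeline would replace the single `argmax` with the CFAR passes and repeat the Capon step for elevation and Doppler, as described in the list above.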

4. Results, Analysis, and Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Van Der Woude, L.H.V.; Houdijk, H.J.P.; Janssen, T.W.J.; Seves, B.; Schelhaas, R.; Plaggenmarsch, C.; Mouton, N.L.J.; Dekker, R.; Van Keeken, H.; De Groot, S.; et al. Rehabilitation: Mobility, Exercise & Sports; a Critical Position Stand on Current and Future Research Perspectives. Disabil. Rehabil. 2021, 43, 3476–3491.

2. Chen, H. Advancements in Human Motion Capture Technology for Sports: A Comprehensive Review. Sens. Mater. 2024, 36, 2705.

3. Optitrack. Motion Capture Systems. 2025. Available online: https://optitrack3.payloadcms.app (accessed on 22 August 2025).

4. Vicon. Award Winning Motion Capture Systems. 2025. Available online: https://www.vicon.com/ (accessed on 22 August 2025).

5. Nokov. Optical Motion Capture System. 2025. Available online: https://en.nokov.com/ (accessed on 22 August 2025).

6. Motion-Analysis. Premium Motion Capture Software and Systems. 2025. Available online: https://www.motionanalysis.com/ (accessed on 22 August 2025).

7. Richards, M.A. Fundamentals of Radar Signal Processing, 2nd ed.; McGraw-Hill Education: New York, NY, USA, 2014.

8. Losciale, J.M.; Le, C.Y. Clinimetrics: The Vertical Single Leg Hop Test. J. Physiother. 2025, 71, 136.

9. Ahmadian, N.; Nazarahari, M.; Whittaker, J.L.; Rouhani, H. Instrumented Triple Single-Leg Hop Test: A Validated Method for Ambulatory Measurement of Ankle and Knee Angles Using Inertial Sensors. Clin. Biomech. 2020, 80, 105134.

10. Capris, T.; Coelho, P.J.; Pires, I.M.; Cunha, C. Leveraging Mobile Device-Collected up-down Hop Test Data for Comprehensive Functional Mobility Assessment. Procedia Comput. Sci. 2024, 241, 594–599.

11. Pimenta, L.; Coelho, P.J.; Gonçalves, N.J.; Lousado, J.P.; Albuquerque, C.; Garcia, N.M.; Zdravevski, E.; Lameski, P.; Miguel Pires, I. A Low-Cost Device-Based Data Approach to Eight Hop Test. Procedia Comput. Sci. 2025, 256, 1135–1142.

12. Chaaban, C.R.; Berry, N.T.; Armitano-Lago, C.; Kiefer, A.W.; Mazzoleni, M.J.; Padua, D.A. Combining Inertial Sensors and Machine Learning to Predict vGRF and Knee Biomechanics during a Double Limb Jump Landing Task. Sensors 2021, 21, 4383.

13. Baxter, J.R.; Corrigan, P.; Hullfish, T.J.; O'Rourke, P.; Silbernagel, K.G. Exercise Progression to Incrementally Load the Achilles Tendon. Med. Sci. Sport. Exerc. 2021, 53, 124–130.

14. Ahmadian, N.; Nazarahari, M.; Whittaker, J.L.; Rouhani, H. Quantification of Triple Single-Leg Hop Test Temporospatial Parameters: A Validated Method Using Body-Worn Sensors for Functional Evaluation after Knee Injury. Sensors 2020, 20, 3464.

15. Ebert, J.R.; Edwards, P.; Preez, L.D.; Furzer, B.; Joss, B. Knee Extensor Strength, Hop Performance, Patient-Reported Outcome and Inter-Test Correlation in Patients 9–12 Months after Anterior Cruciate Ligament Reconstruction. Knee 2021, 30, 176–184.

16. Peebles, A.T.; Maguire, L.A.; Renner, K.E.; Queen, R.M. Validity and Repeatability of Single-Sensor Loadsol Insoles during Landing. Sensors 2018, 18, 4082.

17. Novel USA. Available online: https://www.novelusa.com/loadsol (accessed on 22 August 2025).

18. Prill, R.; Walter, M.; Królikowska, A.; Becker, R. A Systematic Review of Diagnostic Accuracy and Clinical Applications of Wearable Movement Sensors for Knee Joint Rehabilitation. Sensors 2021, 21, 8221.

19. Humantrak Movement Analysis System. Available online: https://valdperformance.com/products/humantrak (accessed on 22 August 2025).

20. Bilesan, A.; Behzadipour, S.; Tsujita, T.; Komizunai, S.; Konno, A. Markerless Human Motion Tracking Using Microsoft Kinect SDK and Inverse Kinematics. In Proceedings of the 2019 12th Asian Control Conference (ASCC), Kitakyushu-shi, Japan, 9–12 June 2019; pp. 504–509.

21. Lepetit, K.; Hansen, C.; Mansour, K.B.; Marin, F. 3D Location Deduced by Inertial Measurement Units: A Challenging Problem. Comput. Methods Biomech. Biomed. Eng. 2015, 18, 1984–1985.

22. Kramer, A.; Heckman, C. Radar-Inertial State Estimation and Obstacle Detection for Micro-Aerial Vehicles in Dense Fog. In Experimental Robotics; Siciliano, B., Laschi, C., Khatib, O., Eds.; Springer: Cham, Switzerland, 2021; pp. 3–16.

23. Regani, S.D.; Wu, C.; Wang, B.; Wu, M.; Liu, K.J.R. mmWrite: Passive Handwriting Tracking Using a Single Millimeter Wave Radio. IEEE Internet Things J. 2021, 8, 13291–13305.

24. Piotrowsky, L.; Jaeschke, T.; Kueppers, S.; Siska, J.; Pohl, N. Enabling High Accuracy Distance Measurements with FMCW Radar Sensors. IEEE Trans. Microw. Theory Tech. 2019, 67, 5360–5371.

25. Huang, X.; Tsoi, J.K.P.; Patel, N. mmWave Radar Sensors Fusion for Indoor Object Detection and Tracking. Electronics 2022, 11, 2209.

26. Pegoraro, J.; Rossi, M. Real-Time People Tracking and Identification from Sparse Mm-Wave Radar Point-Clouds. IEEE Access Pract. Innov. Open Solut. 2021, 9, 78504–78520.

27. Parralejo, F.; Paredes, J.A.; Aranda, F.J.; Álvarez, F.J.; Moreno, J.A. Millimetre Wave Radar System for Safe Flight of Drones in Human-Transited Environments. In Proceedings of the 2023 13th International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nuremberg, Germany, 25–28 September 2023; pp. 1–6.

28. Sengupta, A.; Jin, F.; Zhang, R.; Cao, S. Mm-Pose: Real-time Human Skeletal Posture Estimation Using mmWave Radars and CNNs. IEEE Sens. J. 2020, 20, 10032–10044.

29. Jin, F.; Sengupta, A.; Cao, S.; Wu, Y.J. MmWave Radar Point Cloud Segmentation Using GMM in Multimodal Traffic Monitoring. In Proceedings of the 2020 IEEE International Radar Conference (RADAR), Washington, DC, USA, 28–30 April 2020; pp. 732–737.

30. Seifert, A.K.; Amin, M.G.; Zoubir, A.M. Toward Unobtrusive In-Home Gait Analysis Based on Radar Micro-Doppler Signatures. IEEE Trans. Biomed. Eng. 2019, 66, 2629–2640.

31. Jin, F.; Zhang, R.; Sengupta, A.; Cao, S.; Hariri, S.; Agarwal, N.K.; Agarwal, S.K. Multiple Patients Behavior Detection in Real-Time Using mmWave Radar and Deep CNNs. In Proceedings of the 2019 IEEE Radar Conference (RadarConf), Boston, MA, USA, 22 April 2019; pp. 1–6.

32. Dogu, E.; Paredes, J.A.; Alomainy, A.; Jones, J.; Rajab, K. A Falls Risk Screening Tool Based on Millimetre-Wave Radar. In Proceedings of the 10th International Conference on Information and Communication Technologies for Ageing Well and E-Health, Angers, France, 28–30 April 2024; pp. 161–168.

33. Wang, L.; Ni, Z.; Huang, B. Extraction and Validation of Biomechanical Gait Parameters with Contactless FMCW Radar. Sensors 2024, 24, 4184.

34. Brasiliano, P.; Carcione, F.L.; Pavei, G.; Cardillo, E.; Bergamini, E. Radar-Based Deep Learning for Gait Smoothness Estimation: A Feasibility Study. In Proceedings of the 2025 IEEE Medical Measurements & Applications (MeMeA), Chania, Greece, 28–30 May 2025; pp. 1–6.

35. Romero-Ramirez, F.J.; Muñoz-Salinas, R.; Medina-Carnicer, R. Speeded up Detection of Squared Fiducial Markers. Image Vis. Comput. 2018, 76, 38–47.

36. OpenCV. OpenCV: Camera Calibration. 2025. Available online: https://docs.opencv.org/4.x/dc/dbb/tutorial_py_calibration.html (accessed on 22 August 2025).

37. Capon, J. High-Resolution Frequency-Wavenumber Spectrum Analysis. Proc. IEEE 1969, 57, 1408–1418.

38. Texas-Instruments. Tracking Radar Targets with Multiple Reflection Points. 2023. Available online: https://dev.ti.com/tirex/explore/node?node=A__AbnseWQ.JhKu2Kp1u4qtEw__radar_toolbox__1AslXXD__LATEST (accessed on 22 August 2025).

| Parameter | Value |
|---|---|
| Start Frequency | 60.75 GHz |
| Bandwidth | 3.23 GHz |
| Frame Period | 55 ms |
| Range Resolution | 8.43 cm |
| Max Range | 8 m |
| Velocity Resolution | 0.1 m/s |
| Max Velocity | 4.62 m/s |
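
The parameters in this table are linked by the usual FMCW relations, which the following sketch evaluates. It is only an illustration under textbook assumptions (range resolution c/(2B) for the sampled sweep B, maximum velocity λ/(4·Tc) for chirp repetition interval Tc, velocity resolution 2·v_max/N for N chirps per frame, wavelength taken at the start frequency); the function names are hypothetical and the source does not state how the tabulated values were derived, so the derived quantities are indicative only.

```python
C = 299_792_458.0  # speed of light in m/s

def wavelength(freq_hz):
    """Free-space wavelength at the given carrier frequency."""
    return C / freq_hz

def range_resolution(bandwidth_hz):
    """Range resolution of an FMCW chirp that samples `bandwidth_hz` of sweep."""
    return C / (2.0 * bandwidth_hz)

def sampled_bandwidth(range_res_m):
    """Sweep bandwidth implied by a given range resolution (inverse of the above)."""
    return C / (2.0 * range_res_m)

def chirp_interval(max_velocity_mps, wavelength_m):
    """Chirp repetition interval giving an unambiguous velocity of +/- max_velocity."""
    return wavelength_m / (4.0 * max_velocity_mps)

def doppler_bins(max_velocity_mps, velocity_res_mps):
    """Number of chirps per frame implied by the velocity span and resolution."""
    return 2.0 * max_velocity_mps / velocity_res_mps

if __name__ == "__main__":
    lam = wavelength(60.75e9)
    print(f"wavelength at start frequency: {lam * 1e3:.3f} mm")
    print(f"c/(2B) for the full 3.23 GHz sweep: {range_resolution(3.23e9) * 100:.2f} cm")
    print(f"sweep implied by 8.43 cm resolution: {sampled_bandwidth(0.0843) / 1e9:.3f} GHz")
    print(f"chirp interval implied by 4.62 m/s: {chirp_interval(4.62, lam) * 1e6:.1f} us")
    print(f"Doppler bins implied by 0.1 m/s: {doppler_bins(4.62, 0.1):.1f}")
```

Under these assumptions, the listed range resolution corresponds to a smaller sampled sweep than the full 3.23 GHz bandwidth. One possible reading, which the source does not confirm, is that the ADC samples only part of each ramp.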

| Hop | Camera 1 | mmWave Radar 1 |
|---|---|---|
| Single | 0.738 | 0.704 |
| Triple | 1.870 | 1.743 |
| Crossover | 1.865 | 1.828 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Paredes, J.A.; Parralejo, F.; Aguilera, T.; Álvarez, F.J. mmPhysio: Millimetre-Wave Radar for Precise Hop Assessment. Sensors 2025, 25, 5751. https://doi.org/10.3390/s25185751
