3.1. Reference NDVI Curves for All Classes

This section presents the averaged NDVI time series for the main crop types cultivated in Khabarovsk Krai (soybean, grain crops, buckwheat, and perennial grasses) and for fallow land in 2022–2024. The series are based on imagery from Sentinel, Meteor, and Landsat; the Landsat series were first reconstructed by function fitting.
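This section does not specify which function was fitted to reconstruct the Landsat series. The sketch below is only an illustration of curve fitting for gap filling. It assumes a first-order harmonic model (a constant plus one sine/cosine pair) fitted by ordinary least squares, which is a deliberately simple stand-in rather than the study's method. The helper names `fit_harmonic`, `predict`, and `peak_doy` are hypothetical. The fitted curve can be evaluated on cloudy dates, and it gives the date of the maximum analytically.

```python
import math


def solve3(a, b):
    """Solve a 3x3 linear system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_harmonic(doys, ndvi, period=365.0):
    """Least-squares fit of ndvi ~ a0 + a1*cos(w*t) + b1*sin(w*t), w = 2*pi/period.

    Hypothetical gap-filling model for illustration; not the study's fitted function.
    """
    w = 2.0 * math.pi / period
    rows = [(1.0, math.cos(w * t), math.sin(w * t)) for t in doys]
    # Normal equations (X^T X) beta = X^T y form a 3x3 system.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ndvi)) for i in range(3)]
    return solve3(xtx, xty)


def predict(coef, t, period=365.0):
    """Evaluate the fitted curve at day of year t (e.g., to fill a cloudy date)."""
    a0, a1, b1 = coef
    w = 2.0 * math.pi / period
    return a0 + a1 * math.cos(w * t) + b1 * math.sin(w * t)


def peak_doy(coef, period=365.0):
    """Day of year at which the fitted harmonic reaches its maximum."""
    _, a1, b1 = coef
    # a1*cos(wt) + b1*sin(wt) = R*cos(wt - phi), where phi = atan2(b1, a1)
    phi = math.atan2(b1, a1)
    return (phi * period / (2.0 * math.pi)) % period
```

A single harmonic forces a symmetric, single-peaked season. Curves with two peaks, such as those of buckwheat and perennial grasses, would need more harmonics or a different function.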

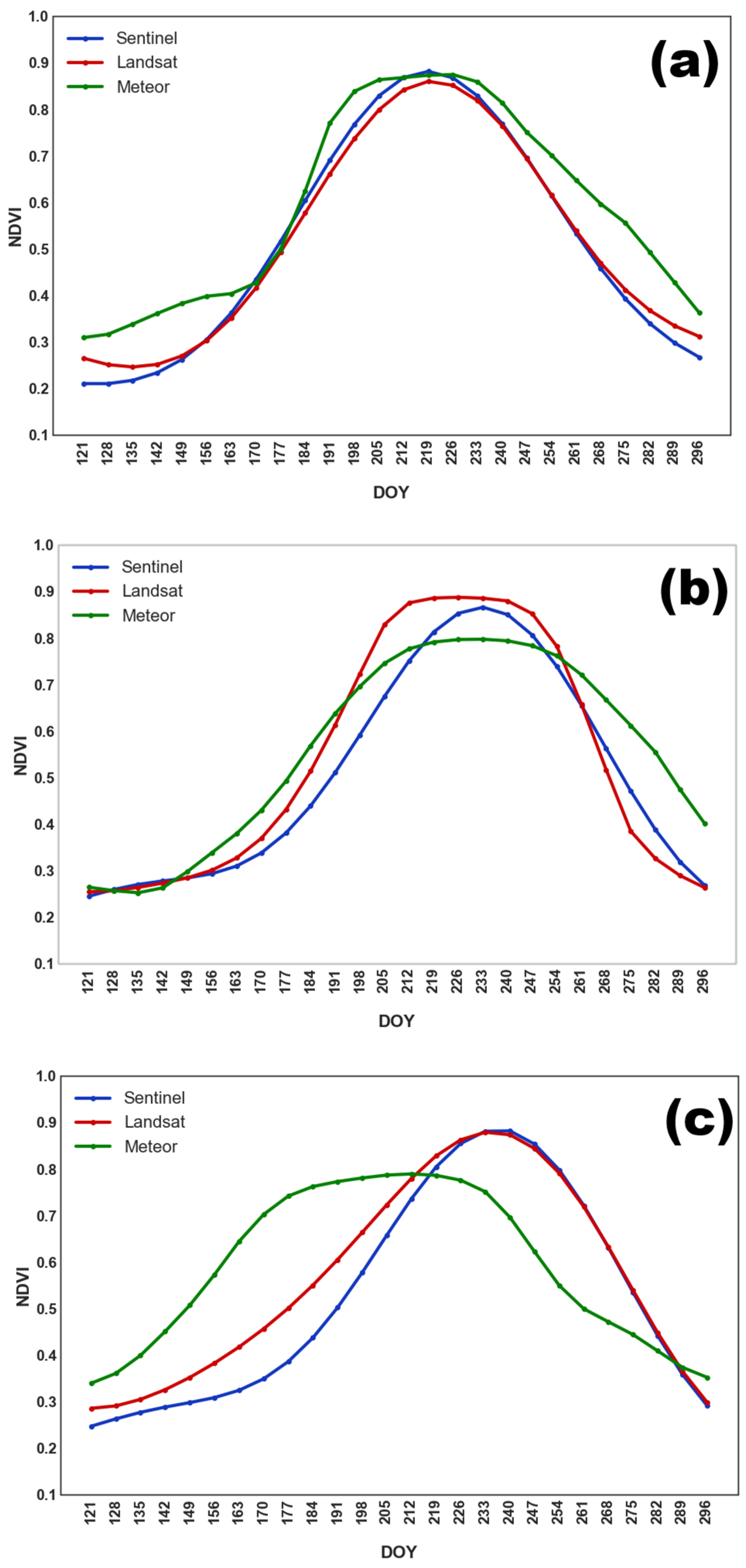

The seasonal NDVI profiles for soybean (Figure 3) reflect the main stages of crop development. Soybean was sown later than the other crops (except buckwheat), from late May to mid-June. NDVI peaked between late July and late August (DOY 205–240). It then declined gradually until late September as green biomass decreased.

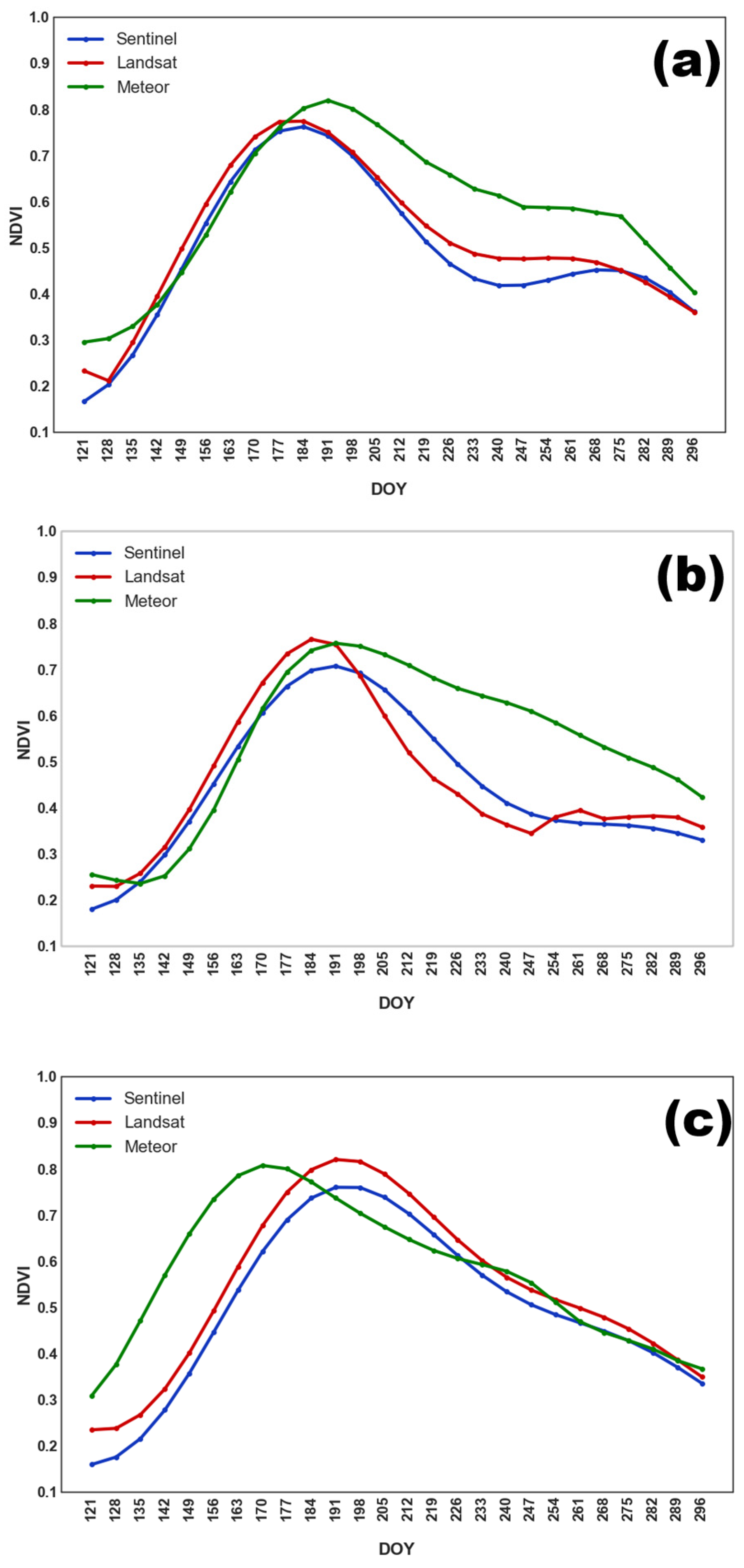

Grain crops (oats, wheat, and barley) exhibited similar NDVI dynamics, which justified aggregating them into a single class. As shown in Figure 4, NDVI peaked earlier than in soybean fields, between DOY 190 and 205 (mid to late July). It then declined because of harvesting.

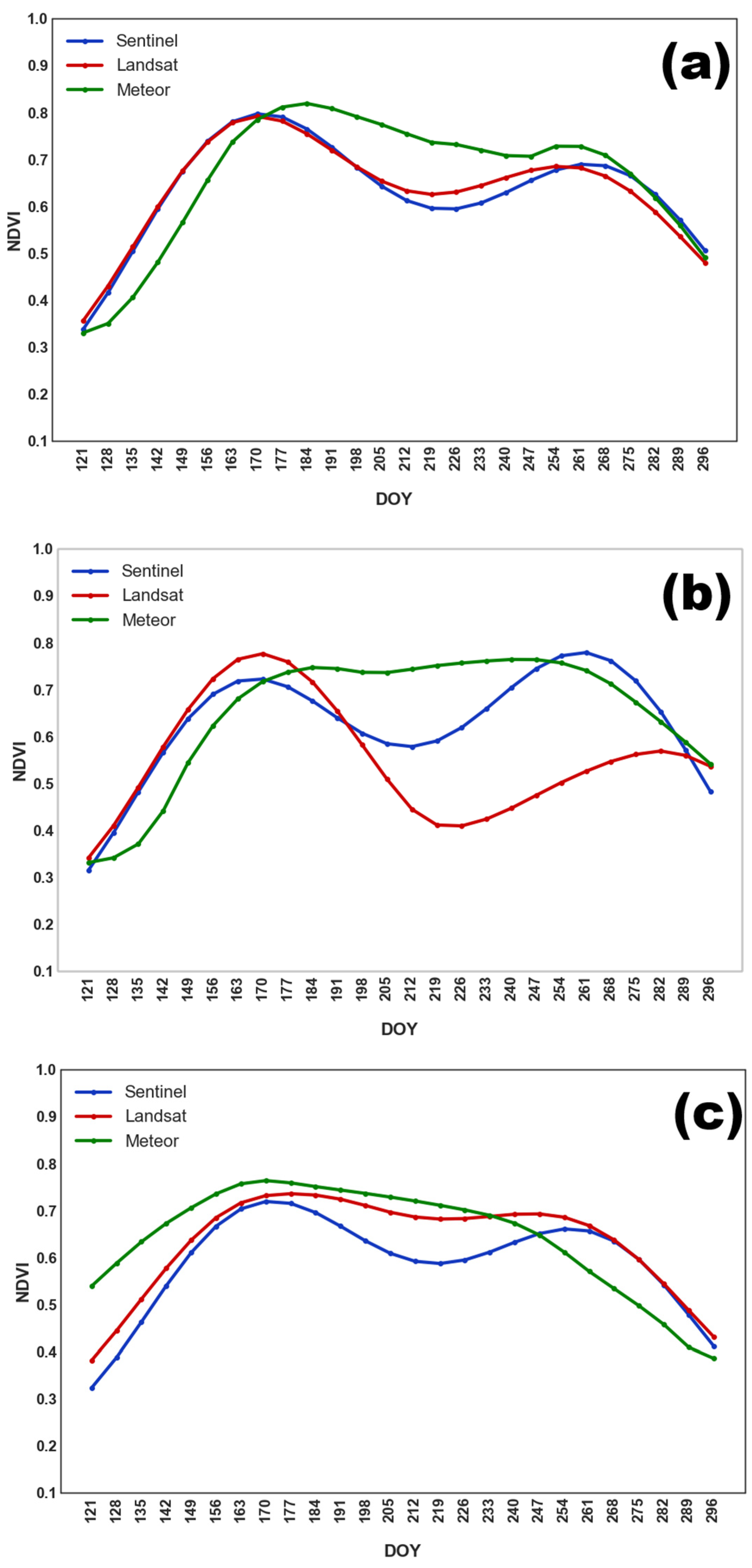

Like grain crops, perennial grasses formed a heterogeneous class comprising timothy grass, clover, and other grasses, and they were therefore harvested on different dates. Nevertheless, NDVI typically increased until late June or early July, and a second peak followed at the end of September. In most cases, a second mowing was not performed. The averaged seasonal NDVI profile for perennial grasses is shown in Figure 5.

In 2022 (Figure 6a), early sowing of buckwheat resulted in a single NDVI peak in late July, followed by a gradual decline. In contrast, the 2023 and 2024 seasons (Figure 6b,c) displayed two distinct peaks. The first was an initial rise before the field was plowed, and the second was a maximum in August or September after sowing in July.

The NDVI profile for fallow land (Figure 7) had a characteristic plateau shape. Biomass increased gradually to a maximum in July, after which NDVI declined steadily until the end of the season.

Table 3 presents, for soybean fields in each year, three indicators:

- the mean NDVI maximum ($NDVI_{max}$);
- the mean date of maximum NDVI ($DOY_{max}$);
- the variability of both indicators.

For Sentinel data, $NDVI_{max}$ ranged from 0.87 to 0.89, with a variation of 5–7%. Landsat data yielded the highest average NDVI maximum in 2023 ($NDVI_{max}$ = 0.91), with $DOY_{max}$ falling in August. In contrast, the Meteor-derived composites consistently showed lower $NDVI_{max}$ values (0.80–0.81), with some variation between 2022 and 2023, and the maximum tended to occur earlier in the season. For example, in 2024 $DOY_{max}$ was 203, corresponding to the third decade of July.
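The indicators in Table 3 can be computed per field and then summarized. The sketch below assumes that "variation" means the coefficient of variation (sample standard deviation divided by the mean, in percent); the text does not define it explicitly. It also assumes that each field contributes one NDVI series with matching DOY values. The helper names are hypothetical.

```python
import statistics


def field_peak(doys, ndvi):
    """Return (NDVI_max, DOY_max) for one field's NDVI series."""
    i = max(range(len(ndvi)), key=ndvi.__getitem__)
    return ndvi[i], doys[i]


def coefficient_of_variation(xs):
    """Sample standard deviation as a percentage of the mean (assumed meaning of 'variation')."""
    return 100.0 * statistics.stdev(xs) / statistics.mean(xs)


def summarize(peaks):
    """Mean and CV (%) of NDVI_max and DOY_max across fields; peaks is [(ndvi_max, doy_max), ...]."""
    values = [p[0] for p in peaks]
    dates = [p[1] for p in peaks]
    return {
        "NDVI_max_mean": statistics.mean(values),
        "NDVI_max_cv": coefficient_of_variation(values),
        "DOY_max_mean": statistics.mean(dates),
        "DOY_max_cv": coefficient_of_variation(dates),
    }
```

A CV of $DOY_{max}$ in percent depends on the DOY origin. The standard deviation in days, which is used later for the boxplots, is often easier to interpret.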

ANOVA and Tukey's pairwise tests revealed statistically significant differences in the timing of NDVI maxima ($DOY_{max}$) for soybean across the three years, for all satellites (p < 0.001). However, no significant differences in NDVI peak values ($NDVI_{max}$) were found in the following pairs (p > 0.05):

- for Sentinel, between 2022 and 2023 and between 2022 and 2024;
- for Landsat, between 2022 and 2024.
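The ANOVA comparisons above test whether the mean indicator (for example, $DOY_{max}$) differs among years. For reference, the sketch below computes the one-way ANOVA F statistic directly, as the ratio of the between-group mean square to the within-group mean square. It stops at the F statistic. The paper does not name its statistics software; p-values and Tukey's HSD comparisons are typically obtained from a package such as SciPy (`scipy.stats.f_oneway`, and `scipy.stats.tukey_hsd` in SciPy 1.8 or later). The function name is hypothetical.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    groups: one list of observations per year (e.g., per-field DOY_max values).
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of year means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of fields around their own year mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

A large F relative to the F distribution with (`df_between`, `df_within`) degrees of freedom indicates that at least one year differs. Tukey's test then identifies which pairs of years differ.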

Table 4 presents the NDVI time series indicators for grain crop fields, derived from the three satellite sources for 2022–2024. $NDVI_{max}$ values for grain crops were consistently lower than those for soybean. Grain crops also showed greater variability in $DOY_{max}$, particularly in 2023 and 2024, when variability reached 9–10% for both Sentinel and Landsat data.

Among the satellite sources, Sentinel, which has the highest spatial resolution, recorded the lowest average NDVI maxima (0.75–0.77). Landsat-derived values were higher (0.80–0.83). For both satellite systems, $DOY_{max}$ fell at similar times: in the first decade of July in 2022 and 2023, and in the third decade of July in 2024 (p < 0.05). Maximum NDVI values also varied substantially in 2022 and 2024, by up to 15%.

The NDVI series derived from Meteor-M imagery showed lower variability in both $NDVI_{max}$ (3–6%) and $DOY_{max}$ (3–4%). Notably, in 2024 the Meteor NDVI maximum occurred on DOY 173 (the third decade of June). This was approximately one month earlier than for Sentinel and Landsat, and three weeks earlier than in 2022 and 2023 (p < 0.0001).
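The text reports peak dates both as DOY and as ten-day periods ("decades", or dekads) of a month. The small helper below converts between the two. It assumes the usual convention that the third dekad runs from day 21 to the end of the month, and it handles leap years through the standard library (2024 is a leap year). The function name is hypothetical.

```python
from datetime import date, timedelta

MONTHS = ("January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December")


def doy_to_dekad(year, doy):
    """Return (dekad, month) for a day of year: dekad 1 = days 1-10, 2 = 11-20, 3 = 21-end."""
    d = date(year, 1, 1) + timedelta(days=doy - 1)
    dekad = 1 if d.day <= 10 else (2 if d.day <= 20 else 3)
    return dekad, MONTHS[d.month - 1]
```

For example, `doy_to_dekad(2024, 173)` gives the third dekad of June, and `doy_to_dekad(2024, 203)` gives the third dekad of July. These match the dates quoted above for Meteor.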

ANOVA and post hoc Tukey tests revealed no statistically significant interannual differences in $NDVI_{max}$ for either Landsat or Sentinel (p > 0.05). For Meteor, however, $NDVI_{max}$ was 0.83 in 2022, which was significantly higher than in 2023 and 2024 (p < 0.0001).

For perennial grasses, $NDVI_{max}$ ranged from 0.77 to 0.86. The highest value was recorded for Sentinel in 2023 and the lowest for Meteor in 2024. $DOY_{max}$ depended on the mowing schedule. Fields mown in summer reached maximum NDVI in the second half of June, whereas fields mown in autumn reached their maximum in late August. Consequently, the early and late NDVI maxima of perennial grasses were analyzed separately.

For the first NDVI maximum, mean values ranged from 0.77 to 0.83 for Meteor and from 0.76 to 0.83 for Landsat. Sentinel values were slightly lower, from 0.74 to 0.81. Variability in the first maximum was greatest for Landsat in 2024 (up to 16.5%) and lowest for the Meteor composites in 2022 (2.8%). For Sentinel, the timing of the first maximum was relatively consistent across years (DOY 174–176, $p_{ANOVA}$ > 0.05). For Landsat, however, the first maximum in 2024 was significantly delayed compared with 2022–2023, occurring on DOY 189 (p < 0.0001). The latest first maximum for perennial grasses was observed in 2023, on DOY 199, significantly later than in 2022 and 2024 (p < 0.0001).

The second NDVI maximum varied significantly across datasets and years. For Sentinel, values ranged from 0.66 in 2024 (0.1 lower than the first maximum) to 0.85 in 2023 (0.1 higher than the first maximum). The differences between years were statistically significant ($p_{ANOVA}$ < 0.0001). The lowest second maximum, in 2024, also occurred significantly earlier than in 2022–2023 (p < 0.0001). For Landsat, the second maximum in 2022 reached 0.78 and occurred earliest, on DOY 189, significantly earlier than in 2023 and 2024 (p < 0.0001). For Meteor, the lowest second NDVI maximum (0.71) and its earliest occurrence (DOY 222) were both observed in 2024 (p < 0.0001). Table 5 and Table 6 summarize the NDVI time series characteristics of perennial grasses observed by the different satellite systems in 2022–2024.
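The first and second maxima of perennial grasses were analyzed separately, so each field's series has to be split into an early window and a late window. This section does not state the splitting rule. The sketch below assumes a single boundary day supplied by the caller (`cut_doy`, a hypothetical parameter) and takes the highest NDVI on each side. A fixed boundary is a simplification: the Landsat second maximum at DOY 189 shows that the windows can overlap in practice.

```python
def split_maxima(doys, ndvi, cut_doy):
    """Return (first, second) maxima as (NDVI, DOY) tuples before and after cut_doy.

    cut_doy is a hypothetical boundary between the early (pre-mowing) and late
    (regrowth) windows; the study's actual criterion is not described here.
    A window with no observations yields None.
    """
    early = [(v, d) for d, v in zip(doys, ndvi) if d < cut_doy]
    late = [(v, d) for d, v in zip(doys, ndvi) if d >= cut_doy]
    first = max(early) if early else None
    second = max(late) if late else None
    return first, second
```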

Table 7 presents the NDVI time series indicators for buckwheat fields in 2022–2024. Earlier sowing in 2022 led to earlier peak NDVI, with elevated $NDVI_{max}$ values of 0.83–0.88 across the satellite systems. In that year, the NDVI maximum was reached in mid to late July: on DOY 196 for Landsat and Sentinel, and on DOY 210 for Meteor. The lowest $NDVI_{max}$ was recorded in 2023 (0.76–0.80). That year the peak occurred in the second half of August: on DOY 227 for Landsat and Meteor, and on DOY 242 for Sentinel. In all three years, Meteor showed the lowest $NDVI_{max}$ values for buckwheat: 0.76 in 2023 and 2024, and 0.83 in 2022. ANOVA confirmed that the interannual differences were statistically significant for Sentinel, Landsat, and Meteor (p < 0.001).

Table 8 summarizes the NDVI time series characteristics of unused fields (fallow land) for 2022–2024. Landsat data gave the highest $NDVI_{max}$ values, ranging from 0.81 in 2023 to 0.87 in 2022, with a relatively high variation of 9–12% in 2023 and 2024. Sentinel data gave the lowest $NDVI_{max}$ values, ranging from 0.75 in 2023 to 0.77 in 2024. Overall, NDVI values of unused fields were highest in 2022 (0.84–0.87), and the maximum was reached in the first half of July (DOY 186–196). One-way ANOVA confirmed that in 2022 $NDVI_{max}$ was significantly higher, and was reached significantly earlier, than in the other years (p < 0.0001). No statistically significant differences were found between 2023 and 2024 (p > 0.05).

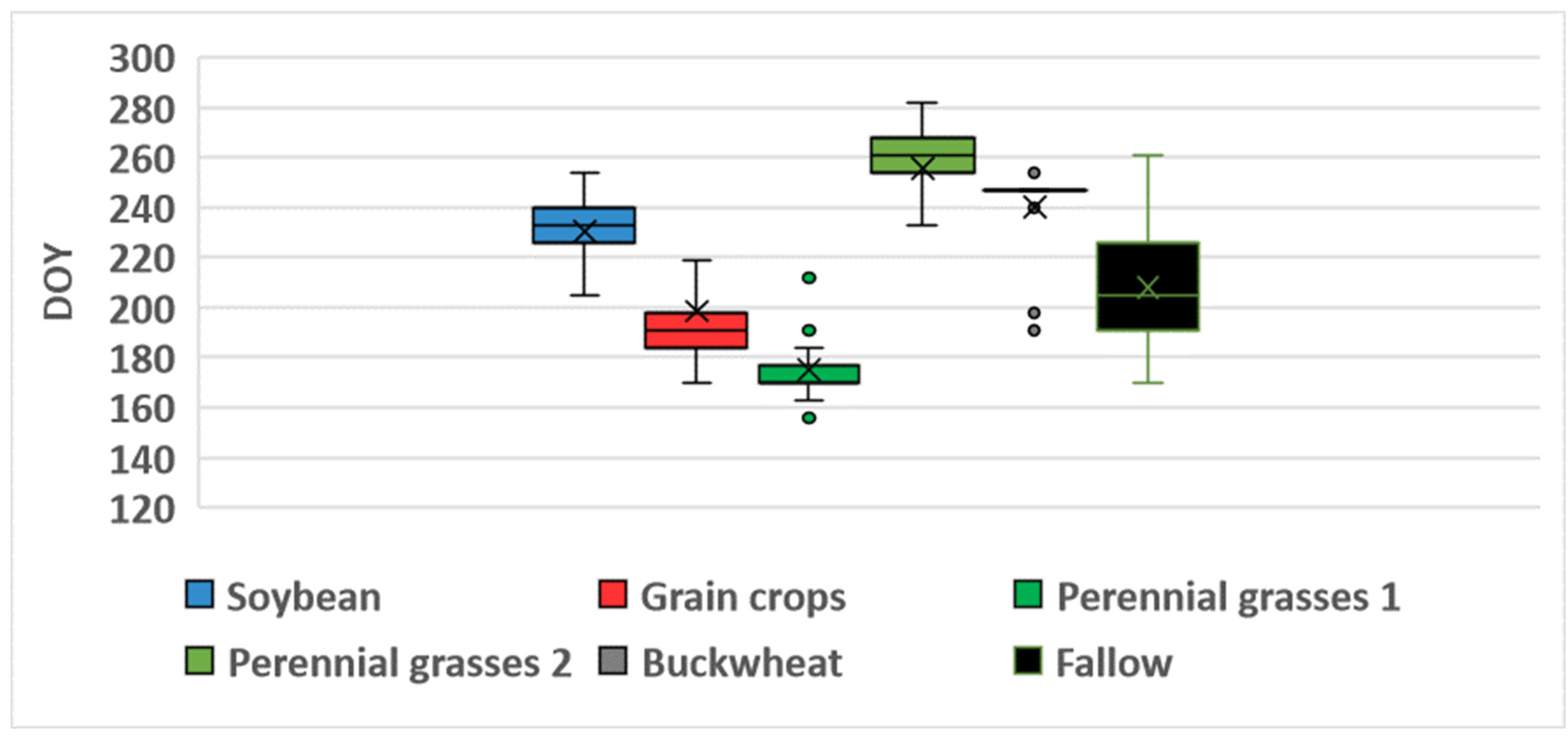

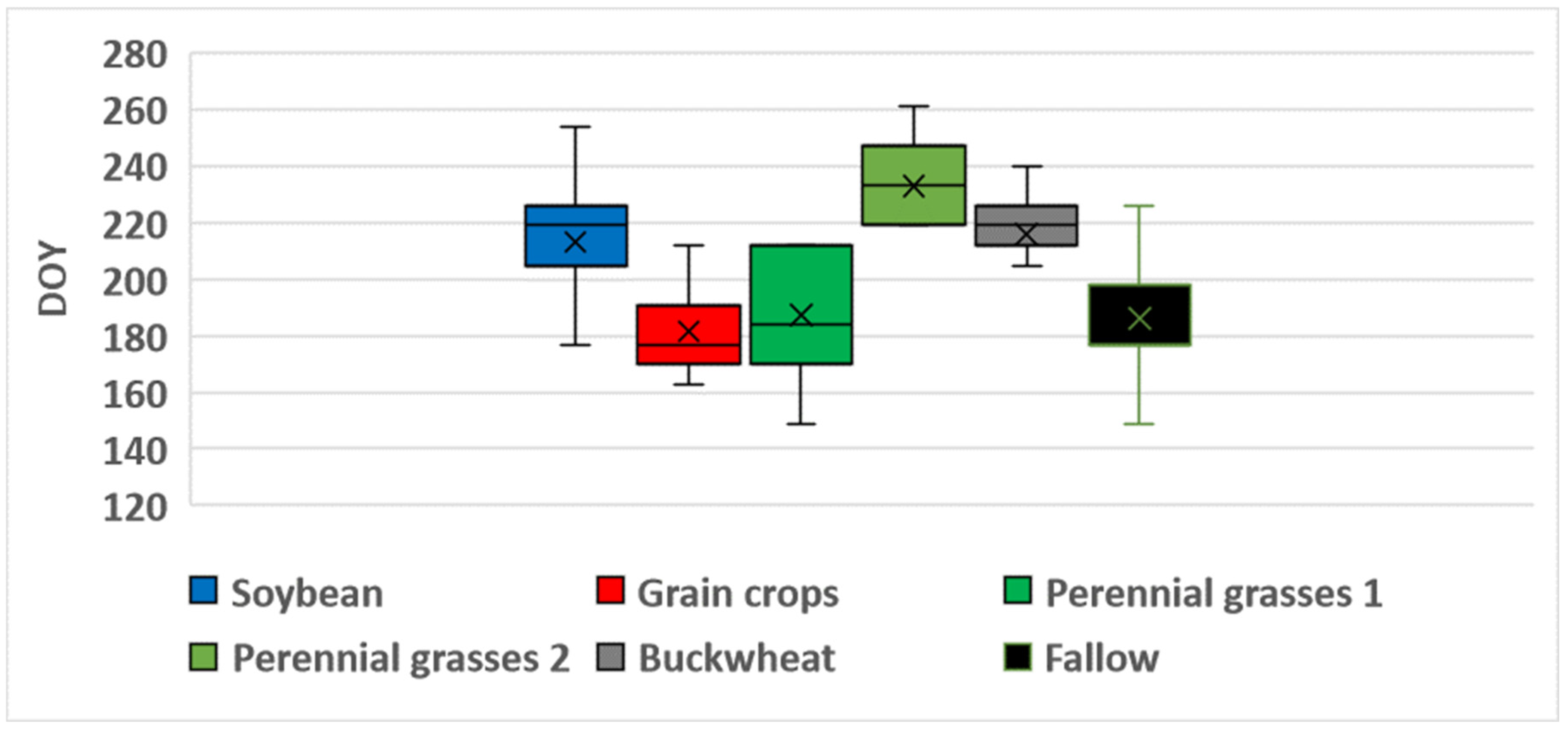

Figure 8, Figure 9, and Figure 10 present boxplots of $DOY_{max}$ for the crop types and fallow land, derived from Sentinel, Landsat, and Meteor data. The plots show clear differences in $DOY_{max}$ between crops and similar NDVI patterns across satellites.

- Grain crops had the earliest $DOY_{max}$ on average: DOY 197–198 for both Landsat and Sentinel, with a variation of 23–26 days.
- Perennial grasses had highly variable $DOY_{max}$, with standard deviations of 40 days for Landsat and 43 days for Sentinel. The maximum could occur either in late June (first maximum) or in early September (second maximum), and both the timing and the number of harvests varied.
- Fallow land typically reached peak NDVI in the third decade of July, with a standard deviation of 20–22 days.

Figures S1–S3 present NDVI heatmaps that show the dynamics of NDVI values and allow both the value and the timing of the maximum to be estimated.

Figure 8 shows that Sentinel recorded $DOY_{max}$ on DOY 247 (early September) for 33 of the 43 buckwheat fields monitored over the three years. The exception was 2022, when the peak occurred in mid-July. On average, Landsat $DOY_{max}$ for buckwheat occurred 11 days earlier than Sentinel $DOY_{max}$ and was more variable. For soybean, $DOY_{max}$ was typically around DOY 229–230 (mid-August), with a low variation of about 10 days for both Landsat and Sentinel.

In general, Meteor composites gave an earlier $DOY_{max}$ than the fitted Sentinel and Landsat NDVI curves for all classes, by 10–20 days on average. The only exception was perennial grasses, for which the Landsat-derived maximum closely matched the Meteor observations. The variation in $DOY_{max}$ was also consistently lower for Meteor than for Landsat and Sentinel, ranging from 11 days for grain crops to 34 days for perennial grasses.
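The 10–20 day offset between Meteor and the fitted Sentinel or Landsat curves can be quantified as paired per-field differences in $DOY_{max}$. The sketch below makes three assumptions: $DOY_{max}$ values are stored in dictionaries keyed by a hypothetical field identifier, only fields observed by both sensors are compared, and at least two fields overlap. A negative mean means the second sensor peaks earlier.

```python
import statistics


def timing_offset(reference, other):
    """Mean and sample SD (days) of other - reference DOY_max over shared fields.

    reference, other: dict mapping a (hypothetical) field id to that field's DOY_max.
    Requires at least two fields present in both dictionaries.
    """
    shared = sorted(set(reference) & set(other))
    diffs = [other[f] - reference[f] for f in shared]
    return statistics.mean(diffs), statistics.stdev(diffs)
```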

3.2. Mapping Results

The NDVI time series from the three satellite systems for 2022–2024 were classified using the RF algorithm.

Table 9, Table 10, Table 11, and Table 12 present the confusion matrices, including the F1 score for each class and the OA. Each table covers one case: one of the individual satellites or the multi-sensor approach.

Among the individual satellite datasets, the Landsat-based classification was the least accurate (Table 9). OA declined from 94% in 2022 to 90% in 2023 and 84% in 2024. Accounting for the different sample sizes in each year, the weighted OA was 87%. F1 scores were below 0.80 for buckwheat, perennial grasses, and fallow land, and 0.80 for grain crops. The best performance was obtained for the most common class, soybean, with an overall F1 score of 0.94, ranging from 0.91 in 2023 to 0.97 in 2022.

OA with Meteor data was 2 percentage points higher than with Landsat: 93% in 2022, 90% in 2023, and 87% in 2024. F1 scores for three classes were also higher:

- fallow land: 0.85;
- grain crops: 0.86, which is 0.06 higher than with Landsat;
- soybean: 0.95, with annual scores from 0.93 in 2024 to 0.98 in 2022.

For buckwheat, however, accuracy was markedly lower. Its F1 score was only 0.65 and decreased by more than 0.1 each year.
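The OA and per-class F1 values above follow standard definitions. The sketch below assumes a confusion matrix with reference classes in rows and predicted classes in columns; the orientation of Tables 9–12 is not stated here. It also shows one plausible reading of the "weighted OA": a mean of yearly OA weighted by each year's sample count, which equals the OA of the pooled confusion matrix. All helper names are hypothetical, and the per-year sample sizes are not given in this section.

```python
def overall_accuracy(cm):
    """OA = correctly classified samples / all samples (rows: reference, columns: predicted)."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def f1_scores(cm):
    """Per-class F1 = 2 * precision * recall / (precision + recall)."""
    n = len(cm)
    scores = []
    for i in range(n):
        tp = cm[i][i]
        predicted = sum(cm[r][i] for r in range(n))  # column total
        actual = sum(cm[i])  # row total
        if tp == 0:
            scores.append(0.0)
            continue
        precision, recall = tp / predicted, tp / actual
        scores.append(2 * precision * recall / (precision + recall))
    return scores


def pooled_oa(yearly):
    """Sample-weighted OA across years; yearly is [(oa, n_samples), ...]."""
    return sum(oa * n for oa, n in yearly) / sum(n for _, n in yearly)
```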

Among the individual sensors, the Sentinel NDVI time series gave the highest classification accuracy, with OA of 93% in 2023 and 94% in both 2022 and 2024. F1 scores for all classes were also higher with Sentinel data than with Landsat or Meteor:

- soybean: 0.96, ranging from 0.95 to 0.97 across the three years;
- grain crops: 0.93;
- fallow land: 0.89;
- buckwheat: 0.86;
- perennial grasses: 0.79.

The multi-sensor NDVI approach improved classification accuracy by 1 percentage point compared with Sentinel data alone. It achieved an OA of 94% over the three years (96% in 2022, 95% in 2023, and 94% in 2024). Performance was highest for soybean (F1 = 0.98) and grain crops (F1 = 0.94). Compared with Sentinel alone, the F1 score for buckwheat increased substantially: by 0.06 in 2022 and 2024, and by 0.18 in 2023. Overall, OA was at least 94% in each year. F1 scores for perennial grasses and fallow land were similar to those obtained with Sentinel data, averaging 0.79 and 0.89, respectively.
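This section does not describe how the three NDVI series were combined in the multi-sensor approach. One common option, assumed here purely for illustration, works in two steps. First, resample each sensor's series to a shared DOY grid by linear interpolation. Second, concatenate the resampled series into one feature vector per field, which a classifier such as RF can then be trained on. The function names, the sensor keys, and the clamping of values outside a series' date range are all hypothetical choices.

```python
def resample(doys, ndvi, grid):
    """Linearly interpolate an NDVI series (sorted by DOY) onto a common DOY grid.

    Grid days outside the observed range take the nearest end value (clamping).
    """
    out = []
    for g in grid:
        if g <= doys[0]:
            out.append(ndvi[0])
        elif g >= doys[-1]:
            out.append(ndvi[-1])
        else:
            j = next(k for k in range(1, len(doys)) if doys[k] >= g)
            t0, t1 = doys[j - 1], doys[j]
            v0, v1 = ndvi[j - 1], ndvi[j]
            out.append(v0 + (v1 - v0) * (g - t0) / (t1 - t0))
    return out


def stack_features(series_by_sensor, grid, sensors=("sentinel", "landsat", "meteor")):
    """Concatenate each sensor's resampled NDVI into one feature vector for a field."""
    features = []
    for name in sensors:
        doys, ndvi = series_by_sensor[name]
        features.extend(resample(doys, ndvi, grid))
    return features
```

Stacking keeps each sensor's values as separate features, so the classifier can learn sensor-specific offsets, such as the earlier Meteor peaks described in Section 3.1.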

Table 13 presents the cross-validation results for classifying crops and fallow land in 2022–2024. The results cover NDVI time series from each of the three satellite systems and from the combined approach.

- 2022: all four approaches achieved comparable OA, and the multi-sensor approach achieved the highest (97%). The mean F1 score ($\overline{F1}$) was also higher for the combined approach and Sentinel than for Meteor and Landsat.
- 2023: the multi-sensor approach again performed best, with an OA of 96% and an $\overline{F1}$ of 0.92. Landsat performed worst, with an OA of 89% and an $\overline{F1}$ of 0.75.
- 2024: Sentinel and the combined approach achieved the highest accuracy, with OA of 92–93% and $\overline{F1}$ values of 0.87–0.88.
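This section does not describe the cross-validation design behind Table 13, so the sketch below is an assumption made only for illustration. It uses a stratified k-fold split at the field level, where each class is dealt across folds in turn so that every fold keeps similar class proportions. It also assumes that $\overline{F1}$ is the unweighted (macro) mean of the per-class F1 scores. The function names, `k`, and `seed` are hypothetical.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Assign each sample (field) index to one of k folds, keeping class proportions similar."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    fold_of = [0] * len(labels)
    offset = 0
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            fold_of[idx] = (pos + offset) % k
        # Continue the rotation so that small classes do not all start in fold 0.
        offset += len(idxs)
    return fold_of


def mean_f1(per_class_f1):
    """Unweighted (macro) mean of per-class F1 scores."""
    return sum(per_class_f1) / len(per_class_f1)
```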

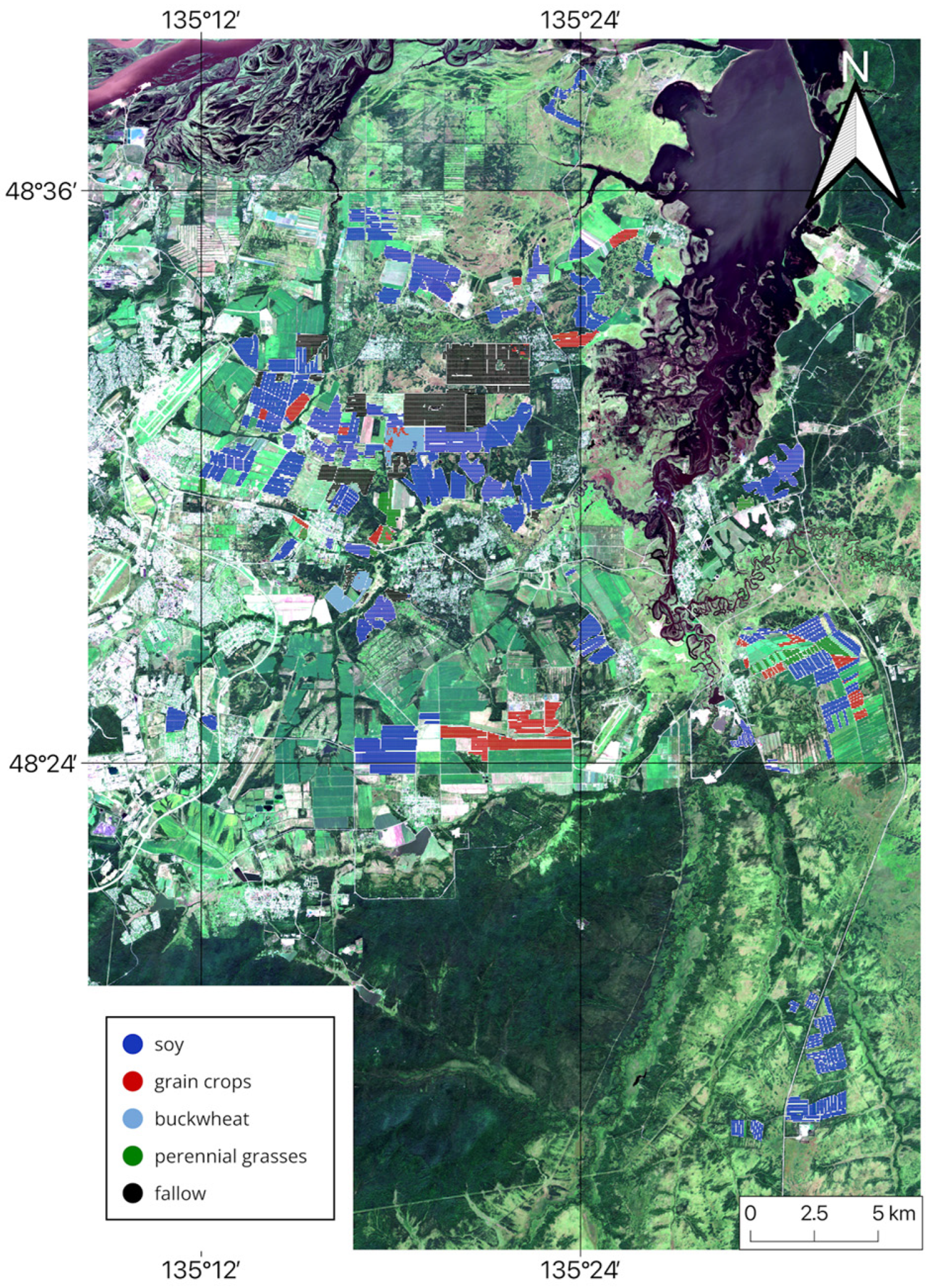

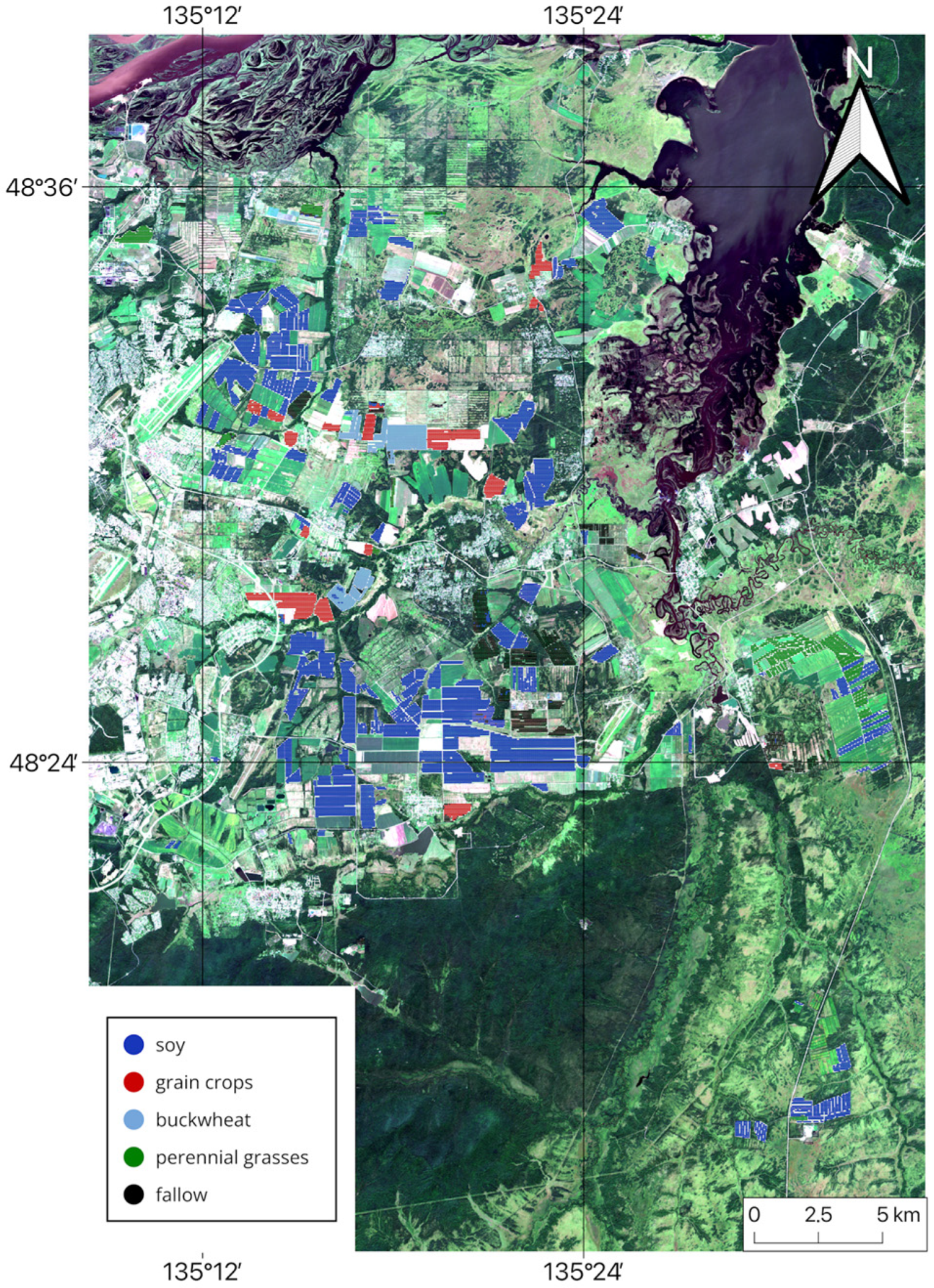

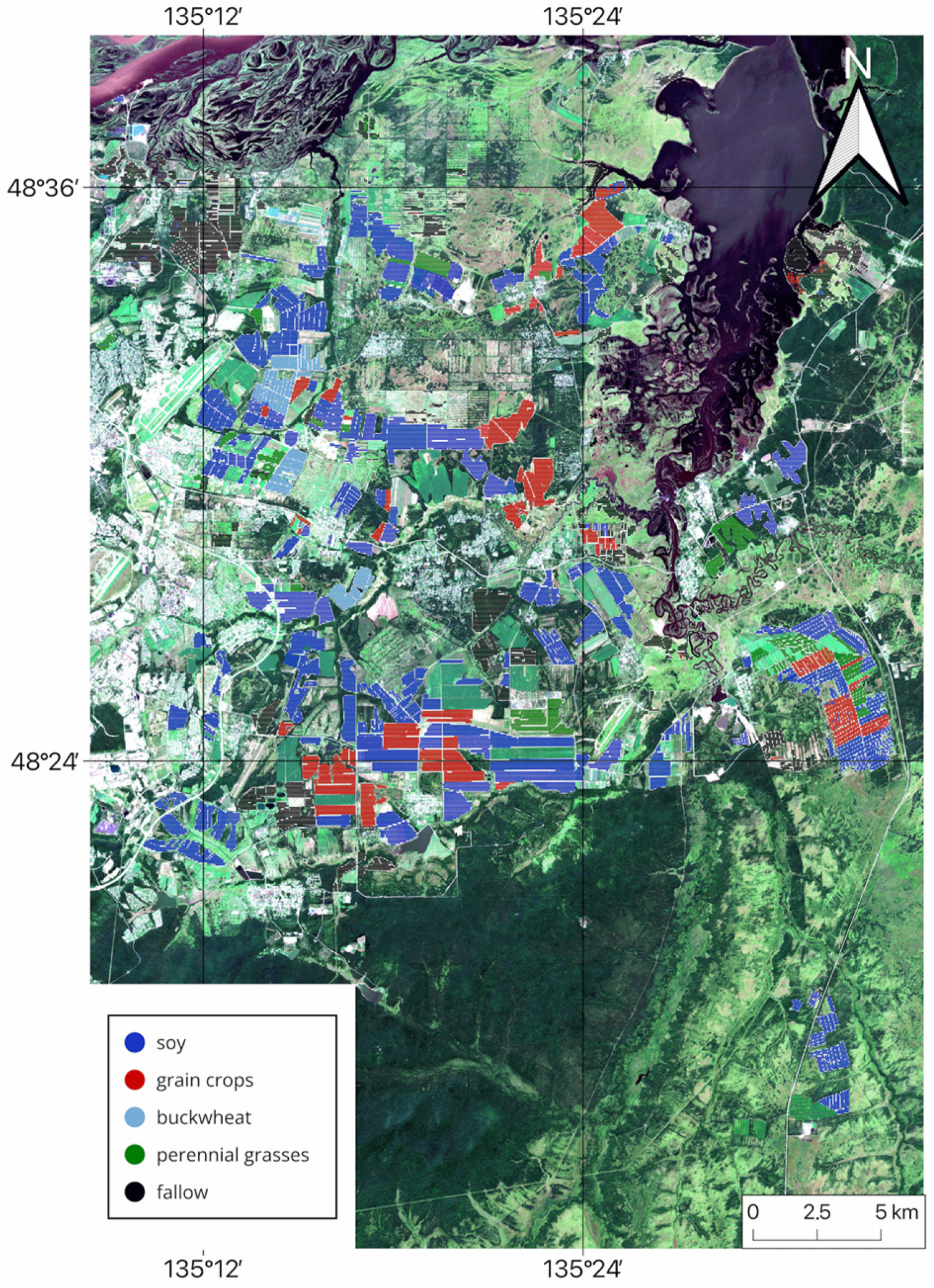

Thus, the classification results demonstrate that the multi-sensor approach is effective and enables accurate cropland mapping. Based on these findings, cropland maps of the Khabarovsk district for 2022–2024 were generated from the NDVI data of the three satellites using the RF classifier. The maps indicate the dominant crop type in each field (see Figure 11, Figure 12, and Figure 13).