1. Introduction

The increasing adoption of Internet of Things (IoT) technologies has led to a revolutionary change in many areas, transforming the way devices communicate, create and process information [1]. As IoT applications expand into everyday life, from smart homes and wearable technology to industrial automation and healthcare systems, the demand for smart, scalable and efficient computing infrastructure is increasing [2]. These interacting devices, ranging from sensors in industrial equipment to real-time health monitoring wearables, constantly generate huge amounts of heterogeneous data that require high bandwidth, low latency and real-time processing [3]. Of all the subsystems in the IoT world, the Internet of Multimedia Things (IoMT) is the most demanding because it requires high-intensity computing, fast data transmission and enormous storage for what is, in most cases, real-time video, audio and image data. The volume and intensity of multimedia data generated by IoMT-based applications often strain the responsiveness and capacity of traditional cloud-based systems, creating latency and bandwidth issues that can impair smooth operation and user experience [4].

To overcome these shortcomings, cloud computing has long been used as a centralized model for providing scalable, virtualized resources over the Internet. It facilitates the resource sharing, storage management, and on-demand computing [5] services required to handle large-scale data streams from the IoT. However, the centralized nature of cloud computing presents inherent disadvantages when faced with latency-sensitive, location-based, and real-time applications [6]. For example, data generated at the network edge must reach centralized data centers for processing, which causes significant latency and reduces the quality of service [7]. Additionally, growing data security concerns, heavy network traffic, and wasteful bandwidth consumption further reduce the applicability of centralized cloud infrastructures to contemporary IoT needs. As IoT devices mature to enable next-generation applications with instant responsiveness and reliability, a new architectural paradigm is needed: one that brings computational intelligence closer to the data source.

Fog computing acts as a vital paradigm to bridge the gap between cloud data centers and edge devices. By bringing cloud features to the network’s edge, fog computing makes it possible to handle computing, storage, and networking near the devices that create data [8]. This decentralized approach not only reduces data transmission latency but also relieves bandwidth pressure at the core network and increases the responsiveness of latency-sensitive applications. In this context, fog nodes (typically lightweight servers, routers or gateways) act as intermediate processing units that allow for local decision-making and task execution [9]. This proximity to data sources enables fog computing to effectively support real-time applications such as augmented reality, industrial automation, autonomous driving and remote health diagnostics. In addition to latency minimization, fog computing improves data security and privacy by reducing the need to transmit sensitive data to centralized points, which is critical for applications that handle personal or critical infrastructure data [10,11].

However, the dynamic and decentralized nature of a fog environment imposes new challenges, particularly in task scheduling. Efficiently assigning and executing computational jobs across geographically dispersed and resource-constrained fog nodes is no small task. IoT tasks are typically represented as directed acyclic graphs (DAGs), where each node represents an individual computational task and each edge represents a task dependency. In these representations, scheduling means mapping tasks onto appropriate fog nodes without violating their execution order and resource requirements. The key goals of task scheduling in fog environments are to minimize makespan (the total time it takes for all tasks to complete), reduce energy consumption, optimize operational cost, and ensure high reliability and fault tolerance. These goals are typically interdependent and must be traded off to achieve maximum system performance.
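The DAG representation described above can be sketched in a few lines of Python. The five task names and their dependency edges below are hypothetical and chosen only for illustration; the snippet derives one execution order that respects every dependency, which is the ordering constraint a scheduler must preserve when it maps tasks to fog nodes.

```python
from graphlib import TopologicalSorter

# Hypothetical IoT application (illustrative only): each key is a task and
# each value is the set of tasks that must finish before it can start.
dependencies = {
    "read_sensor": set(),
    "filter": {"read_sensor"},
    "detect_anomaly": {"filter"},
    "store": {"filter"},
    "notify": {"detect_anomaly", "store"},
}


def valid_order(deps):
    """Return one task order that respects every dependency edge."""
    return list(TopologicalSorter(deps).static_order())


order = valid_order(dependencies)
print(order)
```

Any order returned by `valid_order` places a task after all of its predecessors; which fog node runs each task is a separate decision that the scheduler makes on top of this ordering.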

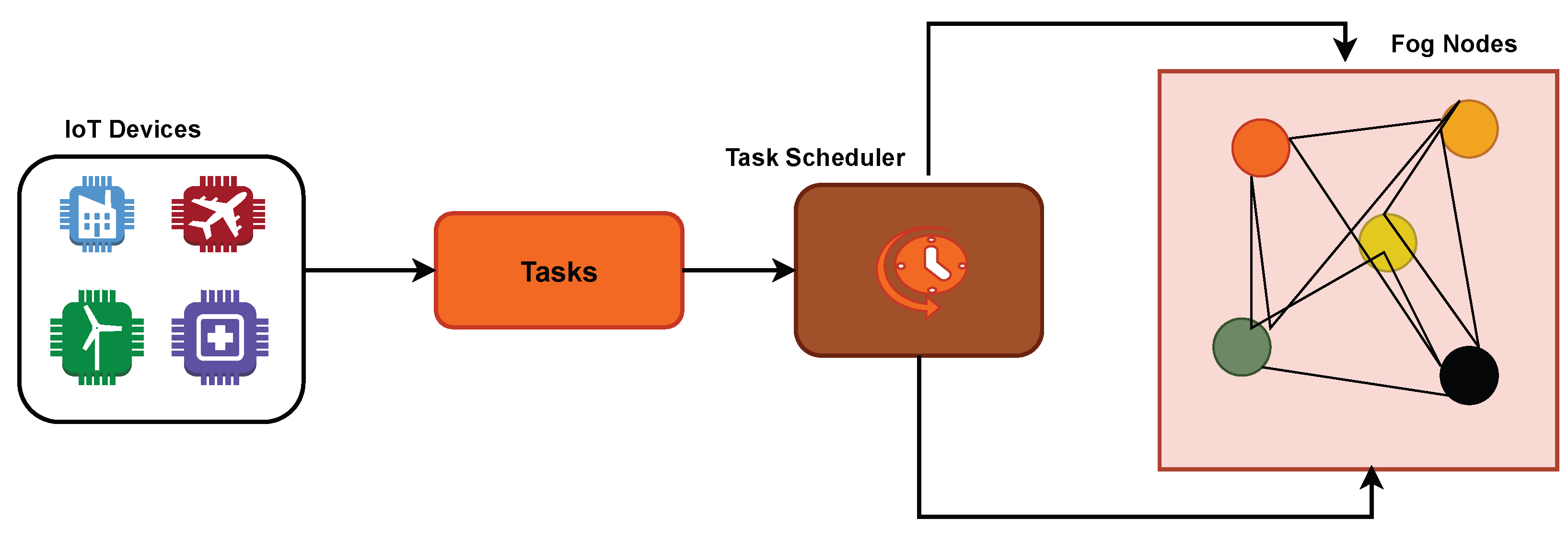

Figure 1 describes the fog computing architecture. The layers of fog computing architecture are:

IoT/End Devices Layer (Edge Layer): Comprises sensors, actuators, smart devices, and mobile devices that generate raw data. Performs data collection and can do preliminary filtering or processing. Sends data upstream to fog nodes for further processing.

Fog Layer (Intermediate Layer): Consists of fog nodes deployed near the network edge, such as gateways, routers, micro data centers, or edge servers. Responsible for local data processing, storage, and analytics, reducing latency and network congestion. Supports real-time decision making, task offloading, and context-aware services. Communicates with both the IoT layer and cloud layer.

Cloud Layer (Central Layer): Centralized, large-scale data centers for long-term storage, heavy computation, and advanced analytics. Handles non-latency-sensitive tasks that require high processing power. Acts as a backup and coordination layer for fog nodes.

Despite the advantages of fog computing, efficient task scheduling remains a major challenge due to fluctuating workloads, heterogeneous resources, limited energy availability, and the need to handle task priorities in real time. Task scheduling is an NP-hard problem, and the increasing number of devices and dynamic operational environments make it harder still. Traditional heuristic and metaheuristic methods are computationally efficient, but they often optimize only a single objective, fail to adapt to real-time demands, and do not balance multiple performance metrics, leading to poor resource utilization and degradation of QoS. To overcome this, we propose a simulated annealing (SA)-based multi-objective optimization framework for fog task scheduling. SA, a probabilistic technique inspired by metallurgical annealing, is effective in exploring large solution spaces and avoiding local optima. Our approach optimizes a composite objective function that includes makespan, energy consumption, cost, and reliability. A priority-based penalty mechanism is included to ensure that urgent tasks are not delayed by less critical ones, which improves system responsiveness. The algorithm starts with an initial task-node mapping and iteratively explores neighboring schedules. Each configuration is evaluated using a cost function, and new schedules are accepted based on temperature-controlled probabilities, balancing exploration and convergence. Gradually lowering the temperature allows stable, nearly optimal solutions to be found.

1.1. Motivation and Key Challenges

1.1.1. Motivation

The boom in IoT devices has led to big changes in computing approaches. It has brought challenges like handling huge data and meeting instant processing demands. Cloud systems have become key players because they offer the capacity to meet the fast response and dependability needs of time-sensitive IoT applications. Fog computing, on the other hand, brings computing closer to the edge. This approach lowers delays and reduces data use. However, distributing tasks this way creates issues. These include changes in resource availability, different node abilities, and unpredictable task loads. To tackle this, there is a strong need to create scheduling methods. Such methods should use the closeness of fog nodes to provide faster, more dependable, and energy-friendly task execution.

1.1.2. Challenges

To schedule tasks in fog environments, planners need to tackle multiple challenges and aim to achieve goals like shorter makespan, lower energy use, cost reductions, and higher system reliability. Many existing methods fail because they depend on fixed techniques or aim at narrow goals. This issue gets worse when task priorities change or node conditions shift. Task scheduling in fog networks is NP-hard, and traditional algorithms do not perform well in large systems. The need to assign task priorities complicates things further, as it requires prioritizing heavy tasks while ensuring fairness. Edge nodes with limited power face significant challenges in staying energy efficient. Keeping operational costs low is also necessary to sustain fog infrastructure in the long run. Handling fault tolerance and keeping nodes reliable makes scheduling harder, which creates the need for systems capable of managing potential failures. Shifting computing closer to the network’s edge helps lower delays and reduce data usage, but this distributed setup brings changing resource availability, differences in node capability, and varying workloads. These challenges highlight the demand for better scheduling methods that take advantage of nearby fog nodes to provide quick, dependable, and energy-saving task execution.

1.2. Key Contributions

We proposed a priority-aware, multi-objective task scheduling framework for fog–cloud environments. This framework explicitly integrates urgent task responsiveness into the scheduling process, which is often overlooked in prior methods.

We developed a Simulated Annealing (SA)-based scheduling algorithm that incorporates priority-aware penalties. Unlike GA- or RL-based schedulers, this approach balances cost and makespan while improving responsiveness for critical tasks.

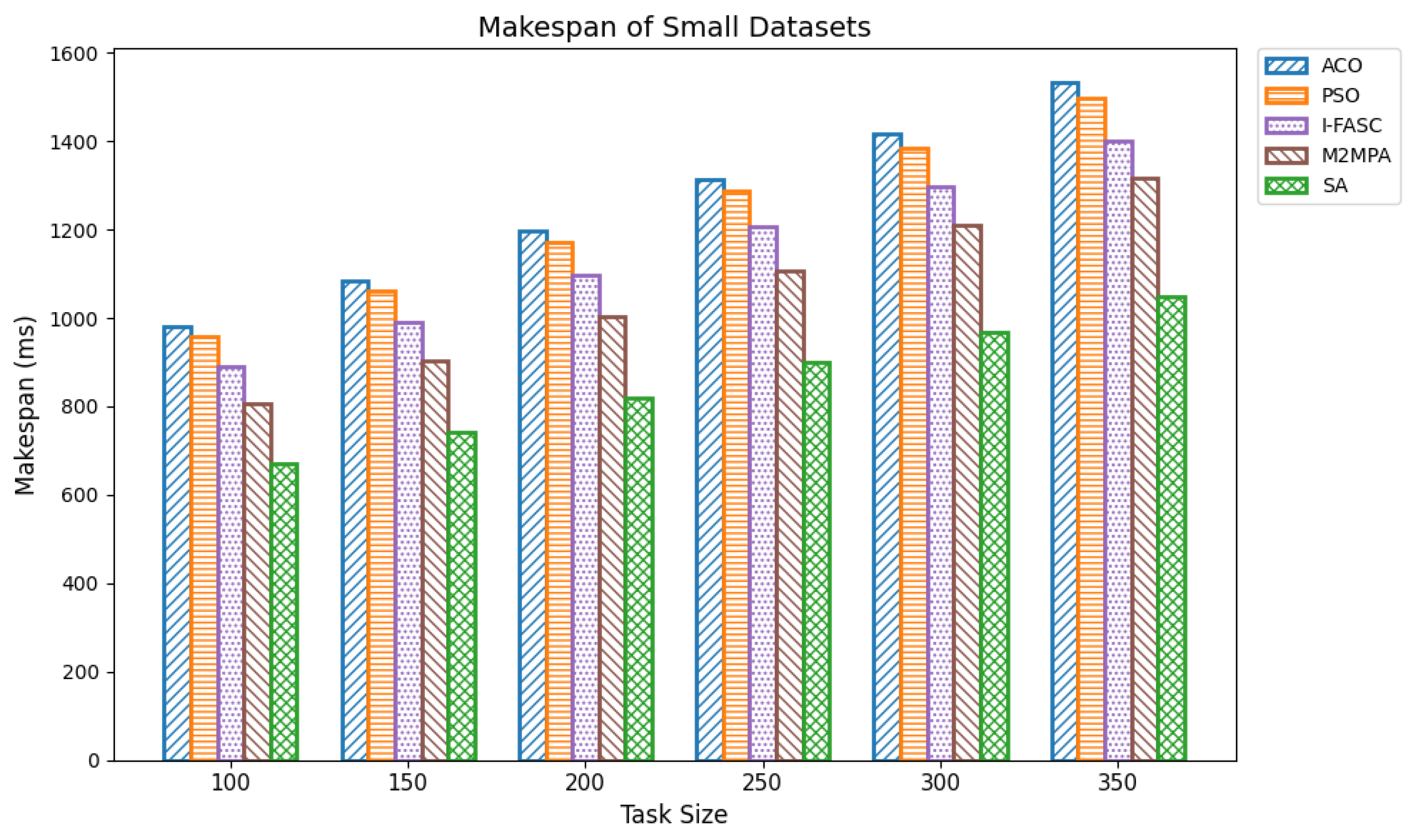

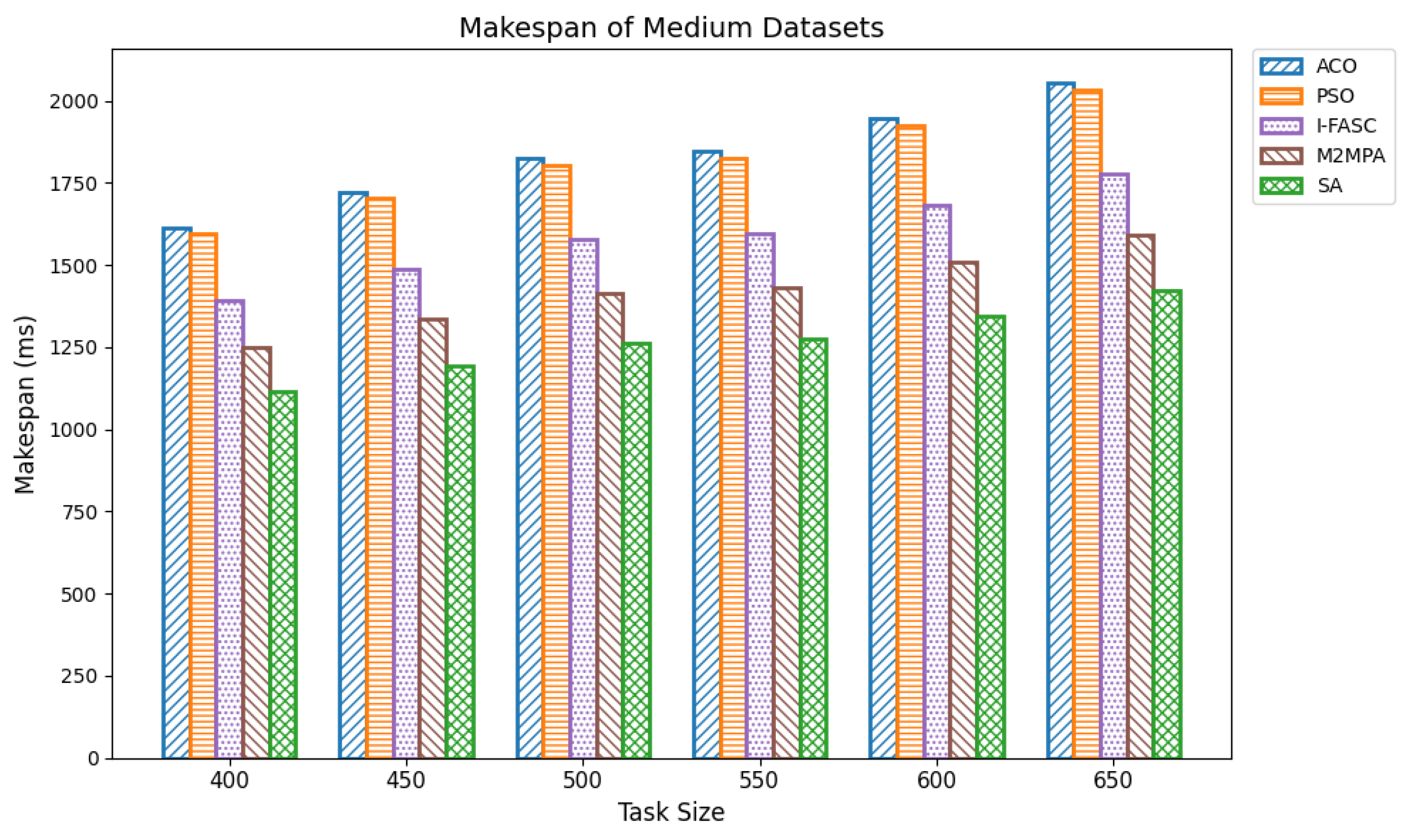

The Simulated Annealing algorithm is tested against the GoCJ dataset (task sizes between 15,000 and 900,000 units), ensuring the evaluation against realistic and varied workloads found in fog–cloud environments.

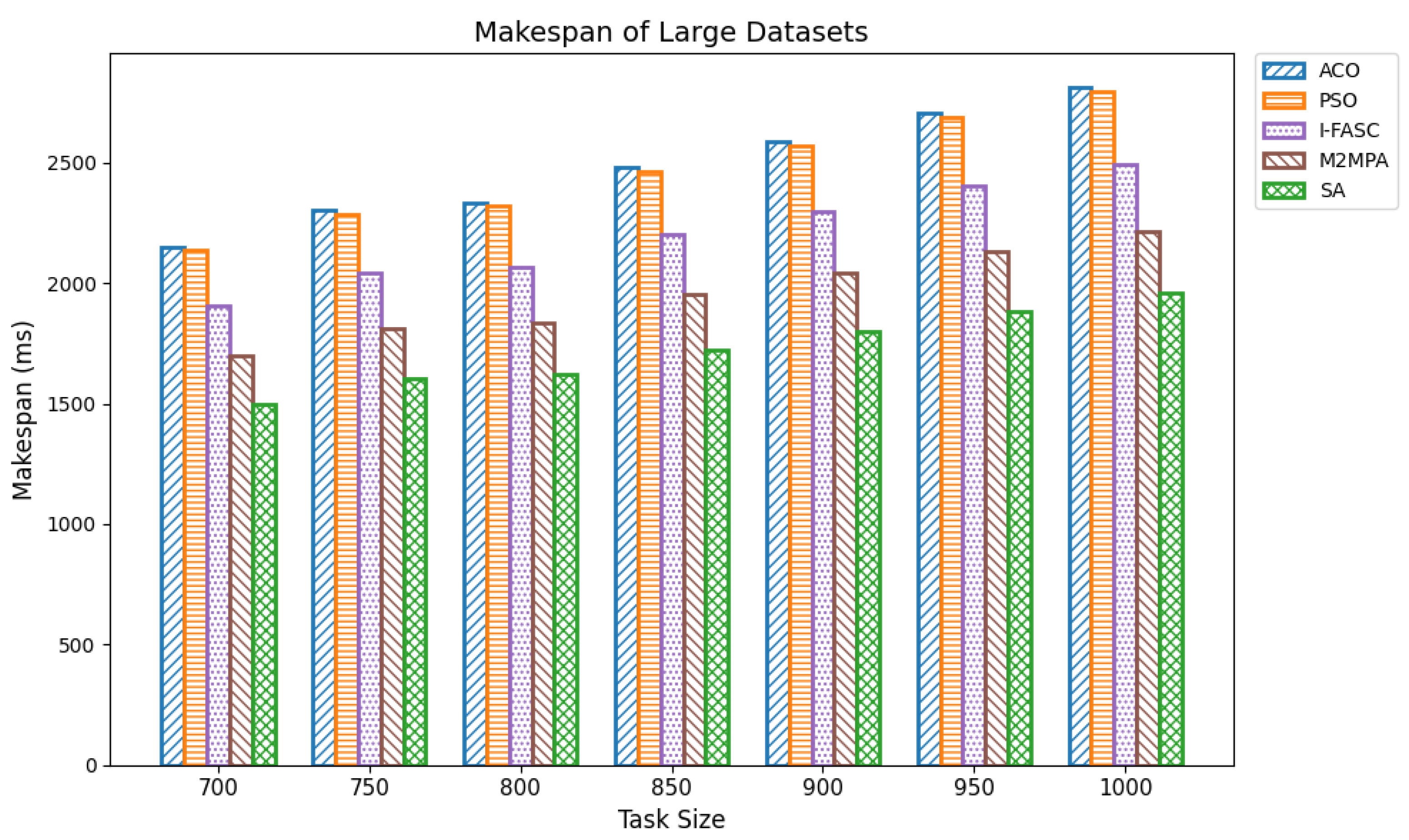

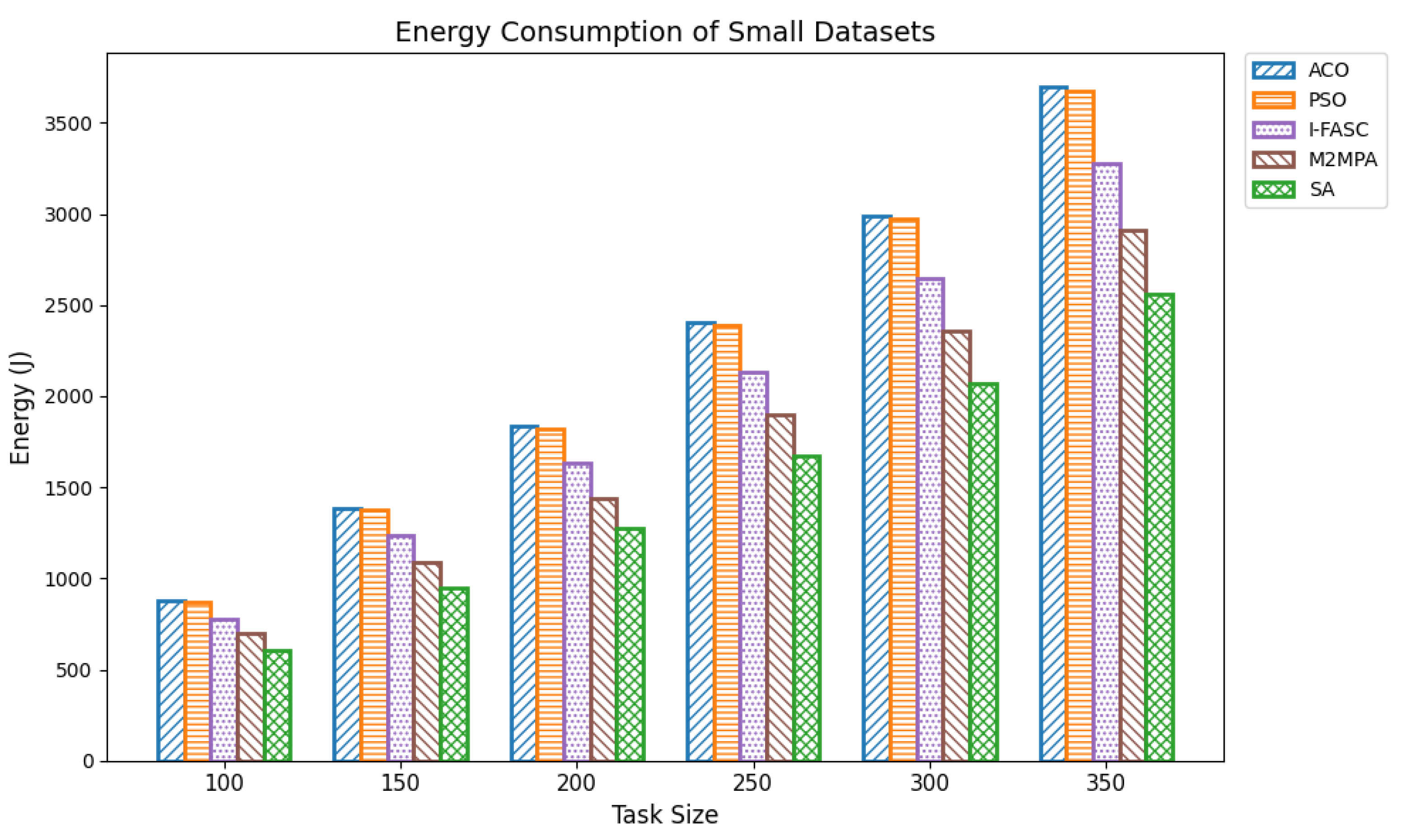

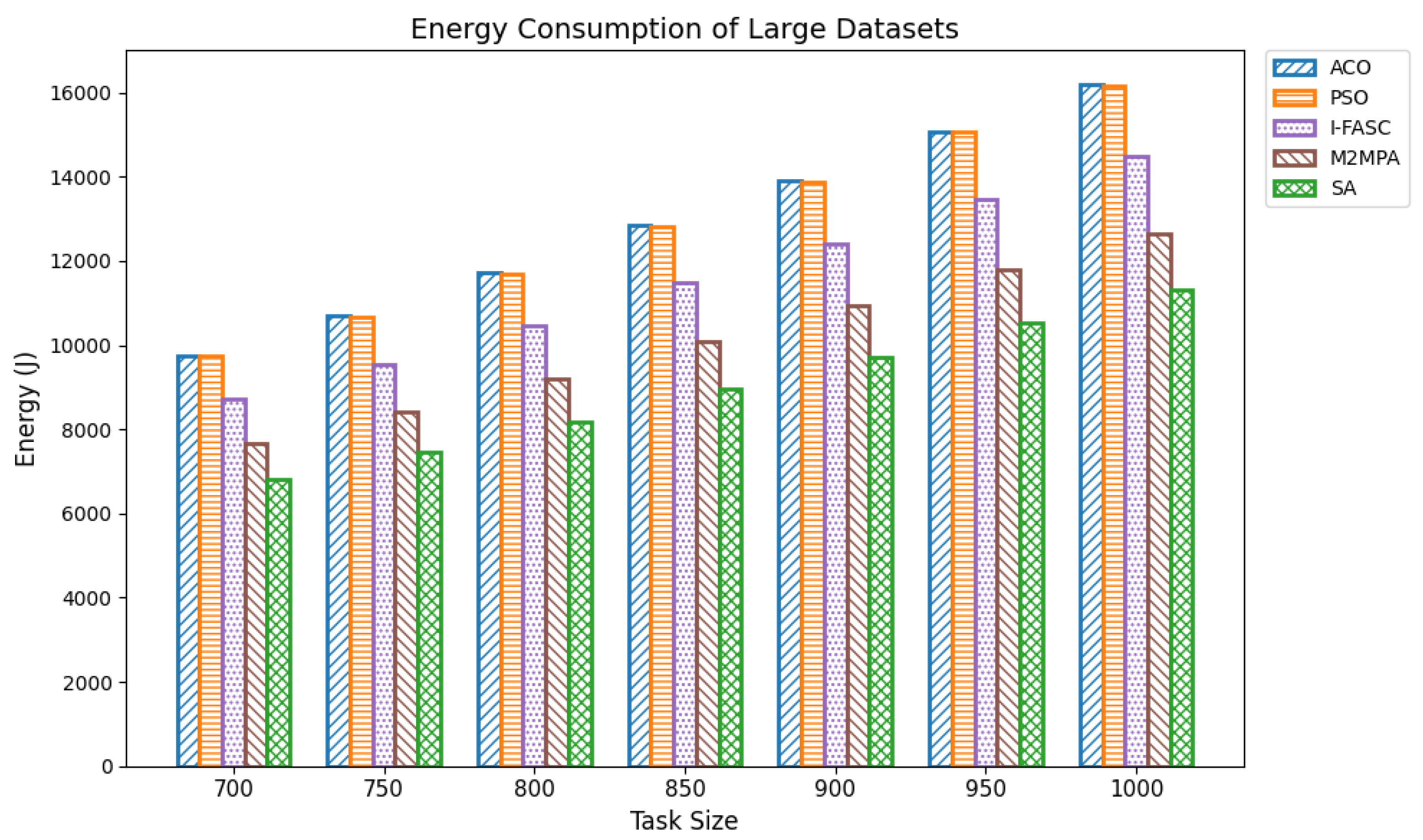

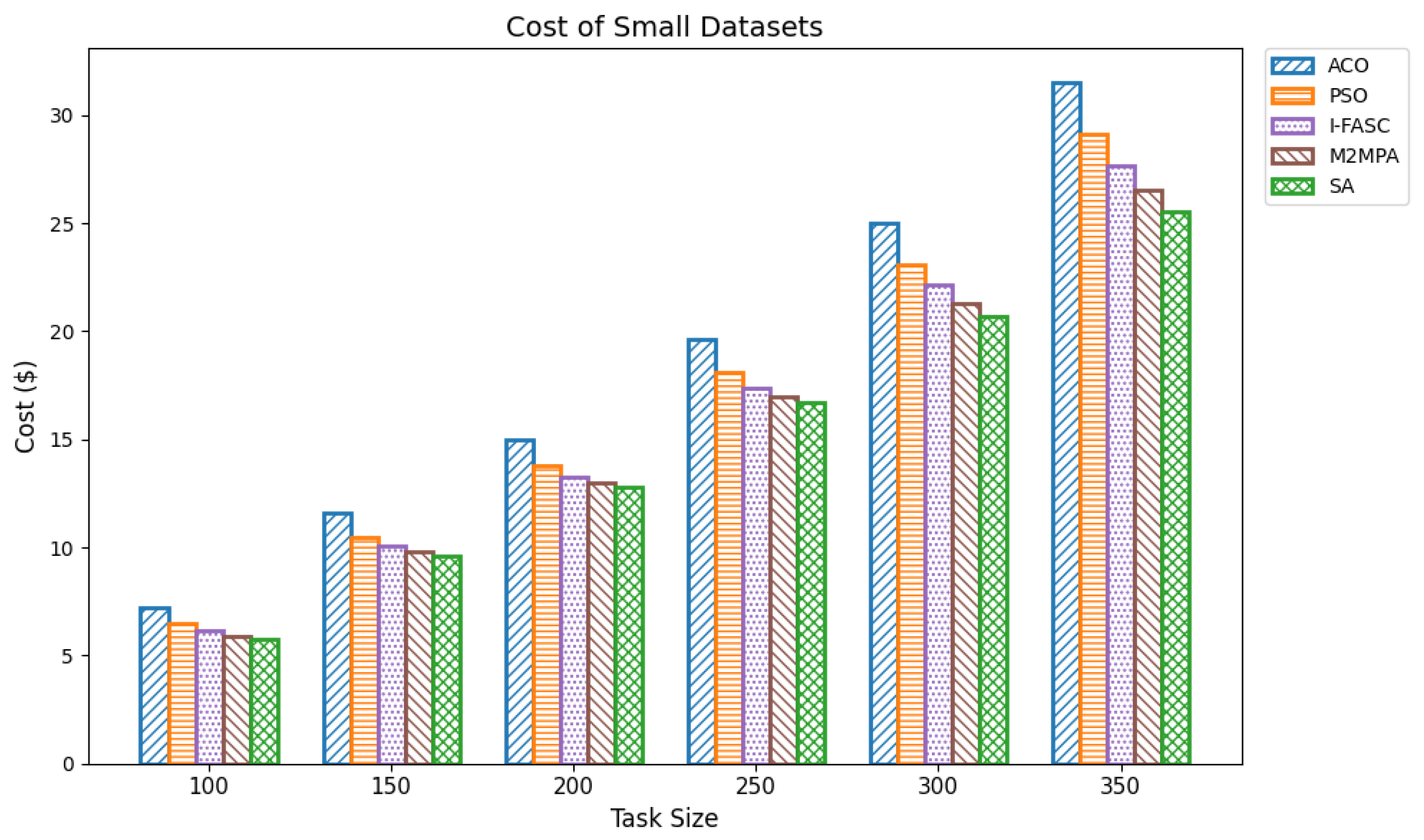

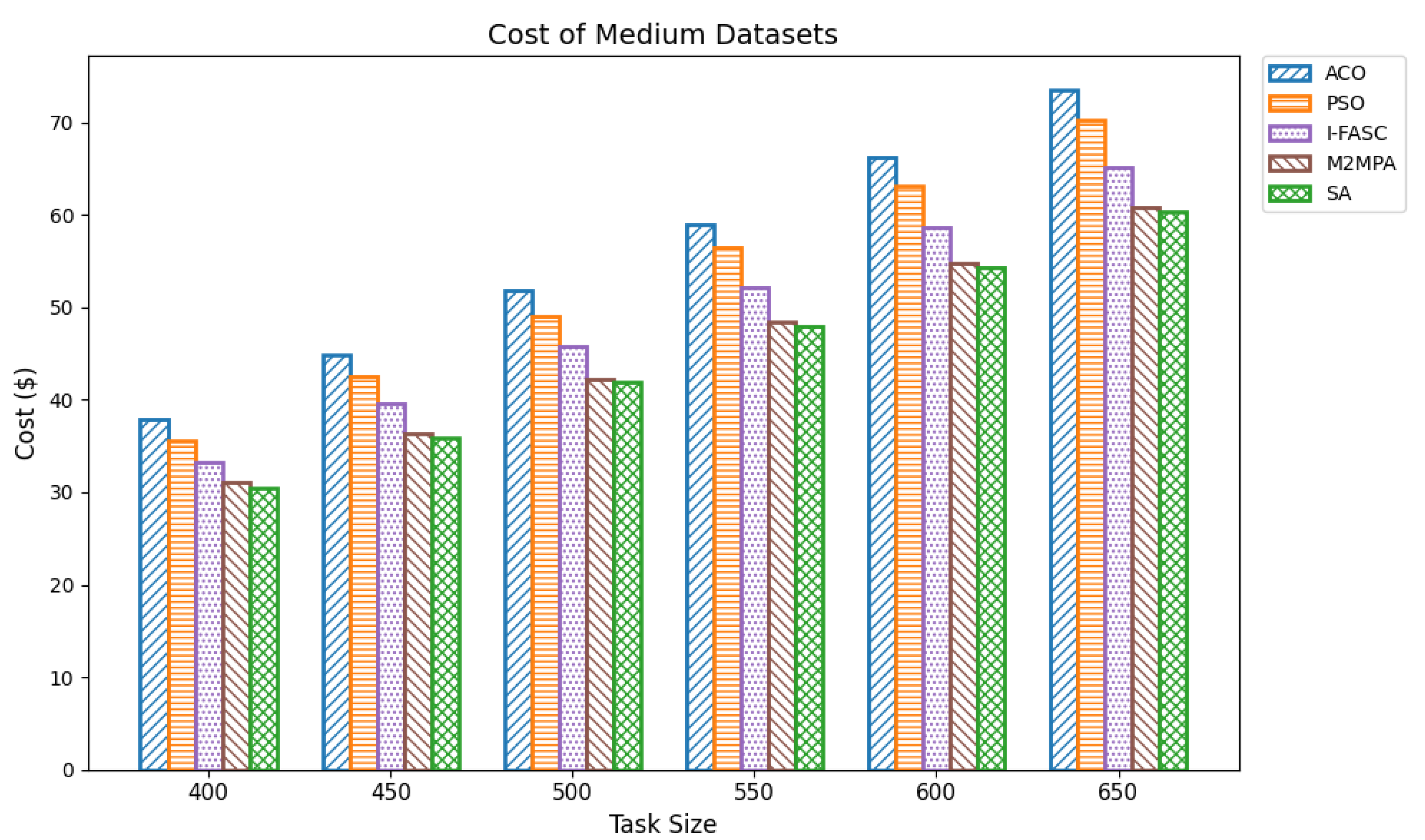

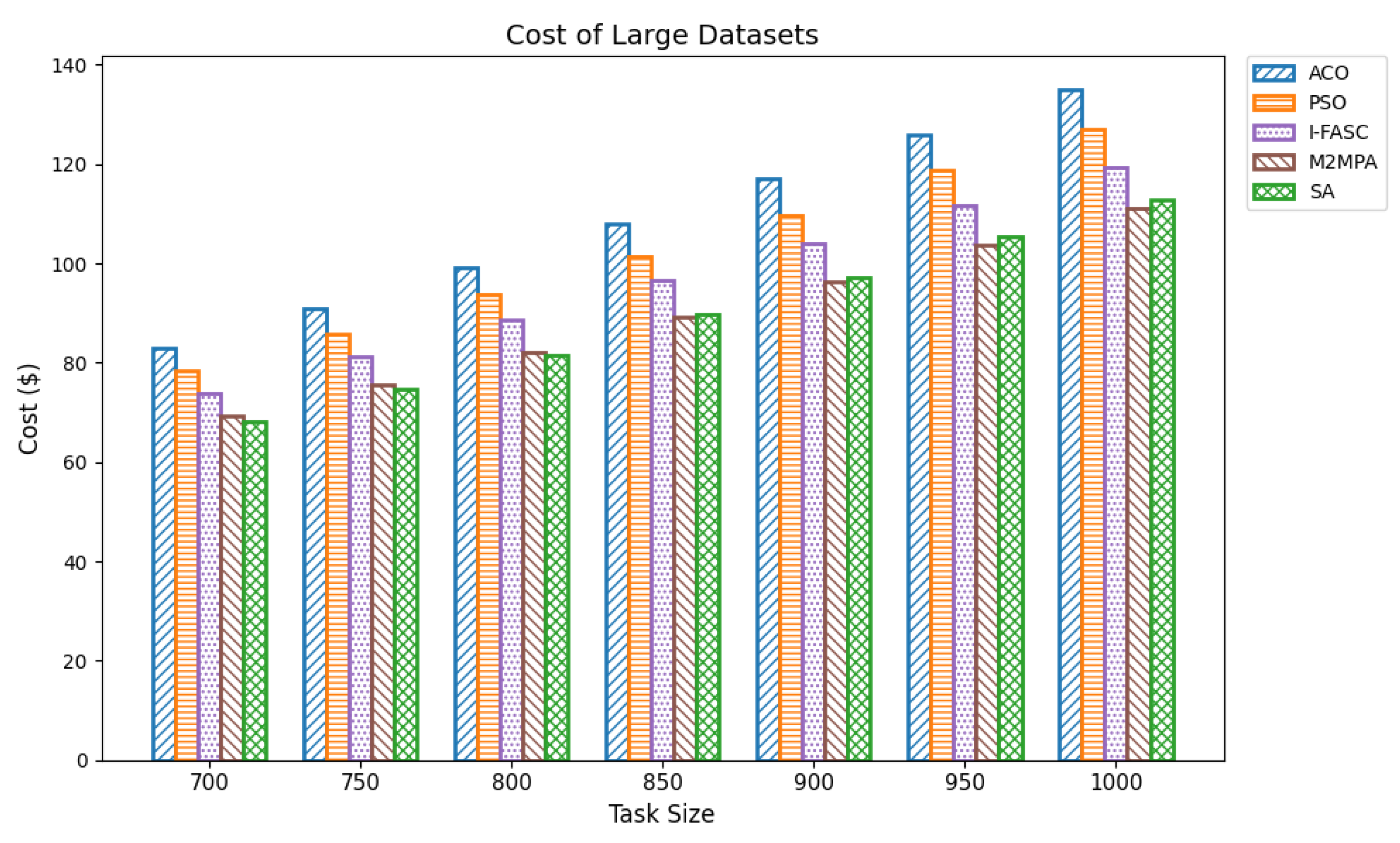

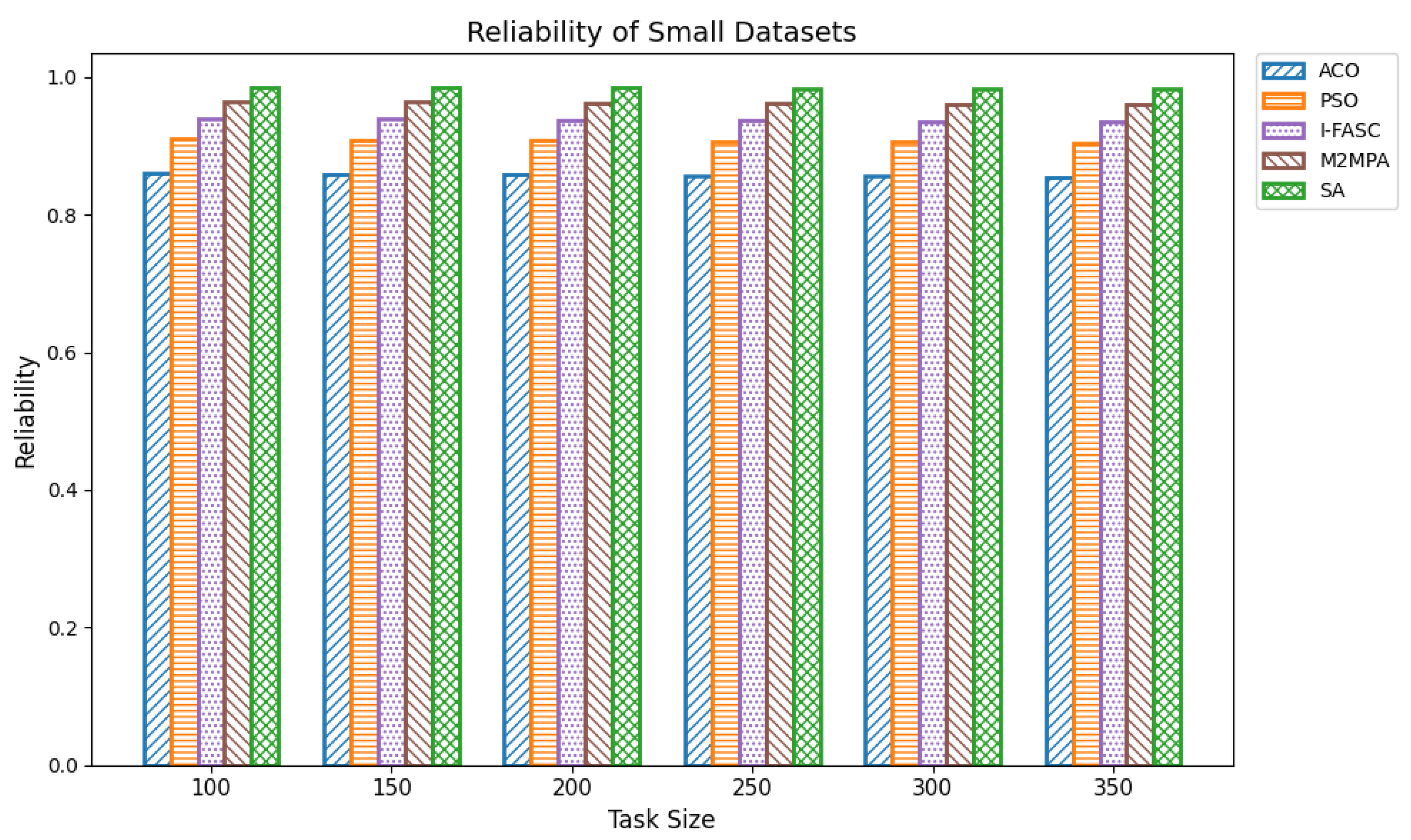

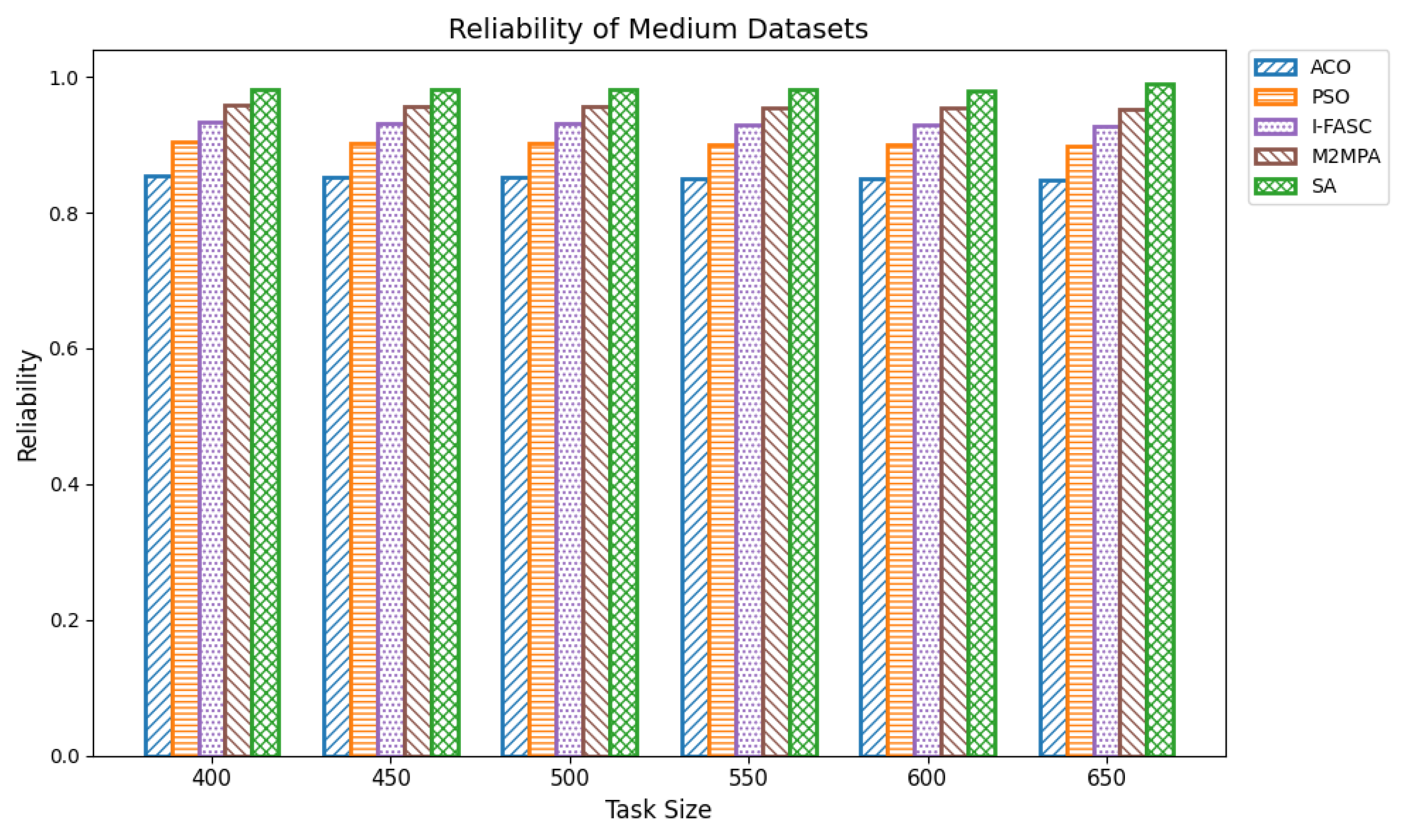

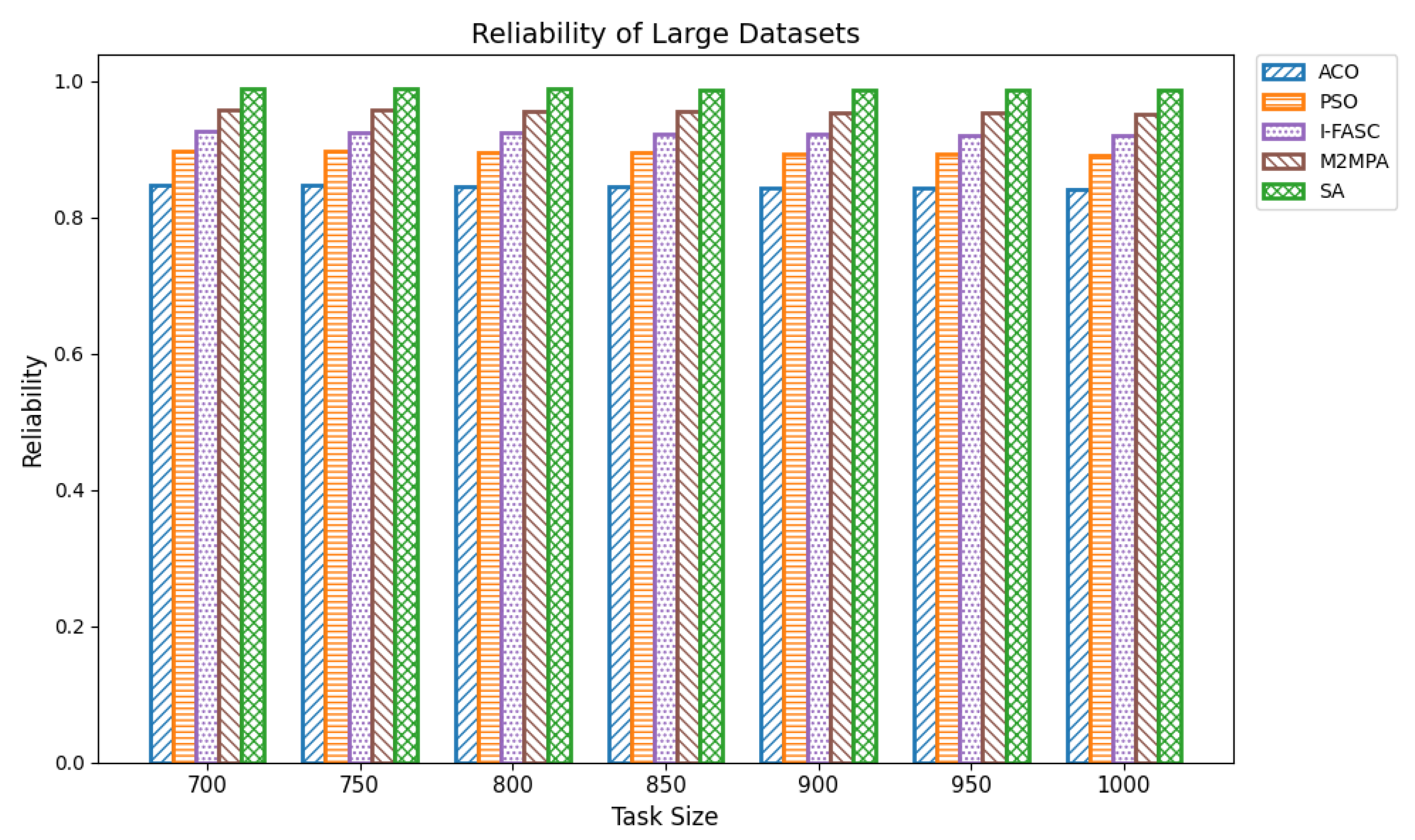

The proposed SA method is systematically compared against well-known scheduling frameworks, including ACO, PSO, I-FASC, and M2MPA. This allows us to demonstrate how the proposed model addresses their limitations, particularly in handling urgent tasks.

The remainder of the paper is organized as follows:

Section 2 reviews the related literature relevant to the proposed method.

Section 3 describes the problem formulation of the proposed work.

Section 4 illustrates the working procedure of Simulated Annealing for Task Scheduling.

Section 5 reports the experimental results and provides a detailed discussion based on performance metrics.

Finally, Section 6 concludes the paper, highlighting key findings and suggesting directions for future work.

2. Related Work

The authors of [12] examine how to schedule tasks in fog-based IoT applications to cut down on service delays and energy costs while meeting task deadlines. They introduce a scheduling approach called Fuzzy Reinforcement Learning (FRL), which combines fuzzy control and reinforcement learning techniques. The work is motivated by the growth in IoT devices, which produces huge amounts of data and demands quick processing; fog computing addresses this by bringing computing power closer to the edge to reduce delay and lower data usage. They build a mixed-integer nonlinear programming (MINLP) model to minimize the energy consumed by fog resources and the time required to complete tasks, subject to deadlines and resource limitations. To manage a large number of tasks in changing environments, the method first sorts tasks with fuzzy logic, based on deadlines, data sizes, and instruction counts. It then applies an on-policy reinforcement learning scheduling method, SARSA learning, which has a stronger influence on long-term rewards compared to Q-learning. The results reveal that the FRL method performs better than other algorithms, with a 23% improvement in service latency and an 18% improvement in energy cost. The method thus addresses both service time and energy use while meeting deadlines and making good use of resources in fog computing setups.

The authors of [13] suggest a load-balancing system specifically tailored for resource scheduling in cloud-based communication systems, focusing mainly on healthcare applications. They tackle the increasing need for real-time data processing in healthcare systems, where timely allocation of resources is the most important phase. To overcome inefficiencies in current methods such as unbalanced load assignment, absence of fault tolerance, and higher latency, the proposed framework employs RL and a genetic algorithm to assign resources dynamically. The system uses agent-based models for assigning resources and scheduling tasks, with the goal of minimizing makespan and latency while improving throughput. According to experimental tests, the proposed framework outperforms other approaches.

The authors of [14] address the intricate issue of scheduling Bag-of-Tasks (BoT) applications in fog-based Internet of Things (IoT) networks by developing a multi-objective optimization strategy. The core objective is to achieve an optimal balance among three inherently conflicting performance metrics: minimizing execution time (makespan), reducing operational costs, and enhancing system reliability. The makespan criterion focuses on ensuring timely task completion, while cost optimization considers the monetary expenses associated with CPU, memory, and bandwidth usage. Reliability is quantified through a novel metric, the Scheduling Failure Factor (SFF), which reflects the historical failure tendencies of fog nodes based on Mean-Time-To-Failure (MTTF) data.

The authors of [15] proposed a hybrid approach that combines a fuzzy inference system (FIS) with a genetic algorithm (GA) to address multi-objective optimization and constraint satisfaction problems. The proposed model integrates sequencing, scheduling, and partitioning algorithms to optimize the trade-off between users and resource providers in terms of cost, resource utilization, and makespan. The FIS performs scheduling based on constraints like budget and deadline, while the GA optimizes task allocation by improving resource usage and reducing cost and makespan. The experiments are implemented using workflows, and the approach outperforms the baselines on makespan, with reported reductions of 48% compared with EM-MOO and 41% compared with MAS-GA. Additionally, the process ensures better compliance with user-defined deadlines and budget constraints.

The authors of [16] introduce a novel approach for cyber-physical-social systems (CPSSs), utilizing a modified version of the marine predators algorithm (MPA), referred to as M2MPA. The main goal is to minimize the energy consumption and makespan during the processing of IoT tasks offloaded to fog nodes. The authors argue that the existing metaheuristic-based schedulers and traditional techniques are insufficient for solving this NP-hard problem with high precision in a reasonable time. To address this, M2MPA incorporates two key improvements over the classical MPA: replacing the fish aggregating devices (FAD) mechanism with a polynomial crossover operator to enhance exploration, and using an adaptive CF parameter to improve exploitation.

The authors of [17] proposed an improved task scheduling method called I-FASC (Improved Firework Algorithm-based Scheduling and Clustering) for fog computing environments. The authors address the challenges of minimizing the processing time and ensuring balanced load distribution across fog devices, which are critical due to the weak processing and limited resource capabilities of fog nodes compared to cloud servers. To achieve this, they introduce an enhanced version of the Firework Algorithm (I-FA), incorporating an explosion radius detection mechanism to prevent optimal solutions from being overlooked. The I-FASC method includes task clustering based on likely storage space, completion time, and bandwidth requirements, enabling targeted resource scheduling. Resources in the fog layer are categorized into three types (storage, computing, and bandwidth) and allocated according to their respective capacities. The fitness function measures both time and load values, with weights tuned to focus on either execution time or load balance according to the optimization target.

The authors of [18] propose FLight, a framework for federated learning (FL) on edge- and fog-computing platforms. The authors generalize the FogBus2 framework to develop FLight, incorporating new machine learning (ML) models and accommodating mechanisms for access control, worker selection, and storage implementation. The framework is intended to be conveniently extensible, with the capability to add different ML models as long as import/export and merging of model weights are specified. FLight also accommodates asynchronous FL and provides flexibility in storing model weights on different media, which eases deployment across various computing resources. The paper presents two worker selection algorithms in FLight to reduce training time. These algorithms emphasize quicker workers while gradually increasing the utilization of slower ones in subsequent rounds of training. This approach balances accuracy improvement with training time efficiency, avoiding the pitfalls of excluding slower workers entirely or over-relying on them. The authors also discuss the trade-offs between energy consumption, model accuracy, and time efficiency, noting that previous research often focused on accuracy-energy trade-offs without fully considering time efficiency.

The authors of [19] introduce a novel framework, EEIoMT. The aim of EEIoMT is to reduce latency, reduce energy consumption, and enhance the QoS for IoMT applications. It is specially suited for monitoring patient data, raising quality of life while reducing medical expenses. The framework classifies tasks into three categories (normal, moderate, and critical) so that they can be scheduled efficiently with reduced energy consumption. The framework is evaluated with the iFogSim2 simulator, and the results demonstrate that EEIoMT significantly reduces energy consumption, network utilization, and response time compared to existing methods. However, the authors did not consider important parameters like cost and reliability for efficient scheduling.

The authors of [20] address the challenge of task scheduling in fog computing environments, proposing an optimized solution using an ant colony algorithm to achieve multi-objective goals such as minimizing makespan, improving load balancing, and reducing energy consumption. The authors are specifically interested in Directed Acyclic Graph (DAG) problems, which are prevalent in most applications involving effective resource allocation and processing. The proposed method starts by initializing pheromone levels on all possible routes, with ants deployed randomly on these routes to search for potential solutions. Each ant computes transition probabilities from heuristic information and pheromone trails, and the pheromone levels are updated after every iteration to steer subsequent searches towards better solutions. This mirrors how ants find the shortest path to food through positive feedback, and it lets the search converge towards near-optimal solutions for the NP-hard DAG task scheduling problem.

The authors of [21] introduced the EDLB framework, which combines MPSO and CNN to address the limitations of existing load balancing methods. The framework is composed of three modules that together achieve real-time load balancing: Fog Resource Monitor (FRM), CNN-Based Classifier (CBC), and Optimized Dynamic Scheduler (ODS). FRM monitors the resources and stores the data, CBC calculates the fitness function of the servers, and ODS schedules tasks dynamically by optimizing resource utilization and latency. In the evaluation, EDLB performs better than GA, BLA, WRR, and EPSO in terms of cost, makespan, resource utilization and load balancing. However, the authors did not consider important parameters like reliability and energy consumption to improve the efficiency of scheduling.

The primary aim of HealthFog [22] is to enhance real-time data processing and reduce latency for critical healthcare tasks by leveraging fog computing’s proximity to end-users. HealthFog uses fog nodes to process and analyze cardiac patient data locally, minimizing reliance on cloud resources and improving overall efficiency. The proposed framework consists of three main components: workload management, resource arbitration, and deep learning modules. The workload manager monitors task queues and job requests, while the arbitration component assigns fog or cloud resources for optimal load balancing. The deep learning module utilizes patient datasets for training, testing, and validation in a 7:2:1 ratio, enabling automated analysis of cardiac data. By deploying HealthFog within the FogBus framework, the system achieves seamless integration with IoT-edge-cloud environments, ensuring real-time data processing. The authors identify a number of benefits of fog computing compared to conventional cloud-based systems, including lower data movement, removal of bottlenecks due to centralized systems, reduced data latency, location awareness, and enhanced security for encrypted data. These capabilities render fog computing well-suited to time-sensitive healthcare applications, like continuous patient monitoring.

The challenge of providing reliable execution of tasks in fog computing systems is addressed by the authors of [23] through a learning-based primary-backup approach named ReLIEF (Reliable Learning based In Fog Execution). The authors concentrate on enhancing the reliability of running deadline-constrained tasks on fog nodes while reducing delays. The strategy is aimed especially at applications that demand low-latency processing near the data source. ReLIEF provides a framework for choosing both primary and secondary fog nodes for task processing. In contrast to conventional methods, where a task is allocated to a single fog node without redundancy, this method provides fault tolerance by allocating a standby node to process tasks in case the primary one fails or encounters delays. The selection process uses machine learning algorithms to estimate the most appropriate primary and backup nodes according to capabilities such as computational power, network status, and past performance data. The paper compares ReLIEF with three state-of-the-art approaches: DDTP (Dynamic Deadline-aware Task Partitioning), MOO (Multi Objective Optimization), and DPTO (Deadline and Priority aware Task Offloading). Experimental results show that ReLIEF performs better than these algorithms on reliability, with a greater percentage of tasks meeting their deadlines even in the face of fluctuating workload levels and resource limitations. In particular, the strategy performs well under high uncertainty or unforeseen environmental conditions, including sudden spikes in network latency or temporarily unavailable fog nodes.

As Table 1 summarizes for existing research on task scheduling, ACO and PSO are metaheuristic approaches used for global search and task allocation. However, they often suffer from premature convergence and cannot adapt well to dynamic fog–cloud workloads, which reduces their applicability in latency-sensitive environments. Frameworks like I-FASC and M2MPA are important for fog scheduling, but their focus is mainly on cost efficiency and throughput, and they do not address priority handling or urgent task responsiveness, both of which are critical in fog computing. Recent research incorporating reinforcement learning and deep learning is adaptive, but suffers from high computational overhead. Hybrid fog–cloud models utilize fog for latency-critical tasks and cloud for compute-intensive tasks, but find it difficult to balance workload against reliability. To solve these issues, our Simulated Annealing (SA) model incorporates a priority-aware penalty mechanism that balances cost and makespan while also ensuring responsiveness to urgent tasks. Therefore, while ACO, PSO, I-FASC, and M2MPA are valuable baselines, their limitations in addressing urgency and priority-awareness highlight the necessity of our SA-based solution.

3. Problem Formulation

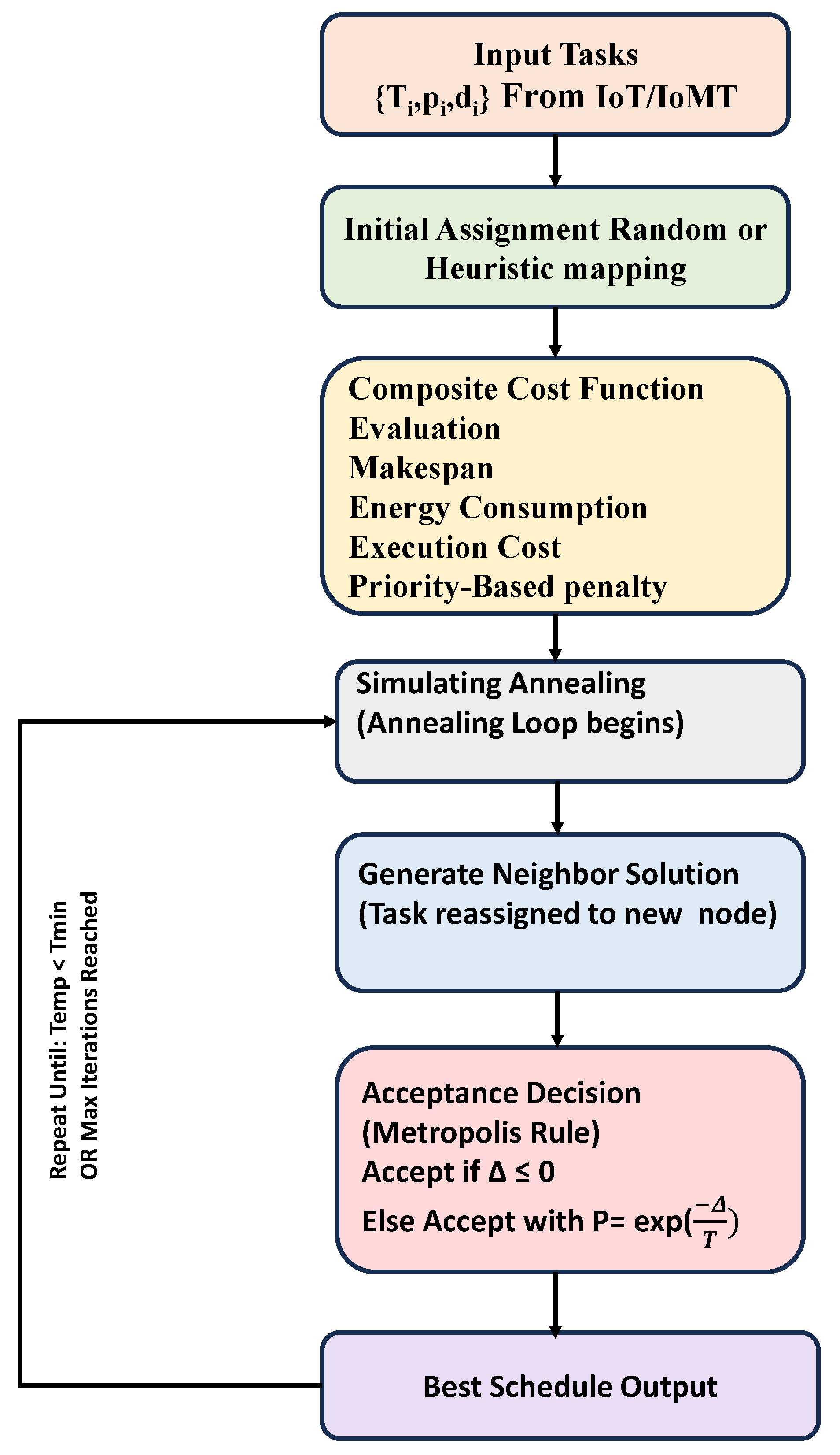

Figure 2 shows the proposed architecture of the priority-aware multi-objective task scheduling framework in fog computing. In the architecture, there are three major components:

Input Layer: Tasks generated from IoT/IoMT devices are collected, each having parameters such as execution time, reliability requirement, energy demand, cost factor, and priority level. These inputs form the basis for scheduling decisions.

Scheduling Core: At the heart of the framework lies the Simulated Annealing (SA)-based scheduler. This module explores the task-to-node mapping space using a probabilistic search strategy that balances exploration and exploitation. The optimization works through a combined cost function that ties together four main goals: makespan, energy use, execution cost, and reliability. A priority-focused penalty is used to make sure important tasks go to nodes that are less crowded and more dependable. Each part of the architecture corresponds to specific formulas (Equations (1)–(7)), which are laid out in the problem description. This connects the theoretical aspects with how the system functions.

Execution & Feedback Layer: After deciding on schedules, the system runs tasks across fog nodes. It tracks metrics such as how long tasks take, how work is shared, and how resources are used. These results go back to the scheduler, allowing the task allocation process to improve through repeated adjustments.

Within the context of fog computing systems where many tasks are being scheduled across a distributed collection of edge and fog nodes, it is necessary to integrate multi-objective optimization techniques that account for task-specific properties like execution time, energy usage, cost, node reliability, and most importantly, task priority. Task priority is instrumental in making the system responsive and efficient, especially for time-critical or mission-critical applications. Each task $t_i$ is assigned a priority level $p_i$, such that higher values of $p_i$ represent higher urgency. The objective of the scheduler is to allocate the high-priority jobs to less-loaded and more reliable nodes to reduce execution delays and minimize resource contention. The Simulated Annealing (SA) algorithm is used as the fundamental optimization paradigm, able to navigate intricate scheduling spaces and escape local minima. For the purpose of directing the optimization, a composite cost function is specified, with four primary performance measures: energy usage, execution cost, makespan, and a priority-aware penalty on tasks. The makespan $M$ is the maximum completion time among all fog nodes and reflects the overall system latency. It is formally defined as:

$$M = \max_{1 \le j \le N} \sum_{i=1}^{T} e_i \cdot \mathbb{1}(a_i = j) \tag{1}$$

where $e_i$ is the execution duration of task $t_i$, $a_i$ is the index of the node assigned to $t_i$, $N$ is the total number of fog nodes, $T$ is the total number of tasks, and $\mathbb{1}(\cdot)$ is the indicator function, which equals 1 if the condition inside holds true and 0 otherwise. Minimizing $M$ spreads tasks out so that all nodes have a similar workload, cutting down the time needed to finish the whole schedule.
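The makespan definition can be illustrated with a short Python sketch. The function and variable names (`node_loads`, `makespan`, `exec_times`, `assignment`) and the sample values are assumptions made for this example and do not come from the paper; the code treats the completion time of a node as the sum of the execution durations of the tasks mapped to it.

```python
def node_loads(exec_times, assignment, num_nodes):
    """Sum the execution durations of the tasks mapped to each node."""
    loads = [0.0] * num_nodes
    for duration, node in zip(exec_times, assignment):
        loads[node] += duration
    return loads


def makespan(exec_times, assignment, num_nodes):
    """Makespan: the largest per-node total execution time."""
    return max(node_loads(exec_times, assignment, num_nodes))


# Illustrative values: four tasks on two fog nodes.
exec_times = [4.0, 2.0, 3.0, 1.0]
assignment = [0, 1, 1, 1]  # task i runs on node assignment[i]
M = makespan(exec_times, assignment, num_nodes=2)
print(M)  # node 0 carries 4.0, node 1 carries 6.0, so the makespan is 6.0
```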

Total energy consumed, $E$, is determined by summing the energy each task consumes while running. Assuming each fog node consumes power at a constant rate $P$ (watts), the total energy consumption for all tasks is:

$$E = \sum_{i=1}^{T} P \cdot e_i \tag{2}$$

where $e_i$ is the execution duration of task $t_i$ and $T$ is the number of tasks. This measure, expressed in joules or kilojoules, plays an important role in situations where energy resources are scarce, such as green computing scenarios or battery-powered devices. The running cost $C$ is calculated by multiplying the time it takes to complete each task by a unit cost rate $c$, which represents the monetary cost per unit of computation time:

$$C = \sum_{i=1}^{T} c \cdot e_i \tag{3}$$

This lets the scheduler take economic factors into account, which becomes important when fog resources charge based on the amount of computation used.
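As a small worked illustration of the energy and cost measures, the sketch below assumes a single constant power rate and a single unit cost rate shared by all nodes, as in the text. The function names and the numeric values are hypothetical.

```python
def total_energy(exec_times, power_watts):
    """Energy in joules: constant power multiplied by total execution time."""
    return power_watts * sum(exec_times)


def running_cost(exec_times, unit_cost_rate):
    """Monetary cost: unit cost rate multiplied by total execution time."""
    return unit_cost_rate * sum(exec_times)


# Illustrative values: four tasks with durations in seconds.
exec_times = [4.0, 2.0, 3.0, 1.0]
E = total_energy(exec_times, power_watts=10.0)    # 10 W over 10 s
C = running_cost(exec_times, unit_cost_rate=0.5)  # 0.5 cost units per second
print(E, C)  # 100.0 5.0
```

Under these uniform-rate assumptions both quantities depend only on the total execution time, not on which node runs which task; they start to discriminate between schedules once power or cost rates differ per node.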

The system’s reliability $R$ is defined as the likelihood that all planned tasks finish without failure. If we assume each node has a reliability factor $r$, and if we consider the nodes to be independent, the reliability for carrying out all $T$ tasks becomes:

$$R = \prod_{i=1}^{T} r = r^{T} \tag{4}$$

This product assumes all nodes share the same reliability to keep things simple. However, it can be extended to heterogeneous environments where each node $j$ has its own reliability $r_j$.
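The reliability product can be checked numerically with a brief sketch. The uniform case follows the text directly; the per-node variant is only one possible reading of the extension mentioned above (it multiplies the reliability of the node that hosts each task) and is an assumption of this example, as are the function names and values.

```python
import math


def uniform_reliability(r, num_tasks):
    """All nodes share reliability r, so the product collapses to r ** T."""
    return r ** num_tasks


def per_node_reliability(node_reliability, assignment):
    """Assumed extension: multiply the reliability of each task's host node."""
    return math.prod(node_reliability[node] for node in assignment)


R_uniform = uniform_reliability(0.99, num_tasks=4)
R_hetero = per_node_reliability([0.99, 0.95], assignment=[0, 1, 1, 1])
print(round(R_uniform, 4), round(R_hetero, 4))
```

Even with highly reliable nodes the product shrinks as the number of tasks grows, which is why reliability matters more for large task sets.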

To integrate task priority explicitly into the optimization model, a priority penalty function $P_{\text{penalty}}$ is introduced. This penalty discourages assigning high-priority tasks to heavily loaded nodes, which would result in increased waiting time and QoS degradation. The priority penalty is defined as:

$$P_{\text{penalty}} = \sum_{i=1}^{T} p_i \cdot W_{a_i} \tag{5}$$

where $W_{a_i}$ is the total workload on the node $a_i$ to which task $t_i$ is assigned. The workload $W_j$ on node $j$ is computed as:

$$W_j = \sum_{i=1}^{T} e_i \cdot \mathbb{1}(a_i = j) \tag{6}$$

Equation (6) computes the workload of node $j$ by adding up the demands ($e_i$) of all tasks that are mapped to it. The indicator function $\mathbb{1}(a_i = j)$ guarantees that only tasks mapped onto node $j$ are added.
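A minimal sketch of the priority penalty follows, assuming the penalty is the sum over tasks of each task's priority multiplied by the total workload of its host node. The function name and the sample priorities are hypothetical; the example compares two assignments to show how placing the most urgent task on the busier node raises the penalty.

```python
def priority_penalty(priorities, exec_times, assignment, num_nodes):
    """Sum of priority * host-node workload over all tasks (assumed form)."""
    workload = [0.0] * num_nodes
    for duration, node in zip(exec_times, assignment):
        workload[node] += duration  # only tasks mapped to a node add to it
    return sum(p * workload[node] for p, node in zip(priorities, assignment))


exec_times = [4.0, 2.0, 3.0, 1.0]
priorities = [9, 2, 5, 1]  # task 0 is the most urgent

# Urgent task alone on the lighter node (loads 4.0 and 6.0).
light = priority_penalty(priorities, exec_times, [0, 1, 1, 1], num_nodes=2)
# Urgent task shares the heavier node (loads 4.0 and 6.0, roles swapped).
heavy = priority_penalty(priorities, exec_times, [1, 1, 0, 0], num_nodes=2)
print(light, heavy)  # 84.0 90.0
```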

The introduction of energy, cost, reliability, and the priority-aware penalty into the optimization model is driven by realistic constraints of fog computing. Energy, Equation (2), accounts for the limited power budgets of fog nodes; the cost of execution, Equation (3), captures the economic viability of using resources; reliability, Equation (4), provides assurance against failures in heterogeneous nodes; and the priority penalty, Equation (5), ensures responsiveness towards time-critical tasks. Together, these metrics meet the core QoS objectives of fog-enabled IoT systems and thus make the optimization framework theoretically sound and practically feasible.

This penalty ensures that tasks with higher $p_i$ values (more urgent tasks) significantly increase the cost when scheduled on overloaded nodes, encouraging the scheduler to favor lightweight and fast nodes for important tasks.

Bringing together all the above components, the total cost function used for Simulated Annealing, Equation (7), is a weighted combination of these terms.

Here, the coefficients attached to the individual terms are tunable weights that determine the importance of each metric in the optimization process. These weights allow the scheduler to prioritize different objectives depending on the application context, for instance, assigning greater weight to the priority penalty in scenarios where task urgency dominates.
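One way to picture the weighted combination is the sketch below. It assumes a plain weighted sum of makespan, energy, execution cost, and priority penalty; the exact form of the paper's total cost function is not reproduced here, reliability is left out for brevity, and the weight names, default rates, and sample values are all hypothetical.

```python
def composite_cost(exec_times, priorities, assignment, num_nodes,
                   weights=(1.0, 1.0, 1.0, 1.0),
                   power_watts=10.0, unit_cost_rate=0.5):
    """Assumed weighted sum of makespan, energy, cost and priority penalty."""
    w_makespan, w_energy, w_cost, w_penalty = weights
    workload = [0.0] * num_nodes
    for duration, node in zip(exec_times, assignment):
        workload[node] += duration
    makespan = max(workload)
    energy = power_watts * sum(exec_times)
    cost = unit_cost_rate * sum(exec_times)
    penalty = sum(p * workload[n] for p, n in zip(priorities, assignment))
    return (w_makespan * makespan + w_energy * energy
            + w_cost * cost + w_penalty * penalty)


exec_times = [4.0, 2.0, 3.0, 1.0]
priorities = [9, 2, 5, 1]
assignment = [0, 1, 1, 1]

balanced = composite_cost(exec_times, priorities, assignment, num_nodes=2)
urgency_first = composite_cost(exec_times, priorities, assignment, num_nodes=2,
                               weights=(1.0, 1.0, 1.0, 5.0))
print(balanced, urgency_first)  # 195.0 531.0
```

Raising the last weight makes the penalty term dominate, which is the kind of tuning the text describes for urgency-driven applications. In practice the terms have different units and scales, so some normalization would likely be needed before the weights are comparable.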

While optimizing, the Simulated Annealing method explores the solution space by creating new candidate schedules in which tasks are reassigned to different nodes. If the new schedule $S'$ ends up with a total cost smaller than the current schedule $S$, it gets accepted. If not, it might still be accepted with a probability determined by the Boltzmann distribution:

$$P_{\text{accept}} = \exp\left(-\frac{\Delta C}{T}\right)$$

where $\Delta C = \text{Cost}(S') - \text{Cost}(S)$, and $T$ here represents the current temperature in the annealing schedule (not the task count), which falls over time. Algorithm 1 can optimize and converge toward an optimal or nearly optimal schedule as the temperature drops, because there is less chance of accepting subpar solutions. By adding task priority to the multi-criteria cost model, in addition to makespan, energy, cost, and reliability, the scheduler can adapt dynamically to crucial application demands without sacrificing system-wide efficiency. The result is a robust scheduling framework suitable for the latency-sensitive and heterogeneous characteristics of modern fog computing systems.
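The acceptance rule described above is the standard Metropolis criterion, and it can be sketched as follows. The function name `accept` and the injectable `rng` argument are choices made for this example so the behavior can be tested deterministically; they are not part of the paper.

```python
import math
import random


def accept(current_cost, new_cost, temperature, rng=random):
    """Accept improvements always; accept worse moves with exp(-delta / T)."""
    delta = new_cost - current_cost
    if delta < 0:
        return True
    return rng.random() < math.exp(-delta / temperature)


# A better schedule is always accepted.
print(accept(current_cost=10.0, new_cost=5.0, temperature=1.0))
# A worse schedule at a very low temperature is effectively never accepted.
print(accept(current_cost=5.0, new_cost=10.0, temperature=0.001))
```

At high temperature the exponent is close to zero, so worse moves are accepted often and the search explores widely; as the temperature falls, the same cost increase becomes far less likely to be accepted.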

Algorithm 1 Priority-Aware Task Scheduling in Fog Computing

Require: T: set of tasks; priority of each task (1 to 10; higher = higher priority); execution time of each task; N: set of fog nodes

Ensure: schedule[]: final task-to-node assignments; workload[]: workload per node after assignment

- 1: Initialize workload[]
- 2: Initialize schedule[]
- 3: for each task in T do
  - 4: best_node ← None
  - 5: min_cost ← ∞
  - 6: for each node in N do
    - 7: load ← workload[node]
    - 8: cost ← cost of assigning the task to the node, computed from load and the task's priority
    - 9: if cost < min_cost then
      - 10: min_cost ← cost
      - 11: best_node ← node
    - 12: end if
  - 13: end for
  - 14: Assign task to best_node
  - 15: workload[best_node] ← workload[best_node] + execution time of task
  - 16: schedule[task] ← best_node
- 17: end for

The time complexity of the priority-aware task scheduling algorithm is $O(n \cdot k)$, where $n$ denotes the number of tasks and $k$ denotes the number of fog nodes. Initializing the workload and schedule arrays takes $O(n + k)$ time. The outer loop (lines 3–17) runs for $n$ iterations, and the inner loop (lines 6–13) takes $O(k)$ per iteration because it scans all $k$ available nodes to find the best assignment for the task. The total is therefore $O(n + k) + O(n \cdot k)$. The $n \cdot k$ term dominates for larger inputs, so the overall time complexity is $O(n \cdot k)$.
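The listing above can be sketched in Python as follows. This is an illustrative reading under stated assumptions, not the authors' implementation: the per-node cost on line 8 is assumed to be the task's priority multiplied by its finish time on that node, and the names `priority_aware_schedule`, `exec_time`, and `priority` are hypothetical. Tasks are visited in input order, as in the listing.

```python
import math
from typing import Dict, List, Tuple


def priority_aware_schedule(
    exec_time: Dict[str, float],
    priority: Dict[str, int],
    nodes: List[str],
) -> Tuple[Dict[str, str], Dict[str, float]]:
    """Greedy assignment: each task goes to the node with the lowest cost."""
    workload = {node: 0.0 for node in nodes}   # O(k) initialization
    schedule: Dict[str, str] = {}
    for task in exec_time:                     # outer loop: n iterations
        best_node, min_cost = None, math.inf
        for node in nodes:                     # inner loop: k iterations
            load = workload[node]
            # ASSUMED cost: finish time on this node scaled by task priority.
            cost = priority[task] * (load + exec_time[task])
            if cost < min_cost:                # strict "<": ties keep the first node
                min_cost, best_node = cost, node
        workload[best_node] += exec_time[task]
        schedule[task] = best_node
    return schedule, workload


tasks = {"t1": 4.0, "t2": 2.0, "t3": 3.0}
prio = {"t1": 9, "t2": 3, "t3": 6}
sched, load = priority_aware_schedule(tasks, prio, ["n1", "n2"])
```

The two nested loops make the $O(n \cdot k)$ bound visible directly. Note that with identical nodes, scaling by priority does not change which node is cheapest for a given task; the factor only matters once this cost is combined with other per-node terms.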

4. Simulated Annealing Task Scheduling

The Simulated Annealing procedure (Figure 3) begins by generating a random assignment of tasks to nodes. A cost function then evaluates this schedule, considering task completion time, energy usage, execution cost, and a priority-based penalty. Next, the algorithm perturbs the schedule with small changes to produce neighbors. Occasionally it accepts a worse neighbor with a probability linked to the temperature, which helps it escape local optima and work toward a near-optimal schedule that balances the main goals. The method runs for a set number of iterations or stops when the temperature drops below a specified limit, at which point it returns the best schedule found.

Unlike GA-based schedulers [15] and RL-based methods [12,13], which primarily focus on cost and energy, our SA-based model incorporates priority-aware penalties to improve responsiveness for critical tasks. Previous models such as I-FASC [17] and EEIoMT [19] do not consider urgent task responsiveness, whereas our method integrates urgency and priority-awareness within a multi-objective formulation, positioning it as an advancement over these existing approaches.

4.1. Initialization

To begin the Simulated Annealing (SA) process for task scheduling, we first formalize a schedule $S$ as a mapping $S: T \rightarrow N$, where each task $t_i \in T$ is assigned to a fog node $n_j \in N$. In its simplest form, this initial schedule $S_0$ is generated uniformly at random:

$$S_0(t_i) \sim \mathrm{Uniform}(N), \quad \forall\, t_i \in T,$$

ensuring that every possible assignment has equal probability. This purely stochastic start introduces no bias toward any region of the solution space, promoting broader exploration in early iterations.

However, uniform random seeding can be augmented by heuristic or hybrid strategies to improve convergence speed. For example, one might pre-assign high-priority tasks to the least-loaded nodes using a simple greedy rule, or incorporate domain knowledge by placing tasks with the longest durations on more powerful nodes. Such heuristic seeding typically yields an initial schedule closer to the optimum, reducing the number of uphill moves required during the annealing process.
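The two seeding strategies described above can be sketched as follows. This is a hedged illustration: the function names `random_schedule` and `greedy_seed`, the dictionary-based schedule representation, and the tie-breaking rule are assumptions made for the example.

```python
import random
from typing import Dict, List


def random_schedule(tasks: List[str], nodes: List[str], rng: random.Random) -> Dict[str, str]:
    """Uniform random seeding: every node is equally likely for every task."""
    return {task: rng.choice(nodes) for task in tasks}


def greedy_seed(
    exec_time: Dict[str, float], priority: Dict[str, int], nodes: List[str]
) -> Dict[str, str]:
    """Heuristic seeding: place high-priority tasks first on the least-loaded node."""
    workload = {node: 0.0 for node in nodes}
    schedule: Dict[str, str] = {}
    for task in sorted(exec_time, key=lambda t: priority[t], reverse=True):
        target = min(nodes, key=lambda n: workload[n])  # ties go to the first node
        schedule[task] = target
        workload[target] += exec_time[task]
    return schedule


exec_time = {"t1": 4.0, "t2": 2.0, "t3": 3.0}
priority = {"t1": 9, "t2": 3, "t3": 6}
nodes = ["n1", "n2"]
s0_random = random_schedule(list(exec_time), nodes, random.Random(0))
s0_greedy = greedy_seed(exec_time, priority, nodes)
```

Either result can serve as the starting schedule. The greedy variant trades some early diversity for a lower starting cost.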

Once $S_0$ is fixed, we compute its initial cost via the multi-objective function:

$$C_0 = C(S_0).$$

This value populates both the current cost and best-so-far cost:

$$C_{\text{curr}} = C_{\text{best}} = C_0.$$

Maintaining these two references allows SA to track progress and preserve the most promising schedule encountered.

A critical SA hyperparameter is the initial temperature $T_0$. If chosen too low, the algorithm behaves like a greedy hill-climber and easily becomes trapped in local minima; if too high, it wastes time exploring dominated regions. A common approach is to estimate $T_0$ based on the cost landscape of random samples. Specifically, by generating $S$ random schedules, computing their pairwise cost differences $\Delta C$, and taking the sample standard deviation $\sigma_{\Delta C}$ of these differences, one sets

$$T_0 = -\frac{\sigma_{\Delta C}}{\ln p_0},$$

where $p_0$ is a target initial acceptance probability (e.g., a value close to 1). With this choice, an uphill move of size $\sigma_{\Delta C}$ is accepted with probability $p_0$ at the start, so a high fraction of uphill moves is accepted early on, facilitating wide-ranging exploration.
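A minimal sketch of this estimate is given below, assuming the rule $T_0 = -\sigma / \ln p_0$, absolute pairwise differences, and an illustrative default of `p0 = 0.8`. The function name, the `cost_fn` callback, and the makespan-only example cost are hypothetical choices for the sketch.

```python
import math
import random
import statistics
from itertools import combinations
from typing import Callable, Dict, List

Schedule = Dict[str, str]


def estimate_initial_temperature(
    tasks: List[str],
    nodes: List[str],
    cost_fn: Callable[[Schedule], float],
    num_samples: int = 30,
    p0: float = 0.8,   # ASSUMED target acceptance probability, 0 < p0 < 1
    seed: int = 0,
) -> float:
    """Return T0 = -sigma / ln(p0), sigma = std. dev. of pairwise cost differences."""
    rng = random.Random(seed)
    costs = [
        cost_fn({task: rng.choice(nodes) for task in tasks})
        for _ in range(num_samples)
    ]
    # ASSUMPTION: absolute differences over all unordered pairs of samples.
    diffs = [abs(c1 - c2) for c1, c2 in combinations(costs, 2)]
    sigma = statistics.stdev(diffs)
    return -sigma / math.log(p0)


exec_time = {"t1": 4.0, "t2": 2.0, "t3": 3.0, "t4": 1.0}


def makespan(schedule: Schedule) -> float:
    """Example cost: the largest total execution time on any node."""
    loads: Dict[str, float] = {}
    for task, node in schedule.items():
        loads[node] = loads.get(node, 0.0) + exec_time[task]
    return max(loads.values())


t0 = estimate_initial_temperature(list(exec_time), ["n1", "n2"], makespan)
```

A larger `p0` yields a higher starting temperature. If all sampled costs are identical, `sigma` is zero and the estimate degenerates, so a fallback value would be needed in that case.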

Finally, before entering the main annealing loop, practitioners often precompute cost components (e.g., node workloads, per-task energy) and initialize logging structures to record metrics such as cost, temperature, and acceptance rates. This initialization phase, which combines random or heuristic seeding, informed temperature selection, and systematic bookkeeping, lays a foundation for the subsequent SA iterations and can improve both the efficiency of the search and the quality of the final task schedule.

4.2. Neighbor Generation

In the Neighbor Generation phase of Simulated Annealing (SA) task scheduling, new candidate solutions are generated by making small, incremental changes to the current schedule. This iterative process lets the algorithm explore the solution space in a controlled manner, perturbing the current schedule to move toward optimal or near-optimal task-to-node assignments.

In each round $k$, the system picks a task $t_i$ at random from the set of tasks $T$. After choosing task $t_i$, the system gives it to a new node $b$ which is not the same as its current node $a = S(t_i)$. The new node is chosen randomly from the set of available nodes $N$ excluding the current node assignment, i.e., $b \in N \setminus \{a\}$. The new schedule, $S'$, is then formed by applying this perturbation. Formally, the new schedule is given by:

$$S'(t) = \begin{cases} b, & t = t_i, \\ S(t), & \text{otherwise.} \end{cases}$$

This simple yet effective step creates a neighboring schedule that differs from the current one in exactly one task assignment, which keeps the search controlled and focused. A key advantage of this strategy is that one change to the task-node allocation affects only two workloads: the workload of the old node that performed the task, $W_a$, and the workload of the new node, $W_b$. Because only these two workloads change, the cost of the new schedule can be updated incrementally rather than recomputed for the entire schedule, which is faster. We define the cost function as $C(S)$, where $S$ represents a particular schedule. After we reassign task $t_i$ to node $b$, we can express the change in cost $\Delta C$ like this:

$$\Delta C = C(S') - C(S),$$

which depends only on $\Delta W_a$ and $\Delta W_b$, where $\Delta W_a$ and $\Delta W_b$ represent the changes in the workloads of the affected nodes. This formulation allows for efficient cost evaluation without needing to recompute the entire schedule's cost from scratch.
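The single-task move described above might be sketched as follows. The helper names `propose_neighbor` and `apply_move` and the dictionary-based data layout are assumptions for illustration, and the sketch requires at least two nodes.

```python
import random
from typing import Dict, List, Tuple

Schedule = Dict[str, str]


def propose_neighbor(
    schedule: Schedule, nodes: List[str], rng: random.Random
) -> Tuple[str, str, str]:
    """Pick one task and a different node; return (task, old_node, new_node)."""
    task = rng.choice(list(schedule))
    old_node = schedule[task]
    new_node = rng.choice([n for n in nodes if n != old_node])
    return task, old_node, new_node


def apply_move(
    schedule: Schedule,
    workload: Dict[str, float],
    exec_time: Dict[str, float],
    move: Tuple[str, str, str],
) -> None:
    """Apply the move in place; only two workload entries change."""
    task, old_node, new_node = move
    schedule[task] = new_node
    workload[old_node] -= exec_time[task]
    workload[new_node] += exec_time[task]


rng = random.Random(1)
exec_time = {"t1": 4.0, "t2": 2.0, "t3": 3.0}
nodes = ["n1", "n2", "n3"]
schedule = {"t1": "n1", "t2": "n1", "t3": "n2"}
workload = {"n1": 6.0, "n2": 3.0, "n3": 0.0}
before = dict(schedule)
move = propose_neighbor(schedule, nodes, rng)
apply_move(schedule, workload, exec_time, move)
```

Returning the move as a tuple, rather than a copied schedule, makes it cheap to undo a rejected move by applying the reverse reassignment.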

This fine-grained search enables Simulated Annealing to navigate the solution space methodically, adjusting small elements that affect the overall schedule cost. The solution space is approached in a constrained, incremental way that avoids large, sudden shifts. Each new schedule is also linked to the one before it, creating a chain of closely related schedules as the algorithm proceeds. The method is effective because it balances exploring the solution space with exploiting the best solutions found so far. At the beginning of the search, when the temperature is still high, the algorithm is more likely to accept moves that worsen the cost in order to escape local optima. As the temperature is lowered, the algorithm focuses more on the best solutions found so far and only changes them in a more fine-grained manner. The effectiveness of the Simulated Annealing algorithm in solving task scheduling problems lies in this changing balance between exploration and exploitation.

Thus, the way in which neighbors are formed is critical to the SA task-scheduling algorithm. It offers a means to enhance the schedule without excessive computational expense. The algorithm is able to gradually and purposely improve the schedule by making small changes, computing the cost of each change and iteratively refining the schedule towards an optimal solution.

4.3. Cost Evaluation

In the Cost Evaluation phase of Simulated Annealing (SA) for task scheduling, the goal is to assess how the new schedule compares to the current one by calculating the change in cost:

$$\Delta C = C(S') - C(S).$$

However, instead of recomputing the whole cost from scratch, we exploit the fact that only one task's assignment changes. The change in cost can therefore be computed incrementally from just the components affected by the move, which makes the evaluation more efficient.

In this phase, the cost function for a specific schedule has two main parts: the makespan and the priority penalty terms. The makespan is the total time it takes to finish all tasks, which depends on how much work each node has to do. The priority penalty term deals with the order in which tasks need to be done, penalizing schedules that do not follow the right task order.

For a given schedule $S$, the workload of node $j$ is obtained by adding up the durations $d_i$ of all tasks $i$ assigned to that node:

$$W_j(S) = \sum_{i \,:\, S(t_i) = j} d_i.$$

Now, when we perturb the schedule by moving task $t_i$ from node $a$ to a new node $b$, the workloads of nodes $a$ and $b$ are updated accordingly. Specifically, the new workload for node $a$, denoted $W_a'$, is obtained by subtracting the duration of the moved task $d_i$, while the new workload for node $b$, denoted $W_b'$, is obtained by adding the duration of the moved task $d_i$:

$$W_a' = W_a - d_i, \qquad W_b' = W_b + d_i.$$

The changes to the node workloads have an impact on the schedule's makespan. We define the makespan $M$ as the highest workload among all nodes. The difference between the new and old makespan is what we call the change in makespan, $\Delta M = M' - M$. The new makespan is calculated as:

$$M' = \max\Bigl(W_a',\; W_b',\; \max_{j \notin \{a, b\}} W_j\Bigr).$$

This equation reflects the change in the overall task completion time as a result of reassigning task $t_i$ to node $b$. Because only the workloads of the two affected nodes are recalculated, this process is computationally efficient and avoids recomputing the workloads of all other nodes.

The task reassignment affects not only the makespan but also the priority-penalty term. This penalty represents the cost when tasks do not follow their priority rules. For task $t_i$, if it is assigned to node $a$, it incurs a priority penalty $P_i(a)$. When it is moved to node $b$, the priority penalty becomes $P_i(b)$. The change in the priority penalty, denoted $\Delta P$, is given by:

$$\Delta P = P_i(b) - P_i(a).$$

This term adjusts the total cost based on how the task reassignment impacts the priority order and the penalty associated with violating the priority constraints.

Finally, the total change in cost $\Delta C$ is a weighted sum of the changes in makespan and priority penalty:

$$\Delta C = w_1\, \Delta M + w_2\, \Delta P,$$

where $w_1$ and $w_2$ are user-defined constants that control the relative importance of the makespan and priority-penalty components in the overall cost function. The values of $w_1$ and $w_2$ are typically set based on the specific requirements of the scheduling problem, such as whether the makespan or the priority penalty should be weighted more heavily.
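The incremental evaluation can be sketched as below. The penalty form used here (task priority multiplied by the load already on the hosting node) is an assumption made so that the example is concrete; the function name `delta_cost` and the weights `w1` and `w2` are likewise illustrative.

```python
from typing import Dict


def delta_cost(
    workload: Dict[str, float],
    duration: float,
    priority: int,
    a: str,
    b: str,
    w1: float = 1.0,
    w2: float = 1.0,
) -> float:
    """Cost change for moving one task from node a to node b (a != b)."""
    old_makespan = max(workload.values())
    new_a = workload[a] - duration
    new_b = workload[b] + duration
    # Highest load among the untouched nodes (0.0 if there are none).
    others = max((w for n, w in workload.items() if n not in (a, b)), default=0.0)
    new_makespan = max(new_a, new_b, others)
    delta_makespan = new_makespan - old_makespan
    # ASSUMED penalty: priority times the load of the other tasks on the host node.
    delta_penalty = priority * (workload[b] - new_a)
    return w1 * delta_makespan + w2 * delta_penalty


loads = {"n1": 7.0, "n2": 2.0, "n3": 4.0}
# Move a task of duration 3 and priority 5 from the busiest node n1 to n2.
change = delta_cost(loads, duration=3.0, priority=5, a="n1", b="n2")
```

In this example the makespan falls from 7 to 5 and the assumed penalty falls by 10, giving a total change of -12, so the move would be accepted outright. The maximum over node loads still scans all nodes, but no per-task data is touched.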

Reviewing the cost changes step by step makes the Cost Evaluation process quicker. This method examines small changes that happen when tasks are moved around. It lets the system decide if the change makes things better or worse and whether to keep or reject the new plan. Fast checks are important to how Simulated Annealing works. These checks help it test many options without using too much time.

The approach works better when the energy and cost terms are kept simple. If each node's energy use and monetary cost depend on its own resource usage, the scheduler can balance energy and expenditure within the same evaluation. This keeps cost assessment straightforward and keeps the SA task scheduling method effective and simple to handle, making it a flexible choice for large scheduling problems.

4.4. Acceptance Criterion

In the Acceptance Criterion step of Simulated Annealing for task scheduling, the decision whether to accept a new neighbor schedule relies on the Metropolis rule. This rule uses probability to introduce randomness into the exploration of solutions, which helps the algorithm visit different parts of the solution space. Early in the annealing process, the algorithm prioritizes exploring new options over refining existing ones. This reduces the risk of getting stuck in local optima too early and preserves a chance of finding the best overall solution. The core idea of the Metropolis rule is to compare the difference in the cost function, written as $\Delta C$, to a factor that depends on temperature.

The basic form of the Metropolis rule involves comparing the change in the cost function, $\Delta C$, with a temperature-dependent factor. If the change in cost $\Delta C$ is less than or equal to zero, the proposed solution $S'$ is accepted unconditionally, as it improves the overall cost or does not worsen it. In mathematical terms, this is expressed as:

$$P_{\text{accept}}(S') = \begin{cases} 1, & \Delta C \le 0, \\ \exp\left(-\dfrac{\Delta C}{T_k}\right), & \Delta C > 0. \end{cases}$$

Here, $P_{\text{accept}}(S')$ represents the probability of accepting the new schedule $S'$, and $T_k$ is the current temperature at iteration $k$. The temperature plays a crucial role in determining the likelihood of accepting a worsening solution (i.e., a solution with $\Delta C > 0$). As the temperature decreases over time (due to the cooling schedule), the acceptance probability for worse solutions becomes lower, which ensures that the algorithm gradually shifts from exploration to exploitation.

When $\Delta C > 0$, meaning the new schedule results in a worse solution, the decision to accept it is made probabilistically. The probability of accepting a worse solution decreases as the temperature lowers, with probability given by the exponential function $\exp(-\Delta C / T_k)$. This function means that when the temperature is high, even large increases in cost may be accepted, allowing the algorithm to escape from local minima and explore broader regions of the solution space. On the other hand, as the temperature cools, the acceptance of worse solutions becomes increasingly rare, and the algorithm focuses more on refining the current best solution.

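The acceptance decision is made by drawing a uniform random number $u$ from the interval $[0, 1]$, i.e., $u \sim \mathcal{U}(0, 1)$. If this random value is less than or equal to the acceptance probability $\exp(-\Delta C / T_k)$, the new schedule $S'$ is accepted, and the current schedule $S$ is updated to $S'$. In other words, the acceptance condition is:

$$u \le \exp\left(-\frac{\Delta C}{T_k}\right).$$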

This random acceptance mechanism ensures that the algorithm does not get stuck in local optima too early in the process. By allowing uphill moves (i.e., accepting worse solutions) with a certain probability, the algorithm maintains the capacity to explore the solution space more widely in the early stages of the process.

The temperature starts high and gradually decreases according to the cooling schedule. Early in the annealing process, the high temperature means the algorithm frequently accepts weaker solutions, which lets it explore more options. By doing so, it avoids getting stuck in poor local optima and improves its chances of reaching a globally good result. As the temperature lowers, the algorithm leans more toward the stronger solutions it has already uncovered and fine-tunes them to move closer to the best possible outcome.

The acceptance criterion plays a key role in balancing exploration and exploitation. The temperature schedule serves as the main factor in this process. It decides how the algorithm shifts from accepting a wider range of exploratory solutions to favoring fewer, more-refined exploitative solutions. Striking this balance is crucial to allowing the Simulated Annealing algorithm to find effective solutions to tough optimization tasks like task scheduling.
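The Metropolis acceptance test discussed in this subsection can be sketched in a few lines. The function name `accept` and the example values are illustrative assumptions, and the temperature is assumed to be strictly positive.

```python
import math
import random


def accept(delta_c: float, temperature: float, rng: random.Random) -> bool:
    """Metropolis rule: always accept improvements, sometimes accept worse moves."""
    if delta_c <= 0:
        return True
    # Draw u from [0, 1) and compare with exp(-delta_c / T).
    return rng.random() <= math.exp(-delta_c / temperature)


rng = random.Random(42)
trials = 10_000
# Empirical acceptance rate of the same uphill move at a high and a low temperature.
hot = sum(accept(5.0, 100.0, rng) for _ in range(trials)) / trials
cold = sum(accept(5.0, 1.0, rng) for _ in range(trials)) / trials
```

For an uphill move of size 5, the theoretical acceptance probability is roughly 0.95 at temperature 100 and below 0.01 at temperature 1, which illustrates the shift from exploration to exploitation as the system cools.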

4.5. Cooling

The Cooling and Termination phase in Simulated Annealing (SA) moves the algorithm from exploring possible solutions to refining a single solution, balancing a broad search of the solution space against settling on a high-quality result. A geometric cooling schedule controls this phase by lowering the temperature $T_k$ with each step. The temperature update rule is given by:

$$T_{k+1} = \alpha\, T_k,$$

where $T_k$ is the temperature at iteration $k$, $T_{k+1}$ is the temperature at the next iteration, and $\alpha$ is a cooling rate parameter, chosen to lie within the range $0 < \alpha < 1$. This cooling rate parameter $\alpha$ controls the speed at which the temperature decreases. When $\alpha$ is close to 1, the temperature decreases slowly, allowing the algorithm to continue exploring for a longer period of time. Conversely, smaller values of $\alpha$ result in faster cooling, causing the algorithm to shift more quickly toward exploitation of the best solutions found.

The cooling schedule balances exploration and exploitation. While the temperature remains high, the acceptance rule accepts worse solutions more often, which helps the algorithm explore the solution space and move past local minima. As the temperature lowers, the chance of accepting weaker solutions decreases, and the algorithm focuses more on the best solutions it has found up to that point. This gradual cooling helps the algorithm settle into an optimal or near-optimal solution while keeping the search open enough to avoid cutting off possibilities too soon.

The algorithm alternates between evaluating candidate solutions and lowering the temperature, and it stops when one of two conditions is met. The first is that the temperature falls below a set minimum $T_{\min}$. The second is that it completes the maximum allowed number of iterations, $K$. The level $T_{\min}$ is typically a very low value close to zero, which ensures the process ends when there is little chance of finding further improvements. The iteration limit $K$ prevents the algorithm from running indefinitely: it sets an upper bound on the iterations and guarantees termination after a bounded amount of computational effort.
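The interaction of geometric cooling with the two stopping conditions can be sketched as follows. The function names and the example parameters (a starting temperature of 100, a cooling rate of 0.9, and a minimum temperature of 1) are assumptions chosen only for illustration.

```python
import math
from typing import Iterator


def geometric_schedule(
    t0: float, alpha: float, t_min: float, max_iters: int
) -> Iterator[float]:
    """Yield T_k = t0 * alpha**k until T_k < t_min or max_iters is reached."""
    temperature = t0
    for _ in range(max_iters):
        if temperature < t_min:
            break
        yield temperature
        temperature *= alpha


def steps_until_t_min(t0: float, alpha: float, t_min: float) -> int:
    """Smallest k with t0 * alpha**k < t_min (closed form, 0 < alpha < 1)."""
    return max(0, math.floor(math.log(t_min / t0) / math.log(alpha)) + 1)


temps = list(geometric_schedule(t0=100.0, alpha=0.9, t_min=1.0, max_iters=10_000))
capped = list(geometric_schedule(t0=100.0, alpha=0.9, t_min=1.0, max_iters=10))
```

With these values the temperature criterion ends the run after 44 temperature levels, whereas an iteration cap of 10 ends it first. Whichever condition triggers earlier determines the actual run length.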

When termination is reached, the algorithm outputs the best schedule discovered across all iterations. This schedule assigns tasks to nodes in a way that lowers the cost function or meets other optimization goals specific to the problem being solved. It is the outcome of balancing exploration and exploitation during the annealing steps.

The way cooling and stopping are managed has a large effect on the performance of the Simulated Annealing algorithm. By reducing the temperature geometrically, the algorithm explores broadly in the early stages and converges more tightly toward a high-quality solution in the later stages. The termination conditions bound the running time and the computational resources spent, which keeps the solution process efficient. The final schedule returned results from the interplay between randomness and control, balancing the need for global exploration with the goal of converging to a high-quality schedule.
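Putting the phases of this section together, a compact end-to-end sketch might look as follows. It is an illustration under simplifying assumptions, not the authors' implementation: the cost uses only two terms (makespan plus an assumed priority-weighted load penalty), the cost is fully recomputed at each step for brevity, and all names and default parameters are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Schedule = Dict[str, str]


def cost(schedule: Schedule, exec_time: Dict[str, float], priority: Dict[str, int],
         nodes: List[str], w1: float = 1.0, w2: float = 0.1) -> float:
    """ASSUMED two-term cost: makespan plus a priority-weighted load penalty."""
    load = {n: 0.0 for n in nodes}
    for task, node in schedule.items():
        load[node] += exec_time[task]
    penalty = sum(priority[t] * (load[n] - exec_time[t]) for t, n in schedule.items())
    return w1 * max(load.values()) + w2 * penalty


def simulated_annealing(exec_time: Dict[str, float], priority: Dict[str, int],
                        nodes: List[str], t0: float = 10.0, alpha: float = 0.95,
                        t_min: float = 1e-3, max_iters: int = 5000,
                        seed: int = 0) -> Tuple[Schedule, float]:
    rng = random.Random(seed)
    current = {t: rng.choice(nodes) for t in exec_time}      # random initial schedule
    current_cost = cost(current, exec_time, priority, nodes)
    best, best_cost = dict(current), current_cost
    temperature = t0
    for _ in range(max_iters):                               # iteration limit K
        if temperature < t_min:                              # temperature limit
            break
        task = rng.choice(list(current))                     # neighbor: move one task
        old = current[task]
        current[task] = rng.choice([n for n in nodes if n != old])
        new_cost = cost(current, exec_time, priority, nodes)
        delta = new_cost - current_cost
        if delta <= 0 or rng.random() <= math.exp(-delta / temperature):
            current_cost = new_cost                          # accept the move
            if current_cost < best_cost:
                best, best_cost = dict(current), current_cost
        else:
            current[task] = old                              # reject: undo the move
        temperature *= alpha                                 # geometric cooling
    return best, best_cost


exec_time = {"t1": 4.0, "t2": 2.0, "t3": 3.0, "t4": 1.0, "t5": 5.0}
priority = {"t1": 9, "t2": 3, "t3": 6, "t4": 1, "t5": 8}
nodes = ["n1", "n2", "n3"]
best, best_cost = simulated_annealing(exec_time, priority, nodes)
```

Tracking `best` separately from `current` is what allows the procedure to return the best schedule seen, even if the final accepted schedule is slightly worse.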

The proposed SA-based scheduling framework directly addresses the gaps identified in existing literature. While traditional metaheuristics such as ACO and PSO [20] mainly optimize makespan or energy, they do not effectively handle task priority. Similarly, reinforcement learning-based methods [12,13] improve adaptability but introduce high computational overheads. Other works, such as I-FASC [17] and EEIoMT [19], consider load balancing or energy but neglect reliability and urgency. In contrast, our model integrates four critical objectives (makespan, energy, cost, and reliability) along with a priority-aware penalty function. This explicit inclusion of task priority ensures responsiveness for urgent IoT applications, thereby positioning our method as a novel, multi-objective, and priority-aware solution for fog-enabled IoT environments.