1. Introduction

In recent decades, remote sensing technology has witnessed remarkable advancements. Notably, imaging technology has undergone rapid development, paving the way for more in-depth analysis in the field of intelligent Earth observation. With enhanced data acquisition capabilities (including high-resolution imagery, multispectral and hyperspectral sensing, and synthetic aperture radar), remote sensing data are now widely applied in critical domains such as land use planning, mineral resource exploration, agricultural quality assessment, precision agriculture, ecological monitoring, and national defense [1,2,3,4]. Among these applications, pixel-based remote sensing image classification serves as a core research task and has become a key driver in advancing remote sensing technology toward greater quantification and intelligence through the accurate identification of ground objects and detailed information extraction.

In the early stages, research on remote sensing image classification primarily relied on single data sources, such as multispectral or hyperspectral imagery [5]. Hyperspectral data have since emerged as a prominent resource in remote sensing, attracting significant attention because their hundreds or even thousands of spectral channels capture rich spectral information. They demonstrate notable advantages in identifying surface objects and have yielded favorable application outcomes [2,4]. However, the use of hyperspectral image (HSI) data alone for land classification is constrained by two key phenomena: “same object with different spectra” (where the spectral characteristics of the same ground object vary under different environmental conditions) and “different objects with the same spectra” (where distinct ground objects exhibit similar spectral signatures). These phenomena limit classification accuracy [6]. For instance, in urban environments, sidewalks and building rooftops may be constructed from similar materials, resulting in highly similar spectral curves. Additionally, environmental factors such as illumination conditions and atmospheric scattering can alter the spectral properties of surface objects, further exacerbating the “same object with different spectra” issue [1]. Consequently, relying solely on spectral data makes it challenging to accurately distinguish between certain land use and surface cover types.

Light Detection and Ranging (LiDAR) data can generate precise three-dimensional profiles by measuring the distance between the sensor and the Earth’s surface, thereby providing high-precision topographic and structural information [3]. Given the challenges in HSI classification described above, the elevation information provided by LiDAR offers critical vertical-dimension data that can help mitigate these classification ambiguities. Therefore, the fusion of hyperspectral and LiDAR data exploits the complementary strengths of both data modalities, thereby effectively enhancing classification accuracy [7].

The integration of HSI and LiDAR data has garnered considerable attention within the domain of multimodal remote sensing. However, effectively combining the rich spectral information from HSI with the elevation features provided by LiDAR remains a critical challenge. Traditional classification approaches predominantly emphasize data-level fusion strategies [8]. For instance, Ghamisi et al. [9] employed the Attribute Profiles model to capture spatial characteristics of both data types and further extracted multimodal spatial features using the Extinction Profiles technique. Although conventional machine learning techniques, such as Support Vector Machine (SVM) [10], Extreme Learning Machine (ELM) [11], and Random Forest (RF) [12], have demonstrated moderate success in the classification of multimodal remote sensing data, these shallow models are limited in their ability to uncover deep and complex data relationships. Particularly when confronted with the nonlinear characteristics of HSI data, such methods often compromise the original spatial and spectral structure, leading to the loss of valuable implicit information [7]. For example, SVM and RF rely heavily on manually engineered features and linear assumptions, which hinder their capacity to accurately model the intricate nonlinear relationships inherent in high-dimensional hyperspectral data [13]. Furthermore, these models frequently encounter the “curse of dimensionality”: even with sufficient training samples, their classification performance falls short of that of deep learning approaches [14].

Compared with traditional approaches, deep learning methods demonstrate superior feature representation capabilities, enabling the automatic extraction of multi-level and highly abstract features from raw data [15,16]. Among these, Convolutional Neural Networks (CNNs) are widely employed as fundamental models owing to their effective local receptive fields and parameter sharing mechanisms [17]. Zhao et al. [18] proposed a dual-interactive hierarchical adaptive fusion network built upon a dual-branch CNN architecture. This network is capable of extracting discriminative, high-level semantic features from both HSI and LiDAR data, thereby achieving improved classification performance. Huang et al. [19] proposed a method based on convolutional neural networks. By incorporating a cross-attention mechanism, significant spatial weights are assigned to LiDAR data with respect to HSI, thereby enhancing the interaction between the two modalities and fully exploiting the complementary information from data fusion. Ge et al. [20] proposed a multi-scale CNN framework that integrates parameter sharing with a local-global cross-attention mechanism. This approach enables the joint deep semantic representation and data fusion of HSI and LiDAR data. Liu et al. [21] developed a multi-scale spatial feature module and achieved feature fusion through concatenation operations, thereby proposing a multi-scale and multi-directional feature extraction network. Indeed, the design of multi-scale modules and the incorporation of attention mechanisms into CNNs can mitigate the limitation of fixed receptive fields and enhance the capability of extracting remote sensing features. However, stacking such modules significantly increases the number of parameters and computational complexity [22,23]. In addition, due to the inherent local receptive field of convolutional operations, these models still face challenges in effectively capturing long-range dependencies across different scenarios and in handling the long-sequence characteristics of spectral features.

Given the exceptional capability of Vision Transformers (ViT) in modeling long-distance dependencies in visual tasks, researchers have incorporated the Transformer architecture into remote sensing image classification tasks [24,25]. Yang et al. [26] developed a stackable modal fusion block as the central component of the model and introduced a multi-modal data fusion framework tailored for the integration and classification of HSI and LiDAR data, achieving an overall classification accuracy of 99.91%. Huang et al. [27] integrated a CNN with the latest Transformer architecture and proposed a novel multi-modal cross-layer fusion Transformer network, aiming to enhance both the stability and performance of the model. Sun et al. [28] proposed a multi-scale 3D-2D hybrid CNN feature extraction framework combined with a lightweight attention-free fusion network for multi-source data, based on the integration of a convolutional neural network and Transformer architectures, thereby substantially enhancing the performance of joint classification. Ni et al. [29] focused on the selective convolutional kernel mechanism and the spectral-spatial interaction transformer for feature learning, and subsequently proposed a selective spectral-spatial aggregation transformer network. Roy et al. [30] introduced cross-attention, extended the traditional self-attention mechanism, and proposed a novel multi-modal deep learning framework that effectively integrates remote sensing data.

Although current methodologies have demonstrated substantial improvements in classification accuracy, the integration of remote sensing data for fusion-based classification continues to present two main challenges.

First, feature extraction remains suboptimal. Relying solely on a simplistic network architecture to extract basic information from HSI and LiDAR data limits the in-depth exploration of the complementary characteristics of multimodal data. For instance, the integrated representation and cross-scale interaction among spectral, spatial, and elevation features are not effectively achieved, leading to underutilization of feature diversity.

Second, feature fusion is deficient. Relying solely on simple feature stacking and fusion overlooks the correlations between heterogeneous features, which in turn limits the model’s final decision-making capability.

To address the aforementioned challenges, this paper proposes a cross-modal cross-attention Transformer (CCFormer) network that enables efficient fusion and classification through multi-scale feature interaction and semantic guidance. The network features a three-level architectural design. First, shallow feature extraction is performed using a dual-stream pyramid module. In the spectral branch, multi-scale convolutional kernels are employed to capture fine-grained spectral features from hyperspectral data, while the LiDAR branch utilizes multi-scale convolutional kernels to extract elevation structure features. Second, a cross-attention Transformer encoder is introduced to achieve cross-modal feature alignment and bidirectional semantic guidance through a cross self-attention mechanism. Finally, class probabilities are generated via a lightweight classification head. The proposed framework effectively mitigates the redundancy caused by simple feature concatenation and enhances the model’s decision-making capability through pyramid-based multi-scale feature refinement and cross-modal attention interaction.

The main contributions of this work are summarized as follows.

In the shallow feature extraction stage, considering the rich spectral characteristics of HSI and the elevation information provided by LiDAR, a pyramid spectral multi-scale module and a spatial multi-scale module are, respectively, designed. Through a two-level multi-scale feature extraction process, the features are progressively refined, allowing for a comprehensive exploration of the intrinsic representations of each modality. This lays a solid foundation for subsequent cross-attention fusion by providing complementary features.

A CCFormer is proposed to enable bidirectional interaction between HSI and LiDAR features by reformulating the attention calculation paradigm. The spectral features of HSI serve as the query, and the elevation-structure features of LiDAR serve as the key and value, so that weights are assigned dynamically to enhance the correlation between heterogeneous features. Conversely, the LiDAR features serve as the query and the HSI features as the key and value, which yields a bidirectional attention mechanism.

Performance evaluation was conducted on three representative remote sensing datasets. The experimental results demonstrate that the proposed algorithm surpasses the current state-of-the-art methods.

3. Methodology

In this section, the overall architecture of the proposed method is introduced, followed by a detailed analysis of its key internal modules, which include a shallow feature extraction module and a cross-attention Transformer.

3.1. Overall Architecture

Considering the complementary characteristics of HSIs—which offer high spectral resolution but limited spatial detail—and LiDAR data—which provide rich three-dimensional structural information but lack spectral features—this study addresses the challenge of enhancing the robustness of ground object classification in scenarios with limited training samples. To this end, a multi-scale cross-guided CCFormer framework is proposed. By leveraging a cross-modal feature mutual guidance and fusion mechanism, the framework effectively integrates the spectral-spatial information from hyperspectral images with the elevation-structural information from LiDAR data, thereby mitigating classification ambiguities caused by the limitations of single-source data.

Figure 2 illustrates the multi-modal cross-guidance and fusion strategy employed in the proposed framework. This framework achieves collaborative enhancement of spectral details and geometric features through a dual-guided cross-attention mechanism, offering a novel solution for small-sample classification in complex scenarios. The method follows a hierarchical architecture, comprising a shallow multi-scale feature extraction module, a CCFormer encoder, and a classification decision layer, thereby establishing an end-to-end cross-modal fusion classification framework.

The inputs to the model consist of the raw HSI data and LiDAR data, denoted as $X_H \in \mathbb{R}^{W \times H \times C}$ and $X_L \in \mathbb{R}^{W \times H}$, respectively, where $W$ and $H$ correspond to the spatial width and height dimensions of the data, while $C$ denotes the spectral dimension of the HSI. During the data preprocessing phase, a square window of size $s \times s$ is applied in a sliding manner pixel by pixel, and each resulting block is treated as an individual sample. The samples derived from the HSI data and LiDAR data are denoted as $x_H \in \mathbb{R}^{s \times s \times C}$ and $x_L \in \mathbb{R}^{s \times s}$, respectively. Subsequently, a shallow multiscale feature extraction process is applied to each sample, involving a two-level multiscale feature mining strategy. With respect to the spectral dimension of HSI, multiscale band grouping is performed along with local spectral correlation modeling. Regarding the spatial dimension of LiDAR data, multiscale spatial filtering and local geometric structure analysis are implemented. In the cross-modal feature fusion stage, the HSI features and LiDAR features are fed into the cross-attention Transformer encoder unit. Bidirectional information exchange is facilitated through cross-modal feature interaction guidance, enabling comprehensive feature fusion at multiple levels via a multi-level attention mechanism. Finally, the classification head performs the final classification by mapping the extracted high-dimensional features into the corresponding category label space.
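The pixel-by-pixel sliding window described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' preprocessing code: the function names `extract_patch` and `sliding_window_samples` are hypothetical, and border pixels are handled by edge replication, which is an assumption because the padding scheme is not stated.

```python
def extract_patch(image, row, col, size):
    """Return the size x size window centred on (row, col).

    Border handling is an assumption: indices are clamped to the image,
    which replicates edge pixels.
    """
    if size % 2 == 0:
        raise ValueError("window size must be odd so the pixel sits at the centre")
    half = size // 2
    height, width = len(image), len(image[0])
    patch = []
    for r in range(row - half, row + half + 1):
        rr = min(max(r, 0), height - 1)
        patch.append([image[rr][min(max(c, 0), width - 1)]
                      for c in range(col - half, col + half + 1)])
    return patch


def sliding_window_samples(image, size):
    """Yield ((row, col), patch) for every pixel, scanning row by row."""
    for row in range(len(image)):
        for col in range(len(image[0])):
            yield (row, col), extract_patch(image, row, col, size)


# Toy 3 x 4 single-band raster standing in for a LiDAR-derived DSM.
dsm = [[0, 1, 2, 3],
       [4, 5, 6, 7],
       [8, 9, 10, 11]]
samples = dict(sliding_window_samples(dsm, size=3))
print(len(samples))       # one sample per pixel
print(samples[(1, 1)])    # window around an interior pixel
```

The same functions apply to an HSI cube stored as nested lists, because each pixel entry (a scalar or a list of band values) is copied as-is.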

It is worth noting that traditional HSI classification (HSIC) methods typically flatten either a single-pixel spectral vector or the spectral information of a local region into a one-dimensional vector to serve as input tokens for the Transformer. In contrast, this study employs a per-band feature serialization strategy. Specifically, given an input feature $F \in \mathbb{R}^{s \times s \times b}$, a linear projection is applied to generate $b$ band-level tokens $T = [t_1, t_2, \ldots, t_b]$, where $s \times s$ represents the spatial dimension, $b$ represents the spectral dimension, $T$ is a sequence of tokens (a total of $b$ tokens), and $t_i$ is a single token. This approach aims to provide a structured input format that facilitates the evaluation of the importance of each spectral band, particularly when integrated with LiDAR data in subsequent analyses.
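Per-band serialization can be illustrated with a small pure-Python sketch. It assumes that each band's spatial window is flattened and passed through one linear projection shared by all bands; the function name `band_tokens`, the shared projection, and the toy weights are illustrative assumptions, not details given in the text.

```python
def band_tokens(patch, weights, bias):
    """Turn a patch into one token per spectral band.

    patch[r][c][k] is the value of band k at window position (r, c).
    weights is a d x (s*s) matrix and bias a length-d vector; sharing them
    across bands is an assumption made for this sketch.
    """
    n_bands = len(patch[0][0])
    tokens = []
    for k in range(n_bands):
        # Flatten the s x s spatial window of band k into a vector.
        flat = [pixel[k] for row in patch for pixel in row]
        # Linear projection of the flattened band: W @ flat + bias.
        token = [sum(w * x for w, x in zip(w_row, flat)) + b_j
                 for w_row, b_j in zip(weights, bias)]
        tokens.append(token)
    return tokens


# Toy 2 x 2 window with 3 bands, projected to 2-dimensional tokens.
patch = [[[1, 10, 100], [2, 20, 200]],
         [[3, 30, 300], [4, 40, 400]]]
weights = [[1, 1, 1, 1],   # first token component: sum over the window
           [1, 0, 0, 0]]   # second token component: top-left pixel
bias = [0, 0]
tokens = band_tokens(patch, weights, bias)
print(tokens)  # one token per band
```

Because the token index coincides with the band index, attention weights over these tokens can be read directly as band-level importance.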

3.2. Shallow Multiscale Feature Extraction

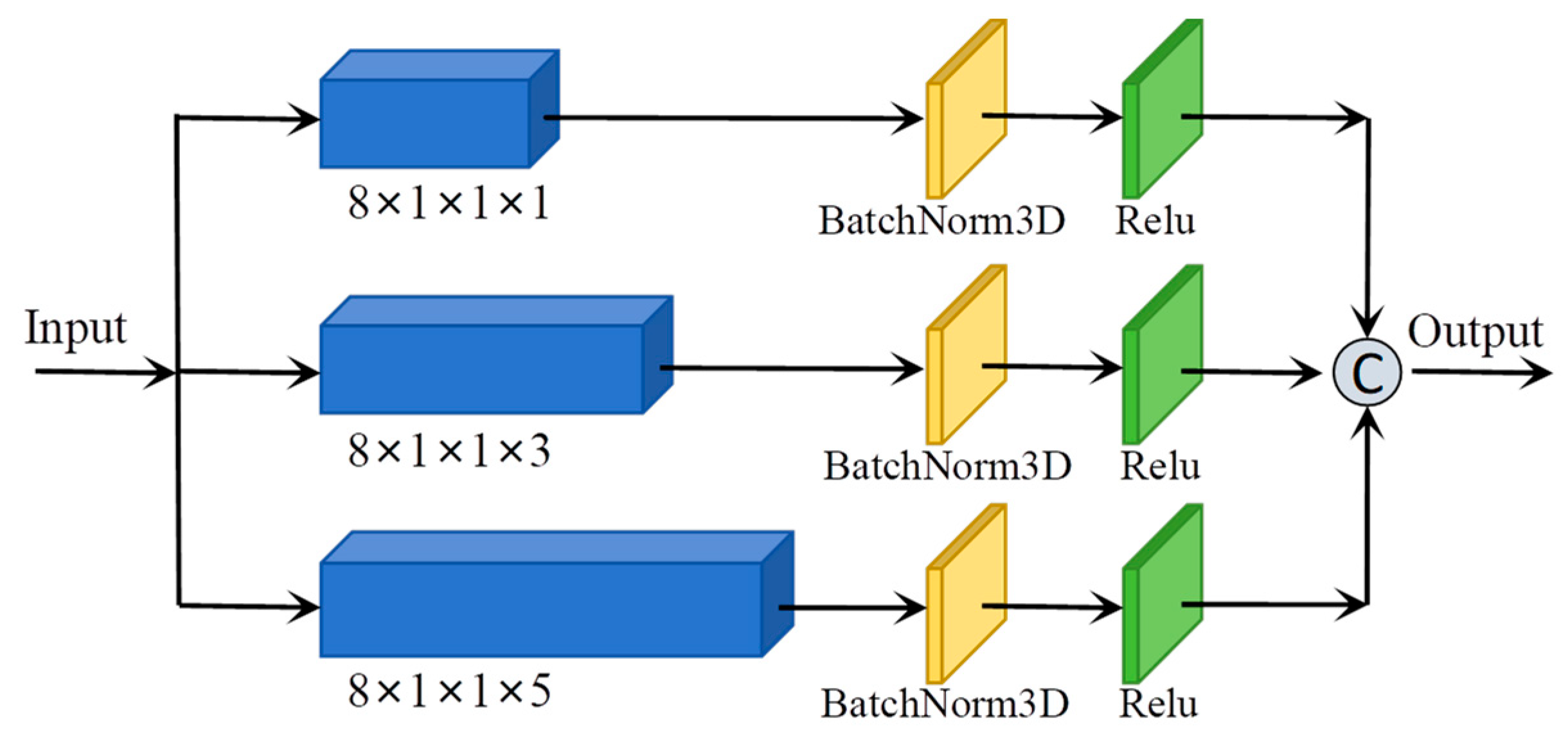

In the shallow feature extraction stage, considering the distinct characteristics of HSI and LiDAR data, a spectral pyramid-based multiscale feature extraction module and a spatial pyramid-based multiscale feature extraction module are designed, respectively. The former achieves multi-granularity decoupling of spectral features through multi-scale band grouping and local spectral correlation modeling. The latter enables multi-level decomposition of spatial features by employing multi-scale spatial filtering and local geometric structure analysis.

As illustrated in Figure 3, the Spectral Pyramid-Based Multiscale Feature Extraction Module comprises a three-layer pyramid structure along the spectral dimension. The pyramid performs feature extraction at multiple scales by using 3D convolutional kernels of three different sizes along the spectral dimension. Following convolution in each layer, batch normalization is applied to standardize the data distribution across batches, thereby maintaining a relatively stable input distribution for subsequent layers. Subsequently, the ReLU activation function is employed to introduce nonlinearity, which facilitates faster network convergence and helps mitigate the risk of overfitting. In this structure, the input is a sample of the HSI; the output of the i-th pyramid layer is produced by 3D convolution, 3D batch normalization, and ReLU applied in turn; and the overall output features of the pyramid are obtained with a concatenation function.

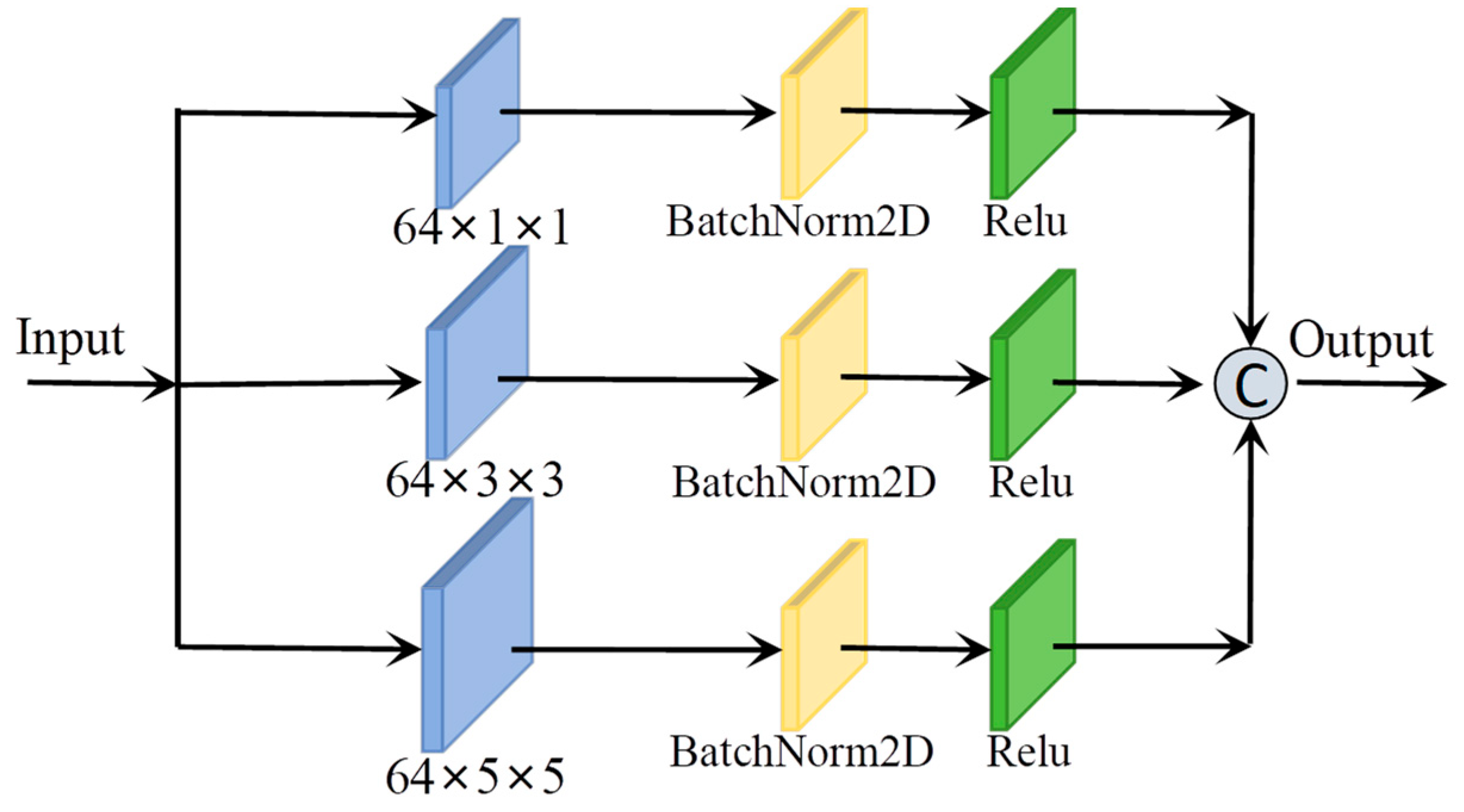

As illustrated in Figure 4, the Spatial Pyramid-Based Multiscale Feature Extraction Module comprises a spatial-dimension pyramid formed by three layers of convolutional kernels with three different kernel sizes. Its structure mirrors that of the Spectral Pyramid-Based Multiscale Feature Extraction Module, except that it is built on 2D rather than 3D convolution. In this module, the input is a sample of the LiDAR data; the output of the i-th pyramid layer is produced by 2D convolution; and the overall output features of the pyramid are again obtained by concatenation.

The two feature extraction modules have similar structures. The primary distinction lies in their operational spaces: one performs multi-scale feature extraction in the planar domain, with a focus on spatial feature extraction; the other operates in the three-dimensional space, emphasizing spectral feature extraction.
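One formulation consistent with the descriptions of the two pyramids is sketched below. All notation is assumed for illustration: $x_H$ and $x_L$ are HSI and LiDAR samples, $k_i$ and $m_i$ are the kernel sizes of the i-th spectral and spatial layers, and $F^{H}$ and $F^{L}$ are the pyramid outputs. The sketch further assumes that the three layers act as parallel branches on the same input; the text would also admit a sequential arrangement.

```latex
\begin{aligned}
F^{H}_{i} &= \mathrm{ReLU}\!\left(\mathrm{BN}_{3D}\!\left(\mathrm{Conv3D}_{k_i}(x_H)\right)\right), \quad i = 1, 2, 3, \\
F^{H} &= \mathrm{Concat}\!\left(F^{H}_{1}, F^{H}_{2}, F^{H}_{3}\right), \\
F^{L}_{i} &= \mathrm{ReLU}\!\left(\mathrm{BN}_{2D}\!\left(\mathrm{Conv2D}_{m_i}(x_L)\right)\right), \quad i = 1, 2, 3, \\
F^{L} &= \mathrm{Concat}\!\left(F^{L}_{1}, F^{L}_{2}, F^{L}_{3}\right).
\end{aligned}
```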

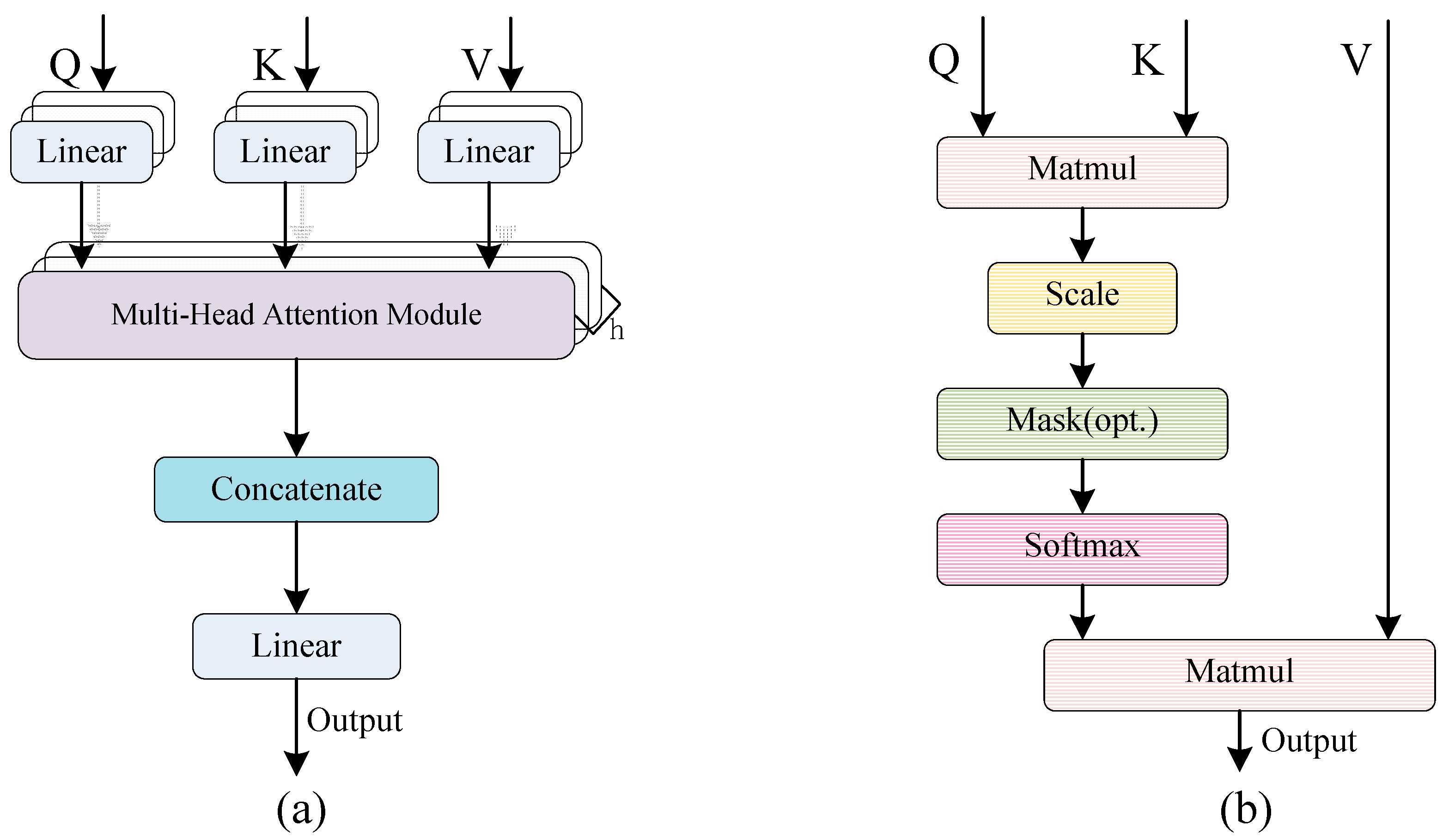

3.3. Cross-Attention Transformer

As the core component of the Transformer architecture, the multi-head self-attention mechanism plays a crucial role in capturing long-range dependencies among features. Inspired by the cross-modal complementarity between elevation features and spectral-spatial features in remote sensing scenarios, this study enhances the multi-head self-attention mechanism by incorporating a feature interaction strategy, thereby constructing a cross-attention Transformer module. The architectural design of CCFormer is elaborated in the left portion of Figure 2.

The query vector matrix $Q$, as a central element of the self-attention mechanism, functions as a dynamic interface that facilitates cross-modal feature interaction. To effectively integrate multi-source remote sensing data, this mechanism computes similarity scores between the query vector matrix $Q$ and the key vector matrix $K$ across different modalities, followed by a weighted aggregation of the value vector matrix $V$, thereby enhancing the spatial-spectral correlation within key regions. Specifically, the query vector matrix from the LiDAR branch offers attention-based guidance for the spectral features of the HSI, whereas the query vector matrix from the HSI branch assesses the discriminative significance of the LiDAR elevation data, establishing a bidirectional cross-modal attention framework. This interactive guidance approach enables the adaptive fusion of elevation and spectral-spatial information through dynamic feature weighting, laying a theoretical foundation for the collaborative representation of multi-modal remote sensing data.
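The bidirectional idea can be made concrete with a generic scaled dot-product cross-attention in plain Python. This is a simplified sketch, not the CCFormer implementation: it uses a single head with identity projections and a softmax over scaled dot products, and it omits rotary position embedding, dropout, and the way the two directions are later combined. The function names and toy token values are illustrative.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention with queries from one modality
    and keys/values from the other."""
    scale = math.sqrt(len(keys[0]))
    output = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted aggregation of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, values))
                       for j in range(len(values[0]))])
    return output


hsi_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 toy HSI tokens
lidar_tokens = [[0.5, 0.5], [2.0, 0.0]]            # 2 toy LiDAR tokens

# Identity projections: Q = K = V = tokens for each modality.
# LiDAR queries attend over HSI keys/values (LiDAR guides the spectral features).
hsi_guided_by_lidar = cross_attention(lidar_tokens, hsi_tokens, hsi_tokens)
# HSI queries attend over LiDAR keys/values (HSI weighs the elevation features).
lidar_weighted_by_hsi = cross_attention(hsi_tokens, lidar_tokens, lidar_tokens)

print(len(hsi_guided_by_lidar), len(lidar_weighted_by_hsi))
```

Each direction returns one output vector per query token, so the two outputs have different sequence lengths whenever the modalities have different token counts.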

Specifically, the token sequences of HSI and LiDAR are first normalized using LayerNorm. Subsequently, each modality undergoes feature projection through a linear transformation and a chunking operation to generate the corresponding query matrices ($Q_H$ and $Q_L$), key matrices ($K_H$ and $K_L$), and value matrices ($V_H$ and $V_L$). In the cross-modal interaction phase, the affinity score is computed by constructing the similarity matrix between the query vector from one modality and the key vector from the other. To preserve relative spatial position information, rotary position embedding is integrated into this computation. Finally, regularization is applied through dropout. The computation yields two sets of similarity scores: one between the LiDAR elevation-feature query vector and the HSI key vector, and one between the HSI query vector and the LiDAR key vector. These query and key vectors are derived from the matrices $Q_L$, $K_H$, $Q_H$, and $K_L$, respectively.
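A possible form of the two score computations is given below. The notation is assumed for illustration: $q_L$, $k_H$, $q_H$, and $k_L$ are the per-head query and key vectors of the LiDAR and HSI branches, $d$ is the head dimension, and $A_{L \to H}$ and $A_{H \to L}$ are the resulting score matrices. The scaling by $\sqrt{d}$ and the softmax normalization follow standard attention and are assumptions here, since the description mentions only the similarity computation, rotary position embedding, and dropout.

```latex
\begin{aligned}
A_{L \to H} &= \mathrm{Dropout}\!\left(\mathrm{softmax}\!\left(\frac{\mathrm{RoPE}(q_L)\,\mathrm{RoPE}(k_H)^{\top}}{\sqrt{d}}\right)\right), \\
A_{H \to L} &= \mathrm{Dropout}\!\left(\mathrm{softmax}\!\left(\frac{\mathrm{RoPE}(q_H)\,\mathrm{RoPE}(k_L)^{\top}}{\sqrt{d}}\right)\right).
\end{aligned}
```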

After that, each of the two sets of cross-modal attention scores is used to compute a weighted sum over a set of value vectors. The resulting output is then multiplied by another set of value vectors to derive the final single-head attention output. The value vectors are derived from the matrices $V_H$ and $V_L$, respectively. The formulation also involves a linear function of the form $\mathrm{Linear}(x) = Wx + b$, where $W$ denotes the weight matrix and $b$ the bias term; both parameters are learnable. Subsequently, the output of multi-head attention is obtained through a weighted concatenation of the outputs from the individual attention heads. The process is given in Equation (4).

Then, the output of the multi-head attention is combined with the original input through a residual connection, which helps alleviate the vanishing-gradient problem. Following this, a feed-forward neural network is applied to extract high-order features, ultimately yielding the output tensor. The procedure therefore involves three operations on the input of the multi-head attention: the multi-head attention itself, layer normalization, and the feed-forward neural network. The feed-forward network has two layers, each with its own weight matrix and bias.
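The encoder step can be summarized in a standard pre-norm form, sketched below under stated assumptions. The symbols are assumed notation: $X$ is the input of the multi-head attention, $X'$ the intermediate result, $Y$ the output tensor, $W_1, W_2$ and $b_1, b_2$ the weights and biases of the two feed-forward layers, and $\sigma$ an unspecified activation function. The placement of layer normalization before each sub-layer and the second residual connection are assumptions borrowed from common Transformer practice.

```latex
\begin{aligned}
X' &= X + \mathrm{MHA}\!\left(\mathrm{LN}(X)\right), \\
Y &= X' + \mathrm{FFN}\!\left(\mathrm{LN}(X')\right), \\
\mathrm{FFN}(z) &= W_2\,\sigma\!\left(W_1 z + b_1\right) + b_2.
\end{aligned}
```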

4. Experiments and Analysis

In this section, we first present the experimental data and subsequently provide a detailed description of the experimental settings. To evaluate the performance of the proposed network, we conducted comparative experiments with several widely adopted models. Furthermore, ablation studies were carried out to validate the contribution of each individual component within the model.

4.1. Datasets Description

To comprehensively evaluate the applicability and effectiveness of the proposed method across diverse scenarios, we selected three widely recognized remote sensing datasets: MUUFL, Trento, and Houston2013. These datasets encompass a range of typical land cover types, including parks, agricultural areas, university campuses, and urban environments. They vary in terms of spatial resolution and spectral bands, thereby enabling the simulation of realistic application conditions. Detailed parameter specifications for each dataset are provided in Table 1.

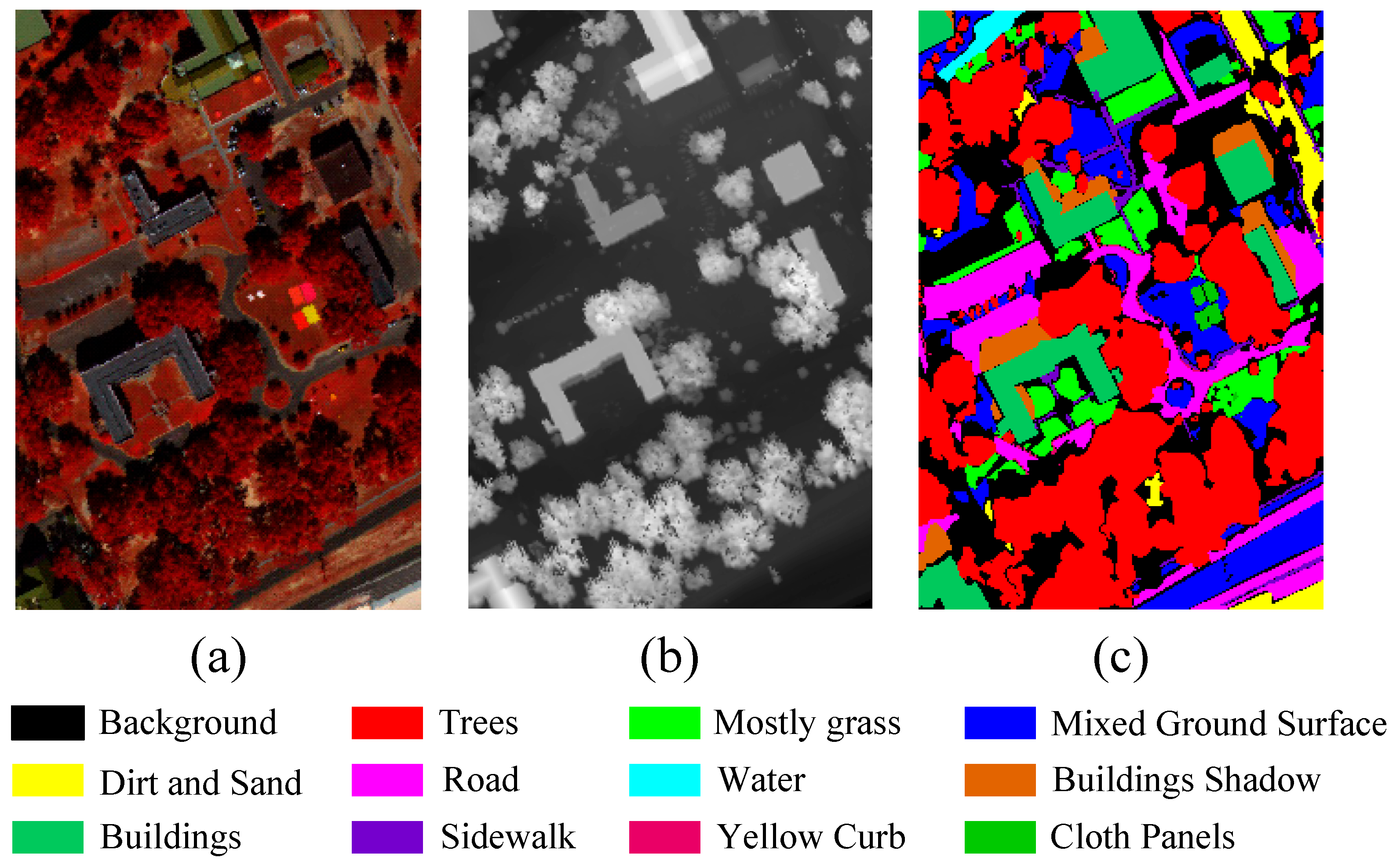

MUUFL: The MUUFL dataset integrates HSI and LiDAR data, which were collected in November 2010 over the campus of the University of Southern Mississippi Gulf Park in Long Beach, Mississippi, USA. The hyperspectral data were acquired using the ITRES CASI-1500 sensor, covering a spectral range of 375–1050 nm (0.38–1.05 µm) with 64 spectral bands. The spatial dimensions of the HSI data are 325 × 220 pixels, with a spatial resolution of 0.54 × 1.0 m². The LiDAR data were collected using the Gemini airborne system, with a spatial resolution of 0.60 × 0.78 m². The dataset comprises 11 land cover classes and includes a total of 53,687 labeled samples. Figure 5 presents the false-color composite of the hyperspectral data, the digital surface model (DSM) derived from LiDAR data, and the land cover map. The distribution of samples across the training and test sets is summarized in Table 2.

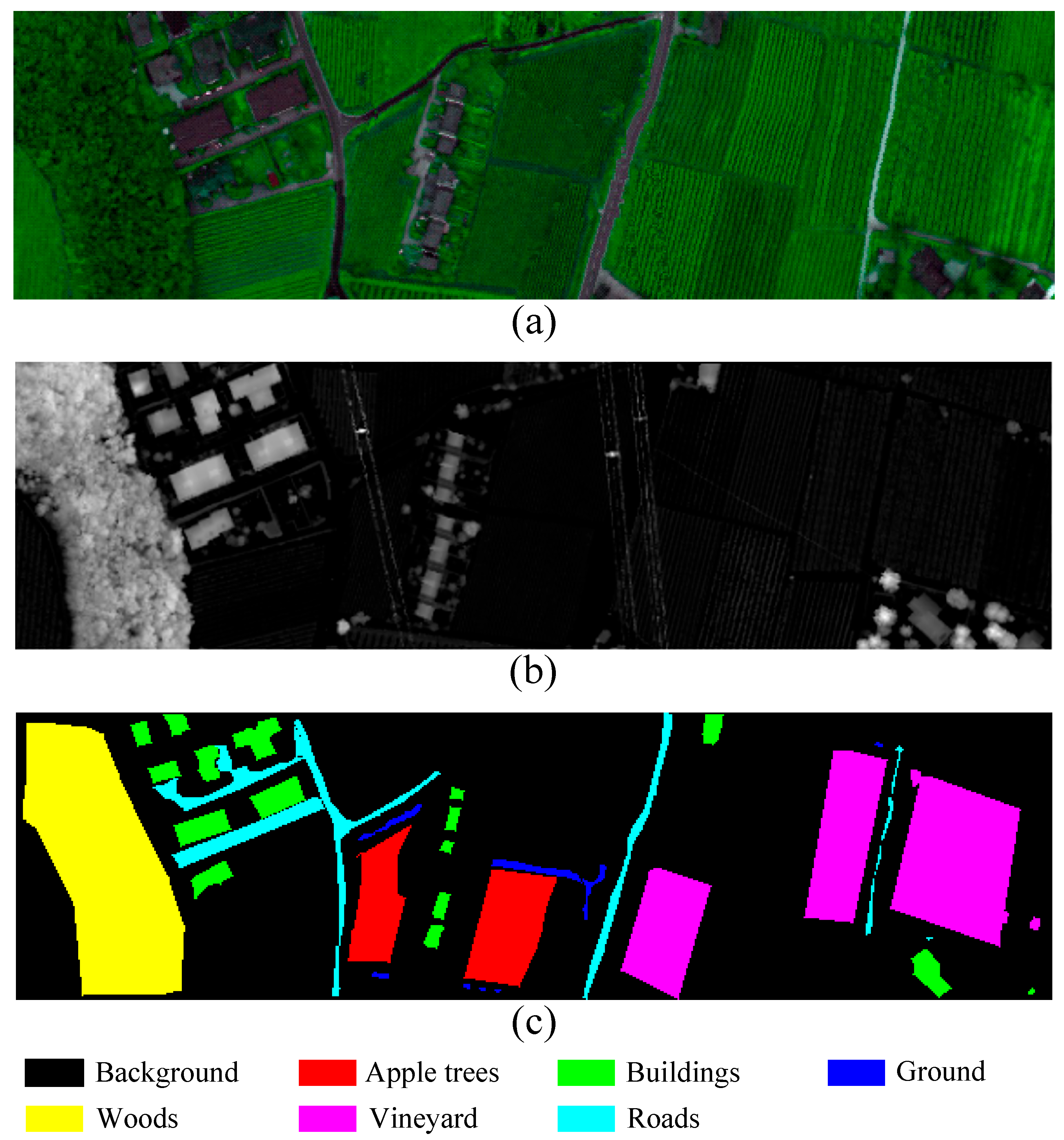

Trento: The Trento dataset combines HSI and LiDAR data collected from rural areas of Trento, located in northern Italy. The HSI data were captured using the airborne Eagle sensor and comprise 63 spectral bands ranging from 0.42 to 0.99 µm. The dataset covers an area of 166 × 600 pixels with a spatial resolution of 1 m. The LiDAR data were obtained from the Optech ALTM 3100 EA airborne sensor, which produced a single raster dataset and, together with the HSI data, was used to generate a DSM. This dataset is primarily intended for land cover classification involving six distinct categories and includes a total of 30,214 labeled samples. Figure 6 presents the hyperspectral false-color image of the Trento data, the LiDAR-derived DSM, and the ground truth map. The distribution of samples in the training and test sets is summarized in Table 3.

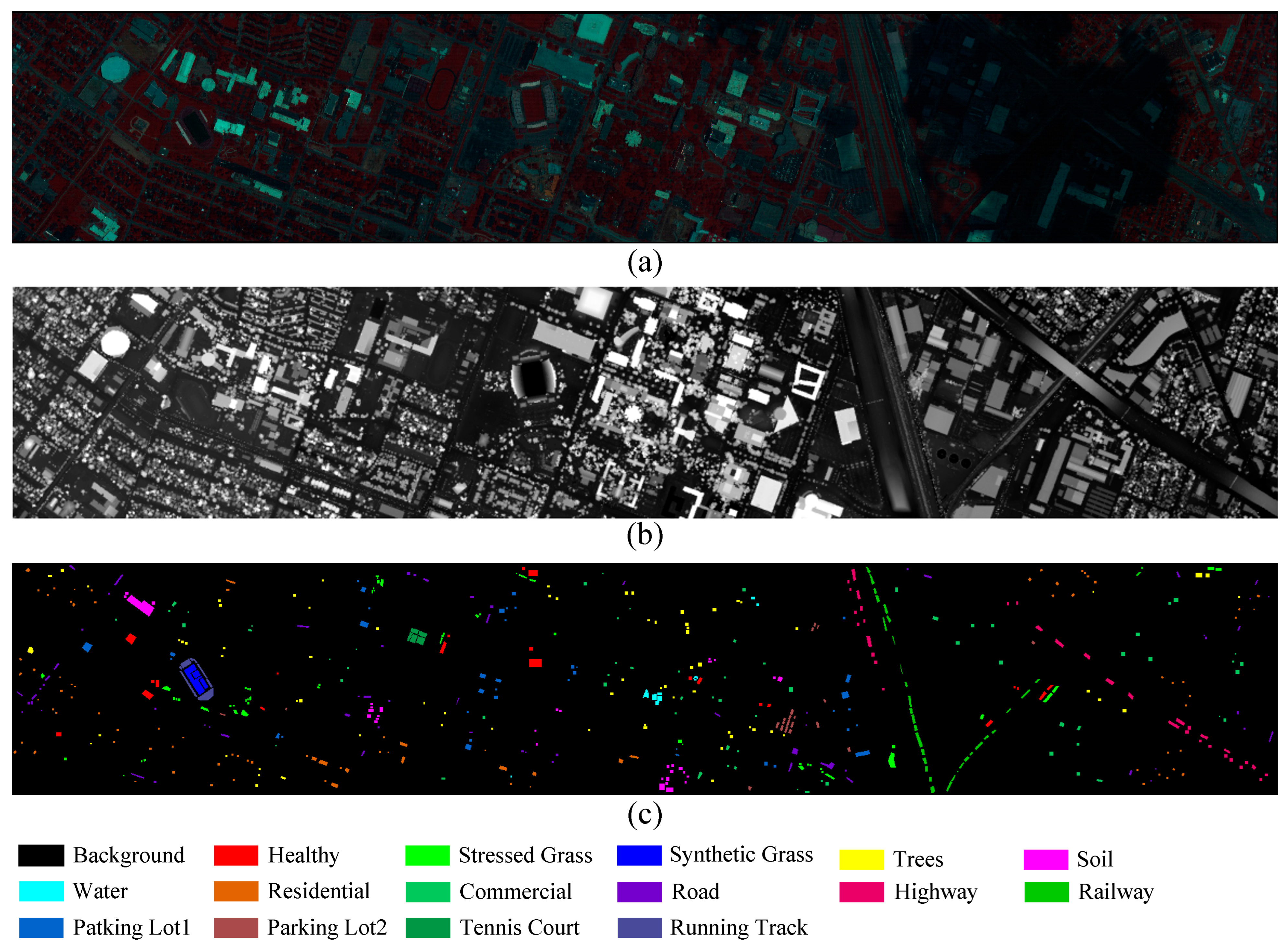

Houston 2013: The Houston 2013 dataset was provided by the IEEE GRSS Data Fusion Contest. It was collected in June 2012 by the National Center for Airborne Laser Mapping (NCALM) in the United States, covering areas within and around the campus of the University of Houston, Texas. This dataset combines HSI data with a DSM derived from LiDAR. The HSI data were captured using the ITRES CASI-1500 sensor, comprising 144 spectral bands within the wavelength range of 0.38 to 1.05 µm. It has a spatial resolution of 2.5 m and a scene size of 349 × 1905 pixels. The LiDAR data are single-band and match the HSI data in both spatial resolution and scene dimensions. The primary objective of this dataset is to enable the classification of 15 distinct land use/land cover categories, and it includes a total of 15,029 labeled samples. Figure 7 presents the false-color HSI of the Houston 2013 data, the LiDAR-derived DSM, and the ground truth map. The distribution of samples in the training and test sets is summarized in Table 4.

4.2. Experimental Setup

This study constructs an experimental system using the PyTorch 1.13.1 framework. The hardware environment is equipped with an NVIDIA RTX 4090 GPU (24 GB VRAM, Santa Clara, CA, USA), and the programming environment is established on Python 3.8. The experimental parameters are configured as follows: 20 training samples are assigned to each class, with an input window size of 11 × 11 pixels. During training, the model is trained for 200 epochs, with an initial learning rate of 0.001, a batch size of 512, and the Adam optimizer is employed for model optimization; additionally, a dropout probability of 0.4 is applied to mitigate overfitting. At the network architecture level, the number of hidden channels is set to 32, the encoder adopts a two-layer structure with 6 attention heads, the temperature parameter is set to 1.0, and the hierarchical loss coefficient is set to 0.005.
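For reproducibility, the settings listed above can be collected in a single configuration object. The sketch below only restates the reported values; the class name `ExperimentConfig` and all field names are hypothetical and do not come from the authors' code.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ExperimentConfig:
    """Hyperparameters as reported in the text; field names are illustrative."""
    samples_per_class: int = 20
    window_size: int = 11            # 11 x 11 input window
    epochs: int = 200
    learning_rate: float = 1e-3      # initial learning rate
    batch_size: int = 512
    optimizer: str = "adam"
    dropout: float = 0.4
    hidden_channels: int = 32
    encoder_layers: int = 2
    attention_heads: int = 6
    temperature: float = 1.0
    hierarchical_loss_coeff: float = 0.005


config = ExperimentConfig()
print(asdict(config))
```

Freezing the dataclass prevents accidental changes to a setting between runs, which keeps repeated experiments comparable.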

The experiments use the Overall Accuracy (OA), Average Accuracy (AA), and Kappa coefficient as quantitative indicators of performance. OA is the proportion of correctly classified pixels among all pixels; AA is the mean of the per-class accuracies; and the Kappa coefficient measures the agreement between the model predictions and the true labels beyond what chance alone would produce. They are defined as follows:

$$\mathrm{OA} = \frac{1}{N}\sum_{i=1}^{c} n_{ii}, \qquad \mathrm{AA} = \frac{1}{c}\sum_{i=1}^{c} \frac{n_{ii}}{n_{i+}}, \qquad \mathrm{Kappa} = \frac{N\sum_{i=1}^{c} n_{ii} - \sum_{i=1}^{c} n_{i+}\,n_{+i}}{N^{2} - \sum_{i=1}^{c} n_{i+}\,n_{+i}}$$

Here, $n_{ij}$ denotes the number of pixels that truly belong to class i but are classified as class j (with $n_{ii}$ representing the number of correctly classified samples), $c$ refers to the total number of object classes, and $N$ indicates the total number of test samples. Furthermore, $n_{i+}$ and $n_{+i}$ denote the sum of the i-th row and the sum of the i-th column of the confusion matrix, respectively.
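As a worked illustration of the three metrics, the following sketch computes OA, AA, and Kappa from a confusion matrix whose rows are true classes and whose columns are predicted classes. The function name `classification_metrics` and the 2 × 2 toy matrix are illustrative only, and the sketch assumes every class has at least one test sample.

```python
def classification_metrics(confusion):
    """Return (OA, AA, Kappa) for a square confusion matrix.

    confusion[i][j] is the number of test pixels of true class i
    that were predicted as class j.
    """
    c = len(confusion)
    total = sum(sum(row) for row in confusion)
    diag = [confusion[i][i] for i in range(c)]
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(c)) for j in range(c)]

    oa = sum(diag) / total                                  # overall accuracy
    aa = sum(d / r for d, r in zip(diag, row_sums)) / c     # mean per-class accuracy
    # Chance agreement estimated from the row and column marginals.
    expected = sum(r * k for r, k in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Toy example: 2 classes, 10 test pixels each.
confusion = [[8, 2],
             [1, 9]]
oa, aa, kappa = classification_metrics(confusion)
print(round(oa, 4), round(aa, 4), round(kappa, 4))
```

For this toy matrix, 17 of 20 pixels are correct, the per-class accuracies are 0.8 and 0.9, and the chance agreement is 0.5, so OA and AA are both 0.85 and Kappa is 0.7.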

4.3. Classification Results

To evaluate the performance of the proposed method, we conducted comparative experiments involving seven state-of-the-art (SOTA) models: HybridSN [15], SSFTT [16], DCTN [23], MS2CANet [24], MFT [8], MADNet [25], and PyionNet [3]. In the experiment, HybridSN, SSFTT, and DCTN, which are recognized as classical models for HSI classification, use the HSI alone as input. In contrast, MS2CANet, MFT, MADNet, and PyionNet, which are SOTA models for HSI-LiDAR data fusion and classification, incorporate both the HSI and the DSM derived from LiDAR as input modalities.

HybridSN is a hybrid neural network specifically designed for HSI classification. Its core innovation lies in integrating the advantages of 3D convolution and 2D convolution to effectively capture the spatial-spectral joint features in hyperspectral data. SSFTT is based on the Transformer architecture. Through spatial-spectral feature fusion and a self-attention mechanism, it achieves high accuracy and robustness in the HSI classification task. DCTN employs a dual-branch convolutional Transformer architecture and incorporates an efficient interactive adaptive mechanism, thereby achieving outstanding performance in the HSIC task. MS2CANet is a multi-scale pyramid fusion framework that incorporates spatial-spectral cross-modal attention. It enhances the model’s capacity to learn multi-scale information, thereby improving classification accuracy. MFT is a multi-modal fusion transformer network that incorporates the mCrossPA mechanism to integrate complementary information sources with HSI tokens for land cover classification. MADNet is a multi-level attention-based dynamic scaling network that employs an attention module to extract features from HSIs and LiDAR data across multiple levels. PyionNet incorporates a pyramid multi-scale feature extraction module and a progressive cross-fusion mechanism, thereby significantly enhancing the classification accuracy of multi-source data integration.

To ensure the fairness of the experiment, all models are configured with the optimal parameters reported in the literature and are independently executed 10 times using the same training and test sets. Random errors are minimized by statistically analyzing the average performance and standard deviations, thereby improving the comparability across different methods.
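The aggregation over repeated runs amounts to a mean and a standard deviation per metric, as in the short sketch below. The scores are placeholder values chosen for easy arithmetic, not results from the experiments, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not say which estimator is reported.

```python
from statistics import mean, stdev


def summarize_runs(scores):
    """Return (mean, sample standard deviation) over independent runs."""
    return mean(scores), stdev(scores)


# Placeholder OA values (in %) from five hypothetical runs.
oa_runs = [90.0, 92.0, 91.0, 89.0, 93.0]
oa_mean, oa_std = summarize_runs(oa_runs)
print(f"OA = {oa_mean:.2f} ± {oa_std:.2f}")
```

Reporting the spread alongside the mean makes it possible to judge whether a gap between two methods exceeds run-to-run variation.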

4.3.1. Quantitative Analysis

The classification performance of the eight methods was evaluated using three datasets: MUUFL, Trento, and Houston2013. The results of these evaluations are summarized in Table 5, Table 6 and Table 7. For clarity, the highest OA, AA, Kappa coefficient, and per-class classification accuracies are highlighted in bold.

Table 5 presents the classification results of various methods on the MUUFL dataset. The results indicate that the proposed method in this study achieves the highest performance, with an OA, AA, and Kappa coefficient of 88.35%, 87.03%, and 87.43%, respectively. These metrics are 2.27%, 1.46%, and 1.79% higher than those of the second-best method, PyionNet. PyionNet demonstrates relatively balanced classification accuracy across object classes, achieving an OA of 86.08%. In contrast, HybridSN performs the least effectively, with an OA of only 71.5%. Other methods improve feature fusion and spatial-spectral relationship modeling by incorporating attention mechanisms or Transformer architectures, resulting in significantly higher classification accuracy compared to HybridSN. Furthermore, all classification methods that integrate HSI and LiDAR data achieve OA values exceeding 80%, which is notably higher than the performance of the three methods based solely on single-source HSI data.

Table 6 presents a comparison of the classification performance of eight different methods on the Trento dataset. This dataset was acquired from a rural area characterized by large, homogeneous farmlands with significant inter-class spectral variations, thereby offering favorable conditions for classification. The experimental results indicate that, with the exception of HybridSN (OA = 85.51%), the OA values of the remaining seven methods exceed 94%. Among them, PyionNet achieved commendable classification performance, with the OA, AA, and Kappa coefficient reaching 98.09%, 97.23%, and 97.65%, respectively. This performance can be attributed to its mechanism of multi-scale feature extraction and cross-fusion. Notably, the method proposed in this study demonstrated superior results, achieving an OA of 99.02%, an AA of 98.59%, and a Kappa value of 99.15%.

Table 7 compares the classification performance of the eight models on the Houston 2013 dataset. The five methods that jointly classify HSI and LiDAR data perform markedly better than the three methods based on single-source HSI classification. Furthermore, MS2CANet, MADNet, and PyionNet perform best in certain categories. For instance, PyionNet achieves the highest classification accuracy for the Trees, Water, and Parking Lot 2 categories. The method proposed in this study achieves the highest OA (91.85%), AA (92.33%), and Kappa coefficient (91.63%). These results validate the effectiveness of multimodal data fusion in classification tasks and indicate that the proposed method handles complex urban environments well.

In summary, the following conclusions can be drawn. Across the three datasets, models based on multi-source data achieve better classification performance than single-source input models. Among the single-source HSI models, DCTN significantly outperforms SSFTT and HybridSN in classification accuracy, as it combines the strengths of both CNNs and Transformers. Among the multimodal models, MADNet employs a spectral angle attention mechanism that enables dynamic scale selection, so its overall performance surpasses that of MS2CANet and MFT, which rely on simple fusion strategies. PyionNet is strongly competitive owing to its efficient fusion architecture: on the Trento dataset it achieves an OA of 98.09%, and on the other two datasets its performance is consistently high and balanced across all categories. The method proposed in this study achieves the highest classification accuracy in multiple object categories and an overall accuracy that surpasses all comparative models, which demonstrates its effectiveness and robustness.

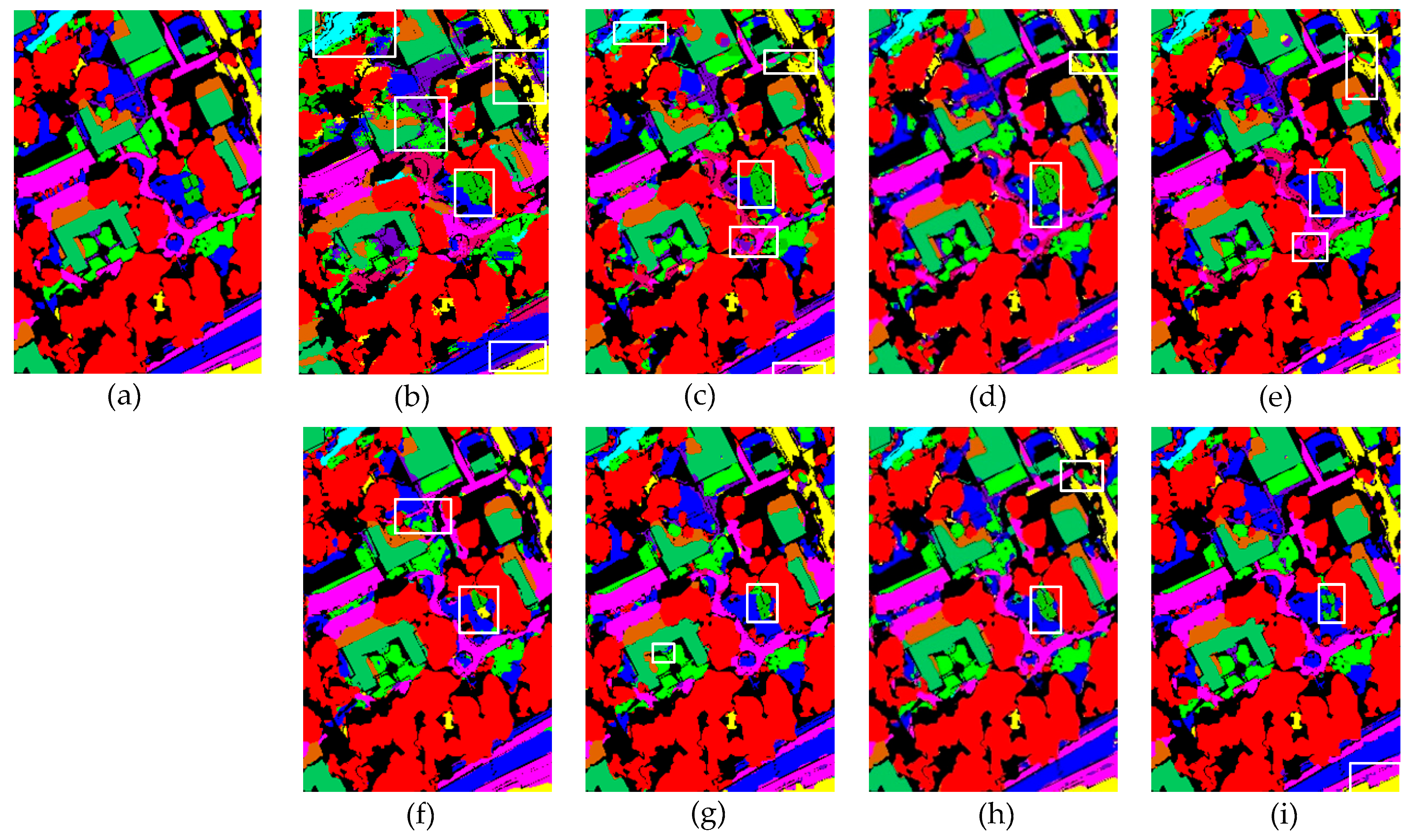

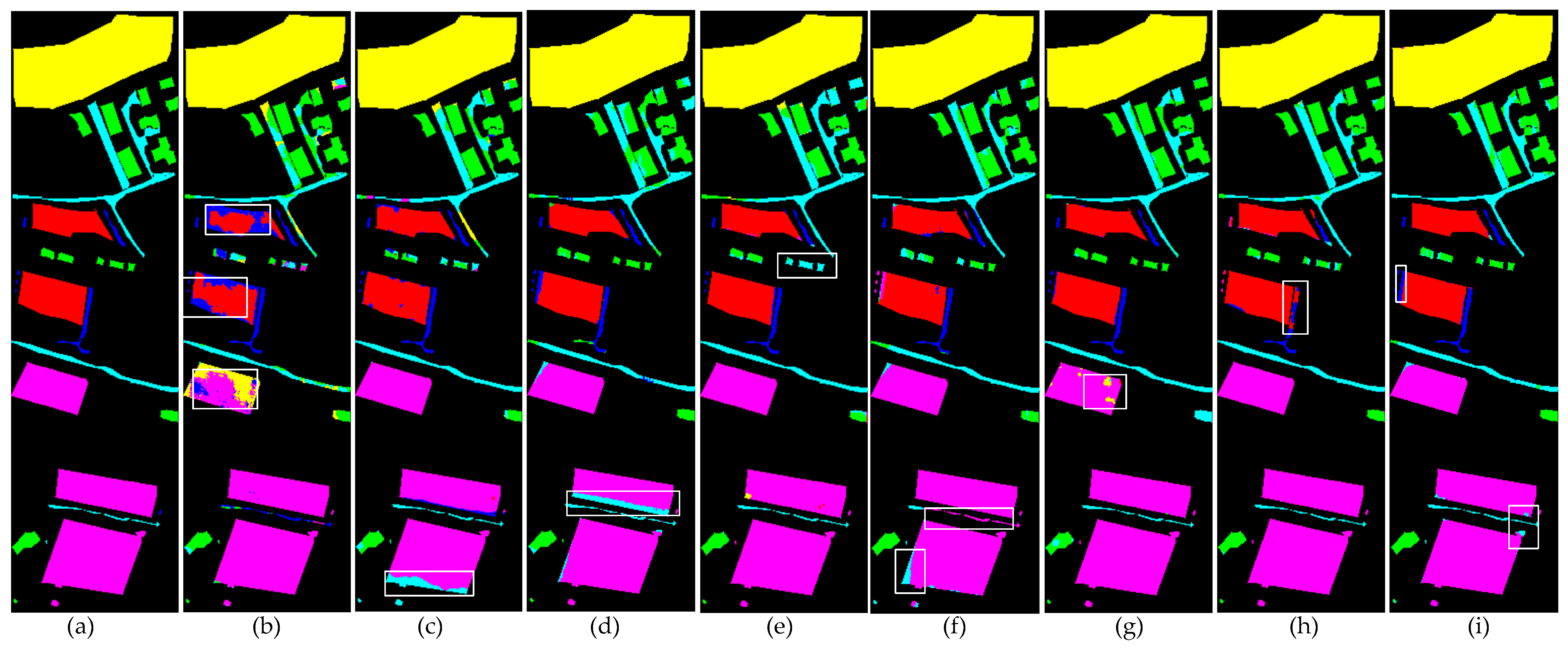

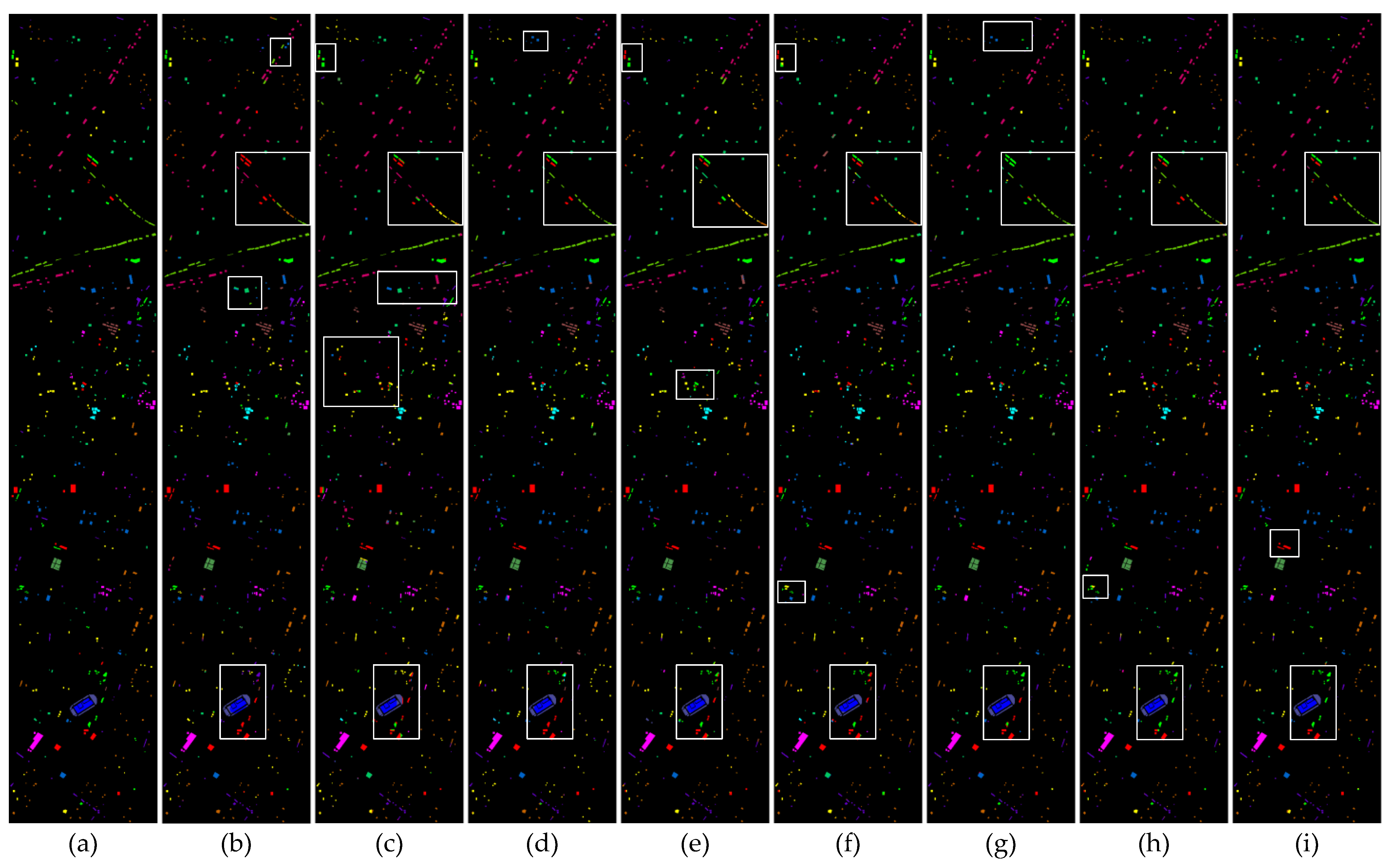

4.3.2. Qualitative Analysis

To systematically evaluate the performance differences between the proposed method and existing comparative approaches, this study conducted qualitative visual analysis experiments on three publicly available datasets. The experimental results are presented in Figure 8, Figure 9 and Figure 10. Through direct comparison of the classification performance of different methods in representative scenarios, the advantages of the proposed method in terms of boundary preservation, detail representation, and noise suppression can be further substantiated.
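Classification maps of the kind compared in these figures are typically rendered by mapping each predicted class label to a color. The snippet below is a minimal sketch of that step, assuming integer labels where 0 marks unlabeled pixels and 1..C are the classes; the function name `labels_to_rgb` and the two-color palette are hypothetical and do not reproduce the color scheme used in the figures.

```python
import numpy as np


def labels_to_rgb(label_map, palette, background=(0, 0, 0)):
    """Convert an (H, W) integer label map into an (H, W, 3) uint8 image.

    Label 0 is drawn with `background`; label k (k >= 1) uses palette[k - 1].
    """
    label_map = np.asarray(label_map, dtype=np.int64)
    palette = np.asarray(palette, dtype=np.uint8)
    bg = np.asarray(background, dtype=np.uint8).reshape(1, 3)
    lut = np.vstack([bg, palette])  # lookup table: row index == label value
    return lut[label_map]           # fancy indexing broadcasts over H and W


# Toy 2x2 prediction with two classes (illustrative values only).
pred = np.array([[0, 1],
                 [2, 1]])
rgb = labels_to_rgb(pred, palette=[(255, 0, 0), (0, 255, 0)])
print(rgb.shape)
```

Rendering every method's predictions with the same palette makes boundary confusion and isolated noisy pixels directly comparable across the panels.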

Figure 8 presents the classification maps produced by each model on the MUUFL dataset. Because several adjacent object classes in this dataset have relatively low spectral separability, the single-source input models (HybridSN, SSFTT, DCTN) exhibit noticeable confusion at the boundaries between land cover types, particularly at the junctions of water/grass/forest and sand/mixed land surfaces in the upper region. Among the multi-source data models, PyionNet produces clear classification boundaries and competitive visual results. In comparison, the method proposed in this study excels in detail representation and boundary preservation, effectively reducing inter-class misclassification and boundary blurring.

Figure 9 presents a comparison of the classification visualization results obtained by various methods on the Trento dataset. As can be observed from the figure, HybridSN produces a significant amount of noise in the vineyard and apple tree regions. SSFTT, DCTN, and MS2CANet exhibit minor misclassifications at the boundary between the lower vineyard and the road. MFT demonstrates noticeable classification errors in the building area. PyionNet shows relatively better performance in these regions, with only a limited number of noisy points appearing in the central ground area. In contrast, the method proposed in this study not only minimizes classification errors but also achieves the best overall classification performance.

Figure 10 presents the visual classification results of eight algorithms on the Houston 2013 dataset. This dataset is characterized by a relatively scattered sample distribution and a complex urban scene. As a result, the first six methods exhibit noticeable classification errors in regions such as railways, stressed grass areas, and the junctions between running tracks and grass in the lower portion of the map. In contrast, PyionNet and the method proposed in this study demonstrate superior classification performance, with no evident misclassification observed.

4.4. Ablation Experiment

To evaluate the effectiveness of multimodal fusion in the system, this study designed two experimental settings for comparative analysis: single-modal input (HSI or LiDAR) and dual-modal fusion. The experimental results in Table 8 show that, across the three standard remote sensing datasets, the dual-modal fusion approach substantially outperforms the single-modal approach on the classification metrics. Notably, the performance advantage is more pronounced on test sets with higher scene complexity. This comparison confirms the benefit of the multimodal data fusion strategy in remote sensing image classification and suggests that the complementary nature of multi-source information enhances the model’s ability to distinguish complex land cover types.

To systematically evaluate the effectiveness of each module within the model, this study conducted three ablation experiments: (1) EXP1 excluded the shallow multi-scale feature extraction module and retained only the CCformer encoder along with the classifier; (2) EXP2 removed the CCformer encoder and instead employed a basic feature concatenation and fusion strategy in conjunction with the shallow multi-scale feature extraction module; (3) Full denotes the complete model architecture. As presented in Table 9, although the CCformer encoder alone (EXP1) achieves relatively high classification accuracy, integrating the shallow feature extraction module with the CCformer encoder improves performance further. Specifically, the full model significantly outperforms the two simplified variants in terms of overall accuracy (OA) and average accuracy (AA), confirming the effectiveness of combining multi-scale feature extraction with the Transformer-based architecture. This comparison illustrates the complementary nature of the model’s components: the shallow feature extraction module provides a more robust and semantically rich foundation for subsequent high-level feature learning.
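The three ablation variants can be summarized as combinations of two switches. The following is a minimal sketch of such a configuration, not the authors' implementation: the class `AblationConfig`, its field names, and the helper `describe` are hypothetical names introduced here for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical switches describing one ablation variant."""
    name: str
    use_shallow_multiscale: bool  # shallow multi-scale feature extraction module
    use_ccformer: bool            # CCformer encoder; False -> plain concatenation fusion


ABLATIONS = (
    AblationConfig("EXP1", use_shallow_multiscale=False, use_ccformer=True),
    AblationConfig("EXP2", use_shallow_multiscale=True, use_ccformer=False),
    AblationConfig("Full", use_shallow_multiscale=True, use_ccformer=True),
)


def describe(cfg):
    """Return a one-line description of the pipeline stages a variant keeps."""
    stages = []
    if cfg.use_shallow_multiscale:
        stages.append("shallow multi-scale extraction")
    stages.append("CCformer encoder" if cfg.use_ccformer else "concatenation fusion")
    stages.append("classifier")
    return f"{cfg.name}: " + " -> ".join(stages)


for cfg in ABLATIONS:
    print(describe(cfg))
```

Expressing the variants as flags on a single configuration keeps the classifier and training setup identical across runs, so that differences in OA and AA can be attributed to the removed module.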