1. Introduction

The rapid penetration of distributed energy resources and the advancement of smart metering infrastructure make distribution networks (DNs) more intelligent, but they also pose challenges for system stability and protection. To ensure power supply reliability, DN operators must thoroughly understand grid conditions, prevent faults, and respond promptly to any that occur. It is therefore essential to develop effective and efficient fault diagnosis methods that can quickly detect, locate, and mitigate faults, ensuring the stable and reliable operation of DNs.

Traditional methods include impedance-based methods [1,2], signal injection methods [3,4], traveling wave methods [5,6,7], and matrix methods [8,9]. However, beyond the inherent limitations of these methods, the intermittent and distributed nature of renewable energy challenges them in many respects, such as bidirectional power flow [10].

Early data-driven approaches primarily utilized multilayer perceptron (MLP) and convolutional neural network (CNN) architectures. Surin et al. [

11] use five MLP networks to classify inverter output voltages and identify fault types and locations in multilevel inverter systems. Javadian et al. [

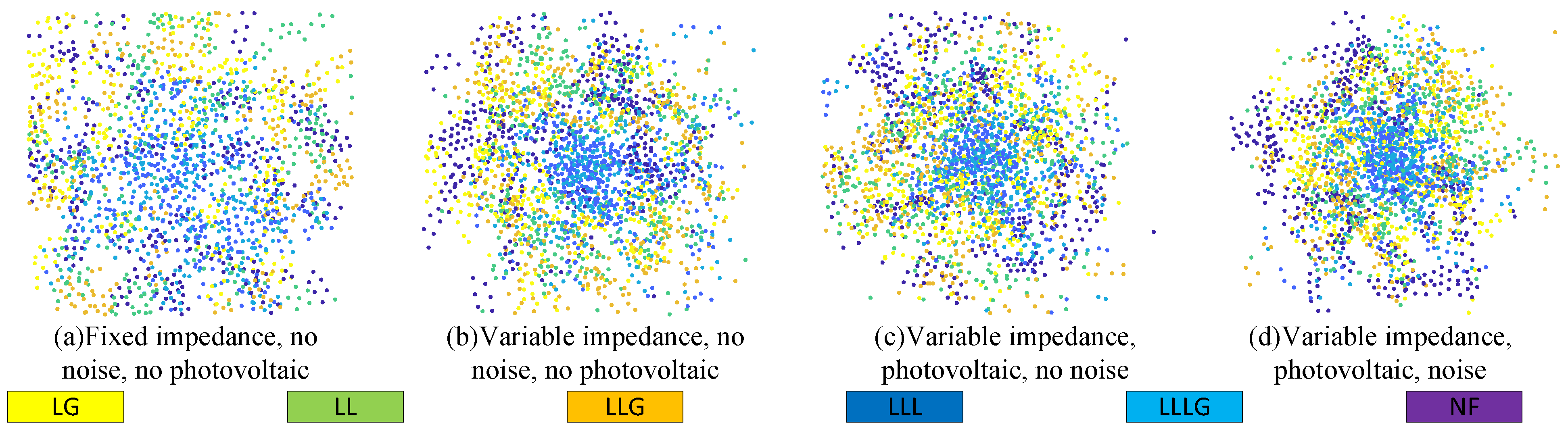

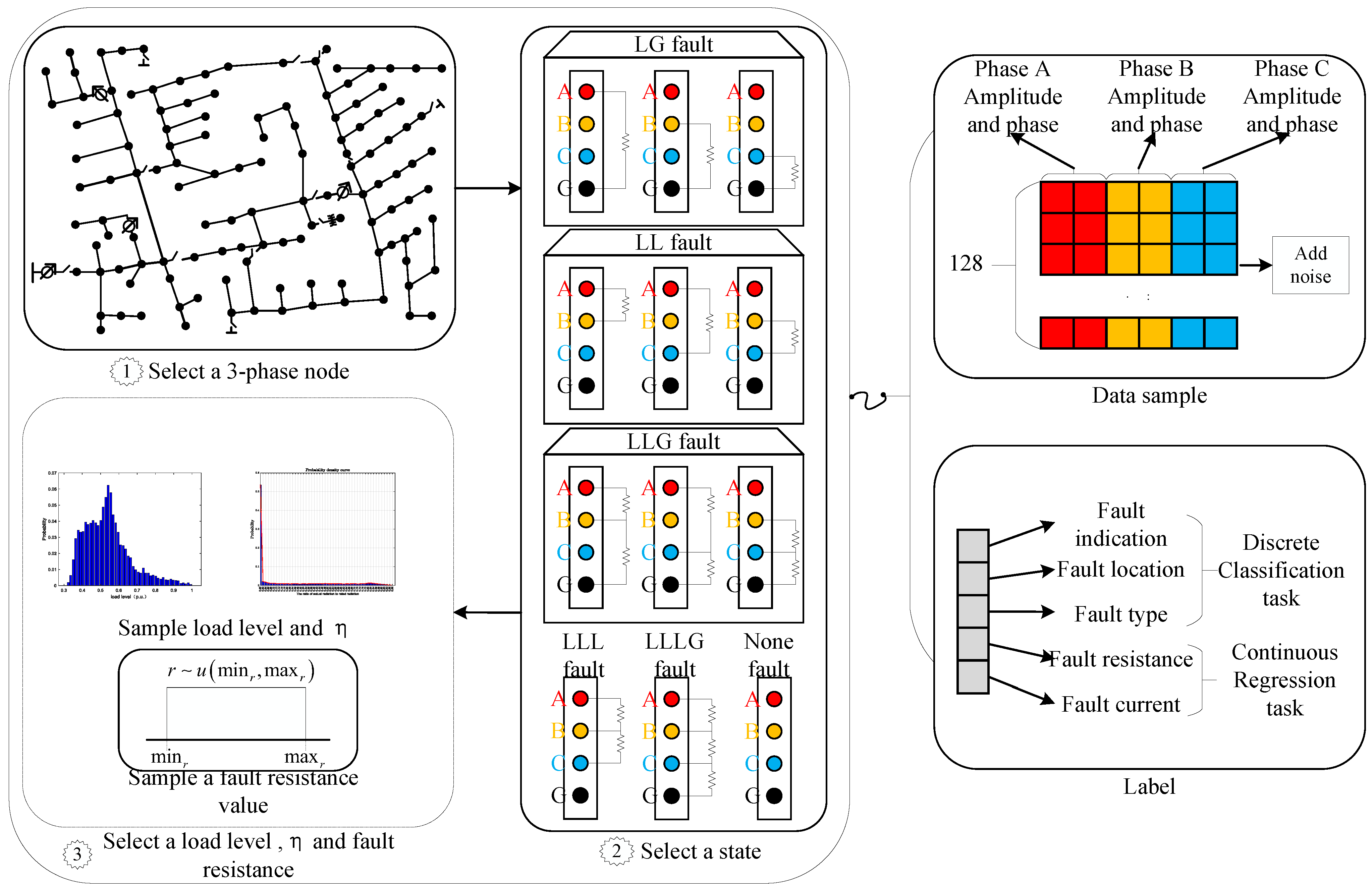

12] used normalized fault currents as input to mitigate the impact of fault impedance on positioning results. Nevertheless, in practical scenarios, measurement noise, variable fault impedance, and the intermittency of new energy sources can obscure fault signatures, as illustrated in

Figure 1. This figure shows the impact of adding and varying fault impedance, the photovoltaic system, and measurement noise on the data distribution, where each color signifies distinct scenarios, such as NF, LG, LL, LLG, LLLG, and LLL. Compared with (a), the other three graphs show shorter mapping distances between various faults, which means weaker fault characteristics and higher discrimination difficulty. The phenomenon shown in (b) and (c) confirms that the intermittency and randomness of new energy power generation lead to stronger dynamics and randomness in fault characteristics. In (d), the mapping distances of various faults after dimensionality reduction are the shortest. This indicates that the fault characteristics, when these three factors are taken into account, are less distinct.
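The notion of "mapping distance" between fault classes can be made concrete with a simple separation score. The sketch below is our own illustration under stated assumptions, not the procedure used to produce Figure 1: given 2-D embeddings (e.g., from a dimensionality-reduction step) labeled by scenario, it averages each class into a centroid and reports the smallest pairwise centroid distance, so a smaller value corresponds to the weaker, harder-to-discriminate fault characteristics described above. The function names and toy points are hypothetical.

```python
import math
from collections import defaultdict


def class_centroids(points, labels):
    """Average the 2-D embedding of every sample that shares a label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), lab in zip(points, labels):
        sums[lab][0] += x
        sums[lab][1] += y
        counts[lab] += 1
    return {lab: (s[0] / counts[lab], s[1] / counts[lab]) for lab, s in sums.items()}


def min_centroid_distance(points, labels):
    """Smallest Euclidean distance between any two class centroids (hypothetical separation score)."""
    cents = list(class_centroids(points, labels).values())
    return min(
        math.dist(cents[i], cents[j])
        for i in range(len(cents))
        for j in range(i + 1, len(cents))
    )


# Toy embeddings: the same two classes, well separated vs. collapsed together.
labels = ["NF", "NF", "LG", "LG"]
clean = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
perturbed = [(0.0, 0.0), (0.0, 2.0), (1.0, 0.0), (1.0, 2.0)]
print(min_centroid_distance(clean, labels))      # 10.0
print(min_centroid_distance(perturbed, labels))  # 1.0
```

With six scenario labels instead of two, the same score would shrink as the perturbations in panels (b) to (d) pull class centroids together.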

Many researchers have proposed solutions to these problems. For example, to address measurement noise, Aljohani et al. [13] combined the Stockwell transform (ST) with a multilayer perceptron neural network (MLP-NN), using the time–frequency features of fault signals for classification. Guo et al. [14] introduced a deep learning approach based on the continuous wavelet transform (CWT) and CNNs that enables the CNNs to extract features automatically and detect faulty feeders. Li et al. [15] established a relationship among the admittance matrix, the voltage change matrix, the current change matrix, and the unbalanced current based on Kirchhoff's laws. They further accounted for variable fault impedance in the model but did not consider scenarios with new energy. In contrast, Paul [16] and Siddique [17] proposed CNN-based approaches for new energy scenarios. However, CNN-based methods extract temporal features from signals and therefore require sampling of transient fault waveforms. Most existing power grid data are uploaded every five minutes, which does not meet these algorithms' need for high-resolution data. Moreover, as DNs expand, these methods face challenges beyond the lack of high-resolution data, such as the low training sample rate caused by the scarcity of fault records. In addition, the large scale of DNs leads to low observation rates because phasor measurement unit (PMU) placement is costly. These issues are rarely addressed by the CNN-based methods described above.
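To make the low-observation-rate and measurement-noise conditions concrete, the following sketch (our own illustration, not a procedure taken from the cited works) randomly selects a subset of buses as PMU-observed according to an assumed observation rate and adds zero-mean Gaussian noise at a given signal-to-noise ratio (SNR) to their voltage magnitudes; unobserved buses are zero-filled and flagged by a mask. The function name `observe`, the SNR definition based on mean signal power, and the per-unit values are assumptions.

```python
import math
import random


def observe(voltages, obs_rate, snr_db, seed=0):
    """Return (masked noisy measurements, observation mask) for a list of bus voltages.

    obs_rate: assumed fraction of buses that carry a PMU (0 < obs_rate <= 1).
    snr_db:   assumed signal-to-noise ratio in dB, defined on mean signal power.
    """
    rng = random.Random(seed)
    n = len(voltages)
    n_obs = max(1, round(obs_rate * n))
    observed = set(rng.sample(range(n), n_obs))
    # Noise standard deviation chosen so that mean(v^2) / sigma^2 = 10^(snr_db / 10).
    signal_power = sum(v * v for v in voltages) / n
    sigma = math.sqrt(signal_power / (10 ** (snr_db / 10)))
    measured, mask = [], []
    for i, v in enumerate(voltages):
        if i in observed:
            measured.append(v + rng.gauss(0.0, sigma))
            mask.append(1)
        else:
            measured.append(0.0)  # unobserved bus: no measurement available
            mask.append(0)
    return measured, mask


# Ten buses near 1.0 p.u., 30% of them observed, 30 dB SNR.
v = [1.0, 0.99, 0.98, 0.97, 0.97, 0.96, 0.95, 0.95, 0.94, 0.93]
meas, mask = observe(v, obs_rate=0.3, snr_db=30)
print(sum(mask))  # 3
```

Lowering `obs_rate` or `snr_db` in such a generator is one simple way to reproduce the sparse, noisy inputs that the methods discussed above must cope with.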

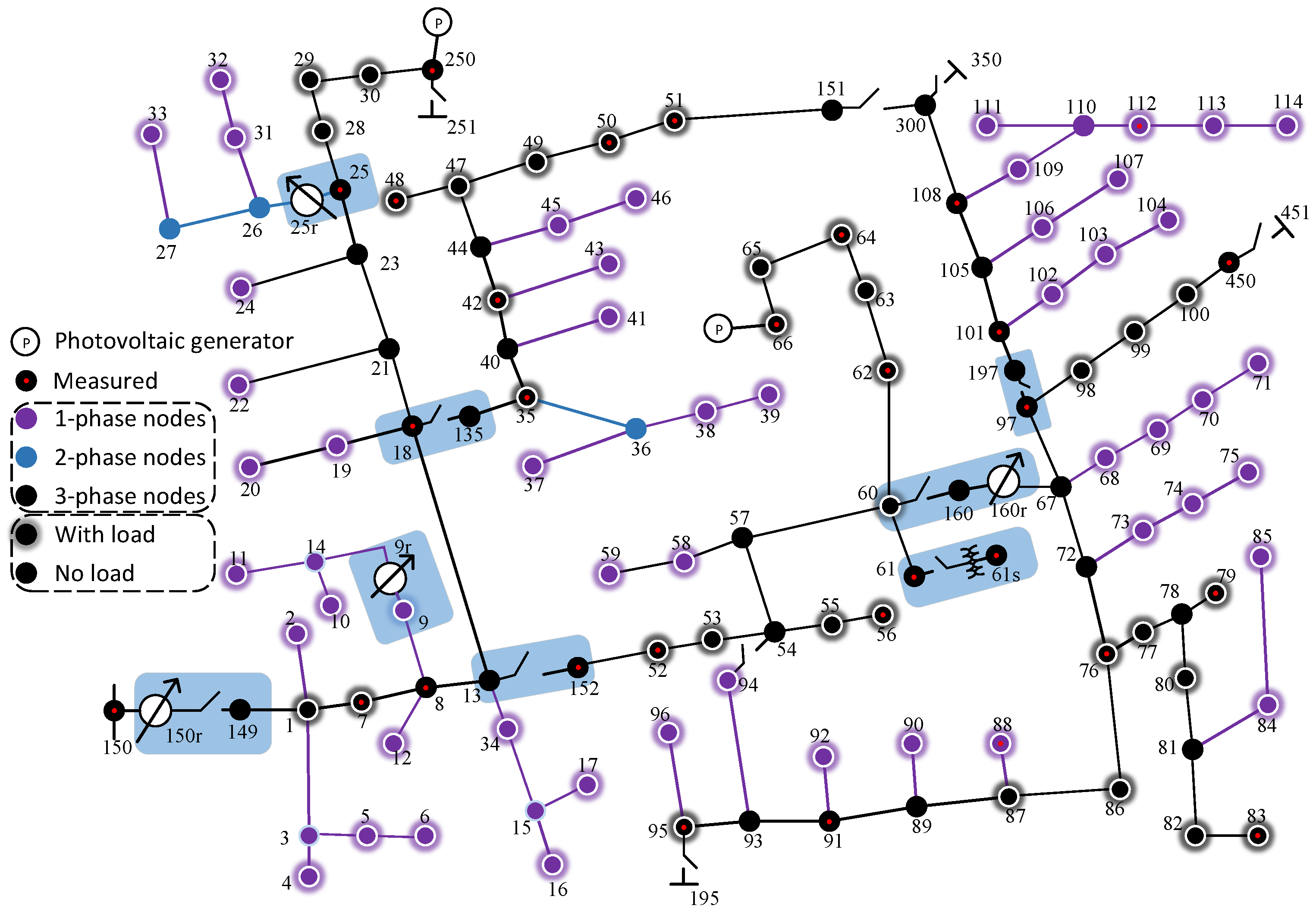

Schlichtkrull et al. [18] showed that GCNs can be used to model relational data. Since then, graph neural networks (GNNs) have attracted significant attention for DN fault diagnosis because they can explicitly exploit the inherent topology of the DN. The DN can be modeled as a homogeneous graph whose nodes are buses and whose edges are lines, and the voltage data measured by PMUs can be used to train GNNs. A notable study [19] integrated voltage vectors and branch current data and leveraged the ability of GCNs to capture spatial correlations, improving fault location accuracy in scenarios with measurement noise and variable fault impedance. Mo et al. [20] proposed a DN fault diagnosis method based on a deep graph attention network (GAT) to address sparse measurement points under constant operating conditions. Li [21], combining the scenarios of the previous two studies, explored a fault location architecture with sparse measurement nodes to improve fault location performance in environments with measurement noise and variable fault impedance. Building on these works, Chanda et al. [22] introduced a heterogeneous multitask learning GNN framework that performs five tasks simultaneously and independently addresses the issues of sparse measurement points and low training sample rates. Nevertheless, all of the aforementioned studies were conducted in DN settings without new energy sources and did not analyze the impact of new energy on fault characteristics, as shown in Figure 1d. In research on new energy, Ngo et al. [23] proposed a deep GAT that combines one-dimensional convolutional layers with graph attention layers, enhancing the model's ability to process time-series and spatial data, but it requires bus voltage and branch current measurements at 1 kHz. Prasad et al. [24] presented a GNN-based fault diagnosis framework (GFDF) that integrates a multihead attention mechanism with current measurements, topological information, and line parameters for fault diagnosis, but they did not analyze influencing factors such as low observation rates.
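As a reminder of how a GCN exploits the bus–line topology, the sketch below applies one generic graph convolution with the symmetric normalization popularized by Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 X W), to a small feeder. The five-bus topology, the single voltage feature per bus, the zero-filled unobserved bus, and the fixed weight matrix are illustrative assumptions; this is a textbook GCN layer, not the architecture of any cited work.

```python
import math


def gcn_layer(adj, feats, weight):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 X W) using dense Python lists."""
    n = len(adj)
    # Add self-loops so each bus keeps its own measurement.
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Aggregate neighbour features: (normalized adjacency) @ X.
    n_in = len(feats[0])
    agg = [[sum(norm[i][k] * feats[k][f] for k in range(n)) for f in range(n_in)]
           for i in range(n)]
    # Linear transform agg @ W followed by ReLU.
    out_dim = len(weight[0])
    return [[max(0.0, sum(agg[i][f] * weight[f][o] for f in range(n_in)))
             for o in range(out_dim)] for i in range(n)]


# Hypothetical radial feeder: bus 0 (substation) - 1 - 2, laterals 1 - 3 and 2 - 4.
lines = [(0, 1), (1, 2), (1, 3), (2, 4)]
n_bus = 5
adj = [[0] * n_bus for _ in range(n_bus)]
for u, v in lines:
    adj[u][v] = adj[v][u] = 1

x = [[1.00], [0.98], [0.95], [0.97], [0.0]]  # per-unit voltage; bus 4 unobserved (zero-filled)
w = [[1.0, -1.0]]                            # 1 input feature -> 2 hidden features
h = gcn_layer(adj, x, w)
print([round(row[0], 3) for row in h])
```

Note that the unobserved bus 4 receives a nonzero representation from its measured neighbour, which is the mechanism GNN-based methods rely on under sparse measurements.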

It is worth noting that a class of models known as physics-informed neural networks (PINNs) has recently attracted significant attention. PINNs combine mathematical models with data [25]. Falas et al. [26] proposed a hybrid PINN approach that uses graph neural network architectures to process the graph-structured data of power systems more effectively and incorporates physical knowledge derived from the branch current formulation, making the method applicable to both transmission and distribution systems. Ngo et al. [27] used domain-specific physical knowledge and graph neural networks to estimate bus voltage magnitudes and phase angles under load changes. Unlike [19,22], these PINN-based methods are more commonly applied to DN state estimation and often use temporal information. Additionally, key quantities such as active power P and reactive power Q, which are crucial for constructing physics-informed models, are outside the scope of the present study and thus are not discussed in detail.
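The idea of combining a data term with a physics term can be illustrated with a minimal loss function. In the sketch below, which is a generic illustration and not the formulation of [26] or [27], predicted complex bus voltages are penalized both for deviating from measurements at observed buses and for violating the nodal admittance equation I = YV, which follows from Kirchhoff's current law, given assumed bus current injections. The two-bus admittance values, the weighting factor `lam`, and the function name `pinn_loss` are hypothetical.

```python
def pinn_loss(v_pred, v_meas, observed, y_bus, i_inj, lam=1.0):
    """Mean data mismatch on observed buses plus lam * mean squared residual of Y V - I."""
    n = len(v_pred)
    data = sum(abs(v_pred[k] - v_meas[k]) ** 2 for k in observed) / max(1, len(observed))
    residual = 0.0
    for i in range(n):
        # Current injection implied by the predicted voltages at bus i.
        i_calc = sum(y_bus[i][j] * v_pred[j] for j in range(n))
        residual += abs(i_calc - i_inj[i]) ** 2
    return data + lam * residual / n


# Two buses joined by one line with series admittance y (values are illustrative).
y = 1.0 - 5.0j
y_bus = [[y, -y], [-y, y]]
v_true = [1.0 + 0.0j, 0.98 - 0.02j]
i_inj = [sum(y_bus[i][j] * v_true[j] for j in range(2)) for i in range(2)]

print(pinn_loss(v_true, v_true, [0], y_bus, i_inj))  # 0.0
print(pinn_loss([1.0 + 0.0j, 1.0 + 0.0j], v_true, [0], y_bus, i_inj) > 0)  # True
```

In the second call, bus 1 is unobserved, so only the physics term exposes the wrong prediction; this is why such models need quantities (here, current injections) that the present study does not assume to be available.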

Table 1 summarizes existing GNN methods for fault diagnosis. Apart from [23,24], all of these methods rely on steady-state fault data, which are recorded once the electrical quantities (voltage, current, etc.) of the power system have settled at a new steady-state operating point after a fault. In summary, existing methods based on steady-state fault data struggle to fully account for the combined impact of sparse measurements, low training sample rates, measurement noise, variable fault impedance, and new energy fluctuations. Under the joint influence of these perturbations, the inter-class separation among fault samples progressively collapses, which markedly degrades the diagnostic accuracy of existing approaches.

Furthermore, almost all of the above models adopt a general-purpose GNN framework. However, fault propagation in a DN is closely related to line parameters and to the superior–subordinate relationships between nodes, and exploiting this coupling information effectively can improve the accuracy of fault diagnosis models. In addition, some of the above models, such as [20], adopt a dynamic aggregation mechanism to handle issues such as sparse measurement nodes, but local attention has a limited receptive field, while global attention is computationally expensive and vulnerable to interference from redundant information and measurement noise. Moreover, these models process electrical features and topological structure separately, so the attention mechanism fails to fully integrate joint electrical–topological features. The main contributions of this work are as follows:

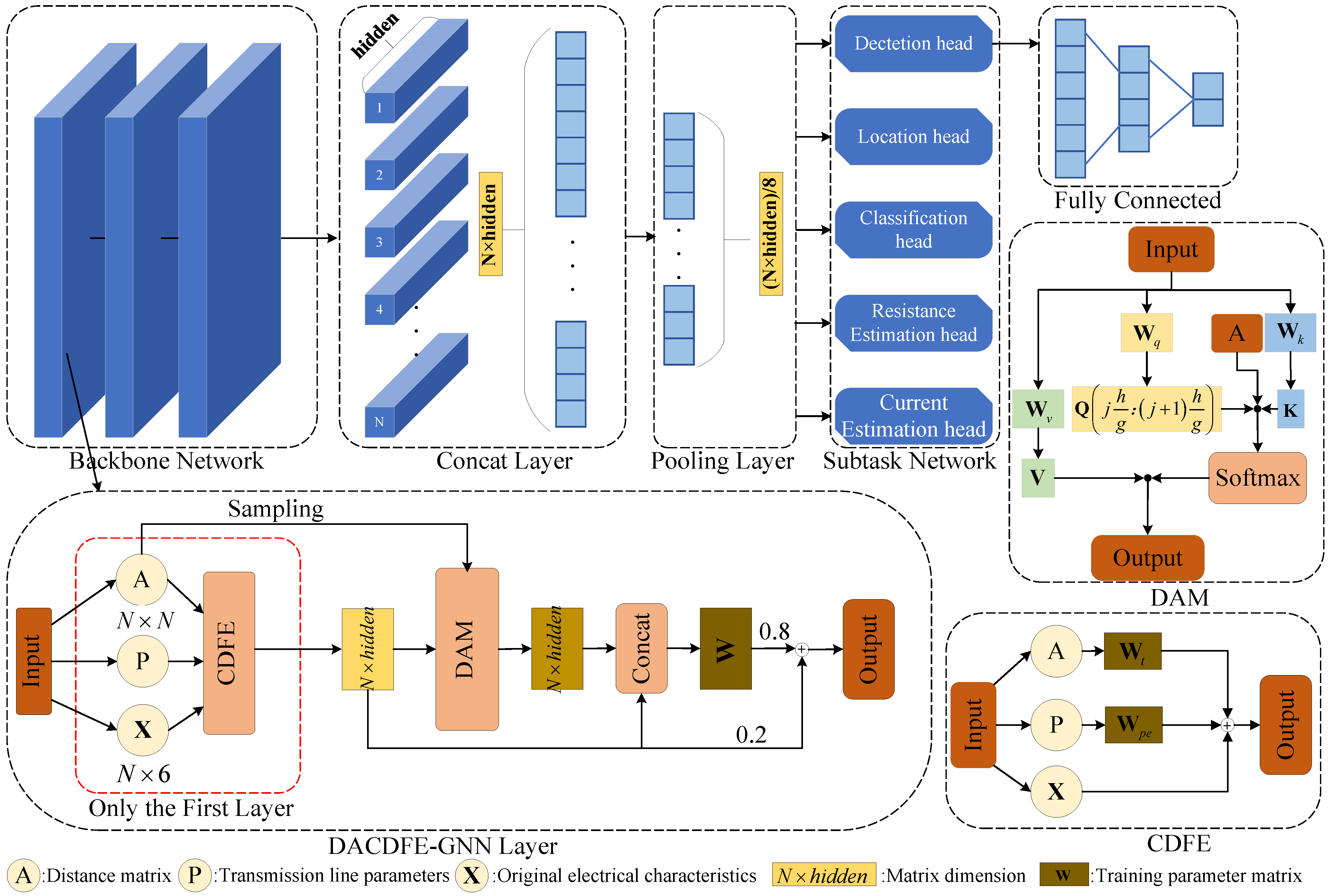

A location coding method called coupled dual-field encoding (CDFE) is proposed. A trainable location coding matrix is constructed from the transmission line characteristics and distance parameters between connected nodes, helping the model learn the laws of fault propagation and the coupling between electrical features and transmission line characteristics.

A dynamic adaptive graph structure-aware aggregation mechanism (DAM) is proposed. The attention coefficients are computed under the guidance of the sampled relation matrix, which fully integrates joint electrical–topological features, improves the generalization ability of the model, and better captures the rich feature information produced by CDFE without additional computational overhead.

In DN environments characterized by sparse measurement points, a low training sample rate, measurement noise, variable fault impedance, and new energy volatility, the proposed model outperforms the baseline models.
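The two ideas above, a line-parameter-based positional prior and structure-guided attention, can be illustrated together in toy form. The sketch below is not the CDFE or DAM formulation; it is a hypothetical stand-in under our own assumptions: a structural prior is built from the cumulative line impedance magnitude along the unique path between two buses of a radial feeder, scaled by a scalar `theta` that would be trainable in a real model, and added as a bias to graph-attention logits before a softmax restricted to each bus and its neighbours. All names (`path_impedance`, `biased_attention`, `theta`) and line values are illustrative.

```python
import math


def path_impedance(n, lines):
    """All-pairs cumulative |Z| along tree paths of a radial feeder (DFS from every bus)."""
    nbrs = {i: [] for i in range(n)}
    for u, v, z in lines:
        nbrs[u].append((v, z))
        nbrs[v].append((u, z))
    dist = [[0.0] * n for _ in range(n)]
    for src in range(n):
        stack, seen = [(src, 0.0)], {src}
        while stack:
            node, d = stack.pop()
            dist[src][node] = d  # paths are unique in a tree, so d is the path sum
            for nxt, z in nbrs[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append((nxt, d + z))
    return dist


def biased_attention(scores, dist, adj, theta):
    """Softmax over each bus and its neighbours of (score - theta * electrical distance)."""
    n = len(scores)
    alpha = [[0.0] * n for _ in range(n)]
    for i in range(n):
        idx = [j for j in range(n) if adj[i][j] or i == j]
        logits = [scores[i][j] - theta * dist[i][j] for j in idx]
        m = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(l - m) for l in logits]
        total = sum(exps)
        for j, e in zip(idx, exps):
            alpha[i][j] = e / total
    return alpha


# Hypothetical 4-bus feeder: (from, to, |Z| in ohms).
lines = [(0, 1, 0.5), (1, 2, 0.3), (1, 3, 1.2)]
n = 4
adj = [[0] * n for _ in range(n)]
for u, v, _ in lines:
    adj[u][v] = adj[v][u] = 1
dist = path_impedance(n, lines)
scores = [[0.0] * n for _ in range(n)]  # uniform raw scores isolate the distance prior
alpha = biased_attention(scores, dist, adj, theta=1.0)
print([round(a, 3) for a in alpha[1]])
```

Because the prior in this sketch is a full N×N matrix, its cost grows quadratically with the number of buses.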

The remainder of this paper is organized as follows:

Section 2 first outlines the objectives and task formulation of fault diagnosis and then describes the proposed fault diagnosis model, DACDFE-GNN, in detail, including its architecture and key components.

Section 3 sets the experimental parameters for simulation verification and discusses the experimental results. The performance of the proposed model under various conditions is analyzed and compared with existing methods, and ablation studies are conducted to verify the effectiveness of each module.

Finally, Section 4 summarizes the paper and suggests directions for future research.

4. Discussion

In this paper, DACDFE-GNN is designed for DNs with integrated new energy. The model addresses the challenge of weak fault signals caused by measurement noise, variable fault impedance, and photovoltaic output fluctuations, especially when only a limited number of nodes are observed and the training sample rate is low. Extensive experiments demonstrate its superiority over baseline methods in key respects such as noise resilience, robustness to low training sample rates and topological changes, and performance under different photovoltaic scenarios. We attribute this success to CDFE and the DAM. The CDFE module guides the model to learn how fault characteristics propagate among nodes, helping it understand the hierarchical relationships between nodes and thereby improving accuracy on the various fault diagnosis tasks. The DAM then effectively captures the rich features encoded by CDFE and enhances the adaptability of the model.

Although the experiments were verified only in the photovoltaic scenario, the model performs fault diagnosis using steady-state signals and can therefore be readily extended to other new energy scenarios, such as wind power generation. Moreover, the computational complexity of the CDFE module depends closely on the scale of the distribution network. For large networks, a hierarchical approach can be adopted: the network is divided into levels, each lower-level network serves as a node of the upper-level network, and the proposed model is trained on each network.
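The hierarchical scheme described above can be sketched as a graph-coarsening step. Assuming each bus has already been assigned to a lower-level sub-network (how that assignment is chosen is outside this sketch), the function below builds the upper-level graph, in which every sub-network becomes a single node and two such nodes are connected whenever at least one line crosses between them. It also returns each sub-network's internal lines so that a separate model could be trained on each. The function name `coarsen` and the example assignment are hypothetical.

```python
from collections import defaultdict


def coarsen(lines, zone_of):
    """Split a feeder into per-zone sub-graphs and an upper-level zone graph.

    lines:   iterable of (bus_u, bus_v) line endpoints.
    zone_of: dict mapping each bus to its (hypothetical) lower-level sub-network id.
    """
    internal = defaultdict(list)  # zone -> lines lying entirely inside that zone
    upper = set()                 # undirected edges between zones
    for u, v in lines:
        zu, zv = zone_of[u], zone_of[v]
        if zu == zv:
            internal[zu].append((u, v))
        else:
            upper.add((min(zu, zv), max(zu, zv)))
    return dict(internal), sorted(upper)


# Hypothetical 7-bus feeder split into three sub-networks A, B and C.
lines = [(0, 1), (1, 2), (2, 3), (1, 4), (4, 5), (5, 6)]
zone_of = {0: "A", 1: "A", 2: "B", 3: "B", 4: "C", 5: "C", 6: "C"}
internal, upper = coarsen(lines, zone_of)
print(upper)     # [('A', 'B'), ('A', 'C')]
print(internal)  # {'A': [(0, 1)], 'B': [(2, 3)], 'C': [(4, 5), (5, 6)]}
```

Each per-zone model then only needs an encoding over its own buses, which keeps any all-pairs structure small.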

Nevertheless, this study is limited to simulation data. Several issues remain to be addressed with real data, such as possible abnormal noise and the scarcity of fault samples. In future work, we will model real networks, supplement scarce fault samples through simulation, and finally use a small amount of real data to transfer the model to practical applications. Regarding the model itself, the effectiveness of the GCN model in real-world settings and the uncertainty caused by the random connection of distributed generation remain areas for future research and improvement. Furthermore, more precise estimation of high fault impedance in complex environments and principled weight design in multitask architectures will also be part of our future work.