Transparent EEG Analysis: Leveraging Autoencoders, Bi-LSTMs, and SHAP for Improved Neurodegenerative Diseases Detection

Abstract

Highlights

- Novel hybrid architecture: Combined autoencoders with bidirectional LSTM networks for enhanced EEG signal classification, achieving 98% accuracy in distinguishing AD, FTD, and healthy controls.

- Explainable AI integration: Implemented the SHAP (SHapley Additive exPlanations) framework to improve model transparency, which identified entropy as the most influential feature for neurodegenerative disease detection.

- Optimal temporal segmentation: Demonstrated that 5-s EEG windows with 50% overlap provide the best balance between classification accuracy and computational efficiency.

- Comprehensive feature extraction: Utilized Power Spectral Density (PSD) analysis across standard frequency bands (Delta, Theta, Alpha, Beta, Gamma) following autoencoder-based dimensionality reduction.

- Superior performance validation: The proposed Bi-LSTM approach reached 98% accuracy, outperforming traditional machine learning methods (KNN: 38%, SVM: 40%) and a unidirectional LSTM (84%).

- Clinical applicability focus: Addressed interpretability challenges in deep learning for medical diagnosis, providing feature-level explanations essential for clinical trust and adoption.

1. Introduction

2. Related Works

2.1. Feature Extraction Techniques

2.2. Classical Machine Learning Models

2.3. Deep Learning Approaches

2.4. Limitations and Research Gaps

2.5. Motivation and Contribution

- Uses Autoencoders to perform unsupervised feature extraction and reduce the dimensionality of EEG signals while preserving relevant patterns;

- Integrates Bidirectional Long Short-Term Memory (Bi-LSTM) networks to explicitly model temporal dependencies in EEG recordings;

- Incorporates SHapley Additive exPlanations (SHAP) to interpret model predictions at the feature level.

3. Methods and Materials

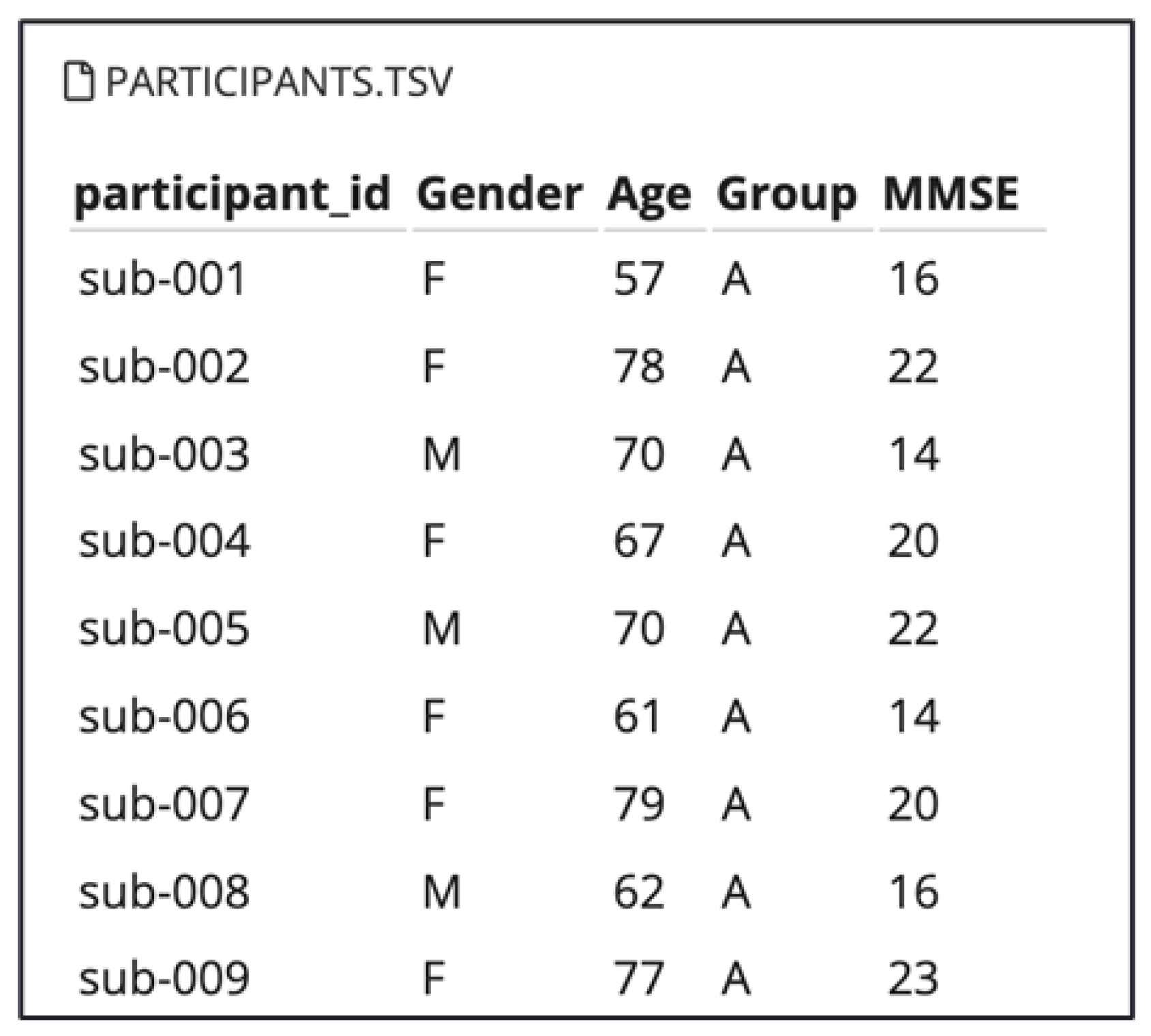

3.1. Dataset Overview

3.1.1. Dataset Imbalance Considerations

- AD group: Mean duration of 13.5 min (min = 5.1, max = 21.3);

- FTD group: Mean duration of 12 min (min = 7.9, max = 16.9);

- CN group: Mean duration of 13.8 min (min = 12.5, max = 16.5).

3.1.2. Recording Duration Impact Mitigation

3.2. Signal Pre-Processing and Feature Extraction

3.2.1. Data Pre-Processing

3.2.2. Signal Segmentation

3.2.3. Duration Bias Mitigation

3.2.4. Data Standardization

3.2.5. Data Reduction

3.2.6. Feature Extraction

- Delta: (1–4 Hz);

- Theta: (4–8 Hz);

- Alpha: (8–13 Hz);

- Beta: (13–30 Hz);

- Gamma: (30–60 Hz).
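
To make the band definitions above concrete, the following is a minimal sketch of how per-band power could be estimated from one EEG segment with an averaged, Hann-windowed periodogram (a simplified Welch-style estimate). It is not the authors' code: the function names, the 500 Hz sampling rate, the sub-segment length, and the synthetic 10 Hz test signal are all assumptions made for illustration.

```python
import numpy as np

# Band edges in Hz, taken from the list above.
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 60.0),
}


def welch_psd(x, fs, nperseg):
    """Simplified Welch PSD: average Hann-windowed periodograms of
    sub-segments that overlap by 50%. Assumes nperseg is even."""
    x = np.asarray(x, dtype=float)
    if len(x) < nperseg:
        raise ValueError("signal is shorter than one sub-segment")
    step = nperseg // 2
    window = np.hanning(nperseg)
    scale = fs * np.sum(window ** 2)
    spectra = []
    for start in range(0, len(x) - nperseg + 1, step):
        seg = x[start:start + nperseg]
        seg = (seg - seg.mean()) * window  # remove the mean, then taper
        spectra.append(np.abs(np.fft.rfft(seg)) ** 2 / scale)
    psd = np.mean(spectra, axis=0)
    psd[1:-1] *= 2.0  # one-sided spectrum: double all bins except DC and Nyquist
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, psd


def band_powers(x, fs, nperseg=1000):
    """Integrate the PSD over each band (lower edge inclusive, upper exclusive)."""
    freqs, psd = welch_psd(x, fs, nperseg)
    df = freqs[1] - freqs[0]
    return {
        name: float(psd[(freqs >= lo) & (freqs < hi)].sum() * df)
        for name, (lo, hi) in BANDS.items()
    }


# Synthetic check: a pure 10 Hz sine should concentrate its power in alpha.
fs = 500.0  # assumed sampling rate, not stated in this section
t = np.arange(int(5 * fs)) / fs
signal = np.sin(2 * np.pi * 10.0 * t)
powers = band_powers(signal, fs)
dominant_band = max(powers, key=powers.get)
```

In practice a library routine such as `scipy.signal.welch` would normally replace the hand-written estimator; the NumPy-only version is used here only to keep the sketch free of extra dependencies.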

4. Implemented Approaches

4.1. Machine Learning Models

4.1.1. K-Nearest Neighbors (KNN)

4.1.2. Support Vector Machine (SVM)

4.2. Methodology Justification

4.2.1. Autoencoder Selection

4.2.2. Bidirectional LSTM Selection

4.2.3. Combined Approach Benefits

4.3. Deep Learning Architectures

Class Imbalance Mitigation

4.4. Explainable AI (XAI)
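
As background for the SHAP analysis, the sketch below computes exact Shapley values for a tiny toy model by enumerating every feature subset. It only illustrates the attribution idea that SHAP approximates for larger models; it does not use the SHAP library, and the toy model, the feature values, and the all-zero baseline are assumptions chosen so the result can be checked by hand. "Missing" features are simply replaced by baseline values, which is a simplification of how SHAP handles background data.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x relative to a baseline input.

    Features outside the coalition are set to their baseline value.
    The cost grows as 2**n, so this is only feasible for a few features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):  # k = size of the coalition drawn from the other features
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    # Hypothetical model: one additive term plus one pairwise interaction.
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
# Feature 0 receives its additive effect (2); the interaction term (6) is
# split equally between features 1 and 2 (3 each).
```

The attributions sum to `toy_model(x) - toy_model(baseline)`, which is the additivity property that makes feature-level explanations of a prediction possible.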

5. Results and Discussion

5.1. Clinical Decision Support Applications

5.2. Duration Bias Impact Assessment

5.3. Class Imbalance Impact Assessment

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Component | LSTM (Initial Model) | Bi-LSTM (Improved Model) |
|---|---|---|
| First Layer | LSTM (64 units, tanh activation) | Bidirectional LSTM (128 units, tanh activation) |
| Sequence Handling | return_sequences = True | return_sequences = True |
| Regularization | Dropout (0.3) + Batch Normalization | Dropout (0.3) + Batch Normalization |
| Second Layer | LSTM (32 units, tanh, return_sequences = False) | LSTM (64 units, tanh, return_sequences = False) |
| Dense Layers | 1 × Dense (32 units, ReLU) | 2 × Dense (64 and 32 units, ReLU) |
| Output Layer | Softmax (3 classes: AD, FTD, CN) | Softmax (3 classes: AD, FTD, CN) |
| Optimizer | Adam | Adam |
| Loss Function | Categorical cross-entropy | Categorical cross-entropy (with class weights) |
| Temporal Processing | Unidirectional | Bidirectional (forward + backward) |
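
The improved model's loss is listed above as categorical cross-entropy with class weights. How those weights were derived is not stated here, so the following is only a sketch of one common choice, the "balanced" heuristic, where each class weight is inversely proportional to its frequency. The function name and the segment counts are hypothetical and do not come from the dataset.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_total / (n_classes * n_class).

    Rare classes receive weights above 1 and frequent classes below 1,
    so every class contributes equally to the weighted loss overall.
    """
    counts = Counter(labels)
    n_total = len(labels)
    n_classes = len(counts)
    return {cls: n_total / (n_classes * n) for cls, n in counts.items()}


# Hypothetical segment counts per class (illustrative only).
labels = ["AD"] * 500 + ["FTD"] * 300 + ["CN"] * 400
weights = balanced_class_weights(labels)
# AD -> 0.8, FTD -> about 1.333, CN -> 1.0
```

With Keras, a dictionary of this kind, keyed by integer class index rather than by name, can be passed to `model.fit` through its `class_weight` argument.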

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| KNN | 38% | 43% | 49% | 46% |
| SVM | 40% | 45% | 47% | 49% |
| LSTM | 84% | 83% | 84% | 71% |
| Bidirectional LSTM | 98% | 99% | 99% | 99% |
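
For readers who want to relate the four metrics in the table above to one another, here is a minimal sketch that derives accuracy and per-class precision, recall, and F1 from a three-class confusion matrix. The matrix is invented for illustration and is unrelated to the reported results; how the table's figures were averaged across classes is not stated, so the macro averages below are an assumption.

```python
import numpy as np


def classification_metrics(cm):
    """Metrics from a confusion matrix with rows = true class, columns = predicted class."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                  # correctly classified samples per class
    precision = tp / cm.sum(axis=0)   # column sums = samples predicted as each class
    recall = tp / cm.sum(axis=1)      # row sums = true samples of each class
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1


# Hypothetical confusion matrix, class order: AD, FTD, CN.
cm = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
accuracy, precision, recall, f1 = classification_metrics(cm)
macro_precision = precision.mean()
macro_recall = recall.mean()
macro_f1 = f1.mean()
```

For this matrix the accuracy is 23/30, the per-class precision is 0.8, 0.75, 0.75 and the per-class recall is 0.8, 0.6, 0.9. The sketch assumes every class is both present and predicted at least once; otherwise the divisions need guarding.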

| Sliding Window | 3 s | 5 s | 7 s | 10 s | 12 s |
|---|---|---|---|---|---|
| Accuracy | 0.987703 | 0.981313 | 0.964953 | 0.934151 | 0.879695 |
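
The window lengths compared in the table above can be produced with a simple sliding-window routine. The sketch below segments a multichannel recording into fixed-length windows with 50% overlap, the overlap named in the highlights. The function name, the 500 Hz sampling rate, the 19-channel layout, and the 60 s random recording are assumptions for illustration, not details taken from this section.

```python
import numpy as np


def segment(signal, fs, window_s, overlap=0.5):
    """Split an array of shape (n_channels, n_samples) into overlapping windows.

    Returns an array of shape (n_windows, n_channels, window_samples).
    Trailing samples that do not fill a complete window are dropped.
    """
    signal = np.asarray(signal)
    win = int(round(window_s * fs))
    step = int(round(win * (1.0 - overlap)))
    if win <= 0 or step <= 0:
        raise ValueError("window length and step must be positive")
    starts = range(0, signal.shape[-1] - win + 1, step)
    if len(starts) == 0:
        return np.empty((0, signal.shape[0], win))
    return np.stack([signal[:, s:s + win] for s in starts])


fs = 500           # assumed sampling rate in Hz
n_channels = 19    # assumed channel count
rng = np.random.default_rng(0)
recording = rng.standard_normal((n_channels, 60 * fs))  # 60 s of synthetic data

# Number of windows obtained for each window length in the table.
window_counts = {w: segment(recording, fs, w).shape[0] for w in (3, 5, 7, 10, 12)}
five_second_windows = segment(recording, fs, 5)  # shape (23, 19, 2500)
```

Shorter windows yield more training segments from the same recording (39 at 3 s versus 9 at 12 s in this example), which is one factor to keep in mind when comparing accuracies across window lengths.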

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mouazen, B.; Bendaouia, A.; Bellakhdar, O.; Laghdaf, K.; Ennair, A.; Abdelwahed, E.H.; De Marco, G. Transparent EEG Analysis: Leveraging Autoencoders, Bi-LSTMs, and SHAP for Improved Neurodegenerative Diseases Detection. Sensors 2025, 25, 5690. https://doi.org/10.3390/s25185690
