IFE-CMT: Instance-Aware Fine-Grained Feature Enhancement Cross Modal Transformer for 3D Object Detection

Abstract

1. Introduction

2. Related Work

2.1. Visual 3D Object Detection

2.2. LiDAR 3D Object Detection

2.3. Vision-Point Cloud Fusion 3D Object Detection

3. Method

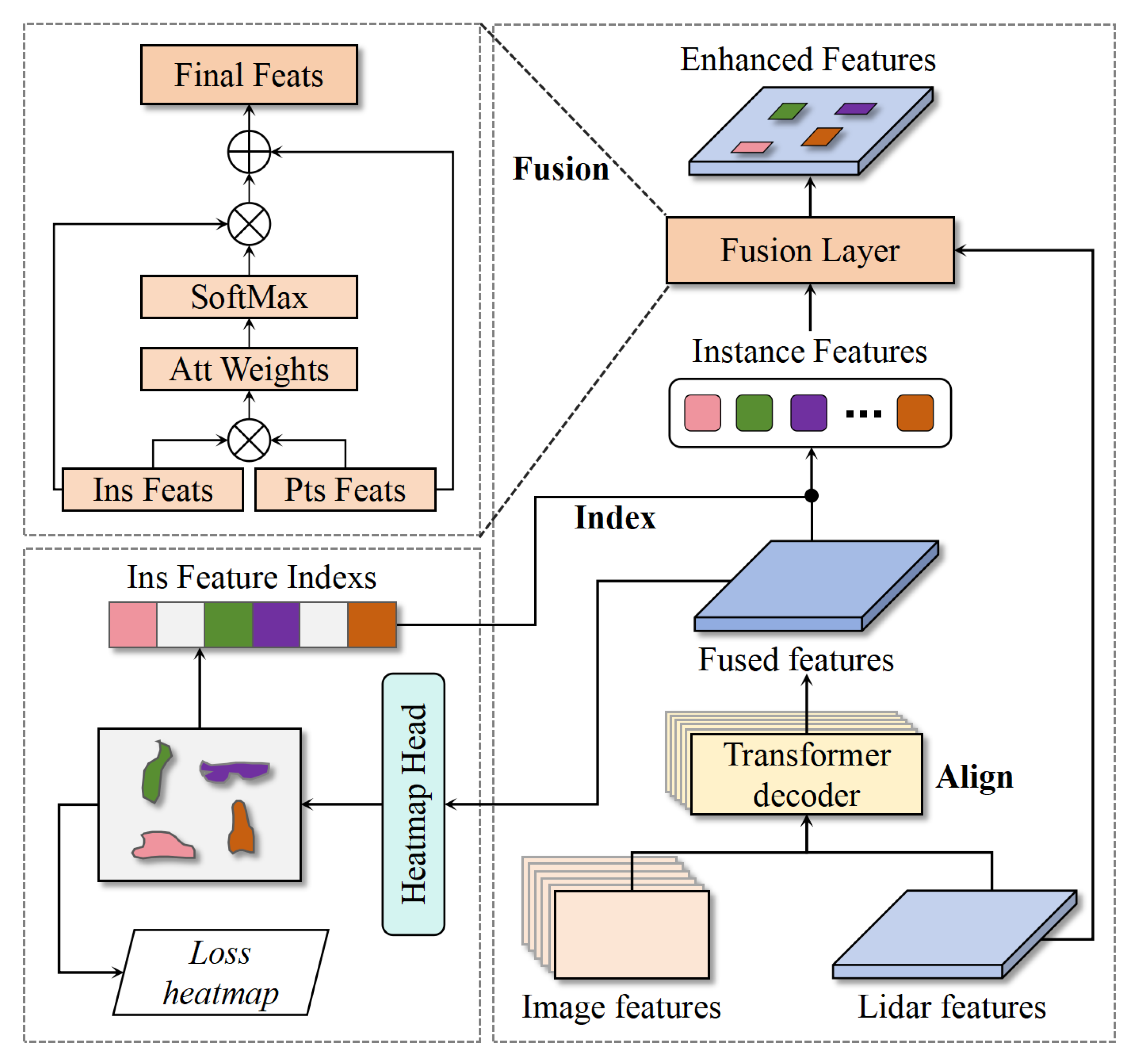

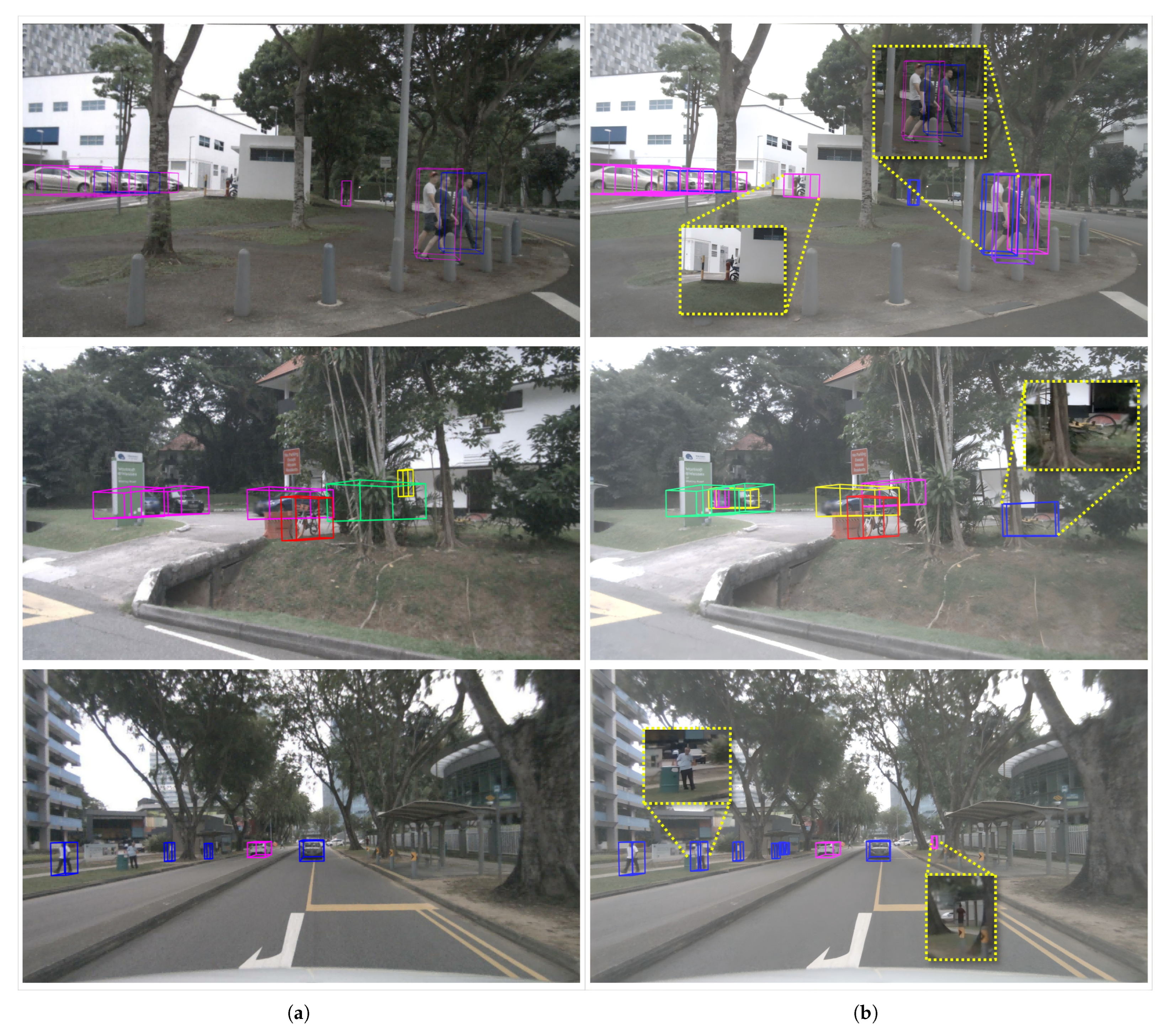

3.1. Instance Feature Enhancement Module (IE-Module)

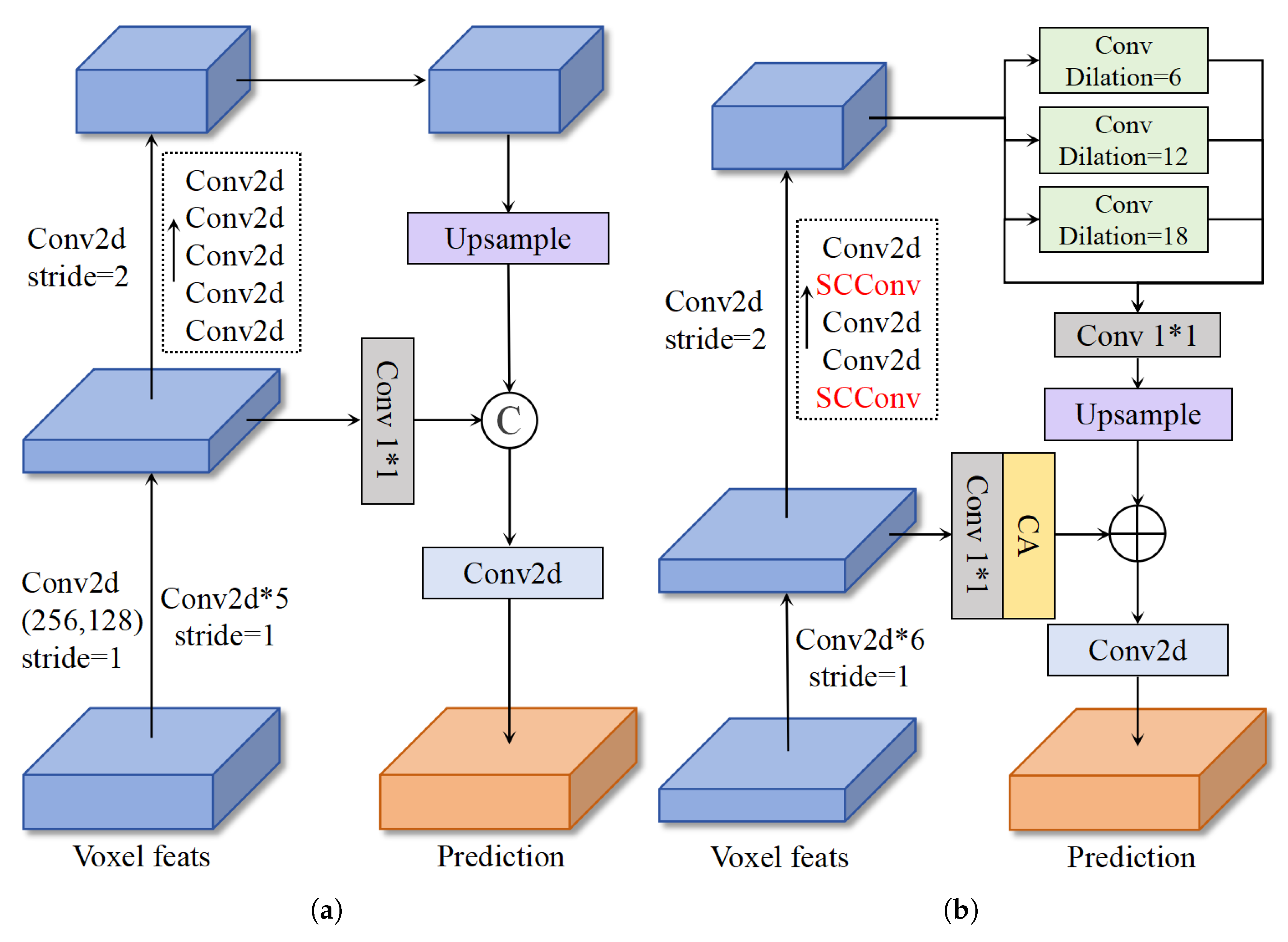

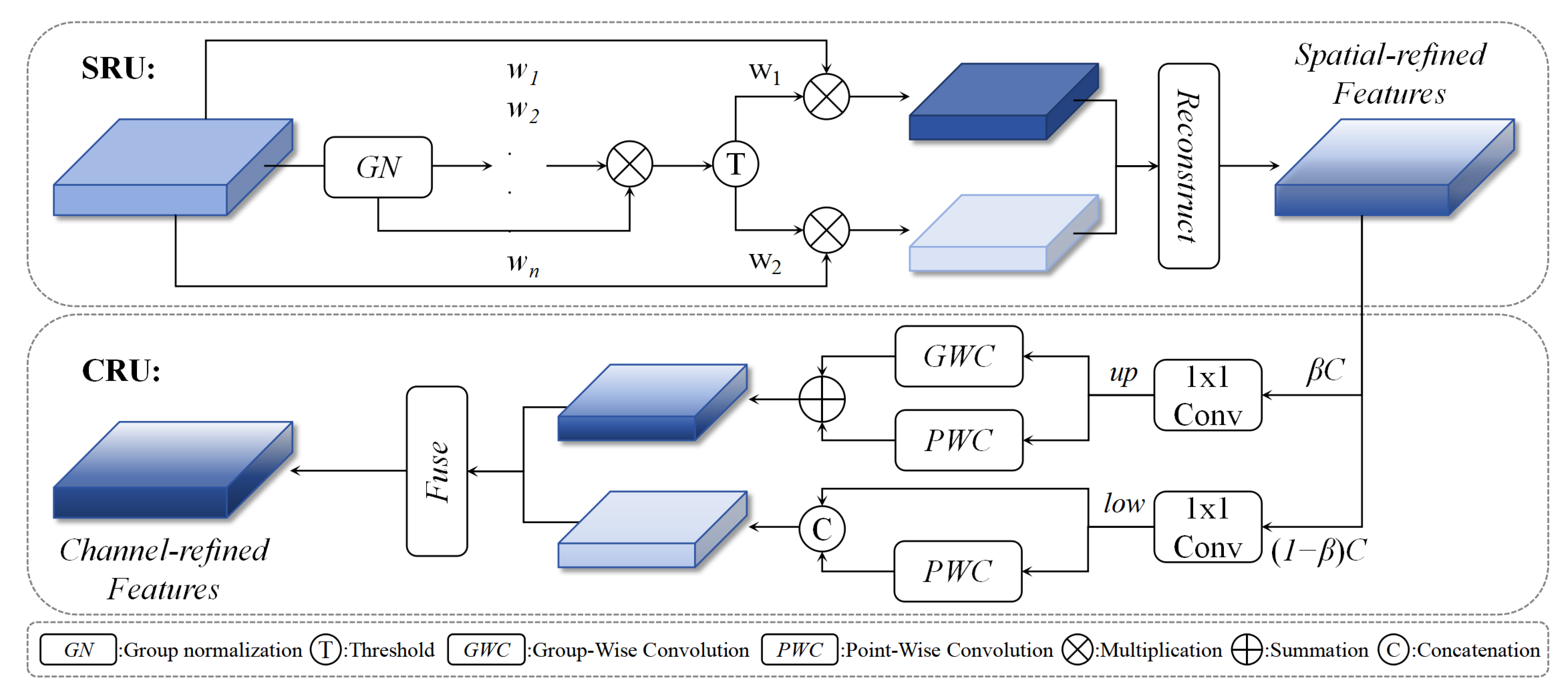

3.2. Improved Point Cloud Branch

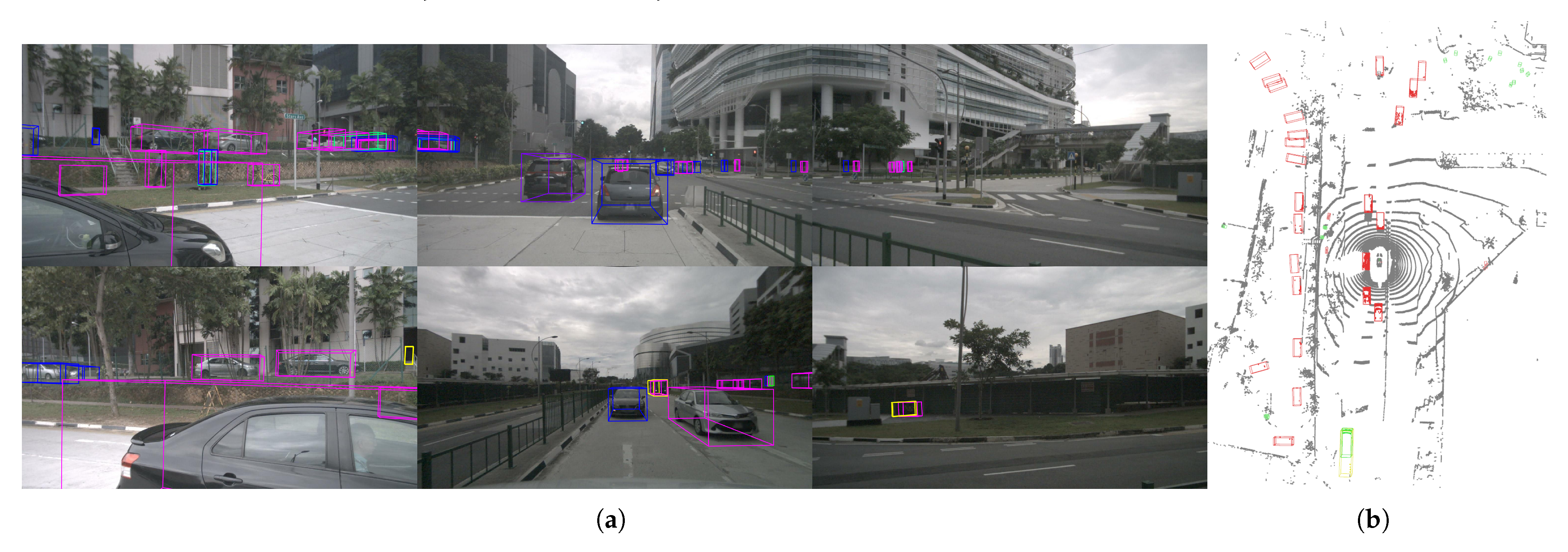

4. Experiments

4.1. Datasets and Metrics

4.2. Implementation Details

4.3. Main Results

4.4. Ablation Experiment

4.4.1. IE-Module

4.4.2. Improved Point Cloud Branch

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. arXiv 2020, arXiv:1912.12033.
2. Wei, Z.; Zhang, F.; Chang, S.; Liu, Y.; Wu, H.; Feng, Z. MmWave Radar and Vision Fusion for Object Detection in Autonomous Driving: A Review. Sensors 2022, 22, 2542.
3. Pravallika, A.; Hashmi, M.F.; Gupta, A. Deep Learning Frontiers in 3D Object Detection: A Comprehensive Review for Autonomous Driving. IEEE Access 2024, 12, 173936–173980.
4. Zhang, X.; Wang, H.; Dong, H. A Survey of Deep Learning-Driven 3D Object Detection: Sensor Modalities, Technical Architectures, and Applications. Sensors 2025, 25, 3668.
5. Philion, J.; Fidler, S. Lift, Splat, Shoot: Encoding Images from Arbitrary Camera Rigs by Implicitly Unprojecting to 3D. In Proceedings of the Computer Vision – ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 194–210.
6. Li, Y.; Ge, Z.; Yu, G.; Yang, J.; Wang, Z.; Shi, Y.; Sun, J.; Li, Z. BEVDepth: Acquisition of Reliable Depth for Multi-View 3D Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; AAAI Press: Washington, DC, USA, 2023.
7. Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. DETR3D: 3D Object Detection from Multi-view Images via 3D-to-2D Queries. In Proceedings of the 5th Conference on Robot Learning, London, UK, 8–11 November 2021; Faust, A., Hsu, D., Neumann, G., Eds.; PMLR (Proceedings of Machine Learning Research): New York, NY, USA, 2022; Volume 164, pp. 180–191.
8. Liu, Y.; Wang, T.; Zhang, X.; Sun, J. PETR: Position Embedding Transformation for Multi-view 3D Object Detection. In Proceedings of the Computer Vision – ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 531–548.
9. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.
10. Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. VoxelNeXt: Fully Sparse VoxelNet for 3D Object Detection and Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 21674–21683.
11. Pan, X.; Xia, Z.; Song, S.; Li, L.E.; Huang, G. 3D Object Detection with Pointformer. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7459–7468.
12. Misra, I.; Girdhar, R.; Joulin, A. An End-to-End Transformer Model for 3D Object Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 2886–2897.
13. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection from RGB-D Data. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927.
14. Yin, T.; Zhou, X.; Krähenbühl, P. Multimodal Virtual Point 3D Detection. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 16494–16507.
15. Yan, J.; Liu, Y.; Sun, J.; Jia, F.; Li, S.; Wang, T.; Zhang, X. Cross Modal Transformer: Towards Fast and Robust 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 18268–18278.
16. Bai, X.; Hu, Z.; Zhu, X.; Huang, Q.; Chen, Y.; Fu, H.; Tai, C.L. TransFusion: Robust LiDAR-Camera Fusion for 3D Object Detection with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 21–24 June 2022; pp. 1090–1099.
17. Vinodkumar, P.K.; Karabulut, D.; Avots, E.; Ozcinar, C.; Anbarjafari, G. A Survey on Deep Learning Based Segmentation, Detection and Classification for 3D Point Clouds. Entropy 2023, 25, 635.
18. Wang, X.; Li, K.; Chehri, A. Multi-Sensor Fusion Technology for 3D Object Detection in Autonomous Driving: A Review. IEEE Trans. Intell. Transp. Syst. 2024, 25, 1148–1165.
19. Tang, Y.; He, H.; Wang, Y.; Mao, Z.; Wang, H. Multi-modality 3D object detection in autonomous driving: A review. Neurocomputing 2023, 553, 126587.
20. Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.
21. Liu, Z.; Tang, H.; Amini, A.; Yang, X.; Mao, H.; Rus, D.L.; Han, S. BEVFusion: Multi-Task Multi-Sensor Fusion with Unified Bird’s-Eye View Representation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2774–2781.
22. Liang, T.; Xie, H.; Yu, K.; Xia, Z.; Lin, Z.; Wang, Y.; Tang, T.; Wang, B.; Tang, Z. BEVFusion: A Simple and Robust LiDAR-Camera Fusion Framework. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 10421–10434.
23. Hu, H.; Wang, F.; Su, J.; Wang, Y.; Hu, L.; Fang, W.; Xu, J.; Zhang, Z. EA-LSS: Edge-aware Lift-splat-shot Framework for 3D BEV Object Detection. arXiv 2023, arXiv:2303.17895.
24. Xie, Y.; Xu, C.; Rakotosaona, M.J.; Rim, P.; Tombari, F.; Keutzer, K.; Tomizuka, M.; Zhan, W. SparseFusion: Fusing Multi-Modal Sparse Representations for Multi-Sensor 3D Object Detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 17545–17556.
25. Alaba, S.Y.; Ball, J.E. A Survey on Deep-Learning-Based LiDAR 3D Object Detection for Autonomous Driving. Sensors 2022, 22, 9577.
26. Wu, Y.; Wang, Y.; Zhang, S.; Ogai, H. Deep 3D Object Detection Networks Using LiDAR Data: A Review. IEEE Sens. J. 2021, 21, 1152–1171.
27. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.
28. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.
29. Li, J.; Wen, Y.; He, L. SCConv: Spatial and Channel Reconstruction Convolution for Feature Redundancy. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.
30. Qi, S.; Ning, X.; Yang, G.; Zhang, L.; Long, P.; Cai, W.; Li, W. Review of multi-view 3D object recognition methods based on deep learning. Displays 2021, 69, 102053.
31. Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. BEVDet: High-Performance Multi-Camera 3D Object Detection in Bird-Eye-View. arXiv 2021, arXiv:2112.11790.
32. Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Yu, Q.; Dai, J. BEVFormer: Learning Bird’s-Eye-View Representation from LiDAR-Camera via Spatiotemporal Transformers. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2020–2036.
33. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision – ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 213–229.
34. Liu, Y.; Yan, J.; Jia, F.; Li, S.; Gao, A.; Wang, T.; Zhang, X. PETRv2: A Unified Framework for 3D Perception from Multi-Camera Images. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3239–3249.
35. Wang, Z.; Huang, Z.; Fu, J.; Wang, N.; Liu, S. Object as Query: Lifting any 2D Object Detector to 3D Detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3768–3777.
36. Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.
37. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12689–12697.
38. Li, J.; Luo, C.; Yang, X. PillarNeXt: Rethinking Network Designs for 3D Object Detection in LiDAR Point Clouds. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 17567–17576.
39. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.
40. Zhu, M.; Gong, Y.; Tian, C.; Zhu, Z. A Systematic Survey of Transformer-Based 3D Object Detection for Autonomous Driving: Methods, Challenges and Trends. Drones 2024, 8, 412.
41. Zhang, G.; Chen, J.; Gao, G.; Li, J.; Liu, S.; Hu, X. SAFDNet: A Simple and Effective Network for Fully Sparse 3D Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 14477–14486.
42. Wang, H.; Shi, C.; Shi, S.; Lei, M.; Wang, S.; He, D.; Schiele, B.; Wang, L. DSVT: Dynamic Sparse Voxel Transformer with Rotated Sets. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13520–13529.
43. Tang, Q.; Liang, J.; Zhu, F. A comparative review on multi-modal sensors fusion based on deep learning. Signal Process. 2023, 213, 109165.
44. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
45. Wang, J.; Li, F.; Bi, H. Gaussian Focal Loss: Learning Distribution Polarized Angle Prediction for Rotated Object Detection in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4707013.
46. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.
47. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
48. Chen, X.; Zhang, T.; Wang, Y.; Wang, Y.; Zhao, H. FUTR3D: A Unified Sensor Fusion Framework for 3D Detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 172–181.
49. Yin, T.; Zhou, X.; Krähenbühl, P. Center-based 3D Object Detection and Tracking. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11779–11788.
50. Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. arXiv 2019, arXiv:1911.10150.
51. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.
52. Lee, Y.; Hwang, J.W.; Lee, S.; Bae, Y.; Park, J. An Energy and GPU-Computation Efficient Backbone Network for Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–17 June 2019.

| Category | Method | Advantage | Disadvantage | Papers | Limitation |
|---|---|---|---|---|---|
| Visual 3D Object Detection | BEV Methods | Achieve more precise object positioning. | The computation is complex and information loss occurs. | [5,6] | Visual methods lack accurate spatial information, resulting in low accuracy. |
| | Non-BEV Methods | No explicit view transformation, low computational complexity. | Low localization accuracy and weak detection capability. | [7,8] | |
| LiDAR 3D Object Detection | Voxelization Methods | Structured data facilitate processing. | Low resolution leads to loss of fine-grained information. | [9,10] | LiDAR point clouds are sparse and semantically weak, hindering scene understanding. |
| | Transformer Methods | Long-sequence modeling yields high accuracy and rich contextual information. | High computational load and low detection speed. | [11,12] | |
| Vision-Point Cloud Fusion 3D Object Detection | Data-level Fusion | Full data utilization and complete features. | Relies on visual detection results. | [13,14] | Vision-point-cloud fusion algorithms achieve data complementarity, but existing methods exhibit coarse fusion granularity. |
| | Feature-level Fusion | Stronger semantics and higher detection accuracy. | Algorithms are complex and computationally intensive. | [15,16] | |
| | Decision-level Fusion | The algorithm is concise and clear. | Low fusion level and poor accuracy. | - | |

| Models | Modality | Resolution | Voxel Size | Backbone | mAP ↑ | NDS ↑ |
|---|---|---|---|---|---|---|
| Validation dataset | | | | | | |
| DETR3D [7] | C | 1600 × 900 | - | Res101 | 0.303 | 0.374 |
| PETR [8] | C | 1600 × 900 | - | Res101 | 0.370 | 0.442 |
| FUTR3D_L [48] | L | - | (0.075, 0.075) | VoxelNet | 0.593 | 0.655 |
| CenterPoint [49] | L | - | (0.075, 0.075) | VoxelNet | 0.596 | 0.668 |
| PointPainting [50] | C & L | - | - | - | 0.653 | 0.685 |
| FUTR3D [48] | C & L | 1600 × 900 | (0.075, 0.075) | Res101 & VoxelNet | 0.645 | 0.683 |
| TransFusion [16] | C & L | 800 × 448 | (0.1, 0.1) | Res50 & VoxelNet | 0.648 | 0.691 |
| CMT-R50 [15] | C & L | 800 × 320 | (0.1, 0.1) | Res50 & VoxelNet | 0.655 | 0.694 |
| Ours | C & L | 800 × 320 | (0.1, 0.1) | Res50 & VoxelNet | 0.676 | 0.702 |
| Test dataset | | | | | | |
| CMT-R50 [15] | C & L | 800 × 320 | (0.1, 0.1) | Res50 & VoxelNet | 0.662 | 0.696 |
| Ours | C & L | 800 × 320 | (0.1, 0.1) | Res50 & VoxelNet | 0.681 | 0.703 |

| Model | Car | Truck | Construction Vehicle | Bus | Trailer | Pedestrian | Motorcycle | Bicycle | Traffic Cone | Barrier |
|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.864 | 0.616 | 0.294 | 0.729 | 0.416 | 0.843 | 0.727 | 0.624 | 0.730 | 0.706 |
| Ours | 0.875 | 0.637 | 0.316 | 0.748 | 0.424 | 0.854 | 0.764 | 0.690 | 0.742 | 0.707 |
| Δ AP (absolute) | +1.1% | +2.1% | +2.2% | +1.9% | +0.8% | +1.1% | +3.7% | +6.6% | +1.2% | +0.1% |
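
The per-class figures above can be cross-checked with a few lines of arithmetic. The sketch below is only a sanity check on the tabulated numbers, not the authors' evaluation code: it assumes that mAP is the unweighted mean of the ten class APs and that the last row reports absolute differences in percentage points. The names `CLASSES`, `BASELINE`, `OURS`, `mean_ap`, and `ap_gains` are hypothetical and introduced here for illustration.

```python
# Per-class AP values copied from the table above.
# All names in this block are illustrative, not taken from the paper's code.
CLASSES = [
    "Car", "Truck", "Construction Vehicle", "Bus", "Trailer",
    "Pedestrian", "Motorcycle", "Bicycle", "Traffic Cone", "Barrier",
]
BASELINE = [0.864, 0.616, 0.294, 0.729, 0.416, 0.843, 0.727, 0.624, 0.730, 0.706]
OURS = [0.875, 0.637, 0.316, 0.748, 0.424, 0.854, 0.764, 0.690, 0.742, 0.707]


def mean_ap(ap_values):
    """Unweighted mean of the per-class AP values (assumed definition of mAP)."""
    return sum(ap_values) / len(ap_values)


def ap_gains(baseline, ours):
    """Absolute per-class gain in percentage points, rounded to one decimal."""
    return [round((o - b) * 100, 1) for b, o in zip(baseline, ours)]


if __name__ == "__main__":
    print(f"baseline mAP: {mean_ap(BASELINE):.3f}")
    print(f"ours mAP:     {mean_ap(OURS):.3f}")
    for name, gain in zip(CLASSES, ap_gains(BASELINE, OURS)):
        print(f"{name:>22}: +{gain:.1f} points")
```

Under these assumptions the class means round to 0.655 and 0.676, which agree with the mAP values reported for CMT-R50 and the proposed model on the validation set, and the computed gains match the last row of the table.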

| Models | Modality | mAP | NDS | Latency (ms) | Memory (MB) | FPS |
|---|---|---|---|---|---|---|
| FUTR3D_L [48] | L | 0.593 | 0.655 | 144.2 | 3515 | 6.9 |
| CenterPoint [49] | L | 0.596 | 0.668 | 62.0 | 2563 | 16.1 |
| PointPainting [50] | C & L | 0.653 | 0.685 | 182.0 | 5290 | 5.5 |
| FUTR3D [48] | C & L | 0.645 | 0.683 | 315.7 | 6477 | 3.2 |
| TransFusion [16] | C & L | 0.648 | 0.691 | 153.9 | 5628 | 6.5 |
| CMT-R50 [15] | C & L | 0.655 | 0.694 | 91.8 | 4298 | 10.9 |
| Ours | C & L | 0.676 | 0.702 | 104.2 | 5196 | 9.6 |
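
The latency and FPS columns above appear to be related by FPS = 1000 / latency (ms). The following sketch checks that relation against the tabulated values and computes the relative latency overhead of the proposed model over CMT-R50. It is an observation about the numbers in the table, not a description of how the authors measured throughput; the names `LATENCY_MS`, `REPORTED_FPS`, `fps_from_latency`, and `relative_overhead` are hypothetical.

```python
# Latency (ms) and FPS values copied from the table above.
# All names in this block are illustrative, not taken from the paper's code.
LATENCY_MS = {
    "FUTR3D_L": 144.2,
    "CenterPoint": 62.0,
    "PointPainting": 182.0,
    "FUTR3D": 315.7,
    "TransFusion": 153.9,
    "CMT-R50": 91.8,
    "Ours": 104.2,
}
REPORTED_FPS = {
    "FUTR3D_L": 6.9,
    "CenterPoint": 16.1,
    "PointPainting": 5.5,
    "FUTR3D": 3.2,
    "TransFusion": 6.5,
    "CMT-R50": 10.9,
    "Ours": 9.6,
}


def fps_from_latency(latency_ms):
    """Frames per second implied by a per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


def relative_overhead(base_ms, new_ms):
    """Fractional latency increase of new_ms over base_ms."""
    return (new_ms - base_ms) / base_ms


if __name__ == "__main__":
    for model, latency in LATENCY_MS.items():
        implied = fps_from_latency(latency)
        print(f"{model:>14}: {implied:5.1f} FPS implied, {REPORTED_FPS[model]:5.1f} reported")
    overhead = relative_overhead(LATENCY_MS["CMT-R50"], LATENCY_MS["Ours"])
    print(f"latency overhead of Ours vs. CMT-R50: {overhead:.1%}")
```

Read this way, the 2.1-point mAP gain over CMT-R50 comes with roughly 13.5% more latency per frame (104.2 ms against 91.8 ms).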

| IE-Module | Pts Branch | mAP ↑ | NDS ↑ | FPS ↑ |
|---|---|---|---|---|
| - | - | 0.655 | 0.694 | 10.9 |
| ✔ | - | 0.663 | 0.698 | 10.7 |
| - | ✔ | 0.666 | 0.695 | 10.2 |
| ✔ | ✔ | 0.676 | 0.702 | 9.6 |

| Method | Channel | mAP ↑ | FPS ↑ |
|---|---|---|---|
| CMT-R50 | - | 0.655 | 10.9 |
| Ours | 128 | 0.663 | 10.7 |
| Ours | 256 | 0.663 | 10.1 |

| Neck | SCConv | mAP ↑ | NDS ↑ |
|---|---|---|---|
| - | - | 0.663 | 0.698 |
| ✔ | - | 0.669 | 0.698 |
| ✔ | (1) | 0.671 | 0.699 |
| ✔ | (1, 3) | 0.676 | 0.702 |
| ✔ | (1, 3, 5) | 0.673 | 0.701 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, X.; Zhang, H.; Liu, H.; Wang, X.; Wang, L. IFE-CMT: Instance-Aware Fine-Grained Feature Enhancement Cross Modal Transformer for 3D Object Detection. Sensors 2025, 25, 5685. https://doi.org/10.3390/s25185685
