1. Introduction

In modern industries, a growing number of sensors are deployed in different systems to monitor the performance of each component during operation for control or safety purposes. Sensors, however, may themselves generate anomalous signals due to harsh environmental conditions, poor installation, inadequate maintenance, and so on [1], which affects the stability, reliability, and accuracy of the systems. In fact, a sensor anomaly may even cause system failure or other safety issues. Therefore, sensor fault detection is necessary to monitor the state of the sensors themselves.

Sensor anomaly detection methods can be classified into two categories: model-based methods and data-driven methods. Model-based methods, which have been applied in power systems [2], health monitoring [3], and so on, construct computational models of the system to estimate the sensor values. By comparing the estimated values with the real sensor values, sensor faults can be detected. However, these methods require accurate simulation or analytic models of the system, which are usually complex and time-consuming to build. Moreover, the models are usually application-dependent, which means that when the monitored system changes, or even when the working conditions change, a new model must be constructed. On the other hand, data-driven methods, which do not need exact knowledge of the monitored system, rely on historical data to estimate the performance of the sensors. Recently, different data-driven sensor anomaly detection methods have been developed, such as random forest (RF) [4], support vector machines (SVM) [5], and neural networks (NN) [6]. One of the main research directions is to detect faults with classification methods built on different machine learning approaches. For example, Gao et al. [7] employed SVM to classify the residuals of each sensor to detect faulty sensors in a binary mode. In those classification studies, labeled data, especially data including faults, are needed to train the classifiers to achieve accurate predictions. However, in the real world it is usually not possible to manually label fault data in large amounts of raw data, or the fault data are difficult to obtain, which makes these classification methods difficult to apply.

To overcome the lack of labeled data, multiple data-driven approaches that rely only on correct sensor data have been applied to sensor anomaly detection. One such approach is the autoencoder (AE). As an unsupervised learning method, an AE can learn and extract hidden representations from raw data to provide an estimation of sensor measurements. Thus, an AE is suitable for fault detection problems in which only correct data are available. Under the AE concept, different kinds of neural networks are employed to estimate the sensor measurements. In [8], an auto-associative neural network (AANN) is used as an autoencoder method to detect and isolate multiple sensor faults in a non-linear system. Instead of estimating sensor measurements at a single time instant, some studies use different networks to estimate the sensor measurements over a period. A long short-term memory (LSTM) network, a type of recurrent neural network (RNN), is used to build the AE to estimate the sensor measurements over a period and to isolate failures under insufficient data conditions in different input feature spaces [9]. Jana et al. employed convolutional neural networks (CNN) to capture the features of the sensor signal plot for a period, which is used in the AE framework [10].

Unlike the AE, which can be defined as a reconstruction process, another group of approaches uses NNs to predict sensor measurements from historical data [11,12]. Darvishi et al. employed two multi-layer perceptron (MLP) networks to work as an estimator and a predictor, respectively [13]. The differences between the results from the estimator and the predictor are used to detect sensor faults and to classify the fault categories. Due to their ability to learn sequential information, RNNs, especially LSTM and its variants, are widely used as predictors of sensor measurements, and the predicted values are compared with the measurements from the sensors to detect faults. In [14], an RNN is used to predict sensor measurements according to the previous timestep’s value, which is compared with the predictions from a feedforward neural network (FFNN) to detect failures.

Besides the lack of data containing anomalous signals, another aspect of the lack of labeled data concerns the working conditions. In some cases, the machine does not work under a single fixed condition, and different workloads may cause different behaviors in the sensor measurements. This is the case for the problem tackled in this paper, where the sensors mounted on a truck working in a mine are studied. During operation, a truck may work in different states, such as stall, idling, accelerating, braking, and so on, and it may work in different environments, such as uphill or downhill. Different working states and different environments may lead to different sensor measurement behaviors. Additionally, the driver’s behavior is influenced by the prevailing working conditions, thereby affecting the operation of the truck. However, the working conditions and the drivers’ decisions are not labeled or recorded. Therefore, the detection method should be able to work in multiple environments, a setting that is not well studied. In this case, LSTM networks, which can learn features from sequential data, are employed to predict sensor measurements according to the historical behavior of the sensors. Additionally, a continuous fault threshold is set up to filter out sudden changes in the working conditions. To increase the fault detection sensitivity and reduce the false detection rate, the length of the time window is also studied in this paper.

Another feature that needs to be considered in multi-sensor anomaly detection is the input of each LSTM network used to predict the behavior of each sensor. In previous studies, the predictors or estimators usually take as input either the data of all the sensors or only the data of the predicted sensor itself. Considering all sensor data may introduce redundant information, leading to false detections in a certain sensor. On the other hand, using only the sensor's own historical data loses the information from other sensors that could be used to judge the accuracy of the prediction. Take the case study in this paper as an example: the sensors are used to assess the condition of a truck’s power system, so physical relationships exist between the sensor measurements. For instance, the vehicle’s velocity depends on the operational speed of the engine and the selected transmission gear. Exploiting these physical relationships in sensor measurement prediction makes it possible to eliminate the influence of redundant data from less important sensors. To capture the knowledge of the physical relationships, an input selection method is proposed and added to the sensor fault detection process to find the most related sensors.

In summary, an LSTM-based sensor anomaly detection and isolation method is proposed to detect anomalous sensors under varying working conditions and with unknown inputs from outside operators (i.e., workers or drivers). The LSTM network is used to extract knowledge from historical data and to predict the measurements at the next timestep from the data of the most recent timesteps. An input selection method has been developed to select the input sensors’ data for the measurement prediction. This method aims to capture the physical relationships between sensors and to reduce the influence of redundant information from less important sensor measurements.

The rest of the paper is organized as follows: Section 2 introduces the sensor anomaly detection problem solved in this study. Section 3 presents the details of the fault detection methods. The experiment data are presented and introduced in Section 4. Section 5 summarizes the comparison results of the proposed method to illustrate the performance of the LSTM-based sensor anomaly detection method with input selection features. Section 6 discusses the results and the influence of the window size selection on the detection accuracy. Section 7 concludes the paper.

2. Problem Formulation

In this study, six sensors mounted on a truck working in a mine are considered, as shown in Table 1. All of them are existing sensors on the truck that monitor the power system, and all are digital sensors. The data were provided through the Body builder manufacturer gateway in the truck and routed to the cloud by the IoE-GW (Internet of Everything-GateWay). The sensor signals conform with the SAE J1939-71 [15] definitions.

The states of the truck include stall, idling, accelerating, braking, uniform motion, and so on. However, the exact state at a given time is not clearly labeled. Additionally, the sensor data are not labeled as normal or abnormal either. Therefore, the problem in this paper is defined as a sensor anomaly detection and isolation problem in a multi-task working condition. Additionally, the sensor measurements exhibit a strong correlation with the behavior of the drivers and the external environment. The absence of such data hinders accurate prediction of the sensor measurements.

In this study, physical relationships exist between the sensor measurements. The position of the accelerator pedal exerts a direct influence on the engine speed and the engine fuel rate. On the other hand, the vehicle speed is determined by the combination of the engine speed and the selected transmission gear. Consequently, the interrelationships among the different sensors can also be leveraged to detect anomalous measurements when incongruent behaviors are identified in multiple interconnected sensors.

3. Methods

Since the states of the truck varied during operation and were not labeled, traditional multi-layer neural networks were not suitable, as they usually work for a single case. Instead, an LSTM network considering the sensor data in the previous timesteps was employed to overcome the issue of the unlabeled working state, under the assumption that the truck state does not change suddenly during operation. The overall process of the fault sensor detection and isolation is shown in Figure 1. The raw data were normalized to make sure that the inputs for the LSTM network were on the same scale to increase accuracy. Each sensor had its own LSTM network. To account for the influence of the other sensors on the studied sensor, the selection of input sensors was performed for each sensor in the input selection block. The anomaly period was identified in the anomaly detection block through a comparison of the prediction data with the measurement data. This identification integrated the measurements from multiple sensors, with consideration given to the interrelationships that exist among them. Finally, for the period in which an anomaly was detected, the anomalous sensor(s) were isolated according to the residuals of each sensor. The following sections present the details of each block.

3.1. Normalization Block

The training and testing data were normalized into the 0–1 range using Equation (1),

$$x' = \frac{x - lb}{ub - lb}, \qquad (1)$$

where $x$ is the real sensor measurement and $x'$ is the normalized value. The maximum and minimum values for each sensor in the training set were selected as the upper ($ub$) and lower ($lb$) boundaries in the normalization. Note that because the boundaries were selected from the training set, some of the sensor values in the testing sets may fall outside the selected boundaries, so the normalized values may be smaller than 0 or larger than 1, especially for the faulty sensor data.
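The min-max scaling described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names `fit_bounds` and `normalize` and the toy values are hypothetical, and the guard for constant sensors is an addition not mentioned in the paper. It shows how boundaries fitted on the training set alone can push test values outside the 0–1 range.

```python
import numpy as np

def fit_bounds(train):
    """Return per-sensor lower/upper bounds taken from the training set only."""
    train = np.asarray(train, dtype=float)
    return train.min(axis=0), train.max(axis=0)

def normalize(x, lb, ub):
    """Min-max scale each sensor column with the training-set bounds."""
    # Assumption: a constant sensor (ub == lb) is divided by 1 to avoid 0/0.
    span = np.where(ub > lb, ub - lb, 1.0)
    return (np.asarray(x, dtype=float) - lb) / span

# Toy data: rows are timesteps, columns are two hypothetical sensors.
train = np.array([[0.0, 600.0], [50.0, 1800.0], [100.0, 2400.0]])
test = np.array([[120.0, 500.0]])

lb, ub = fit_bounds(train)
train_n = normalize(train, lb, ub)  # stays inside [0, 1]
test_n = normalize(test, lb, ub)    # may leave [0, 1]
print(test_n)
```

Values outside the 0–1 range are deliberately left unclipped here, since such excursions are themselves informative for faulty data.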

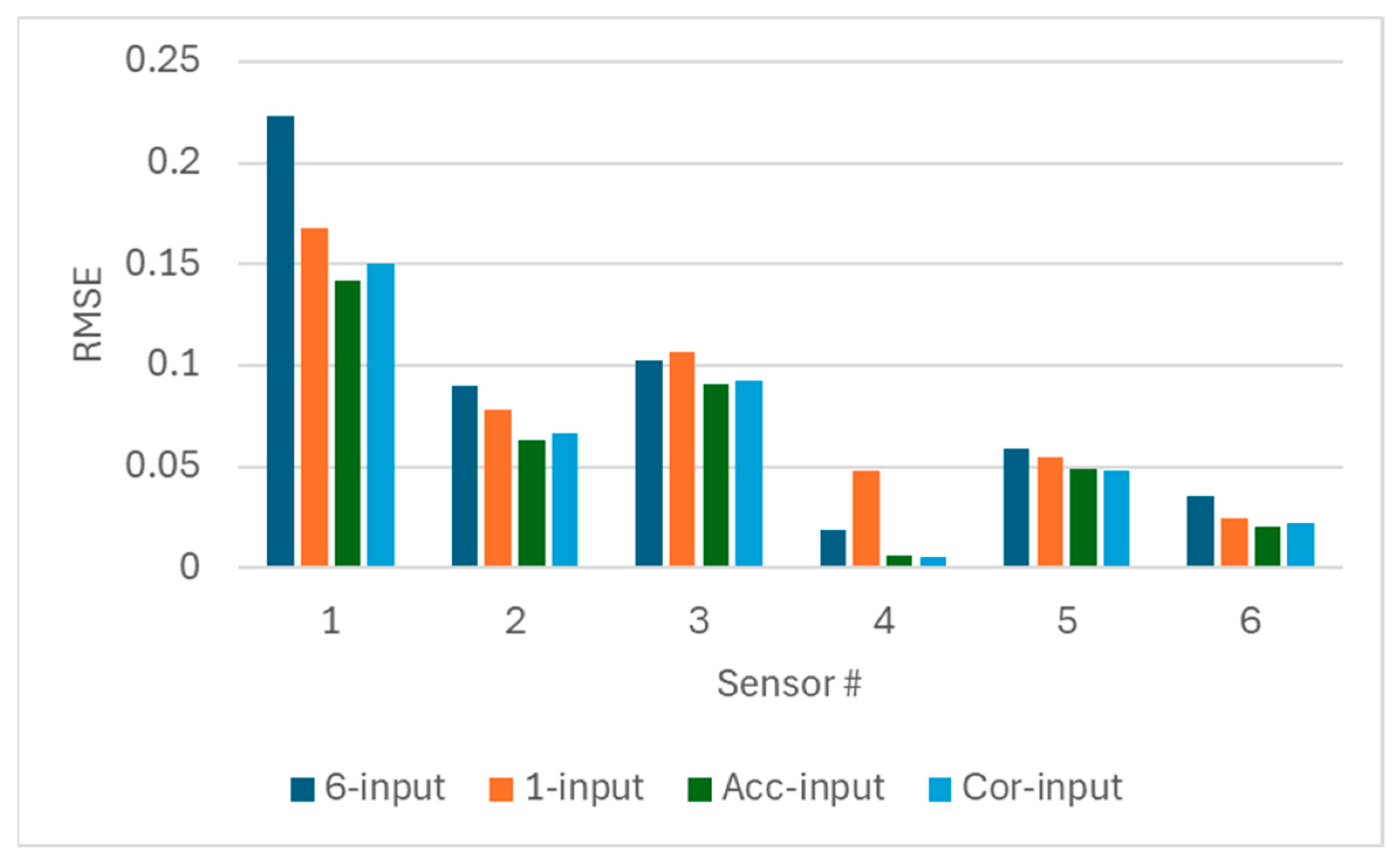

3.2. Input Selection Block

The relationships among different sensors are considered in the anomaly detection process by selecting the input sensors of each LSTM network. Two sensor selection methods are proposed and tested in this paper. The first is a selection method based on prediction errors. For a given sensor, all possible combinations of input sensors are enumerated. In this case, there were six sensors, so the number of input combinations is 6 + 15 + 20 + 15 + 6 + 1 = 63, i.e., every non-empty subset of the six sensors. An LSTM network was then trained on a small amount of data for each input combination, and its prediction error, measured by the mean square error, was estimated on a separate test dataset. The input set with the smallest error was selected for the given sensor. The process was repeated for all the sensors to find the input set for every sensor.
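The error-based selection can be sketched as an exhaustive search over sensor subsets. The sensor names `s1` to `s6` and the scoring callable `mse_for` are hypothetical placeholders: in the paper's procedure the score would come from training a small LSTM on each input set and measuring its mean square error on held-out data, which is replaced here by a toy function so the sketch stays self-contained.

```python
from itertools import combinations

SENSORS = ["s1", "s2", "s3", "s4", "s5", "s6"]  # hypothetical sensor names

def candidate_input_sets(sensors):
    """Enumerate every non-empty subset of the available sensors."""
    return [combo
            for k in range(1, len(sensors) + 1)
            for combo in combinations(sensors, k)]

def select_inputs(sensors, mse_for):
    """Pick the input set with the smallest validation error.

    mse_for is a callable mapping a tuple of sensor names to an MSE value.
    """
    return min(candidate_input_sets(sensors), key=mse_for)

def toy_mse(inputs):
    # Stand-in for "train a small LSTM and evaluate it": pretend s2 and s5
    # are informative and every extra input adds a small penalty.
    return 1.0 - 0.4 * ("s2" in inputs) - 0.3 * ("s5" in inputs) + 0.05 * len(inputs)

candidates = candidate_input_sets(SENSORS)
best = select_inputs(SENSORS, toy_mse)
print(len(candidates), best)
```

Because the search grows exponentially with the number of sensors, this kind of enumeration is only practical for small sensor sets such as the one studied here.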

The second method employs the correlation matrix to identify the most important input sensors for a specific sensor. The correlation matrix of the training dataset is calculated, and if the correlation coefficient of a sensor pair exceeds a threshold, the two sensors are considered to have a strong relationship. The threshold can be selected according to the distribution of the correlation coefficients in the case at hand. As a result, when constructing the prediction network for one sensor in the pair, the other sensor in the pair is used as an input sensor.
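A minimal sketch of the correlation-based selection is given below. Several details are assumptions rather than statements from the paper: the Pearson coefficient is computed with `numpy.corrcoef`, the absolute value is compared against the threshold so that strong negative relationships also count, each sensor keeps its own history as an input, and the threshold of 0.6 and the synthetic signals are purely illustrative.

```python
import numpy as np

def select_by_correlation(data, names, threshold):
    """Map each sensor to itself plus every sensor it is strongly correlated with."""
    corr = np.corrcoef(data, rowvar=False)  # columns are sensors
    selected = {}
    for i, target in enumerate(names):
        partners = [names[j] for j in range(len(names))
                    if j != i and abs(corr[i, j]) > threshold]
        selected[target] = [target] + partners
    return selected

# Synthetic example: b follows a closely, c is unrelated noise.
rng = np.random.default_rng(0)
a = rng.normal(size=500)
b = 2.0 * a + 0.1 * rng.normal(size=500)
c = rng.normal(size=500)
data = np.column_stack([a, b, c])

inputs = select_by_correlation(data, ["a", "b", "c"], threshold=0.6)
print(inputs)
```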

3.3. LSTM Network Block

In the problem tackled in this study, the state of the truck during operation was not clearly labeled. A time-series prediction model that considers the historical data in the previous timesteps is therefore applied to reduce the influence of the different states of the truck. An LSTM network is a kind of recurrent neural network that can be applied to time-series prediction problems [16]. By using an input gate, an output gate, and a forget gate to control the flow of information into and out of the cell, an LSTM network can retain short-term memory over long sequences. A network with two LSTM layers, one fully connected layer, and one output layer has been constructed in this study. The structure of the network is shown in Figure 2.

As illustrated in Figure 2, the data in the time window Tw are fed into LSTM layer 1 to generate a set of time-series data for the extraction of time-series features. LSTM layer 2 is responsible for making a prediction based on the captured features, with the objective of outputting a vector for the predicted timestep. After a fully connected layer and an output layer, the value of the sensor at the next timestep is predicted. To avoid overfitting, two dropout layers are added, one after LSTM layer 1 and one after the fully connected layer. The dropout rate is set to 0.5 for both. A linear function is used as the activation function for all the layers. The mean square error (MSE) is used as the loss function, and the Adam optimizer is used to train the networks. All training and prediction are implemented with the TensorFlow 2.10.0 library in Python 3.10.
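To make the role of the time window Tw concrete, the sketch below builds the supervised samples that such a predictor would consume: each input is the previous Tw timesteps of the selected input sensors, and the target is the next value of the predicted sensor. The function name `make_windows`, its arguments, and the toy array are hypothetical; the resulting shape (samples, timesteps, features) is the layout that Keras LSTM layers expect.

```python
import numpy as np

def make_windows(data, target_col, input_cols, tw):
    """Build (X, y) pairs for one-step-ahead prediction.

    X[k] holds rows k .. k+tw-1 of the selected input columns and
    y[k] is the target sensor's value at row k+tw.
    Requires len(data) > tw.
    """
    data = np.asarray(data, dtype=float)
    X = np.stack([data[t - tw:t, input_cols] for t in range(tw, len(data))])
    y = data[tw:, target_col]
    return X, y

# Toy series: 10 timesteps, 2 sensors.
series = np.arange(20).reshape(10, 2)
X, y = make_windows(series, target_col=0, input_cols=[0, 1], tw=3)
print(X.shape, y.shape)
```

Since the recordings from separate days are not continuous, windows of this kind would need to be built per day so that no window spans a gap between days.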

3.4. Anomaly Detection Block

Instead of only considering the prediction error of a single sensor to detect the anomaly, a joint prediction error is used to identify the anomaly period, considering the relationships between measurements from different sensors. The square prediction error (SPE) [17] is used as an index to detect the period when a fault appears. The SPE is defined as follows:

$$\mathrm{SPE} = \sum_{j=1}^{m} \left(x_j - \hat{x}_j\right)^2,$$

where $x_j$ is the measurement from the j-th sensor; $\hat{x}_j$ is the prediction value from the LSTM network; and $m$ is the number of sensors, i.e., six in this study.
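As a small worked example, the SPE at each timestep is the sum of squared residuals across the sensors. The function name `spe` and the numbers below are illustrative only.

```python
import numpy as np

def spe(measured, predicted):
    """Square prediction error per timestep (rows: timesteps, columns: sensors)."""
    residual = np.asarray(measured, dtype=float) - np.asarray(predicted, dtype=float)
    return np.sum(residual ** 2, axis=1)

# Two timesteps, six sensors (normalized values).
measured = np.array([[0.5, 0.2, 0.1, 0.3, 0.7, 0.4],
                     [0.5, 0.2, 0.9, 0.3, 0.7, 0.4]])
predicted = np.array([[0.5, 0.1, 0.1, 0.3, 0.7, 0.4],
                      [0.4, 0.2, 0.1, 0.3, 0.7, 0.4]])

spe_values = spe(measured, predicted)
print(spe_values)
```

In the second timestep a single sensor deviates strongly from its prediction, which dominates the joint index.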

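As in [18], the confidence limit of the SPE for the training set can be calculated as

$$\delta_\alpha^2 = \theta_1\left[\frac{c_\alpha\sqrt{2\theta_2 h_0^2}}{\theta_1} + 1 + \frac{\theta_2 h_0\,(h_0 - 1)}{\theta_1^2}\right]^{1/h_0}$$

at the $(1-\alpha)$ confidence level. In this study, the confidence level is set to be 95%. $c_\alpha$ is the normal variable corresponding to the confidence level. $\theta_i$ and $h_0$ can be calculated as

$$\theta_i = \sum_{j=1}^{m} \lambda_j^{\,i} \quad (i = 1, 2, 3), \qquad h_0 = 1 - \frac{2\theta_1\theta_3}{3\theta_2^2},$$

where $\lambda_j$ are the eigenvalues of the sample covariance matrix $S$, which is defined as

$$S = \frac{1}{n-1}\sum_{i=1}^{n} e_i e_i^{T}, \qquad e_i = [e_{i1}, \ldots, e_{im}]^{T},$$

where $n$ is the number of the training samples and $e_{ij}$ is the residual value of the j-th sensor in the i-th training sample, i.e., $e_{ij} = x_{ij} - \hat{x}_{ij}$.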
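The sketch below shows one common way to compute such an SPE limit from training residuals. It rests on assumptions that should be checked against [18]: the limit is taken to be the Jackson-Mudholkar approximation, the normal variable is the one-sided quantile of the standard normal distribution, and the covariance is estimated with `numpy.cov`, which mean-centres the residuals. The function name `spe_limit` and the synthetic residuals are hypothetical.

```python
import numpy as np
from statistics import NormalDist

def spe_limit(residuals, confidence=0.95):
    """SPE control limit via the Jackson-Mudholkar approximation (assumed form)."""
    S = np.cov(np.asarray(residuals, dtype=float), rowvar=False)
    lam = np.linalg.eigvalsh(S)  # eigenvalues of the symmetric covariance
    theta1, theta2, theta3 = (np.sum(lam ** i) for i in (1, 2, 3))
    h0 = 1.0 - 2.0 * theta1 * theta3 / (3.0 * theta2 ** 2)
    c = NormalDist().inv_cdf(confidence)  # one-sided normal quantile (assumption)
    inner = (c * np.sqrt(2.0 * theta2 * h0 ** 2) / theta1
             + 1.0
             + theta2 * h0 * (h0 - 1.0) / theta1 ** 2)
    return theta1 * inner ** (1.0 / h0)

# Synthetic training residuals: 5000 timesteps, 6 sensors.
rng = np.random.default_rng(0)
residuals = rng.normal(0.0, 0.05, size=(5000, 6))

limit = spe_limit(residuals)
exceed_rate = np.mean(np.sum(residuals ** 2, axis=1) > limit)
print(limit, exceed_rate)
```

For well-behaved residuals, roughly 5% of the training timesteps are expected to exceed a 95% limit, which is why a persistence check on top of the limit is useful.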

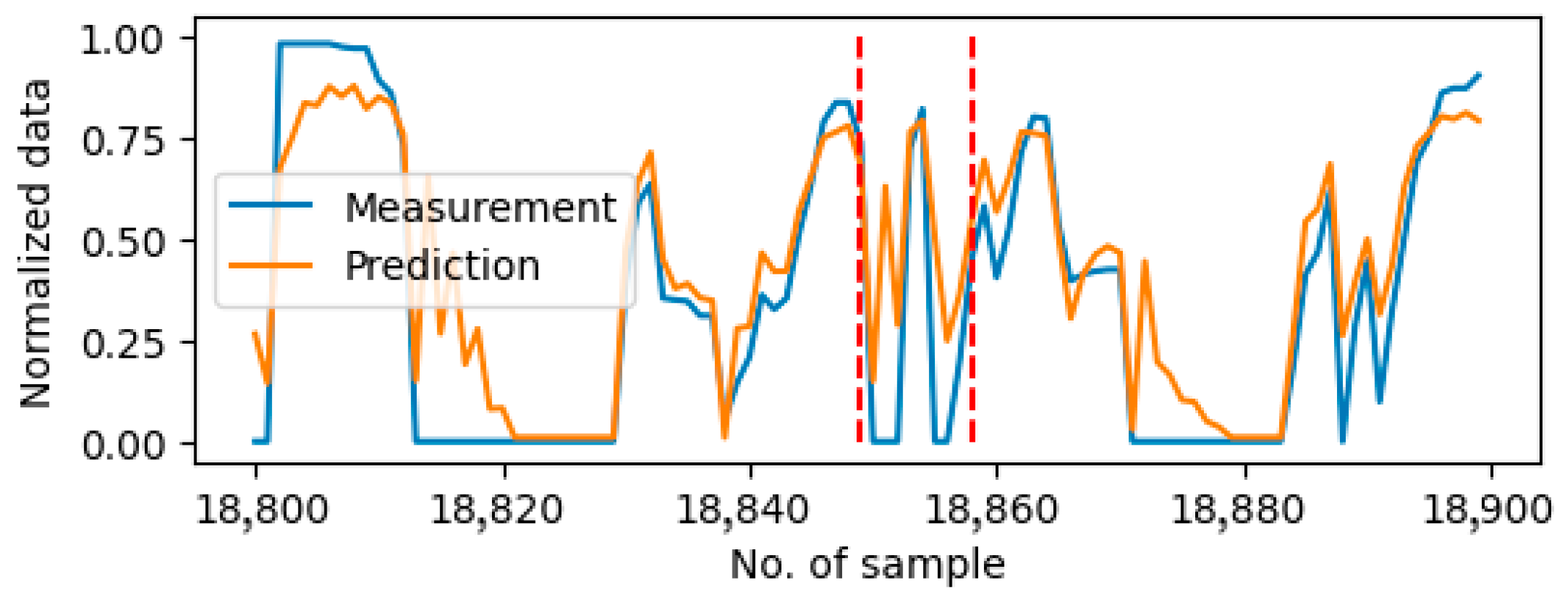

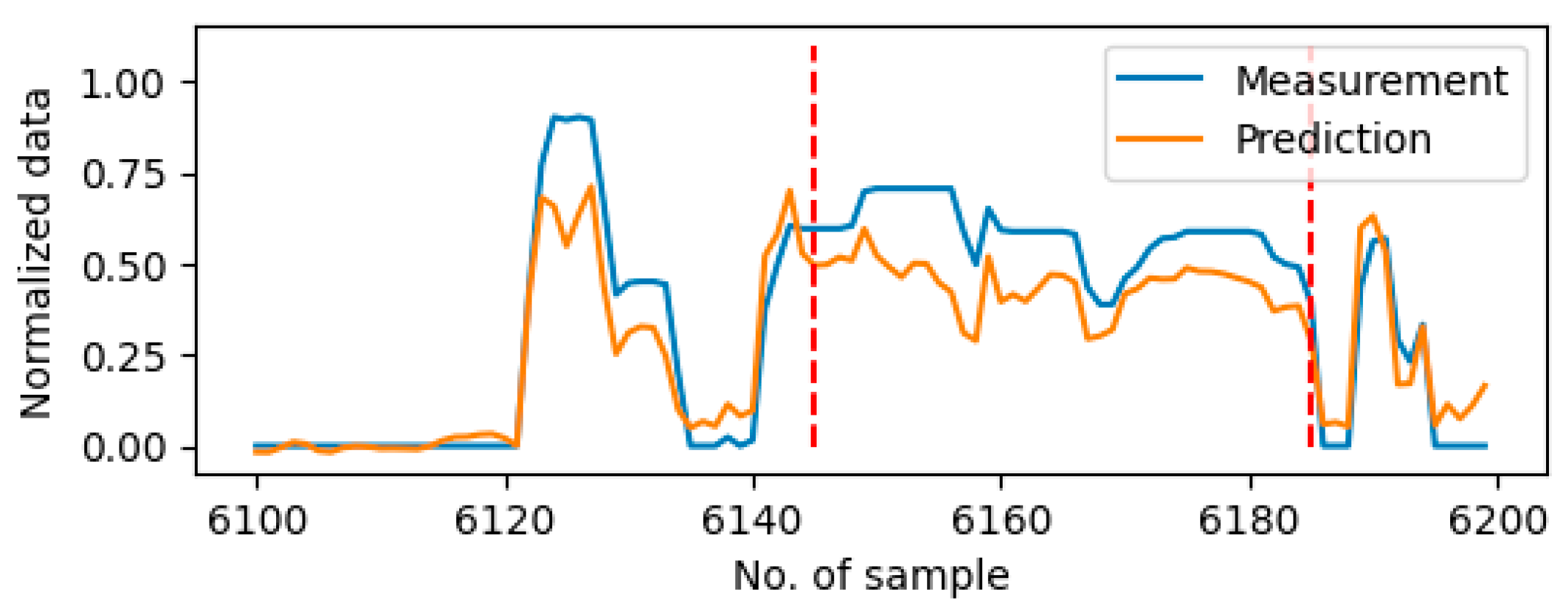

Therefore, if the SPE value calculated for a given timestep is larger than the confidence limit, an anomaly is detected. Because the LSTM predictions themselves contain errors, the SPE value for some normal timesteps will also exceed the limit. To reduce the sensitivity to these random prediction errors, a continuous threshold is applied: an anomaly is reported only when the SPE stays above the limit for at least tf consecutive timesteps. The value of tf can be determined from the training set by finding the longest continuous period in which the limit is exceeded. In this study, tf = 7.
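The persistence rule can be sketched as a scan for runs of consecutive timesteps whose SPE exceeds the limit, keeping only runs of at least tf timesteps. The function name `anomaly_periods`, the returned (start, end) index pairs, and the toy sequence are hypothetical choices for illustration.

```python
import numpy as np

def anomaly_periods(spe_values, limit, tf=7):
    """Return inclusive (start, end) index pairs of runs above the limit lasting >= tf."""
    above = np.asarray(spe_values) > limit
    periods, start = [], None
    for t, flag in enumerate(above):
        if flag and start is None:
            start = t                      # a run begins
        elif not flag and start is not None:
            if t - start >= tf:            # run ended; keep it if long enough
                periods.append((start, t - 1))
            start = None
    if start is not None and len(above) - start >= tf:
        periods.append((start, len(above) - 1))  # run reaches the end of the data
    return periods

# A 3-step spike is ignored; an 8-step excursion is reported.
spe_seq = [0.0] * 5 + [2.0] * 3 + [0.0] * 4 + [2.0] * 8 + [0.0] * 2
periods = anomaly_periods(spe_seq, limit=1.0, tf=7)
print(periods)
```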

3.5. Anomaly Isolation Block

When a continuous anomaly is detected by the previous block, the next step is to isolate the faulty sensors. The mean absolute error (MAE) of each sensor in the fault period, i.e., $\mathrm{MAE}_j = \frac{1}{T}\sum_{t=1}^{T}\left|x_{j,t} - \hat{x}_{j,t}\right|$, is calculated, where $T$ is the length of the fault period. Given that the mean and standard deviation of the absolute error for the training set are $\mu_j$ and $\sigma_j$ for the j-th sensor, if $\mathrm{MAE}_j$ falls outside the acceptance range defined by $\mu_j$ and $\sigma_j$, the sensor is determined to be faulty in this period.
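The isolation step can be sketched as follows. The exact acceptance range is not recoverable from the text above, so the symmetric band of `k` standard deviations around the training mean, with `k = 3`, is purely an assumption for illustration; the function name `isolate_faulty_sensors` and the toy errors are likewise hypothetical.

```python
import numpy as np

def isolate_faulty_sensors(abs_err_train, abs_err_period, k=3.0):
    """Flag sensors whose MAE in the fault period leaves the training band.

    abs_err_train:  absolute prediction errors on the training set (rows: timesteps).
    abs_err_period: absolute prediction errors inside the detected fault period.
    k:              band half-width in standard deviations (assumed, not from the paper).
    """
    mu = abs_err_train.mean(axis=0)
    sigma = abs_err_train.std(axis=0)
    mae = abs_err_period.mean(axis=0)
    outside = (mae < mu - k * sigma) | (mae > mu + k * sigma)
    return np.flatnonzero(outside), mae

# Three sensors; only the second one misbehaves during the fault period.
rng = np.random.default_rng(0)
abs_err_train = np.abs(rng.normal(0.0, 0.02, size=(1000, 3)))
abs_err_period = np.full((10, 3), 0.015)
abs_err_period[:, 1] = 0.5

faulty, mae = isolate_faulty_sensors(abs_err_train, abs_err_period)
print(faulty, mae)
```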

4. Experiment Data Preparation

Sensor data from 10 individual days were carefully selected for this research and are designated as Day1 to Day10. Note that the data recorded on a single day are continuous, while the data from two separate days are not. Furthermore, the duration of the recorded data varies from day to day. Consequently, the data are fed into the algorithm day by day. For each day, the sensor data are synchronized to a 1 s timestep, with all data recorded continuously during the designated recording period. During the experiment period, the truck’s power system functioned without any issues, and the driver performed normally. The data were carefully screened for the experiment. No anomalous sensor measurements were identified in the data from Day1 to Day9, but stall errors were marked on Day10. To demonstrate the efficacy of the proposed method on other types of anomalies, five periods of random drift errors were introduced into the data on Day9. In each period, the anomalous data recorded by the sensors were also random.

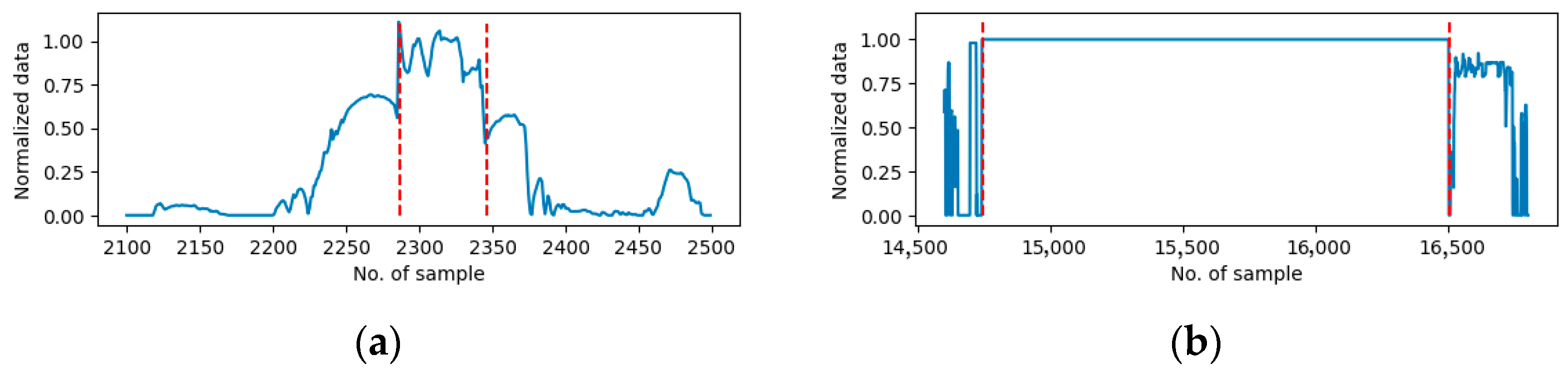

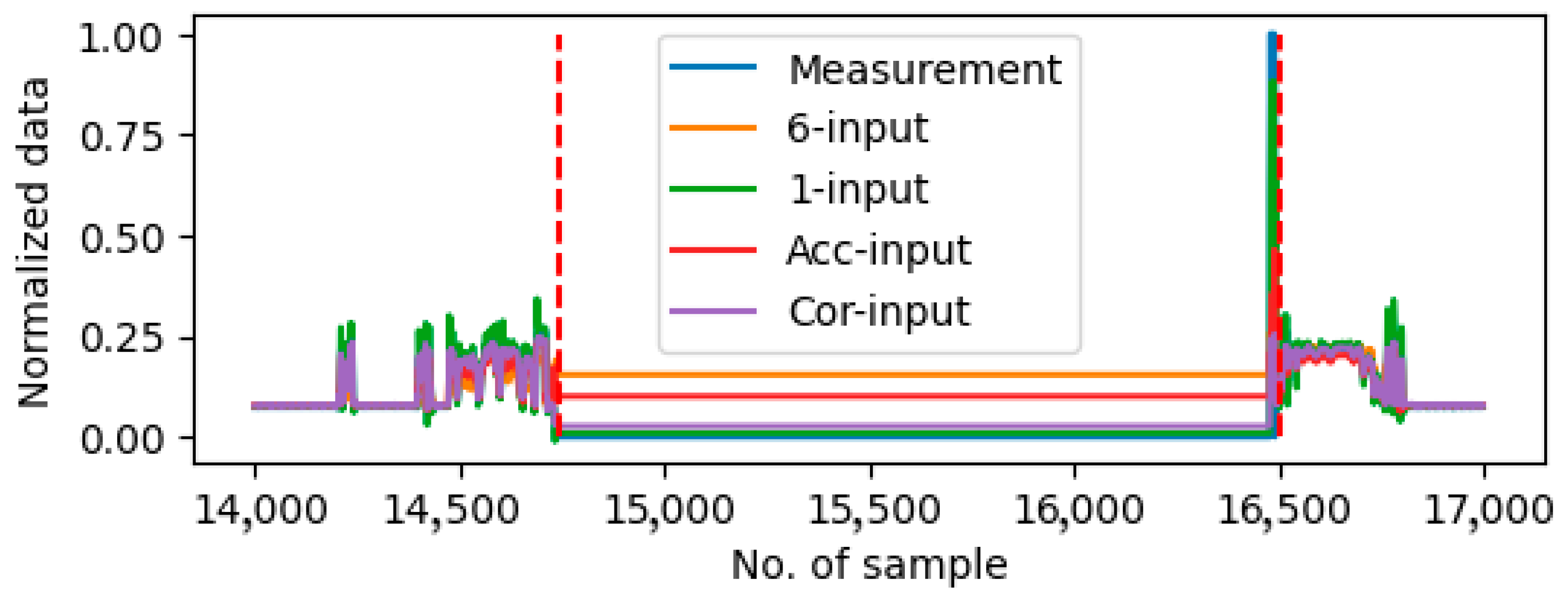

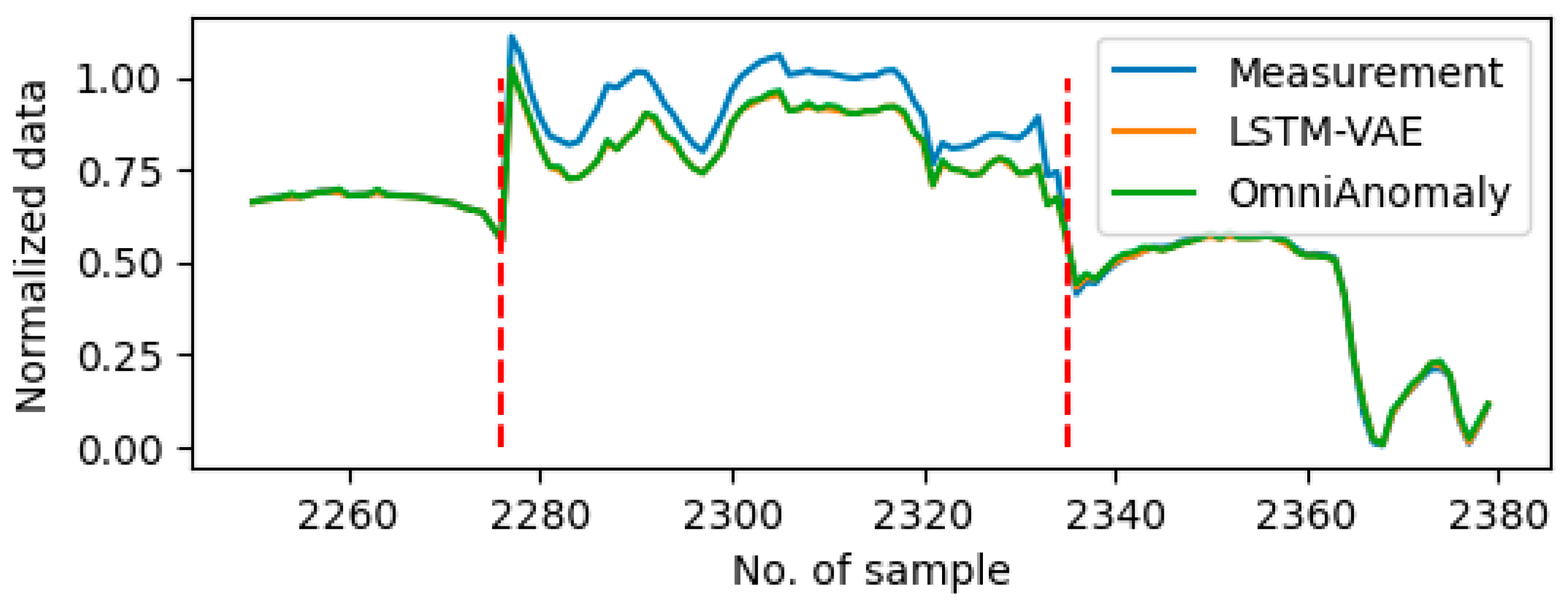

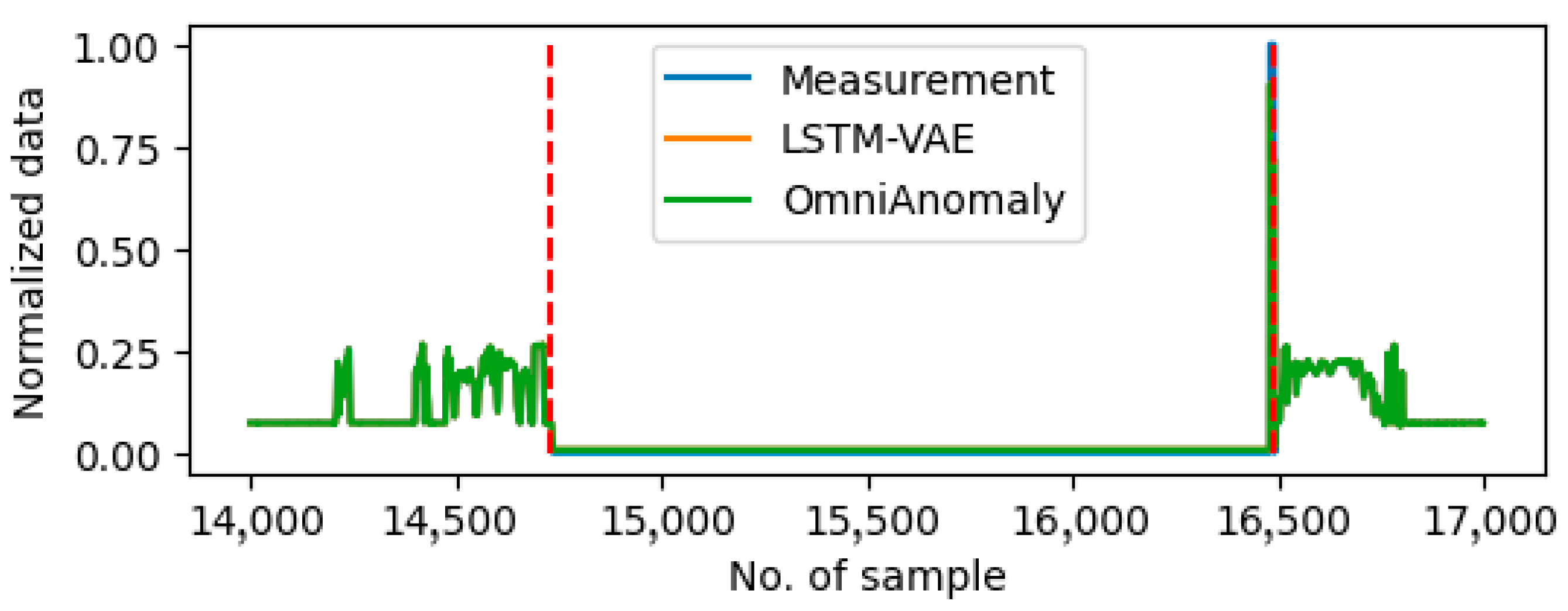

Figure 3 shows an example of the drift error in the vehicle speed sensor on Day9 and of the stall error in the accelerator pedal position sensor on Day10. Table 2 and Table 3 show the anomalous sensors on Day9 and Day10, respectively, marked with (X). The final column lists the percentage of anomalous measurements in the total data for the specific day.

In this case, the 10-day data are divided into two groups: the data from the first four days (Day1 to Day4) are used to train and validate the LSTM-based prediction models, and the data from the remaining six days (Day5 to Day10) are used to assess the performance of the proposed methods. In summary, the training and validation set contains 50,743 s of data, while the testing set contains 111,706 s of data.