Comprehensive Review of Open-Source Fundus Image Databases for Diabetic Retinopathy Diagnosis

Abstract

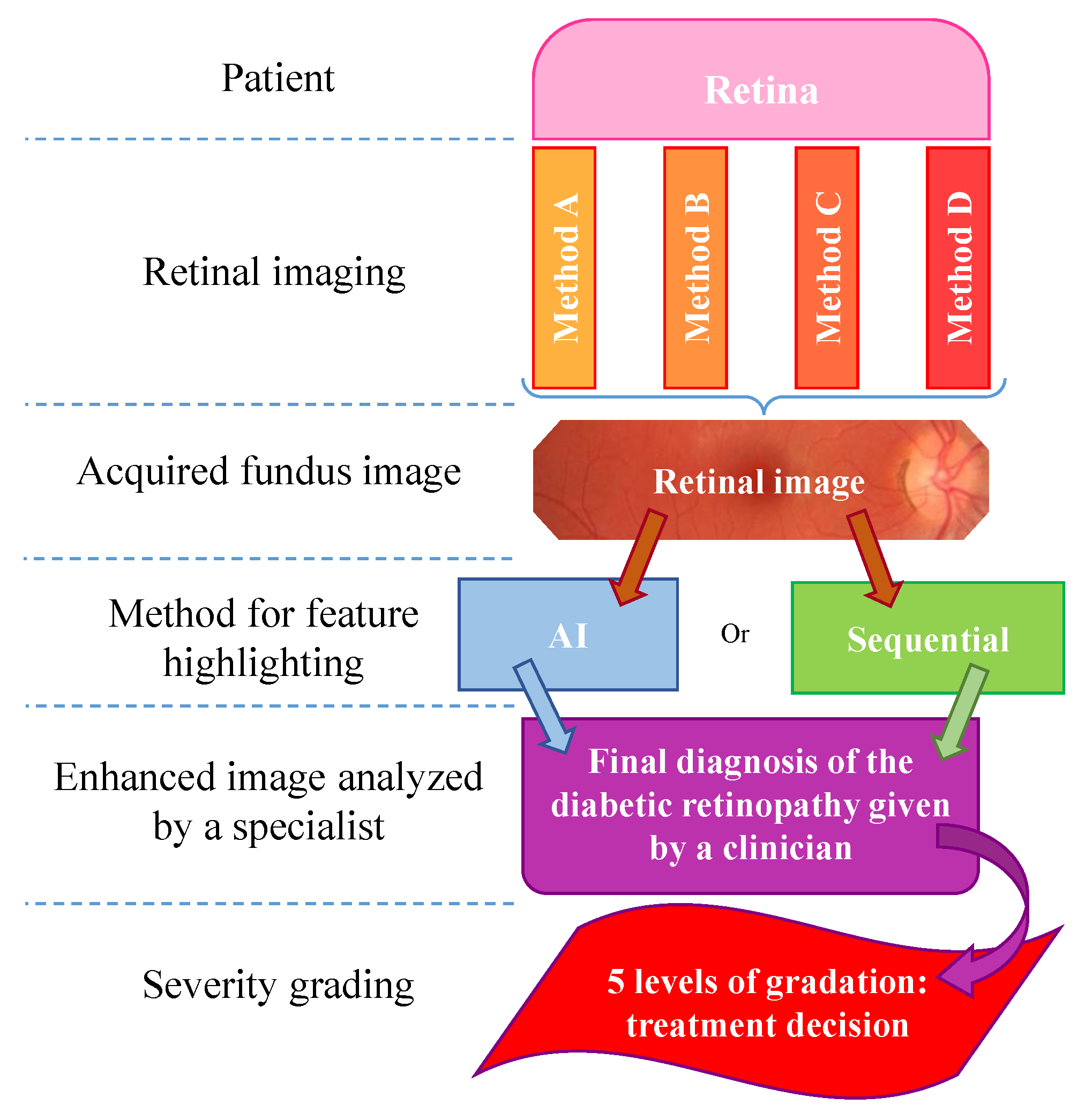

1. Introduction

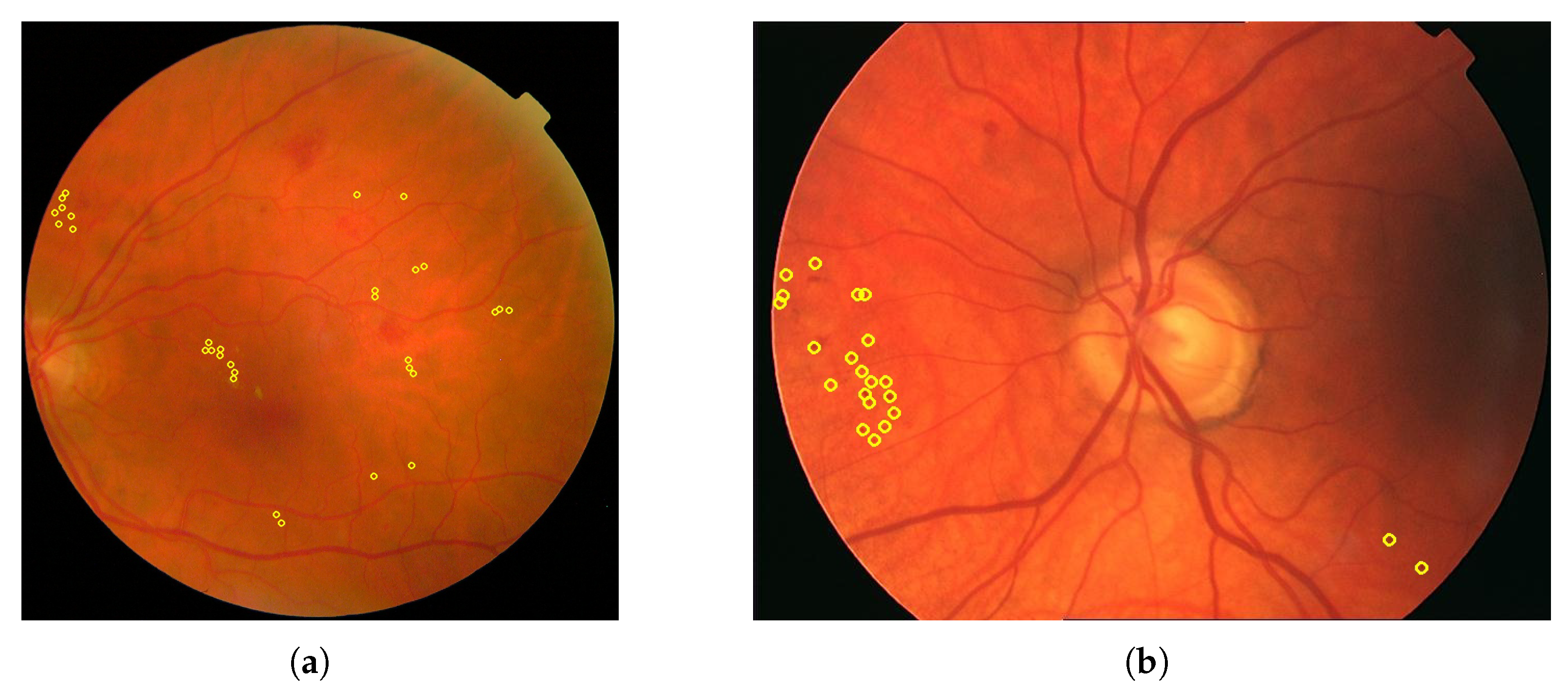

- Non-proliferative diabetic retinopathy (NPDR): This stage is marked by weakened, bulging, or leaking retinal blood vessels, resulting in microaneurysms, hemorrhages, and fluid accumulation. It can lead to retinal edema and the formation of exudates. As ischemia progresses, additional vascular occlusions worsen the oxygen deprivation in the retina. An example is displayed in Figure 1b.

- Proliferative diabetic retinopathy (PDR): This stage is characterized by neovascularization, the growth of new blood vessels in an attempt to compensate for hypoxia. These fragile vessels are prone to bleeding, leading to serious complications such as vitreous hemorrhage, retinal detachment, and neovascular glaucoma. An example is presented in Figure 1c.

- Blood vessels: These carry blood to and from the retina, appearing as fine lines in fundus images.

- Macula: An oval-shaped, yellowish area near the center of the retina, responsible for detailed central vision; it appears darker than the surrounding retina in fundus images.

- Fovea: Located within the macula, the fovea has the highest density of cones in the retina.

- Optic nerve: Transmits visual information to the brain; its head, the optic disc, appears in fundus images as a large, bright, roughly circular spot located medial (nasal) to the macula.

2. Retinal Picturing Methods

Retinal Photography

3. Disease Classification and Assessment

3.1. Learning-Based Methods

3.2. Sequential (Rule-Based) Processing

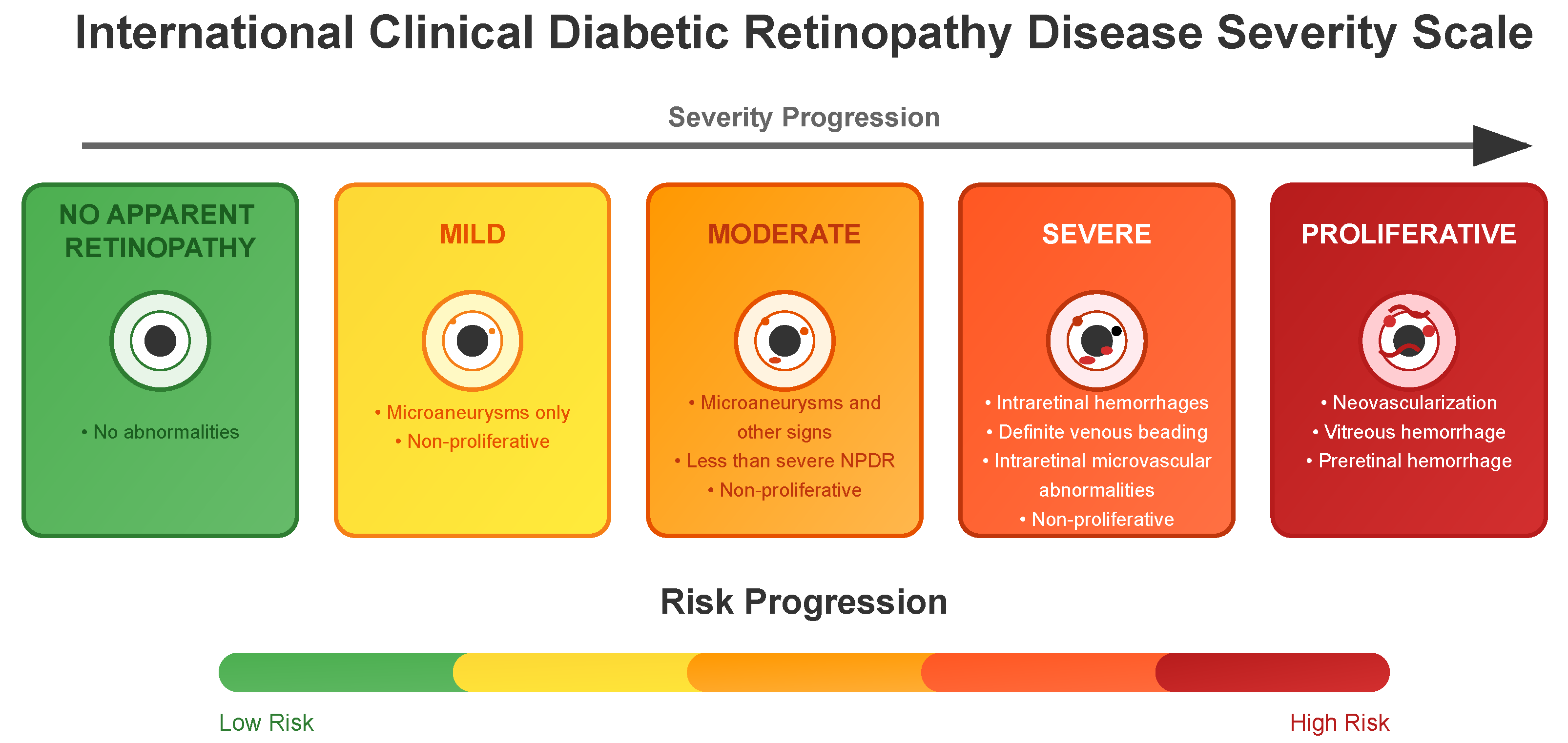

3.3. DR Severity Grading

- No apparent retinopathy (Level 0): No abnormalities are present. Patients typically continue with routine diabetes management and regular eye examinations.

- Mild (Level 1): Non-proliferative, with microaneurysms only. Increased monitoring is recommended, and patients may be advised to improve glycemic and blood pressure control to prevent progression.

- Moderate (Level 2): More than just microaneurysms but less than severe non-proliferative diabetic retinopathy. The patient might need more frequent eye exams, and the healthcare team may consider medical interventions to manage diabetes more aggressively.

- Severe (Level 3): A significant worsening of the condition with a high risk of progression to proliferative DR, marked by signs such as intraretinal hemorrhages, definite venous beading, or prominent intraretinal microvascular abnormalities.

- Proliferative State (Level 4): The most advanced and sight-threatening stage with one or more of the following: neovascularization, vitreous or preretinal hemorrhage. Urgent treatment is required, which may include intravitreal injections, laser therapy, or vitrectomy to prevent permanent vision loss.
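The five levels above follow the ICDR scale [29]. Its severe-NPDR criterion is often summarized as the "4-2-1" rule: more than 20 intraretinal hemorrhages in each of the four quadrants, definite venous beading in two or more quadrants, or prominent intraretinal microvascular abnormalities (IRMA) in at least one quadrant, with no signs of PDR. The Python sketch below encodes that decision order. It is illustrative only: the `FundusFindings` record and its field names are hypothetical, and it assumes the per-quadrant findings have already been established by a grader or a lesion detector.

```python
from dataclasses import dataclass


@dataclass
class FundusFindings:
    """Hypothetical per-eye findings record; field names are illustrative only."""
    microaneurysms: bool = False
    # Any lesion beyond microaneurysms alone (hemorrhages, exudates, beading, IRMA, ...).
    other_npdr_lesions: bool = False
    quadrants_with_over_20_hemorrhages: int = 0
    quadrants_with_venous_beading: int = 0
    quadrants_with_prominent_irma: int = 0
    neovascularization: bool = False
    vitreous_or_preretinal_hemorrhage: bool = False


ICDR_LABELS = {
    0: "No apparent retinopathy",
    1: "Mild NPDR",
    2: "Moderate NPDR",
    3: "Severe NPDR",
    4: "Proliferative DR",
}


def icdr_grade(f: FundusFindings) -> int:
    """Return an ICDR severity level (0-4), checking the most severe level first."""
    # Level 4: any sign of proliferation.
    if f.neovascularization or f.vitreous_or_preretinal_hemorrhage:
        return 4
    # Level 3: the "4-2-1" rule for severe NPDR.
    if (f.quadrants_with_over_20_hemorrhages >= 4
            or f.quadrants_with_venous_beading >= 2
            or f.quadrants_with_prominent_irma >= 1):
        return 3
    # Level 2: more than microaneurysms only, but short of severe NPDR.
    if f.other_npdr_lesions:
        return 2
    # Level 1: microaneurysms only.
    if f.microaneurysms:
        return 1
    return 0


print(ICDR_LABELS[icdr_grade(FundusFindings(microaneurysms=True))])
```

Checking level 4 before level 3, and level 3 before level 2, mirrors the way the scale is defined: each level is "worse than the previous one but not yet the next", so the most severe matching criterion wins.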

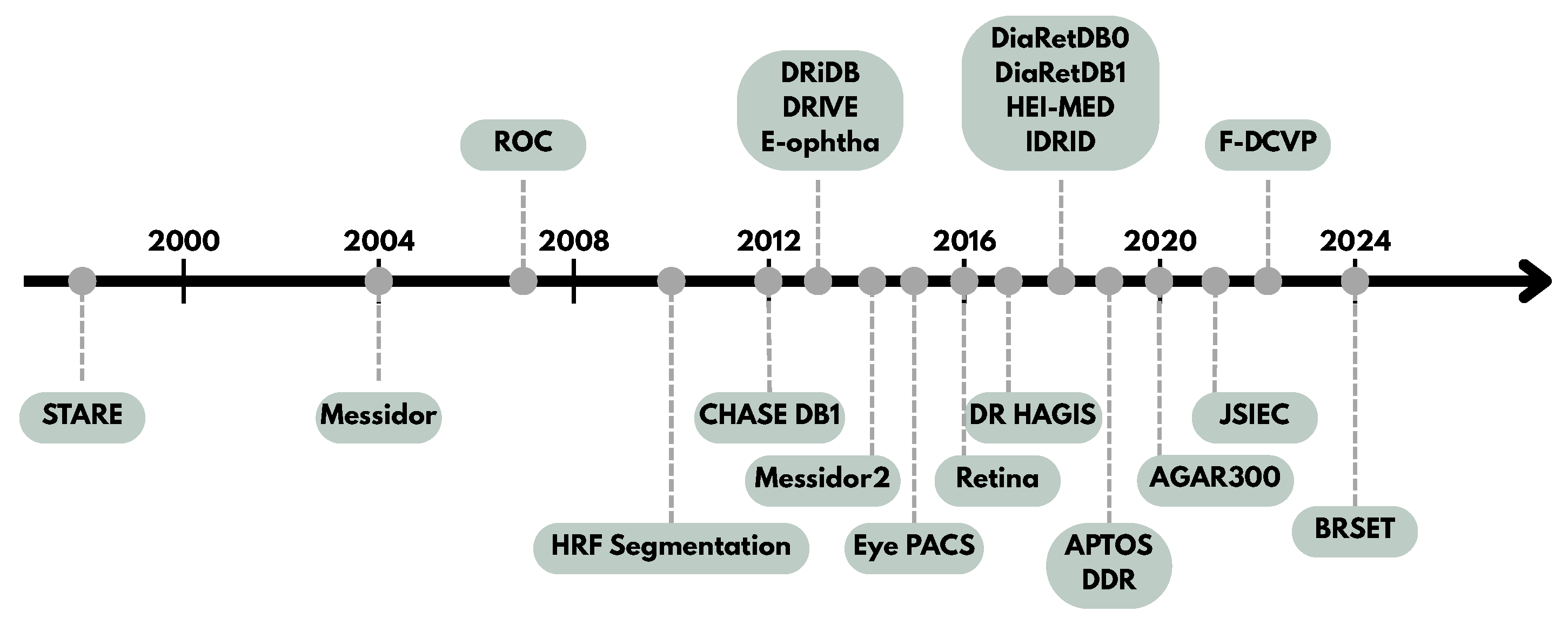

4. Retinal Image Databases for DR Research

4.1. Key Points

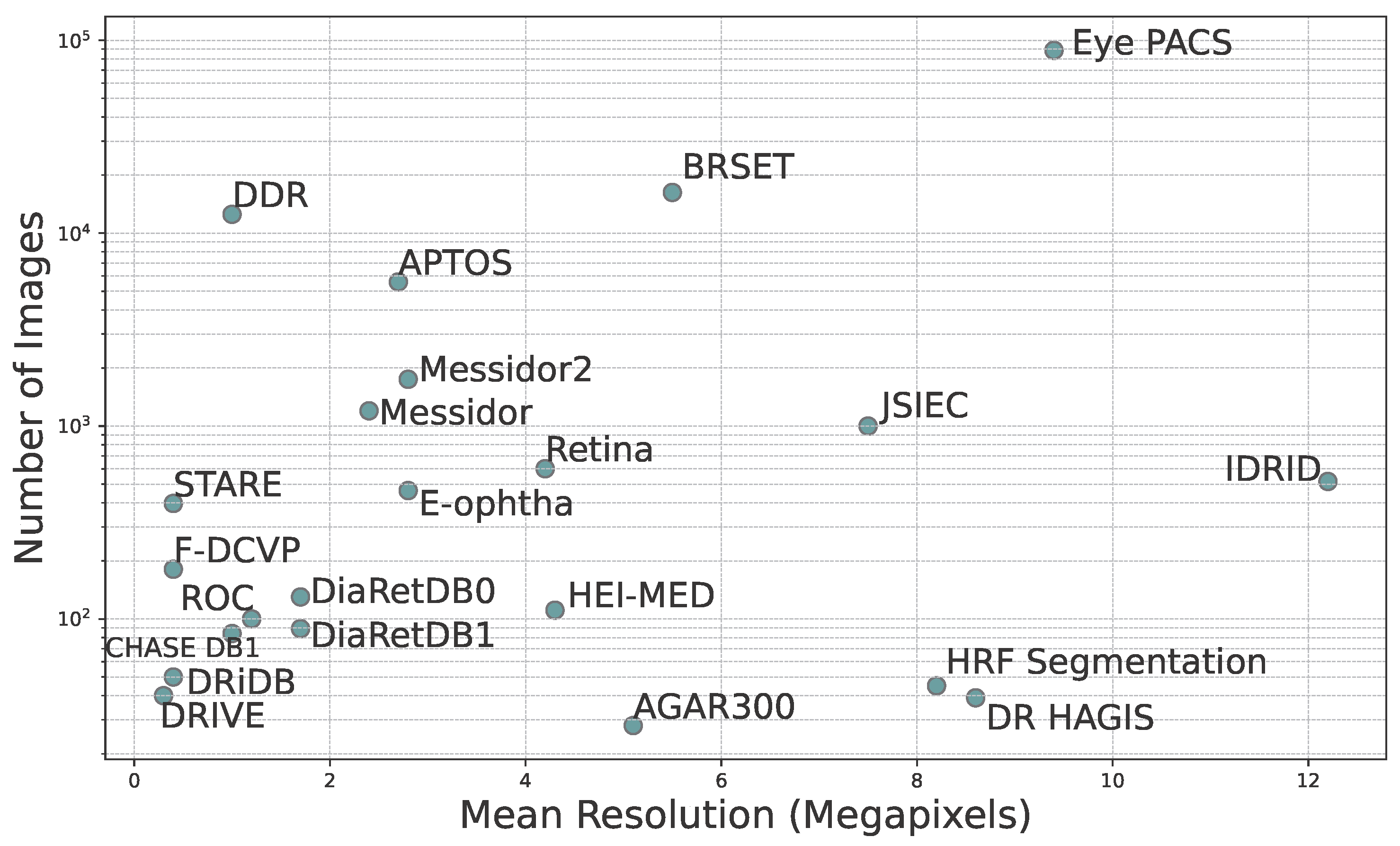

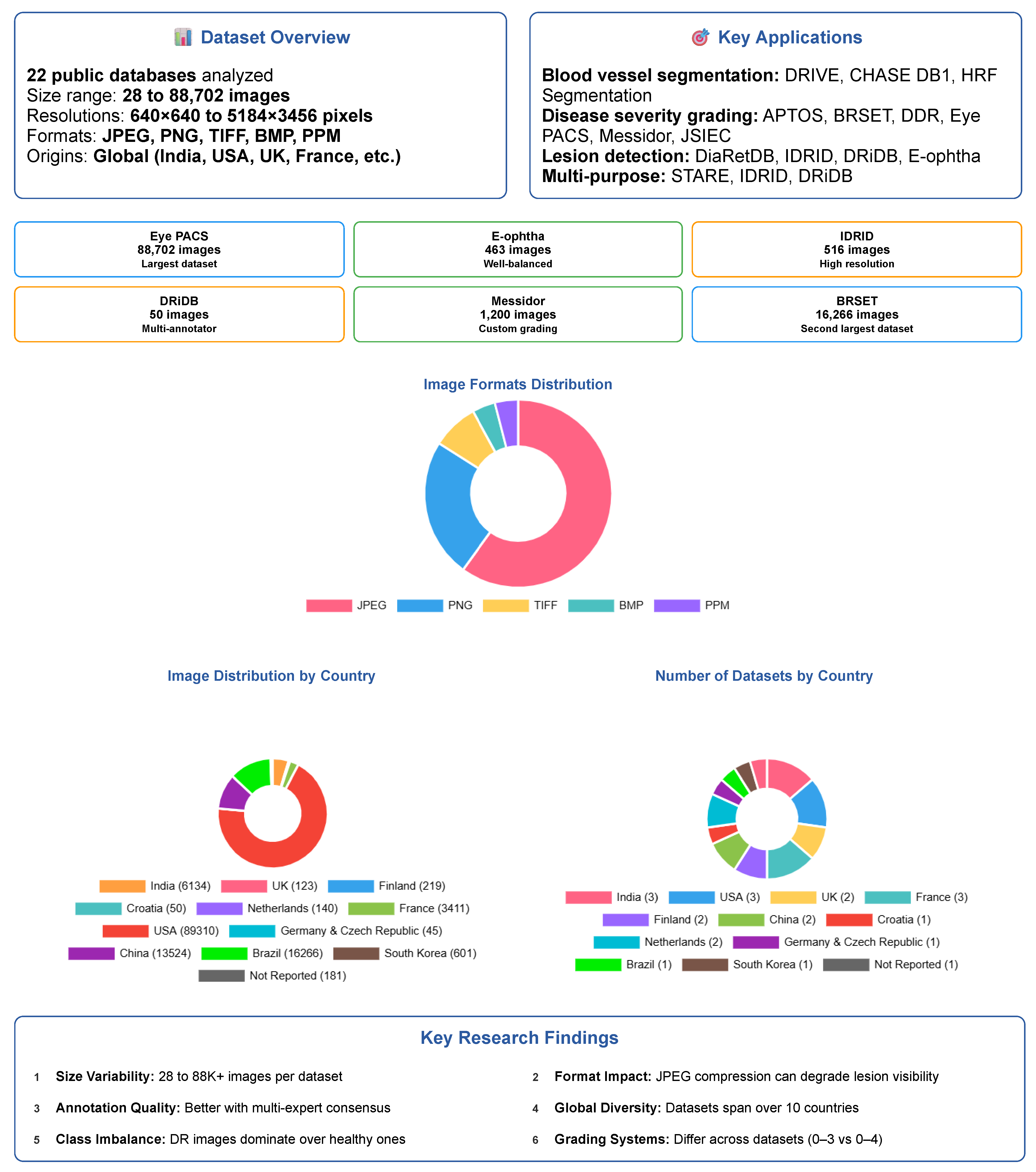

- Dataset Variability: Dataset sizes vary considerably, from 28 images in AGAR300 to 88,702 images in EyePACS. Image resolutions also differ widely, from 474 × 358 pixels (the smallest APTOS images) to 5184 × 3456 pixels (the largest DDR images), which can affect the reliability and accuracy of subsequent analyses.

- Image Formats: The datasets use several standard image formats (JPEG, PNG, TIFF, and BMP, plus PPM for STARE). These formats differ in compression: JPEG is lossy, whereas PNG and BMP store pixel values losslessly. The choice therefore affects file size and storage efficiency as well as image quality, especially the preservation of fine details and the presence of compression artifacts.

- Labels and Annotations: A key aspect of dataset utility for deep learning is the availability of either image-level labels or pixel-level annotations, which correspond to two distinct use cases. Labels typically refer to DR severity grades (e.g., “moderate NPDR”, “proliferative DR”) and are used in classification tasks. Annotations, in contrast, provide lesion-level localization (e.g., masks for microaneurysms, exudates, hemorrhages) and are used in detection or segmentation tasks. Most deep learning models rely only on labels for severity classification, while others focus on localizing lesions regardless of severity grade. Some datasets offer both, enabling hybrid approaches. Anatomical landmarks such as the optic disc and macula may also be annotated to increase clinical relevance.

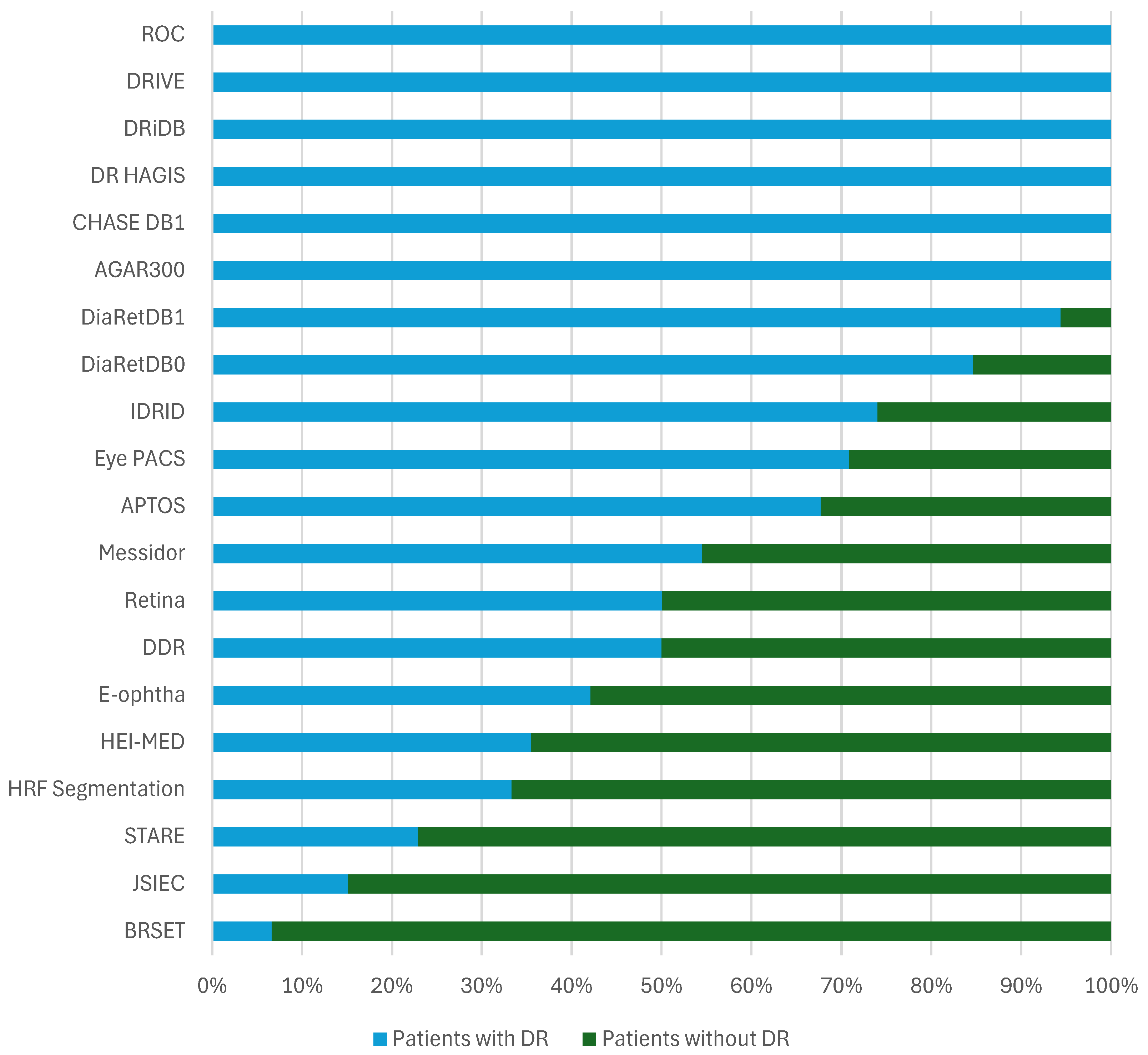

- Data Distribution: The distribution of patients affected and unaffected by DR differs markedly between datasets. While large-scale datasets like EyePACS include many unaffected patients, others like DRiDB and AGAR300 focus exclusively on DR patients. Such variability affects model training and may introduce bias if class imbalance is not carefully managed.

- Grading Systems: The grading systems for disease severity are not consistent across datasets. For example, Messidor uses its own grading system based on the numbers of microaneurysms and hemorrhages and the presence of neovascularization, while others use the International Clinical Diabetic Retinopathy (ICDR) scale.

- Annotated Areas: Datasets provide annotations for various anatomical structures and lesions, such as blood vessels, exudates, microaneurysms, and hemorrhages. Annotation formats vary: most annotated datasets provide segmentation masks (PNG, BMP, GIF, or TIFF), while others use MATLAB files (HEI-MED), XML documents (ROC), or text files (STARE). Clarifying whether these annotations are intended for lesion detection or severity classification is essential for selecting appropriate datasets for model development.

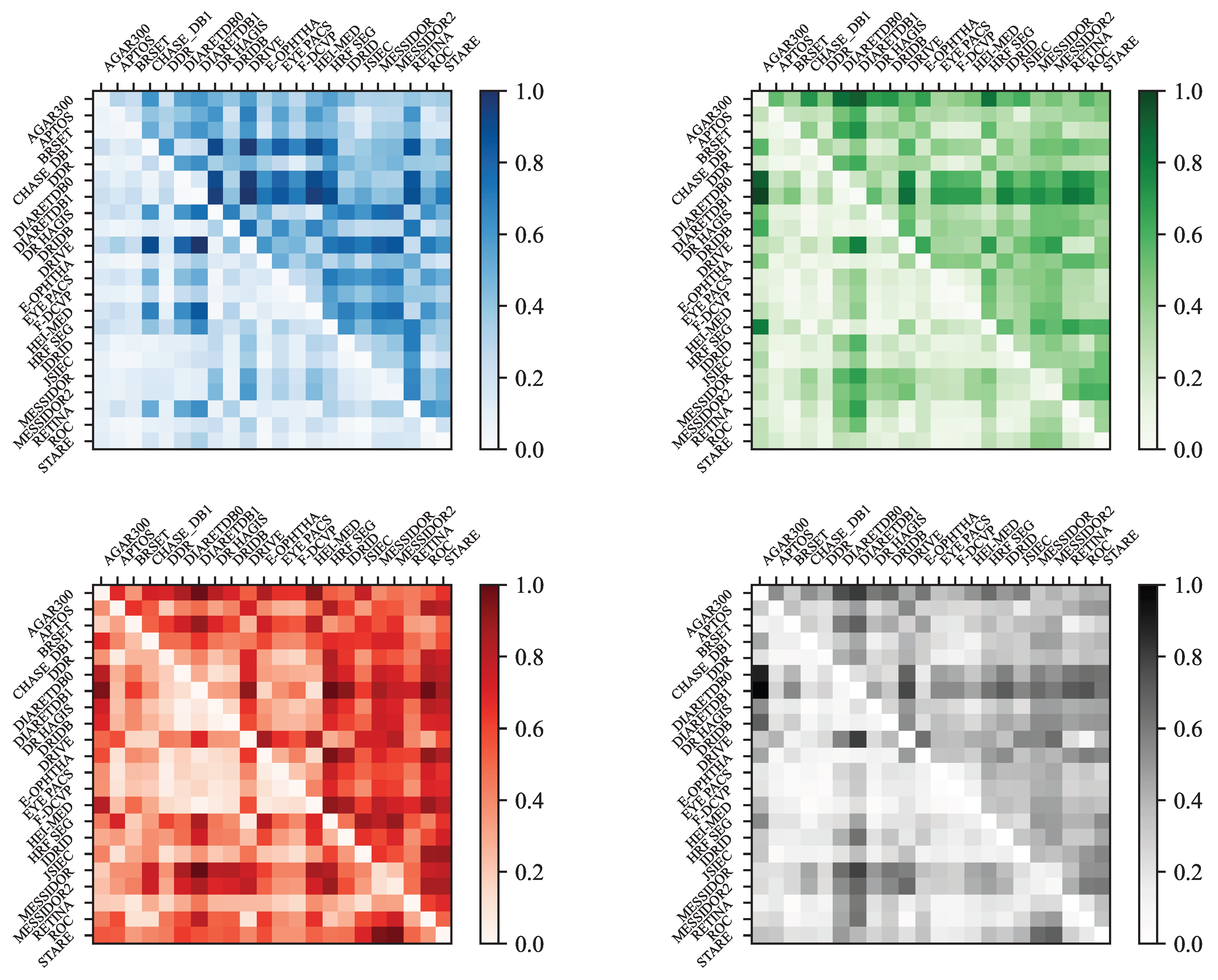

- Color Analysis: The section includes an analysis of color histograms and statistical features for each dataset, showing differences and similarities in color distribution and image quality.

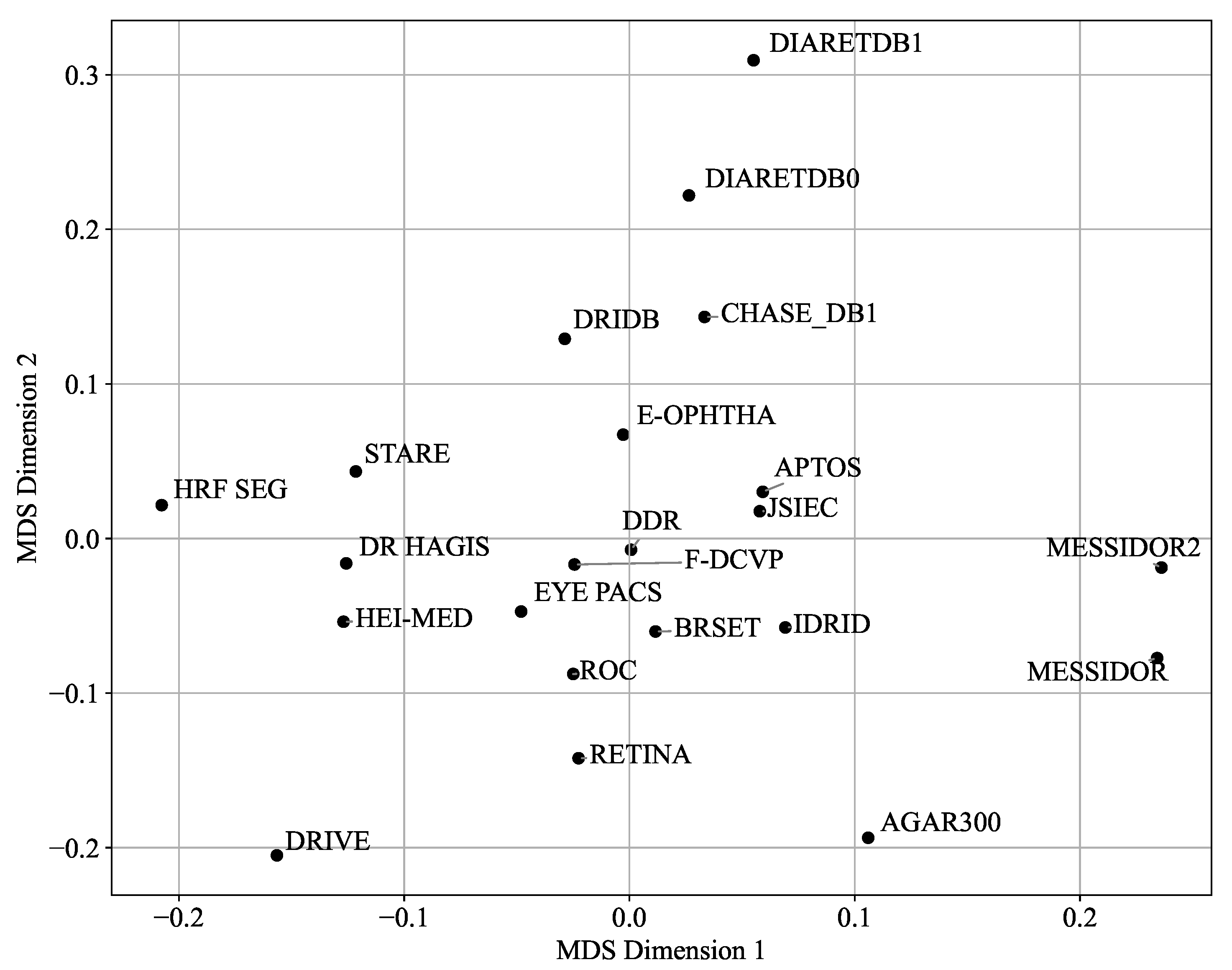

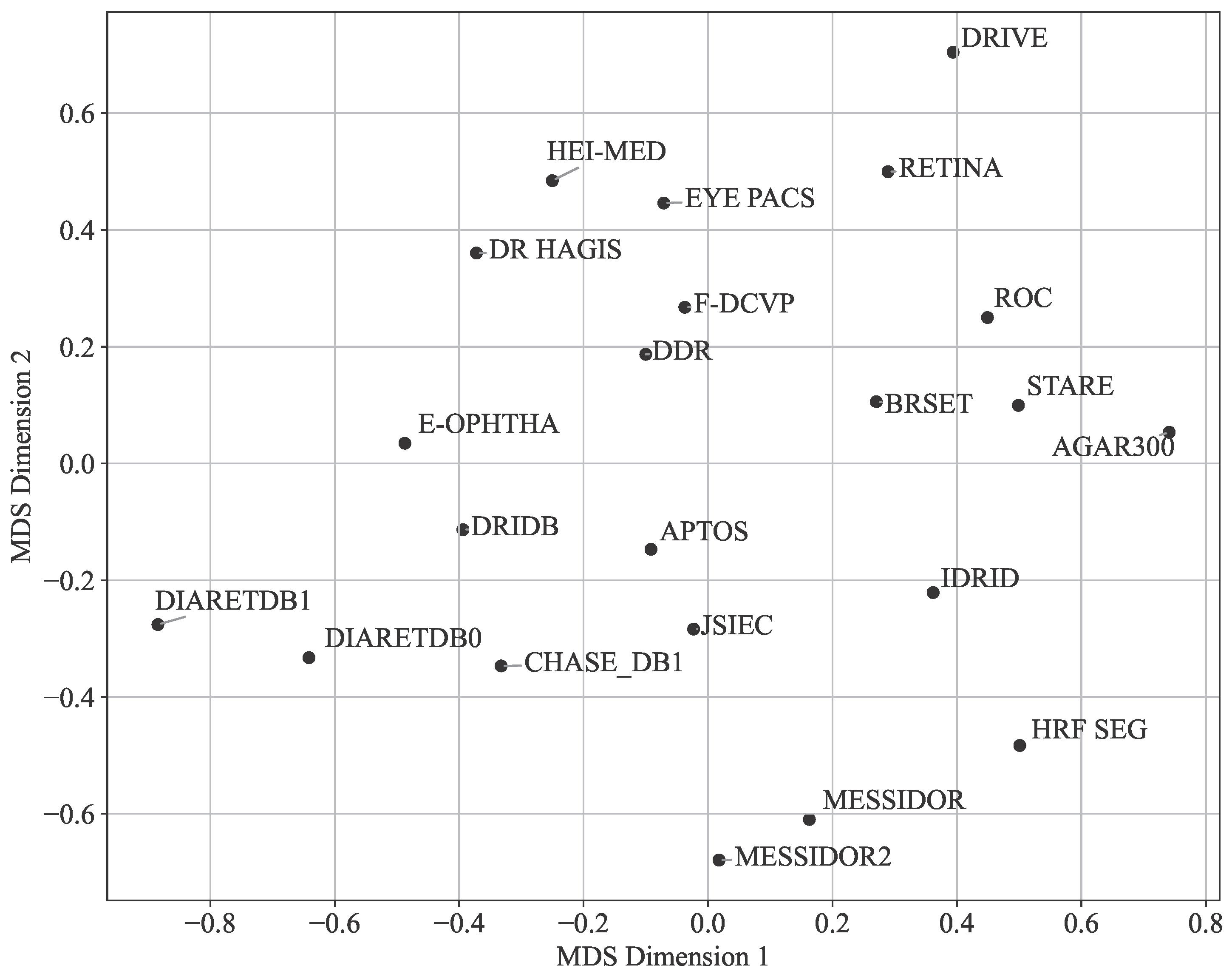

- Visualization and Analysis: Techniques like Multi-Dimensional Scaling (MDS) and Principal Component Analysis (PCA) are used to visualize and analyze the similarities and differences between datasets based on color histograms and other features.
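One common way to manage the class imbalance described under Data Distribution is to weight each severity class by its inverse frequency during training. The sketch below uses the n_samples / (n_classes × n_class) formula, the same heuristic as scikit-learn's "balanced" class weights; the helper name is ours, and the label counts are made-up illustrative values, not statistics from any dataset reviewed here.

```python
from collections import Counter


def inverse_frequency_weights(labels, num_classes=5):
    """Weight each class by n_samples / (num_classes * n_class).

    Classes absent from `labels` get weight 0.0 instead of dividing by zero.
    """
    counts = Counter(labels)
    n = len(labels)
    return [n / (num_classes * counts[c]) if counts[c] else 0.0
            for c in range(num_classes)]


# Illustrative, made-up ICDR grade labels (0-4), dominated by grade 0.
labels = [0] * 70 + [1] * 10 + [2] * 12 + [3] * 3 + [4] * 5
for grade, w in enumerate(inverse_frequency_weights(labels)):
    print(f"grade {grade}: weight {w:.2f}")
```

Rare grades such as severe NPDR receive larger weights, so misclassifying them costs more in a weighted loss; resampling the training set is an alternative with a similar aim.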

4.2. General Overview

- AGAR300: Annotated Germs for Automated Recognition [30]

- APTOS: Asia Pacific Tele-Ophthalmology Society [31]

- BRSET: Brazilian Multilabel Ophthalmological Dataset [32]

- CHASE DB1: CHASE DataBase 1 [33]

- DDR: Dataset for Diabetic Retinopathy [34]

- DiaRetDB0: Diabetic Retinopathy DataBase 0 [35]

- DiaRetDB1: Diabetic Retinopathy DataBase 1 [36]

- DR HAGIS: Diabetic Retinopathy, Hypertension, Age-related Macular Degeneration and Glaucoma Images [37]

- DRiDB: Diabetic Retinopathy image DataBase [38]

- DRIVE: Digital Retinal Images for Vessel Extraction [39]

- E-ophtha: E-ophtha [40]

- Eye PACS: Eye Picture Archive Communication System [11]

- F-DCVP: Fundus-Data Computer Vision Project [41]

- HEI-MED: Hamilton Eye Institute Macular Edema Dataset [42]

- HRF: High-Resolution Fundus Segmentation [43]

- IDRID: Indian Diabetic Retinopathy Image Dataset [28]

- JSIEC: Joint Shantou International Eye Centre [44]

- Messidor: Methods to Evaluate Segmentation and Indexing Techniques in the field of Retinal Ophthalmology [45]

- Messidor2: Messidor-2 [46]

- Retina: Retina [47]

- ROC: Retinopathy Online Challenge [48]

- STARE: Structured Analysis of the Retina [10]

4.3. Data Distribution and Patient Health Status

4.4. Grading and Annotations

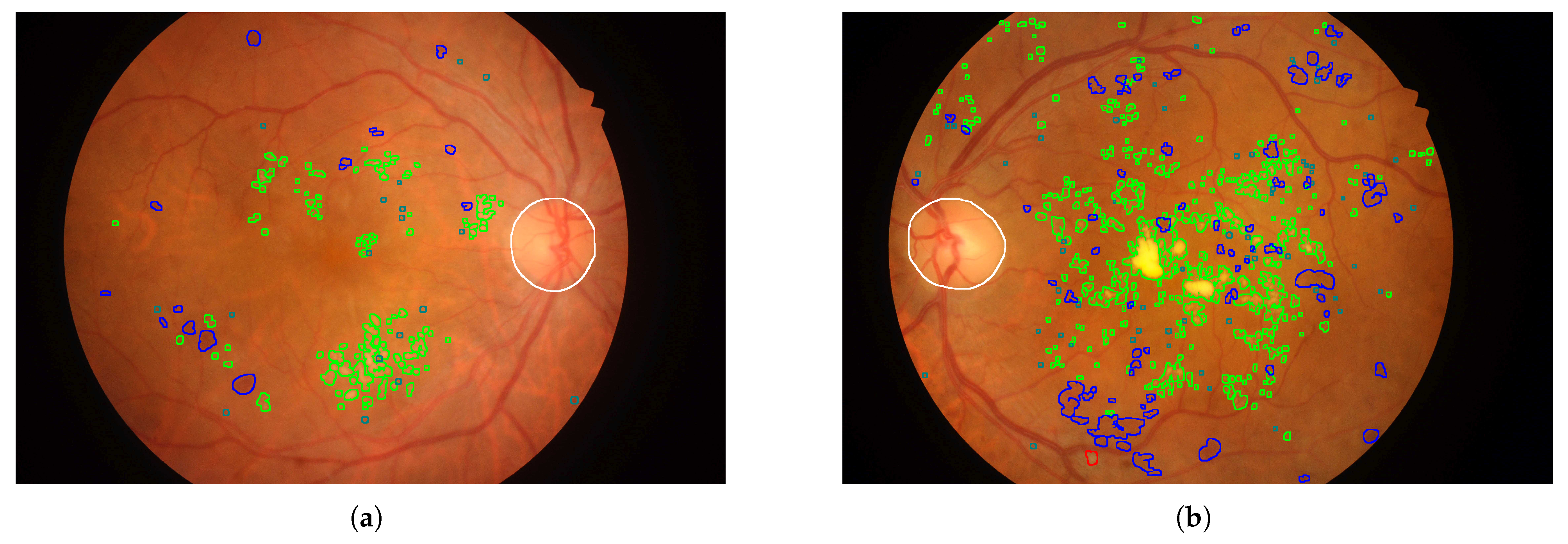

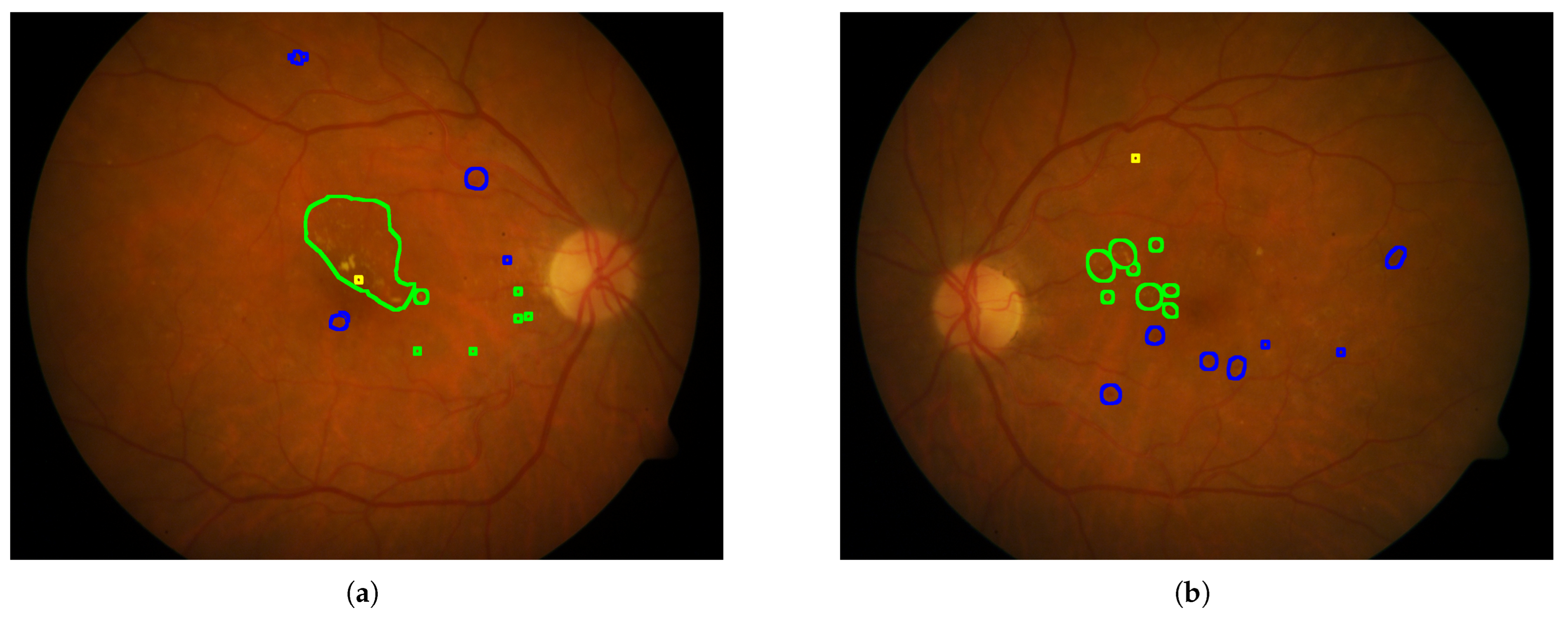

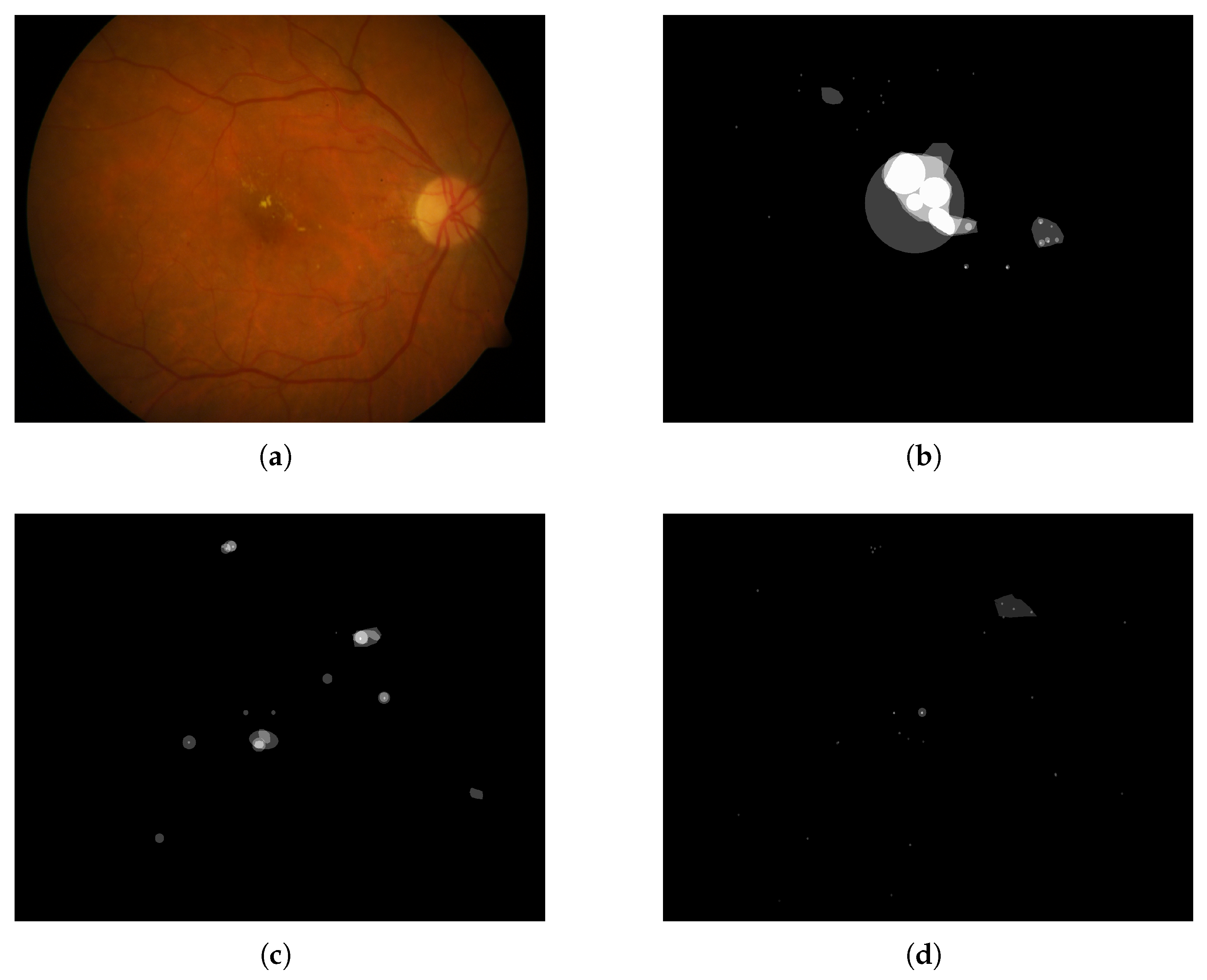

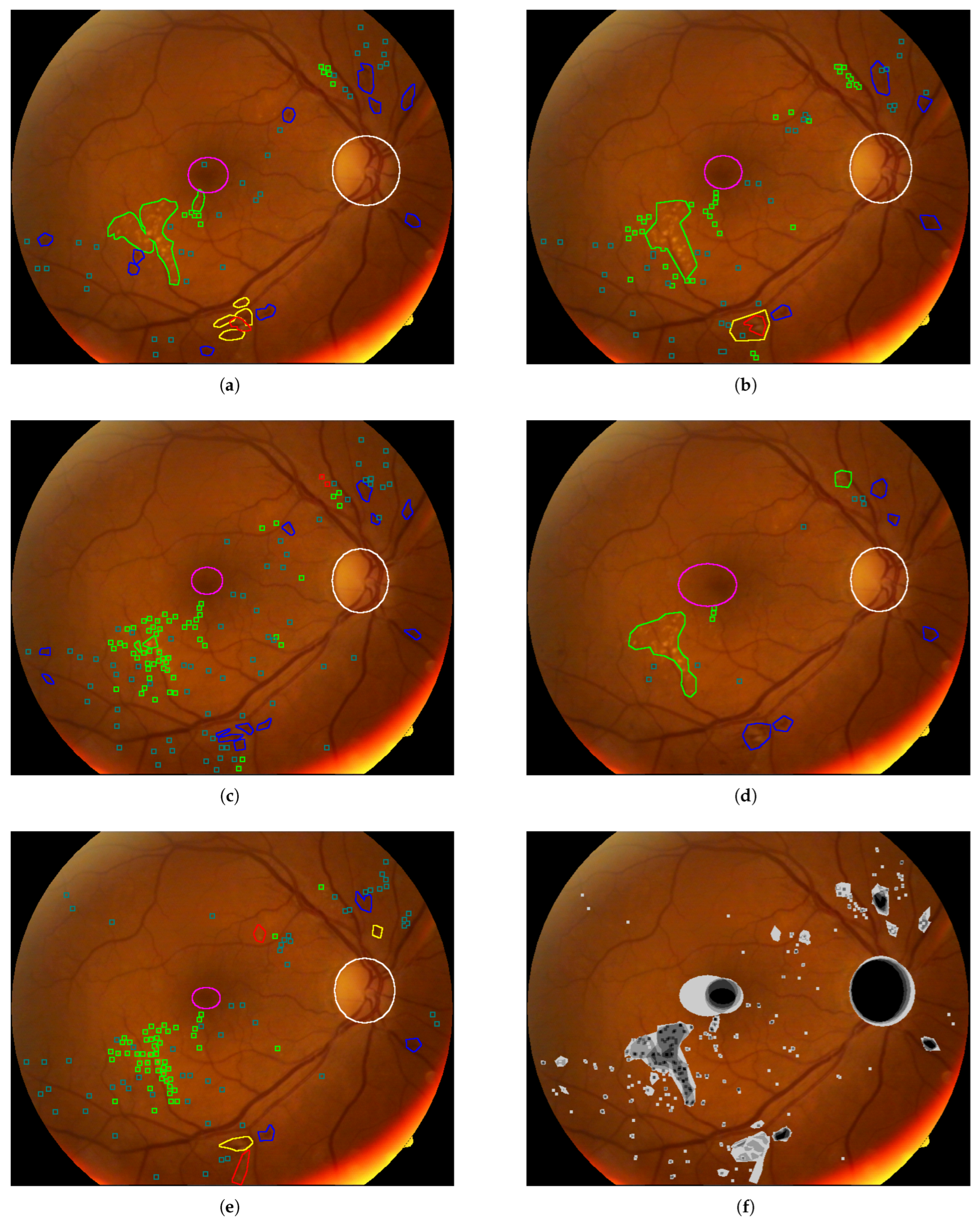

4.5. Analyses of the Annotations

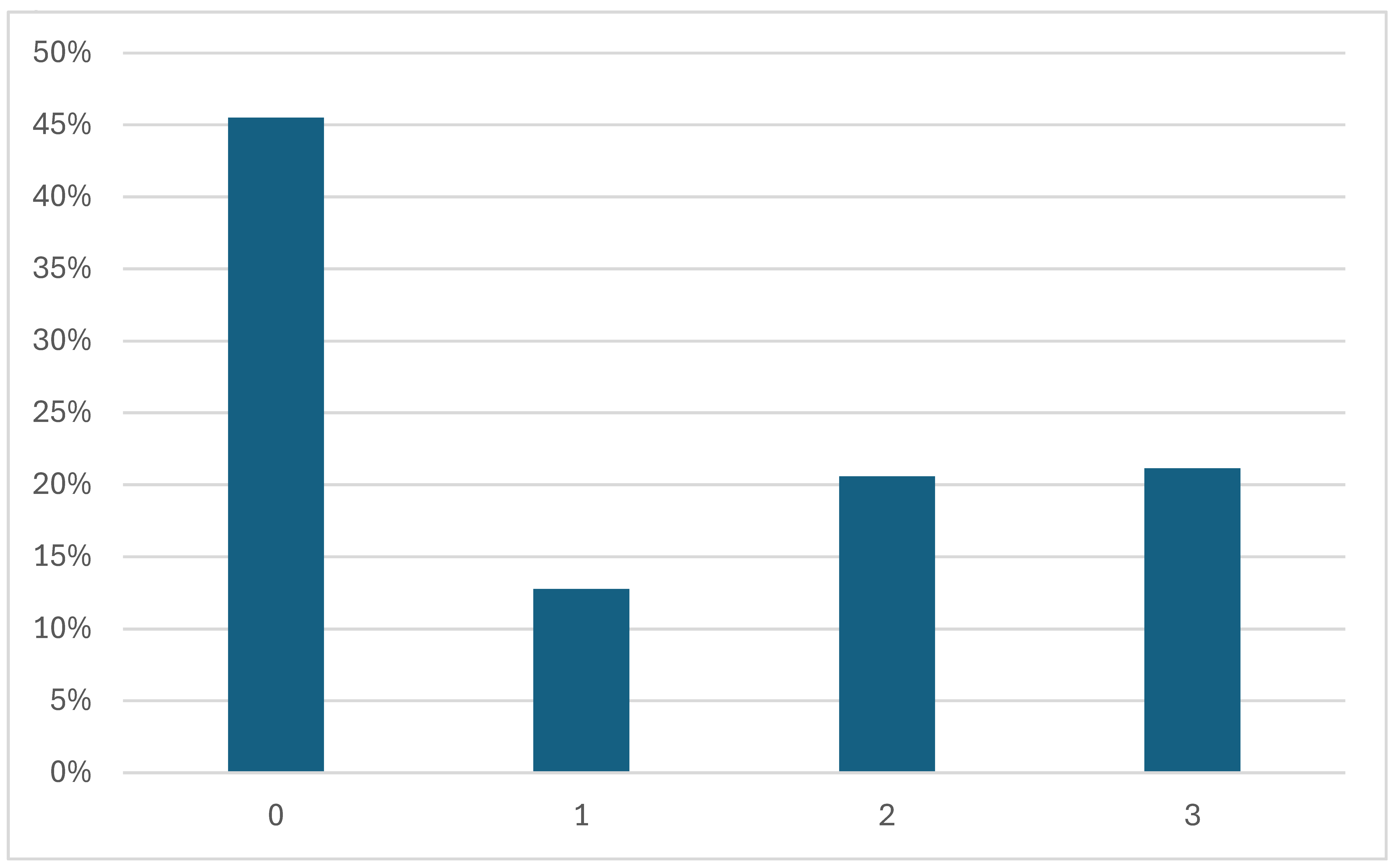

4.5.1. Disease Grading

- 0—No apparent retinopathy

- 1—Mild

- 2—Moderate

- 3—Severe

- 4—Proliferative DR
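Section 4.1 notes that Messidor grades retinopathy with its own lesion-count scale rather than the five ICDR levels listed above. The sketch below encodes that scale as we understand it from the Messidor documentation, with μA the number of microaneurysms, H the number of hemorrhages, and NV the presence of neovascularization: grade 0 if μA = 0 and H = 0; grade 1 if 0 < μA ≤ 5 and H = 0; grade 2 if 5 < μA < 15 or 0 < H < 5, with NV = 0; grade 3 if μA ≥ 15, H ≥ 5, or NV = 1. The thresholds are an assumption to verify against the official dataset notes, and the function name is hypothetical.

```python
def messidor_grade(n_microaneurysms: int, n_hemorrhages: int, neovascularization: bool) -> int:
    """Messidor retinopathy grade (0-3) from lesion counts (thresholds assumed, see text)."""
    if n_microaneurysms < 0 or n_hemorrhages < 0:
        raise ValueError("lesion counts must be non-negative")
    ma, h = n_microaneurysms, n_hemorrhages
    # Grade 3: many microaneurysms, many hemorrhages, or any neovascularization.
    if ma >= 15 or h >= 5 or neovascularization:
        return 3
    # Grade 2: moderate counts (NV is already known to be absent here).
    if 5 < ma < 15 or 0 < h < 5:
        return 2
    # Grade 1: a few microaneurysms and, by elimination, no hemorrhages.
    if 0 < ma <= 5:
        return 1
    # Grade 0: no microaneurysms and no hemorrhages.
    return 0


print(messidor_grade(3, 0, False))
```

Because the Messidor grades are defined by counts rather than by the ICDR descriptions, a one-to-one mapping between the two scales should not be assumed when pooling datasets.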

4.5.2. Annotated Areas

4.6. Annotation Quality and Bias Assessment

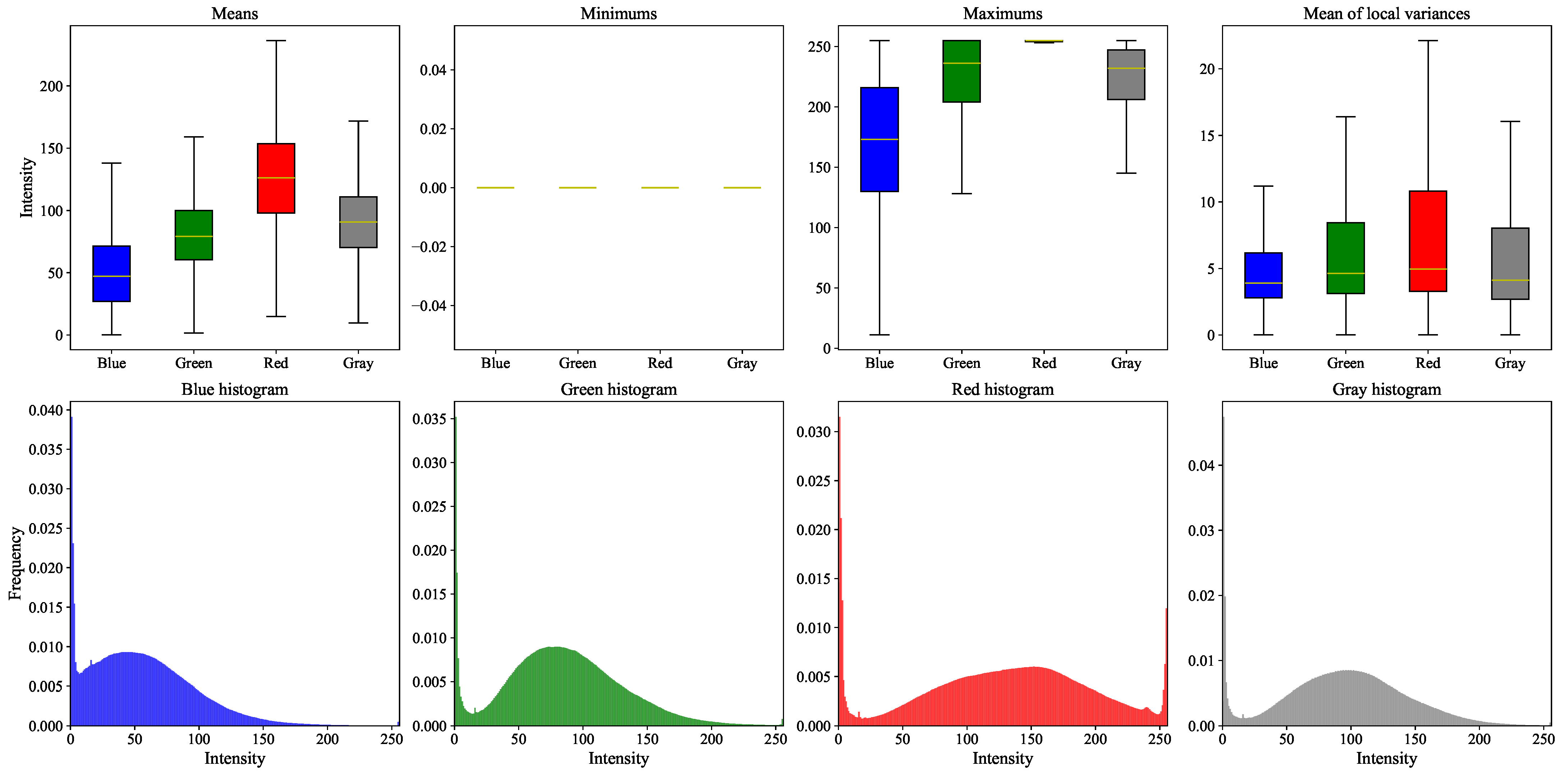

Color Analysis

- AGAR300: NR

- APTOS: NR

- BRSET: Canon CR2 camera, Nikon NF5050 retinal camera

- CHASE DB1: Nidek NM-200-D fundus camera. The images were captured at a 30-degree field of view with a resolution of 1280 × 960 pixels

- DDR: TRC NW48, Nikon D5200, Canon CR 2 cameras

- DiaRetDB0: 50-degree field-of-view digital fundus camera

- DiaRetDB1: 50-degree field-of-view digital fundus camera

- DR HAGIS: TRC-NW6s (Topcon), TRC-NW8 (Topcon), or CR-DGi fundus camera (Canon)

- DRiDB: Zeiss VISUCAM 200 fundus camera at a 45-degree field of view

- DRIVE: Canon CR5 non-mydriatic 3CCD camera with a 45-degree field of view (FOV). Each image was captured using 8 bits per color plane at 768 × 584 pixels.

- E-ophtha: NR

- Eye PACS: Centervue DRS (Centervue, Italy), Optovue iCam (Optovue, USA), Canon CR1/DGi/CR2 (Canon), and Topcon NW (Topcon)

- F-DCVP: NR

- HEI-MED: Visucam PRO fundus camera (ZEISS, Germany)

- HRF: CF-60UVi camera (Canon)

- IDRID: VX-10 alpha digital fundus camera (Kowa, USA)

- JSIEC: NR

- Messidor: Topcon TRC NW6 non-mydriatic fundus camera with a 45-degree field of view using 8 bits per color channel and a resolution of 1440 × 960, 2240 × 1488 or 2304 × 1536 pixels.

- Messidor2: Topcon TRC NW6 non-mydriatic fundus camera with a 45-degree field of view using 8 bits per color channel and a resolution of 1440 × 960, 2240 × 1488 or 2304 × 1536 pixels.

- Retina: NR

- ROC: TRC-NW100 (Topcon), TRC-NW200 (Topcon), or CR5-45NM (Canon)

- STARE: TRV-50 fundus camera (Topcon)

- Mean;

- Minimum;

- Maximum;

- Mean of the local variances (computed using a 5 × 5 window);

- Histograms (blue, green, red, and grayscale).
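The per-channel features listed above can be computed with NumPy roughly as follows. This is a minimal sketch, not the authors' implementation. It assumes an 8-bit H × W × 3 image in blue-green-red channel order (the order OpenCV's `cv2.imread` returns), computes statistics over all pixels including the dark background around the field of view, evaluates the 5 × 5 local variance only at positions where the full window fits, and uses ITU-R BT.601 luma weights for the grayscale channel; none of these choices is specified in the text. The helper names are hypothetical.

```python
import numpy as np


def box_mean(x: np.ndarray, k: int) -> np.ndarray:
    """Mean over every full k x k window, computed with an integral image (no padding)."""
    s = np.pad(x, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    window_sums = s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]
    return window_sums / (k * k)


def channel_features(channel: np.ndarray, window: int = 5, bins: int = 256) -> dict:
    """Mean, min, max, mean local variance and normalized histogram of one 8-bit channel."""
    x = channel.astype(np.float64)
    # Local variance = E[x^2] - (E[x])^2 in each window; clip tiny negative rounding errors.
    local_var = np.clip(box_mean(x * x, window) - box_mean(x, window) ** 2, 0.0, None)
    hist, _ = np.histogram(channel, bins=bins, range=(0, 256))
    return {
        "mean": float(x.mean()),
        "min": float(x.min()),
        "max": float(x.max()),
        "mean_local_variance": float(local_var.mean()),
        "histogram": hist / hist.sum(),  # normalized so the bins sum to 1
    }


def image_features(bgr: np.ndarray) -> dict:
    """Features for the blue, green, red and grayscale channels of an H x W x 3 uint8 image."""
    b, g, r = (bgr[..., i].astype(np.float64) for i in range(3))
    # ITU-R BT.601 luma weights (assumption: the grayscale conversion is not stated).
    gray = np.clip(np.rint(0.299 * r + 0.587 * g + 0.114 * b), 0, 255).astype(np.uint8)
    channels = {"blue": bgr[..., 0], "green": bgr[..., 1], "red": bgr[..., 2], "gray": gray}
    return {name: channel_features(ch) for name, ch in channels.items()}


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)  # stand-in for a fundus image
print(image_features(image)["green"]["mean_local_variance"])
```

The integral-image formulation keeps memory proportional to the image size, which matters for the multi-megapixel datasets; averaging the `histogram` entries over all images of a dataset then gives a per-dataset mean normalized histogram.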

- The top row shows box plots for the first four statistical features listed above;

- The bottom row presents the normalized histograms, computed as the average of all image histograms within each dataset.

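- Manhattan distance (L1 norm):

$$d_{L_1}(H_1, H_2) = \sum_{I} \left| H_1(I) - H_2(I) \right|$$

- Bhattacharyya distance (if histograms are not normalized):

$$d_B(H_1, H_2) = \sqrt{1 - \frac{1}{\sqrt{\bar{H}_1 \bar{H}_2 N^2}} \sum_{I} \sqrt{H_1(I)\, H_2(I)}}$$

where $\bar{H}_k = \frac{1}{N} \sum_{J} H_k(J)$ is the mean bin value of histogram $H_k$ and $N$ is the number of bins.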
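The Manhattan and Bhattacharyya distances named above can be sketched in NumPy as follows. For the Bhattacharyya distance we assume the non-normalized form used by OpenCV's `HISTCMP_BHATTACHARYYA` comparison, d = sqrt(1 - sum(sqrt(H1(I) * H2(I))) / sqrt(mean(H1) * mean(H2) * N^2)) with N bins; when both histograms already sum to 1, this reduces to sqrt(1 - sum(sqrt(H1(I) * H2(I)))). The function names are our own.

```python
import numpy as np


def manhattan_distance(h1, h2) -> float:
    """L1 norm of the bin-wise difference between two histograms."""
    return float(np.abs(np.asarray(h1, float) - np.asarray(h2, float)).sum())


def bhattacharyya_distance(h1, h2) -> float:
    """Bhattacharyya distance for histograms that need not sum to 1.

    d = sqrt(1 - sum(sqrt(h1 * h2)) / sqrt(mean(h1) * mean(h2) * N**2)),
    assumed here to match OpenCV's HISTCMP_BHATTACHARYYA formula.
    """
    h1 = np.asarray(h1, float)
    h2 = np.asarray(h2, float)
    n = h1.size
    norm = np.sqrt(h1.mean() * h2.mean() * n * n)
    coeff = np.sqrt(h1 * h2).sum() / norm
    # Rounding can push coeff slightly above 1; clamp so the square root stays real.
    return float(np.sqrt(max(0.0, 1.0 - coeff)))


a = np.array([4, 0, 2, 2])
b = np.array([2, 2, 2, 2])
print(manhattan_distance(a, b), bhattacharyya_distance(a, b))
```

Because the Bhattacharyya term divides by the histogram means, multiplying either histogram by a constant leaves the distance unchanged, so raw pixel counts from images of different sizes can be compared directly; the L1 distance has no such invariance and is best applied to normalized histograms.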

5. Conclusions and Discussion

Recommendations

- For segmentation tasks: Favor datasets with pixel-level annotations and a central position in the PCA space (e.g., DRiDB and IDRID for lesion segmentation, HRF Segmentation and DRIVE for blood vessel segmentation). Use caution when incorporating outlier datasets such as AGAR300, whose distinct characteristics may hinder generalization.

- For classification: Datasets such as APTOS and EyePACS, which lie near the PCA centroid, may provide a good balance of feature diversity and representativeness for model training. They also use the ICDR severity scale, and all five severity classes are represented, although not in equal proportions.

- For dataset selection or benchmarking: Central datasets such as Messidor and EyePACS can serve as robust baselines, whereas outliers such as HEI-MED or DiaRetDB0 can be used to evaluate performance under less-controlled acquisition settings.
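The recommendations above rank datasets by their position relative to the centroid of a PCA projection. A minimal NumPy sketch of that ranking follows, assuming one feature vector per dataset (for instance, concatenated mean color histograms). The helper names, dataset names and random features are placeholders; the paper's exact feature vector and number of components are not reproduced here.

```python
import numpy as np


def pca_project(features: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Project the rows of `features` onto their first principal components (via SVD)."""
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def rank_by_centrality(names, features, n_components=2):
    """Sort datasets by Euclidean distance to the centroid in PCA space (closest first)."""
    coords = pca_project(np.asarray(features, float), n_components)
    dist = np.linalg.norm(coords - coords.mean(axis=0), axis=1)
    return sorted(zip(names, dist), key=lambda item: item[1])


rng = np.random.default_rng(0)
names = ["dataset_A", "dataset_B", "dataset_C", "dataset_D"]  # hypothetical
features = rng.random((4, 1024))  # e.g., four 256-bin histograms concatenated per dataset
for name, d in rank_by_centrality(names, features):
    print(f"{name}: {d:.3f}")
```

Since the projection is computed on centered data, the centroid lies at the origin, and centrality is relative to the datasets included: adding or removing one dataset can shift which others appear central or outlying.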

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| DR | Diabetic Retinopathy |
| NPDR | Non-Proliferative Diabetic Retinopathy |
| NR | Not Reported |
| PDR | Proliferative Diabetic Retinopathy |
| PCA | Principal Component Analysis |
| ICDR | International Clinical Diabetic Retinopathy |
| AGAR | Annotated Germs for Automated Recognition |
| APTOS | Asia Pacific Tele-Ophthalmology Society |
| BRSET | Brazilian Multilabel Ophthalmological Dataset |
| DDR | Dataset for Diabetic Retinopathy |
| DiaRetDB | Diabetic Retinopathy DataBase |
| DR HAGIS | Diabetic Retinopathy, Hypertension, Age-related macular degeneration and Glaucoma Images |
| DRiDB | Diabetic Retinopathy image DataBase |
| DRIVE | Digital Retinal Images for Vessel Extraction |
| Eye PACS | Eye Picture Archive Communication System |
| F-DCVP | Fundus-Data Computer Vision Project |
| HEI-MED | Hamilton Eye Institute Macular Edema Dataset |
| HRF | High-Resolution Fundus Segmentation |
| IDRID | Indian Diabetic Retinopathy Image Dataset |
| JSIEC | Joint Shantou International Eye Center |
| ROC | Retinopathy Online Challenge |
| STARE | Structured Analysis of the Retina |

References

1. American Diabetes Association. Diagnosis and classification of diabetes mellitus. Diabetes Care 2010, 33, S62–S69, Erratum in Diabetes Care 2010, 33, e57.

2. Brownlee, M. Biochemistry and molecular cell biology of diabetic complications. Nature 2001, 414, 813–820.

3. Yau, J.; Rogers, S.; Kawasaki, R.; Lamoureux, E.; Kowalski, J.; Bek, T.; Chen, S.; Dekker, J.; Fletcher, A.; Grauslund, J.; et al. Global prevalence and major risk factors of diabetic retinopathy. Diabetes Care 2012, 35, 556–564.

4. Ruta, L.; Magliano, D.; Lemesurier, R.; Taylor, H.; Zimmet, P.; Shaw, J. Prevalence of diabetic retinopathy in Type 2 diabetes in developing and developed countries. Diabet. Med. 2013, 30, 387–398.

5. Cheung, N.; Mitchell, P.; Wong, T.Y. Diabetic Retinopathy. Lancet 2010, 376, 124–136.

6. Wang, X.; Wang, W.; Ren, H.; Li, X.; Wen, Y. Prediction and analysis of risk factors for diabetic retinopathy based on machine learning and interpretable models. Heliyon 2024, 10, e29497.

7. Yang, Z.; Tan, T.; Shao, Y.; Wong, T.; Li, X. Classification of diabetic retinopathy: Past, present and future. Front. Endocrinol. 2022, 13, 1079217.

8. American Academy of Ophthalmology. Preferred Practice Pattern®: Diabetic Retinopathy; American Academy of Ophthalmology: San Francisco, CA, USA, 2022.

9. Wong, T.Y.; Sun, J.; Kawasaki, R.; Ruamviboonsuk, P.; Gupta, N.; Lansingh, V.C.; Maia, M.; Mathenge, W.; Moreker, M.; Muqit, M.M.; et al. Diabetic Retinopathy. Nat. Rev. Dis. Prim. 2016, 2, 16012.

10. Goldbaum, M. Structured Analysis of the Retina (STARE). Master’s Thesis, University of California, San Diego, CA, USA, 2003. Available online: https://cecas.clemson.edu/~ahoover/stare/ (accessed on 30 May 2024).

11. Dugas, E.; Jorge, J.; Cukierski, W. Diabetic Retinopathy Detection. Kaggle. 2015. Available online: https://kaggle.com/competitions/diabetic-retinopathy-detection (accessed on 22 May 2025).

12. Kunikata, H.; Aizawa, N.; Fuse, N.; Abe, T.; Nakazawa, T. 25-Gauge Microincision Vitrectomy to Treat Vitreoretinal Disease in Glaucomatous Eyes after Trabeculectomy. J. Ophthalmol. 2014, 2014, 306814.

13. Prathibha, S. Advancing diabetic retinopathy diagnosis with fundus imaging: A comprehensive survey of computer-aided detection, grading and classification methods. Glob. Transit. 2024, 6, 93–112.

14. Zhou, Y.; Wang, B.; Huang, L.; Cui, S.; Shao, L. A benchmark for studying diabetic retinopathy: Segmentation, grading, and transferability. IEEE Trans. Med. Imaging 2020, 40, 818–828.

15. Quellec, G.; Charriere, K.; Boudi, Y.; Cochener, B.; Lamard, M. Deep image mining for diabetic retinopathy screening. Med. Image Anal. 2017, 39, 178–193.

16. Playout, C.; Cheriet, F. Cross-dataset generalization for retinal lesions segmentation. arXiv 2024, arXiv:2405.08329.

17. Fadugba, J.; Köhler, P.; Koch, L.; Manescu, P.; Berens, P. Benchmarking Retinal Blood Vessel Segmentation Models for Cross-Dataset and Cross-Disease Generalization. arXiv 2024, arXiv:2406.14994.

18. Wei, H.; Shi, P.; Miao, J.; Zhang, M.; Bai, G.; Qiu, J.; Liu, F.; Yuan, W. Caudr: A causality-inspired domain generalization framework for fundus-based diabetic retinopathy grading. Comput. Biol. Med. 2024, 175, 108459.

19. Le Monde. More than 1.3 Billion People Will Have Diabetes by 2050. 2023. Available online: https://www.lemonde.fr/en/environment/article/2023/06/24/more-than-1-3-billion-people-will-have-diabetes-by-2050_6036228_114.html (accessed on 3 July 2025).

20. Gollogly, H.E.; Hodge, D.O.; St Sauver, J.L.; Erie, J.C. Increasing incidence of cataract surgery: Population-based study. J. Cataract. Refract. Surg. 2013, 39, 1383–1389.

21. Abràmoff, M.D.; Garvin, M.K.; Sonka, M. Retinal Imaging and Image Analysis. IEEE Rev. Biomed. Eng. 2010, 3, 169–208.

22. International Agency for the Prevention of Blindness (IAPB). IAPB Technical Specifications; Technical Report, Final Version 08/04/2021; International Agency for the Prevention of Blindness: London, UK, 2021.

23. Ghasemi Falavarjani, K.; Wang, K.; Khadamy, J.; Sadda, S.R. Ultra-wide-field imaging in diabetic retinopathy; an overview. J. Curr. Ophthalmol. 2016, 28, 57–60.

24. Thomas, R.L. Delaying and preventing diabetic retinopathy. Pract. Diabetes 2021, 38, 31–34.

25. Andrade-Miranda, G.; Jaouen, V.; Tankyevych, O.; Cheze Le Rest, C.; Visvikis, D.; Conze, P.H. Multi-modal medical Transformers: A meta-analysis for medical image segmentation in oncology. Comput. Med. Imaging Graph. 2023, 110, 102308.

26. Andrade-Miranda, G.; Vega, P.S.; Taguelmimt, K.; Dang, H.P.; Visvikis, D.; Bert, J. Exploring transformer reliability in clinically significant prostate cancer segmentation: A comprehensive in-depth investigation. Comput. Med. Imaging Graph. 2024, 118, 102459.

27. Singh, N.; Tripathi, R. Automated Early Detection of Diabetic Retinopathy Using Image Analysis Techniques. Int. J. Comput. Appl. 2010, 8, 18–23.

28. Porwal, P.; Pachade, S.; Kamble, R.; Kokare, M.; Deshmukh, G.; Sahasrabuddhe, V.; Meriaudeau, F. Indian Diabetic Retinopathy Image Dataset (IDRiD). IEEE Dataport 2018.

29. Wilkinson, C.; Ferris, F.L.; Klein, R.E.; Lee, P.P.; Agardh, C.D.; Davis, M.; Dills, D.; Kampik, A.; Pararajasegaram, R.; Verdaguer, J.T. Proposed international clinical diabetic retinopathy and diabetic macular edema disease severity scales. Ophthalmology 2003, 110, 1677–1682.

30. Derwin, D.J.; Selvi, S.T.; Singh, O.J.; Shan, B.P. A novel automated system of discriminating Microaneurysms in fundus images. Biomed. Signal Process. Control 2020, 58, 101839.

31. Karthik, M.; Dane, S. APTOS 2019 Blindness Detection. Kaggle. 2019. Available online: https://kaggle.com/competitions/aptos2019-blindness-detection (accessed on 12 December 2024).

32. Nakayama, L.F.; Goncalves, M.; Zago Ribeiro, L.; Santos, H.; Ferraz, D.; Malerbi, F.; Celi, L.A.; Regatieri, C. A Brazilian Multilabel Ophthalmological Dataset (BRSET) (Version 1.0.1); RRID:SCR_007345; PhysioNet: Cambridge, MA, USA, 2024.

33. Fraz, M.M.; Remagnino, P.; Hoppe, A.; Uyyanonvara, B.; Rudnicka, A.R.; Owen, C.G.; Barman, S.A. An Ensemble Classification-Based Approach Applied to Retinal Blood Vessel Segmentation. IEEE Trans. Biomed. Eng. 2012, 59, 2538–2548.

34. Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic Assessment of Deep Learning Algorithms for Diabetic Retinopathy Screening. Inf. Sci. 2019, 501, 511–522.

35. Kauppi, T.; Kalesnykiene, V.; Kamarainen, J.K.; Lensu, L.; Sorri, I.; Raninen, A.; Voutilainen, R.; Pietilä, J.; Kälviäinen, H.; Uusitalo, H. Diabetic Retinopathy Database—DIARETDB0; Technical Report; Department of Ophthalmology, Faculty of Medicine, University of Kuopio: Kuopio, Finland, 2018.

36. Kauppi, T.; Kalesnykiene, V.; Kamarainen, J.K.; Lensu, L.; Sorri, I.; Raninen, A.; Voutilainen, R.; Pietilä, J.; Kälviäinen, H.; Uusitalo, H. Diabetic Retinopathy Database—DIARETDB1; Technical Report; Department of Ophthalmology, Faculty of Medicine, University of Kuopio: Kuopio, Finland, 2018.

37. Holm, S.; Russell, G.; Nourrit, V.; McLoughlin, N. DR HAGIS—A fundus image database for the automatic extraction of retinal surface vessels from diabetic patients. J. Med. Imaging 2017, 4, 014503.

38. Prentašić, P.; Lončarić, S.; Vatavuk, Z.; Benčić, G.; Subašić, M.; Petković, T.; Dujmović, L.; Malenica-Ravlić, M.; Budimlija, N.; Tadić, R. Diabetic retinopathy image database (DRiDB): A new database for diabetic retinopathy screening programs research. In Proceedings of the 2013 8th International Symposium on Image and Signal Processing and Analysis (ISPA), Trieste, Italy, 4–6 September 2013; pp. 711–716.

39. Grand Challenge. DRIVE: Digital Retinal Images for Vessel Extraction. 2017. Available online: https://drive.grand-challenge.org/ (accessed on 30 May 2024).

40. Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gainville, B.; Deb-Joardar, H.; Massin, P.; Quellec, G.; et al. TeleOphta: Machine learning and image processing methods for teleophthalmology. IRBM 2013, 34, 196–203.

41. FundusData. Fundus-Data Dataset. 2022. Available online: https://universe.roboflow.com/fundusdata/fundus-data (accessed on 2 June 2025).

42. Giancardo, L.; Meriaudeau, F.; Karnowski, T.P.; Li, Y.; Garg, S.; Tobin, K.W., Jr.; Chaum, E. Exudate-based diabetic macular edema detection in fundus images using publicly available datasets. Med. Image Anal. 2012, 16, 216–226.

43. Budai, A.; Odstrcilik, J.; Kondermann, D.; Hornegger, J.; Michelson, G. Robust Vessel Segmentation in Fundus Images. Int. J. Biomed. Imaging 2013, 2013, 154860.

44. Cen, L.P.; Ji, J.; Lin, J.W.; Ju, S.T.; Lin, H.J.; Li, T.P.; Wang, Y.; Yang, J.F.; Liu, Y.F.; Tan, S.; et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat. Commun. 2021, 12, 4828.

45. Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gainville, B.; Massin, P.; Deb-Joardar, H.; Danno, B.; et al. Feedback on a publicly distributed database: The Messidor database. Image Anal. Stereol. 2014, 33, 231–234.

46. Abràmoff, M.D.; Folk, J.C.; Han, D.P.; Walker, J.D.; Williams, D.F.; Russell, S.R.; Massin, P.; Cochener, B.; Gain, P.; Tang, L.; et al. Automated analysis of retinal images for detection of referable diabetic retinopathy. JAMA Ophthalmol. 2013, 131, 351–357.

47. Jr2ngb. Cataract Dataset. 2023. Available online: https://www.kaggle.com/datasets/jr2ngb/cataractdataset (accessed on 30 May 2024).

48. Retinopathy Online Challenge. Retinopathy Online Challenge (ROC) Dataset. 2008. Available online: https://roc.healthcare.uiowa.edu/ (accessed on 30 May 2024).

49. Pourjavan, S.; Bourguignon, G.-H.; Marinescu, C.; Otjacques, L.; Boschi, A. Evaluating the Influence of Clinical Data on Inter-Observer Variability in Fundus Photograph Assessment. Clin. Ophthalmol. 2024, 18, 3999–4009.

50. Du, K.; Shah, S.; Bollepalli, S.C.; Ibrahim, M.N.; Gadari, A.; Sutharahan, S.; Sahel, J.; Chhablani, J.; Vupparaboina, K.K. Inter-rater reliability in labeling quality and pathological features of retinal OCT scans: A customized annotation software approach. PLoS ONE 2024, 19, e0314707.

51. Srinivasan, S.; Suresh, S.; Chendilnathan, C.; Prakash V, J.; Sivaprasad, S.; Rajalakshmi, R.; Anjana, R.M.; Malik, R.A.; Kulothungan, V.; Raman, R.; et al. Inter-observer agreement in grading severity of diabetic retinopathy in ultra-wide-field fundus photographs. Eye 2023, 37, 1231–1235.

52. Abdulrahman, H.; Magnier, B.; Montesinos, P. From contours to ground truth: How to evaluate edge detectors by filtering. J. WSCG 2017, 25, 133–142.

53. Teoh, C.S.; Wong, K.H.; Xiao, D.; Wong, H.C.; Zhao, P.; Chan, H.W.; Yuen, Y.S.; Naing, T.; Yogesan, K.; Koh, V.T.C. Variability in Grading Diabetic Retinopathy Using Retinal Photography and Its Comparison with an Automated Deep Learning Diabetic Retinopathy Screening Software. Healthcare 2023, 11, 1697.

54. Dumitrascu, O.M.; Li, X.; Zhu, W.; Woodruff, B.K.; Nikolova, S.; Sobczak, J.; Youssef, A.; Saxena, S.; Andreev, J.; Caselli, R.J.; et al. Color Fundus Photography and Deep Learning Applications in Alzheimer Disease. Mayo Clin. Proc. Digit. Health 2024, 2, 548–558.

55. Comaniciu, D.; Ramesh, V.; Meer, P. Kernel-based object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 564–577.

56. Borg, I.; Groenen, P.J.F. Modern Multidimensional Scaling: Theory and Applications; Springer: New York, NY, USA, 1997.

| Name | #Images | Resolution (pixels) | Format | Geographical Origin | Year of Publication | DR and Other Diseases | Annotations |
|---|---|---|---|---|---|---|---|
| AGAR300 | 28 | from 1020 × 1225 to 3696 × 2448 | JPEG | India | 2020 |  | No |
| APTOS | 5590 | from 474 × 358 to 4288 × 2848 | JPEG | India | 2019 |  | No |
| BRSET | 16,266 | from 951 × 874 to 2420 × 1880 | PNG, JPEG | Brazil | 2024 |  | Yes |
| CHASE DB1 | 84 | 999 × 960 | JPEG, PNG | UK | 2012 |  | Yes |
| DDR | 12,524 | from 512 × 512 to 5184 × 3456 | JPEG | China | 2019 |  | No |
| DiaRetDB0 | 130 | 1500 × 1152 | PNG | Finland | 2018 |  | Yes |
| DiaRetDB1 | 89 | 1500 × 1152 | PNG | Finland | 2018 |  | Yes |
| DR HAGIS | 39 | from 2816 × 1880 to 4752 × 3168 | JPEG, PNG | UK | 2017 | ✓ | Yes |
| DRiDB | 50 | 720 × 576 | BMP | Croatia | 2013 |  | Yes |
| DRIVE | 40 | 768 × 584 | JPEG | Netherlands | 2013 |  | Yes |
| E-ophtha | 463 | from 1440 × 960 to 2544 × 1696 | JPEG | France | 2013 |  | Yes |
| Eye PACS | 88,702 | 640 × 640 | JPEG | USA | 2015 |  | Yes |
| F-DCVP | 181 | 640 × 640 | JPEG | ? | 2022 |  | No |
| HEI-MED | 111 | 4752 × 3168 | JPEG | USA | 2018 |  | Yes |
| HRF Segmentation | 45 | 3504 × 2336 | JPEG | Germany and Czech Republic | 2010 | ✓ | Yes |
| IDRID | 516 | 4288 × 2848 | JPEG | India | 2018 |  | Yes |
| JSIEC | 1000 | from 768 × 576 to 3152 × 3000 | JPEG | China | 2021 | ✓ | No |
| Messidor | 1187 | 2240 × 1488 | TIFF | France | 2004 |  | Yes |
| Messidor2 | 1748 | 2240 × 1488 | TIFF | France | 2014 |  | No |
| Retina | 601 | from 1848 × 1224 to 2592 × 1728 | PNG | South Korea | 2016 | ✓ | No |
| ROC | 100 | from 768 × 576 to 1394 × 1391 | JPEG | Netherlands | 2007 |  | Yes |
| STARE | 397 | 700 × 605 | PPM | USA | before 2000 | ✓ | Yes |

| Name | Labels | Blood Vessels | Lesions | Annotation Format | ||

|---|---|---|---|---|---|---|

| Exudates | Microaneurysms | Hemorrhages | ||||

| AGAR300 | ✓ | None | ||||

| APTOS | ✓ | None | ||||

| BRSET | ✓ | ✓ | None | |||

| CHASE DB1 | ✓ | PNG | ||||

| DDR | ✓ | None | ||||

| DiaRetDB0 | ✓ | ✓ | ✓ | PNG | ||

| DiaRetDB1 | ✓ | ✓ | ✓ | PNG | ||

| DR HAGIS | ✓ | PNG | ||||

| DRiDB | ✓ | ✓ | ✓ | ✓ | BMP | |

| DRIVE | ✓ | GIF | ||||

| E-ophtha | ✓ | ✓ | PNG | |||

| Eye PACS | ✓ | None | ||||

| HEI-MED | ✓ | ✓ | Matlab files | |||

| HRF Segmentation | ✓ | TIFF | ||||

| IDRID | ✓ | ✓ | ✓ | ✓ | TIFF | |

| JSIEC | ✓ | None | ||||

| Messidor | ✓ | None | ||||

| ROC | ✓ | XML | ||||

| STARE | ✓ | ✓ | ✓ | ✓ | ✓ | TXT |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Conquer, V.; Lambolais, T.; Andrade-Miranda, G.; Magnier, B. Comprehensive Review of Open-Source Fundus Image Databases for Diabetic Retinopathy Diagnosis. Sensors 2025, 25, 5658. https://doi.org/10.3390/s25185658
