Abstract

Federated learning is increasingly recognized as a viable solution for deploying distributed intelligence across resource-constrained platforms, including smartphones, wireless sensor networks, and smart home devices within the broader Internet of Things ecosystem. However, traditional federated learning approaches face serious challenges in resource-constrained settings due to high processing demands, substantial memory requirements, and high communication overhead, rendering them impractical for battery-powered IoT environments. These factors increase battery consumption and, consequently, decrease the operational longevity of the network. This study proposes a streamlined, single-shot federated learning approach that minimizes communication overhead, enhances energy efficiency, and thereby extends network lifetime. The proposed approach leverages the k-nearest neighbors (k-NN) algorithm for edge-level pattern recognition and utilizes majority voting at the server/base station to reach global pattern recognition consensus, thereby eliminating the need for data transmissions across multiple communication rounds to achieve classification accuracy. The results indicate that the proposed approach maintains competitive classification accuracy performance while significantly reducing the required number of communication rounds.

1. Introduction

Over the past few years, federated learning has become a widely adopted paradigm for distributed, collaborative machine learning, particularly suitable for privacy-sensitive applications [1,2,3,4,5]. Originally introduced by Google, federated learning shifts the focus from centralized data processing to on-device computation [6]. Devices collaboratively construct a global model by communicating locally computed model updates instead of raw sensor data, thereby preserving user privacy. This process iteratively improves the global model while keeping raw data local, reducing the need to transmit large amounts of sensor data across the network to the server or base station [1,2,7,8]. The key characteristics of federated learning include scalability, device and statistical heterogeneity, and data privacy [1,9].

The performance of federated learning is often comparable to—and in some cases surpasses—that of traditional centralized machine learning approaches [7]. Comparable principles have also been observed in graph neural network research [10,11,12,13], where distributed learning is employed to optimize communication efficiency and maintain data locality. Traditional machine learning approaches require substantial computational power, memory storage, and large training datasets [2,14]. Moreover, conventional CPUs or GPUs are generally unavailable in IoT devices and are unsuitable for untethered and battery-powered deployments [2]. Crucially, most machine learning methods rely on centralized processing, necessitating that both data and models reside in a single location [1,14]. Federated learning algorithms enable multiple nodes to collaboratively train a global model in a fully distributed manner without communicating raw data to a central location for processing [2,15].

Federated learning has been proposed as an effective solution for distributed information processing in the Internet of Things (IoT). The IoT represents a transformative, ubiquitous paradigm that has been seamlessly integrated into the fabric of everyday life [16,17,18,19,20]. Initially, the term IoT was associated with radio frequency identification (RFID) tags [21]. However, the current IoT paradigm encompasses a diverse range of interconnected devices, including near-field communication (NFC) devices, sensors, actuators, wireless sensor networks (WSNs), and smartphones. IoT devices are now pervasive in daily life and influence decision-making, behavior, and lifestyle [21]. The application domains of the IoT are vast and continually expanding across various domains and sectors [21,22].

This study focuses on WSNs and wireless IoT (WIoT) devices, both of which form important subsets of the broader IoT domain. These devices act as bridges between the physical and digital environments by acquiring sensor data and transmitting it to other IoT nodes or end-users [16,17,18,23,24,25,26,27,28,29,30,31,32]. Typically constrained by limited processing power, memory capacity, and battery life [21], WSNs and WIoT devices are often deployed for remote sensing in locations where battery replacement or physical maintenance is impractical or infeasible. In such scenarios, energy efficiency becomes a critical design objective, directly affecting network lifespan and operational reliability [33,34].

To ensure reliable operation, IoT applications must optimize processing power, memory storage, and communication overhead to conserve the limited battery energy of the IoT devices [16]. Sensor data collected from IoT environments require local storage, processing, and communication; however, these processes are constrained by limited device resources [24]. Furthermore, IoT network communication is often highly dynamic, less reliable, and slower than that of traditional infrastructure, making the assumption of continuous node availability unrealistic in many deployment scenarios [1]. Beyond device-level challenges, large-scale IoT deployments exert heavy demands on communication networks and end-systems such as base stations and servers.

Technological advances have driven the rise of federated learning-enabled IoT edge computing, offering efficient solutions and innovative applications [35]. This synergy enables diverse applications: in healthcare, federated learning–IoT integration accelerates patient diagnosis and treatment while preserving data privacy [36]. Specifically for medical IoT, federated learning enhances both privacy and classification accuracy in applications such as chronic disease prediction [37,38]. Furthermore, federated learning is increasingly applied in smart city domains such as traffic management and smart vehicles, where federated learning enables collaborative solutions including an improved recognition of unlabeled traffic signs among vehicles and a more efficient identification of charging stations [39,40].

Recent research has explored lightweight and secure distributed learning paradigms that align operational constraints with the approach proposed in this study. For example, the combinatorial optimization graph neural network (CO-GNN) employs graph neural networks (GNNs) to jointly optimize beamforming, power allocation, and intelligent reflecting surface (IRS) phase shifts in non-orthogonal multiple access (NOMA) systems, achieving secure, resource-efficient transmission without explicit channel state information (CSI) estimation [41]. Similarly, the work in [42] introduced a safety-optimal, fault-tolerant control method for unknown nonlinear systems with actuator faults and asymmetric input constraints, ensuring both safety and optimality. This approach integrates control barrier functions, neural network-based system identification, and an adaptive critic scheme to guarantee stability and efficiency. Furthermore, the authors in [43] proposed a collaboration-based node selection (CNS) approach that enhances wireless security by combining relay selection with friendly interference, thereby improving secrecy and reliability through selection combining (SC) and maximal ratio combining (MRC) strategies, with performance validated through both theoretical analysis and simulation.

Despite extensive research on federated learning and the IoT, achieving model convergence and acceptable accuracy often requires a large number of communication rounds, during which local model updates from active network nodes are communicated throughout the network and aggregated at a central server or base station. These iterative communication rounds introduce substantial communication overhead and impose significant energy demands on participating nodes, yet the implications of this overhead and energy consumption remain underexplored in the context of resource-constrained IoT environments. These limitations underscore the need for energy-efficient strategies that improve federated learning performance and extend the lifetime of the network. The main contributions of this paper are as follows:

- Novel Federated Learning Approach: A federated learning approach is proposed, namely the tiny federated nearest neighbors (TFNN) approach, tailored for IoT devices and networks, that significantly reduces communication overhead, computational load, and energy consumption, all of which are critical considerations in resource-constrained environments.

- Single-shot Communication: This study leverages the k-nearest neighbors (k-NN) algorithm to perform collaborative pattern recognition in a single communication round, thereby minimizing communication overhead.

- Performance Benchmarking: TFNN is benchmarked against the classical k-NN and federated learning baseline algorithms, demonstrating that TFNN achieves competitive accuracy while requiring only a single communication round, in contrast to the multiple rounds typically needed by conventional federated learning approaches.

The rest of the paper is organized as follows: Section 2 provides an overview of related work on federated learning and k-NN. Section 3 describes the proposed TFNN approach in detail. Section 4 outlines the evaluation methodology, and Section 5 presents the simulation results and performance analysis. Finally, Section 6 concludes the paper by summarizing the key findings, discussing limitations, and proposing future research directions.

2. Related Work

This study explores the synergy between the k-NN algorithm and federated learning to enable distributed, collaborative pattern recognition in IoT systems. Before presenting the TFNN approach, we review the principles of k-NN and federated learning and examine how these algorithms interact in the context of distributed sensing.

2.1. k-Nearest Neighbors

The k-NN algorithm, originally introduced by Fix and Hodges [44,45], is a supervised non-parametric method widely employed in diverse classification problems. Its appeal stems from the absence of a dedicated training stage, its straightforward implementation, and its ability to handle multi-class problems. Instead of iterative parameter updates, the k-NN algorithm essentially memorizes the training dataset and applies distance metrics to classify new instances.

The k-NN algorithm, described by the pseudocode in Algorithm 1, employs a training set $D$ to perform pattern recognition. Each instance in $D$ consists of a pair $(x_i, y_i)$ that describes a pattern $x_i$ and its label $y_i$. For a given target sample $x$ (i.e., the event pattern whose label is to be predicted), the algorithm computes the distance between $x$ and each training pattern in $D$.

The distance metric can be defined using various methods, such as Euclidean distance, Manhattan distance, or other suitable distance measures. After computing the distance, the algorithm selects the k samples with the smallest distances. The parameter k specifies the number of nearest neighbors used to determine the label of the test sample.

Finally, the algorithm determines the majority label (the label that occurs most frequently) among the $k$ nearest samples, denoted $N$, by counting the occurrences of each label $y$ in $N$, as described in the following formula:

$$\mathrm{count}(y) = \sum_{(x_i, y_i) \in N} \mathbb{1}(y_i = y) \tag{1}$$

where $\mathbb{1}(\cdot)$ denotes the indicator function. The majority label is the label that appears most frequently among the $k$ nearest neighbors. The classified label $\hat{y}$ for $x$ is defined as follows:

$$\hat{y} = \arg\max_{y} \, \mathrm{count}(y) \tag{2}$$

Formula (2) states that the predicted label for the test pattern is the label that occurs most frequently among its $k$ nearest neighbors. In other words, it selects the label with the highest count, i.e., the majority winning label. The k-NN algorithm is outlined in the pseudocode shown in Algorithm 1.

Algorithm 1. k-NN algorithm.
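The k-NN procedure described in this subsection can be sketched in Python as follows. This is a minimal illustration rather than the author's listing: the function name `knn_classify`, the toy training set `D`, and the choice of Euclidean distance are assumptions made for the example.

```python
import math
from collections import Counter


def knn_classify(train, x, k):
    """Classify pattern x by the majority label among its k nearest training patterns.

    train: list of (pattern, label) pairs, i.e. the training set D.
    """
    # Distance from x to every stored pattern (Euclidean is assumed here;
    # Manhattan or another metric could be substituted).
    scored = [(math.dist(pattern, x), label) for pattern, label in train]
    # Keep the k pairs with the smallest distances (the neighbor set N).
    neighbors = sorted(scored, key=lambda item: item[0])[:k]
    # Count label occurrences among the neighbors and return the most frequent.
    counts = Counter(label for _, label in neighbors)
    return counts.most_common(1)[0][0]


# Hypothetical two-class toy data, for illustration only.
D = [
    ((0.0, 0.0), "a"),
    ((0.1, 0.2), "a"),
    ((0.2, 0.1), "a"),
    ((1.0, 1.0), "b"),
    ((0.9, 1.1), "b"),
]
print(knn_classify(D, (0.15, 0.1), k=3))
```

Sorting all distances costs more than a partial selection would, but it keeps the sketch short; ties between labels are resolved in favor of the label encountered first among the sorted neighbors.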

2.2. Federated Learning

The federated averaging (FedAvg) algorithm [8] is a widely used aggregation algorithm in federated learning, where in each communication round, a subset of devices independently trains local models on private data. Instead of sharing raw data, these devices upload their local models to a central server, which produces a new global model by averaging the updates. This process iterates until the model converges to a satisfactory performance level.

A federated learning scenario for an IoT network consists of $N$ nodes (IoT devices) and a centralized location for final processing, namely a server or base station. Federated learning can be mathematically formulated as a distributed optimization problem for the global loss function $F(w)$:

$$\min_{w} F(w) = \sum_{n=1}^{N} \frac{K_n}{K} F_n(w) \tag{3}$$

where $w$ represents the global model weights, $F_n(w)$ is the local loss on device $n$, $K$ is the total number of data points across all devices, and $K_n$ denotes the number of data points stored on device $n$.

As described earlier, FedAvg is based on averaging the results of local stochastic gradient descent (SGD) updates, as summarized in Algorithm 2 [8]. The server selects a subset of nodes to participate in each round and distributes the current global model to all selected nodes. Upon receiving the global model, each node updates its own local model by performing the SGD procedure. The nodes then transmit their updated local models to the server, which computes a weighted average of the received updates to produce the new global model [46].

Initially, the global model $w_0$ is randomly initialized. In each round $t$, the server randomly selects a subset of clients $S_t$ (determined by a fixed participation fraction) and distributes the current global model $w_t$ to all nodes in $S_t$. All selected nodes operate in parallel, updating their local models according to $w_{t+1}^{n} \leftarrow \mathrm{ClientUpdate}(n, w_t)$. Each node then computes its local gradient descent step and sends its updated local model to the server. The server aggregates these updates to generate the new global model $w_{t+1}$.

Algorithm 2. FedAvg algorithm.
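The round structure of FedAvg described above (client sampling, local SGD, weighted averaging) can be sketched as follows. This is an illustrative sketch only: it uses a hypothetical one-parameter model $y = w x$ with a squared-error loss, and the names `client_update` and `fedavg`, the learning rate, and the toy client data are assumptions, not part of the original algorithm listing.

```python
import random


def client_update(w, data, lr=0.1, epochs=1):
    """Local SGD on squared error for the one-parameter model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2.0 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= lr * grad
    return w


def fedavg(clients, rounds, fraction=0.5, seed=0):
    """clients: list of local datasets, each a list of (x, y) pairs."""
    rng = random.Random(seed)
    w = rng.uniform(-1.0, 1.0)  # random initialization of the global model
    for _ in range(rounds):
        # The server samples a subset of clients for this round.
        n_selected = max(1, int(fraction * len(clients)))
        selected = rng.sample(range(len(clients)), n_selected)
        # Each selected client starts from the current global model.
        local = {n: client_update(w, clients[n]) for n in selected}
        # The server averages local models, weighted by local dataset size.
        total = sum(len(clients[n]) for n in selected)
        w = sum(len(clients[n]) * local[n] for n in selected) / total
    return w


# Hypothetical clients whose data all follow y = 3x.
clients = [
    [(0.5, 1.5), (1.0, 3.0)],
    [(0.2, 0.6), (0.8, 2.4), (0.4, 1.2)],
    [(1.0, 3.0)],
    [(0.6, 1.8), (0.9, 2.7)],
]
print(fedavg(clients, rounds=100))
```

Even in this toy setting the global weight only approaches the target value after many rounds, which is the communication pattern that single-shot schemes aim to avoid.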

Nonetheless, FedAvg incurs substantial communication costs, since a large number of communication rounds are often required to achieve convergence. Moreover, local updates can sometimes cause FedAvg to diverge from the optimal global solution [47,48,49,50,51]. FedAvg also faces scalability challenges and may struggle to adapt to the dynamic conditions of IoT networks. In addition, its reliance on the arithmetic mean for model aggregation makes it vulnerable to data corruption and outliers [46,52].

FedProx, proposed in [53], extends the standard federated learning paradigm by introducing a proximal term into the local optimization objective function. This additional term discourages excessive deviation from the global model, thereby helping stabilize training in scenarios where participating IoT devices differ in computational capacity, data volume, or distribution. While this approach enhances robustness in non-IID conditions, it comes with notable trade-offs. The need to compute the proximal term increases computational workload on each device, and model behavior can vary significantly depending on the chosen regularization coefficient. Moreover, FedProx still requires multiple client–server communication rounds before convergence and remains dependent on the accuracy and stability of the global model used as a reference.

SCAFFOLD is a widely used federated learning algorithm designed to mitigate client drift caused by non-IID data [47]. It employs control variates (auxiliary vectors exchanged between the server and clients) to guide local updates toward the global optimum. SCAFFOLD offers provable convergence guarantees and performs effectively in heterogeneous data settings with significantly fewer communication rounds than FedAvg. Nonetheless, SCAFFOLD still requires tens of communication rounds, each involving the exchange of model updates and gradients, and therefore still suffers from communication overhead and computational cost.

In general, it is important to note that achieving target accuracy in federated learning entails substantial communication overhead. Table 1 compares federated learning algorithms, namely FedAvg, FedProx, SCAFFOLD, and AdaptiveFL.

Table 1.

Comparison of federated learning algorithms FedAvg, FedProx, SCAFFOLD, and AdaptiveFL.

3. Tiny Federated Nearest Neighbors

This study proposes a federated learning nearest neighbor–based approach, referred to as TFNN, building upon the work presented in [55]. TFNN is designed to provide an energy-efficient federated learning solution for the IoT, explicitly addressing the memory, processing, and communication constraints of IoT nodes.

Algorithm 3 outlines the TFNN approach, in which IoT devices collaborate to transform their local inferences into a global event recognition outcome through decision fusion at the server or base station. In this approach, each IoT device stores training patterns locally and applies the k-NN algorithm to classify input test (event) patterns, as described in Algorithm 1.

In TFNN, the server first initializes the network by identifying the participating nodes. Each node independently executes a local inference process in parallel by invoking the Node_Update function, where $k$ represents the number of nearest neighbors used in the local k-NN classifier. The parameter $P$ is not transmitted; instead, each node is assumed to access the event pattern directly through local observation. The local prediction from each node is then returned to the server.

Finally, the base station performs global decision fusion by applying majority voting over the class labels received from IoT nodes to produce the final predicted label $\hat{y}$ for the event pattern $x$. Let $y_j$ denote the class label of the $j$-th nearest neighbor of $x$. The indicator function $\mathbb{1}(y_j = c)$ outputs 1 if $y_j = c$ and 0 otherwise.

$$v_c = \sum_{j=1}^{k} \mathbb{1}(y_j = c) \tag{4}$$

where $v_c$ denotes the number of neighbors labeled as class $c$.

$$\hat{y} = \arg\max_{c} \, v_c \tag{5}$$

Here, $\hat{y}$ represents the majority class label selected as the final prediction. The winning class is defined as the class receiving the majority of votes ($\max_{c} v_c$), as defined in Formulas (4) and (5).

Algorithm 3. Tiny federated nearest neighbors (TFNN) approach.

This federated learning design ensures that raw data is never exchanged, thereby preserving privacy and enhancing efficiency. Each node transmits only its winning class label, which the server aggregates through majority voting. This approach provides a lightweight, communication-efficient, and privacy-preserving federated learning mechanism well suited for low-power and resource-constrained IoT environments.
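The single-round flow described in this section (local k-NN inference at each node, then majority voting at the server) can be sketched as follows. This is a minimal sketch under stated assumptions: the names `node_update` and `tfnn_classify`, the Euclidean metric, and the toy node datasets are illustrative, and the same event pattern is passed to every node to stand in for each node observing the event locally.

```python
import math
from collections import Counter


def node_update(local_patterns, x, k):
    """Local k-NN inference at one node; only the winning class label is returned."""
    nearest = sorted(local_patterns, key=lambda item: math.dist(item[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def tfnn_classify(nodes, x, k):
    """Server-side decision fusion: one label per node, then unweighted majority voting."""
    # Single communication round: each node uploads exactly one label.
    votes = [node_update(patterns, x, k) for patterns in nodes]
    return Counter(votes).most_common(1)[0][0], votes


# Hypothetical local datasets for three nodes; the third holds mislabeled patterns.
nodes = [
    [((0.0, 0.0), 0), ((0.1, 0.1), 0), ((1.0, 1.0), 1)],
    [((0.2, 0.0), 0), ((0.9, 1.0), 1), ((1.0, 0.9), 1)],
    [((0.1, 0.0), 1), ((0.0, 0.1), 1), ((1.0, 1.0), 0)],
]
label, votes = tfnn_classify(nodes, (0.05, 0.05), k=1)
print(label, votes)
```

In this toy run the mislabeled node is outvoted by the other two, which illustrates why the fused decision can be more reliable than any single local prediction.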

4. Evaluation

Assessing federated learning algorithms for IoT deployments requires evaluating multiple performance factors. In this study, we employ analytical models and simulations to quantify these metrics for TFNN and baseline methods, focusing on their implications for energy consumption, communication overhead, and recognition performance.

4.1. Computational Complexity

In federated learning scenarios—particularly within IoT networks constrained by limited computational and power resources—local computational efficiency is critical. The TFNN approach addresses this challenge by eliminating iterative model training, thereby reducing processing demands. Instead, each IoT node performs lightweight local inference using a k-NN algorithm on a fixed-size pattern dataset.

The local computational cost at each node in TFNN is primarily determined by the k-NN classification step. For a local prototype set of size $n_i$ and input feature vectors of dimension $d$, each node locally computes the distance between the input event pattern and the $n_i$ stored patterns, resulting in a per-pattern complexity of

$$O(n_i \, d) \tag{6}$$

Compared to machine learning algorithms that require forward passes through large neural networks, this cost is both low and predictable, making TFNN well suited for microcontrollers and IoT-grade processors. Furthermore, TFNN avoids backpropagation, weight storage, and floating-point intensive operations common in many federated learning algorithms. This significantly reduces the memory footprint and energy consumption at each node—an essential advantage for battery-powered and intermittently connected devices. Overall, TFNN provides a favorable trade-off by enabling decentralized intelligence with minimal local computation, making it highly practical for resource-constrained federated edge environments.
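As a rough illustration of this cost, assume a node stores 500 patterns (the per-node setting used in the simulations) and that each MNIST image is flattened to $28 \times 28 = 784$ raw pixel features. Both the raw-pixel representation and the brute-force search are assumptions made here for the example. The distance computation for one classified event is then on the order of

$$500 \times 784 = 392{,}000$$

multiply-accumulate operations per node, and this figure does not grow with the number of participating nodes.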

4.2. Accuracy Analysis

The classification accuracy of the TFNN approach depends on several interdependent factors, including the number of participating nodes (m) and the number of nearest neighbors (k). At the local level, each node executes a k-NN classifier, with accuracy determined by the quality of its local dataset and the choice of k. While smaller values of k may lead to overfitting or increased sensitivity to noise, larger values generally improve stability but may reduce specificity.

The size and quality of local data directly affect prediction accuracy, with larger local datasets generally improving k-NN performance due to better neighborhood coverage. When only the $k$ nearest samples are used for classification, the risk of underfitting or overfitting becomes more pronounced. In extreme cases, k-NN may yield unreliable results if $k$ approaches or exceeds the number of locally stored samples, or become overly sensitive to noise if $k$ is very small. Formula (7) describes the accuracy trend of k-NN accordingly:

Consequently, a small $k$ results in low bias and high variance, making the classifier sensitive to noise and prone to misclassifications due to outliers or label noise. In contrast, a large $k$ yields smoother decisions with high bias and low variance; however, it may overlook minority classes. In general, an effective choice is $k \approx \sqrt{n_i}$, where $n_i$ represents the number of samples at node $i$.

Moreover, let the local accuracy of the k-NN classifier at node i be denoted by . Then, the local accuracy of the k-NN classifier at node i is given by

Under regularity conditions—such as independent and identically distributed samples and the Lipschitz continuity of the conditional distribution—as , the local accuracy converges to

where denotes the Bayes-optimal accuracy for the classification problem, is a constant that depends on the underlying data distribution, k is the number of nearest neighbors, and d is the dimensionality of the feature space.

For practical finite datasets, a tight upper bound described in [44] is given by

where denotes the expected classification accuracy at node i, k is the number of nearest neighbors, is a constant that depends on the underlying data distribution and class overlap, and is the accuracy of the Bayes-optimal classifier.

In the TFNN approach, the final classification is obtained by aggregating local predictions from $m$ IoT nodes using unweighted majority voting, as described in Formula (5). Each node independently predicts a class label using a local k-NN classifier, and the server or base station then computes the global output using the same majority voting rule. The resulting global accuracy depends on the statistical properties of the ensemble decision-making process.

Assuming that each node makes an independent prediction with a probability $p$ of being correct, the probability that the majority of the $m$ nodes produce the correct label is given by the upper tail of the binomial cumulative distribution function (CDF):

$$P_{\text{maj}} = \sum_{i=\lfloor m/2 \rfloor + 1}^{m} \binom{m}{i} \, p^{i} (1-p)^{m-i} \tag{11}$$

where $\binom{m}{i}$ denotes the binomial coefficient, $p^{i}$ represents the probability that a given set of $i$ nodes predict correctly, and $(1-p)^{m-i}$ represents the probability that the remaining $m-i$ nodes predict incorrectly.

This reflects the classic ensemble effect: as long as individual nodes perform better than random guessing ($p > 0.5$), increasing the number of participating IoT nodes improves the global accuracy, enabling the ensemble to outperform any individual node. In the asymptotic case, with IID data and a sufficiently large number of nodes, the performance of TFNN can approach that of a globally trained k-NN model.
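The ensemble effect can be checked numerically with a short sketch. The function name `majority_correct_probability` is hypothetical, and the calculation assumes independent nodes with a common per-node accuracy and a strict majority (more than half of the votes). For multi-class voting, where a plurality can suffice, this figure is a conservative estimate.

```python
from math import comb


def majority_correct_probability(m, p):
    """Probability that more than half of m independent nodes predict correctly.

    Each node is assumed to be correct with the same probability p.
    """
    threshold = m // 2 + 1  # smallest vote count that is a strict majority
    return sum(
        comb(m, i) * p**i * (1 - p) ** (m - i)
        for i in range(threshold, m + 1)
    )


# Per-node accuracy of 0.6 with a growing number of nodes.
for m in (1, 3, 11, 101):
    print(m, round(majority_correct_probability(m, 0.6), 4))
```

With a per-node accuracy above 0.5 the printed probabilities increase with the number of nodes; with a per-node accuracy below 0.5 the same formula shows the opposite trend, which is why the better-than-chance condition matters.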

4.3. Communication Overhead

Minimizing communication overhead is a critical concern for IoT devices operating on limited battery reserves. Generally, federated learning strategies require repeated exchanges of large sets of model parameters between distributed clients and a coordinating server. TFNN, by contrast, streamlines this process through a single-shot classification approach. Each participating node independently executes a lightweight k-NN classifier on its locally stored data and transmits only the resulting class label to the central aggregator at the server or base station.

Let $m$ denote the number of participating nodes and $C$ the number of classes. Each prediction is encoded either as a one-hot vector of length $C$ or as an integer requiring $\lceil \log_2 C \rceil$ bits. TFNN incurs negligible downlink cost since the datasets are fixed and locally stored. Unlike conventional federated learning, the server does not broadcast global model updates back to the nodes.

In addition to abstract communication complexity, practical physical costs such as radio transmission energy, propagation distance, and receiver wake-up durations must also be considered. Each IoT node transmits a scalar index $c_i \in \{1, \dots, C\}$, requiring $\lceil \log_2 C \rceil$ bits, or a one-hot vector of length $C$, requiring $C$ bits. Thus, with scalar encoding, the total uplink communication cost for $m$ nodes is given by

$$B_{\text{up}} = m \lceil \log_2 C \rceil \ \text{bits} \tag{12}$$

If $d_i$ denotes the distance from node $i$ to the server or base station and $\alpha$ is the path loss exponent, the per-node transmission energy scales as

$$E_{\text{tx},i} \propto \lceil \log_2 C \rceil \, d_i^{\alpha} \tag{13}$$

Aggregating over all nodes yields the total uplink transmission energy:

$$E_{\text{tx}} = \sum_{i=1}^{m} E_{\text{tx},i} \tag{14}$$

In duty-cycled networks, transceivers consume a wake-up energy cost ($E_{\text{wake}}$) in addition to transmission or reception energy. If $e_{\text{rx}}$ denotes the per-bit reception energy, the server’s total reception energy is given by

$$E_{\text{rx}} = m \lceil \log_2 C \rceil \, e_{\text{rx}} \tag{15}$$

Including wake-up costs, the per-classification communication energy is expressed as

By communicating only a single scalar class label per IoT node in one communication round, TFNN achieves a substantially lower communication overhead than standard federated learning, making it well suited for low-power, bandwidth-constrained IoT deployments.
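The uplink cost of label-only transmission can be illustrated with a small calculator. This is a sketch under stated assumptions: the function names are hypothetical, the energy value is a unitless proxy (label bits multiplied by distance raised to the path loss exponent) rather than a calibrated radio model, and wake-up and reception costs are left out.

```python
def label_bits(num_classes, one_hot=False):
    """Bits needed to send one class label: C for one-hot, ceil(log2 C) otherwise."""
    if one_hot:
        return num_classes
    # (C - 1).bit_length() equals ceil(log2 C) for C >= 1, without float rounding.
    return max(1, (num_classes - 1).bit_length())


def uplink_bits(num_nodes, num_classes, one_hot=False):
    """Total uplink payload when every node sends exactly one label."""
    return num_nodes * label_bits(num_classes, one_hot)


def relative_tx_energy(distances, num_classes, alpha=2.0):
    """Unitless transmission-energy proxy summed over all nodes."""
    bits = label_bits(num_classes)
    return sum(bits * d**alpha for d in distances)


# 100 nodes, 10 classes (e.g., MNIST digits).
print(uplink_bits(100, 10))                # scalar index encoding
print(uplink_bits(100, 10, one_hot=True))  # one-hot encoding
print(relative_tx_energy([10.0, 20.0], 10))
```

For 100 nodes and 10 classes the scalar encoding amounts to a few hundred bits in total per classified event, whereas a single model update in conventional federated learning typically carries thousands of parameters per node per round.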

4.4. Privacy Considerations

Unlike conventional federated learning methods that exchange model updates, TFNN transmits only a scalar class label from each node for each inference. This substantially reduces the information available to potential eavesdroppers and mitigates risks associated with model inversion or data reconstruction attacks. Neither raw sensor data nor detailed model parameters are ever shared across the network. However, in scenarios where the class label space itself may reveal sensitive information (e.g., rare disease diagnosis), even label-only transmission could expose limited contextual details to a malicious observer. In such cases, additional privacy-enhancing techniques, such as label perturbation or differential privacy, can be integrated without significantly affecting TFNN efficiency.
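One possible form of label perturbation is k-ary randomized response, a standard local differential privacy mechanism. The sketch below is an illustration of that technique and not a component of TFNN as described in this paper; the function name `randomized_response` and the parameter `epsilon` (the privacy budget) are assumptions introduced for the example.

```python
import math
import random


def randomized_response(label, num_classes, epsilon, rng):
    """Perturb a class label before transmission.

    The true label is reported with probability e^eps / (e^eps + C - 1);
    otherwise one of the other C - 1 labels is reported uniformly at random.
    """
    keep = math.exp(epsilon) / (math.exp(epsilon) + num_classes - 1)
    if rng.random() < keep:
        return label
    others = [c for c in range(num_classes) if c != label]
    return rng.choice(others)


rng = random.Random(1)
reports = [randomized_response(3, 10, epsilon=2.0, rng=rng) for _ in range(10000)]
print(sum(r == 3 for r in reports) / len(reports))
```

A smaller `epsilon` hides the true label more strongly but makes individual votes noisier, so more participating nodes would be needed for the majority vote to remain reliable.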

5. Results

The on-board battery energy of IoT devices is predominantly consumed during communication. Therefore, this study focuses on evaluating the communication efficiency and classification accuracy of the TFNN approach by comparing its performance with traditional federated learning and pattern recognition methods, specifically AdaptiveFL, FedProx, SCAFFOLD, FedAvg, and the k-NN algorithm. The evaluation of TFNN was conducted using a custom simulator developed in MATLAB 2024b and Python 3.13.3 [56,57]. The experiments employed three widely used benchmark datasets, namely MNIST [58], CIFAR-10, and CIFAR-100 [59], across various experimental setups. The CIFAR-10 dataset contains 60,000 color images of size 32 × 32 pixels across 10 classes, while the CIFAR-100 dataset comprises 100 classes with 600 images per class. MNIST consists of handwritten digits (0–9, 10 classes), where each image is a 28 × 28 grayscale pattern.

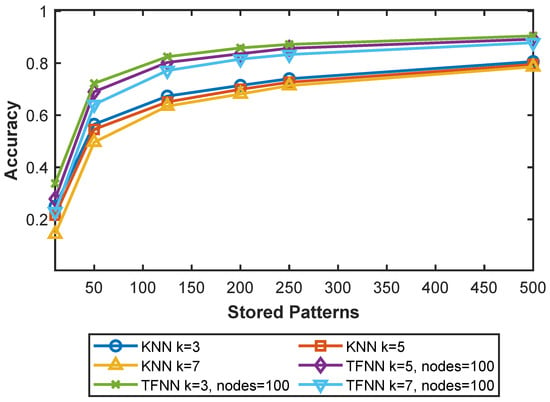

Figure 1 illustrates the classification accuracy of the k-NN algorithm with different values of k compared with the proposed TFNN approach, using 100 IoT nodes, and varying the number of IID stored training patterns from 10 to 500 from the MNIST dataset. As the number of stored patterns increases, the accuracy of both methods improves; however, TFNN consistently achieves higher accuracy than k-NN across all configurations. While k-NN serves as a simple and interpretable baseline, TFNN leverages lightweight collaborative inference in a federated setting, resulting in substantially improved performance. Notably, TFNN achieves approximately 90% accuracy when the number of stored patterns reaches 500.

Figure 1.

Accuracy comparison between traditional k-NN and TFNN using 100 IoT nodes, evaluated at three values of k while increasing the number of stored patterns from 10 to 500.

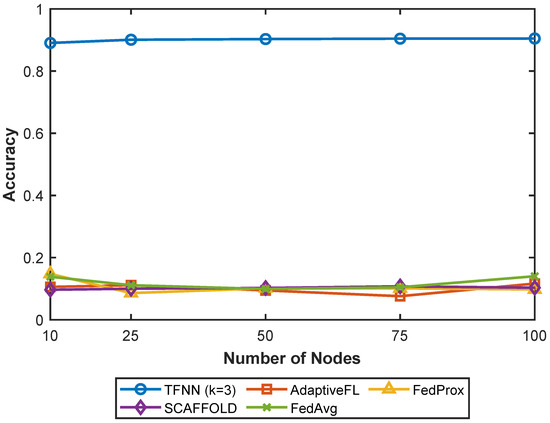

Figure 2 compares the single-shot classification accuracy of the proposed TFNN () approach with AdaptiveFL, FedProx, SCAFFOLD, and FedAvg in IoT networks ranging from 10 to 100 nodes, with each node storing 500 IID MNIST patterns. The results show that TFNN consistently outperforms all federated learning baselines, achieving approximately 90% accuracy across all network sizes and thereby demonstrating strong communication efficiency—an essential feature for resource-constrained IoT environments.

Figure 2.

Single-shot (one communication round) classification accuracy comparing TFNN () with AdaptiveFL, FedProx, SCAFFOLD, and FedAvg.

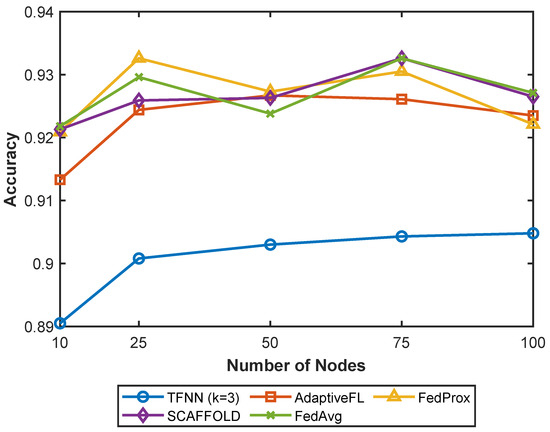

Figure 3 presents the accuracy of the federated baselines after 200 communication rounds compared with the single-shot TFNN result. While the baselines achieve approximately 92%, this requires nearly 200 additional client–server exchanges beyond TFNN’s one-round operation and yields only about a 2% improvement in accuracy. These results underscore TFNN’s ability to achieve competitive accuracy with orders-of-magnitude lower communication overhead—and, by implication, lower communication energy—in practical IoT deployments.

Figure 3.

Single-shot classification accuracy of TFNN () compared with AdaptiveFL, FedProx, SCAFFOLD, and FedAvg after 200 communication rounds.

Table 2, Table 3 and Table 4 present the classification accuracy of AdaptiveFL, FedProx, SCAFFOLD, FedAvg, and the proposed TFNN () under both IID and Non-IID partitions of the MNIST, CIFAR-10, and CIFAR-100 datasets. The simulations were conducted over 200 communication rounds with network sizes ranging from 10 to 100 IoT nodes, where each node stored 500 patterns.

Table 2.

Accuracy after 200 communication rounds using MNIST dataset under IID vs. non-IID partitions across varying numbers of participating nodes.

Table 3.

Accuracy after 200 communication rounds using CIFAR-10 dataset under IID vs. non-IID partitions across varying numbers of participating nodes.

Table 4.

Accuracy after 200 communication rounds using CIFAR-100 dataset under IID vs. non-IID partitions across varying numbers of participating nodes.

On the MNIST dataset, all baseline algorithms achieved accuracies in the range of 92–93% under IID conditions, confirming strong convergence on relatively simple data. TFNN attained an accuracy of approximately 80–90%, representing a modest trade-off in accuracy in exchange for an approximately 200-fold reduction in communication rounds, and hence in communication energy, as TFNN operates in a single-shot manner.

On the CIFAR-10 dataset, baseline federated learning methods achieved accuracies clustered around 36–39% under IID conditions, whereas TFNN remained consistently lower (≈23–25%). Under non-IID partitions, accuracy remained low for both the baseline federated learning algorithms and TFNN. The CIFAR-100 dataset is, in general, challenging, with all algorithms, including TFNN, achieving only about 10% accuracy or less. The reduced performance of TFNN on CIFAR-10 and CIFAR-100 can be attributed to the higher feature dimensionality and greater class complexity of these datasets.

Overall, the results reveal a clear energy-efficiency and accuracy trade-off. TFNN achieves competitive accuracy while requiring only a single communication round, thereby drastically reducing communication costs and, consequently, energy consumption compared with classical federated learning algorithms. These findings position TFNN as a viable lightweight alternative for resource-constrained IoT and WSN deployments, where communication efficiency is critical and absolute accuracy may be moderately sacrificed. However, the utilization of TFNN needs to take into account the complexity of the event patterns in order to maintain acceptable accuracy.

6. Conclusions

This study proposed TFNN, a lightweight and communication-efficient approach to federated learning tailored for resource-constrained IoT and WSN environments. Unlike conventional federated learning algorithms that require tens to hundreds of communication rounds and the exchange of large model parameter updates, TFNN performs single-shot pattern recognition by transmitting only local class labels from IoT nodes to the server or base station. Consequently, TFNN significantly reduces the energy consumed in communication compared with traditional federated learning while enabling federated pattern recognition in a single communication round.
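The single-shot flow summarized above can be sketched in a few lines. The following is a minimal illustration, not the implementation used in this study: it assumes that every node observes the same query pattern, classifies it with k-NN over its own local samples using Euclidean distance, and transmits only the resulting class label, after which the server takes a majority vote. The function names, the toy data, and the tie-breaking rule (first label encountered) are assumptions made for the example.

```python
from collections import Counter
from math import dist


def knn_label(local_samples, query, k=3):
    """Classify `query` from one node's local (features, label) pairs using k-NN."""
    nearest = sorted(local_samples, key=lambda s: dist(s[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]  # ties: first label encountered (assumed)


def majority_vote(labels):
    """Server-side consensus over the labels received from the nodes."""
    return Counter(labels).most_common(1)[0][0]


def single_shot_classify(nodes, query, k=3):
    """One communication round: each node sends a single class label."""
    local_labels = [knn_label(samples, query, k) for samples in nodes]
    return majority_vote(local_labels), local_labels


# Toy example: three nodes with two-dimensional local data (hypothetical).
nodes = [
    [((0.0, 0.0), "a"), ((0.0, 1.0), "a"), ((5.0, 5.0), "b")],
    [((0.2, 0.1), "a"), ((1.0, 0.0), "a"), ((6.0, 5.0), "b")],
    [((5.0, 4.0), "b"), ((6.0, 6.0), "b"), ((4.0, 5.0), "b")],
]
consensus, per_node = single_shot_classify(nodes, query=(0.1, 0.2), k=3)
print(consensus, per_node)  # a ['a', 'a', 'b']
```

In this toy run the third node holds only class "b" samples and therefore votes "b", but the majority vote at the server still recovers "a". Only three labels cross the network, which is the source of the communication savings.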

The simulation results demonstrated that TFNN achieves competitive classification accuracy while reducing communication cost by up to two orders of magnitude compared with baseline federated learning methods. This makes TFNN particularly promising for battery-powered and bandwidth-constrained IoT deployments, where extending network lifetime is more critical than achieving absolute accuracy.

Future studies will focus on refining and optimizing TFNN, specifically addressing its sensitivity to complex datasets by integrating lightweight feature extraction or prototype-sharing mechanisms. This is expected to enhance TFNN’s ability to capture discriminative representations in high-dimensional data. Furthermore, deploying TFNN in practical IoT environments will allow for an exploration of its full potential across diverse real-world scenarios. Such extensions will further establish TFNN as a scalable and energy-efficient solution for next-generation IoT systems.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.C.; Yang, Q. Federated Learning in Mobile Edge Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

- Zhang, T.; Gao, L.; He, C.; Zhang, M.; Krishnamachari, B.; Avestimehr, A.S. Federated Learning for the Internet of Things: Applications, Challenges, and Opportunities. IEEE Internet Things Mag. 2022, 5, 24–29.

- He, C.; Annavaram, M.; Avestimehr, S. Group knowledge transfer: Federated learning of large CNNs at the edge. In Proceedings of the Advances in Neural Information Processing Systems 34, Vancouver, BC, Canada, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020. Number 1180 in NIPS ’20. p. 13.

- Niknam, S.; Dhillon, H.S.; Reed, J.H. Federated Learning for Wireless Communications: Motivation, Opportunities, and Challenges. IEEE Commun. Mag. 2020, 58, 46–51.

- Tahir, M.; Ali, M.I. On the Performance of Federated Learning Algorithms for IoT. Internet Things 2022, 3, 273–284.

- Shaheen, M.; Farooq, M.S.; Umer, T.; Kim, B.S. Applications of Federated Learning; Taxonomy, Challenges, and Research Trends. Electronics 2022, 11, 670.

- Hu, K.; Li, Y.; Xia, M.; Wu, J.; Lu, M.; Zhang, S.; Weng, L. Federated Learning: A Distributed Shared Machine Learning Method. Complexity 2021, 2021, 8261663.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Baqer, M. Energy-Efficient Pattern Recognition for Wireless Sensor Networks. In Mobile Intelligence; Wiley-IEEE Press: Hoboken, NJ, USA, 2010.

- Baqer, M. VRNS: A new energy efficient event recognition approach via Voting with Random Node Selection. In Proceedings of the 2010 6th IEEE International Conference on Distributed Computing in Sensor Systems Workshops (DCOSSW), Santa Barbara, CA, USA, 21–23 June 2010; pp. 1–2.

- Baqer, M.; Khan, A.I.; Baig, Z.A. Implementing a Graph Neuron Array for Pattern Recognition Within Unstructured Wireless Sensor Networks. In Proceedings of the International Conference on Embedded and Ubiquitous Computing: EUC 2005 Workshops, Nagasaki, Japan, 6–9 December 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 208–217.

- Khan, A.I.; Ramachandran, V. A Peer-to-Peer Associative Memory Network for Intelligent Information Systems. In Proceedings of the Thirteenth Australasian Conference on Information Systems, Melbourne, Australia, 3–6 December 2002.

- Lazzarini, R.; Tianfield, H.; Charissis, V. Federated Learning for IoT Intrusion Detection. AI 2023, 4, 509–530.

- Liu, L.; Zhang, J.; Song, S.; Letaief, K.B. Client-Edge-Cloud Hierarchical Federated Learning. In Proceedings of the IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020.

- Atzori, L.; Iera, A.; Morabito, G. The Internet of Things: A survey. Comput. Netw. 2010, 54, 2787–2805.

- Baqer, M.; Kamal, A. S-Sensors: Integrating physical world inputs with social networks using wireless sensor networks. In Proceedings of the 2009 International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Melbourne, Australia, 7–10 December 2009.

- Estrin, D.; Culler, D.; Pister, K.; Sukhatme, G. Connecting the physical world with pervasive networks. IEEE Pervasive Comput. 2002, 1, 59–69.

- Baqer, M.; Balbis, L. A Sampling Sensor Nodes to Extend the Longevity of Wireless Sensor Networks. Int. J. Sens. Wirel. Commun. Control 2019, 9, 53–63.

- Baqer, M.; Al Mutawah, K. Random node sampling for energy efficient data collection in wireless sensor networks. In Proceedings of the 2013 IEEE Eighth International Conference on Intelligent Sensors, Sensor Networks and Information Processing, Melbourne, Australia, 2–5 April 2013; pp. 467–472.

- Ashton, K. That ’Internet of Things’ Thing. RFID J. 2009, 22, 97–114. Available online: https://www.rfidjournal.com/expert-views/that-internet-of-things-thing/73881/ (accessed on 15 November 2024).

- Elijah, O.; Rahman, T.A.; Orikumhi, I.; Leow, C.Y.; Hindia, M.N. An Overview of Internet of Things (IoT) and Data Analytics in Agriculture: Benefits and Challenges. IEEE Internet Things J. 2018, 5, 3758–3773.

- Vermesan, O.; Friess, P.; Guillemin, P.; Gusmeroli, S.; Sundmaeker, H.; Bassi, A.; Jubert, I.; Mazura, M.; Harrison, M.; Eisenhauer, M.; et al. Internet of Things Strategic Research Roadmap. In Internet of Things—Global Technological and Societal Trends from Smart Environments and Spaces to Green ICT; CRC Press: Boca Raton, FL, USA, 2022; pp. 9–52.

- Sethi, P.; Sarangi, S.R. Internet of Things: Architectures, Protocols, and Applications. J. Electr. Comput. Eng. 2017, 2017, 9324035.

- Akyildiz, I.; Su, W.S.; Sankarasubramaniam, Y.; Cayirci, E. A Survey on Sensor Networks. IEEE Commun. Mag. 2002, 19, 102–114.

- Mainwaring, A.; Culler, D.; Polastre, J.; Szewczyk, R.; Anderson, J. Wireless Sensor Networks for Habitat Monitoring. In Proceedings of the 1st ACM International Workshop on Wireless Sensor Networks and Applications (WSNA ’02), Atlanta, GA, USA, 28 September 2002.

- Kim, S.; Pakzad, S.; Culler, D.; Demmel, J.; Fenves, G.; Glaser, S.; Turon, M. Wireless Sensor Networks for Structural Health Monitoring. In Proceedings of the 4th International Conference on Embedded Networked Sensor Systems (SenSys’06), Boulder, CO, USA, 31 October–3 November 2006.

- Bonnet, P.; Gehrke, J.; Seshadri, P. Querying the Physical World. IEEE Pers. Commun. 2000, 7, 10–15.

- Kramp, T.; Kranenburg, R.V.; Lange, S. Introduction to the Internet of Things. In Enabling Things to Talk: Designing IoT Solutions with the IoT Architectural Reference Model; Springer: Berlin/Heidelberg, Germany, 2013; pp. 1–10.

- Abdul-Qawy, P.P.J.A.S.; Magesh, E.; Srinivasulu, T. The Internet of Things (IoT): An Overview. Int. J. Eng. Res. Appl. 2015, 5, 71–82.

- Mashal, I.; Alsaryrah, O.; Chung, T.Y.; Yang, C.Z.; Kuo, W.H.; Agrawal, D.P. Choices for interaction with Things on Internet and Underlying Issues. Ad Hoc Netw. 2015, 28, 68–90.

- Khan, R.; Khan, S.U.; Zaheer, R.; Khan, S. Future Internet: The Internet of Things Architecture, Possible Applications and Key Challenges. In Proceedings of the 10th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 17–19 December 2012.

- Patil, K.; Banerjee, S. Brief Overview on Wireless Sensor Network Technologies in the Internet of Things (IoT). Int. J. Eng. Manag. Res. 2023, 13, 1–8.

- Arampatzis, T.; Lygeros, J.; Manesis, S. A Survey of Applications of Wireless Sensors and Wireless Sensor Networks. In Proceedings of the 2005 IEEE International Symposium on Mediterranean Conference on Control and Automation, Limassol, Cyprus, 27–29 June 2005.

- Lopez, P.G.; Montresor, A.; Epema, D.; Datta, A.; Higashino, T.; Lamnitchi, A.; Barcellos, M.M.; Felber, P.; Riviere, E. Edge-centric Computing: Vision and Challenges. ACM SIGCOMM Comput. Commun. Rev. 2015, 45, 37–42.

- Nguyen, D.C.; Ding, M.; Pham, Q.V.; Pathirana, P.N.; Le, L.B.; Seneviratne, A.; Li, J.; Niyato, D.; Poor, H.V. Federated Learning Meets Blockchain in Edge Computing: Opportunities and Challenges. IEEE Internet Things J. 2021, 8, 12806–12825.

- Alsamhi, S.H.; Shvetsov, A.V.; Hawbani, A.; Shvetsova, S.V.; Kumar, S.; Zhao, L. Survey on Federated Learning enabling indoor navigation for industry 4.0 in B5G. Future Gener. Comput. Syst. 2023, 148, 250–265.

- Bhattacharya, P.; Verma, A.; Tanwar, S. (Eds.) Federated Learning for Internet of Medical Things: Concepts, Paradigms, and Solutions, 1st ed.; CRC Press: Boca Raton, FL, USA, 2023.

- Albaseer, A.; Ciftler, B.S.; Abdallah, M.; Al-Fuqaha, A. Exploiting Unlabeled Data in Smart Cities using Federated Edge Learning. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; pp. 1666–1671.

- Kumar, A.; Braud, T.; Tarkoma, S.; Hui, P. Trustworthy AI in the Age of Pervasive Computing and Big Data. In Proceedings of the 2020 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Austin, TX, USA, 23–27 March 2020; pp. 1–6.

- Liang, L.; Tian, Z.; Huang, H.; Li, X.; Yin, Z.; Zhang, D.; Zhang, N.; Zhai, W. Heterogeneous Secure Transmissions in IRS-Assisted NOMA Communications: CO-GNN Approach. IEEE Internet Things J. 2025, 12, 34113–34125.

- Zhang, D.; Wang, Y.; Meng, L.; Yan, J.; Qin, C. Adaptive critic design for safety-optimal FTC of unknown nonlinear systems with asymmetric constrained-input. ISA Trans. 2024, 155, 309–318.

- Liang, L.; Li, X.; Huang, H.; Yin, Z.; Zhang, N.; Zhang, D. Securing Multidestination Transmissions with Relay and Friendly Interference Collaboration. IEEE Internet Things J. 2024, 11, 18782–18795.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Fix, E.; Hodges, J.L. An Important Contribution to Nonparametric Discriminant Analysis and Density Estimation. Int. Stat. Rev. 1951, 57, 233–247.

- Aledhari, M.; Razzak, R.; Parizi, R.M.; Saeed, F. Federated Learning: A Survey on Enabling Technologies, Protocols, and Applications. IEEE Access 2020, 8, 140699–140725.

- Karimireddy, S.P.; Kale, S.; Mohri, S.R.M.; Stich, S.; Suresh, A.T. SCAFFOLD: Stochastic Controlled Averaging for Federated Learning. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020.

- Malinovskiy, G.; Kovalev, D.; Gasanov, E.; Condat, L.; Richtarik, P. From Local SGD to Local Fixed-Point Methods for Federated Learning. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020.

- Pathak, R.; Wainwright, M.J. Fedsplit: An algorithmic framework for fast federated optimization. In Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS’20), Vancouver, BC, Canada, 6–12 December 2020.

- Charles, Z.; Konečný, J. Convergence and Accuracy Trade-offs in Federated Learning and Meta-learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 13–15 April 2021.

- Wang, J.; Liu, Q.; Liang, H.; Joshi, G.; Poor, H.V. Tackling the objective inconsistency problem in heterogeneous federated optimization. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020.

- Collins, L.; Hassani, H.; Mokhtari, A.; Shakkottai, S. FedAvg with Fine Tuning: Local Updates Lead to Representation Learning. In Proceedings of the 36th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. In Proceedings of the 3rd Machine Learning and Systems Conference, Austin, TX, USA, 2–4 March 2020; Volume 2, pp. 429–450.

- Jia, C.; Hu, M.; Chen, Z.; Yang, Y.; Xie, X.; Chen, L.; Zhang, J. AdaptiveFL: Adaptive Heterogeneous Federated Learning for Resource-Constrained AIoT Systems. ACM Trans. Des. Autom. Electron. Syst. 2025, 30, 40.

- Baqer, M. Energy-Efficient Federated Learning for Internet of Things: Leveraging In-Network Processing and Hierarchical Clustering. Future Internet 2025, 17, 4.

- MATLAB, Version 24.2.0.2923080 (R2024b); The MathWorks Inc.: Natick, MA, USA, 2023.

- Python Software Foundation. Python, Version 3.13.3; Python Software Foundation: Beaverton, OR, USA, 2025.

- Deng, L. The MNIST Database of Handwritten Digit Images for Machine Learning Research. IEEE Signal Process. Mag. 2012, 29, 141–142.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. Technical Report. 2009. Available online: https://www.cs.utoronto.ca/~kriz/learning-features-2009-TR.pdf (accessed on 15 August 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).