CogMamba: Multi-Task Driver Cognitive Load and Physiological Non-Contact Estimation with Multimodal Facial Features

Abstract

1. Introduction

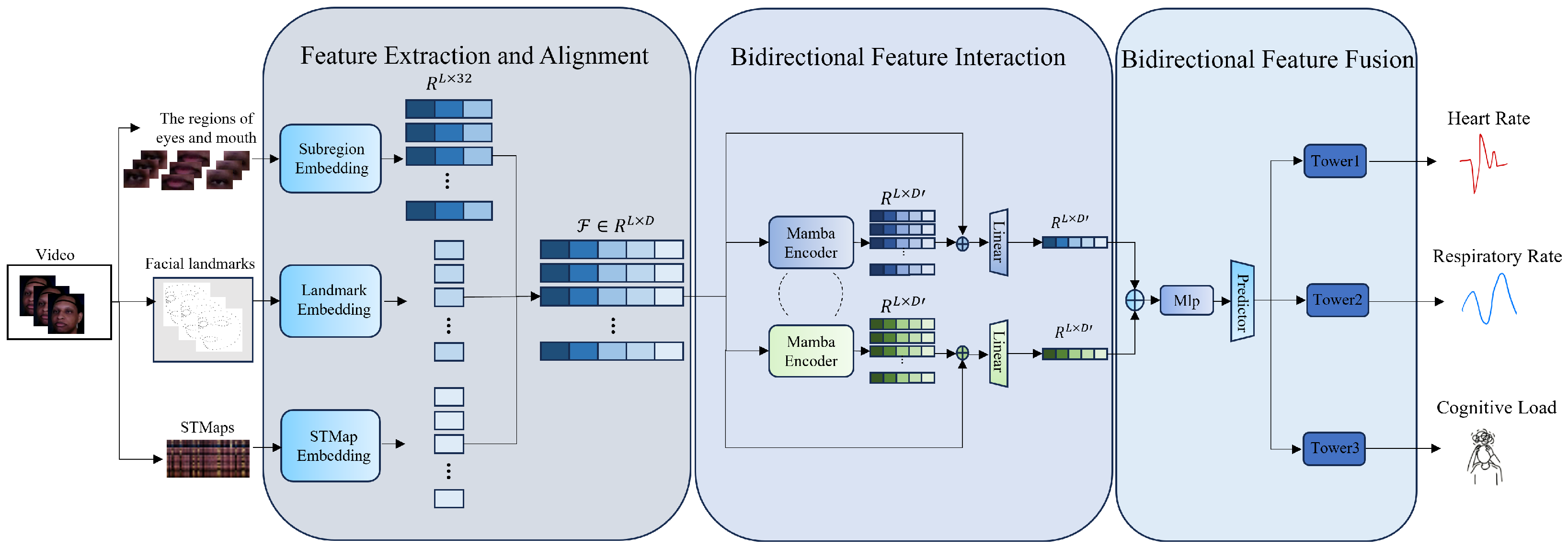

- To the best of our knowledge, this is the first end-to-end multi-task model for non-contact estimation of driver cognitive load and physiological signals from multimodal facial features captured by a camera.

- CogMamba takes an STMap and key facial features (landmarks, eye regions, and the mouth area) as input instead of full-frame video, which reduces both the number of model parameters and the computational load.

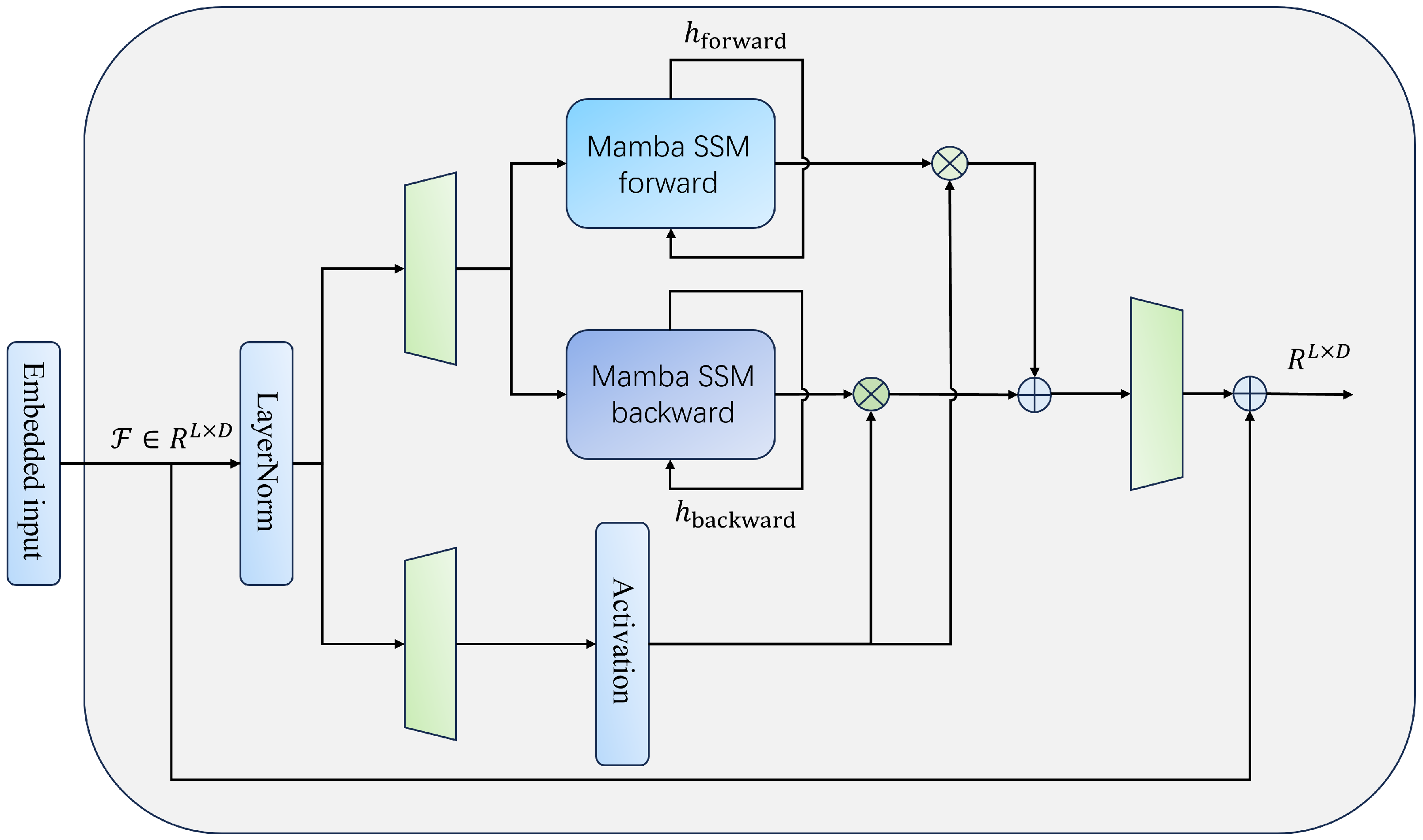

- We incorporate the Mamba architecture to extract both local and global temporal features with higher efficiency and lower resource consumption than traditional attention mechanisms. The gain stems from the recurrent formulation of SSMs, which avoids computing an attention matrix. Lightweight MLPs further simplify the architecture and reduce overall complexity.

- The proposed system performs strongly in assessing driver cognitive load, reaching 83.56% accuracy on the eDream dataset and 81.22% on the MCDD dataset. The experimental results also show robust performance under varying lighting conditions and across different skin tones.
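The efficiency argument for SSMs can be illustrated with a minimal sketch. It is not the CogMamba implementation: it runs a fixed (non-selective) diagonal state space model as a single left-to-right recurrence, whereas Mamba additionally makes its parameters input-dependent. The function names, the state size `N`, and all parameter values are hypothetical; only the window length of 300 frames comes from the symbol table.

```python
import math

def zoh_discretize(a, b, delta):
    """Zero-order-hold discretization of one diagonal SSM channel.

    Continuous: h'(t) = a*h(t) + b*x(t).  Discrete: h_t = a_bar*h_{t-1} + b_bar*x_t.
    """
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b  # requires a != 0
    return a_bar, b_bar

def ssm_scan(x, a, b, c, delta):
    """Run a diagonal SSM over sequence x with a single left-to-right pass."""
    n = len(a)
    disc = [zoh_discretize(a[i], b[i], delta) for i in range(n)]
    h = [0.0] * n            # hidden state: size N, independent of sequence length
    y = []
    for x_t in x:            # one step per frame: O(L * N) time
        h = [a_bar * h_i + b_bar * x_t for (a_bar, b_bar), h_i in zip(disc, h)]
        y.append(sum(c_i * h_i for c_i, h_i in zip(c, h)))
    return y

L = 300  # window length in frames (example value from the symbol table)
N = 4    # hypothetical state size
x = [math.sin(0.1 * t) for t in range(L)]
y = ssm_scan(x, a=[-1.0, -2.0, -0.5, -3.0], b=[1.0] * N, c=[0.25] * N, delta=0.1)

state_floats = N              # memory carried between steps by the scan
attention_scores = L * L      # entries in a full self-attention score matrix
print(len(y), state_floats, attention_scores)  # prints: 300 4 90000
```

The scan carries only `N` numbers from one frame to the next, while full self-attention over the same window would materialize an `L × L` score matrix.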

2. Related Works

2.1. Contact-Based Cognitive Load Detection

2.2. Non-Contact-Based Cognitive Load Detection

2.3. Mamba

3. Methodology

3.1. Preliminaries

3.1.1. State Space Modeling and Discretization Principles
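For reference, the standard continuous-time state space model and its zero-order hold (ZOH) discretization, as formulated in the Mamba paper [37], can be written as follows. The symbols $A$, $B$, $C$, $\Delta$, $h$, $x$, and $y$ are the conventional ones and are an assumption here, not necessarily the notation used by the authors.

$$
h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t)
$$

$$
\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\bigl(\exp(\Delta A) - I\bigr)\,\Delta B
$$

$$
h_t = \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t
$$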

3.1.2. Advantages of the Convolution Equivalence Form and Mamba Model

3.2. Overview

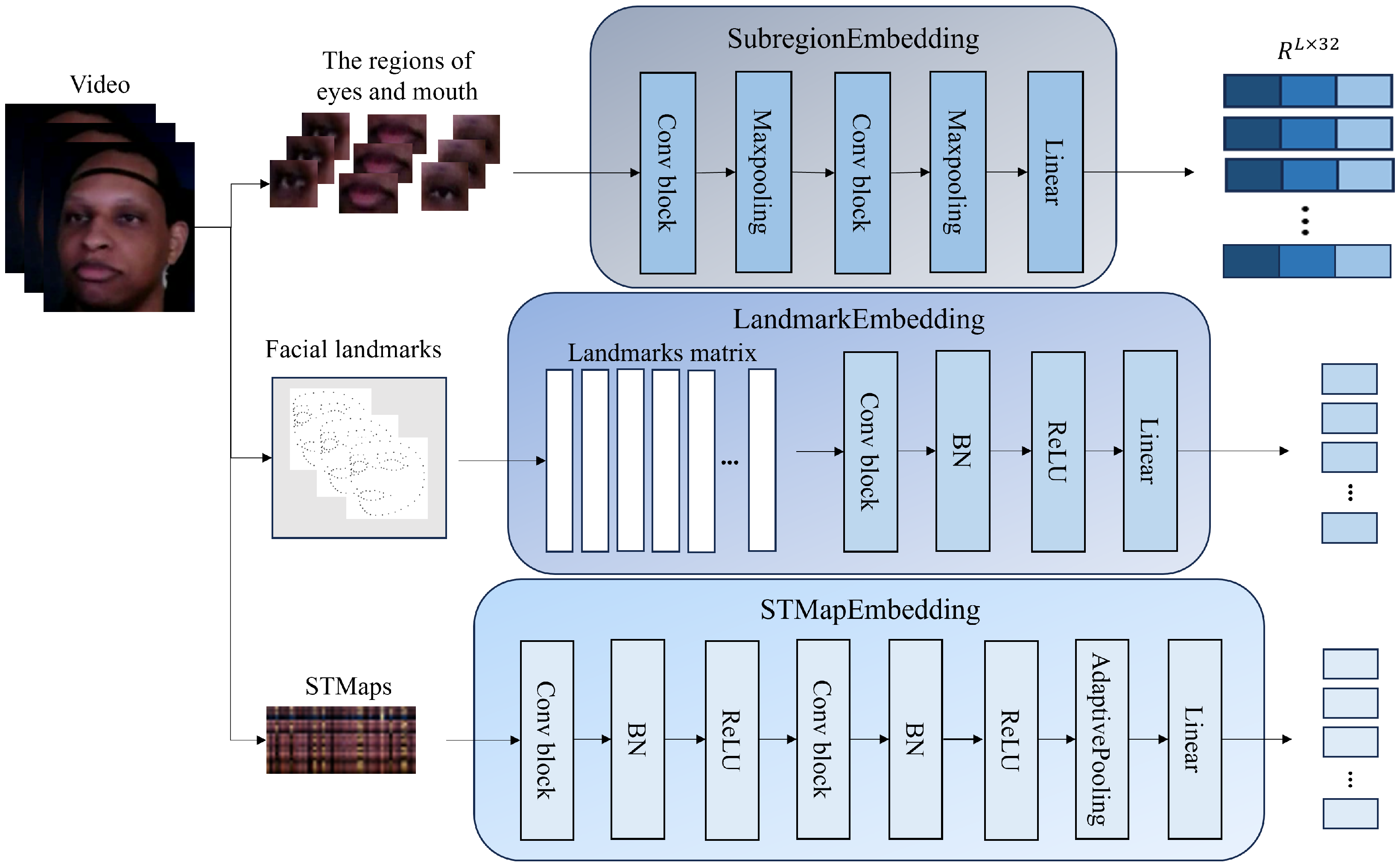

3.3. Feature Extraction and Alignment

Data Processing

3.4. Bidirectional Feature Interaction

3.4.1. Feature Extraction and Alignment

3.4.2. Bidirectional Feature Fusion

3.5. Optimization Goal

Algorithm 1: Multimodal facial sequence modeling for HR, RR and cognitive load estimation

Input: Video samples with corresponding labels

Output: Predicted HR, RR, and cognitive load

Step 1: Feature Extraction. Extract region-specific features.

Step 2: Feature Alignment and Concatenation. Align and concatenate all extracted features along the channel dimension.

Step 3: Bidirectional Feature Interaction via Mamba.

Step 4: Temporal Aggregation.

Step 5: Multi-task Prediction.

Step 6: Compute Loss and Backpropagate. Compute the losses, then backpropagate and update parameters.
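The data flow of Algorithm 1 can be sketched in plain Python as a forward pass only. This is an illustration under stated assumptions, not the authors' code: `scan` is a fixed linear recurrence standing in for a Mamba block, the feature values are random, plain cross-entropy replaces the truncated cross-entropy loss, and the way the adaptation weight `lam` enters the total loss is a guess. The feature dimension `D`, the class count, and the target values are hypothetical.

```python
import math
import random

def scan(seq, decay=0.9):
    """Stand-in for a Mamba block: a fixed linear recurrence per channel."""
    h = [0.0] * len(seq[0])
    out = []
    for frame in seq:
        h = [decay * h_i + (1.0 - decay) * f_i for h_i, f_i in zip(h, frame)]
        out.append(h)
    return out

def bidirectional(seq):
    """Scan forward and on the time-reversed sequence, then concatenate per frame."""
    fwd = scan(seq)
    bwd = scan(seq[::-1])[::-1]   # re-reverse so outputs line up frame by frame
    return [f + b for f, b in zip(fwd, bwd)]

def mean_pool(seq):
    """Temporal aggregation: average each channel over all frames."""
    return [sum(col) / len(seq) for col in zip(*seq)]

def linear(vec, weights, bias):
    """Apply one dense layer (a lightweight prediction head)."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]

def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss for a single scalar prediction."""
    d = abs(pred - target)
    return 0.5 * d * d / beta if d < beta else d - 0.5 * beta

def cross_entropy(logits, label):
    """Plain cross-entropy from logits, computed with a stable log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]

def init(rows, cols):
    """Random weights and zero bias for a hypothetical head."""
    return [[random.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)], [0.0] * rows

random.seed(0)
L, D, NUM_CLASSES = 300, 8, 2     # D and NUM_CLASSES are hypothetical
# Steps 1-2: random values stand in for the aligned, concatenated features F
features = [[random.gauss(0, 1) for _ in range(D)] for _ in range(L)]

# Steps 3-4: bidirectional interaction, then mean pooling over time
pooled = mean_pool(bidirectional(features))       # length 2 * D

# Step 5: one head per task
hr_pred = linear(pooled, *init(1, 2 * D))[0]
rr_pred = linear(pooled, *init(1, 2 * D))[0]
cog_logits = linear(pooled, *init(NUM_CLASSES, 2 * D))

# Step 6: multi-task loss with illustrative targets (backpropagation omitted)
lam = 1.0                          # placeholder for the adaptation weight
loss = smooth_l1(hr_pred, 72.0) + smooth_l1(rr_pred, 16.0) + lam * cross_entropy(cog_logits, 1)
print(len(pooled), loss > 0)
```

In a trainable version each helper would be replaced by a differentiable layer in a deep learning framework, with the optimizer step following the loss computation.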

4. Experiment

4.1. Datasets and Evaluation Metrics

4.2. Baselines

4.3. Implementation Details

4.4. Results of the Comparison Experiment

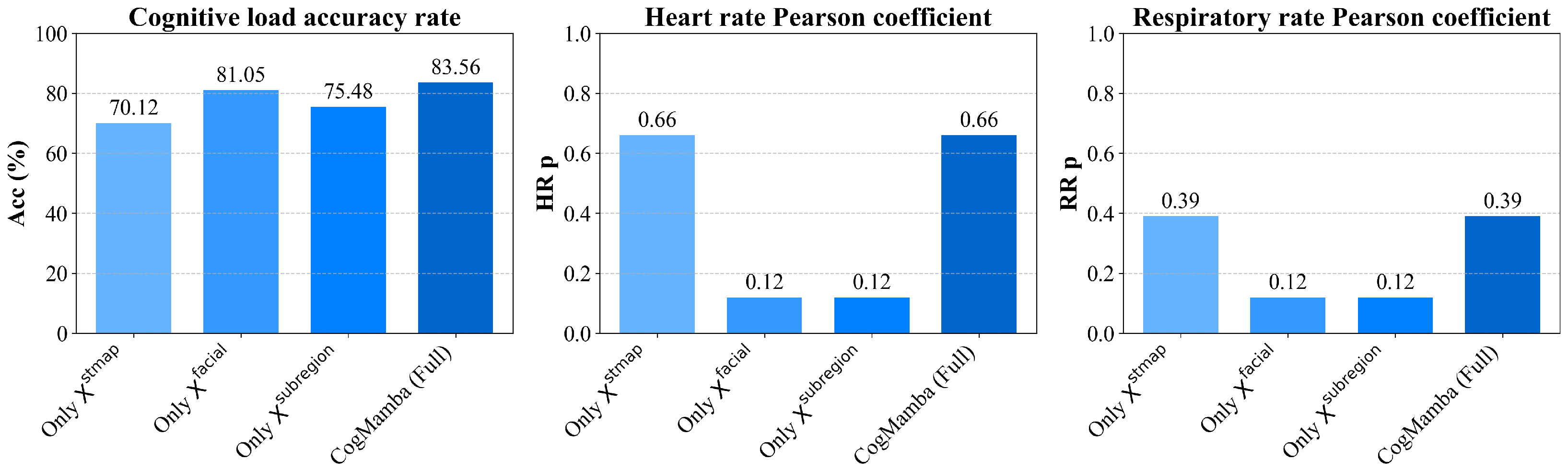

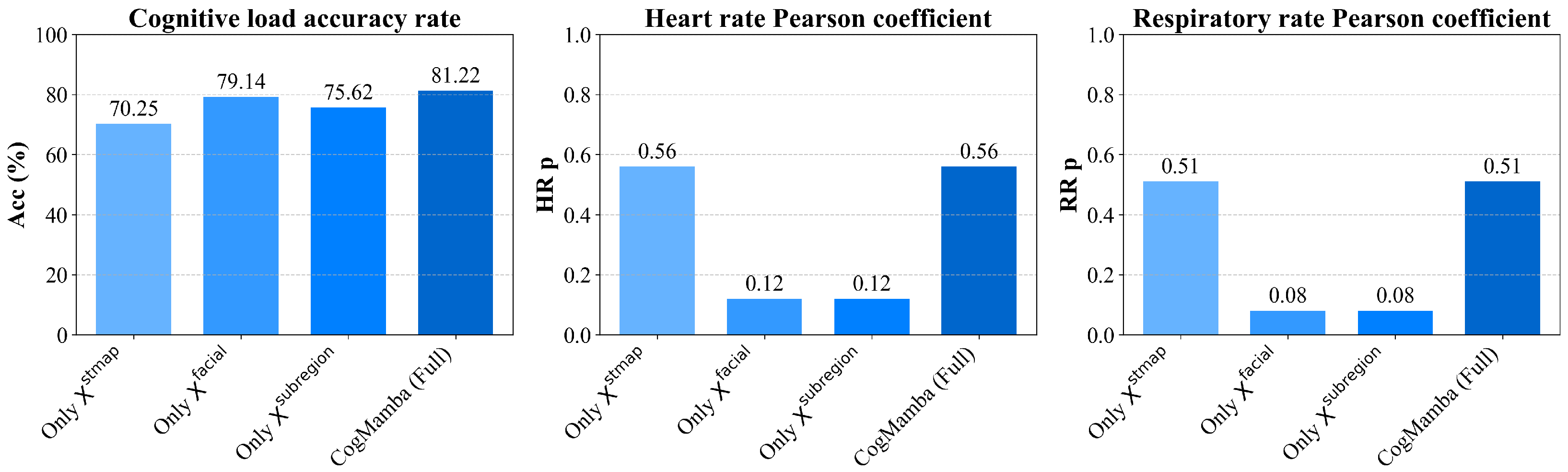

4.5. Results of Ablation Study

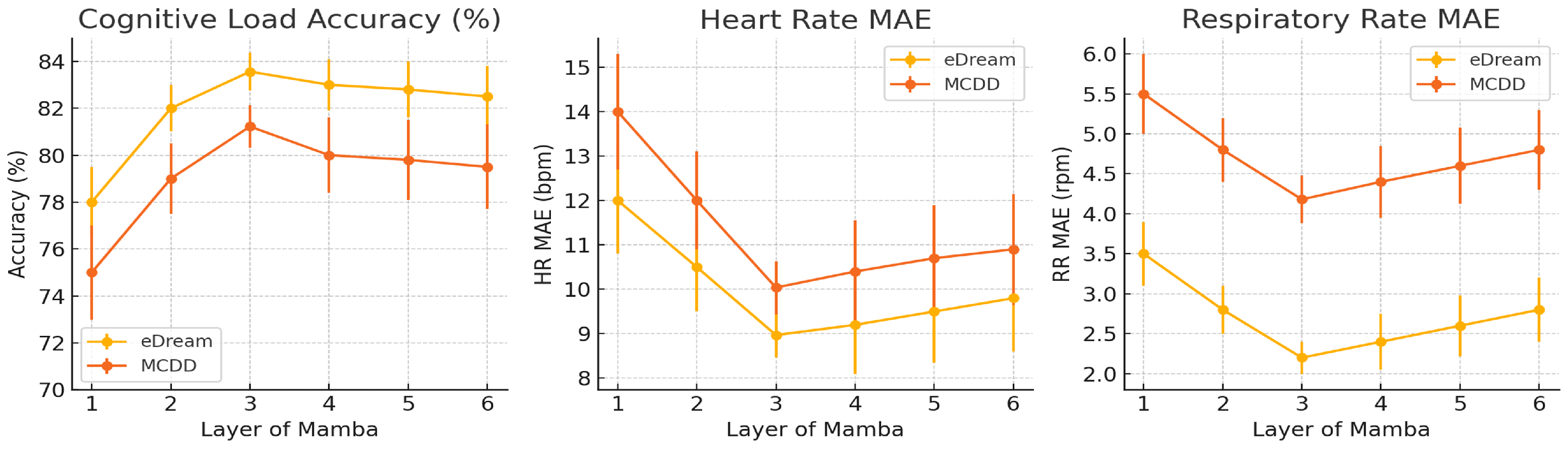

4.6. Impact of the Number of Mamba Layers

4.7. Cross-Dataset Estimation

4.8. Results of the Computational Cost Study

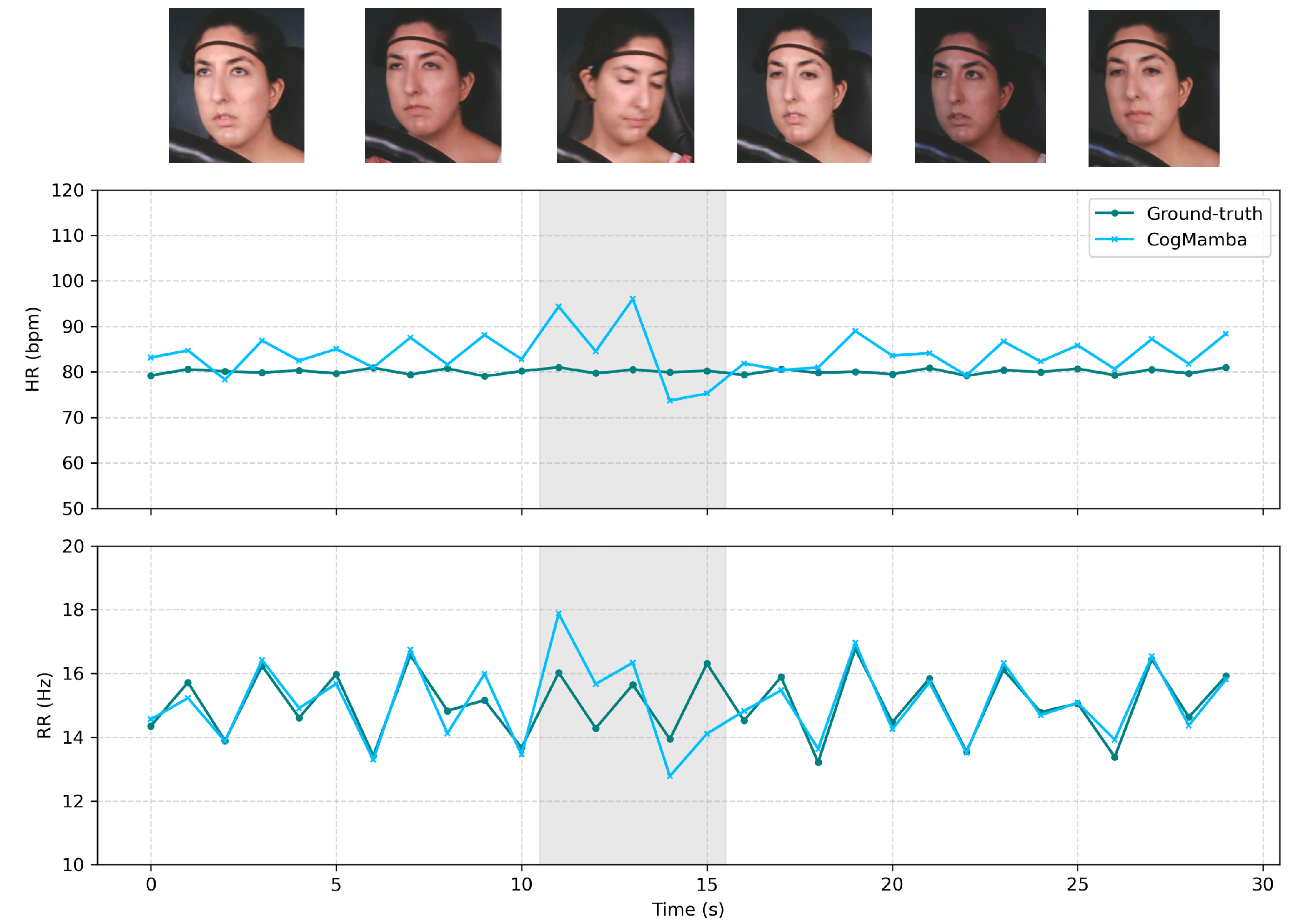

4.9. Case Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NDRT | Non-driving-related Task |
| HR | Heart Rate |
| RR | Respiratory Rate |
| SAE | Society of Automotive Engineers |
| TORs | Take-over Requests |
| EEG | Electroencephalography |
| ML | Machine Learning |
| rPPG | Remote Photoplethysmography |
| STMap | Spatial-temporal Map |
| SSMs | State Space Models |
| MLP | Multilayer Perceptron |
| ECG | Electrocardiography |
| EDA | Electrodermal Activity |
| SVM | Support Vector Machines |
| RF | Random Forests |
| CNN | Convolutional Neural Networks |
| RNN | Recurrent Neural Networks |
| BSSM | Bidirectional State Space Models |
| LTI | Linear Time-invariant |
| ZOH | Zero-order Hold |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Square Error |

References

1. Tandrayen-Ragoobur, V. The economic burden of road traffic accidents and injuries: A small island perspective. Int. J. Transp. Sci. Technol. 2025, 17, 109–119.

2. Cruz, O.G.D.; Padilla, J.A.; Victoria, A.N. Managing road traffic accidents: A review on its contributing factors. IOP Conf. Ser. Earth Environ. Sci. 2021, 822, 012015.

3. Lee, J.D. Perspectives on automotive automation and autonomy. J. Cogn. Eng. Decis. Mak. 2018, 12, 53–57.

4. Gresset, C.; Morda, D. Assessing the Human Barriers and Impact of Autonomous Driving in Transportation Activities: A Multiple Case Study. 2021. Available online: https://www.diva-portal.org/smash/get/diva2:1560021/FULLTEXT01.pdf (accessed on 1 September 2025).

5. Häuslschmid, R.; Pfleging, B.; Butz, A. The influence of non-driving-related activities on the driver’s resources and performance. In Automotive User Interfaces: Creating Interactive Experiences in the Car; Springer International Publishing: Cham, Switzerland, 2017; pp. 215–247.

6. Wang, J.; Wang, A.; Yan, S.; He, D.; Wu, K. Revisiting Interactions of Multiple Driver States in Heterogenous Population and Cognitive Tasks. arXiv 2024, arXiv:2412.13574.

7. Wang, A.; Wang, J.; Huang, C.; He, D.; Yang, H. Exploring how physio-psychological states affect drivers’ takeover performance in conditional automated vehicles. Accid. Anal. Prev. 2025, 216, 108022.

8. Dixit, V.V.; Chand, S.; Nair, D.J. Autonomous vehicles: Disengagements, accidents and reaction times. PLoS ONE 2016, 11, e0168054.

9. Huang, C.; Wang, J.; Wang, A.; Huang, Q.; He, D. The Effect of Advanced Driver Assistance Systems on Truck Drivers’ Defensive Driving Behaviors: Insights from a Preliminary On-Road Study. Int. J. Hum.-Comput. Interact. 2025, 1–15.

10. Wei, D.; Zhang, C.; Fan, M.; Ge, S.; Mi, Z. Research on multimodal adaptive in-vehicle interface interaction design strategies for hearing-impaired drivers in fatigue driving scenarios. Sustainability 2024, 16, 10984.

11. Wang, A.; Huang, C.; Wang, J.; He, D. The association between physiological and eye-tracking metrics and cognitive load in drivers: A meta-analysis. Transp. Res. Part F Traffic Psychol. Behav. 2024, 104, 474–487.

12. Vanneste, P.; Raes, A.; Morton, J.; Bombeke, K.; Van Acker, B.B.; Larmuseau, C.; Depaepe, F.; Van den Noortgate, W. Towards measuring cognitive load through multimodal physiological data. Cogn. Technol. Work 2021, 23, 567–585.

13. Wang, A.; Wang, J.; Shi, W.; He, D. Cognitive Workload Estimation in Conditionally Automated Vehicles Using Transformer Networks Based on Physiological Signals. Transp. Res. Rec. 2024, 2678, 1183–1196.

14. Yedukondalu, J.; Sunkara, K.; Radhika, V.; Kondaveeti, S.; Anumothu, M.; Murali Krishna, Y. Cognitive load detection through EEG lead wise feature optimization and ensemble classification. Sci. Rep. 2025, 15, 842.

15. Anwar, U.; Arslan, T.; Hussain, A. Hearing Loss, Cognitive Load and Dementia: An Overview of Interrelation, Detection and Monitoring Challenges with Wearable Non-invasive Microwave Sensors. arXiv 2022, arXiv:2202.03973.

16. Razak, S.F.A.; Yogarayan, S.; Aziz, A.A.; Abdullah, M.F.A.; Kamis, N.H. Physiological-based driver monitoring systems: A scoping review. Civ. Eng. J. 2022, 8, 3952–3967.

17. Rahman, H.; Ahmed, M.U.; Barua, S.; Funk, P.; Begum, S. Vision-based driver’s cognitive load classification considering eye movement using machine learning and deep learning. Sensors 2021, 21, 8019.

18. Misra, A.; Samuel, S.; Cao, S.; Shariatmadari, K. Detection of driver cognitive distraction using machine learning methods. IEEE Access 2023, 11, 18000–18012.

19. Lu, H.; Niu, X.; Wang, J.; Wang, Y.; Hu, Q.; Tang, J.; Zhang, Y.; Yuan, K.; Huang, B.; Yu, Z.; et al. Gpt as psychologist? Preliminary evaluations for gpt-4v on visual affective computing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 322–331.

20. Alshanskaia, E.I.; Zhozhikashvili, N.A.; Polikanova, I.S.; Martynova, O.V. Heart rate response to cognitive load as a marker of depression and increased anxiety. Front. Psychiatry 2024, 15, 1355846.

21. Ayres, P.; Lee, J.Y.; Paas, F.; Van Merrienboer, J.J. The validity of physiological measures to identify differences in intrinsic cognitive load. Front. Psychol. 2021, 12, 702538.

22. Wang, J.; Lu, H.; Wang, A.; Yang, X.; Chen, Y.; He, D.; Wu, K. Physmle: Generalizable and priors-inclusive multi-task remote physiological measurement. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 4908–4925.

23. Wang, J.; Wang, A.; Hu, H.; Wu, K.; He, D. Multi-Source Domain Generalization for ECG-Based Cognitive Load Estimation: Adversarial Invariant and Plausible Uncertainty Learning. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1631–1635.

24. Zhang, Y.; Yang, Q. A survey on multi-task learning. IEEE Trans. Knowl. Data Eng. 2021, 34, 5586–5609.

25. Larraga-García, B.; Bejerano, V.R.; Oregui, X.; Rubio-Bolívar, J.; Quintana-Díaz, M.; Gutiérrez, Á. Physiological and performance metrics during a cardiopulmonary real-time feedback simulation to estimate cognitive load. Displays 2024, 84, 102780.

26. Zhou, W.; Zhao, L.; Zhang, R.; Cui, Y.; Huang, H.; Qie, K.; Wang, C. Vision Technologies with Applications in Traffic Surveillance Systems: A Holistic Survey. arXiv 2024, arXiv:2412.00348.

27. Doudou, M.; Bouabdallah, A.; Berge-Cherfaoui, V. Driver drowsiness measurement technologies: Current research, market solutions, and challenges. Int. J. Intell. Transp. Syst. Res. 2020, 18, 297–319.

28. Yang, H.; Wu, J.; Hu, Z.; Lv, C. Real-time driver cognitive workload recognition: Attention-enabled learning with multimodal information fusion. IEEE Trans. Ind. Electron. 2023, 71, 4999–5009.

29. Wang, J.; Yang, X.; Wang, Z.; Wei, X.; Wang, A.; He, D.; Wu, K. Efficient mixture-of-expert for video-based driver state and physiological multi-task estimation in conditional autonomous driving. arXiv 2024, arXiv:2410.21086.

30. Wang, J.; Wei, X.; Lu, H.; Chen, Y.; He, D. Condiff-rppg: Robust remote physiological measurement to heterogeneous occlusions. IEEE J. Biomed. Health Inform. 2024, 28, 7090–7102.

31. Lu, H.; Tang, J.; Wang, J.; Lu, Y.; Cao, X.; Hu, Q.; Wang, Y.; Zhang, Y.; Xie, T.; Zhang, Y.; et al. Sage Deer: A Super-Aligned Driving Generalist Is Your Copilot. arXiv 2025, arXiv:2505.10257.

32. Wang, J.; Yang, X.; Hu, Q.; Tang, J.; Liu, C.; He, D.; Wang, Y.; Chen, Y.; Wu, K. PhysDrive: A Multimodal Remote Physiological Measurement Dataset for In-vehicle Driver Monitoring. arXiv 2025, arXiv:2507.19172.

33. Wang, J.; Lu, H.; Wang, A.; Chen, Y.; He, D. Hierarchical style-aware domain generalization for remote physiological measurement. IEEE J. Biomed. Health Inform. 2023, 28, 1635–1643.

34. Wang, J.; Lu, H.; Han, H.; Chen, Y.; He, D.; Wu, K. Generalizable Remote Physiological Measurement via Semantic-Sheltered Alignment and Plausible Style Randomization. IEEE Trans. Instrum. Meas. 2024, 74, 5003014.

35. Wang, C.; Tsepa, O.; Ma, J.; Wang, B. Graph-mamba: Towards long-range graph sequence modeling with selective state spaces. arXiv 2024, arXiv:2402.00789.

36. Xie, X.; Cui, Y.; Tan, T.; Zheng, X.; Yu, Z. Fusionmamba: Dynamic feature enhancement for multimodal image fusion with mamba. Vis. Intell. 2024, 2, 37.

37. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

38. Stojmenova, K.; Duh, S.E.; Sodnik, J. A review on methods for assessing driver’s cognitive load. IPSI BGD Trans. Internet Res. 2018, 14, 1–8.

39. Reimer, B.; Mehler, B.; Coughlin, J.F.; Godfrey, K.M.; Tan, C. An on-road assessment of the impact of cognitive workload on physiological arousal in young adult drivers. In Proceedings of the 1st International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Essen, Germany, 21–22 September 2009; pp. 115–118.

40. Zander, T.O.; Andreessen, L.M.; Berg, A.; Bleuel, M.; Pawlitzki, J.; Zawallich, L.; Krol, L.R.; Gramann, K. Evaluation of a dry EEG system for application of passive brain-computer interfaces in autonomous driving. Front. Hum. Neurosci. 2017, 11, 78.

41. Gerjets, P.; Walter, C.; Rosenstiel, W.; Bogdan, M.; Zander, T.O. Cognitive state monitoring and the design of adaptive instruction in digital environments: Lessons learned from cognitive workload assessment using a passive brain-computer interface approach. Front. Neurosci. 2014, 8, 385.

42. Kumar, N.; Kumar, J. Measurement of cognitive load in HCI systems using EEG power spectrum: An experimental study. Procedia Comput. Sci. 2016, 84, 70–78.

43. Ahmed, M.U.; Begum, S.; Gestlöf, R.; Rahman, H.; Sörman, J. Machine Learning for Cognitive Load Classification—A Case Study on Contact-Free Approach. In Proceedings of the Artificial Intelligence Applications and Innovations: 16th IFIP WG 12.5 International Conference, AIAI 2020, Neos Marmaras, Greece, 5–7 June 2020; Proceedings, Part I 16. Springer: Cham, Switzerland, 2020; pp. 31–42.

44. Ahmed, S.G.; Verbert, K.; Siedahmed, N.; Khalil, A.; AlJassmi, H.; Alnajjar, F. AI Innovations in rPPG Systems for Driver Monitoring: Comprehensive Systematic Review and Future Prospects. IEEE Access 2025, 13, 22893–22918.

45. Nasri, M.; Kosa, M.; Chukoskie, L.; Moghaddam, M.; Harteveld, C. Exploring Eye Tracking to Detect Cognitive Load in Complex Virtual Reality Training. In Proceedings of the 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bellevue, WA, USA, 21–25 October 2024; pp. 51–54.

46. Razzaq, K.; Shah, M. Machine learning and deep learning paradigms: From techniques to practical applications and research frontiers. Computers 2025, 14, 93.

47. Plazibat, I.; Gašperov, L.; Petričević, D. Nudging Technique in Retail: Increasing Consumer Consumption. JCGIRM 2021, 2, 1–9.

48. Paulchamy, B.; Yahya, A.; Chinnasamy, N.; Kasilingam, K. Facial expression recognition through transfer learning: Integration of VGG16, ResNet, and AlexNet with a multiclass classifier. Acadlore Trans. AI Mach. Learn. 2025, 4, 25–39.

49. Khan, M.A.; Asadi, H.; Qazani, M.R.C.; Lim, C.P.; Nahavandi, S. Functional near-infrared spectroscopy (fNIRS) and Eye tracking for Cognitive Load classification in a Driving Simulator Using Deep Learning. arXiv 2024, arXiv:2408.06349.

50. Arumugam, D.; Ho, M.K.; Goodman, N.D.; Van Roy, B. Bayesian reinforcement learning with limited cognitive load. Open Mind 2024, 8, 395–438.

51. Ding, L.; Terwilliger, J.; Parab, A.; Wang, M.; Fridman, L.; Mehler, B.; Reimer, B. CLERA: A unified model for joint cognitive load and eye region analysis in the wild. ACM Trans. Comput.-Hum. Interact. 2023, 30, 1–23.

52. Yadav, S.; Tan, Z.H. Audio mamba: Selective state spaces for self-supervised audio representations. arXiv 2024, arXiv:2406.02178.

53. Zhang, X.; Zhang, Q.; Liu, H.; Xiao, T.; Qian, X.; Ahmed, B.; Ambikairajah, E.; Li, H.; Epps, J. Mamba in speech: Towards an alternative to self-attention. IEEE Trans. Audio Speech Lang. Process. 2025, 33, 1933–1948.

54. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

55. Gholipour, Y.; Mirabdollahi Shams, E. Introduction New Combination of Zero-Order Hold and First-Order Hold. 2014. Available online: https://ssrn.com/abstract=5231692 (accessed on 30 July 2014).

56. Reed, P.; Steed, I. The effects of concurrent cognitive task load on recognising faces displaying emotion. Acta Psychol. 2019, 193, 153–159.

57. Moon, J.; Ryu, J. The effects of social and cognitive cues on learning comprehension, eye-gaze pattern, and cognitive load in video instruction. J. Comput. High. Educ. 2021, 33, 39–63.

58. Fridman, L.; Reimer, B.; Mehler, B.; Freeman, W.T. Cognitive load estimation in the wild. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–9.

59. Abilkassov, S.; Kairgaliyev, M.; Zhakanov, B.; Abibullaev, B. A System For Drivers’ Cognitive Load Estimation Based On Deep Convolutional Neural Networks and Facial Feature Analysis. In Proceedings of the 2021 22nd IEEE International Conference on Industrial Technology (ICIT), Virtual, 10–12 March 2021; Volume 1, pp. 994–1000.

60. Zhong, R.; Zhou, Y.; Gou, C. Est-tsanet: Video-based remote heart rate measurement using temporal shift attention network and estmap. IEEE Trans. Instrum. Meas. 2023, 73, 1–14.

61. Kim, D.Y.; Cho, S.Y.; Lee, K.; Sohn, C.B. A study of projection-based attentive spatial–temporal map for remote photoplethysmography measurement. Bioengineering 2022, 9, 638.

62. Wang, J.; Yang, X.; Lu, H.; He, D.; Wu, K. Align the GAP: Prior-based Unified Multi-Task Remote Physiological Measurement Framework For Domain Generalization and Personalization. arXiv 2025, arXiv:2506.16160.

63. Yang, X.; Fan, Y.; Liu, C.; Su, H.; Guo, W.; Wang, J.; He, D. Not Only Consistency: Enhance Test-Time Adaptation with Spatio-temporal Inconsistency for Remote Physiological Measurement. arXiv 2025, arXiv:2507.07908.

64. Liu, C.C. Data Collection Report. 2017. Available online: https://www.dsp.toronto.edu/projects/eDREAM/publications/edream/test.pdf (accessed on 30 July 2014).

65. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Adv. Psychol. 1988, 52, 139–183.

66. Peng, Y.; Deng, H.; Xiang, G.; Wu, X.; Yu, X.; Li, Y.; Yu, T. A multi-source fusion approach for driver fatigue detection using physiological signals and facial image. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16614–16624.

67. Hara, K.; Kataoka, H.; Satoh, Y. Can spatiotemporal 3d cnns retrace the history of 2d cnns and imagenet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

68. Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. Vivit: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 6836–6846.

69. Li, K.; Li, X.; Wang, Y.; He, Y.; Wang, Y.; Wang, L.; Qiao, Y. Videomamba: State space model for efficient video understanding. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2024; pp. 237–255.

70. De Haan, G.; Jeanne, V. Robust pulse rate from chrominance-based rPPG. IEEE Trans. Biomed. Eng. 2013, 60, 2878–2886.

71. Wang, W.; Den Brinker, A.C.; Stuijk, S.; De Haan, G. Algorithmic principles of remote PPG. IEEE Trans. Biomed. Eng. 2016, 64, 1479–1491.

72. Tarassenko, L.; Villarroel, M.; Guazzi, A.; Jorge, J.; Clifton, D.; Pugh, C. Non-contact video-based vital sign monitoring using ambient light and auto-regressive models. Physiol. Meas. 2014, 35, 807.

73. Lu, H.; Han, H.; Zhou, S.K. Dual-GAN: Joint BVP and Noise Modeling for Remote Physiological Measurement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 12404–12413.

74. Liu, X.; Fromm, J.; Patel, S.; McDuff, D. Multi-task temporal shift attention networks for on-device contactless vitals measurement. Adv. Neural Inf. Process. Syst. 2020, 33, 19400–19411.

75. Narayanswamy, G.; Liu, Y.; Yang, Y.; Ma, C.; Liu, X.; McDuff, D.; Patel, S. Bigsmall: Efficient multi-task learning for disparate spatial and temporal physiological measurements. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 7914–7924.

76. Huo, C.; Yin, P.; Fu, B. MultiPhys: Heterogeneous Fusion of Mamba and Transformer for Video-Based Multi-Task Physiological Measurement. Sensors 2024, 25, 100.

77. McDuff, D.J.; Estepp, J.R.; Piasecki, A.M.; Blackford, E.B. A survey of remote optical photoplethysmographic imaging methods. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6398–6404.

78. Zagermann, J.; Pfeil, U.; Reiterer, H. Studying eye movements as a basis for measuring cognitive load. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–6.

79. Shi, L.; Zhong, B.; Liang, Q.; Hu, X.; Mo, Z.; Song, S. Mamba Adapter: Efficient Multi-Modal Fusion for Vision-Language Tracking. IEEE Trans. Circuits Syst. Video Technol. 2025; early access.

80. Philipp, G.; Song, D.; Carbonell, J.G. The exploding gradient problem demystified-definition, prevalence, impact, origin, tradeoffs, and solutions. arXiv 2017, arXiv:1712.05577.

| Symbol | Description |
|---|---|
| L | Length of the input time window (number of frames, e.g., 300) |
| D | Total concatenated feature dimension after alignment |
|  | Feature sequences extracted from left eye, right eye, and mouth |
|  | Feature sequence extracted from 106-point facial landmarks |
|  | Feature sequence extracted from STMap (spatio-temporal map) |
| F | Concatenated multi-region feature sequence |
|  | Forward and backward Mamba-encoded features |
|  | Projected embeddings via linear layers after Mamba |
|  | Concatenated bidirectional features |
|  | Temporal-aggregated global representation via mean pooling |
|  | Predicted heart rate, respiration rate, and cognitive load |
|  | Ground truth labels for HR, RR, and cognitive load |
|  | Smooth L1 losses for HR and RR regression |
|  | Truncated cross-entropy loss for cognitive load classification |
|  | Adaptation weight |

| Method | eDream Acc (%) | eDream F1 (%) | eDream Sens (%) | eDream Spec (%) | MCDD Acc (%) | MCDD F1 (%) | MCDD Sens (%) | MCDD Spec (%) |
|---|---|---|---|---|---|---|---|---|
| CNN [17] | 65.23 | 53.18 | 58.97 | 71.02 | 64.01 | 52.05 | 58.04 | 69.56 |
| LSTM [17] | 66.84 | 54.79 | 60.98 | 72.71 | 65.62 | 53.63 | 59.95 | 71.34 |
| CNN + SVM [17] | 68.35 | 56.17 | 62.94 | 74.28 | 67.14 | 55.02 | 61.97 | 72.73 |
| AE + SVM [17] | 69.52 | 57.63 | 64.48 | 75.55 | 68.37 | 56.41 | 63.49 | 74.21 |
| ResNet3D [67] | 77.18 | 65.19 | 70.96 | 81.05 | 72.04 | 61.10 | 68.33 | 73.80 |
| ViViT [68] | 78.46 | 66.97 | 72.98 | 82.16 | 71.76 | 56.57 | 57.25 | 78.63 |
| CLERA [51] | 75.82 | 62.95 | 68.94 | 79.53 | 75.09 | 62.07 | 68.02 | 78.96 |
| VDMoE [29] | 79.89 | 68.96 | 86.51 | 77.59 | 79.96 | 68.81 | 77.13 | 80.77 |
| VideoMamba [69] | 80.15 | 70.25 | 72.50 | 84.33 | 73.00 | 58.52 | 58.48 | 71.11 |
| CogMamba | 83.56 | 74.48 | 88.25 | 82.19 | 81.22 | 71.62 | 79.33 | 84.02 |

| Method | HR MAE ↓ | HR RMSE ↓ | HR p ↑ | RR MAE ↓ | RR RMSE ↓ | RR p ↑ |
|---|---|---|---|---|---|---|
| CHROM [70] | 20.31 | 25.71 | 0.21 | — | — | — |
| POS [71] | 18.53 | 22.42 | 0.25 | — | — | — |
| ARM-RR [72] | — | — | — | 4.24 | 5.11 | 0.26 |
| Dual-GAN [73] | 15.85 | 18.52 | 0.47 | — | — | — |
| ConDiff-rPPG [30] | 15.41 | 18.72 | 0.49 | — | — | — |
| HSRD [33] | 14.82 | 17.91 | 0.51 | — | — | — |
| DG-rPPG [34] | 14.22 | 17.22 | 0.53 | — | — | — |
| MTTS-CAN [74] | 13.62 | 16.52 | 0.57 | 3.62 | 4.32 | 0.31 |
| BigSmall [75] | 12.91 | 15.91 | 0.58 | 3.42 | 4.11 | 0.32 |
| MultiPhys [76] | 12.51 | 15.41 | 0.60 | 3.32 | 4.01 | 0.32 |
| PhysMLE [22] | 11.91 | 14.81 | 0.62 | 3.22 | 3.91 | 0.33 |
| ResNet3D [67] | 10.50 | 14.00 | 0.65 | 2.91 | 3.82 | 0.37 |
| ViViT [68] | 10.21 | 13.70 | 0.65 | 2.82 | 3.72 | 0.38 |
| VDMoE [29] | 9.17 | 13.80 | 0.65 | 2.53 | 3.02 | 0.34 |
| VideoMamba [69] | 9.83 | 12.72 | 0.66 | 2.45 | 3.57 | 0.37 |
| CogMamba | 8.97 | 13.10 | 0.66 | 2.20 | 2.69 | 0.39 |

| Method | HR MAE ↓ | HR RMSE ↓ | HR p ↑ | RR MAE ↓ | RR RMSE ↓ | RR p ↑ |
|---|---|---|---|---|---|---|
| CHROM [70] | 18.33 | 19.70 | 0.20 | — | — | — |
| POS [71] | 17.33 | 20.02 | 0.22 | — | — | — |
| ARM-RR [72] | — | — | — | 7.32 | 9.16 | 0.11 |
| Dual-GAN [73] | 13.29 | 18.86 | 0.31 | — | — | — |
| ConDiff-rPPG [30] | 15.32 | 19.58 | 0.29 | — | — | — |
| HSRD [33] | 13.65 | 17.29 | 0.30 | — | — | — |
| DG-rPPG [34] | 12.97 | 17.58 | 0.31 | — | — | — |
| MTTS-CAN [74] | 13.96 | 18.31 | 0.30 | 5.22 | 6.68 | 0.37 |
| BigSmall [75] | 13.13 | 18.02 | 0.32 | 5.26 | 6.72 | 0.37 |
| MultiPhys [76] | 12.17 | 18.80 | 0.45 | 5.53 | 7.02 | 0.38 |
| PhysMLE [22] | 12.03 | 17.11 | 0.46 | 5.12 | 7.03 | 0.38 |
| ResNet3D [67] | 14.27 | 19.02 | 0.29 | 5.63 | 6.58 | 0.14 |
| ViViT [68] | 14.92 | 19.61 | 0.28 | 5.33 | 6.10 | 0.16 |
| VDMoE [29] | 10.32 | 15.37 | 0.53 | 4.98 | 6.53 | 0.45 |
| VideoMamba [69] | 14.02 | 18.65 | 0.42 | 4.53 | 6.02 | 0.36 |
| CogMamba | 10.04 | 14.20 | 0.56 | 4.18 | 5.39 | 0.51 |

| Model Variant | Cognitive Load Acc (%) | Cognitive Load F1 (%) | HR MAE ↓ | HR p ↑ | RR MAE ↓ | RR p ↑ |
|---|---|---|---|---|---|---|
| ResNet3D [67] | 77.18 | 65.19 | 10.50 | 0.65 | 2.91 | 0.37 |
| ViViT [68] | 78.46 | 66.97 | 10.21 | 0.65 | 2.82 | 0.38 |
| CogMamba w/o | 82.56 | 73.65 | 15.12 | 0.41 | 6.41 | 0.12 |
| CogMamba w/o | 81.23 | 71.23 | 9.11 | 0.65 | 2.61 | 0.35 |
| CogMamba w/o | 70.54 | 62.01 | 9.01 | 0.66 | 2.34 | 0.38 |
| CogMamba w/o | 70.76 | 62.23 | 9.02 | 0.65 | 2.35 | 0.37 |
| CogMamba w/o | 75.54 | 70.01 | 8.99 | 0.66 | 2.27 | 0.39 |
| CogMamba (Full) | 83.56 | 74.48 | 8.97 | 0.66 | 2.20 | 0.39 |

| Model Variant | Cognitive Load Acc (%) | Cognitive Load F1 (%) | HR MAE ↓ | HR p ↑ | RR MAE ↓ | RR p ↑ |
|---|---|---|---|---|---|---|
| ResNet3D [67] | 72.04 | 61.10 | 14.27 | 0.29 | 5.63 | 0.14 |
| ViViT [68] | 71.76 | 56.57 | 14.92 | 0.28 | 5.33 | 0.16 |
| CogMamba w/o | 80.23 | 70.62 | 16.22 | 0.12 | 8.31 | 0.08 |
| CogMamba w/o | 78.90 | 70.00 | 10.11 | 0.54 | 4.61 | 0.50 |
| CogMamba w/o | 69.56 | 60.12 | 10.11 | 0.55 | 4.24 | 0.50 |
| CogMamba w/o | 69.70 | 60.28 | 10.12 | 0.55 | 4.25 | 0.50 |
| CogMamba w/o | 75.50 | 66.91 | 10.05 | 0.56 | 4.27 | 0.51 |
| CogMamba (Full) | 81.22 | 71.62 | 10.04 | 0.56 | 4.18 | 0.51 |

| Method | Cognitive Load Acc (%) | Cognitive Load F1 (%) | HR MAE ↓ | HR p ↑ | RR MAE ↓ | RR p ↑ |
|---|---|---|---|---|---|---|
| ResNet3D [67] | 57.03 | 52.63 | 17.07 | 0.424 | 4.96 | 0.236 |
| ViViT [68] | 58.92 | 51.88 | 16.26 | 0.420 | 4.31 | 0.226 |
| VDMoE [29] | 65.97 | 60.17 | 11.92 | 0.456 | 3.29 | 0.238 |
| HSRD [33] | — | — | 13.65 | 0.455 | 3.79 | 0.255 |
| DG-rPPG [34] | — | — | 13.27 | 0.455 | 3.67 | 0.264 |
| MultiPhys [76] | — | — | 16.26 | 0.420 | 4.31 | 0.226 |
| PhysMLE [22] | — | — | 15.48 | 0.434 | 4.18 | 0.233 |
| CogMamba | 68.49 | 62.14 | 12.66 | 0.412 | 3.88 | 0.243 |

| Method | Cognitive Load Acc (%) | Cognitive Load F1 (%) | HR MAE ↓ | HR p ↑ | RR MAE ↓ | RR p ↑ |
|---|---|---|---|---|---|---|
| ResNet3D [67] | 55.43 | 52.77 | 18.55 | 0.204 | 7.32 | 0.098 |
| ViViT [68] | 56.23 | 59.60 | 19.40 | 0.197 | 6.93 | 0.113 |
| VDMoE [29] | 65.97 | 58.17 | 17.75 | 0.210 | 5.20 | 0.210 |
| HSRD [33] | — | — | 13.42 | 0.371 | 6.47 | 0.315 |
| DG-rPPG [34] | — | — | 13.05 | 0.392 | 5.43 | 0.357 |
| MultiPhys [76] | — | — | 15.82 | 0.315 | 7.19 | 0.266 |
| PhysMLE [22] | — | — | 15.64 | 0.322 | 6.66 | 0.266 |
| CogMamba | 66.85 | 60.13 | 16.86 | 0.217 | 5.72 | 0.203 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xie, Y.; Guo, B. CogMamba: Multi-Task Driver Cognitive Load and Physiological Non-Contact Estimation with Multimodal Facial Features. Sensors 2025, 25, 5620. https://doi.org/10.3390/s25185620
