DPCR-SLAM: A Dual-Point-Cloud-Registration SLAM Based on Line Features for Mapping an Indoor Mobile Robot

Abstract

1. Introduction

- 1.

- Designs a system framework based on dual registration that strengthens the data association between reference point clouds and target point clouds, improving the positioning accuracy and mapping quality of indoor cleaning robots.

- 2.

- Adopts an improved Point-to-Line ICP algorithm leveraging structural regularities in indoor environments, achieving faster convergence than conventional PLICP through directional error constraints.

- 3.

- Performs graph optimization on the data between regional maps; because the error equations have lower dimensionality, graph optimization processing time is reduced.

- 4.

- Only regional maps need to be stored as nodes of the graph structure, reducing the storage space required for maps.

2. Related Work

2.1. Iterative Closest Point

2.2. Filter-Based SLAM

2.3. Graph Optimization SLAM

3. System Description

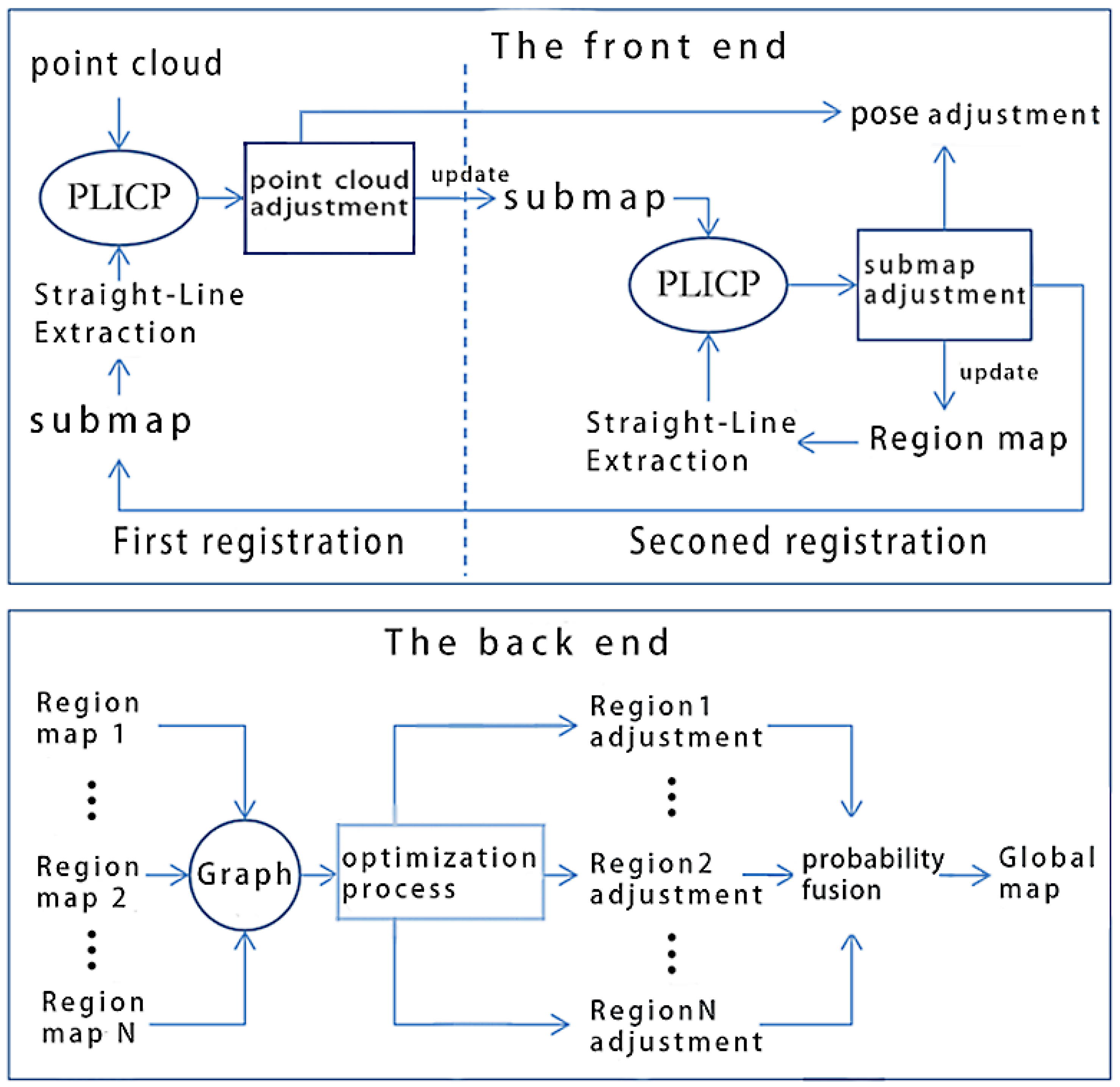

3.1. Workflow

- First registration: The point cloud is registered to the submap using the improved PLICP, and the point cloud and robot pose are then adjusted according to the registration result. The adjusted point cloud is used to update the submap, which is built from the most recent N frames of point clouds. At the start of operation, when no submap exists yet, the incoming point cloud is used directly to initialize the submap.

- Second registration: The updated submap is aligned with the regional map using the improved PLICP, and the submap and robot pose are adjusted according to the registration result. The regional map is a grid map formed by fusing the submaps within a region; the regions are obtained by dividing the workspace into relatively independent sections at a set spacing. When the robot first enters a region, no regional map exists yet, so the submap is used directly to build the regional map.
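The two-level bookkeeping described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: `SubmapWindow` and `region_index` are hypothetical names, and the square tiling of the workspace into regions of side `width` is an assumption, since the exact division rule is given in Section 3.4.1.

```python
from collections import deque
import math


class SubmapWindow:
    """Hypothetical helper: a submap built from the most recent N point-cloud frames."""

    def __init__(self, n_frames: int):
        # A deque with maxlen drops the oldest frame automatically once N frames are stored
        self.frames = deque(maxlen=n_frames)

    def add(self, cloud):
        """Append one pose-corrected point cloud (a list of (x, y) tuples)."""
        self.frames.append(list(cloud))

    def points(self):
        """Flatten the stored frames into the point set used to rebuild the submap grid."""
        return [p for frame in self.frames for p in frame]


def region_index(x: float, y: float, width: float):
    """Assumed square tiling: map a world position to the (col, row) index of its region."""
    return (math.floor(x / width), math.floor(y / width))


# Usage: with N = 2 only the two newest frames survive
window = SubmapWindow(n_frames=2)
for cloud in ([(0.0, 0.0)], [(1.0, 1.0)], [(2.0, 2.0)]):
    window.add(cloud)
print(window.points())               # [(1.0, 1.0), (2.0, 2.0)]
print(region_index(7.5, -0.2, 5.0))  # (1, -1)
```

A region change (a new value of `region_index`) is a natural trigger for closing one regional map and starting the next, which is when the graph optimization in Section 3.5 runs.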

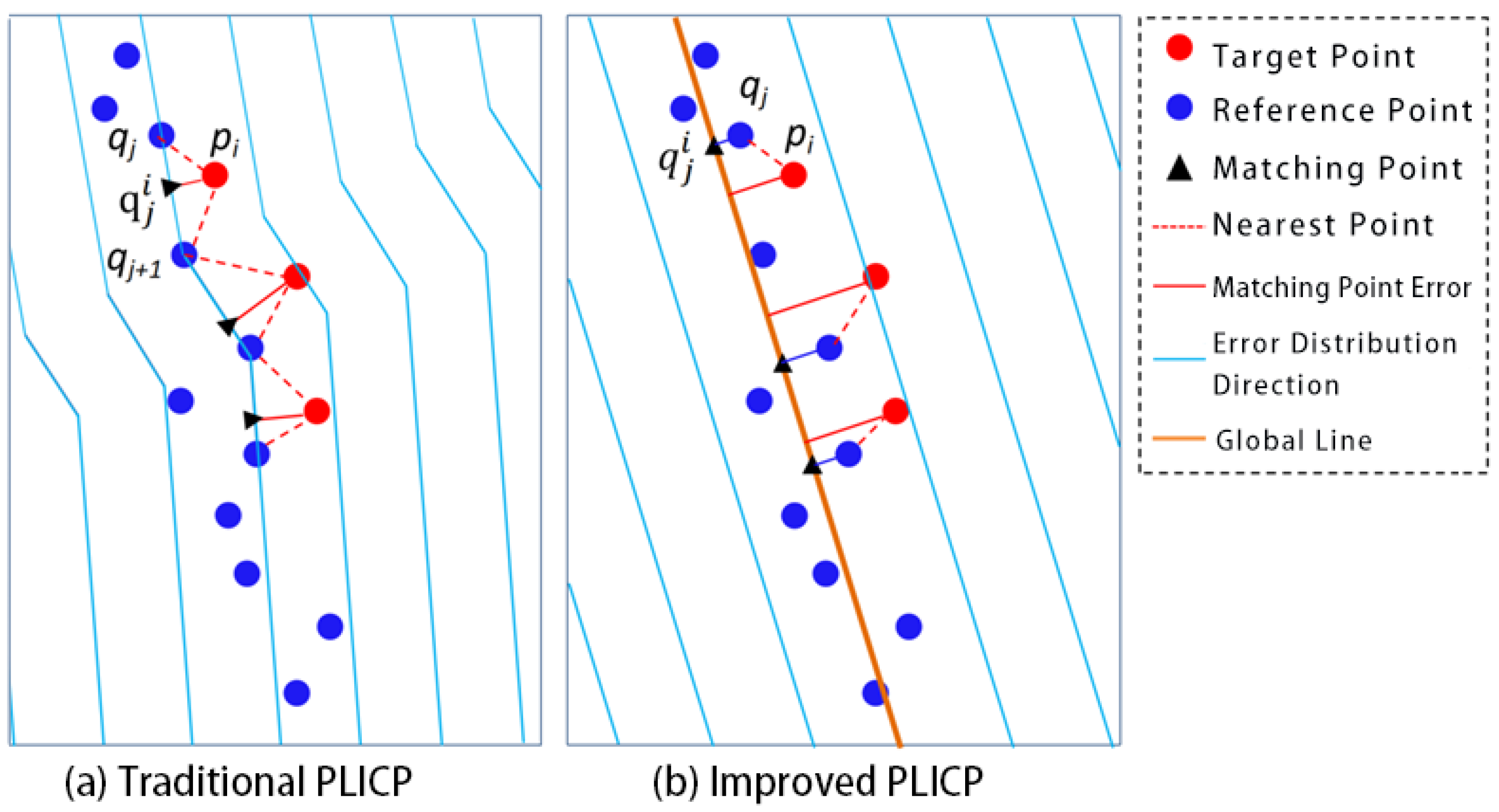

3.2. Improved PLICP

3.2.1. Steps

- (1)

- Use the RM-Line [31] algorithm to extract line features from the grid map. This algorithm was chosen mainly because it performs better on 2D indoor grid maps than other state-of-the-art methods.

- (2)

- Set the iteration count k = 0 and initialize the rotation and the translation.

- (3)

- Search for matching point pairs. Let the reference point cloud be $P = \{p_j\}$ and the target point cloud be $Q = \{q_i\}$. For each target point $q_i$, search for the nearest free reference point $p_j$. Take the foot of the perpendicular from $q_i$ to the line feature associated with $p_j$ as the matching point $p_i'$, and record the matching point pair as $(q_i, p_i')$.

- (4)

- Repeat step 3 to traverse all target points.

- (5)

- Solve for the rigid-body transformation that best aligns the matching point pairs $\{(q_i, p_i')\}$, yielding the rotation angle $\theta_k$ (rotation matrix $R_k$) and the translation $\mathbf{T}_k$.

- (6)

- Rotate and translate the target point cloud, as shown in Equation (1):

$$Q^{k+1} = R_k\,Q^{k} + \mathbf{T}_k \qquad (1)$$

where $Q^{k}$ is the target point cloud before the kth iteration, $R_k$ is the rotation transformation, and $\mathbf{T}_k$ is the translation transformation.

- (7)

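- Update the error function, as shown in Equation (2):

$$E_k = \frac{1}{N}\sum_{i=1}^{N}\left\lVert q_i^{k+1} - p_i' \right\rVert^2 \qquad (2)$$

where $q_i^{k+1}$ is the ith data point in the target point cloud after the kth iteration and transformation, N is the number of points, and $p_i'$ is the matching point of $q_i^{k+1}$.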

- (8)

- Increment k and repeat steps 3–7 until the error falls below a preset threshold or the maximum number of iterations is reached.
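Steps (1)–(8) can be sketched in NumPy as below. This is a minimal sketch under stated assumptions, not the authors' code: line extraction (RM-Line) is replaced by a precomputed list `ref_lines` giving, for each reference point, a point `a` on its line feature and a unit direction `d`; the nearest-point search is brute force and ignores the "free" qualifier; and the rigid transform in step (5) is solved with the standard closed-form 2D least-squares (Procrustes) solution between each target point and its foot of perpendicular. The function names are hypothetical.

```python
import numpy as np


def foot_of_perpendicular(q, a, d):
    """Project point q onto the line through point a with unit direction d."""
    return a + np.dot(q - a, d) * d


def solve_rigid_2d(src, dst):
    """Closed-form least-squares 2D rotation angle and translation mapping src onto dst."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    s, t = src - mu_s, dst - mu_d
    # Optimal angle = atan2(sum of cross products, sum of dot products) of centred pairs
    theta = np.arctan2(np.sum(s[:, 0] * t[:, 1] - s[:, 1] * t[:, 0]),
                       np.sum(s[:, 0] * t[:, 0] + s[:, 1] * t[:, 1]))
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta), np.cos(theta)]])
    return theta, R, mu_d - R @ mu_s


def improved_plicp_sketch(ref_pts, ref_lines, target, max_iter=50, tol=1e-10):
    """ref_pts: (M, 2) reference points; ref_lines[j] = (a, d) is the line feature assumed
    to be associated with ref_pts[j]; target: (N, 2) target point cloud."""
    Q = np.asarray(target, dtype=float).copy()
    R_total, T_total = np.eye(2), np.zeros(2)
    err = np.inf
    for _ in range(max_iter):
        # Steps (3)-(4): nearest reference point, then the foot on its line feature
        dists = np.linalg.norm(Q[:, None, :] - ref_pts[None, :, :], axis=2)
        nearest = np.argmin(dists, axis=1)
        matches = np.array([foot_of_perpendicular(q, *ref_lines[j])
                            for q, j in zip(Q, nearest)])
        # Step (5): rigid transform for the matched pairs
        _, R, T = solve_rigid_2d(Q, matches)
        # Step (6): Q^{k+1} = R_k Q^k + T_k, accumulating the total transform
        Q = Q @ R.T + T
        R_total, T_total = R @ R_total, R @ T_total + T
        # Step (7): mean squared distance to the matching points
        err = float(np.mean(np.sum((Q - matches) ** 2, axis=1)))
        # Step (8): stop once the error is below the threshold
        if err < tol:
            break
    return R_total, T_total, Q, err
```

Because each target point is pulled toward its projection on a line rather than toward a fixed point, motion along a wall is left free; an L-shaped corner (two perpendicular walls) is enough to constrain both translation directions and the rotation.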

3.2.2. Comparison with Conventional PLICP Algorithms

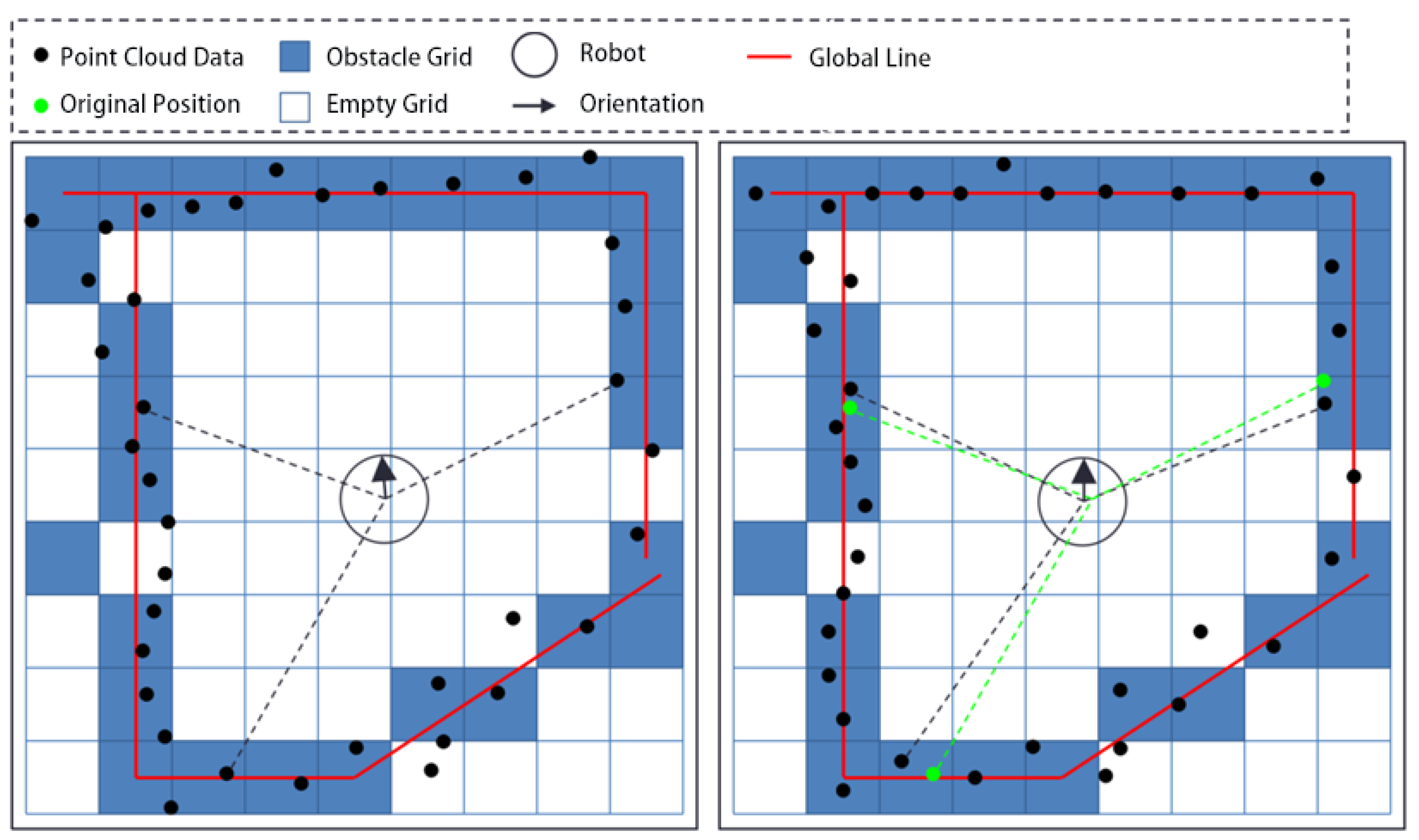

3.3. The First Registration Process

- (1)

- Extract lines from the submap at regular intervals: The improved PLICP algorithm requires line features extracted from the submap. Because most obstacles in indoor environments are relatively static and the grid map accumulates observations statistically, the line features in the submap change relatively slowly. The first registration process therefore re-extracts line features from the submap only once every fixed number of frames, which reduces the computational cost.

- (2)

- Extract obstacle grid cells from the submap and use their center points as data points to form a reference point cloud.

- (3)

- Execute the improved PLICP algorithm.

- (4)

- Adjust the point cloud and robot pose based on the matching results.

- (5)

- Update the submap grid using the adjusted point cloud.
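Steps (1) and (2) of the first registration can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: the occupancy threshold of 0.65, the row/column-to-y/x convention, and the helper names `obstacle_centres` and `LineCache` are all hypothetical, and `extractor` stands in for RM-Line.

```python
import numpy as np


def obstacle_centres(grid, resolution, origin=(0.0, 0.0), occ_thresh=0.65):
    """Return (K, 2) world coordinates of the centres of occupied cells.
    Assumptions: grid[row, col] stores occupancy probability, columns run along x,
    rows along y, and `origin` is the world position of the grid's corner."""
    rows, cols = np.nonzero(np.asarray(grid) >= occ_thresh)
    xs = origin[0] + (cols + 0.5) * resolution
    ys = origin[1] + (rows + 0.5) * resolution
    return np.column_stack([xs, ys])


class LineCache:
    """Hypothetical helper: call an expensive line extractor only every `interval`
    frames and reuse the cached line features in between."""

    def __init__(self, extractor, interval):
        self.extractor = extractor
        self.interval = interval
        self.lines = None
        self.frame = 0

    def get(self, grid):
        # Re-extract on the first call and then on every interval-th frame
        if self.lines is None or self.frame % self.interval == 0:
            self.lines = self.extractor(grid)
        self.frame += 1
        return self.lines
```

With `interval=3`, seven consecutive calls to `get` trigger extraction only on frames 0, 3, and 6. The same cache with a longer interval fits the second registration, where the regional map changes more slowly.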

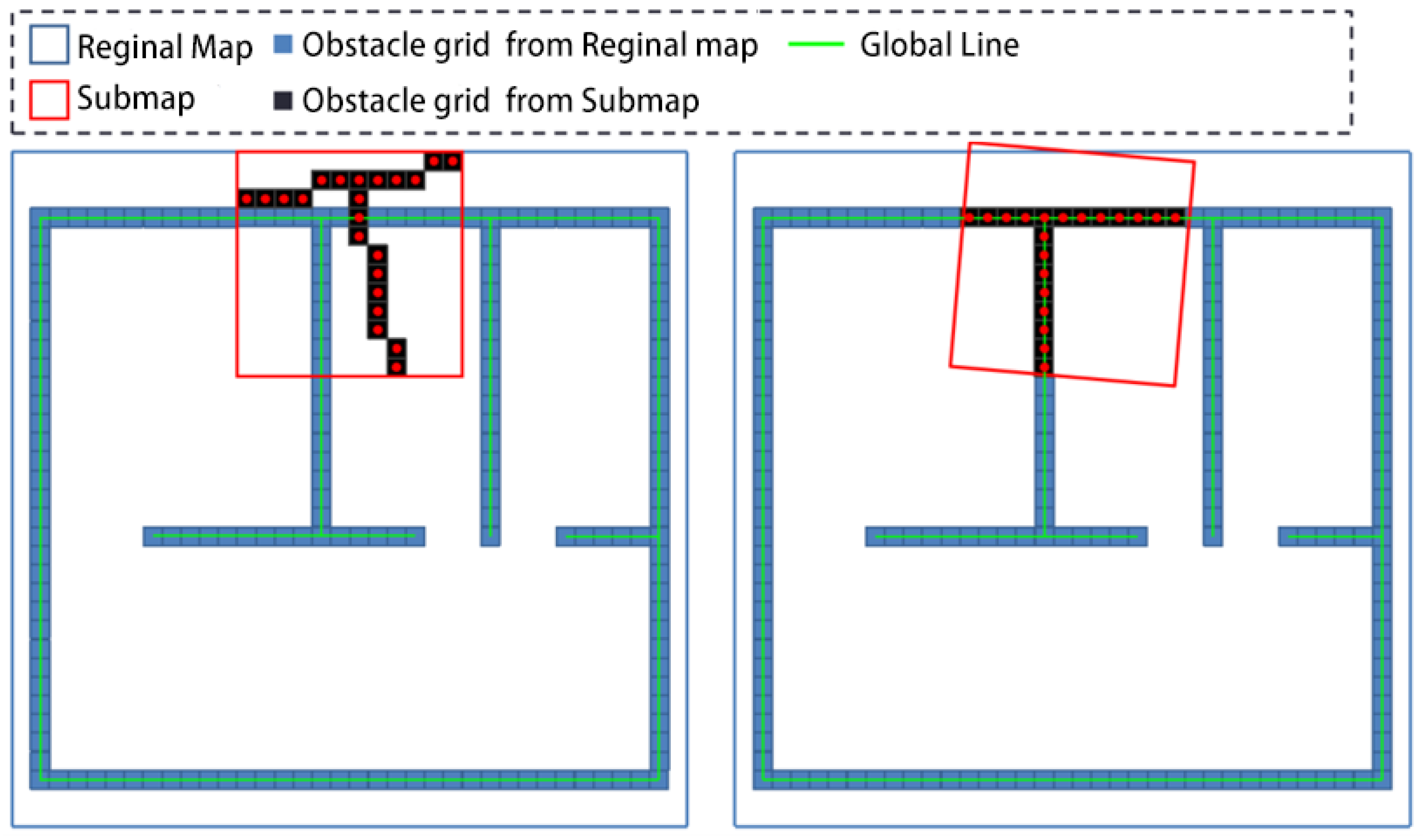

3.4. The Second Registration Process

3.4.1. Regional Division

3.4.2. Registration of Submap and Regional Map

- (1)

- Extract lines from the regional map at regular intervals. As in the first registration, lines are re-extracted only periodically; because the line features in the regional map change even more slowly than those in the submap, the second registration extracts lines from the regional map once every fixed number of frames, with a longer interval than the one used in the first registration.

- (2)

- Extract the obstacle grid cells from the submap and use their center points as data points to form the target point cloud; likewise, form the reference point cloud from the center points of the obstacle grid cells in the regional map.

- (3)

- Execute the improved PLICP algorithm.

- (4)

- Adjust the submap and robot pose based on the matching results.

- (5)

- Update the regional map by using the adjusted submap and the target point cloud.
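Steps (4) and (5) can be sketched with two small helpers: one applies a registration correction to the robot pose, the other fuses an aligned submap into the regional map. Both are assumptions for illustration. The correction is applied as a left-composition in the world frame, and the fusion uses standard log-odds addition with a 0.5 prior; the paper does not specify its exact update rule here. It also assumes the submap has already been resampled onto the regional grid at an integer cell offset. `apply_correction` and `fuse_logodds` are hypothetical names.

```python
import math

import numpy as np


def apply_correction(pose, theta, tx, ty):
    """Left-compose a registration correction (rotation theta, translation (tx, ty))
    with a world-frame robot pose (x, y, yaw); yaw is wrapped to [-pi, pi]."""
    x, y, yaw = pose
    c, s = math.cos(theta), math.sin(theta)
    new_yaw = math.atan2(math.sin(yaw + theta), math.cos(yaw + theta))
    return (c * x - s * y + tx, s * x + c * y + ty, new_yaw)


def fuse_logodds(regional, submap, row_off, col_off, eps=1e-6):
    """Fuse an aligned submap into the regional map by adding log-odds (prior 0.5,
    so cells with p = 0.5 leave the regional map unchanged)."""
    out = np.array(regional, dtype=float)
    h, w = submap.shape
    block = np.clip(out[row_off:row_off + h, col_off:col_off + w], eps, 1 - eps)
    sub = np.clip(submap, eps, 1 - eps)  # avoid log(0) for saturated cells
    logodds = np.log(block / (1 - block)) + np.log(sub / (1 - sub))
    out[row_off:row_off + h, col_off:col_off + w] = 1.0 / (1.0 + np.exp(-logodds))
    return out
```

For example, a cell observed as occupied with probability 0.8 in both maps fuses to 16/17 (about 0.94), while unknown submap cells (0.5) leave the regional map untouched.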

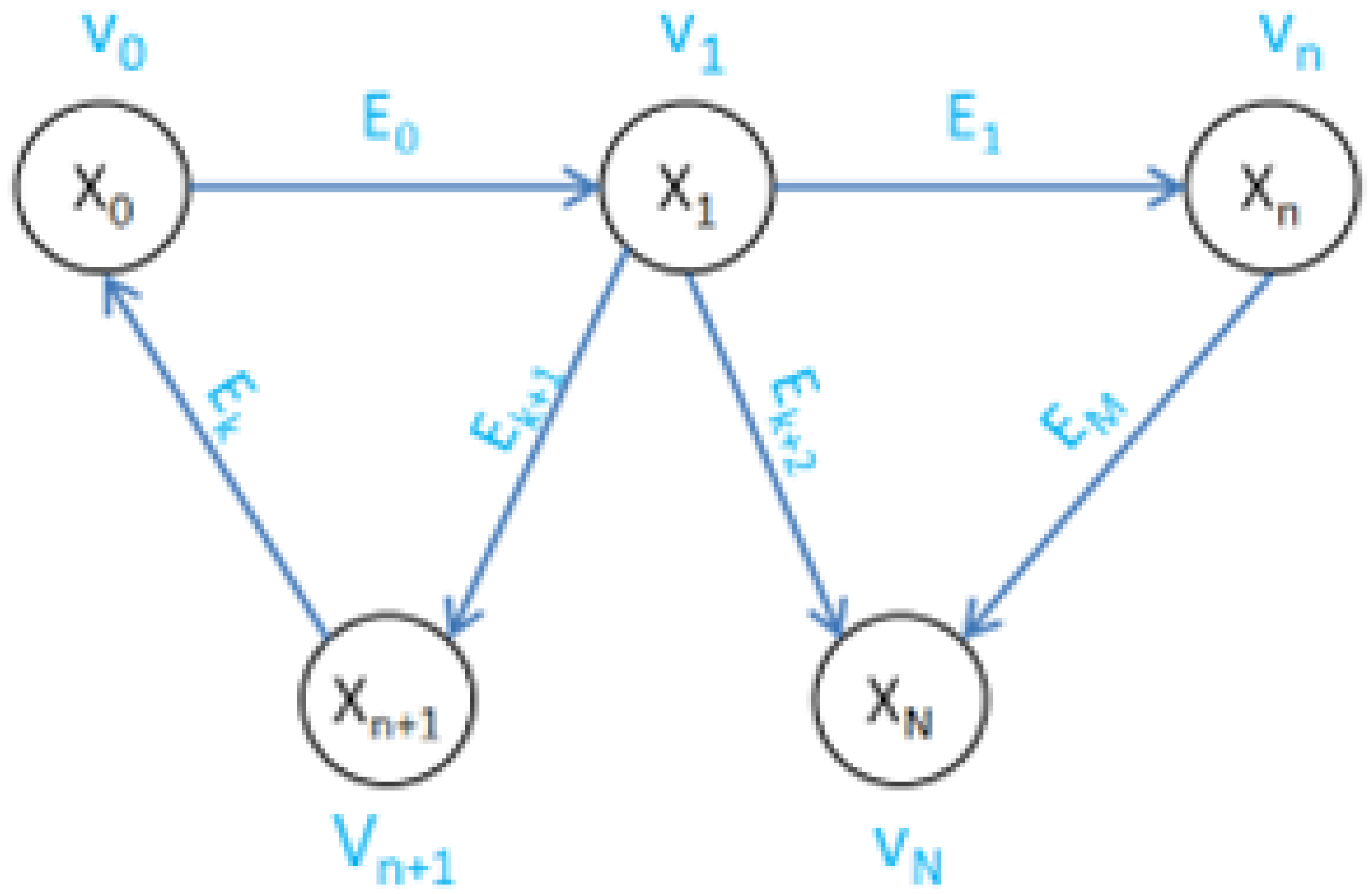

3.5. Region-Based Graph Optimization Method

- (1)

- When the robot leaves region m, add the point cloud and pose corresponding to the regional map of region m to the graph structure as the initial vertex.

- (2)

- Examine the correlation between each of the other regional maps and the regional map of region m. If the conditions are satisfied, add the point cloud and pose of the correlated regional map to the graph structure as a new vertex, and form an edge between the two vertices, with the vertex whose regional map was established earlier as the starting point of the edge and the later one as its endpoint. The correlation between regional maps is evaluated with the following parameters.

- Center distance: the distance between the centers of the two regions on the map. Because the sensor's measurement range is limited, the area the robot can observe from within each region is also limited; when the centers of two regions are far apart, the correlation between the corresponding regional maps becomes very small or vanishes. The center distance $D$ of two regional maps forming an edge should satisfy

$$D \le \lambda S$$

where S is the region width and $\lambda$ is a proportional coefficient.

- MatchRate: the regional map matching rate. After the point clouds of two regional maps are matched, their degree of matching is computed with Equation (11) from the number of matching point pairs and from TerNum1 and TerNum2, the numbers of data points in the two point clouds. Regional maps whose matching rate is below the threshold are considered unrelated.

By selectively adding constraints based on the center-distance and MatchRate thresholds, the graph structure remains sparse, which keeps the optimization efficient and scalable as the map grows.

- (3)

- For each vertex already incorporated into the graph structure, examine its correlation with the other regional maps. If the set conditions are satisfied, add the regional map to the graph structure as a new vertex and establish an edge between the two vertices.

- (4)

- Use the Gauss–Newton method to optimize and solve the graph structure.

- (5)

- Adjust the regional maps based on the graph optimization results.

- (6)

- Obtain the global map through the probabilistic fusion of regional maps.
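Step (4) can be illustrated with a generic 2D pose-graph Gauss–Newton solver. This is a minimal sketch, not the authors' solver: it assumes each vertex is a regional-map pose (x, y, θ), each edge stores the relative pose measured by registering the two regional maps, the error is the simple difference between predicted and measured relative pose, and Jacobians are taken numerically for brevity. Vertex 0 is held fixed to remove the gauge freedom. All names are hypothetical.

```python
import numpy as np


def wrap(a):
    """Wrap angle(s) to [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi


def relative_pose(xi, xj):
    """Pose of vertex j expressed in the frame of vertex i; poses are (x, y, theta)."""
    c, s = np.cos(xi[2]), np.sin(xi[2])
    dx, dy = xj[0] - xi[0], xj[1] - xi[1]
    return np.array([c * dx + s * dy, -s * dx + c * dy, wrap(xj[2] - xi[2])])


def edge_error(xi, xj, z):
    e = relative_pose(xi, xj) - z
    e[2] = wrap(e[2])
    return e


def gauss_newton(poses, edges, iters=20, h=1e-6):
    """poses: (V, 3) initial poses; edges: (i, j, z, info) with z the measured relative
    pose of j in i's frame and info a 3x3 information matrix. Vertex 0 is fixed."""
    x = np.array(poses, dtype=float)
    V = len(x)
    for _ in range(iters):
        H = np.zeros((3 * V, 3 * V))
        b = np.zeros(3 * V)
        for i, j, z, info in edges:
            e = edge_error(x[i], x[j], z)
            Ji, Jj = np.zeros((3, 3)), np.zeros((3, 3))
            for k in range(3):  # central-difference Jacobians w.r.t. both vertices
                d = np.zeros(3)
                d[k] = h
                Ji[:, k] = (edge_error(x[i] + d, x[j], z) - edge_error(x[i] - d, x[j], z)) / (2 * h)
                Jj[:, k] = (edge_error(x[i], x[j] + d, z) - edge_error(x[i], x[j] - d, z)) / (2 * h)
            si, sj = slice(3 * i, 3 * i + 3), slice(3 * j, 3 * j + 3)
            H[si, si] += Ji.T @ info @ Ji
            H[si, sj] += Ji.T @ info @ Jj
            H[sj, si] += Jj.T @ info @ Ji
            H[sj, sj] += Jj.T @ info @ Jj
            b[si] += Ji.T @ info @ e
            b[sj] += Jj.T @ info @ e
        # Solve the normal equations H dx = -b for every vertex except vertex 0
        dx = np.zeros(3 * V)
        dx[3:] = np.linalg.solve(H[3:, 3:], -b[3:])
        x += dx.reshape(V, 3)
        x[:, 2] = wrap(x[:, 2])
        if np.linalg.norm(dx) < 1e-10:
            break
    return x
```

Because only regional maps become vertices, `V` stays small and the linear system solved in each iteration has only 3(V - 1) unknowns. This illustrates why a region-level graph keeps the optimization cheap.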

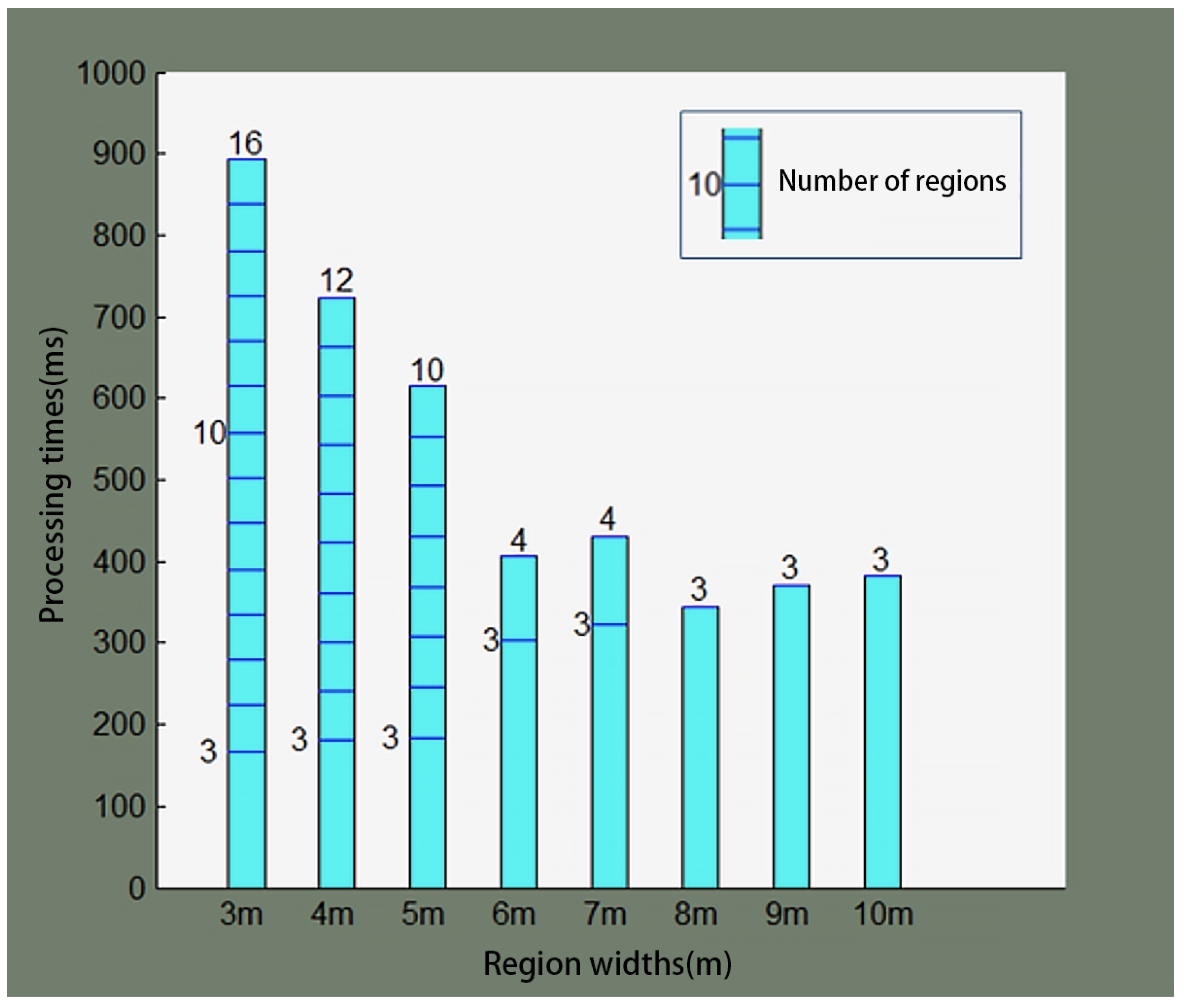

4. Analysis of Region Width

4.1. Effect of Region Width on System Performance

4.1.1. Influence of Region Width on Regional Map Overlap Degree

4.1.2. Influence of Region Width on Regional Map Storage Space

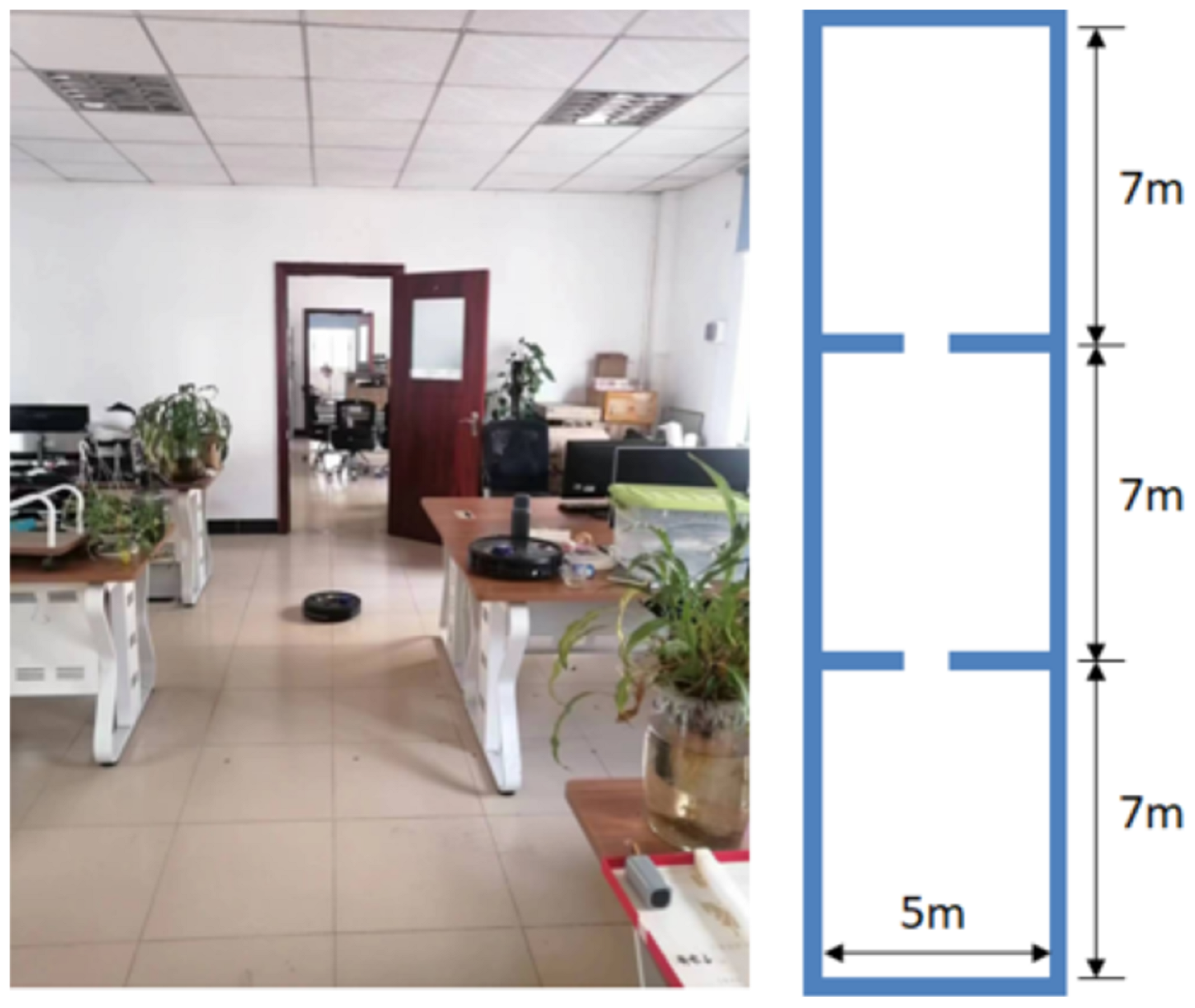

4.2. Region Width Test

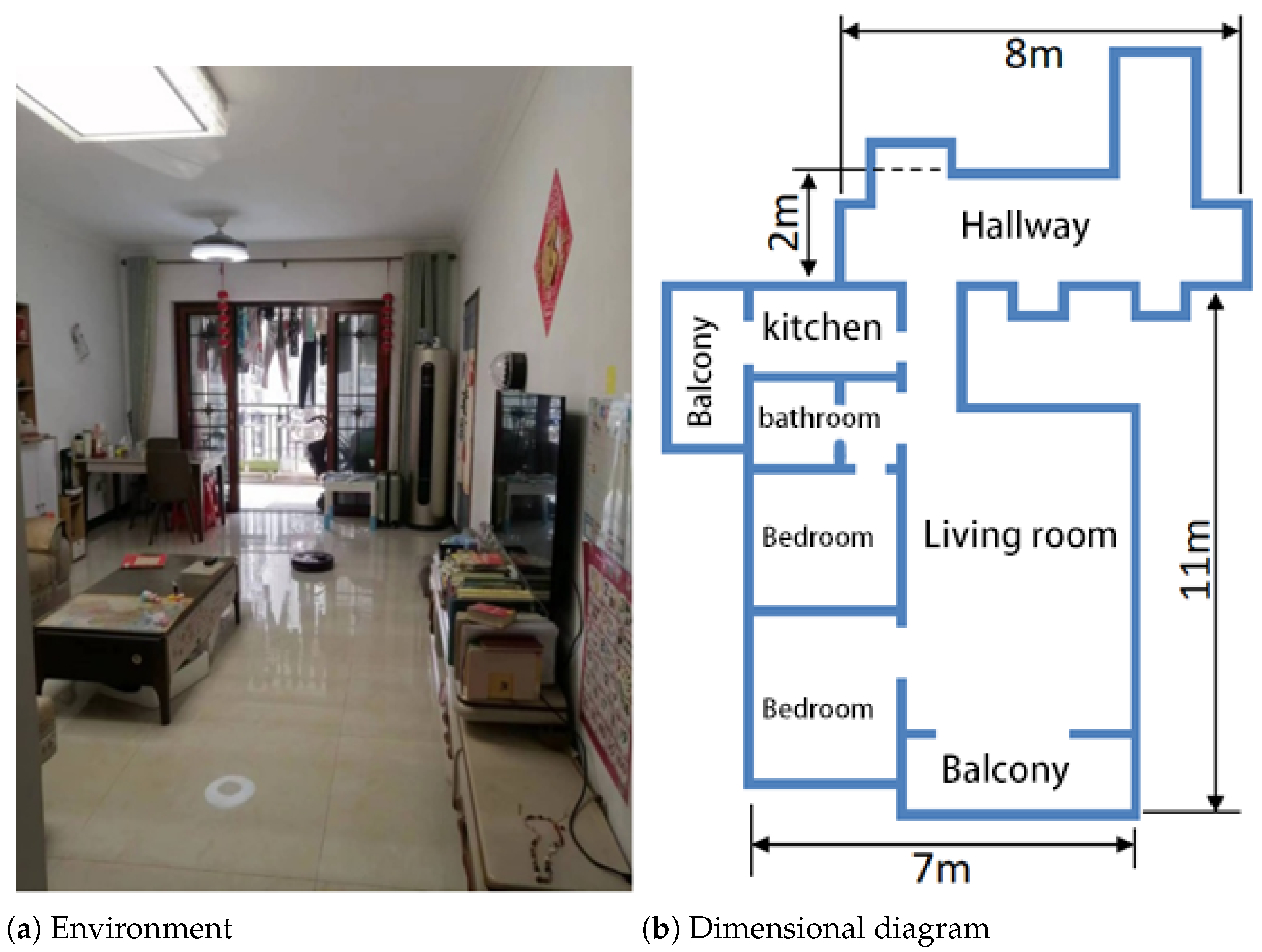

5. Experiment and Evaluation

5.1. Evaluation Standard

- (1)

- Map Storage Space: The memory space required to store the global map, regional maps, and submaps.

- (2)

- Point Cloud Processing Time: The time required for the robot to process the collected point cloud data, including data pre-processing, point cloud registration, map updates, and pose updates. A shorter point cloud processing time indicates higher algorithm efficiency and better real-time performance, and vice versa.

- (3)

- Graph Optimization Processing Time: The time required for the system to perform one graph optimization, including graph structure establishment, objective function solving, and node optimization. Graph optimization is performed when the robot leaves a region and involves extensive computation and data processing. A shorter graph optimization processing time indicates higher algorithm efficiency, lower hardware load, and better real-time performance. An excessively long processing time may lead to system task congestion and high CPU utilization.

- (4)

- Average Localization Error: The distance error between the robot's estimated position and its actual position in the environment. Pose samples are taken at regular intervals along the robot's trajectory, and the localization error at each sample point is calculated. The average of the largest 10% of these errors is taken as the average localization error, as shown in Equation (17):

$$\bar{E} = \frac{10}{N}\sum_{n=1}^{N/10} err_n \qquad (17)$$

where N is the number of pose samples and err is the array of localization errors sorted in descending order, with $err_n$ being the nth error. Smaller average localization errors indicate higher localization accuracy and better mapping performance; larger errors indicate lower accuracy and poorer performance.
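The average-localization-error metric can be computed as in the short sketch below. It is an illustration, not the authors' evaluation script. Using Euclidean position error per sample follows the definition above. When N is not a multiple of 10, the sketch assumes floor(N/10) samples are averaged, with at least one. The function names are hypothetical.

```python
import math


def localization_errors(estimated, ground_truth):
    """Euclidean position error at each sampled pose; inputs are sequences of (x, y)."""
    return [math.hypot(ex - gx, ey - gy)
            for (ex, ey), (gx, gy) in zip(estimated, ground_truth)]


def top10_mean_error(errors):
    """Average of the largest 10% of errors (Equation (17) style metric)."""
    if not errors:
        raise ValueError("need at least one pose sample")
    ranked = sorted(errors, reverse=True)  # err sorted in descending order
    k = max(1, len(ranked) // 10)          # assumed rounding: floor(N / 10), at least 1
    return sum(ranked[:k]) / k


print(top10_mean_error(list(range(1, 21))))  # 19.5: mean of the two largest of 20 errors
```

Averaging only the worst 10% makes the metric sensitive to drift and map inconsistencies that a plain mean over all samples would dilute.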

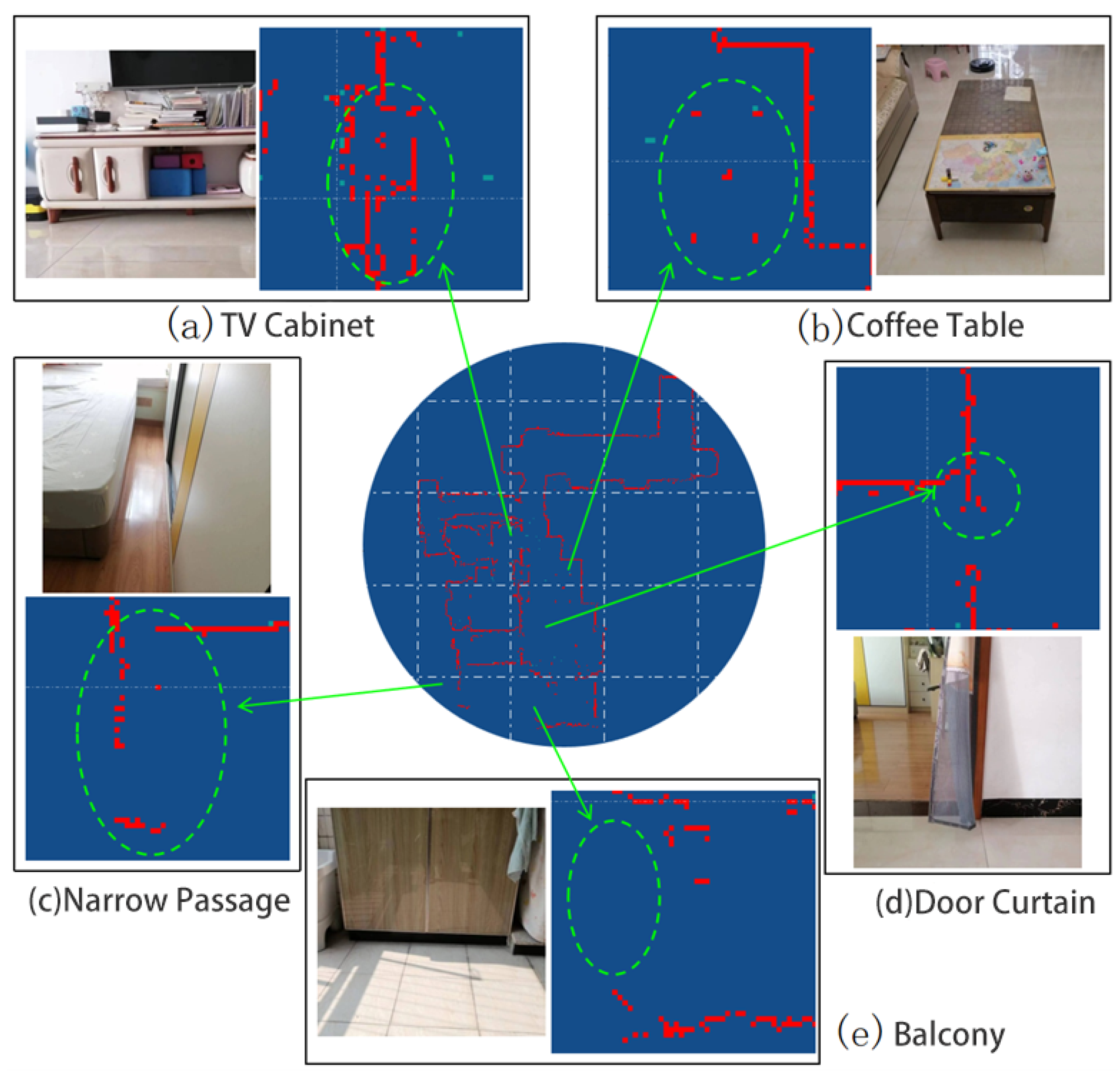

5.2. Experiment and Results Analysis in Indoor Environment

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, H.; Zhang, G.; Cao, H.; Hu, K.; Wang, Q.; Deng, Y.; Gao, J.; Tang, Y. Geometry-Aware 3D Point Cloud Learning for Precise Cutting-Point Detection in Unstructured Field Environments. J. Field Robot. 2025.

- Choi, K.; Park, J.; Kim, Y.H.; Lee, H.K. Monocular SLAM with undelayed initialization for an indoor robot. Robot. Auton. Syst. 2012, 60, 841–851.

- Li, Z.X.; Cui, G.H.; Li, C.L.; Zhang, Z.S. Comparative study of SLAM algorithms for mobile robots in complex environment. In Proceedings of the 2021 6th International Conference on Control, Robotics and Cybernetics (CRC), Shanghai, China, 9–11 October 2021; IEEE: New York, NY, USA, 2021; pp. 74–79.

- Trejos, K.; Rincón, L.; Bolaños, M.; Fallas, J.; Marín, L. 2D SLAM algorithms characterization, calibration, and comparison considering pose error, map accuracy as well as CPU and memory usage. Sensors 2022, 22, 6903.

- Censi, A. An ICP variant using a point-to-line metric. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; IEEE: New York, NY, USA, 2008; pp. 19–25.

- Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. KISS-ICP: In defense of point-to-point ICP–simple, accurate, and robust registration if done the right way. IEEE Robot. Autom. Lett. 2023, 8, 1029–1036.

- Hong, S.; Ko, H.; Kim, J. VICP: Velocity updating iterative closest point algorithm. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; IEEE: New York, NY, USA, 2010; pp. 1893–1898.

- Yang, J.; Li, H.; Campbell, D.; Jia, Y. Go-ICP: A globally optimal solution to 3D ICP point-set registration. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 2241–2254.

- Alismail, H.; Baker, L.D.; Browning, B. Continuous trajectory estimation for 3D SLAM from actuated lidar. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; IEEE: New York, NY, USA, 2014; pp. 6096–6101.

- Wang, H.; Yin, Y.; Jing, Q. Comparative analysis of 3D LiDAR scan-matching methods for state estimation of autonomous surface vessel. J. Mar. Sci. Eng. 2023, 11, 840.

- Khodarahmi, M.; Maihami, V. A review on Kalman filter models. Arch. Comput. Methods Eng. 2023, 30, 727–747.

- Ferrarini, B.; Waheed, M.; Waheed, S.; Ehsan, S.; Milford, M.J.; McDonald-Maier, K.D. Exploring performance bounds of visual place recognition using extended precision. IEEE Robot. Autom. Lett. 2020, 5, 1688–1695.

- Fuentes-Pacheco, J.; Ruiz-Ascencio, J.; Rendón-Mancha, J.M. Visual simultaneous localization and mapping: A survey. Artif. Intell. Rev. 2015, 43, 55–81.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Peel, H.; Luo, S.; Cohn, A.; Fuentes, R. Localisation of a mobile robot for bridge bearing inspection. Autom. Constr. 2018, 94, 244–256.

- Koide, K.; Miura, J.; Menegatti, E. A portable three-dimensional LIDAR-based system for long-term and wide-area people behavior measurement. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419841532.

- Tang, C.; Dou, L. An improved game theory-based cooperative localization algorithm for eliminating the conflicting information of multi-sensors. Sensors 2020, 20, 5579.

- Rosinol, A.; Violette, A.; Abate, M.; Hughes, N.; Chang, Y.; Shi, J.; Gupta, A.; Carlone, L. Kimera: From SLAM to spatial perception with 3D dynamic scene graphs. Int. J. Robot. Res. 2021, 40, 1510–1546.

- Lenac, K.; Kitanov, A.; Cupec, R.; Petrović, I. Fast planar surface 3D SLAM using LIDAR. Robot. Auton. Syst. 2017, 92, 197–220.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Kaess, M.; Ranganathan, A.; Dellaert, F. iSAM: Incremental smoothing and mapping. IEEE Trans. Robot. 2008, 24, 1365–1378.

- Konolige, K.; Grisetti, G.; Kümmerle, R.; Burgard, W.; Limketkai, B.; Vincent, R. Efficient sparse pose adjustment for 2D mapping. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; IEEE: New York, NY, USA, 2010; pp. 22–29.

- Cao, Y.; Deng, Z.; Luo, Z.; Fan, J. A Multi-sensor Deep Fusion SLAM Algorithm based on TSDF map. IEEE Access 2024, 12, 154535–154545.

- Yong, Z.; Renjie, L.; Fenghong, W.; Weiting, Z.; Qi, C.; Derui, Z.; Xinxin, C.; Shuhao, J. An autonomous navigation strategy based on improved Hector SLAM with dynamic weighted A* algorithm. IEEE Access 2023, 11, 79553–79571.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: New York, NY, USA, 2016; pp. 1271–1278.

- Wang, H.; Wang, C.; Chen, C.L.; Xie, L. F-LOAM: Fast LiDAR odometry and mapping. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: New York, NY, USA, 2021; pp. 4390–4396.

- Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; IEEE: New York, NY, USA, 2011; pp. 127–136.

- Kang, J.; Lee, S.; Jang, M.; Lee, S. Gradient flow evolution for 3D fusion from a single depth sensor. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 2211–2225.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York, NY, USA, 2018; pp. 4758–4765.

- Liu, H.; Zhang, Y. RM-line: A ray-model-based straight-line extraction method for the grid map of mobile robot. Appl. Sci. 2022, 12, 9754.

- Hu, K.; Chen, Z.; Kang, H.; Tang, Y. 3D vision technologies for a self-developed structural external crack damage recognition robot. Autom. Constr. 2024, 159, 105262.

| Region Width (m) | Number of Regions | Regional Map Storage (MB) | Processing Time (ms) | System Localization Error, 35 min (cm) | System Localization Error, 70 min (cm) |
|---|---|---|---|---|---|
| 3 | 16 | 1.93 | 893 | 1.2 | 3.5 |
| 4 | 12 | 1.5 | 724 | 2 | 4.6 |
| 5 | 10 | 1 | 615 | 2.3 | 5.1 |
| 6 | 4 | 0.98 | 406 | 3.1 | 6.8 |
| 7 | 4 | 1.13 | 431 | 3.6 | 7.5 |
| 8 | 3 | 0.69 | 345 | 4.8 | 10.2 |
| 9 | 3 | 0.79 | 371 | 5.5 | 11.1 |
| 10 | 3 | 0.67 | 382 | 6.9 | 14.6 |

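| Method | Map Storage (MB) | Point Cloud Processing Time (ms) | Graph Optimization Processing Time (ms) | Average Localization Error (cm) |
|---|---|---|---|---|
| DPCR-SLAM | 2.2 | 115 | 870 | 4.1 |
| Cartographer | 9.3 | 260 | 3900 | 4.6 |
| Improvement | 76.3% | 55.8% | 77.7% | 10.90% |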
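The Improvement row appears to be the relative reduction with respect to Cartographer, (Cartographer - DPCR-SLAM) / Cartographer. This formula is inferred from the numbers, not stated in the text. The short check below recomputes it from the tabulated values.

```python
def improvement(baseline, ours):
    """Relative reduction (in percent) of a lower-is-better metric versus a baseline."""
    return (baseline - ours) / baseline * 100.0


# (Cartographer, DPCR-SLAM) values taken from the comparison table
metrics = {
    "Map storage (MB)": (9.3, 2.2),
    "Point cloud processing time (ms)": (260, 115),
    "Graph optimization processing time (ms)": (3900, 870),
    "Average localization error (cm)": (4.6, 4.1),
}
for name, (cartographer, dpcr) in metrics.items():
    print(f"{name}: {improvement(cartographer, dpcr):.1f}%")
```

This reproduces 76.3%, 55.8%, and 77.7% for the first three columns and gives about 10.9% for the localization error.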

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, Y.; Ni, J.; Huang, Y. DPCR-SLAM: A Dual-Point-Cloud-Registration SLAM Based on Line Features for Mapping an Indoor Mobile Robot. Sensors 2025, 25, 5561. https://doi.org/10.3390/s25175561
