1. Introduction

As one of the important tasks in the field of computer vision, object detection aims to identify and locate objects of specific categories in an image. It is widely used in security monitoring, autonomous driving, smart cities, and other scenarios.

In recent years, with the rapid development of deep learning technology, object detection algorithms have achieved significant improvements in accuracy and efficiency. However, when applied to UAV platforms, traditional object detection methods face severe challenges due to highly flexible flight platforms and complex, variable imaging environments.

UAVs offer high mobility, broad coverage, and low deployment cost, making them suitable for aerial monitoring, environmental sensing, and disaster response. However, UAV imagery often suffers from low resolution, large variations in object scale, unique aerial viewpoints, and complex occlusion and lighting conditions, which seriously affect the generalization ability and detection accuracy of object detection models. Especially in small object detection scenarios, traditional methods struggle to effectively identify and localize small objects due to their limited semantic information, small size, and blurred boundaries.

As the first end-to-end model based on the Transformer architecture, DETR [1] has attracted widespread attention due to its novel design. However, its high computational cost and poor real-time performance make it unsuitable for real-time scenarios. To address this, RT-DETR [2] significantly improves inference efficiency while maintaining detection accuracy, providing an effective solution for real-time object detection. Nevertheless, existing methods still face key limitations in dense small object scenes: (1) the convolutional feature extractor has limited ability to retain edge and detail information; (2) semantic inconsistencies and boundary weakening occur during multi-scale feature fusion; and (3) fixed positional embeddings cannot effectively model complex spatial relationships, limiting the spatial modeling capability of the model.

To overcome these limitations, we propose ACD-DETR (Adaptive Cross-scale Detection Transformer), a novel and lightweight framework specifically designed for robust and efficient detection of small objects. The architecture introduces three core modules to enhance spatial sensitivity, semantic consistency, and positional modeling capability.

Our main contributions are summarized as follows:

1. We propose a Multi-Scale Edge-Enhanced Feature Fusion Module (MSEFM) that combines multi-scale pooling, explicit edge feature enhancement, and channel attention to enrich fine-grained feature representations, especially for small objects across scales (i.e., integrating features from different spatial resolutions to combine detail-rich shallow features with semantically strong deep features).

2. We design a novel Omni-Grained Boundary Calibrator (OG-BC) that hierarchically fuses semantic and structural information, utilizing dynamic alignment and boundary-aware interaction to enhance object localization in dense scenes.

3. We introduce a Dynamic Position Bias Attention-based Intra-scale Feature Interaction (DPB-AIFI) module that replaces fixed positional embeddings with learnable, relative spatial priors, thereby improving spatial modeling and enhancing detection accuracy under complex object layouts.

The remainder of this paper is organized as follows. Section 2 reviews related work on UAV object detection and real-time end-to-end detectors. Section 3 details the proposed ACD-DETR framework, including the MSEFM, OG-BC, and DPB-AIFI modules. Section 4 presents the experimental setup, evaluation results, and ablation studies. Finally, Section 5 concludes the paper and discusses future work.

3. Methodology

This section presents ACD-DETR, an efficient end-to-end detection framework specifically designed for UAV-based small object detection. Figure 1 provides a comprehensive overview of the proposed architecture, which features multiple interconnected components designed to work collaboratively for enhanced small-object detection performance.

The overall architecture builds upon RT-DETR but incorporates three key innovations strategically positioned throughout the network to address fundamental small-object detection challenges. The framework follows a detection pipeline of feature extraction, feature fusion, and detection head stages, with significant enhancements improving small object perception and localization accuracy.

As shown on the left side of Figure 1, the MSEFM is integrated into each backbone stage. This module processes input features through multiple parallel branches with adaptive pooling scales (3 × 3, 6 × 6, 9 × 9, and 12 × 12), capturing contextual information at various scales. Each branch incorporates edge enhancement mechanisms that explicitly compensate for fine-grained details lost during downsampling, with outputs fused through an EMA (Efficient Multi-scale Attention) [15] mechanism to generate boundary-aware multi-scale representations.

The middle portion illustrates the OG-BC, which replaces traditional feature pyramid structures with guided fusion. Rather than using P2 features as direct detection heads, OG-BC employs them as guidance through SPDConv [16] operations while maintaining computational efficiency. The hierarchical fusion pathway alternately applies SBA (Semantic–Boundary Aggregation) and RepC3 (Reparameterized C3) blocks, with DySample (Upsampling by Dynamic Sampling) [17] modules ensuring adaptive multi-scale semantic alignment.

The right side shows the enhanced encoder where traditional AIFI modules are replaced with DPB-AIFI. This introduces learnable dynamic position bias through Multi-Layer Perceptron networks, enabling adaptive modeling of the spatial relationships crucial for complex aerial imagery layouts.

Together, these modules create a comprehensive small-object detection capability by addressing fundamental challenges. MSEFM mitigates information loss during downsampling by preserving fine-grained details across multiple receptive field scales. It also explicitly enhances boundary information, which is critical for small object discrimination. OG-BC resolves semantic inconsistency across feature pyramid levels through guided fusion mechanisms, maintaining scale-consistent representations without the semantic dilution typical of naive concatenation approaches. DPB-AIFI models complex spatial dependencies in dense aerial scenes, with learnable position bias improving small object discrimination from background clutter and handling occlusions where static positional encodings prove insufficient.

Furthermore, the integrated design reduces parameters while improving detection accuracy, demonstrating that architectural innovations can achieve both effectiveness and efficiency for real-time applications.

3.1. Multi-Scale Edge-Enhanced Feature Fusion Module

In general object detection tasks, small objects are inherently challenging due to their limited size, blurred boundaries, and weak semantic information, making them difficult to model effectively with deep networks. In particular, repeated convolution and downsampling operations tend to weaken or even eliminate the fine-grained edges and high-frequency details originally present in the image, leading to incomplete feature representations and frequent instances of missed or false detections.

Moreover, small object detection is highly sensitive to contextual information. Unlike large objects that occupy more spatial area, small objects rely more heavily on multi-scale semantic cues to provide discriminative features. However, traditional feature extraction networks often lack the ability to perceive edge details, and their fixed-scale convolutional structures are insufficient for capturing rich multi-scale contextual information. Therefore, it is essential to develop a lightweight feature extraction module that can simultaneously enhance edge representation and contextual modeling, thereby improving the backbone network’s perception of complex small objects.

To address this, we propose a novel feature extraction module called the Multi-Scale Edge-Enhanced Feature Fusion Module (MSEFM). This module is designed with a synergistic approach that combines multi-scale receptive field expansion, explicit edge enhancement, and channel-wise attention mechanisms. It dynamically reconstructs the input features at each stage of the backbone network, significantly improving the network’s edge awareness and modeling capability for small objects.

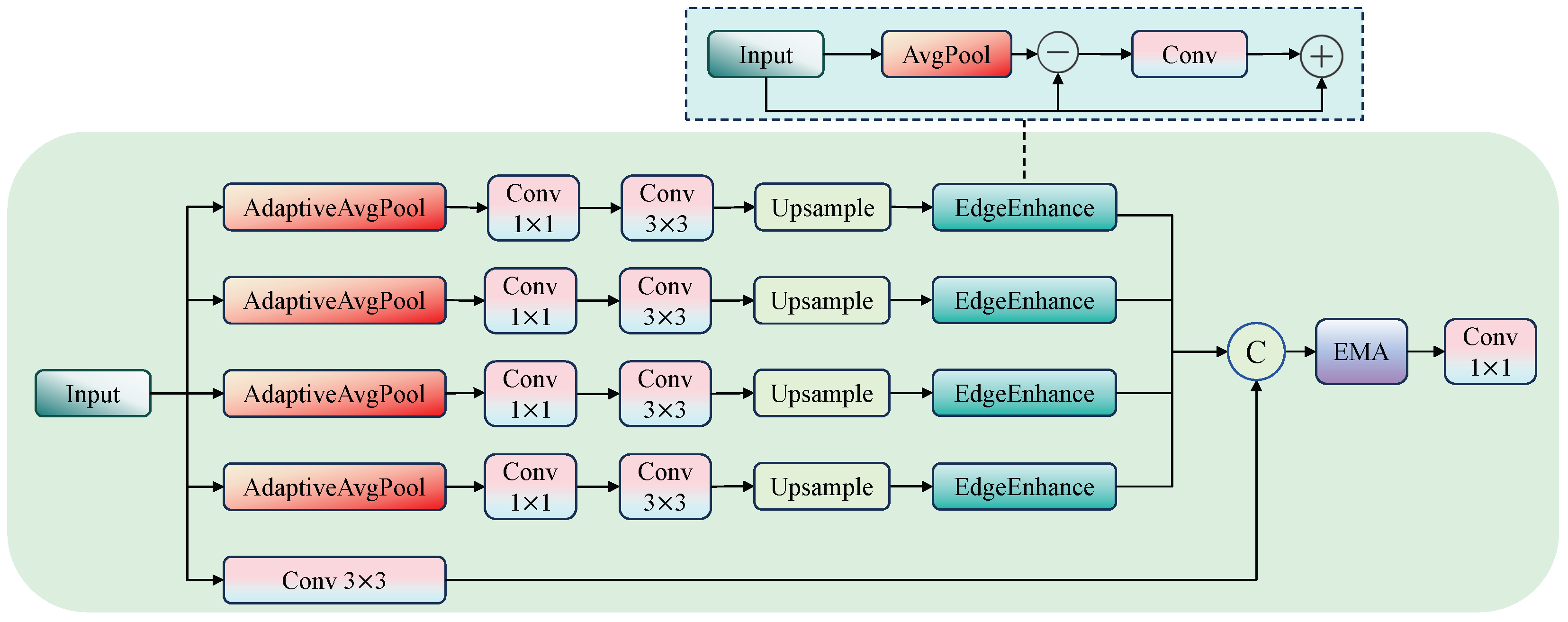

As shown in Figure 2, the module first applies multi-scale adaptive average pooling (e.g., bins = [3, 6, 9, 12]) to the input features to construct multiple scale branches, enabling the extraction of both local and global contextual information. The multi-scale feature extraction process is defined as

$$F_i = \mathrm{Conv}_{s}\big(\mathrm{Conv}_{r}\big(\mathrm{AAP}_{b_i}(X)\big)\big), \quad b_i \in \{3, 6, 9, 12\}.$$

Here, $\mathrm{AAP}_{b_i}$ denotes adaptive average pooling to a $b_i \times b_i$ output, $b_i$ is the bin size of the $i$-th branch, and $X$ denotes the input feature map. Each scale branch applies a convolution ($\mathrm{Conv}_{r}$) to reduce the channel dimensionality, followed by a convolution ($\mathrm{Conv}_{s}$) to capture spatial structural information. This process effectively expands the receptive field to support multi-scale target modeling.
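The pooling step can be illustrated with a minimal sketch in plain Python. It assumes a single-channel feature map stored as a list of rows and uses the common floor/ceil rule for adaptive bin boundaries; the function names `adaptive_avg_pool2d` and `multi_scale_pool` are hypothetical, and the two convolutions of each branch are omitted.

```python
import math

def adaptive_avg_pool2d(x, bins):
    """Average-pool a 2D grid (list of rows) down to bins x bins cells."""
    h, w = len(x), len(x[0])
    out = []
    for i in range(bins):
        # Row range of bin i: floor(i*h/bins) .. ceil((i+1)*h/bins)
        r0, r1 = (i * h) // bins, math.ceil((i + 1) * h / bins)
        row = []
        for j in range(bins):
            c0, c1 = (j * w) // bins, math.ceil((j + 1) * w / bins)
            cells = [x[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        out.append(row)
    return out

def multi_scale_pool(x, bins=(3, 6, 9, 12)):
    """One pooled map per bin size, mirroring the parallel scale branches."""
    return {b: adaptive_avg_pool2d(x, b) for b in bins}

# Illustrative 36 x 36 ramp image (not data from the paper).
feature = [[float(r * 36 + c) for c in range(36)] for r in range(36)]
pyramid = multi_scale_pool(feature)
shapes = {b: (len(p), len(p[0])) for b, p in pyramid.items()}
print(shapes)
```

Coarse bins summarize wide context, while fine bins keep more local structure, which is the motivation for running the branches in parallel.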

Next, an edge enhancement module is embedded in each scale branch. Writing $F_i$ for the feature in the $i$-th scale branch, the edge enhancement mechanism is formulated as

$$E_i = F_i - \mathrm{AvgPool}(F_i), \qquad A_i = \sigma\big(\mathrm{Conv}(E_i)\big), \qquad \hat{F}_i = F_i \odot A_i.$$

Here, $\sigma$ denotes the sigmoid activation function and $\odot$ represents element-wise multiplication. The module first extracts a smoothed version of the input feature using average pooling, computes the edge response map $E_i$ by subtracting it from the original feature, and then generates an edge attention map $A_i$ through convolution followed by sigmoid activation. This attention map is used to weight the original feature, effectively enhancing fine-grained details. The mechanism explicitly compensates for edge information lost during downsampling and makes the network more sensitive to contour variations, which is especially beneficial for detecting dense small objects.
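The following sketch walks through the three steps described above (smooth, subtract, gate) on a single-channel map. It is an illustration under simplifying assumptions: the learned convolution is replaced by a scalar `weight` and `bias`, the smoothing is a 3 × 3 mean filter that averages only in-bounds neighbours, and the names `box_smooth` and `edge_enhance` are hypothetical.

```python
import math

def box_smooth(x, k=3):
    """k x k mean filter; border cells average only over in-bounds neighbours."""
    h, w, r = len(x), len(x[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = [x[a][b]
                    for a in range(max(0, i - r), min(h, i + r + 1))
                    for b in range(max(0, j - r), min(w, j + r + 1))]
            out[i][j] = sum(vals) / len(vals)
    return out

def edge_enhance(x, weight=4.0, bias=0.0):
    """Return (edge response, sigmoid gate, gated feature) for a 2D map."""
    smooth = box_smooth(x)
    # Edge response: original minus its smoothed version.
    edge = [[xv - sv for xv, sv in zip(xr, sr)] for xr, sr in zip(x, smooth)]
    # Scalar affine map + sigmoid stands in for the learned conv + sigmoid.
    gate = [[1.0 / (1.0 + math.exp(-(weight * e + bias))) for e in er]
            for er in edge]
    # The gate re-weights the original feature element-wise.
    out = [[xv * g for xv, g in zip(xr, gr)] for xr, gr in zip(x, gate)]
    return edge, gate, out

flat = [[1.0] * 5 for _ in range(5)]
spike = [row[:] for row in flat]
spike[2][2] = 2.0  # a one-pixel "small object" on a flat background

_, flat_gate, flat_out = edge_enhance(flat)
_, spike_gate, _ = edge_enhance(spike)
```

On the flat map the edge response is zero everywhere, so the gate is uniform. On the spike map the gate rises at the bright pixel and drops on its immediate neighbours, which sharpens the contrast around the small structure.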

Finally, all enhanced features from different scales are upsampled and concatenated along the channel dimension to form the fused feature map. To further emphasize key semantics and suppress redundant information, an EMA module is introduced. EMA divides the feature channels into groups and uses multi-branch pathways to capture global context and spatial dependencies, dynamically generating attention weights to filter information across channels and spatial locations.

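The complete computation flow of the MSEFM module can be summarized as

$$Y = \mathrm{Conv}\Big(\mathrm{EMA}\big(\mathrm{Concat}\big[\mathrm{Up}(\mathrm{EE}_1(F_1)), \ldots, \mathrm{Up}(\mathrm{EE}_n(F_n))\big]\big)\Big),$$

where $F_i$ is the feature of the $i$-th scale branch, $\mathrm{EE}_i$ denotes the edge enhancement module in the $i$-th scale branch, calculated according to the edge enhancement equations above, $\mathrm{Up}$ denotes upsampling to a common resolution, and $\mathrm{Concat}$ denotes channel-wise concatenation. The final convolution integrates all channels and produces high-quality fused features.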

3.2. Omni-Grained Boundary Calibrator

In dense-small-object detection tasks, conventional detection layers such as P3, P4, and P5 often struggle to adequately capture the boundaries and semantic details of tiny objects. Although directly introducing a P2 detection head can partially alleviate this issue, it significantly increases the model’s computational overhead and post-processing complexity. Traditional approaches that use P2 features as an additional detection head require extra prediction layers, anchor processing, and post-processing mechanisms, substantially increasing computational cost. To address this trade-off, we propose the Omni-Grained Boundary Calibrator (OG-BC) module, a feature fusion architecture that balances small object perception capability with computational efficiency.

The fundamental challenge lies in the inherent contradiction between shallow and deep features: shallow features possess rich spatial details and clear boundaries but lack semantic context, while deep features contain abundant semantic information but suffer from spatial resolution loss. Direct fusion often leads to semantic inconsistency and information redundancy, which is particularly problematic for tiny objects requiring both precise localization and semantic understanding.

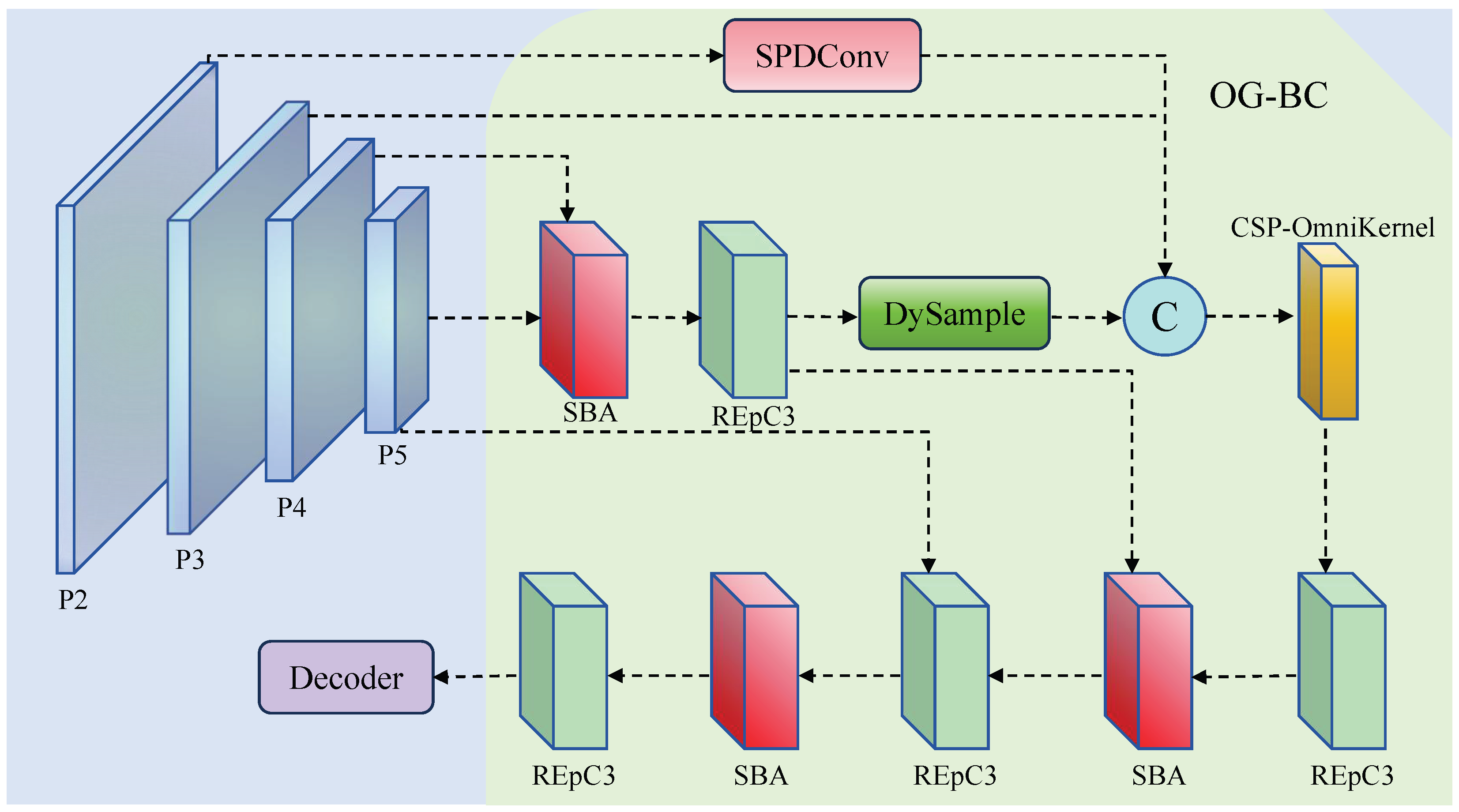

As illustrated in Figure 3, the overall architecture of the OG-BC module adopts a hierarchical fusion strategy. Crucially, unlike traditional methods that create an additional P2 detection head, our approach uses the P2 feature map only as a guidance source. A lightweight SPDConv [16] operation is employed to extract spatial fine-grained structures and small object information from P2, which are then deeply fused into the P3 layer. This design maintains the same number of detection heads as the baseline while leveraging P2’s spatial richness, achieving enhanced perception capability without the computational overhead of additional detection processing.
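SPD-Conv is built on a space-to-depth rearrangement followed by a non-strided convolution, so halving the resolution does not discard pixels. The sketch below shows only the rearrangement step on a single-channel grid; the function name `space_to_depth` and the ordering of the output slices are illustrative choices, and the subsequent convolution is omitted.

```python
def space_to_depth(x, scale=2):
    """Rearrange an H x W grid into scale*scale sub-grids of size (H/scale) x (W/scale)."""
    h, w = len(x), len(x[0])
    if h % scale or w % scale:
        raise ValueError("height and width must be divisible by scale")
    channels = []
    for dy in range(scale):
        for dx in range(scale):
            # Every scale-th pixel, starting at offset (dy, dx).
            channels.append([[x[i][j] for j in range(dx, w, scale)]
                             for i in range(dy, h, scale)])
    return channels

# Illustrative 4 x 4 "P2" map; each value is its own flat index.
p2 = [[r * 4 + c for c in range(4)] for r in range(4)]
slices = space_to_depth(p2)
print(slices[0])  # [[0, 2], [8, 10]]
```

Each of the four slices has the resolution of the next pyramid level, and together they contain every original pixel exactly once, which is why fine structures from P2 can be carried into P3.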

To further enhance feature consistency and expressiveness, OG-BC integrates a DySample [17] module based on dynamic weight adjustment, enabling adaptive alignment of multi-scale semantics and effectively addressing the scale and semantic misalignment issues commonly found in feature pyramids.

Along the feature fusion pathway, OG-BC alternately employs the SBA and RepC3 modules to construct refined network blocks, thereby enhancing the network’s sensitivity to local boundaries and scale variations. Finally, the fused features are fed into an improved CSP-OmniKernel module for high-level semantic modeling. Through this design, OG-BC achieves explicit modeling and boundary enhancement for small objects, significantly improving detection accuracy and localization robustness for tiny objects without introducing additional detection heads.

3.2.1. CSP-OmniKernel

To effectively exploit large-kernel convolution while maintaining computational efficiency, we propose the CSP-OmniKernel module, which integrates the channel splitting strategy of the Cross Stage Partial (CSP) architecture with the multi-domain feature modeling capability of OmniKernel [

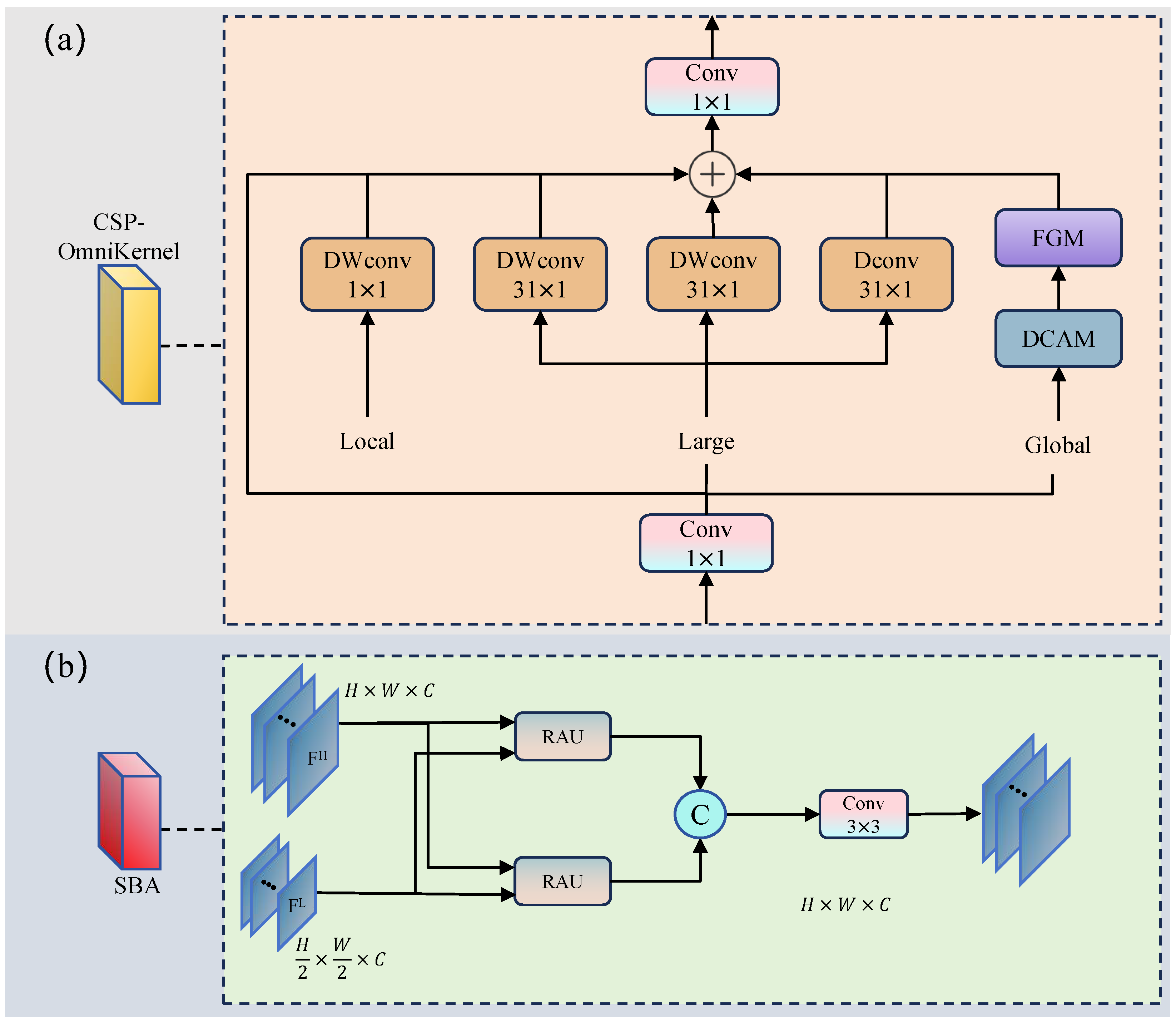

18]. As illustrated in

Figure 4a, the CSP-OmniKernel adopts a branch-and-fusion design that balances representation ability and computational overhead.

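Given an input feature map $X$, the input is first projected via a convolution and then split along the channel dimension:

$$[X_1, X_2] = \mathrm{Split}\big(\mathrm{Conv}(X)\big), \quad X_1 \in \mathbb{R}^{eC \times H \times W}, \quad X_2 \in \mathbb{R}^{(1-e)C \times H \times W},$$

where $C \times H \times W$ is the shape of the projected feature and $e$ denotes the channel split ratio (fixed to a default value). The smaller portion $X_1$ is processed through the OmniKernel branch, while the larger portion $X_2$ undergoes identity mapping to preserve computational efficiency.

In the OmniKernel branch, multi-branch depthwise convolutions with different receptive fields are applied in parallel. Their outputs are fused via element-wise addition and combined with a dual-domain attention-enhanced residual connection:

$$Y_1 = \sum_{b} \mathrm{DWConv}_{b}(\tilde{X}_1) + \mathcal{A}(\tilde{X}_1),$$

where the sum runs over the parallel depthwise branches, $\tilde{X}_1$ is obtained from $X_1$ through a convolution followed by GELU activation, and $k$ denotes the large kernel size used by these branches (fixed to a default value).

The attention term $\mathcal{A}(\tilde{X}_1)$ combines frequency-domain and spatial-domain enhancements using a dual-domain channel attention mechanism:

$$\mathcal{A}(\tilde{X}_1) = \mathrm{FGM}\Big(\mathrm{SCA}\big(\mathcal{F}^{-1}\big(\mathrm{FCA}(\mathcal{F}(\tilde{X}_1))\big)\big)\Big),$$

where $\mathcal{F}$ and $\mathcal{F}^{-1}$ denote the Fourier transform and its inverse, respectively; $\mathrm{FCA}$ and $\mathrm{SCA}$ represent frequency channel attention and spatial channel attention modules. The combination of FCA and SCA constitutes the Dual-Domain Channel Attention Module (DCAM), which captures complementary frequency and spatial features to enhance channel representations. $\mathrm{FGM}$ is a frequency-enhanced gating module that further refines the attended features.

Finally, the outputs from the OmniKernel branch and the identity-mapped features are concatenated and projected to produce the final output via a convolution:

$$Y = \mathrm{Conv}\big(\mathrm{Concat}[Y_1, X_2]\big).$$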

The proposed CSP-OmniKernel module effectively models multi-scale spatial structures and captures global semantic information while maintaining low computational cost, thereby contributing to robust feature extraction for small-object detection tasks.

3.2.2. Semantic–Boundary Aggregation

In visual feature pyramids, shallow features typically possess higher spatial resolution and superior detail representation capabilities, particularly excelling in boundary localization and shape depiction. Conversely, deep features contain richer semantic context that aids in object classification and structural recognition. However, the disparities in scale and semantic abstraction between these feature types can introduce redundancy or semantic inconsistency when directly fused, thereby limiting the representational effectiveness and detection performance of multi-scale fusion.

To address these challenges, we propose the Semantic–Boundary Aggregation (SBA) module, which achieves precise object representation through bidirectional gated fusion between shallow boundary information and deep semantic features. The SBA module enhances feature complementarity while maintaining computational efficiency through simple yet effective operations.

As illustrated in Figure 4b, the SBA module takes two scales of feature maps as input: high-resolution boundary features $F_h$ and low-resolution semantic features $F_l$. Both feature maps are first projected through convolutions for channel alignment, with attention gates simultaneously generated via sigmoid activations:

$$\tilde{F}_h = \mathrm{Conv}(F_h), \quad \tilde{F}_l = \mathrm{Conv}(F_l), \quad G_h = \sigma(\tilde{F}_h), \quad G_l = \sigma(\tilde{F}_l).$$

These gates adaptively regulate the contribution of features at different resolutions during the fusion process.

The projected features are further processed through lightweight convolutions to enhance their representational capacity:

$$\hat{F}_h = \mathrm{Conv}(\tilde{F}_h), \quad \hat{F}_l = \mathrm{Conv}(\tilde{F}_l).$$

The core of SBA lies in the Re-calibration Attention Unit (RAU), which performs bidirectional gated fusion through a three-term formulation. We define RAU as

$$\mathrm{RAU}(X, Y, G_X, G_Y) = X + X \odot G_X + (1 - G_X) \odot (G_Y \odot Y),$$

where $X$ is the target feature, $Y$ is the source feature (resampled to the resolution of $X$), and $G_X$ and $G_Y$ are the corresponding gates. The enhanced features are computed as

$$F_h' = \mathrm{RAU}(\hat{F}_h, \hat{F}_l, G_h, G_l), \quad F_l' = \mathrm{RAU}(\hat{F}_l, \hat{F}_h, G_l, G_h).$$

The RAU design incorporates three essential components: (1) residual connection for gradient flow preservation, (2) self-gating for adaptive feature selection, and (3) cross-branch integration for complementary information enhancement. This mechanism ensures that each branch preserves its intrinsic characteristics while adaptively incorporating beneficial information from the complementary branch through a learnable gating strategy.

Finally, the enhanced high-resolution features are spatially aligned to match the low-resolution scale, and both branches are concatenated for final fusion:

$$F_{\mathrm{out}} = \mathrm{Concat}\big[\mathrm{Align}(F_h'),\, F_l'\big].$$

Compared to traditional FPN structures, the SBA module offers several key advantages. First, the RAU-based gated fusion mechanism effectively balances self-enhancement and cross-branch information exchange, reducing redundancy while preserving complementary features. Second, the lightweight design incurs minimal computational overhead through simple convolution and gating operations, making it suitable for resource-constrained scenarios. Third, the bidirectional enhancement strategy enables superior multi-scale feature integration, which is particularly beneficial for small-object detection tasks that require both detailed boundary information and rich semantic context.

The proposed SBA module demonstrates that effective multi-scale fusion can be achieved through carefully designed gating mechanisms without complex attention computations, thereby offering a practical solution for real-time applications while maintaining strong representational capability.
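The three-term gated fusion of the RAU (residual, self-gated, and cross-branch terms) can be sketched element-wise in a few lines. This is an illustration under stated assumptions rather than the reference implementation: features are flat lists already brought to the same resolution, the gates are given directly instead of being produced by a convolution and sigmoid, and weighting the cross-branch term by `1 - gx` is an assumed form of the complementary gating. The function name `rau` is hypothetical.

```python
def rau(x, y, gx, gy):
    """Three-term gated fusion: residual + self-gated + cross-branch term.

    x, y   : target and source features (flat lists of equal length)
    gx, gy : gates in [0, 1] for the target and source features
    """
    return [xi + xi * gxi + (1.0 - gxi) * (gyi * yi)
            for xi, yi, gxi, gyi in zip(x, y, gx, gy)]

# Illustrative values only.
boundary = [1.0, 2.0]      # high-resolution (boundary) branch
semantic = [10.0, 20.0]    # low-resolution (semantic) branch, already aligned
g_boundary = [1.0, 0.0]
g_semantic = [1.0, 1.0]

# Bidirectional use: each branch is enhanced with the other as its source.
boundary_out = rau(boundary, semantic, g_boundary, g_semantic)
semantic_out = rau(semantic, boundary, g_semantic, g_boundary)
print(boundary_out)  # [2.0, 22.0]
```

Where the target gate is fully open, the target keeps its own (doubled) response and ignores the other branch; where it is closed, the gated source feature is added instead. This is one way to read "self-enhancement versus cross-branch exchange" in the description above.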

3.3. DPB-AIFI

In RT-DETR, the traditional AIFI module processes the relationships between different positions in the input feature sequence through the Multi-Head Self-Attention (MSA) mechanism. MSA splits the computation across multiple attention heads, allowing each head to independently perform self-attention calculations, thereby enabling efficient parallel processing of complex dependencies within the sequence. However, the fixed positional encoding used in traditional self-attention mechanisms has significant limitations. Its predefined positional representations struggle to adapt to input features of varying scales and have limited capability for modeling relative positional relationships in continuous space, which affects the model’s ability to accurately capture critical spatial information.

To address these issues, we introduce a dynamic position bias (DPB) [19] mechanism in the feature interaction module, constructing the DPB-AIFI module. This module dynamically generates positional encodings that adapt to the scale of the input features, effectively enhancing the model’s ability to perceive spatial relationships at any scale, reducing information loss caused by fixed positional encodings, and improving the understanding of spatial structures in object detection tasks.

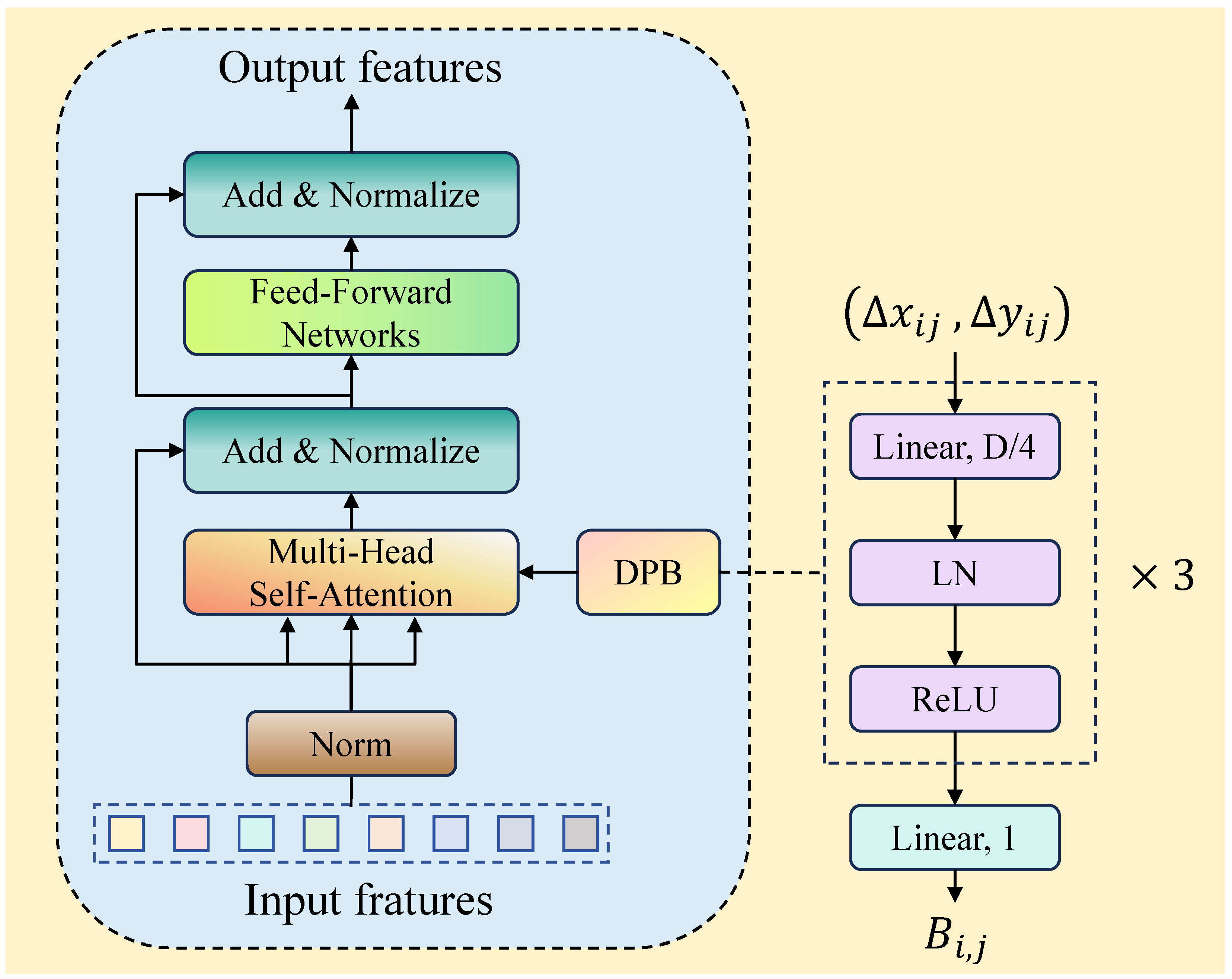

As shown in Figure 5, the core innovation of the DPB-AIFI module lies in dynamically generating positional biases through a Multi-Layer Perceptron (MLP). The dynamic position bias generation process can be expressed as

$$B_{i,j} = \mathrm{DPB}(\Delta x_{i,j}, \Delta y_{i,j}),$$

where $\mathrm{DPB}(\cdot)$ represents the dynamic position bias generation function and $(\Delta x_{i,j}, \Delta y_{i,j})$ denotes the relative coordinate distance between position $i$ and position $j$.

Specifically, this process first inputs the relative positional coordinates into an MLP composed of three fully connected layers, each containing linear transformations, layer normalization, and ReLU activation functions. The first layer maps the input 2D relative coordinates to a hidden space of D/4 dimensions, followed by nonlinear transformations through layer normalization and ReLU activation, ultimately outputting a one-dimensional positional bias value. This progressive feature transformation effectively captures the complex nonlinear spatial relationships between different positions in the input feature map, ensuring that the generated positional biases contain rich spatial structural information.

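The introduction of dynamic position bias modifies the computation of the traditional attention mechanism. In the attention enhancement calculation, the module adds the dynamically generated positional bias $B$ to the traditional query–key dot-product attention scores, forming the enhanced attention calculation formula

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\!\left(\frac{QK^{\top}}{\sqrt{d}} + B\right)V,$$

where $Q$, $K$, and $V$ are the query, key, and value matrices and $d$ is the per-head feature dimension.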

This design allows each positional pair to obtain personalized positional weights, enabling the model to dynamically adjust the attention distribution based on specific spatial relationships. During computation, the dynamic position bias is added to the query–key dot-product scores and normalized through Softmax to generate the final attention weight distribution. In this way, DPB-AIFI not only retains the global modeling capability of traditional multi-head self-attention mechanisms but also enhances precise perception of local spatial structures, demonstrating stronger adaptability in object detection scenarios with complex spatial layouts.

Compared to traditional fixed positional encoding schemes, the dynamic position bias mechanism offers superior generalization and representational capabilities. Traditional methods often rely on predefined sinusoidal or cosine positional encodings, which struggle to adapt to input features of varying scales and resolutions. In contrast, the DPB mechanism uses a learnable parametric network to adaptively generate optimal positional representations based on specific tasks and data characteristics. This continuous relative positional modeling eliminates dependence on input scale and enables more precise capture of spatial relationships between any two positions, providing more accurate spatial priors for subsequent feature interactions and object detection.
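The scale independence described above can be illustrated with a small sketch: one bias network, applied to relative offsets, yields a bias table for any grid size. The code is a simplified assumption, not the module itself: it uses a two-layer ReLU MLP without layer normalization, and the fixed weights in `PARAMS` are hypothetical stand-ins for learned parameters (chosen so that the bias equals −0.5 times the Manhattan distance). All function names are illustrative.

```python
import math

def dpb_mlp(dx, dy, params):
    """Map a relative offset (dx, dy) to a scalar bias with a tiny ReLU MLP."""
    w1, b1, w2, b2 = params
    hidden = [max(0.0, w[0] * dx + w[1] * dy + b) for w, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2

def bias_table(height, width, params):
    """Bias B[i][j] for every pair of positions on a height x width grid."""
    coords = [(r, c) for r in range(height) for c in range(width)]
    return [[dpb_mlp(ci[1] - cj[1], ci[0] - cj[0], params) for cj in coords]
            for ci in coords]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(q, k, bias):
    """Softmax over scaled dot-product scores plus the position bias."""
    d = len(q[0])
    scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) + bias[i][j]
               for j, kj in enumerate(k)]
              for i, qi in enumerate(q)]
    return [softmax(r) for r in scores]

# Hypothetical fixed weights: hidden units compute relu(+/-dx), relu(+/-dy).
PARAMS = ([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]],
          [0.0, 0.0, 0.0, 0.0],
          [-0.5, -0.5, -0.5, -0.5],
          0.0)

bias_2x2 = bias_table(2, 2, PARAMS)   # 4 x 4 table
bias_3x3 = bias_table(3, 3, PARAMS)   # 9 x 9 table from the same MLP

# With identical (zero) queries and keys, attention is shaped by the bias alone.
tokens = [[0.0, 0.0] for _ in range(4)]
weights = attention_weights(tokens, tokens, bias_2x2)
```

Because the network takes offsets rather than absolute indices, no table has to be resized or interpolated when the feature map resolution changes.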

In summary, the DPB-AIFI module integrates dynamic position bias with multi-head self-attention mechanisms to construct a feature interaction architecture that can precisely model positional relationships while capturing global semantic dependencies. This module significantly enhances RT-DETR’s understanding of complex spatial structures while maintaining computational efficiency, providing effective technical support for challenging visual tasks such as dense small object detection.

4. Experiments

4.1. Experimental Setup

The hardware and software configurations used during the experiments are detailed in Table 1. All experiments were conducted under the same settings to ensure consistency in the experimental environment. The hardware setup includes an AMD EPYC 7742 64-Core Processor (Advanced Micro Devices, Inc., Santa Clara, CA, USA) and an NVIDIA A100-SXM4-80 GB GPU (NVIDIA Corporation, Santa Clara, CA, USA), while the software environment consists of Ubuntu 22.04.5 LTS, Python 3.10.17, CUDA 11.8, and PyTorch 2.3.1.

To optimize training efficiency and model performance, the training parameters were adjusted following the official best practices of the RT-DETR model. The core training parameters are listed in Table 2, while other parameters were kept at their default values.

4.2. Datasets

This study evaluates the ACD-DETR model using two representative remote sensing image datasets: VisDrone [20] and DOTA [21]. VisDrone serves as the primary experimental dataset, while DOTA is employed as a supplementary dataset to further assess the applicability of our method on a different aerial detection benchmark. Figure 6 shows representative examples from both datasets, illustrating the challenging nature of small object detection in aerial imagery.

VisDrone is a large-scale UAV aerial image dataset constructed by the Machine Learning and Data Mining Laboratory at Tianjin University. It contains over 10,000 high-resolution images, covering diverse geographic locations, weather conditions, lighting environments, and shooting altitudes. The dataset is divided into a training set (6471 images), a validation set (548 images), and a test set (1610 images). It defines 10 target categories: pedestrian, people, bicycle, car, van, truck, tricycle, awning-tricycle, bus, and motor. Image resolutions vary across the dataset, reflecting the diversity of UAV shooting conditions.

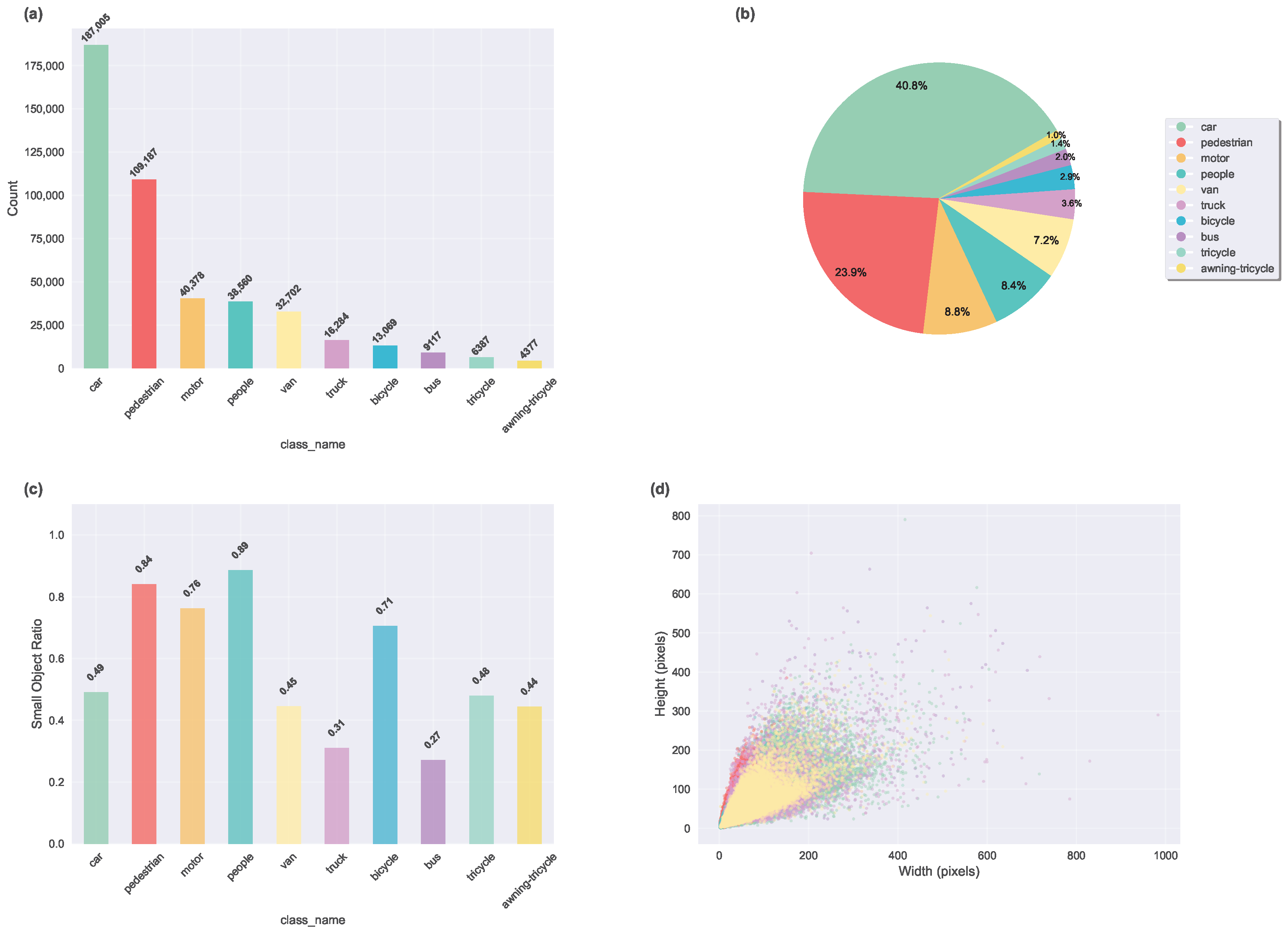

Figure 7 provides a unified statistical analysis of the VisDrone2019 dataset, including class frequency distribution, category proportions, the ratio of small objects (defined by the pixel-area criterion of [22]) by class, and bounding-box size distributions.

DOTA is a large-scale aerial image object detection dataset jointly released by Huazhong University of Science and Technology and other institutions. It contains 2806 large-scale aerial images with resolutions ranging from

to

pixels. The dataset defines 15 target categories: plane, ship, storage-tank, baseball-diamond, tennis-court, basketball-court, ground-track-field, harbor, bridge, large-vehicle, small-vehicle, helicopter, roundabout, soccer-ball-field, and swimming-pool. To process the large and varying-sized original images, we applied a sliding window method to crop them into

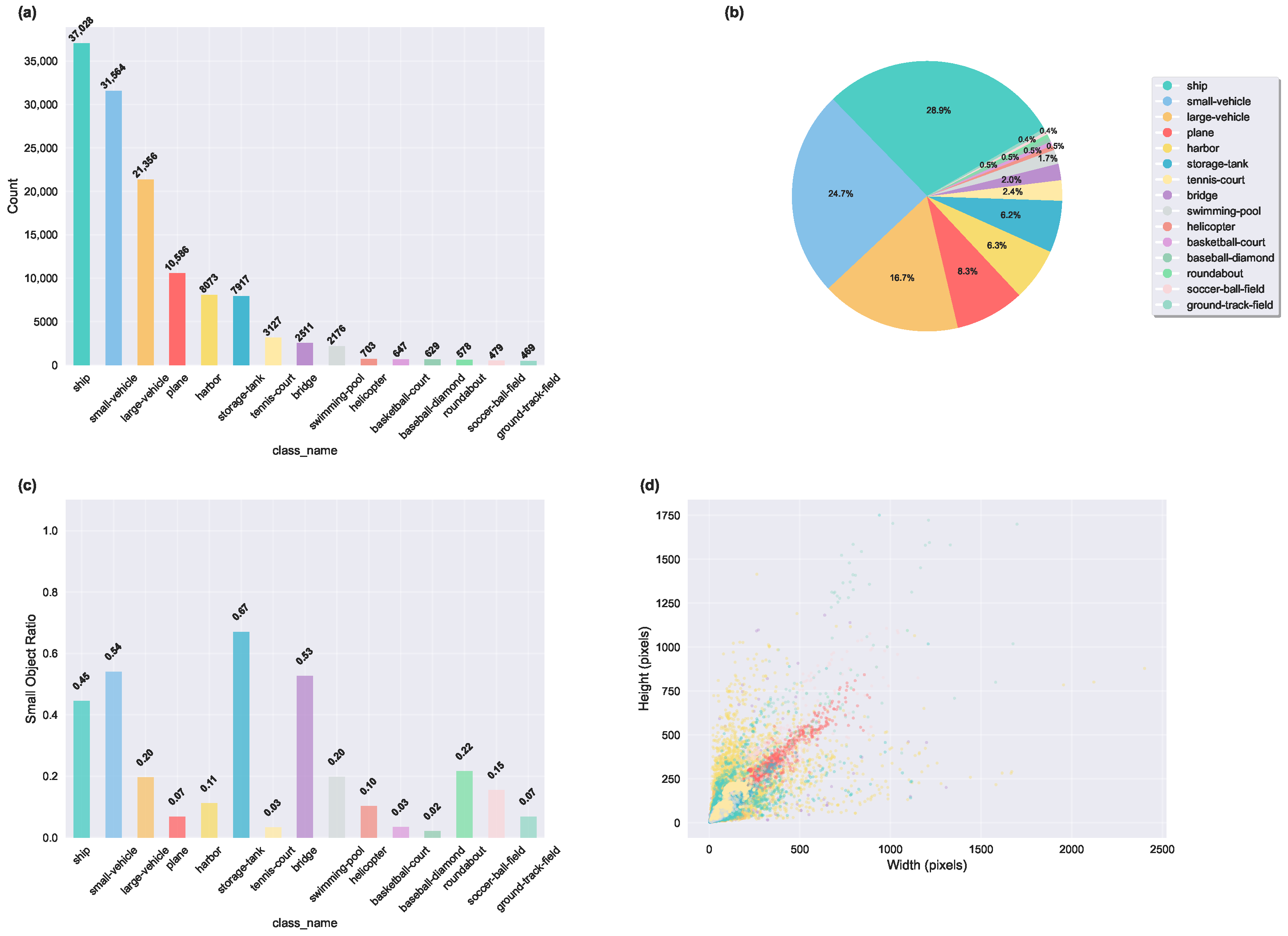

pixel sub-images with a 200-pixel overlap. After preprocessing, a total of 21,046 sub-images were generated, divided into 15,749 training images and 5297 test images. A statistical analysis of the dataset is presented in

Figure 8, including class frequency distribution, object proportions, the ratio of small objects, and bounding-box size distributions.
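The sliding-window cropping can be sketched as follows. Only the 200-pixel overlap comes from the text; the window size of 800 pixels in the usage lines is an arbitrary illustrative value and not the setting used in the experiments, and the helper names `window_starts` and `tile_boxes` are hypothetical. The sketch assumes that the last window in each direction is shifted back so that it ends at the image border.

```python
def window_starts(length, window, overlap):
    """Start offsets of windows of size `window` covering `length` pixels."""
    if window <= overlap:
        raise ValueError("window must be larger than overlap")
    if length <= window:
        return [0]  # a single window; the crop would need padding if shorter
    stride = window - overlap
    starts = list(range(0, length - window, stride))
    starts.append(length - window)  # final window ends exactly at the border
    return starts

def tile_boxes(width, height, window, overlap=200):
    """Crop boxes (x1, y1, x2, y2) in row-major order."""
    return [(x, y, x + window, y + window)
            for y in window_starts(height, window, overlap)
            for x in window_starts(width, window, overlap)]

# Illustrative image size and window size (assumptions, not the paper's values).
starts = window_starts(2000, 800, 200)
boxes = tile_boxes(2000, 1400, window=800)
print(starts)      # [0, 600, 1200]
print(len(boxes))  # 6
```

The overlap ensures that an object cut by one window boundary appears whole in a neighbouring crop, at the cost of some duplicated area.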

4.3. Evaluation Metrics

To validate the effectiveness of the proposed algorithm, we selected several evaluation metrics, including precision, recall, average precision (AP), and mean average precision (mAP). These metrics were used to compare and analyze the algorithm’s performance.

In object detection tasks, TP, FP, and FN denote the numbers of true positive, false positive, and false negative detections, respectively, while TN (true negatives, where negative samples are correctly predicted as negative) is typically not considered due to the vast number of possible negative locations. A detection is counted as a true positive (TP) if it has the same class label as a ground-truth object and the Intersection over Union (IoU) between the predicted bounding box and the ground truth exceeds a threshold (0.5 for mAP@0.5, 0.75 for mAP@0.75), following the standard PASCAL VOC/COCO matching protocol. Each ground-truth object is matched with at most one detection; unmatched predictions are counted as false positives (FP), and unmatched ground-truth objects are counted as false negatives (FN). During evaluation, detections are ranked by their confidence scores, and a confidence threshold of 0.001 is applied to filter low-confidence predictions before IoU-based matching.
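The matching protocol described above can be sketched for a single image as follows. The code is a minimal illustration: boxes are `(x1, y1, x2, y2)` tuples, matching is greedy in descending confidence order, and the function names and example boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def match_detections(dets, gts, iou_thr=0.5, conf_thr=0.001):
    """dets: [(box, label, score)], gts: [(box, label)] -> (tp, fp, fn)."""
    kept = sorted((d for d in dets if d[2] >= conf_thr),
                  key=lambda d: d[2], reverse=True)
    used = set()  # each ground-truth object is matched at most once
    tp = fp = 0
    for box, label, _ in kept:
        best, best_iou = None, iou_thr
        for idx, (gbox, glabel) in enumerate(gts):
            if idx in used or glabel != label:
                continue
            overlap = iou(box, gbox)
            if overlap > best_iou:  # IoU must exceed the threshold
                best, best_iou = idx, overlap
        if best is None:
            fp += 1
        else:
            used.add(best)
            tp += 1
    fn = len(gts) - len(used)
    return tp, fp, fn

# Illustrative boxes only.
gts = [((0, 0, 10, 10), "car"), ((20, 20, 30, 30), "car")]
dets = [((0, 0, 10, 10), "car", 0.9),     # matches the first car
        ((1, 1, 11, 11), "car", 0.8),     # duplicate of an already matched car
        ((50, 50, 60, 60), "car", 0.7),   # no overlapping ground truth
        ((20, 20, 30, 30), "van", 0.6)]   # right place, wrong class
tp, fp, fn = match_detections(dets, gts)
print(tp, fp, fn)  # 1 3 1
```

The duplicate and the wrong-class detection both count as false positives, and the second car, left unmatched, counts as a false negative.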

Precision measures the accuracy of the algorithm’s predictions, representing the proportion of correctly predicted positive samples among all predicted positive samples. It is calculated as

$$\mathrm{Precision} = \frac{TP}{TP + FP}.$$

Recall measures the sensitivity of the algorithm, representing the proportion of correctly predicted positive samples among all actual positive samples. It is calculated as

$$\mathrm{Recall} = \frac{TP}{TP + FN}.$$

AP evaluates the detection performance for a single category, while mAP is a comprehensive metric for evaluating the overall performance of object detection. mAP is calculated by averaging the AP values across all categories, as shown in the formula below:

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i,$$

where $N$ represents the number of categories and $\sum_{i=1}^{N} AP_i$ is the sum of the average precision values for all categories.
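As a worked illustration of these metrics, the sketch below computes AP for one class as the area under its precision-recall curve and then averages per-class AP values into mAP. The interpolation scheme is an assumption (all-point interpolation with a monotone precision envelope), since the text does not state which variant is used, and the input values and function names are illustrative.

```python
def average_precision(scored_hits, num_gt):
    """AP for one class.

    scored_hits: list of (confidence, is_true_positive) for that class
    num_gt     : number of ground-truth objects of that class
    """
    if num_gt == 0:
        return 0.0
    hits = sorted(scored_hits, key=lambda h: h[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in hits:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Monotone envelope: precision at recall r is the best precision at >= r.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def mean_average_precision(ap_per_class):
    """mAP = (1 / N) * sum of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)

# Illustrative inputs only: three ranked detections, two ground-truth objects.
ap_car = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
m_ap = mean_average_precision({"car": 1.0, "bus": 0.5})
```

Here the first detection reaches recall 0.5 at precision 1.0 and the third reaches recall 1.0 at precision 2/3, so the area under the curve is 0.5 · 1.0 + 0.5 · 2/3 = 5/6.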

Additionally, GFLOPs were used to measure computational complexity, and the number of parameters was used to reflect the model size.

4.4. Comparative Experiments

To evaluate the effectiveness of the proposed ACD-DETR, we conducted extensive comparative experiments on two challenging UAV datasets: VisDrone2019 and DOTA. All compared models were trained using identical configurations and hyperparameters to ensure a fair comparison. For each model and dataset combination, we performed three independent training runs with different random seeds, and the reported results represent the average performance across these runs to ensure statistical robustness.

Table 3 reports a detailed comparison of ACD-DETR with recent DETR variants on the VisDrone2019 dataset. ACD-DETR achieves 50.9% mAP@0.5, outperforming all compared DETR-based detectors while demonstrating significantly improved model efficiency. Specifically, it requires only 16.2M parameters, representing a 54.5% to 57.4% reduction compared to existing methods, and operates at 68.9 GFLOPs, which is substantially lower than Dynamic-DETR (85.0 GFLOPs) and DINO (84.3 GFLOPs). These results indicate that ACD-DETR achieves a favorable balance between detection performance and computational efficiency for UAV small-object detection tasks.

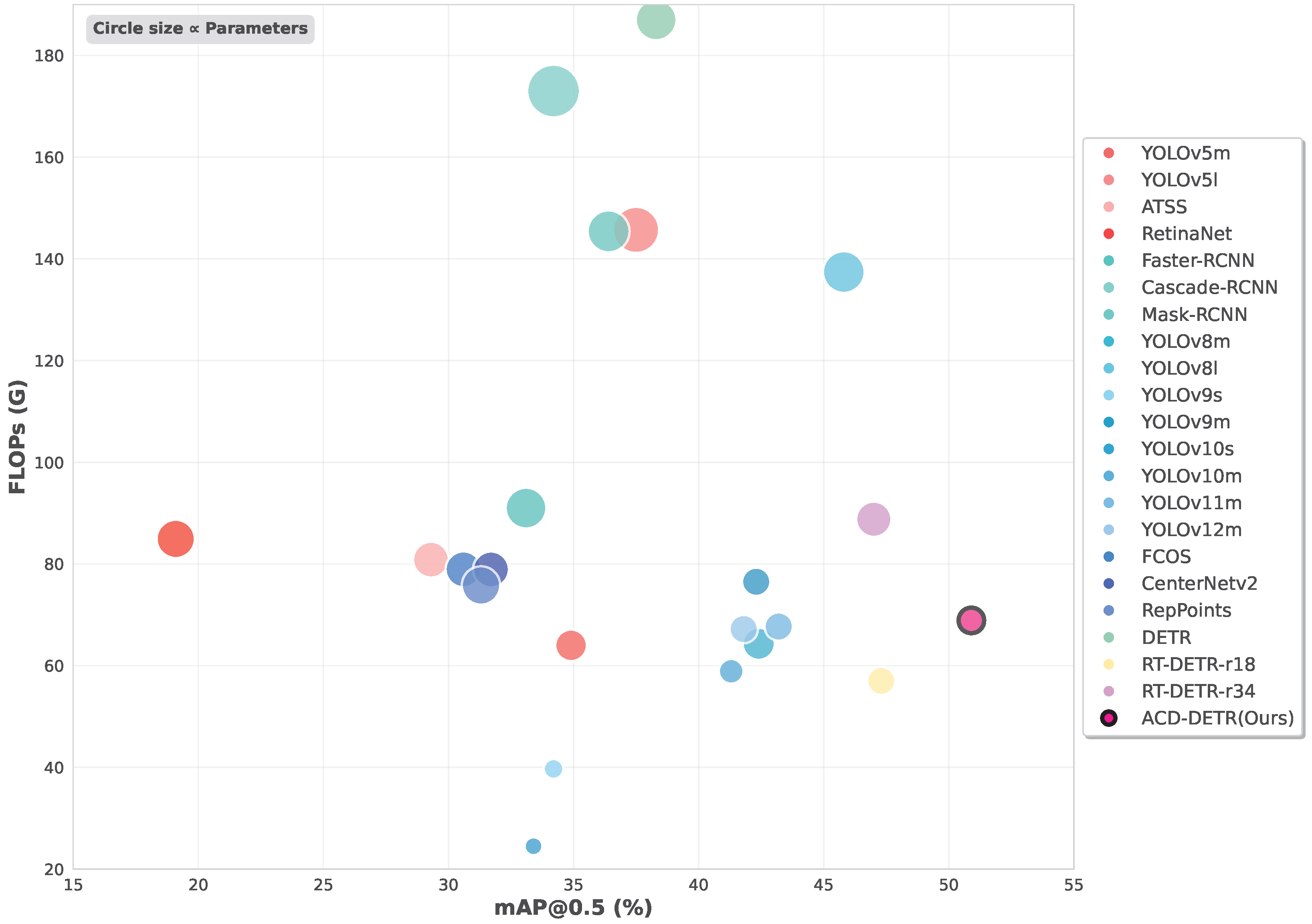

The cross-paradigm comparison presented in Table 4 demonstrates ACD-DETR’s superior performance on the VisDrone2019 dataset across multiple evaluation metrics. Among end-to-end detection methods, our proposed ACD-DETR achieves a 3.6 percentage-point improvement in mAP@0.5 (50.9% vs. 47.3%) over the lightweight RT-DETR-r18 baseline while reducing the parameter count by about 18% (16.3 M vs. 19.88 M). Notably, this performance gain is accomplished with significantly fewer parameters than RT-DETR-r34 (16.3 M vs. 31.1 M) while maintaining comparable computational efficiency (68.9 G vs. 71.3 G FLOPs).

When compared with recent YOLO series models, ACD-DETR maintains substantial performance advantages, outperforming YOLOv11m and YOLOv12m by 7.7 and 9.1 percentage points in mAP@0.5, respectively. The model achieves a favorable balance between precision and recall (63.8% precision and 49.0% recall), demonstrating robust capability in handling the challenging characteristics of aerial imagery, including dense small object detection and complex background scenarios. These results validate that our proposed MSEFM architecture, combined with OG-BC and DPB-AIFI modules, effectively enhances feature extraction, boundary refinement, and spatial modeling for drone-based imagery.

The anchor-free methods show competitive performance, with YOLOv8l achieving 45.8% mAP@0.5 at the cost of higher computational requirements (137.41 G FLOPs). Among anchor-based approaches, Mask-RCNN delivers the strongest results in this category (36.4% mAP@0.5), though this is still significantly behind our method. The performance gap between traditional detectors and end-to-end transformers is particularly evident in precision metrics, where ACD-DETR’s 63.8% surpasses all other methods by at least 2.5 percentage points.

Notably, our model achieves these advancements while maintaining parameter efficiency, with only YOLOv9s and YOLOv10s showing lower parameter counts among all compared methods. These comprehensive results establish ACD-DETR as a new state-of-the-art solution for aerial object detection, particularly in scenarios requiring accurate detection of densely distributed small objects.

Figure 9 visualizes the accuracy–efficiency trade-off of all compared models. ACD-DETR achieves the highest detection accuracy while maintaining computational complexity below 70 G FLOPs, thus occupying the Pareto-optimal frontier. Compared to lightweight anchor-free models that sacrifice accuracy to reduce computational cost, ACD-DETR maintains a superior precision–recall balance, highlighting its suitability for real-time aerial applications.
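Pareto optimality in the sense used above can be made concrete with a short sketch. The snippet is an illustration, not the procedure used to produce Figure 9: the `Model` tuple and the `pareto_front` helper are hypothetical names, the first two entries use values quoted in this section, and the third entry is a made-up point included only to show that a frontier may contain several models.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    gflops: float  # computational cost, lower is better
    map50: float   # mAP@0.5 in percent, higher is better


def pareto_front(models: list[Model]) -> list[Model]:
    """Return the models that no other model dominates.

    A model is dominated when another model is at least as good on both
    axes (cost and accuracy) and strictly better on at least one.
    """
    front = []
    for m in models:
        dominated = any(
            o.gflops <= m.gflops
            and o.map50 >= m.map50
            and (o.gflops < m.gflops or o.map50 > m.map50)
            for o in models
        )
        if not dominated:
            front.append(m)
    return front


models = [
    Model("ACD-DETR", 68.9, 50.9),            # quoted in the text
    Model("YOLOv8l", 137.41, 45.8),           # quoted in the text
    Model("hypothetical-light", 30.0, 40.0),  # made-up point, illustration only
]

front_names = [m.name for m in pareto_front(models)]
print(front_names)
```

With these inputs, YOLOv8l is dominated because ACD-DETR is both cheaper and more accurate, while the made-up lightweight point stays on the frontier because nothing is cheaper.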

Table 5 presents a comprehensive performance comparison across the 10 object categories of the VisDrone2019 dataset, using mAP@0.5 as the evaluation metric. Our proposed ACD-DETR consistently achieves the highest detection accuracy across all categories, demonstrating substantial improvements over the baseline RT-DETR-r18 and other state-of-the-art methods.

Compared to the baseline RT-DETR-r18, ACD-DETR shows significant improvements across all object categories. For challenging small objects, our method achieves notable gains: +3.5% for pedestrian (58.9% vs. 55.4%), +1.9% for people (51.1% vs. 49.2%), and +4.3% for bicycle (26.1% vs. 21.8%). For medium-sized objects such as tricycle and awning-tricycle, ACD-DETR surpasses RT-DETR-r18 by 6.0% and 2.3%, respectively. Even for larger objects like car and bus, consistent improvements of +0.6% and +7.9% are observed, indicating that our proposed modules enhance detection performance across diverse object scales.

Evaluation on the DOTA dataset (Table 6) further validates the effectiveness of ACD-DETR on a different aerial detection benchmark. The model achieves 69.3% mAP@0.5 with strong precision (75.7%) and recall (66.9%). The consistent performance improvements on both the VisDrone2019 and DOTA datasets demonstrate that ACD-DETR’s design is effective across different aerial object detection scenarios, confirming the robustness and broad applicability of our proposed method.

These comprehensive comparisons establish ACD-DETR as a significant advancement in efficient aerial object detection. The consistent improvements across multiple datasets, detection paradigms, and evaluation metrics demonstrate the robustness and practical applicability of our approach for real-world aerial surveillance and monitoring applications.

4.5. Ablation Studies

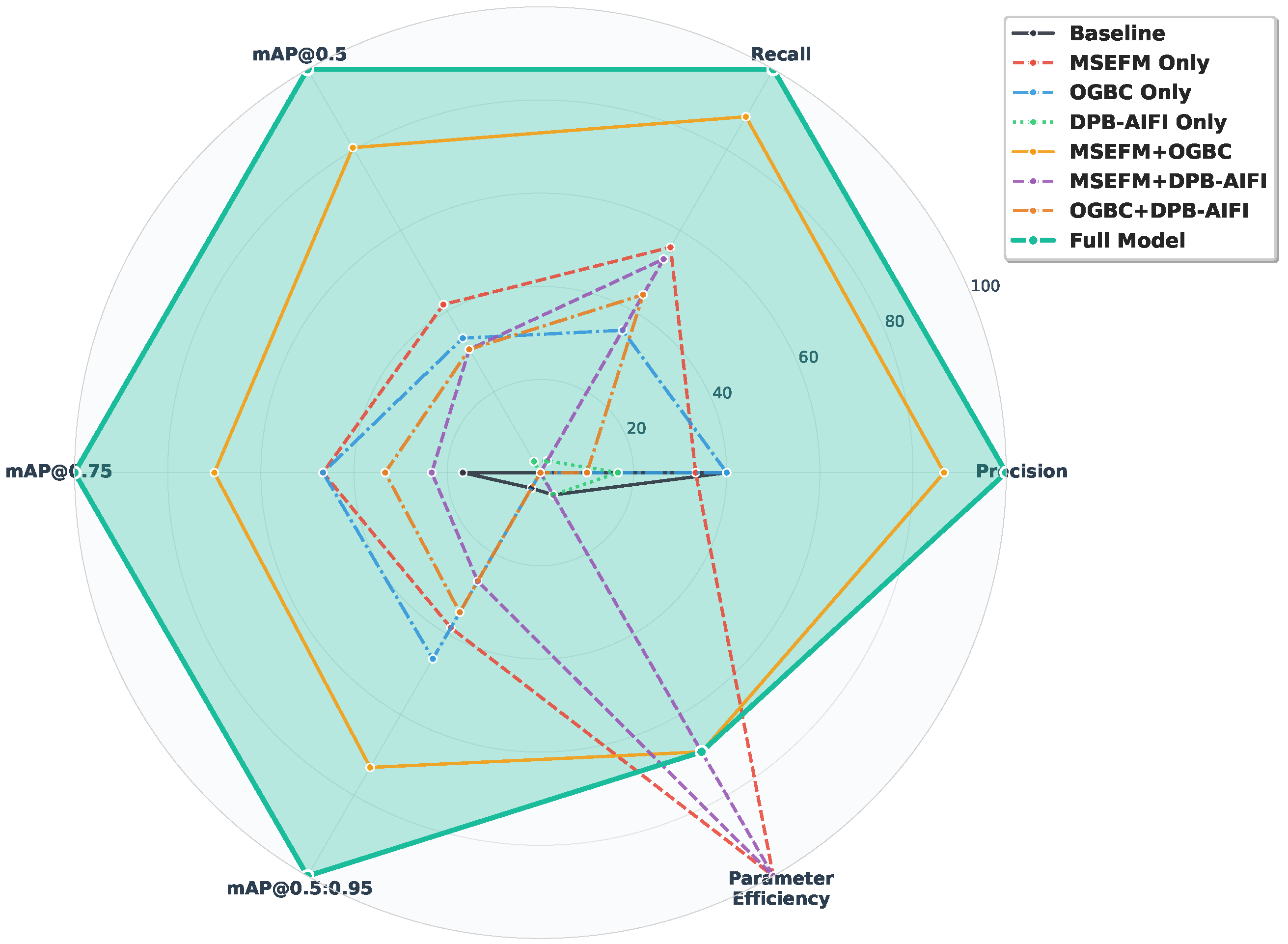

To comprehensively evaluate the contributions of our proposed architectural components, we conducted systematic ablation studies examining the individual and combined effects of the MSEFM, OG-BC, and DPB-AIFI modules. The results, presented in Table 7 and visualized in Figure 10, demonstrate the effectiveness of each component across multiple evaluation metrics, including precision, recall, mAP@0.5, mAP@0.75, mAP@0.5:0.95, and parameter efficiency.

Figure 10 presents the comprehensive ablation study results, where MSEFM denotes the Multi-Scale Edge-Enhanced Feature Fusion Module, OG-BC represents the Omni-Grained Boundary Calibrator, and DPB-AIFI indicates the DPB-enhanced AIFI. The radar chart visualization clearly illustrates the progressive performance improvements achieved through different module combinations, with the complete model incorporating all three proposed modules (highlighted with the most prominent line) demonstrating superior performance across all evaluation criteria.

The baseline configuration without any proposed modules achieves 62.0% precision, 45.6% recall, and 47.3% mAP@0.5 with 19.88M parameters. The baseline shows relatively low recall (45.6%) and moderate mAP scores across different IoU thresholds (mAP@0.75: 30.0%, mAP@0.5:0.95: 29.0%). These results highlight the inherent limitations in detecting small objects and achieving precise localization, thus establishing clear motivation for our proposed enhancements. As evident from the radar chart, this baseline configuration forms the innermost performance boundary, serving as a foundation for understanding the individual contributions of each proposed module to the overall system performance.
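To illustrate why stricter IoU thresholds are demanding for small objects, the following sketch computes the IoU of two boxes and checks it against the 0.5 and 0.75 thresholds. It assumes axis-aligned boxes in `(x1, y1, x2, y2)` format and the COCO-style convention in which mAP@0.5:0.95 averages over thresholds from 0.50 to 0.95 in steps of 0.05; the box coordinates are made up for illustration and the `iou` helper is not taken from our evaluation code.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Thresholds 0.50, 0.55, ..., 0.95 (COCO-style convention, assumed here).
thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Made-up example: a 10 x 10 pixel object and a prediction shifted by 2 pixels.
gt = (10, 10, 20, 20)
pred = (12, 10, 22, 20)

overlap = iou(gt, pred)
matches = {t: overlap >= t for t in (0.5, 0.75)}
print(round(overlap, 3), matches)
```

In this example a two-pixel offset on a 10 x 10 object already drops the IoU to about 0.67, so the prediction counts as correct at the 0.5 threshold but not at 0.75.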

When integrating MSEFM alone, we observe significant improvements across multiple metrics, as clearly visualized in the radar chart expansion. The mAP@0.5 increases by 1.5 percentage points to 48.8%, while recall improves substantially to 47.5%, demonstrating the module’s effectiveness in enhancing feature extraction capabilities. Notably, the mAP@0.5:0.95 reaches 29.9%, indicating improved performance across varying IoU thresholds. Most remarkably, this enhancement is accompanied by a 26.7% reduction in parameters from 19.88M to 14.57M, demonstrating MSEFM’s dual benefit of improved performance and computational efficiency. The enhanced edge perception and multi-scale feature extraction capabilities of MSEFM prove particularly effective for small object detection scenarios, where precise feature representation is crucial for accurate localization.

The OG-BC module demonstrates comparable effectiveness when applied individually, achieving 48.5% mAP@0.5 and improving recall to 46.8%. The consistent improvements across all mAP metrics (mAP@0.75: 30.9%, mAP@0.5:0.95: 30.1%) validate the module’s capability in enhancing detection robustness through multi-scale semantic fusion and explicit boundary modeling. However, this improvement comes with a slight parameter increase to 20.19M, reflecting the computational cost associated with omni-grained boundary calibration mechanisms. The maintained precision at 62.0% indicates that OG-BC enhances recall without compromising detection accuracy, suggesting effective boundary refinement capabilities.

In contrast, DPB-AIFI alone yields minimal improvements, with mAP@0.5 increasing by only 0.1 percentage points to 47.4%, accompanied by slight degradation in precision to 61.3% and mAP@0.5:0.95 to 28.9%. This limited individual contribution, clearly visible as minimal radar chart expansion, suggests that dynamic position bias mechanisms require synergistic interaction with other components to realize their full potential. The minimal improvement indicates that positional encoding enhancements alone are insufficient to address the fundamental challenges in small object detection without complementary feature extraction and boundary modeling improvements.

The combination of MSEFM and OG-BC produces substantial performance gains, achieving 50.2% mAP@0.5, 48.6% recall, and 63.4% precision while maintaining computational efficiency at 16.30M parameters. The 2.9 percentage point improvement in mAP@0.5 compared to the baseline demonstrates strong complementarity between enhanced feature extraction and refined boundary modeling. All mAP metrics show consistent improvements (mAP@0.75: 31.6%, mAP@0.5:0.95: 30.8%), indicating robust performance across varying IoU thresholds. This synergistic effect, prominently displayed in the radar chart, suggests that the multi-scale edge-enhanced features provide a solid foundation for the boundary calibrator to perform more accurate refinements.

By comparison, pairwise combinations that include DPB-AIFI together with only one of the other two modules show limited benefits or slight performance degradation, as evidenced by the constrained radar chart profiles. The MSEFM + DPB-AIFI configuration achieves 48.4% mAP@0.5 with reduced precision (60.8%), while the OG-BC + DPB-AIFI combination maintains similar mAP@0.5 (48.4%) but with lower precision (61.1%). These results suggest that DPB-AIFI’s effectiveness is contingent upon the presence of enhanced feature extraction and boundary modeling capabilities, indicating that dynamic position bias optimization requires a robust feature representation framework to be effective.

The complete ACD-DETR model that incorporates all three modules achieves optimal performance on all evaluation metrics, forming the outermost boundary in the radar chart visualization. The model demonstrates 63.8% precision, 49.0% recall, and 50.9% mAP@0.5 while maintaining computational efficiency with only 16.30M parameters. Compared to the baseline, this represents improvements of 1.8 percentage points in precision, 3.4 percentage points in recall, and 3.6 percentage points in mAP@0.5, with an 18% reduction in parameter count. The consistent improvements across all mAP metrics (mAP@0.75: 32.5%, mAP@0.5:0.95: 31.5%) demonstrate enhanced localization accuracy and robustness across varying detection thresholds. These results confirm that the three architectural components work in concert to maximize detection performance while preserving computational efficiency, with DPB-AIFI providing the final optimization layer that fine-tunes the synergistic interaction between MSEFM and OG-BC. The superior performance of the complete configuration, clearly visible as the most expanded radar chart profile, empirically validates our architectural design principles and confirms the theoretical soundness of the proposed multi-component integration strategy.
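The gains over the baseline can be recomputed directly from the values quoted in this section. The sketch below is a worked arithmetic check rather than part of the experimental pipeline; the dictionary layout and the `summarize` helper are hypothetical, and only the configurations for which precision, recall, mAP@0.5, and parameter count are all stated in the text are included.

```python
# Values quoted in the ablation discussion (percent; parameters in millions).
BASELINE = {"precision": 62.0, "recall": 45.6, "map50": 47.3, "params_m": 19.88}
CONFIGS = {
    "OG-BC": {"precision": 62.0, "recall": 46.8, "map50": 48.5, "params_m": 20.19},
    "MSEFM + OG-BC": {"precision": 63.4, "recall": 48.6, "map50": 50.2, "params_m": 16.30},
    "Full ACD-DETR": {"precision": 63.8, "recall": 49.0, "map50": 50.9, "params_m": 16.30},
}
PP_METRICS = ("precision", "recall", "map50")


def summarize(cfg, base=BASELINE):
    """Percentage-point gain per metric and relative parameter change in percent."""
    row = {m: round(cfg[m] - base[m], 1) for m in PP_METRICS}
    rel = (cfg["params_m"] - base["params_m"]) / base["params_m"] * 100.0
    row["params_change_pct"] = round(rel, 1)
    return row


summary = {name: summarize(cfg) for name, cfg in CONFIGS.items()}
for name, row in summary.items():
    print(name, row)
```

Metric differences are absolute (percentage points), whereas the parameter change is relative to the baseline count, which is why the two are reported separately.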

4.6. Fusion-Enhanced Variant: ACD-DETR-SBA+

Beyond the modular ablation studies, we further propose a fusion-enhanced variant termed ACD-DETR-SBA+, which removes the CSP-OmniKernel module and the DPB-AIFI module, while introducing additional SBA modules to intensify boundary–semantic fusion. This design focuses on enhancing feature-level fusion while discarding explicit positional modeling and omni-kernel calibration.

As shown in Table 8, ACD-DETR-SBA+ achieves superior detection performance across all metrics compared to both the baseline RT-DETR-R18 and the full ACD-DETR model. Notably, it outperforms ACD-DETR on mAP@0.5 and mAP@0.5:0.95, demonstrating the effectiveness of repeated semantic–boundary interaction. Although the removal of CSP-OmniKernel and DPB-AIFI reduces the parameter count to 15.67 M, the dense deployment of SBA modules leads to a significant increase in computational complexity, as reflected by the GFLOPs rising to 94.2. This increase is primarily due to the intensive attention mechanisms and repeated fusion operations within the SBA modules.

In summary, ACD-DETR-SBA+ represents a design that sacrifices inference efficiency in favor of improved detection precision. This variant is particularly suitable for scenarios where computational resources are sufficient but detection accuracy is prioritized, such as UAV-based monitoring and inspection tasks.

These results suggest that intensive semantic–boundary fusion, enabled by densely stacked SBA modules, can serve as a lightweight (in terms of parameter count) yet computationally intensive alternative to positional encoding and omni-kernel calibration for UAV small object detection. The ACD-DETR-SBA+ variant highlights the potential of purely feature-level fusion strategies when deployed in resource-rich environments.
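The decoupling of parameter count from computational cost can be seen in a simple back-of-the-envelope calculation. The sketch below uses a plain 3 x 3 convolution as a stand-in, since the internal layout of the SBA module is not reproduced here; the channel width and feature map sizes are hypothetical and chosen only to show the scaling behavior.

```python
def conv2d_params(c_in: int, c_out: int, k: int) -> int:
    """Weights plus biases of a k x k convolution; independent of input size."""
    return c_in * c_out * k * k + c_out


def conv2d_macs(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """Multiply-accumulate operations for one forward pass.

    Every output position costs c_in * k * k MACs per output channel, so the
    total grows linearly with the output resolution h_out * w_out.
    """
    return c_in * c_out * k * k * h_out * w_out


# Hypothetical layer: 256 -> 256 channels, 3 x 3 kernel.
params = conv2d_params(256, 256, 3)

# The same layer applied to a coarse and to a fine feature map.
macs_low = conv2d_macs(256, 256, 3, 20, 20)
macs_high = conv2d_macs(256, 256, 3, 80, 80)

print(params, macs_low, macs_high, macs_high // macs_low)
```

The layer has the same number of parameters in both cases, yet the cost on the 80 x 80 map is 16 times that on the 20 x 20 map. Operations applied repeatedly on high-resolution features can therefore raise GFLOPs substantially while leaving the parameter count nearly unchanged.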

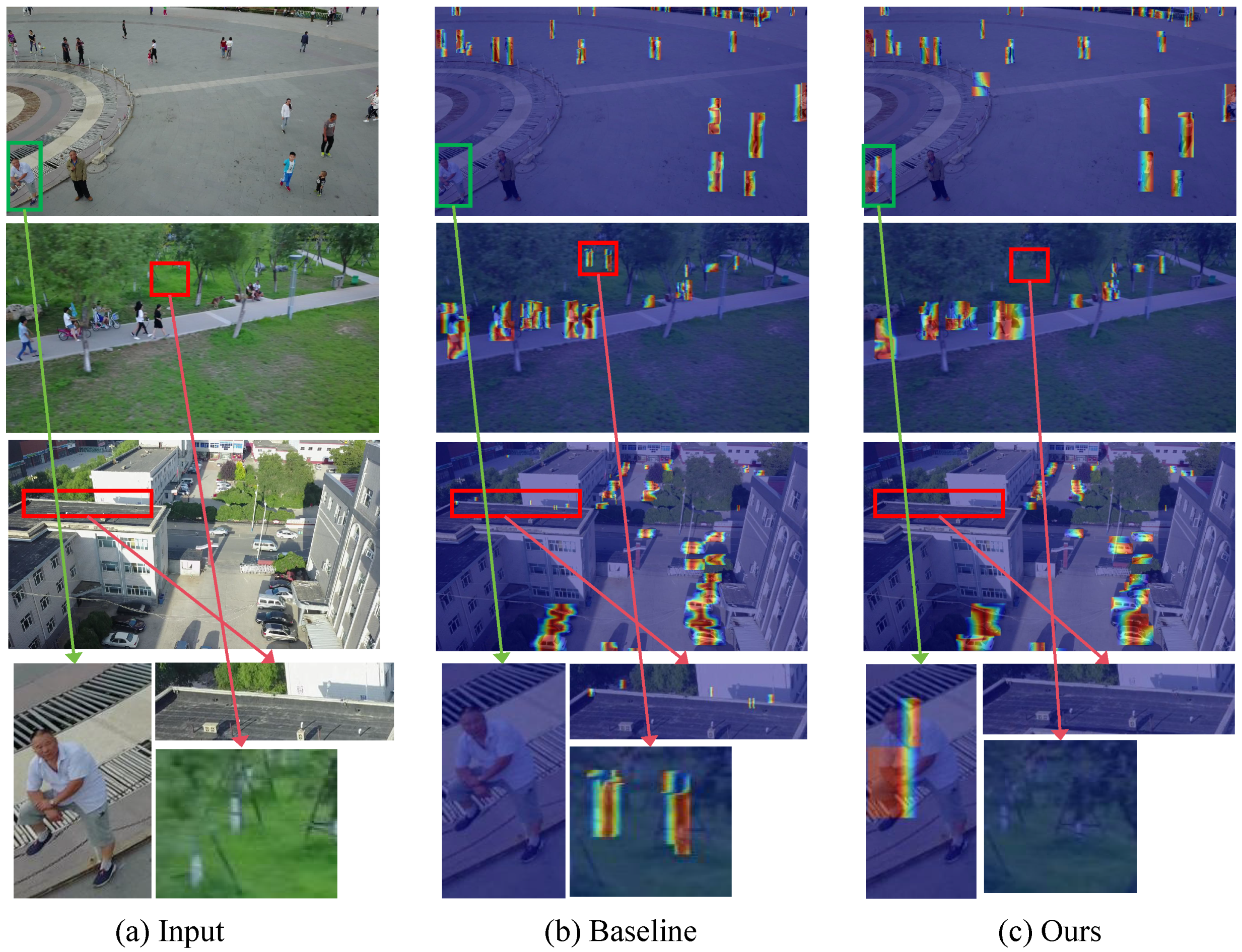

4.7. Visualization

To qualitatively evaluate the detection performance, we visualize the attention heatmaps of the baseline RT-DETR-R18 and the proposed ACD-DETR on the VisDrone dataset, as shown in Figure 11. Compared to RT-DETR-R18, ACD-DETR exhibits more concentrated and accurate attention on small targets.

As observed from the red and green boxes in Figure 11, the proposed ACD-DETR significantly reduces both false positives and missed detections compared to the baseline model. The attention responses generated by ACD-DETR are more concentrated around actual target regions, leading to more accurate localization. This demonstrates that the integration of multi-scale edge enhancement and spatial-aware encoding improves the model’s capability in detecting small or occluded objects in UAV imagery.

5. Conclusions

In this paper, we propose ACD-DETR, a lightweight Transformer-based framework tailored for small object detection in UAV imagery. By integrating MSEFM, OG-BC, and DPB-AIFI, ACD-DETR effectively enhances fine-grained feature extraction, boundary localization, and spatial relationship modeling.

To further investigate the trade-off between detection accuracy and computational cost, we introduce ACD-DETR-SBA+, a fusion-enhanced variant that replaces OG-BC and DPB-AIFI with densely stacked Semantic–Boundary Aggregation (SBA) modules. Although ACD-DETR-SBA+ incurs higher computational overhead (in terms of GFLOPs), it achieves superior detection performance (both mAP@0.5 and mAP@0.5:0.95), making it well-suited for deployment in computation-rich environments.

Extensive experiments on the VisDrone2019 and DOTA datasets demonstrate that ACD-DETR achieves a new state-of-the-art trade-off between accuracy and efficiency for UAV-based small object detection. In contrast, ACD-DETR-SBA+ illustrates the potential of pure feature-level fusion strategies when resources are not constrained. Ablation studies validate the individual and synergistic contributions of each proposed module, while qualitative visualizations show that our model more effectively attends to small and occluded targets than existing baselines.

In future work, we aim to further optimize the fusion strategy, reduce computational complexity, investigate true cross-dataset generalization by evaluating models trained on one aerial dataset directly on other datasets, and adapt the framework for real-time deployment in aerial edge computing scenarios.