Assessing the Generalizability of Foundation Models for the Recognition of Motor Examinations in Parkinson’s Disease

Abstract

1. Introduction

The Issue of Generalization into Clinical Reality

1. Fine-tuned foundation models trained through self-supervised learning on accelerometer data of healthy participants enhance the recognition of activities associated with motor examinations conducted by PD patients across datasets and recording paradigms;

2. The fine-tuned models show increased robustness to varying recording conditions and different data preprocessing commonly observed between studies.

2. Materials and Methods

2.1. Data

2.1.1. Datasets

2.1.2. Data Preprocessing

2.2. Model

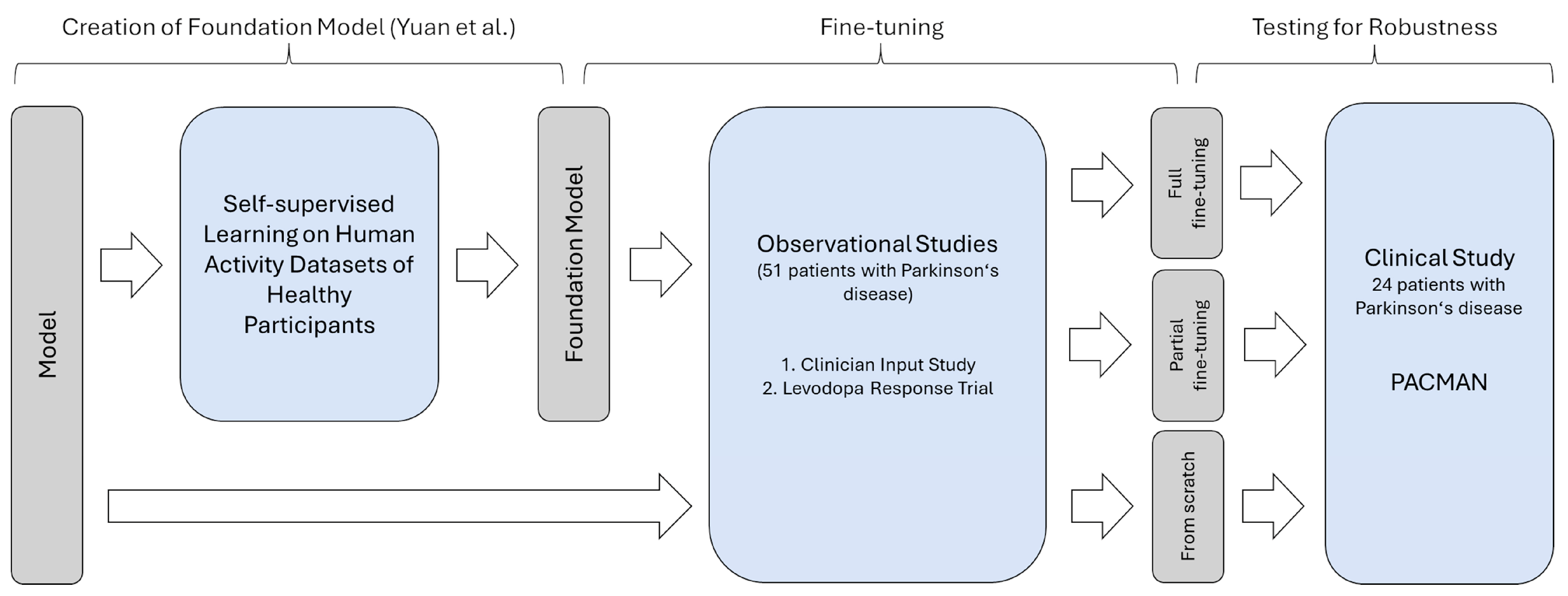

2.2.1. Foundation Model

2.2.2. Fine-Tuning the Foundation Model

2.3. Evaluation

- Training from scratch: In the first setup, the network was trained from scratch. The pre-trained weights of the self-supervised model were not used at all; instead, the network was randomly initialized using the defaults of the PyTorch library (version 1.13). The deep-learning architecture was thus trained as if no data beyond the training set were available. This condition represents the baseline and can be used to study effects such as the appropriateness of the model structure. However, the risk of overfitting is high.

- Partial fine-tuning: In the second setup, only the last layers of the network were trained to predict the presence of a motor examination. The remaining layers were frozen and, with their pre-trained weights, served as feature extractors. This setup has the fewest trainable parameters and therefore a reduced risk of overfitting, but the frozen layers may not account for a changed distribution in the input data, given the non-healthy study population.

- Full fine-tuning: In the third setup, the full network was trained, using the existing weights of the foundation model as a starting point. The model can fully adapt to the changed input data while reusing previously extracted representations of movements. However, overfitting might affect the performance on unseen data, given the size of the network and the small number of training samples.
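The three setups differ only in how the weights are initialized and which parameters receive gradient updates. The following is a minimal PyTorch sketch of that difference, not the authors' implementation: the tiny network in `build_network`, the helper `configure`, the Adam optimizer, the five output classes, and the synthetic input windows are all illustrative assumptions, and the "pre-trained" weights are a randomly generated placeholder.

```python
import torch
from torch import nn


def build_network(num_classes: int = 5) -> nn.Sequential:
    """Hypothetical stand-in for the real architecture: backbone plus linear head."""
    backbone = nn.Sequential(
        nn.Conv1d(3, 16, kernel_size=5, padding=2),  # 3 accelerometer axes
        nn.ReLU(),
        nn.AdaptiveAvgPool1d(1),
        nn.Flatten(),
    )
    head = nn.Linear(16, num_classes)
    return nn.Sequential(backbone, head)


def configure(mode: str, pretrained_backbone: dict, lr: float = 1e-4):
    """Return (model, optimizer) for 'scratch', 'partial', or 'full'."""
    if mode not in ("scratch", "partial", "full"):
        raise ValueError(f"unknown mode: {mode}")
    model = build_network()  # PyTorch's default random initialization
    backbone = model[0]
    if mode in ("partial", "full"):
        # Start from the pre-trained feature extractor; the head stays random.
        backbone.load_state_dict(pretrained_backbone)
    if mode == "partial":
        # Freeze the feature extractor so only the head is trained.
        for param in backbone.parameters():
            param.requires_grad = False
    trainable = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.Adam(trainable, lr=lr)  # optimizer choice is assumed
    return model, optimizer


def count_trainable(model: nn.Module) -> int:
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


torch.manual_seed(0)
# Placeholder for real pre-trained weights (assumption: same backbone layout).
pretrained_backbone = build_network()[0].state_dict()

for mode in ("scratch", "partial", "full"):
    model, optimizer = configure(mode, pretrained_backbone)
    windows = torch.randn(8, 3, 300)    # 8 synthetic tri-axial windows
    labels = torch.randint(0, 5, (8,))  # synthetic activity labels
    loss = nn.functional.cross_entropy(model(windows), labels)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    print(mode, count_trainable(model))
```

In this toy network, partial fine-tuning leaves 85 of the 341 parameters trainable, which illustrates why that setup carries the lowest risk of overfitting but cannot adapt the feature extractor to data from patients.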

2.4. Statistical Analysis

3. Results

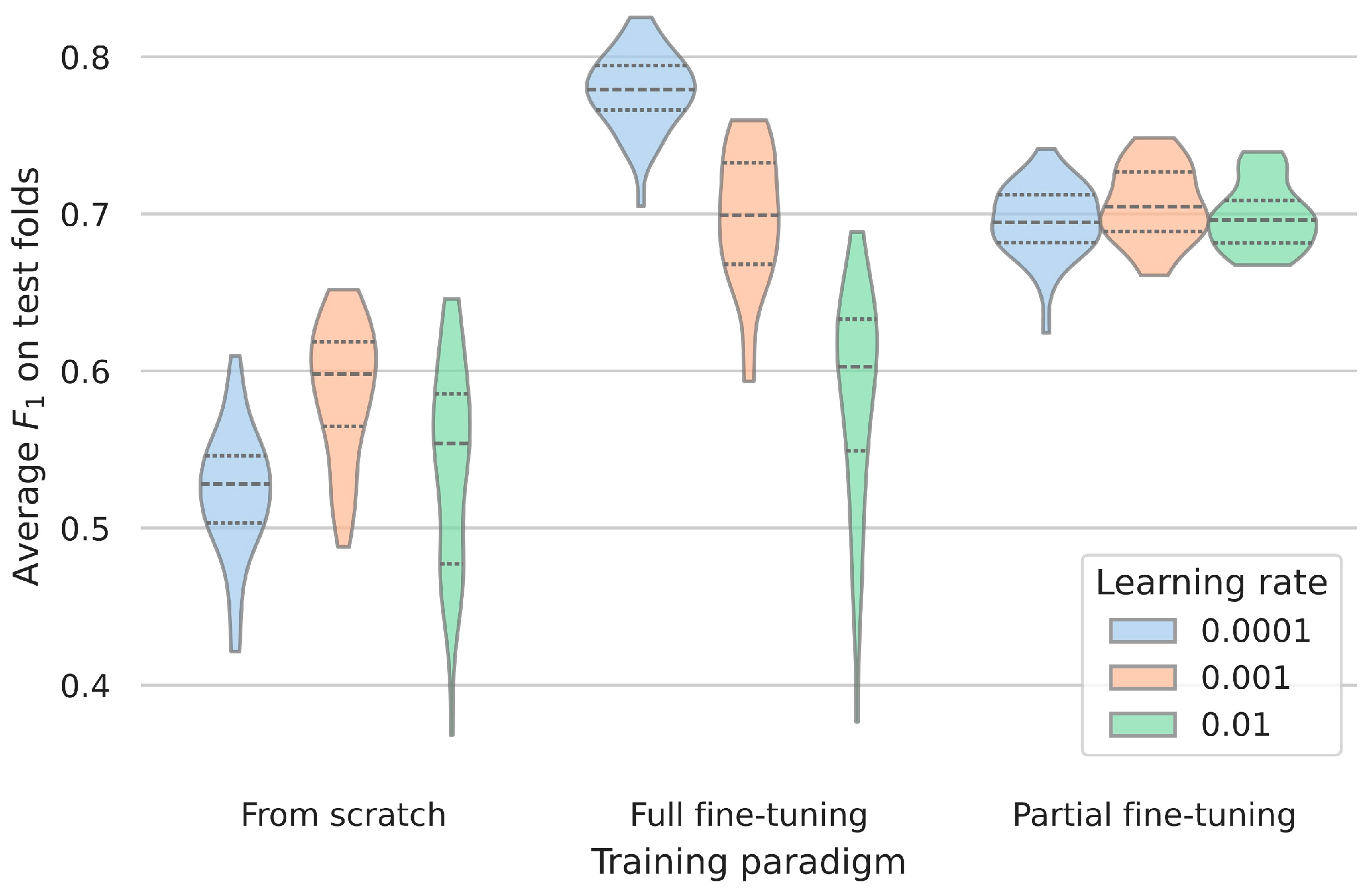

3.1. Impact of Training Procedures for Fine-Tuning

3.2. Influence of Learning Rate for Fine-Tuning

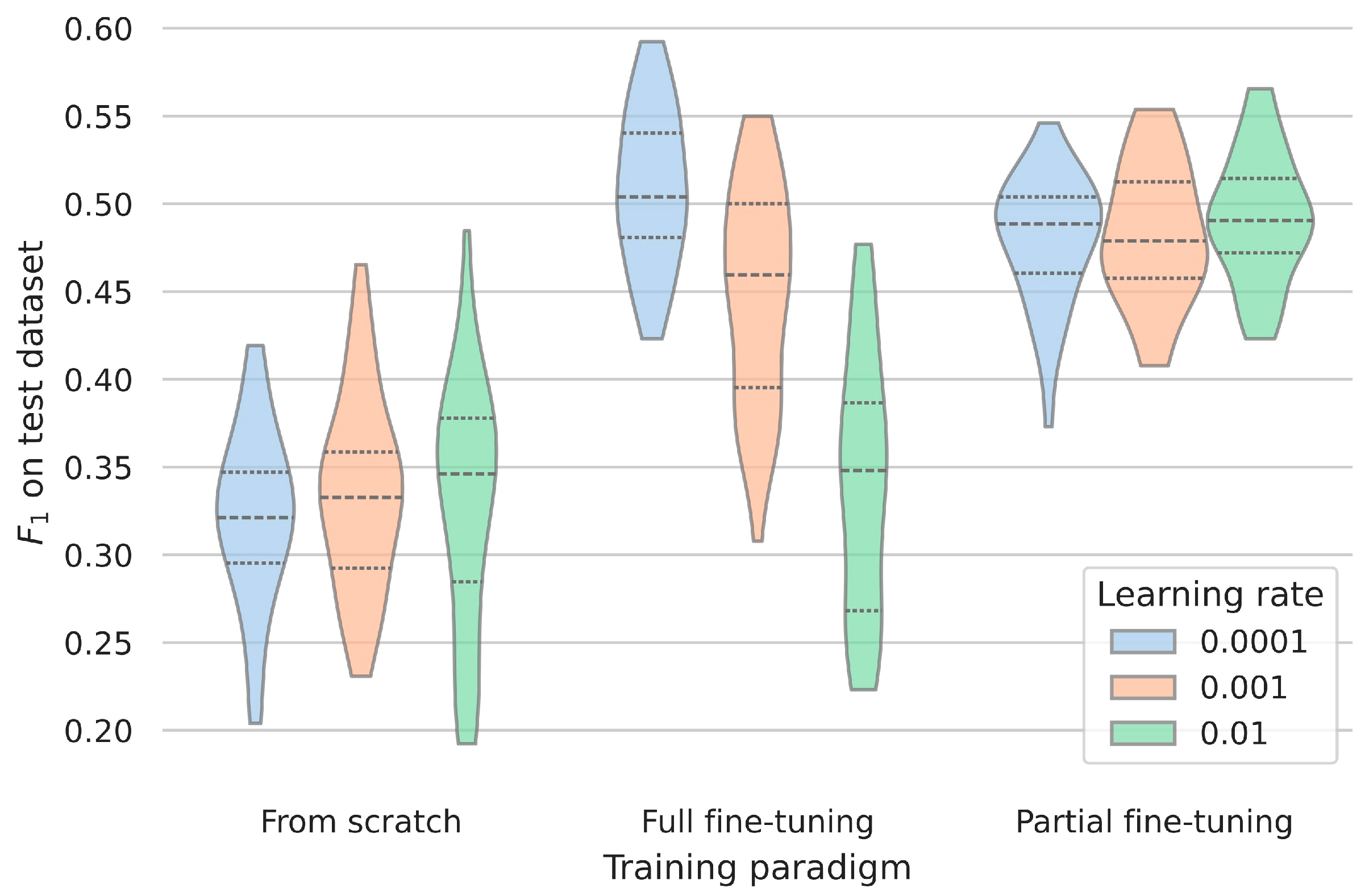

3.3. Assessing the Robustness and Generalizability of the Clinical Dataset

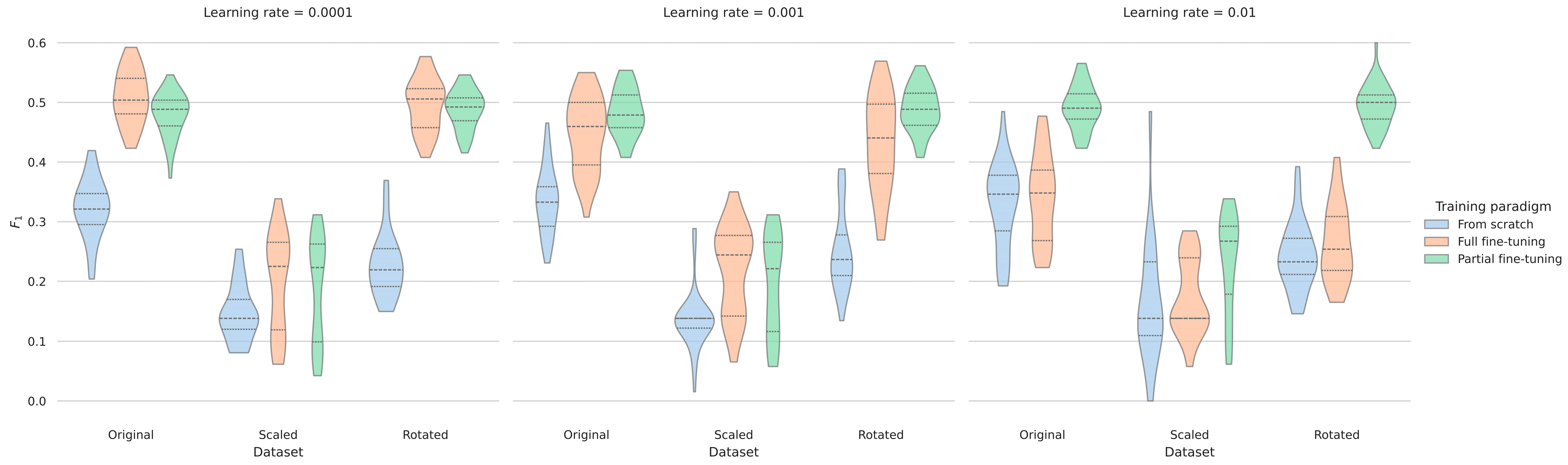

3.4. Evaluating the Robustness According to Recording Setups

4. Discussion

4.1. Benefits of Utilizing Data from Healthy Participants for Movement Disorder Research

4.2. Evidence for Increased Robustness Regarding Recording Setups

4.3. Utility of the Proposed Model for Recognizing Motor Examinations

4.4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CIS-PD | Clinician Input Study |
| PACMAN | Parkinson’s Clinical Movement Assessment |
| PD | Parkinson’s disease |
| MDS-UPDRS | Movement Disorder Society Unified Parkinson’s Disease Rating Scale |


| | Levodopa Response Trial | Clinician Input Study | PACMAN Study |
|---|---|---|---|
| Participants | 27 | 24 | 24 |
| Age range | 50–84 years | 36–75 years | 49–79 years |
| Average age (SD) | 67 (±9) years | 63 (±10) years | 65 (±8) years |
| Rotating hands (UPDRS 3.6) | 4912 | 908 | 23 |
| Other movements | 15,172 | 3012 | 36 |
| Sitting | 2077 | 436 | 66 |
| Standing | 2077 | 418 | 0 |
| Walking (UPDRS 3.10) | 7010 | 950 | 135 |

| Learning Rate | F1 Score (±SD): From scratch | F1 Score (±SD): Full fine-tuning | F1 Score (±SD): Partial fine-tuning |
|---|---|---|---|
| 0.0001 | 0.52 ± 0.04 | 0.78 ± 0.02 | 0.70 ± 0.02 |
| 0.001 | 0.59 ± 0.04 | 0.70 ± 0.04 | 0.71 ± 0.02 |
| 0.01 | 0.54 ± 0.06 | 0.58 ± 0.07 | 0.70 ± 0.02 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gundler, C.; Wiederhold, A.J.; Pötter-Nerger, M. Assessing the Generalizability of Foundation Models for the Recognition of Motor Examinations in Parkinson’s Disease. Sensors 2025, 25, 5523. https://doi.org/10.3390/s25175523