MDEM: A Multi-Scale Damage Enhancement MambaOut for Pavement Damage Classification

Abstract

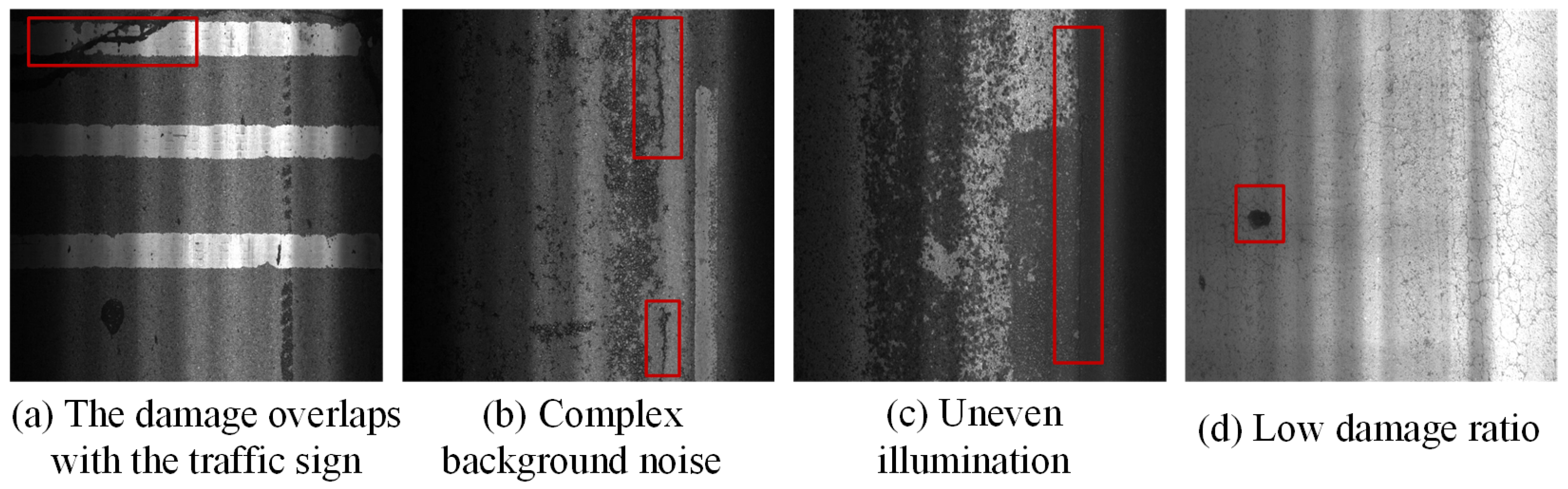

1. Introduction

2. Related Work

2.1. Pavement Damage Classification

2.2. Related Technologies

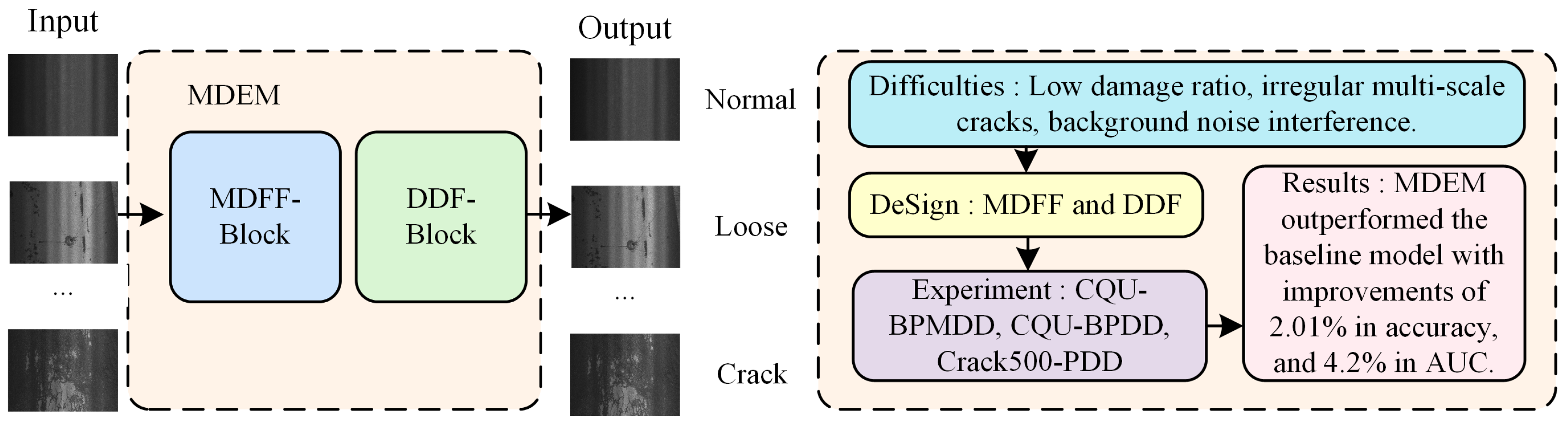

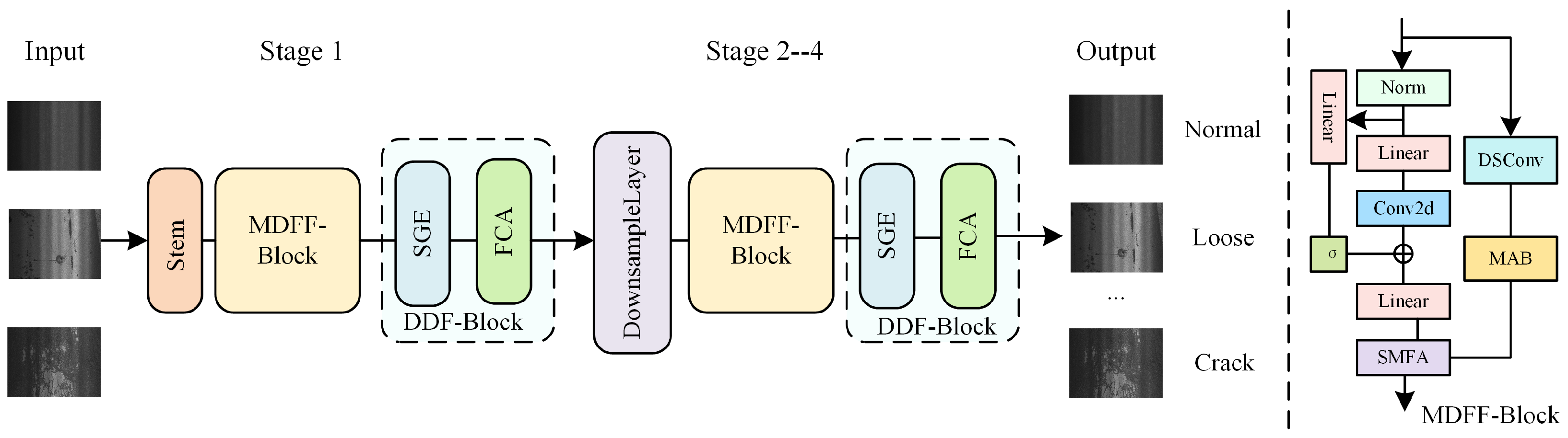

3. Methodology

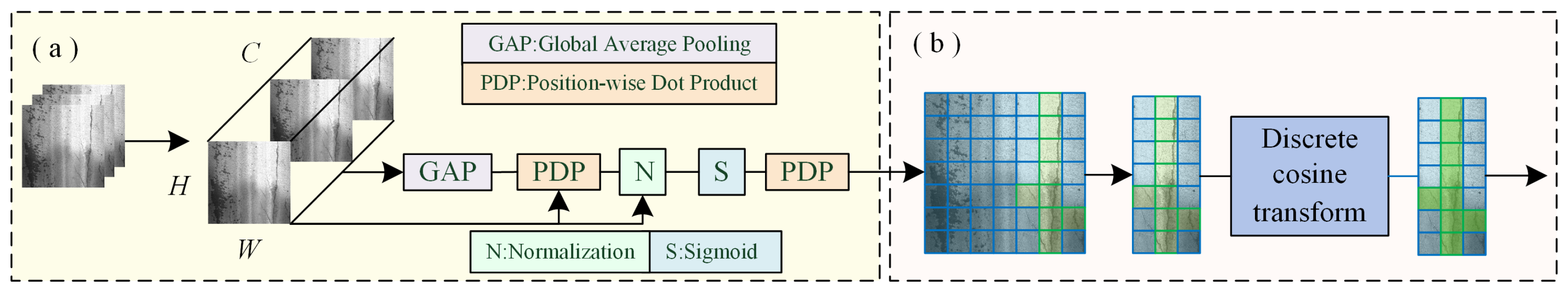

3.1. Multi-Scale Dynamic Feature Fusion Block

3.2. Damage Detail Enhancement Block

3.3. Loss Function

3.4. Multi-Scale Damage Enhancement MambaOut

4. Experiments

4.1. Experimental Setting and Evaluation Metrics

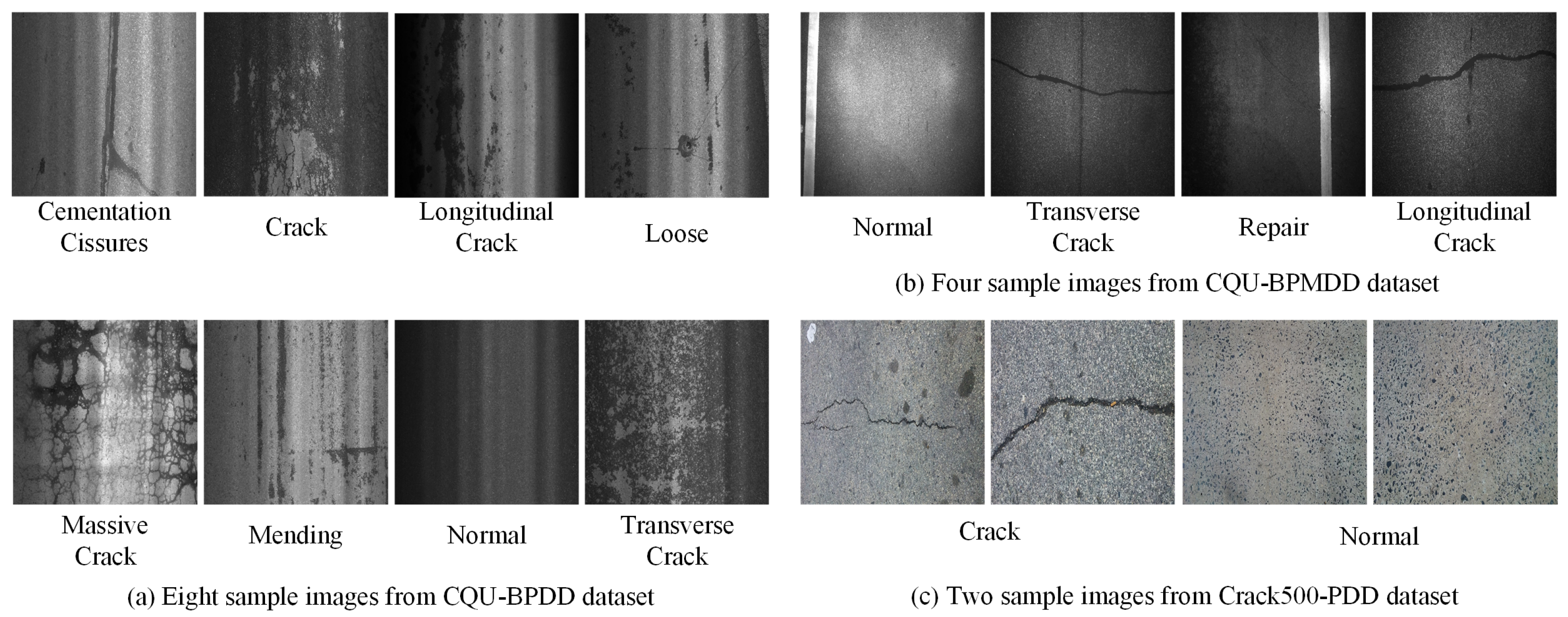

4.2. Dataset

4.3. Ablation Analysis

4.4. Detection Task Experimental Analysis

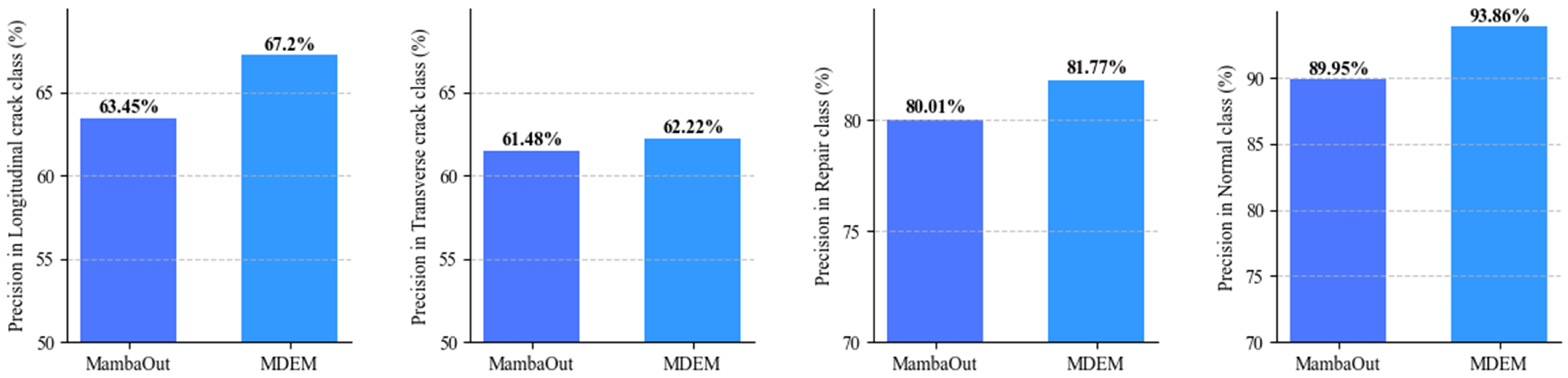

4.5. Classification Task Experimental Analysis

4.6. Visualization Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDEM | Multi-scale Damage Enhancement MambaOut |
| MDFF | Multi-scale Dynamic Feature Fusion Block |
| DDE | Damage Detail Enhancement Block |
| CNN | Convolutional Neural Network |
| DCNNs | Deep Convolutional Neural Networks |
| AlexNet | ImageNet Classification with Deep Convolutional Neural Networks |
| CLA | Contrastive Learning Attention mechanism |
| RFEM | Region-Focused Enhancement Module |
| CAGM | Context-Aware Global Module |
| DFSS | Deep Fusion Selective Scanning Module |
| WSDD-CAM | Weakly Supervised Damage Detection with Class Activation Mapping |
| CAM | Class Activation Mapping |
| SSM | Selective State Space Model |
| DSConv | Dynamic Snake Convolution |
| ViT | Vision Transformer |
| Swin-T | Swin Transformer |
| ViM | Vision Mamba |
| VGG16 | Visual Geometry Group 16-layer network |
| FFVT | Feature Fusion Vision Transformer |
| TransFG | Transformer Architecture for Fine-Grained Recognition |
| DMTC | Dense Multi-scale Feature Learning Transformer |
| DDACDN | Deep Domain Adaptation for Pavement Crack Detection |
| PicT | Pavement image classification Transformer |
| STCL | Soft Target Cross-Entropy loss |

References

- Garita-Durán, H.; Stöcker, J.P.; Kaliske, M. Deep learning-based system for automated damage detection and quantification in concrete pavement. Results Eng. 2025, 25, 104546.

- Abdelkader, M.F.; Hedeya, M.A.; Samir, E.; El-Sharkawy, A.A.; Abdel-Kader, R.F.; Moussa, A.; El-Sayed, E. EGY_PDD: A comprehensive multi-sensor benchmark dataset for accurate pavement distress detection and classification. Multimed. Tools Appl. 2025, 1–36.

- Wang, Y.; Yu, B.; Zhang, X.; Liang, J. Automatic extraction and evaluation of pavement three-dimensional surface texture using laser scanning technology. Autom. Construct. 2022, 141, 104410.

- Zhang, D.; Zou, Q.; Lin, H.; Xu, X.; He, L.; Gui, R.; Li, Q. Automatic pavement defect detection using 3D laser profiling technology. Autom. Construct. 2018, 96, 350–365.

- Zhang, Y.; Ma, Z.; Song, X.; Wu, J.; Liu, S.; Chen, X.; Guo, X. Road surface defects detection based on IMU sensor. IEEE Sens. J. 2022, 22, 2711–2721.

- Fox, A.; Kumar, B.V.K.V.; Chen, J.; Bai, F. Multi-lane pothole detection from crowdsourced undersampled vehicle sensor data. IEEE Trans. Mobile Comput. 2017, 16, 3417–3430.

- Wang, K.C.P.; Zhang, A.; Li, J.Q.; Fei, Y.; Chen, C.; Li, B. Deep Learning for Asphalt Pavement Cracking Recognition Using Convolutional Neural Network. In Airfield and Highway Pavements; ASCE: Reston, VA, USA, 2017; pp. 166–177.

- Eisenbach, M.; Stricker, R.; Seichter, D.; Amende, K.; Debes, K.; Sesselmann, M.; Ebersbach, D.; Stoeckert, U.; Gross, H.M. How to get pavement distress detection ready for deep learning? A systematic approach. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017.

- Kim, B.; Cho, S. Automated Vision-Based Detection of Cracks on Concrete Surfaces Using a Deep Learning Technique. Sensors 2018, 18, 3452.

- Zhang, A.; Wang, K.C.P.; Li, B.; Yang, E.; Dai, X.; Peng, Y.; Fei, Y.; Liu, Y.; Li, J.Q.; Chen, C. Automated Pixel-Level Pavement Crack Detection on 3D Asphalt Surfaces Using a Deep-Learning Network. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 805–819.

- Shuai, B.; Zuo, Z.; Wang, B.; Wang, G. Scene Segmentation with DAG-Recurrent Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1480–1493.

- Li, R.; Zheng, Y.; Zhang, C.; Duan, C.; Wang, B.; Atkinson, P.M. ABCNet: Attentive bilateral contextual network for efficient semantic segmentation of fine-resolution remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2021, 181, 84–98.

- Tang, W.; Huang, S.; Zhang, X.; Huangfu, L. PicT: A slim weakly supervised vision transformer for pavement distress classification. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 3076–3084.

- Xu, C.; Zhang, Q.; Mei, L.; Shen, S.; Ye, Z.; Li, D.; Yang, W.; Zhou, X. Dense multiscale feature learning transformer embedding cross-shaped attention for road damage detection. Electronics 2023, 12, 898.

- Tong, Z.; Ma, T.; Zhang, W.; Huyan, J. Evidential transformer for pavement distress segmentation. Comput. Aided Civ. Infrastruct. Eng. 2023, 38, 2317–2338.

- Yu, W.; Wang, X. MambaOut: Do we really need Mamba for vision? In Proceedings of the CVPR, Nashville, TN, USA, 11–15 June 2025; pp. 4484–4496.

- Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Road crack detection using deep convolutional neural network. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3708–3712.

- Liu, Y.; Yao, J.; Lu, X.; Xie, R.; Li, L. DeepCrack: A deep hierarchical feature learning architecture for crack segmentation. Neurocomputing 2019, 338, 139–153.

- Lin, C.; Tian, D.; Duan, X.; Zhou, J. TransCrack: Revisiting fine-grained road crack detection with a transformer design. Philos. Trans. R. Soc. A 2023, 381, 20220172.

- Zhang, S.; Wang, K.; Liu, Z.; Huang, M.; Huang, S. The Fine Feature Extraction and Attention Re-Embedding Model Based on the Swin Transformer for Pavement Damage Classification. Algorithms 2025, 18, 369.

- Kyem, B.A.; Asamoah, J.K.; Aboah, A. Context-CrackNet: A context-aware framework for precise segmentation of tiny cracks in pavement images. Constr. Build. Mater. 2025, 484, 141583.

- Sun, P.; Yang, L.; Yang, H.; Yan, B.; Wu, T.; Li, J. DSWMamba: A deep feature fusion Mamba network for detection of asphalt pavement distress. Constr. Build. Mater. 2025, 469, 140393.

- Tao, Z.; Gong, H.; Liu, L.; Cong, L.; Liang, H. A weakly-supervised deep learning model for end-to-end detection of airfield pavement distress. Int. J. Transp. Sci. Technol. 2025, 17, 67–78.

- Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A review on YOLOv8 and its advancements. In Proceedings of the International Conference on Data Intelligence and Cognitive Informatics, Tirunelveli, India, 8–10 December 2023; Springer: Singapore, 2024; pp. 529–545.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 6070–6079.

- Wang, Y.; Li, Y.; Wang, G.; Liu, X. Multi-scale attention network for single image super-resolution. In Proceedings of the CVPR, Seattle, WA, USA, 17–21 June 2024; pp. 5950–5960.

- Zheng, M.; Sun, L.; Dong, J.; Pan, J. SMFANet: A lightweight self-modulation feature aggregation network for efficient image super-resolution. In Proceedings of the ECCV, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 359–375.

- Li, X.; Hu, X.; Yang, J. Spatial group-wise enhance: Improving semantic feature learning in convolutional networks. arXiv 2019, arXiv:1905.09646.

- Sun, H.; Wen, Y.; Feng, H.; Zheng, Y.; Mei, Q.; Ren, D.; Yu, M. Unsupervised bidirectional contrastive reconstruction and adaptive fine-grained channel attention networks for image dehazing. Neural Netw. 2024, 176, 106314.

- Liu, H.; Yang, C.; Li, A.; Huang, S.; Feng, X.; Ruan, Z.; Ge, Y. Deep domain adaptation for pavement crack detection. IEEE Trans. Intell. Transp. Syst. 2022, 24, 1669–1681.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Wang, J.; Yu, X.; Gao, Y. Feature fusion vision transformer for fine-grained visual categorization. arXiv 2021, arXiv:2107.02341.

- He, J.; Chen, J.N.; Liu, S.; Kortylewski, A.; Yang, C.; Bai, Y.; Wang, C. TransFG: A transformer architecture for fine-grained recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36.

- Liu, X.; Zhang, C.; Zhang, L. Vision Mamba: A comprehensive survey and taxonomy. arXiv 2024, arXiv:2405.04404.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Parameter | Value |
|---|---|
| Learning Rate | 0.00005 |
| Weight Decay | 0.0005 |
| Batch Size | 32 |
| Epochs | 50 |
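The hyperparameters in the table above can be captured in a small configuration object. The following is a minimal sketch only: the names `TrainConfig` and `steps_per_epoch` are hypothetical, the training-set size is an illustrative placeholder, and the source does not state the optimizer or learning-rate schedule, so none is assumed here.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters copied from the table above (class name is hypothetical)."""
    learning_rate: float = 5e-5   # "Learning Rate" row: 0.00005
    weight_decay: float = 5e-4    # "Weight decay" row: 0.0005
    batch_size: int = 32          # "Batch Size" row
    epochs: int = 50              # "Epoch" row


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of optimizer steps per epoch, keeping the final partial batch."""
    return -(-num_samples // batch_size)  # ceiling division


cfg = TrainConfig()

# Hypothetical training-set size, chosen only to illustrate the arithmetic;
# the actual train/test split is not given in this section.
example_samples = 10_000
total_steps = cfg.epochs * steps_per_epoch(example_samples, cfg.batch_size)

print(asdict(cfg))
print("total optimizer steps:", total_steps)
```

Freezing the dataclass keeps the values from being changed by accident during a run, which makes it easier to log one configuration per experiment.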

| Dataset | Class | Number |
|---|---|---|
| CQU-BPMDD | Normal | 29,143 |
| CQU-BPMDD | Longitudinal crack, Transverse crack, Repair | 9,851 |
| CQU-BPDD | Normal | 43,330 |
| CQU-BPDD | Cementation fissures, Crack, Longitudinal crack, Loose, Massive crack, Mending, Transverse crack | 16,726 |
| Crack500-PDD | Normal | 268 |
| Crack500-PDD | Crack | 494 |

| Dataset | MDFF / DDE / STCL | AUC | Precision | F1-Score | Recall | FLOPs |
|---|---|---|---|---|---|---|
| CQU-BPMDD | √ | 92.4% | 90.3% | 90.5% | 90.6% | 2.31 G |
| CQU-BPMDD | √ √ | 96.0% | 92.95% | 92.97% | 93.01% | 8.34 G |
| CQU-BPMDD | √ √ | 95.89% | 92.9% | 92.82% | 93.0% | 2.31 G |
| CQU-BPMDD | √ √ | 95.8% | 92.6% | 92.56% | 92.8% | 8.34 G |
| CQU-BPMDD | √ √ √ | 96.25% | 93.03% | 93.03% | 93.11% | 8.34 G |
| Crack500-PDD | √ | 99.9% | 99.4% | 99.4% | 99.4% | 2.31 G |
| Crack500-PDD | √ √ | 99.9% | 99.63% | 99.58% | 99.6% | 8.34 G |
| Crack500-PDD | √ √ | 99.9% | 99.47% | 99.52% | 99.56% | 2.31 G |
| Crack500-PDD | √ √ | 99.9% | 99.62% | 99.6% | 99.6% | 8.34 G |
| Crack500-PDD | √ √ √ | 99.9% | 99.73% | 99.62% | 99.71% | 8.34 G |

| Dataset | MDFF / DDE / STCL | Accuracy | Precision | F1-Score | Recall | FLOPs |
|---|---|---|---|---|---|---|
| CQU-BPMDD | √ | 87.56% | 86.52% | 86.60% | 87.56% | 2.31 G |
| CQU-BPMDD | √ √ | 89.25% | 88.68% | 88.89% | 89.25% | 8.34 G |
| CQU-BPMDD | √ √ | 89.26% | 88.66% | 88.86% | 89.26% | 2.31 G |
| CQU-BPMDD | √ √ | 88.90% | 88.43% | 88.50% | 88.90% | 8.34 G |
| CQU-BPMDD | √ √ √ | 89.32% | 88.80% | 88.93% | 89.32% | 8.34 G |
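In the classification table above, the Recall column matches the Accuracy column in every row. That pattern is what support-weighted averaging of per-class recall produces, since the weighted sum of per-class recalls reduces to the overall fraction of correct predictions. The source does not state which averaging scheme was used, so the sketch below is only one plausible reading; the function name `weighted_metrics` and the toy labels are hypothetical.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall, f1) using support-weighted averaging."""
    n = len(y_true)
    support = Counter(y_true)      # true samples per class
    predicted = Counter(y_pred)    # predicted samples per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    accuracy = sum(correct.values()) / n
    precision = recall = f1 = 0.0
    for label, count in support.items():
        tp = correct[label]
        p = tp / predicted[label] if predicted[label] else 0.0
        r = tp / count
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = count / n         # class share of the evaluation set
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return accuracy, precision, recall, f1


# Toy labels, for illustration only (not taken from any dataset in the paper).
y_true = ["normal"] * 4 + ["crack"] * 3 + ["repair"] * 2 + ["normal"]
y_pred = ["normal", "normal", "normal", "crack",
          "crack", "crack", "normal",
          "repair", "normal",
          "normal"]

acc, prec, rec, f1 = weighted_metrics(y_true, y_pred)
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f} f1={f1:.4f}")
```

Under this averaging, weighted recall and accuracy always coincide for single-label classification, while weighted precision and F1 can differ from both, which is consistent with the pattern in the table.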

| Dataset | Model | AUC | Precision | F1-Score | Recall | FLOPs |
|---|---|---|---|---|---|---|
| CQU-BPMDD | MambaOut [16] | 92.4% | 90.3% | 90.5% | 90.6% | 2.31 G |
| CQU-BPMDD | DMTC [14] | 90.3% | 88.4% | 88.3% | 88.4% | 8.42 G |
| CQU-BPMDD | DDACDN [32] | 89.6% | 87.9% | 87.7% | 87.9% | 7.81 G |
| CQU-BPMDD | PicT [13] | 93.4% | 90.6% | 90.7% | 90.8% | 8.54 G |
| CQU-BPMDD | MDEM | 96.25% | 93.03% | 93.05% | 93.11% | 8.34 G |
| CQU-BPDD | MambaOut [16] | 92.5% | 90.4% | 90.4% | 90.5% | 2.31 G |
| CQU-BPDD | DMTC [14] | 90.8% | 88.7% | 88.1% | 88.6% | 8.42 G |
| CQU-BPDD | DDACDN [32] | 90.2% | 88.3% | 87.9% | 88.5% | 7.81 G |
| CQU-BPDD | PicT [13] | 93.6% | 90.1% | 90.3% | 90.4% | 8.54 G |
| CQU-BPDD | MDEM | 94.21% | 91.33% | 91.37% | 91.44% | 8.34 G |

| Dataset | Model | Accuracy | Precision | F1-Score | Recall |
|---|---|---|---|---|---|
| CQU-BPMDD | MobileNetV3 [36] | 83.15% | 81.91% | 82.23% | 83.1% |
| CQU-BPMDD | MobileNetV2 [35] | 83.56% | 81.88% | 81.71% | 83.6% |
| CQU-BPMDD | AlexNet [33] | 76.43% | 75.16% | 73.48% | 76.34% |
| CQU-BPMDD | VGG16 [34] | 80.48% | 80.63% | 80.45% | 80.48% |
| CQU-BPMDD | Swin-T [25] | 87.62% | 88.56% | 87.45% | 87.7% |
| CQU-BPMDD | ViT [40] | 87.08% | 86.96% | 86.67% | 87.1% |
| CQU-BPMDD | ViM [39] | 87.32% | 88.21% | 87.56% | 87.26% |
| CQU-BPMDD | MambaOut [16] | 87.56% | 86.52% | 86.6% | 87.56% |
| CQU-BPMDD | FFVT [37] | 82.56% | 82.85% | 81.62% | 82.42% |
| CQU-BPMDD | TransFG [38] | 80.36% | 80.87% | 80.45% | 80.36% |
| CQU-BPMDD | MDEM | 89.32% | 88.8% | 88.93% | 89.32% |

| Dataset | Model | Accuracy | Precision | F1-Score | Recall | FLOPs |
|---|---|---|---|---|---|---|
| CQU-BPMDD | MambaOut [16] | 87.56% | 86.52% | 86.6% | 87.56% | 2.31 G |
| CQU-BPMDD | DMTC [14] | 84.32% | 83.92% | 82.68% | 84.32% | 8.42 G |
| CQU-BPMDD | DDACDN [32] | 81.62% | 81.37% | 80.93% | 81.62% | 7.81 G |
| CQU-BPMDD | PicT [13] | 87.32% | 87.14% | 87.21% | 87.32% | 8.54 G |
| CQU-BPMDD | MDEM | 89.32% | 88.8% | 88.93% | 89.32% | 8.34 G |
| CQU-BPDD | MambaOut [16] | 87.37% | 88.61% | 87.63% | 87.35% | 2.31 G |
| CQU-BPDD | DMTC [14] | 84.32% | 83.92% | 82.68% | 84.3% | 8.42 G |
| CQU-BPDD | DDACDN [32] | 83.62% | 83.73% | 83.57% | 83.61% | 7.81 G |
| CQU-BPDD | PicT [13] | 88.58% | 88.14% | 87.85% | 88.55% | 8.54 G |
| CQU-BPDD | MDEM | 89.07% | 89.71% | 88.93% | 89.07% | 8.34 G |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Wang, K.; Li, P.; Huang, M.; Guo, J. MDEM: A Multi-Scale Damage Enhancement MambaOut for Pavement Damage Classification. Sensors 2025, 25, 5522. https://doi.org/10.3390/s25175522