1. Introduction

Medicinal plants play an irreplaceable role in traditional medicine because of their unique therapeutic properties. According to recent statistics, among the 2579 known medicinal plant species worldwide, 13% are listed as threatened on the International Union for Conservation of Nature (IUCN) Red List (2020) [1]. This global trend underscores the urgency of conserving wild medicinal plants in regions such as Xinjiang. Since the 1990s, over 60% of China’s grasslands have experienced degradation, compared with only 10% before the 1970s, and the rate of degradation continues to accelerate. In Northwest China, up to 95% of natural grasslands have undergone desertification or salinization as a result of environmental change and anthropogenic activity [2]. Such ecological degradation not only severely threatens the survival of local endangered medicinal plants but also poses significant challenges to socio-economic systems that depend on natural ecosystems.

Furthermore, Xinjiang harbors several rare medicinal plant species. Without timely and targeted conservation measures, many of these species face the risk of permanent extinction [3]. Accurate identification and classification of medicinal plants are therefore essential for both ecological conservation and the advancement of medical research.

Deep learning models provide strong support for the efficient identification and classification of plant species. However, their accuracy depends largely on the characteristics of the datasets used to train and evaluate them [4,5].

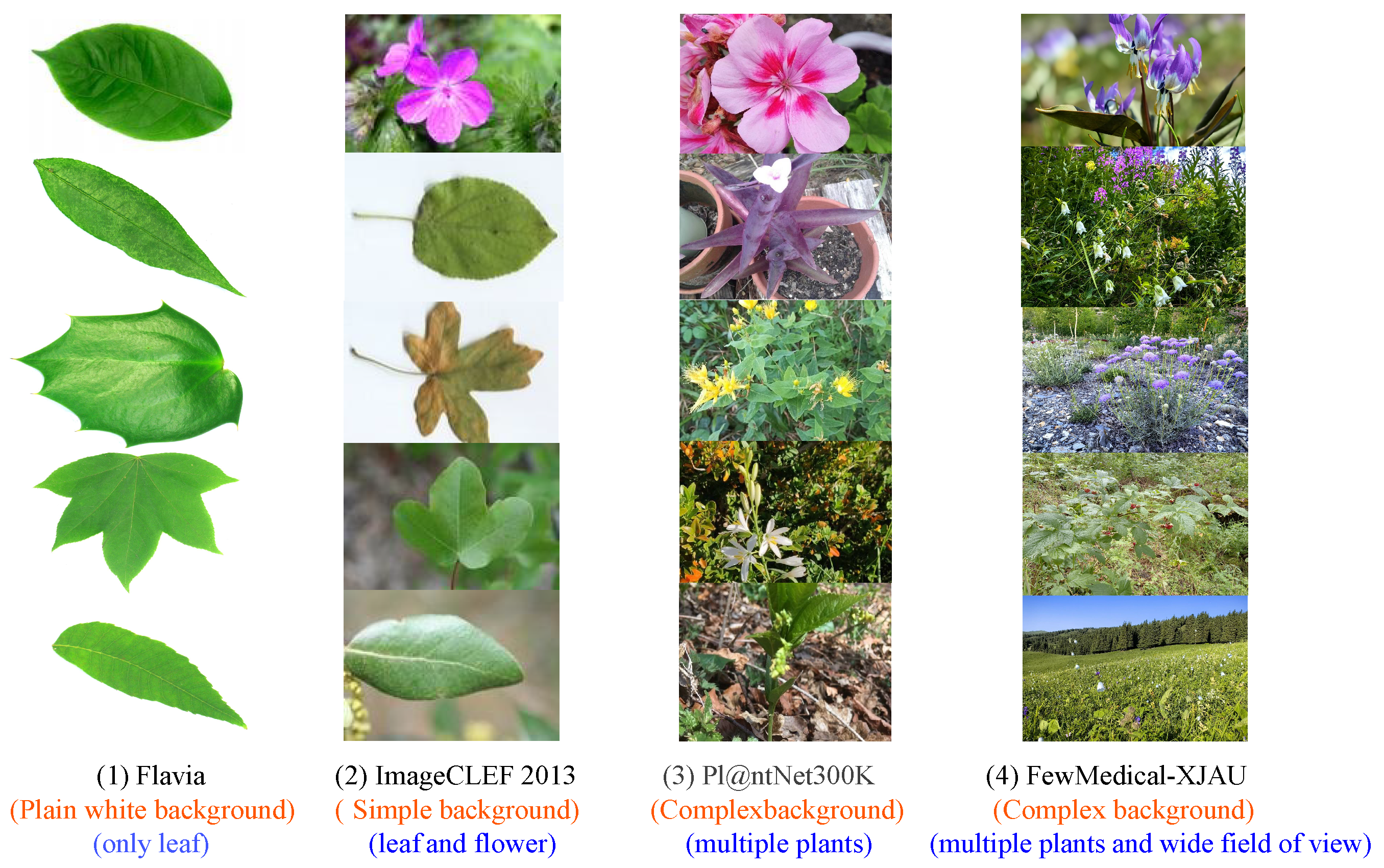

Early benchmark datasets such as Flavia (Wu et al., 2007) [6] included only leaf images of 32 plant species against plain backgrounds. The ImageCLEF 2013 dataset (Caputo et al., 2013) [7] expanded the number of categories to 250 and introduced diversity in backgrounds and plant organs, yet its backgrounds remained relatively simple and its classification hierarchy limited. Pl@ntNet-300K (Garcin et al., 2021) [8] significantly improved data diversity, but image quality varied, annotation information was limited, and background complexity was still insufficient.

Although plant image databases have demonstrated multidimensional technical advancements in their design and construction, challenges remain. In terms of data acquisition, early datasets such as Flavia (Wu et al., 2007) [

6], Swedish Leaf ((Söderkvist et al. 2001) [

9], and LeafSnap (Kumar et al., 2012 ) [

10] relied on uniform white backgrounds and single-organ (leaf) imaging, ensuring feature visibility and annotation consistency. ImageCLEF 2013 (Caputo et al., 2013) [

7] introduced a dual classification scheme by adding plant organ types alongside species labels, and differentiated between plain and simple scene backgrounds, thereby enhancing data diversity. Further, Pl@ ntNet-300K (Garcin et al., 2021) [

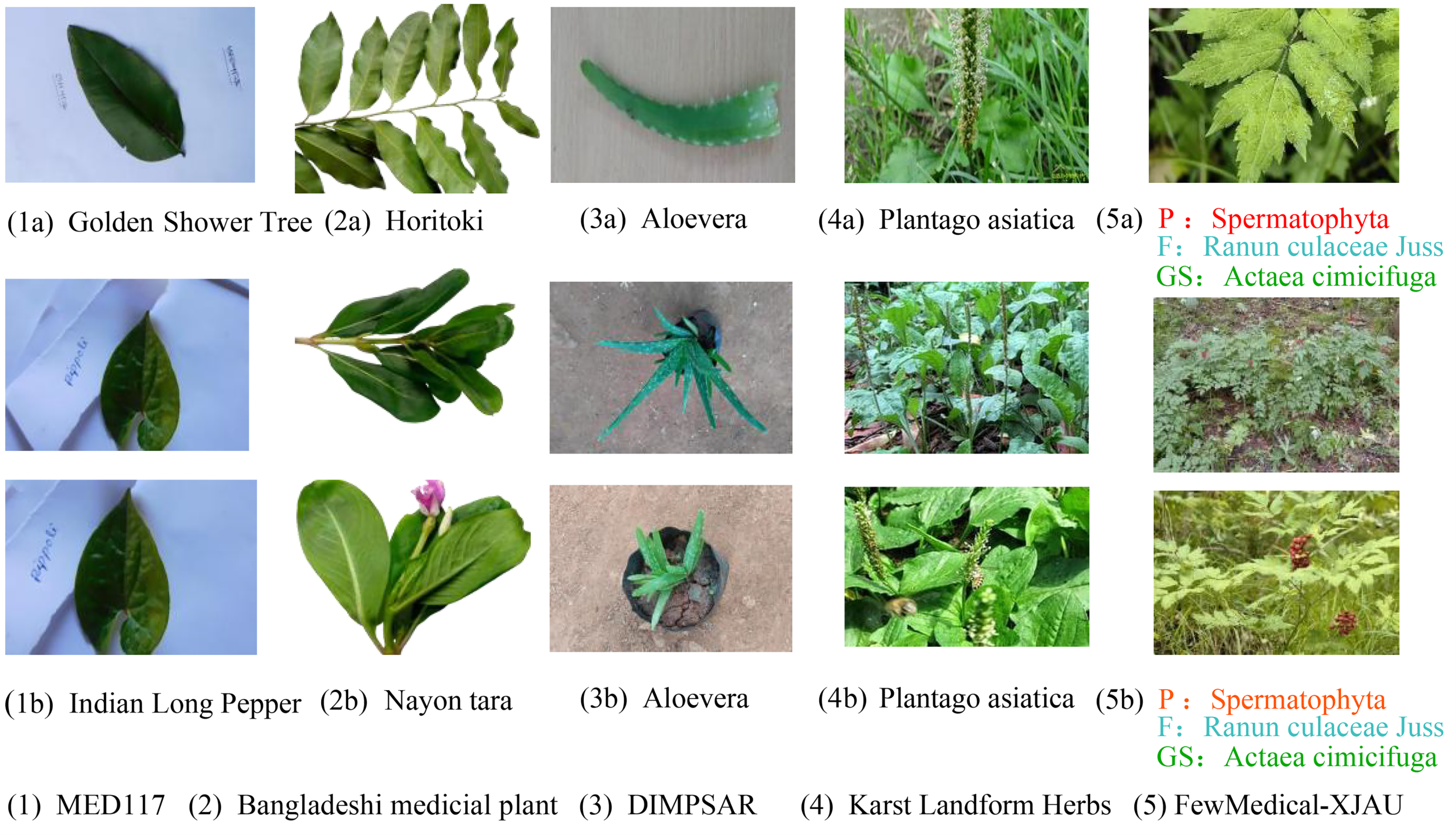

8] aggregated 300,000 images from worldwide distributions, covering over a thousand species, and significantly increased background complexity, although it still lacked wide-angle field-of-view samples. Among medicinal plant datasets, MED117 (Sarma et al., 2023) [

11] expanded intra-class samples by extracting frames from videos, but backgrounds and viewpoints remained highly consistent; the Bangladeshi medicinal plant dataset (Borkatulla et al., 2023) [

12] incorporated multiple leaf images to enrich morphological information; DIMPSAR (Pushpa et al., 2023) [

13] added whole-plant images; and Karst Landform Herbs (Tang et al., 2025) [

14] uniquely introduced complex natural backgrounds within medicinal datasets, combined with a dual-label system encompassing species and plant organs.

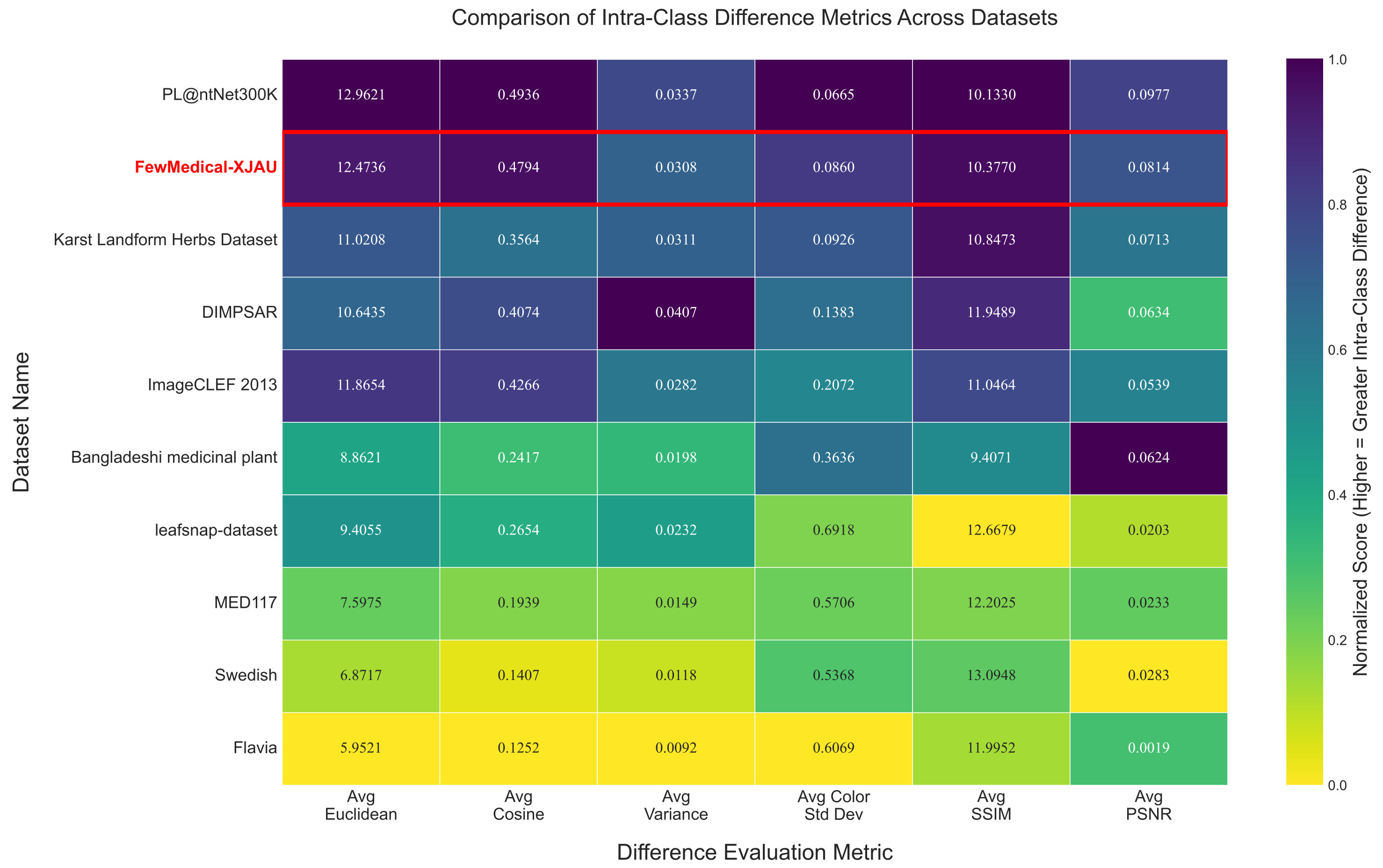

Despite these advances, database construction still faces several challenges. Some datasets lack sufficient species coverage, intra-class variability, and ecological diversity, which limits model generalization. Background complexity is polarized: backgrounds are either overly simplistic and fail to simulate real-world environments, or so cluttered in natural scenes that they introduce noise. Annotation schemes range from flat species-level labels to multi-layered metadata but lack standardization, and crowdsourced datasets are prone to mislabeling. Even expert-annotated datasets suffer from inadequate inter-expert consistency and a lack of long-term version control, which impedes cross-database integration and reproducible research.

An ideal plant image database should be optimized jointly for species coverage, environmental diversity, temporal span, annotation schema, and quality control in order to meet the high standards of fine-grained recognition and classification tasks.

Species Coverage and Ecological Representativeness

The database should encompass a wide range of plant species, including common, rare, endangered, and endemic taxa, supporting biodiversity monitoring, ecological conservation, and interdisciplinary research.

Environmental and Viewpoint Diversity

Images should be collected under diverse natural backgrounds, capturing variations in lighting, climate, and terrain to reflect authentic plant habitats and improve model generalization in complex environments. Multiple viewpoints (e.g., top, side, close-up, wide-angle) and scales (organ-level, individual-level, community-level) should be provided.

Temporal Dimension and Seasonal Variation

Data acquisition should span multiple seasons and time points to document the complete plant life cycle from germination to senescence, capturing dynamic changes in morphology, color, and structure, thereby facilitating phenotypic analysis and time-series modeling.

Scientific Annotation and Multimodal Extension

Annotations must adhere to strict botanical taxonomic standards, including hierarchical labels at least down to species and family levels, with fine-grained tags for plant parts and developmental stages when applicable. Multimodal metadata such as textual descriptions, structured attributes (e.g., leaf shape, flower color), geographic coordinates, and imaging conditions should be included to enrich contextual features for machine learning models.

Data Quality Control and Sustainable Management

Data collection should ensure image clarity and consistent exposure; annotations require multiple rounds of expert review and cross-validation to minimize errors and ambiguities. The database should implement version control and update mechanisms to ensure traceability and long-term usability, and should provide open interfaces that facilitate sharing across academia and industry and support reproducible research.

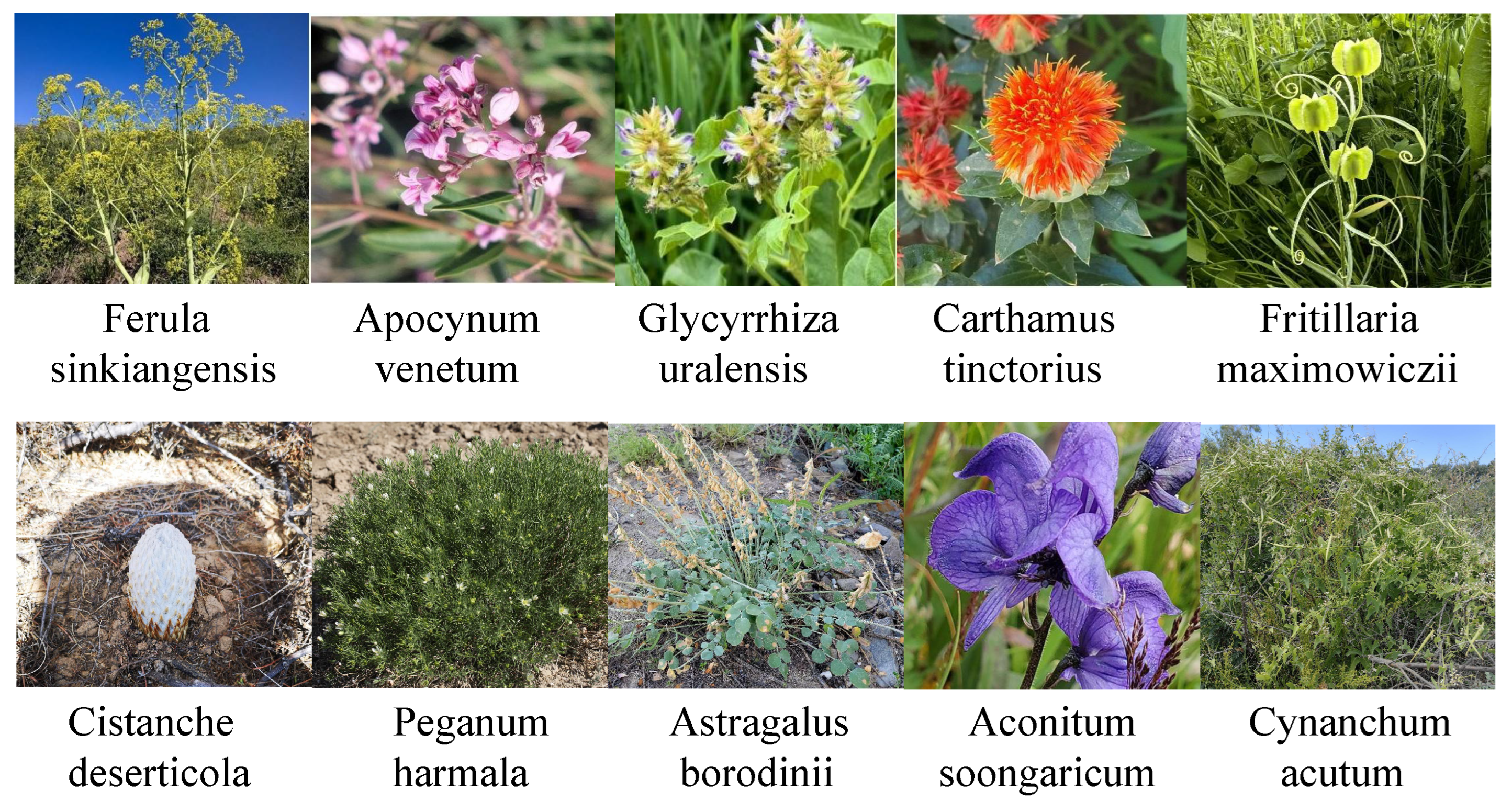

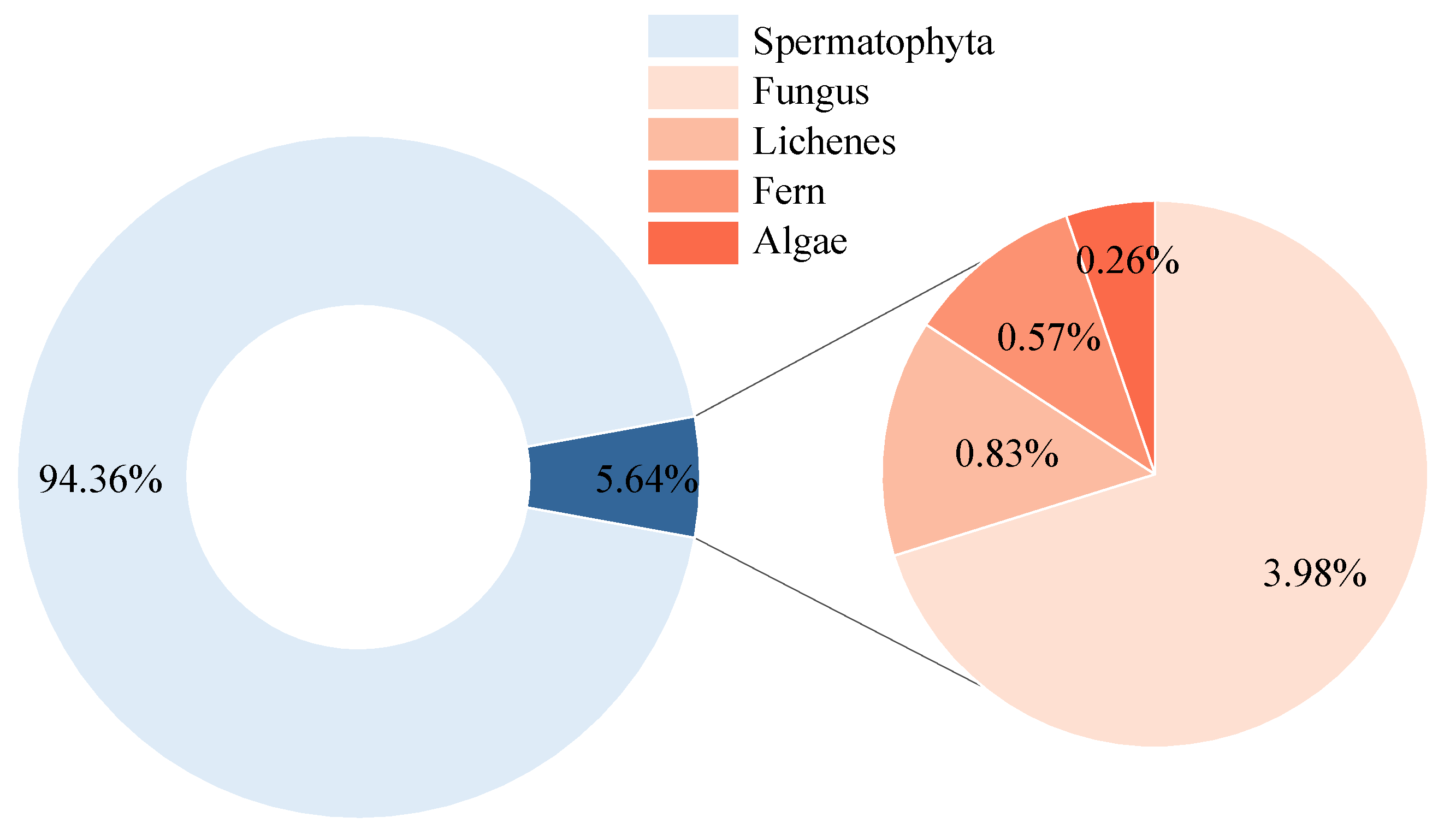

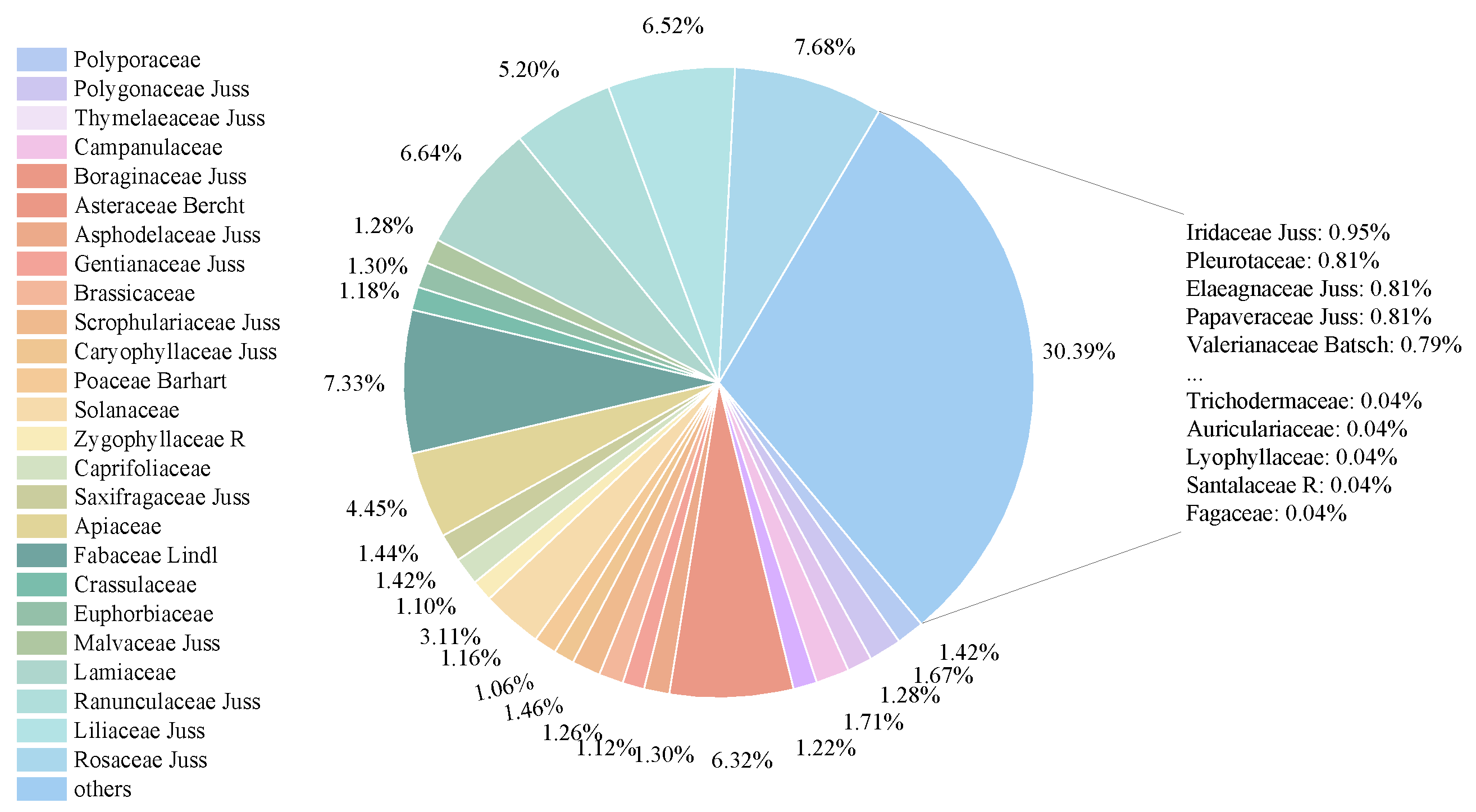

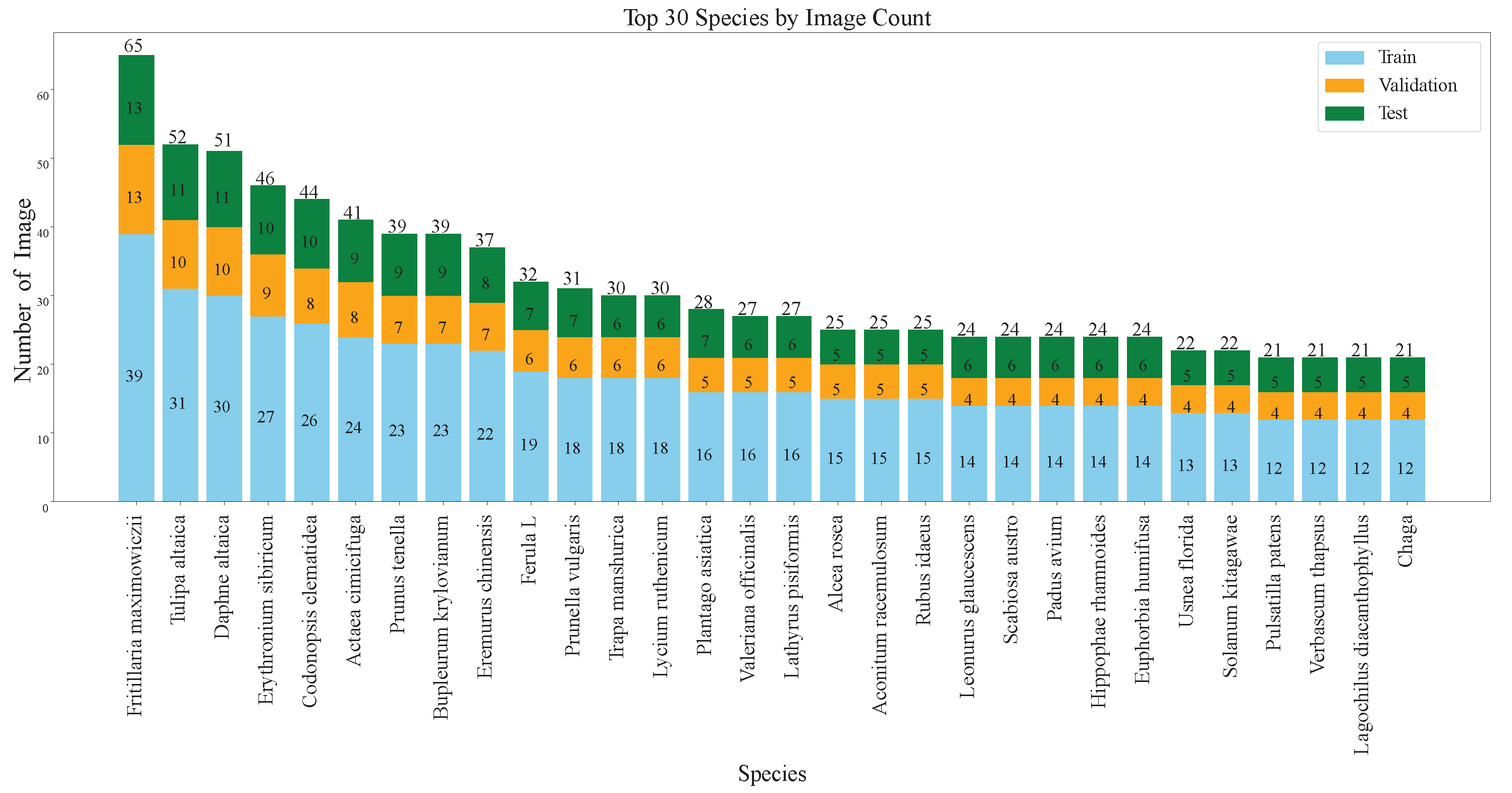

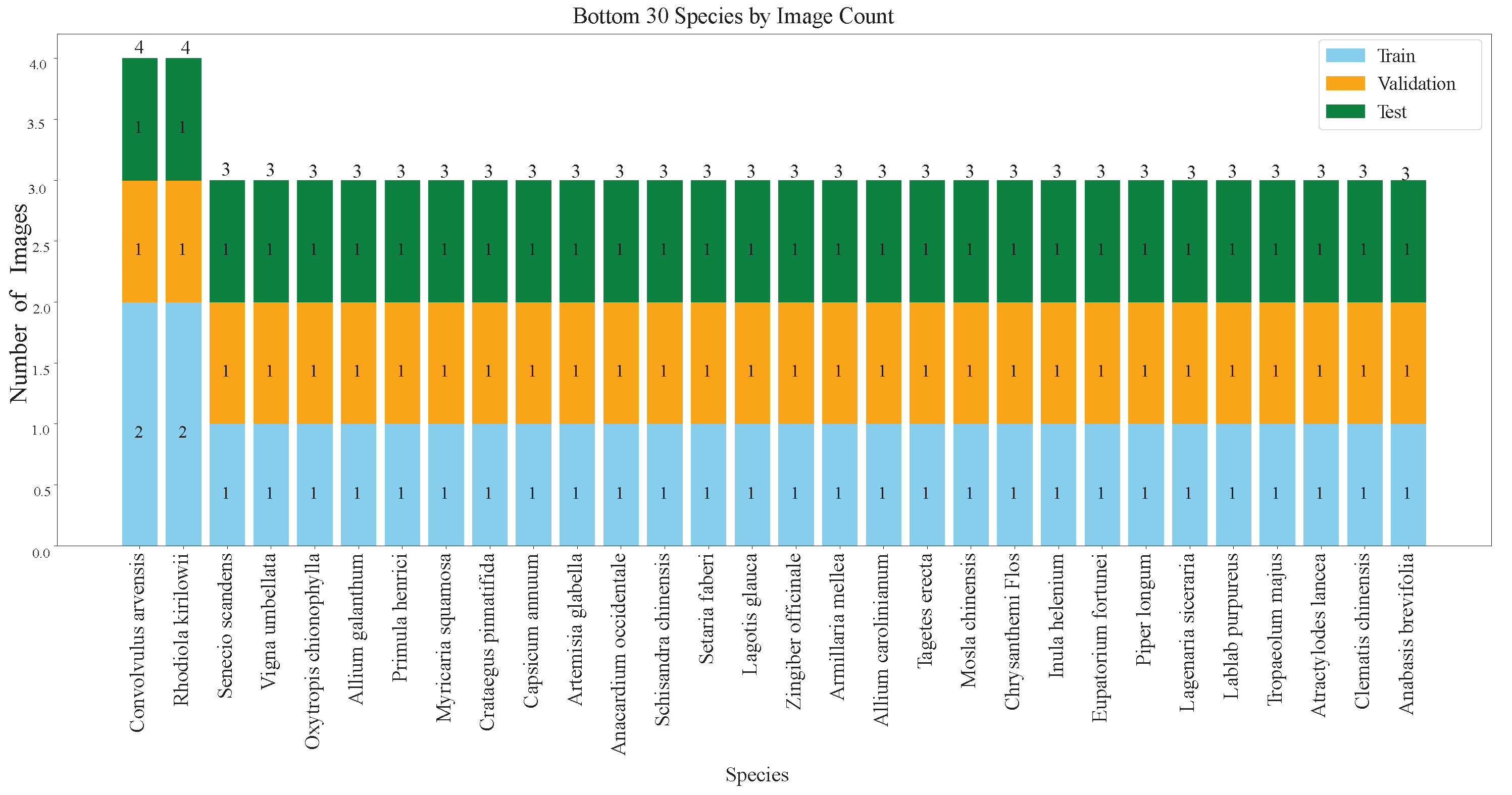

To address these challenges and provide a benchmark, we constructed FewMedical-XJAU, an image dataset of rare medicinal plants. The dataset features complex and varied backgrounds, rich species diversity, standardized annotations, and characteristics distinctive to the Xinjiang region. It contains 4992 images covering 540 plant species, captured in diverse environments and from multiple angles.

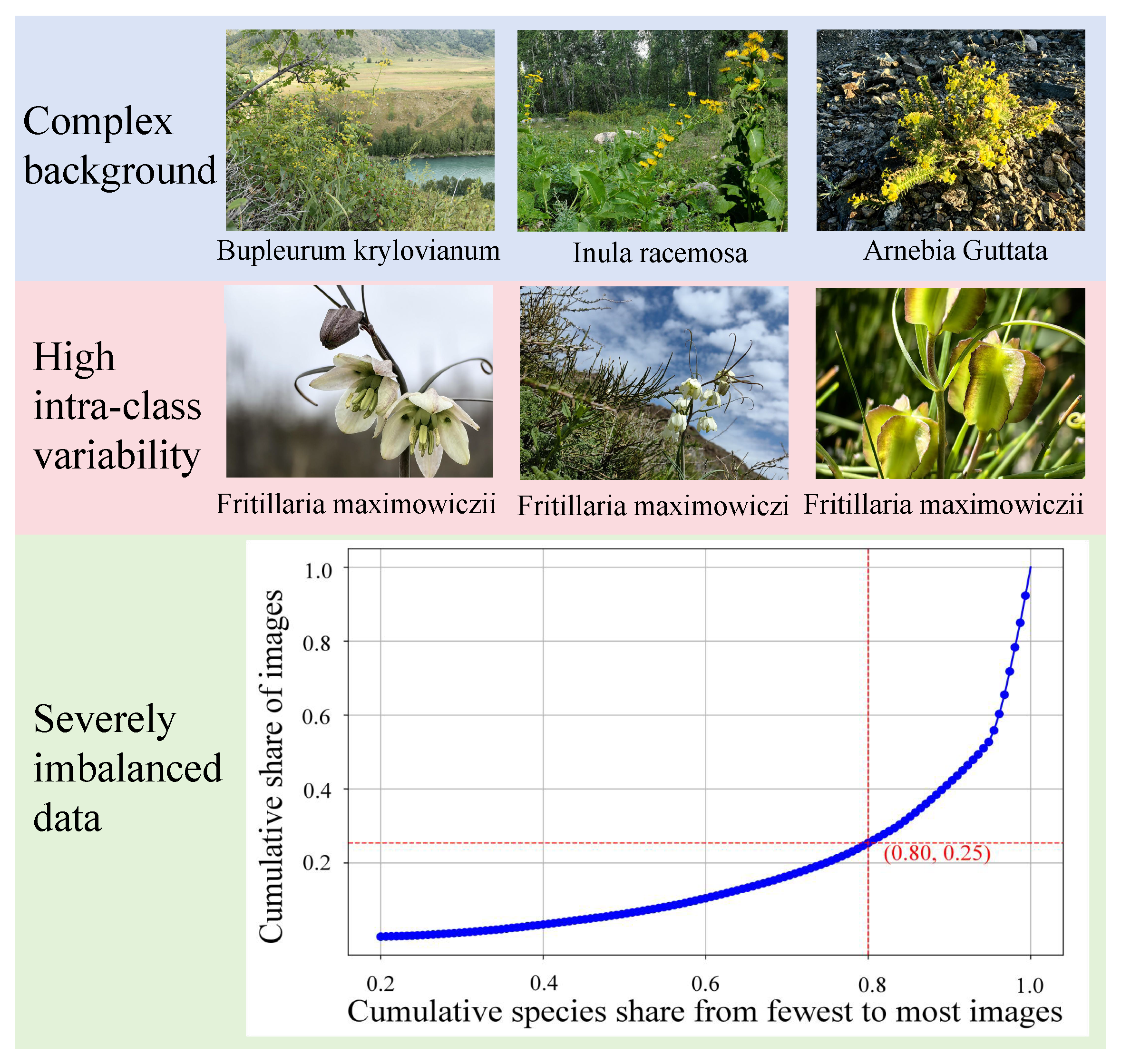

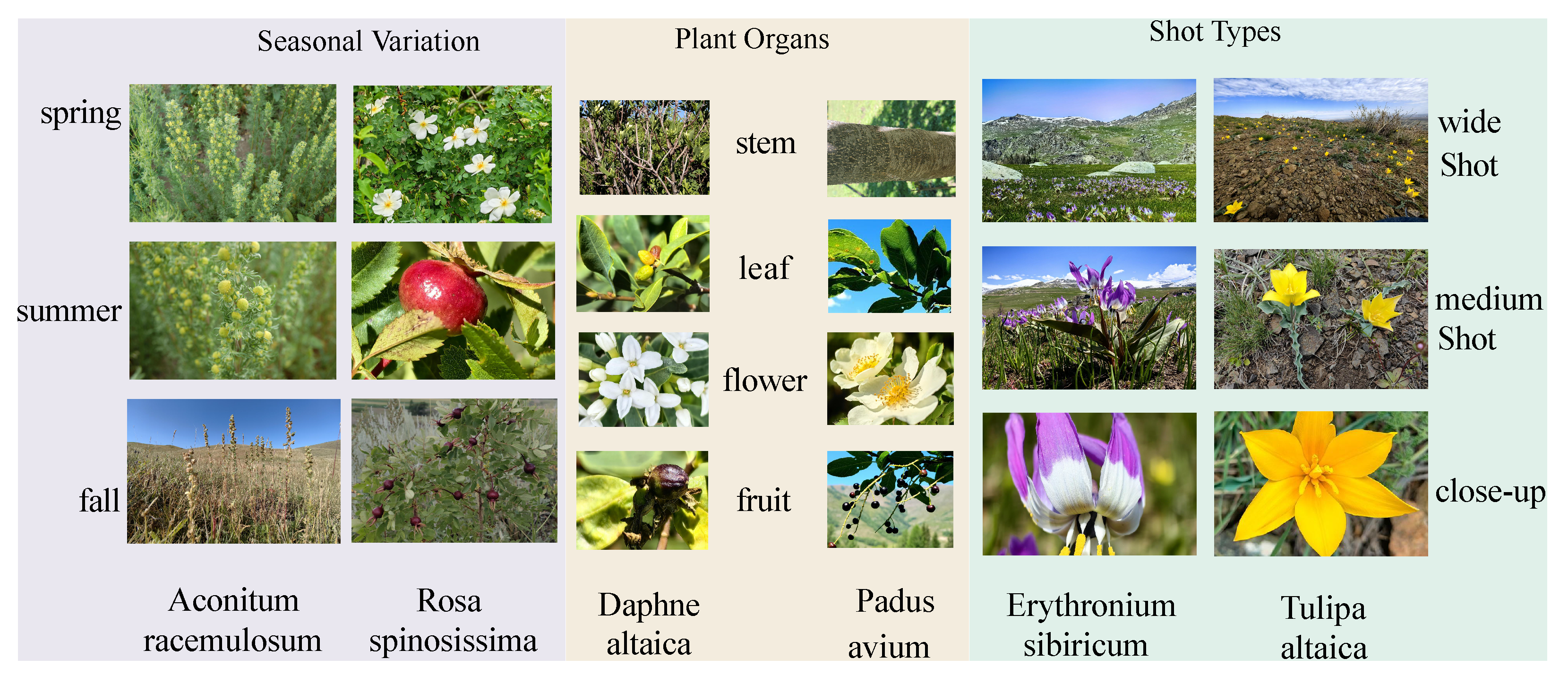

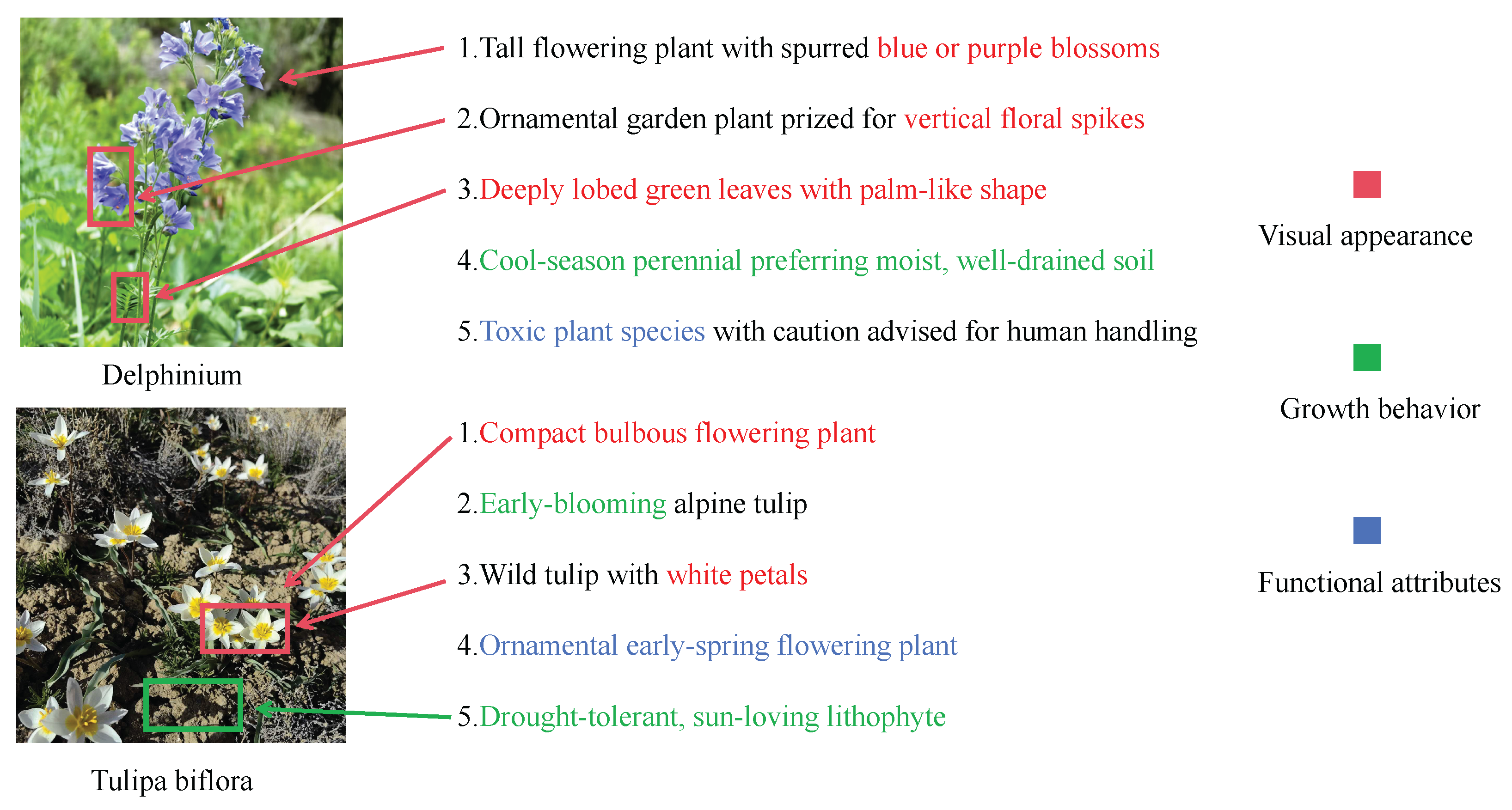

Figure 1 shows representative samples and the inherent characteristics of the rare-species data in the dataset.

With the rise of Convolutional Neural Networks (CNNs), feature extraction for plant images has gradually shifted from handcrafted methods to automated deep learning approaches. Early studies mainly combined global and local features while optimizing model architectures to balance performance and computational cost. In recent years, various deep learning methods have been widely applied to plant classification, including CNN-based hybrid feature models, lightweight network designs, CNNs enhanced with optimization algorithms, and hybrid approaches combining handcrafted and deep features. Meanwhile, Transformer architectures and their variants, such as Vision Transformer (ViT), have demonstrated strong potential in large-scale and complex tasks.

Moreover, for rare or endangered plants with extremely limited samples, few-shot and zero-shot learning methods have become a research focus. Existing approaches primarily include transfer learning strategies and meta-learning frameworks. Transfer learning performs well when domain discrepancies are small, but its knowledge transfer efficiency drops significantly when the source and target domains differ greatly; meta-learning methods excel in rapid task adaptation but still exhibit limitations in capturing local fine-grained details in fine-grained classification scenarios.

At the same time, multimodal approaches have introduced new paradigms for cross-modal knowledge transfer. A representative example is CLIP [15], which, through large-scale image–text contrastive learning, maps visual and textual data into a shared semantic space and demonstrates strong generalization in zero-shot and fine-grained classification tasks. Nevertheless, existing CLIP-based methods for plant recognition remain constrained by the limited semantic richness of category labels, a lack of contextual information, and inadequate characterization of subtle morphological differences against complex backgrounds. For plant categories exhibiting “large intra-class variation and small inter-class differences” in particular, there remains significant room for improvement in both accuracy and stability.

To address the challenges of plant recognition, namely large intra-class variation, small inter-class differences, complex natural backgrounds, and scarce samples, this paper proposes BDCC, a few-shot classification framework based on bimodal collaborative enhancement. BDCC integrates the covariance modeling of DeepBDC with the semantic priors of CLIP [15] through multimodal feature alignment and task-adaptive fusion, thereby enhancing discriminative power and robustness in fine-grained classification. It uses structured textual prompts to build category descriptions from multiple perspectives, including appearance and growth habits, and aligns them with visual features in a shared space, which mitigates confusion caused by complex backgrounds and visually similar categories. It also allocates weights to the visual and textual modalities dynamically, according to their performance within each task, to keep accuracy stable across diverse scenarios. Experimental results show that this design improves accuracy in fine-grained few-shot plant image classification.

The main contributions of this study are as follows:

- (1) A rare medicinal plant image dataset from Xinjiang is constructed, highlighting the region’s unique ecological characteristics. Owing to species scarcity, the dataset is naturally suited to few-shot learning research.
- (2) The images include complex backgrounds, multiple viewpoints, and seasonal variation, with large intra-class diversity and small inter-class differences, providing a solid foundation for fine-grained recognition under real-world conditions.
- (3) A novel few-shot learning framework, built on transfer learning, that integrates image and text features is proposed. A class-aware structured text prompt method and an adaptive fusion strategy are introduced to improve recognition robustness and accuracy.

4. Proposed Method

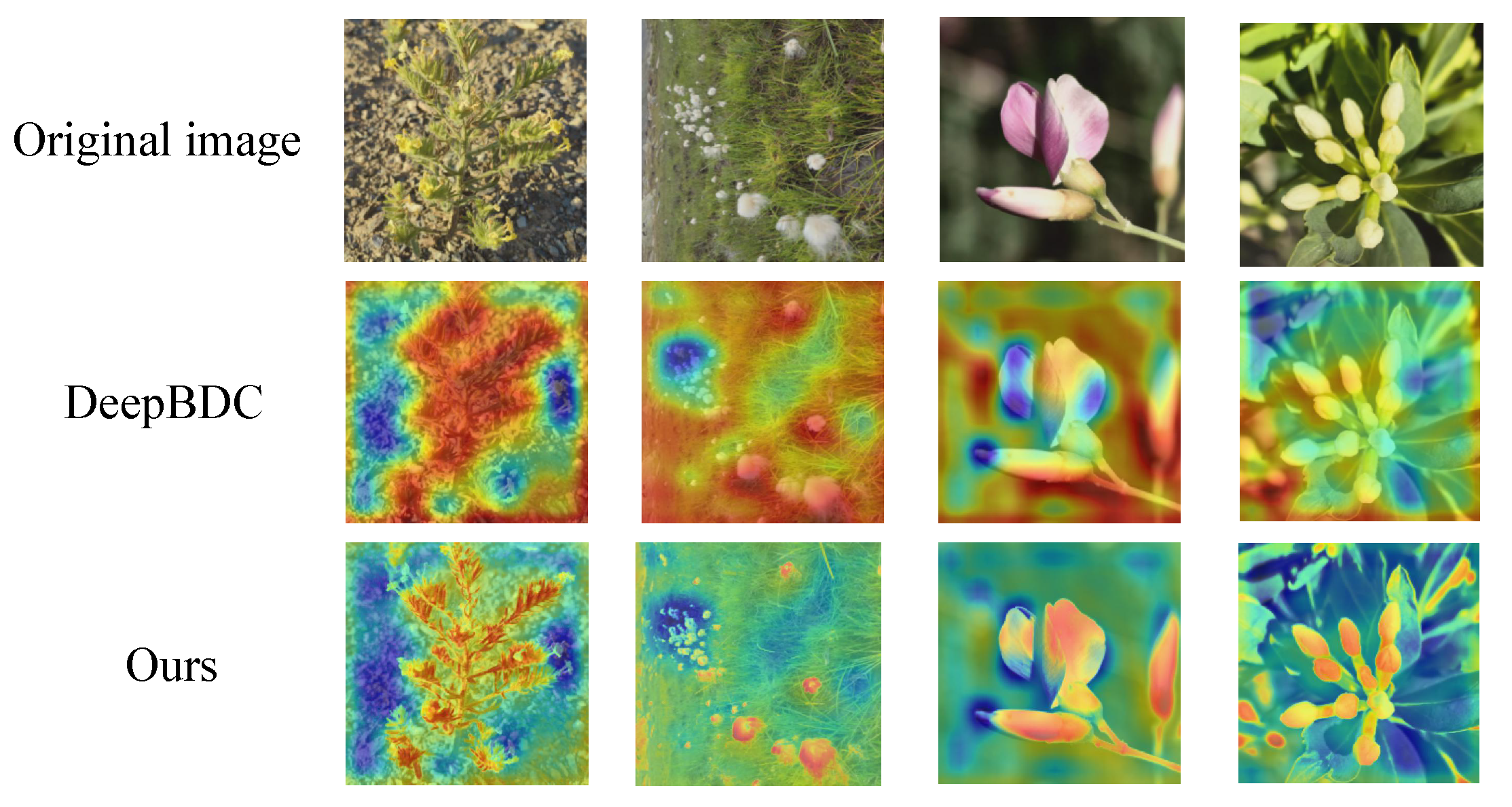

This section presents a few-shot image classification framework enhanced by cross-modal visual–textual integration. It builds on two components: DeepBDC [44], which captures intra-class distributions through covariance modeling, and CLIP [15], which leverages textual priors for fine-grained category discrimination. To address the large intra-class variance and subtle inter-class differences among plant species, a multimodal fusion strategy is incorporated within a deep metric learning framework.

To further enrich category representations and improve cross-modal generalization, we propose Class-Aware Structured Text Prompt Construction, which encodes rich semantic information, such as appearance characteristics, growth habits, and functional features, into the textual modality.

Finally, a task-adaptive fusion mechanism is introduced to dynamically weight each modality based on support set performance, thereby improving classification robustness under limited data conditions.

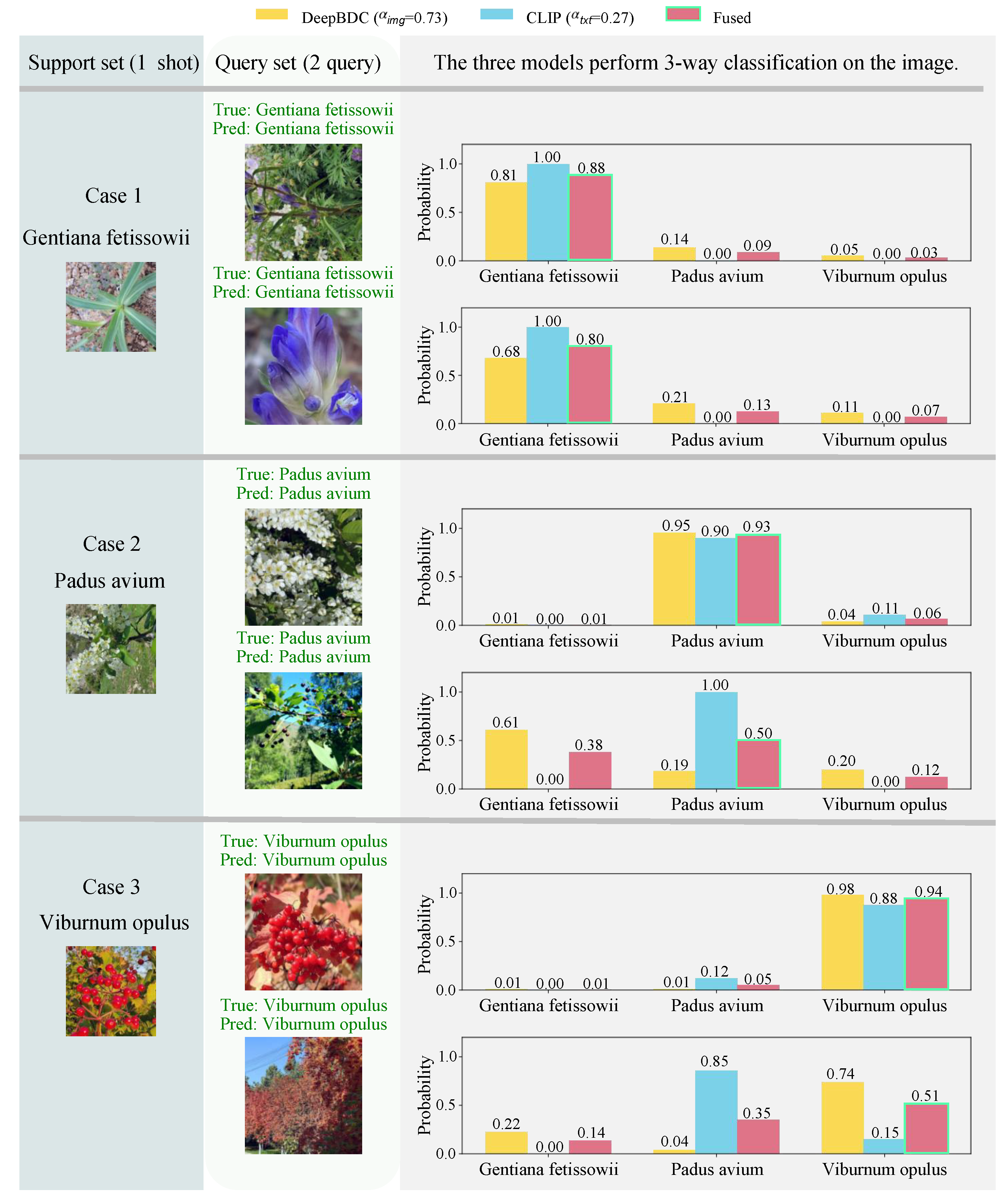

As illustrated in Figure 9, the top-left box shows how the CLIP [15] model aligns visual information with class-aware structured text, producing a probability distribution that represents the prediction of the text branch. The top-right box shows a second probability distribution generated by metric learning, which represents the prediction of the pure visual branch. Finally, a dynamic fusion mechanism combines the two probability distributions to yield the final prediction.

4.1. Multimodal Feature Fusion Strategy

DeepBDC [44] is a parameter-free spatial pooling layer that extracts second-order statistical representations of images, providing a more precise characterization of intra-class feature distributions. Given an input image $z$, a parameterized network $f_\theta$ maps it to a feature tensor of shape $h \times w \times d$, where $h$ and $w$ are the spatial dimensions and $d$ is the number of channels. This tensor is then reshaped into a matrix $X \in \mathbb{R}^{hw \times d}$, where each row corresponds to a feature vector from a spatial location.

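The Brownian Distance Covariance (BDC) representation of the image is computed in two steps. First, a Euclidean distance matrix $\hat{A} = (\hat{a}_{kl})$ is computed, where each element $\hat{a}_{kl}$ is the distance between feature vectors $\mathbf{x}_k$ and $\mathbf{x}_l$:

$$\hat{a}_{kl} = \lVert \mathbf{x}_k - \mathbf{x}_l \rVert_2 .$$

Second, the distance matrix $\hat{A}$ is double-centered to obtain the final BDC matrix $A$ (the “BDC matrices” shown in Figure 9):

$$A = \hat{A} - \frac{1}{n}\mathbf{1}\hat{A} - \frac{1}{n}\hat{A}\mathbf{1} + \frac{1}{n^{2}}\mathbf{1}\hat{A}\mathbf{1},$$

where $n$ is the number of vectors $\mathbf{x}_k$ and $\mathbf{1}$ denotes the $n \times n$ matrix of ones.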
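The two steps above can be sketched in plain Python as follows. This is a minimal, illustrative sketch under our own assumptions, not the authors' implementation: it takes an arbitrary list of feature vectors, computes their pairwise Euclidean distances, and double-centers the result by subtracting row and column means and adding back the grand mean. The function names are hypothetical, and any learnable scaling or dimensionality-reduction layers that a full DeepBDC implementation may use are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def distance_matrix(vectors: List[List[float]]) -> Matrix:
    """Pairwise Euclidean distances: a_hat[k][l] = ||x_k - x_l||."""
    n = len(vectors)
    return [[math.dist(vectors[k], vectors[l]) for l in range(n)] for k in range(n)]


def double_center(a_hat: Matrix) -> Matrix:
    """Subtract row and column means from every entry and add back the grand mean."""
    n = len(a_hat)
    row_means = [sum(row) / n for row in a_hat]
    col_means = [sum(a_hat[i][l] for i in range(n)) / n for l in range(n)]
    grand_mean = sum(row_means) / n
    return [
        [a_hat[k][l] - row_means[k] - col_means[l] + grand_mean for l in range(n)]
        for k in range(n)
    ]


def bdc_matrix(vectors: List[List[float]]) -> Matrix:
    """Hypothetical helper: BDC matrix of a set of feature vectors."""
    return double_center(distance_matrix(vectors))


# Toy example: three 2-D feature vectors with pairwise distances 5, 4 and 3.
A = bdc_matrix([[0.0, 0.0], [3.0, 4.0], [0.0, 4.0]])
for row in A:
    print([round(v, 3) for v in row])
```

The resulting matrix is symmetric, and every row and column sums to zero, as double centering guarantees.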

In our method (as illustrated in Figure 9), for each class in the support set (e.g., the “green” and “yellow” classes), we first generate a BDC matrix for every support sample using the backbone network and the DeepBDC module. Averaging the BDC matrices of all support samples of the same class element-wise then yields a compact class prototype matrix $P_c$ (the “prototype” shown in Figure 9), which represents that class:

$$P_c = \frac{1}{|S_c|}\sum_{z \in S_c} A_z ,$$

where $S_c$ is the set of support images of class $c$ and $A_z$ is the BDC matrix of image $z$.

The similarity between the query image’s BDC matrix $A_q$ and each class prototype $P_c$ is computed with the matrix trace operation, as defined in the original DeepBDC paper [44]:

$$\rho(A_q, P_c) = \operatorname{tr}\left(A_q^{\top} P_c\right).$$

This measures the dependency between the two distributions represented by the BDC matrices. Finally, the query is assigned to the class with the highest similarity score, enabling efficient and accurate classification with strong discriminative power and generalization capability under few-shot learning settings.
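The prototype construction and trace-based scoring described above can be illustrated with a small sketch. It assumes the BDC matrices have already been computed, uses toy 2 × 2 matrices in place of real ones, and relies on the identity $\operatorname{tr}(A^{\top}B) = \sum_{i,j} a_{ij} b_{ij}$ to avoid forming the matrix product. The function names and the dictionary layout of the support set are hypothetical.

```python
from typing import Dict, List

Matrix = List[List[float]]


def class_prototype(bdc_matrices: List[Matrix]) -> Matrix:
    """Element-wise mean of the support-set BDC matrices of one class."""
    k = len(bdc_matrices)
    rows, cols = len(bdc_matrices[0]), len(bdc_matrices[0][0])
    return [
        [sum(m[i][j] for m in bdc_matrices) / k for j in range(cols)]
        for i in range(rows)
    ]


def trace_similarity(a: Matrix, b: Matrix) -> float:
    """tr(A^T B), computed as the sum of element-wise products."""
    return sum(x * y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def classify(query: Matrix, support: Dict[str, List[Matrix]]) -> str:
    """Assign the query to the class whose prototype scores highest."""
    scores = {
        name: trace_similarity(query, class_prototype(matrices))
        for name, matrices in support.items()
    }
    return max(scores, key=scores.get)


# Toy 2-way, 2-shot episode with made-up "BDC matrices".
support = {
    "green": [[[1.0, 0.0], [0.0, 1.0]], [[3.0, 0.0], [0.0, 1.0]]],
    "yellow": [[[0.0, 1.0], [1.0, 0.0]], [[0.0, 3.0], [3.0, 0.0]]],
}
query = [[1.0, 0.0], [0.0, 2.0]]
print(classify(query, support))  # green
```

Averaging before scoring means each query is compared against one matrix per class rather than against every support sample, which keeps the cost of an episode proportional to the number of classes.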

However, in scenarios involving visually similar yet semantically distinct categories, the model may suffer from misclassification. This limitation stems primarily from the exclusive reliance on visual modality, which lacks the incorporation of semantic priors related to class identity.

To compensate for the limited semantic understanding of the image modality, this study introduces CLIP [15] (Contrastive Language–Image Pretraining), which leverages semantic prior knowledge from natural-language prompts to construct a joint vision–language feature space.

For instance, given a prompt such as “a picture of a [plant species]”, CLIP [15] generates a category embedding that encapsulates the relevant semantic information. These embeddings complement the class labels and help the model differentiate visual features under semantic guidance.
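To make the role of these category embeddings concrete, the sketch below turns them into a probability distribution over classes for one image, in the style of CLIP zero-shot classification: both embeddings are L2-normalized, their cosine similarities are multiplied by a temperature (logit scale), and a softmax is applied. The embeddings are assumed to be precomputed by CLIP's image and text encoders; here they are replaced by toy 3-dimensional vectors, and the scale of 100 and the class names are assumptions made for illustration.

```python
import math
from typing import Dict, List


def l2_normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def text_branch_probs(
    image_emb: List[float],
    class_text_embs: Dict[str, List[float]],
    logit_scale: float = 100.0,  # assumed temperature, not taken from the paper
) -> Dict[str, float]:
    """Softmax over scaled cosine similarities between one image and each class text."""
    img = l2_normalize(image_emb)
    names = list(class_text_embs)
    logits = [
        logit_scale * sum(a * b for a, b in zip(img, l2_normalize(class_text_embs[n])))
        for n in names
    ]
    return dict(zip(names, softmax(logits)))


# Toy embeddings standing in for CLIP encoder outputs (hypothetical classes).
probs = text_branch_probs(
    [0.9, 0.1, 0.0],
    {"Delphinium": [1.0, 0.0, 0.0], "other_species": [0.0, 1.0, 0.0]},
)
print(probs)
```

A large logit scale sharpens the distribution, so small differences in cosine similarity translate into confident predictions.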

Building on this, we propose a unified representation method, BDCC (Bilinear Deep Cross-modal Composition), that integrates image features extracted by DeepBDC [44] and text features encoded by CLIP [15] within a deep metric learning framework. Both modalities are first projected into a shared embedding space for semantic alignment, after which an efficient fusion mechanism jointly models visual and textual information to exploit their complementary strengths.

4.2. Class-Aware Structured Text Prompt Construction

To enhance the model’s understanding of category-level semantics, we propose a Class-Aware Structured Text Prompt Construction strategy that replaces the single-template or prompt-ensemble methods conventionally used with CLIP [15]. Specifically, as illustrated in Figure 9, the Fine-grained Semantic Attributes Mining module uses GPT-4.1 mini to generate multiple semantically structured textual descriptions for each category, covering aspects such as visual appearance, functional usage, and growth behavior. The generated descriptions are carefully reviewed for correctness and consistency. Finally, five semantically relevant textual prompts are designed for each category and stored in JSON format, as shown in Figure 10.

Unlike standard CLIP [15] prompt templates such as “a photo of a class_name” or “This is a photo of a class_name”, our method concatenates the five category-specific descriptions with English commas into a single, semantically rich compound sentence. For example, for the category Delphinium, the resulting prompt is:

“Tall flowering plant with spurred blue or purple blossoms, ornamental garden plant prized for vertical floral spikes, deeply lobed green leaves with palm-like shape, cool-season perennial preferring moist, well-drained soil, toxic plant species with caution advised for human handling.”

This strategy compresses and integrates category-related semantic information, so that each prompt conveys not only the basic class name but also rich contextual and inter-class discriminative features [45].

The text feature preparation module implements this process in three steps:

- (1) Reading the multiple textual descriptions of each category from a predefined JSON file.
- (2) Concatenating these descriptions into a compound sentence using English commas.
- (3) Encoding the concatenated text with a pretrained language model to generate semantic embedding vectors.
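The first two steps of this module can be sketched as below. The JSON layout (a mapping from class name to a list of description strings) is an assumption for illustration, since the exact schema of the file in Figure 10 is not specified in the text; the descriptions are the five Delphinium phrases from the example prompt. The function name is hypothetical, and trailing periods are stripped before joining so that the compound sentence ends with a single period.

```python
import json
from typing import Dict, List

# Assumed file layout: {"class_name": ["description 1", ..., "description 5"]}.
EXAMPLE_JSON = json.dumps({
    "Delphinium": [
        "Tall flowering plant with spurred blue or purple blossoms",
        "ornamental garden plant prized for vertical floral spikes",
        "deeply lobed green leaves with palm-like shape",
        "cool-season perennial preferring moist, well-drained soil",
        "toxic plant species with caution advised for human handling",
    ]
})


def build_compound_prompts(json_text: str) -> Dict[str, str]:
    """Join each class's descriptions with English commas into one sentence."""
    descriptions: Dict[str, List[str]] = json.loads(json_text)
    prompts = {}
    for class_name, parts in descriptions.items():
        cleaned = [p.strip().rstrip(".") for p in parts]
        prompts[class_name] = ", ".join(cleaned) + "."
    return prompts


prompts = build_compound_prompts(EXAMPLE_JSON)
print(prompts["Delphinium"])
```

The resulting strings would then be tokenized and passed to the text encoder in step (3). If that encoder is CLIP, its tokenizer has a fixed context length of 77 tokens, so long compound sentences are worth checking for truncation; in OpenAI's `clip` package, `clip.tokenize` raises an error for over-length inputs unless `truncate=True` is passed.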

4.3. Task-Adaptive Fusion Strategy Based on Support Set Performance with Weight Smoothing

4.3.1. Dynamic Weight Computation

For each test task (episode), the predictions of DeepBDC [44] and CLIP [15] are first obtained on the support set, and their cross-entropy losses with respect to the ground-truth labels are computed, denoted $\mathcal{L}_{\mathrm{img}}$ and $\mathcal{L}_{\mathrm{txt}}$, respectively. To reflect the relative reliability of the two modalities in the current task, the raw weights are calculated as the inverses of the corresponding losses:

$$w_{\mathrm{img}} = \frac{1}{\mathcal{L}_{\mathrm{img}} + \epsilon}, \qquad w_{\mathrm{txt}} = \frac{1}{\mathcal{L}_{\mathrm{txt}} + \epsilon},$$

where $\epsilon$ is a small constant that prevents division by zero. The weights are then normalized to obtain the fusion coefficients:

$$\alpha_{\mathrm{img}} = \frac{w_{\mathrm{img}}}{w_{\mathrm{img}} + w_{\mathrm{txt}}}, \qquad \alpha_{\mathrm{txt}} = \frac{w_{\mathrm{txt}}}{w_{\mathrm{img}} + w_{\mathrm{txt}}}.$$

To avoid excessive bias toward a single modality, $\alpha_{\mathrm{img}}$ and $\alpha_{\mathrm{txt}}$ are smoothed, which improves stability across tasks. These weights are not fixed during training; they are computed in real time for each test task from support-set performance and used to fuse the query-set predictions. This per-task dynamic weighting follows the concept of dynamic multimodal decision weighting in few-shot learning [46,47]: the final prediction relies more on the modality that performs better on the current task’s support set, enabling efficient and adaptive multimodal fusion.
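A minimal sketch of this per-episode weighting is shown below, assuming each branch's support-set predictions are already available as probability vectors. The text does not give the value of the small constant that prevents division by zero, so `1e-8` is an assumption; the probabilities are toy numbers rather than outputs of DeepBDC or CLIP, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def cross_entropy(probs: List[List[float]], labels: List[int]) -> float:
    """Mean negative log-likelihood of the ground-truth support labels."""
    return -sum(math.log(max(p[y], 1e-12)) for p, y in zip(probs, labels)) / len(labels)


def dynamic_weights(loss_img: float, loss_txt: float, eps: float = 1e-8) -> Tuple[float, float]:
    """Inverse-loss weights, normalized so that they sum to one."""
    w_img = 1.0 / (loss_img + eps)
    w_txt = 1.0 / (loss_txt + eps)
    total = w_img + w_txt
    return w_img / total, w_txt / total


# Toy 2-way, 1-shot support set: labels 0 and 1.
labels = [0, 1]
support_probs_img = [[0.9, 0.1], [0.2, 0.8]]  # confident, correct image branch
support_probs_txt = [[0.6, 0.4], [0.5, 0.5]]  # hesitant text branch

alpha_img, alpha_txt = dynamic_weights(
    cross_entropy(support_probs_img, labels),
    cross_entropy(support_probs_txt, labels),
)
print(round(alpha_img, 3), round(alpha_txt, 3))
```

Because the weights are inverse losses, the branch with the lower support-set loss (here the image branch) receives the larger share, and two equally good branches receive equal weights.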

The method also supports unimodal operation: when text prompts are unavailable, only the image modality is used; conversely, when image data are missing or of poor quality, the model operates solely on the text modality. This improves practical applicability and robustness under varying modality availability.

In the bottom panel of Figure 9, solid green lines indicate that support-set images are processed by the CLIP [15] image encoder, while the corresponding species names are converted into textual descriptions via Fine-grained Semantic Attributes Mining (see Section 4.2). These descriptions are stored as JSON files and encoded with the CLIP [15] text encoder; the green arrow represents the resulting BDCC text-branch weights for the support set. Red dashed lines indicate the BDCC image-branch weight computation, purple dashed lines denote the query-set predictions of the BDCC text branch, and blue arrows indicate the BDCC image-branch predictions. Finally, the weights of both branches are applied to their respective predictions and fused into the final output, as indicated by the black arrow.

4.3.2. Weight Smoothing Mechanism

In practical tasks, the small support set may cause the loss values to fluctuate strongly, which can bias the fusion weights heavily toward a single modality and compromise overall prediction stability. To mitigate this, a weight smoothing mechanism linearly interpolates the dynamic fusion weights $\alpha_{\mathrm{img}}$ and $\alpha_{\mathrm{txt}}$ toward an equal split:

$$\tilde{\alpha}_{\mathrm{img}} = \lambda\,\alpha_{\mathrm{img}} + \frac{1-\lambda}{2}, \qquad \tilde{\alpha}_{\mathrm{txt}} = \lambda\,\alpha_{\mathrm{txt}} + \frac{1-\lambda}{2},$$

where $\lambda \in [0, 1]$ is the smoothing factor (set to 0.5 in this work). When $\lambda = 1$, the fusion is fully dynamic; when $\lambda = 0$, it reduces to an equal average. This mechanism mitigates the instability caused by extreme weight allocations.
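The interpolation can be checked numerically with the short sketch below (the function name is hypothetical). Because each raw weight lies between 0 and 1, a weight smoothed with factor `lam` lies between (1 - lam)/2 and (1 + lam)/2, and the two smoothed weights still sum to one; with the paper's setting of 0.5, neither modality can receive less than 0.25 or more than 0.75 of the total weight.

```python
from typing import Tuple


def smooth_weights(alpha_img: float, alpha_txt: float, lam: float = 0.5) -> Tuple[float, float]:
    """Interpolate dynamic weights toward the equal split (0.5, 0.5)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("smoothing factor must lie in [0, 1]")
    return (
        lam * alpha_img + (1.0 - lam) * 0.5,
        lam * alpha_txt + (1.0 - lam) * 0.5,
    )


# An extreme allocation from a noisy support set.
raw = (0.95, 0.05)
for lam in (1.0, 0.5, 0.0):
    print(lam, smooth_weights(*raw, lam=lam))
```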

4.3.3. Fusion Prediction and Classification

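With the smoothed weights $\tilde{\alpha}_{\mathrm{img}}$ and $\tilde{\alpha}_{\mathrm{txt}}$ obtained, the query-set predictions of DeepBDC [44] and CLIP [15] are converted to probabilities with softmax and fused by weighted summation:

$$P = \tilde{\alpha}_{\mathrm{img}}\,P_{\mathrm{img}} + \tilde{\alpha}_{\mathrm{txt}}\,P_{\mathrm{txt}},$$

where $P_{\mathrm{img}}$ and $P_{\mathrm{txt}}$ are the class probability distributions predicted by the image and text models on the query set, respectively. The final predicted class is the category with the highest probability in $P$:

$$\hat{y} = \arg\max_{c}\, P(c).$$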
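The fusion step can be sketched as follows, assuming each branch outputs one raw score (logit) per class for a query image. The scores and weights are toy values and the function name is hypothetical; the example shows how the same two branch outputs yield different predictions depending on which modality the support set favored.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # shift by the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_predictions(
    logits_img: List[float],
    logits_txt: List[float],
    alpha_img: float,
    alpha_txt: float,
) -> Tuple[List[float], int]:
    """Weighted sum of the two softmax distributions and its argmax class index."""
    p_img = softmax(logits_img)
    p_txt = softmax(logits_txt)
    fused = [alpha_img * a + alpha_txt * b for a, b in zip(p_img, p_txt)]
    predicted = max(range(len(fused)), key=fused.__getitem__)
    return fused, predicted


logits_img = [2.0, 1.0, 0.0]  # image branch favors class 0
logits_txt = [0.0, 3.0, 0.0]  # text branch favors class 1

print(fuse_predictions(logits_img, logits_txt, 0.7, 0.3)[1])  # 0
print(fuse_predictions(logits_img, logits_txt, 0.3, 0.7)[1])  # 1
```

Because the weights sum to one, the fused vector remains a valid probability distribution, so the same argmax rule applies whether one or both branches are active.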