MineVisual: A Battery-Free Visual Perception Scheme in Coal Mine

Abstract

1. Introduction

- How to achieve stable and efficient energy harvesting from the mine ventilation system to power computation-intensive visual sensing under fluctuating wind conditions;

- How to design a lightweight ML model that dynamically adapts its computational complexity in real time to maintain accuracy under constrained and variable power availability.

- The performance of lightweight deep neural network (DNN) models (including accuracy, inference latency, and energy efficiency) when executing critical safety monitoring tasks in the resource-constrained underground coal mine environment;

- The feasibility and effectiveness of the energy-aware adaptive pruning mechanism on ultra-low-power microcontroller platforms under real-world, fluctuating power supply.

2. Scheme Overview

3. Scheme Design

3.1. Energy Harvesting in Coal Mine

3.2. Energy-Aware Model Pruning Mechanism

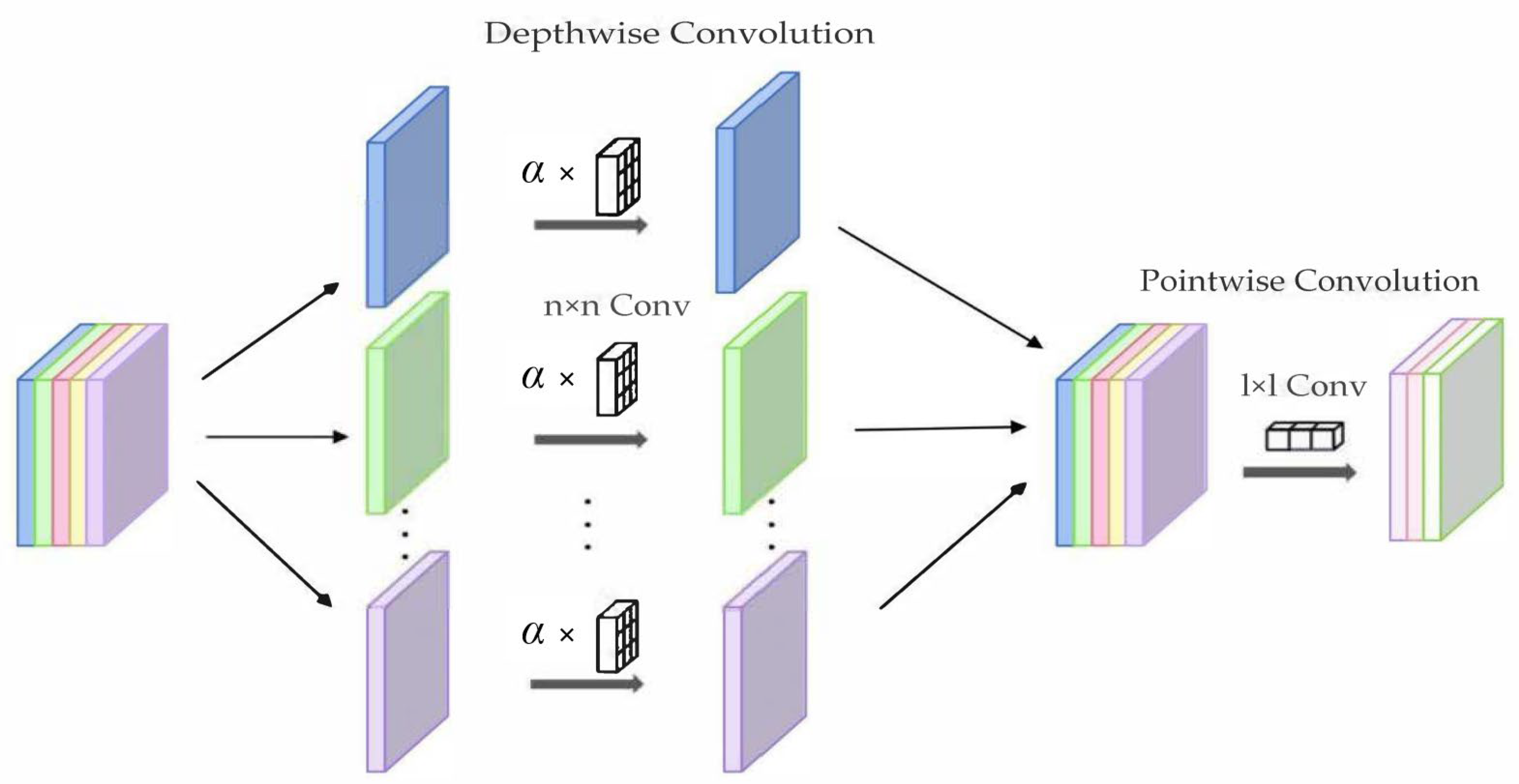

3.2.1. Network-Layer Pruning

3.2.2. Neuron Pruning

3.2.3. Pruning Strategy

Algorithm 1. Low-Energy Consumption Model Based on Energy Awareness

| Step | Operation |
|---|---|
| 1: | Input: P (real-time wind power), (average wind power over a time window), λ (scaling factor for energy state sensitivity), N (total network layers), M (total neurons per layer), trainSet, valSet |
| 2: | Output: Optimized neural network model |
| 3: | M ← initial-DNN(N, M) // Construct a lightweight DNN with N layers and M neurons per layer |
| 4: | // Define energy state factor |
| 5: | β ← 1 // Set initial pruning factor |
| 6: | for each training iteration do |
| 7: | β ← α // Set pruning factor based on energy state |
| 8: | Prune β·N layers with least importance // Perform layer pruning based on importance ranking |
| 9: | Prune β·M neurons per layer with least contribution // Perform neuron pruning |
| 10: | Fine-tune the pruned model M on trainSet // Fine-tune the model |
| 11: | end for |
| 12: | Evaluate the pruned model M on valSet // Evaluate the model on the validation set |
| 13: | if performance degradation exceeds threshold then |
| 14: | Roll back the last pruning operation // Restore the previous state |
| 15: | end if |
| 16: | Adjust energy state factor dynamically if P changes significantly // Recalculate pruning factor if P fluctuates |
| 17: | repeat |
| 18: | Repeat steps 3–9 // Continue pruning and adjusting |
| 19: | until convergence or a significant energy state change; return optimized model M // Output the final optimized model |
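Steps 8–15 of Algorithm 1 can be illustrated with a small, framework-free Python sketch. It is not the authors' implementation and rests on several assumptions: layer-importance scores are taken as precomputed inputs (the ranking criterion is not specified in the algorithm), neurons are ranked by mean squared activation (MSA appears in the abbreviation list, but its exact role here is assumed), β is read literally as the fraction of layers and neurons to remove, at least one layer and one neuron are always kept, and fine-tuning (step 10) is omitted. `Layer`, `prune_layers`, `prune_neurons`, `prune_with_rollback`, and the toy evaluator are hypothetical names.

```python
import math
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Layer:
    """Hypothetical container for one prunable layer."""
    name: str
    importance: float  # precomputed layer-importance score (ranking criterion assumed)
    neuron_msa: list[float] = field(default_factory=list)  # per-neuron mean squared activation


def prune_layers(layers: list[Layer], beta: float) -> list[Layer]:
    """Step 8: remove floor(beta * N) least-important layers, always keeping one."""
    n_drop = min(math.floor(beta * len(layers)), max(len(layers) - 1, 0))
    ranked = sorted(range(len(layers)), key=lambda i: layers[i].importance)
    drop = set(ranked[:n_drop])
    return [layer for i, layer in enumerate(layers) if i not in drop]


def prune_neurons(layer: Layer, beta: float) -> Layer:
    """Step 9: remove floor(beta * M) lowest-MSA neurons, always keeping one."""
    m = len(layer.neuron_msa)
    n_drop = min(math.floor(beta * m), max(m - 1, 0))
    ranked = sorted(range(m), key=lambda j: layer.neuron_msa[j])
    drop = set(ranked[:n_drop])
    kept = [a for j, a in enumerate(layer.neuron_msa) if j not in drop]
    return Layer(layer.name, layer.importance, kept)


def prune_with_rollback(
    layers: list[Layer],
    beta: float,
    evaluate: Callable[[list[Layer]], float],
    max_drop: float,
) -> list[Layer]:
    """Steps 8-15 without fine-tuning: prune, evaluate, roll back on excessive loss."""
    baseline = evaluate(layers)
    candidate = [prune_neurons(layer, beta) for layer in prune_layers(layers, beta)]
    if baseline - evaluate(candidate) > max_drop:
        return layers  # step 14: restore the previous (unpruned) state
    return candidate


if __name__ == "__main__":
    model = [
        Layer("conv1", 0.9, [0.5, 0.1, 0.7, 0.2]),
        Layer("conv2", 0.2, [0.3, 0.4]),
        Layer("conv3", 0.6, [0.05, 0.8, 0.6, 0.9]),
    ]

    def toy_eval(layers: list[Layer]) -> float:
        # Stand-in for validation accuracy: fraction of the 10 original neurons kept.
        return sum(len(l.neuron_msa) for l in layers) / 10

    pruned = prune_with_rollback(model, beta=0.34, evaluate=toy_eval, max_drop=0.5)
    print([(l.name, l.neuron_msa) for l in pruned])
    # [('conv1', [0.5, 0.7, 0.2]), ('conv3', [0.8, 0.6, 0.9])]
```

Passing `max_drop=0.3` instead makes the toy accuracy loss (0.4) exceed the threshold, so the original model is returned unchanged, mirroring the rollback in step 14.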

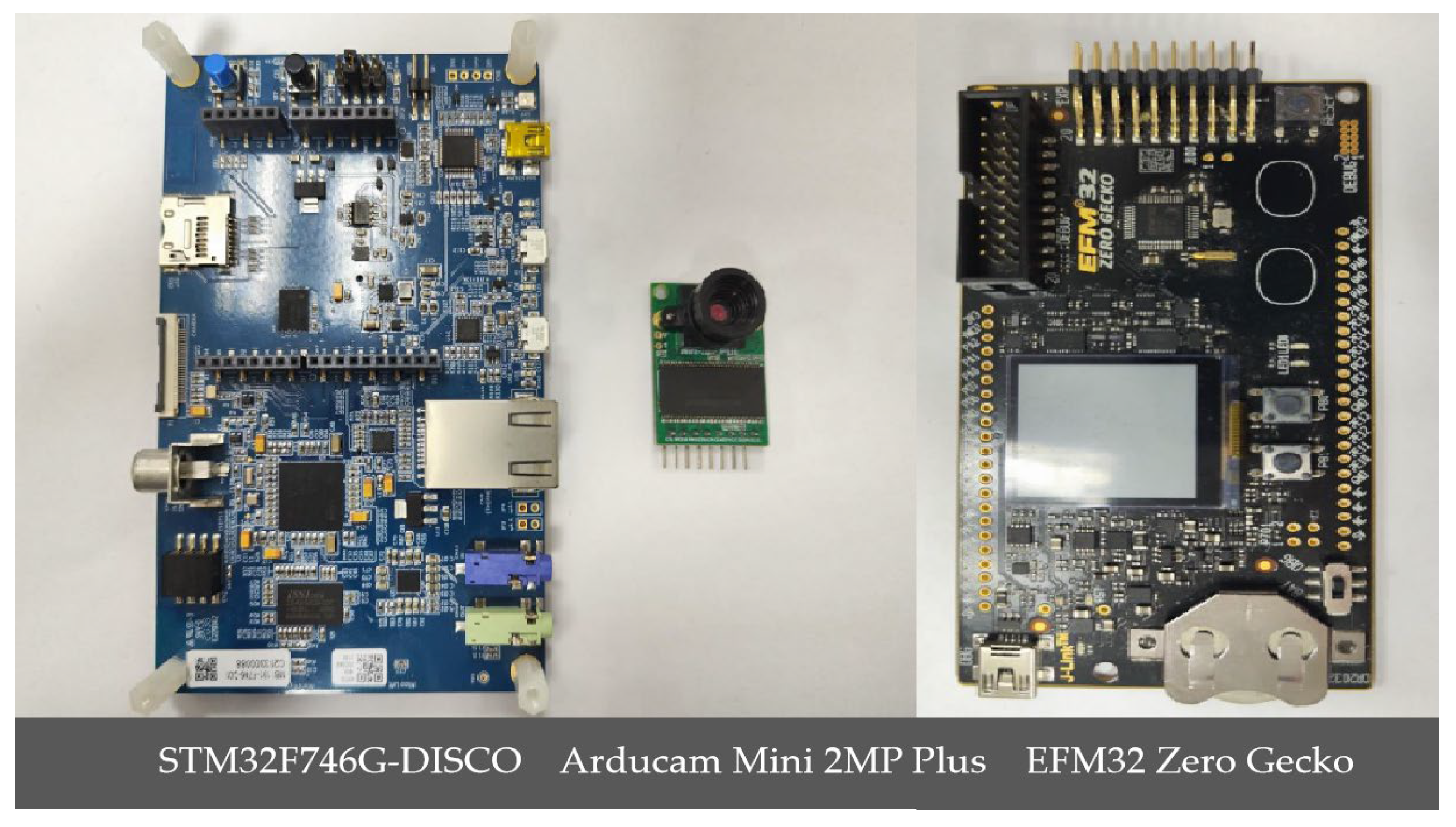

3.3. Battery-Free Inference in Mine

4. Experiments

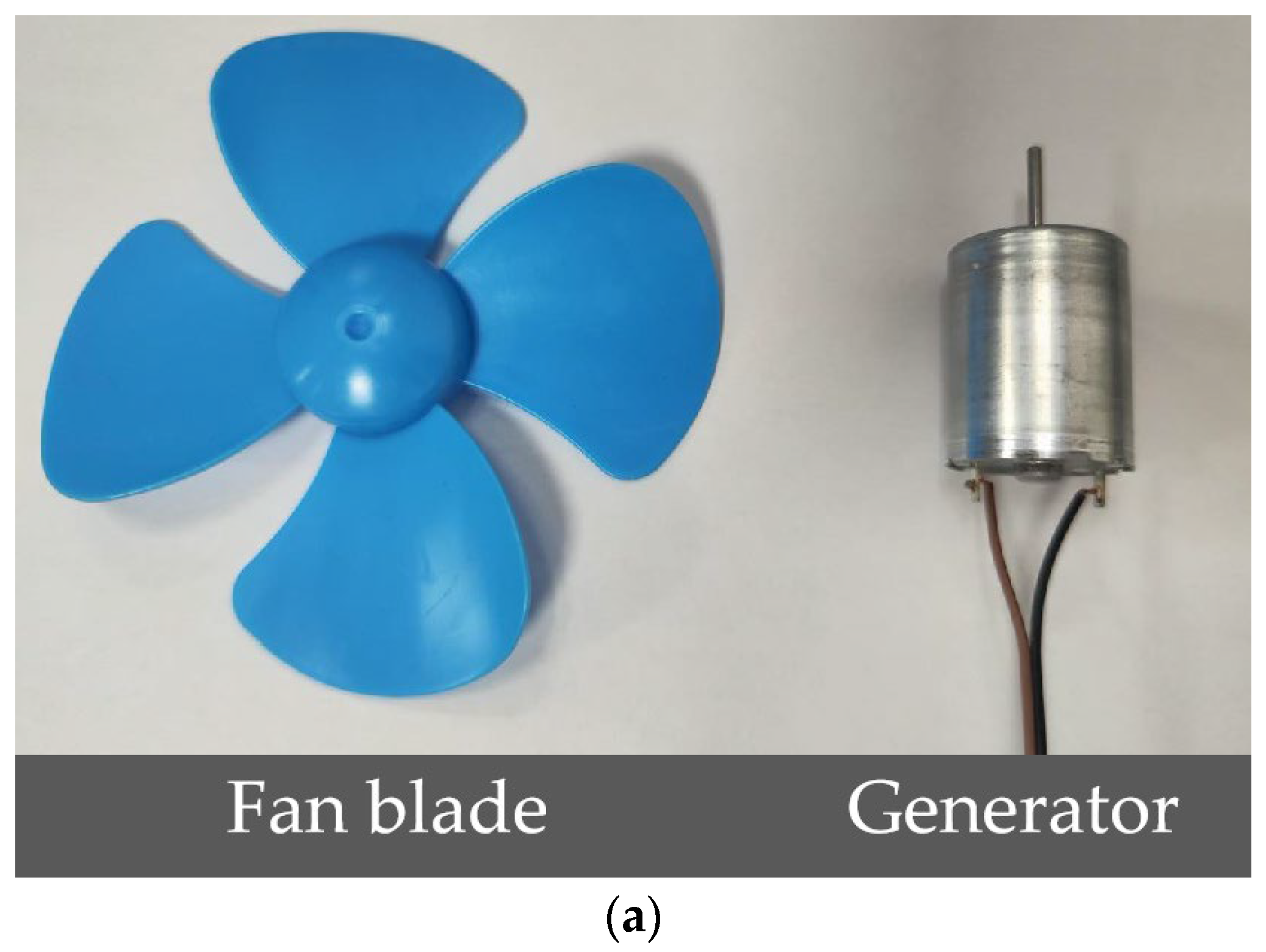

4.1. Wind Power Generation Device

4.2. Model Performance Evaluation

4.2.1. Benchmark Performance and Energy Grading

4.2.2. Dynamic Pruning Strategy Validation

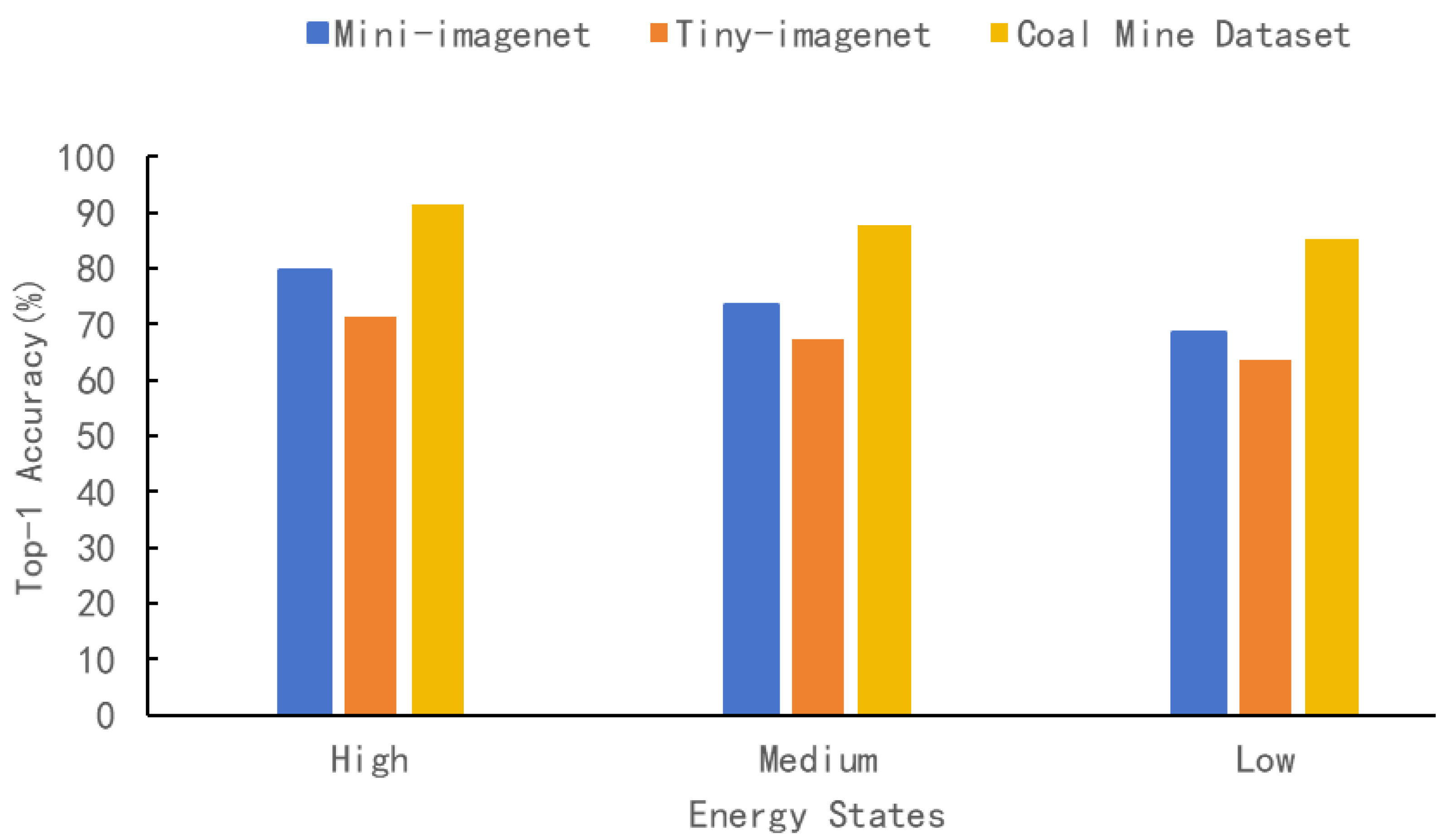

4.2.3. Robustness Evaluation Across Heterogeneous Datasets

4.2.4. Quantization Impact on Deployment Efficiency

4.2.5. Ablation Study on Energy-Aware Pruning

4.3. System-Level Energy Efficiency Assessment

- (1) Inference Power: active power during model execution;

- (2) Inference Time: duration of one inference pass;

- (3) Inference Energy: total energy consumed during inference, $E_{\text{inf}} = P_{\text{inf}} \times t_{\text{inf}}$;

- (4) Total Runtime: end-to-end latency (image capture, preprocessing, and inference);

- (5) Total Energy: system-wide energy consumption, $E_{\text{total}} = P_{\text{avg}} \times t_{\text{total}}$.
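The two energy definitions can be checked against the measured values reported in the system-level energy table. The Python sketch below is only an arithmetic illustration: it applies E_inf = P_inf × t_inf to each energy state (mW × ms yields µJ, hence the division by 1000) and inverts E_total = P_avg × t_total to recover the average system power implied by the reported totals. The function names are hypothetical.

```python
# Measured values per energy state: (P_inf in mW, t_inf in ms, t_total in ms, E_total in mJ)
MEASURED = {
    "High": (102.8, 226, 329, 28.95),
    "Medium": (68.1, 202, 332, 21.24),
    "Low": (35.7, 193, 359, 13.49),
}


def inference_energy_mj(p_inf_mw: float, t_inf_ms: float) -> float:
    """E_inf = P_inf * t_inf; mW x ms gives microjoules, so divide by 1000 for mJ."""
    return p_inf_mw * t_inf_ms / 1000.0


def average_power_mw(e_total_mj: float, t_total_ms: float) -> float:
    """Invert E_total = P_avg * t_total; mJ / ms gives W, so multiply by 1000 for mW."""
    return e_total_mj / t_total_ms * 1000.0


if __name__ == "__main__":
    for state, (p_inf, t_inf, t_total, e_total) in MEASURED.items():
        e_inf = inference_energy_mj(p_inf, t_inf)
        p_avg = average_power_mw(e_total, t_total)
        print(f"{state:<6} E_inf = {e_inf:.2f} mJ, implied P_avg = {p_avg:.1f} mW")
```

Running it reproduces the tabulated inference energies (23.23, 13.76, and 6.89 mJ) and implies average system powers of roughly 88.0, 64.0, and 37.6 mW for the High, Medium, and Low states.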

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TENGs | triboelectric nanogenerators |
| NFC | near-field communication |
| ML | machine learning |
| DNN | deep neural network |
| DSC | depthwise separable convolution |
| MSA | mean squared activation |
| ADC | analog-to-digital converter |
| FLOPs | floating-point operations |

References

- Avila, B.Y.L.; Vazquez, C.A.G.; Baluja, O.P.; Cotfas, D.T.; Cotfas, P.A. Energy harvesting techniques for wireless sensor networks: A systematic literature review. Energy Strategy Rev. 2025, 57, 101617.

- Zhang, H.; Chen, Y.; Deng, L.; Zhu, X.; Xu, C.; Xie, L.; Yang, Q.; Zhang, H. Efficient electrical energy conversion strategies from triboelectric nanogenerators to practical applications: A review. Nano Energy 2024, 132, 110383.

- Li, J.; Cheng, L.; Wan, N.; Ma, J.; Hu, Y.; Wen, J. Hybrid harvesting of wind and wave energy based on triboelectric-piezoelectric nanogenerators. Sustain. Energy Technol. Assess. 2023, 60, 103466.

- Gong, X.R.; Wang, X.Y.; Zhang, H.H.; Ye, J.C.; Li, X. Various energy harvesting strategies and innovative applications of triboelectric-electromagnetic hybrid nanogenerators. J. Alloys Compd. 2024, 1009, 176941.

- Lazaro, A.; Villarino, R.; Lazaro, M.; Canellas, N.; Prieto-Simon, B.; Girbau, D. Recent advances in batteryless NFC sensors for chemical sensing and biosensing. Biosensors 2023, 13, 775.

- Xu, F.; Hussain, T.; Ahmed, M.; Ali, K.; Mirza, M.A.; Khan, W.U.; Ihsan, A.; Han, Z. The state of AI-empowered backscatter communications: A comprehensive survey. IEEE Internet Things J. 2023, 10, 21763–21786.

- Cui, Y.; Luo, H.; Yang, T.; Qin, W.; Jing, X. Bio-inspired structures for energy harvesting self-powered sensing and smart monitoring. Mech. Syst. Signal Process. 2025, 228, 112459.

- Cai, Z.; Chen, Q.; Shi, T.; Zhu, T.; Chen, K.; Li, Y. Battery-free wireless sensor networks: A comprehensive survey. IEEE Internet Things J. 2022, 10, 5543–5570.

- Liu, L.; Shang, Y.; Berbille, A.; Willatzen, M.; Wang, Y.; Li, X.; Li, L.; Luo, X.; Chen, J.; Yang, B.; et al. Self-powered sensing platform based on triboelectric nanogenerators towards intelligent mining industry. Nat. Commun. 2025, 16, 5141.

- Belle, B. Real-time air velocity monitoring in mines: A quintessential design parameter for managing major mine health and safety hazards. In Proceedings of the 13th Coal Operators’ Conference, Wollongong, Australia, 14–15 February 2013; pp. 184–198.

- Yeboah, D.; Ackor, N.; Abrowah, E. Evaluation of wind energy recovery from an underground mine exhaust ventilation system. J. Eng. 2023, 2023, 8822475.

- Fazlizan, A.; Chong, W.T.; Yip, S.Y.; Hew, W.P.; Poh, S.C. Design and experimental analysis of an exhaust air energy recovery wind turbine generator. Energies 2015, 8, 6566–6584.

- Zhao, L.C.; Zou, H.X.; Wei, K.X.; Zhou, S.X.; Meng, G.; Zhang, W.M. Mechanical intelligent energy harvesting: From methodology to applications. Adv. Energy Mater. 2023, 13, 2300557.

- Zishan, S.; Molla, A.H.; Rashid, H.; Wong, K.H.; Fazlizan, A.; Lipu, M.S.H.; Tariq, M.; Alsalami, O.M.; Sarker, M.R. Comprehensive analysis of kinetic energy recovery systems for efficient energy harnessing from unnaturally generated wind sources. Sustainability 2023, 15, 15345.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Nazari, N.; Salehi, M.E. Inter-layer hybrid quantization scheme for hardware friendly implementation of embedded deep neural networks. In Proceedings of the Great Lakes Symposium on VLSI 2023 (GLSVLSI), Knoxville, TN, USA, 5–7 June 2023; pp. 193–196.

- Chu, Y.; Liu, K.; Jiang, S.; Sun, X.; Wang, B.; Wang, Z. Meta-pruning: Learning to Prune on Few-Shot Learning. In Proceedings of the 17th International Conference on Knowledge Science, Engineering and Management (KSEM), Birmingham, UK, 16–18 August 2024; pp. 74–85.

- Wang, R.; Sun, H.; Nie, X.; Yin, Y. SNIP-FSL: Finding task-specific lottery jackpots for few-shot learning. Knowl. Based Syst. 2022, 247, 108427.

- Zhao, Y.; Afzal, S.S.; Akbar, W.; Rodriguez, O.; Mo, F.; Boyle, D.; Adib, F.; Haddadi, H. Towards battery-free machine learning and inference in underwater environments. In Proceedings of the 23rd Annual International Workshop on Mobile Computing Systems and Applications (HotMobile ’22), Tempe, AZ, USA, 9–10 March 2022; pp. 29–34.

- Afzal, S.S.; Akbar, W.; Rodriguez, O.; Doumet, M.; Ha, U.; Ghaffarivardavagh, R.; Adib, F. Battery-free wireless imaging of underwater environments. Nat. Commun. 2022, 13, 5546.

- Dong, W.; Sheng, K.; Huang, B.; Xiong, K.; Liu, K.; Cheng, X. Stretchable self-powered TENG sensor array for human robot interaction based on conductive ionic gels and LSTM neural network. IEEE Sens. J. 2024, 24, 37962–37969.

- State Administration of Coal Mine Safety. Coal Mine Safety Regulation; State Administration of Coal Mine Safety: Beijing, China, 2022; p. 67.

- Chen, F.; Li, S.; Han, J.; Ren, F.; Yang, Z. Review of Lightweight Deep Convolutional Neural Networks. Arch. Comput. Methods Eng. 2024, 31, 1915–1937.

- Liu, H.I.; Galindo, M.; Xie, H.; Wong, L.K.; Shuai, H.H.; Li, Y.H.; Cheng, W.H. Lightweight deep learning for resource-constrained environments: A survey. ACM Comput. Surv. 2024, 56, 267.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the 30th International Conference on Neural Information Processing Systems (NIPS), Barcelona, Spain, 5–10 December 2016.

- Le, Y.; Yang, X. Tiny ImageNet visual recognition challenge. CS 231N 2015, 7, 3.

- Cheng, D.Q.; Xu, J.Y.; Kou, Q.Q.; Zhang, H.X.; Han, C.G.; Yu, B.; Qian, J.S. Lightweight network based on residual information for foreign body classification on coal conveyor belt. J. China Coal Soc. 2022, 47, 1361–1369.

- Kou, Q.; Cheng, Z.; Cheng, D.; Chen, J.; Zhang, J. Lightweight super resolution method based on blueprint separable convolution for mine image. J. China Coal Soc. 2024, 49, 4038–4050.

- Cheng, H.; Zhang, M.; Shi, J.Q. A survey on deep neural network pruning: Taxonomy, comparison, analysis, and recommendations. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10558–10578.

- Augustin, A.; Yi, J.; Clausen, T.H.; Townsley, W.M. A Study of LoRa: Long Range & Low Power Networks for the Internet of Things. Sensors 2016, 16, 1466.

| | Underwater Backscatter Imaging [20] | Underwater Battery-Free ML [19] | TESS [9] | TENG-SA [21] | MineVisual (Ours) |
|---|---|---|---|---|---|
| Primary Feature | Wireless underwater imaging | Marine/underwater environmental monitoring | Air quality monitoring in gold mines | Human motion monitoring and human–robot interaction (HRI) | Safety monitoring in coal mines |
| Energy Source | Underwater sound | Underwater sound | Wind energy | Mechanical energy | Wind energy |
| Communication Method | Acoustic backscatter | Acoustic backscatter | LoRa | Bluetooth | LoRa |
| Processing and Intelligence | On-board processing unit (FPGA) controls image capture, data packetization, and communication | Small CNNs trained offline; Keras2C implementation | A data acquisition module aggregates and compiles data from multiple sensors | LSTM-based network with adjustable complexity | On-device deep learning inference with a lightweight model (EADP-Net) |
| Key Performance | Average power: ~276 µW (active imaging); data rate: ~1 kbps | Total energy per inference: 5.40 mJ; accuracy: ~63% (small-sample dataset) | Starting wind speed: 0.32 m/s; power density: 16.36 mW/m²; lifetime: >3 months | Output voltage range: 1.8–10.5 V; recognition accuracy: ~90% | Inference energy: 6.89 mJ (low power); accuracy: 68.6–91.5% (Top-1) |
| Target Environment | Underwater environments | Underwater environments | Underground gold mines | Wearable, on-body applications | Underground coal mines |

| Energy State | Real-Time Power Output |
|---|---|
| High | p > 90 mW |
| Medium | 40 mW < p < 90 mW |
| Low | p < 40 mW |
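The energy-state thresholds amount to a simple classifier on harvested power. The Python sketch below is a minimal illustration under stated assumptions: the table leaves the boundary values 40 mW and 90 mW unassigned, so here 90 mW is treated as Medium and 40 mW as Low; and the hypothetical `PowerMonitor` class, which also tracks a moving average in the spirit of the averaged wind power input of Algorithm 1, uses an assumed window of 8 samples.

```python
from collections import deque

HIGH_THRESHOLD_MW = 90.0  # p > 90 mW -> High
LOW_THRESHOLD_MW = 40.0   # p < 40 mW -> Low


def energy_state(p_mw: float) -> str:
    """Classify real-time harvested power p (mW) into High / Medium / Low.

    Boundary handling is an assumption: exactly 90 mW counts as Medium,
    exactly 40 mW counts as Low.
    """
    if p_mw > HIGH_THRESHOLD_MW:
        return "High"
    if p_mw > LOW_THRESHOLD_MW:
        return "Medium"
    return "Low"


class PowerMonitor:
    """Tracks real-time power P and a windowed average (window length assumed)."""

    def __init__(self, window: int = 8) -> None:
        self.samples = deque(maxlen=window)  # oldest samples drop out automatically

    def update(self, p_mw: float) -> tuple[str, float]:
        """Record a sample; return (state of the real-time P, windowed average)."""
        self.samples.append(p_mw)
        p_avg = sum(self.samples) / len(self.samples)
        return energy_state(p_mw), p_avg


if __name__ == "__main__":
    monitor = PowerMonitor(window=3)
    for p in (100.0, 50.0, 30.0, 30.0):
        print(monitor.update(p))
```

For example, the inference powers measured in the system-level evaluation (102.8, 68.1, and 35.7 mW) fall into the High, Medium, and Low bands respectively.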

| Method | Energy Status | Params (M) | FLOPs (M) | SRAM (KB) | Flash (KB) | Top-1 Acc (%) | Top-5 Acc (%) |
|---|---|---|---|---|---|---|---|
| Original | High | 0.41 | 39.59 | 483.12 | 1610.56 | 79.7 | 86.7 |
| Pruning (Layer) | Medium | 0.38 | 29.86 | 457.23 | 1402.1 | 78.6 | 83.2 |
| Pruning (Layer) | Low | 0.36 | 21.17 | 387.2 | 1186.35 | 74.5 | 80.1 |
| Pruning (Neurons) | Medium | 0.39 | 31.47 | 468.53 | 1215.36 | 78.1 | 83.1 |
| Pruning (Neurons) | Low | 0.35 | 19.82 | 363.91 | 1076.54 | 73.2 | 79.7 |
| Pruning (Layer-Neurons) | Medium | 0.35 | 19.27 | 359.56 | 1056.35 | 73.6 | 78.2 |
| Pruning (Layer-Neurons) | Low | 0.28 | 15.26 | 312.78 | 979.56 | 68.6 | 75.3 |

| Energy Status | Mini-ImageNet Top-1 (%) | Mini-ImageNet Top-5 (%) | Tiny-ImageNet Top-1 (%) | Tiny-ImageNet Top-5 (%) | Coal Mine Dataset Top-1 (%) |
|---|---|---|---|---|---|
| High | 79.7 | 86.7 | 71.2 | 76.5 | 91.5 |
| Medium | 73.6 | 78.2 | 67.2 | 71.4 | 87.7 |
| Low | 68.6 | 75.3 | 63.5 | 68.6 | 85.2 |

| Quant. | Energy Status | SRAM (KB) | Flash (KB) | Latency, Mini-ImageNet (ms) | Latency, Coal Mine (ms) | Top-1, Mini-ImageNet (%) | Top-1, Coal Mine (%) |
|---|---|---|---|---|---|---|---|
| FP32 | High | 483.12 | 1610.56 | 357 | 400 | 79.7 | 91.5 |
| FP32 | Medium | 359.56 | 1056.35 | 289 | 324 | 73.6 | 87.7 |
| FP32 | Low | 312.78 | 979.56 | 248 | 278 | 68.6 | 85.2 |
| FP16 | High | 356.17 | 1214.32 | 276 | 309 | 77.3 | 88.9 |
| FP16 | Medium | 327.71 | 968.13 | 266 | 298 | 76.5 | 85.2 |
| FP16 | Low | 294.11 | 912.74 | 215 | 241 | 68.1 | 82.7 |
| INT8 | High | 287.5 | 963.12 | 226 | 253 | 74.6 | 86 |
| INT8 | Medium | 274.36 | 888.32 | 202 | 226 | 73.2 | 82.3 |
| INT8 | Low | 250.17 | 816.15 | 193 | 216 | 66.2 | 79.9 |

| Config | Quant. | Pruning | Top-1 (%) | Top-5 (%) | Flash (KB) | SRAM (KB) | Latency (ms) | Notes |
|---|---|---|---|---|---|---|---|---|
| A | FP32 | ✗ | 79.7 | 86.7 | 1610.56 | 483.12 | 357 | Baseline FP32 (High) |
| B-M | FP32 | EAP (mid) | 73.6 | 78.2 | 1056.35 | 359.56 | 289 | Pure pruning (Medium) |
| B-L | FP32 | EAP (low) | 68.6 | 75.3 | 979.56 | 312.78 | 280 | Pure pruning (Low) |
| C | INT8 | ✗ | 74.6 | 79.3 | 963.12 | 287.50 | 226 | Quantization only (High) |
| D-M | INT8 | EAP (mid) | 69.5 | 72.3 | 631.81 | 213.97 | 183 | INT8 + pruning (Medium) |
| D-L | INT8 | EAP (low) | 65.0 | 69.9 | 585.58 | 186.13 | 177 | INT8 + pruning (Low) |
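The trade-offs in the ablation table can be quantified as reductions relative to the FP32 baseline (configuration A). The Python sketch below only re-uses the tabulated numbers; `reduction_pct` and `summarize` are illustrative helper names, not part of the authors' tooling.

```python
# (Top-1 %, Flash KB, SRAM KB, latency ms) per configuration, copied from the ablation table
ABLATION = {
    "A": (79.7, 1610.56, 483.12, 357),
    "B-M": (73.6, 1056.35, 359.56, 289),
    "B-L": (68.6, 979.56, 312.78, 280),
    "C": (74.6, 963.12, 287.50, 226),
    "D-M": (69.5, 631.81, 213.97, 183),
    "D-L": (65.0, 585.58, 186.13, 177),
}


def reduction_pct(baseline: float, value: float) -> float:
    """Relative reduction of value with respect to baseline, in percent."""
    return 100.0 * (1.0 - value / baseline)


def summarize(configs: dict[str, tuple[float, float, float, float]], baseline: str = "A"):
    """Return {config: (Top-1 change in points, Flash %, SRAM %, latency % reduction)}."""
    base_acc, base_flash, base_sram, base_lat = configs[baseline]
    return {
        name: (
            round(acc - base_acc, 1),
            round(reduction_pct(base_flash, flash), 1),
            round(reduction_pct(base_sram, sram), 1),
            round(reduction_pct(base_lat, lat), 1),
        )
        for name, (acc, flash, sram, lat) in configs.items()
    }


if __name__ == "__main__":
    for name, row in summarize(ABLATION).items():
        print(name, row)
```

For D-L this yields roughly 63.6% less Flash, 61.5% less SRAM, and 50.4% lower latency than A, in exchange for a 14.7-point drop in Top-1 accuracy.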

| Energy Status | Inference Power (mW) | Inference Time (ms) | Inference Energy (mJ) | Total Runtime (ms) | Total Energy (mJ) |
|---|---|---|---|---|---|
| High | 102.8 | 226 | 23.23 | 329 | 28.95 |
| Medium | 68.1 | 202 | 13.76 | 332 | 21.24 |
| Low | 35.7 | 193 | 6.89 | 359 | 13.49 |

| Metric | Value/Description |
|---|---|
| End-to-end energy per cycle | ~30–35 mJ (including sensing, inference, and LoRa transmission) |
| Harvesting range | Self-sustainable at wind speeds ≥ 3.7 m/s (tested with a micro-turbine energy harvester) |
| Wireless protocol | LoRa (868/915 MHz bands), maximum payload of 51 bytes per packet |
| Functionality | Image sensing (low-resolution grayscale), on-device EAP-Net inference, LoRa transmission of results |
| Payload size | ~32 KB image before compression; inference results compressed to <1 KB for LoRa |
| Energy breakdown | Sensing: ~3–5 mJ; inference: ~6.9–23.2 mJ (depending on pruning level); transmission: ~5–7 mJ |
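Two figures in the deployment summary can be related with a little arithmetic: inference results of under 1 KB must be split across LoRa packets capped at 51 bytes, and the per-stage energy ranges add up to an end-to-end range. The Python sketch below assumes a bare fragmentation scheme with no per-fragment header and a hypothetical 1000-byte result; `fragment`, `packets_needed`, and `cycle_energy_range` are illustrative names, not part of any LoRa library.

```python
import math

MAX_LORA_PAYLOAD = 51  # bytes per packet, as listed in the deployment summary

# Per-stage energy ranges in mJ as (min, max), from the "Energy breakdown" row
STAGE_ENERGY_MJ = {
    "sensing": (3.0, 5.0),
    "inference": (6.9, 23.2),
    "transmission": (5.0, 7.0),
}


def packets_needed(n_bytes: int, mtu: int = MAX_LORA_PAYLOAD) -> int:
    """Number of LoRa frames needed for n_bytes of application payload."""
    return math.ceil(n_bytes / mtu)


def fragment(payload: bytes, mtu: int = MAX_LORA_PAYLOAD) -> list[bytes]:
    """Split a result buffer into mtu-sized chunks (no per-fragment header; assumed)."""
    return [payload[i:i + mtu] for i in range(0, len(payload), mtu)]


def cycle_energy_range(stages: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum per-stage (min, max) energies into an end-to-end per-cycle range."""
    lo = sum(low for low, _ in stages.values())
    hi = sum(high for _, high in stages.values())
    return lo, hi


if __name__ == "__main__":
    result = bytes(1000)  # hypothetical 1000-byte compressed inference result
    chunks = fragment(result)
    print(len(chunks), packets_needed(len(result)))  # 20 20
    lo, hi = cycle_energy_range(STAGE_ENERGY_MJ)
    print(f"{lo:.1f}-{hi:.1f} mJ per cycle")  # 14.9-35.2 mJ per cycle
```

Summing the stated ranges gives roughly 14.9–35.2 mJ per cycle, so the quoted ~30–35 mJ appears to correspond to the less aggressively pruned configurations. A practical fragmentation scheme would also reserve a few bytes per packet for sequence numbers, which lowers the usable payload and raises the packet count.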

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, M.; Bao, Z.; Li, S.; Yang, X.; Niu, Q.; Yang, M.; Chen, S. MineVisual: A Battery-Free Visual Perception Scheme in Coal Mine. Sensors 2025, 25, 5486. https://doi.org/10.3390/s25175486

Li M, Bao Z, Li S, Yang X, Niu Q, Yang M, Chen S. MineVisual: A Battery-Free Visual Perception Scheme in Coal Mine. Sensors. 2025; 25(17):5486. https://doi.org/10.3390/s25175486

Chicago/Turabian Style: Li, Ming, Zhongxu Bao, Shuting Li, Xu Yang, Qiang Niu, Muyu Yang, and Shaolong Chen. 2025. "MineVisual: A Battery-Free Visual Perception Scheme in Coal Mine" Sensors 25, no. 17: 5486. https://doi.org/10.3390/s25175486

APA Style: Li, M., Bao, Z., Li, S., Yang, X., Niu, Q., Yang, M., & Chen, S. (2025). MineVisual: A Battery-Free Visual Perception Scheme in Coal Mine. Sensors, 25(17), 5486. https://doi.org/10.3390/s25175486