Abstract

This paper presents a bilinear framework for real-time speech source separation and dereverberation tailored to wearable augmented reality devices operating in dynamic acoustic environments. Using the Speech Enhancement for Augmented Reality (SPEAR) Challenge dataset, we perform extensive validation with real-world recordings and examine key algorithmic parameters, including the forgetting factor and regularization. To enhance robustness against direction-of-arrival (DOA) estimation errors caused by head movements and localization uncertainty, we propose a region-of-interest (ROI) beamformer that replaces conventional point-source steering. Additionally, we introduce a multi-constraint beamforming design, based on the linearly constrained minimum power (LCMP) beamformer, capable of simultaneously preserving multiple sources or suppressing known undesired sources. Experimental results demonstrate that ROI-based steering significantly improves robustness to localization errors while maintaining effective noise and reverberation suppression. However, this comes at the cost of increased high-frequency leakage from both desired and undesired sources. The multi-constraint formulation further enhances source separation with a modest trade-off in noise reduction. The proposed integration of ROI and LCMP beamforming within the low-complexity bilinear framework, validated comprehensively on the SPEAR dataset, offers a practical and efficient solution for real-time audio enhancement in wearable augmented reality systems.

1. Introduction

Real-time speech source separation, dereverberation, and background noise suppression are essential to improve intelligibility and audio quality in diverse acoustic environments [1,2,3,4,5]. These processes are vital for applications including augmented reality (AR) [6], teleconferencing, and hearing aids [7,8,9,10,11,12,13]. The increasing demand for robust solutions in dynamic acoustic environments presents considerable challenges, including rapidly changing acoustic conditions, frequent head movements, and the presence of multiple speakers.

Deep learning (DL) methods have transformed the speech enhancement landscape, achieving state-of-the-art results across various tasks, including denoising, dereverberation, and source separation [14]. Architectures such as deep neural networks, recurrent neural networks, convolutional networks, and transformers have demonstrated remarkable effectiveness [15,16,17]. Particularly in challenging dynamic scenarios involving multiple speakers, head movements, and background noise—such as those presented in the SPEAR Challenge—DL models frequently dominate benchmarks [18]. Approaches that combine neural networks with classical beamforming, such as mask-based beamforming [19,20] and fully neural beamformers [21], have been particularly successful.

Despite these advances, DL methods typically require extensive computational resources and large training datasets, limiting their suitability for real-time deployment on wearable AR devices with constrained power and processing capacity. Moreover, their performance often deteriorates in unseen acoustic environments. Consequently, traditional signal processing techniques remain essential in such scenarios.

Beamforming is a spatial filtering technique that combines signals from multiple microphones to emphasize sounds from a desired direction while suppressing sounds from other directions [22,23]. Among the different approaches, the minimum variance distortionless response (MVDR) beamformer [24] is effective at separating sources; however, it often depends on additional information, such as the covariance matrices of the desired source and interference, as well as a voice activity detector (VAD). This reliance makes MVDR challenging to implement in real-time applications. The minimum power distortionless response (MPDR) beamformer, on the other hand, does not require a VAD, yet it remains vulnerable to steering vector errors [25], which are inevitable in dynamic environments. Region-of-interest (ROI) beamforming was recently proposed [26,27,28,29,30] to address this issue, offering a near-distortionless response over an angular sector rather than a distortionless response in a single direction.

Although beamformers suppress directional interference, they do not directly address late reverberation, which can significantly degrade speech intelligibility. Multichannel linear prediction (MCLP) has become a widely adopted method for estimating and removing these reverberant components from observed signals [2]. MCLP can be implemented in both the time domain and the short-time Fourier transform (STFT) domain [31]. Among its variants, the weighted prediction error (WPE) algorithm has shown strong performance in reducing late reverberation [32,33]. However, additive noise, which is present in realistic acoustic environments, affects the correlation between observations and degrades dereverberation performance. To mitigate this, beamforming is often applied as a spatial pre-filter to suppress noise and improve the reliability of MCLP-based estimation.

To jointly perform denoising and dereverberation, MCLP-based methods have been integrated with beamforming techniques such as the generalized sidelobe canceller [34], MVDR, and weighted MPDR (wMPDR) [35,36]. Although these methods enhance overall performance, they also increase computational complexity. A more efficient approach combines beamforming and dereverberation in a bilinear framework via the Kronecker product [37,38,39]. This approach decouples spatial and temporal filtering, reducing computation while preserving effectiveness. Recently, a bilinear MPDR-WPE design that employs point-source steering with recursive least squares (RLS) [40,41] cost functions demonstrated promising real-time source separation performance [42].

In this work, we first validate the framework by conducting a comprehensive parameter study of the previously proposed bilinear MPDR-WPE algorithm, assessing the influence of the forgetting factor and regularization on real recordings from the SPEAR Challenge dataset [18,43], which captures realistic head-motion and multi-talker AR scenarios. As part of this study, we provide a comprehensive validation on the SPEAR dataset, highlighting its utility and the challenges it presents for robust real-time audio processing. Based on the best-performing configurations identified in this study, we then introduce two extensions within the bilinear framework. First, we integrate ROI beamforming to improve resilience to DOA mismatches and reduce sensitivity to head motion. Second, we incorporate a linearly constrained minimum power (LCMP) beamforming design [44,45], which enables greater control over the spatial response, such as preserving multiple sources or suppressing known undesired sources, when additional DOA information is available. Experimental results indicate that the baseline MPDR-WPE, its ROI-based variant, and the LCMP extension all effectively reduce interference, mitigate reverberation, and preserve target speech. While ROI steering enhances robustness to localization errors at the cost of some high-frequency leakage, LCMP offers better source separation at the cost of slightly degraded noise reduction. Together, these complementary designs extend the applicability of the framework to a broader range of dynamic wearable AR scenarios.

The remainder of the paper is organized as follows: Section 2 describes the signal model. Section 3 introduces the bilinear framework that combines beamforming with dereverberation. Section 4 presents a real-time RLS-based algorithm tailored for dynamic environments. Section 5 provides a comprehensive dataset and parameter analysis, evaluates ROI beamforming under steering errors, examines associated trade-offs, introduces multi-constraint beamforming, and compares the framework to DL methods. Section 6 concludes the paper.

2. Signal Model

We model a dynamic acoustic environment using real recordings from the SPEAR Challenge dataset. The scene includes multiple speakers, reverberation, background noise, and head movements, all captured by an array of M microphones. The analysis is performed in the STFT domain.

The multichannel observation vector at time frame ℓ and frequency bin k is given by

where denotes the STFT coefficient of the m-th microphone. The observed signal can be modeled as

where is the desired speech component at the reference microphone, is the late reverberation, is background noise, and represents interference from speech sources. The vector denotes the relative transfer function (RTF) for the target source arriving from direction at time frame ℓ and represented by

where is the elevation angle and is the azimuth angle.

The RTF is defined as

where is the acoustic transfer function from the target to the m-th microphone, and is the transfer function to the chosen reference microphone.

The objective is to estimate , the clean target speech at the reference microphone, by suppressing reverberation, background noise, and directional interference in the observed signal . For clarity, the frequency index k is omitted in the following sections.
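For orientation, the model described in this section can be summarized compactly. The following is a sketch in assumed notation: the symbols $\mathbf{y}$, $X$, $\mathbf{r}$, $\mathbf{v}$, $\mathbf{u}$, $\mathbf{d}$, $\theta_\ell$, $\phi_\ell$, $\psi_\ell$, and $A_m$ are chosen here for illustration and may differ from the notation used elsewhere in the paper.

```latex
\begin{align*}
\mathbf{y}(\ell,k) &= \left[ Y_1(\ell,k), \ldots, Y_M(\ell,k) \right]^T, \\
\mathbf{y}(\ell,k) &= \mathbf{d}(\theta_\ell,k)\, X(\ell,k)
                     + \mathbf{r}(\ell,k) + \mathbf{v}(\ell,k) + \mathbf{u}(\ell,k), \\
\theta_\ell &= (\phi_\ell, \psi_\ell), \\
\mathbf{d}(\theta_\ell,k) &= \left[ \frac{A_1(\theta_\ell,k)}{A_{\mathrm{ref}}(\theta_\ell,k)},
                     \ldots, \frac{A_M(\theta_\ell,k)}{A_{\mathrm{ref}}(\theta_\ell,k)} \right]^T .
\end{align*}
```

In this sketch, $X$ is the desired speech at the reference microphone, $\mathbf{r}$ the late reverberation, $\mathbf{v}$ the background noise, $\mathbf{u}$ the interfering speech, $\phi_\ell$ the elevation, and $\psi_\ell$ the azimuth. The entry of $\mathbf{d}$ at the reference microphone equals one, which is why $X$ is interpreted as the target signal at that microphone.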

3. Beamforming and Dereverberation Bilinear Framework

In this section, we extend the bilinear MPDR-WPE framework [42] by introducing two enhancements: ROI beamforming, which improves robustness to DOA errors, and LCMP beamforming, which enables directional interference suppression or multi-target support.

The ROI beamformer replaces the traditional point-source distortionless constraint with a least-distortion criterion defined over an angular sector. This formulation reduces sensitivity to steering inaccuracies caused by head movements or localization errors. Specifically, the ROI constraint is given by [29]

where denotes the ROI as illustrated in Figure 1 and

where denotes the Hermitian transpose.

Figure 1.

Illustration of the ROI.

We also incorporate an LCMP beamformer to support multiple constraints:

where contains the RTFs for two known directions. Setting preserves both sources (multi-target), while using a small value for suppresses the second source.

Since the temporal filter is sensitive to additive noise, we first apply the spatial filter to the multichannel observation :

where is the estimated desired speaker (consisting of the direct path and early reverberation) at the reference microphone, which may be slightly distorted due to the relaxed constraint of ROI beamforming, is the late reverberation component that remains after beamforming and originates from the target speaker, and is a linear combination of background noise and interference sources.

Next, a temporal filter of length L is applied to suppress the late reverberation that occurs after beamforming. We subtract a predicted reverberant component from by applying to a delayed version of :

where is a prediction delay chosen to preserve the correlation of the direct path speech signal. The vector contains the previous L beamformer outputs and is defined as

Given that is fixed, the second term in (10) is linear with respect to , or vice versa. We utilize the Kronecker product representation to estimate the desired signal:

where ⊗ represents the Kronecker product and is the column stacked observation vector of dimension :

By leveraging the relationship [46]

the spatial and temporal filters can be separated, enabling efficient adaptation in real-time scenarios, with denoting an identity matrix of appropriate dimension:

where denotes the observation vector processed by the spatial filter , and represents the corresponding beamformer output.

Similarly, (12) can be alternatively expressed as

where is the observation signal vector filtered by .
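The separation of the spatial and temporal filters can be checked numerically. The following NumPy sketch uses assumed names (`h` for the spatial filter, `g` for the temporal filter, `Y` for the delayed observation frames) and random data. It only illustrates the Kronecker identity behind the bilinear form and is not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
M, L = 6, 4  # microphones and temporal taps (illustrative sizes)

def crandn(*shape):
    """Complex Gaussian test data."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

h = crandn(M)              # spatial filter (assumed name)
g = crandn(L)              # temporal filter (assumed name)
Y = crandn(L, M)           # row i holds the i-th delayed observation vector
y_stacked = Y.reshape(-1)  # stacked observation vector of dimension M*L

# Two-stage view: beamform each delayed frame, then filter in time.
z = Y @ h.conj()           # z[i] = h^H y_i
two_stage = np.vdot(g, z)  # g^H z

# Joint view: a single filter of length M*L built with the Kronecker product.
joint = np.vdot(np.kron(g, h), y_stacked)

# Separated views: kron(g, h) = kron(I_L, h) g = kron(g, I_M) h.
A_h = np.kron(np.eye(L), h[:, None])  # shape (M*L, L)
A_g = np.kron(g[:, None], np.eye(M))  # shape (M*L, M)
via_temporal = np.vdot(g, A_h.conj().T @ y_stacked)
via_spatial = np.vdot(h, A_g.conj().T @ y_stacked)
```

All four quantities agree, so with one filter held fixed the output is linear in the other. This is what allows the two filters to be adapted in alternation with small per-filter problems instead of one problem of size M*L.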

4. Real-Time RLS-Based Bilinear Framework

In this section, we derive a real-time source separation and dereverberation bilinear framework using the RLS algorithm [40] with the time-varying spatial filter and the time-varying temporal filter . The cost function for the spatial beamformer is defined as follows:

where is the forgetting factor and

The spatial beamformer is obtained by minimizing the cost function under various constraints. Although the least-distortion beamformer is mathematically rigorous, it is less suitable for real-time operation. Therefore, we adopt a simpler approach based on the dynamic controlled distortion constraint [26]:

whose solution is the minimum power ROI beamformer:

Alternatively, the beamformer can be constrained with multiple linear constraints as follows:

yielding the LCMP beamformer:

where

represents the covariance matrix of .
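As an illustration of the multi-constraint design, the sketch below implements the textbook LCMP solution, which minimizes the output power subject to linear constraints on the filter. The function name `lcmp_weights`, the random covariance, and the random constraint vectors are assumptions made for this example. In the framework described here the covariance would instead be tracked recursively with a forgetting factor.

```python
import numpy as np

def lcmp_weights(R, C, f):
    """Minimize h^H R h subject to C^H h = f (textbook LCMP solution)."""
    Rinv_C = np.linalg.solve(R, C)  # R^{-1} C without forming the inverse
    return Rinv_C @ np.linalg.solve(C.conj().T @ Rinv_C, f)

rng = np.random.default_rng(1)
M = 6
# Hypothetical Hermitian positive-definite covariance and two RTF-like vectors.
A = rng.standard_normal((M, 3 * M)) + 1j * rng.standard_normal((M, 3 * M))
R = A @ A.conj().T / (3 * M) + 1e-3 * np.eye(M)
C = rng.standard_normal((M, 2)) + 1j * rng.standard_normal((M, 2))

h_multi = lcmp_weights(R, C, np.array([1.0, 1.0]))    # preserve both directions
h_null = lcmp_weights(R, C, np.array([1.0, 1e-3]))    # attenuate the second one
h_mpdr = lcmp_weights(R, C[:, :1], np.array([1.0]))   # one constraint: MPDR

def output_power(h):
    """Output power h^H R h of a filter h."""
    return np.real(np.vdot(h, R @ h))
```

With a single constraint the solution reduces to the MPDR beamformer. Because every additional constraint shrinks the feasible set, the minimum output power of the multi-constraint filter cannot be lower than that of MPDR, which is consistent with the modest loss in noise reduction reported for LCMP.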

The temporal filter can be iteratively optimized by defining the following cost function:

where

and is the variance of the a priori estimate of the desired signal. This cost function assigns more weight to weaker parts of the signal, such as late reverberation, and less to dominant components like the direct path, making it well-suited for dereverberation.

The solution for the temporal filter can be obtained by minimizing the cost function :

where denotes the weighted covariance matrix of and represents the weighted correlation vector between and :

In practice, due to the dynamic environment and localization errors, regularization of is needed. This can be achieved as follows:

where is a diagonal matrix with small values with the same dimension as the sensors.

Computational complexity can be reduced using Woodbury’s identity [40]. The updates of , , and are as follows:

where

are the Kalman gains.

The temporal filter can be iteratively updated by the derived Kalman gain:

As shown in [37], this approach significantly reduces the computational complexity from to by combining two sub-optimal solutions via the Kronecker product.
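The role of Woodbury's identity in the recursive updates can be sketched as follows. This is the standard, unweighted RLS inverse-covariance update, shown under the assumption that the covariance evolves as `lam * R + y y^H`. The function name and variables are illustrative, and the weighted temporal update is not reproduced.

```python
import numpy as np

def rls_inverse_update(P, y, lam):
    """Return the inverse of lam * R + y y^H and the gain, given P = R^{-1}."""
    yH_P = y.conj() @ P                 # row vector y^H P
    k = (P @ y) / (lam + yH_P @ y)      # gain vector
    P_new = (P - np.outer(k, yH_P)) / lam
    return P_new, k

rng = np.random.default_rng(2)
M, lam = 6, 0.98                        # illustrative size and forgetting factor
R = 1e-2 * np.eye(M, dtype=complex)     # initial covariance
P = np.linalg.inv(R)                    # initial inverse covariance

for _ in range(50):
    y = rng.standard_normal(M) + 1j * rng.standard_normal(M)
    R = lam * R + np.outer(y, y.conj())  # direct recursion, for checking only
    P, k = rls_inverse_update(P, y, lam)
```

Each update costs on the order of M squared operations, whereas inverting the covariance matrix from scratch at every frame would cost on the order of M cubed.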

5. Experimental Results

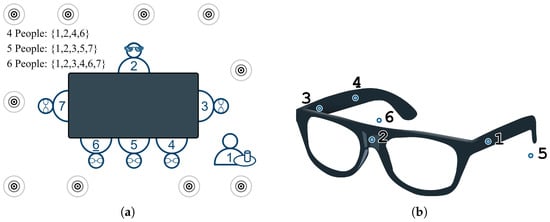

In this section, we present experimental results using the first dataset from the SPEAR Challenge. This dataset provides real six-channel recordings captured with a head-worn microphone array in a highly dynamic, noisy, and reverberant environment (Figure 2). The recording room measures and has a reverberation time of . The dataset includes speech from both a nearby (worn) speaker and distant speakers, alongside continuous head movements by the microphone wearer. Additionally, ten loudspeakers placed at different heights throughout the room emit uncorrelated, restaurant-like background noise. These combined factors—and the use of real, non-simulated recordings—make the task particularly challenging, resulting in relatively large standard deviations across evaluation metrics that reflect the inherent variability of the environment.

Figure 2.

(a) SPEAR Challenge scene setup showing speaker positions around the table. (b) Head-mounted microphone array worn by person ID2, with six microphones labeled 1–6 [18,43].

To accurately capture the array’s spatial characteristics, the dataset includes 1020 sphere-sampled points with corresponding impulse responses (IRs) measured on a mannequin in an anechoic chamber. We employed the Haversine formula [47] to identify the nearest-neighbor IR for each sampled direction, then derived the RTF with respect to sensor 2, which served as the reference sensor. Inevitably, steering errors emerged due to the finite sampled sphere points, head movements, and reverberation.
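The nearest-neighbor lookup can be sketched with the Haversine formula, treating elevation as latitude and azimuth as longitude on the unit sphere. The three-point grid below is hypothetical and stands in for the measured sphere-sampled directions. The function names are chosen for this example.

```python
import math

def haversine_angle(elev1, az1, elev2, az2):
    """Great-circle angle (radians) between two directions on the unit sphere.

    Elevation plays the role of latitude and azimuth that of longitude.
    """
    d_elev = elev2 - elev1
    d_az = az2 - az1
    a = (math.sin(d_elev / 2) ** 2
         + math.cos(elev1) * math.cos(elev2) * math.sin(d_az / 2) ** 2)
    return 2 * math.asin(min(1.0, math.sqrt(a)))

def nearest_direction(target, grid):
    """Index of the grid direction closest to target; all angles in radians."""
    return min(range(len(grid)),
               key=lambda i: haversine_angle(*target, *grid[i]))

# Hypothetical grid of (elevation, azimuth) pairs, given in degrees.
grid_deg = [(0, 0), (0, 12), (30, 10)]
grid = [(math.radians(e), math.radians(a)) for e, a in grid_deg]
target = (math.radians(0), math.radians(10))
idx = nearest_direction(target, grid)
```

The residual angle between the requested direction and the selected grid point is one source of the steering errors mentioned above.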

Each audio file is one minute long and was originally sampled at 48 kHz. For computational efficiency, we downsampled the recordings to 16 kHz, processed them using 1024-sample STFT frames (with overlap and a Hamming window), and applied a prediction delay of . The WPE filter lengths were set to 14, 12, and 10 taps for the 0–1 kHz, 1–3 kHz, and 3–8 kHz bands, respectively. The spatial filter, comprising microphones, was initially configured as a delay-and-sum beamformer, and the inverse covariance matrices were set to and . We used a forgetting factor and a regularization factor of , which together ensured convergence within a few hundred frames while balancing noise suppression and target preservation. Experiments were implemented in MATLAB (R2023b; MathWorks, Natick, MA, USA) and executed on a laptop with an Intel i7-1360P CPU (Intel Corporation, Santa Clara, CA, USA) and 16 GB RAM, demonstrating its feasibility for deployment in AR applications with comparable computational resources.

The source separation task was carried out by designating either Person 6 (ID6) or Person 4 (ID4) as the target speaker in separate experiments. Person 2 (the microphone wearer) and either Person 4 or Person 6, depending on the setup, were treated as interfering speakers, with background noise serving as additional interference. Since clean reference signals were unavailable, a pseudo ground truth was generated using an MVDR-VAD beamformer that updates its noise covariance matrix only during intervals when the target speaker is silent, as described in [48], and nulls the signal during these periods. This approach is practical for deriving stable references; however, since MVDR-VAD is itself a beamforming method, it may introduce a bias in favor of beamforming-style algorithms and underestimate the performance of fundamentally different approaches such as deep learning models. All algorithm outputs were evaluated against this pseudo ground truth, and the performance metrics reported in the tables were averaged across five distinct one-minute recordings.

For quantitative evaluation, we employed several objective measures. Perceptual evaluation of speech quality (PESQ) [49] and short-time objective intelligibility (STOI) [50] assess overall speech quality and intelligibility, respectively. In addition, we computed the scale-invariant signal-to-distortion ratio (SI-SDR) [51], the segmental signal-to-noise ratio (SNRseg), and the frequency-weighted segmental SNR (fwSegSNR) [52] to quantify desired signal preservation and noise suppression. We also measured the average maximum correlation (AMC) [42], which evaluates the correlation of each speaker in the output relative to the MVDR-VAD reference. For an undesired speaker, a lower AMC indicates stronger suppression; for the target speaker, a higher AMC indicates better preservation. The results are reported as performance differences () between the proposed algorithm and the reference microphone, with both compared to the pseudo ground truth.
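To make the difference-based reporting concrete, the sketch below computes SI-SDR in its commonly used form and the improvement of a processed signal over the unprocessed reference microphone. The function names and the toy signals are assumptions for illustration, and the evaluation code used in the experiments may differ in detail (for example, in mean removal or framing).

```python
import numpy as np

def si_sdr(estimate, reference):
    """Scale-invariant SDR in dB for 1-D real signals (no mean removal)."""
    estimate = np.asarray(estimate, dtype=float)
    reference = np.asarray(reference, dtype=float)
    alpha = np.dot(estimate, reference) / np.dot(reference, reference)
    target = alpha * reference    # part of the estimate explained by the reference
    residual = estimate - target  # everything else counts as distortion
    return 10 * np.log10(np.dot(target, target) / np.dot(residual, residual))

def delta_si_sdr(processed, unprocessed, pseudo_truth):
    """SI-SDR improvement of the processed signal over the reference microphone."""
    return si_sdr(processed, pseudo_truth) - si_sdr(unprocessed, pseudo_truth)

# Toy signals: the noise is orthogonal to the reference by construction.
ref = np.array([1.0, 1.0, 1.0, 1.0])
noise = np.array([1.0, -1.0, 1.0, -1.0])
unprocessed = ref + noise
processed = ref + 0.1 * noise
gain_db = delta_si_sdr(processed, unprocessed, ref)
```

In this toy case the noise amplitude drops by a factor of ten, so the improvement is 20 dB. Rescaling either signal leaves the metric unchanged, which is the property that makes SI-SDR insensitive to the overall gain of a beamformer.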

In the following subsections, assuming an elevation of 0 degrees, we present a comprehensive parameter analysis, beginning with the forgetting factor and regularization under a point-source model, and proceeding through ROI-based beamforming, azimuthal error sensitivity, multi-constraint beamforming, and concluding with a comparison to DL methods.

5.1. Forgetting Factor Analysis

The forgetting factor in the RLS-based MPDR-WPE algorithm controls the balance between fast adaptation and stable convergence. Lower values of allow quick tracking of changes but may lead to instability. Higher values improve stability but slow down the adaptation process. We tested three values: , under a point-source setup, and compared them with two baseline methods using and : MPDR and wMPDR-WPE. Table 1 shows that the MPDR-WPE algorithm with offers the best balance across all metrics. A lower value () caused the algorithm to diverge, while a higher value () resulted in slower adaptation and degraded performance.
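The trade-off between tracking speed and stability can be made concrete with the usual rule of thumb that an exponentially weighted estimate has an effective memory of about 1 / (1 - lambda) frames. The sketch below uses illustrative values only. They are not the values tested in this study, and the function names are chosen for this example.

```python
import math

def effective_memory_frames(lam):
    """Approximate number of past frames that dominate an RLS estimate."""
    return 1.0 / (1.0 - lam)

def frames_to_decay(lam, level=0.01):
    """Frames until the weight lam**n of an old observation drops below level."""
    return math.ceil(math.log(level) / math.log(lam))

for lam in (0.9, 0.99, 0.999):  # illustrative values only
    print(lam, round(effective_memory_frames(lam)), frames_to_decay(lam))
```

A forgetting factor close to one averages over many frames, which stabilizes the covariance estimates but delays the response to head movements. A smaller value reacts quickly but bases each estimate on very few frames, which can make the inverse covariance ill-conditioned.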

Table 1.

Comparison of forgetting factor values () for Person 4 () and Person 6 (). The algorithm does not converge for . Bold values indicate the best performance for each metric and each desired speaker.

Compared to the baselines, MPDR performs worse overall, as its ability to adapt is limited and it does not explicitly address reverberation. wMPDR-WPE shows strong intelligibility and target preservation but is less effective at suppressing interfering sources. As discussed in [42], this highlights a common trade-off: wMPDR-WPE prioritizes target preservation and background noise reduction, while MPDR-WPE emphasizes directional interference suppression and source separation. Despite introducing some distortion to the desired signal, MPDR-WPE provides better directional noise suppression, making it more suitable for source separation tasks in our setting.

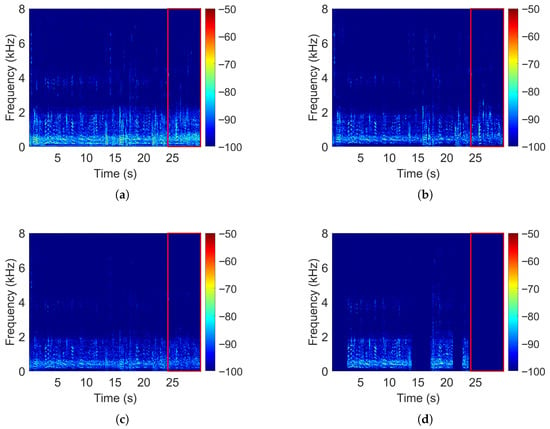

Figure 3 illustrates these differences. The proposed MPDR-WPE algorithm shows the strongest suppression of Person 2’s speech (red box), with better directional interference attenuation than both MPDR and wMPDR-WPE.

Figure 3.

Spectrogram comparisons for (a) MPDR, (b) wMPDR-WPE, (c) MPDR-WPE, and (d) MVDR-VAD (ground truth). The red box highlights the interfering speech signal from Person 2 (microphone wearer).

In summary, a forgetting factor of provides the most effective balance between adaptation speed and convergence stability for MPDR-WPE. Lower values lead to instability, while higher values slow adaptation and reduce performance. Compared to MPDR and wMPDR-WPE, the proposed method achieves superior source separation while maintaining strong intelligibility and noise suppression. These results make MPDR-WPE with a compelling choice for real-time speech enhancement in dynamic environments.

5.2. Regularization

Next, we investigate the effect of different regularization values on the point-source MPDR-WPE framework [42]. Regularizing the time-varying covariance estimate helps maintain robust filtering, particularly due to the MPDR beamformer’s vulnerability to steering errors in dynamic environments. We tested , representing no regularization, moderate regularization, and stronger regularization, respectively.

Table 2 shows that can yield strong performance but risks damaging the desired speaker. A larger (e.g., ) broadens the beam, effectively preserving the target, but reduces SNR and quality gains. Overall, provides both robustness and high performance. We therefore adopt in subsequent experiments.
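The effect of regularization can be illustrated with classical diagonal loading of an MPDR beamformer. This is a sketch under the assumption that regularization adds a scaled identity to the covariance matrix. The function name, the random covariance, and the loading values are illustrative and do not correspond to the values in Table 2.

```python
import numpy as np

def mpdr_weights(R, d, delta=0.0):
    """MPDR weights with diagonal loading: (R + delta I)^{-1} d, normalized."""
    loaded = R + delta * np.eye(len(d))
    Rl_inv_d = np.linalg.solve(loaded, d)
    return Rl_inv_d / np.vdot(d, Rl_inv_d)  # enforces h^H d = 1

rng = np.random.default_rng(3)
M = 6
# Hypothetical Hermitian positive-definite covariance and steering vector.
A = rng.standard_normal((M, 2 * M)) + 1j * rng.standard_normal((M, 2 * M))
R = A @ A.conj().T / (2 * M)
d = np.exp(1j * rng.uniform(0, 2 * np.pi, M))

deltas = [0.0, 1e-2, 1.0]               # illustrative loading levels
weights = [mpdr_weights(R, d, delta) for delta in deltas]
norms = [float(np.real(np.vdot(h, h))) for h in weights]
```

The squared weight norm does not increase with the loading level. A smaller norm makes the filter less sensitive to steering errors, at the price of weaker interference suppression, which matches the trade-off between target preservation and SNR gain described above.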

Table 2.

Comparison of regularization values () for both ID4 and ID6 as the desired source. Bold values indicate the best performance for each metric and each desired speaker.

5.3. Region of Interest Impact

To demonstrate the effect of an ROI-based beamformer, we compared it against a point-source design assuming a precisely known DOA. In the ROI-based approach, the beamformer accommodates localization uncertainty by defining a symmetric angular sector around the nominal direction, which is set to .

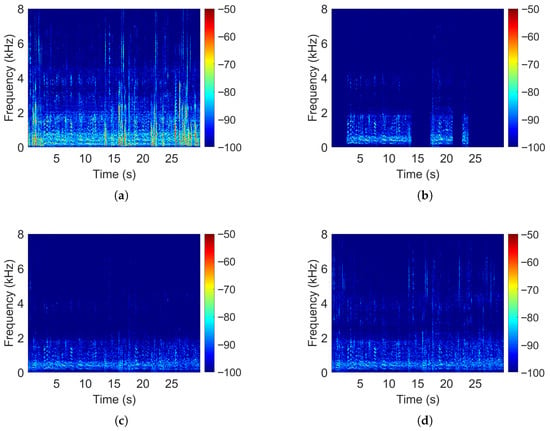

As shown in Figure 4, both beamformers produce similar outputs below about 3 kHz. Above that frequency, however, the ROI-based beamformer passes more high-frequency energy from both the desired and interfering sources. We interpret this as a form of “high-frequency regularization.” At lower frequencies, small changes in steering direction result in negligible variations in the steering vectors, which explains the minor differences between the ROI and point-source beamformers in this range. In contrast, at higher frequencies, the steering vectors vary more rapidly, so the ROI-based beamformer preserves more energy from those bands.
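The frequency dependence described above can be reproduced with a simple free-field model. The sketch below assumes a six-element uniform linear array with 3 cm spacing, which is not the head-worn array used in the experiments. It compares the steering vectors of two directions 10 degrees apart at 1 kHz and 4 kHz.

```python
import numpy as np

C_SOUND = 343.0  # speed of sound in m/s

def ula_steering(freq_hz, angle_deg, num_mics=6, spacing_m=0.03):
    """Free-field steering vector of a uniform linear array (an assumption)."""
    n = np.arange(num_mics)
    delay = n * spacing_m * np.sin(np.radians(angle_deg)) / C_SOUND
    return np.exp(-2j * np.pi * freq_hz * delay)

def similarity(freq_hz, angle_a, angle_b):
    """Normalized inner product |a^H b| / M between two steering vectors."""
    a = ula_steering(freq_hz, angle_a)
    b = ula_steering(freq_hz, angle_b)
    return abs(np.vdot(a, b)) / len(a)

sim_1k = similarity(1000.0, 0.0, 10.0)
sim_4k = similarity(4000.0, 0.0, 10.0)
```

Under these assumptions the two steering vectors are nearly identical at 1 kHz and noticeably less similar at 4 kHz. A constraint over an angular sector therefore differs little from a point constraint at low frequencies and has a growing effect as frequency increases.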

Figure 4.

Spectrogram comparisons for (a) the reference microphone, (b) MVDR-VAD (ground truth), (c) MPDR-WPE, and (d) an ROI-based MPDR-WPE.

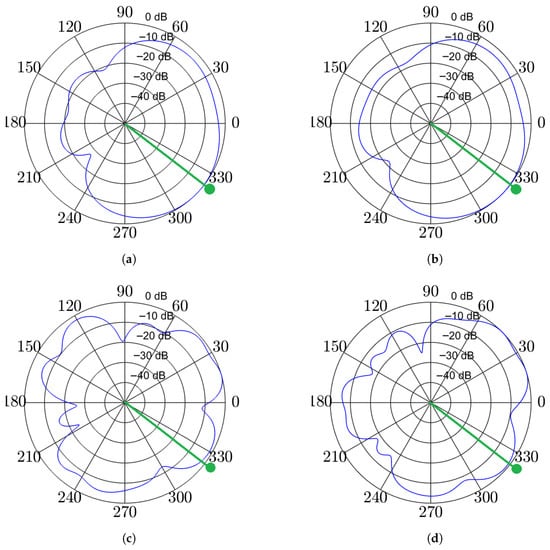

Figure 5 further supports this interpretation. At 1 kHz, both ROI-based and point-source beamformers yield similar beampatterns. In contrast, at 4 kHz, the differences are more pronounced, especially near the target direction. The ROI-based design broadens the pass region, providing more robust preservation of the target speech in dynamic environments, which inevitably involve steering errors. This advantage comes at the cost of passing more interference from nearby angles. In general, ROI-based beamforming offers a practical compromise between strong performance and resilience to moderate angular mismatches.

Figure 5.

Example beampatterns at 1 kHz (top row) and 4 kHz (bottom row). The beamformer is steered toward a speaker at approximately (green line with dot). The blue lines represent the resulting spatial beampatterns. Figures (a,c) show a point-source MPDR-WPE, while (b,d) illustrate the ROI-based MPDR-WPE.

5.4. Azimuthal Error Sensitivity Analysis

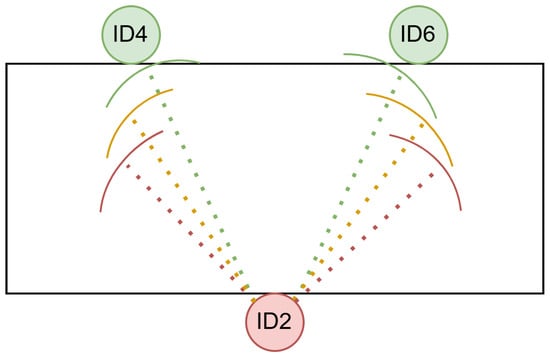

Next, we examine how azimuthal steering inaccuracies affect the two beamformer designs (point source versus ROI) for speakers and . Assuming that the given direction is precise, three levels of azimuth error were introduced synthetically (, , and ), simulating realistic mismatches between the actual speaker location and the steered beam. The ROI was defined as , as illustrated in Figure 6.

Figure 6.

Schematic illustration of the azimuth-error experiment. The microphone wearer (ID2) is located at the bottom, with two potential target speakers (ID4, ID6) in front. The dashed lines indicate possible DOAs under varying steering errors: green (no error), yellow (), and red ().

To better understand the frequency-dependent behavior of ROI beamforming under azimuthal mismatches, we divide the results into full-band and high-frequency (3–8 kHz) evaluations. This distinction is motivated by our earlier observations in Figure 4 and Figure 5, which showed that ROI-based designs diverge most significantly from point-source beamformers above approximately 3 kHz. In this regime, steering vectors change more rapidly with angle, making the ROI constraint more influential. Separating the metrics allows us to evaluate this trade-off more precisely, highlighting how the ROI beamformer improves robustness to angular error at the cost of increased high-frequency leakage.

Table 3 and Table 4 show that when there is no steering error, the point-source design generally performs best. However, as the azimuth error grows, the mismatch between the true target direction and the beam steering leads to significant performance degradation for the point-source beamformer. The ROI-based design proves more resilient to these errors—especially in SI-SDR—by admitting a spatial sector rather than a single direction. This broader pass region better preserves the desired speaker under moderate localization inaccuracies.

Table 3.

Comparison of Point and ROI configurations for full-band and high-frequency (3 kHz–8 kHz) performance across various azimuth errors for person ID4 as the desired speaker. Bold values indicate the best performance for each metric under each error condition.

Table 4.

Comparison of Point and ROI configurations for full-band and high-frequency (3 kHz–8 kHz) performance across various azimuth errors for person ID6 as the desired speaker. Bold values indicate the best performance for each metric under each error condition.

At higher frequencies, the ROI beamformer also allows more undesired signals to pass, resulting in reduced SNR gains compared to the strictly point-focused approach. Hence, while the ROI beamformer maintains robust preservation of the desired signal when azimuth errors increase, it comes with the trade-off of higher interference leakage in the upper frequency range.

5.5. Multi-Constraint Beamforming

We next explore an extension of the bilinear framework using an LCMP beamformer with multiple directional constraints. In the first setup, the beamformer is steered toward the desired speaker while strongly attenuating the undesired distant speaker by applying a constraint in that direction. Table 5 compares this LCMP-WPE configuration with the MPDR-WPE framework and a standard LCMP beamformer.

Table 5.

Performance comparison of LCMP, MPDR-WPE, and LCMP-WPE in a single-target scenario for speakers ID4 and ID6. LCMP and LCMP-WPE apply a directional constraint toward the undesired speaker. Bold values indicate the best performance for each metric and each speaker.

Table 5 shows that LCMP-WPE improves directional interference suppression compared to MPDR-WPE, as indicated by the AMC scores. It also improves signal quality. However, its intelligibility and noise suppression are slightly lower than those of MPDR-WPE, reflecting the trade-off introduced by the additional constraint. Compared to a standalone LCMP beamformer, the LCMP-WPE framework achieves substantially better results.

We also evaluate LCMP-WPE in a dual-target setup that preserves both ID4 and ID6. In this experiment only, the pseudo ground truth is constructed by combining the individual MVDR-VAD outputs for each desired speaker. The results are shown in Table 6.

Table 6.

Performance of LCMP and LCMP-WPE in a dual-target configuration, preserving both ID4 and ID6. Bold values indicate the best performance for each metric.

As shown in Table 6, LCMP-WPE can preserve multiple speakers while suppressing interference and reverberation. It consistently outperforms LCMP in all metrics. Although the performance per speaker is lower than that of MPDR-WPE in a single-target setup, LCMP-WPE is more suitable when multiple sources must be enhanced simultaneously. For single-speaker scenarios, MPDR-WPE remains the preferred choice.

5.6. Comparison with Deep Learning Methods

The proposed bilinear framework and DL approaches, such as hybrid subband-fullband gated convolutional recurrent networks, represent two fundamentally different paradigms for multichannel speech enhancement. DL methods have demonstrated strong performance in benchmark settings, often surpassing traditional techniques in metrics like PESQ and SI-SDR when sufficient in-domain training data and computational resources are available [18,53]. However, they also pose practical limitations, particularly for real-time deployment in wearable AR systems.

From a generalization perspective, DL models typically exhibit sensitivity to mismatches between training and test conditions, including changes in speaker characteristics or room acoustics. This vulnerability arises from their reliance on supervised learning over finite datasets, which may encode bias toward the training domain [54]. In contrast, our bilinear framework requires minimal prior training and adapts online to dynamic acoustic environments, including head rotations and moving speakers.

In terms of computational demands, DL systems often involve millions of parameters and billions of multiply–accumulate operations per second [55], resulting in high energy consumption and considerable latency. This hinders real-time execution on battery-powered AR devices. Our framework, on the other hand, operates with minimal memory and processing overhead, introducing a theoretical delay of only two frames, making it highly suitable for low-latency applications.

Another key distinction lies in interpretability and control. DL models function as black boxes and may hallucinate non-existent speech components or introduce perceptual artifacts under unseen conditions [56]. In contrast, our bilinear framework is based on well-established signal processing principles, offering predictable behavior and explicit control over spatial filtering and dereverberation. While DL may excel in perceptual metrics under favorable conditions, our method offers a transparent, adaptive, and computationally efficient alternative, providing faithful signal reconstruction that aligns well with the real-time requirements of wearable AR audio systems.

6. Conclusions

We have presented a comprehensive evaluation of a bilinear framework for real-time source separation and dereverberation in wearable AR systems, using the SPEAR dataset. We analyzed the influence of key parameters, including the forgetting factor and regularization strength, on the algorithm’s robustness in dynamic acoustic environments. To improve resilience against steering inaccuracies caused by localization uncertainty and head movements, the framework was extended with ROI beamforming, replacing point-source steering with an angular sector constraint. Experimental results demonstrate that ROI-based steering enhances preservation of the desired source under mismatches, particularly at high frequencies, though at the cost of increased off-axis leakage. We further introduced an LCMP-based extension that provides enhanced spatial control by enabling the enforcement of multiple directional constraints. This makes LCMP particularly effective when multiple DOA priors are available, allowing the beamformer to simultaneously preserve or suppress signals from several known directions. While LCMP improves source separation under these conditions, it may introduce a slight compromise in noise suppression.

Compared to deep learning-based approaches, the proposed bilinear framework offers strong real-time adaptability, robustness to unseen environments, and low computational complexity, making it highly suitable for deployment on wearable AR platforms. Future work will explore adaptive ROI strategies that adjust the angular sector in response to localization confidence and investigate the integration of data-driven beamforming with WPE to further enhance performance in challenging acoustic scenes. Another direction is to incorporate perceptual cues beyond spatial information. In particular, pitch-informed beamforming and loudness-based source weighting could improve robustness when spatial cues are degraded by reverberation or head motion.

Author Contributions

Conceptualization, methodology, validation, investigation, and writing—review and editing, A.N., G.I. and I.C.; software, formal analysis, and writing—original draft preparation, A.N.; resources and funding acquisition, I.C.; supervision, G.I. and I.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Israel Science Foundation (grant no. 1449/23) and the Pazy Research Foundation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: SPEAR Challenge dataset—https://imperialcollegelondon.github.io/spear-challenge/ (accessed on 21 July 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AMC | Average Maximum Correlation |
| AR | Augmented Reality |
| DL | Deep Learning |
| DOA | Direction of Arrival |
| fwSegSNR | Frequency-Weighted Segmental SNR |
| IR | Impulse Response |
| LCMP | Linearly Constrained Minimum Power |
| MCLP | Multichannel Linear Prediction |
| MPDR | Minimum Power Distortionless Response |
| MVDR | Minimum Variance Distortionless Response |
| PESQ | Perceptual Evaluation of Speech Quality |
| RLS | Recursive Least Squares |
| ROI | Region of Interest |
| RTF | Relative Transfer Function |
| SI-SDR | Scale-Invariant Signal-to-Distortion Ratio |
| SNRseg | Segmental Signal-to-Noise Ratio |
| SPEAR | Speech Enhancement for Augmented Reality |
| STFT | Short-Time Fourier Transform |
| STOI | Short-Time Objective Intelligibility |
| VAD | Voice Activity Detector |
| wMPDR | Weighted Minimum Power Distortionless Response |
| WPE | Weighted Prediction Error |

References

- Schmid, D.; Enzner, G.; Malik, S.; Kolossa, D.; Martin, R. Variational Bayesian Inference for Multichannel Dereverberation and Noise Reduction. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1320–1335.

- Nakatani, T.; Yoshioka, T.; Kinoshita, K.; Miyoshi, M.; Juang, B.-H. Speech Dereverberation Based on Variance-Normalized Delayed Linear Prediction. IEEE Trans. Audio Speech Lang. Process. 2010, 18, 1717–1731.

- Löllmann, H.W.; Brendel, A.; Kellermann, W. Generalized coherence-based signal enhancement. In Proceedings of the 45th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 201–205.

- Ikeshita, R.; Kinoshita, K.; Kamo, N.; Nakatani, T. Online Speech Dereverberation Using Mixture of Multichannel Linear Prediction Models. IEEE Signal Process. Lett. 2021, 28, 1580–1584.

- Nakatani, T.; Ikeshita, R.; Kinoshita, K.; Sawada, H.; Araki, S. Blind and Neural Network-Guided Convolutional Beamformer for Joint Denoising, Dereverberation, and Source Separation. In Proceedings of the 46th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6129–6133.

- Greengard, S. Virtual Reality; MIT Press: Cambridge, MA, USA, 2019.

- Doclo, S.; Gannot, S.; Moonen, M.; Spriet, A. Acoustic Beamforming for Hearing Aid Applications. In Handbook on Array Processing and Sensor Networks; Wiley Online Library: Hoboken, NJ, USA, 2010; pp. 269–302.

- Westhausen, N.L.; Kayser, H.; Jansen, T.; Meyer, B.T. Real-Time Multichannel Deep Speech Enhancement in Hearing Aids: Comparing Monaural and Binaural Processing in Complex Acoustic Scenarios. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 4596–4606.

- Xiao, T.; Doclo, S. Effect of Target Signals and Delays on Spatially Selective Active Noise Control for Open-Fitting Hearables. In Proceedings of the 49th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1056–1060.

- Dalga, D.; Doclo, S. Combined Feedforward-Feedback Noise Reduction Schemes for Open-Fitting Hearing Aids. In Proceedings of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 16–19 October 2011; pp. 245–248.

- Cox, T.J.; Barker, J.; Bailey, W.; Graetzer, S.; Akeroyd, M.A.; Culling, J.F.; Naylor, G. Overview of the 2023 ICASSP SP Clarity Challenge: Speech Enhancement for Hearing Aids. In Proceedings of the 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–9 June 2023; pp. 1–2.

- Beit-On, H.; Lugasi, M.; Madmoni, L.; Menon, A.; Kumar, A.; Donley, J.; Tourbabin, V.; Rafaely, B. Audio Signal Processing for Telepresence Based on Wearable Array in Noisy and Dynamic Scenes. In Proceedings of the 47th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 8797–8801.

- Hafezi, S.; Moore, A.H.; Guiraud, P.; Naylor, P.A.; Donley, J.; Tourbabin, V.; Lunner, T. Subspace Hybrid Beamforming for Head-Worn Microphone Arrays. In Proceedings of the 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–9 June 2023; pp. 1–5.

- Wang, D.; Chen, J. Supervised Speech Separation Based on Deep Learning: An Overview. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1702–1726.

- Luo, Y.; Mesgarani, N. Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266.

- Kolbæk, M.; Yu, D.; Tan, Z.-H.; Jensen, J. Multitalker Speech Separation with Utterance-Level Permutation Invariant Training of Deep Recurrent Neural Networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1901–1913.

- Subakan, C.; Ravanelli, M.; Cornell, S.; Bronzi, M.; Zhong, J. Attention Is All You Need in Speech Separation. IEEE Trans. Audio Speech Lang. Process. 2021, 29, 1703–1712.

- Guiraud, P.; Hafezi, S.; Naylor, P.A.; Moore, A.H.; Donley, J.; Tourbabin, V.; Lunner, T. An Introduction to the Speech Enhancement for Augmented Reality (SPEAR) Challenge. In Proceedings of the 17th International Workshop Acoustic Signal Enhancement (IWAENC), Bamberg, Germany, 5–8 September 2022; pp. 1–5.

- Erdogan, H.; Hershey, J.R.; Watanabe, S.; Le Roux, J. Improved MVDR Beamforming Using Single-Channel Mask Prediction Networks. In Proceedings of the Interspeech, San Francisco, CA, USA, 8–12 September 2016; pp. 1981–1985.

- Heymann, J.; Drude, L.; Haeb-Umbach, R. Neural Network Based Spectral Mask Estimation for Acoustic Beamforming. In Proceedings of the 41st IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 196–200.

- Ochiai, T.; Delcroix, M.; Ikeshita, R.; Kinoshita, K.; Nakatani, T.; Araki, S. Beam-TasNet: Time-Domain Audio Separation Network Meets Frequency-Domain Beamformer. In Proceedings of the 45th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6384–6388.

- Van Trees, H.L. Optimum Array Processing: Part IV of Detection, Estimation, and Modulation Theory; John Wiley & Sons: New York, NY, USA, 2002.

- Van Veen, B.D.; Buckley, K.M. Beamforming: A Versatile Approach to Spatial Filtering. IEEE ASSP Mag. 1988, 5, 4–24.

- Capon, J. High-Resolution Frequency-Wavenumber Spectrum Analysis. Proc. IEEE 1969, 57, 1408–1418.

- Ehrenberg, L.; Gannot, S.; Leshem, A.; Zehavi, E. Sensitivity Analysis of MVDR and MPDR Beamformers. In Proceedings of the 26th IEEE Convention of Electrical and Electronics Engineers in Israel, Eilat, Israel, 17–20 November 2010; pp. 416–420.

- Itzhak, G.; Cohen, I. Robust Beamforming for Multispeaker Audio Conferencing Under DOA Uncertainty. IEEE/ACM Trans. Audio Speech Lang. Process. 2025, 33, 139–151.

- Itzhak, G.; Cohen, I. Region-of-Interest Oriented Constant-Beamwidth Beamforming with Rectangular Arrays. In Proceedings of the 2023 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 22–25 October 2023; pp. 1–5.

- Konforti, Y.; Cohen, I.; Berdugo, B. Array Geometry Optimization for Region-of-Interest Broadband Beamforming. In Proceedings of the 17th International Workshop Acoustic Signal Enhancement (IWAENC), Bamberg, Germany, 5–8 September 2022; pp. 1–5.

- Itzhak, G.; Cohen, I. STFT-Domain Least-Distortion Region-of-Interest Beamforming. IEEE Trans. Audio Speech Lang. Process. 2025, 33, 2803–2816.

- Frank, A.; Cohen, I. Least-Distortion Maximum Gain Beamformer for Time-Domain Region-of-Interest Beamforming. IEEE Trans. Audio Speech Lang. Process. 2025, 33, 2286–2301.

- Braun, S.; Habets, E.A.P. Linear prediction-based online dereverberation and noise reduction using alternating Kalman filters. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1119–1129.

- Yoshioka, T.; Nakatani, T.; Miyoshi, M. Integrated speech enhancement method using noise suppression and dereverberation. IEEE Trans. Audio Speech Lang. Process. 2009, 17, 231–246.

- Nakatani, T.; Yoshioka, T.; Kinoshita, K.; Miyoshi, M.; Juang, B.-H. Blind Speech Dereverberation with Multi-Channel Linear Prediction Based on Short Time Fourier Transform Representation. In Proceedings of the 33rd IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Las Vegas, NV, USA, 30 March–4 April 2008; pp. 85–88.

- Dietzen, T.; Spriet, A.; Tirry, W.; Doclo, S.; Moonen, M.; van Waterschoot, T. Comparative analysis of generalized sidelobe cancellation and multi-channel linear prediction for speech dereverberation and noise reduction. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 27, 544–558.

- Boeddeker, C.; Nakatani, T.; Kinoshita, K.; Haeb-Umbach, R. Jointly optimal dereverberation and beamforming. In Proceedings of the 45th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 216–220.

- Nakatani, T.; Kinoshita, K. A unified convolutional beamformer for simultaneous denoising and dereverberation. IEEE Signal Process. Lett. 2019, 26, 903–907.

- Yang, W.; Huang, G.; Brendel, A.; Chen, J.; Benesty, J.; Kellermann, W.; Cohen, I. A bilinear framework for adaptive speech dereverberation combining beamforming and linear prediction. In Proceedings of the 17th International Workshop Acoustic Signal Enhancement (IWAENC), Bamberg, Germany, 5–8 September 2022; pp. 1–5.

- Cohen, I.; Benesty, J.; Chen, J. Differential Kronecker Product Beamforming. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 892–902.

- Itzhak, G.; Cohen, I. Differential and Constant-Beamwidth Beamforming with Uniform Rectangular Arrays. In Proceedings of the 17th International Workshop Acoustic Signal Enhancement (IWAENC), Bamberg, Germany, 5–8 September 2022; pp. 1–5.

- Haykin, S. Adaptive Filter Theory, 4th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2002; pp. 453–455.

- Cioffi, J.; Kailath, T. Fast, Recursive-Least-Squares Transversal Filters for Adaptive Filtering. IEEE Trans. Acoust. Speech Signal Process. 1984, 32, 304–337.

- Nemirovsky, A.; Itzhak, G.; Cohen, I. A Bilinear Source Separation, Dereverberation, and Background Noise Suppression Algorithm for Augmented Reality Applications. In Proceedings of the 50th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

- Donley, J.; Tourbabin, V.; Lee, J.-S.; Broyles, M.; Jiang, H.; Shen, J.; Pantic, M.; Ithapu, V.K.; Mehra, R. EasyCom: An Augmented Reality Dataset to Support Algorithms for Easy Communication in Noisy Environments. arXiv 2021, arXiv:2107.04174.

- Frost, O.L. An Algorithm for Linearly Constrained Adaptive Array Processing. Proc. IEEE 1972, 60, 926–935.

- Griffiths, L.J.; Jim, C.W. An Alternative Approach to Linearly Constrained Adaptive Beamforming. IEEE Trans. Antennas Propag. 1982, 30, 27–34.

- Harville, D.A. Matrix Algebra from a Statistician’s Perspective; Taylor & Francis: Boca Raton, FL, USA, 1998.

- Robusto, C.C. The Cosine-Haversine Formula. Am. Math. Mon. 1957, 64, 38–40.

- Moore, A.H.; Hafezi, S.; Vos, R.R.; Naylor, P.A.; Brookes, M. A Compact Noise Covariance Matrix Model for MVDR Beamforming. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 2049–2061.

- Rix, A.W.; Beerends, J.G.; Hollier, M.P.; Hekstra, A.P. Perceptual Evaluation of Speech Quality (PESQ)—A New Method for Speech Quality Assessment of Telephone Networks and Codecs. In Proceedings of the 26th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Salt Lake City, UT, USA, 7–11 May 2001; Volume 2, pp. 749–752.

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. An Algorithm for Intelligibility Prediction of Time–Frequency Weighted Noisy Speech. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2125–2136.

- Le Roux, J.; Wisdom, S.; Erdogan, H.; Hershey, J.R. SDR—Half-Baked or Well Done? In Proceedings of the 44th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 626–630.

- Hu, Y.; Loizou, P.C. Evaluation of Objective Quality Measures for Speech Enhancement. IEEE Trans. Audio Speech Lang. Process. 2007, 16, 229–238.

- Hao, X.; Su, X.; Horaud, R.; Li, X. FullSubNet: A Full-Band and Sub-Band Fusion Model for Real-Time Single-Channel Speech Enhancement. In Proceedings of the 46th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6633–6637.

- Lam, M.W.Y.; Wang, J.; Su, D.; Yu, D. Mixup-Breakdown: A Consistency Training Method for Improving Generalization of Speech Separation Models. In Proceedings of the 45th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6374–6378.

- Braun, S.; Gamper, H.; Reddy, C.K.A.; Tashev, I. Towards Efficient Models for Real-Time Deep Noise Suppression. In Proceedings of the 46th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 656–660.

- Close, G.; Hain, T.; Goetze, S. Hallucination in Perceptual Metric-Driven Speech Enhancement Networks. In Proceedings of the 32nd European Signal Processing Conference (EUSIPCO), Lyon, France, 26–30 August 2024; pp. 21–25.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).