Non-Contact Screening of OSAHS Using Multi-Feature Snore Segmentation and Deep Learning

Abstract

1. Introduction

2. Dataset and Preprocessing

2.1. PSG-Audio

2.2. Preprocessing

3. Methods

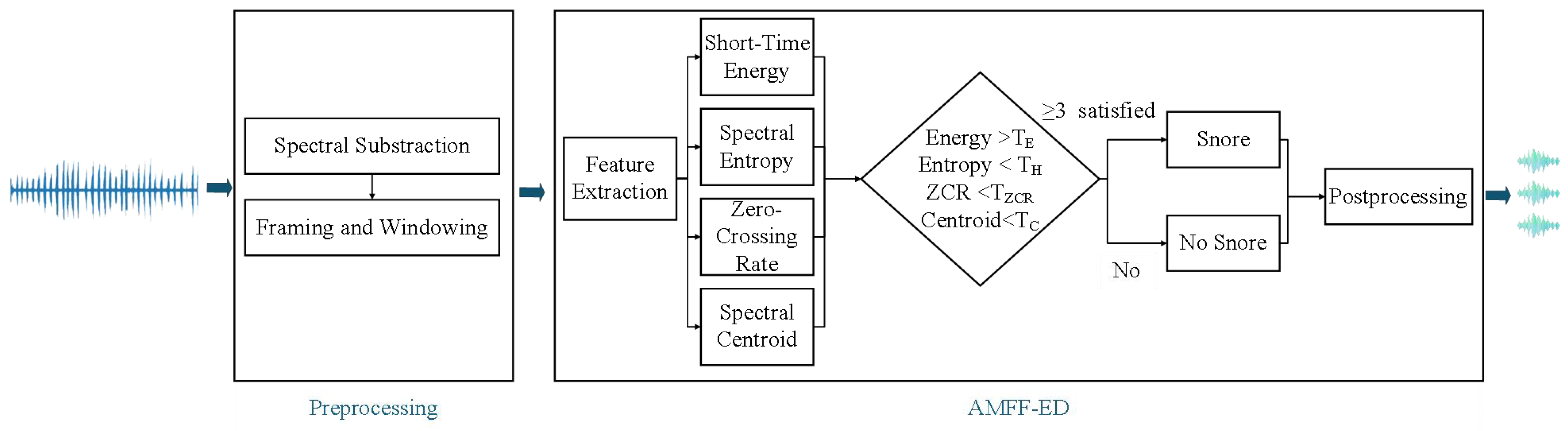

3.1. AMFF-ED

3.2. Mel Spectrogram

3.3. ERBG-Net

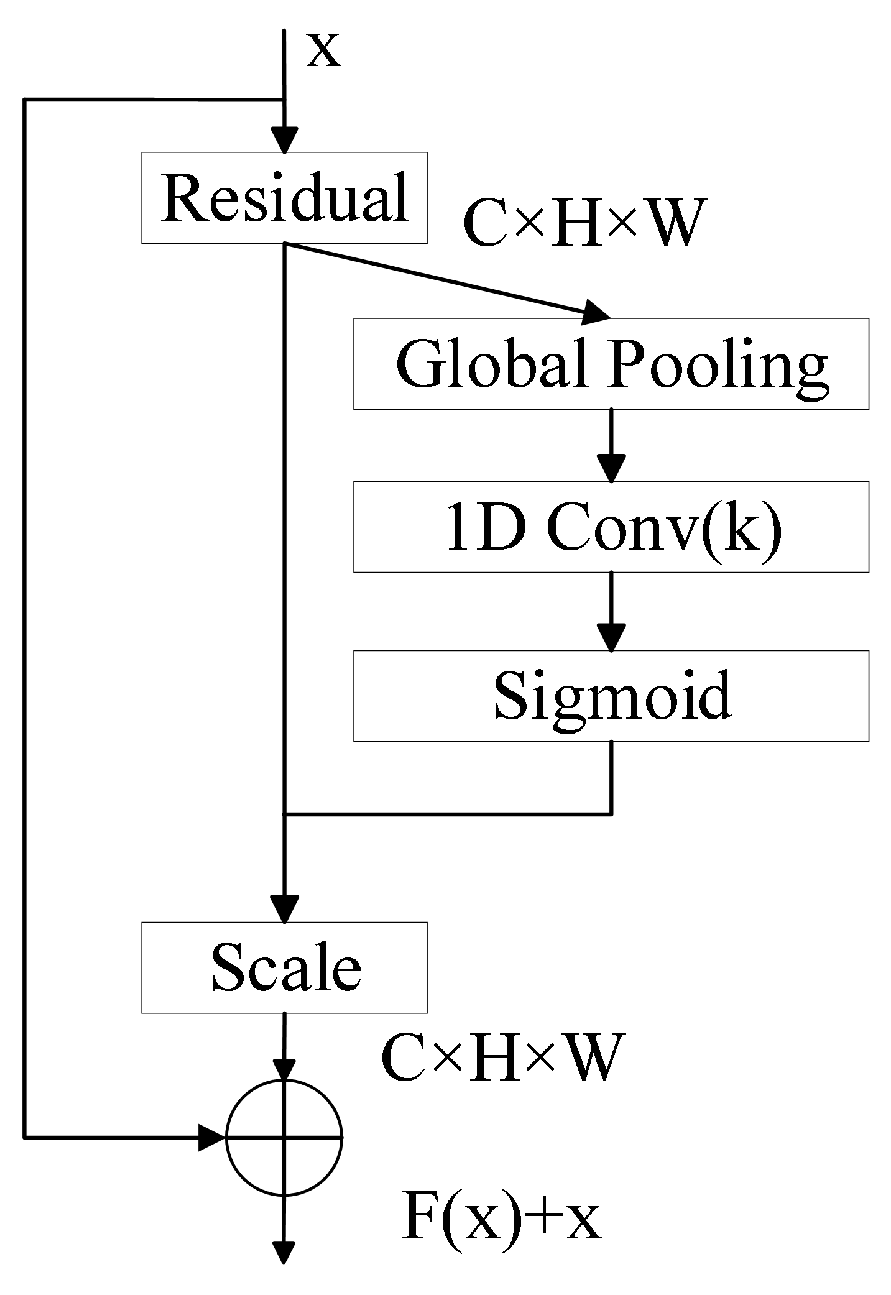

3.3.1. ResNet18 Enhanced with ECA

3.3.2. Bidirectional Gated Recurrent Unit

4. Experimental Results

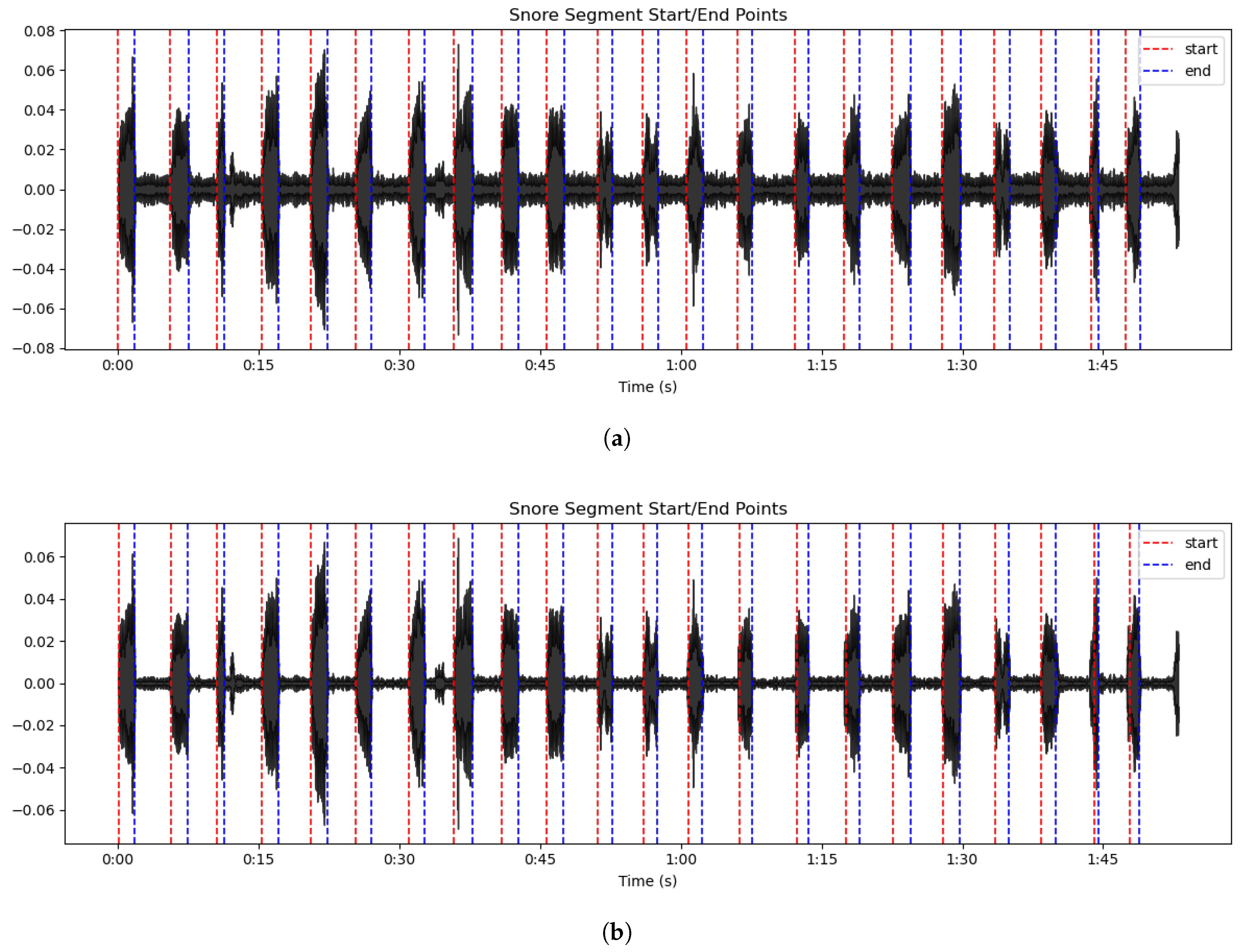

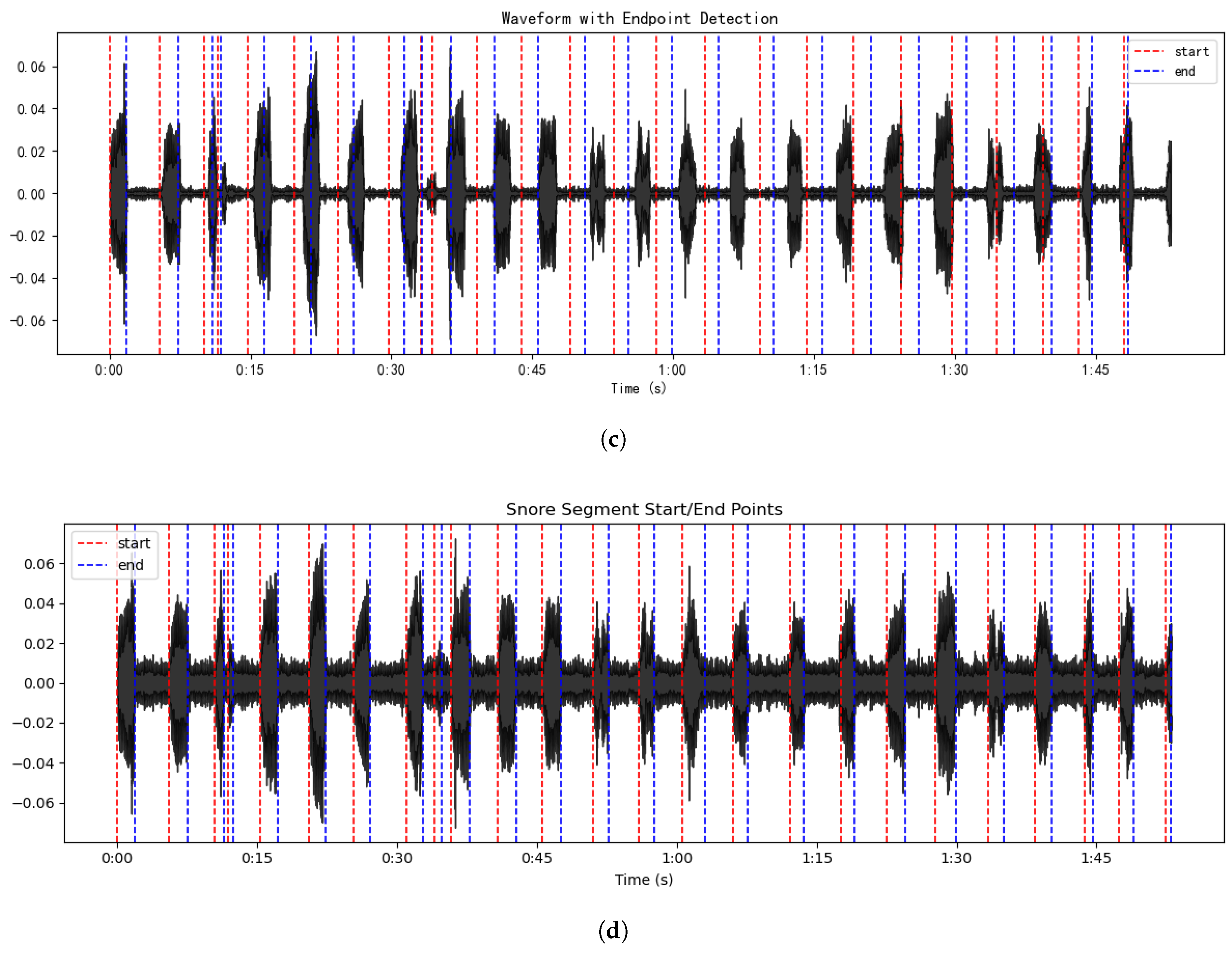

4.1. Results of Snoring Detection Experiments

- (1) AMFF-ED + original recordings;
- (2) AMFF-ED + noise-reduced recordings;
- (3) Short-time energy and ZCR + noise-reduced recordings;
- (4) AMFF-ED + original recordings with low-level conversational background noise.
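Condition (3) uses the classic dual-feature baseline of short-time energy plus zero-crossing rate (ZCR). The following is a minimal sketch of that baseline only, not of AMFF-ED; the frame length, hop size, and both thresholds (`energy_ratio`, `zcr_max`) are hypothetical values chosen for illustration and are not taken from the paper.

```python
import math


def frame_signal(samples, frame_len, hop):
    """Split a sequence into overlapping frames; a trailing partial frame is dropped."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def short_time_energy(frame):
    """Sum of squared samples in one frame."""
    return sum(s * s for s in frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def detect_endpoints(samples, frame_len=400, hop=200,
                     energy_ratio=0.1, zcr_max=0.25):
    """Return (start_sample, end_sample) segments judged active.

    A frame is active when its energy exceeds energy_ratio * max energy
    and its ZCR stays below zcr_max (both thresholds are assumptions).
    """
    frames = frame_signal(samples, frame_len, hop)
    energies = [short_time_energy(f) for f in frames]
    zcrs = [zero_crossing_rate(f) for f in frames]
    threshold = energy_ratio * max(energies)
    active = [e > threshold and z < zcr_max for e, z in zip(energies, zcrs)]

    # Merge runs of consecutive active frames into sample-index segments.
    segments, start = [], None
    for i, flag in enumerate(active):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            segments.append((start * hop, (i - 1) * hop + frame_len))
            start = None
    if start is not None:
        segments.append((start * hop, (len(active) - 1) * hop + frame_len))
    return segments


def synthetic_recording(rate=8000):
    """One second of silence, one second of a 200 Hz tone, one second of silence."""
    silence = [0.0] * rate
    tone = [math.sin(2 * math.pi * 200 * n / rate) for n in range(rate)]
    return silence + tone + silence


if __name__ == "__main__":
    print(detect_endpoints(synthetic_recording()))
```

Because the energy threshold is relative to the loudest frame, a fixed-threshold detector like this one is sensitive to background level, which is consistent with the noise-reduced recordings being used for this condition.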

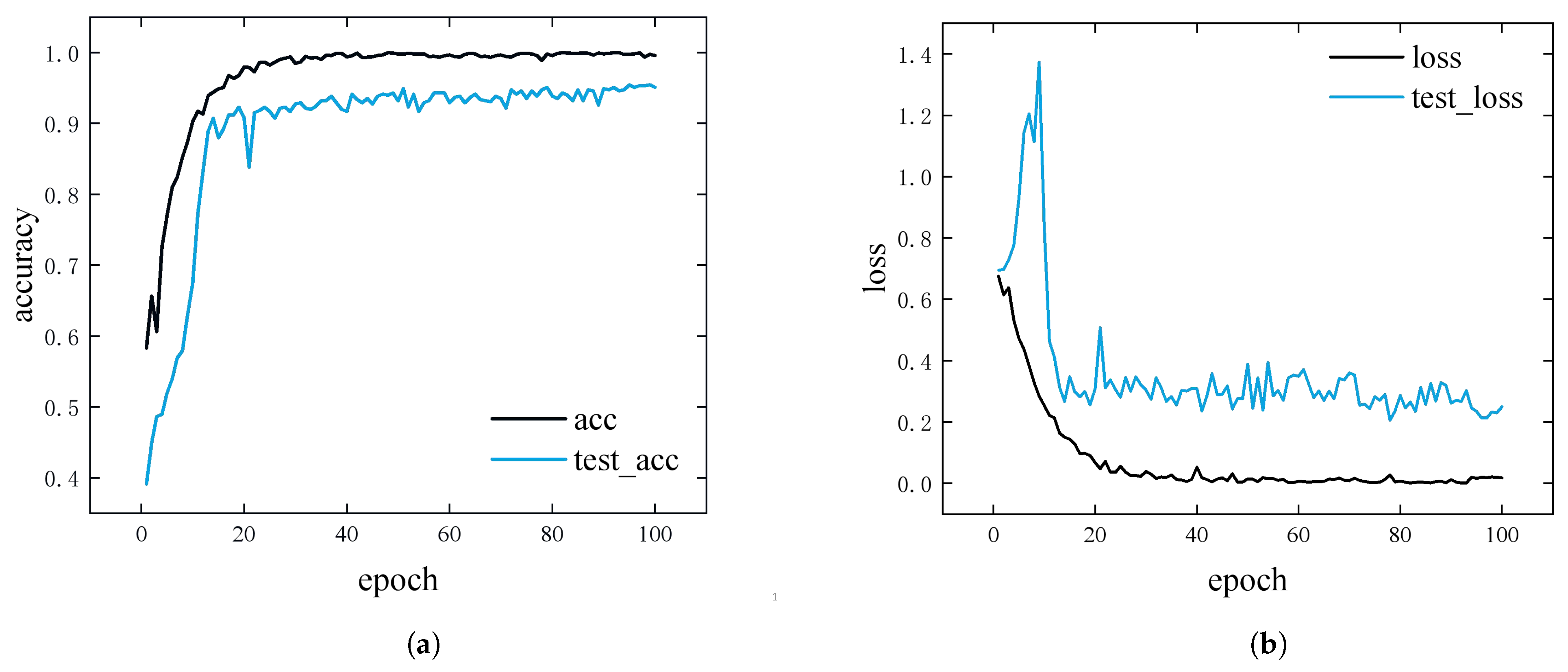

4.2. Model Training and Evaluation

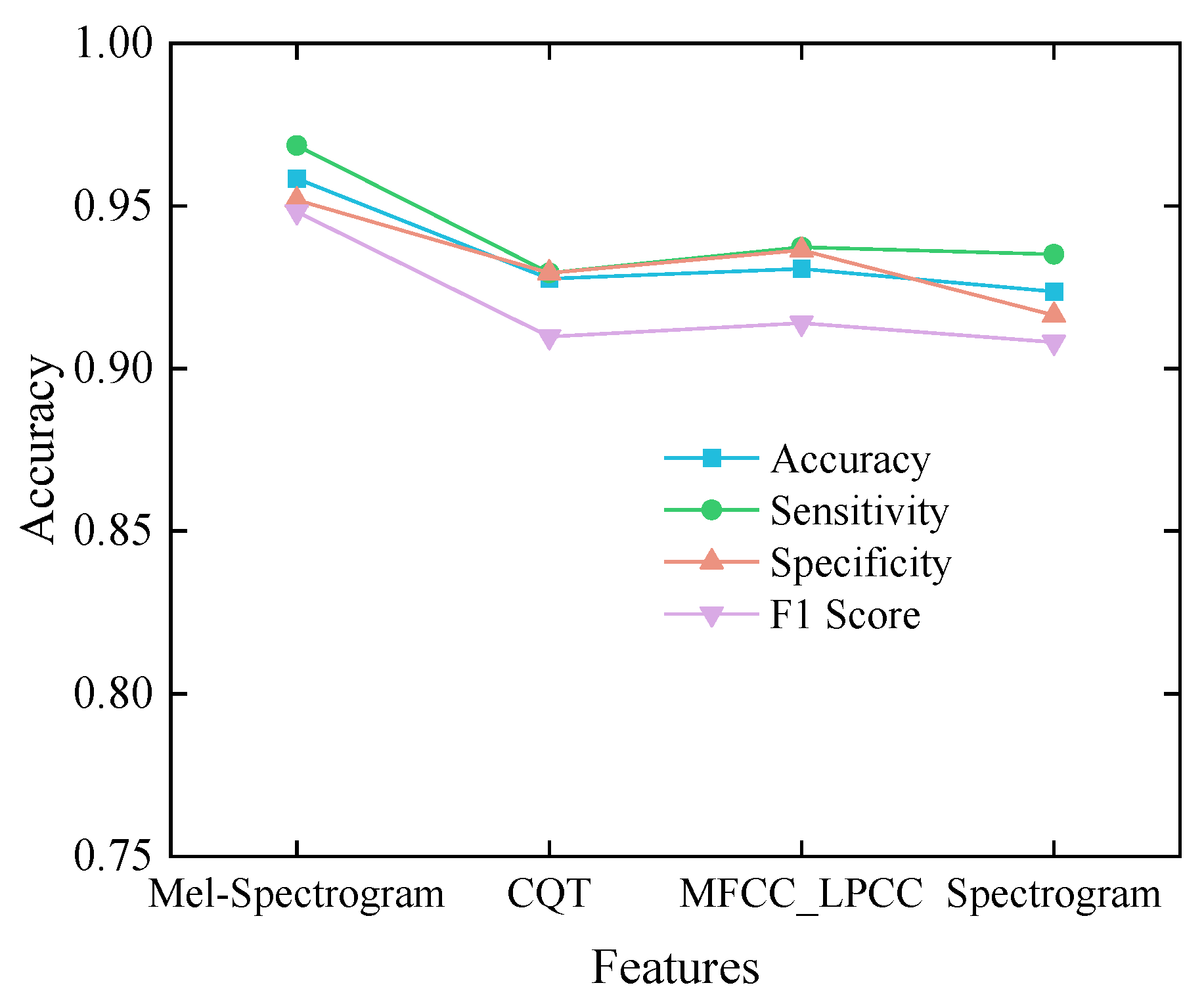

4.3. Ablation Experiment

4.4. Comparative Experiment

5. Discussion

| Author | Year | Subjects | Detection | Features | Model | Classification | Accuracy |
|---|---|---|---|---|---|---|---|
| Shen [18] | 2020 | 32 | Spectrogram boundary factor | MFCC | LSTM | Normal vs. abnormal snore | 87% |
| Cheng [20] | 2022 | 43 | Endpoint detection + manual check | MFCC, Fbanks, energy, LPC | LSTM | Normal vs. abnormal snore | 95.3% |
| Sillaparaya [29] | 2022 | 5 | Manual PSG-based segmentation | Mean MFCC | FC | Normal/apnea–hypopnea snore/non-snore | 85.3% |
| Castillo [30] | 2022 | 25 | Not applicable | Spectrogram | CNN | Apnea vs. non-apnea sounds | 88.5% |
| Li [21] | 2023 | 124 | Unsupervised clustering | VG features | 2D-CNN | Normal vs. OSAHS snore | 92.5% |
| Song [28] | 2023 | 40 | Adaptive thresholding | MFCC, PLP, BSF, PR800, etc. | XGBoost + CNN + ResNet18 | Normal vs. abnormal snore | 83.4% |
| Ding [27] | 2024 | 120 | Adaptive thresholding | MFCC, VGG16, PANN features | XGBoost + KNN/RF | Normal vs. OSAHS snore | 100% |
| Ours | 2025 | 40 | AMFF-ED | Mel-spectrogram | ERBG-Net | Normal vs. OSAHS snore | 95.8% |

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AHI | Apnea–Hypopnea Index |
| AMFF-ED | Adaptive Multi-Feature Fusion Endpoint Detection |
| BiGRU | Bidirectional Gated Recurrent Unit |
| BSF | Band Spectral Features |
| CNN | Convolutional Neural Network |
| CQT | Constant-Q Transform |
| DNN | Deep Neural Network |
| ECA | Efficient Channel Attention |
| ECG | Electrocardiography |
| EEG | Electroencephalography |
| EMD | Empirical Mode Decomposition |
| EMG | Electromyography |
| ERBG-Net | Enhanced ResNet–BiGRU Network |
| FC | Fully Connected |
| FCM | Fuzzy C-Means |
| Fbanks | Filter Banks |
| KNN | k-Nearest Neighbor |
| LPC | Linear Predictive Coding |
| LSTM | Long Short-Term Memory |
| MFCC | Mel-Frequency Cepstral Coefficients |
| MFCC_LPCC | Mel-Frequency Cepstral Coefficients combined with Linearly Predicted Cepstral Coefficients |
| OSAHS | Obstructive Sleep Apnea–Hypopnea Syndrome |
| PCA | Principal Component Analysis |
| PLP | Perceptual Linear Prediction |
| PSG | Polysomnography |
| PR800 | Pitch Rhythm at 800 Hz |
| RAF | Respiratory Airflow |
| ResNet | Residual Network |
| RF | Random Forest |
| RERA | Respiratory Effort-Related Arousal |
| STFT | Short-Time Fourier Transform |
| SVM | Support Vector Machine |
| VG | Visibility Graph |
| ZCR | Zero-Crossing Rate |

References

1. Zhang, P.; Zhang, R.; Zhao, F.; Heeley, E.; Chai-Coetzer, C.L.; Liu, J.; Feng, B.; Han, P.; Li, Q.; Sun, L.; et al. The prevalence and characteristics of obstructive sleep apnea in hospitalized patients with type 2 diabetes in China. J. Sleep Res. 2016, 25, 39–46.
2. Niiranen, T.J.; Kronholm, E.; Rissanen, H.; Partinen, M.; Jula, A.M. Self-reported obstructive sleep apnea, simple snoring, and various markers of sleep-disordered breathing as predictors of cardiovascular risk. Sleep Breath. 2016, 20, 589–596.
3. Grigg-Damberger, M.; Ralls, F. Cognitive dysfunction and obstructive sleep apnea: From cradle to tomb. Curr. Opin. Pulm. Med. 2012, 18, 580–587.
4. Witkowski, A.; Prejbisz, A.; Florczak, E.; Kądziela, J.; Śliwiński, P.; Bieleń, P.; Michałowska, I.; Kabat, M.; Warchoł, E.; Januszewicz, M.; et al. Effects of renal sympathetic denervation on blood pressure, sleep apnea course, and glycemic control in patients with resistant hypertension and sleep apnea. Hypertension 2011, 58, 559–565.
5. Reutrakul, S.; Mokhlesi, B. Obstructive sleep apnea and diabetes: A state of the art review. Chest 2017, 152, 1070–1086.
6. Cicek, D.; Lakadamyali, H.; Yağbasan, B.; Sapmaz, I.; Müderrisoğlu, H. Obstructive sleep apnoea and its association with left ventricular function and aortic root parameters in newly diagnosed, untreated patients: A prospective study. J. Int. Med. Res. 2011, 39, 2228–2238.
7. Punjabi, N.M. The epidemiology of adult obstructive sleep apnea. Proc. Am. Thorac. Soc. 2008, 5, 136–143.
8. Jennum, P.; Riha, R.L. Epidemiology of sleep apnoea/hypopnoea syndrome and sleep-disordered breathing. Eur. Respir. J. 2009, 33, 907–914.
9. Kim, J.; Kim, T.; Lee, D.; Kim, J.W.; Lee, K. Exploiting temporal and nonstationary features in breathing sound analysis for multiple obstructive sleep apnea severity classification. Biomed. Eng. Online 2017, 16, 6.
10. Volák, J.; Koniar, D.; Hargaš, L.; Jablončík, F.; Sekel’ová, N.; Ďurdík, P. RGB-D imaging used for OSAS diagnostics. In Proceedings of the 2018 ELEKTRO, Mikulov, Czech Republic, 21–23 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.
11. Patil, S.P.; Schneider, H.; Schwartz, A.R.; Smith, P.L. Adult obstructive sleep apnea: Pathophysiology and diagnosis. Chest 2007, 132, 325–337.
12. Jiang, Y.; Peng, J.; Zhang, X. Automatic snoring sounds detection from sleep sounds based on deep learning. Phys. Eng. Sci. Med. 2020, 43, 679–689.
13. Zarei, A.; Asl, B.M. Performance evaluation of the spectral autocorrelation function and autoregressive models for automated sleep apnea detection using single-lead ECG signal. Comput. Methods Programs Biomed. 2020, 195, 105626.
14. Dang, X.; Wei, R.; Li, G. Snoring and breathing detection based on empirical mode decomposition. In Proceedings of the 2015 International Conference on Orange Technologies (ICOT), Hong Kong, China, 19–22 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 79–82.
15. Wang, C.; Peng, J.; Song, L.; Zhang, X. Automatic snoring sounds detection from sleep sounds via multi-features analysis. Australas. Phys. Eng. Sci. Med. 2017, 40, 127–135.
16. Jiang, Y.; Peng, J.; Song, L. An OSAHS evaluation method based on multi-features acoustic analysis of snoring sounds. Sleep Med. 2021, 84, 317–323.
17. Fang, Y.; Liu, D.; Zhao, S.; Deng, D. Improving OSAHS prevention based on multidimensional feature analysis of snoring. Electronics 2023, 12, 4148.
18. Shen, F.; Cheng, S.; Li, Z.; Yue, K.; Li, W.; Dai, L. Detection of snore from OSAHS patients based on deep learning. J. Healthc. Eng. 2020, 2020, 8864863.
19. Shen, K.W.; Li, W.J.; Yue, K.Q. Support vector machine OSAHS snoring recognition by fusing LPCC and MFCC. J. Hangzhou Univ. Electron. Sci. Technol. (Nat. Sci. Ed.) 2020, 40, 1–5+12.
20. Cheng, S.; Wang, C.; Yue, K.; Li, R.; Shen, F.; Shuai, W.; Li, W.; Dai, L. Automated sleep apnea detection in snoring signal using long short-term memory neural networks. Biomed. Signal Process. Control 2022, 71, 103238.
21. Li, R.; Li, W.; Yue, K.; Li, Y. Convolutional neural network for screening of obstructive sleep apnea using snoring sounds. Biomed. Signal Process. Control 2023, 86, 104966.
22. Korompili, G.; Amfilochiou, A.; Kokkalas, L.; Mitilineos, S.A.; Tatlas, N.A.; Kouvaras, M.; Kastanakis, E.; Maniou, C.; Potirakis, S.M. PSG-Audio, a scored polysomnography dataset with simultaneous audio recordings for sleep apnea studies. Sci. Data 2021, 8, 197.
23. Nguyen, M.T.; Huang, J.H. Snore detection using convolution neural networks and data augmentation. In Proceedings of the International Conference on Advanced Mechanical Engineering, Automation and Sustainable Development, Ha Long, Vietnam, 4–7 November 2021; Springer: Cham, Switzerland, 2021; pp. 99–104.
24. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.
25. Lalapura, V.S.; Amudha, J.; Satheesh, H.S. Recurrent neural networks for edge intelligence: A survey. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.
26. Qin, H.; Liu, G. A dual-model deep learning method for sleep apnea detection based on representation learning and temporal dependence. Neurocomputing 2022, 473, 24–36.
27. Ding, L.; Peng, J.; Song, L.; Zhang, X. Automatically detecting OSAHS patients based on transfer learning and model fusion. Physiol. Meas. 2024, 45, 055013.
28. Song, Y.; Sun, X.; Ding, L.; Peng, J.; Song, L.; Zhang, X. AHI estimation of OSAHS patients based on snoring classification and fusion model. Am. J. Otolaryngol. 2023, 44, 103964.
29. Sillaparaya, A.; Bhatranand, A.; Sudthongkong, C.; Chamnongthai, K.; Jiraraksopakun, Y. Obstructive sleep apnea classification using snore sounds based on deep learning. In Proceedings of the 2022 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Chiang Mai, Thailand, 7–10 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1152–1155.
30. Castillo-Escario, Y.; Werthen-Brabants, L.; Groenendaal, W.; Deschrijver, D.; Jane, R. Convolutional neural networks for Apnea detection from smartphone audio signals: Effect of window size. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 666–669.

| Event Family | Specific Types of Snoring Events |
|---|---|
| Respiratory | Obstructive Apnea/Central Apnea/Mixed Apnea/Hypopnea/Cheyne Stokes Respiration/Periodic Respiration/Respiratory Effort-Related Arousal (RERA) |
| Neurological | Alternating Leg Muscle Activation/Hypnagogic Foot Tremor/Excessive Fragmentary Myoclonus/Leg Movement/Rhythmic Movement Disorder |
| Nasal | Snore |
| Cardiac | Bradycardia/Tachycardia/Long RR/PTT Drop/Heart Rate Drop/Heart Rate Rise/Asystole/Sinus Tachycardia/Narrow Complex Tachycardia/Wide Complex Tachycardia/Atrial Fibrillation |
| SpO2 | Relative Desaturation/Absolute Desaturation |

| Parameter | Range/Value |
|---|---|
| Gender (Male/Female) | 3:1 |
| Age Range | 23–85 years |
| Mean Age | 57.5 years |
| AHI Severity Distribution (Mild/Moderate/Severe) | 2:7:31 |
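The 2:7:31 severity split sums to the 40 subjects used in this study. The table does not state the AHI cut-offs behind the three grades; the sketch below assumes the commonly used adult thresholds of 5, 15, and 30 events per hour, and the function names and sample AHI values are hypothetical.

```python
from collections import Counter

# Assumed adult cut-offs in events per hour; not stated in the table itself.
MILD, MODERATE, SEVERE = 5.0, 15.0, 30.0

GRADES = ("normal", "mild", "moderate", "severe")


def ahi_severity(ahi):
    """Map one Apnea-Hypopnea Index value to a severity grade."""
    if ahi < MILD:
        return "normal"
    if ahi < MODERATE:
        return "mild"
    if ahi < SEVERE:
        return "moderate"
    return "severe"


def severity_distribution(ahi_values):
    """Count how many subjects fall into each grade, in a fixed order."""
    counts = Counter(ahi_severity(v) for v in ahi_values)
    return {grade: counts.get(grade, 0) for grade in GRADES}


if __name__ == "__main__":
    # Made-up AHI values, only to show the call.
    print(severity_distribution([3.0, 7.5, 15.0, 29.9, 30.0, 62.1]))
```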

| Method | Accuracy (%) |
|---|---|
| AMFF-ED + original recordings | 93.8 |
| AMFF-ED + noise-reduced recordings | 96.4 |
| Short-time energy and ZCR + noise-reduced recordings | 78.3 |
| AMFF-ED + original recordings with low-level conversational background noise | 91.6 |

| Model | Accuracy | Sensitivity | Specificity | F1 Score |
|---|---|---|---|---|
| ResNet18 | 0.9214 | 0.9294 | 0.9162 | 0.9029 |
| ResNet18-BiGRU | 0.9315 | 0.9412 | 0.9315 | 0.9195 |
| ECA-ResNet18 | 0.9307 | 0.9176 | 0.9391 | 0.9123 |
| ECA-ResNet34-BiGRU | 0.9476 | 0.9412 | 0.9518 | 0.9339 |
| ERBG-Net | 0.9584 | 0.9686 | 0.9518 | 0.9482 |
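The four columns in the table above are standard confusion-matrix ratios. The following is a minimal sketch of how they are computed, assuming OSAHS snores are the positive class (the table does not say which class is positive); the `BinaryCounts` type and the counts in the usage line are made up for illustration and are not the paper's data.

```python
from dataclasses import dataclass


@dataclass
class BinaryCounts:
    """Confusion-matrix counts for a two-class problem."""
    tp: int  # positives predicted positive
    fp: int  # negatives predicted positive
    tn: int  # negatives predicted negative
    fn: int  # positives predicted negative


def binary_metrics(c):
    """Return accuracy, sensitivity, specificity and F1 as a dict."""
    total = c.tp + c.fp + c.tn + c.fn
    sensitivity = c.tp / (c.tp + c.fn)   # recall on the positive class
    specificity = c.tn / (c.tn + c.fp)   # recall on the negative class
    precision = c.tp / (c.tp + c.fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }


if __name__ == "__main__":
    # Hypothetical counts, only to show the call.
    for name, value in binary_metrics(BinaryCounts(tp=90, fp=20, tn=80, fn=10)).items():
        print(f"{name}: {value:.4f}")
```

F1 depends only on the positive class, so it can fall below both sensitivity and specificity when the classes are imbalanced, as it does for the ResNet18 row.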

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, X.; Gan, Y.; Yuan, X.; Cheng, Y.; Zhou, L. Non-Contact Screening of OSAHS Using Multi-Feature Snore Segmentation and Deep Learning. Sensors 2025, 25, 5483. https://doi.org/10.3390/s25175483
