4.1. CNN Training Results

We employed statistical metrics, including recall, selectivity, precision, accuracy, and F1-score, to analyze the crack detection performance of different CNN models. Recall is the percentage of actual positive cases that the model correctly predicts as positive. Accuracy is the percentage of correctly predicted cases among all cases, and precision is the percentage of cases that are actually positive among cases predicted as positive. Selectivity measures the model’s performance in identifying true negatives (TN), i.e., the percentage of actual negative cases that are correctly predicted as negative, and F1-score is the harmonic mean of recall and precision, i.e., an evaluation metric considering the balance between the two. A higher value of an evaluation metric indicates better performance of the evaluated model. The mathematical expressions for these metrics are given in the following equations:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{Selectivity} = \frac{TN}{TN + FP}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{F1\text{-}score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where true positive (TP) refers to the number of cases that the model correctly predicts as positive, false positive (FP) is the number of cases that the model incorrectly predicts as positive, TN is the number of cases that the model correctly predicts as negative, and false negative (FN) refers to the number of cases that the model incorrectly predicts as negative.
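The standard confusion-matrix definitions of these five metrics can be sketched in a few lines of Python. The class and function names, the zero-denominator convention (returning 0.0), and the example counts are assumptions made for illustration and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # cracks correctly predicted as cracks
    fp: int  # non-cracks incorrectly predicted as cracks
    tn: int  # non-cracks correctly predicted as non-cracks
    fn: int  # cracks incorrectly predicted as non-cracks


def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 if the denominator is zero (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def evaluate(counts):
    """Compute recall, selectivity, precision, accuracy, and F1-score from confusion counts."""
    recall = safe_ratio(counts.tp, counts.tp + counts.fn)
    selectivity = safe_ratio(counts.tn, counts.tn + counts.fp)
    precision = safe_ratio(counts.tp, counts.tp + counts.fp)
    total = counts.tp + counts.fp + counts.tn + counts.fn
    accuracy = safe_ratio(counts.tp + counts.tn, total)
    f1 = safe_ratio(2 * precision * recall, precision + recall)
    return {
        "recall": recall,
        "selectivity": selectivity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


# Hypothetical counts, chosen only to exercise the formulas.
metrics = evaluate(ConfusionCounts(tp=30, fp=10, tn=950, fn=10))
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Returning 0.0 for an undefined ratio is only one possible convention; an implementation could equally report such cases as undefined.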

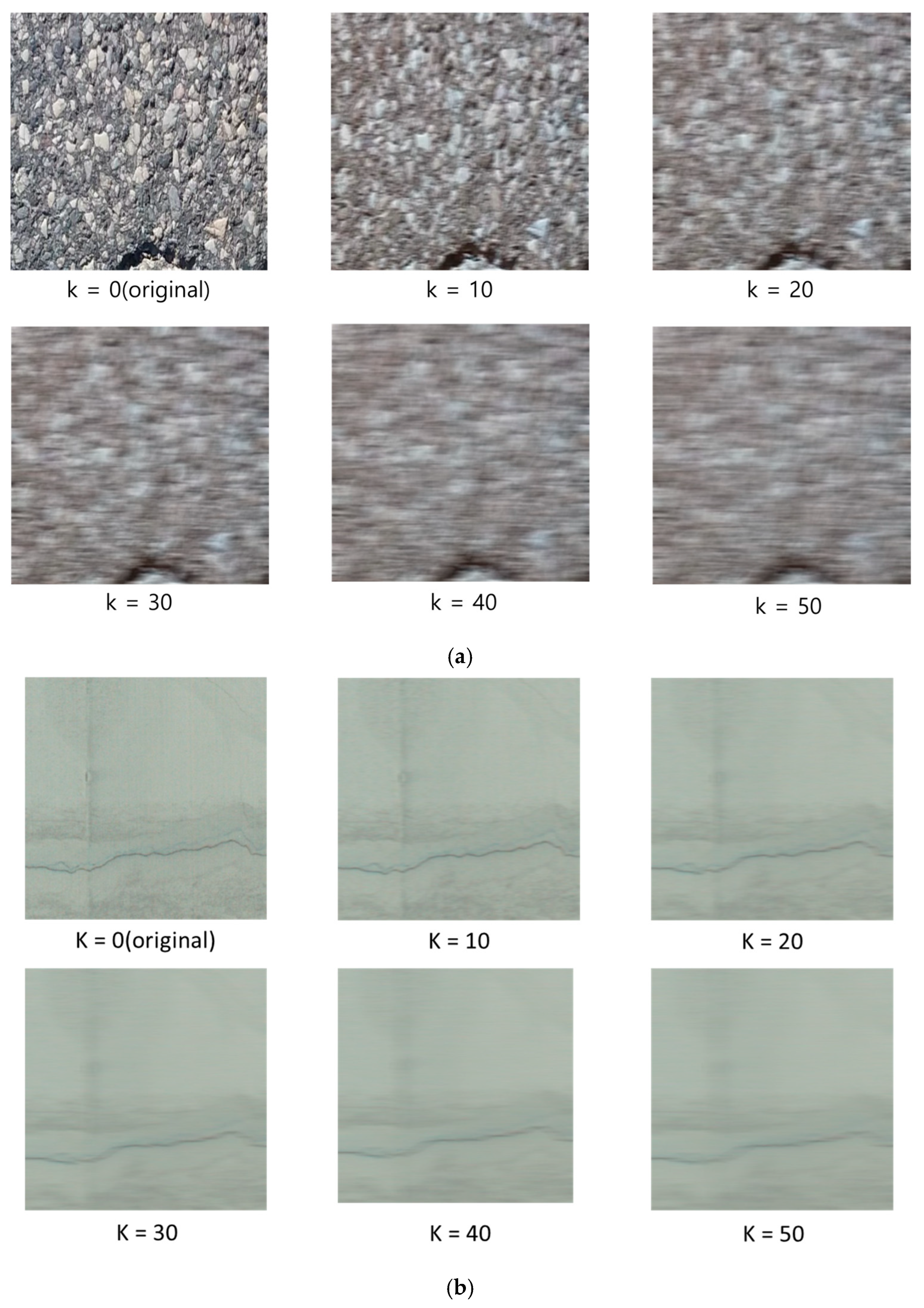

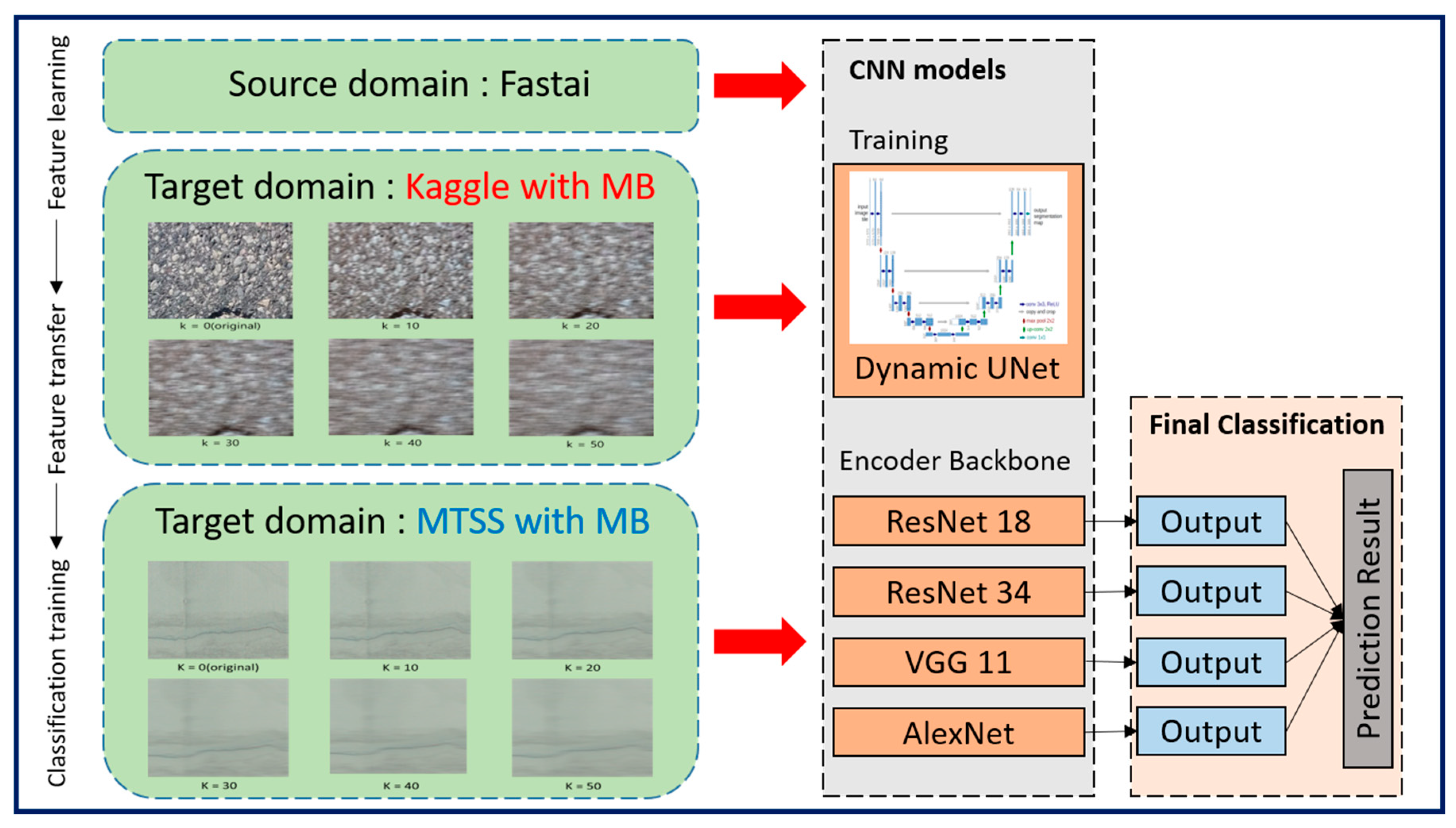

We analyzed the crack detection performance of the four CNN models based on the confusion matrix, utilizing images generated by assigning MB intensities of 10–50 to the Kaggle dataset. Table 1 and Figure 4 present the evaluated recall, selectivity, precision, accuracy, and F1-score. In Figure 4, the y-axis shows the CNN evaluation metrics, and the x-axis denotes the MB intensity.
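To make the notion of MB intensity concrete, a horizontal blur can be sketched as averaging each pixel with its horizontal neighbors. The sketch below assumes that the intensity corresponds to the kernel length in pixels, that the kernel is uniform, and that borders are handled by edge replication. None of these details are stated in this section, so the function is illustrative and not the procedure used to generate the test images.

```python
def horizontal_motion_blur(image, length):
    """Blur a grayscale image (list of rows) with a uniform horizontal kernel.

    `length` is the assumed kernel length in pixels; length 1 leaves the image unchanged.
    """
    if length < 1:
        raise ValueError("length must be at least 1")
    left = (length - 1) // 2  # pixels taken to the left of the current pixel
    blurred = []
    for row in image:
        width = len(row)
        out_row = []
        for x in range(width):
            total = 0.0
            for k in range(length):
                # Clamp the source index so that border pixels are replicated.
                src = min(max(x - left + k, 0), width - 1)
                total += row[src]
            out_row.append(total / length)
        blurred.append(out_row)
    return blurred


# A one-pixel-wide bright line (a stand-in for a thin vertical crack).
thin_line = [[0, 0, 0, 255, 0, 0, 0]]
smeared = horizontal_motion_blur(thin_line, 3)
print(smeared[0])
```

In this toy case the line is spread over three pixels and its peak contrast falls to one third, which illustrates why thin crack features become harder to extract as the kernel grows.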

All the models showed a rapid decline in recall as the blur intensity increased, indicating that crack detection becomes increasingly limited with stronger blur (Figure 4a). In particular, the recall of ResNet18 was 64.96% at blur 0 but decreased to 2.97% at blur 50, and that of ResNet34 decreased from 64.32% to 7.23%. The recall of VGG11 and AlexNet decreased even more sharply, with that of AlexNet dropping to 2.79% at blur 50. These findings imply a dramatic drop in the CNN models’ capability to detect cracks in highly blurred images, with VGG11 and AlexNet being particularly less tolerant to blurring.

All the models maintained a high selectivity of over 99% even as the blur intensity increased (Figure 4b). This result indicates that the models’ capability to accurately exclude crack-free areas is not affected. However, combined with the rapid decrease in recall, it also indicates that the models increasingly classify inputs as non-crack: they continue to exclude non-cracks correctly but fail to detect actual cracks.

Precision remained relatively constant as blur intensity increased, although it differed between models. As shown in Figure 4c, the precision of ResNet18 and ResNet34 slightly increased with blur intensity, likely due to fewer false positives. The precision of VGG11 was 77.41% on the original images but decreased to 64.21% at blur 50, a significant reduction compared to the other models. AlexNet maintained stable precision but became volatile after blur 40. These results suggest that as blur intensity increases, the models detect fewer cracks, but the detections they do make remain largely correct, which keeps precision stable.

In Figure 4d, accuracy shows a downward tendency as blur intensity increases; the accuracy of ResNet18 and ResNet34 decreases from 98.17% and 98.18% to 96.57% and 96.70%, respectively, and VGG11 and AlexNet show similar patterns, with a lowest accuracy of 96.51%. However, because the high selectivity keeps accuracy high, crack detection performance cannot be evaluated based on accuracy alone, and analysis of recall and F1-score is necessary.
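The weak response of accuracy can be illustrated with the identity accuracy = recall × p + selectivity × (1 − p), where p is the fraction of positive (crack) cases. The sketch below uses a hypothetical p of 5% and hypothetical recall and selectivity values; the class balance of the datasets is not given in this section, so the numbers only illustrate the mechanism.

```python
def accuracy_from_rates(recall, selectivity, prevalence):
    """Accuracy implied by recall, selectivity, and the fraction of positive cases."""
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must lie in [0, 1]")
    return recall * prevalence + selectivity * (1.0 - prevalence)


# Hypothetical values: cracks are rare (5%) and selectivity stays at 99%.
PREVALENCE = 0.05
SELECTIVITY = 0.99

accuracy_sharp = accuracy_from_rates(0.65, SELECTIVITY, PREVALENCE)
accuracy_blurred = accuracy_from_rates(0.03, SELECTIVITY, PREVALENCE)

print(f"recall 65% -> accuracy {accuracy_sharp:.2%}")
print(f"recall  3% -> accuracy {accuracy_blurred:.2%}")
```

Under these assumptions, a collapse in recall from 65% to 3% lowers accuracy by only about three percentage points, because the negative class dominates the total.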

As illustrated in Figure 4e, the F1-score drastically decreases as the blur intensity increases, with the F1-score of ResNet18 dropping from 71.41% to 5.73%, and that of ResNet34 decreasing from 71.25% to 13.31%. The F1-scores of VGG11 and AlexNet reached extremely low values of 4.82% and 5.37%, respectively, at blur 50, which implies that the CNN models are practically ineffective at detecting cracks in highly blurred images. The large decrease in the F1-scores of VGG11 and AlexNet in particular indicates that these models are more vulnerable to heavy blur.

Table 2 and Figure 5 present the crack detection performance (recall, selectivity, precision, accuracy, and F1-score) of the four CNN models on images generated from the MTSS dataset with MB intensities of 10–50.

As presented in Figure 5a, the recall of all models drastically decreased as the blur intensity increased, indicating that the more intense the blur, the less frequently cracks are detected. In particular, the recall of ResNet18 decreased from 92.46% to 1.12%, that of ResNet34 dropped from 93.41% to 2.29%, and those of VGG11 and AlexNet declined even faster, plunging from 71.36% to 0.47% and from 47.05% to 0.88%, respectively. These results suggest that CNN models are ineffective at extracting crack features from blurred images.

All the models maintained a selectivity higher than 99% regardless of the blur intensity (Figure 5b), implying that an increase in blur does not significantly affect the capability of identifying crack-free areas. However, when considered in conjunction with the rapid decrease in recall, this indicates that the models tended to classify inputs as non-crack, accurately excluding non-cracks while failing to detect cracks.

As illustrated in Figure 5c, precision did not change rapidly even with increasing blur intensity, with most models maintaining relatively constant values. For instance, the precision of ResNet18 slightly decreased from 85.74% to 82.25%, that of ResNet34 dropped from 85.77% to 76.44%, and those of VGG11 and AlexNet also showed a relatively small decrease. These outcomes indicate that the models’ positive predictions remain reliable when cracks are detected, but that fewer cracks are detected as the blur increases.

All the models showed decreased accuracy with increasing blur intensity (Figure 5d). The accuracy of ResNet18 decreased from 98.85% to 95.03%, that of ResNet34 decreased from 98.89% to 95.06%, and those of VGG11 and AlexNet decreased faster, dropping from 97.25% to 95.00% and from 96.49% to 95.01%, respectively. The ResNet models maintained higher accuracy under blurring, while VGG11 and AlexNet degraded more quickly.

As shown in Figure 5e, the F1-score is the metric that decreases most rapidly with increasing blur intensity. The F1-score of ResNet18 plunged from 88.97% to 2.21%, and that of ResNet34 from 89.43% to 4.45%. The F1-scores of VGG11 and AlexNet showed even more severe declines, plunging from 72.22% to 0.93% and from 57.33% to 1.74%, respectively. These outcomes show that the models lost their capability of detecting cracks as the blur increased.

Figure 6 compares crack detection performance between the Kaggle and MTSS datasets. Comparing F1-scores on the original images shows significant performance differences across datasets, despite using the same models. The F1-score of ResNet18 was 71.41% on Kaggle and 88.97% on MTSS, a difference of about 17 percentage points. ResNet34 showed a difference of about 18 percentage points, VGG11 around 23, and AlexNet about 7. On the Kaggle dataset, the F1-score of ResNet18 (71.41%) exceeded that of ResNet34 (71.25%), whereas on MTSS, ResNet34 (89.43%) outperformed ResNet18 (88.97%). VGG11 on MTSS (72.22%) scored higher than ResNet18 (71.41%) and ResNet34 (71.25%) on Kaggle. VGG11 and AlexNet, which performed similarly on Kaggle, exhibited different trends on MTSS: VGG11 improved by over 24%, while AlexNet improved by 7%. These results indicate that CNN-based crack detection performance varies significantly with image data quality. Thus, dataset quality is crucial for the CNN models’ crack detection performance.

The findings of this study showed that the performance of CNN-based crack detection models degraded drastically as the MB intensity increased on the Kaggle and MTSS datasets. ResNet18 and ResNet34 were relatively more resistant to increasing blur, but their performance eventually dropped at high blur intensities. VGG11 and AlexNet were vulnerable to increased blur; in particular, the large decreases in recall and F1-score indicate significant degradation of actual crack detection performance. While precision is relatively constant, it must be interpreted in light of the growing number of cases in which detection itself fails as the blur increases. Accuracy did not decrease significantly because of the high selectivity, and therefore did not reflect the lower performance of actual crack detection. In particular, the models’ capability of detecting cracks was almost neutralized under severe blurring, as the F1-score values plunged.

4.2. Correlation Between NR-IQA and CNN-Based Crack Detection Performance

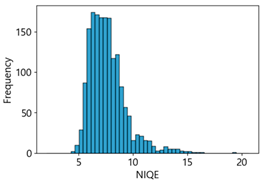

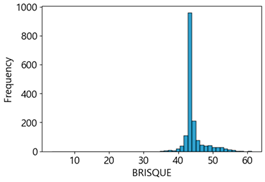

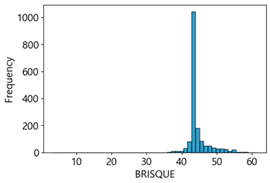

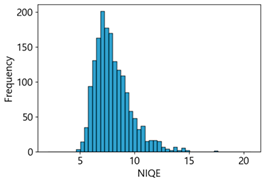

We found that the crack detection performance of the CNN models degraded due to MB in the images. We utilized NR-IQA metrics such as BRISQUE, Naturalness Image Quality Evaluator (NIQE) [

81], Perception-based Image Quality Evaluator (PIQE) [

82], and Cumulative Probability of Blur Detection (CPBD) [

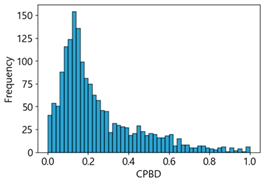

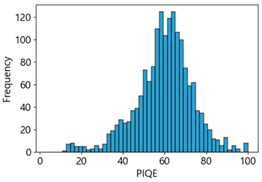

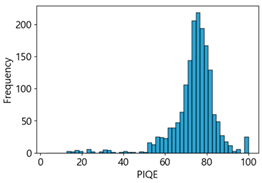

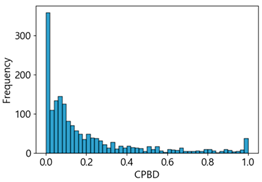

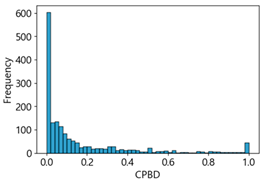

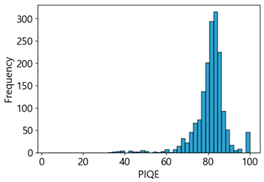

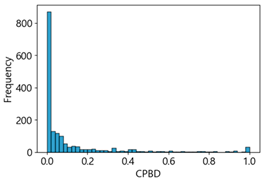

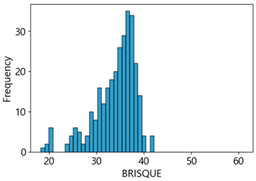

83], to evaluate the quality of these blurred images. BRISQUE, NIQE, and PIQE are measured on a scale from 0 (sharp) to 100 (blurry), and CPBD is measured on a scale from 0 (blurry) to 1.0 (sharp). We selected these metrics because they have defined categories for blurriness and sharpness of images, and make it easy to set threshold ranges for image quality scores through correlation analysis with deep learning performance.

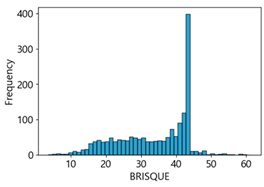

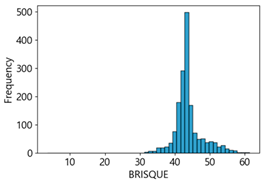

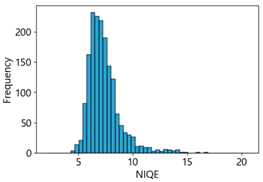

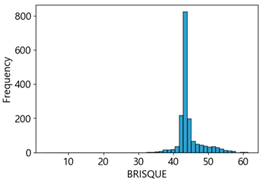

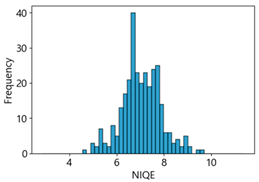

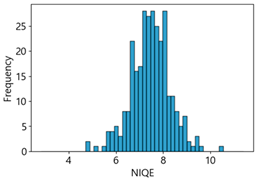

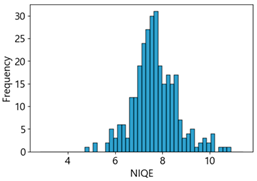

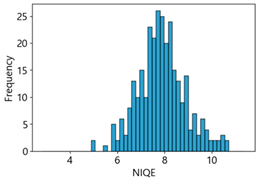

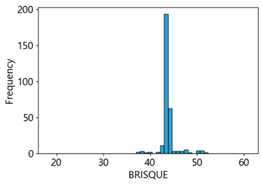

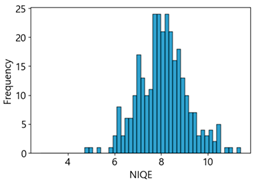

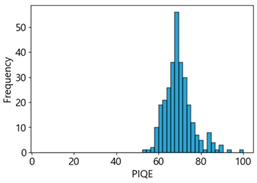

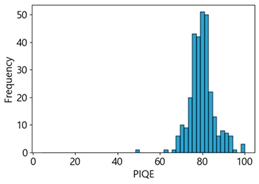

Table 3 and

Table 4 present the evaluation results of ResNet34 on the Kaggle dataset and the MTSS dataset. We utilized these outcomes and analyzed the correlation between the F1-score of the CNN model and the NR-IQA image quality metrics. The NR-IQA metric values shown in

Table 3 and

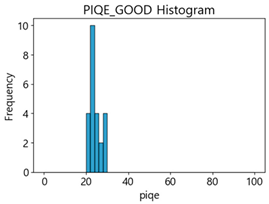

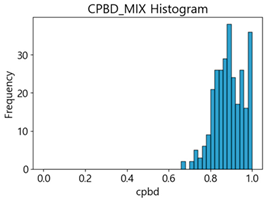

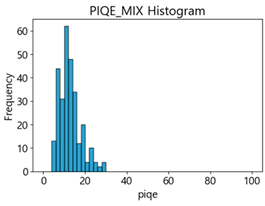

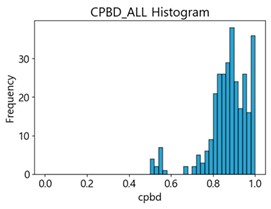

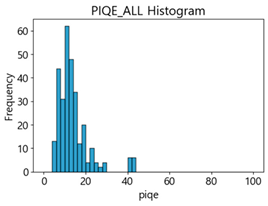

Table 4 represent the average of the image quality metrics measured on the test data in each dataset, and these are utilized in the quantitative evaluation of the impacts of image quality on the crack detection performance of CNN models (for reference, histograms of PIQE and CPBD versus MB intensity for the Kaggle and MTSS datasets are provided in

Appendix B).

Figure 7 shows the Pearson correlation coefficients between the F1-score and the NR-IQA metrics on the Kaggle dataset, used to analyze the impact of image quality on CNN-based crack detection performance. NIQE (−0.89) and PIQE (−0.83) have a strong negative correlation with the F1-score, indicating that lower image quality results in reduced crack detection performance. BRISQUE shows a weaker negative correlation of −0.65. CPBD (0.78) correlates positively with the F1-score, indicating that less blurred images lead to improved CNN performance. In order of correlation strength, the NR-IQA metrics are NIQE (−0.89), PIQE (−0.83), CPBD (0.78), and BRISQUE (−0.65).

Figure 8 illustrates the Pearson correlation coefficients between the F1-score and the NR-IQA metrics on the MTSS dataset, used to analyze the impact of image quality on CNN-based crack detection performance. As shown in Figure 8, NIQE (−0.87), PIQE (−0.87), and BRISQUE (−0.76) have strong negative correlations with the F1-score, showing patterns similar to those in the Kaggle dataset. Furthermore, CPBD (0.82) has a strong positive correlation with the F1-score, stronger than on the Kaggle dataset (0.78). In order of correlation strength, the NR-IQA metrics are NIQE (−0.87), PIQE (−0.87), CPBD (0.82), and BRISQUE (−0.76).
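A Pearson coefficient of this kind can be computed directly from paired per-intensity values. The following is a minimal sketch in plain Python; the function name and the sample numbers are placeholders for illustration and are not the values in Table 3 or Table 4.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / math.sqrt(var_x * var_y)


# Placeholder values: a quality score that rises with blur (0 = sharp)
# paired with an F1-score that falls.
quality_scores = [20.0, 55.0, 70.0, 78.0, 82.0, 85.0]
f1_scores = [70.0, 60.0, 45.0, 30.0, 20.0, 12.0]

r = pearson_r(quality_scores, f1_scores)
print(f"Pearson r = {r:.2f}")
```

A score that increases with blur yields a negative coefficient against the F1-score, whereas a score that increases with sharpness, such as CPBD, yields a positive one.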

These results suggest that CNN performance can be maximized by ensuring adequate image quality in MTSS through NR-IQA metrics. Utilizing the NR-IQA metrics that correlate strongly with the F1-score is therefore useful for maximizing CNN-based crack detection performance, and image quality evaluation and selection strategies can be based on the most influential metrics in each dataset. In practice, this requires setting quality threshold ranges using NR-IQA metrics and deleting images that do not meet the criteria. Such a method is expected to reduce detection errors caused by highly blurred images and contribute to deriving highly reliable crack detection results.

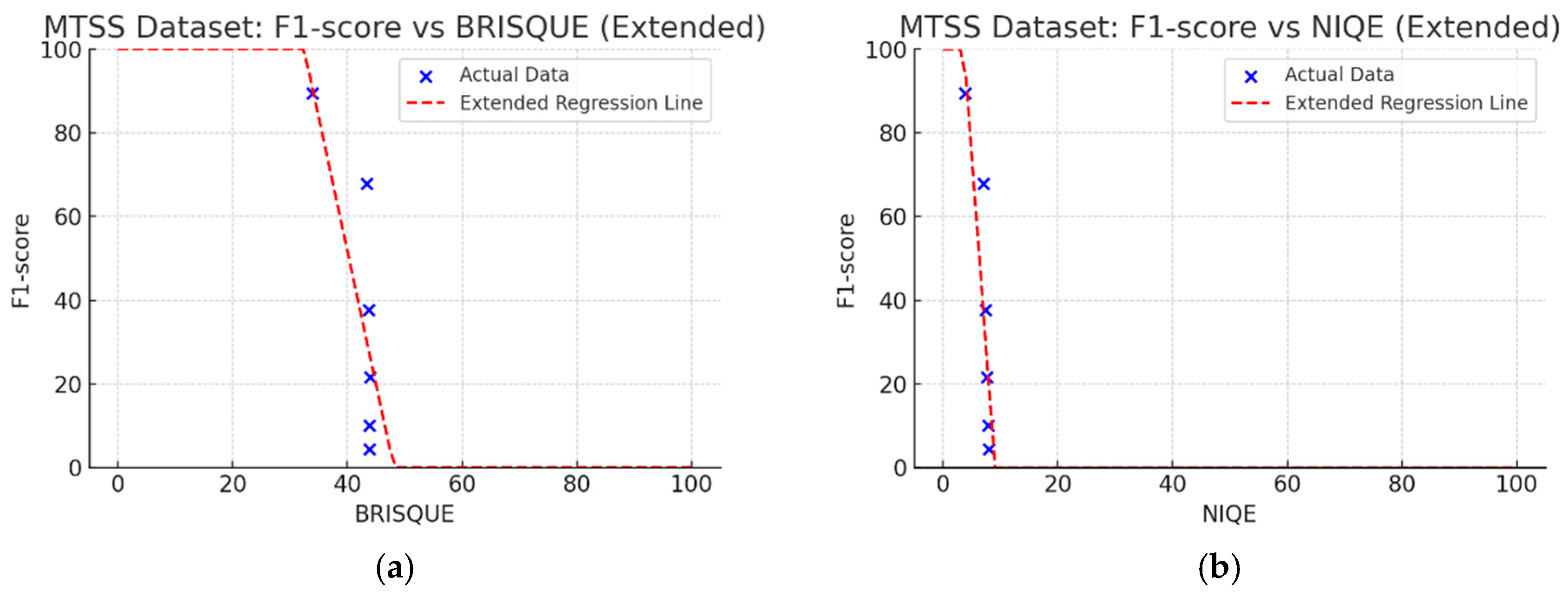

4.3. Linear Regression Analysis of NR-IQA Evaluation Metrics

In the Kaggle dataset, the metrics that are highly correlated with the F1-score are NIQE (−0.89), PIQE (−0.83), CPBD (0.78), and BRISQUE (−0.65), in this order. In the MTSS dataset, the metrics are NIQE (−0.87), PIQE (−0.87), CPBD (0.82), and BRISQUE (−0.76), in this order. To select the most appropriate NR-IQA metrics for the two datasets and set a threshold range with them, we additionally considered the regression results of each metric against the F1-score and how strongly each metric’s quality score changes with MB intensity.

We performed linear regression to analyze how the CNN’s F1-score changes with each quality metric as the MB intensity varies in the Kaggle dataset (Figure 9). The x-axis spans the range of scores specific to each quality metric. The regression coefficients were 0.74 for CPBD, 0.77 for PIQE, 3.25 for BRISQUE, and 31.26 for NIQE. For the coefficient of determination (R²), NIQE had the highest value of 0.79, followed by PIQE (0.69), CPBD (0.60), and BRISQUE (0.42). The p-values of NIQE (0.017) and PIQE (0.0397) were lower than 0.05, indicating statistical significance.

Figure 10 shows the linear regression results on the MTSS dataset. The regression coefficients were 0.83 for CPBD, 1.03 for PIQE, 3.69 for BRISQUE, and 18.45 for NIQE. For the coefficient of determination (R²), NIQE had the highest value of 0.76, followed by PIQE (0.75), CPBD (0.68), and BRISQUE (0.58). The p-values of NIQE (0.0245), PIQE (0.0247), and CPBD (0.0434) were all below 0.05, indicating statistical significance.
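The regression coefficient and R² reported for each metric correspond to an ordinary least-squares fit of the F1-score against the quality score. A minimal sketch of such a fit is given below; the function name and sample points are illustrative assumptions, and the p-value is omitted because it requires the t-distribution, which is not available in the Python standard library.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit y = intercept + slope * x.

    Returns (slope, intercept, r_squared).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or ss_tot == 0:
        raise ValueError("fit is undefined for a constant sequence")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares of the fitted line.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    r_squared = 1.0 - ss_res / ss_tot
    return slope, intercept, r_squared


# Illustrative points only, not data from the study.
slope, intercept, r_squared = linear_fit([1, 2, 3, 4], [2, 1, 4, 3])
print(f"slope={slope:.2f}, intercept={intercept:.2f}, R^2={r_squared:.2f}")
```

For a fit with a single predictor, R² equals the square of the Pearson coefficient, which is consistent with the values reported for NIQE on the Kaggle dataset: (−0.89)² ≈ 0.79.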

We aimed to devise a dataset management plan that maintains consistently high-quality data by applying NR-IQA-based quantitative image quality scores during data collection, cleaning, and verification in MTSS and removing images whose quality falls below a certain level. The correlation analysis revealed that NIQE had the highest correlation in both the Kaggle and MTSS datasets. However, in the regression analysis, the regression coefficient of NIQE was 31.26 for the Kaggle dataset and 18.45 for the MTSS dataset, indicating that the F1-score changed significantly when the NIQE score changed by 1. Although NIQE scores range from 0 (sharp) to 100 (blurry), a variation of around 1 in the quality score does not indicate a significant change in image quality. Moreover, NIQE changed only from 6.42 on the original images to 8.09 on the MB 50 images in the Kaggle dataset, and from 3.82 to 8.06 in the MTSS dataset. Therefore, NIQE cannot be regarded as an appropriate evaluation metric, because its quality scores do not vary enough to reflect changes in blur intensity.

BRISQUE has an image score range from 0 (sharp) to 100 (blurry); however, in these two datasets, the quality score varied only slightly, staying within 45 points, as the MB intensity changed. As analyzed by Giniatullina et al. [70], the consistency between BRISQUE scores and actual image quality is likely to be reduced for horizontal blur.

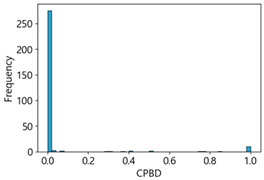

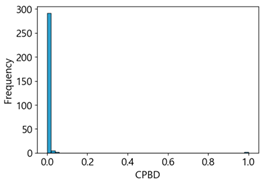

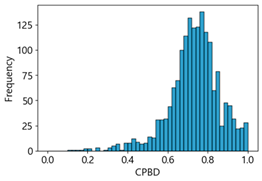

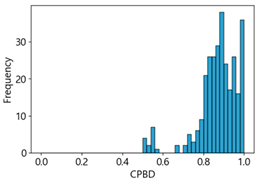

CPBD has an image quality range from 0 (blurry) to 1.0 (sharp). As shown in Table 3 and Table 4, when the MB intensity increased from 0 to 10, the CPBD score decreased from 0.73 to 0.24 in the Kaggle dataset and plunged from 0.87 to 0.12 in the MTSS dataset. Over the same change, the F1-score decreased from 71.25% to 62.23% in the Kaggle dataset and dropped from 89.43% to 67.83% in the MTSS dataset, showing different percentage decreases due to the impact of MB. In the Kaggle dataset, the regression for CPBD was not statistically significant, with a p-value of 0.0702. However, a metric that is highly sensitive to MB changes, such as CPBD, may be advantageous for setting an MB threshold, as it separates sharp images effectively.

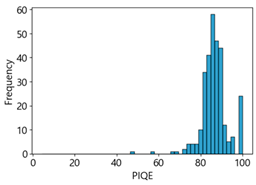

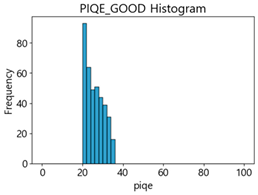

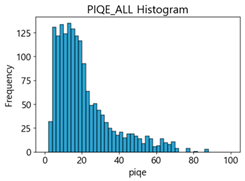

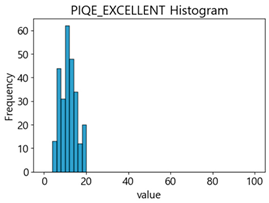

PIQE has an image quality score range from 0 (sharp) to 100 (blurry). In the Kaggle dataset, the score increased from 21.04 on the original images to 59.7 on the MB 10 images and 73.87 on the MB 20 images. In the MTSS dataset, the score soared from 13.77 on the original images to 69.35 on the MB 10 images and, similar to CPBD, changed less with subsequent increases in MB intensity. PIQE also showed the strongest results after NIQE in the correlation and regression analyses. It was highly sensitive to changes in MB and remained highly consistent with changes in the F1-score.
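The difference in sensitivity between the metrics can be made explicit by expressing each reported score change as a fraction of the metric’s stated scale. The sketch below uses only mean scores quoted in this section and assumes that the CPBD figures refer to the original and MB 10 images; the helper name is hypothetical.

```python
def span_fraction(score_before, score_after, scale_min, scale_max):
    """Absolute score change expressed as a fraction of the metric's full scale."""
    return abs(score_after - score_before) / (scale_max - scale_min)


# (label, score on original images, score on blurred images, scale min, scale max)
reported_changes = [
    ("NIQE, Kaggle, original to MB 50", 6.42, 8.09, 0.0, 100.0),
    ("NIQE, MTSS, original to MB 50", 3.82, 8.06, 0.0, 100.0),
    ("CPBD, Kaggle, original to MB 10", 0.73, 0.24, 0.0, 1.0),
    ("CPBD, MTSS, original to MB 10", 0.87, 0.12, 0.0, 1.0),
    ("PIQE, Kaggle, original to MB 10", 21.04, 59.7, 0.0, 100.0),
    ("PIQE, MTSS, original to MB 10", 13.77, 69.35, 0.0, 100.0),
]

fractions = {
    label: span_fraction(before, after, lo, hi)
    for label, before, after, lo, hi in reported_changes
}
for label, fraction in fractions.items():
    print(f"{label}: {fraction:.1%} of the scale")
```

Even at the strongest blur, NIQE moves by less than 5% of its scale, whereas CPBD and PIQE already move by roughly 39% to 75% at MB 10.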

CPBD and PIQE can be considered appropriate NR-IQA metrics for horizontal MB images, and MTSS image quality can be classified using these metrics.
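A quality gate built on these two metrics could look like the following sketch. The threshold values, image identifiers, and per-image scores are hypothetical placeholders; this section does not specify threshold ranges, and the scores are assumed to have been computed beforehand by an NR-IQA implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageQuality:
    image_id: str
    piqe: float  # 0 (sharp) to 100 (blurry)
    cpbd: float  # 0 (blurry) to 1.0 (sharp)


def is_sharp_enough(quality, max_piqe, min_cpbd):
    """Accept an image only if both scores fall on the sharp side of their thresholds."""
    return quality.piqe <= max_piqe and quality.cpbd >= min_cpbd


def split_by_quality(images, max_piqe, min_cpbd):
    """Partition images into (kept, rejected) lists according to the thresholds."""
    kept = [q for q in images if is_sharp_enough(q, max_piqe, min_cpbd)]
    rejected = [q for q in images if not is_sharp_enough(q, max_piqe, min_cpbd)]
    return kept, rejected


# Hypothetical per-image scores and thresholds, for illustration only.
candidates = [
    ImageQuality("img_001", piqe=20.0, cpbd=0.80),
    ImageQuality("img_002", piqe=65.0, cpbd=0.20),
    ImageQuality("img_003", piqe=45.0, cpbd=0.40),
]
kept, rejected = split_by_quality(candidates, max_piqe=50.0, min_cpbd=0.5)
print("kept:", [q.image_id for q in kept])
print("rejected:", [q.image_id for q in rejected])
```

Requiring both conditions is a conservative design choice; a gate based on a single metric, or on either of the two, would be an equally plausible variant.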