1. Introduction

Wearable sensors have become indispensable tools in healthcare and personalized medicine, offering continuous, non-invasive monitoring of physiological parameters in real time [1, 2]. Devices such as continuous glucose monitors (CGMs), physical activity trackers, and smartphone sensors provide valuable insights into an individual’s metabolic health, physical activity, and lifestyle habits [3, 4]. These sensors enable the collection of granular data that can be used to understand complex physiological processes, predict health outcomes, and design interventions [5, 6]. Wearable sensors also provide opportunities for developing robust solutions despite challenges such as small datasets, missing features, and data collected in the wild [7, 8, 9]. In metabolic health, wearable sensors allow for the precise tracking of blood glucose fluctuations and physical activity levels, offering individuals and clinicians the means to implement timely and tailored strategies for disease prevention and management [10]. This access to continuous health data marks a significant shift toward proactive and data-driven healthcare solutions.

Hyperglycemia, or high glucose concentration in the blood, occurs when the body cannot effectively regulate glucose levels. Hyperglycemia in the fasted state (i.e., no caloric intake for at least 8 h) is defined by a fasting plasma glucose level of ≥100 mg/dL (≥5.6 mmol/L), whereas hyperglycemia in the non-fasted state is generally defined as a blood glucose level (BGL) of ≥140 mg/dL (≥7.8 mmol/L) two hours after a meal [11, 12]. Lack of physical activity and relative overconsumption of carbohydrates are known to affect a person’s metabolism and ability to regulate glucose [13]. Other commonly cited risk factors for developing diabetes are obesity, overweight, and a higher-than-normal body mass index (BMI) [14]. Untreated hyperglycemia increases the risk of complications such as retinopathy, nephropathy, neuropathy, cardiovascular disease, stroke, poor limb circulation, and depression [15]. While hyperglycemia can affect anyone, individuals with prediabetes or diabetes are at a higher risk than healthy people of developing complications arising from frequent exposure to hyperglycemia [16, 17].
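The two thresholds above can be written as a small check. This is a minimal sketch: the function names are hypothetical, and the conversion factor of 18.016 mg/dL per mmol/L is an assumption based on the molar mass of glucose (about 180.16 g/mol), not a value taken from this paper.

```python
# Thresholds quoted in the text (mg/dL).
FASTING_THRESHOLD_MGDL = 100.0       # fasted state: at least 8 h without caloric intake
POSTPRANDIAL_THRESHOLD_MGDL = 140.0  # non-fasted state: two hours after a meal

# Assumption: 1 mmol/L of glucose is about 18.016 mg/dL.
MGDL_PER_MMOLL = 18.016


def mgdl_to_mmoll(bgl_mgdl: float) -> float:
    """Convert a blood glucose level from mg/dL to mmol/L."""
    return bgl_mgdl / MGDL_PER_MMOLL


def is_hyperglycemic(bgl_mgdl: float, fasted: bool) -> bool:
    """Return True if the reading meets the threshold for the given state."""
    threshold = FASTING_THRESHOLD_MGDL if fasted else POSTPRANDIAL_THRESHOLD_MGDL
    return bgl_mgdl >= threshold
```

Note that the same reading, for example 120 mg/dL, counts as hyperglycemia in the fasted state but not two hours after a meal.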

The Centers for Disease Control (CDC) estimates that 38% of American adults have prediabetes and 19% of them are unaware of their condition [

18]. To help prevent the increasing prevalence of hyperglycemia and prediabetes, the Food and Drug Administration (FDA) has approved the sale of Continuous Glucose Monitors (CGMs) over the counter in the U.S. in 2024 [

19]. This decision has made CGM devices more accessible to people with or without diabetes. Prediabetes can be reversed with proper lifestyle management, such as diet and physical activity [

20]. However, if left untreated, it can develop type 2 diabetes, which is an irreversible lifelong condition [

21]. Healthy individuals are generally expected to maintain their blood glucose levels (BGLs) within a range of 60 to 140 mg/dL [

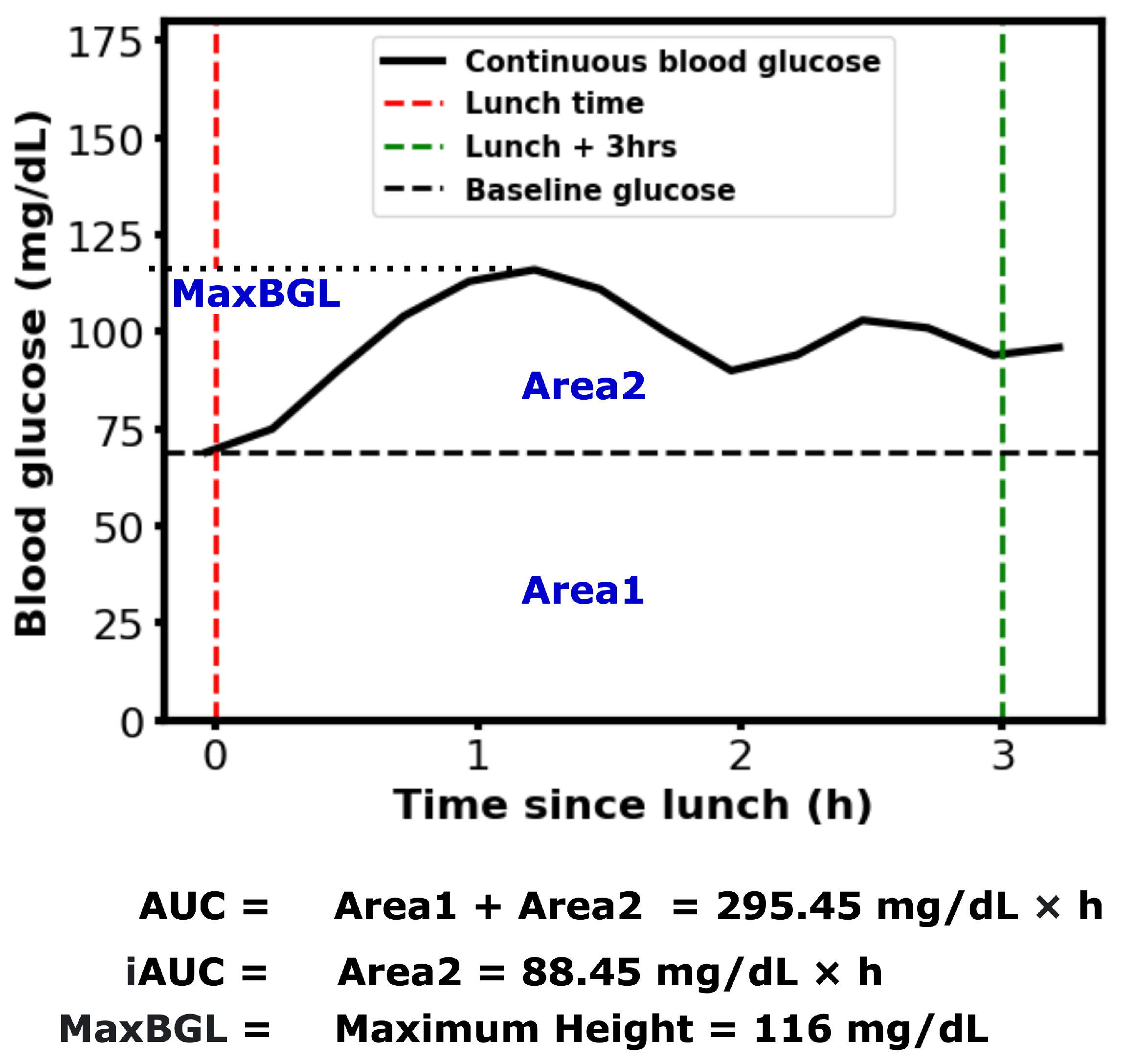

11]. However, temporary elevations above this range are common following certain meals. The area under the BGL curve during a specific post-meal period (e.g., 2–3 h) is referred to as the postprandial Area Under the Curve (AUC). Postprandial blood glucose and AUC are important indicators of BGL regulation and diabetes complications [

22,

23]. Numerous studies have explored methods for calculating AUC and the various factors influencing it [

24,

25,

26]. An example of calculating AUC, incremental AUC, and the maximum postprandial blood glucose level (MaxBGL) is shown in

Figure 1.
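To make the three quantities concrete, the following sketch computes them from a short series of post-meal readings. It is an illustration under stated assumptions, not necessarily the exact procedure used in this work: the trapezoidal rule, the use of the first reading as the baseline, and the clipping of values below the baseline are all assumptions, and the function names are hypothetical.

```python
from typing import Optional, Sequence


def trapezoid_auc(times_min: Sequence[float], glucose: Sequence[float]) -> float:
    """Total area under the glucose curve (mg/dL x min), trapezoidal rule."""
    if len(times_min) != len(glucose) or len(times_min) < 2:
        raise ValueError("need at least two paired (time, glucose) samples")
    area = 0.0
    for i in range(1, len(times_min)):
        dt = times_min[i] - times_min[i - 1]
        area += 0.5 * (glucose[i] + glucose[i - 1]) * dt
    return area


def incremental_auc(
    times_min: Sequence[float],
    glucose: Sequence[float],
    baseline: Optional[float] = None,
) -> float:
    """Area above the baseline only.

    Assumption: the baseline defaults to the first (pre-meal) reading, and
    readings below the baseline are clipped to zero before integrating,
    which is a simplification of segment-wise incremental AUC.
    """
    if baseline is None:
        baseline = glucose[0]
    excess = [max(g - baseline, 0.0) for g in glucose]
    return trapezoid_auc(times_min, excess)


def max_bgl(glucose: Sequence[float]) -> float:
    """Maximum postprandial blood glucose level (MaxBGL)."""
    return max(glucose)


# Made-up readings every 30 minutes over a 2 h post-meal window.
times = [0, 30, 60, 90, 120]
readings = [100, 140, 160, 130, 100]
print(trapezoid_auc(times, readings))    # 15900.0
print(incremental_auc(times, readings))  # 3900.0
print(max_bgl(readings))                 # 160
```

In this example the incremental AUC equals the total AUC minus the baseline rectangle (100 mg/dL × 120 min), because no reading falls below the baseline.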

Blood glucose forecasting using wearable sensor data is an active area of research that has been addressed with different machine learning and deep learning approaches, including ensemble methods, attention methods, and knowledge distillation [27, 28]. While the area under the glucose curve has gained attention as a promising metric for estimating hyperglycemia risk [29, 30], existing research has not yet explored how comprehensive lifestyle factors, including diet, physical activity, work routines, and baseline glucose parameters, can be integrated to predict both hyperglycemia and glucose curve characteristics.

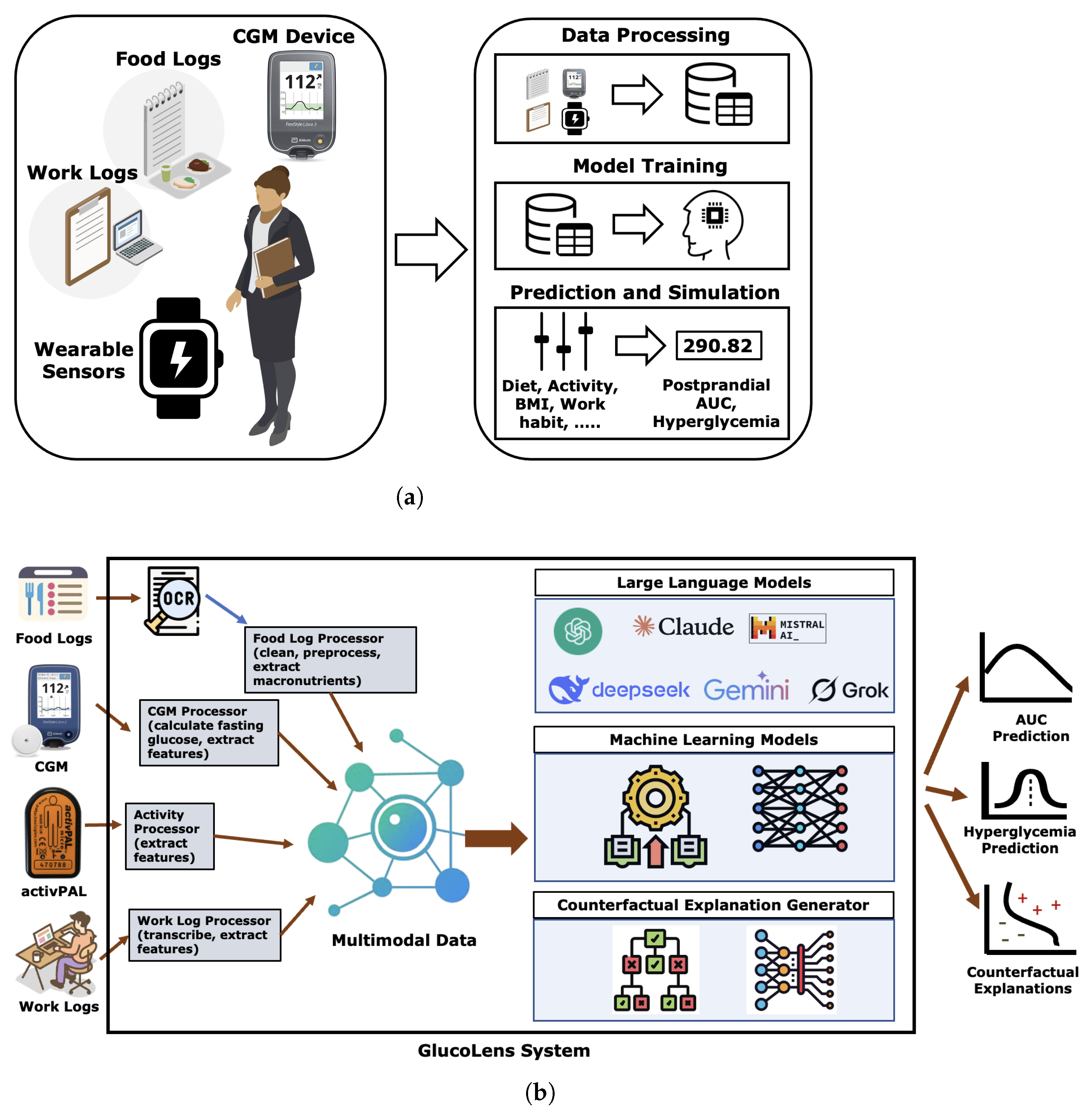

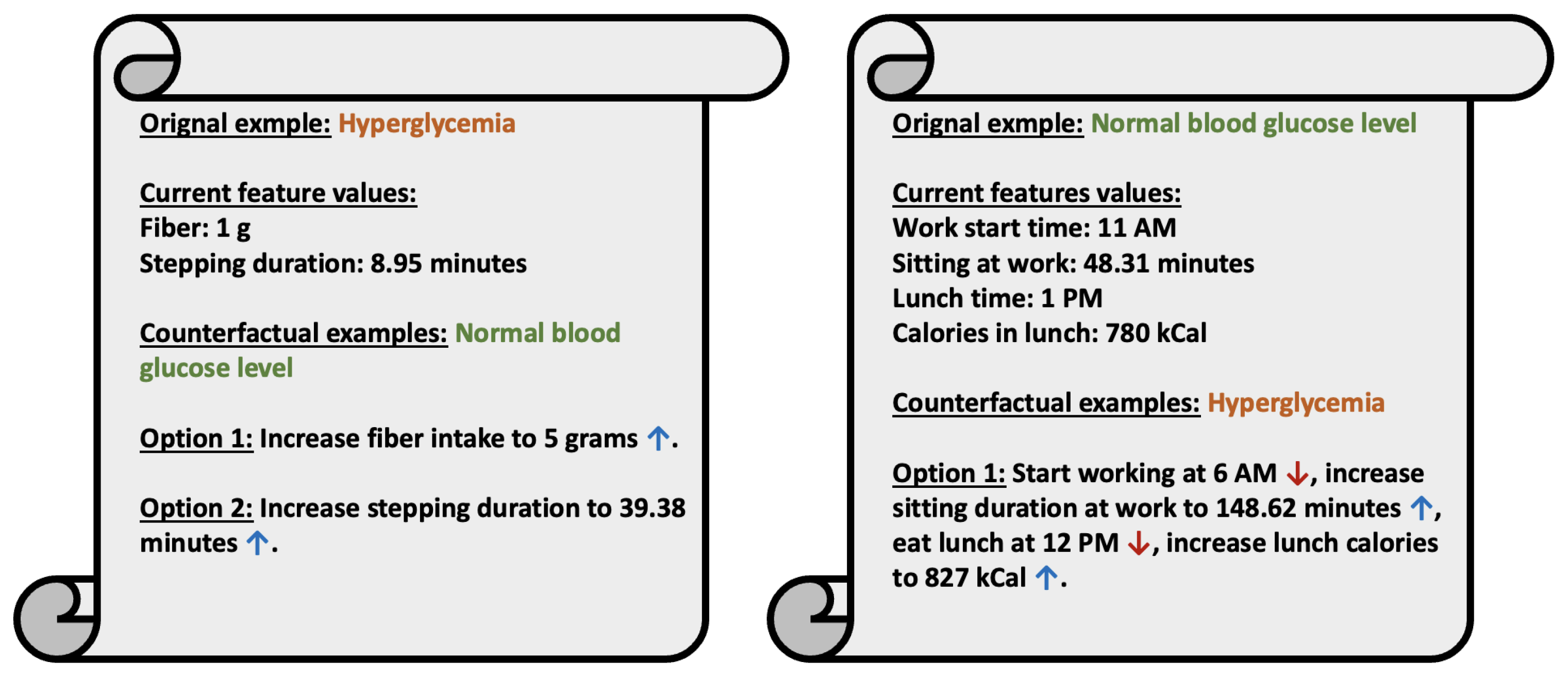

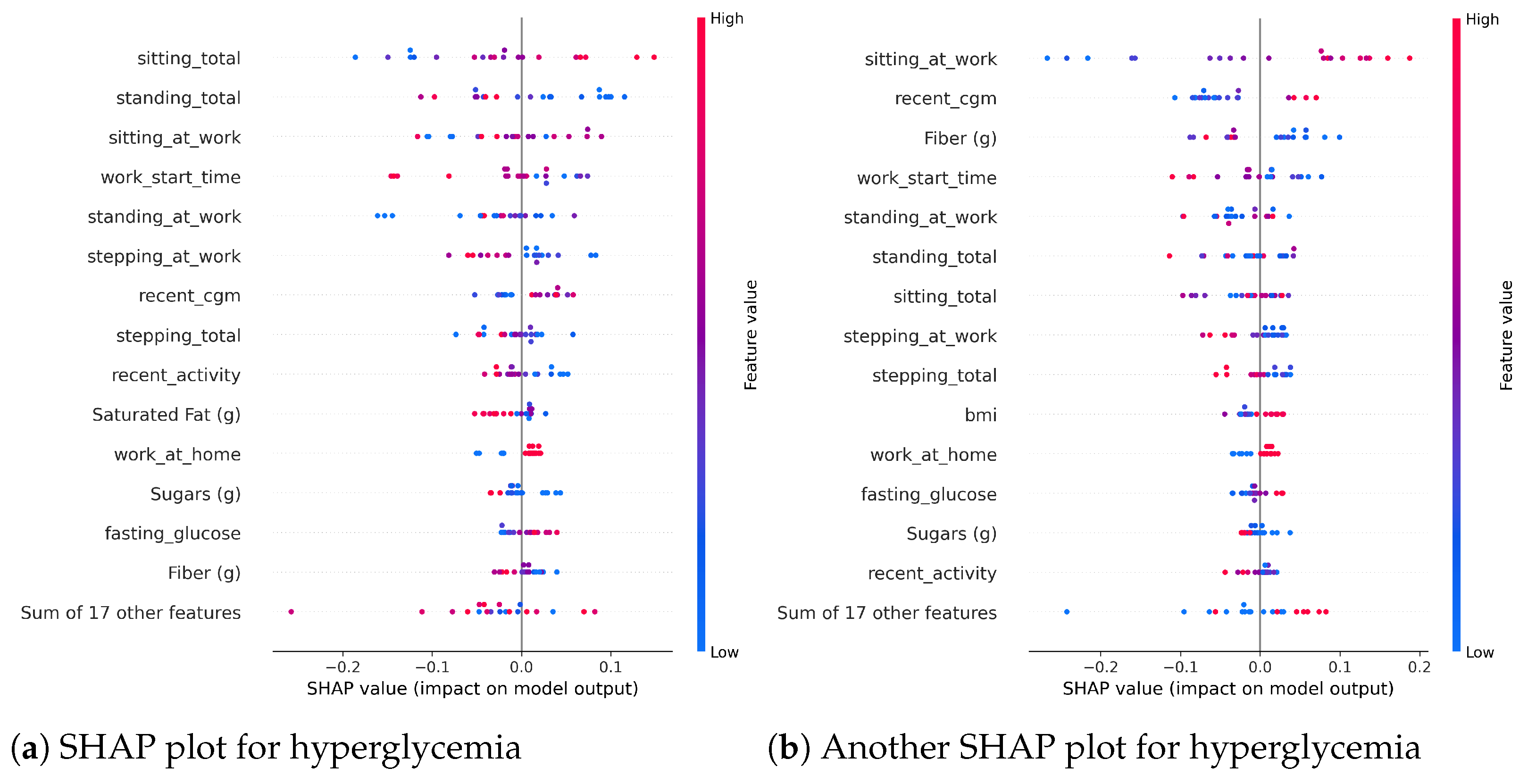

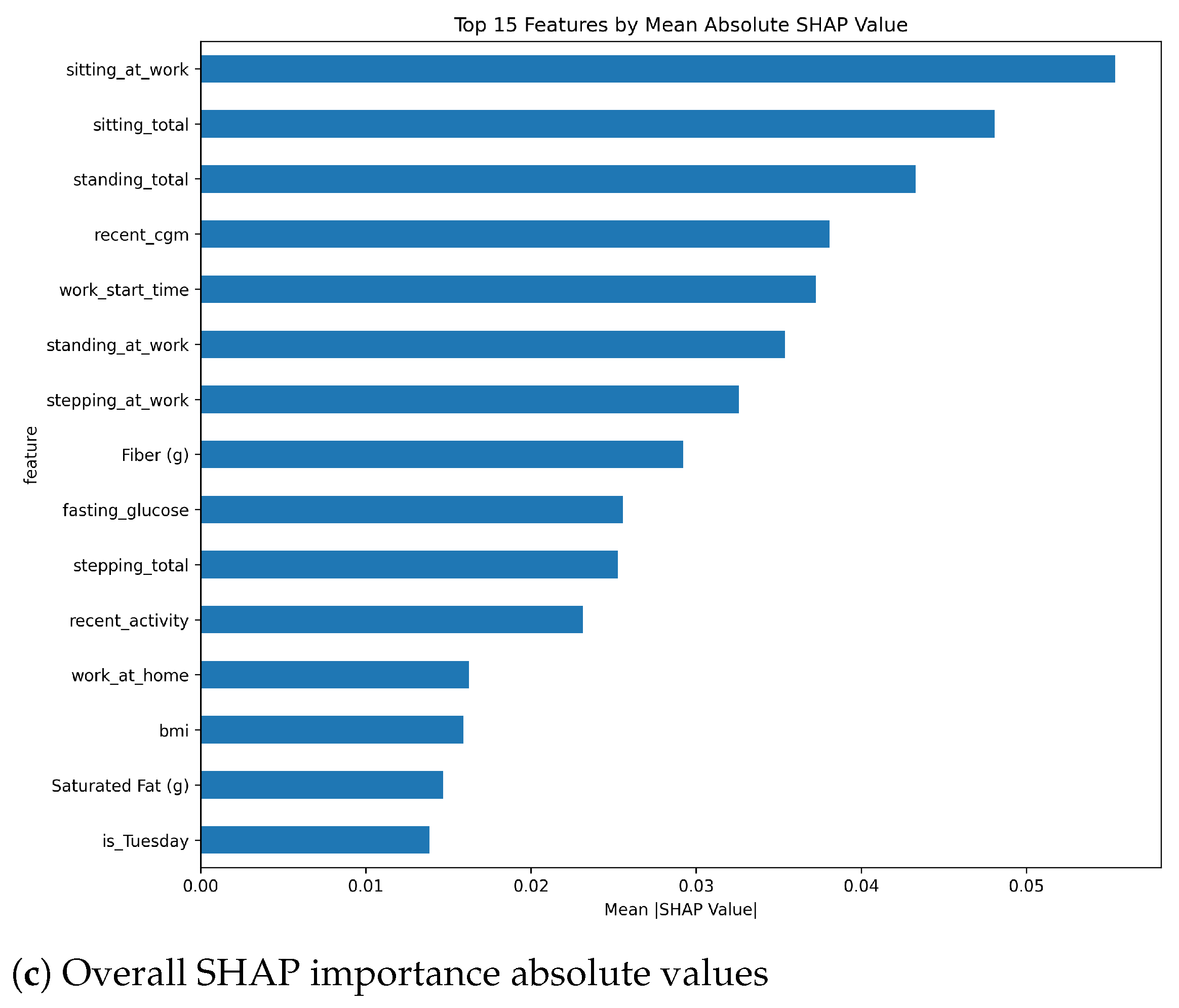

In this paper, we present GlucoLens, a machine learning framework that integrates continuous glucose monitoring (CGM) data, activity tracker measurements, and food and work logs to estimate postprandial area under the glucose curve (AUC) and predict hyperglycemia. To summarize, our contributions are as follows: (i) designing hyperglycemia and AUC prediction systems based on machine learning and large language models that combine wearable data, food logs, work logs, and recent CGM patterns; (ii) implementing GlucoLens for AUC and hyperglycemia prediction and for generating diverse counterfactual (CF) explanations; (iii) training and testing our models on a dataset collected in a clinical trial conducted by our team; (iv) evaluating the explanations using objective metrics and by comparing them with prior clinical research; and (v) providing a thorough discussion of our findings and how they advance research on hyperglycemia prediction. Code available: https://github.com/ab9mamun/GlucoLens (accessed on 29 June 2025).

4. Discussion

4.1. Summary of the Results

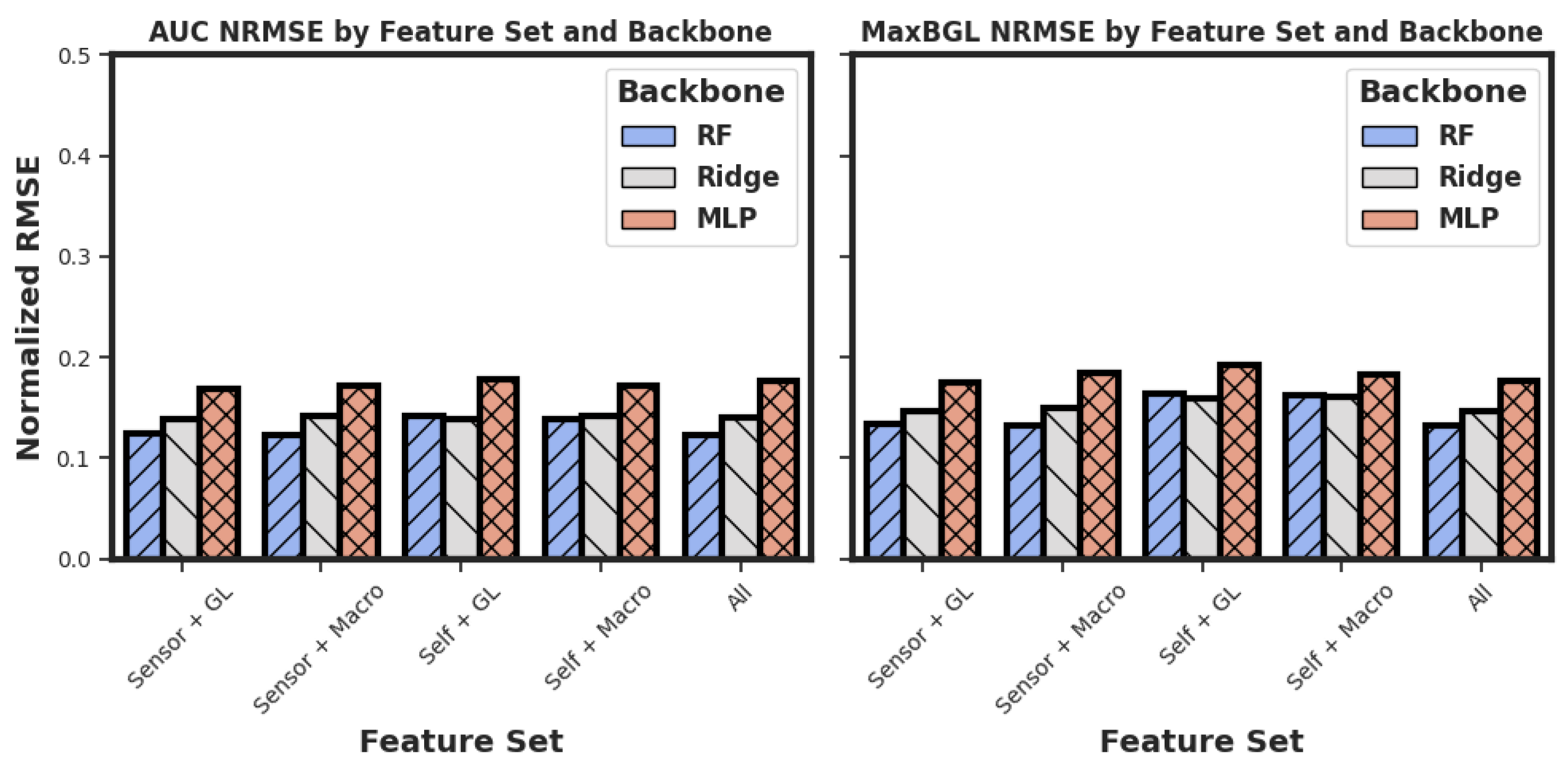

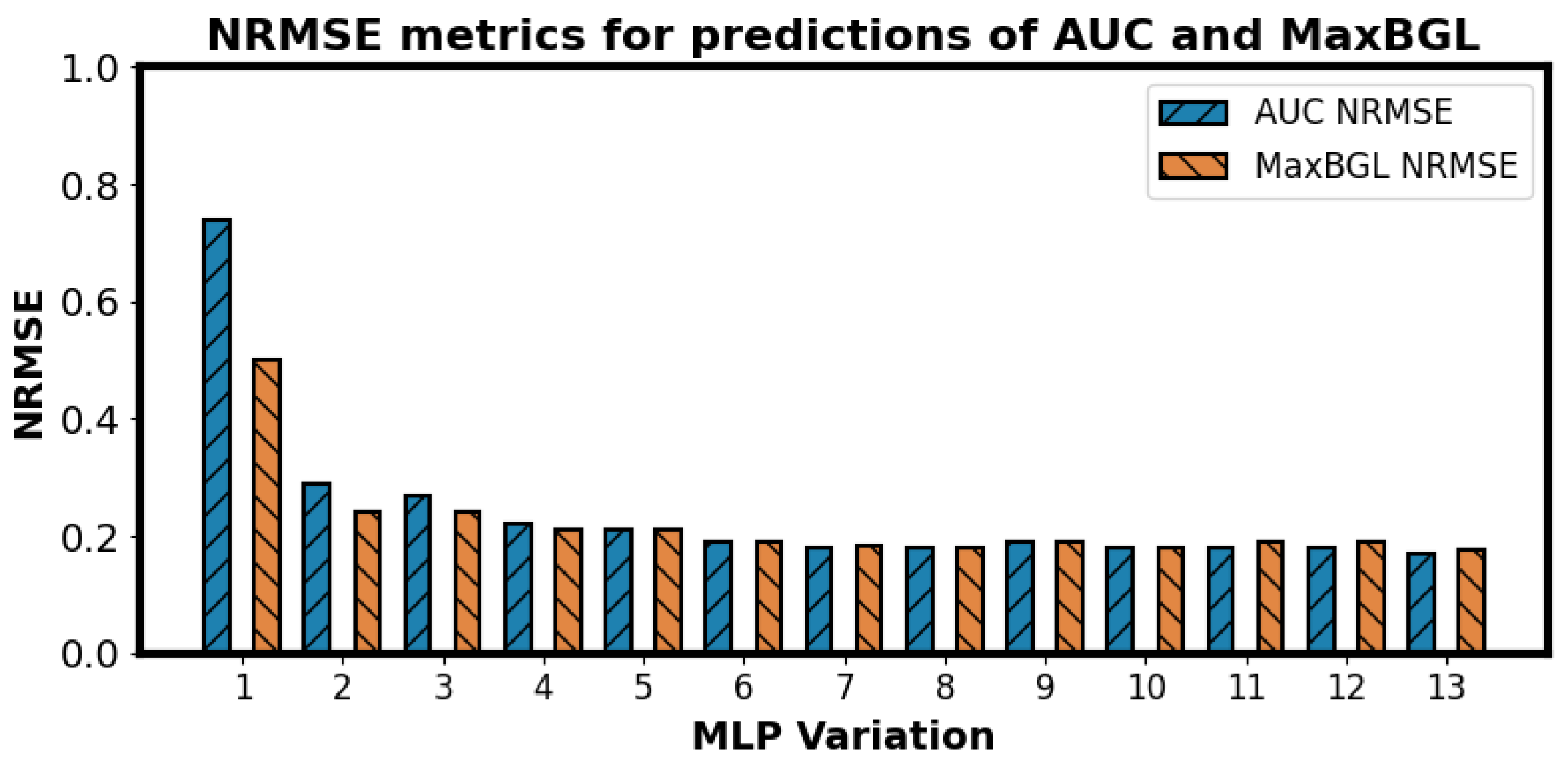

We have addressed the problem of estimating the postprandial area under the curve (AUC) and the maximum postprandial blood glucose level (MaxBGL), and of detecting hyperglycemia. In our experiments, Random Forest (RF) models outperformed Multilayer Perceptrons (MLPs), ridge regression, XGBoost, and TabNet models in AUC prediction, as shown in Table 4. We chose our features from five different feature sets, as illustrated in Table 1. Although the ‘All’ feature set feeds 31 features to the model, all of these features are derived from only four sources of data, which makes the required inputs easy to obtain when the model is used.

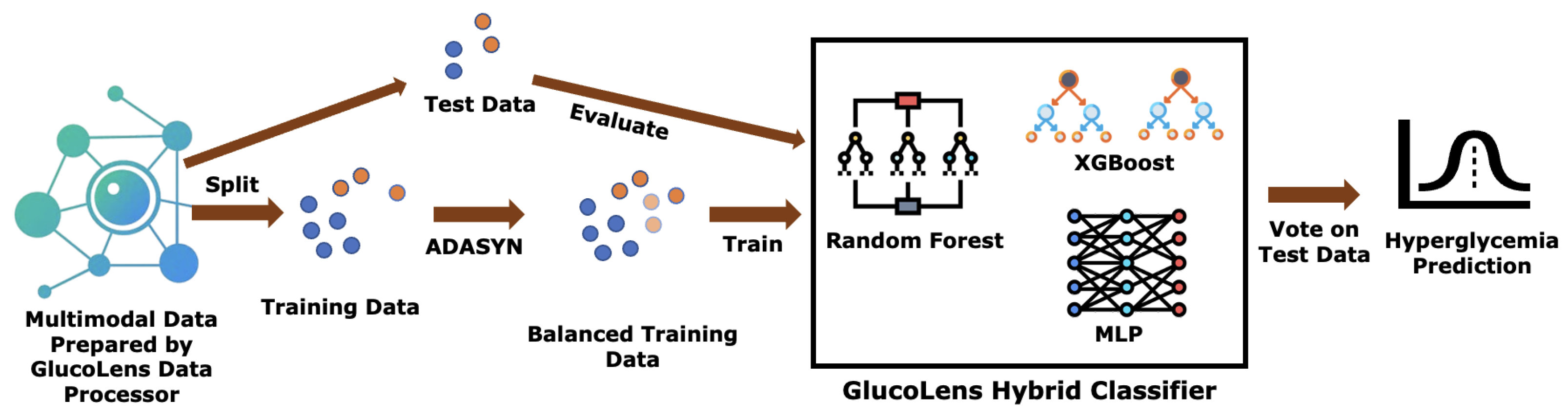

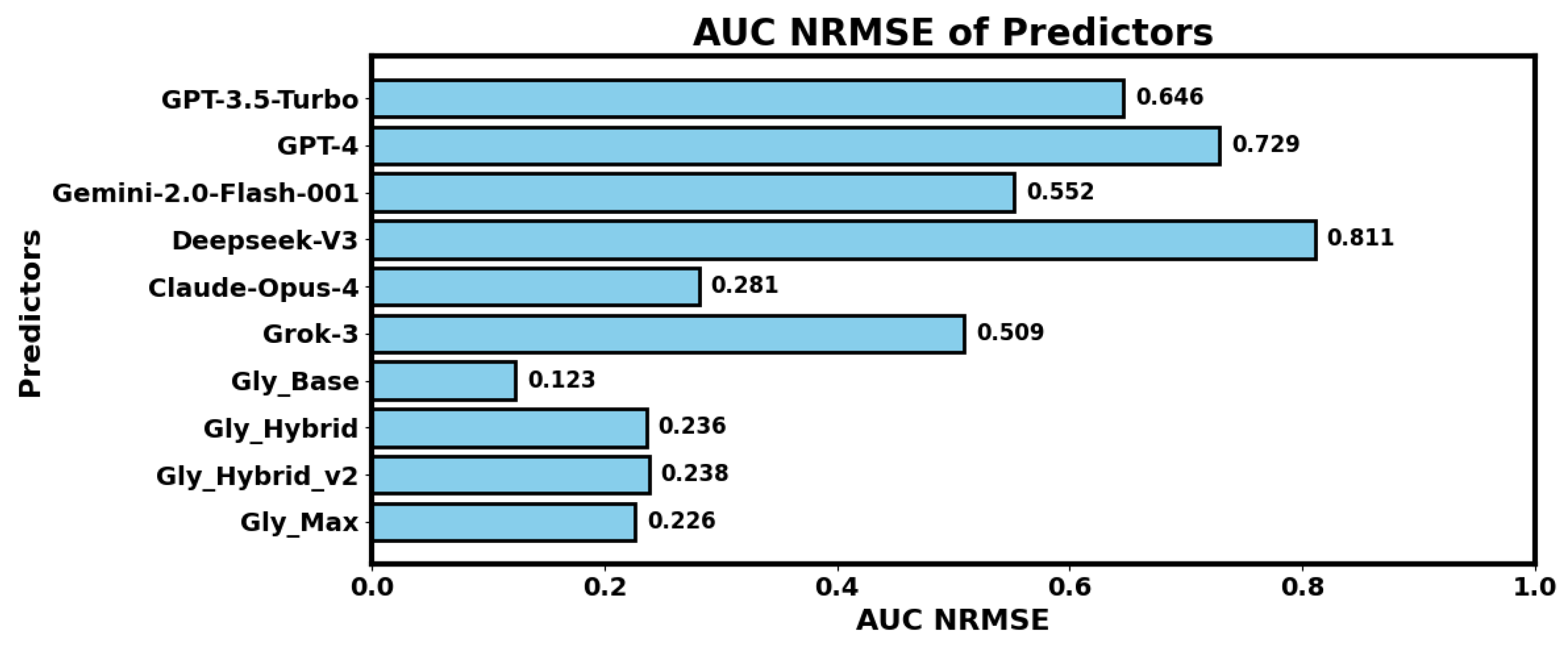

Our LLM-based experiments suggested that Claude Opus 4 outperforms GPT 3.5 Turbo, GPT 4, Gemini 2.0 Flash 001, DeepSeek V3, and Grok 3 in AUC prediction. We also found that hybrid models outperform LLM-only models, and that augmenting the training data with Gaussian noise further improves the performance of the hybrid models. However, the fully trainable base regressor backbones of our GlucoLens system outperform both the LLM-based and the hybrid versions of the predictors for AUC prediction.
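As an illustration of the Gaussian-noise augmentation mentioned above, the sketch below appends noisy copies of each training row. The details are assumptions: the text does not state the noise scale, the number of copies, or whether targets are perturbed. Here the noise standard deviation is a hypothetical fraction (`sigma_frac`) of each feature's standard deviation, and the targets are left unchanged.

```python
import random
from typing import List, Sequence, Tuple


def augment_with_gaussian_noise(
    rows: Sequence[Sequence[float]],
    targets: Sequence[float],
    copies: int = 2,
    sigma_frac: float = 0.05,
    seed: int = 0,
) -> Tuple[List[List[float]], List[float]]:
    """Return the original rows plus `copies` noisy duplicates of each row."""
    rng = random.Random(seed)
    n_features = len(rows[0])

    # Per-feature standard deviation, used to scale the noise.
    stds = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        mean = sum(column) / len(column)
        variance = sum((x - mean) ** 2 for x in column) / len(column)
        stds.append(variance ** 0.5)

    aug_rows = [list(row) for row in rows]
    aug_targets = list(targets)
    for _ in range(copies):
        for row, target in zip(rows, targets):
            noisy = [x + rng.gauss(0.0, sigma_frac * s) for x, s in zip(row, stds)]
            aug_rows.append(noisy)
            aug_targets.append(target)  # assumption: targets are not perturbed
    return aug_rows, aug_targets
```

Only the training split should be augmented in this way; the test set must stay untouched so that the reported error reflects real data.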

Finally, in hyperglycemia prediction, we find that GlucoLens with an ensemble of RF, XGB, and MLP classifiers outperforms the system with individual classifiers, achieving an accuracy of 79% and an F1 score of 0.749, as shown in Table 6, Table 7, and Table 8. Additionally, we show empirically that the test set performance of the GlucoLens hyperglycemia prediction system improves monotonically as the size of the training data increases.
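The sketch below shows one way three binary classifiers can be combined and scored with accuracy and F1. Majority (hard) voting is an assumption made for illustration; the text does not specify how the RF, XGB, and MLP outputs are combined. The prediction lists are made-up values, not results from this study.

```python
from typing import List, Sequence


def majority_vote(*model_preds: Sequence[int]) -> List[int]:
    """Combine binary (0/1) predictions from several models by majority vote."""
    n_models = len(model_preds)
    return [1 if 2 * sum(votes) > n_models else 0 for votes in zip(*model_preds)]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """F1 for the positive class (1 = hyperglycemia)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels and per-model predictions.
y_true = [1, 0, 1, 1, 0, 0]
rf_pred = [1, 0, 1, 0, 0, 1]
xgb_pred = [1, 0, 0, 1, 0, 0]
mlp_pred = [0, 1, 1, 1, 0, 0]

ensemble_pred = majority_vote(rf_pred, xgb_pred, mlp_pred)
```

In this toy case each model makes at least one mistake, but the mistakes fall on different samples, so the majority vote recovers every label. That is the intuition behind preferring an ensemble to any single classifier.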

4.2. Different Interpretation of the Results

Our best NRMSE of 0.123 implies that the predicted AUC values deviated from the actual AUC values by roughly 12.3% in a root-mean-square sense. To interpret the performance in another way, we also examined the percentage of test cases falling within a given error tolerance. From the actual and predicted AUC values, we calculated the proportions of test cases with errors within 5%, 10%, 15%, and 20%. Among the predictions made by our system, 33% of the cases had an error of less than 5%, 61% had an error of less than 10%, 81% had an error of less than 15%, and 93% had an error of less than 20%. Thus, only 19% of the cases had an error of more than 15%, and only 7% had an error of more than 20%. We expect that, with more training data, our system will be able to predict most cases within a small margin of error. The percentages of test cases within the 5%, 10%, 15%, and 20% error margins for the XGBoost model were 30%, 55%, 78%, and 92%, respectively, all lower than those of our Random Forest model. Therefore, our Random Forest model outperforms XGBoost on this metric as well.
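Both views of the error can be computed from paired actual and predicted values, as in the following sketch. The normalization is an assumption: here the RMSE is divided by the mean of the actual values, which is one common convention and may differ from the one used for the reported NRMSE. The sample numbers are made up.

```python
import math
from typing import Sequence


def nrmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """RMSE divided by the mean of the actual values (assumed normalization)."""
    n = len(y_true)
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    return math.sqrt(mse) / (sum(y_true) / n)


def fraction_within(
    y_true: Sequence[float], y_pred: Sequence[float], tolerance: float
) -> float:
    """Fraction of cases whose relative error is strictly below `tolerance`."""
    hits = sum(
        1 for t, p in zip(y_true, y_pred) if abs(p - t) / abs(t) < tolerance
    )
    return hits / len(y_true)


# Made-up AUC values; the relative errors are 4%, 8%, 13%, and 22%.
actual = [100.0, 200.0, 400.0, 500.0]
predicted = [104.0, 184.0, 452.0, 390.0]

for tol in (0.05, 0.10, 0.15, 0.20):
    print(f"within {tol:.0%}: {fraction_within(actual, predicted, tol):.0%}")
```

The tolerance view complements NRMSE because a few large errors can dominate a root-mean-square figure, while the tolerance fractions show how many individual predictions are usable.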

4.3. Alternative Feature Search Methods

We also explored ways of finding feature sets other than the five options presented in Table 1. One option would be an exhaustive search over feature subsets, but its computational cost is prohibitive: with 31 features, there would be $2^{31}$ (roughly 2.1 billion) possible subsets, which is infeasible to explore. We therefore performed an additional experiment to find effective feature subsets. Among all the models, our Random Forest (RF) was the best so far, with an NRMSE of 0.123. To find alternative subsets, we limited the number of features by restricting the number of leaf nodes in the RF models to 24, 48, and 96. The 48-leaf-node limit outperformed the others, achieving an NRMSE of 0.121 and surpassing our previous best result of 0.123. This approach offers a more principled feature selection method, as limiting leaf nodes helps identify features with higher information gain, reduces overfitting, and improves generalization.
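The size of the exhaustive search space can be checked with a few lines of arithmetic. With 31 features there are 2^31 subsets in total, or 2^31 − 1 if the empty set is excluded. The one-second training time per subset is a hypothetical figure chosen only to give a sense of scale.

```python
from math import comb

N_FEATURES = 31

# Every feature is either in or out of a subset; drop the empty subset.
total_subsets = 2 ** N_FEATURES - 1

# Cross-check: sum the number of subsets of each size k = 1..31.
subsets_by_size = [comb(N_FEATURES, k) for k in range(1, N_FEATURES + 1)]
assert sum(subsets_by_size) == total_subsets

# Hypothetical cost: one second to train and evaluate a model per subset.
SECONDS_PER_FIT = 1.0
SECONDS_PER_YEAR = 3600 * 24 * 365
years_needed = total_subsets * SECONDS_PER_FIT / SECONDS_PER_YEAR

print(f"{total_subsets:,} non-empty subsets")    # 2,147,483,647 non-empty subsets
print(f"about {years_needed:.0f} years at 1 s per subset")  # about 68 years
```

Even under this optimistic assumption the search would take decades on a single machine, which motivates constraining model complexity instead of enumerating subsets.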

4.4. Reflection on LLM-Based Prediction

Our experimental results indicate that current large language models (LLMs) did not outperform classical machine learning approaches in predicting hyperglycemia. While LLMs offer promising generalization and reasoning capabilities, we believe they are not yet sufficiently optimized for accurate physiological prediction tasks such as postprandial glucose estimation. Despite their current limitations, the LLM-based interface we developed provides a flexible and scalable framework that can be reused or extended as more advanced LLMs become available. Our initial motivation for experimenting with multiple LLMs was to evaluate whether combining their outputs, through a hybrid or ensemble method, could improve prediction accuracy. However, our findings suggest that using the single best-performing LLM is more effective than relying on a mixture of models.

In practice, combining multiple LLMs adds only marginal inference overhead, as the LLMs are not retrained or fine-tuned in this process. Furthermore, it does not create an additional burden on the end-user. Our future work will focus on optimizing LLMs with a number of parameters that is manageable for academic research and will explore whether specialized and fine-tuned LLMs used for lightweight, single-model inference are more practical.

Future work may revisit this approach as LLMs continue to evolve, especially with the emergence of models better aligned with domain-specific reasoning and capable of leveraging structured and temporal health data more effectively.

5. Limitations and Future Works

The proposed system addresses an important problem in metabolic health, namely glucose control in individuals with prediabetes. However, we acknowledge several limitations. First, the LLM-based models did not outperform the classical models, although we believe that future generations of LLMs will perform better with the same interface. Second, because of a lack of existing research, our prompt design was based on simplicity and interpretability; in the future, we plan to explore different prompt designs and to fine-tune small language models for better results. Finally, the interventions suggested by the counterfactual explanation tools will need to be evaluated by deploying them in clinical trials. Additionally, we would like to design a digital twin solution to simulate the efficacy of the generated counterfactual interventions.

As a next step, we plan to explore the development of personalized glucose prediction models that account for individual variations in behavior and dietary habits. A potential approach involves a two-phase framework. In the initial training phase, each user would wear a continuous glucose monitor (CGM) for a period of 1–2 weeks, during which their food intake and daily activities are logged. These data would be used to train a personalized model capable of capturing the user’s unique glycemic response patterns. Once trained, the model would enter the deployment phase, where it could predict postprandial glucose levels and assess hyperglycemia risk without requiring ongoing CGM usage. Instead, predictions would rely solely on behavioral and dietary inputs, offering actionable insights and personalized lifestyle recommendations.

We also envision integrating automated tools that can extract caloric and macronutrient information from food photos or meal descriptions, which would streamline data collection and reduce user burden. Additionally, future work will address ethical and data privacy concerns associated with using personal health data, with an emphasis on secure data handling and privacy-preserving model deployment strategies.