1. Introduction

Object detection is a core technique in computer vision that aims to recognize and localize objects of interest in images or videos, helping to extract key information from complex visual environments. Traditional object detection algorithms rely on hand-crafted features and suffer from drawbacks such as high computational cost, poor robustness, and low accuracy. In recent years, deep learning has gained popularity [1] because it can learn more robust and generalizable deep feature representations. In particular, object detection has made impressive progress thanks to convolutional neural networks (CNNs) and has become an essential part of many real-world applications. The three mainstream detectors are Faster RCNN [2], You Only Look Once (YOLO) [3], and Single Shot Multi-box Detector (SSD) [4]. However, the superior performance of advanced detectors relies on the availability of a large amount of high-quality annotated data, and acquiring such data is time-consuming and labour-intensive. Additionally, well-trained detectors may suffer a sudden drop in performance when processing new data or tasks because of the domain shift problem.

The aforementioned problems seriously affect the application and deployment of detection models when data distributions differ. Unsupervised Domain Adaptation (UDA) [5,6,7] has been proposed to deal with domain shift and to reduce the dependence of model training on target labels. It aims to transfer knowledge from the source to the target and to reduce the distribution discrepancy between domains, which enhances the model’s generalization ability and discriminative capability. Deep UDA methods can be divided into two categories. Moment matching methods [8,9,10] explicitly match the feature distributions across domains during network training based on pre-defined metrics. Adversarial learning methods [11,12,13] implicitly learn domain-invariant representations in an adversarial paradigm.

Unlike classification and semantic segmentation, the object detection task predicts both the bounding box location and the corresponding object category [14]. This brings additional challenges for cross-domain object detection, and has likewise attracted considerable attention. Recent studies have made significant efforts to improve cross-domain detection capabilities. Following the first attempt at cross-domain object detection, domain adaptive Faster RCNN [15], most existing domain adaptive object detection (DAOD) methods are still built on the two-stage detector Faster RCNN [16,17,18,19,20,21,22,23]. Only a few works have utilized one-stage detectors (e.g., SSD [4] and FCOS [24]) to improve computational efficiency [25,26,27,28], and some methods have been proposed for lightweight and practical use based on the YOLO series [29,30,31,32]. Overall, the development of DAOD methods is closely tied to the key technologies in object detection and domain adaptation.

Since the scene layout, the number of objects, the patterns between objects, and the background may differ considerably across domains in object detection tasks, a potential problem in DAOD is that blindly and directly adapting feature distributions can lead to negative transfer, which degrades the model’s cross-domain performance. This is also why strategies such as prototype alignment and entropy regularization, which work well in image classification or segmentation, become inapplicable in object detection. In addition, some methods use image generation techniques to introduce auxiliary data [22,31], or adopt a student–teacher network paradigm [32,33] for training. The former prevents end-to-end training, and the latter significantly increases the model’s complexity and the difficulty of training. Both types of methods greatly restrict the training and application scenarios of detectors. Finally, the efficiency and accuracy of the dominant Faster RCNN are outdated, making it unsuitable for resource-limited and time-critical real-world applications [32].

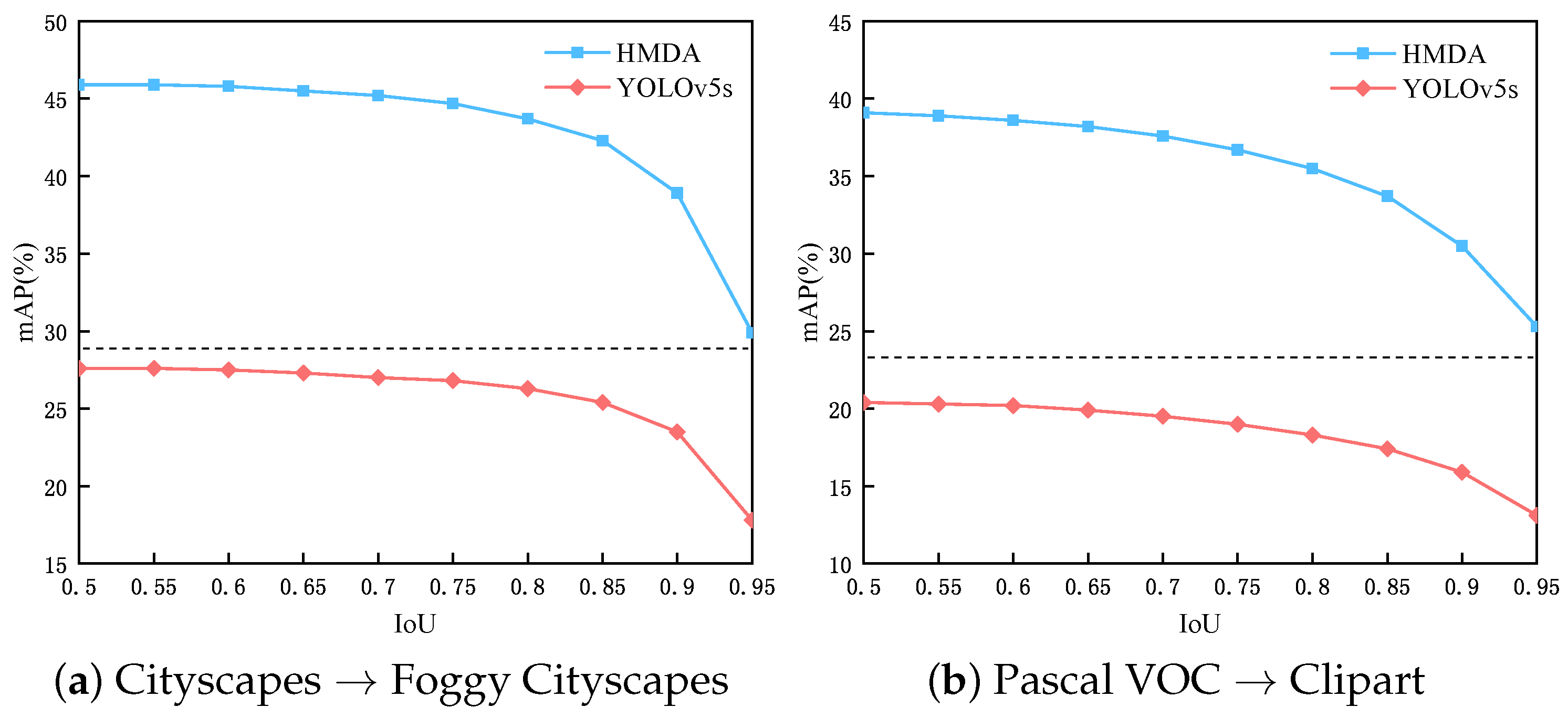

For the above reasons, we propose a novel Hierarchical Multi-scale Domain Adaptive method, HMDA-YOLO, based on the simple but effective one-stage YOLOv5 [34] detector. HMDA-YOLO is easy to implement, achieves competitive performance, and consists of a hierarchical backbone adaptation and a multi-scale head adaptation. Considering that features at various depths carry different representation information, we design the hierarchical backbone adaptation strategy, which promotes comprehensive distribution alignment and suppresses negative transfer. Specifically, we adopt pixel-level adaptation, image-level adaptation, and weighted image-level adaptation for the adversarial training of shallow-level, middle-level, and deep-level feature maps, respectively. To make full use of the rich discriminative information in the feature maps to be detected, we further design the multi-scale head adaptation strategy. It performs pixel-level adaptation at each detection scale, which reduces local instance discrepancy and the impact of background noise. Note that, in this paper, “pixel-level” refers to each location of the corresponding feature map, whereas “image-level” treats the entire feature map as a whole. We empirically verify HMDA-YOLO on four cross-domain object detection scenarios covering different domain shifts. Experimental results and analyses demonstrate that HMDA-YOLO significantly improves the model’s cross-domain capability and achieves competitive performance with high detection efficiency.

The contributions of this paper can be summarized as follows: (1) we propose a simple but effective DAOD method, HMDA-YOLO, for more accurate and efficient cross-domain detection; (2) a hierarchical adaptation strategy for the backbone network as well as a multi-scale adaptation strategy for the head network are designed to simultaneously ensure the model’s generalization and discriminating capability; (3) HMDA-YOLO can achieve competitive performance on several cross-domain object detection benchmarks, compared with state-of-the-art DAOD methods.

The remainder of this paper is organized as follows: Section 2 reviews the techniques related to DAOD. Section 3 presents the technical details of HMDA. Section 4 presents the experimental results and analysis. Finally, Section 5 provides a summary.

3. Method

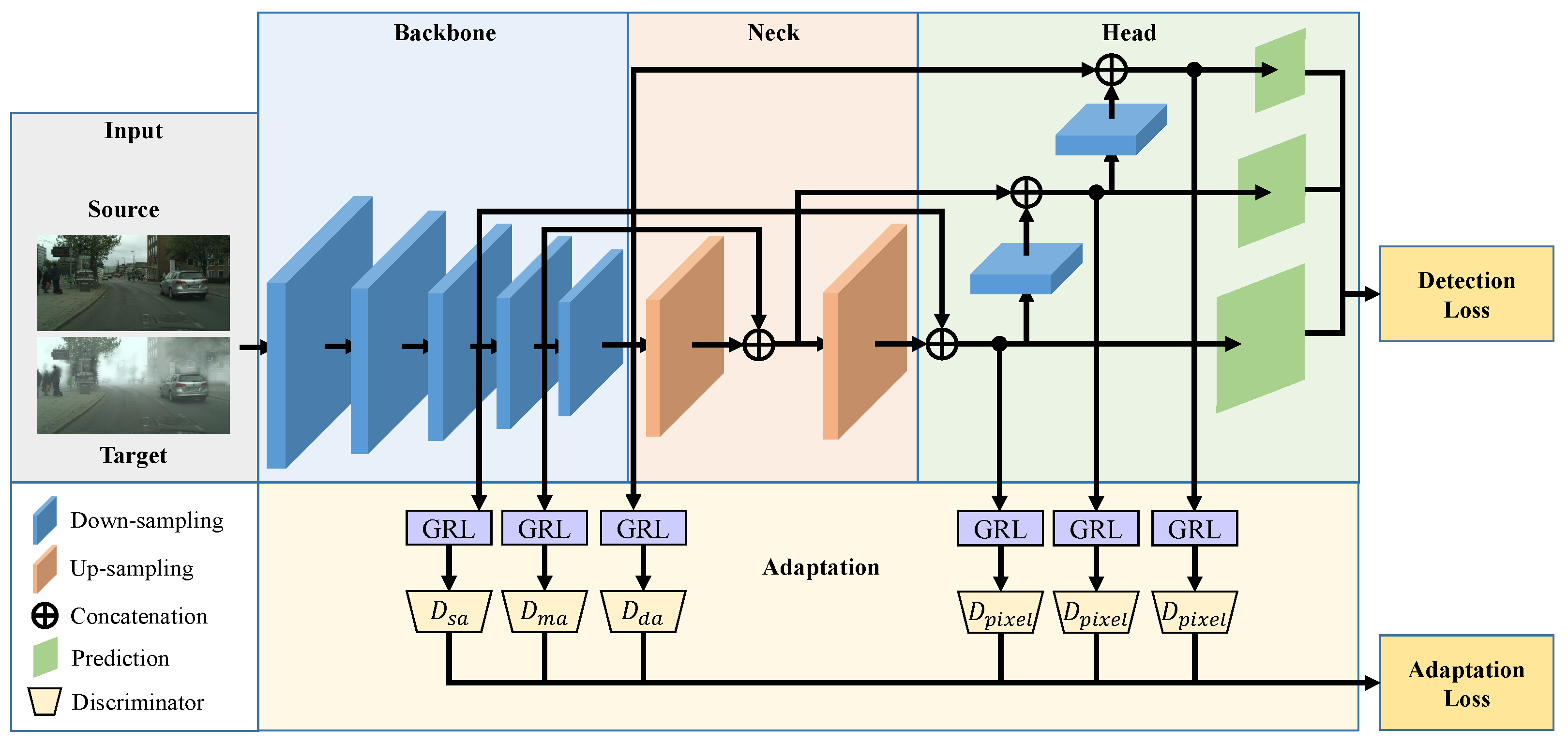

In this section, we introduce the technical details of the proposed HMDA. An overview of HMDA based on the YOLOv5 architecture is shown in Figure 1. HMDA-YOLO hierarchically extracts the deep features of the image and performs specific adaptations at different depths by embedding multiple domain discriminators, which are distributed across the backbone network and the head network of YOLOv5. More details are described below.

3.1. Preliminary Knowledge

We first briefly introduce the YOLOv5 framework. YOLOv5 is a simple but effective one-stage detector that has been widely used in real-world applications. It mainly contains three parts, as shown in the top part of Figure 1. (1) The backbone network is responsible for deep feature extraction. CSPDarknet53 [37] is the most commonly used backbone network, composed of convolutional modules, C3 modules, and the Spatial Pyramid Pooling (SPP) module [41]. (2) The neck network further processes the extracted features and performs feature fusion to enhance the representative capability of the features. It achieves top-down and bottom-up feature fusion by using the Feature Pyramid Network (FPN) [42] and the Path Aggregation Network (PAN) [43]. (3) The head network implements multi-scale detection of small, medium, and large objects.

In cross-domain object detection tasks, there are two domains: a labeled source domain with $N_s$ images, denoted as $\mathcal{D}_s = \{(x_i^s, b_i^s, c_i^s)\}_{i=1}^{N_s}$, where $x_i^s$ denotes the source image, $b_i^s$ is the coordinate of the bounding box, and $c_i^s$ is the object category; and an unlabeled target domain with $N_t$ images, denoted as $\mathcal{D}_t = \{x_j^t\}_{j=1}^{N_t}$. The label spaces of the source and target domains are identical, but their data distributions are different. The goal of domain adaptive object detection is to train a detector that reduces the domain shift and learns transferable representations, so that the model generalizes well to the target objects.

3.2. Hierarchical Multi-Scale Adaptation

Adversarial DAOD methods usually learn domain-invariant feature representations with the help of a domain discriminator (i.e., a domain classifier). Assume that the framework is composed of a feature extractor $F$ and a domain discriminator $D$. The feature extractor $F$ tries to confuse the domain discriminator $D$, which means maximizing the domain classification loss. Conversely, the domain discriminator $D$ is trained to distinguish the source samples from the target samples, which means minimizing the domain classification loss. The feature extractor $F$ also learns to minimize the source supervision loss. The adversarial loss can be written as follows:

$$\max_{F}\min_{D}\ \left[\mathrm{err}_s\big(D(F(x))\big) + \mathrm{err}_t\big(D(F(x))\big)\right]$$

where $\mathrm{err}_s(\cdot)$ and $\mathrm{err}_t(\cdot)$ denote the expected domain classification error over the source and target domain, respectively. By utilizing the Gradient Reverse Layer (GRL) [11], the min–max adversarial training can be unified in one back-propagation.
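The role of the GRL can be illustrated with a minimal scalar sketch. Everything here is an illustrative assumption rather than the implementation used in HMDA-YOLO: the one-weight "extractor" `w`, the one-weight "discriminator" `v`, the helper `domain_step`, and the reversal strength `lam` are hypothetical, and the gradients are derived by hand instead of by an autograd framework. The layer is the identity in the forward pass and negates (and scales) the gradient in the backward pass, so a single descent step lowers the domain loss for the discriminator while raising it for the extractor.

```python
import math


class GradientReversal:
    """Identity in the forward pass; multiplies the incoming gradient by -lam in the backward pass."""

    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, x):
        return x

    def backward(self, grad_output):
        return -self.lam * grad_output


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def domain_step(x, d, w, v, lam=1.0):
    """Hand-derived backward pass for a scalar toy model (hypothetical helper).

    Feature f = w * x (extractor), prediction p = sigmoid(v * f) (discriminator),
    loss = BCE(p, d) with d = 0 for source and d = 1 for target.
    Returns (loss, grad_w, grad_v).
    """
    grl = GradientReversal(lam)
    f = w * x
    p = sigmoid(v * grl.forward(f))
    loss = -(d * math.log(p) + (1 - d) * math.log(1.0 - p))
    dloss_dz = p - d                      # derivative of BCE w.r.t. the logit z = v * f
    grad_v = dloss_dz * f                 # discriminator gradient: ordinary descent direction
    grad_f = grl.backward(dloss_dz * v)   # gradient is reversed before it reaches the extractor
    grad_w = grad_f * x
    return loss, grad_w, grad_v
```

With `lam = 0` the extractor receives no adversarial signal at all, which makes the reversal strength a natural knob for how strongly domain confusion is enforced.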

For object detection tasks, each activation on the feature map corresponds to a patch of the input image, and detectors will perform classification and regression on each location. Therefore, the domain discriminator can implement not only image-level distribution alignment, but also pixel-level distribution alignment. Since HMDA-YOLO hierarchically aligns the feature distribution in the backbone network and the head network, we will focus on these two parts.

3.2.1. Hierarchical Backbone Adaptation

The backbone network hierarchically adapts the output features of each C3 module (i.e., layers 4, 6, and 9 in the YOLOv5 framework) in different ways, according to the representation information of the features at different depths.

Shallow features capture a lot of detailed information (e.g., edges, colors, textures, and angles), which not only facilitates the detection of small objects but also helps to align the local features of cross-domain objects. According to [44], the least-squares loss function can stabilize the training of the domain discriminator and is advantageous for aligning shallow representations. Therefore, we use it as the criterion for shallow-level feature adaptation. The domain discriminator $D_1$ is a fully convolutional network with $1 \times 1$ kernels that predicts the pixel-level domain label of the source and target feature maps. The shallow-level adaptation loss can be formulated as follows:

$$\mathcal{L}_{sha} = \frac{1}{HW}\sum_{w=1}^{W}\sum_{h=1}^{H}\left[D_1(f_1^s)_{wh}^{2} + \left(1 - D_1(f_1^t)_{wh}\right)^{2}\right]$$

where $f_1^s$ and $f_1^t$ are the source and target shallow-level feature maps of the input image $x$, respectively, and $D_1(\cdot)_{wh}$ is the domain prediction at location $(w, h)$ of the corresponding $W \times H$ feature map.
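As a small numerical illustration of pixel-level least-squares alignment, the sketch below averages the squared distance between each per-location domain prediction and its domain label. It assumes the label convention 0 for source and 1 for target, represents a discriminator output map as a plain nested list, and uses a hypothetical function name; it is not the authors' code.

```python
def least_squares_pixel_loss(pred_map, is_source):
    """Mean least-squares domain loss over all locations of one prediction map.

    pred_map: 2-D list of discriminator outputs in [0, 1], one value per location.
    is_source: True pushes predictions towards 0, False pushes them towards 1.
    """
    target = 0.0 if is_source else 1.0
    values = [(p - target) ** 2 for row in pred_map for p in row]
    return sum(values) / len(values)


# Toy 2 x 2 prediction maps for one source and one target image (made-up numbers).
source_pred = [[0.1, 0.2], [0.0, 0.3]]
target_pred = [[0.9, 0.8], [1.0, 0.7]]
shallow_loss = (least_squares_pixel_loss(source_pred, True)
                + least_squares_pixel_loss(target_pred, False))
```

Because the penalty is quadratic rather than logarithmic, a confidently wrong location contributes a bounded amount, which is one intuition for the more stable discriminator training mentioned above.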

The middle-level feature contains information about localized shapes and simple objects, and the Binary Cross Entropy (BCE) loss is used to calculate its adversarial loss. The domain discriminator $D_2$ differs from $D_1$ in that $D_2$ treats each feature map as a whole and predicts an image-level domain label. Specifically, $D_2$ is composed of common convolutional layers, a global average pooling layer, and a fully connected layer. The middle-level adaptation loss can be formulated as follows:

$$\mathcal{L}_{mid} = -\left[d \log D_2(f_2) + (1 - d)\log\left(1 - D_2(f_2)\right)\right]$$

where $f_2$ is the middle-level feature map of the input image $x$, and $D_2(f_2)$ is the image-level domain prediction of $f_2$. $d$ is the ground truth domain label, which is 0 for the source domain and 1 for the target domain in this paper.

The scene layout, the number of objects, the patterns between objects, and the background may be quite different across domains. According to [16], full alignment of the feature distributions is likely to hurt performance for larger shifts. As an example, a source domain that contains rural images and a target domain that contains urban images may have a large domain discrepancy, even if they share the same object categories. In this case, blind alignment may lead to negative transfer and impair the model’s cross-domain capability. HMDA-YOLO alleviates this problem by adjusting the weights of hard-to-classify and easy-to-classify samples to improve the model’s cross-domain performance more robustly. Specifically, for the deep-level feature adaptation, the BCE loss is extended with the focal loss [45] to re-weight different samples. The domain discriminator $D_3$ is trained to distinguish the source from the target and is similar to $D_2$. The deep-level adaptation loss can be written as follows:

$$\mathcal{L}_{deep} = -\left[d\left(1 - D_3(f_3)\right)^{\gamma}\log D_3(f_3) + (1 - d)\,D_3(f_3)^{\gamma}\log\left(1 - D_3(f_3)\right)\right]$$

where $f_3$ is the deep-level feature map of the input image $x$, and $d$ is the ground truth domain label (0 for the source domain and 1 for the target domain). $\gamma$ is the parameter that controls the weight of different samples. If a sample is easy to classify (i.e., far from the decision boundary), it should have a low loss to avoid negative transfer. Conversely, if it is hard to classify, it should have a high loss for domain confusion. Therefore, the value of $\gamma$ needs to be greater than 1 to assign a low weight to the easy-to-classify samples and a high weight to the hard-to-classify samples.
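The re-weighting effect can be checked numerically with a short sketch. It assumes the standard focal-loss form applied to an image-level domain prediction, with the label convention 0 for source and 1 for target; the function name and the default `gamma = 2.0` are illustrative choices, not values taken from the paper. Setting `gamma = 0` recovers the plain BCE loss, which makes the down-weighting of easy samples easy to see.

```python
import math


def focal_domain_loss(p, d, gamma=2.0):
    """Focal BCE for one image-level domain prediction.

    p: discriminator output in (0, 1), read as the probability of the target domain.
    d: ground truth domain label, 0 for source and 1 for target.
    gamma: focusing parameter; gamma = 0 gives the plain BCE loss.
    """
    p_true = p if d == 1 else 1.0 - p          # probability assigned to the true domain
    return -((1.0 - p_true) ** gamma) * math.log(p_true)


# An easy target sample (classified with 0.9 confidence) and a hard one (0.6 confidence).
easy_ratio = focal_domain_loss(0.9, 1, gamma=2.0) / focal_domain_loss(0.9, 1, gamma=0.0)
hard_ratio = focal_domain_loss(0.6, 1, gamma=2.0) / focal_domain_loss(0.6, 1, gamma=0.0)
```

In this toy case the easy sample keeps about 1% of its BCE loss while the hard sample keeps about 16%, so the samples near the decision boundary dominate the adversarial signal.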

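By combining the hierarchical domain adaptation losses, HMDA-YOLO promotes comprehensive distribution alignment and suppresses negative transfer. The overall adaptation loss of the backbone network sums the shallow-level, middle-level, and deep-level adaptation losses:

$$\mathcal{L}_{bac} = \mathcal{L}_{sha} + \mathcal{L}_{mid} + \mathcal{L}_{deep}$$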

3.2.2. Multi-Scale Head Adaptation

The feature maps of the head network play an important role in multi-scale object detection, which are crucial for object recognition and localization. Therefore, HMDA-YOLO proposes to implement fine-grained pixel-level adaptation at each scale for efficient cross-domain training, based on the rich discriminative information of the feature maps to be detected. On the one hand, multi-scale adaptation can reduce the local instance divergence and guarantee the model’s multi-scale detection capability. On the other hand, it helps to distinguish between the object and the background, which reduces the impact of background noise. In this way, the model can learn more robust and generalizable representations to improve the detection accuracy.

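Specifically, the head adaptation is performed at three different scales, i.e., layers 17, 20, and 23 in the YOLOv5 framework. The pixel-level domain discriminator $D_h^s$ is similar to the shallow-level discriminator $D_1$ and predicts pixel-level domain labels, and the BCE loss function is used for loss calculation. The adaptation loss at each scale can be written as follows:

$$\mathcal{L}_{head}^{s} = -\frac{1}{H_s W_s}\sum_{w=1}^{W_s}\sum_{h=1}^{H_s}\left[d \log D_h^s(f_h^s)_{wh} + (1 - d)\log\left(1 - D_h^s(f_h^s)_{wh}\right)\right]$$

where $f_h^s$ denotes the $W_s \times H_s$ feature map to be detected at each scale and $d$ is the ground truth domain label. Note that the discriminators $D_h^s$ at the three different scales do not share weights. Considering the feature adaptation at all three scales, the head adaptation loss can be unified as follows:

$$\mathcal{L}_{head} = \sum_{s=1}^{3}\mathcal{L}_{head}^{s}$$

where $s$ denotes the different scales.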
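The per-scale computation and the summation over scales can be sketched as follows. The sketch assumes that each scale has its own discriminator output map (represented as a nested list of probabilities), that the per-scale loss is the mean BCE over locations, and that the three scales are summed without extra weights; the function names and the clamping constant `eps` are illustrative assumptions.

```python
import math


def pixel_bce(pred_map, d, eps=1e-7):
    """Mean BCE over all locations of one prediction map; d is 0 (source) or 1 (target)."""
    losses = []
    for row in pred_map:
        for p in row:
            p = min(max(p, eps), 1.0 - eps)   # clamp to keep the logarithm finite
            losses.append(-(d * math.log(p) + (1 - d) * math.log(1.0 - p)))
    return sum(losses) / len(losses)


def head_adaptation_loss(pred_maps, d):
    """Sum of the per-scale pixel-level losses; pred_maps holds one map per detection scale."""
    return sum(pixel_bce(pred_map, d) for pred_map in pred_maps)


# Three toy maps of different resolutions, standing in for the three detection scales.
toy_maps = [
    [[0.5] * 4 for _ in range(4)],
    [[0.5] * 2 for _ in range(2)],
    [[0.5]],
]
toy_head_loss = head_adaptation_loss(toy_maps, d=1)
```

Averaging within each scale before summing keeps the high-resolution map from dominating simply because it has more locations.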

3.3. Overall Formulation

The main discriminative capability of the network is learned from the labeled source samples in a supervised way. The source supervised training loss of YOLOv5 can be formulated as follows:

$$\mathcal{L}_{det}(X^s, B^s, C^s) = \mathcal{L}_{cls} + \mathcal{L}_{obj} + \mathcal{L}_{box}$$

where $X^s$, $B^s$, and $C^s$ denote the sets of $x_i^s$, $b_i^s$, and $c_i^s$. $\mathcal{L}_{cls}$ is the classification loss and defaults to the BCE loss. $\mathcal{L}_{obj}$ is the object confidence loss and defaults to the BCE loss. $\mathcal{L}_{box}$ is the bounding box regression loss and defaults to the CIoU loss.

The domain adversarial loss combined with the hierarchical backbone adaptation strategy and the multi-scale head adaptation strategy can be written as follows:

Combining the source supervised loss function with the cross-domain adversarial loss function, the overall optimization objective can be formulated:

where

D denotes the set of all domain discriminators, including

, and

.

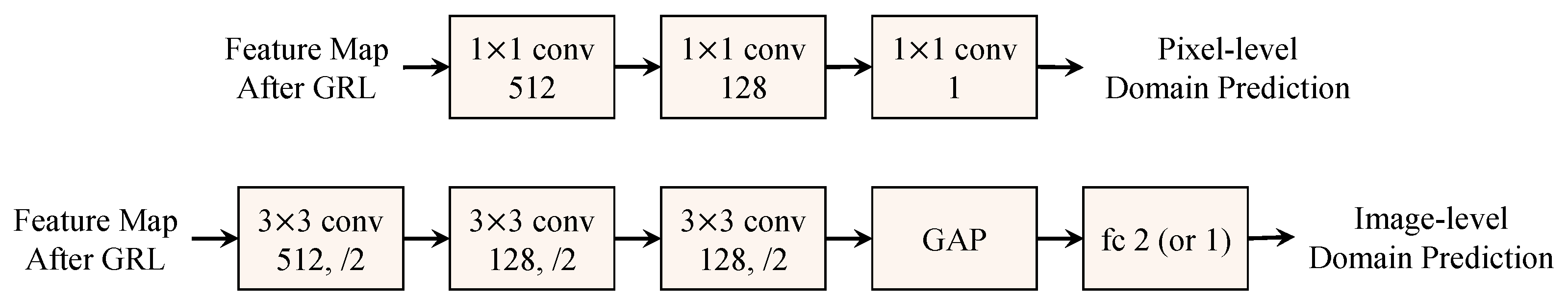

is the trade-off parameter to balance the detection loss and the domain adversarial loss. The network can be trained in an end-to-end manner using a standard stochastic gradient descent algorithm. And the adversarial training can be achieved by the utilization of GRL, which reverses the gradient during propagation. Structures of these domain discriminators are summarized in

Figure 2.
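In a GRL-based implementation, the min–max objective is typically reduced to one scalar that is minimized by ordinary gradient descent, because the reversal layers already flip the sign of the adversarial gradient for the feature extractor. The sketch below shows only that bookkeeping step. The function name, the unweighted sums, and the default `lam = 0.1` are assumptions for illustration and are not values reported in this paper.

```python
def total_training_loss(det_loss, backbone_losses, head_losses, lam=0.1):
    """Scalar minimized when GRLs sit between the feature maps and the discriminators.

    det_loss: supervised detection loss on the labeled source batch.
    backbone_losses: shallow-, middle-, and deep-level adaptation losses.
    head_losses: one pixel-level adaptation loss per detection scale.
    lam: trade-off between the detection loss and the adversarial loss.
    """
    adversarial = sum(backbone_losses) + sum(head_losses)
    # The discriminators descend on `adversarial`; the GRL makes the extractor ascend on it.
    return det_loss + lam * adversarial


# Made-up loss values for one training step.
step_loss = total_training_loss(1.0, [0.1, 0.2, 0.3], [0.1, 0.1, 0.2], lam=0.5)
```

Note that the plus sign here does not contradict the minus sign in the min–max form: the sign change is applied to the gradients inside the reversal layers rather than to the scalar loss.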

3.4. Theoretical Analysis

Reference [46] designed the $\mathcal{H}$-distance to measure the divergence between two sets of samples that have different data distributions. Let us consider a source domain $\mathcal{D}_s$, a target domain $\mathcal{D}_t$, and a domain discriminator $h$, which tries to predict the source and target domain labels to be 0 and 1, respectively. Assuming $\mathcal{H}$ to be a set of possible domain discriminators, the $\mathcal{H}$-distance can be defined as follows:

$$d_{\mathcal{H}}(\mathcal{D}_s, \mathcal{D}_t) = 2\left(1 - \min_{h \in \mathcal{H}}\left[\mathrm{err}_s(h) + \mathrm{err}_t(h)\right]\right)$$

where $\mathrm{err}_s(h)$ and $\mathrm{err}_t(h)$ denote the expected domain classification errors over the source domain and the target domain, respectively. The combined error of the ideal hypothesis can be denoted as follows:

$$C = \epsilon_s(h^*) + \epsilon_t(h^*)$$

where $h^*$ is the ideal joint hypothesis. The terms $\epsilon_s(h^*)$ and $\epsilon_t(h^*)$ are the expected risks on the source and target domains, respectively. The combined error can be used to measure the adaptability between different domains. If the ideal joint hypothesis performs poorly, i.e., the error $C$ is large, the domain adaptation process is difficult to realize. Based on the above, Reference [46] gives an upper bound on the target error as follows:

$$\epsilon_t(h) \le \epsilon_s(h) + \frac{1}{2}\,d_{\mathcal{H}}(\mathcal{D}_s, \mathcal{D}_t) + C$$

That is, the target error is upper bounded by three terms: the expected error on the source domain $\epsilon_s(h)$, the domain divergence $d_{\mathcal{H}}(\mathcal{D}_s, \mathcal{D}_t)$, and a few constant terms. Since $\epsilon_s(h)$ can be directly minimized by supervised learning and the third term is difficult to handle, the majority of existing methods minimize the upper bound on the target error by reducing the domain divergence between the source and target domains. Our proposed method not only hierarchically reduces the distribution discrepancy between domains in the backbone network, but also aligns the multi-scale features before detection in the head network. Thus, it can effectively reduce the distribution discrepancy and significantly improve cross-domain detection performance.
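The link between discriminator error and domain divergence can be made concrete with a small empirical sketch. It assumes the commonly used form in which the distance is twice one minus the smallest summed domain classification error over a finite set of candidate discriminators, with label 0 for source and 1 for target and a 0.5 decision threshold. The helper names and the toy one-dimensional "features" are hypothetical.

```python
def error_rate(predictions, label):
    """Fraction of predictions (probabilities of 'target') that disagree with the domain label."""
    wrong = sum(1 for p in predictions if (p >= 0.5) != (label == 1))
    return wrong / len(predictions)


def h_distance_estimate(candidates, source_feats, target_feats):
    """Empirical estimate: 2 * (1 - min over candidates of (source error + target error))."""
    best = min(
        error_rate([h(x) for x in source_feats], 0)
        + error_rate([h(x) for x in target_feats], 1)
        for h in candidates
    )
    return 2.0 * (1.0 - best)


# Two toy discriminators: one thresholds the feature itself, one always answers "target".
candidates = [lambda x: x, lambda x: 1.0]

separable = h_distance_estimate(candidates, [0.1, 0.2, 0.3], [0.7, 0.8, 0.9])
aligned = h_distance_estimate(candidates, [0.1, 0.6], [0.4, 0.9])
```

When the best discriminator separates the domains perfectly the estimate reaches its maximum of 2, and when no candidate does better than chance it drops to 0, which is the regime that adversarial feature alignment tries to reach.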