GICEDCam: A Geospatial Internet of Things Framework for Complex Event Detection in Camera Streams

Abstract

1. Introduction

- The design and implementation of a three-layer architecture that distributes CED workloads to reduce computational cost and increase scalability.

- The development of a Spatial Event Corrector component that predicts missing spatial events and reduces false positives and false negatives in relationship matching.

- We can access sufficient calibration data (e.g., camera calibration files or ground control points) to transform frame-based coordinates into geospatial coordinates.

- Camera locations and orientations are fixed throughout the CED process.

- Cameras submit frames to edge devices and the cloud.
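The calibration assumption above implies a mapping from frame coordinates (u, v) to planar ground coordinates (x, y). The following is a minimal sketch of such a mapping, assuming it can be expressed as a 3 × 3 planar homography. The function name `pixel_to_ground` and the matrix values are hypothetical and chosen only for illustration; the example matrix is an affine special case (a scale plus an offset).

```python
from typing import List, Tuple

Matrix3 = List[List[float]]


def pixel_to_ground(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Apply a planar homography H to pixel (u, v) and return ground (x, y)."""
    xh = H[0][0] * u + H[0][1] * v + H[0][2]
    yh = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to get Cartesian metres.
    return xh / w, yh / w


# Illustrative values only (not from the paper): 0.01 m per pixel plus an offset.
H_example: Matrix3 = [
    [0.01, 0.0, 2.0],
    [0.0, 0.01, 5.0],
    [0.0, 0.0, 1.0],
]

x, y = pixel_to_ground(H_example, 100.0, 50.0)
print(x, y)
```

In practice the matrix would be estimated from the calibration files or ground control points that the assumption refers to.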

2. Literature Review

3. Method Overview

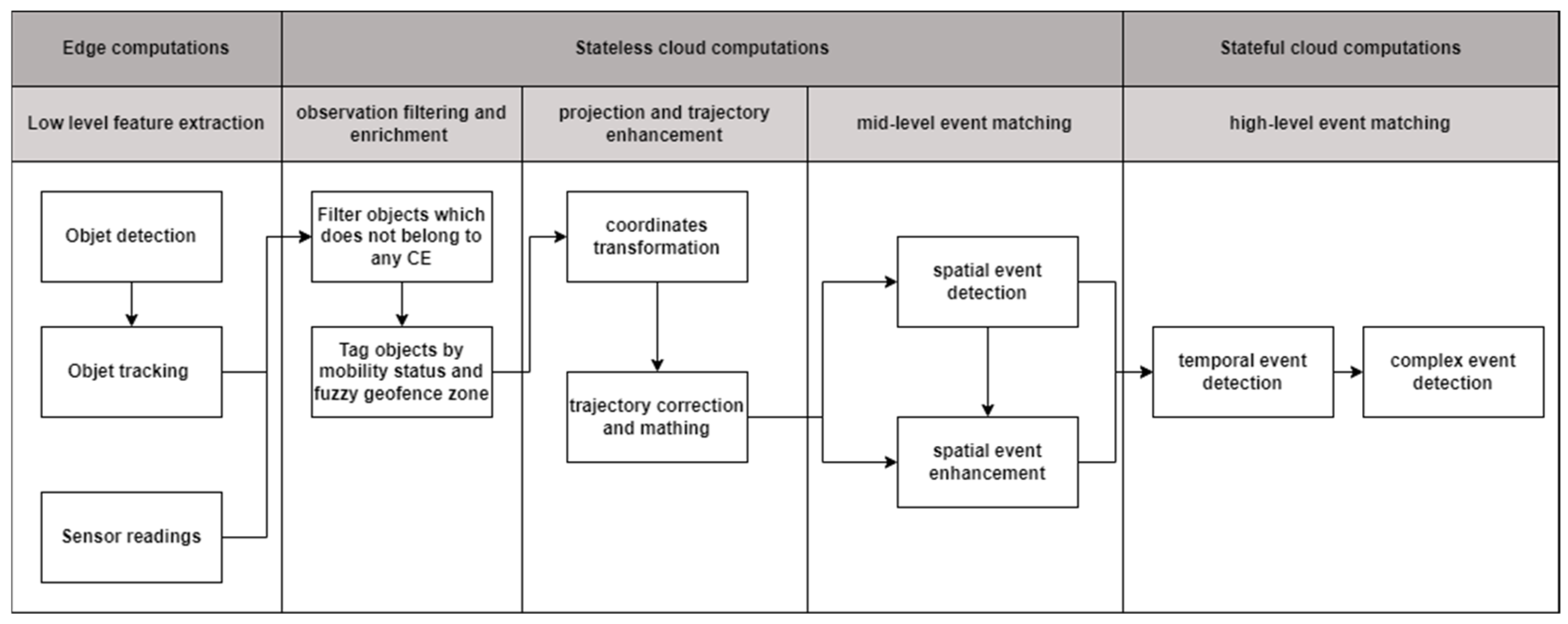

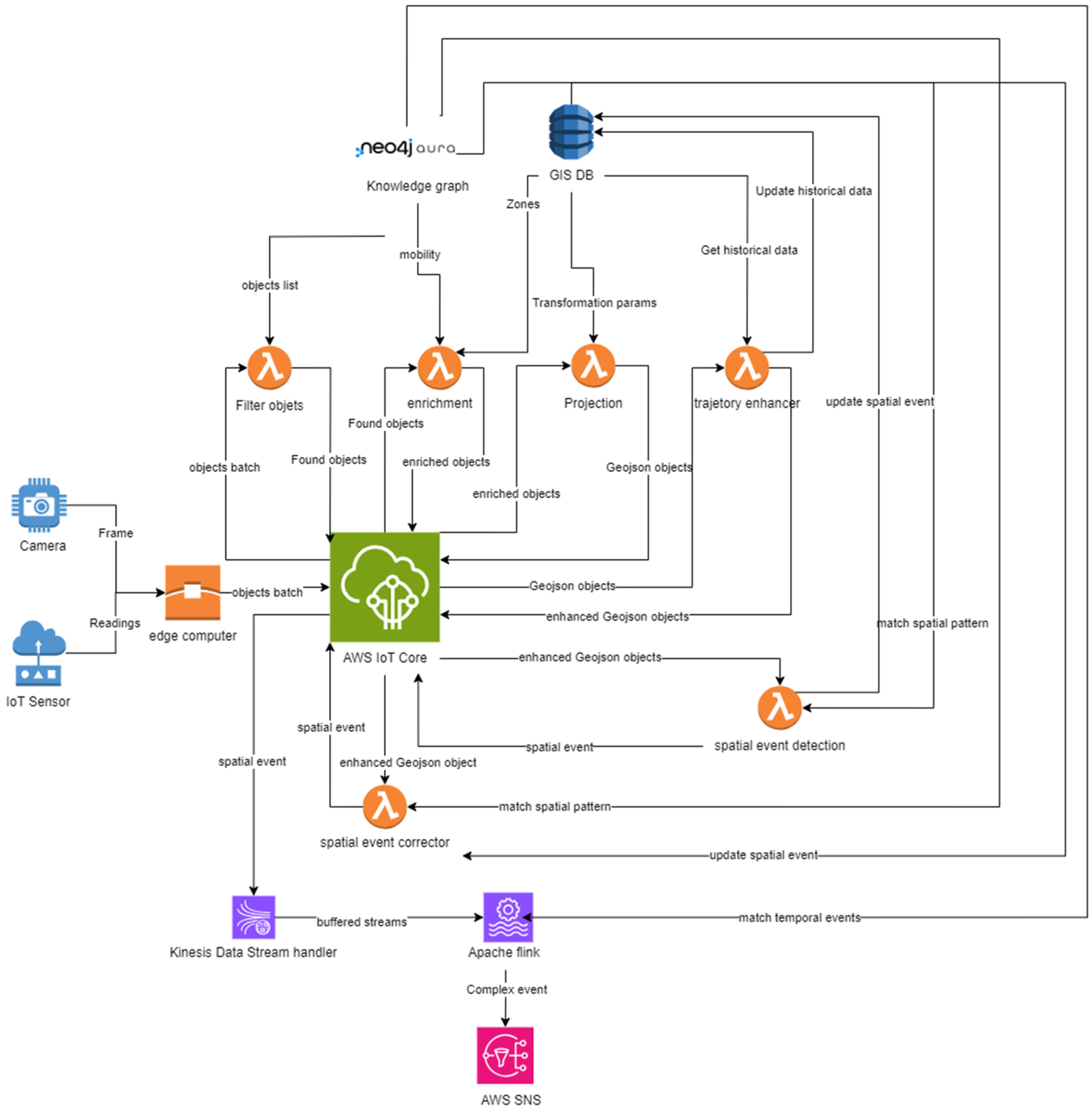

3.1. GICEDCAM Framework Design

- Enhancing the Complex Event Knowledge Graph: The complex event knowledge graph (KG) is revised to incorporate additional geospatial functions and entities. This enhancement is needed to address the false negatives and false positives associated with spatial events.

- Improving Computational Resource Allocation: The computational burden of low-level feature extraction is moved to the edge computing components, which lets the cloud computing components concentrate on the more demanding tasks of spatial, temporal, and complex event matching.

- Stateless Spatial Matching: The entire spatial event matching process is moved to stateless data processing components, whereas temporal and complex event matching remain in stateful matching components.
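To illustrate what "stateless" means for spatial matching, the sketch below checks whether a single projected observation falls inside a zone polygon. The output depends only on its two inputs, so no per-stream state is kept between calls. This is an assumed example rather than the framework's implementation: the ray-casting test, the dictionary fields, and the `IN_ZONE` event type name are illustrative choices.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray-casting test; points exactly on the boundary may go either way."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def match_in_zone(obs: Dict, zone: Dict) -> Optional[Dict]:
    """Stateless matcher: one observation in, at most one spatial event out."""
    if point_in_polygon((obs["x"], obs["y"]), zone["polygon"]):
        return {"type": "IN_ZONE", "zone_id": zone["zone_id"],
                "ts": obs["ts"], "corrected": False}
    return None


zone = {"zone_id": "z1", "polygon": [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]}
event = match_in_zone({"x": 2.0, "y": 2.0, "ts": 10.0}, zone)
no_event = match_in_zone({"x": 5.0, "y": 2.0, "ts": 11.0}, zone)
```

Because the function holds no memory of earlier frames, calls can be distributed across workers freely, which is the property the stateless design relies on.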

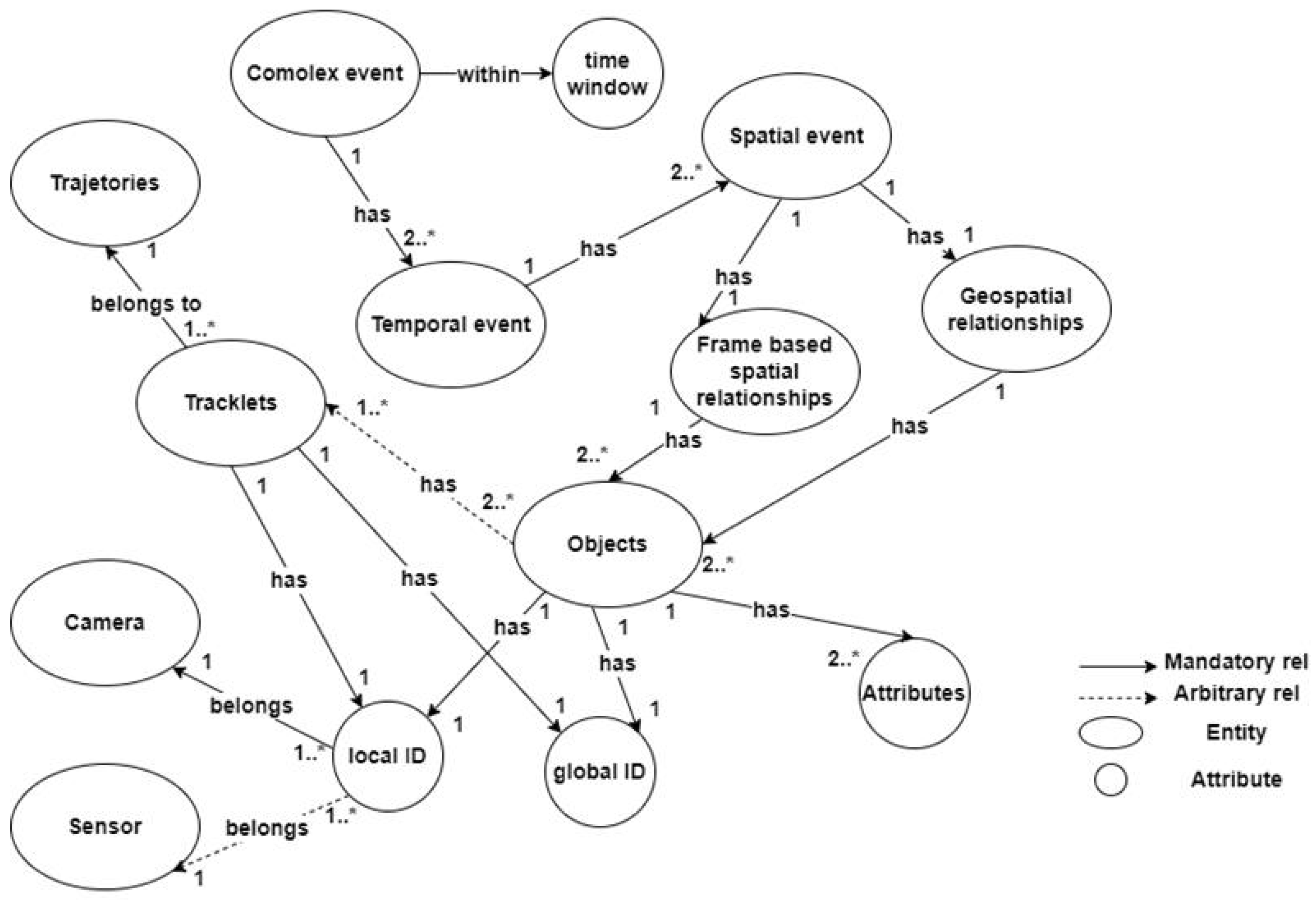

3.1.1. GICEDCAM Knowledge Graph

- Camera: {camera_id, name, H (3 × 3), ts_calib, crs: cartesian-m}

- Zone: {zone_id, polygon, version, crs, bbox, semantics}

- Object: {global_id, class, attrs, ts_first, ts_last}

- LocalObject (per sensor): {local_id, camera_id, class}

- Tracklet: {tracklet_id, camera_id, local_id, ts_start, ts_end}

- Trajectory: {traj_id, global_id, ts_start, ts_end}

- Observation (immutable): {obs_id, ts, u, v, x, y, conf, bbox}

- SpatialEvent: {se_id, type, ts, corrected:boolean, conf}

- TemporalEvent: {te_id, type, t_start, t_end}

- ComplexEvent: {ce_id, type, t_start, t_end, query_id}

- (:LocalObject)-[:SAME_AS]->(:Object) (many→1)

- (:Object)-[:HAS_TRACKLET]->(:Tracklet) (1→many)

- (:Tracklet)-[:PART_OF_TRAJ]->(:Trajectory) (many→1)

- (:Observation)-[:OF]->(:LocalObject) (many→1)

- (:Observation)-[:PROJECTED_BY {camera_id}]->(:Camera)

- (:Observation)-[:IN_ZONE {ts, μ}]->(:Zone)

- (:SpatialEvent)-[:ABOUT]->(:Object) (many→1)

- (:TemporalEvent)-[:COMPOSES]->(:SpatialEvent) (many→many)

- (:ComplexEvent)-[:COMPOSES]->(:TemporalEvent) (many→many)
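A small in-memory sketch may help make the node and relationship definitions above concrete. It covers only a subset (Observation, LocalObject, Object, and the many→1 `SAME_AS` edge). The class names `GlobalObject` and `Graph`, the choice of Python dataclasses, and the use of `(camera_id, local_id)` as a key are assumptions for illustration; the `bbox` and `attrs` fields are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)  # frozen mirrors the "immutable" note on Observation
class Observation:
    obs_id: str
    ts: float
    u: float
    v: float
    x: float
    y: float
    conf: float


@dataclass
class LocalObject:
    local_id: str
    camera_id: str
    cls: str


@dataclass
class GlobalObject:  # corresponds to the "Object" node
    global_id: str
    cls: str
    ts_first: float
    ts_last: float


LocalKey = Tuple[str, str]  # (camera_id, local_id)


@dataclass
class Graph:
    # SAME_AS is many→1: each local object points to exactly one global object.
    same_as: Dict[LocalKey, str] = field(default_factory=dict)

    def link_same_as(self, local: LocalObject, obj: GlobalObject) -> None:
        self.same_as[(local.camera_id, local.local_id)] = obj.global_id

    def locals_of(self, global_id: str) -> List[LocalKey]:
        return sorted(k for k, g in self.same_as.items() if g == global_id)


g = Graph()
person = GlobalObject("g1", "person", 0.0, 5.0)
g.link_same_as(LocalObject("7", "camA", "person"), person)
g.link_same_as(LocalObject("3", "camB", "person"), person)
```

Storing `SAME_AS` as a dictionary from local key to global id enforces the many→1 cardinality by construction, since a key can hold only one value.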

3.1.2. GICEDCAM Data Pipeline

Algorithm 1. Stateful Complex Event Matching in GICEDCAM.

    Input: stream of spatial events e = ⟨type, bindings, t, conf, corrected⟩; window W; watermark ω
    State: partial matches M[q] keyed by (q, bindings)

    upon spatial event e at time t:    // events are pre-filtered & projected
        // 1) advance existing partials
        for each (q, b) in M where δ(q, e.type, b, t) is enabled:
            q′ ← δ(q, e.type, b, t)      // checks Allen relation & gap constraints
            b′ ← unify(b, e.bindings)    // variable binding; reject on conflict
            upsert M[q′] with {bindings = b′, start_time = start_time(b), last_time = t}
        // 2) possibly start a new partial
        if δ(q0, e.type, e.bindings, t) enabled:
            upsert M[q1] with {bindings = e.bindings, start_time = t, last_time = t}
        // 3) emit completes when safe
        for each partial in M with state q ∈ F:
            if (last_time − start_time) ≤ W and last_time ≤ ω:
                emit ComplexEvent(partial.bindings, [start_time, last_time])
                delete partial
        // 4) prune stale partials (semantic bound)
        delete any partial with (current_time − start_time) > W
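The four steps of Algorithm 1 can be sketched in runnable form under simplifying assumptions. Here the pattern is a plain ordered list of event types, so the transition function δ reduces to "the next expected type arrives no earlier than the last matched event", which stands in for the Allen-relation and gap checks. The class name `SequenceMatcher`, the event type names, and the decision to keep the original partial after advancing it are illustrative choices, not details taken from the paper.

```python
from typing import Dict, List, Optional

Bindings = Dict[str, str]


def unify(a: Bindings, b: Bindings) -> Optional[Bindings]:
    """Merge two variable bindings; return None if they conflict."""
    merged = dict(a)
    for key, value in b.items():
        if merged.get(key, value) != value:
            return None
        merged[key] = value
    return merged


class SequenceMatcher:
    def __init__(self, pattern: List[str], window: float) -> None:
        self.pattern = pattern
        self.window = window
        self.partials: List[dict] = []  # the stateful part: partial matches

    def on_event(self, etype: str, bindings: Bindings, t: float,
                 watermark: float) -> List[dict]:
        new_partials = []
        # 1) advance existing partials whose next expected type matches
        for p in self.partials:
            q = p["state"]
            if q < len(self.pattern) and self.pattern[q] == etype and t >= p["last"]:
                b = unify(p["bindings"], bindings)
                if b is not None:
                    new_partials.append({"state": q + 1, "bindings": b,
                                         "start": p["start"], "last": t})
        # 2) possibly start a new partial
        if self.pattern[0] == etype:
            new_partials.append({"state": 1, "bindings": dict(bindings),
                                 "start": t, "last": t})
        self.partials.extend(new_partials)
        # 3) emit completed partials when safe, 4) prune stale ones
        emitted, kept = [], []
        for p in self.partials:
            complete = p["state"] == len(self.pattern)
            in_window = p["last"] - p["start"] <= self.window
            if complete and in_window and p["last"] <= watermark:
                emitted.append({"bindings": p["bindings"],
                                "interval": (p["start"], p["last"])})
            elif t - p["start"] <= self.window:
                kept.append(p)
        self.partials = kept
        return emitted


m = SequenceMatcher(["PICK_UP", "EXIT"], window=10.0)
out1 = m.on_event("PICK_UP", {"person": "p1"}, 1.0, watermark=1.0)
out2 = m.on_event("EXIT", {"person": "p2"}, 2.0, watermark=2.0)  # binding conflict
out3 = m.on_event("EXIT", {"person": "p1"}, 3.0, watermark=3.0)
```

The second event is rejected because its `person` binding conflicts with the open partial, while the third completes the pattern for the same person.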

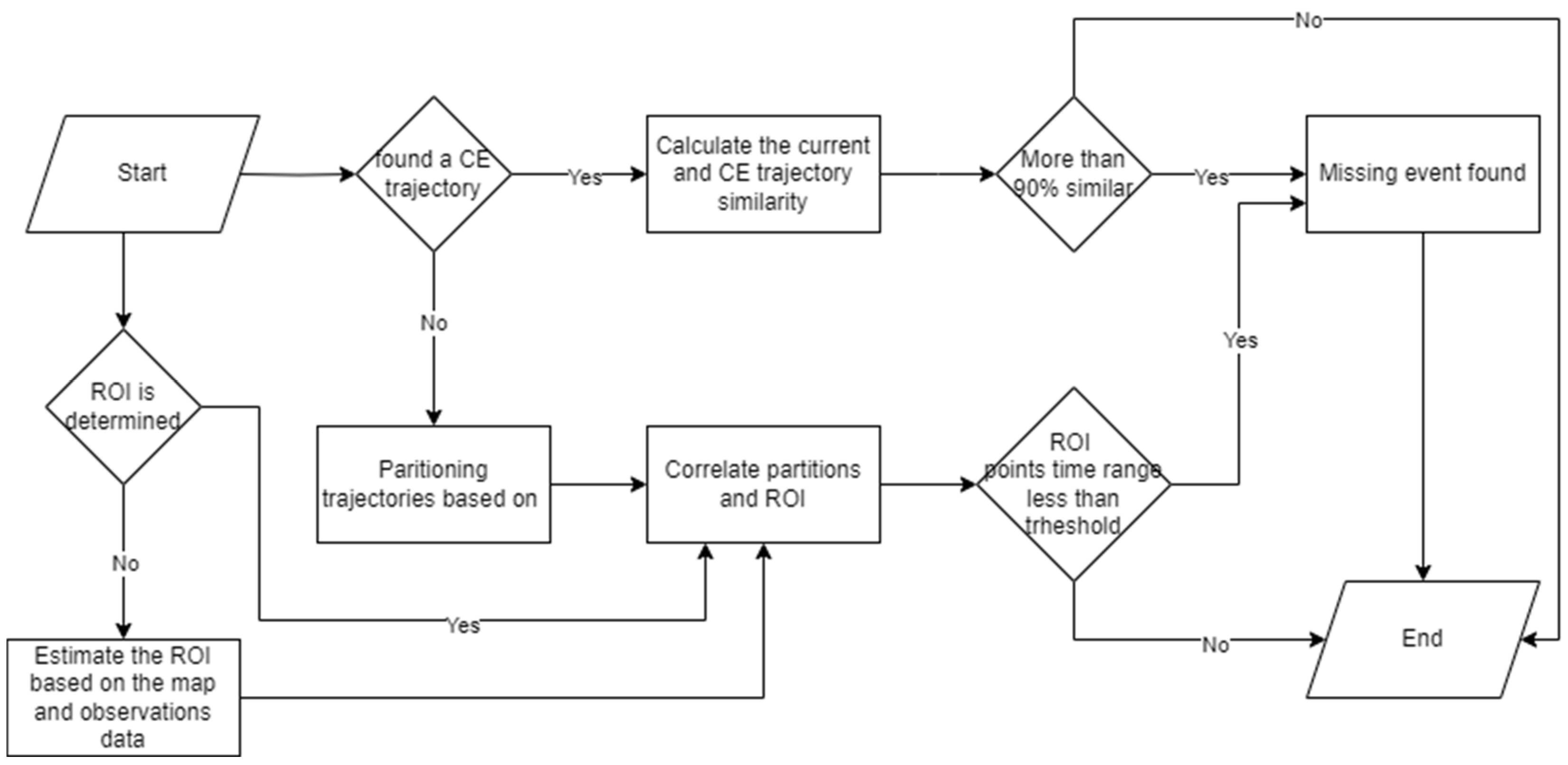

3.2. Spatial Event Corrector

Algorithm 2. Spatial Event Corrector.

    Inputs:
        Active expectations E_S = ⟨S, bindings, [t_a, t_b]⟩    // from pattern context & anchors
        Observation buffer O_K                                  // last K s of {x(t), μ_Z(x), class, bbox flags}
        Config: method ∈ {BN, LSTM, TRAJ}, Δ_probe, ε_t, δ, B
        Thresholds: τ_BN, τ_LSTM, τ_TRAJ
        BN params: Θ_BN
        LSTM params: M_LSTM (model weights), T (window), stride
        Trajectory params: μ_ROI(·), exemplars H, FastDTW radius r, blend β
    State: none (stateless; queries only)

    procedure CORRECTOR(E_S):
        // ----- GAP DETECTION -----
        let ⟨S, bindings, [t_a, t_b]⟩ = E_S
        if now < t_b + Δ_probe: return               // wait a small patience window
        if exists RAW SpatialEvent(type = S, bindings, t_raw ∈ [t_a, t_b]) in O_K:
            return                                    // no gap → nothing to correct
        // ----- EVIDENCE ASSEMBLY -----
        E ← slice O_K for variables in ‘bindings’ over [t_a − δ, t_b + δ]
        if E is empty: return
        // ----- SINGLE-METHOD IMPUTATION (chosen by config) -----
        switch method:
            case BN:
                …
                if p < τ_BN: return
                conf ← p
            case LSTM:
                …
                if p_t(t*) < τ_LSTM: return
                conf ← p_t(t*)
            case TRAJ:
                …
                if best = Ø or best.score < τ_TRAJ: return
                t* ← argmax_{p∈best.σ} μ_ROI(p)      // most plausible time inside segment
                conf ← best.score
        // ----- DEDUP AGAINST RAW EVENTS -----
        if exists RAW SpatialEvent(type = S, bindings, t_raw) with |t_raw − t*| ≤ ε_t in O_K:
            return                                    // prefer raw observation
        // ----- EMIT ADDITIVE CORRECTED EVENT -----
        emit SpatialEvent ⟨type = S, bindings, t = t*, conf = conf, corrected = true⟩
        // ----- TIME BUDGET GUARD (optional) -----
        ensure wall-clock time ≤ B ms (degrade by skipping LSTM or lowering DTW radius if needed)
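The control flow of Algorithm 2 (gap detection, evidence assembly, imputation, deduplication, emission) can be sketched as a single stateless function. The imputation step is left as a pluggable `scorer` callback because the BN, LSTM, and trajectory bodies are elided in the algorithm. The toy scorer below, which picks the observation with the highest zone membership `mu`, is only a stand-in and is not one of the paper's three methods. All field names and default parameter values are assumptions.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A scorer returns (t_star, conf) or None; it stands in for BN / LSTM / TRAJ.
Scorer = Callable[[List[Dict]], Optional[Tuple[float, float]]]


def correct(expectation: Dict, raw_events: List[Dict], observations: List[Dict],
            scorer: Scorer, tau: float, now: float,
            delta_probe: float = 0.5, delta: float = 1.0,
            eps_t: float = 0.5) -> Optional[Dict]:
    s_type = expectation["type"]
    bindings = expectation["bindings"]
    t_a, t_b = expectation["interval"]
    # Gap detection: wait out the patience window, then look for a raw event.
    if now < t_b + delta_probe:
        return None
    same = [e for e in raw_events
            if e["type"] == s_type and e["bindings"] == bindings]
    if any(t_a <= e["t"] <= t_b for e in same):
        return None  # no gap, nothing to correct
    # Evidence assembly over the padded interval.
    ids = set(bindings.values())
    evidence = [o for o in observations
                if o["object_id"] in ids and t_a - delta <= o["t"] <= t_b + delta]
    if not evidence:
        return None
    # Single-method imputation.
    result = scorer(evidence)
    if result is None or result[1] < tau:
        return None
    t_star, conf = result
    # Dedup against raw events near the imputed time.
    if any(abs(e["t"] - t_star) <= eps_t for e in same):
        return None
    return {"type": s_type, "bindings": bindings, "t": t_star,
            "conf": conf, "corrected": True}


def max_membership_scorer(evidence: List[Dict]) -> Optional[Tuple[float, float]]:
    """Toy stand-in: time and value of the highest zone membership."""
    best = max(evidence, key=lambda o: o["mu"])
    return best["t"], best["mu"]


expectation = {"type": "PICK_UP", "bindings": {"person": "p1"}, "interval": (10.0, 12.0)}
obs = [{"object_id": "p1", "t": 10.5, "mu": 0.4},
       {"object_id": "p1", "t": 11.0, "mu": 0.9}]
corrected = correct(expectation, [], obs, max_membership_scorer, tau=0.6, now=13.0)
```

The function reads its inputs and returns a value without retaining anything between calls, matching the "State: none" line of the algorithm.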

3.2.1. Bayesian Networks

3.2.2. Long Short-Term Memory

3.2.3. Trajectory Analysis

3.3. Real-Time Trajectories Corrector

3.3.1. Tracking Re-Identifier

3.3.2. Trajectory Spatial Enhancer

4. Implementations, Results, and Discussions

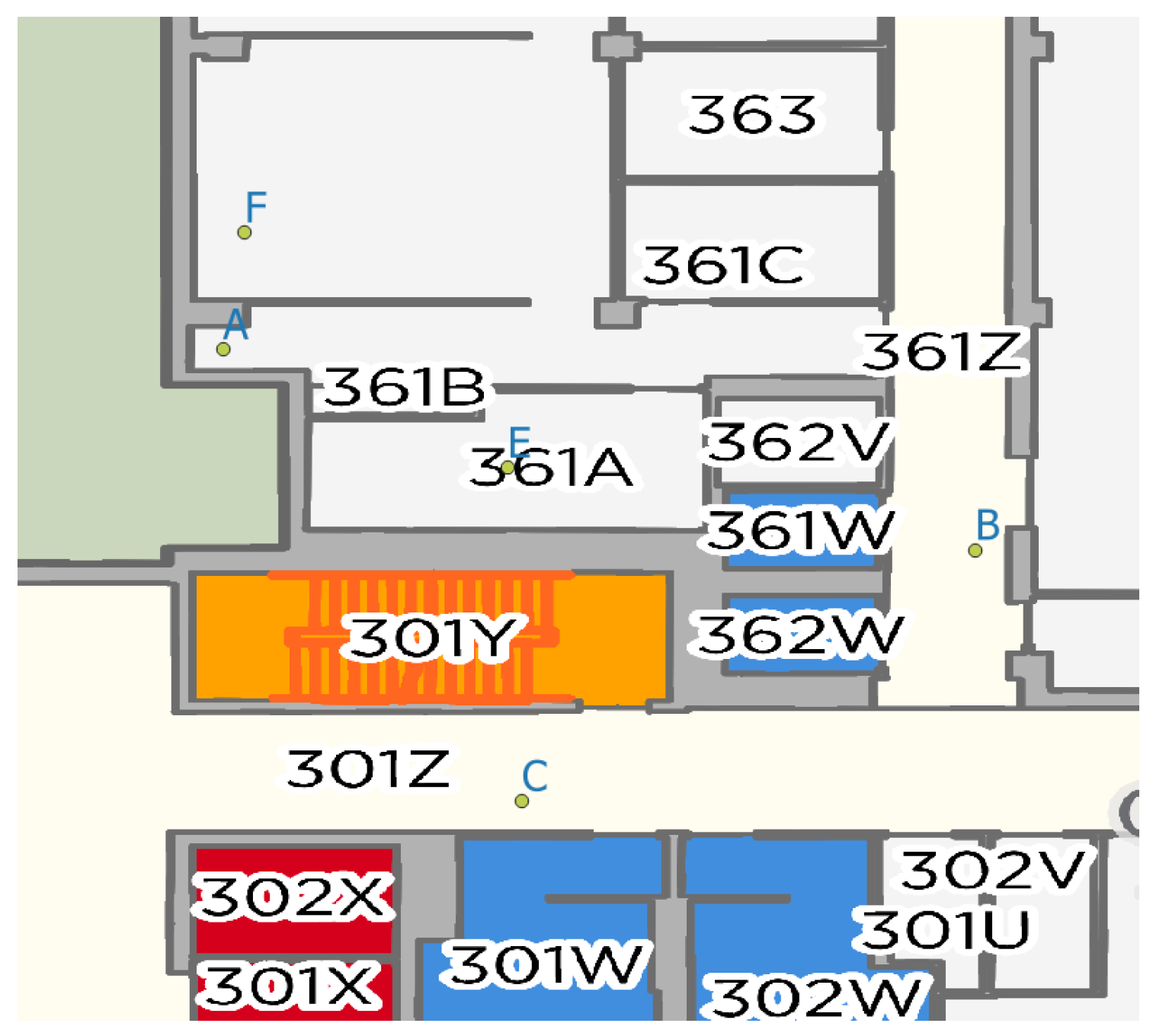

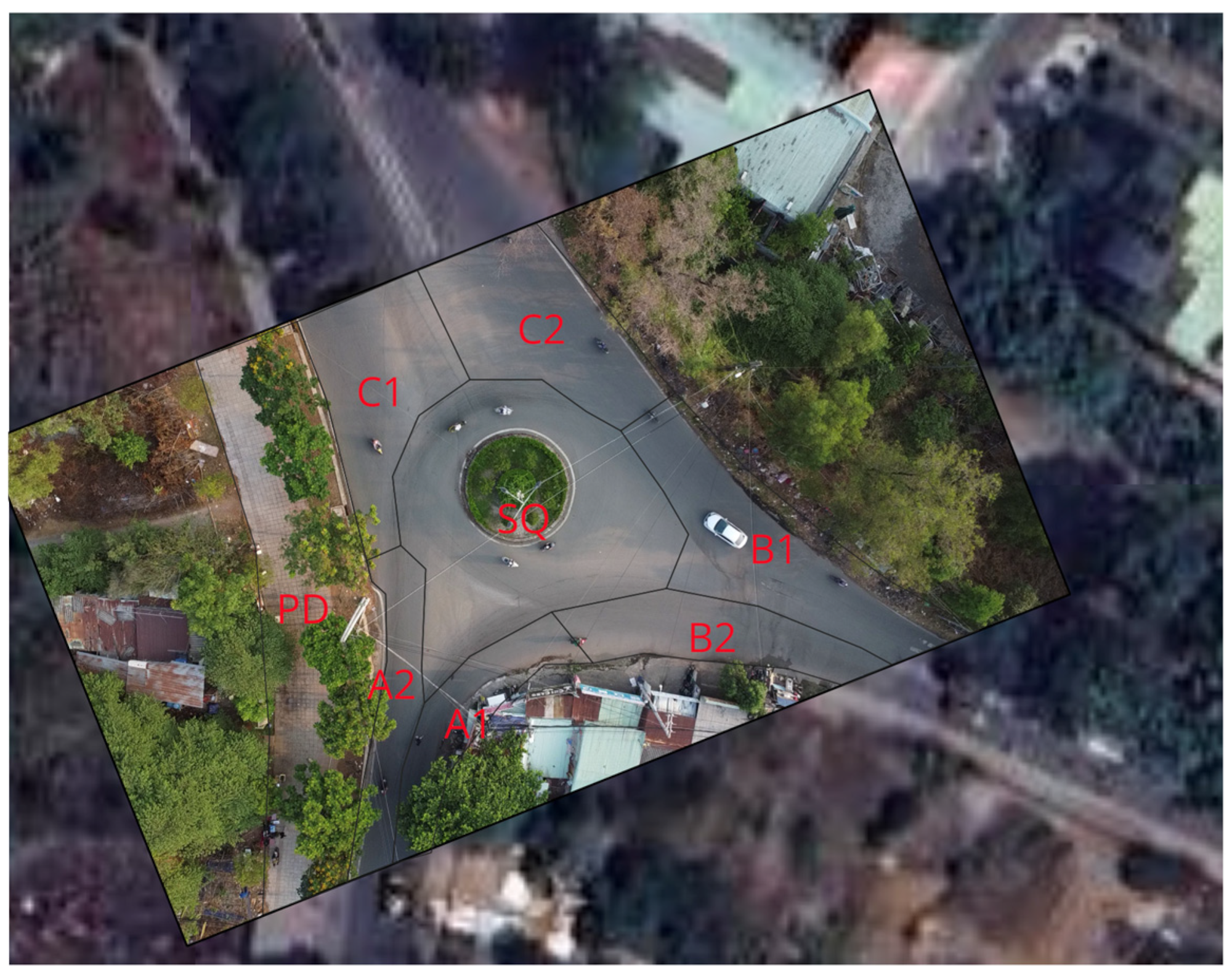

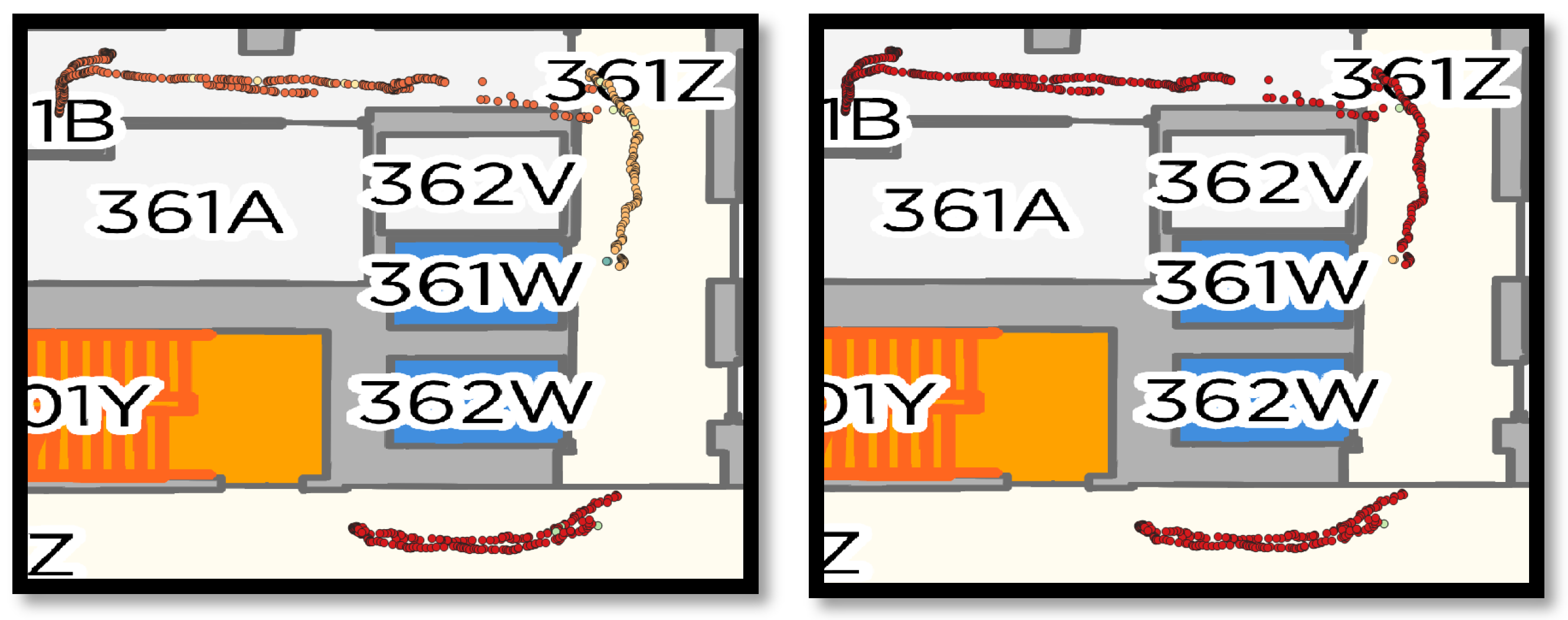

4.1. Data

4.2. GICEDCAM Framework Implementation

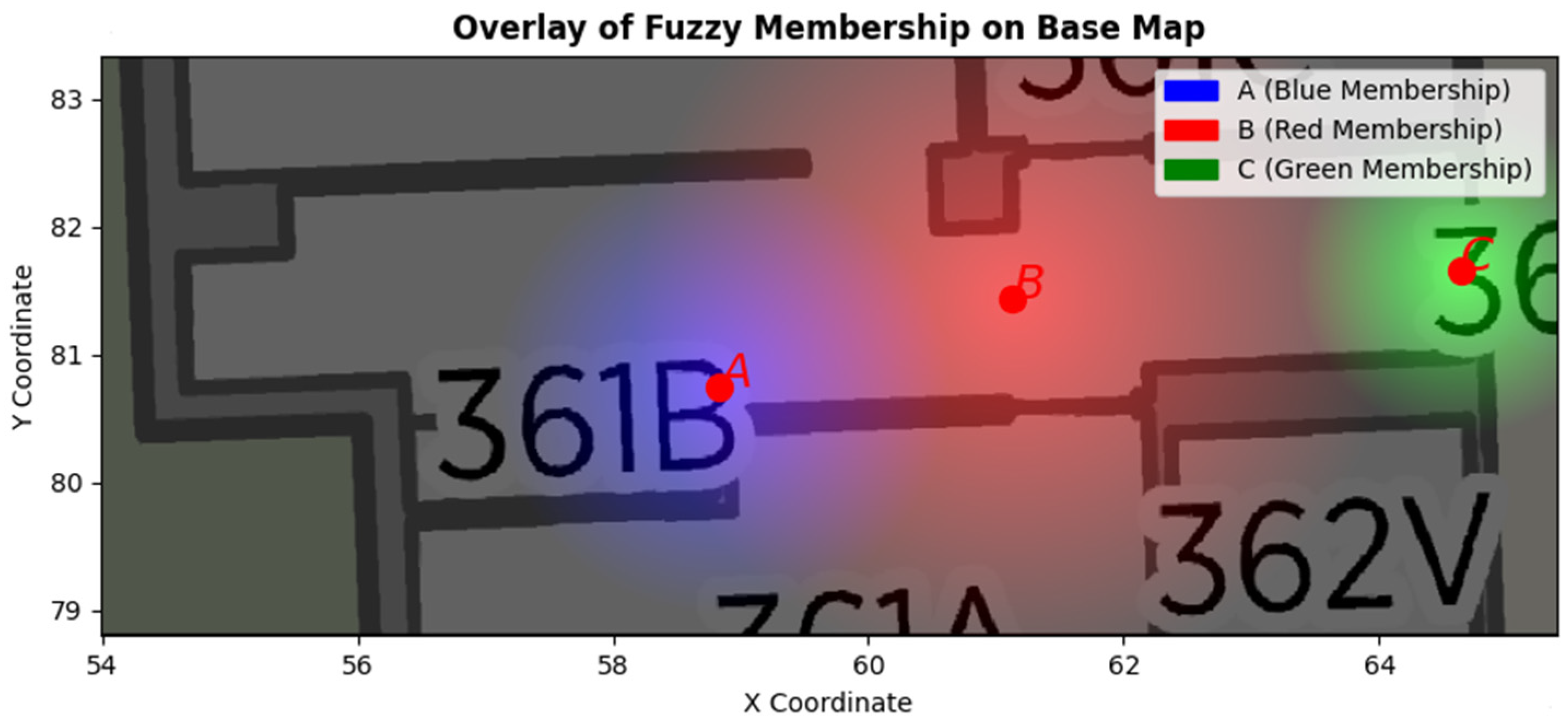

4.3. Spatial Event Correction

4.4. Trajectory Corrector

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

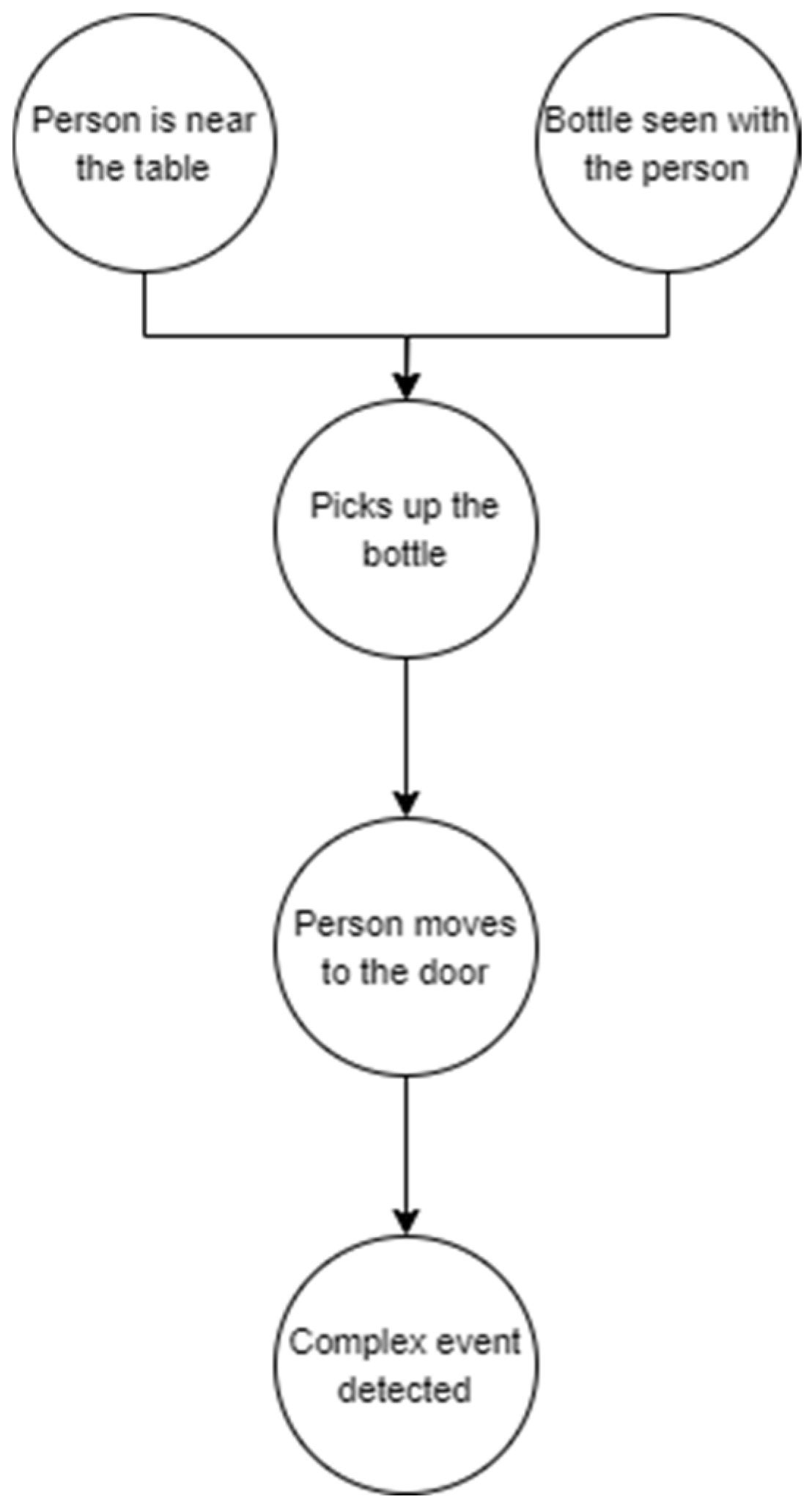

Appendix A

Appendix A.1

| CPD Name | Probability |
|---|---|
| Bottle Seen With Person = 1 | 0.6 |
| Person Near Table = 1 | 0.7 |
| Complex Event Detected∣Person Moves To Door = 1 | 0.95 |
| Person Moves To Door∣Person Picks Bottle | 0.9 |
| Person Near Table = 1, Bottle Seen With Person = 1 | 0.8 |
| Person Near Table = 1, Bottle Seen With Person = 0 | 0.4 |
| Person Near Table = 0, Bottle Seen With Person = 1 | 0.3 |
| Person Near Table = 0, Bottle Seen With Person = 0 | 0.1 |
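As a worked example of how these conditional probabilities combine, the sketch below marginalizes over the two root variables. It rests on assumptions that the table does not state explicitly: the four two-parent rows are read as P(Person Picks Bottle = 1 | Person Near Table, Bottle Seen With Person), the two root variables are treated as independent, and the network is treated as a chain from picking the bottle to moving to the door to the complex event.

```python
from itertools import product

p_near = 0.7     # P(Person Near Table = 1)
p_bottle = 0.6   # P(Bottle Seen With Person = 1)

# ASSUMPTION: these rows are P(Person Picks Bottle = 1 | near, bottle).
p_pick_given = {(1, 1): 0.8, (1, 0): 0.4, (0, 1): 0.3, (0, 0): 0.1}

p_move_given_pick = 0.9   # P(Person Moves To Door = 1 | Person Picks Bottle = 1)
p_ce_given_move = 0.95    # P(Complex Event Detected = 1 | Person Moves To Door = 1)


def prior(p_one: float, value: int) -> float:
    """Probability of a binary root variable taking the given value."""
    return p_one if value == 1 else 1.0 - p_one


# Marginal P(pick = 1): sum over all parent configurations.
p_pick = sum(
    prior(p_near, n) * prior(p_bottle, b) * p_pick_given[(n, b)]
    for n, b in product((0, 1), repeat=2)
)

# Joint probability that pick, move, and the complex event all occur.
p_all = p_pick * p_move_given_pick * p_ce_given_move

print(round(p_pick, 4), round(p_all, 5))
```

The marginal expands to 0.7·0.6·0.8 + 0.7·0.4·0.4 + 0.3·0.6·0.3 + 0.3·0.4·0.1 = 0.514. With evidence fixed, for instance Person Near Table = 1 and Bottle Seen With Person = 0, the same lookup gives 0.4 directly.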

Appendix A.2

| Time | X | Y | Person Intersects Table | Bottle Seen with Person |
|---|---|---|---|---|
| t-4 | 3 | 5 | 0 | 0 |
| t-3 | 3.5 | 5.5 | 0 | 0 |
| t-2 | 4 | 6 | 1 | 0 |
| t-1 | 4.5 | 6.5 | 1 | 0 |
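If rows like these serve as per-timestep inputs to a sequence model, they first need to be arranged into fixed-length windows. The sketch below shows one way to do that, assuming a feature order of [X, Y, Person Intersects Table, Bottle Seen with Person] and a sliding window of length `T` with a given `stride`. The helper names and the chosen window length are hypothetical.

```python
from typing import Dict, List

rows: List[Dict] = [
    {"time": "t-4", "x": 3.0, "y": 5.0, "intersects_table": 0, "bottle_seen": 0},
    {"time": "t-3", "x": 3.5, "y": 5.5, "intersects_table": 0, "bottle_seen": 0},
    {"time": "t-2", "x": 4.0, "y": 6.0, "intersects_table": 1, "bottle_seen": 0},
    {"time": "t-1", "x": 4.5, "y": 6.5, "intersects_table": 1, "bottle_seen": 0},
]


def to_features(row: Dict) -> List[float]:
    """Flatten one table row into a numeric feature vector."""
    return [row["x"], row["y"],
            float(row["intersects_table"]), float(row["bottle_seen"])]


def sliding_windows(seq: List[List[float]], T: int, stride: int) -> List[List[List[float]]]:
    """Return every full window of T consecutive timesteps, stepping by stride."""
    return [seq[i:i + T] for i in range(0, len(seq) - T + 1, stride)]


features = [to_features(r) for r in rows]
windows = sliding_windows(features, T=3, stride=1)
```

Each window is a T × 4 array, which is the usual (timesteps, features) layout expected by recurrent layers.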

Appendix B

Appendix B.1. Object Schema

Appendix B.2. Projected Object Schema

Appendix B.3. Events Schema


| Framework | Event Matching Strategy | Strengths | Limitations |
|---|---|---|---|
| EventNet | Video ontology linking object relationships to complex event concepts; semantic querying | Structured semantic representation; supports ontology-driven search | Requires manual ontology creation; limited scalability for real-time multi-camera networks |
| Trajectory-based Models (e.g., hypergraph pairing) | Assign event semantics through trajectory pairing; cluster-based abnormal behavior detection | Good for motion-based behavior recognition; captures trajectory semantics | Sensitive to tracking errors; limited handling of static object interactions |
| Hierarchical Models | Multi-layered feature aggregation from frame to temporal concepts | Reduces error propagation; modular event detection | Overfits on relatively simple events, leading to lower performance than certain baseline methods |
| VideoStorm | Lag- and quality-aware query processing | Resource-efficient allocation; adaptable query performance | Focused on query performance, not false positive/negative reduction |
| BLAZEIT | FrameQL-based query optimization over DNN inference | Lowers DNN computation cost; selective frame analysis | Limited to simpler patterns; less effective for multi-camera spatial relationships |
| Logical Reasoning Hybrid | Combines logical reasoning with simple event detection | Enhances semantic interpretation; flexible rule creation | Higher computation cost; less optimized for large-scale streaming |
| VIDCEP | ODSM with VEQL and Video Event Knowledge Graph (VEKG) | Flexible spatiotemporal queries; domain-independent | Fully stateful → high computational cost; scalability issues with large object counts |
| MERN | Semantic-rich event representation; multi-entity relation network | Strong semantic modelling; supports multi-entity interactions | Relies heavily on the completeness and accuracy of domain ontologies, which may limit generalization to new domains; integration of DL models with semantic CEP adds system complexity |
| NLP-guided Ontologies | Uses NLP to enhance video event ontologies | Improved semantic matching; better generalization from text cues | Dependent on high-quality NLP models; limited spatial reasoning |
| NOP | Notification-Oriented Paradigm with chain-based queries | Efficient event chaining for specific domains | Restricted to certain event types; lacks general-purpose scalability |

| # | Scenario | Camera Locations | Number of Videos |
|---|---|---|---|
| 1 | A person picks up the bottle from the chair in Room 361, then passes through corridor 361B, then pours it into the mug located on the shelf in 361A. | F, A, E | 2 |
| 2 | A person enters corridor 361B and picks up the bottle next to the printer, then enters corridor 361Z and exits it, then enters 301Z and puts the bottle on the bin door. | A, B, C | 2 |
| 3 | Roundabout right-of-way violation: A motorbike enters the roundabout SQ from street C1 before a car enters the roundabout SQ from street B1. Then, the car blocks the motorbike’s path and leaves the roundabout earlier than the motorbike. | International University, VNU-HCM roundabout | 12 |
| 4 | A person picks up the bottle from the table, moves to the door, opens the door, and exits corridor 361B. In every video, an error is deliberately added to the spatial event "person picks up the bottle": occlusion, track loss, or a false-negative object detection. | A | 8 |

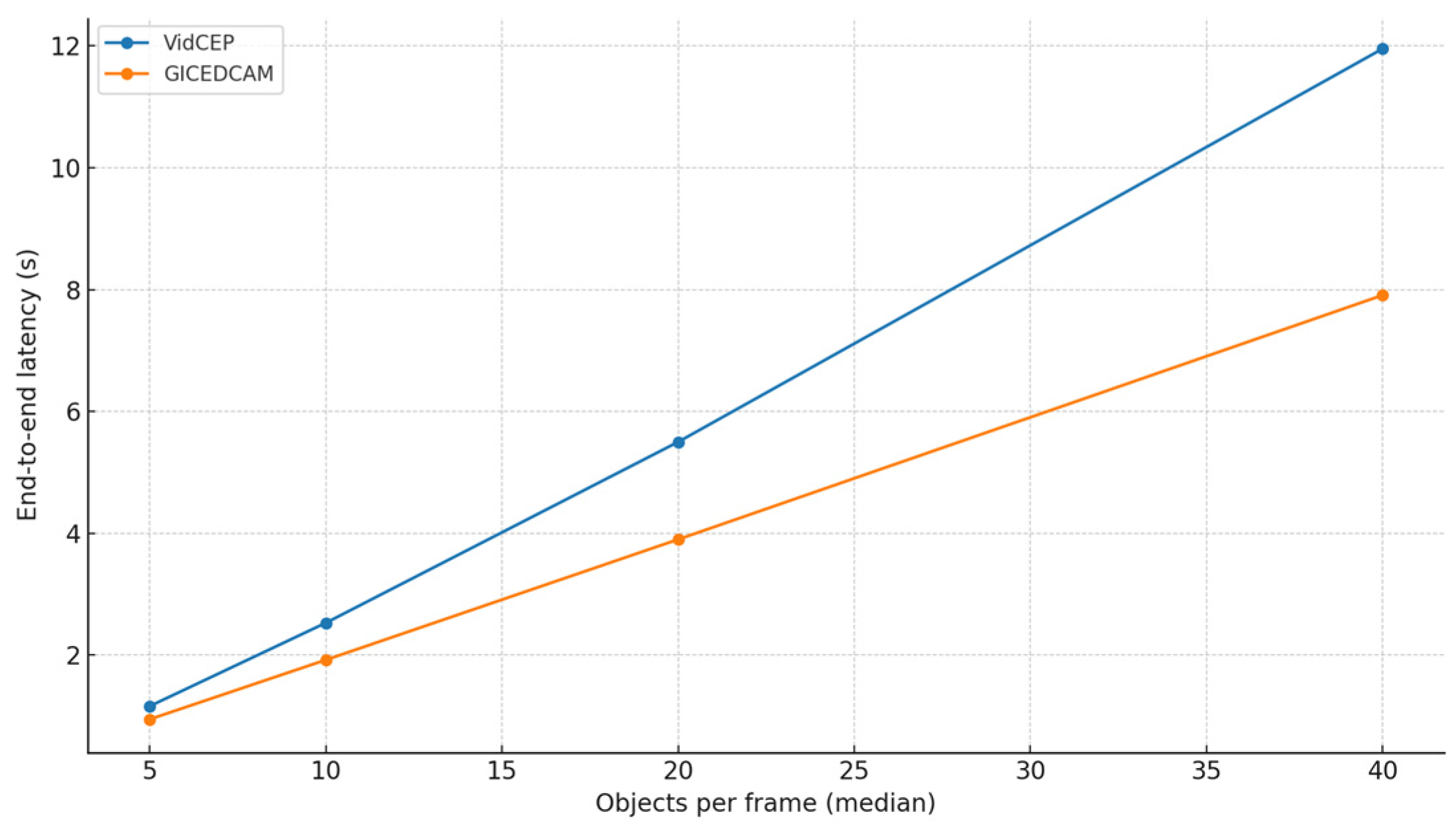

| Number of Video Streams | End-to-End Processing Time GICEDCAM | Latency Under Load GICEDCAM | End-to-End Processing Time VIDCEP | Latency Under Load VIDCEP |
|---|---|---|---|---|
| 1 | 1.4 s | 0 s | 2.8 s | 0 s |
| 2 | 2 s | 0.6 s | 5.1 s | 2.3 s |
| 4 | 2.8 s | 0.8 s | 9.2 s | 4.1 s |
| 8 | 4.1 s | 1.3 s | 15.8 s | 6.6 s |
| 16 | 5.9 s | 1.8 s | 27 s | 11.2 s |
| 20 | 6.8 s | 2.2 s | 36.4 s | 9.4 s |

| Scenario | GICEDCAM Latency | VIDCEP Latency |
|---|---|---|
| One | 2.0 s | 3.1 s |
| Two | 2.9 s | 4.2 s |
| Three | 3.9 s | 5.5 s |

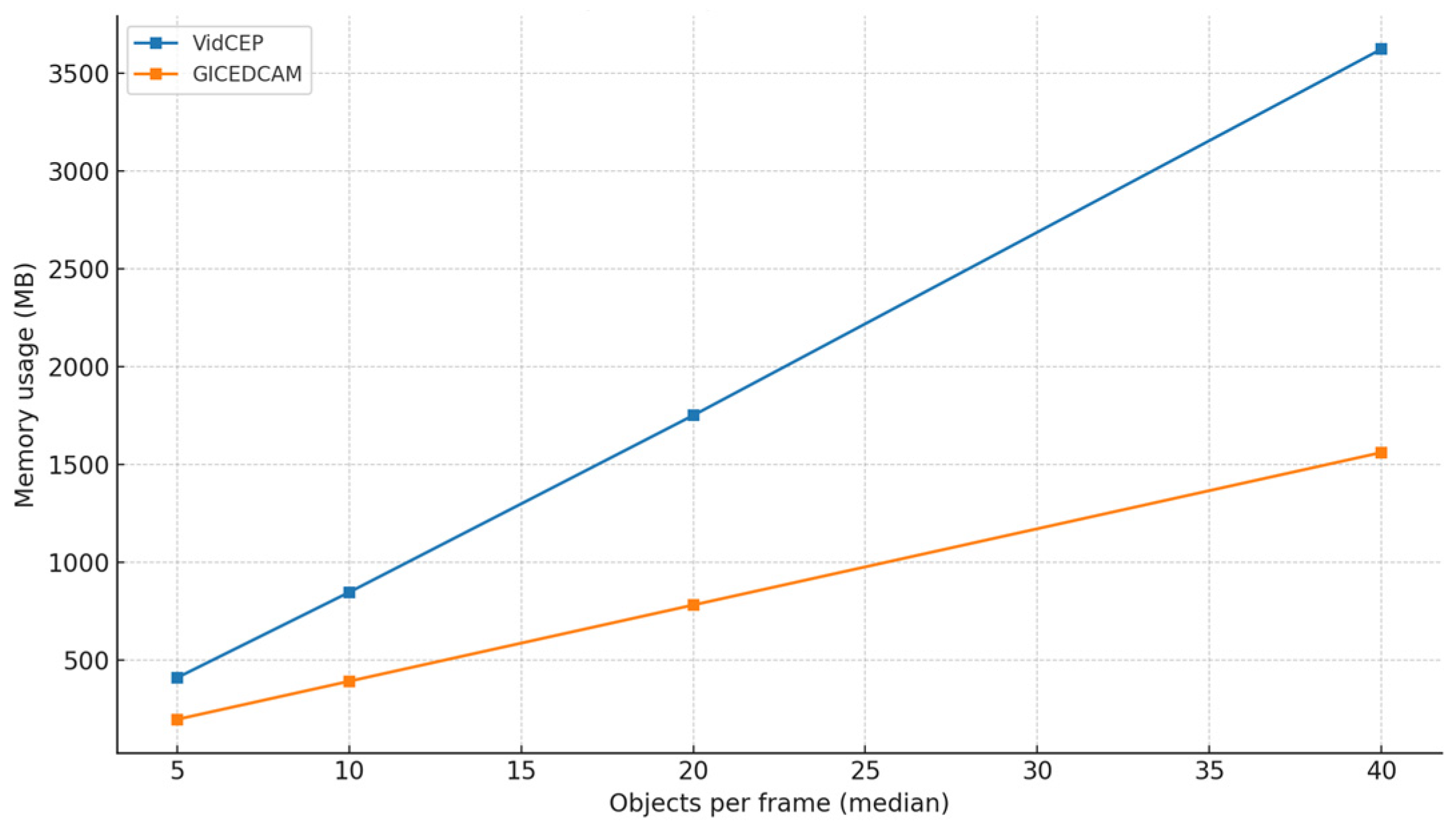

| Scenario | GICEDCAM Memory (MB) | VIDCEP Memory (MB) | GICEDCAM CPU (%) | VIDCEP CPU (%) |
|---|---|---|---|---|
| One | 540 | 1320 | 26 | 41 |
| Two | 670 | 1580 | 34 | 54 |
| Three | 780 | 1750 | 42 | 64 |

| Method | Precision | Recall | F-Score |
|---|---|---|---|
| BN | 0.79 | 0.70 | 0.74 |
| LSTM | 0.86 | 0.87 | 0.86 |
| Trajectory analysis | 0.82 | 0.76 | 0.78 |

| Scenario | BN Method | LSTM | Trajectory Analysis |
|---|---|---|---|
| One | 0.12 s | 1.60 s | 0.70 s |
| Two | 0.14 s | 1.65 s | 1.10 s |
| Three | 0.20 s | 1.75 s | 1.60 s |
| Four | 0.10 s | 1.42 s | 0.51 s |

| Metric | Scenario 2 | Scenario 3 | Scenario 4 |
|---|---|---|---|
| Precision (DeepSORT) | 91% | 78% | 70% |
| Precision (Event-aware DeepSORT) | 96% | 85% | 85% |
| Latency (DeepSORT) | 0.15 s | 0.25 s | 0.25 s |
| Latency (Event-aware DeepSORT) | 0.22 s | 0.37 s | 0.40 s |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Honarparvar, S.; Honarparvar, Y.; Ashena, Z.; Liang, S.; Saeedi, S. GICEDCam: A Geospatial Internet of Things Framework for Complex Event Detection in Camera Streams. Sensors 2025, 25, 5331. https://doi.org/10.3390/s25175331